
ALICE: analysis strategies
Massimo Masera, Dipartimento di Fisica Sperimentale (Department of Experimental Physics) and INFN Sezione di Torino
Workshop CCR e INFN-GRID 2009

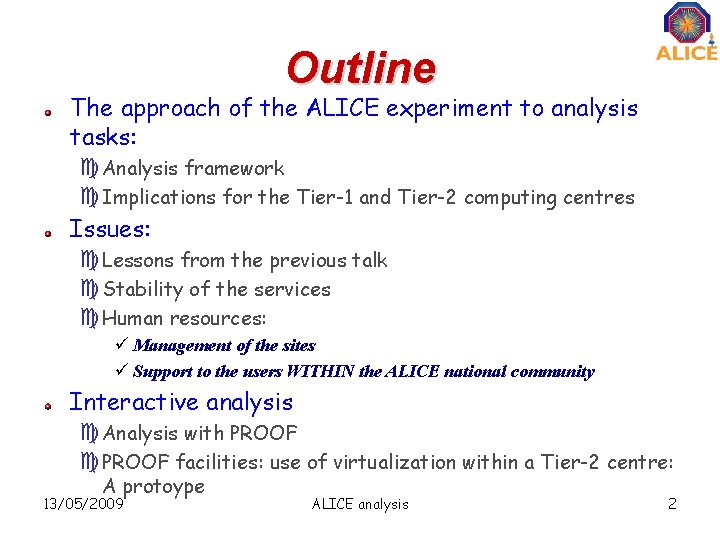

Outline
- The approach of the ALICE experiment to analysis tasks:
  - Analysis framework
  - Implications for the Tier-1 and Tier-2 computing centres
- Issues:
  - Lessons from the previous talk
  - Stability of the services
  - Human resources:
    - Management of the sites
    - Support to the users WITHIN the ALICE national community
- Interactive analysis
  - Analysis with PROOF
  - PROOF facilities: use of virtualization within a Tier-2 centre, a prototype

13/05/2009 ALICE analysis 2

Analysis in ALICE
- The proposed subtitle for this talk is:
  - “A senior representative of the experiment describes the plan by which the Italian collaboration is preparing to use the resources at the T2 and T3 centres for the analysis of the data produced at the LHC…” (it goes on, but this is enough for now)
- There are 2 points which deserve a preliminary comment
  - “Italian collaboration”. The plan to analyze LHC data is the same for the whole experiment: within the ALICE computing rules there is no room for approaches that are not integrated with the ALICE offline framework. Nevertheless:
    - The Italian community has to organize the human resources to manage the computing centres and to support the users. This is critical, but it is not specific to the analysis part.
    - Interactive analysis. We are planning to deploy an analysis facility to be operated with PROOF.

Analysis in ALICE
There are 2 points which deserve a preliminary comment (cont’d)
- How do we plan to use Tier-3?
  - Within INFN-Grid, Tier-3 has so far been a forbidden word (and often a forbidden dream), seen as:
    - too easy a way to keep ordinary users away from GRID solutions;
    - a way to make everybody happy with a local computing farm to play with.
  - In the ALICE computing model there is no specific role for Tier-3: the centres in the model are considered “community oriented” contributions, open to the whole collaboration
  - Physics results can be published only if obtained on the GRID, with input/output available on the GRID
  - Local computing resources are hence considered “private” and intended only for the development phase of the code and of the analysis tasks, by very small communities or single physicists
    - Sometimes a desktop does the job
    - Small farms have proved very effective for these purposes

Analysis in ALICE
There are 2 points which deserve a preliminary comment (cont’d)
- How do we plan to use Tier-3?
  - PROOF clusters do not fit in the MONARC naming scheme and are not operated as LCG centres
  - However, the only PROOF cluster mentioned in our computing model, the CERN Analysis Facility, has proved very effective and is quite popular in the ALICE community
  - PROOF analysis facilities have been deployed in Germany (GSI) and are in the final stage of deployment in France (Lyon). They are open (at least nominally) to any member of the collaboration
  - We are planning to build PROOF clusters in Italy too, conceived as virtual facilities largely based on existing hardware in Tier-2 centres
  - PROOF clusters are, in a sense, the ALICE reinterpretation of the Tier-3 concept
    - They need to be integrated in the production framework and open to potentially every ALICE user

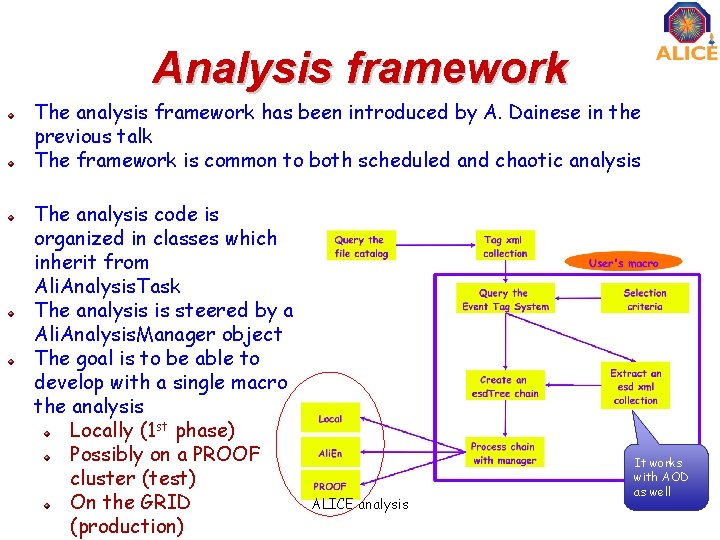

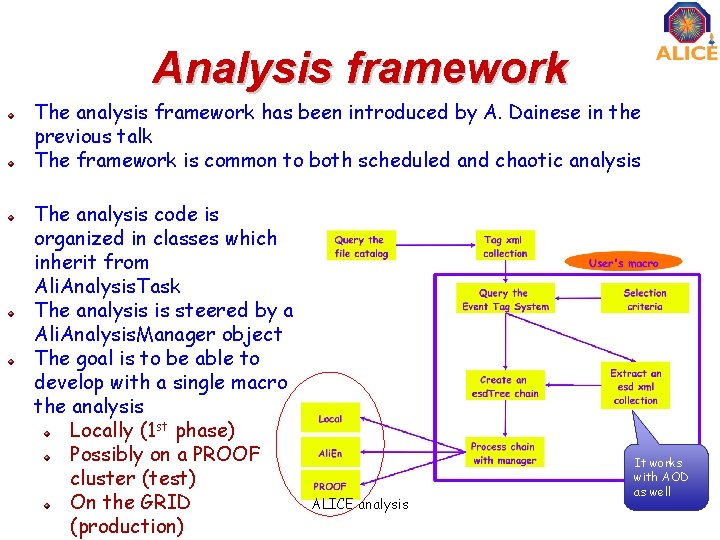

Analysis framework
- The analysis framework was introduced by A. Dainese in the previous talk
- The framework is common to both scheduled and chaotic analysis
- The analysis code is organized in classes which inherit from AliAnalysisTask
- The analysis is steered by an AliAnalysisManager object
- The goal is to be able to develop the analysis with a single macro and run it:
  - locally (1st phase)
  - possibly on a PROOF cluster (test)
  - on the GRID (production)
- It works with AODs as well
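A minimal C++ sketch of the task/manager split described above. It deliberately does not use ROOT or AliRoot: `AnalysisTask`, `AnalysisManager`, `RunMode` and the toy `Event` record are hypothetical stand-ins, not the real `AliAnalysisTask`/`AliAnalysisManager` API. It only illustrates the idea that user code derives from a task base class while a single steering object drives the event loop, whatever the execution back-end.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Toy event record (hypothetical; real ESD/AOD events are far richer).
struct Event {
    std::vector<double> trackPt;  // transverse momenta of the tracks
};

// Hypothetical base class: a user analysis overrides the per-event hook.
class AnalysisTask {
public:
    explicit AnalysisTask(std::string name) : name_(std::move(name)) {}
    virtual ~AnalysisTask() = default;
    virtual void Exec(const Event& ev) = 0;  // called once per event
    const std::string& Name() const { return name_; }
private:
    std::string name_;
};

// Where the same macro is meant to run; this sketch only records the choice.
enum class RunMode { Local, Proof, Grid };

// Hypothetical steering object: owns the tasks and runs the event loop.
class AnalysisManager {
public:
    void AddTask(std::unique_ptr<AnalysisTask> task) {
        assert(task != nullptr);
        tasks_.push_back(std::move(task));
    }
    // Every mode runs the loop in-process here; a real framework would
    // ship the tasks to a PROOF cluster or to Grid jobs instead.
    void StartAnalysis(RunMode mode, const std::vector<Event>& events) {
        mode_ = mode;
        for (const Event& ev : events)
            for (auto& task : tasks_) task->Exec(ev);
    }
    RunMode LastMode() const { return mode_; }
private:
    std::vector<std::unique_ptr<AnalysisTask>> tasks_;
    RunMode mode_ = RunMode::Local;
};

// Example user task: counts tracks above a pT threshold.
class HighPtCounter : public AnalysisTask {
public:
    explicit HighPtCounter(double cut) : AnalysisTask("HighPtCounter"), cut_(cut) {}
    void Exec(const Event& ev) override {
        for (double pt : ev.trackPt)
            if (pt > cut_) ++count_;
    }
    long Count() const { return count_; }
private:
    double cut_;
    long count_ = 0;
};
```

The user writes only `HighPtCounter`; moving from local tests to PROOF or Grid is meant to change the argument passed to the manager, not the task code.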

Scheduled analysis
- The first analysis step has ESDs (Event Summary Data) as input; it is a scheduled analysis procedure
- Analyses carried out on ESDs are done where the ESDs are stored: mainly at Tier-1 sites for ESDs from RAW data and at Tier-2 sites for MC ESDs
- The output can range from simple histograms to AODs (Analysis Object Data), which in turn can be used as input for subsequent analysis passes
- AODs are replicated in at least 3 Tier-2 centres (more, depending on popularity) to optimize data access and availability
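The replication rule above (at least 3 Tier-2 centres, more depending on popularity) can be illustrated with a small C++ sketch. The popularity rule (one extra replica per `accessesPerExtraReplica` recent accesses) and the “most free space first” placement are hypothetical choices made only for illustration; the talk does not say how the number of replicas or the target sites are actually chosen.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

struct Tier2Site {
    std::string name;    // hypothetical site label
    double freeSpaceTB;  // available disk space
};

// Hypothetical rule: never fewer than 3 replicas, plus one extra replica
// for every `accessesPerExtraReplica` recent accesses (popularity).
int ReplicaCount(int recentAccesses, int accessesPerExtraReplica) {
    assert(recentAccesses >= 0 && accessesPerExtraReplica > 0);
    const int minReplicas = 3;
    return minReplicas + recentAccesses / accessesPerExtraReplica;
}

// Hypothetical placement policy: pick the `n` sites with the most free space.
std::vector<std::string> ChooseSites(std::vector<Tier2Site> sites, int n) {
    std::sort(sites.begin(), sites.end(),
              [](const Tier2Site& a, const Tier2Site& b) {
                  return a.freeSpaceTB > b.freeSpaceTB;
              });
    std::vector<std::string> chosen;
    for (int i = 0; i < n && i < static_cast<int>(sites.size()); ++i)
        chosen.push_back(sites[i].name);
    return chosen;
}
```

Because all Tier-2 centres play the same role, any site with room can receive a replica; only the count and the ordering criterion would need tuning in a real policy.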

Scheduled analysis
- Analysis tasks to be included in scheduled analyses must satisfy two basic validation criteria:
  - Physics-wise: validation is done within the Physics Working Groups
  - Framework-wise: the code must run seamlessly on the GRID with a released version of AliRoot
- Different tasks are executed together, grouped in so-called analysis trains
- Scheduled analysis passes are steered by the core offline team on the sites contributing to ALICE
- Priorities are defined by the Physics Board
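The train idea, several validated tasks sharing a single pass over the data, can be sketched in plain C++. `Wagon`, `BuildTrain` and `RunTrain` are hypothetical names, and the two boolean flags merely stand for the outcome of the Physics Working Group and framework validations; this is not the AliRoot train machinery.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical description of a candidate "wagon" for an analysis train.
struct Wagon {
    std::string name;
    bool physicsValidated;             // approved within the Physics Working Group
    bool frameworkValidated;           // runs on the GRID with a released AliRoot
    std::function<void(int)> process;  // per-event work (event index here)
};

// Keep only the wagons that satisfy both validation criteria.
std::vector<Wagon> BuildTrain(const std::vector<Wagon>& candidates) {
    std::vector<Wagon> train;
    for (const Wagon& w : candidates)
        if (w.physicsValidated && w.frameworkValidated) train.push_back(w);
    return train;
}

// One pass over the data serves every wagon: each event is read once
// and handed to all tasks in the train. Returns the number of events read.
int RunTrain(const std::vector<Wagon>& train, int nEvents) {
    assert(nEvents >= 0);
    int eventsRead = 0;
    for (int ev = 0; ev < nEvents; ++ev) {
        ++eventsRead;  // stands in for the (expensive) read of one event
        for (const Wagon& w : train) w.process(ev);
    }
    return eventsRead;
}
```

The benefit of grouping is visible in `RunTrain`: the cost of reading an event is paid once, however many wagons are attached.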

Chaotic analysis
- Steered by small groups of physicists (at the limit, single physicists)
- Done on Tier-2 sites, with AODs as input
- Same framework as for scheduled analysis
  - Essentially shorter trains sent by individual users
- Tier-2 resources will be (and already are) devoted with priority to user analysis
- The management of user priorities is not an issue (a single task queue for all the jobs)
  - Priorities are managed at the level of the central services
- Results of the analysis can be accessed directly from a ROOT session running on an ALICE user’s laptop
  - Only alien-client is needed
  - Done with the TGrid class
  - Files are accessed via xrootd
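A minimal C++ sketch of a single, centrally managed task queue: every job goes into one queue, its priority is assigned centrally, and a site with a free slot simply pulls the next job. `CentralTaskQueue`, `Job` and the tie-breaking on submission order are hypothetical; the real AliEn task queue and its priority calculation are not reproduced here.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <vector>

struct Job {
    std::string user;
    int priority;   // assigned centrally; higher runs first
    long sequence;  // submission order, used to break ties (FIFO)
};

// Ordering for std::priority_queue: returns true if `a` should run after `b`.
struct JobOrder {
    bool operator()(const Job& a, const Job& b) const {
        if (a.priority != b.priority) return a.priority < b.priority;
        return a.sequence > b.sequence;  // earlier submission first
    }
};

// Hypothetical single queue shared by all jobs; sites only pull from it.
class CentralTaskQueue {
public:
    void Submit(const std::string& user, int priority) {
        queue_.push(Job{user, priority, next_++});
    }
    // A site with a free slot asks for the next job; false if none is waiting.
    bool Pull(Job& out) {
        if (queue_.empty()) return false;
        out = queue_.top();
        queue_.pop();
        return true;
    }
private:
    std::priority_queue<Job, std::vector<Job>, JobOrder> queue_;
    long next_ = 0;
};
```

Since sites never hold their own per-user queues, changing a user's priority only has to happen in one place.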

Chaotic analysis
- The analysis framework provides tools to develop an analysis macro on local resources and then use it on a PROOF cluster or on the GRID
- Local CPU resources do not mean local data: the ALICE catalogue and data can be accessed from virtually any PC
- All the AODs corresponding to a given dataset are replicated on several Tier-2 centres. There are no different roles for different Tier-2s, so data localization is in principle not relevant
  - In some cases we asked to replicate on Italian T2s data of particular interest for our community, mostly to have the opportunity to test the performance of our sites
  - In any case, Physics Working Groups are naturally spread geographically
  - We need to support ALICE users from any country participating in the experiment

Issues for INFN Tier-x sites
- Lessons from the previous talk:
  - The analysis framework is being tested by physicists in view of its use with real data
  - Results are positive: there is no urgent need (and time is running out) for new features
    - Problems like file collocation will be addressed at the level of the central catalogue
    - With recent xrootd versions, the global redirector solves this problem
  - Stability and reliability of the sites and of the local ALICE services are essential to run an analysis effectively
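The role of a global redirector, sending a client to some site that holds the requested file so that the client never needs to know where the replicas are, can be sketched as a simple lookup. `GlobalRedirector`, the site names and the file paths are hypothetical; this does not implement the xrootd protocol, it only illustrates the lookup it performs on the client's behalf.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <set>
#include <string>
#include <vector>

// Hypothetical redirector: knows which storage elements hold which files
// and points the client to one that is currently available.
class GlobalRedirector {
public:
    void Register(const std::string& file, const std::string& site) {
        replicas_[file].push_back(site);
    }
    void SetOnline(const std::string& site, bool online) {
        if (online) offline_.erase(site); else offline_.insert(site);
    }
    // Returns the first online site holding `file`, or nothing if none.
    std::optional<std::string> Locate(const std::string& file) const {
        auto it = replicas_.find(file);
        if (it == replicas_.end()) return std::nullopt;
        for (const std::string& site : it->second)
            if (offline_.count(site) == 0) return site;
        return std::nullopt;
    }
private:
    std::map<std::string, std::vector<std::string>> replicas_;
    std::set<std::string> offline_;
};
```

When one site goes down, the same request is simply answered with another replica, which is why site stability matters for performance more than for correctness.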

Issues for INFN Tier-x sites
- Lessons from the previous talk:
  - Human resources, also within the collaboration, are the main asset for a successful usage of the resources
    - As a national community we aim to form a group of collaboration members able to monitor and, to some extent, manage Tier-2 activities
    - This group should also contribute to user support
    - We had a first “hands-on” meeting last January in Torino
  - We decided to exploit the existing INFN-GRID ticketing system
    - An ALICE support unit has been created
    - A couple of people per site have been registered as supporters

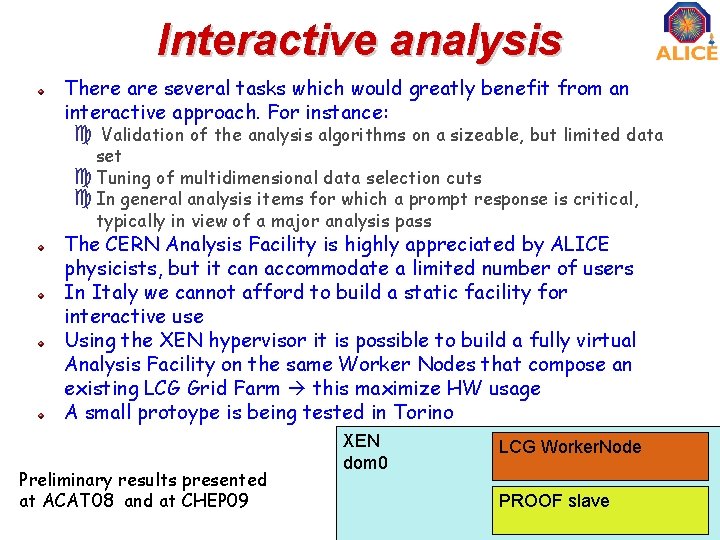

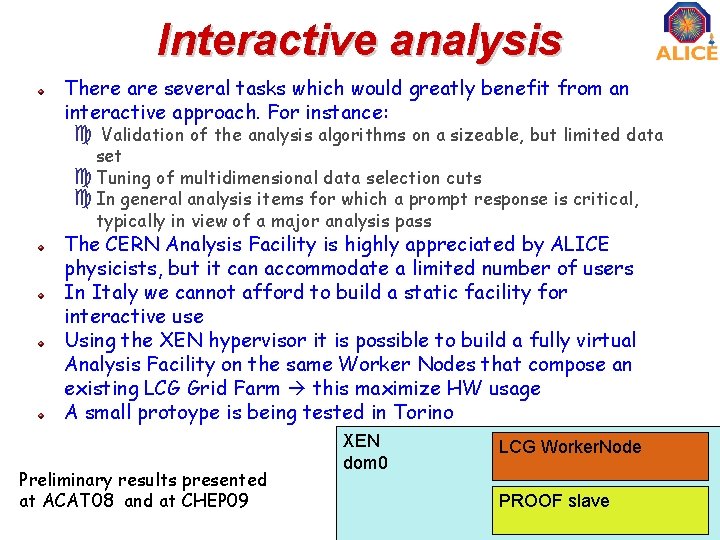

Interactive analysis
- Several tasks would greatly benefit from an interactive approach, for instance:
  - Validation of the analysis algorithms on a sizeable but limited data set
  - Tuning of multidimensional data selection cuts
  - In general, analysis items for which a prompt response is critical, typically in view of a major analysis pass
- The CERN Analysis Facility is highly appreciated by ALICE physicists, but it can accommodate only a limited number of users
- In Italy we cannot afford to build a static facility for interactive use
- Using the XEN hypervisor it is possible to build a fully virtual Analysis Facility on the same Worker Nodes that make up an existing LCG Grid farm: this maximizes hardware usage
- A small prototype is being tested in Torino
- Preliminary results presented at ACAT 08 and at CHEP 09

(Diagram: a XEN dom0 hosting an LCG Worker Node and a PROOF slave.)

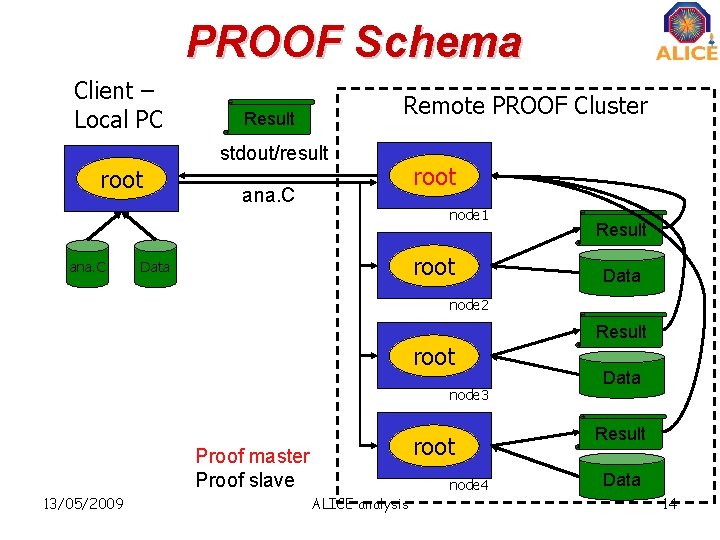

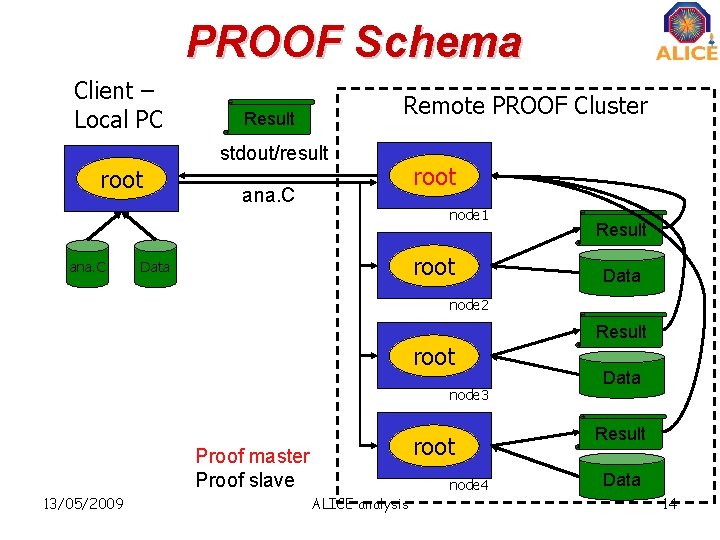

PROOF schema (diagram): the client, a local PC running root with the macro ana.C, sends ana.C to the remote PROOF cluster; the PROOF master distributes it to the PROOF slaves running root on nodes 1 to 4, each processing its local data; results and stdout are returned to the client.
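The split/process/merge pattern of the PROOF schema can be sketched in plain C++ without ROOT: the master cuts the data into one packet per worker, each worker fills a partial histogram, and the master merges the partial results bin by bin. `ProcessPacket` and `ProcessAndMerge` are hypothetical names; the packets are processed sequentially here for simplicity, and PROOF's own packetizer hands out work dynamically rather than in one fixed packet per worker.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Histogram = std::vector<long>;  // equal-width bins over [0, maxValue)

// Work done by one worker: fill a partial histogram from the entries in
// [begin, end). Values outside [0, maxValue) are dropped.
Histogram ProcessPacket(const std::vector<double>& values, std::size_t begin,
                        std::size_t end, std::size_t nBins, double maxValue) {
    Histogram h(nBins, 0);
    for (std::size_t i = begin; i < end; ++i) {
        const double v = values[i];
        if (v < 0.0 || v >= maxValue) continue;
        const std::size_t bin = static_cast<std::size_t>(v / maxValue * nBins);
        h[std::min(bin, nBins - 1)] += 1;  // guard against rounding at the edge
    }
    return h;
}

// Master-like driver: one packet per worker, then a bin-by-bin merge.
// On a real cluster each packet would be processed on a separate node.
Histogram ProcessAndMerge(const std::vector<double>& values, std::size_t nWorkers,
                          std::size_t nBins, double maxValue) {
    assert(nWorkers > 0 && nBins > 0 && maxValue > 0.0);
    const std::size_t chunk = (values.size() + nWorkers - 1) / nWorkers;
    Histogram merged(nBins, 0);
    for (std::size_t w = 0; w < nWorkers; ++w) {
        const std::size_t begin = std::min(values.size(), w * chunk);
        const std::size_t end = std::min(values.size(), begin + chunk);
        const Histogram partial = ProcessPacket(values, begin, end, nBins, maxValue);
        for (std::size_t b = 0; b < nBins; ++b) merged[b] += partial[b];
    }
    return merged;
}
```

Because histogram filling is additive, the merged result does not depend on how many workers were used, which is what makes this kind of analysis a good fit for PROOF.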

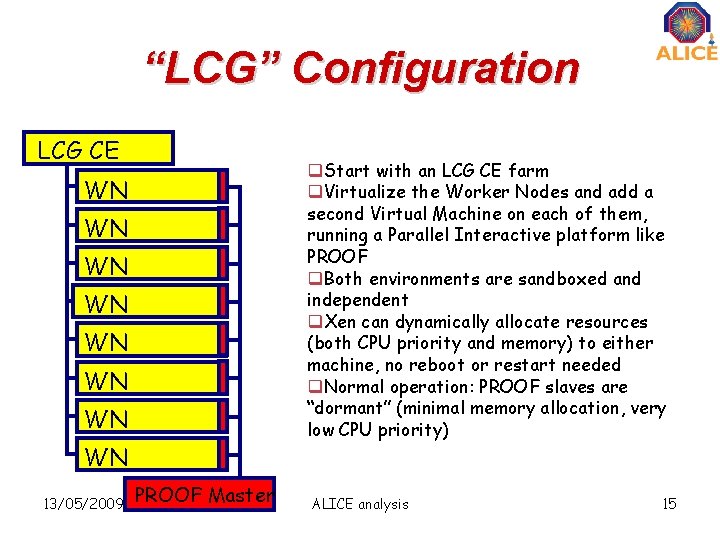

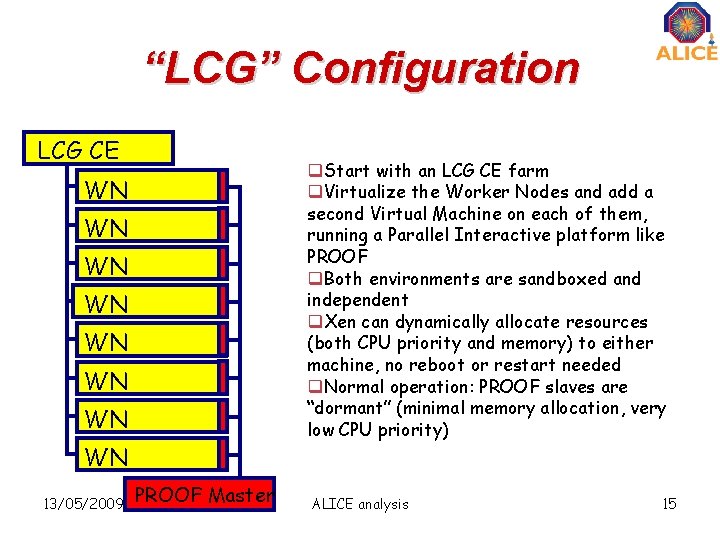

“LCG” Configuration
- Start with an LCG CE farm
- Virtualize the Worker Nodes and add a second virtual machine on each of them, running a parallel interactive platform like PROOF
- Both environments are sandboxed and independent
- Xen can dynamically allocate resources (both CPU priority and memory) to either machine; no reboot or restart is needed
- Normal operation: PROOF slaves are “dormant” (minimal memory allocation, very low CPU priority)

(Diagram: LCG CE, Worker Nodes (WN), PROOF master.)
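The per-node switch between the two virtual machines can be illustrated as a simple calculation of memory and CPU weight for each VM. The numbers (a 256 MB footprint for a dormant slave, relative CPU weights of 256 and 1) are illustrative assumptions, and `NodeSplit`/`ComputeSplit` are hypothetical names; the sketch only computes a split and does not call Xen.

```cpp
#include <cassert>

// Hypothetical per-node split between the two virtual machines.
struct NodeSplit {
    int wnMemoryMB;      // memory for the LCG Worker Node VM
    int slaveMemoryMB;   // memory for the PROOF slave VM
    int wnCpuWeight;     // relative CPU priority of the WN VM
    int slaveCpuWeight;  // relative CPU priority of the PROOF slave VM
};

enum class SlaveState { Dormant, Active };

// Illustrative numbers only: a dormant slave keeps a minimal memory
// footprint and a very low CPU weight; an active slave gets the requested
// memory and the higher CPU weight, while the WN keeps running slowly.
NodeSplit ComputeSplit(int totalMemoryMB, SlaveState state, int activeSlaveMemoryMB) {
    const int dormantMemoryMB = 256;  // assumed minimal footprint
    assert(totalMemoryMB > dormantMemoryMB);
    if (state == SlaveState::Dormant)
        return {totalMemoryMB - dormantMemoryMB, dormantMemoryMB, 256, 1};
    assert(activeSlaveMemoryMB > dormantMemoryMB &&
           activeSlaveMemoryMB < totalMemoryMB);
    return {totalMemoryMB - activeSlaveMemoryMB, activeSlaveMemoryMB, 1, 256};
}
```

Keeping the WN weight above zero in the active state reflects the design goal that batch jobs slow down rather than stop.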

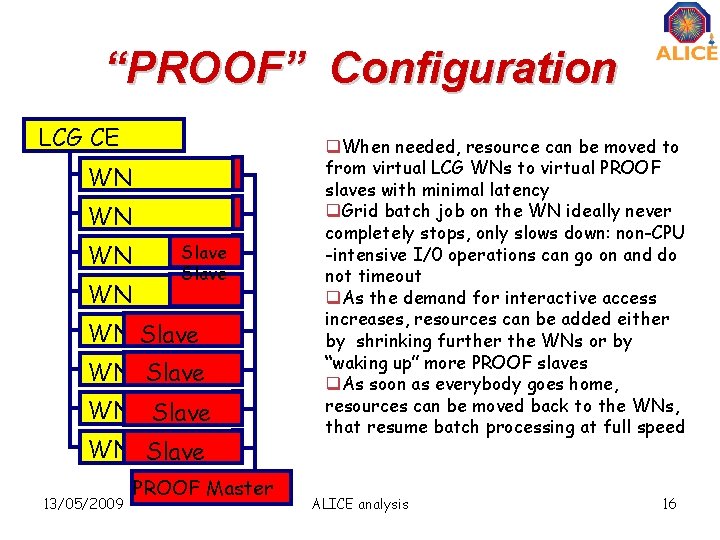

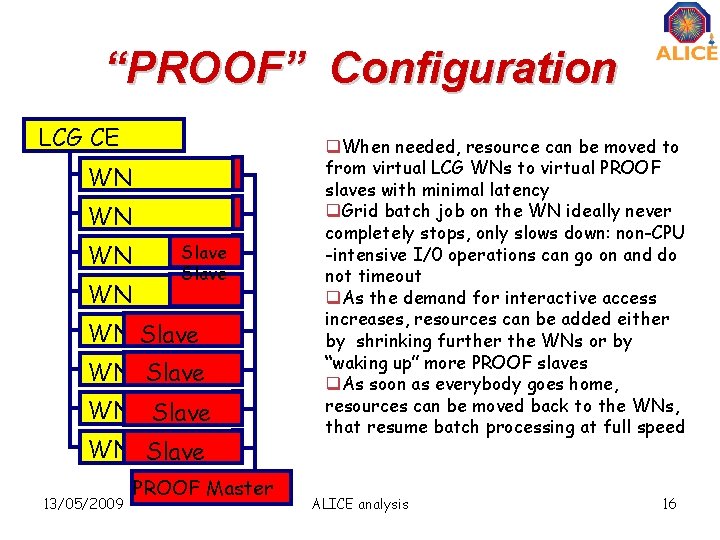

“PROOF” Configuration
- When needed, resources can be moved from the virtual LCG WNs to the virtual PROOF slaves with minimal latency
- The Grid batch job on the WN ideally never stops completely, it only slows down: non-CPU-intensive I/O operations can go on and do not time out
- As the demand for interactive access increases, resources can be added either by shrinking the WNs further or by “waking up” more PROOF slaves
- As soon as everybody goes home, resources can be moved back to the WNs, which resume batch processing at full speed

(Diagram: LCG CE, WNs with PROOF slaves, PROOF master.)
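The demand-driven behaviour described above can be reduced to a small function: wake up just enough PROOF slaves to host the requested workers, and put all of them back to sleep when demand drops to zero. `PlanCluster`, `ClusterPlan` and the fixed `workersPerSlave` capacity are hypothetical; automatic allocation and the optimal policy are still open items in this talk, so this is only one possible rule.

```cpp
#include <algorithm>
#include <cassert>

struct ClusterPlan {
    int awakeSlaves;    // nodes whose PROOF slave VM is woken up
    int dormantSlaves;  // nodes left (almost) entirely to the Grid WN
};

// Hypothetical policy: each awake slave can host up to `workersPerSlave`
// PROOF workers; wake the smallest number of slaves that covers the
// request. Zero demand ("everybody goes home") puts every slave back to
// sleep, so the WNs resume batch processing at full speed.
ClusterPlan PlanCluster(int nNodes, int requestedWorkers, int workersPerSlave) {
    assert(nNodes >= 0 && workersPerSlave > 0);
    const int demand = std::max(requestedWorkers, 0);
    const int needed = (demand + workersPerSlave - 1) / workersPerSlave;  // ceiling
    const int awake = std::min(needed, nNodes);
    return {awake, nNodes - awake};
}
```

Waking slaves on only some nodes leaves the remaining WNs untouched; the alternative knob, shrinking already-awake WNs further, is not modelled here.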

The prototype
- Hardware
  - 4 x HP ProLiant DL360, dual quad-core, plus one head node for access, management and monitoring
  - A separate physical 146 GB SAS disk for each virtual machine, for performance isolation
  - Private network with NAT to the outside world (currently including storage)
- Software
  - Linux CentOS 5.1 with kernel 2.6.18-53 on dom0
  - Xen 3.0
  - gLite 3.1 Worker Node suite
  - PROOF/Scalla 20090217-0500
  - Custom monitoring and management tools

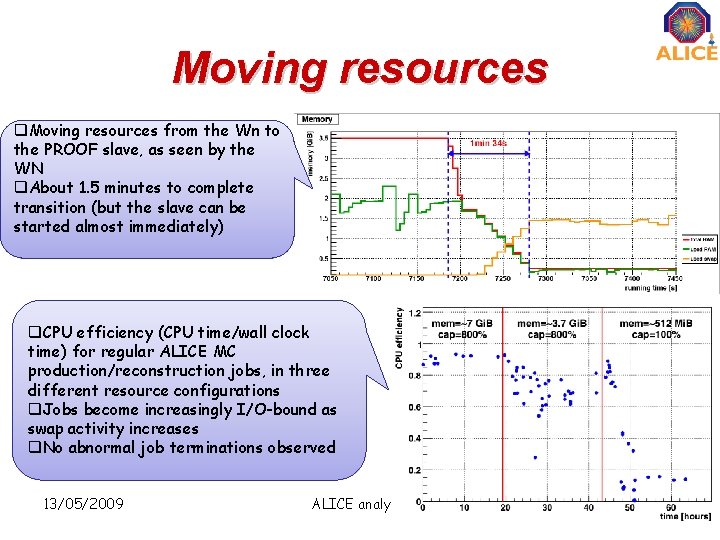

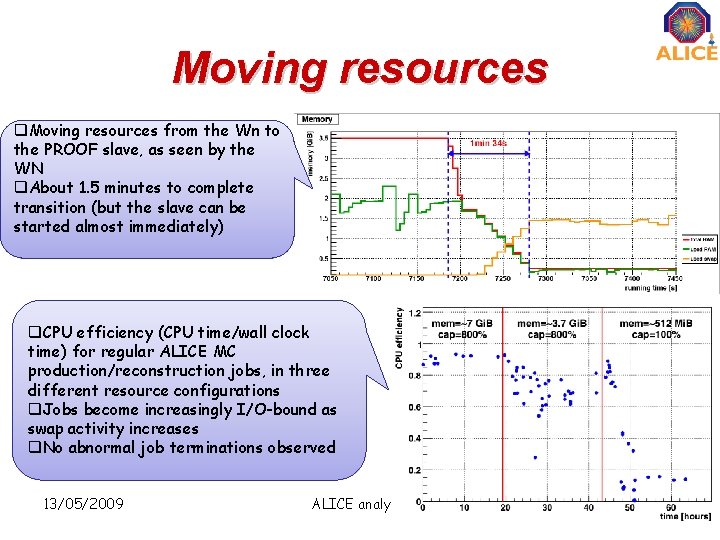

Moving resources
- Moving resources from the WN to the PROOF slave, as seen by the WN
- About 1.5 minutes to complete the transition (but the slave can be started almost immediately)
- CPU efficiency (CPU time / wall-clock time) for regular ALICE MC production/reconstruction jobs, in three different resource configurations
- Jobs become increasingly I/O-bound as swap activity increases
- No abnormal job terminations observed
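The efficiency figure used above is CPU time divided by wall-clock time. A minimal C++ helper, plus a pooled variant over several jobs, looks like this; `JobTimes`, `JobEfficiency` and `PooledEfficiency` are hypothetical names, and pooling by summing times is one reasonable choice, not necessarily how the plotted values were computed.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

struct JobTimes {
    double cpuSeconds;   // CPU time consumed by the job
    double wallSeconds;  // wall-clock time from start to end
};

// CPU efficiency = CPU time / wall-clock time. A value well below 1 means
// the job spent much of its time waiting (e.g. on I/O or swap).
double JobEfficiency(const JobTimes& job) {
    if (job.wallSeconds <= 0.0)
        throw std::invalid_argument("wall-clock time must be positive");
    return job.cpuSeconds / job.wallSeconds;
}

// Efficiency of a set of jobs: total CPU time over total wall-clock time,
// so long jobs weigh more than short ones. Throws for an empty set.
double PooledEfficiency(const std::vector<JobTimes>& jobs) {
    JobTimes total{0.0, 0.0};
    for (const JobTimes& j : jobs) {
        total.cpuSeconds += j.cpuSeconds;
        total.wallSeconds += j.wallSeconds;
    }
    return JobEfficiency(total);
}
```

For instance, a job using 450 s of CPU in 600 s of wall-clock time has an efficiency of 0.75; the missing quarter is time spent waiting, which grows as swapping increases.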

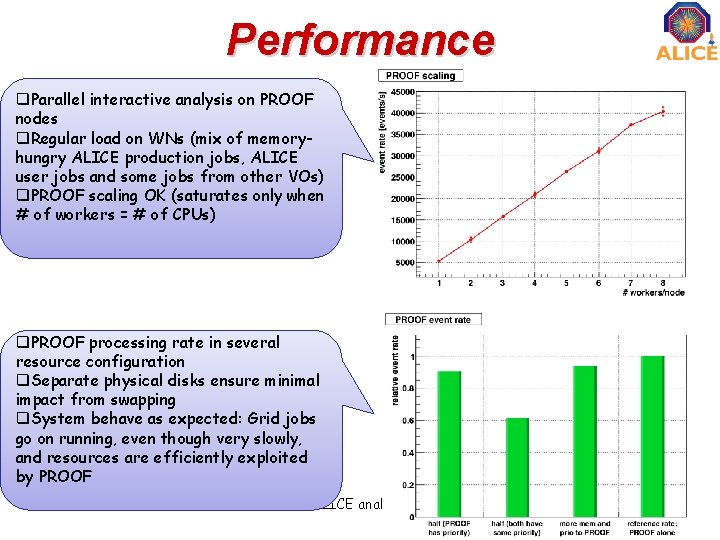

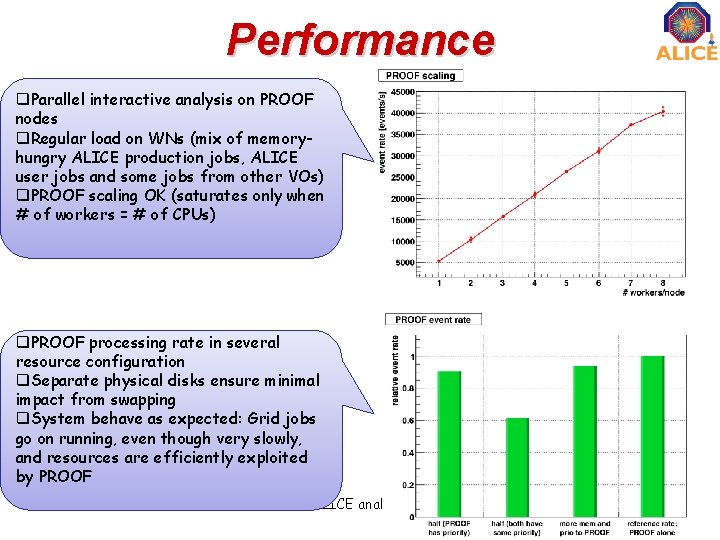

Performance
- Parallel interactive analysis on PROOF nodes
- Regular load on the WNs (a mix of memory-hungry ALICE production jobs, ALICE user jobs and some jobs from other VOs)
- PROOF scaling is good: it saturates only when the number of workers equals the number of CPUs
- PROOF processing rate in several resource configurations
- Separate physical disks ensure minimal impact from swapping
- The system behaves as expected: Grid jobs keep running, albeit very slowly, and resources are efficiently exploited by PROOF
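The scaling statement can be written as an idealized model: the aggregate rate grows linearly with the number of PROOF workers up to the number of available CPUs, then stays flat. `ExpectedRate` and the constant per-worker rate are simplifying assumptions, not a fit to the measured data.

```cpp
#include <algorithm>
#include <cassert>

// Idealized model: linear scaling until every CPU available to the PROOF
// slaves is busy; extra workers beyond that add no throughput.
double ExpectedRate(int workers, int cpus, double ratePerWorker) {
    assert(workers >= 0 && cpus > 0 && ratePerWorker >= 0.0);
    return ratePerWorker * std::min(workers, cpus);
}
```

Real rates also depend on I/O and on the load left on the WNs, so measured curves bend earlier than this model.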

To-do list
- Develop an automatic system for resource allocation
- Investigate the optimal resource allocation policy
- Test direct access to the local SE
  - Local network optimization
- Accounting:
  - Interface with the standard accounting system

Conclusions
- INFN sites will participate in the ALICE analysis effort, according to the computing model
- The analysis framework is being used by real users: it has the functionality to deal with real LHC data
- Issues:
  - The storage solution must be stable and efficient. See S. Bagnasco’s talk for a discussion of the existing solutions suitable for ALICE (data accessed with xrootd)
  - Human resources: a growing involvement of ALICE members in production and user support activities is needed to reach an adequate service level
- We are planning to dynamically devote a limited amount of T2 resources to PROOF

We are equipped for the analysis
