A Study of Supervised Spam Detection Applied to

- Slides: 34

A Study of Supervised Spam Detection Applied to Eight Months of Personal E-Mail, by Gordon Cormack and Thomas Lynam. Presented by Hui Fang 1

Feel free to interrupt whenever you have a question or comment! 2

Detour: Some background about Email Spam Some slides are adapted from the Tutorial on Junk Mail Filtering by Geoff Hulten and Joshua Goodman. 3

What is Spam? • Typical legal definition – Unsolicited commercial email from someone without a pre-existing business relationship • Definition mostly used – Whatever the users think 4

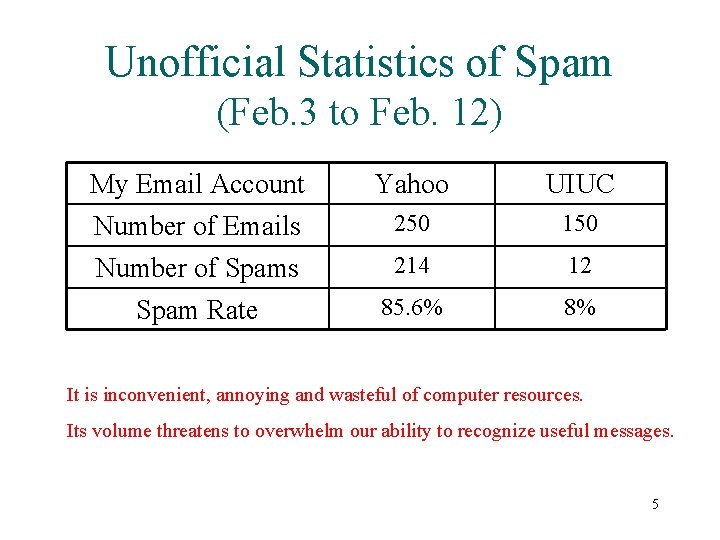

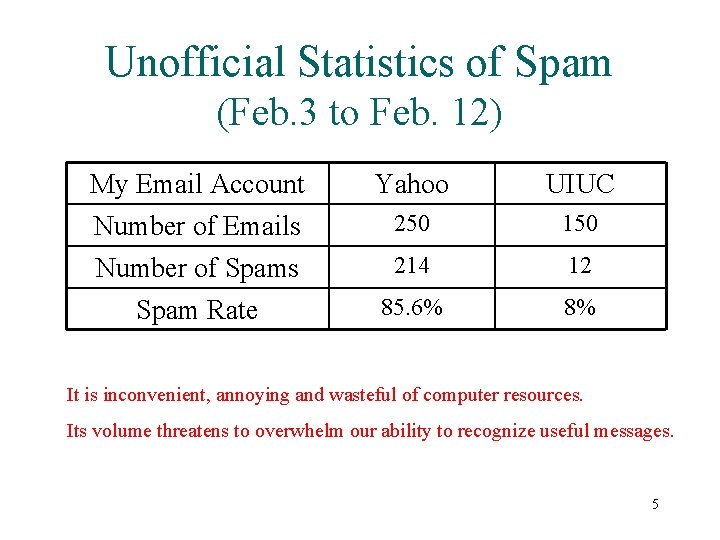

Unofficial Statistics of Spam (Feb. 3 to Feb. 12)

| My Email Account | Number of Emails | Number of Spams | Spam Rate |
| --- | --- | --- | --- |
| Yahoo | 250 | 214 | 85.6% |
| UIUC | 150 | 12 | 8% |

It is inconvenient, annoying and wasteful of computer resources. Its volume threatens to overwhelm our ability to recognize useful messages. 5

Spam Detection Ham Spam Is this just text categorization? What are the special challenges? 6

Text classification alone is not enough • Spammers now often try to obscure text. • Special features are necessary. – E.g. subject line vs. body text – E.g. mail in the middle of the night is more likely to be spam than mail in the middle of the day. • … 7

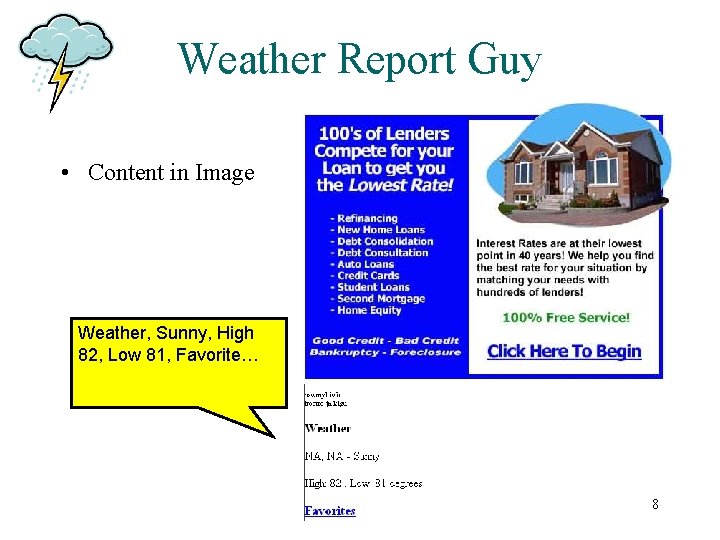

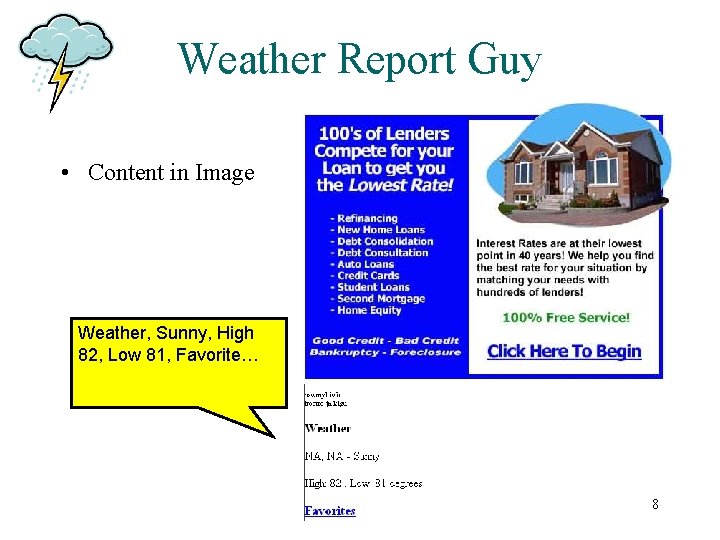

Weather Report Guy • Content in Image Weather, Sunny, High 82, Low 81, Favorite… 8

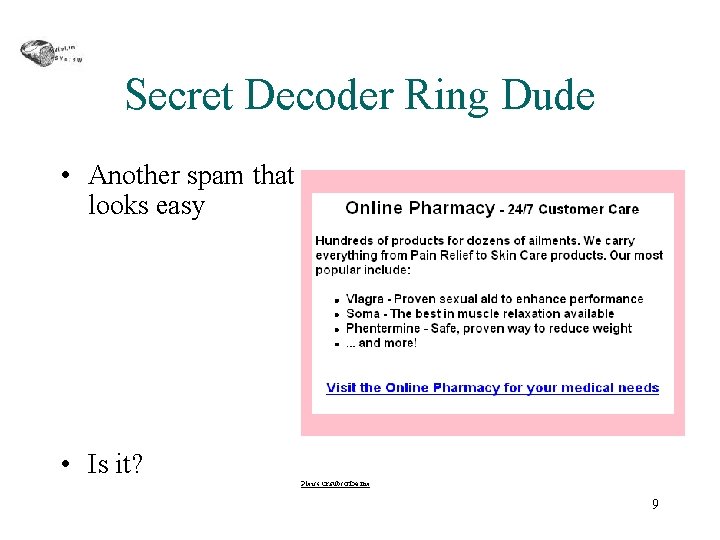

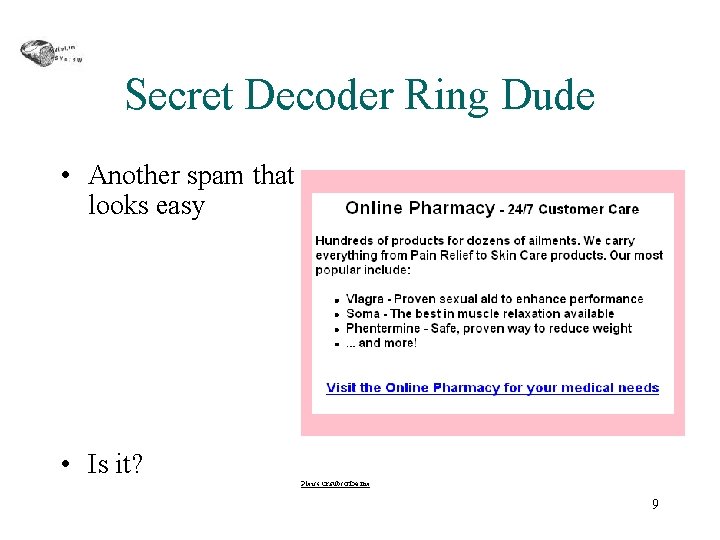

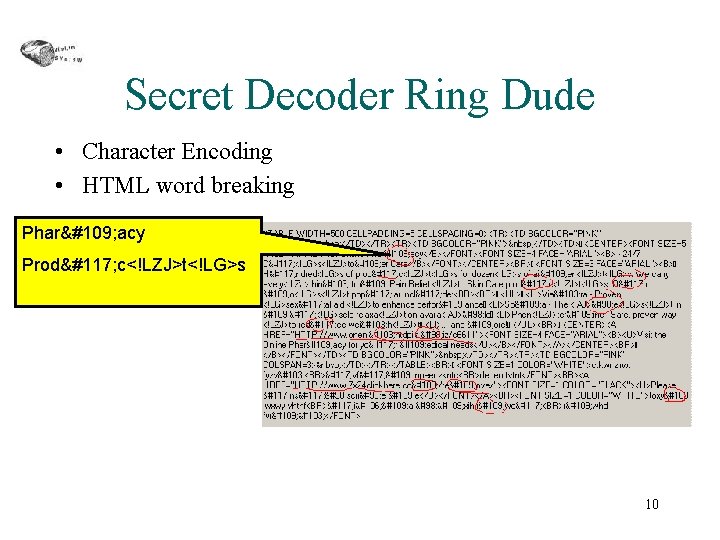

Secret Decoder Ring Dude • Another spam that looks easy • Is it? 9

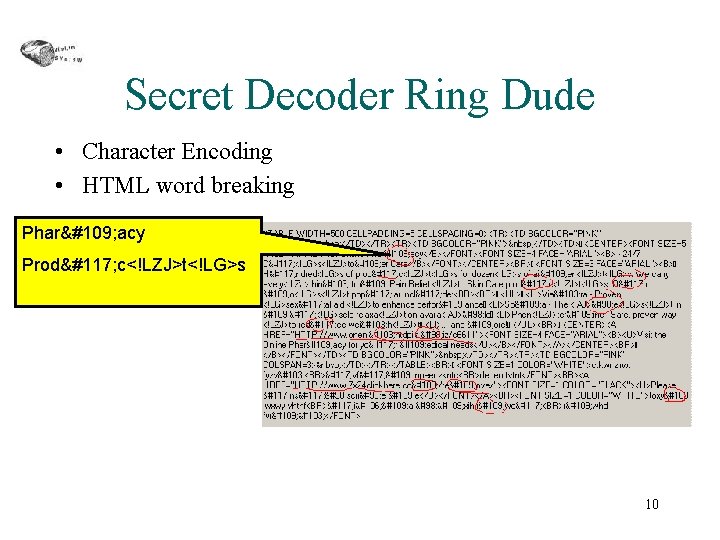

Secret Decoder Ring Dude • Character Encoding • HTML word breaking Pharm acy Produ c<!LZJ>t<!LG>s 10
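The HTML word breaking trick on this slide can be undone before the text reaches a classifier. The following is a minimal sketch, assuming the bogus markers always have the `<...>` shape shown above; the function name `strip_markup` is hypothetical, and a real filter would more likely use a proper HTML parser.

```python
import re

# Hypothetical helper: drop anything shaped like an HTML tag or
# comment-like marker so that a broken-up word is rejoined.
def strip_markup(text: str) -> str:
    return re.sub(r"<[^>]*>", "", text)

# Fragment taken from the slide: the markers split "cts" into pieces.
rejoined = strip_markup("c<!LZJ>t<!LG>s")
print(rejoined)
```

A word-based classifier that sees the raw HTML would never encounter the token a human reader sees, which is one reason plain text categorization is not enough here.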

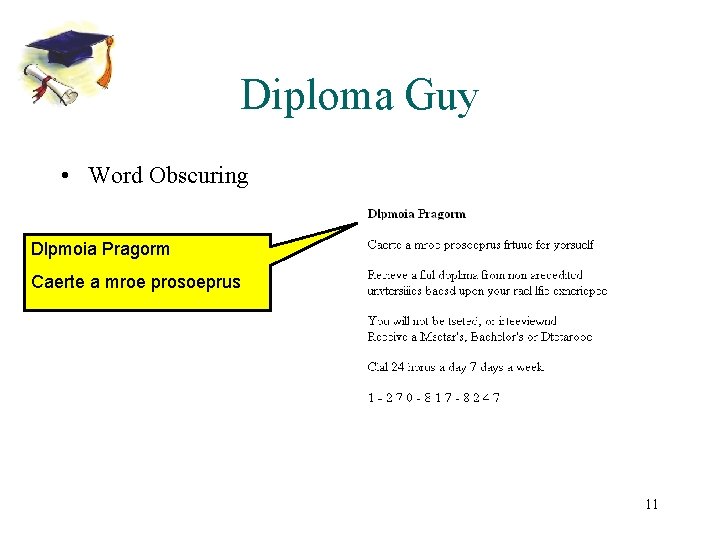

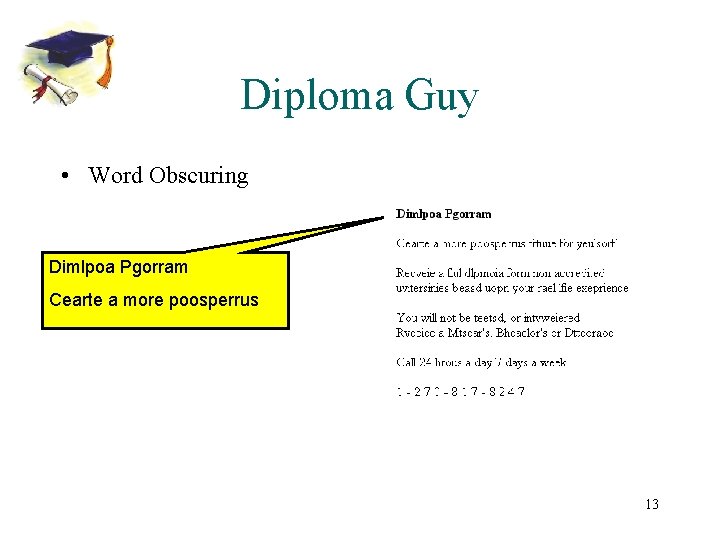

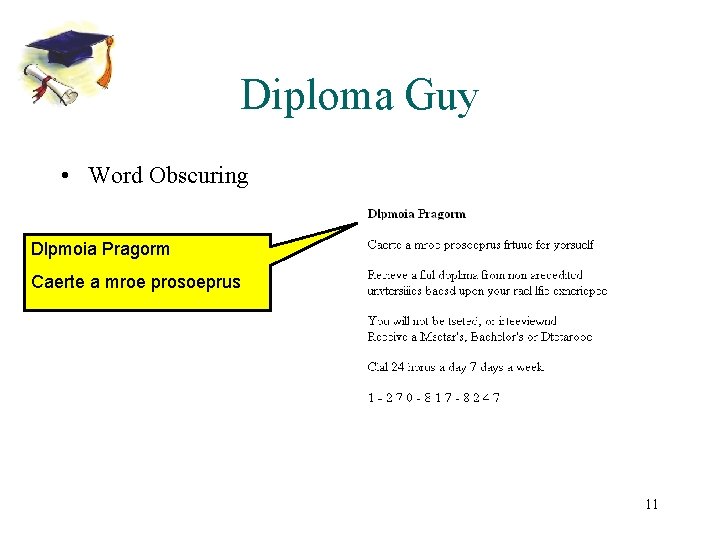

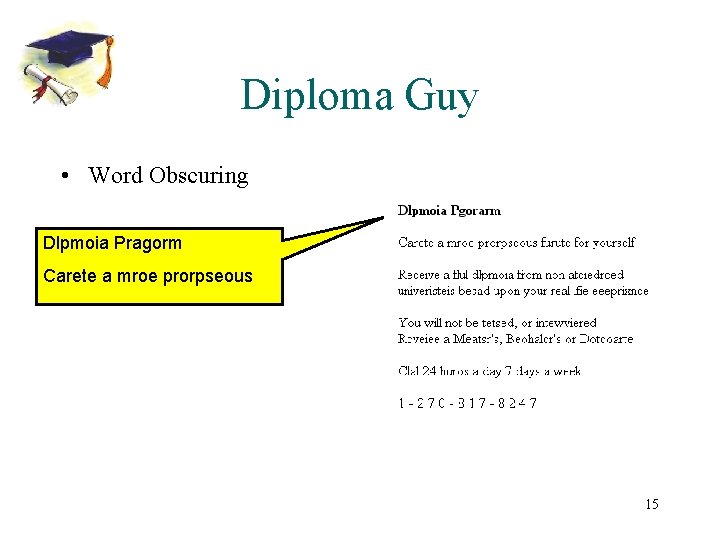

Diploma Guy • Word Obscuring Dlpmoia Pragorm Caerte a mroe prosoeprus 11

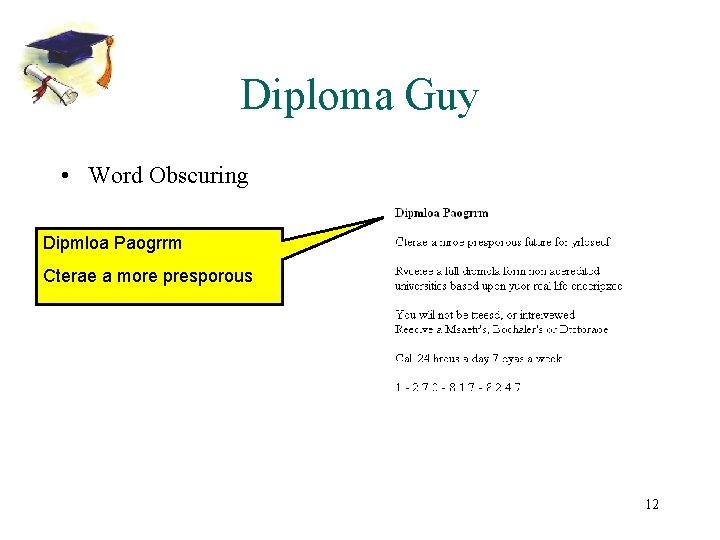

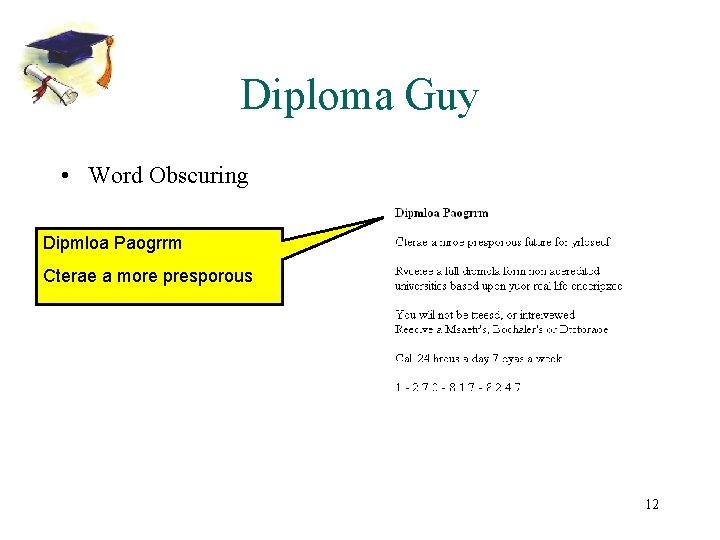

Diploma Guy • Word Obscuring Dipmloa Paogrrm Cterae a more presporous 12

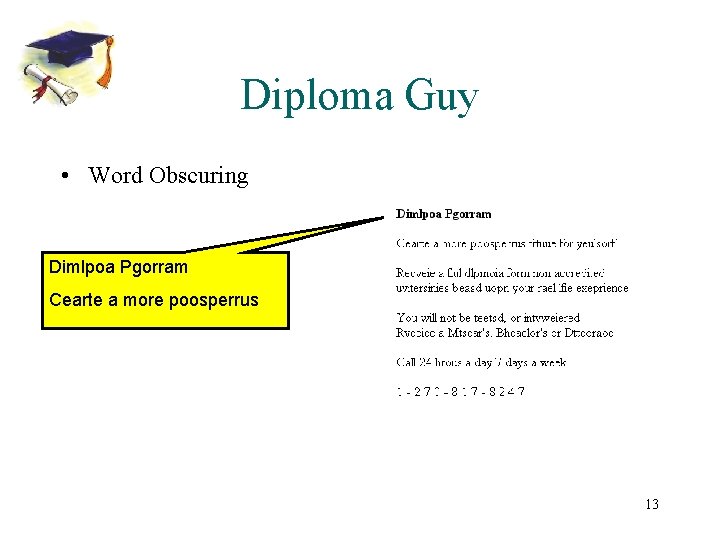

Diploma Guy • Word Obscuring Dimlpoa Pgorram Cearte a more poosperrus 13

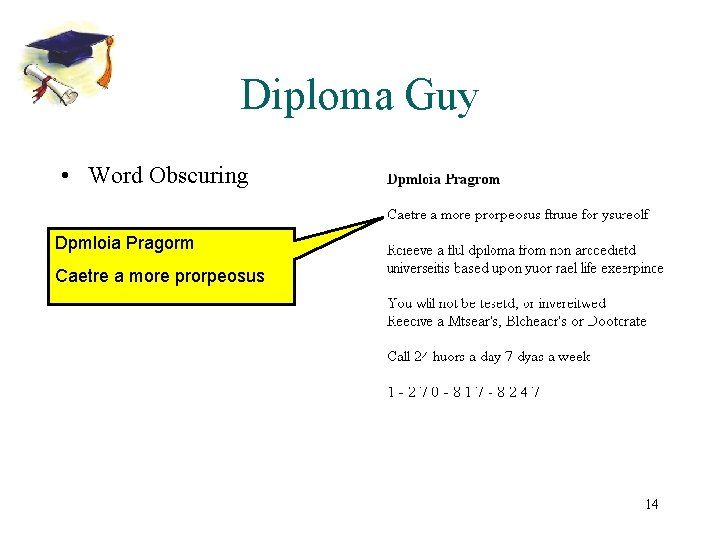

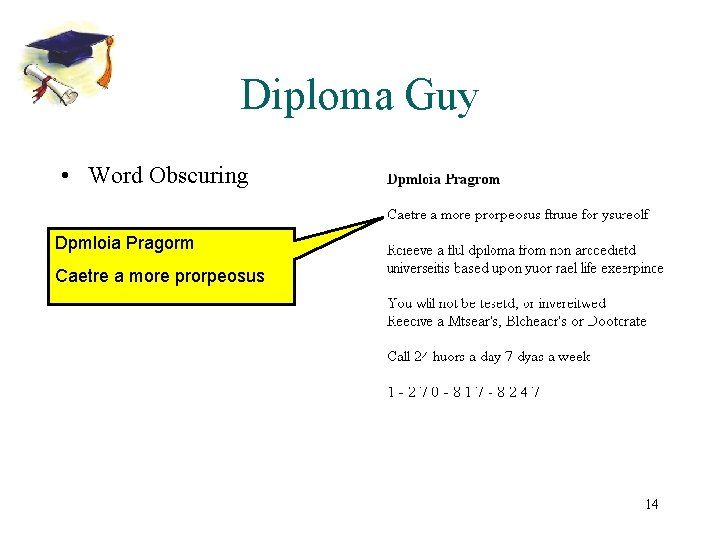

Diploma Guy • Word Obscuring Dpmloia Pragorm Caetre a more prorpeosus 14

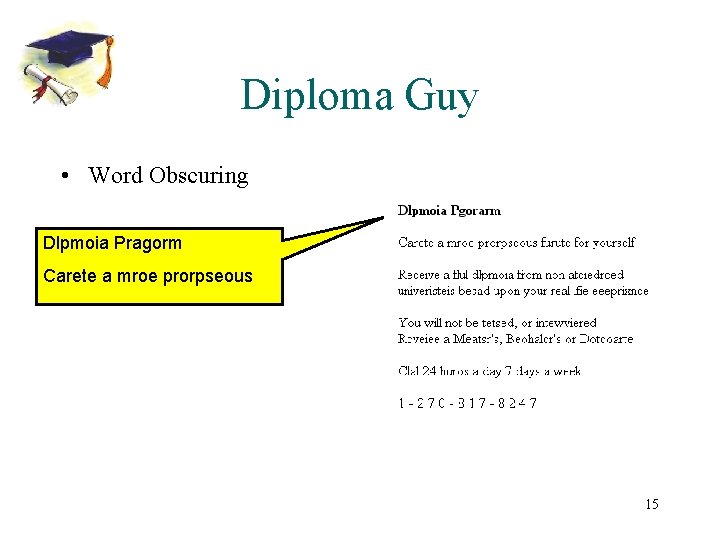

Diploma Guy • Word Obscuring Dlpmoia Pragorm Carete a mroe prorpseous 15

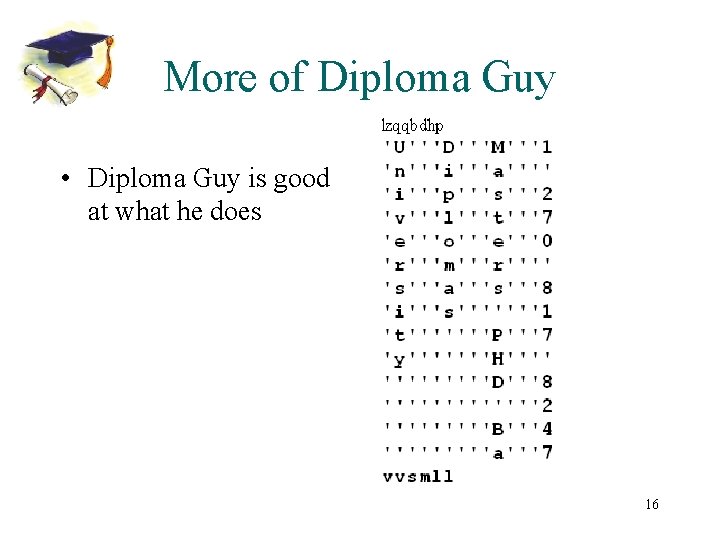

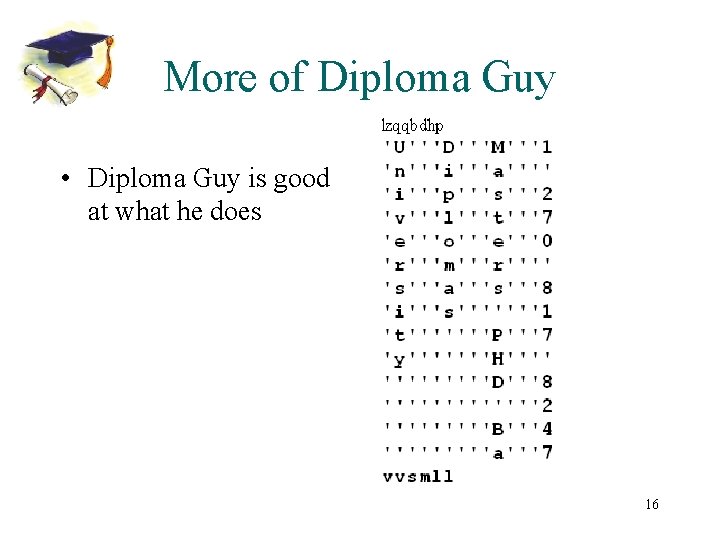

More of Diploma Guy • Diploma Guy is good at what he does 16

One Solution to Spam Detection • Machine Learning – Learn spam versus good 17

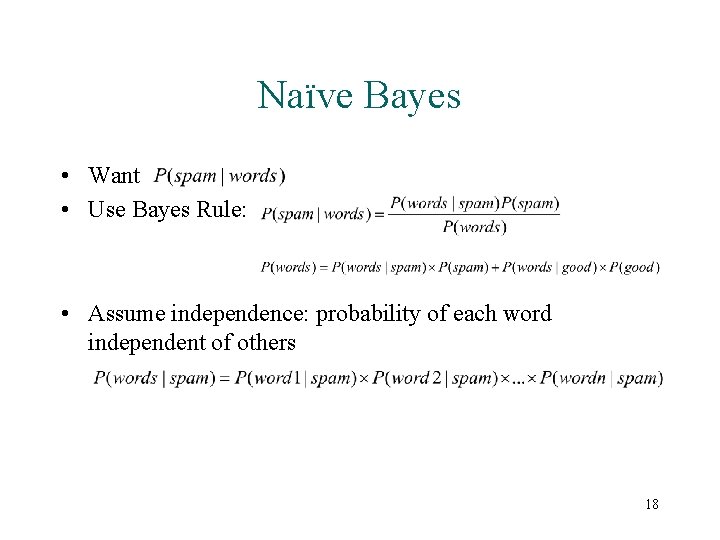

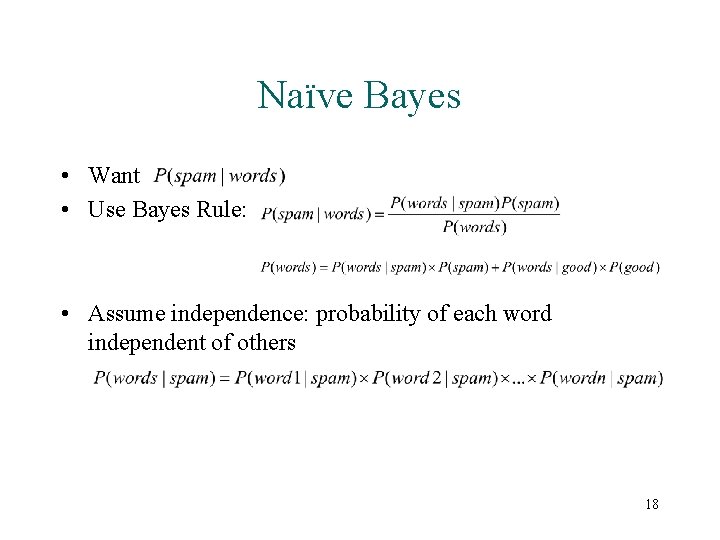

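Naïve Bayes • Want $P(\text{spam} \mid \text{words})$ • Use Bayes Rule: $P(\text{spam} \mid \text{words}) = \dfrac{P(\text{words} \mid \text{spam})\, P(\text{spam})}{P(\text{words})}$ • Assume independence: probability of each word independent of others, so $P(\text{words} \mid \text{spam}) = \prod_i P(\text{word}_i \mid \text{spam})$ 18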
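The Naïve Bayes recipe above can be sketched in a few lines of Python. This is an illustrative sketch only, not the implementation used by any of the filters discussed later: the toy messages are made up, add-one smoothing is an assumption added to avoid zero probabilities, and the function names `train`, `log_posterior` and `classify` are hypothetical.

```python
import math
from collections import Counter

def train(messages):
    """messages: list of (words, label) pairs, label is "spam" or "ham"."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for words, label in messages:
        class_counts[label] += 1
        word_counts[label].update(words)
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocab

def log_posterior(words, label, model):
    """Unnormalized log P(label | words): log prior plus per-word log likelihoods."""
    word_counts, class_counts, vocab = model
    total_msgs = sum(class_counts.values())
    total_words = sum(word_counts[label].values())
    score = math.log(class_counts[label] / total_msgs)
    for w in words:
        # Independence assumption: each word contributes its own factor.
        # Add-one smoothing (an assumption of this sketch) avoids log(0).
        score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
    return score

def classify(words, model):
    # P(words) is the same for both classes, so it can be ignored.
    return max(("spam", "ham"), key=lambda label: log_posterior(words, label, model))

# Made-up toy training data, for illustration only.
toy = [
    (["free", "money", "now"], "spam"),
    (["only", "free", "offer"], "spam"),
    (["meeting", "at", "noon"], "ham"),
    (["lunch", "meeting", "tomorrow"], "ham"),
]
model = train(toy)
print(classify(["free", "offer"], model))
print(classify(["meeting", "lunch"], model))
```

Working in log space turns the product of word probabilities into a sum, which avoids numerical underflow on long messages.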

A Bayesian Approach to Filtering Junk E-Mail 1998 - Sahami, Dumais, Heckerman, Horvitz • One of the first papers on using machine learning to combat spam • Used Naïve Bayes • Feature Space: Words, Phrases, Domain-Specific Features • Evaluation Data: ~1700 Messages, ~88% Spam, from volunteer’s private e-mail 19

A Bayesian Approach to Filtering Junk E-Mail 1998 - Sahami, Dumais, Heckerman, Horvitz • Hand Crafted Features – 35 Phrases • ‘Free Money’ • ‘Only $’ • ‘be over 21’ – 20 Domain Specific Features • Domain type of sender (.edu, .com, etc.) • Sender name resolutions (internal mail) • Has attachments • Time received • Percent of non-alphanumeric characters in subject • Best collection of heuristics discussed in literature – Without them: Spam precision 97.1%, Spam recall 94.3% – With them: Spam precision 100%, Spam recall 98.3% 20

A Plan for Spam 2002 – P. Graham • Widely cited in the open source community • Uses a heavily tuned version of Naïve Bayes • Feature Space: Words in header and body • Feature Selection: ~23,000 features – all that appeared more than 5 times • Evaluation Data: ~8000 messages from author; ~50% spam • Results: Spam precision 100%, Spam recall 99.5% 21

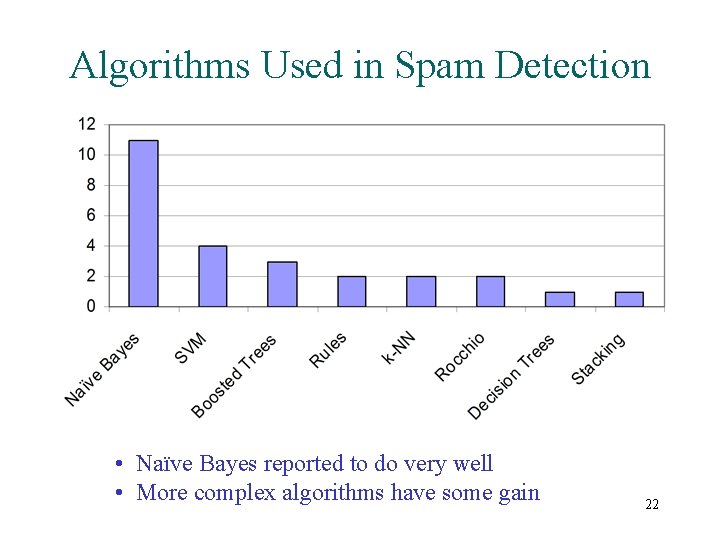

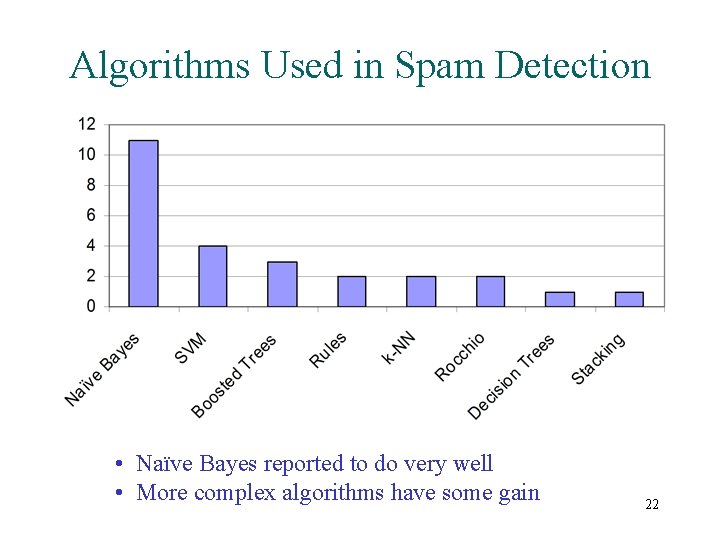

Algorithms Used in Spam Detection • Naïve Bayes reported to do very well • More complex algorithms have some gain 22

Which Algorithm is Best? • Very difficult to tell – No consistently-used good data set – No standard evaluation measures Focus of the paper 23

End of Detour 24

Overview of the Paper A Study of Supervised Spam Detection Applied to Eight Months of Personal E-Mail • Present several evaluation measures for spam detection • Compare methods in six open-source spam filters • Analyze the experimental results 25

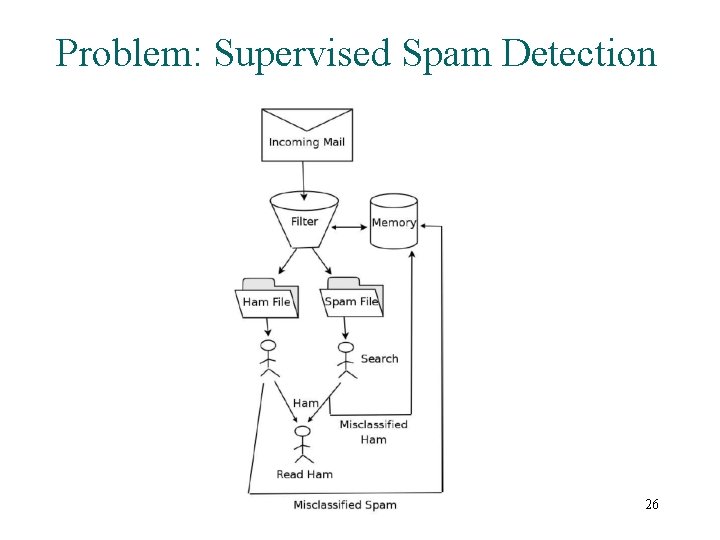

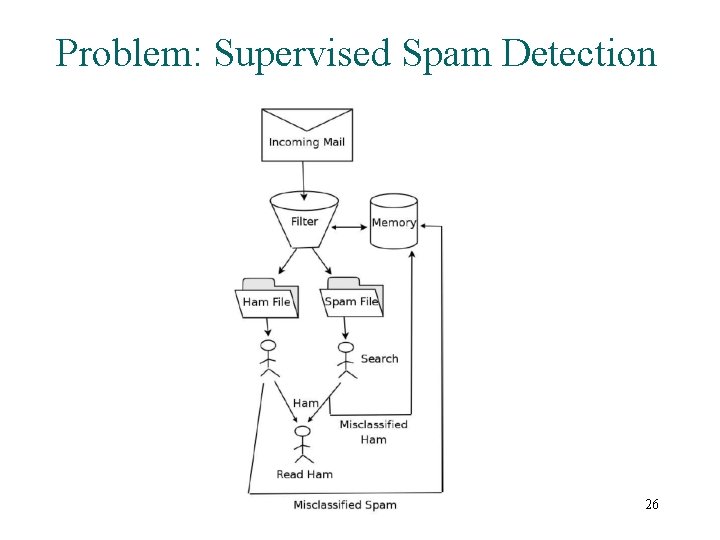

Problem: Supervised Spam Detection 26

Methods • Methods in six open-source spam filters – Spamassassin – Bogofilter – CRM-114 – DSPAM – SpamBayes – Spamprobe 27

Data • Eight months of one person’s e-mail – From Aug. 2003 to March 2004 • Stored in the order received • 49,086 messages with judgements – 9,038 (18.4%) ham – 40,048 (81.6%) spam 28

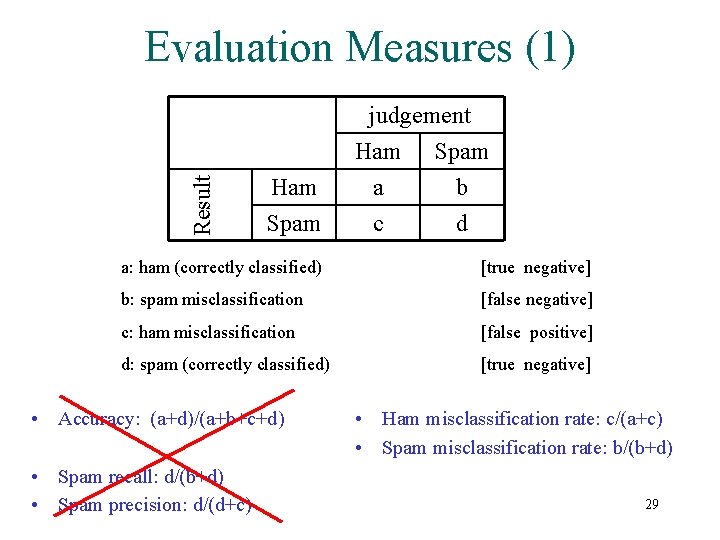

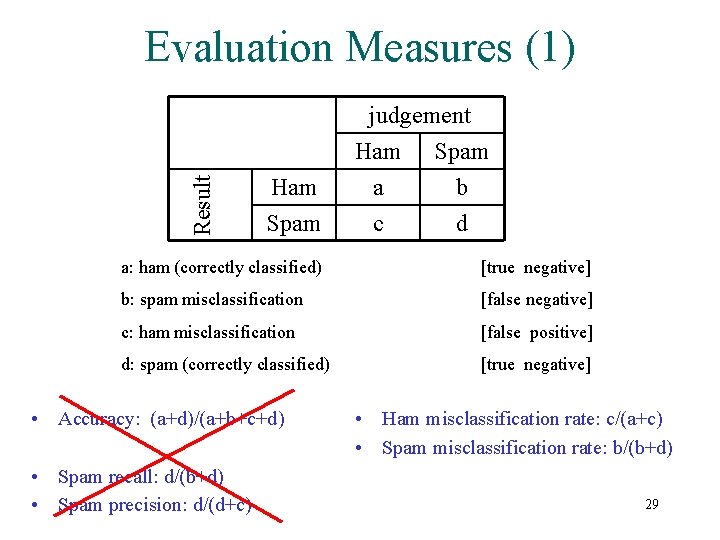

Evaluation Measures (1)

| Filter result \ Judgement | Ham | Spam |
| --- | --- | --- |
| Ham | a | b |
| Spam | c | d |

a: ham (correctly classified) [true negative] • b: spam misclassification [false negative] • c: ham misclassification [false positive] • d: spam (correctly classified) [true positive] • Accuracy: (a+d)/(a+b+c+d) • Spam recall: d/(b+d) • Spam precision: d/(d+c) • Ham misclassification rate: c/(a+c) • Spam misclassification rate: b/(b+d) 29
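The measures on this slide follow directly from the four counts. A small sketch, using made-up counts (not results from the paper) and a hypothetical function name `spam_metrics`:

```python
def spam_metrics(a, b, c, d):
    """a: ham kept as ham, b: spam let through as ham,
    c: ham flagged as spam, d: spam flagged as spam."""
    total = a + b + c + d
    return {
        "accuracy": (a + d) / total,
        "spam_recall": d / (b + d),
        "spam_precision": d / (c + d),
        "ham_misclassification_rate": c / (a + c),
        "spam_misclassification_rate": b / (b + d),
    }

# Made-up counts for illustration only.
m = spam_metrics(a=90, b=20, c=10, d=380)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

Note that the spam misclassification rate is simply one minus spam recall, since both are computed over the same b + d spam messages.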

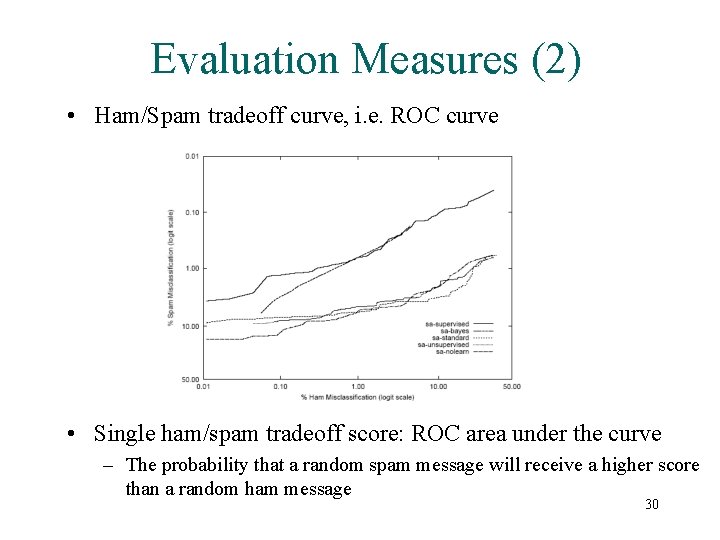

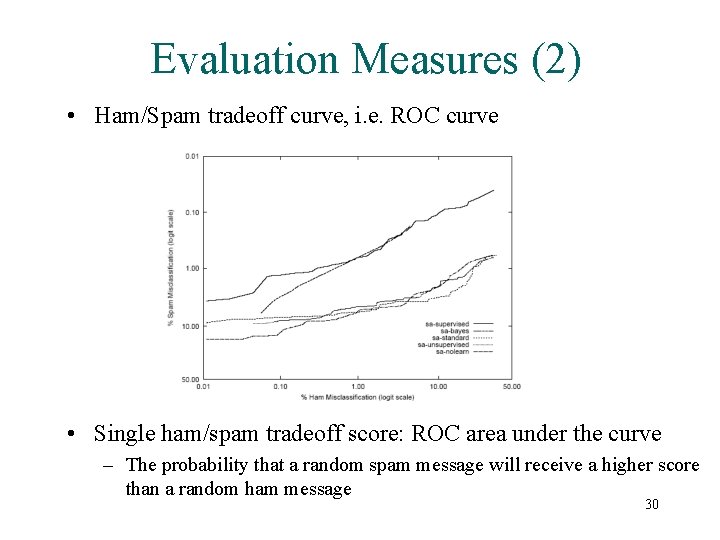

Evaluation Measures (2) • Ham/Spam tradeoff curve, i.e. ROC curve • Single ham/spam tradeoff score: ROC area under the curve – The probability that a random spam message will receive a higher score than a random ham message 30
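The probabilistic reading of the ROC area can be computed directly by comparing every spam score with every ham score. This is a sketch with made-up scores; the function name `roc_auc` is hypothetical, and counting ties as half a win is a common convention assumed here rather than something stated on the slide.

```python
from itertools import product

def roc_auc(spam_scores, ham_scores):
    """Fraction of (spam, ham) pairs in which the spam message scores higher."""
    wins = 0.0
    for s, h in product(spam_scores, ham_scores):
        if s > h:
            wins += 1.0
        elif s == h:
            wins += 0.5  # assumed tie-handling convention
    return wins / (len(spam_scores) * len(ham_scores))

# Made-up filter scores (higher means "more spam-like").
spam_scores = [0.9, 0.8, 0.4]
ham_scores = [0.1, 0.3, 0.5]
auc = roc_auc(spam_scores, ham_scores)
print(auc)
```

The pairwise loop is quadratic in the number of messages; for a corpus of tens of thousands of messages a rank-based computation would be the usual choice.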

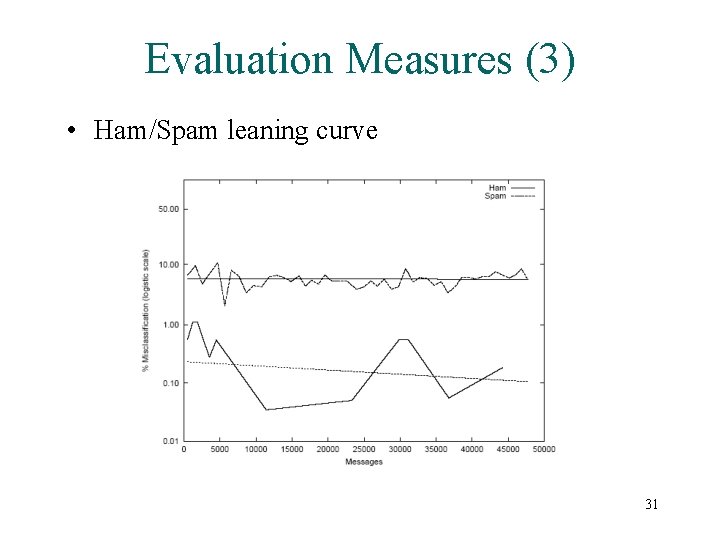

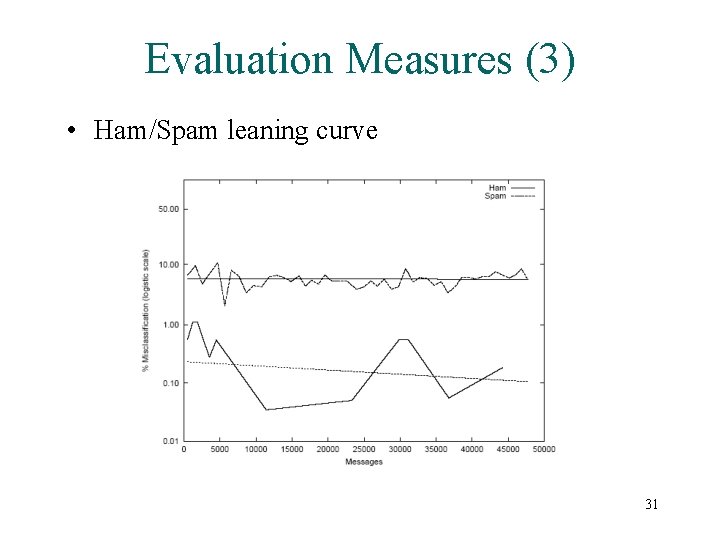

Evaluation Measures (3) • Ham/Spam learning curve 31

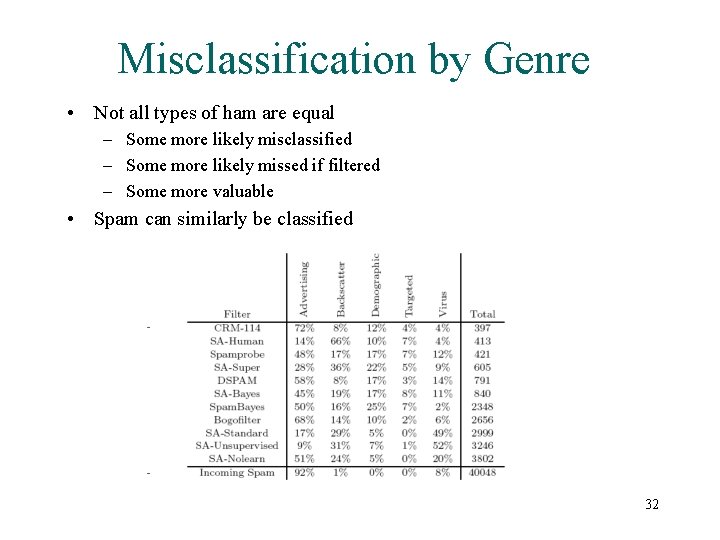

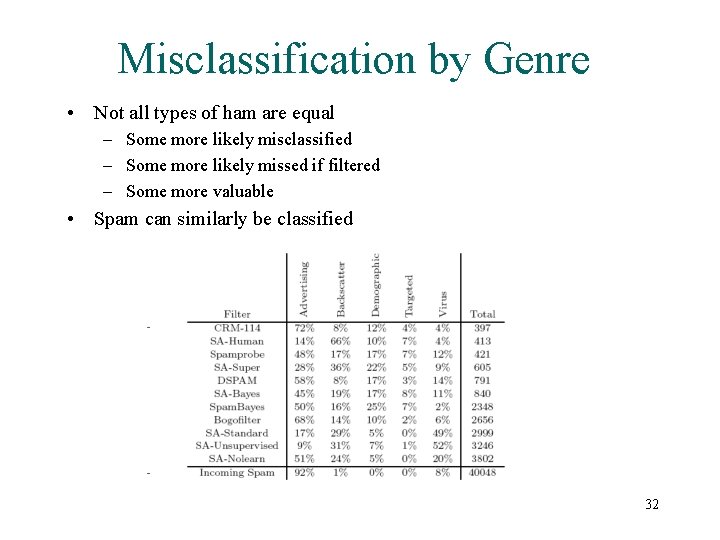

Misclassification by Genre • Not all types of ham are equal – Some more likely misclassified – Some more likely missed if filtered – Some more valuable • Spam can similarly be classified 32

Conclusion • Presents several possible evaluation measures for spam detection • Compares several spam detection methods • Provides analysis of the experimental results • However, it would be more interesting to compare the performance of different algorithms (e.g. NB vs. SVM). 33

The End • Thank you! 34