A Generalized Processor Sharing Approach to Flow Control

- Slides: 28

A Generalized Processor Sharing Approach to Flow Control in Integrated Services Networks: The Single-Node Case. Abhay K. Parekh and Robert G. Gallager, Laboratory for Information and Decision Systems, Massachusetts Institute of Technology. IEEE INFOCOM 1992.

Outline
- Introduction
- Major work of the paper
- GPS example
- PGPS
- Difference in delay and traffic between the two schemes
- Bounds on buffer size
- Virtual system implementation
- Leaky-bucket admission control
- Results
- Conclusion

Introduction
- The paper focuses on a central problem in the control of congestion and flow in high-speed integrated services networks.
- The goal is to find an implementable scheme for guaranteeing worst-case packet delay (flow control).

Major work of the paper
- The main contribution is an implementable scheme for guaranteeing worst-case packet delay.
- The paper shows that PGPS (Packet-by-Packet GPS), combined with leaky-bucket admission control, achieves this goal.

Generalized Processor Sharing
- GPS is a work-conserving service discipline that provides upper bounds on worst-case packet delay.
- However, it cannot be implemented exactly, because it assumes that traffic is infinitely divisible (a fluid model).
- Work-conserving: the server never leaves bandwidth idle while any session has traffic waiting.

Generalized Processor Sharing
- Before a new source starts sending, the network checks whether it can be accommodated and, if so, takes actions (such as reserving transmission links or switching capacity) to ensure the desired quality of service.

Generalized Processor Sharing
- Once a source begins sending traffic, the network ensures that the agreed-upon values of the traffic parameters are not violated.

Generalized Processor Sharing
- Let $S_i(\tau, t)$ be the amount of session $i$ traffic served in the time interval $[\tau, t]$.
- A GPS server with weights $\phi_1, \ldots, \phi_N$ satisfies
  $$\frac{S_i(\tau, t)}{S_j(\tau, t)} \ge \frac{\phi_i}{\phi_j}, \quad j = 1, 2, \ldots, N,$$
  for any session $i$ that is continuously backlogged in the interval $[\tau, t]$.

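Generalized Processor Sharing
- Cross-multiplying gives $S_i(\tau, t)\,\phi_j \ge S_j(\tau, t)\,\phi_i$. Summing over all sessions $j$ (to find session $i$'s rate), and noting that the work-conserving server is busy throughout $[\tau, t]$, so that $\sum_j S_j(\tau, t) = r(t - \tau)$, we get
  $$S_i(\tau, t) \sum_j \phi_j \ge (t - \tau)\, r\, \phi_i,$$
  where $r$ is the processing rate of the server and $\phi_j$ is the weight of session $j$.
- Session $i$ is therefore guaranteed the rate $g_i = \phi_i r / \sum_j \phi_j$.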
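As a quick numerical check of the formula for $g_i$, here is a minimal Python sketch. The session names and weights are hypothetical, and the weights are assumed to be positive.

```python
def guaranteed_rates(weights, r):
    """Return g_i = phi_i * r / sum_j phi_j for every session.

    weights: dict mapping a (hypothetical) session name to its GPS weight phi_i
    r: server rate
    """
    total = sum(weights.values())
    return {i: phi * r / total for i, phi in weights.items()}


# Hypothetical sessions, server rate normalized to 1.
rates = guaranteed_rates({"voice": 1, "video": 2, "data": 1}, r=1.0)
print(rates)  # {'voice': 0.25, 'video': 0.5, 'data': 0.25}
```

Because the $g_i$ always sum to $r$, these guaranteed rates never oversubscribe the server.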

Generalized Processor Sharing
- GPS is an attractive multiplexing scheme for several reasons:
  - If the average rate $\rho_i$ of session $i$ is at most $g_i$, the session can be guaranteed a throughput of $\rho_i$, independent of the demands of the other sessions.
  - The delay of an arriving session $i$ bit can be bounded as a function of the session $i$ queue length, independent of the queues and arrivals of the other sessions.
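The two guarantees above can be sketched as a small function. It assumes $g_i$ has already been computed from the weights and that session $i$ is served first-come first-served internally, so a bit arriving when the session $i$ backlog is $Q_i(t)$ (counting that bit) leaves within $Q_i(t)/g_i$. The function and parameter names are illustrative, not from the paper.

```python
def gps_session_guarantees(rho_i, backlog_i, g_i):
    """Sketch of the two per-session GPS guarantees.

    rho_i: long-term average arrival rate of session i
    backlog_i: session i backlog Q_i(t) at the arrival instant, counting the new bit
    g_i: guaranteed rate phi_i * r / sum_j phi_j
    """
    # Throughput: a session whose average rate does not exceed g_i gets all of it.
    throughput_guaranteed = rho_i <= g_i
    # Delay: session i drains at rate >= g_i while backlogged, so the bit
    # leaves within Q_i(t) / g_i, whatever the other sessions do.
    delay_bound = backlog_i / g_i
    return throughput_guaranteed, delay_bound


print(gps_session_guarantees(rho_i=0.2, backlog_i=3, g_i=0.25))  # (True, 12.0)
```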

Generalized Processor Sharing
- By varying the $\phi_i$, we have the flexibility to treat sessions differently, as long as the total average rate of all sessions is at most $r$.
- For example, a high-bandwidth, delay-insensitive session can be assigned a $g_i$ much less than its average rate, allowing better treatment of the other sessions.

Generalized Processor Sharing
- Each backlogged flow is guaranteed a minimum service rate (fairness).
- The excess service rate is redistributed among the backlogged flows in proportion to their minimum service rates (flexibility and efficiency).
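The redistribution of excess rate can be illustrated with a minimal sketch, assuming each session offers a constant fluid rate (its demand). Under that assumption the long-run GPS rates are the weighted max-min fair allocation, computed below by progressive filling. The function and session names are hypothetical, and weights are assumed positive.

```python
def gps_fluid_rates(demands, weights, r):
    """Long-run GPS service rates for constant-rate fluid sessions.

    Assumption: each session i offers a constant rate demands[i]. Sessions
    that need less than their current weighted share keep only what they
    need; the leftover is re-split among the rest in proportion to weights.
    """
    alloc = {}
    active = set(demands)
    capacity = r
    while active:
        total_w = sum(weights[i] for i in active)
        share = {i: capacity * weights[i] / total_w for i in active}
        satisfied = {i for i in active if demands[i] <= share[i]}
        if not satisfied:
            # Everyone left stays backlogged and splits the remaining rate by weight.
            alloc.update(share)
            break
        for i in satisfied:
            alloc[i] = demands[i]
            capacity -= demands[i]
        active -= satisfied
    return alloc


rates = gps_fluid_rates({"a": 0.1, "b": 0.6, "c": 0.6}, {"a": 1, "b": 1, "c": 1}, r=1.0)
print(rates)
```

Here session a needs only 0.1 of its 1/3 share, so it keeps exactly 0.1, and the remaining 0.9 is split by weight between b and c, 0.45 each.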

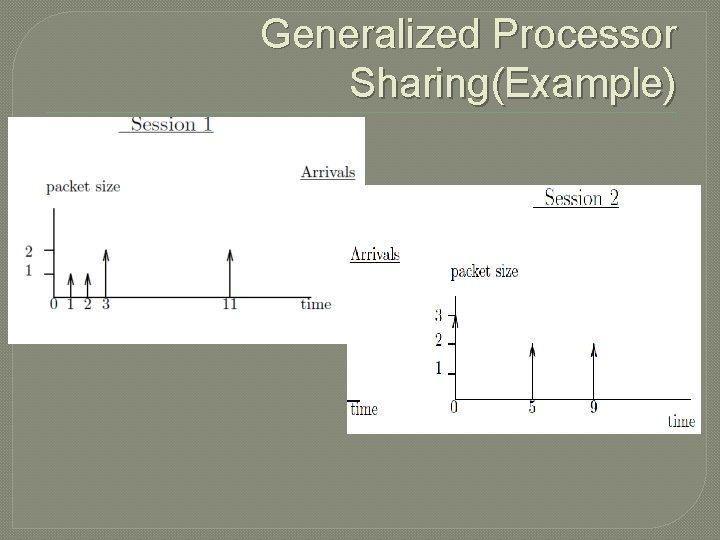

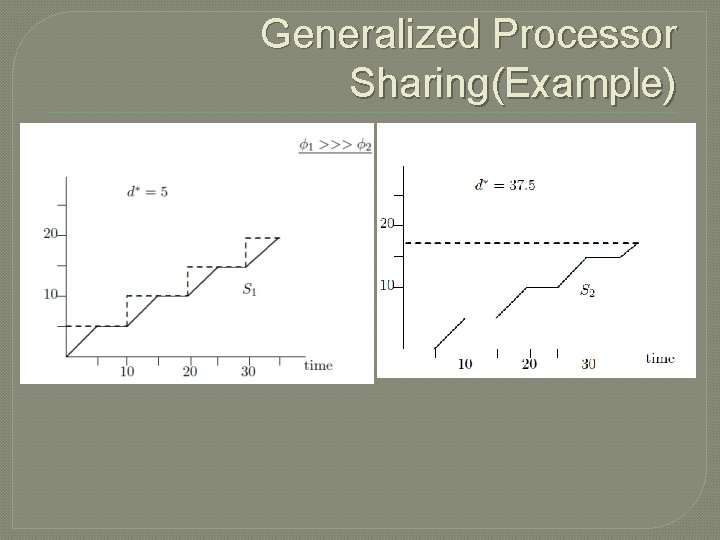

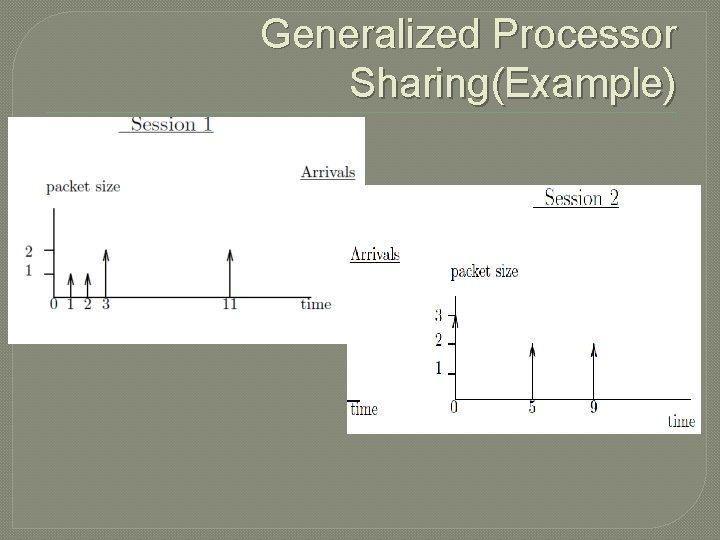

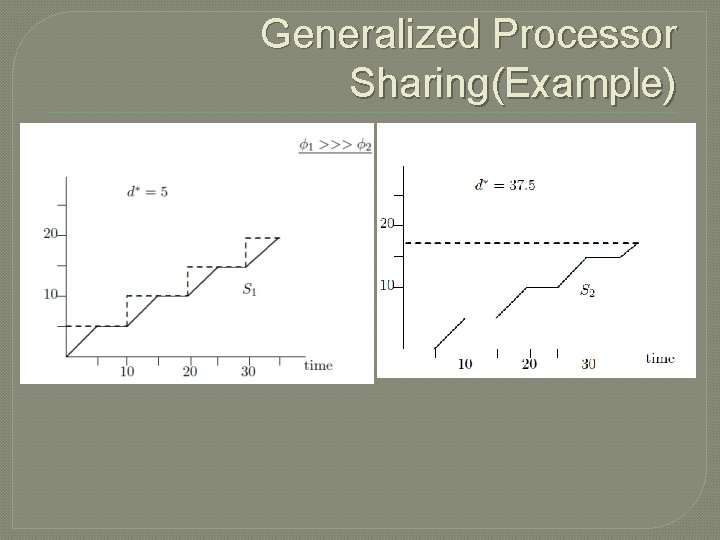

Generalized Processor Sharing (Example)

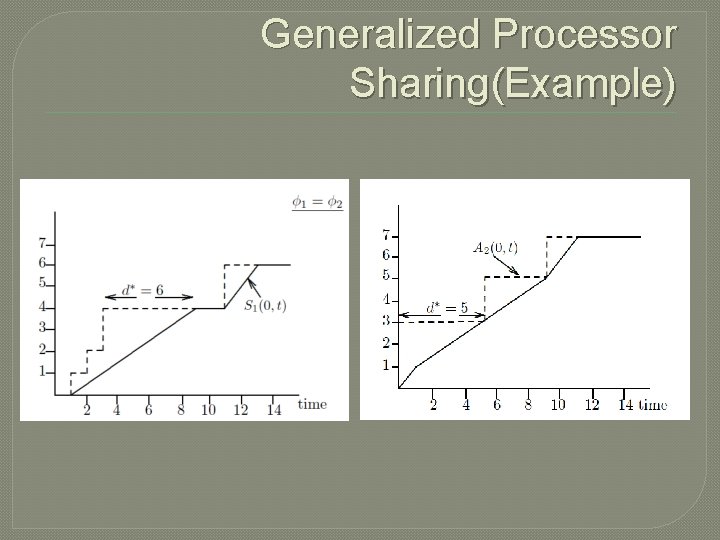

Generalized Processor Sharing (Example)

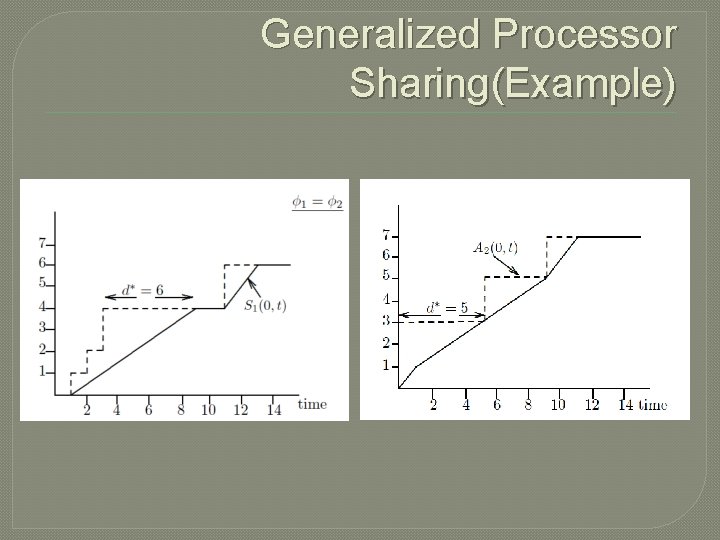

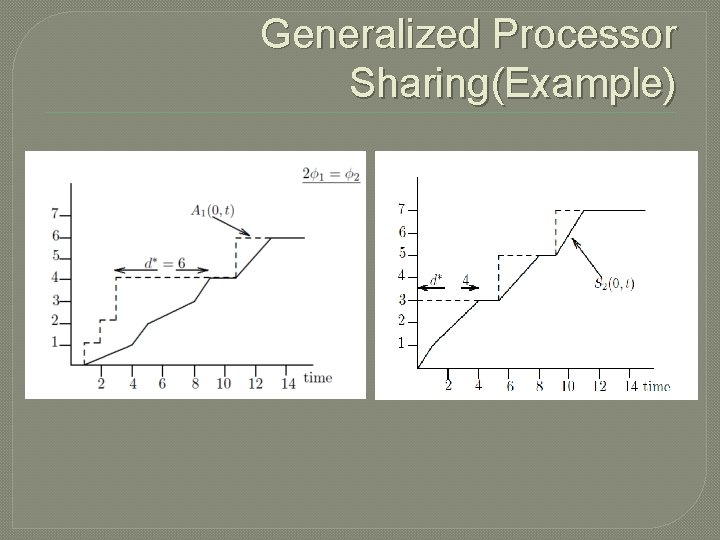

Generalized Processor Sharing (Example)

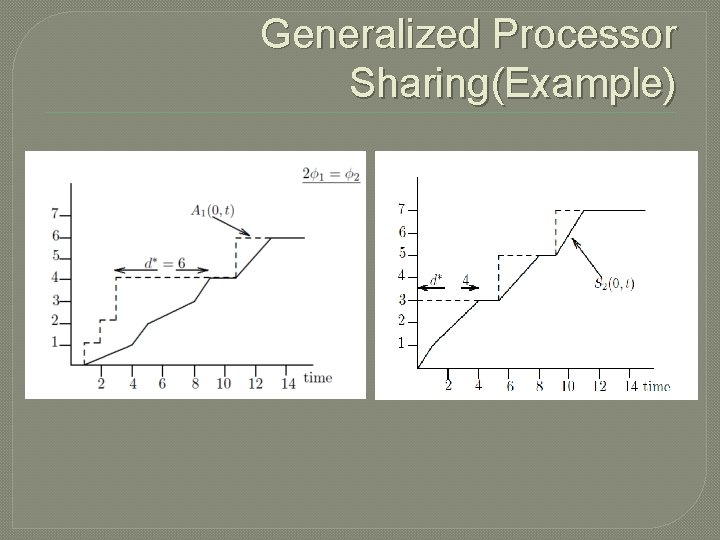

Generalized Processor Sharing (Example)

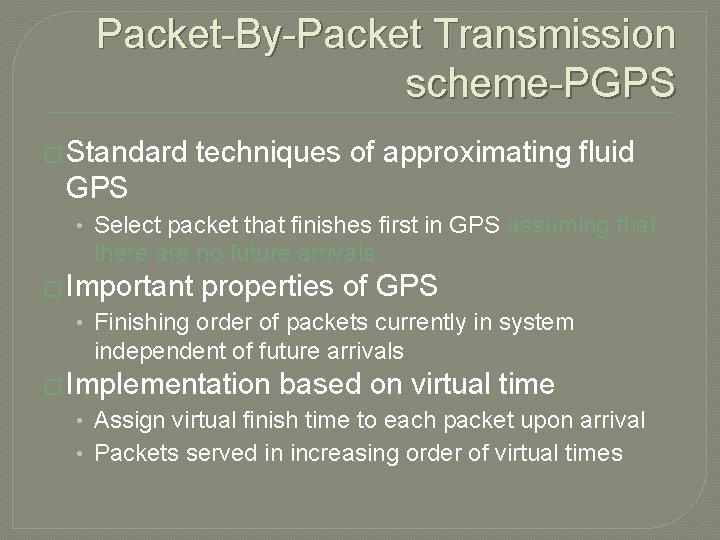

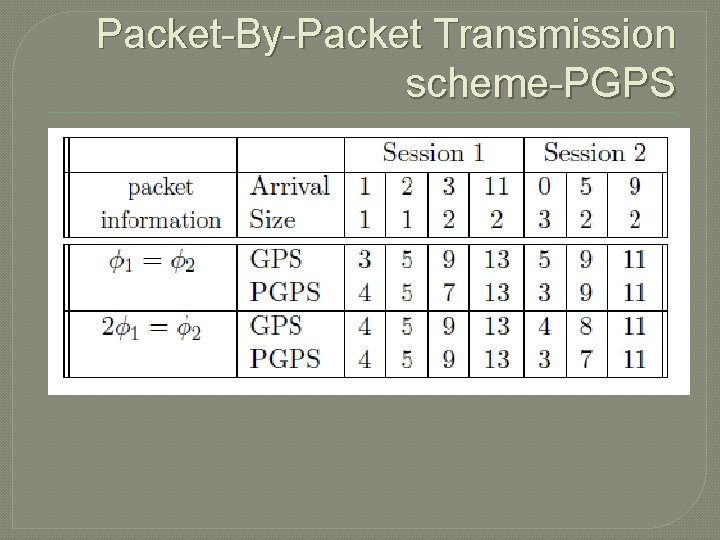

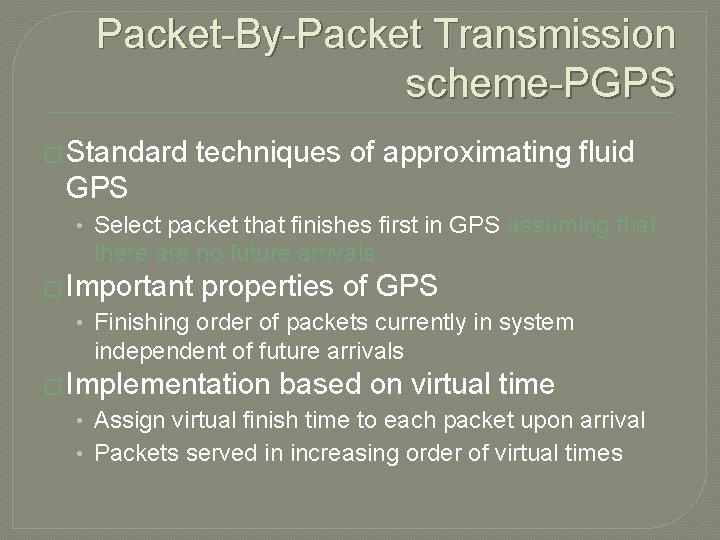

Packet-by-Packet Transmission Scheme (PGPS)
- Standard technique for approximating fluid GPS:
  - Select the packet that would finish first in GPS, assuming there are no future arrivals.
- Important property of GPS:
  - The finishing order of the packets currently in the system is independent of future arrivals.
- Implementation based on virtual time:
  - Assign a virtual finish time to each packet upon arrival.
  - Serve packets in increasing order of virtual finish time.
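The three steps above can be sketched in Python as a small simulator. It assumes the usual virtual-time rules: $dV/dt = r / \sum_{i \in B} \phi_i$, where $B$ is the set of sessions backlogged in the reference GPS system, and a packet of length $L$ from session $i$ arriving at time $a$ gets the finish tag $F = \max(F_{\text{prev}}, V(a)) + L/\phi_i$. The class and function names are hypothetical, and the linear scan over sessions is chosen for clarity, not speed.

```python
import heapq


class VirtualTime:
    """GPS virtual time V(t) for the reference (fluid) system.

    Assumption: between events, dV/dt = r / (sum of weights of sessions
    backlogged in GPS); a session stays GPS-backlogged until V reaches the
    finish tag of its last packet.
    """

    def __init__(self, weights, r):
        self.w, self.r = weights, r
        self.V, self.t = 0.0, 0.0
        self.last_F = {}  # session -> virtual finish time of its latest packet

    def advance(self, t):
        while self.t < t:
            busy = [i for i, f in self.last_F.items() if f > self.V]
            if not busy:
                self.t = t  # GPS system idle: V does not move
                return
            slope = self.r / sum(self.w[i] for i in busy)
            next_F = min(self.last_F[i] for i in busy)
            dt = (next_F - self.V) / slope
            if self.t + dt <= t:
                self.V, self.t = next_F, self.t + dt  # a session empties in GPS
            else:
                self.V += slope * (t - self.t)
                self.t = t

    def finish_tag(self, session, arrival, length):
        self.advance(arrival)
        start = max(self.last_F.get(session, 0.0), self.V)
        self.last_F[session] = start + length / self.w[session]
        return self.last_F[session]


def pgps_schedule(packets, weights, r):
    """packets: list of (arrival_time, session, length).

    Returns (start_time, session, virtual_finish) in transmission order.
    """
    vt = VirtualTime(weights, r)
    pending = sorted(packets)
    queue, out, clock, k = [], [], 0.0, 0
    while k < len(pending) or queue:
        if not queue and pending[k][0] > clock:
            clock = pending[k][0]  # server idles until the next arrival
        while k < len(pending) and pending[k][0] <= clock:
            a, s, length = pending[k]
            heapq.heappush(queue, (vt.finish_tag(s, a, length), k, s, length))
            k += 1
        F, _, s, length = heapq.heappop(queue)  # smallest virtual finish first
        out.append((clock, s, F))
        clock += length / r  # non-preemptive transmission at rate r
    return out


# Hypothetical trace: session 1 sends three unit packets at t=0, session 2 one at t=1.
trace = [(0, 1, 1.0), (0, 1, 1.0), (0, 1, 1.0), (1, 2, 1.0)]
for start, session, F in pgps_schedule(trace, weights={1: 1, 2: 1}, r=1.0):
    print(start, session, F)
```

In this trace session 1's second packet and session 2's packet share the virtual finish time 2; the tie is broken by arrival order, an arbitrary choice of this sketch.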

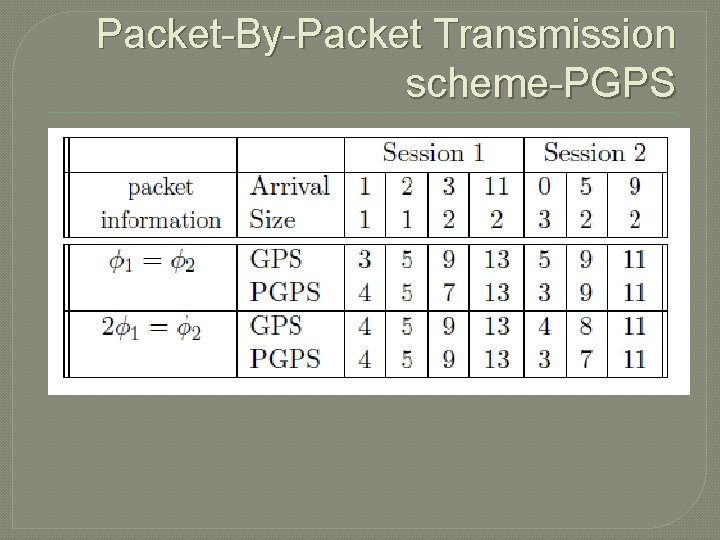

Packet-by-Packet Transmission Scheme (PGPS)

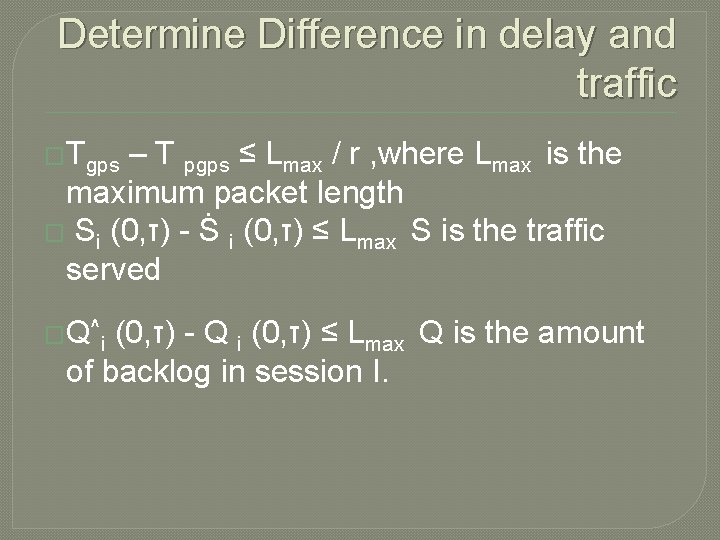

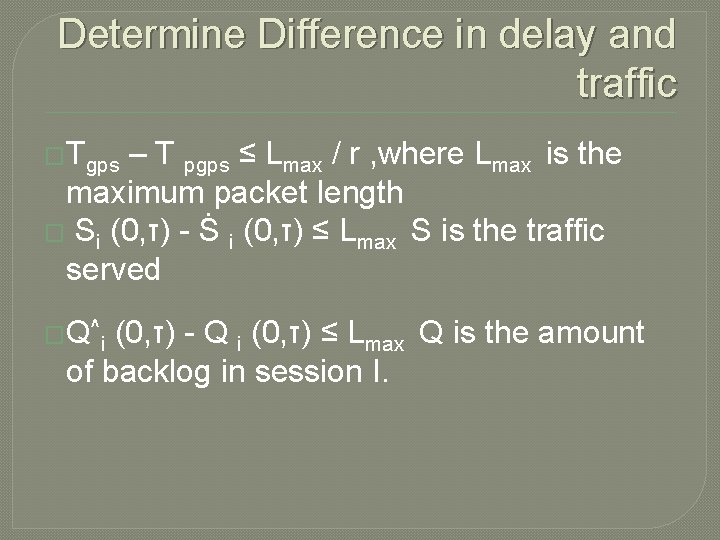

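Difference in delay and traffic (GPS vs. PGPS)
- A hat denotes the PGPS quantity; the unmarked symbol is the GPS quantity.
- $T_{\mathrm{PGPS}} - T_{\mathrm{GPS}} \le L_{\max} / r$ for every packet, where $T$ is the packet's departure time and $L_{\max}$ is the maximum packet length.
- $S_i(0, \tau) - \hat{S}_i(0, \tau) \le L_{\max}$, where $S_i$ is the amount of session $i$ traffic served.
- $\hat{Q}_i(\tau) - Q_i(\tau) \le L_{\max}$, where $Q_i(\tau)$ is the amount of session $i$ backlog at time $\tau$.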
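To give the per-packet delay bound a sense of scale, here is a worked instance with hypothetical numbers (1500-byte maximum packets on a 1.5 Mbit/s link); these values are illustrative and not from the paper.

```latex
% Hypothetical values: L_max = 1500 bytes = 12\,000 bits, r = 1.5 Mbit/s
T_{\mathrm{PGPS}} - T_{\mathrm{GPS}} \le \frac{L_{\max}}{r}
  = \frac{12\,000\ \text{bits}}{1.5 \times 10^{6}\ \text{bits/s}}
  = 8\ \text{ms}
```

On the same link, PGPS can lag GPS in service to a session by at most 12,000 bits.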

Bounds on buffer size
- GPS needs a buffer of size $L_{\max}$ per link.
- PGPS needs $L_{\max} + \max_{t \ge 0}\,\big(f_i(t) - r_i t\big)$.

Virtual System Implementation
- Virtual time $V(t)$ is used to represent the progress of work in the reference (GPS) system.
  - The virtual time is updated whenever a packet arrives or departs in the reference system.
  - Each packet is assigned a virtual finish time upon arrival.
  - Packets are served in increasing order of virtual finish time.
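The update rules can be written out as follows. This is a sketch of the usual Parekh-Gallager formulation with the server rate $r$ kept explicit (an assumption of this write-up). Here $t_j$ are the times of successive arrival and departure events in the reference GPS system, $B_j$ is the set of sessions backlogged in GPS during $(t_{j-1}, t_j)$, and $a_i^k$, $L_i^k$ are the arrival time and length of session $i$'s $k$-th packet.

```latex
V(0) = 0, \qquad
V(t_{j-1} + \tau) = V(t_{j-1}) + \frac{r\,\tau}{\sum_{i \in B_j} \phi_i},
  \quad 0 \le \tau \le t_j - t_{j-1}

S_i^k = \max\{F_i^{k-1},\ V(a_i^k)\}, \qquad
F_i^k = S_i^k + \frac{L_i^k}{\phi_i}, \qquad F_i^0 = 0
```

Since a backlogged session $i$ is served at rate $\phi_i \, dV/dt$, advancing $V$ by $L_i^k/\phi_i$ corresponds exactly to serving $L_i^k$ bits of session $i$ in the GPS system.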

Example
- Two sessions submit fixed-size packets of length 1 unit.
- The server rate is 1.
- Starting at time zero, 1000 session 1 packets begin to arrive at a rate of 1 packet/sec.
- At time 900, 450 session 2 packets begin to arrive at a rate of 1 packet/sec.

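Example
- If the server treats both sessions equally (each reserves rate 1/2), then at real time 900 the virtual clock of session 1 reads 1800 and the virtual clock of session 2 reads 900.
- From then on, session 1's clock continues 1800, 1802, ..., while session 2's advances 900, 902, 904, ..., so session 2's packets carry the smaller stamps and are served ahead of session 1's.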
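The effect in this example can be reproduced with a short simulation of Virtual Clock stamping, in which each arriving packet sets its session's clock to $\max(a, \text{clock}) + L/\rho_i$ and packets are sent in increasing stamp order. It is a minimal sketch assuming unit packets, server rate 1, and ties broken by arrival order; the function name is hypothetical.

```python
import heapq


def virtual_clock_schedule(arrivals, rates, length=1.0):
    """Virtual Clock stamping for the two-session example.

    arrivals: dict session -> sorted list of arrival times
    rates: dict session -> reserved rate rho_i
    Assumes server rate 1, so a packet of `length` takes `length` time units.
    Returns (start_time, session, stamp) in transmission order.
    """
    clock = {s: 0.0 for s in arrivals}
    events = sorted((t, s) for s, times in arrivals.items() for t in times)
    queue, out, now, k = [], [], 0.0, 0
    while k < len(events) or queue:
        if not queue and events[k][0] > now:
            now = events[k][0]  # idle until the next arrival
        while k < len(events) and events[k][0] <= now:
            a, s = events[k]
            # Each packet advances its session's clock by length / rho_i.
            clock[s] = max(a, clock[s]) + length / rates[s]
            heapq.heappush(queue, (clock[s], k, s))
            k += 1
        stamp, _, s = heapq.heappop(queue)  # smallest stamp first
        out.append((now, s, stamp))
        now += length  # server rate 1
    return out


# The two-session example with equal reservations of 1/2 each.
arrivals = {1: list(range(1000)), 2: list(range(900, 1350))}
sched = virtual_clock_schedule(arrivals, rates={1: 0.5, 2: 0.5})
starved = sum(1 for t, s, _ in sched if 900 <= t < 1350 and s == 1)
print(starved)  # 0
```

Every session 2 stamp (902 through 1800) sorts ahead of session 1's next stamp (1802), so session 1 receives no service from time 900 to 1350 even though it has packets waiting throughout.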

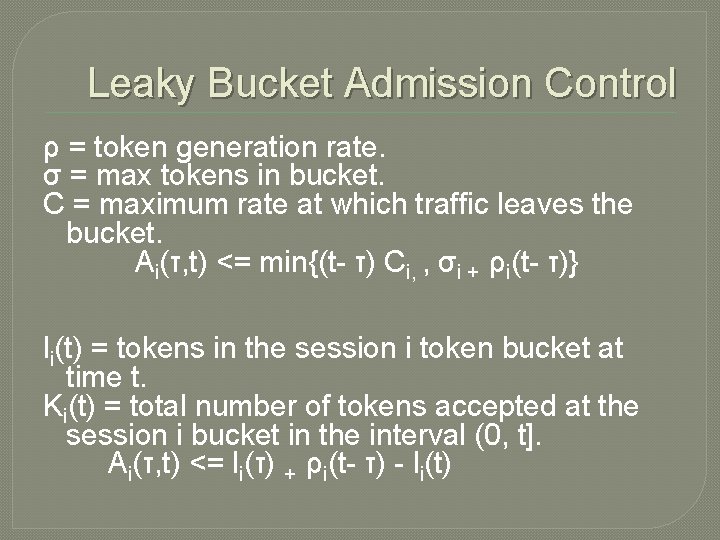

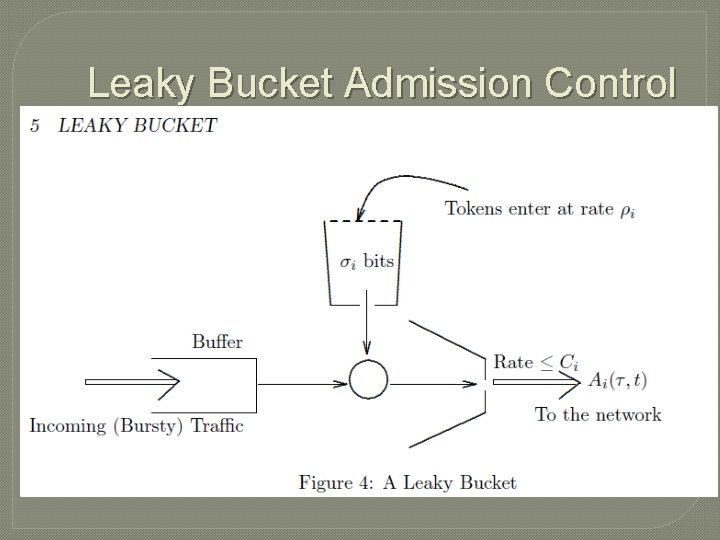

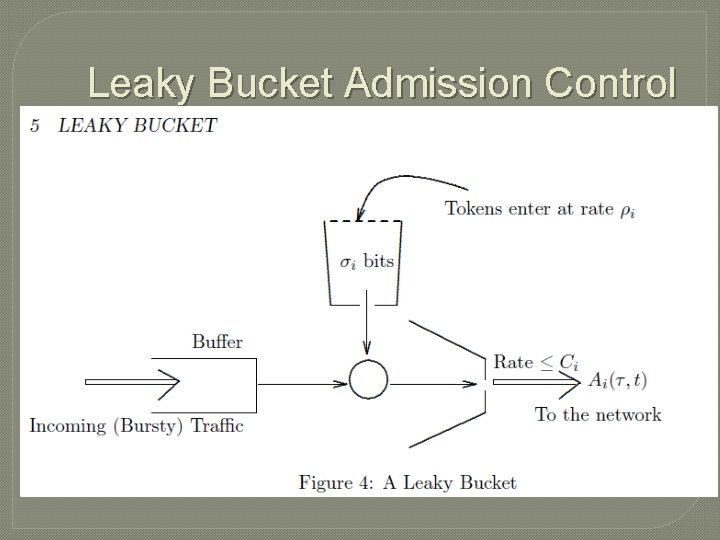

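Leaky Bucket Admission Control
- $\rho_i$ = token generation rate.
- $\sigma_i$ = maximum number of tokens in the bucket.
- $C_i$ = maximum rate at which traffic leaves the bucket.
- The traffic leaving the bucket in any interval satisfies $A_i(\tau, t) \le \min\{(t - \tau)\, C_i,\ \sigma_i + \rho_i (t - \tau)\}$.
- $l_i(t)$ = number of tokens in the session $i$ token bucket at time $t$.
- $K_i(t)$ = total number of tokens accepted at the session $i$ bucket in the interval $(0, t]$.
- Since traffic leaving the bucket consumes tokens, $A_i(\tau, t) \le l_i(\tau) + K_i(t) - K_i(\tau) - l_i(t) \le l_i(\tau) + \rho_i (t - \tau) - l_i(t)$.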
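The token accounting above can be sketched as a token-bucket policer. This is a simplification: it assumes that a packet which finds too few tokens is flagged as nonconforming rather than delayed, and it does not model the peak rate $C_i$. The function name is hypothetical.

```python
def police(arrivals, sigma, rho):
    """Token-bucket policer sketch.

    arrivals: list of (time, amount) sorted by time
    sigma: bucket depth (max tokens); rho: token generation rate
    Assumption: a packet that finds too few tokens is flagged nonconforming
    and does not consume tokens (policing, not shaping).
    """
    tokens, last = sigma, None  # the bucket starts full
    verdicts = []
    for t, amount in arrivals:
        if last is not None:
            tokens = min(sigma, tokens + rho * (t - last))  # refill, capped at sigma
        last = t
        if amount <= tokens:
            tokens -= amount
            verdicts.append(True)
        else:
            verdicts.append(False)
    return verdicts


print(police([(0, 2), (0, 1), (1, 1), (3, 2)], sigma=2, rho=1))
# [True, False, True, True]
```

The second packet at time 0 is rejected because the first one emptied the bucket; by time 3 the bucket has refilled to $\sigma = 2$. The accepted traffic therefore never exceeds $\sigma + \rho(t - \tau)$ in any interval.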

Leaky Bucket Admission Control

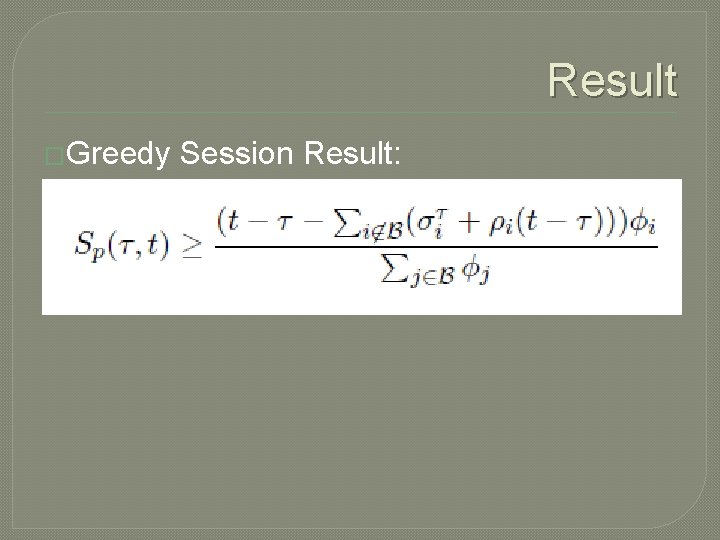

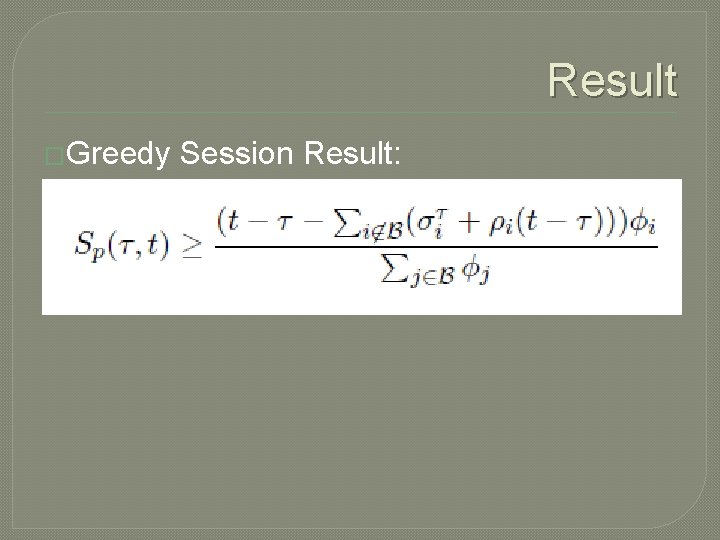

Result
- Greedy session result:

Conclusion
The use of Generalized Processor Sharing (GPS), when combined with leaky-bucket admission control, allows the network to make a wide range of worst-case performance guarantees on throughput and delay.
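To make the delay guarantee concrete, the sketch below evaluates the single-node bounds in their commonly cited form: if a session is constrained by a $(\sigma_i, \rho_i)$ leaky bucket and $\rho_i \le g_i$, its worst-case GPS delay is at most $\sigma_i / g_i$, and PGPS adds at most $L_{\max}/r$ (the per-packet difference bound above). The numbers and the function name are hypothetical.

```python
def worst_case_delay_bounds(sigma_i, rho_i, weights, session, r, l_max):
    """Single-node delay bounds for a (sigma_i, rho_i) leaky-bucket session.

    Assumes the commonly cited form: if rho_i <= g_i, then
    GPS delay <= sigma_i / g_i, and PGPS adds at most l_max / r.
    """
    g_i = weights[session] * r / sum(weights.values())
    if rho_i > g_i:
        raise ValueError("bound requires rho_i <= g_i")
    gps_bound = sigma_i / g_i
    return gps_bound, gps_bound + l_max / r


# Hypothetical numbers: 10 Mbit/s link, session "a" holds 1/5 of the total weight.
gps_d, pgps_d = worst_case_delay_bounds(
    sigma_i=40_000, rho_i=1e6, weights={"a": 1, "b": 4}, session="a", r=10e6, l_max=12_000
)
print(gps_d, pgps_d)
```

With these inputs $g_a = 2$ Mbit/s, so the bounds come to about 20 ms under GPS and about 21.2 ms under PGPS.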