
SPARK CLUSTER SETTING

賴家民

- Why Use Spark
- Spark Runtime Architecture
- Build Spark
- Local mode
- Standalone Cluster Manager
- Hadoop Yarn
- SparkContext with RDD

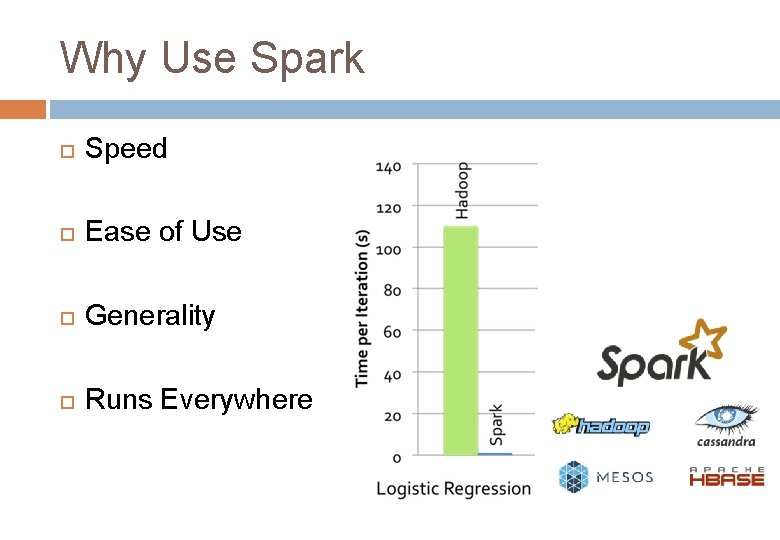

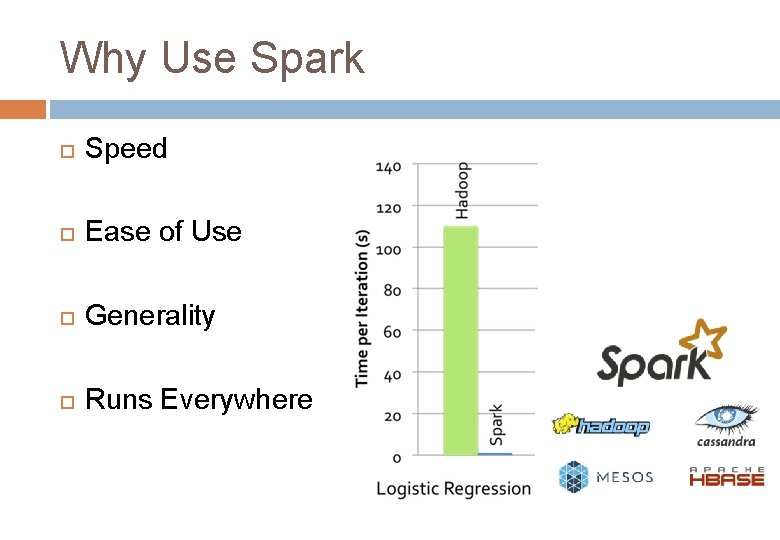

Why Use Spark

- Speed
- Ease of Use
- Generality
- Runs Everywhere

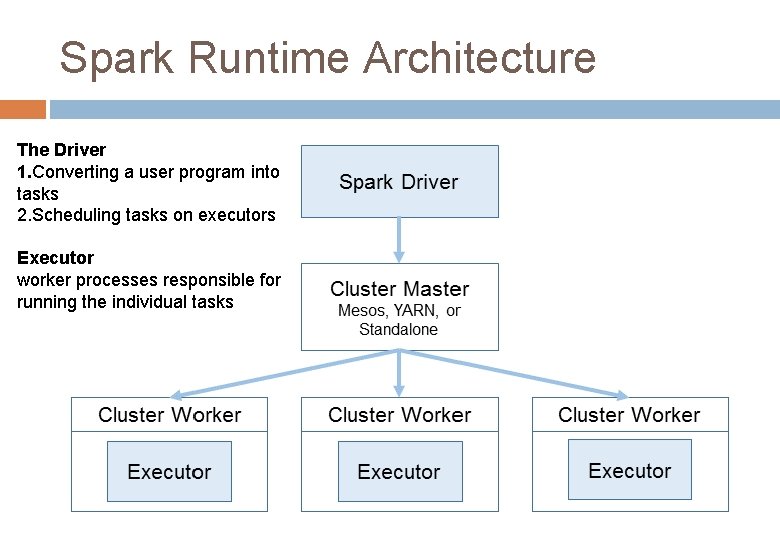

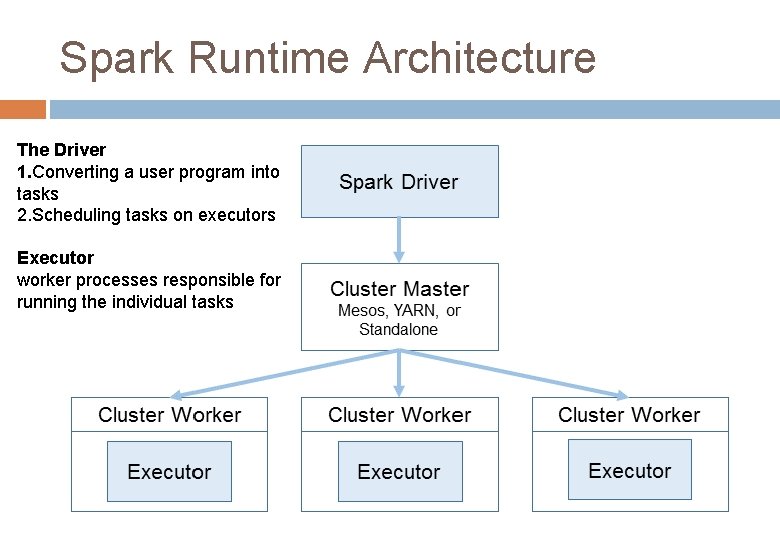

Spark Runtime Architecture

- The Driver
  1. Converts a user program into tasks
  2. Schedules tasks on executors
- Executors: worker processes responsible for running the individual tasks
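The division of labour between driver and executors can be pictured with a toy model in plain Python. This is only a sketch, not Spark code: the names `split_into_tasks`, `run_task`, and `driver` are made up for illustration, and a thread pool stands in for the executor processes.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tasks(data, num_tasks):
    """Driver step 1: cut the job into independent tasks (one per slice)."""
    size = max(1, -(-len(data) // num_tasks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def run_task(chunk):
    """Executor side: run one task and return its partial result."""
    return sum(x * x for x in chunk)


def driver(data, num_executors=2, num_tasks=4):
    """Toy driver: build the tasks, schedule them, merge the partial results."""
    tasks = split_into_tasks(data, num_tasks)
    # Driver step 2: schedule the tasks on the pool of (pretend) executors.
    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        partials = list(executors.map(run_task, tasks))
    return sum(partials)


print(driver(list(range(1, 11))))  # sum of squares of 1..10
```

In real Spark the executors are separate JVM processes on the worker machines, and the driver ships tasks to them over the network rather than calling them directly.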

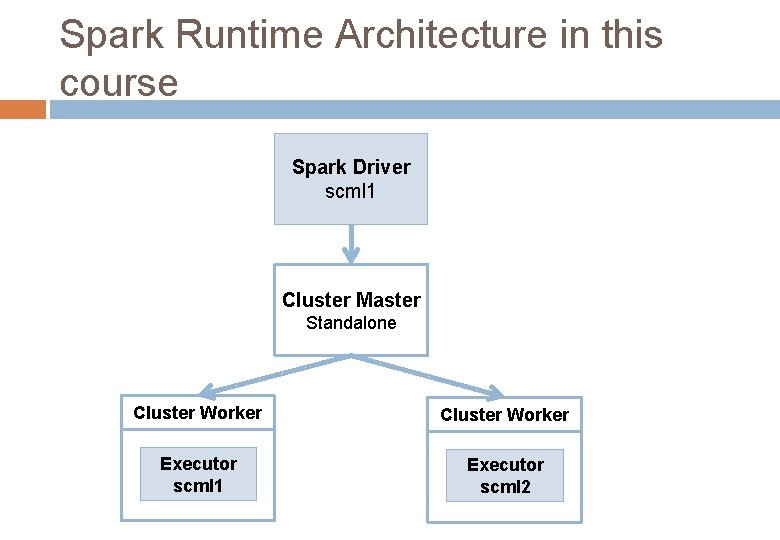

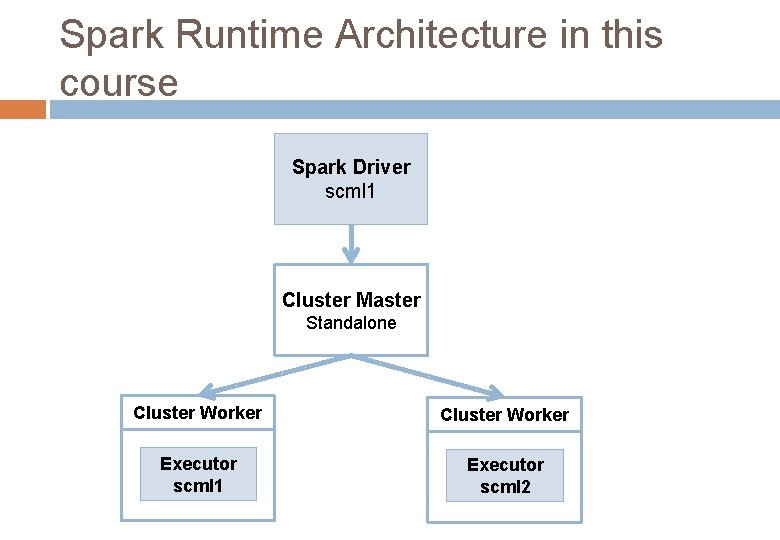

Spark Runtime Architecture in this course

- Spark Driver: scml1
- Cluster Master: Standalone Cluster
- Cluster Worker executors: scml1, scml2

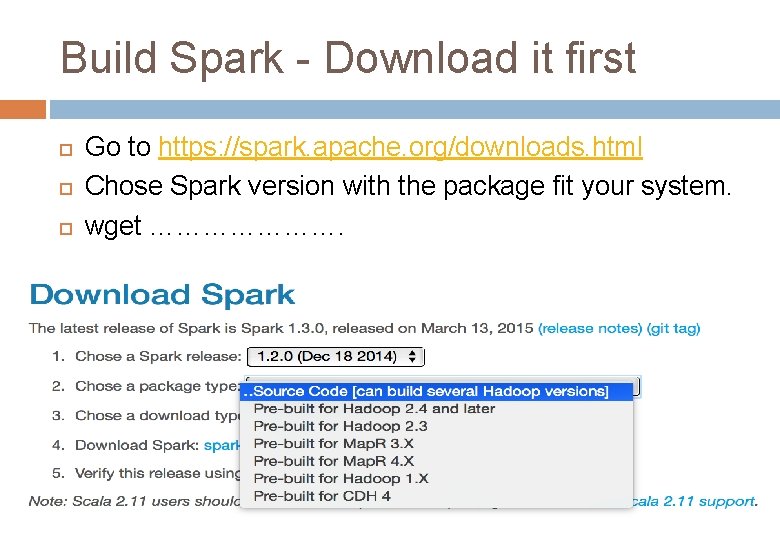

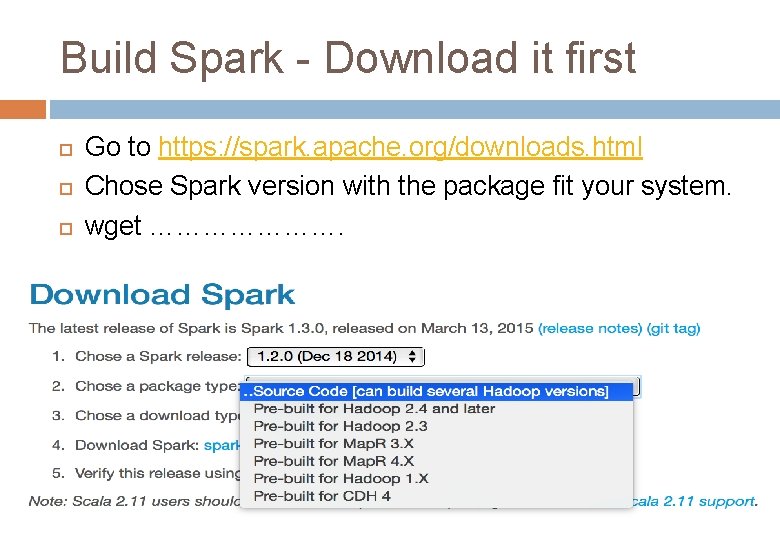

Build Spark - Download it first

- Go to https://spark.apache.org/downloads.html
- Choose the Spark version and the package that fits your system.
- wget ………………….

Build Spark - Start to build

- Set JAVA_HOME
  - `vim ~/.bashrc`
  - `export JAVA_HOME=/usr/lib/jvm/java-7-oracle`
- Build with the right package
  - `mvn -DskipTests clean package`
- Build used in our course
  - `mvn package -Pyarn -Dyarn.version=2.6.0 -Phadoop-2.4 -Dhadoop.version=2.6.0 -Phive -DskipTests`
  - If you don't build it like this, you will get an IPC connection failure.

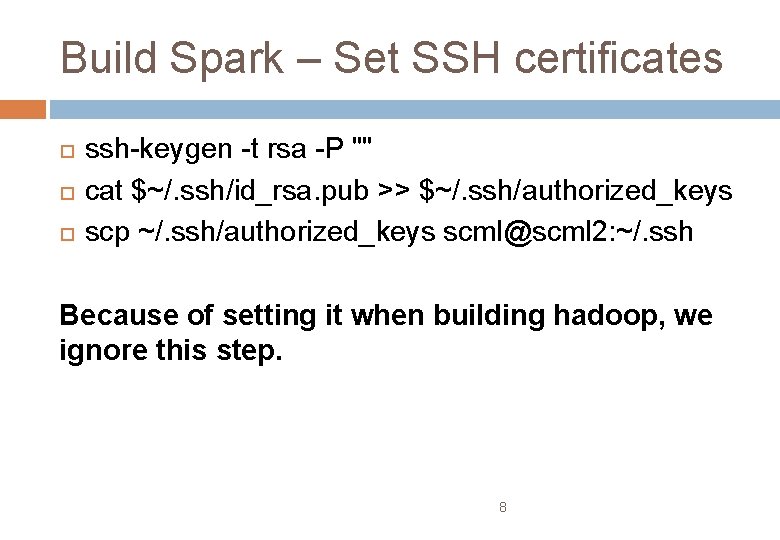

Build Spark - Set SSH certificates

- `ssh-keygen -t rsa -P ""`
- `cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys`
- `scp ~/.ssh/authorized_keys scml@scml2:~/.ssh`
- This was already set up when building Hadoop, so we skip this step.

Local mode

- Interactive shells
  - `bin/pyspark`
  - `bin/spark-shell`
- Launch an application
  - `bin/spark-submit my_script.py`
  - If the Spark cluster has been started: `bin/spark-submit --master local my_script.py`

Standalone Cluster Manager: scml1

- `cp slaves.template slaves`
- `vim ~/spark-1.2.0/conf/slaves`
  - Add scml1 and scml2
- `cp spark-env.sh.template spark-env.sh`
  - Add `export SPARK_MASTER_IP=192.168.56.111`
  - Add `export SPARK_LOCAL_IP=192.168.56.111`

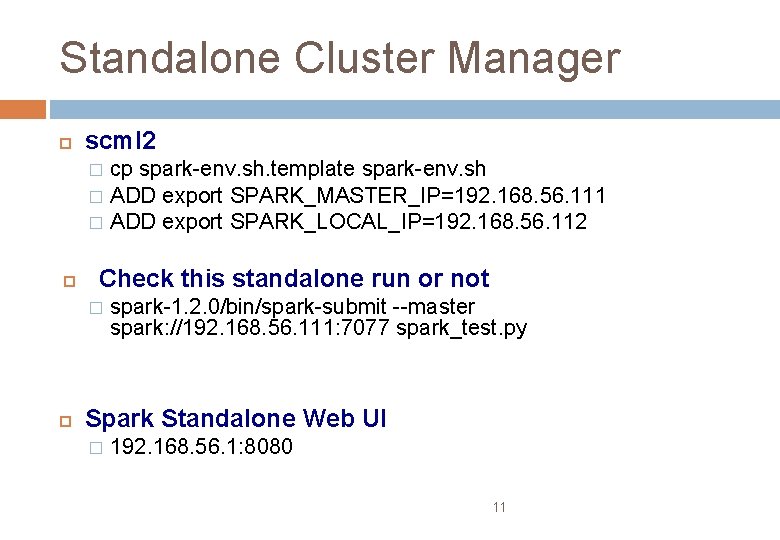

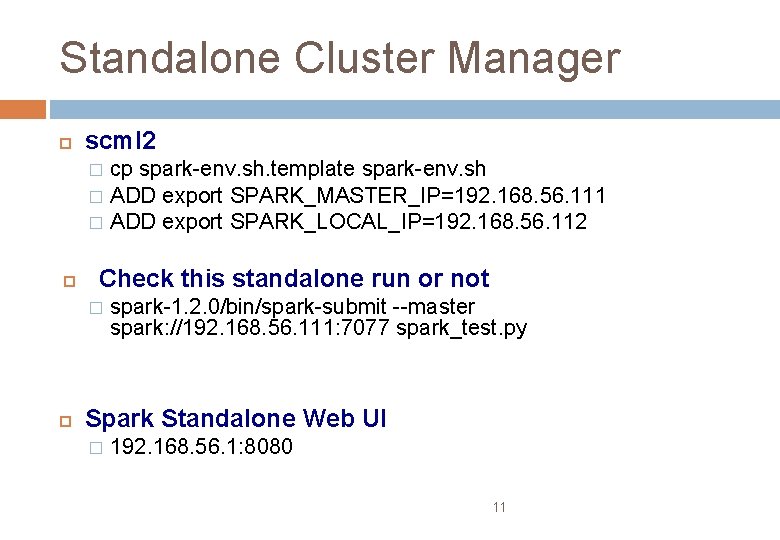

Standalone Cluster Manager: scml2

- `cp spark-env.sh.template spark-env.sh`
  - Add `export SPARK_MASTER_IP=192.168.56.111`
  - Add `export SPARK_LOCAL_IP=192.168.56.112`
- Check whether the standalone cluster is running
  - `spark-1.2.0/bin/spark-submit --master spark://192.168.56.111:7077 spark_test.py`
- Spark Standalone Web UI
  - 192.168.56.1:8080
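The per-node settings above follow one pattern: every node shares the same master IP and sets its own local IP. A minimal sketch that renders the file contents for both machines is shown here. The helper names `slaves_file` and `spark_env_lines` are hypothetical, and the sketch only builds strings; it does not write into the Spark `conf` directory.

```python
MASTER_IP = "192.168.56.111"
# Host name -> local IP, as used on the slides.
NODES = {"scml1": "192.168.56.111", "scml2": "192.168.56.112"}


def slaves_file(nodes):
    """Contents of conf/slaves: one worker host name per line."""
    return "\n".join(sorted(nodes)) + "\n"


def spark_env_lines(master_ip, local_ip):
    """Lines to append to conf/spark-env.sh on a single node."""
    return (
        "export SPARK_MASTER_IP=%s\n" % master_ip
        + "export SPARK_LOCAL_IP=%s\n" % local_ip
    )


print(slaves_file(NODES))
for host, ip in sorted(NODES.items()):
    print("# " + host)
    print(spark_env_lines(MASTER_IP, ip))
```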

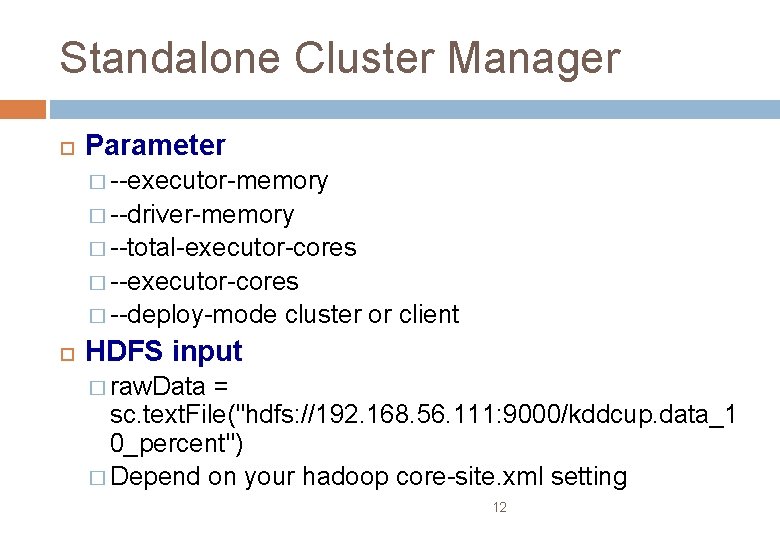

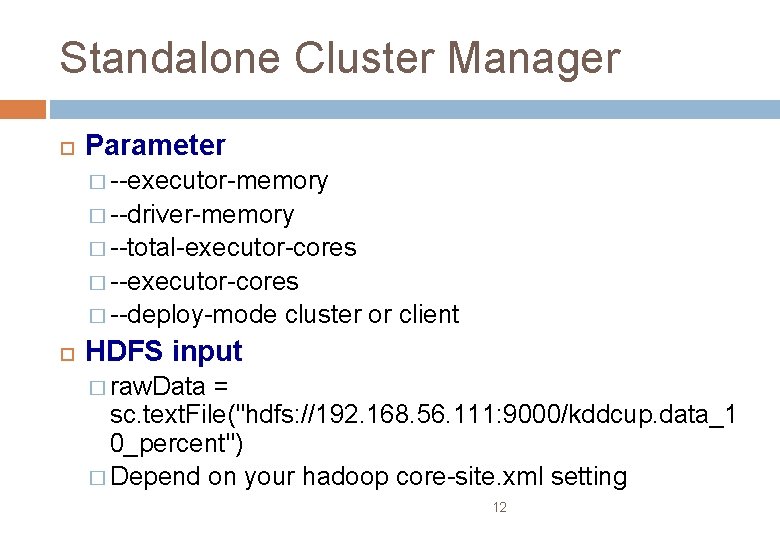

Standalone Cluster Manager

- Parameters
  - `--executor-memory`
  - `--driver-memory`
  - `--total-executor-cores`
  - `--deploy-mode` (`cluster` or `client`)
- HDFS input
  - `rawData = sc.textFile("hdfs://192.168.56.111:9000/kddcup.data_10_percent")`
  - The address depends on your Hadoop core-site.xml setting.
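To see how these parameters fit onto one command line, here is a small sketch that assembles the argument list for `spark-submit`. The function `spark_submit_args` is a hypothetical helper, and the value `"1g"` is only an example; the flags themselves are the ones listed above.

```python
def spark_submit_args(script, master, executor_memory=None, driver_memory=None,
                      total_executor_cores=None, deploy_mode=None):
    """Build the argv list for bin/spark-submit, skipping options left unset."""
    args = ["bin/spark-submit", "--master", master]
    options = [
        ("--executor-memory", executor_memory),
        ("--driver-memory", driver_memory),
        ("--total-executor-cores", total_executor_cores),
        ("--deploy-mode", deploy_mode),
    ]
    for flag, value in options:
        if value is not None:
            args += [flag, str(value)]
    args.append(script)  # the application script always comes last
    return args


example = spark_submit_args(
    "spark_test.py",
    "spark://192.168.56.111:7077",
    executor_memory="1g",  # example value, not from the slides
    deploy_mode="client",
)
print(" ".join(example))
```

The resulting list can be passed to `subprocess.call` from the Spark installation directory, or joined into a string and pasted into a shell.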

Hadoop Yarn: scml1

- `vim spark-1.2.0/conf/spark-env.sh`
  - Add `export HADOOP_CONF_DIR="/home/scml/hadoop2.6.0/etc/hadoop/"`
- Check whether the Yarn manager is running
  - `spark-1.2.0/bin/spark-submit --master yarn-client spark_test.py`
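`HADOOP_CONF_DIR` points at the directory that holds `core-site.xml`, the same file that decides which `hdfs://` address to use in `sc.textFile`. As a sketch, the default filesystem can be read from that file with the standard library. The XML below is a made-up sample rather than the course's actual file, and `default_fs` is a hypothetical helper; `fs.defaultFS` is the property name used by Hadoop 2.x.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample of a core-site.xml; a real one lives in HADOOP_CONF_DIR.
SAMPLE_CORE_SITE = """<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://192.168.56.111:9000</value>
  </property>
</configuration>
"""


def default_fs(core_site_xml):
    """Return the value of fs.defaultFS, or None if the property is absent."""
    root = ET.fromstring(core_site_xml)
    for prop in root.findall("property"):
        if prop.findtext("name") == "fs.defaultFS":
            return prop.findtext("value").strip()
    return None


base = default_fs(SAMPLE_CORE_SITE)
print(base + "/kddcup.data_10_percent")
```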

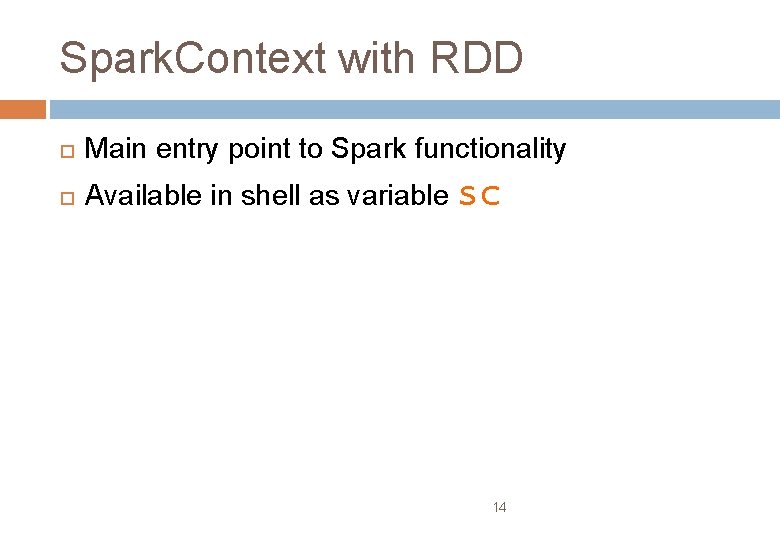

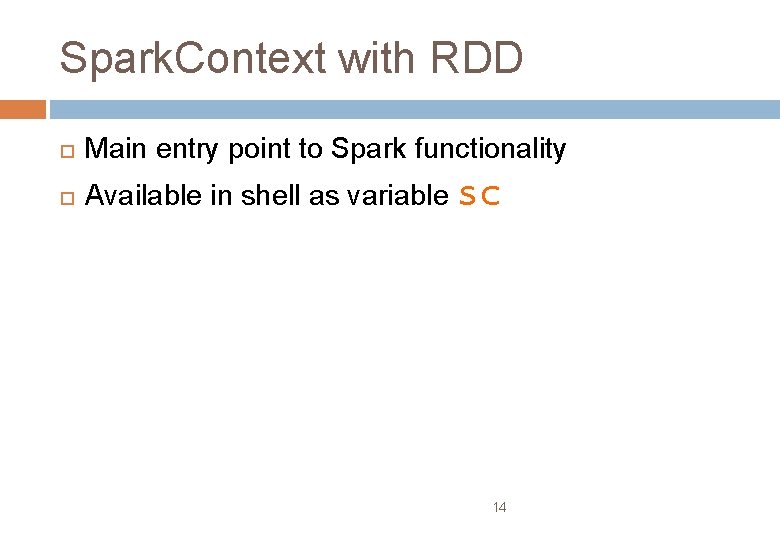

SparkContext with RDD

- Main entry point to Spark functionality
- Available in the shell as the variable `sc`

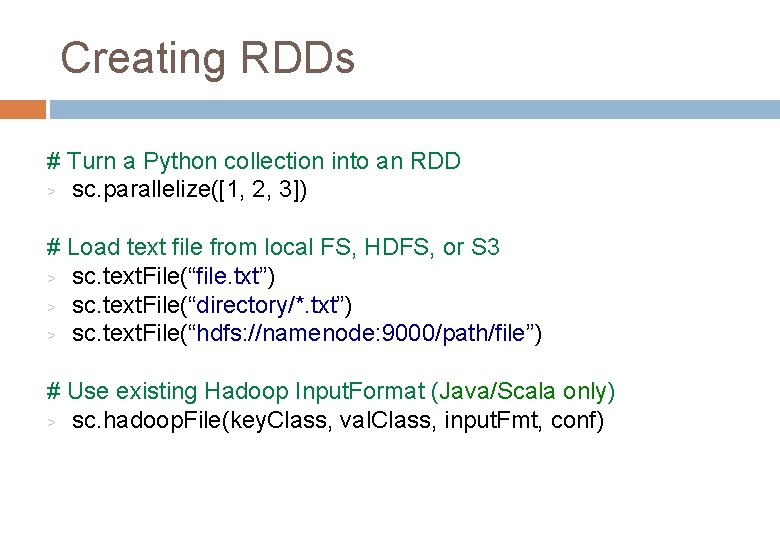

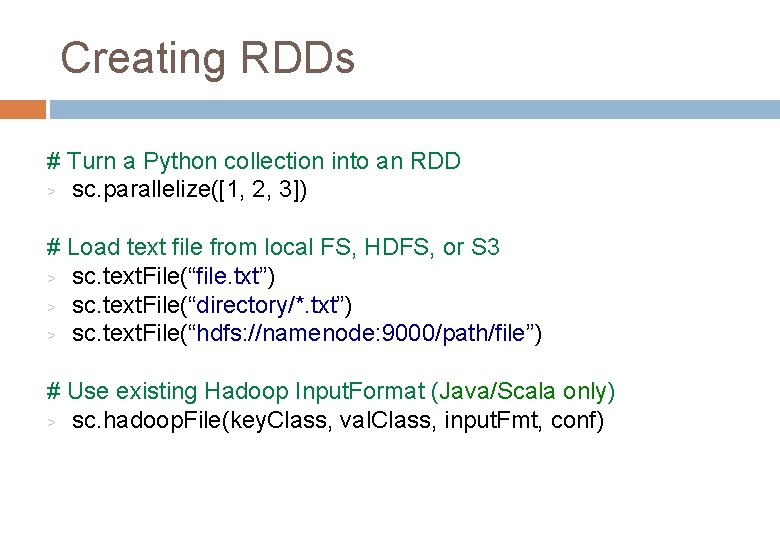

Creating RDDs

    # Turn a Python collection into an RDD
    sc.parallelize([1, 2, 3])

    # Load text file from local FS, HDFS, or S3
    sc.textFile("file.txt")
    sc.textFile("directory/*.txt")
    sc.textFile("hdfs://namenode:9000/path/file")

    # Use existing Hadoop InputFormat (Java/Scala only)
    sc.hadoopFile(keyClass, valClass, inputFmt, conf)
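One way to picture what `parallelize` does with a local collection is to cut it into contiguous slices, one per partition. The sketch below is a plain-Python model under that assumption. `slice_collection` is a hypothetical name, and the exact slicing Spark uses internally may differ.

```python
def slice_collection(items, num_slices):
    """Cut a list into num_slices contiguous, roughly equal partitions."""
    n = len(items)
    return [
        items[i * n // num_slices:(i + 1) * n // num_slices]
        for i in range(num_slices)
    ]


partitions = slice_collection([1, 2, 3], 2)
print(partitions)
```

Each partition can then be processed by a different executor, which is what makes the later transformations run in parallel.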

![Basic Transformations](https://slidetodoc.com/presentation_image_h/bfcff353206ca22107a604270129c599/image-17.jpg)

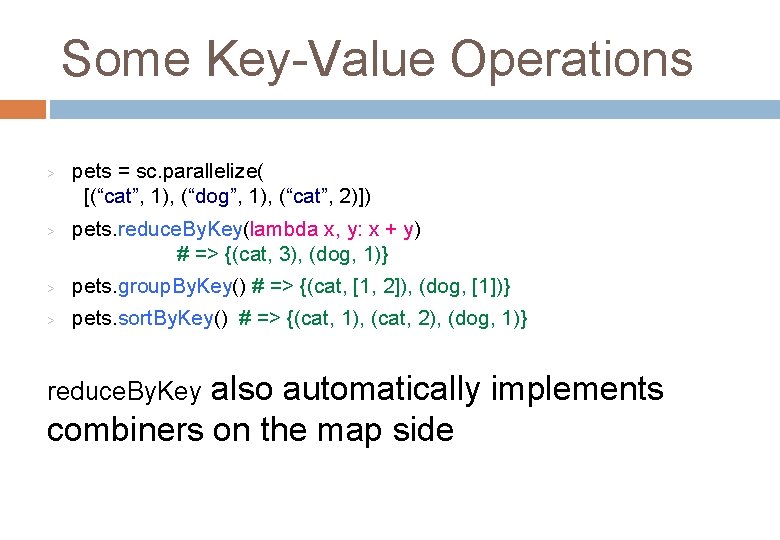

Basic Transformations

    nums = sc.parallelize([1, 2, 3])

    # Pass each element through a function
    squares = nums.map(lambda x: x * x)  # => {1, 4, 9}

    # Keep elements passing a predicate
    even = squares.filter(lambda x: x % 2 == 0)  # => {4}

    # Map each element to zero or more others
    nums.flatMap(lambda x: range(x))  # => {0, 0, 1, 0, 1, 2}

`range(x)` is a range object: the sequence of numbers 0, 1, …, x-1.
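The three transformations have direct counterparts on ordinary Python lists, which makes the expected results easy to check without a cluster. This is a plain-Python analogy, not Spark code.

```python
from itertools import chain

nums = [1, 2, 3]

# map: pass each element through a function
squares = [x * x for x in nums]

# filter: keep elements passing a predicate
even = [x for x in squares if x % 2 == 0]

# flatMap: map each element to zero or more others, then flatten
flat = list(chain.from_iterable(range(x) for x in nums))

print(squares, even, flat)
```

The flattened result has six elements because `range(1)`, `range(2)`, and `range(3)` contribute one, two, and three numbers respectively.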

![Basic Actions](https://slidetodoc.com/presentation_image_h/bfcff353206ca22107a604270129c599/image-18.jpg)

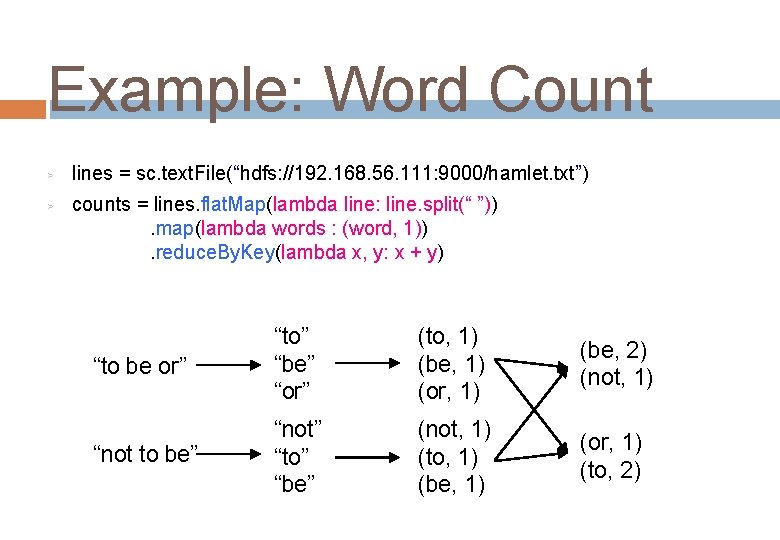

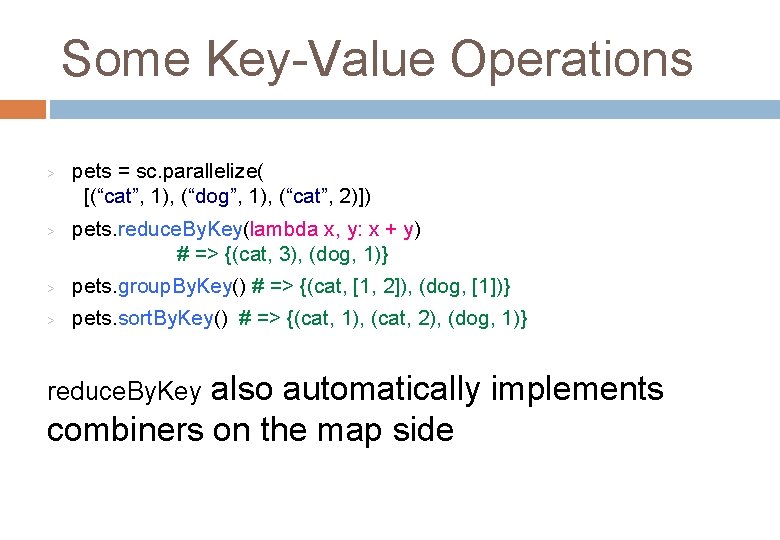

Basic Actions

    nums = sc.parallelize([1, 2, 3])

    # Retrieve RDD contents as a local collection
    nums.collect()  # => [1, 2, 3]

    # Return first K elements
    nums.take(2)  # => [1, 2]

    # Count number of elements
    nums.count()  # => 3

    # Merge elements with an associative function
    nums.reduce(lambda x, y: x + y)  # => 6

    # Write elements to a text file
    nums.saveAsTextFile("hdfs://file.txt")
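The requirement that `reduce` gets an associative function follows from how a distributed reduce works: each partition is reduced on its own and the partial results are merged afterwards. The sketch below models that in plain Python. `partitioned_reduce` and the partition layout are assumptions for illustration.

```python
from functools import reduce
from itertools import islice
from operator import add, sub

nums = [1, 2, 3]

collected = list(nums)             # like collect()
first_two = list(islice(nums, 2))  # like take(2)
count = len(nums)                  # like count()
total = reduce(add, nums)          # like reduce(lambda x, y: x + y)


def partitioned_reduce(partitions, func):
    """Reduce each partition on its own, then merge the partial results."""
    partials = [reduce(func, part) for part in partitions if part]
    return reduce(func, partials)


partitions = [[1], [2, 3]]  # hypothetical split of nums across two executors

print(collected, first_two, count, total)
# Addition is associative, so the partitioned result matches the local one.
print(partitioned_reduce(partitions, add))
# Subtraction is not, so the two ways of reducing disagree.
print(reduce(sub, nums), partitioned_reduce(partitions, sub))
```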

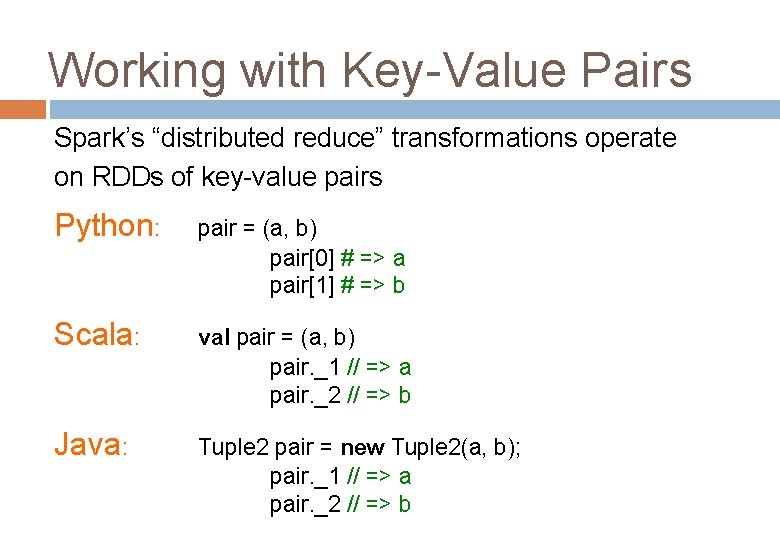

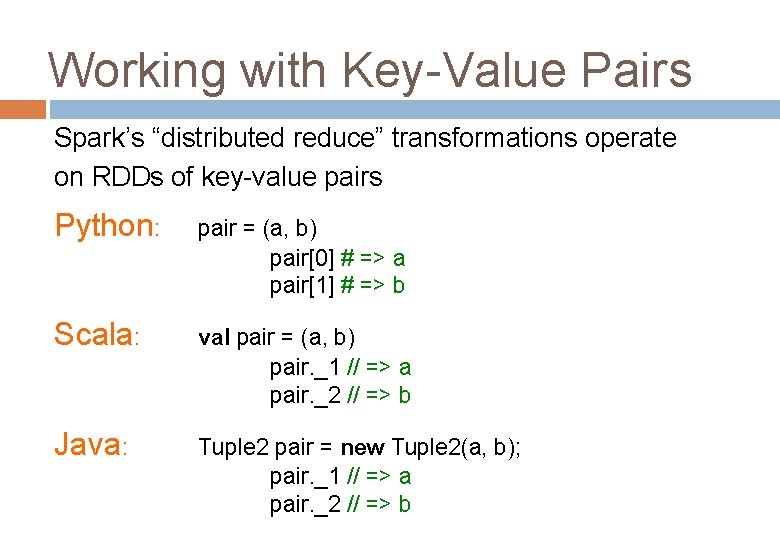

Working with Key-Value Pairs

Spark's "distributed reduce" transformations operate on RDDs of key-value pairs.

Python:

    pair = (a, b)
    pair[0]  # => a
    pair[1]  # => b

Scala:

    val pair = (a, b)
    pair._1  // => a
    pair._2  // => b

Java:

    Tuple2 pair = new Tuple2(a, b);
    pair._1  // => a
    pair._2  // => b

Some Key-Value Operations

    pets = sc.parallelize([("cat", 1), ("dog", 1), ("cat", 2)])
    pets.reduceByKey(lambda x, y: x + y)  # => {(cat, 3), (dog, 1)}
    pets.groupByKey()  # => {(cat, [1, 2]), (dog, [1])}
    pets.sortByKey()  # => {(cat, 1), (cat, 2), (dog, 1)}

`reduceByKey` also automatically implements combiners on the map side.
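A map-side combiner means each partition first merges its own values per key, so only one value per key and partition has to cross the network. The following plain-Python model illustrates the idea. The helper names and the two-partition layout are assumptions, and this is not how Spark is implemented internally.

```python
from collections import defaultdict


def combine_locally(partition, func):
    """Map side: merge values that share a key inside one partition."""
    combined = {}
    for key, value in partition:
        combined[key] = func(combined[key], value) if key in combined else value
    return combined


def reduce_by_key(partitions, func):
    """Reduce side: merge the per-partition results key by key."""
    merged = {}
    for partition in partitions:
        for key, value in combine_locally(partition, func).items():
            merged[key] = func(merged[key], value) if key in merged else value
    return merged


def group_by_key(partitions):
    """No combining is possible here: every value is kept and shipped."""
    groups = defaultdict(list)
    for partition in partitions:
        for key, value in partition:
            groups[key].append(value)
    return dict(groups)


# Hypothetical layout of the pets RDD across two partitions.
partitions = [[("cat", 1), ("dog", 1)], [("cat", 2)]]

print(reduce_by_key(partitions, lambda x, y: x + y))
print(group_by_key(partitions))
```

This is why a sum per key is usually cheaper with `reduceByKey` than with `groupByKey` followed by a sum.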

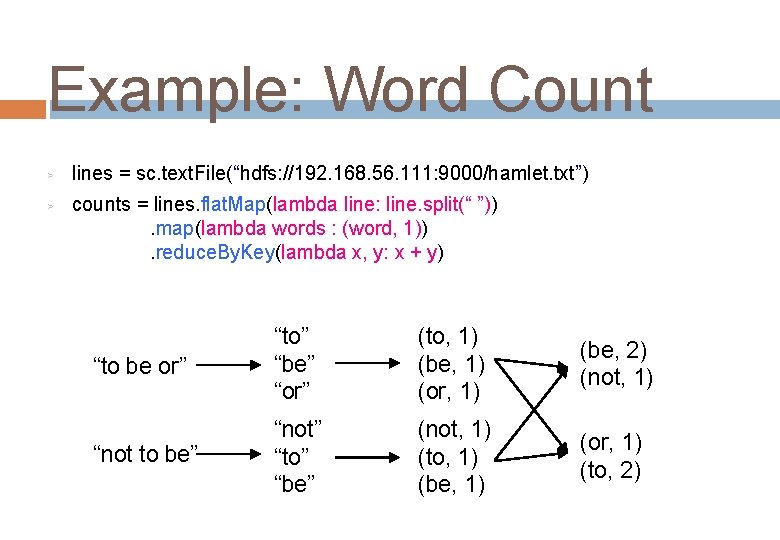

Example: Word Count

    lines = sc.textFile("hdfs://192.168.56.111:9000/hamlet.txt")
    counts = (lines.flatMap(lambda line: line.split(" "))
                   .map(lambda word: (word, 1))
                   .reduceByKey(lambda x, y: x + y))

- "to be or" → "to", "be", "or" → (to, 1), (be, 1), (or, 1)
- "not to be" → "not", "to", "be" → (not, 1), (to, 1), (be, 1)
- Result: (be, 2), (not, 1), (or, 1), (to, 2)
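The same pipeline can be traced step by step on the two example lines using plain Python lists. This is a local analogy of the three stages, not Spark code.

```python
lines = ["to be or", "not to be"]

# flatMap: split every line into words and flatten the result
words = [word for line in lines for word in line.split(" ")]

# map: pair each word with the count 1
pairs = [(word, 1) for word in words]

# reduceByKey: add up the counts that share a word
counts = {}
for word, n in pairs:
    counts[word] = counts.get(word, 0) + n

print(sorted(counts.items()))
```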

Other Key-Value Operations

    visits = sc.parallelize([
        ("index.html", "1.2.3.4"),
        ("about.html", "3.4.5.6"),
        ("index.html", "1.3.3.1"),
    ])
    pageNames = sc.parallelize([
        ("index.html", "Home"),
        ("about.html", "About"),
    ])

    visits.join(pageNames)
    # ("index.html", ("1.2.3.4", "Home"))
    # ("index.html", ("1.3.3.1", "Home"))
    # ("about.html", ("3.4.5.6", "About"))

    visits.cogroup(pageNames)
    # ("index.html", (["1.2.3.4", "1.3.3.1"], ["Home"]))
    # ("about.html", (["3.4.5.6"], ["About"]))
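The difference between the two operations is easiest to see in a small plain-Python model: `join` emits one record per matching pair of values, while `cogroup` emits one record per key with both value lists. The functions below are illustrative stand-ins with quadratic cost, not Spark's implementation.

```python
def join(left, right):
    """One output record for every pair of values that share a key."""
    return [(k, (v, w)) for k, v in left for k2, w in right if k == k2]


def cogroup(left, right):
    """One output record per key, holding the values from each side."""
    keys = sorted({k for k, _ in left} | {k for k, _ in right})
    return [
        (k, ([v for k1, v in left if k1 == k], [w for k2, w in right if k2 == k]))
        for k in keys
    ]


visits = [
    ("index.html", "1.2.3.4"),
    ("about.html", "3.4.5.6"),
    ("index.html", "1.3.3.1"),
]
page_names = [("index.html", "Home"), ("about.html", "About")]

for record in sorted(join(visits, page_names)):
    print(record)
for record in cogroup(visits, page_names):
    print(record)
```

The order of records coming back from a real cluster is not guaranteed, which is why the example sorts before printing.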

Thank you

sena.lai1982@gmail.com