WIREFRAME Supporting Datadependent Parallelism through Dependency Graph Execution

- Slides: 43

WIREFRAME: Supporting Data-dependent Parallelism through Dependency Graph Execution in GPUs Amir. Ali Abdolrashidi†, Devashree Tripathy†, Mehmet Esat Belviranli‡, Laxmi Narayan Bhuyan†, Daniel Wong† †University of California Riverside ‡Oak Ridge National Research Lab WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 1/39

Outline • Introduction • Wireframe • DATS: Dependency-Aware TB Scheduler • Evaluation • Conclusion WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 2/39

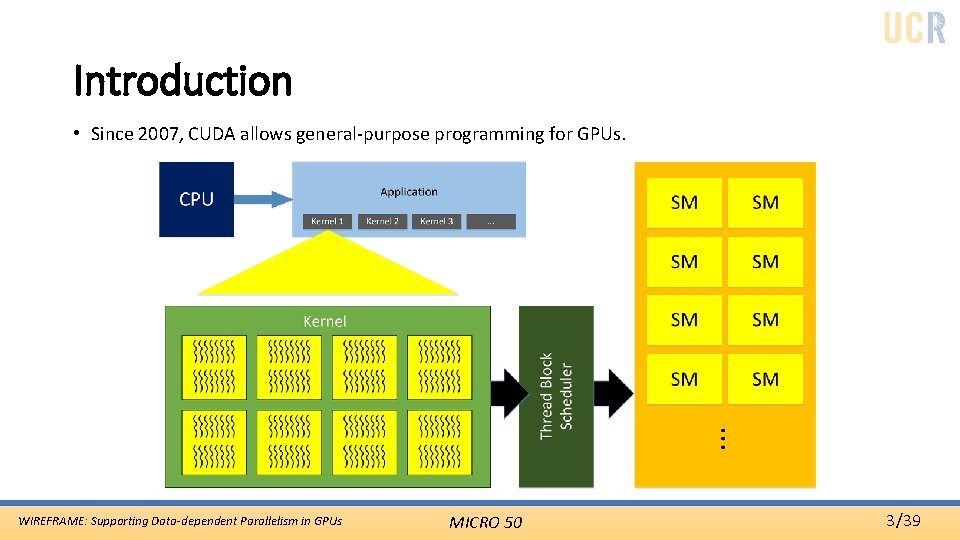

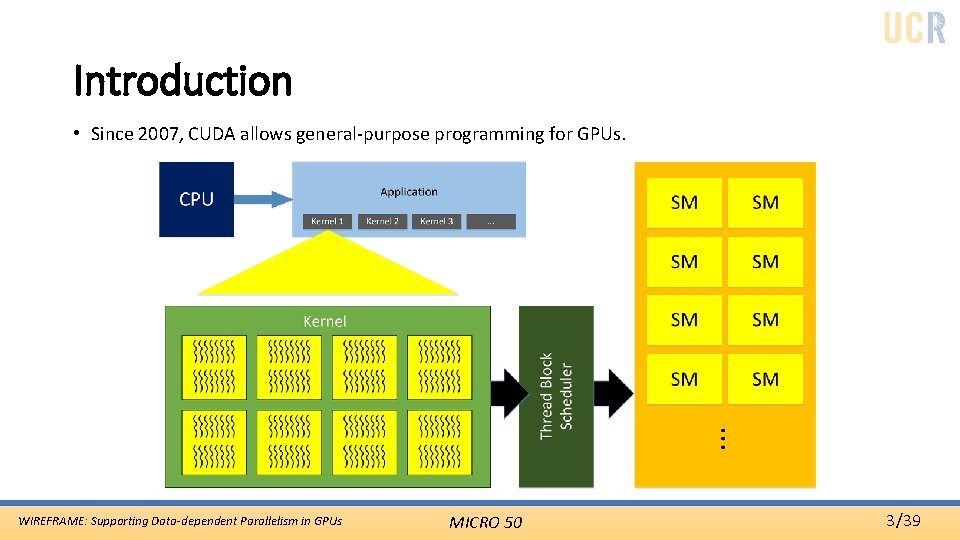

Introduction • Since 2007, CUDA allows general-purpose programming for GPUs. WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 3/39

Introduction • Despite the support for parallelism, GPUs lack support for datadependent parallelism. • No prior work has provided a generalized solution to inter-block data dependencies. WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 4/39

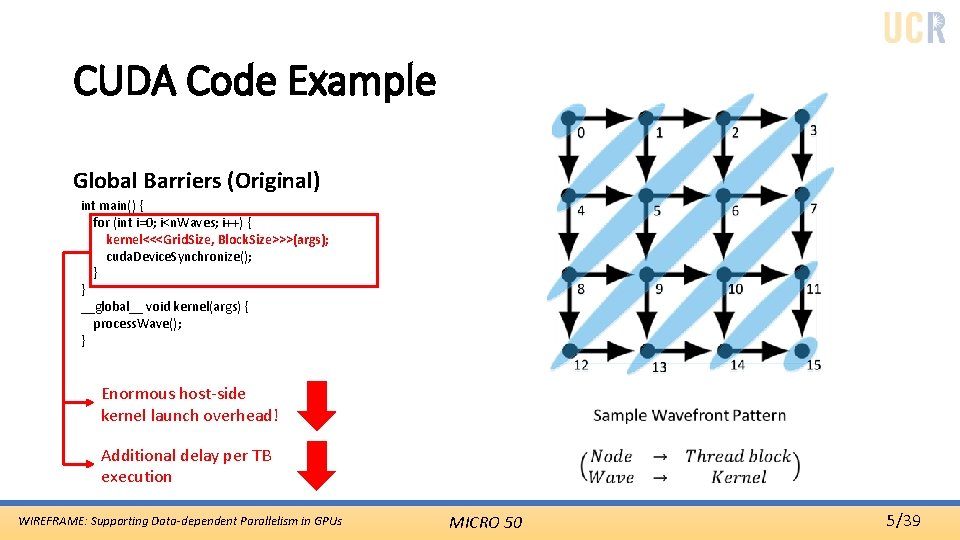

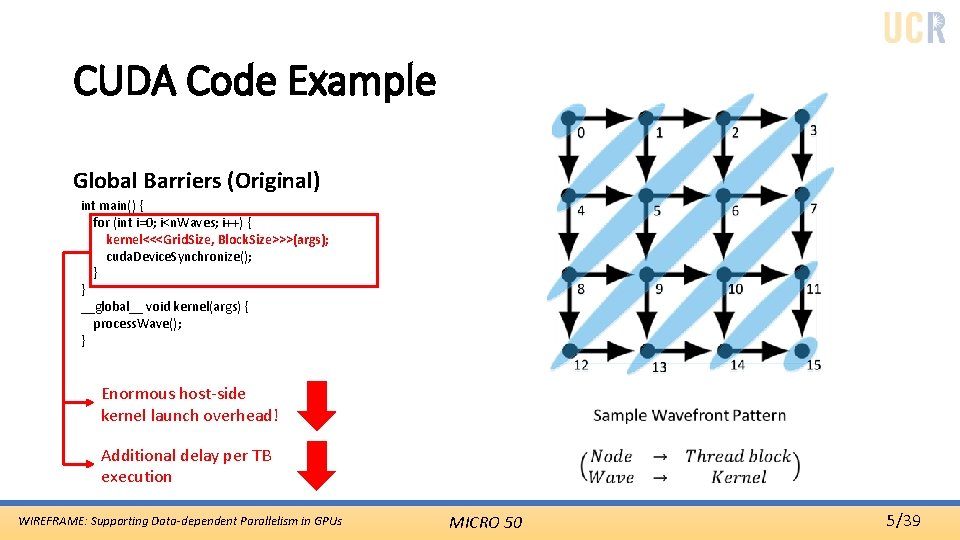

CUDA Code Example Global Barriers (Original) int main() { for (int i=0; i<n. Waves; i++) { kernel<<<Grid. Size, Block. Size>>>(args); cuda. Device. Synchronize(); } } __global__ void kernel(args) { process. Wave(); } Enormous host-side kernel launch overhead! Additional delay per TB execution WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 5/39

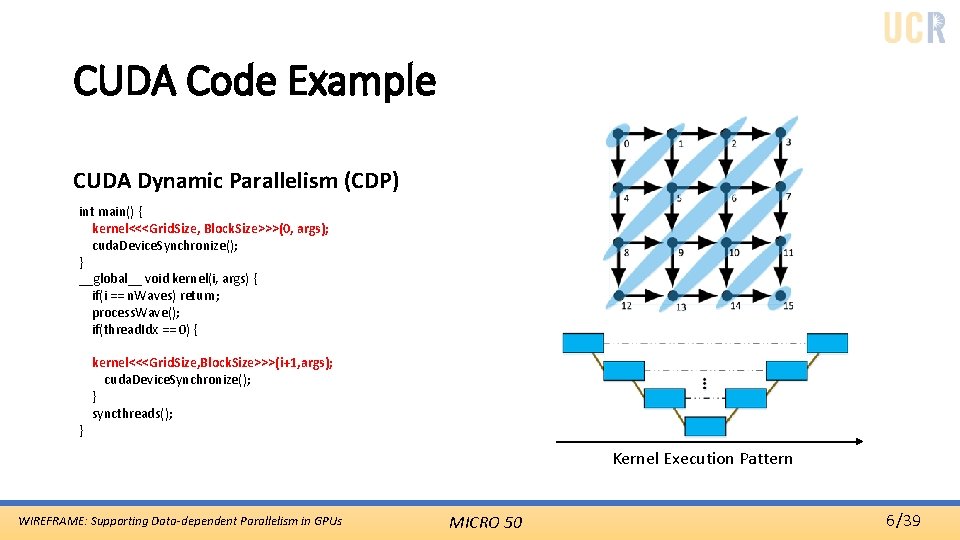

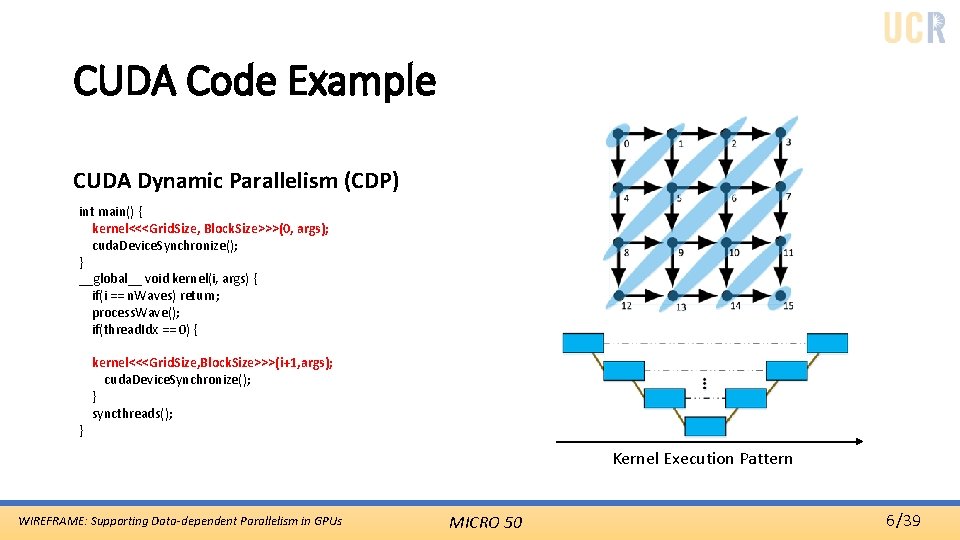

CUDA Code Example CUDA Dynamic Parallelism (CDP) int main() { kernel<<<Grid. Size, Block. Size>>>(0, args); cuda. Device. Synchronize(); } __global__ void kernel(i, args) { if(i == n. Waves) return; process. Wave(); if(thread. Idx == 0) { kernel<<<Grid. Size, Block. Size>>>(i+1, args); cuda. Device. Synchronize(); } syncthreads(); } Kernel Execution Pattern WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 6/39

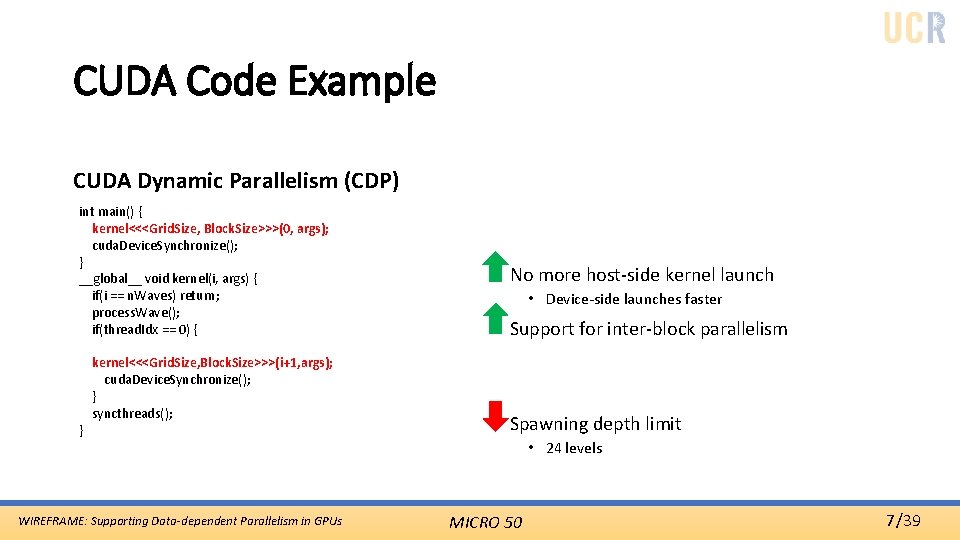

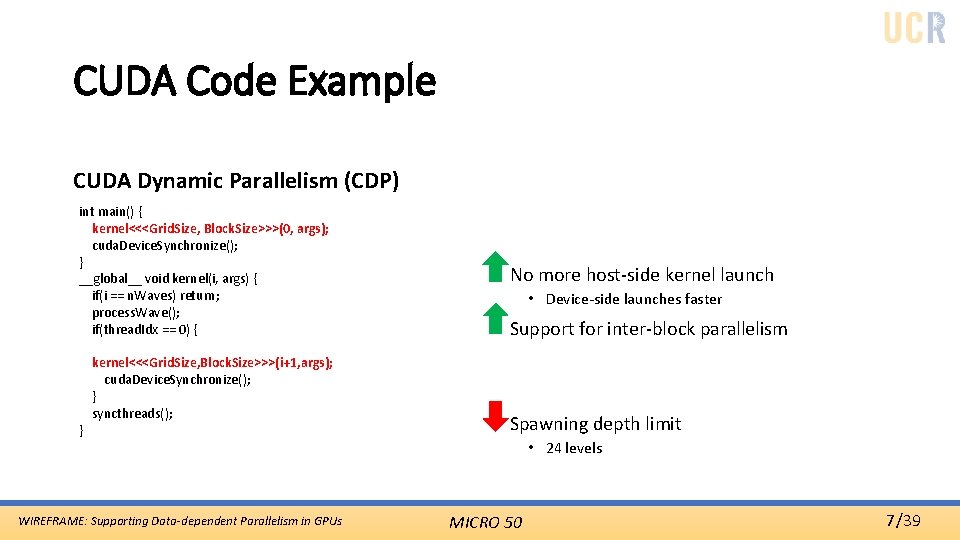

CUDA Code Example CUDA Dynamic Parallelism (CDP) int main() { kernel<<<Grid. Size, Block. Size>>>(0, args); cuda. Device. Synchronize(); } __global__ void kernel(i, args) { if(i == n. Waves) return; process. Wave(); if(thread. Idx == 0) { kernel<<<Grid. Size, Block. Size>>>(i+1, args); cuda. Device. Synchronize(); } syncthreads(); } WIREFRAME: Supporting Data-dependent Parallelism in GPUs • No more host-side kernel launch • Device-side launches faster • Support for inter-block parallelism • Spawning depth limit • 24 levels MICRO 50 7/39

CUDA Code Example CDP (Nested) int main() { parent. Kernel<<<Grid. Size, Block. Size>>>(0, args); cuda. Device. Synchronize(); } __global__ void parent. Kernel(i, args) { for (int i=0; i<n. Waves; i++) { if(thread. Idx == 0) { child. Kernel<<<Grid. Size, Block. Size>>>(args); cuda. Device. Synchronize(); } syncthreads(); } } __global__ void child. Kernel(args) { process. Wave(); } WIREFRAME: Supporting Data-dependent Parallelism in GPUs Kernel Execution Pattern MICRO 50 8/39

CUDA Code Example CDP (Nested) int main() { parent. Kernel<<<Grid. Size, Block. Size>>>(0, args); cuda. Device. Synchronize(); } __global__ void parent. Kernel(i, args) { for (int i=0; i<n. Waves; i++) { if(thread. Idx == 0) { child. Kernel<<<Grid. Size, Block. Size>>>(args); cuda. Device. Synchronize(); } syncthreads(); } } __global__ void child. Kernel(args) { process. Wave(); } WIREFRAME: Supporting Data-dependent Parallelism in GPUs • Spawning depth is no more a constraint • The device-side kernel launch still has significant overhead MICRO 50 9/39

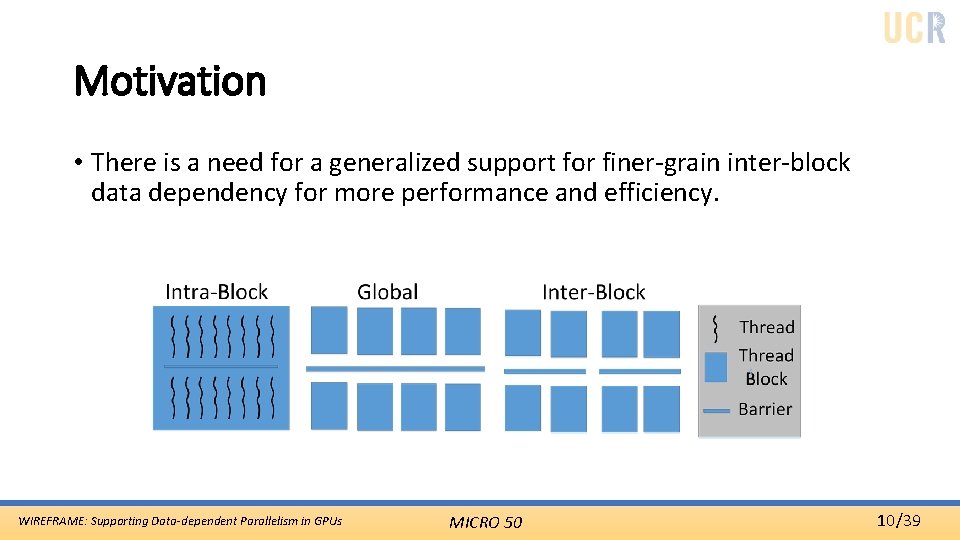

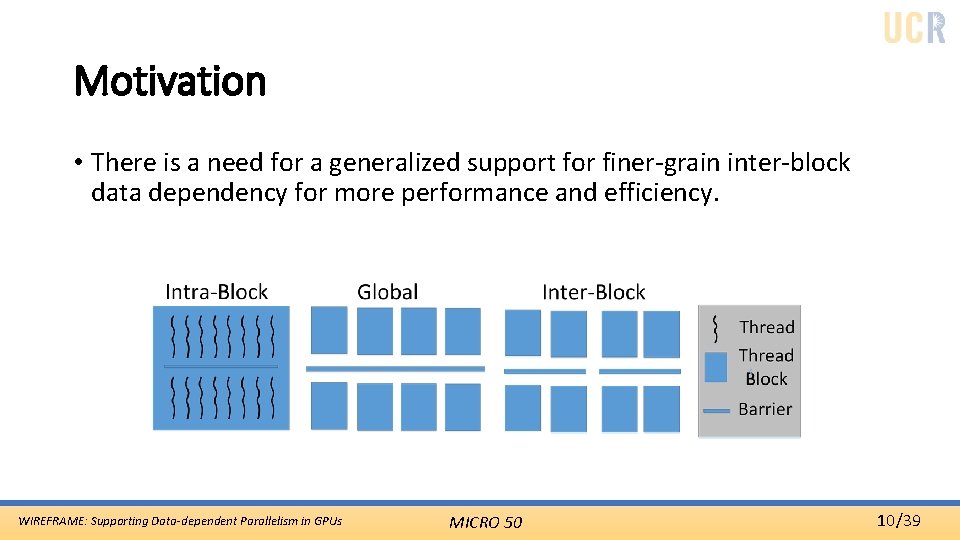

Motivation • There is a need for a generalized support for finer-grain inter-block data dependency for more performance and efficiency. WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 10/39

Motivation • Current limitations • High device-side kernel launch overhead • No general inter-block data dependency support • Our solution: Wireframe • Hybrid HW/SW solution • Hardware support for dependent parallelism • Thread block scheduling • Programming model • Main focus is inter-block dependencies WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 11/39

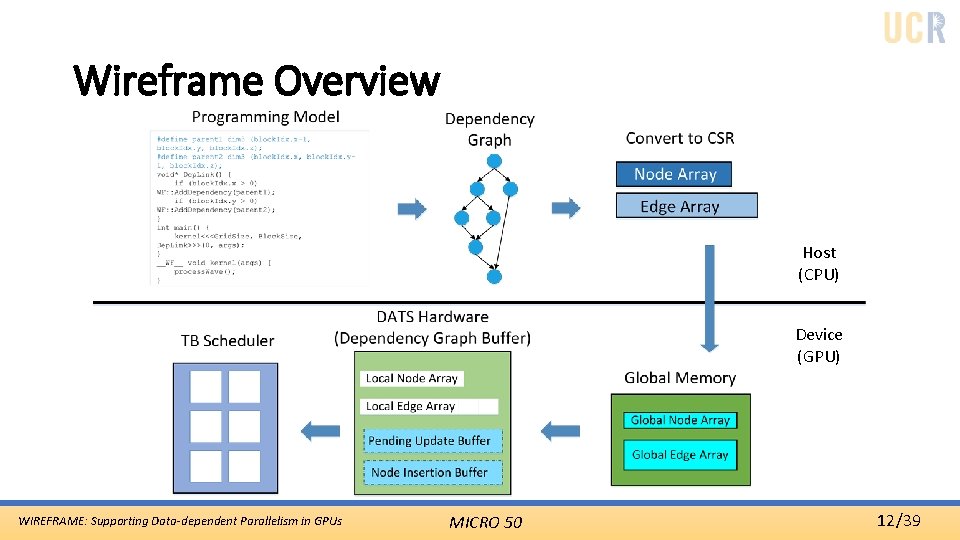

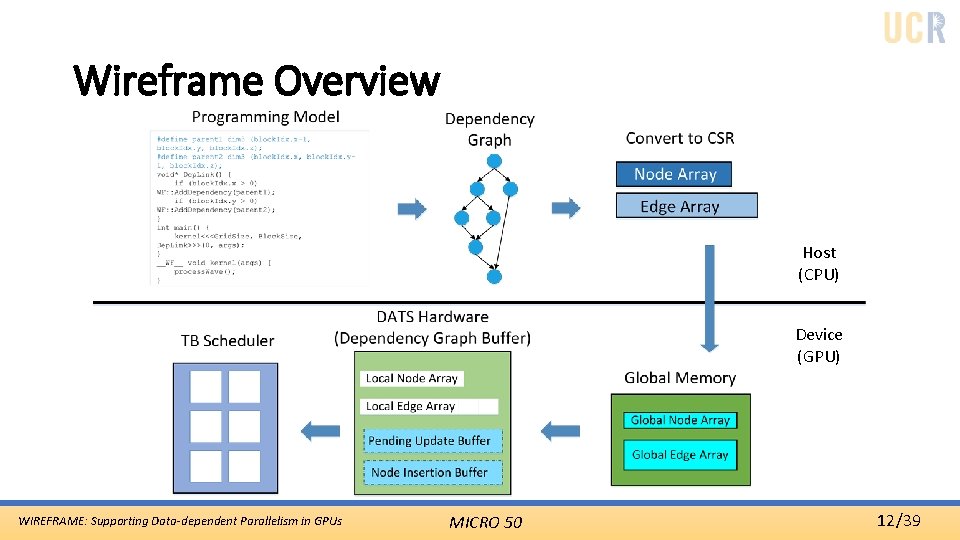

Wireframe Overview Host (CPU) Device (GPU) WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 12/39

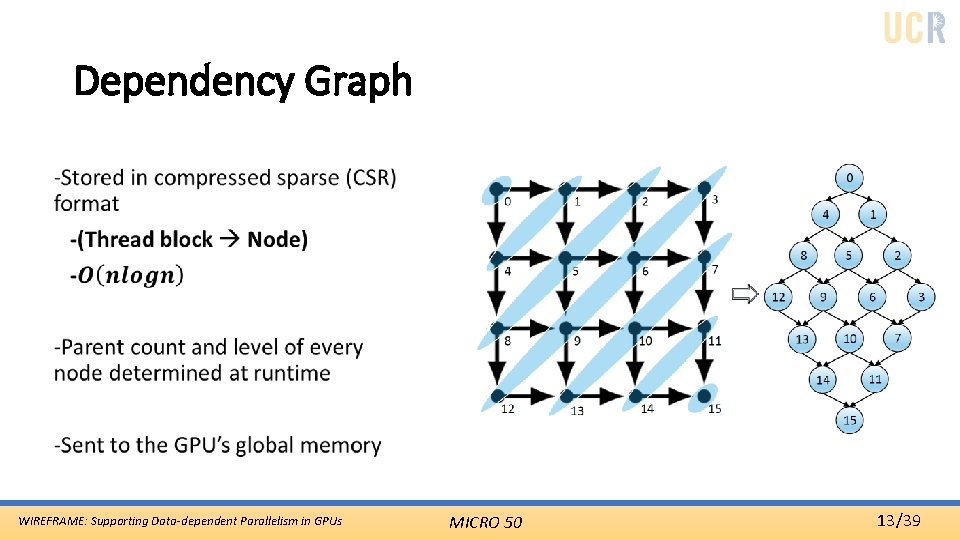

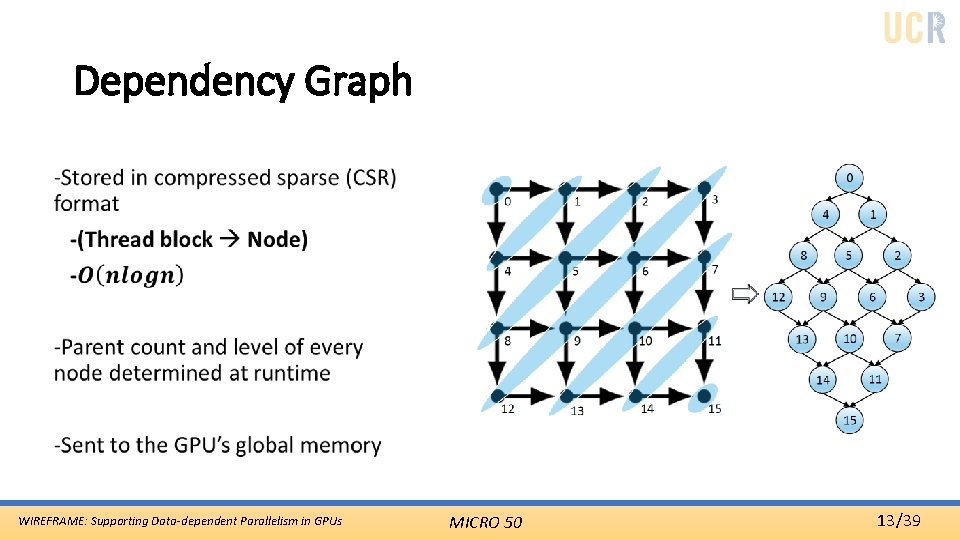

Dependency Graph • WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 13/39

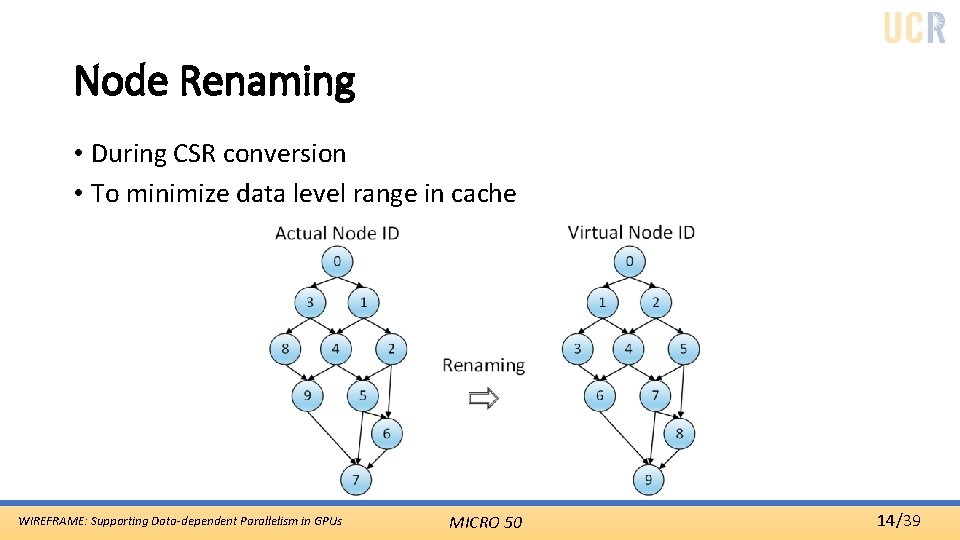

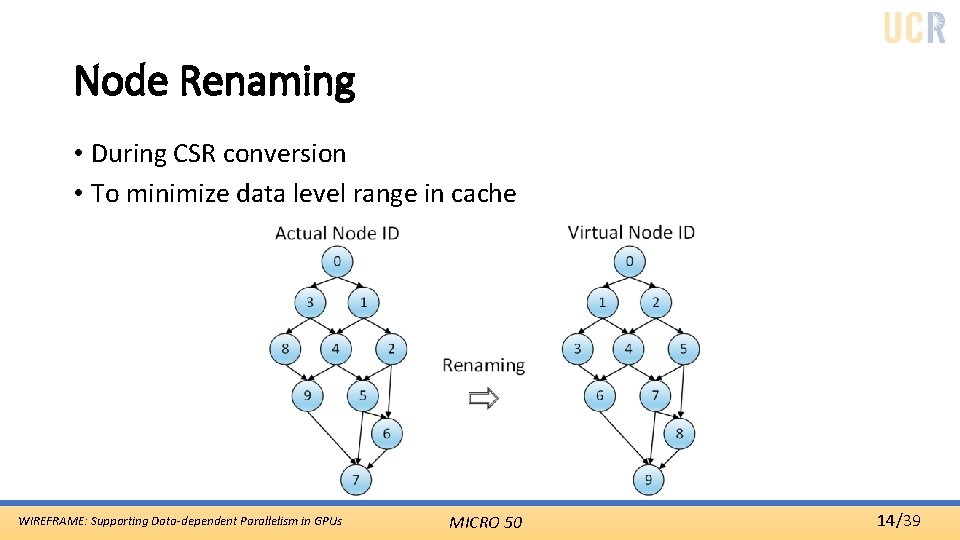

Node Renaming • During CSR conversion • To minimize data level range in cache WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 14/39

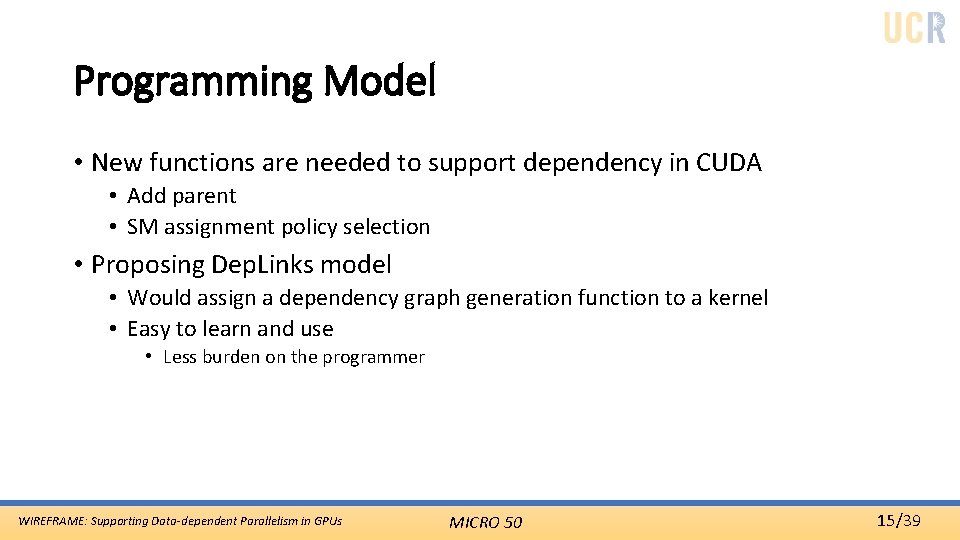

Programming Model • New functions are needed to support dependency in CUDA • Add parent • SM assignment policy selection • Proposing Dep. Links model • Would assign a dependency graph generation function to a kernel • Easy to learn and use • Less burden on the programmer WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 15/39

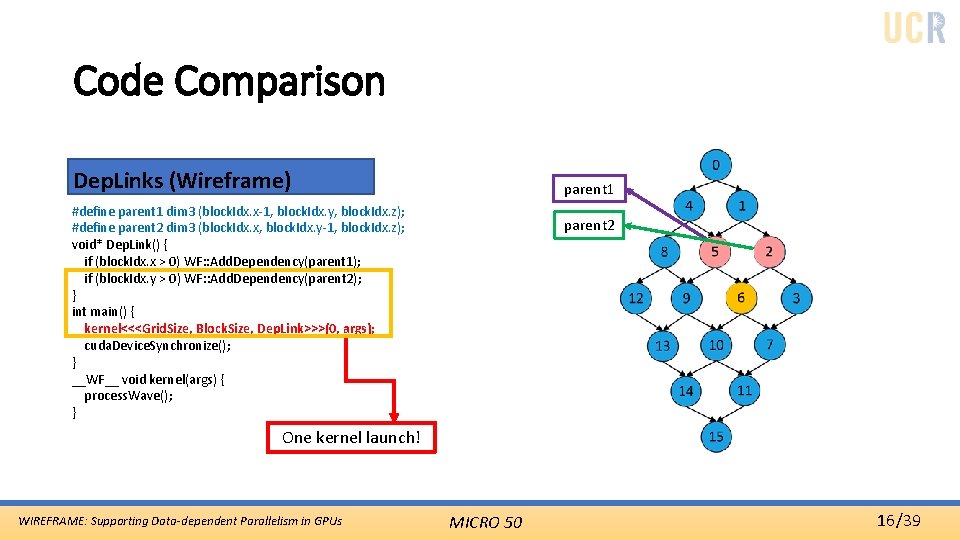

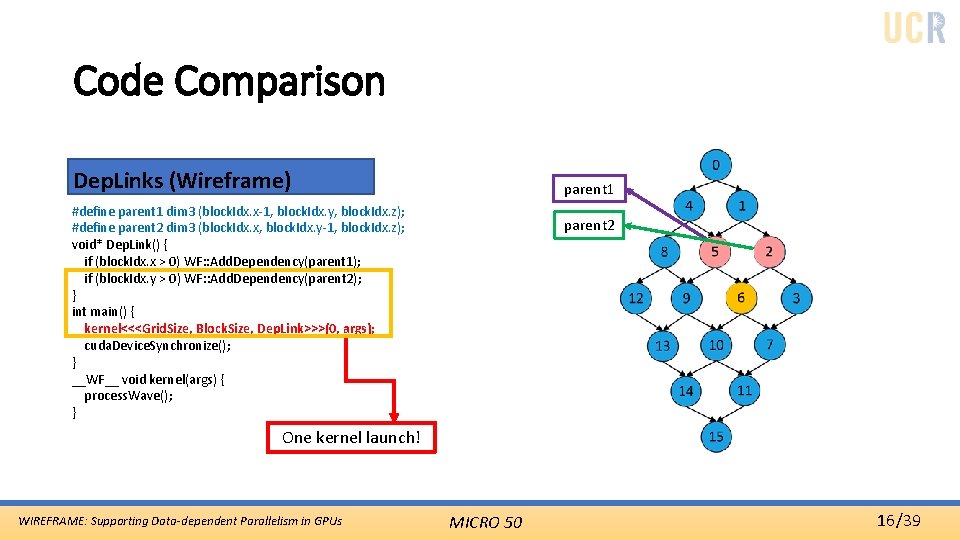

Code Comparison Dep. Links (Wireframe) parent 1 #define parent 1 dim 3 (block. Idx. x-1, block. Idx. y, block. Idx. z); #define parent 2 dim 3 (block. Idx. x, block. Idx. y-1, block. Idx. z); void* Dep. Link() { if (block. Idx. x > 0) WF: : Add. Dependency(parent 1); if (block. Idx. y > 0) WF: : Add. Dependency(parent 2); } int main() { kernel<<<Grid. Size, Block. Size, Dep. Link>>>(0, args); cuda. Device. Synchronize(); } __WF__ void kernel(args) { process. Wave(); } parent 2 One kernel launch! WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 16/39

Outline • Introduction • Wireframe • DATS: Dependency-Aware TB Scheduler • Evaluation • Conclusion WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 17/39

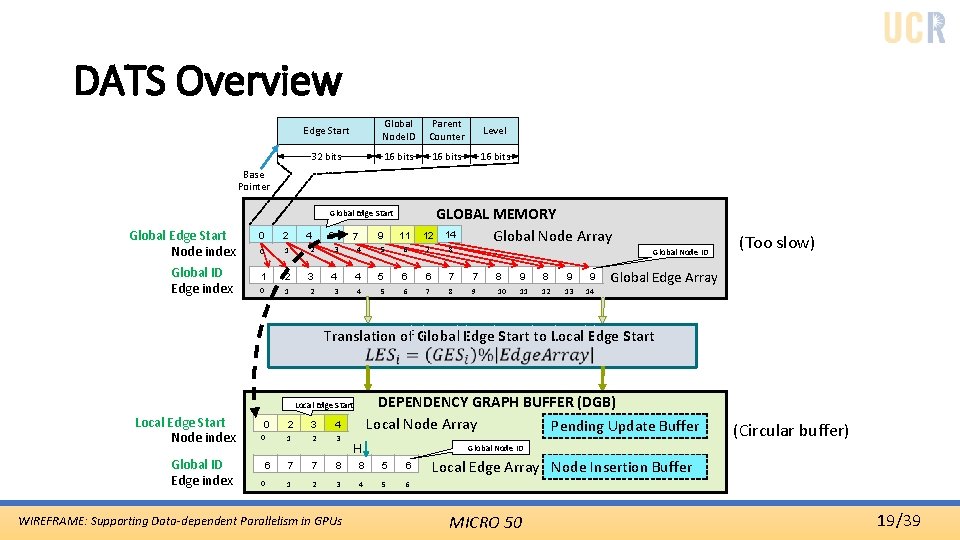

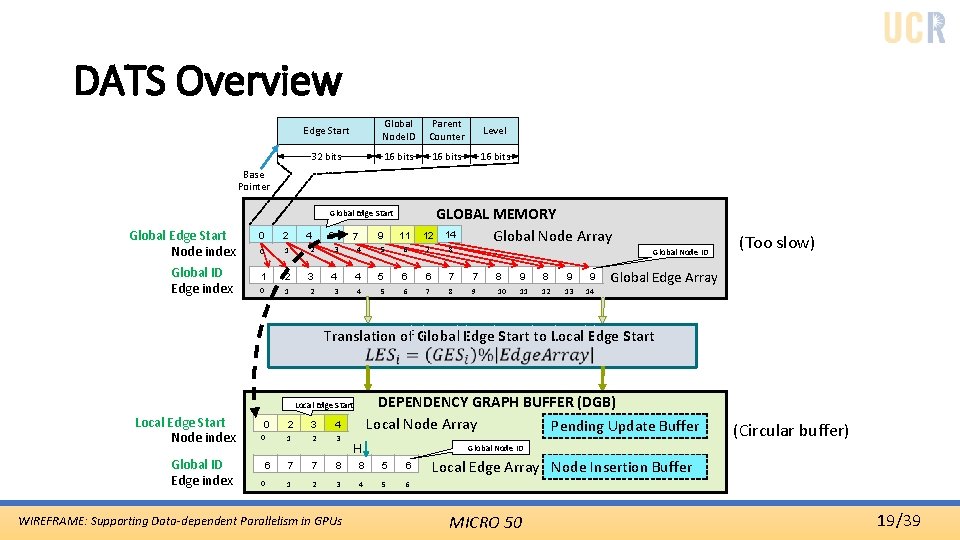

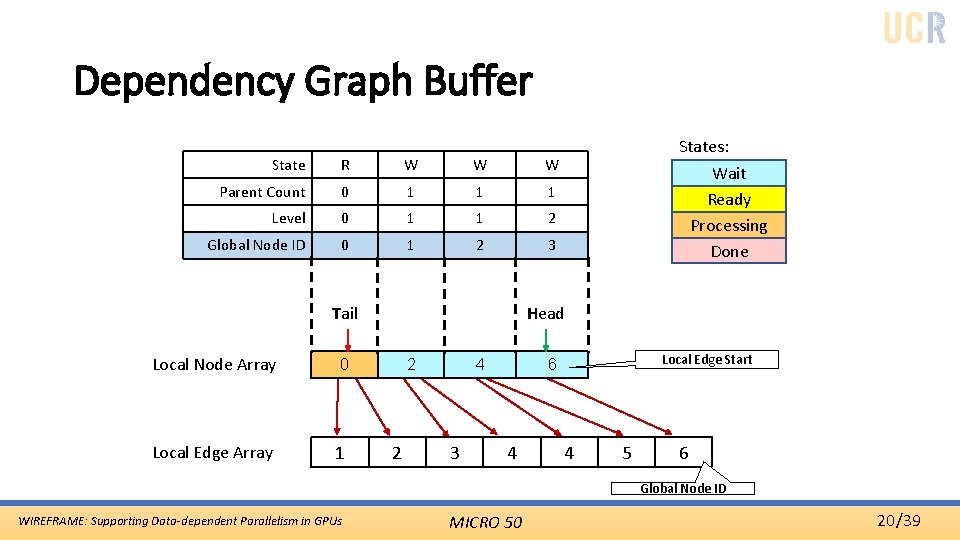

Dependency-Aware TB Scheduler (DATS) • Thread block scheduler • Issues the relevant thread block at the time for execution based on the dependency graph • Dependency Graph Buffer (DGB) • Cache data from global memory • Challenge: Efficient caching and data utilization WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 18/39

DATS Overview Edge Start Global Node. ID Parent Counter Level 32 bits 16 bits Base Pointer Global Edge Start Node index Global ID Edge index 2 4 7 9 0 1 2 3 4 5 6 1 2 3 4 4 5 0 1 2 3 4 5 0 6 Global ID Edge index 0 6 0 8 6 6 7 7 8 9 9 6 7 8 9 10 11 12 13 14 Global Node ID (Too slow) Global Edge Array DEPENDENCY GRAPH BUFFER (DGB) Local Node Array Pending Update Buffer Local Edge Start 0 7 Translation of Global Edge Start to Local Edge Start Node index 11 11 GLOBAL MEMORY 12 12 14 Global Node Array 2 3 4 1 2 3 7 7 8 8 5 6 1 2 3 4 5 6 WIREFRAME: Supporting Data-dependent Parallelism in GPUs H (Circular buffer) Global Node ID Local Edge Array Node Insertion Buffer MICRO 50 19/39

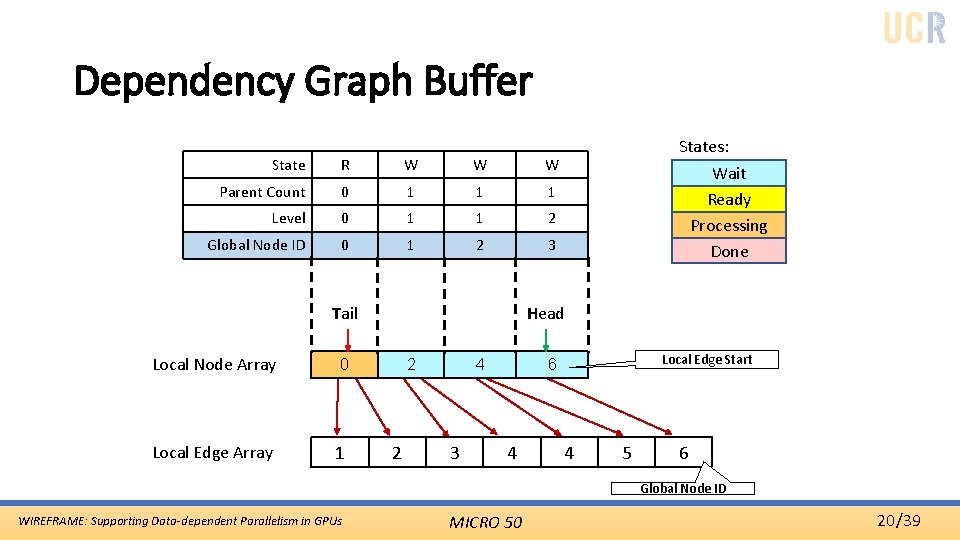

Dependency Graph Buffer State R W W W Parent Count 0 1 1 1 Level 0 1 1 2 Global Node ID 0 1 2 3 0 Local Edge Array 1 Wait Ready Processing Done H Head T Tail Local Node Array States: 2 2 4 3 Local Edge Start 6 4 4 5 6 Global Node ID WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 20/39

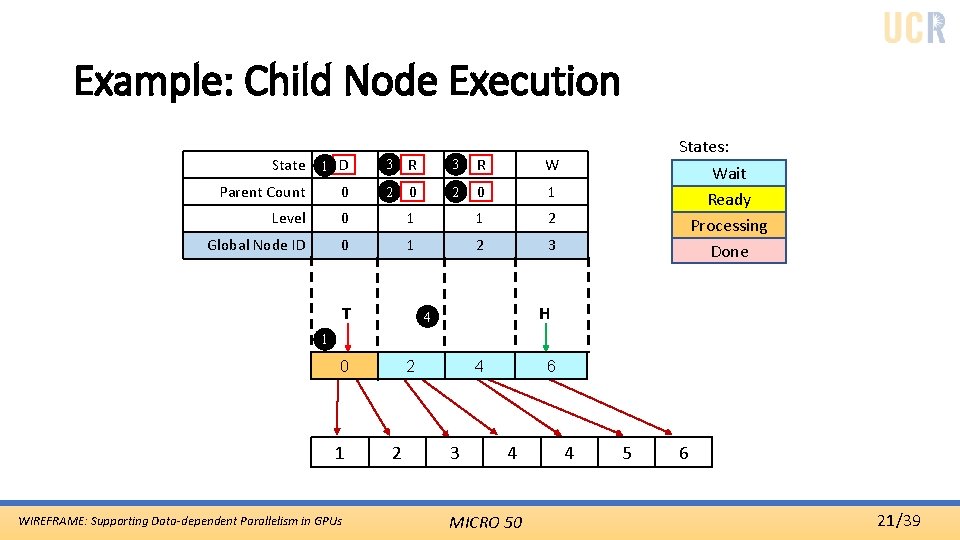

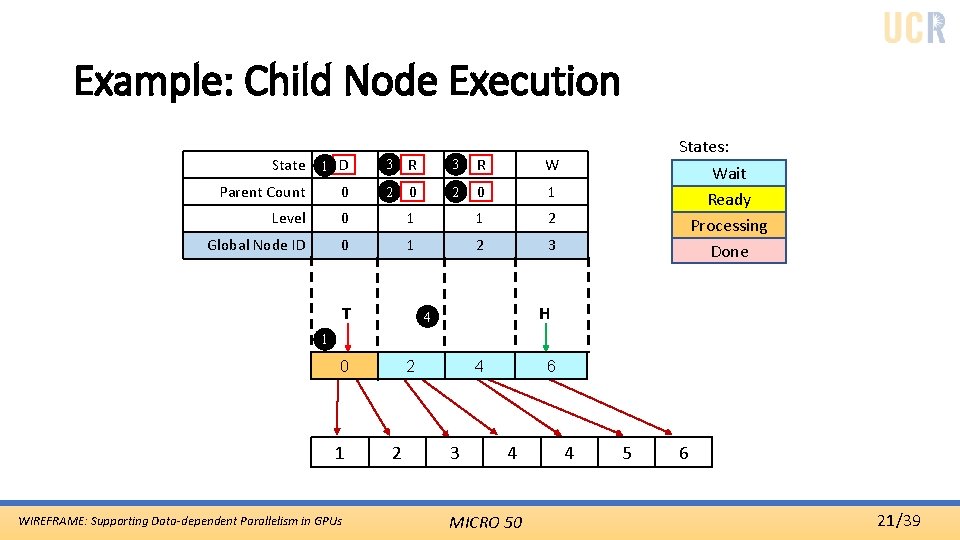

Example: Child Node Execution State 1 D R 3 W R W 10 1 Parent Count 0 Level 0 1 1 2 Global Node ID 0 1 2 3 2 10 3 W R T 2 States: Wait Ready Processing Done H 4 1 0 1 WIREFRAME: Supporting Data-dependent Parallelism in GPUs 2 2 4 3 6 4 MICRO 50 4 5 6 21/39

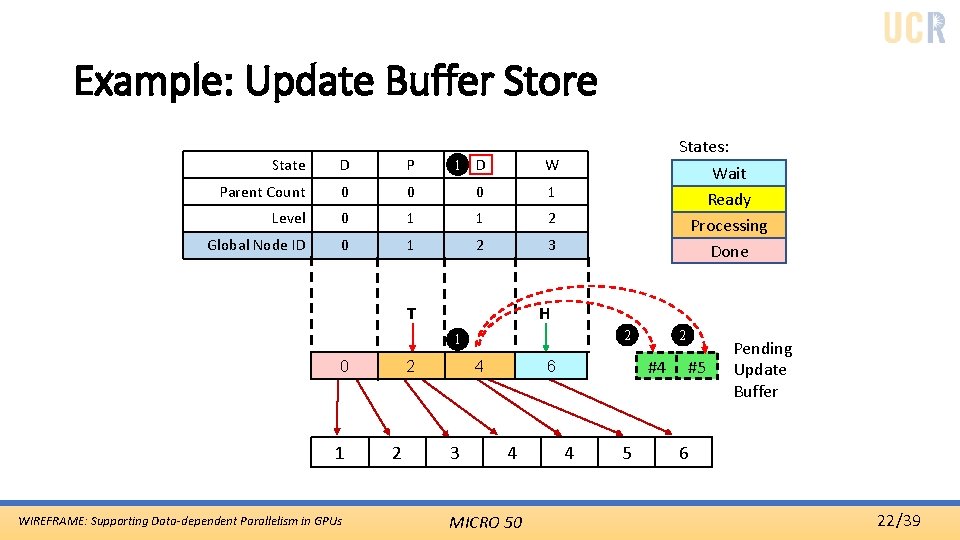

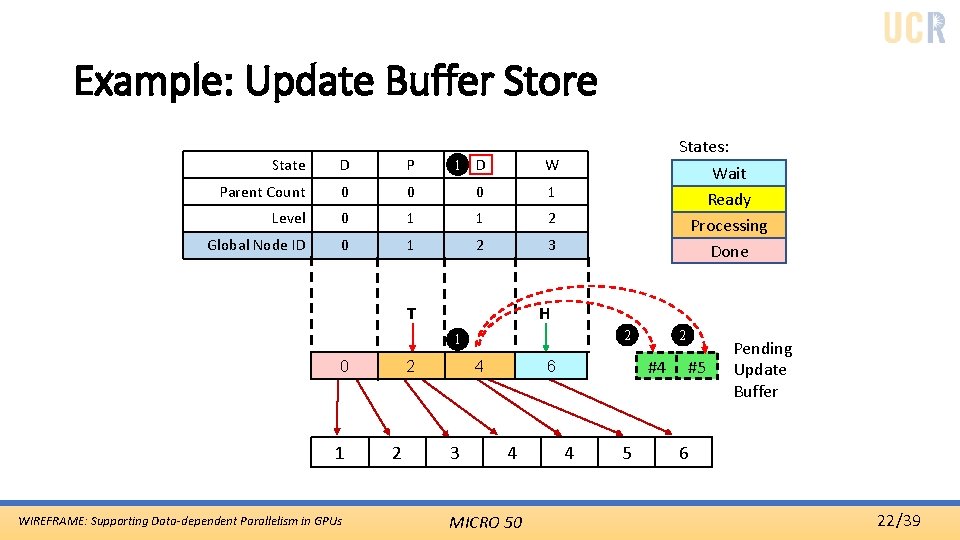

Example: Update Buffer Store State D P PD W Parent Count 0 0 0 1 Level 0 1 1 2 Global Node ID 0 1 2 3 1 States: Wait Ready Processing Done H T 2 1 0 1 WIREFRAME: Supporting Data-dependent Parallelism in GPUs 2 2 4 3 #4 6 4 MICRO 50 2 4 5 #5 Pending Update Buffer 6 22/39

Example: Invalidation… State D PD D 3 W R Parent Count 0 0 0 2 Level 0 1 1 2 Global Node ID 0 1 2 3 States: Wait Ready Processing Done 0 1 5 H T Enough spaces to load to DGB 1 0 1 WIREFRAME: Supporting Data-dependent Parallelism in GPUs 4 2 2 4 3 #4 6 4 MICRO 50 4 5 #5 #4 6 23/39

Example: …Reloading data State W W D R Parent Count 2 1 0 0 Level 2 2 1 2 Global Node ID 4 5 2 3 6 Load complete! 0 6 WIREFRAME: Supporting Data-dependent Parallelism in GPUs Wait Ready Processing Done T H 2 7 States: - 7 #4 6 - MICRO 50 - - #5 #4 6 24/39

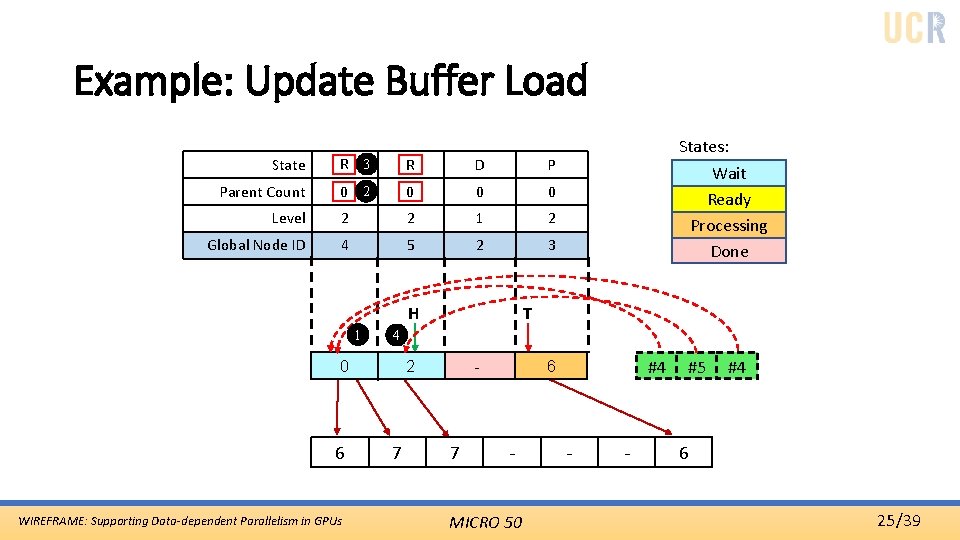

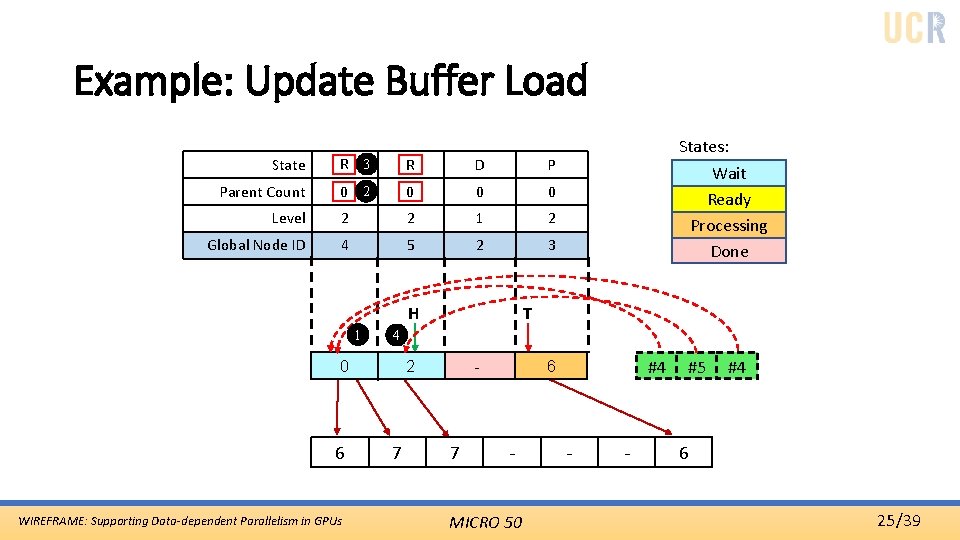

Example: Update Buffer Load State R 3 W W R D P Parent Count 12 2 0 01 0 0 Level 2 2 1 2 Global Node ID 4 5 2 3 H 1 WIREFRAME: Supporting Data-dependent Parallelism in GPUs Wait Ready Processing Done T 4 0 6 States: 2 7 - 7 #4 6 - MICRO 50 - - #5 #4 6 25/39

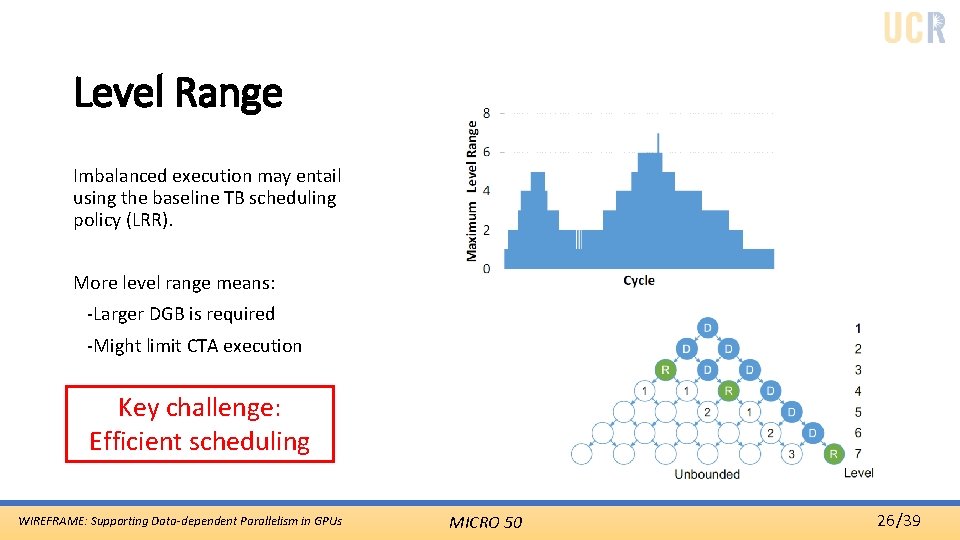

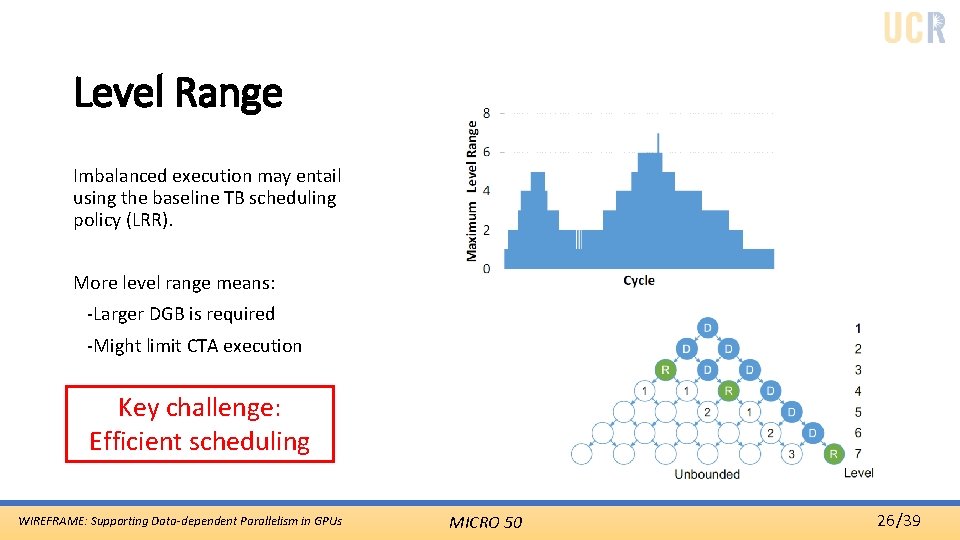

Level Range Imbalanced execution may entail using the baseline TB scheduling policy (LRR). More level range means: -Larger DGB is required -Might limit CTA execution Key challenge: Efficient scheduling WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 26/39

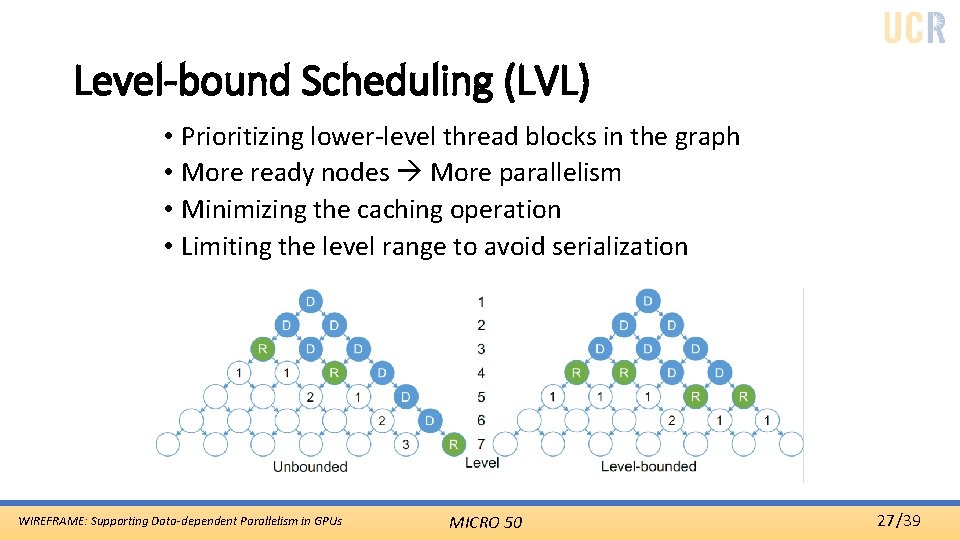

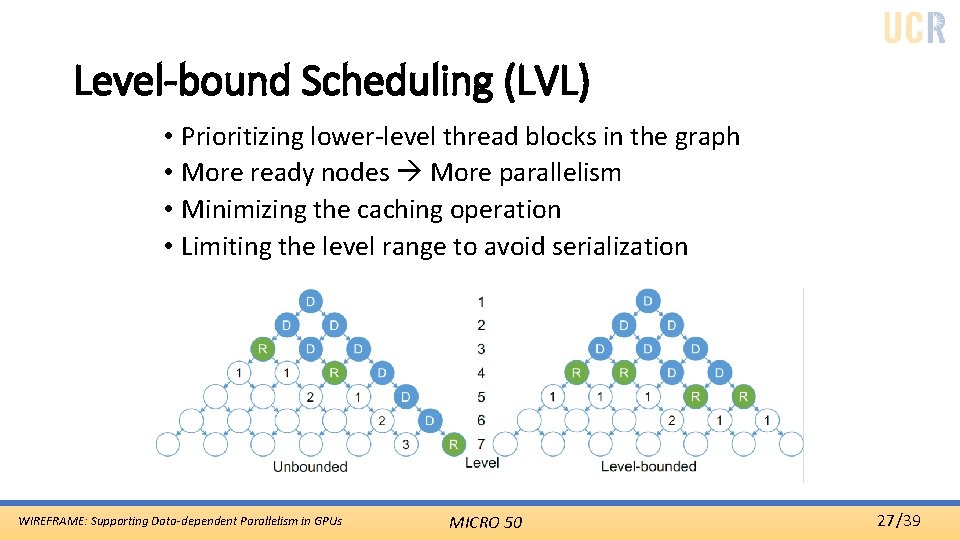

Level-bound Scheduling (LVL) • Prioritizing lower-level thread blocks in the graph • More ready nodes More parallelism • Minimizing the caching operation • Limiting the level range to avoid serialization WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 27/39

Outline • Introduction • Wireframe • DATS: Dependency-Aware TB Scheduler • Evaluation • Conclusion WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 28/39

Evaluation • Evaluation platform • GPGPU-Sim v 3. 2. 2 (GTX 480) • Six modified benchmarks to actively make use of data dependency • HEAT 2 D, IMG_INT, DTW • HIST, SOR, SW • Cases • Global, CDP • Dep. Links primitives • LRR and LVL • LVL=3 WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 29/39

Local Node Array Size • Empirical estimation used WIREFRAME: Supporting Data-dependent Parallelism in GPUs Minimum size chosen (128 entries) MICRO 50 30/39

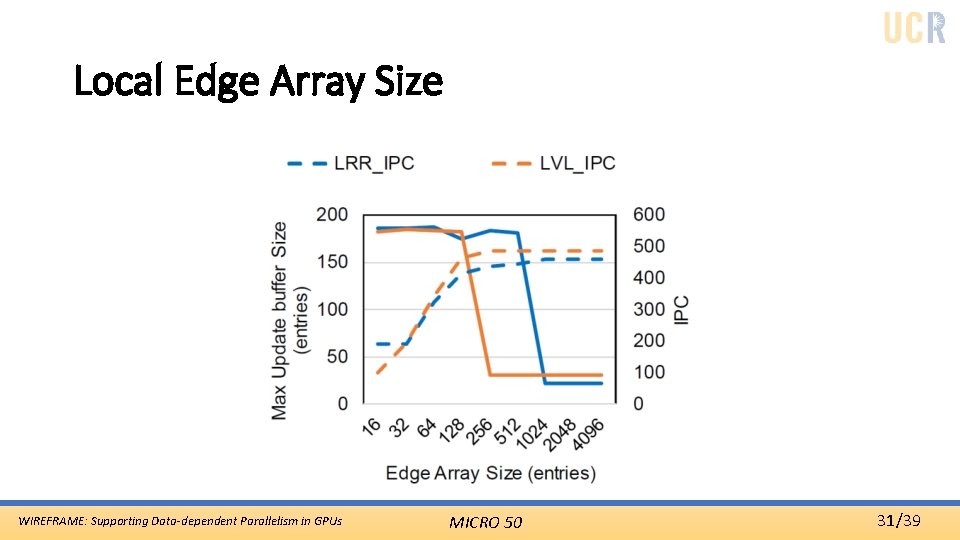

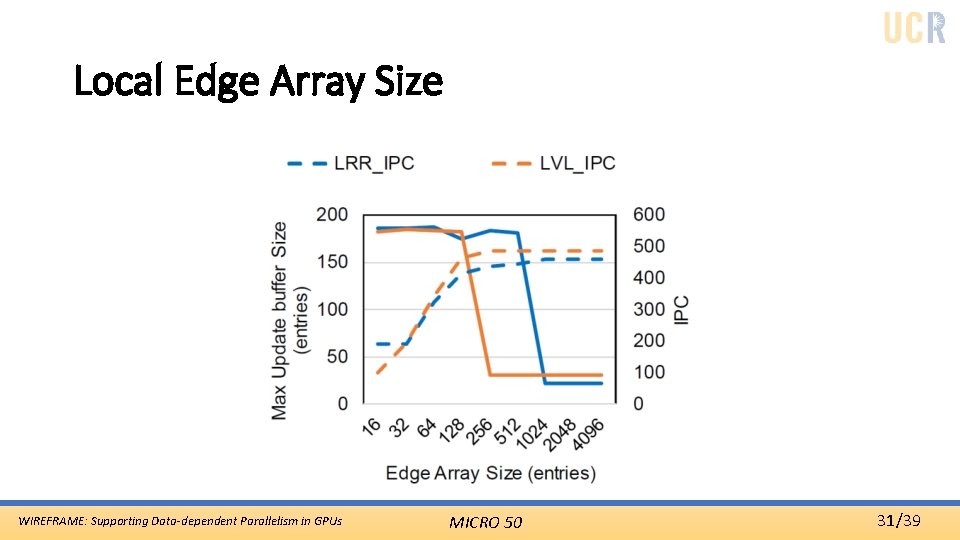

Local Edge Array Size WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 31/39

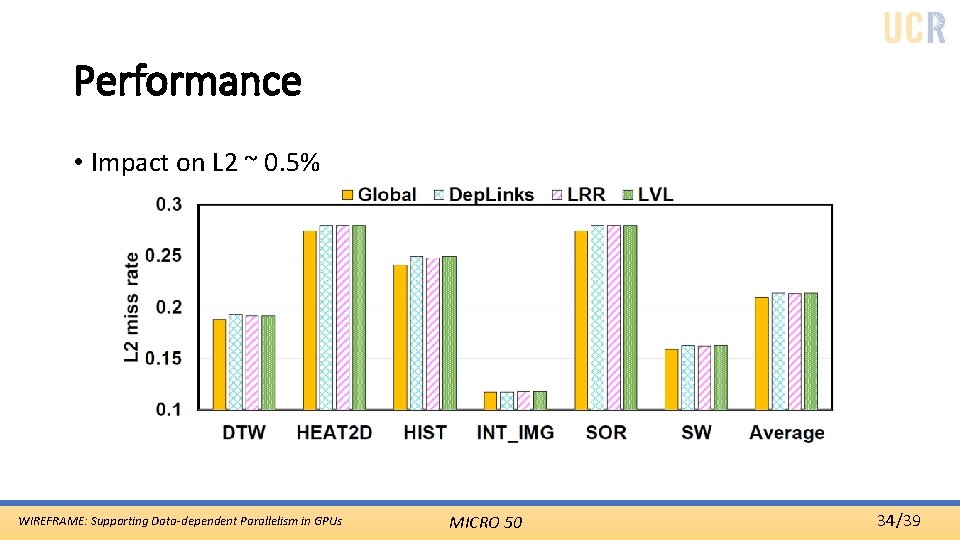

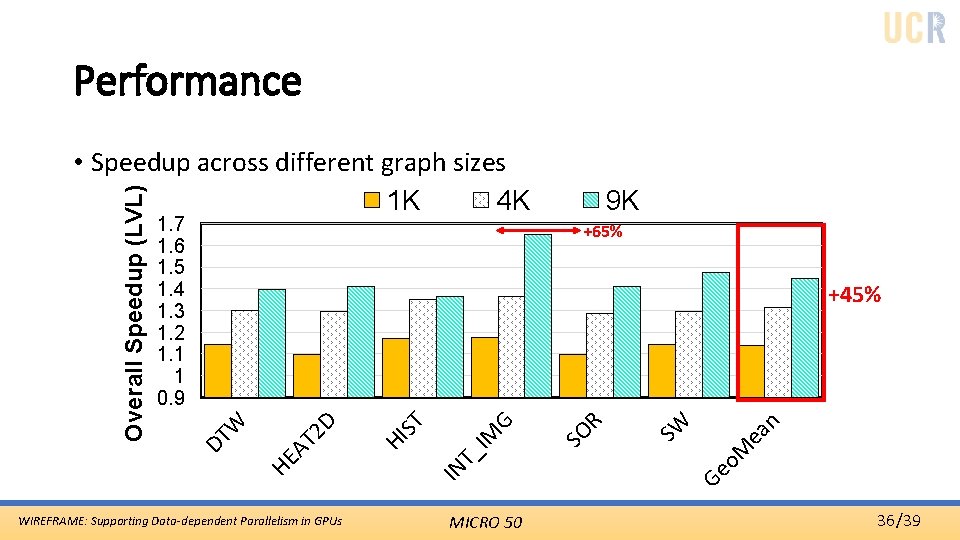

Evaluation • 2 KB area overhead • No significant impact on L 2 miss rate • Low memory request overhead • 0. 13% Average • Performance improvement • 45% average improvement WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 32/39

1 K 4 K WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 9 K Ge SW o. M ea n 14 12 10 8 6 4 2 0 DT HE W AT 2 D HI IN ST T_ IM G SO R • Comp/Launch Ratio Computations vs Launch Overhead 33/39

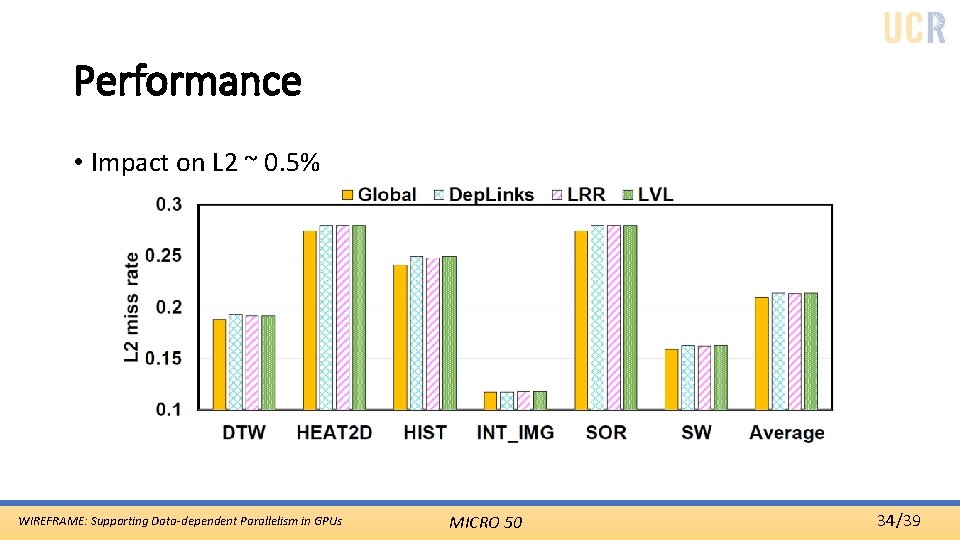

Performance • Impact on L 2 ~ 0. 5% WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 34/39

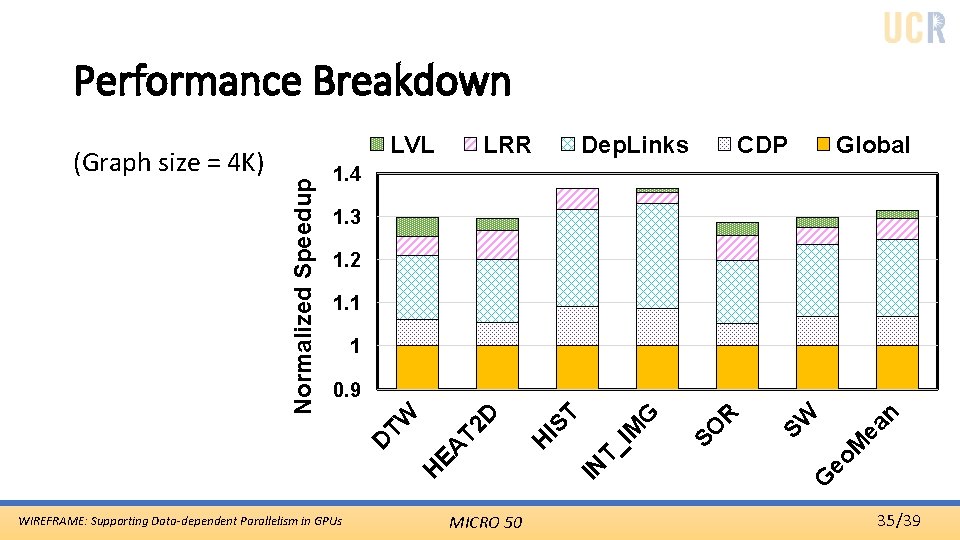

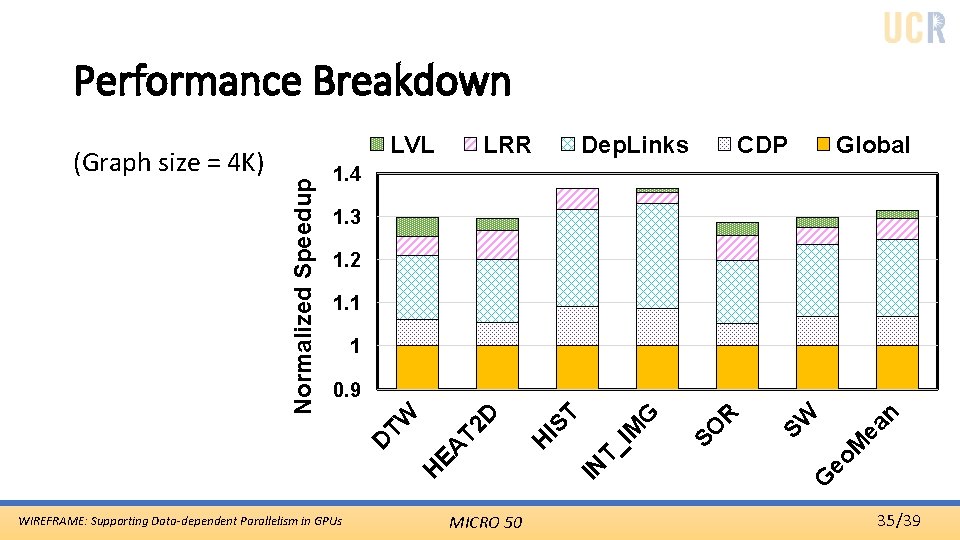

Performance Breakdown LRR Dep. Links CDP Global 1. 4 1. 3 1. 2 1. 1 1 WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 n M ea eo R SW G T_ IN SO IM G T IS H H EA T 2 D TW 0. 9 D Normalized Speedup (Graph size = 4 K) LVL 35/39

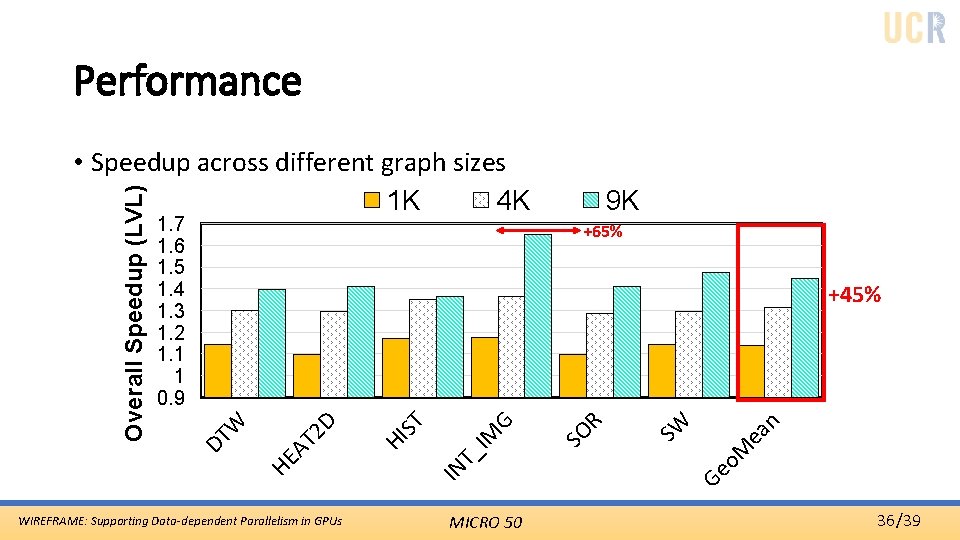

Performance 1. 7 1. 6 1. 5 1. 4 1. 3 1. 2 1. 1 1 0. 9 9 K +65% WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 Ge o. M ea n SW R SO G IM ST HI T_ IN HE AT 2 D +45% DT W Overall Speedup (LVL) • Speedup across different graph sizes 1 K 4 K 36/39

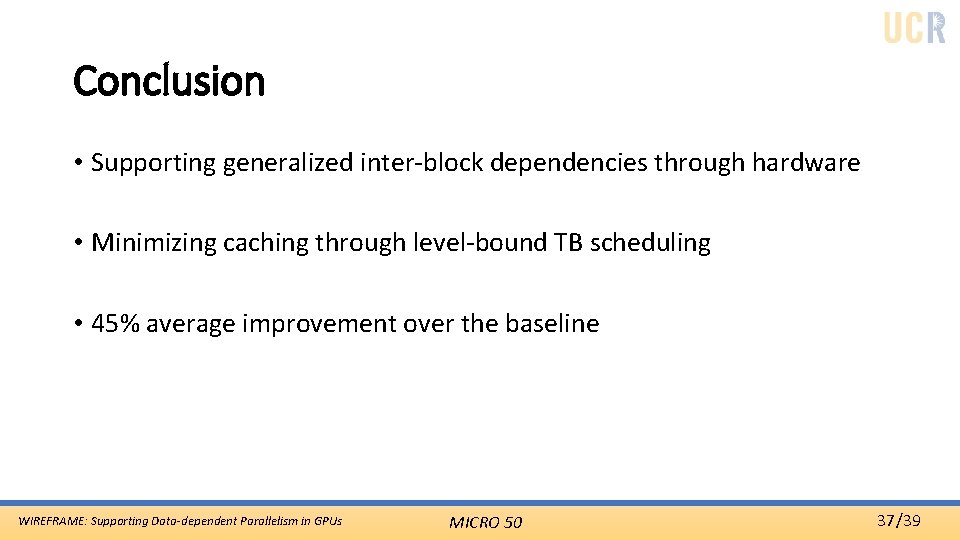

Conclusion • Supporting generalized inter-block dependencies through hardware • Minimizing caching through level-bound TB scheduling • 45% average improvement over the baseline WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 37/39

Conclusion • Future Work • More policies regarding the locality/load balance for SMs • Dynamic dependency • Ability to run workloads with variable sizes, e. g. BFS • Automation/optimization of the graph generation by the compiler • No more load on the programmer! WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 38/39

Thank you! Questions? WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 39/39

Performance (IPC) WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 40/39

LVL vs LRR WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 41/39

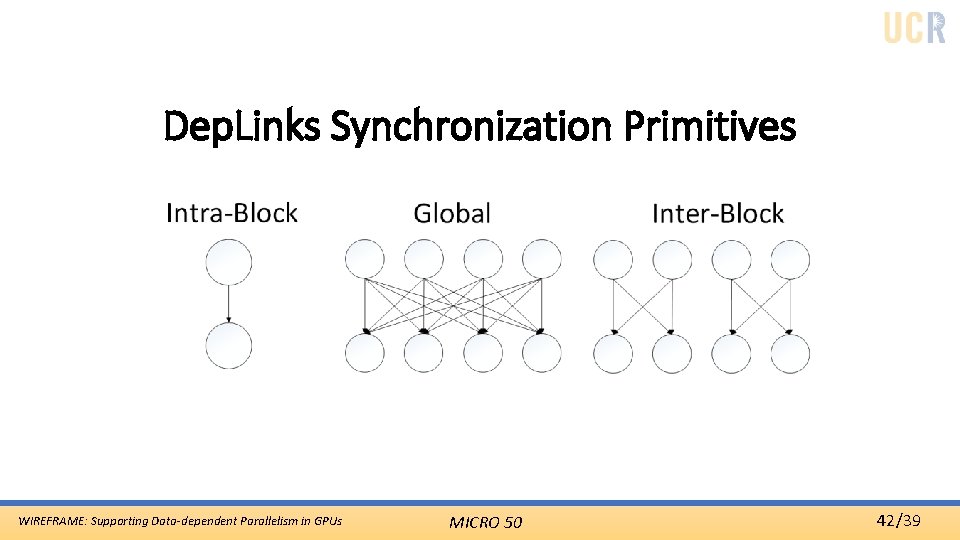

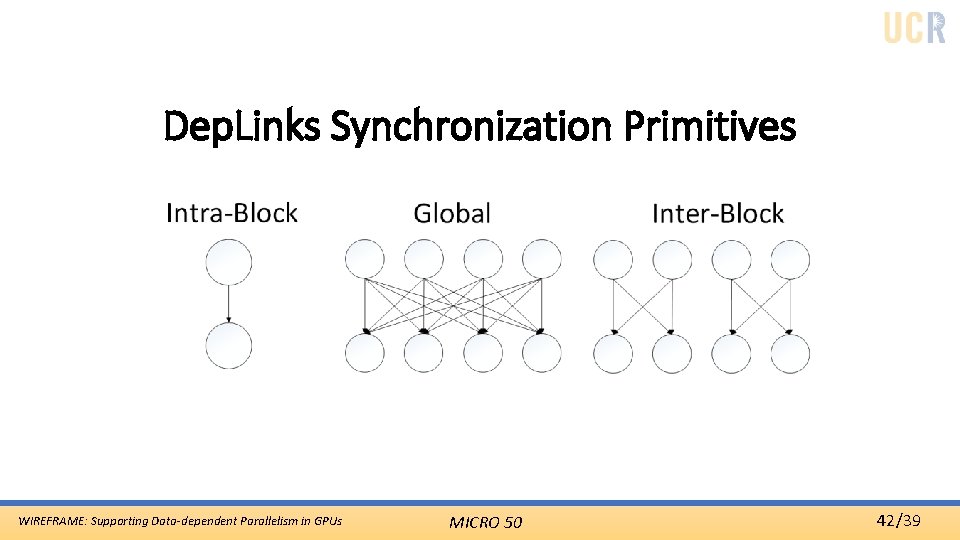

Dep. Links Synchronization Primitives WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 42/39

Thank you! Questions? WIREFRAME: Supporting Data-dependent Parallelism in GPUs MICRO 50 43/39