Windows Failover Clusters in Windows Server 2016

- Slides: 57

Windows Failover Clusters in Windows Server 2016
Ned Pyle, Principal Program Manager, Microsoft
@nerdpyle
nedpyle@microsoft.com

Session Objectives and Takeaways

- Overview of new feature enhancements coming in Windows Server 2016 Failover Clustering
- Cover the scenarios and workloads unlocked by these new capabilities

© ITEdgeintersection. All rights reserved. http://www.ITEdgeintersection.com

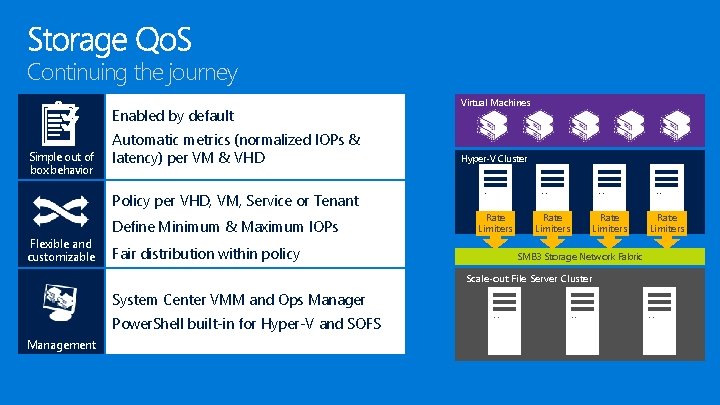

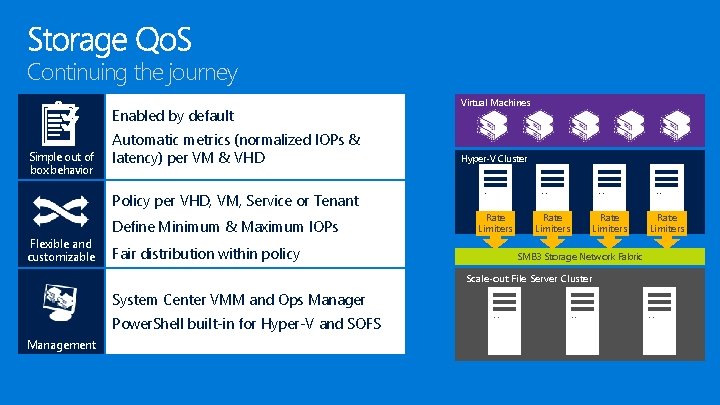

Continuing the journey

- Simple out of box behavior
  - Enabled by default
  - Automatic metrics (normalized IOPs & latency) per VM & VHD
- Flexible and customizable
  - Policy per VHD, VM, Service or Tenant
  - Define Minimum & Maximum IOPs
  - Fair distribution within policy
- Management
  - System Center VMM and Ops Manager
  - PowerShell built-in for Hyper-V and SOFS

Diagram labels: Virtual Machines; Hyper-V Cluster; Rate Limiters; SMB3 Storage Network Fabric; Scale-out File Server Cluster; Rate Limiters

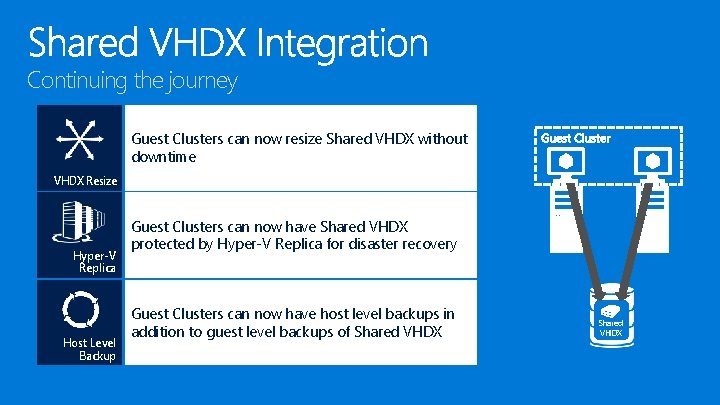

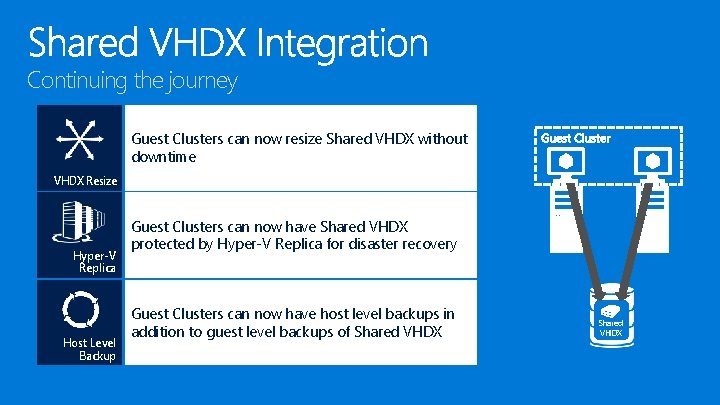

Continuing the journey

- VHDX Resize
  - Guest Clusters can now resize Shared VHDX without downtime
- Hyper-V Replica
  - Guest Clusters can now have Shared VHDX protected by Hyper-V Replica for disaster recovery
- Host Level Backup
  - Guest Clusters can now have host level backups in addition to guest level backups of Shared VHDX

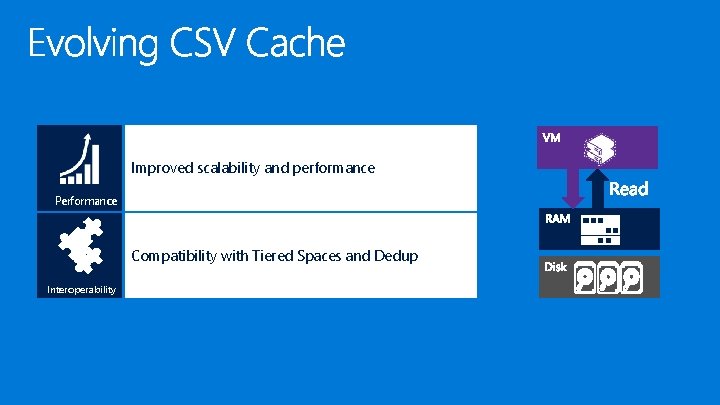

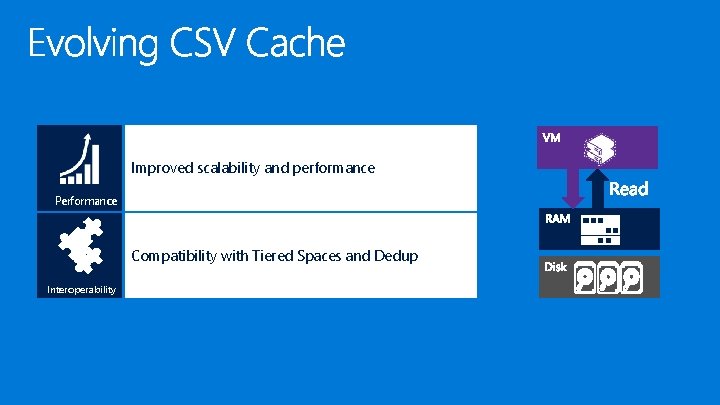

- Performance
  - Improved scalability and performance
- Interoperability
  - Compatibility with Tiered Spaces and Dedup

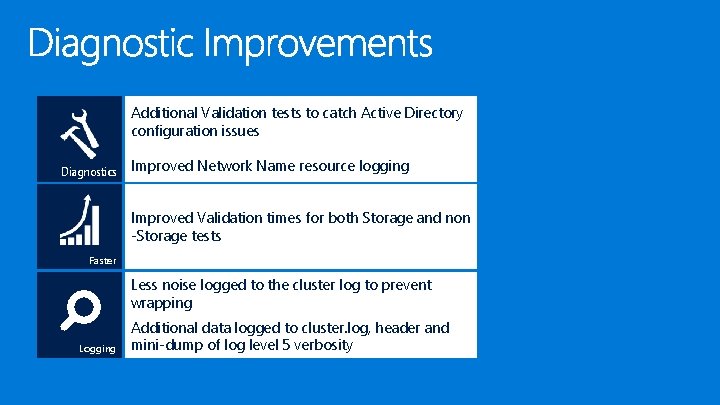

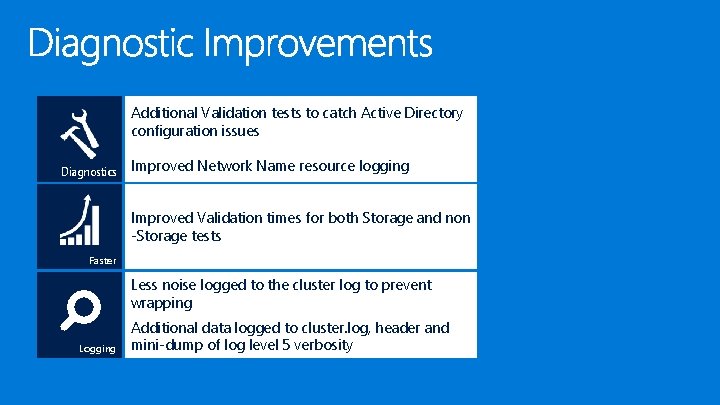

- Diagnostics
  - Additional Validation tests to catch Active Directory configuration issues
  - Improved Network Name resource logging
- Faster
  - Improved Validation times for both Storage and non-Storage tests
- Logging
  - Less noise logged to the cluster log to prevent wrapping
  - Additional data logged to cluster.log, header and mini-dump of log level 5 verbosity

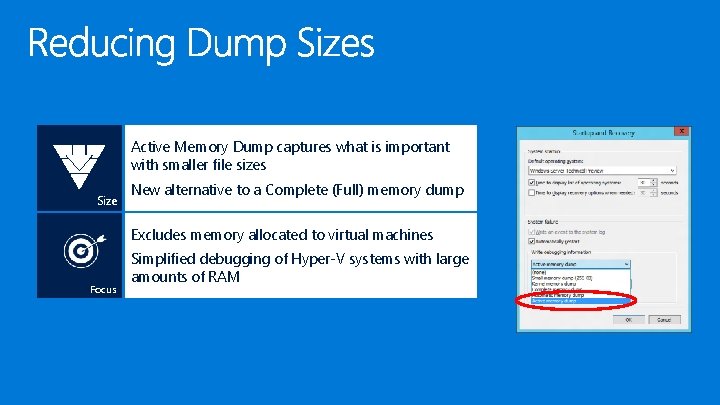

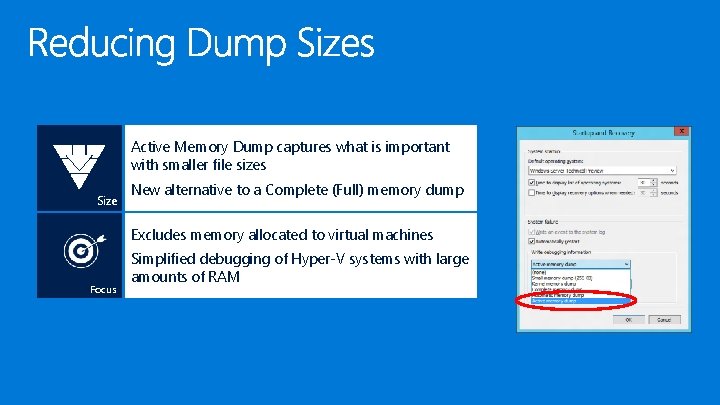

- Size
  - Active Memory Dump captures what is important with smaller file sizes
  - New alternative to a Complete (Full) memory dump
- Focus
  - Excludes memory allocated to virtual machines
  - Simplified debugging of Hyper-V systems with large amounts of RAM

- Integration
  - Clustering will capture live dumps on failures
  - Live dumps are a mechanism to generate a memory dump for debugging without crashing the system
- Availability
  - Capture debugging data without having to bugcheck nodes
  - Debugging data without downtime
- Orchestration
  - Capture dumps across multiple machines in parallel to enable debugging the distributed system
  - Integrated with Windows Error Reporting to snapshot logs

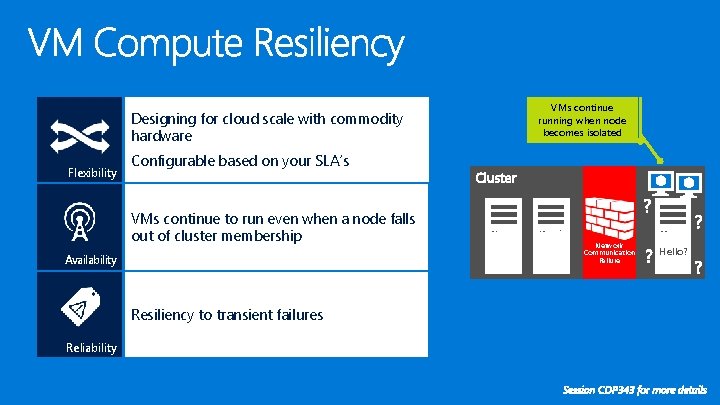

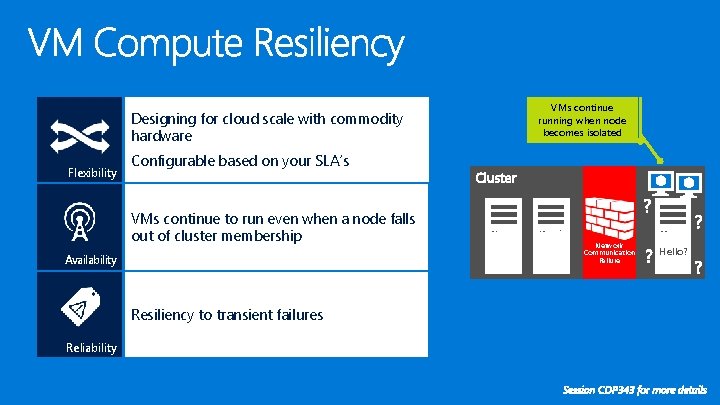

- Flexibility
  - Designing for cloud scale with commodity hardware
  - Configurable based on your SLAs
- Availability
  - VMs continue to run even when a node falls out of cluster membership
  - Resiliency to transient failures
- Reliability
  - VMs continue running when node becomes isolated

Diagram labels: Network Communication Failure; Hello?

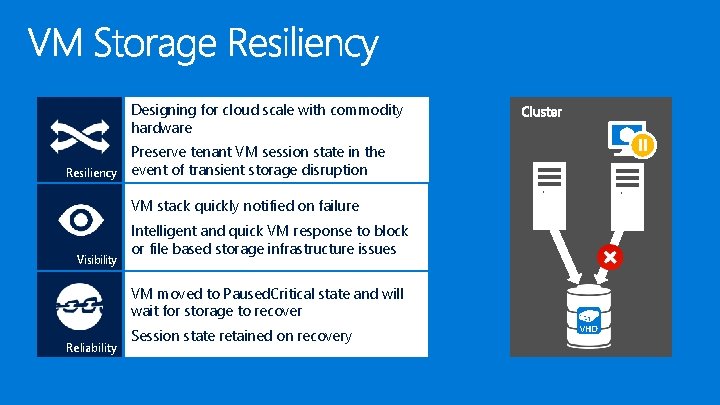

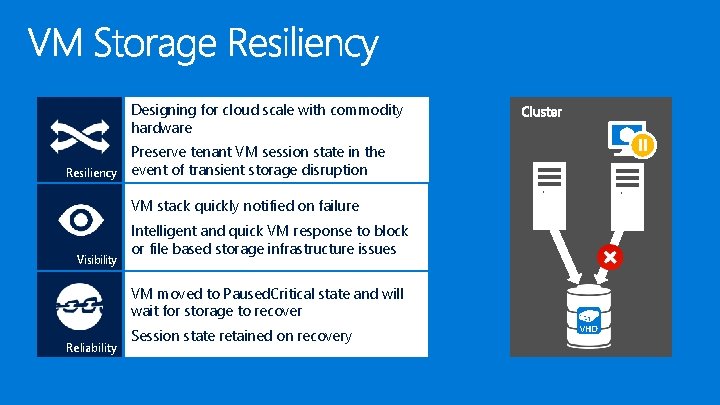

- Resiliency
  - Designing for cloud scale with commodity hardware
  - Preserve tenant VM session state in the event of transient storage disruption
- Visibility
  - VM stack quickly notified on failure
  - Intelligent and quick VM response to block or file based storage infrastructure issues
- Reliability
  - VM moved to PausedCritical state and will wait for storage to recover
  - Session state retained on recovery

Diagram label: VHD

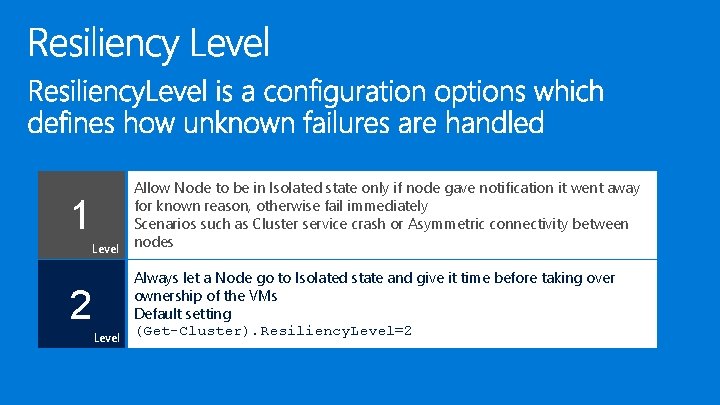

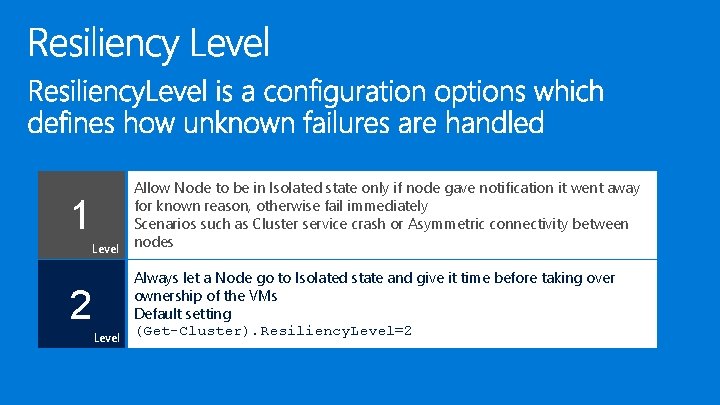

- Level 1
  - Allow a node to be in the Isolated state only if the node gave notification that it went away for a known reason; otherwise fail immediately
  - Scenarios such as Cluster service crash or asymmetric connectivity between nodes
- Level 2
  - Always let a node go to the Isolated state and give it time before taking over ownership of the VMs
  - Default setting
  - `(Get-Cluster).ResiliencyLevel=2`
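The two resiliency levels can be read as a small decision rule. The Python sketch below models only what the slide text states. The function name `enters_isolated_state` and its boolean input are hypothetical and do not correspond to any cluster API.

```python
def enters_isolated_state(resiliency_level: int, known_reason_notified: bool) -> bool:
    """Return True if a node that dropped out of membership is treated as Isolated.

    Hypothetical model of the slide text, not the cluster service's real logic.
    """
    if resiliency_level == 2:
        # Level 2 (the default): always allow the Isolated state.
        return True
    if resiliency_level == 1:
        # Level 1: Isolated only when the node announced a known reason;
        # otherwise the node is failed immediately.
        return known_reason_notified
    raise ValueError("resiliency level must be 1 or 2")
```

Under this reading, a level 1 cluster fails a silent node at once, while a level 2 cluster always grants the isolation window first.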

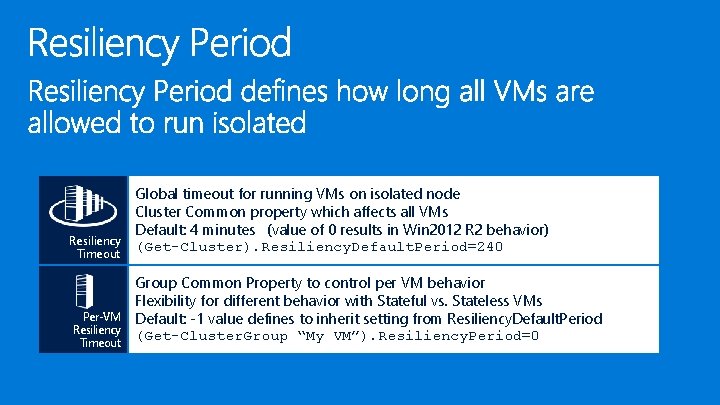

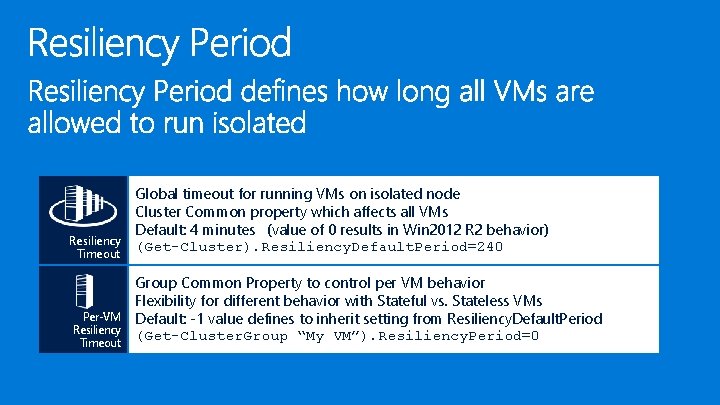

- Resiliency Timeout
  - Global timeout for running VMs on isolated node
  - Cluster Common property which affects all VMs
  - Default: 4 minutes (value of 0 results in Windows Server 2012 R2 behavior)
  - `(Get-Cluster).ResiliencyDefaultPeriod=240`
- Per-VM Resiliency Timeout
  - Group Common Property to control per VM behavior
  - Flexibility for different behavior with Stateful vs. Stateless VMs
  - Default: a value of -1 inherits the setting from ResiliencyDefaultPeriod
  - `(Get-ClusterGroup "My VM").ResiliencyPeriod=0`
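The inheritance between the per-VM value and the cluster-wide default can be sketched in a few lines of Python. This is a minimal model of the rule as the slide states it. The function name `effective_resiliency_period` is hypothetical, and values are assumed to be in seconds because the slide pairs 240 with "4 minutes".

```python
INHERIT = -1  # per-VM value meaning "use the cluster-wide default"


def effective_resiliency_period(group_period: int, cluster_default: int = 240) -> int:
    """Return the isolation timeout (seconds) that applies to one VM group.

    Hypothetical helper: -1 on the group inherits the cluster default,
    any non-negative value overrides it.
    """
    if group_period == INHERIT:
        return cluster_default
    if group_period < 0:
        raise ValueError("period must be -1 (inherit) or a non-negative number")
    return group_period
```

A stateless VM could therefore be given a period of 0 to fail over immediately, while stateful VMs keep the inherited four-minute window.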

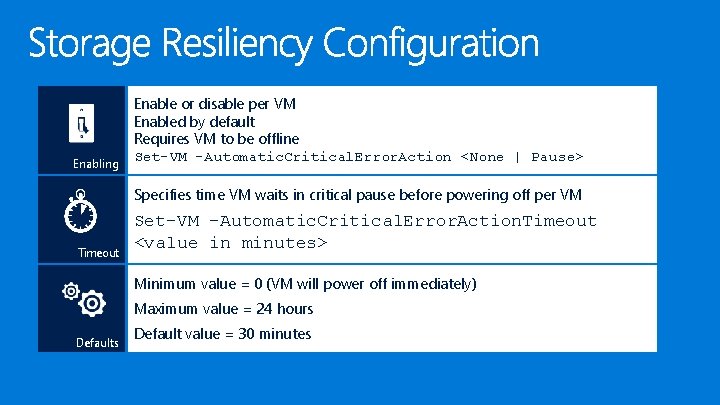

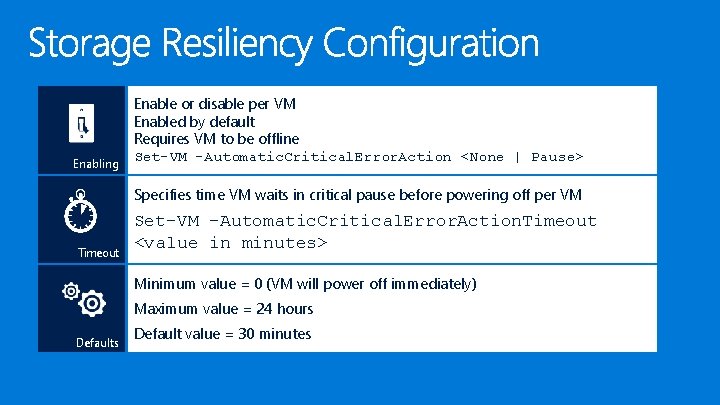

- Enabling
  - Enable or disable per VM
  - Enabled by default
  - Requires VM to be offline
  - `Set-VM -AutomaticCriticalErrorAction <None | Pause>`
- Timeout
  - Specifies time VM waits in critical pause before powering off per VM
  - `Set-VM -AutomaticCriticalErrorActionTimeout <value in minutes>`
  - Minimum value = 0 (VM will power off immediately)
  - Maximum value = 24 hours
  - Default value = 30 minutes
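The timeout bounds above can be illustrated with a small Python model. It is a sketch that assumes the critical error action is set to Pause. The function name `vm_state_during_outage` and the returned state strings are hypothetical labels, not Hyper-V API values.

```python
MAX_TIMEOUT_MINUTES = 24 * 60  # slide: maximum value = 24 hours
DEFAULT_TIMEOUT_MINUTES = 30   # slide: default value = 30 minutes


def vm_state_during_outage(outage_minutes: float,
                           timeout_minutes: int = DEFAULT_TIMEOUT_MINUTES) -> str:
    """Model the VM state while storage is unavailable (action assumed to be Pause)."""
    if not 0 <= timeout_minutes <= MAX_TIMEOUT_MINUTES:
        raise ValueError("timeout must be between 0 and 1440 minutes")
    if outage_minutes < 0:
        raise ValueError("outage duration cannot be negative")
    if outage_minutes < timeout_minutes:
        # Storage may still recover: the VM waits in critical pause.
        return "PausedCritical"
    # Timeout reached (immediately when the timeout is 0): the VM powers off.
    return "Off"
```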

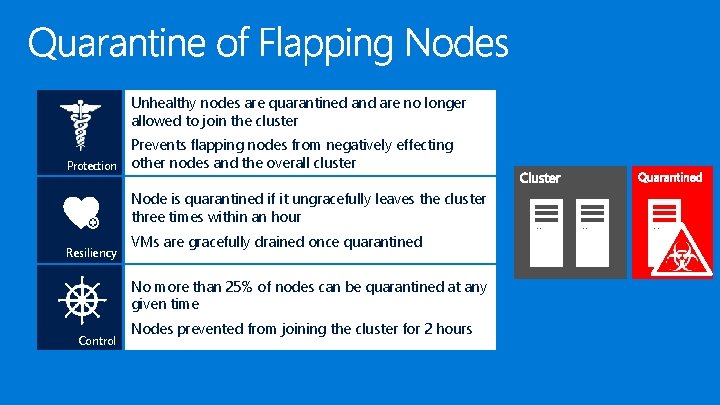

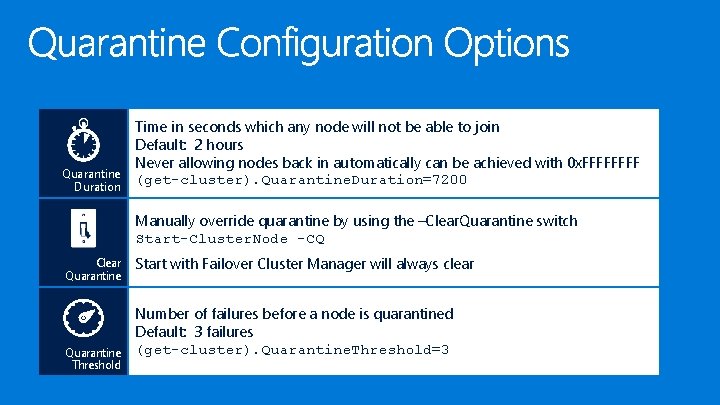

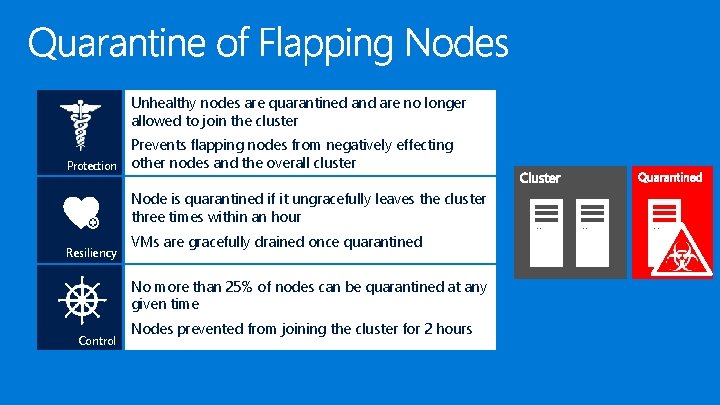

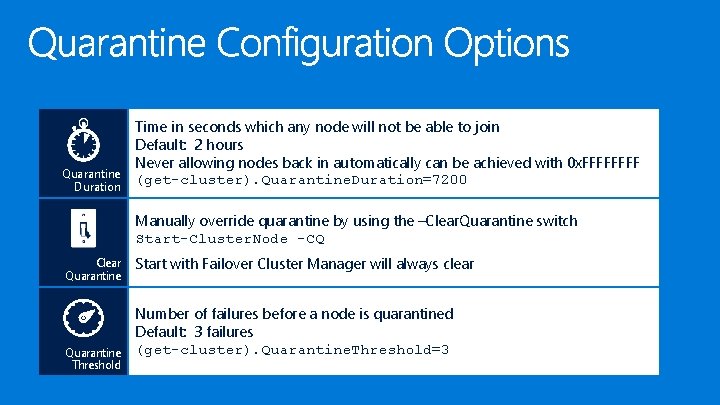

- Protection
  - Unhealthy nodes are quarantined and are no longer allowed to join the cluster
  - Prevents flapping nodes from negatively affecting other nodes and the overall cluster
- Resiliency
  - Node is quarantined if it ungracefully leaves the cluster three times within an hour
  - VMs are gracefully drained once quarantined
- Control
  - No more than 25% of nodes can be quarantined at any given time
  - Nodes prevented from joining the cluster for 2 hours

- Quarantine Duration
  - Time in seconds during which a quarantined node cannot join
  - Default: 2 hours
  - Never allowing nodes back in automatically can be achieved with 0xFFFF
  - `(Get-Cluster).QuarantineDuration=7200`
- Clear Quarantine
  - Manually override quarantine by using the -ClearQuarantine switch
  - `Start-ClusterNode -CQ`
  - Start with Failover Cluster Manager will always clear
- Quarantine Threshold
  - Number of failures before a node is quarantined
  - Default: 3 failures
  - `(Get-Cluster).QuarantineThreshold=3`
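The quarantine rules from these two slides (three ungraceful exits within an hour, capped at 25% of nodes) can be combined into one check. The Python sketch below is a hypothetical model. The function name, its parameters, and the reading of the 25% cap as "the quarantined count after this decision must not exceed a quarter of the nodes" are all assumptions.

```python
from datetime import datetime, timedelta
from typing import Iterable


def should_quarantine(ungraceful_leaves: Iterable[datetime],
                      now: datetime,
                      total_nodes: int,
                      quarantined_nodes: int = 0,
                      threshold: int = 3,
                      window: timedelta = timedelta(hours=1)) -> bool:
    """Decide whether a node should be quarantined (hypothetical model)."""
    # Count only ungraceful exits that fall inside the look-back window.
    recent = [t for t in ungraceful_leaves if timedelta(0) <= now - t <= window]
    if len(recent) < threshold:
        return False
    # Assumed reading of the cap: at most 25% of nodes quarantined afterwards.
    return (quarantined_nodes + 1) * 4 <= total_nodes
```

For example, in an eight-node cluster a third ungraceful exit within the hour would quarantine the node, unless two nodes were already quarantined.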

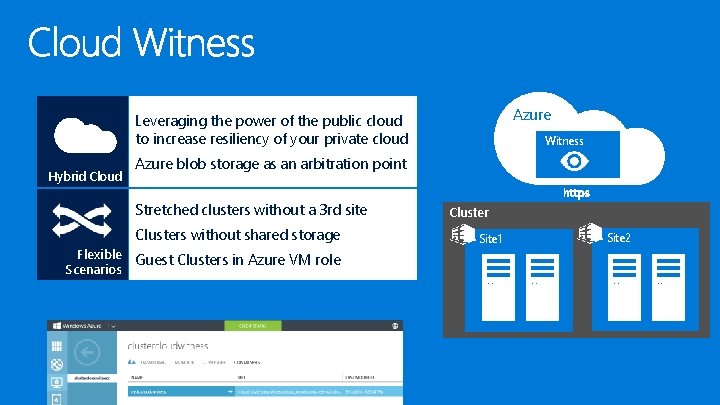

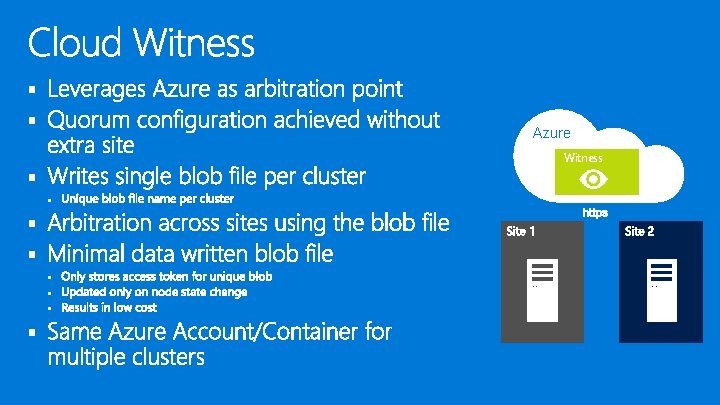

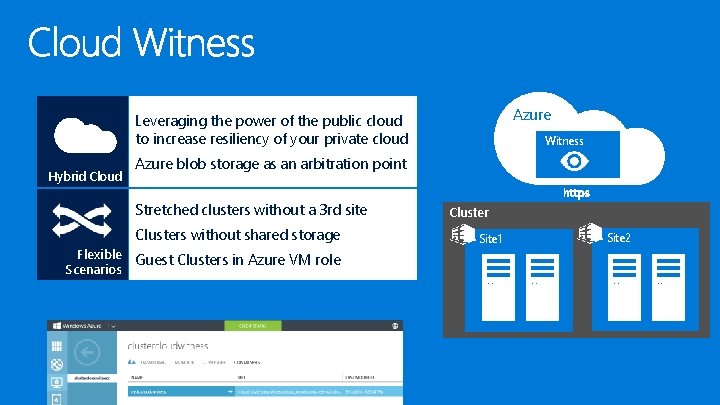

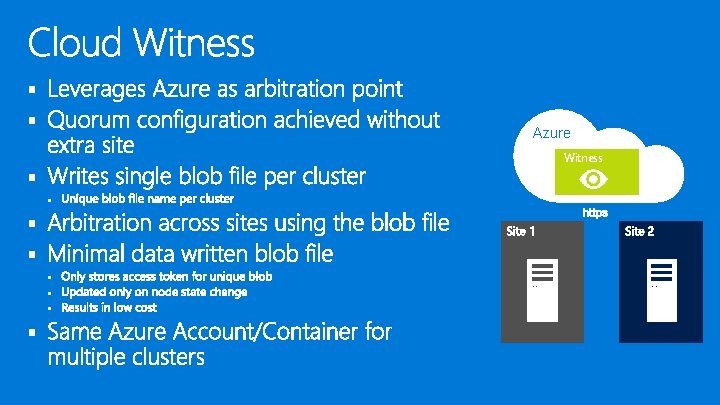

- Hybrid Cloud
  - Leveraging the power of the public cloud to increase resiliency of your private cloud
  - Azure blob storage as an arbitration point
- Flexible Scenarios
  - Stretched clusters without a 3rd site
  - Clusters without shared storage
  - Guest Clusters in Azure VM role

Diagram labels: Azure; Witness; Cluster; Site 1; Site 2

Azure Witness

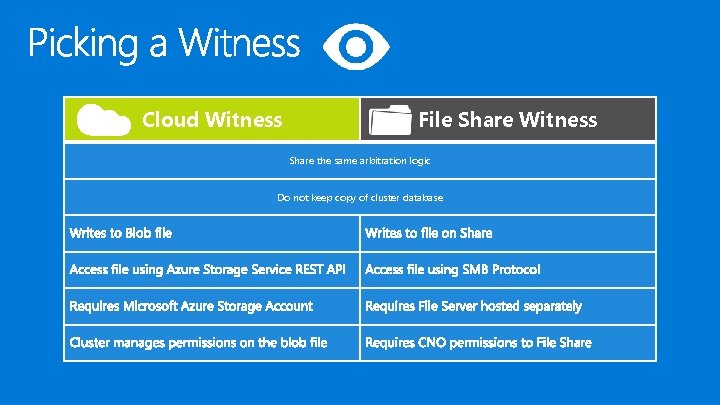

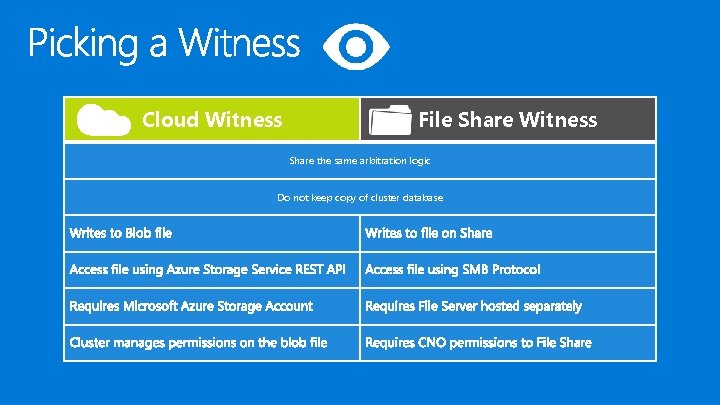

Cloud Witness and File Share Witness

- Share the same arbitration logic
- Do not keep copy of cluster database

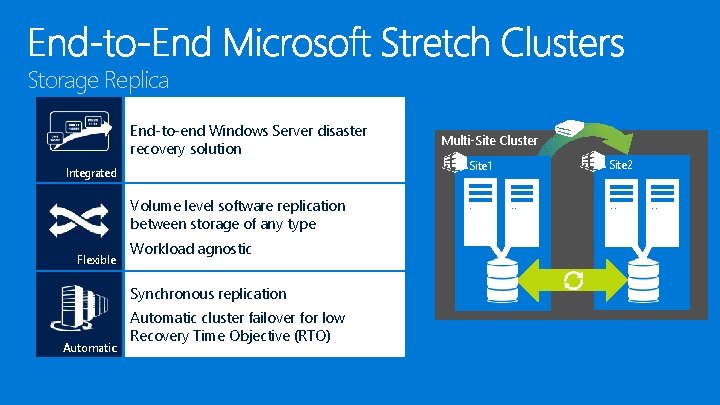

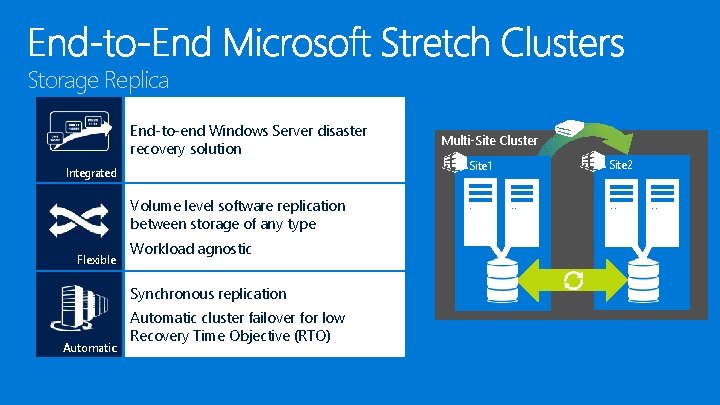

Storage Replica

- Integrated
  - End-to-end Windows Server disaster recovery solution
- Flexible
  - Volume level software replication between storage of any type
  - Workload agnostic
- Multi-Site
  - Synchronous replication
  - Automatic cluster failover for low Recovery Time Objective (RTO)

Diagram labels: Cluster; Site 1; Site 2

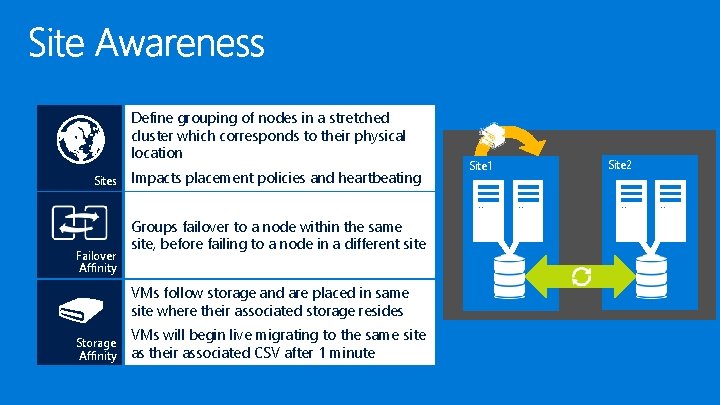

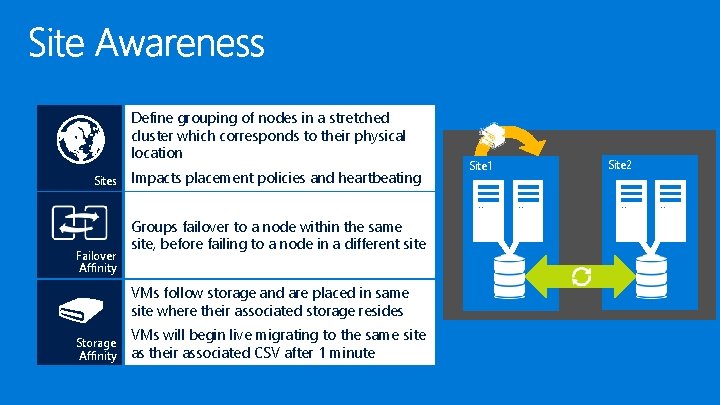

- Sites
  - Define grouping of nodes in a stretched cluster which corresponds to their physical location
  - Impacts placement policies and heartbeating
- Failover Affinity
  - Groups fail over to a node within the same site before failing over to a node in a different site
- Storage Affinity
  - VMs follow storage and are placed in the same site where their associated storage resides
  - VMs will begin live migrating to the same site as their associated CSV after 1 minute

Diagram labels: Site 1; Site 2
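Failover affinity, as described above, amounts to preferring same-site candidates over cross-site ones. The Python sketch below illustrates that ordering only. The function name `pick_failover_node`, the node and site names, and the alphabetical tie-break are hypothetical choices made for the example.

```python
from typing import Dict, Optional


def pick_failover_node(current_site: str, candidates: Dict[str, str]) -> Optional[str]:
    """Pick a failover target from available nodes, preferring the same site.

    `candidates` maps node name -> site name (hypothetical input shape).
    """
    same_site = sorted(n for n, site in candidates.items() if site == current_site)
    if same_site:
        return same_site[0]
    # No node left in the current site: fall back to a node in another site.
    other_site = sorted(candidates)
    return other_site[0] if other_site else None
```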

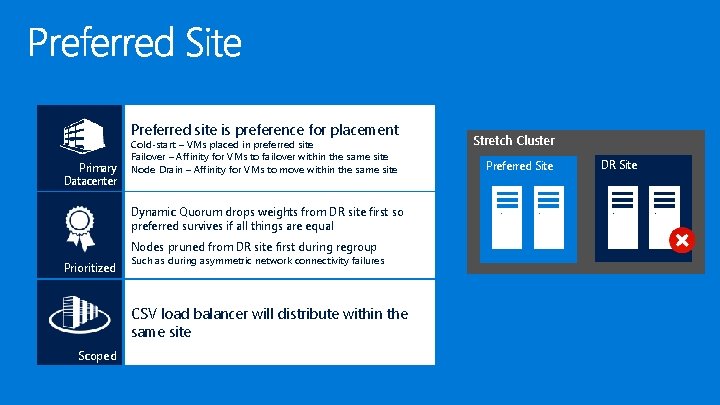

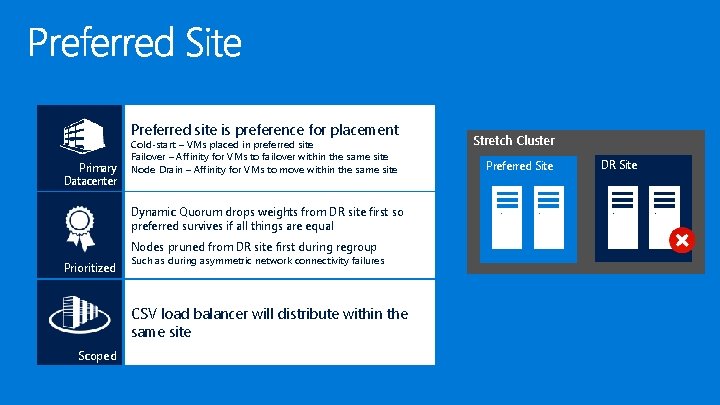

- Primary Datacenter
  - Preferred site is preference for placement
  - Cold-start: VMs placed in preferred site
  - Failover: affinity for VMs to fail over within the same site
  - Node Drain: affinity for VMs to move within the same site
- Prioritized
  - Dynamic Quorum drops weights from DR site first so preferred survives if all things are equal
  - Nodes pruned from DR site first during regroup, such as during asymmetric network connectivity failures
- Scoped
  - CSV load balancer will distribute within the same site

Diagram labels: Stretch Cluster; Preferred Site; DR Site

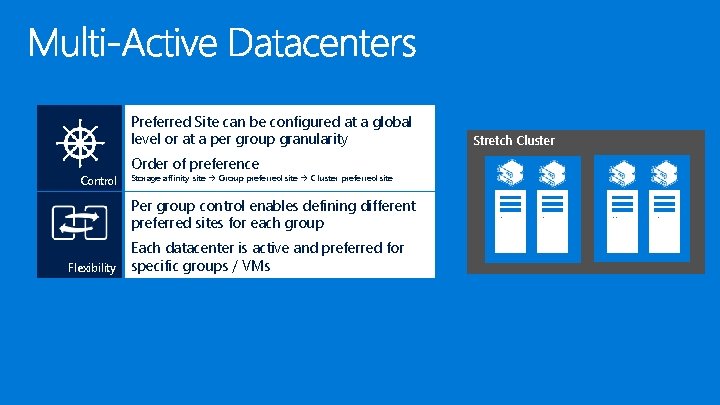

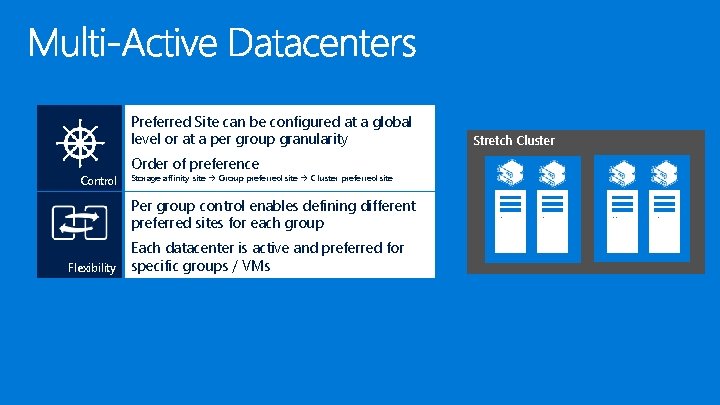

- Control
  - Preferred Site can be configured at a global level or at a per group granularity
  - Order of preference:
    1. Storage affinity site
    2. Group preferred site
    3. Cluster preferred site
- Flexibility
  - Per group control enables defining different preferred sites for each group
  - Each datacenter is active and preferred for specific groups / VMs

Diagram label: Stretch Cluster
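The order of preference listed above is a first-match lookup. The Python sketch below shows one way to express it. The function name `resolve_preferred_site` and its parameter names are hypothetical, and `None` is assumed to mean "not configured".

```python
from typing import Optional


def resolve_preferred_site(storage_affinity_site: Optional[str] = None,
                           group_preferred_site: Optional[str] = None,
                           cluster_preferred_site: Optional[str] = None) -> Optional[str]:
    """Return the site that wins under the slide's order of preference."""
    # Checked in order: storage affinity, then group setting, then cluster setting.
    for site in (storage_affinity_site, group_preferred_site, cluster_preferred_site):
        if site is not None:
            return site
    return None  # nothing configured at any level
```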

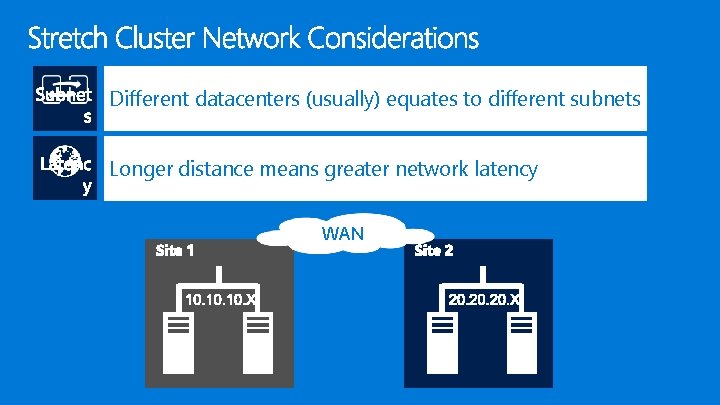

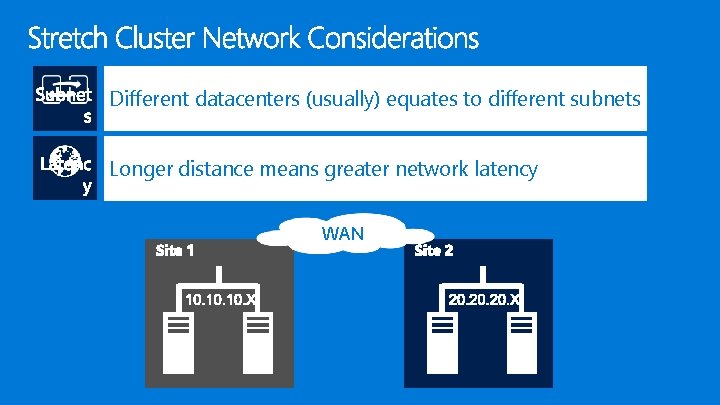

- Different datacenters (usually) equate to different subnets
- Longer distance means greater network latency

Diagram label: WAN

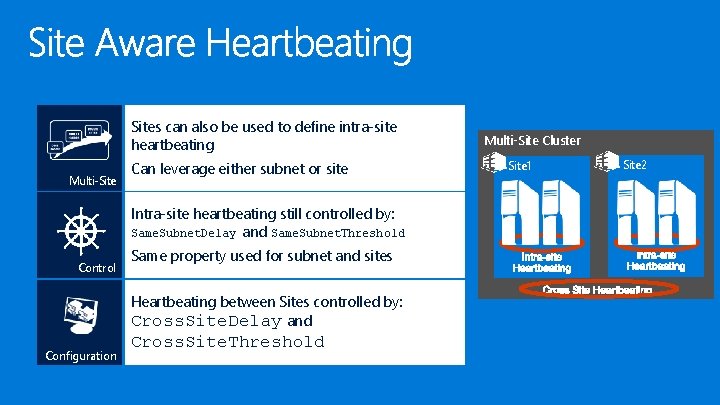

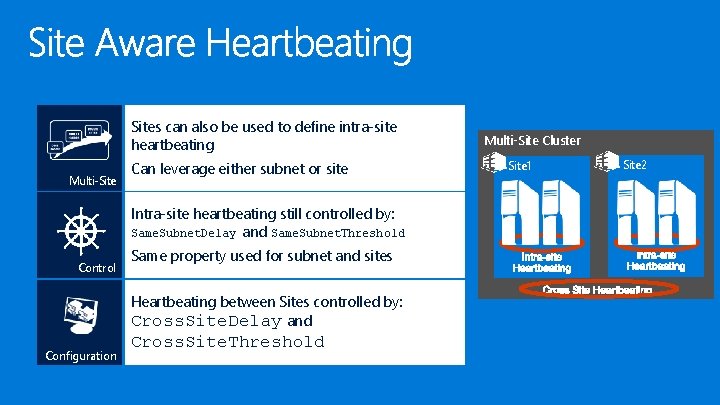

- Multi-Site
  - Sites can also be used to define intra-site heartbeating
  - Can leverage either subnet or site
- Control
  - Intra-site heartbeating still controlled by SameSubnetDelay and SameSubnetThreshold
  - Same property used for subnet and sites
- Configuration
  - Heartbeating between Sites controlled by CrossSiteDelay and CrossSiteThreshold

Diagram labels: Multi-Site Cluster; Site 1; Site 2

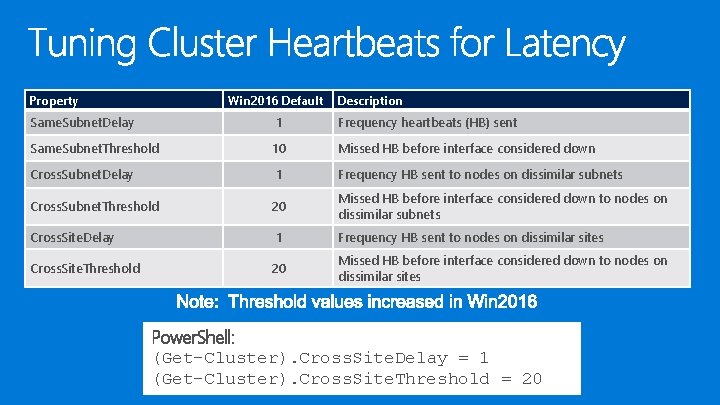

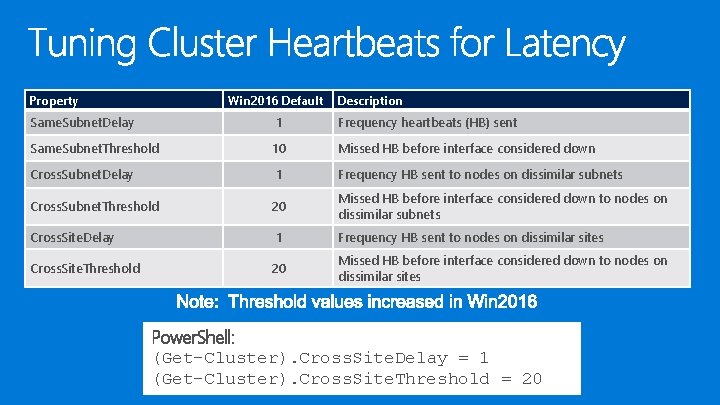

| Property | Win 2016 Default | Description |
| --- | --- | --- |
| SameSubnetDelay | 1 | Frequency heartbeats (HB) sent |
| SameSubnetThreshold | 10 | Missed HB before interface considered down |
| CrossSubnetDelay | 1 | Frequency HB sent to nodes on dissimilar subnets |
| CrossSubnetThreshold | 20 | Missed HB before interface considered down to nodes on dissimilar subnets |
| CrossSiteDelay | 1 | Frequency HB sent to nodes on dissimilar sites |
| CrossSiteThreshold | 20 | Missed HB before interface considered down to nodes on dissimilar sites |

PowerShell:

- `(Get-Cluster).CrossSiteDelay = 1`
- `(Get-Cluster).CrossSiteThreshold = 20`
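Multiplying each delay by its threshold gives a rough figure for how long heartbeats can be missed before an interface is considered down. The Python sketch below does that arithmetic with the table's defaults. It assumes the delay value of 1 denotes one second, which the slide does not state explicitly, and the names `DEFAULTS` and `heartbeat_tolerance_seconds` are hypothetical.

```python
# (delay, threshold) pairs taken from the table; delay assumed to be in seconds.
DEFAULTS = {
    "SameSubnet": (1, 10),
    "CrossSubnet": (1, 20),
    "CrossSite": (1, 20),
}


def heartbeat_tolerance_seconds(delay_seconds: float, threshold: int) -> float:
    """Time of consecutive missed heartbeats tolerated before the interface is down."""
    return delay_seconds * threshold


if __name__ == "__main__":
    for scope, (delay, threshold) in DEFAULTS.items():
        print(scope, heartbeat_tolerance_seconds(delay, threshold))
```

Under that assumption the defaults tolerate about 10 seconds of missed heartbeats within a subnet and about 20 seconds across subnets or sites.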

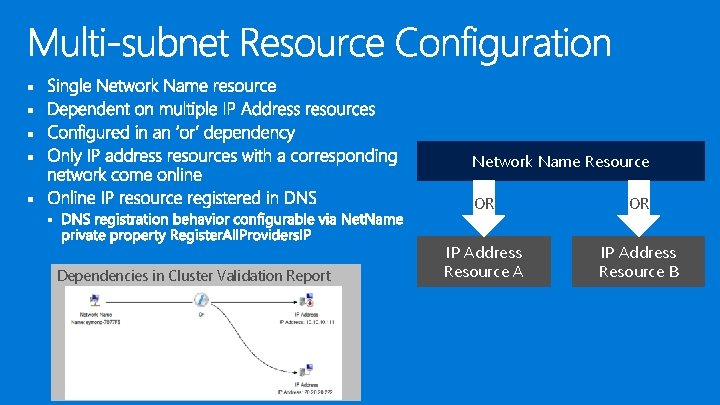

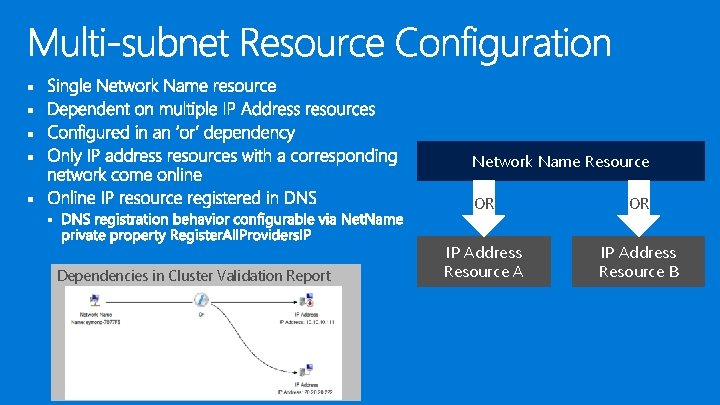

Diagram labels: Network Name Resource; IP Address Resource A OR IP Address Resource B; Dependencies in Cluster Validation Report

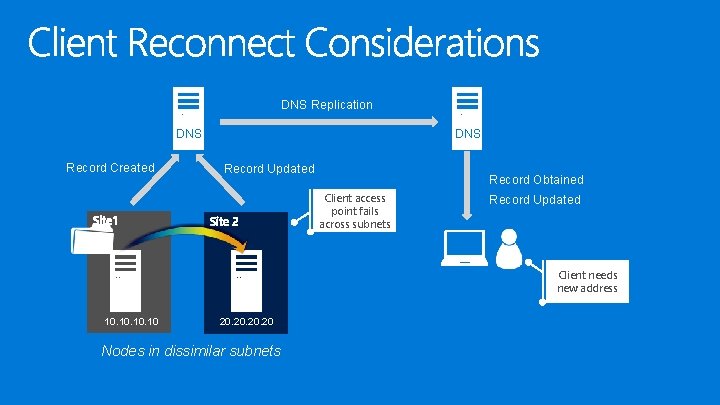

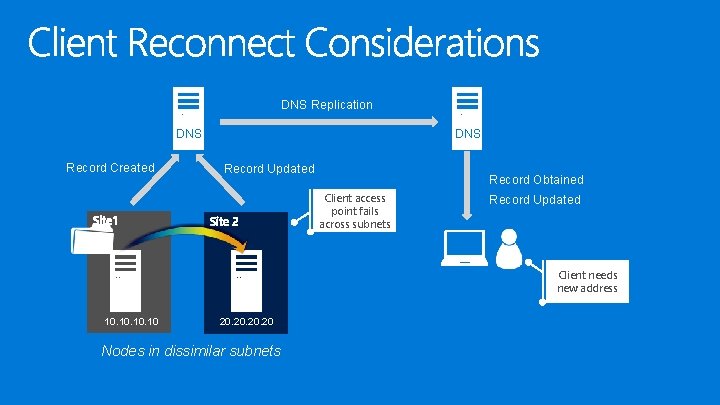

Diagram: client access point fails across subnets (nodes in dissimilar subnets, 10.10.10 and 20.20.20). Labels: DNS Replication; DNS Record Created; DNS Record Updated; Record Obtained; Record Updated; Client needs new address

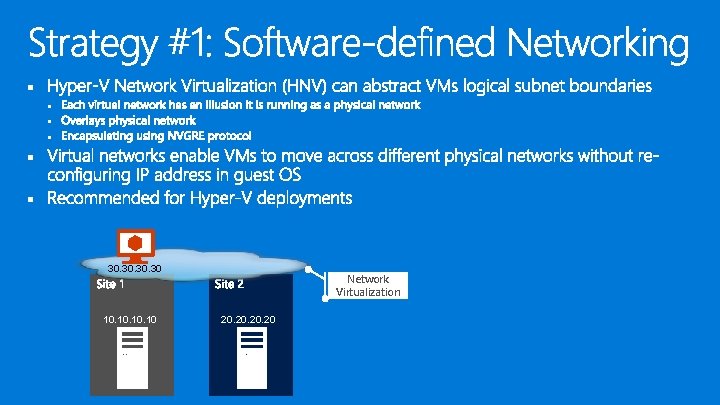

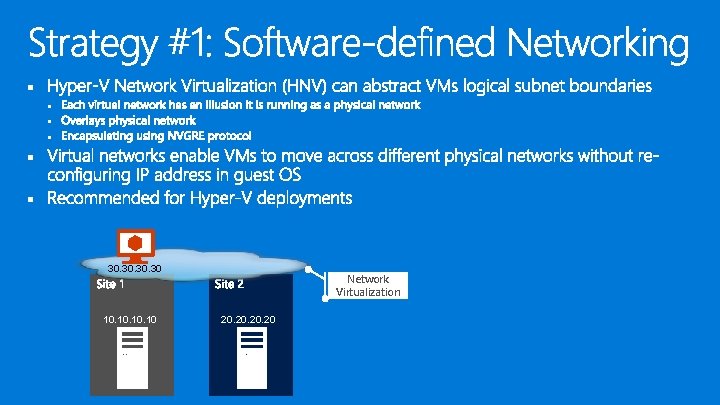

Network Virtualization (diagram labels: 30.30.30; 10.10.10; 20.20.20)

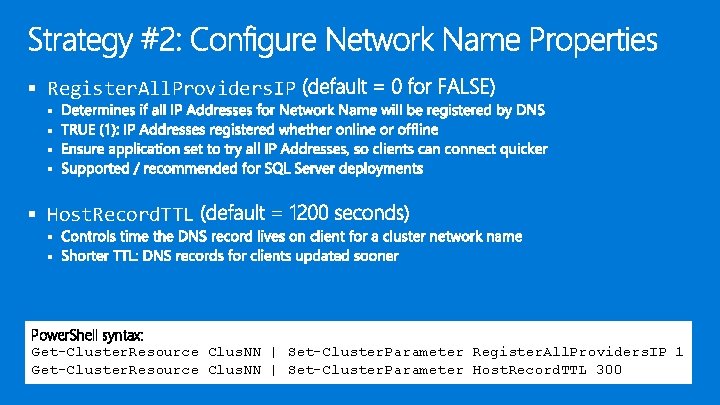

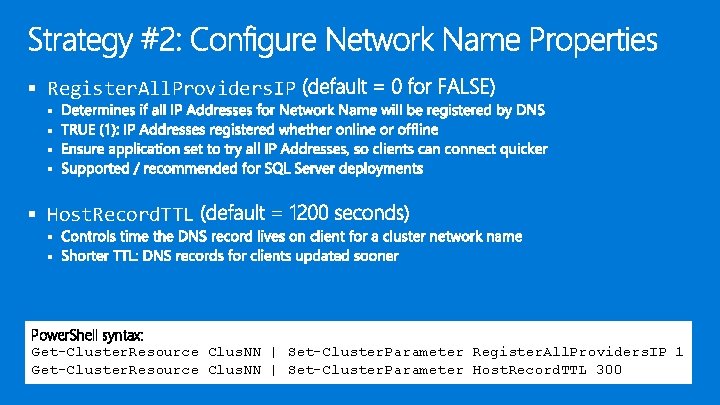

- RegisterAllProvidersIP
- HostRecordTTL

PowerShell syntax:

- `Get-ClusterResource ClusNN | Set-ClusterParameter RegisterAllProvidersIP 1`
- `Get-ClusterResource ClusNN | Set-ClusterParameter HostRecordTTL 300`

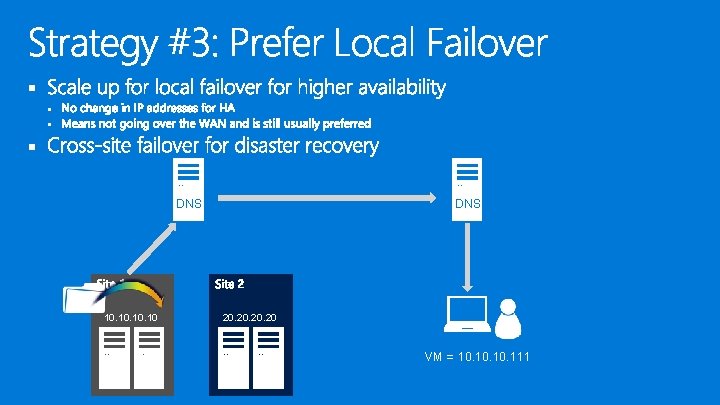

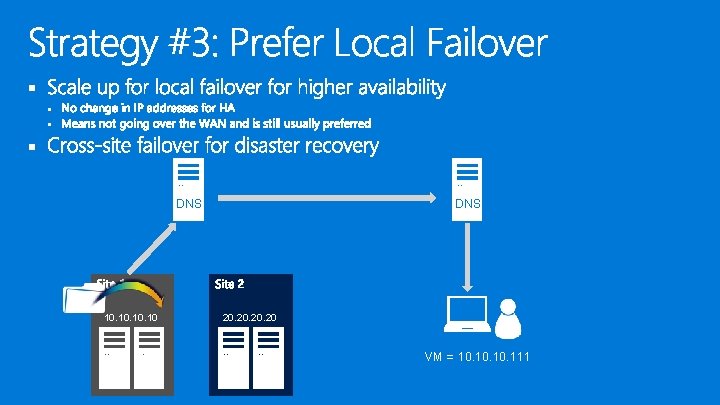

Diagram labels: DNS 10.10.10; DNS 20.20.20; VM = 10.10.111

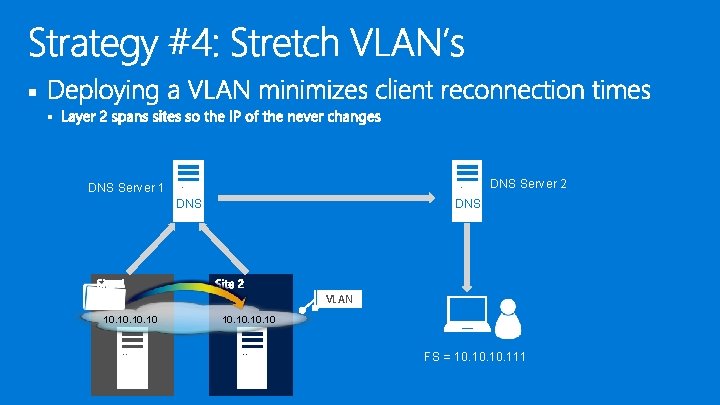

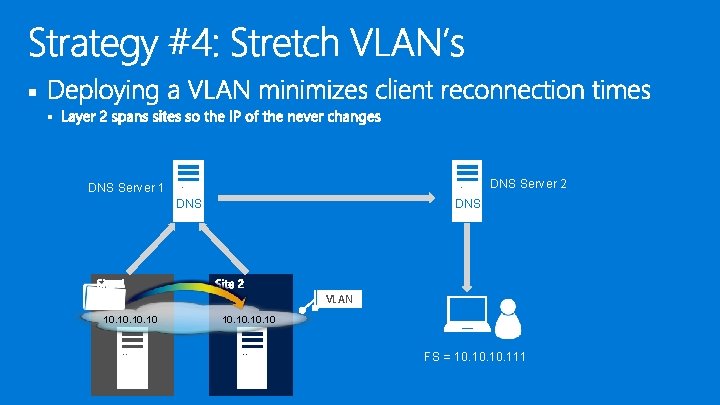

Diagram labels: DNS Server 2; DNS Server 1; DNS; VLAN 10.10; FS = 10.10.111

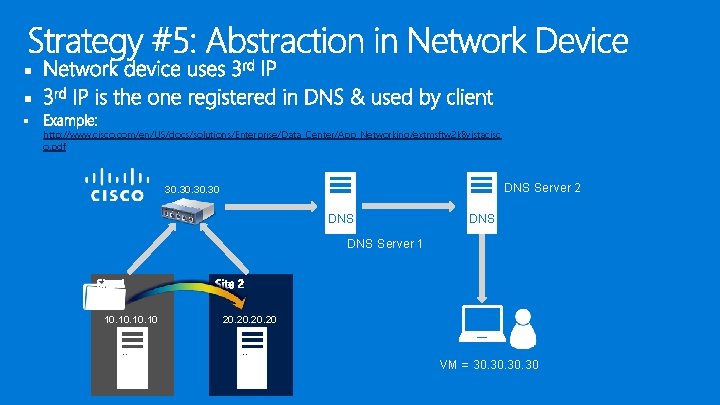

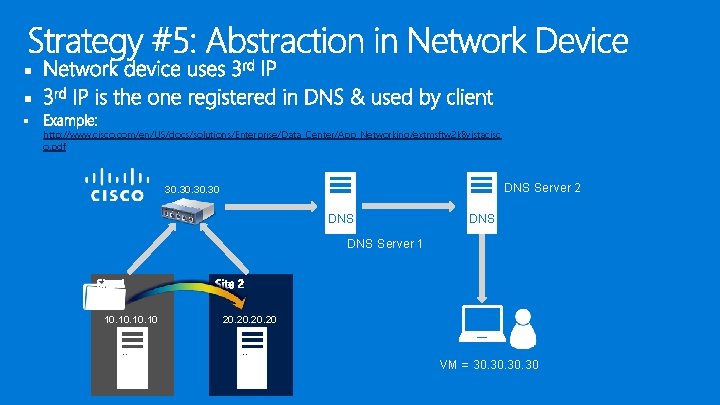

http://www.cisco.com/en/US/docs/solutions/Enterprise/Data_Center/App_Networking/extmsftw2k8vistacisco.pdf

Diagram labels: DNS Server 2; 30.30.30; DNS; DNS Server 1; 10.10.10; 20.20.20; VM = 30.30.30

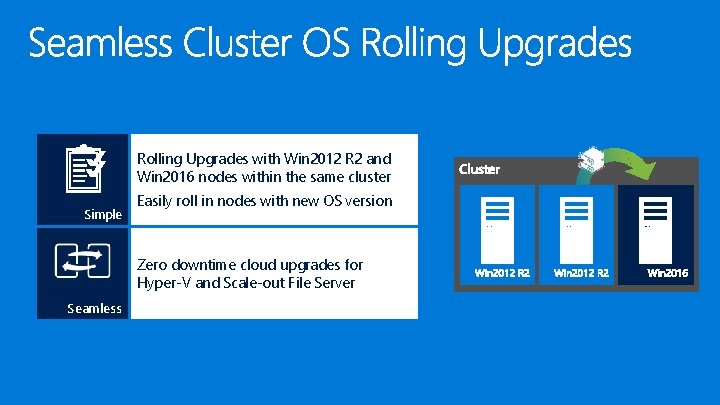

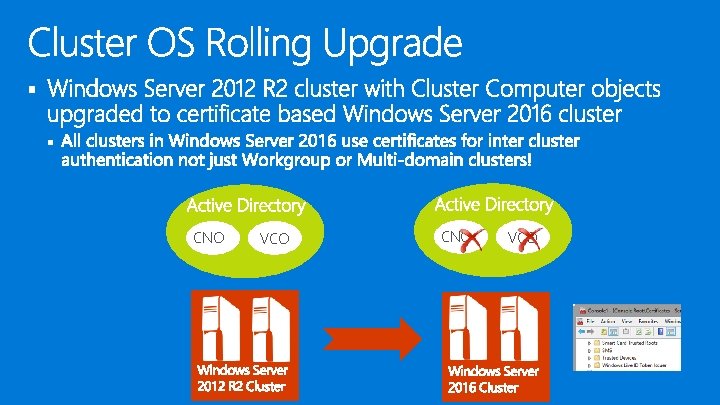

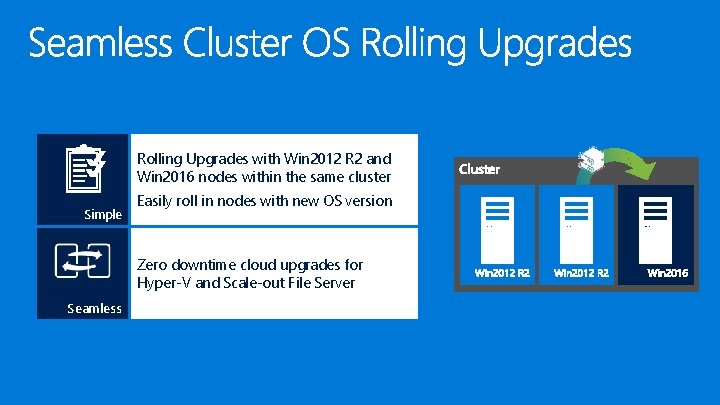

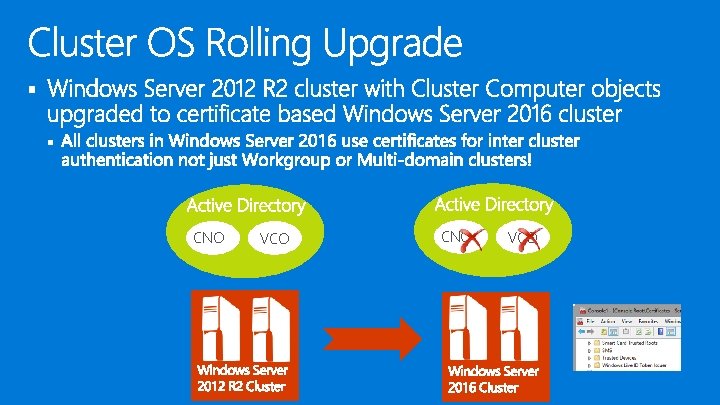

- Simple
  - Rolling Upgrades with Win 2012 R2 and Win 2016 nodes within the same cluster
  - Easily roll in nodes with new OS version
- Seamless
  - Zero downtime cloud upgrades for Hyper-V and Scale-out File Server

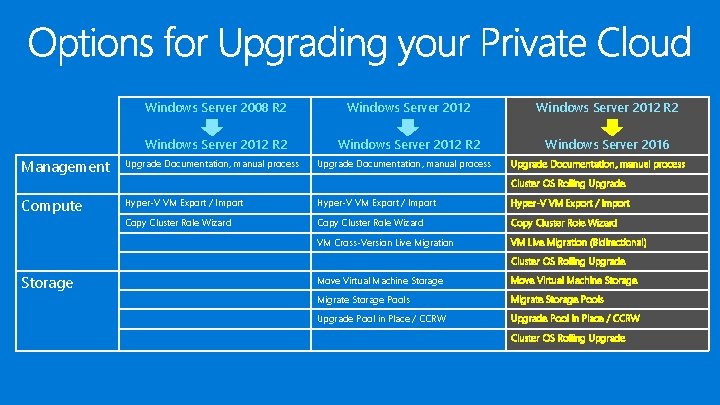

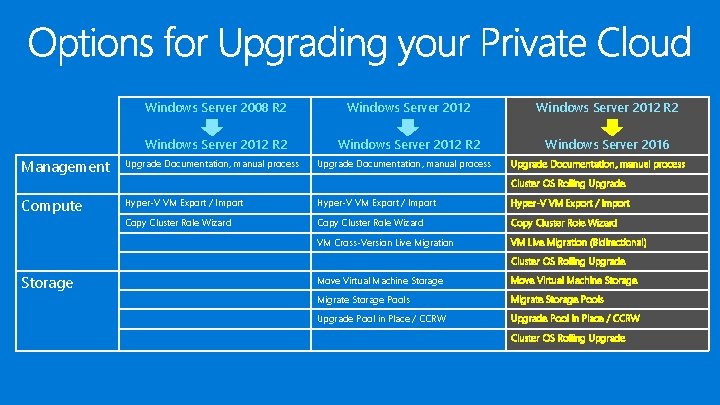

Upgrade options across Windows Server 2008 R2, Windows Server 2012 R2, and Windows Server 2016:

- Management: Upgrade Documentation, manual process; Cluster OS Rolling Upgrade
- Compute: Hyper-V VM Export / Import; Copy Cluster Role Wizard; VM Cross-Version Live Migration; VM Live Migration (Bidirectional); Cluster OS Rolling Upgrade
- Storage: Move Virtual Machine Storage; Migrate Storage Pools; Upgrade Pool in Place / CCRW; Cluster OS Rolling Upgrade

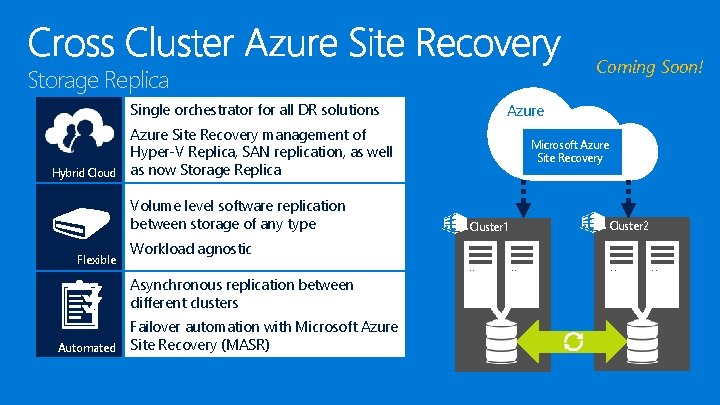

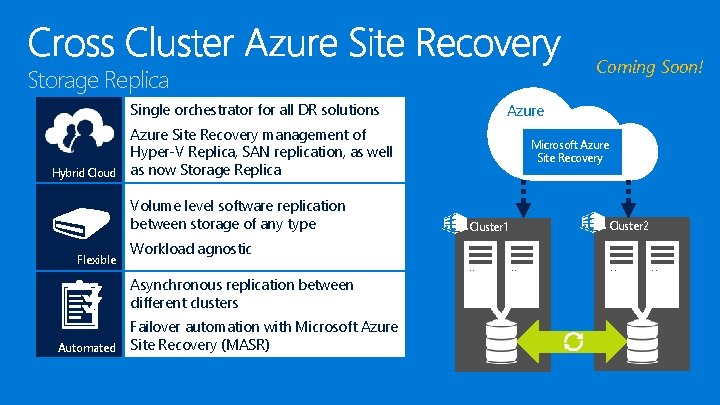

Coming Soon! Storage Replica

- Hybrid Cloud
  - Single orchestrator for all DR solutions
  - Azure Site Recovery management of Hyper-V Replica, SAN replication, as well as now Storage Replica
- Flexible
  - Volume level software replication between storage of any type
  - Workload agnostic
- Automated
  - Asynchronous replication between different clusters
  - Azure Failover automation with Microsoft Azure Site Recovery (MASR)

Diagram labels: Microsoft Azure Site Recovery; Cluster 1; Cluster 2

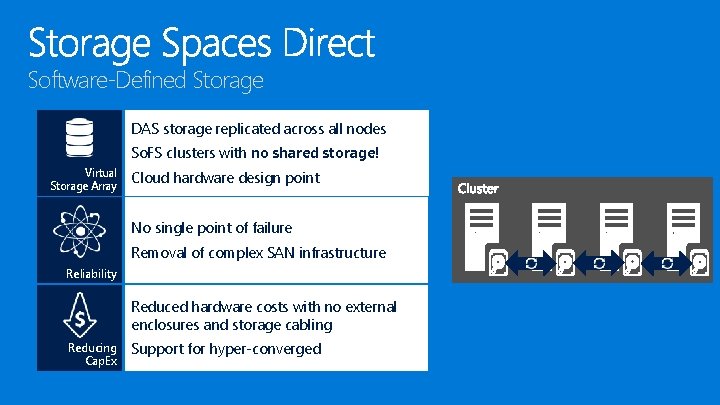

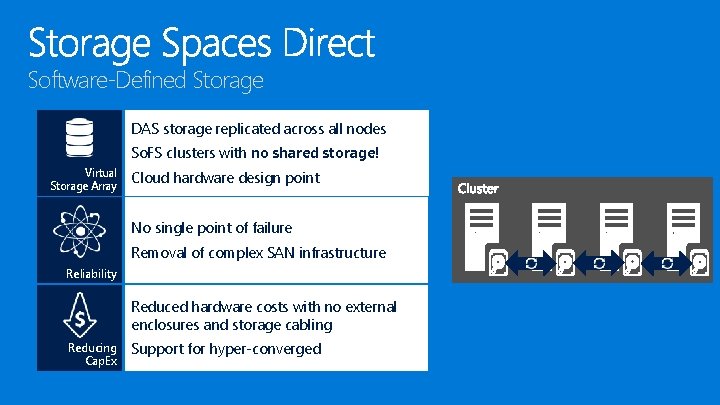

Software-Defined Storage

- Virtual Storage Array
  - DAS storage replicated across all nodes
  - SoFS clusters with no shared storage!
- Reliability
  - Cloud hardware design point
  - No single point of failure
- Reducing CapEx
  - Removal of complex SAN infrastructure
  - Reduced hardware costs with no external enclosures and storage cabling
  - Support for hyper-converged

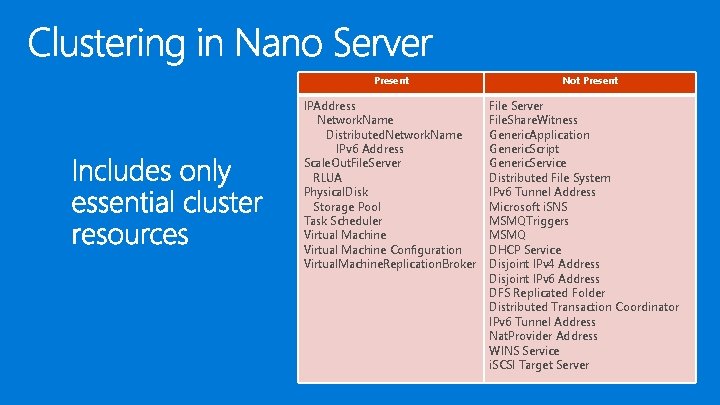

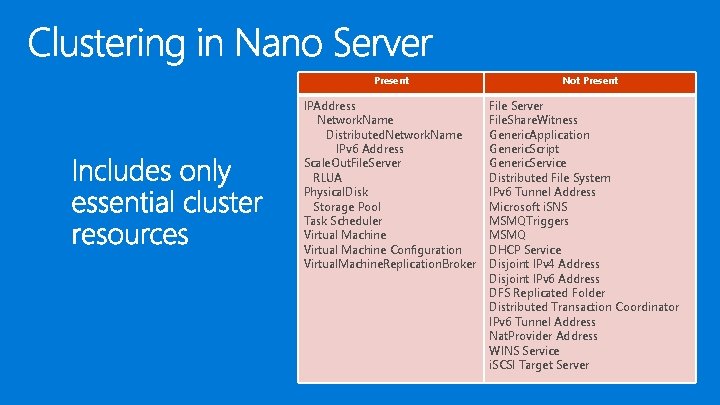

Present:

- IPAddress
- NetworkName
- DistributedNetworkName
- IPv6 Address
- ScaleOutFileServer
- RLUA
- PhysicalDisk
- Storage Pool
- Task Scheduler
- Virtual Machine Configuration
- VirtualMachineReplicationBroker

Not Present:

- File Server
- FileShareWitness
- GenericApplication
- GenericScript
- GenericService
- Distributed File System
- IPv6 Tunnel Address
- Microsoft iSNS
- MSMQTriggers
- MSMQ
- DHCP Service
- Disjoint IPv4 Address
- Disjoint IPv6 Address
- DFS Replicated Folder
- Distributed Transaction Coordinator
- NatProvider Address
- WINS Service
- iSCSI Target Server

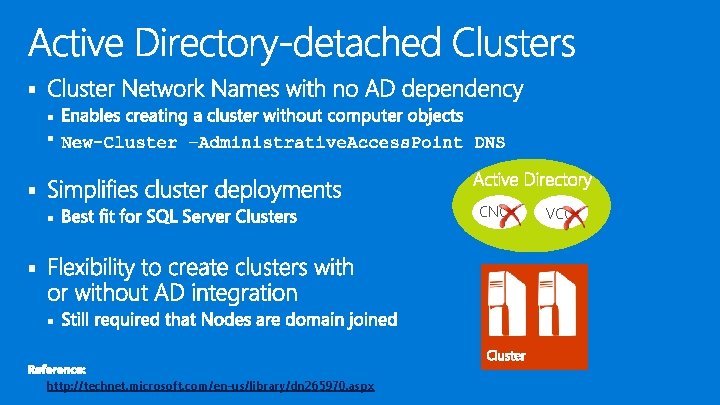

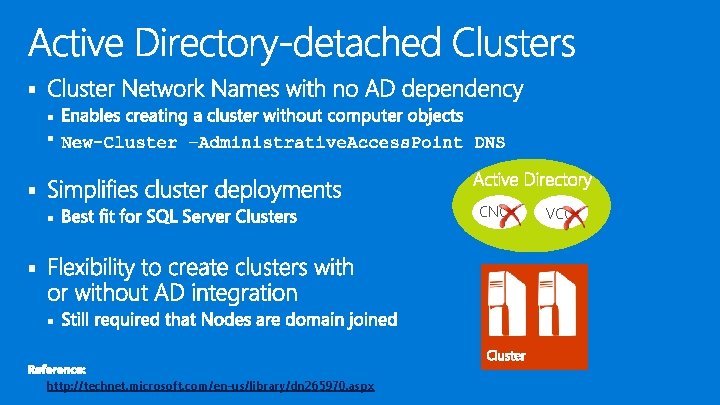

http://technet.microsoft.com/en-us/library/dn265970.aspx

Diagram labels: CNO; VCO

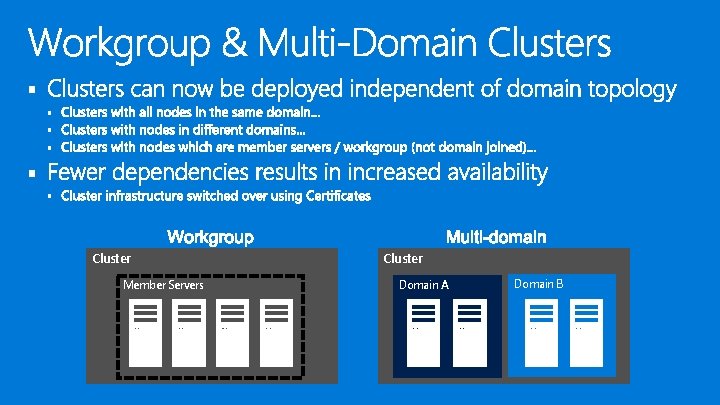

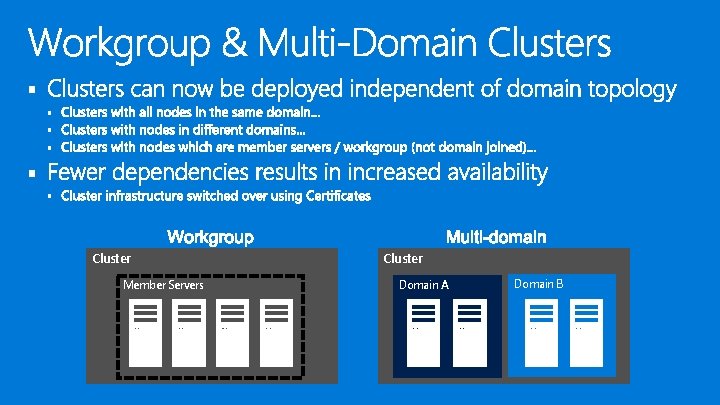

Diagram labels: Cluster Member Servers; Cluster; Domain A; Domain B

Diagram labels: CNO; VCO

More Deep Dive Sessions

- Failover Clustering: What's new in Windows Server 2016
  - Tuesday 3:45 - 5:00
- Exploring Storage Replica in Windows Server 2016
  - Wednesday 11:45 - 1:00
- Enabling Private Cloud Storage with Local Drives Using Storage Spaces Direct in Windows Server 2016
  - Thursday 11:30 - 12:45

Questions? Please use Event Board to fill out a session evaluation. Thank you!