Welcome to Program Evaluation: Overview of Evaluation Concepts

- Slides: 27

Copyright 2006, The Johns Hopkins University and Jane Bertrand. All rights reserved. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike License.

Introduction
• Objectives of course
• What is program evaluation?
• Types of evaluation (general)
• Example

Objectives of course
• By the end of the course, you will be able to:
• (1) Explain major concepts in program evaluation:
  – Types of evaluation and their purposes
  – Levels of measurement
  – Sources of data
  – Study designs, threats to validity

Objectives of course
• (2) Perform skills required in program evaluation:
  – Design a conceptual framework
  – Develop objectives, indicators
  – Conduct a focus group
  – Pretest a survey
  – Process statistics
  – Use participatory evaluation techniques
• (3) Write an evaluation plan

What’s not covered?
• How to do the multivariate analysis required to analyze the designs discussed in class (e.g., randomized trial)
• Calculations of sample size, power
• Surveillance techniques
• Additional qualitative methods
• Actual implementation - ECUR 810

Tell me more Jay.

Overview of Program Evaluation

What is program evaluation?
“the systematic assessment of the operation and/or outcomes of a program or policy, compared to a set of explicit or implicit standards as a means of contributing to the improvement of the program or policy.” (Weiss, 2009)

Which really means…
Telling some group how well the program is doing what it is supposed to be doing.
“There is room for judgment.” Brian Noonan

Why do we do program evaluation?
• To determine the effectiveness of a program:
  – Did it achieve its objectives?
  – Were effects similar across subgroups?
• To identify ways of improving on existing program design.
• To decide whether to continue or terminate a program.
• To satisfy financial considerations, requirements.
• For ‘political’ reasons, PR, staff morale, communication.

Initially you need to think about and ask:
• What is the problem?
• Why am I doing this?
• What exactly do I want to know?
• Does an answer already exist?
• How do I find out?
• Who is involved?
• What will this cost?
• What will I do with the data?
• What happens when the evaluation is finished?

Tailoring evaluation to a specific context
• What questions will the evaluation answer?
• What methods/procedures will be used?
• What will be the evaluator-stakeholder relationship?
  – Independent evaluation
  – Participatory/collaborative evaluation
  – Empowerment evaluation
• What do you do with the answers?

Other key factors: program structure and circumstances
• Stage of program development
• Political context (conflict over goals)
• Structure of the program
  – Scope of activities, type of services
  – Number and location of service sites
  – Characteristics of intended audience
• Resources available:
  – Human, $$$, support of admin or management

Challenges in program evaluation
• Dealing with the ‘politics’ of programs
• Having the program design change mid-course
• Balancing the tensions between scientific soundness and practicality (utility for decision-makers)
• Obtaining $$$ and support for strong designs

Primary types of evaluation
• Formative
  – Needs assessment/diagnostic
  – Pretesting
• Process
  – Implemented well? As planned?
  – Input analysis
  – Output, effectiveness, user satisfaction
• Summative
  – Monitoring of program utilization
  – Monitoring of behavior or health status
  – Impact/outcome assessment
• Cost effectiveness, cost-benefit
• Benchmarks, standards

Characteristics of PE (Powell, 2006)
• Used for decision making.
• Deals with research questions about a program.
• Takes place in the real world of the program.
• Usually represents a compromise between pure and applied research.
• Should be carefully planned.
• Has a purpose; it is not an end in itself.
• There must be hope for action or change.
• Can be used to raise internal as well as external awareness.

Palate Cleanser

An example…
• Two ways a program can be studied.
• Two ways to determine what is working or not working with a program.

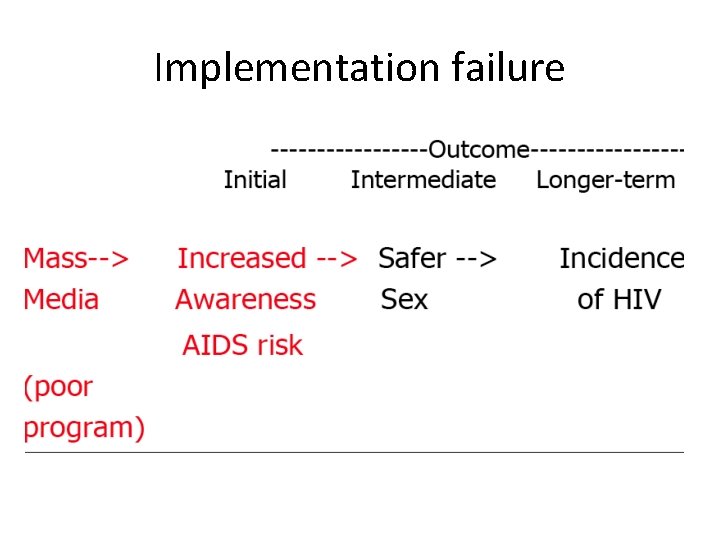

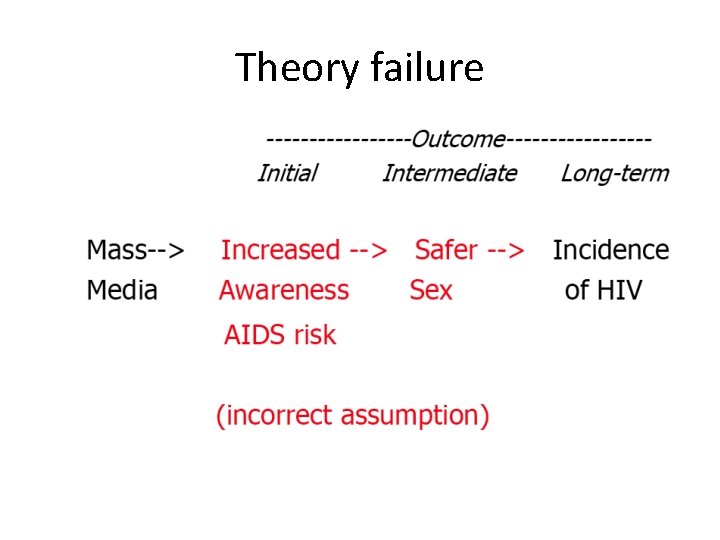

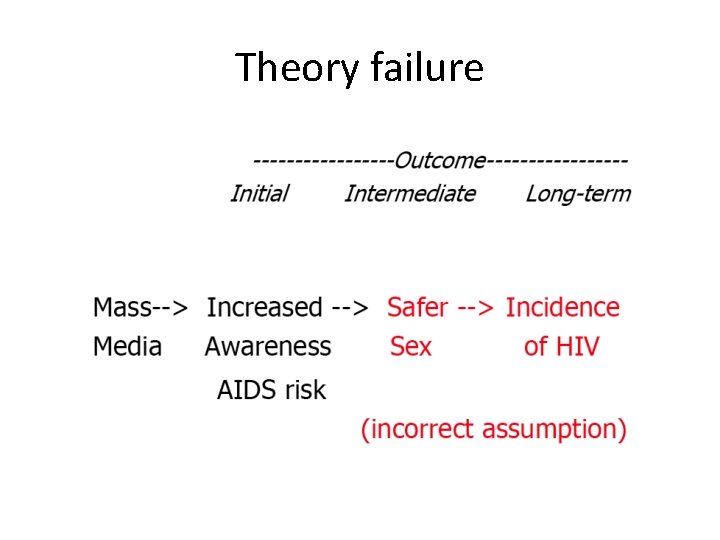

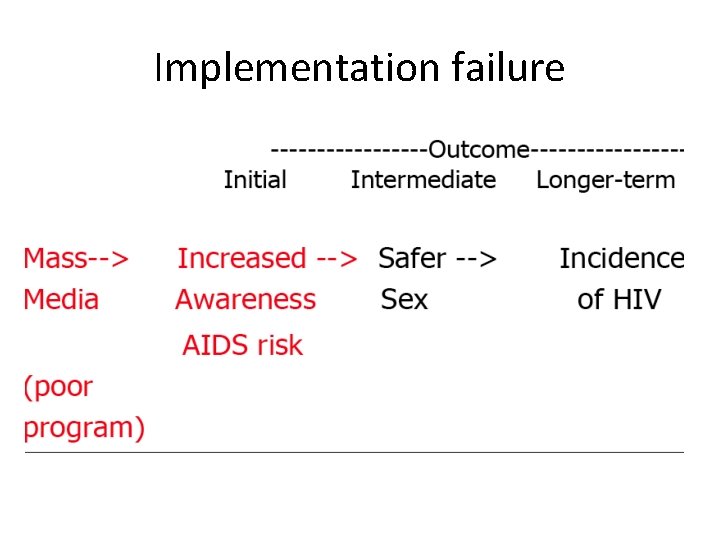

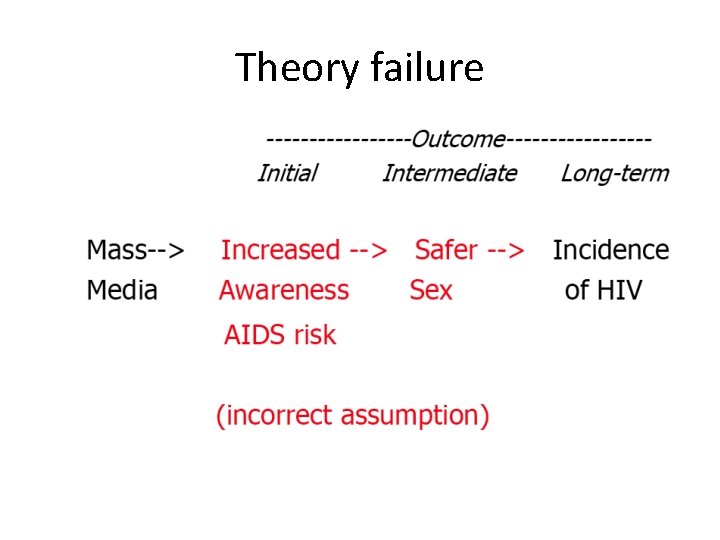

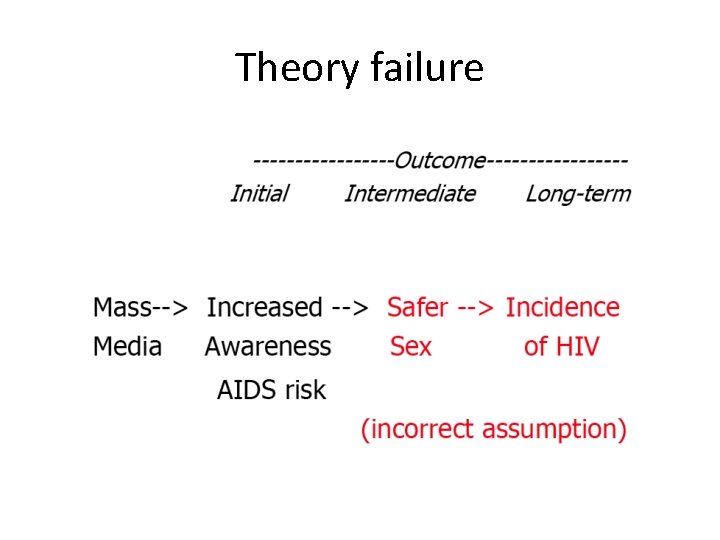

Implementation failure vs. Theory failure
• Implementation failure
  – Program is not implemented as planned
• Theory failure
  – Program is implemented as planned
  – Intervention does not produce the intermediate results, and/or
  – Intermediate results do not produce the desired outcome
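The distinction above can be read as a simple decision rule. The Python sketch below is only an illustration of that reading, not part of the original slides: the names `Diagnosis` and `diagnose` and the three yes/no inputs are hypothetical, and a real evaluation would rest on measured indicators and judgment rather than three booleans.

```python
from enum import Enum


class Diagnosis(Enum):
    """Hypothetical labels for the two failure types on the slide."""
    IMPLEMENTATION_FAILURE = "implementation failure"
    THEORY_FAILURE = "theory failure"
    NO_FAILURE = "no failure observed"


def diagnose(implemented_as_planned: bool,
             intermediate_results: bool,
             desired_outcome: bool) -> Diagnosis:
    """Classify a program result using the slide's definitions.

    Assumption: each input is a simplified yes/no summary of what the
    evaluation found.
    """
    # If the program was not delivered as planned, the program theory
    # was never really tested, so this is checked first.
    if not implemented_as_planned:
        return Diagnosis.IMPLEMENTATION_FAILURE
    # Delivered as planned, but the causal chain broke: either the
    # intermediate results did not appear, or they appeared and did
    # not lead to the desired outcome.
    if not intermediate_results or not desired_outcome:
        return Diagnosis.THEORY_FAILURE
    return Diagnosis.NO_FAILURE


if __name__ == "__main__":
    # Delivered as planned, behaviors changed, outcome did not follow.
    print(diagnose(True, True, False).value)
```

Checking implementation first reflects the slide's ordering: a program that was never delivered as planned says nothing about whether its underlying theory is sound.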

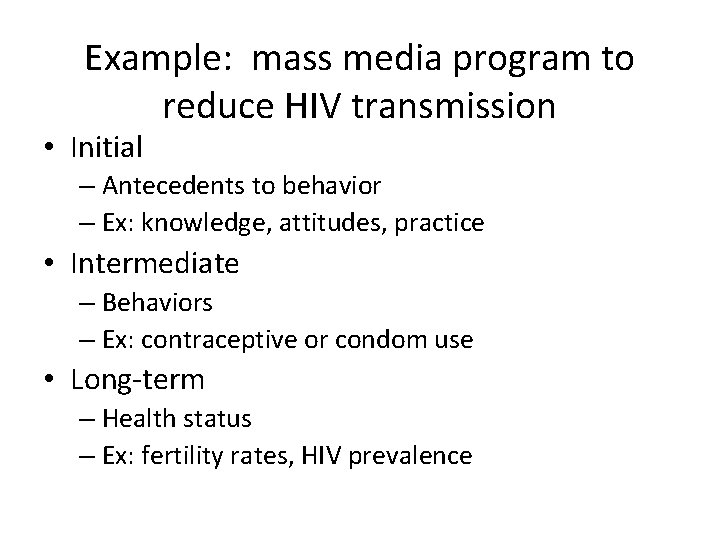

Measures of outcome (Rossi, Lipsey, Freeman, 2004)
• Initial
• Intermediate
• Long-term

Example: mass media program to reduce HIV transmission
• Initial
  – Antecedents to behavior
  – Ex: knowledge, attitudes, practice
• Intermediate
  – Behaviors
  – Ex: contraceptive or condom use
• Long-term
  – Health status
  – Ex: fertility rates, HIV prevalence

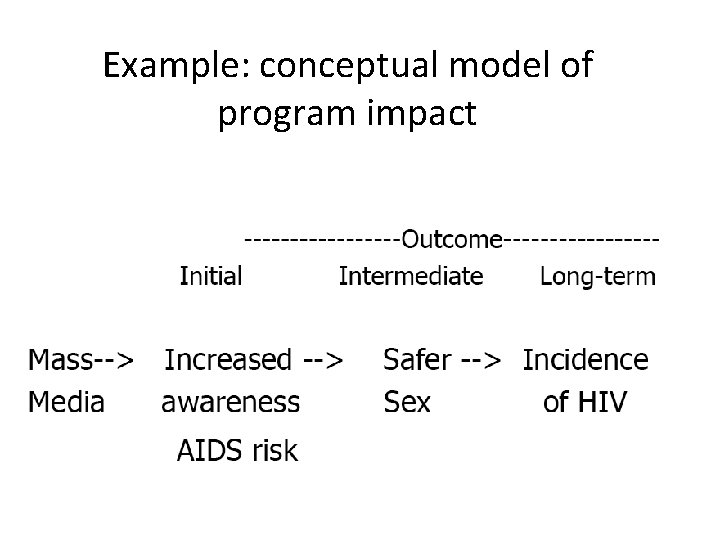

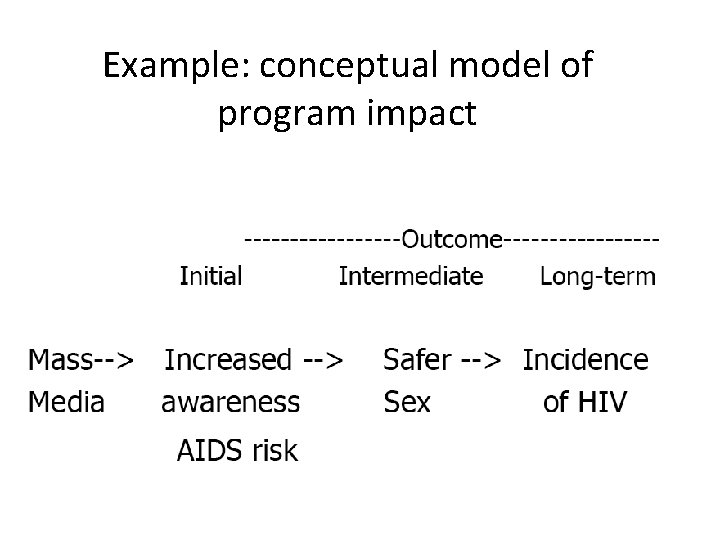

Example: conceptual model of program impact

Implementation failure

Theory failure

Theory failure

What??