
- Slides: 46

Program Evaluation

Program evaluation: methodological techniques of the social sciences applied to social policy and public welfare administration.

Evaluation
- Formative: help form the program; ongoing assessment to improve implementation
- Outcome: after the fact

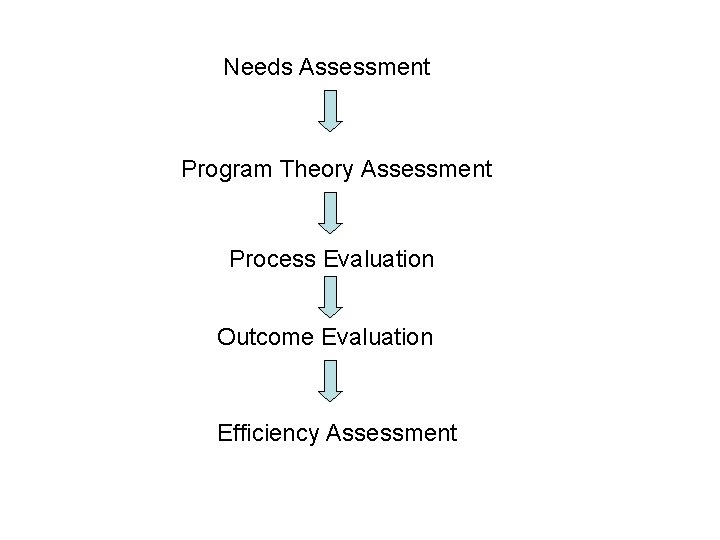

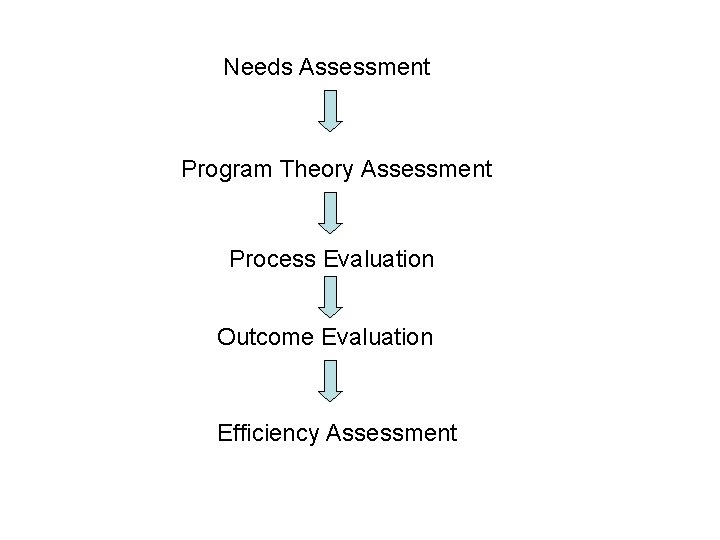

- Needs Assessment
- Program Theory Assessment
- Process Evaluation
- Outcome Evaluation
- Efficiency Assessment

Needs assessment
- Who needs the program?
- How great is the need?
- What might work to meet the need?
- What resources are available?

“Evaluability” assessment
- Is an evaluation feasible?
- How can stakeholders shape its usefulness?

Structured conceptualization
- Define the program or technology.
- Define the target population.
- Define possible outcomes.

Process evaluation: investigates the process of delivery and alternatives.

Summative: summarize the effects.

Implementation evaluation: monitors the fidelity of delivery.

Outcome evaluations: demonstrable effects on defined targets.

Impact evaluation: net effects, intended and unintended, of the program as a whole.

Cost-effectiveness / cost-benefit analysis: examines efficiency by standardizing outcomes in dollar costs and values.

Secondary analysis: examine existing data to address new questions or use different methods.

Meta-analysis: integrates outcomes with those of other studies to reach a summary judgment.

Meta-analysis
- Analysis of analyses
- Summarize a body of work
- Replication is good but can lead to inconsistent results

Useful for
1) clarifying inconsistencies
2) program evaluation
3) review work
4) broadly framed questions

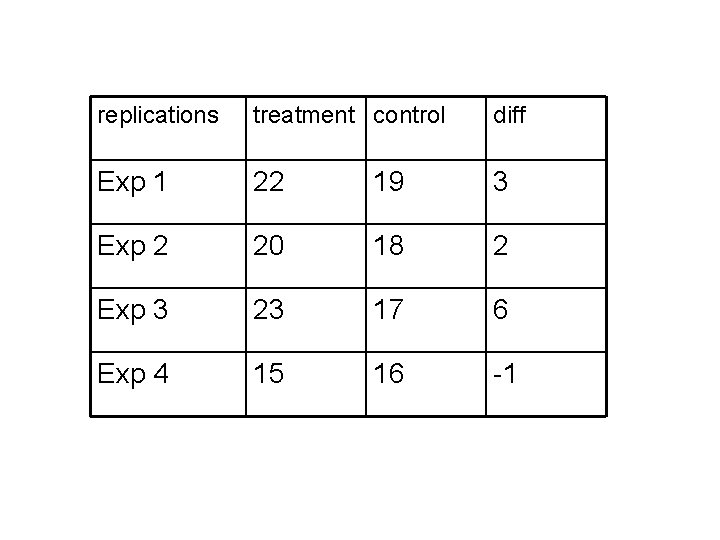

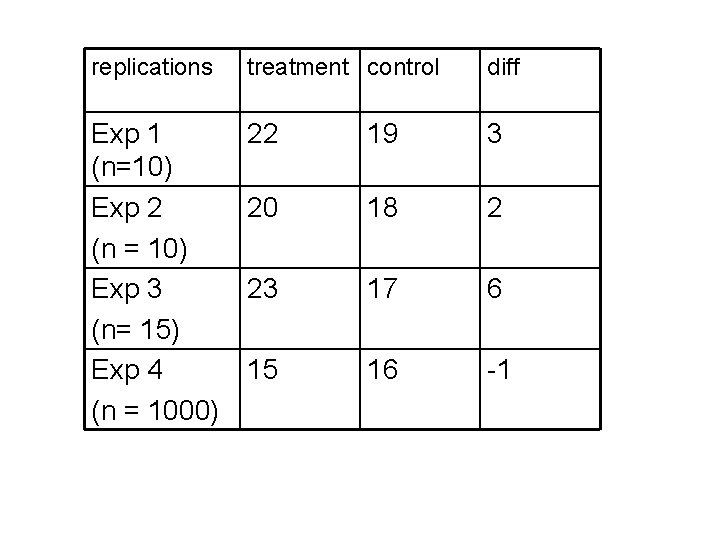

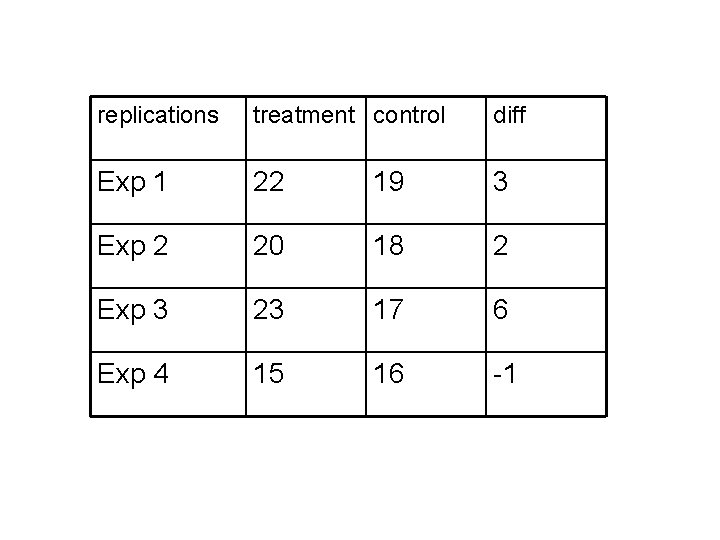

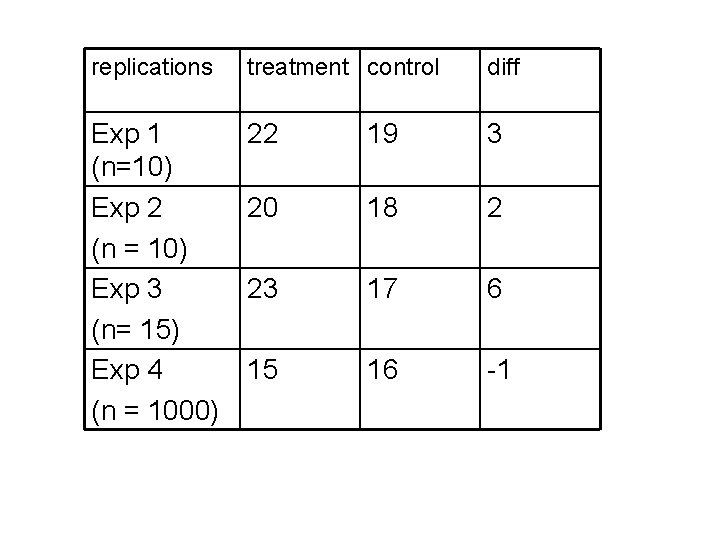

| Replication | Treatment | Control | Diff |
|---|---|---|---|
| Exp 1 | 22 | 19 | 3 |
| Exp 2 | 20 | 18 | 2 |
| Exp 3 | 23 | 17 | 6 |
| Exp 4 | 15 | 16 | -1 |

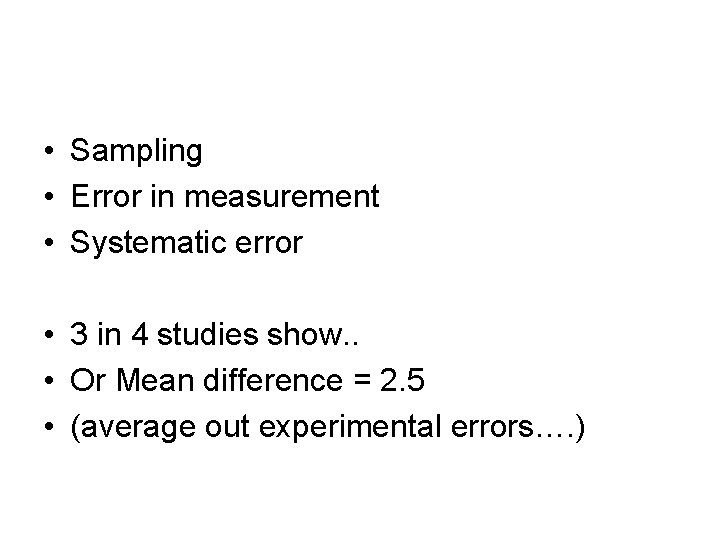

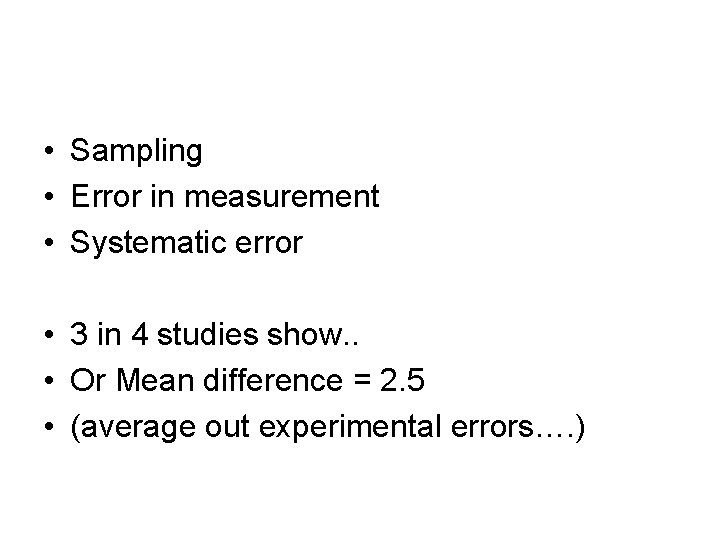

- Sampling
- Error in measurement
- Systematic error
- 3 in 4 studies show…
- Or mean difference = 2.5
- (average out experimental errors…)
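The two summaries on this slide (a vote count and a mean difference) can be reproduced from the replication table. The following is a minimal Python sketch; the variable names are illustrative and are not part of the original slides.

```python
# Treatment and control means for the four replications on the slide.
experiments = {
    "Exp 1": (22, 19),
    "Exp 2": (20, 18),
    "Exp 3": (23, 17),
    "Exp 4": (15, 16),
}

# Difference (treatment minus control) for each replication.
differences = {
    name: treatment - control
    for name, (treatment, control) in experiments.items()
}

# Vote count: how many replications favour the treatment?
studies_in_favour = sum(1 for diff in differences.values() if diff > 0)

# Unweighted mean difference across replications.
mean_difference = sum(differences.values()) / len(differences)

print(f"{studies_in_favour} in {len(differences)} studies favour the treatment")
print(f"Mean difference = {mean_difference}")
```

The vote count discards the size of each difference, while the mean difference keeps it but treats every replication as equally informative.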

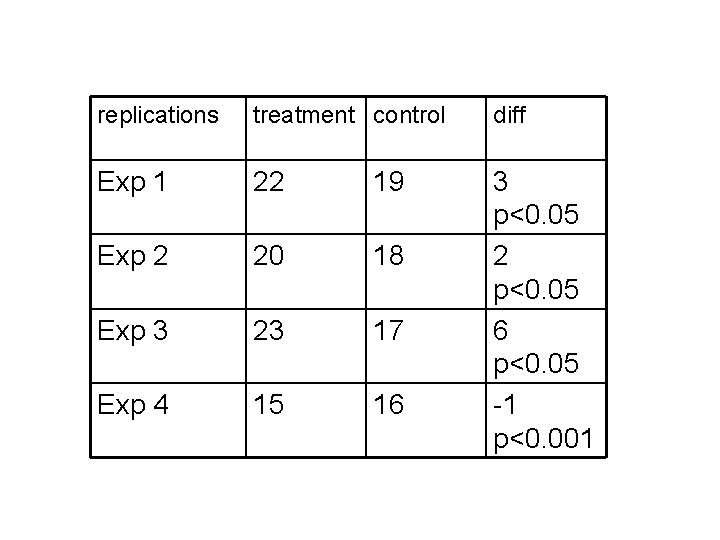

| Replication | Treatment | Control | Diff |
|---|---|---|---|
| Exp 1 (n = 10) | 22 | 19 | 3 |
| Exp 2 (n = 10) | 20 | 18 | 2 |
| Exp 3 (n = 15) | 23 | 17 | 6 |
| Exp 4 (n = 1000) | 15 | 16 | -1 |

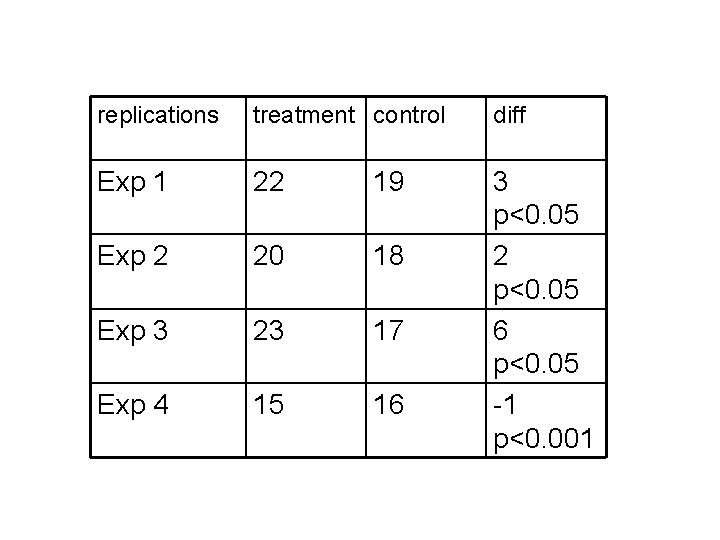

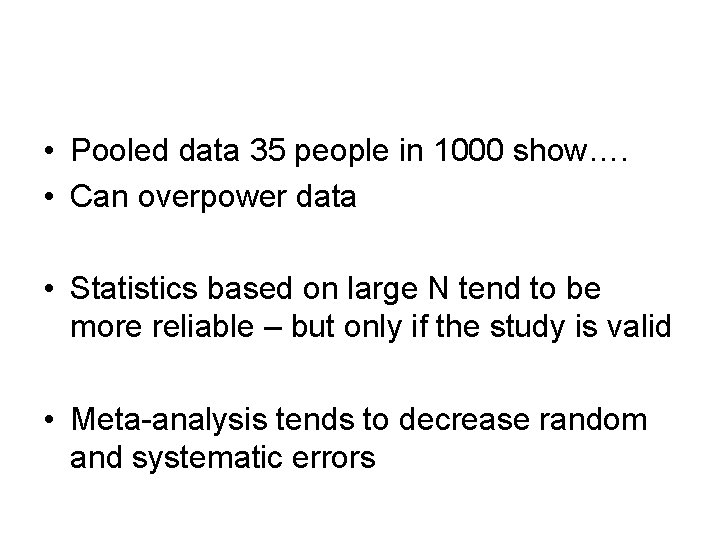

| Replication | Treatment | Control | Diff | p |
|---|---|---|---|---|
| Exp 1 | 22 | 19 | 3 | p < 0.05 |
| Exp 2 | 20 | 18 | 2 | p < 0.05 |
| Exp 3 | 23 | 17 | 6 | p < 0.05 |
| Exp 4 | 15 | 16 | -1 | p < 0.001 |

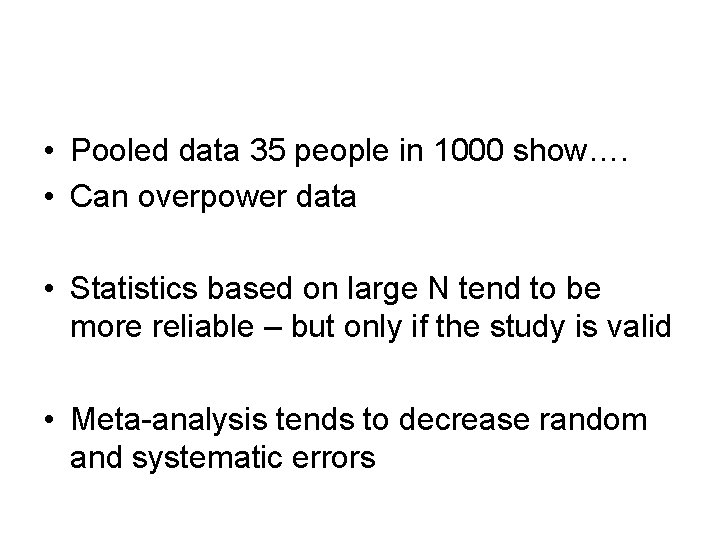

- Pooled data: 35 people in 1000 show…
- Can overpower data
- Statistics based on large N tend to be more reliable, but only if the study is valid
- Meta-analysis tends to decrease random and systematic errors
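One way to see how a single large study can overpower the pooled result is to weight each difference by its sample size. This is a simplified Python sketch: weighting by n is an assumption made here for illustration (meta-analyses more commonly weight by inverse variance), and each n is read as the total sample size of that experiment.

```python
# (difference, n) for each replication, taken from the slide tables.
replications = [
    (3, 10),     # Exp 1
    (2, 10),     # Exp 2
    (6, 15),     # Exp 3
    (-1, 1000),  # Exp 4
]

total_n = sum(n for _, n in replications)

# Every study counts equally.
unweighted_mean = sum(diff for diff, _ in replications) / len(replications)

# Every participant counts equally (illustrative weighting by n).
weighted_mean = sum(diff * n for diff, n in replications) / total_n

print(f"Total N = {total_n}")
print(f"Unweighted mean difference = {unweighted_mean:.2f}")
print(f"Sample-size weighted mean difference = {weighted_mean:.2f}")
```

With these numbers the unweighted mean is positive, but the weighted mean is negative because Exp 4 contributes 1000 of the 1035 participants. That is only reassuring if Exp 4 is itself a valid study.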

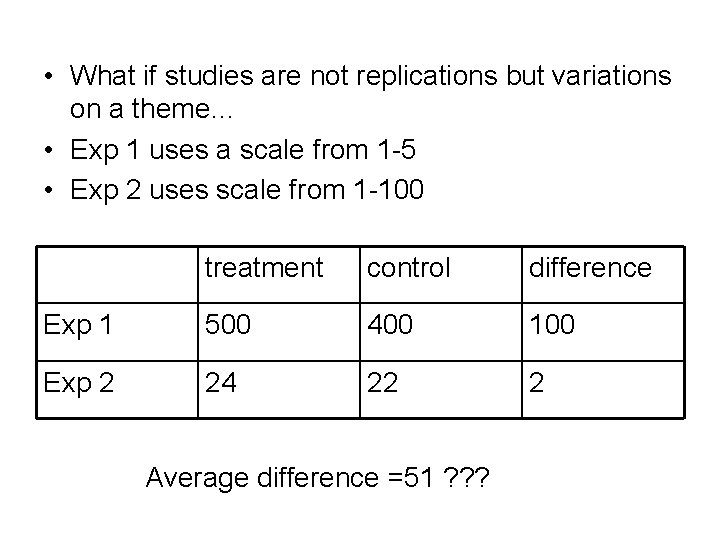

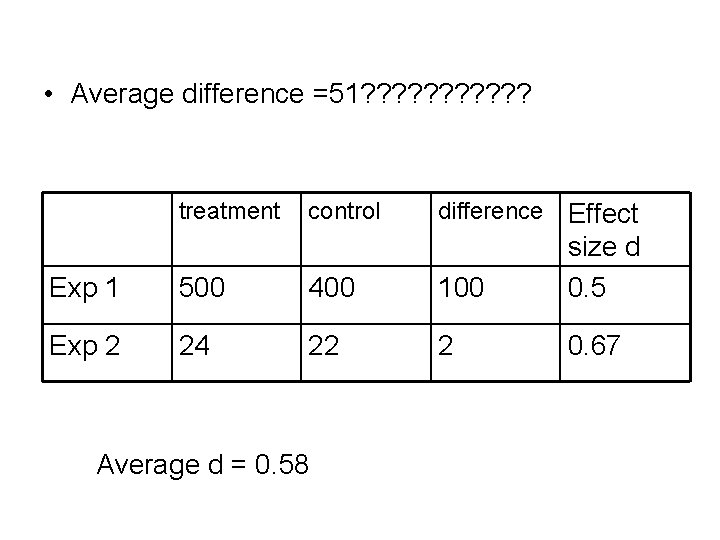

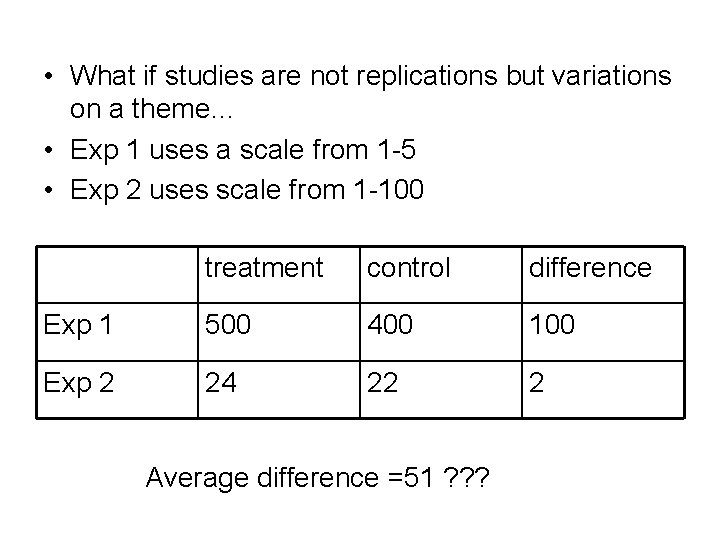

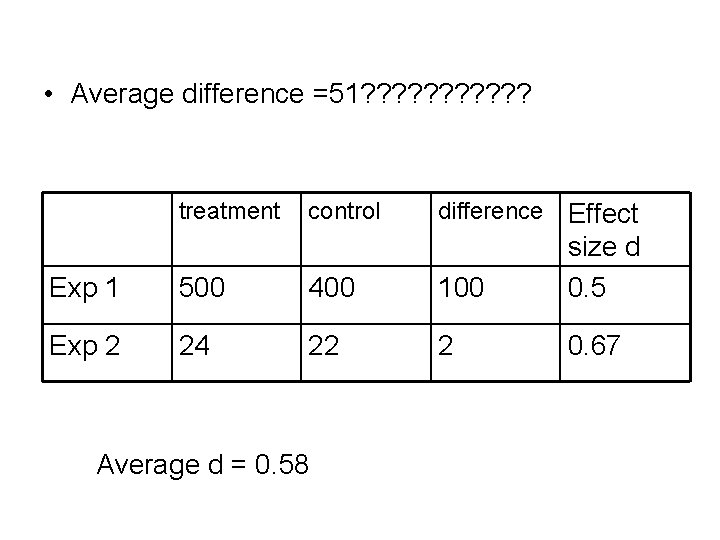

- What if studies are not replications but variations on a theme?
- Exp 1 uses a scale from 1-5
- Exp 2 uses a scale from 1-100

| | Treatment | Control | Difference |
|---|---|---|---|
| Exp 1 | 500 | 400 | 100 |
| Exp 2 | 24 | 22 | 2 |

Average difference = 51???

- Average difference = 51???

| | Treatment | Control | Difference | Effect size d |
|---|---|---|---|---|
| Exp 1 | 500 | 400 | 100 | 0.5 |
| Exp 2 | 24 | 22 | 2 | 0.67 |

Average d = 0.58
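The move from raw differences to effect sizes can be sketched in Python as a standardized mean difference (Cohen's d): the difference in means divided by a standard deviation. The slides do not report standard deviations, so the values 200 and 3 below are hypothetical, back-calculated so that the results match the d values shown (0.5 and 0.67).

```python
def cohens_d(mean_treatment, mean_control, sd):
    """Standardized mean difference: (treatment - control) / standard deviation."""
    return (mean_treatment - mean_control) / sd

# (treatment mean, control mean, assumed SD); the SDs are hypothetical.
studies = {
    "Exp 1": (500, 400, 200),
    "Exp 2": (24, 22, 3),
}

raw_differences = [t - c for t, c, _ in studies.values()]
effect_sizes = {name: cohens_d(t, c, sd) for name, (t, c, sd) in studies.items()}

average_raw_difference = sum(raw_differences) / len(raw_differences)
average_d = sum(effect_sizes.values()) / len(effect_sizes)

print(f"Average raw difference = {average_raw_difference}")  # mixes two scales
print(f"Average d = {average_d:.2f}")                        # scale-free
```

Averaging raw differences mixes two incompatible scales, whereas d expresses each difference in standard-deviation units, so the two studies become comparable.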

What is summarized?
1) Count studies for and against: does not give magnitude and has low power
2) Combine significance levels
3) Combine effect sizes (an effect size gives the magnitude of the relationship between 2 variables)

Advantages
a) Increase sample size and power
b) Increase internal validity: soundness of conclusions about the relationship
c) Increase external validity: generalizability to other places, people, etc.
d) Shows an effect even if small, if it is consistent
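Combining significance levels can be done in several ways. The Python sketch below uses Stouffer's Z method as one common choice; the slides do not name a specific method, and the one-sided p-values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def stouffer_combined_p(p_values):
    """Combine one-sided p-values with Stouffer's unweighted Z method."""
    standard_normal = NormalDist()
    # Convert each one-sided p-value to a z-score.
    z_scores = [standard_normal.inv_cdf(1 - p) for p in p_values]
    # Under the null, the sum of k independent z-scores has variance k.
    combined_z = sum(z_scores) / sqrt(len(z_scores))
    return 1 - standard_normal.cdf(combined_z)

# Hypothetical one-sided p-values from three independent studies.
p_values = [0.04, 0.03, 0.02]
combined_p = stouffer_combined_p(p_values)

print(f"Combined p = {combined_p:.5f}")
```

Several modestly significant results in the same direction combine into stronger evidence than any single study. Like vote counting, this says nothing about the magnitude of the effect.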

- Synthesis is a better estimate of effect size
- If the effect is real and consistent it will be detected
- BUT limited by the original studies

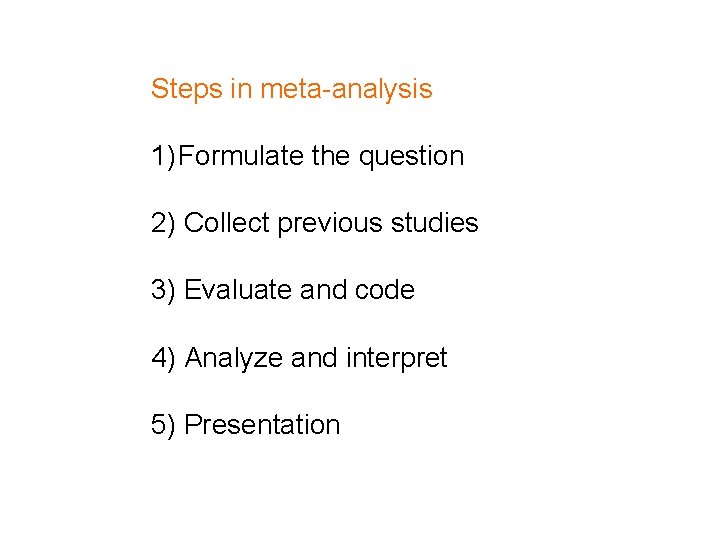

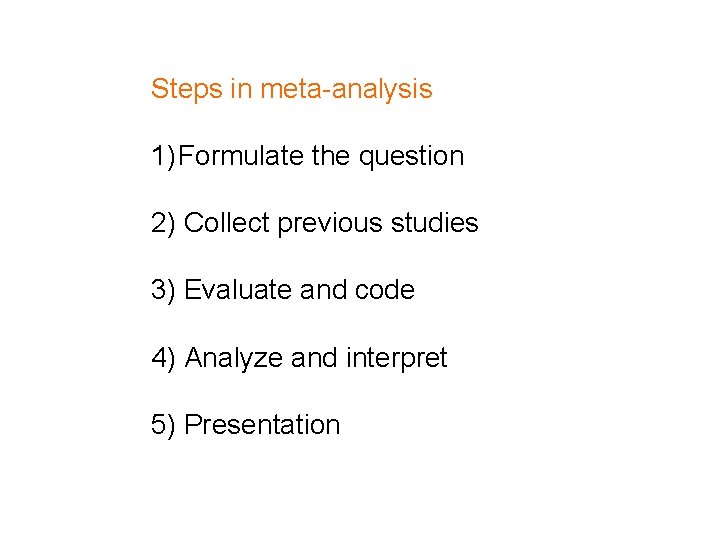

Steps in meta-analysis
1) Formulate the question
2) Collect previous studies
3) Evaluate and code
4) Analyze and interpret
5) Presentation

- Data Sources
- Study Selection
- Data Abstraction
- Statistical Analysis

Data Sources
1. Computer searches
2. Cross-referencing
3. Hand-searching
4. Expert(s) to review list

Study Selection
1. Study designs
2. Subjects
3. Publication types
4. Languages
5. Interventions
6. Time frame

- Need to establish criteria for inclusion
- E.g., a reading program for schools may be effective only for younger children
- Determine the acceptable age cut-off
- Or run separate analyses for the two groups
- Or use age as a moderating factor

Data Abstraction
1. Number of items coded
2. Inter-coder bias
3. Items coded
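Inter-coder bias is often checked by having two people code the same studies and measuring their agreement. The Python sketch below uses Cohen's kappa, which corrects raw agreement for agreement expected by chance. The slides do not name a statistic, so kappa is an assumption here, and the coding decisions are hypothetical.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders on the same items.

    Undefined (division by zero) when both coders use one identical
    category for every item.
    """
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    # Agreement expected if each coder assigned categories independently.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    return (observed - expected) / (1 - expected)

# Hypothetical include/exclude decisions by two coders on eight studies.
coder_a = ["include", "include", "exclude", "include",
           "exclude", "exclude", "include", "include"]
coder_b = ["include", "exclude", "exclude", "include",
           "exclude", "include", "include", "include"]

kappa = cohens_kappa(coder_a, coder_b)
print(f"Cohen's kappa = {kappa:.2f}")
```

Here the coders agree on 6 of 8 studies (75%), but because both choose "include" most of the time, much of that agreement could arise by chance, and kappa is noticeably lower than the raw agreement.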

Coding
- Are all studies the same? One has N = 10, another has N = 1000
- Different DV scales: 1-5 vs. a 500-point scale
- How flawed is OK? Do we include a study if we think it has a confound?
- Publication bias

Statistical Analysis
1. Choice of metric
2. Choice of model / heterogeneity
3. Publication bias
4. Study quality
5. Moderator analysis

Choice of Metric
- Original
- Standardized mean difference (mean difference / standard deviation)

Choice of Model / Heterogeneity
- Fixed effects: the current group of studies is explained
- Random effects: assumes that this is a random group from all possible studies
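The difference between the two models can be sketched in Python. The fixed-effect estimate below uses inverse-variance weights, and the random-effects estimate adds a between-study variance (tau squared) estimated with the DerSimonian-Laird method. Both are standard choices rather than something the slides specify, and the effect sizes and variances are hypothetical.

```python
def fixed_effect(effects, variances):
    """Inverse-variance weighted mean; returns (pooled effect, weights)."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return pooled, weights

def random_effects(effects, variances):
    """DerSimonian-Laird random-effects mean; returns (pooled effect, tau^2)."""
    pooled_fe, w = fixed_effect(effects, variances)
    k = len(effects)
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (yi - pooled_fe) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    # Between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c)
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled_re = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return pooled_re, tau2

# Hypothetical effect sizes (d) and their sampling variances.
effects = [0.2, 0.5, 0.9, -0.1]
variances = [0.04, 0.05, 0.04, 0.01]

pooled_fe, _ = fixed_effect(effects, variances)
pooled_re, tau2 = random_effects(effects, variances)

print(f"Fixed-effect estimate   = {pooled_fe:.3f}")
print(f"Random-effects estimate = {pooled_re:.3f} (tau^2 = {tau2:.3f})")
```

When the studies disagree more than sampling error alone would predict, tau squared is positive and the random-effects weights become more equal, so the most precise study dominates the pooled estimate less than it does under the fixed-effect model.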

Publication Bias
- Graphical methods
- Quantitative methods

Study Quality
a. Difficult to assess
b. Interpret with caution
c. Numerous scales and checklists available

Moderator Analysis
a. Categorical analysis
b. Regression analysis

Allows for explanation of effects

- Meta-analysis compared to review
- Objective or subjective?

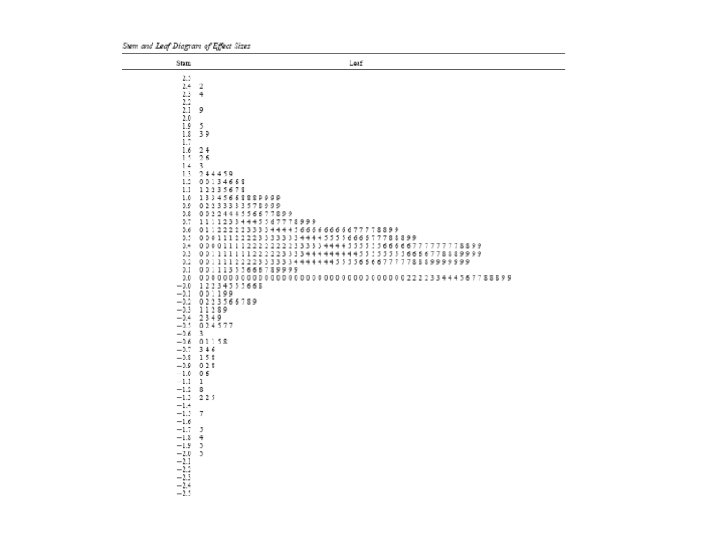

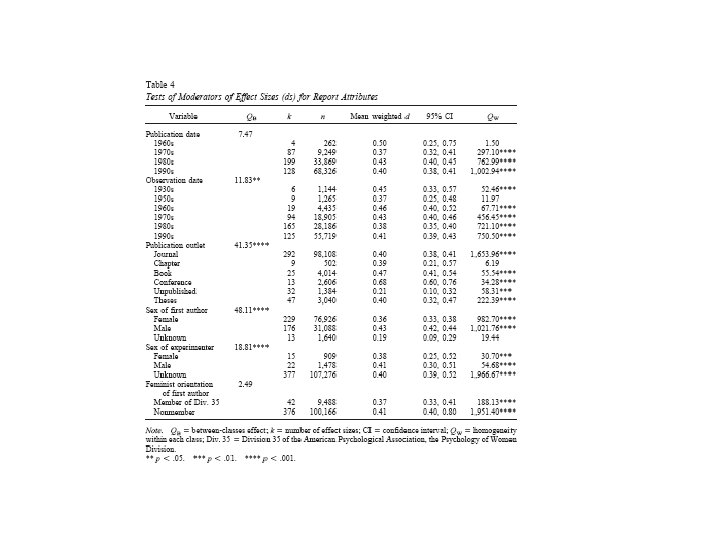

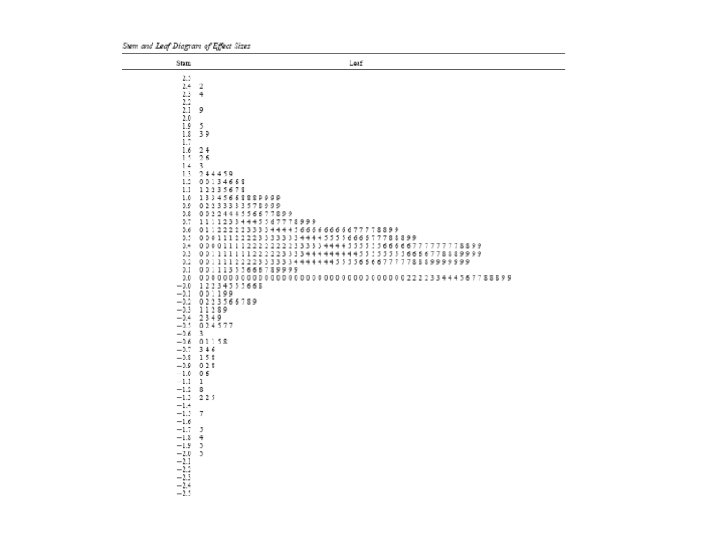

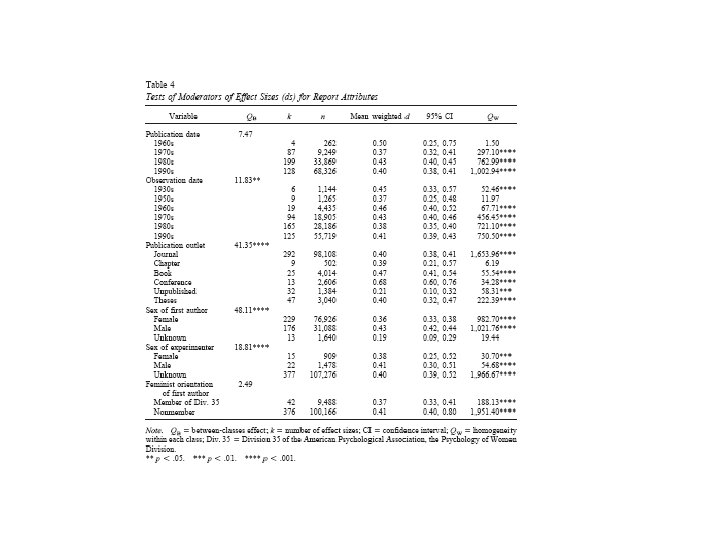

The Contingent Smile: A Meta-Analysis of Sex Differences in Smiling. M. LaFrance, M. A. Hecht, E. Levy Paluck. Psychological Bulletin, 2003, Vol. 129, No. 2, 305–334

Based on 20 published studies, the effect size (d) she reported was a moderate 0.63. In a follow-up report, J. A. Hall and Halberstadt (1986) added seven new cases and reported a somewhat lower weighted effect size of 0.42.

We included in our meta-analysis unpublished studies such as conference papers and theses, as well as previously unanalyzed data that were not included in their prior meta-analysis. Second, we explored the influence of several moderators derived from work in other areas of sex difference research.

The third goal for the present meta-analysis was to conduct a more fine-grained analysis of several moderators previously considered by J. A. Hall and Halberstadt (1986).

Method
- Retrieval of Studies
- We searched the empirical literature for studies that documented a quantitative relationship between sex and smiling, even if that relationship was not the central one of the investigation.
- Along with published articles, unpublished materials such as conference papers, theses, dissertations, and other unpublished papers were included. This was done to counter the publication bias toward positive results.