Visual Attention

Derek Hoiem, March 14, 2007, Misc Reading Group

The Eye

- 120 million rods (intensity)
- 7 million cones (color)
- Fovea: 2 degrees of cones

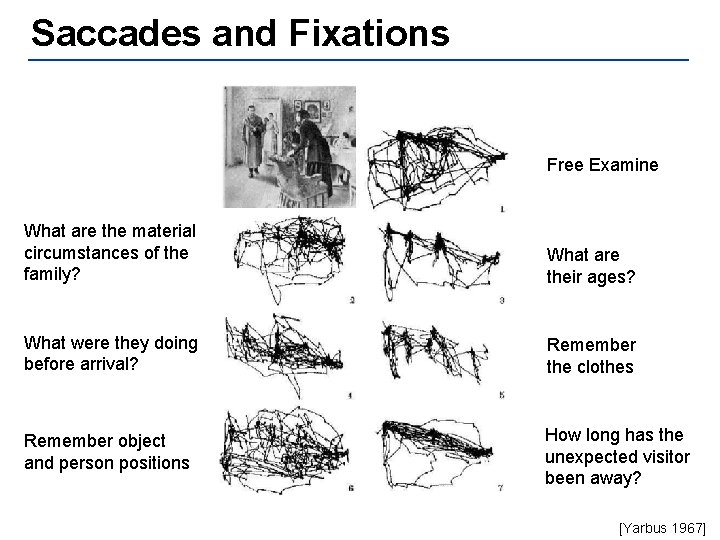

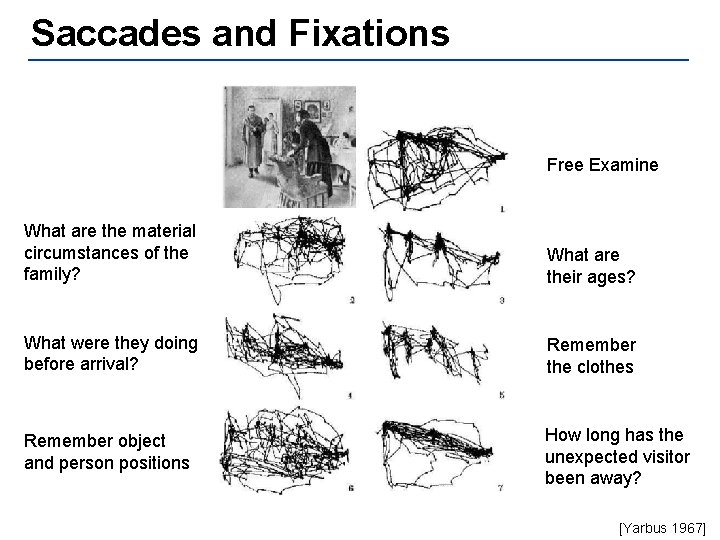

Saccades and Fixations

- Scope: 2 deg (poor spatial resolution beyond this)
- Duration: 50-500 ms (mean 250 ms)
- Length: 0.5 to 50 degrees (mean 4 to 12)
- Various types (e.g., regular, tracking, micro)

Saccades and Fixations

- Free examination
- What are the material circumstances of the family?
- What are their ages?
- What were they doing before arrival?
- Remember the clothes
- Remember object and person positions
- How long has the unexpected visitor been away?

[Yarbus 1967]

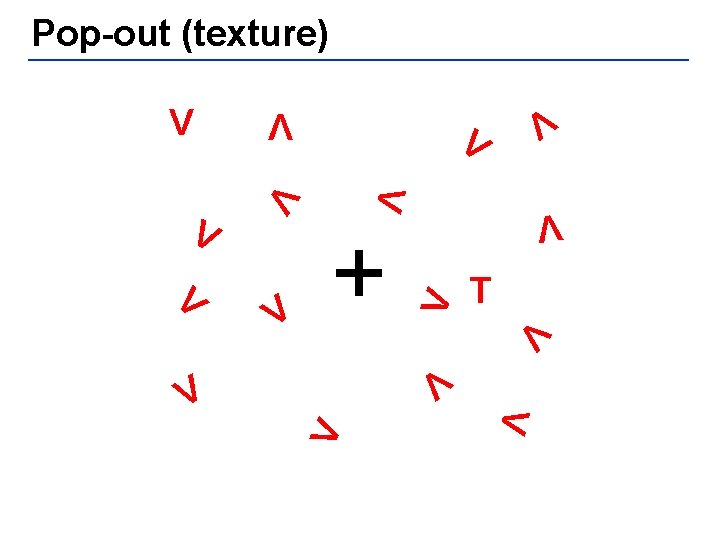

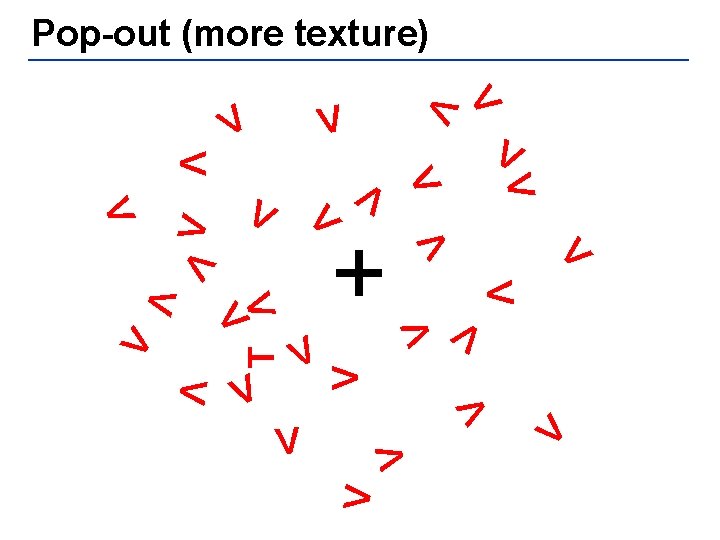

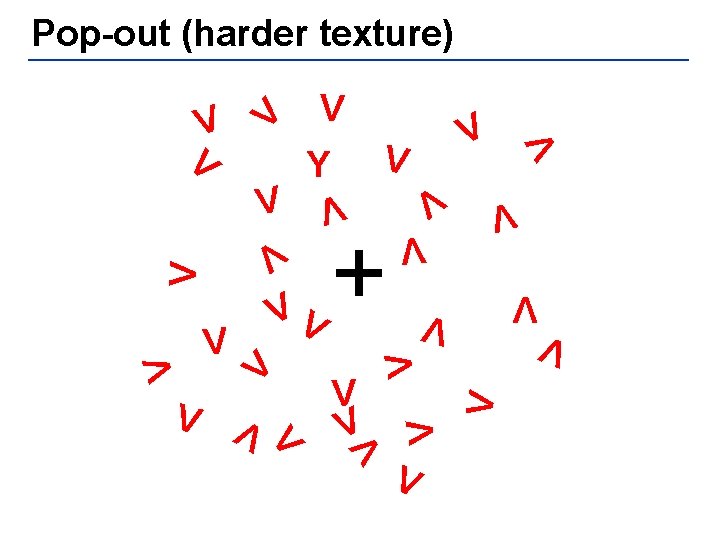

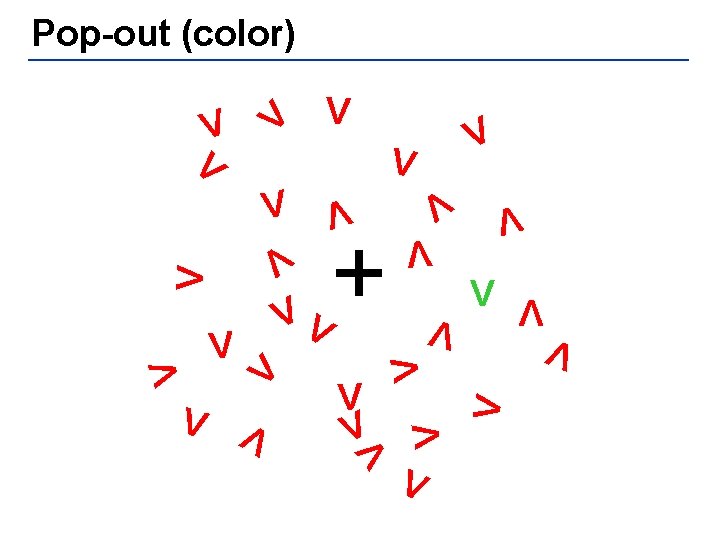

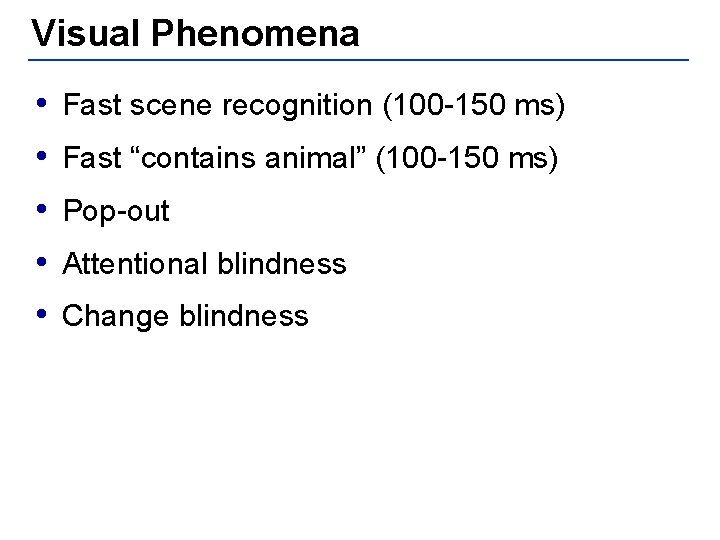

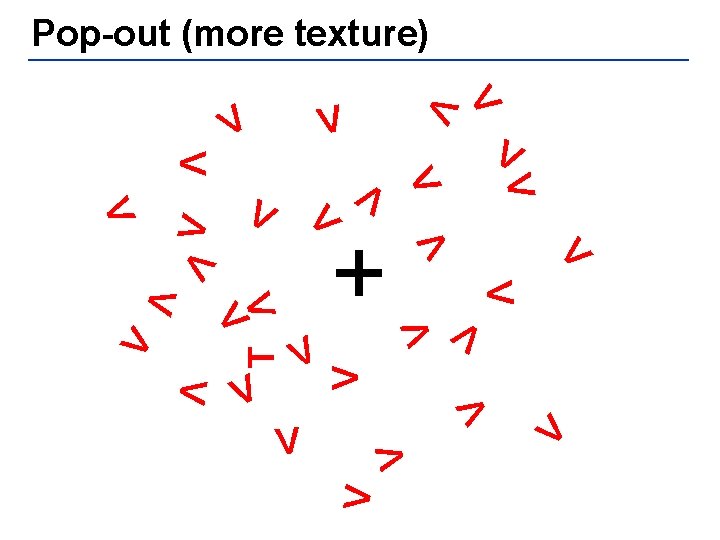

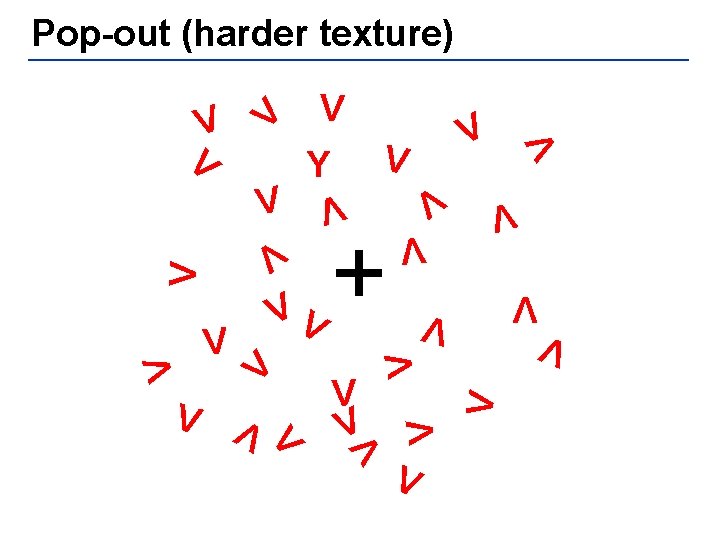

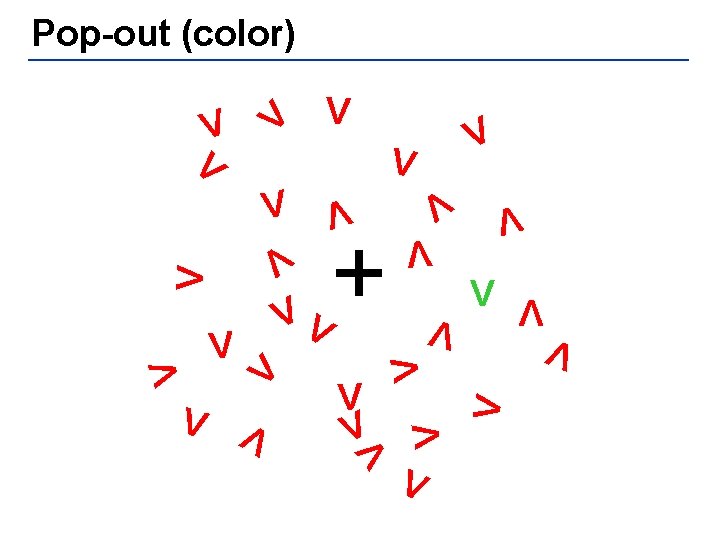

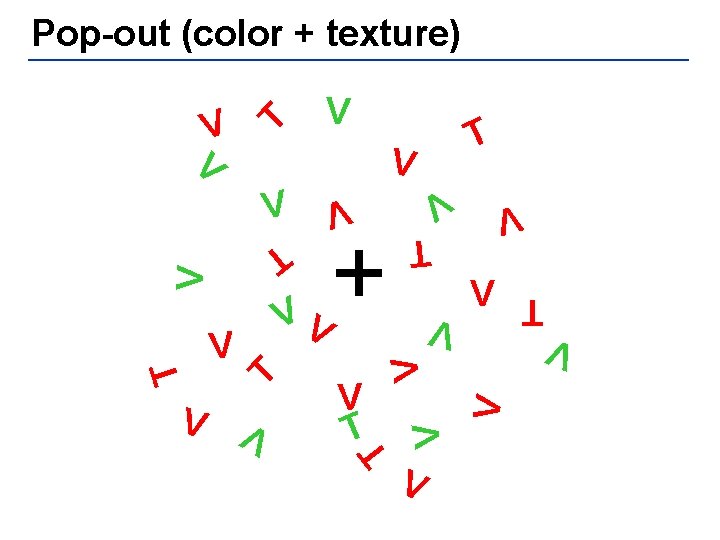

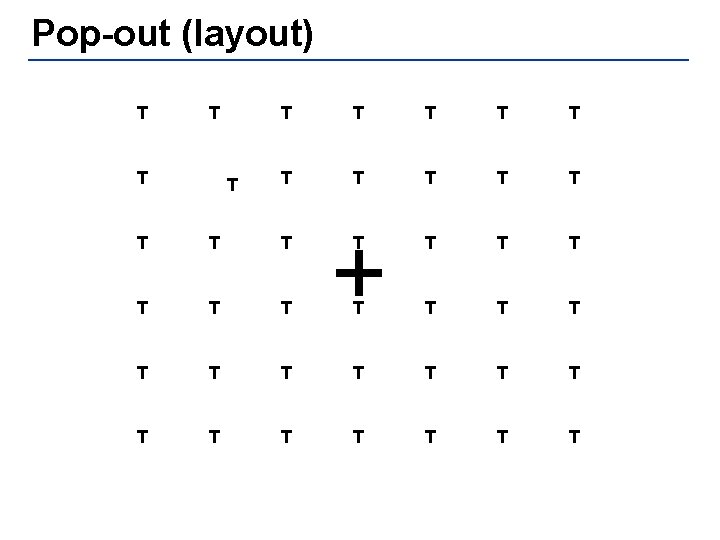

Visual Phenomena

- Fast scene recognition (100-150 ms)
- Fast “contains animal” (100-150 ms)
- Pop-out
- Attentional blindness
- Change blindness

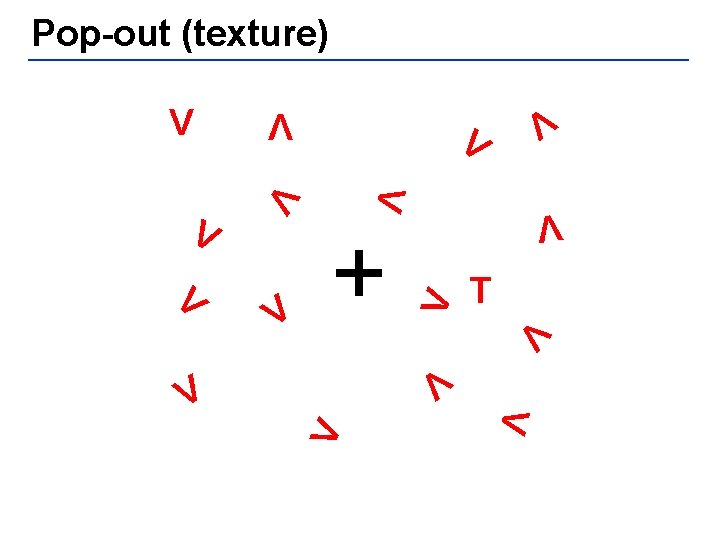

Pop-out (texture) V V + T V V V

Pop-out (more texture) V V V V V T V V V V + V V V V V

Pop-out (harder texture) V V V V VV + V V V Y V V V V V

Pop-out (color) V V V V VV + V V V V V

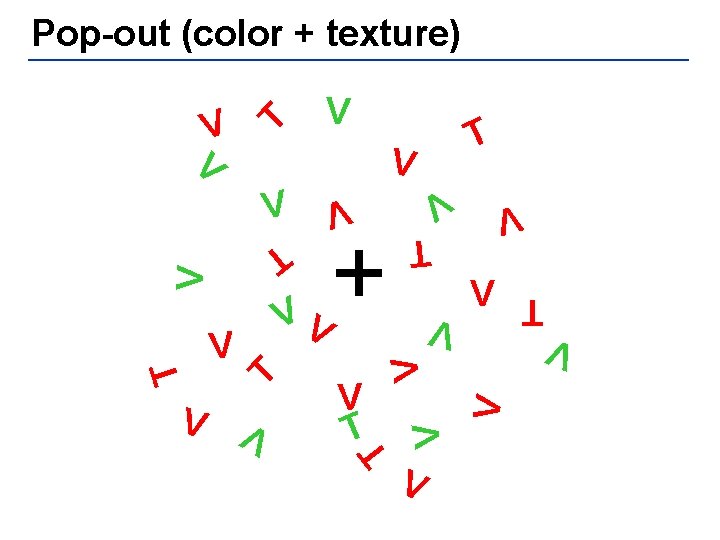

Pop-out (color + texture) T V V T V T V VV + T V V V

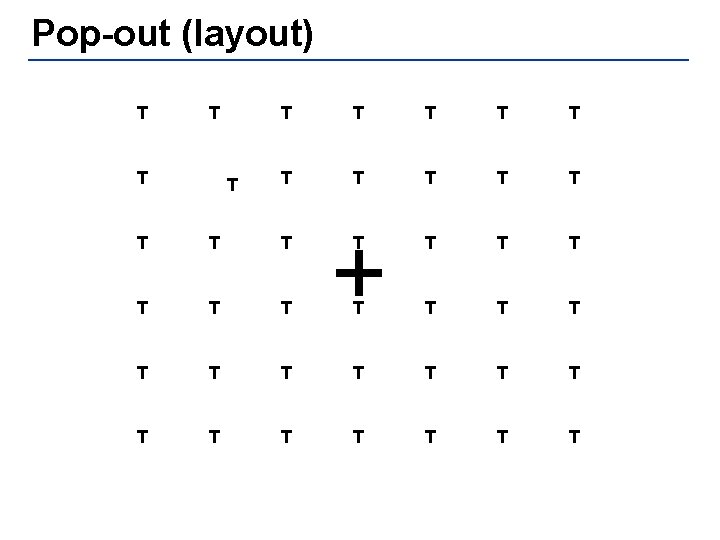

Pop-out (layout) T T T T T T + T T T T T

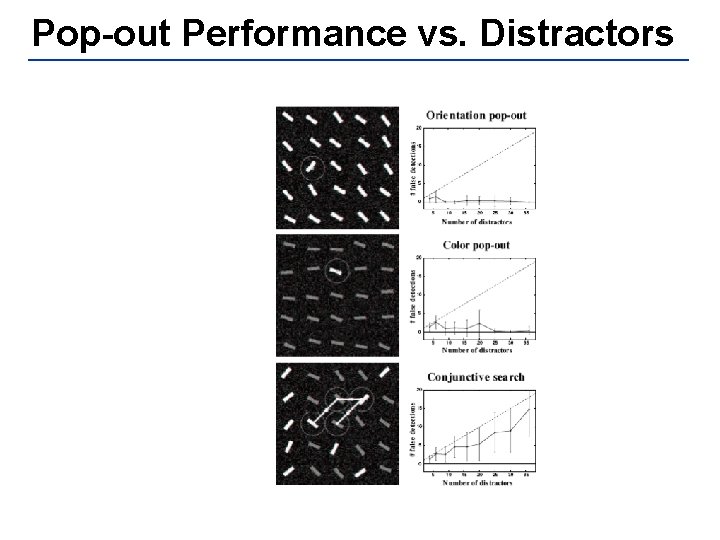

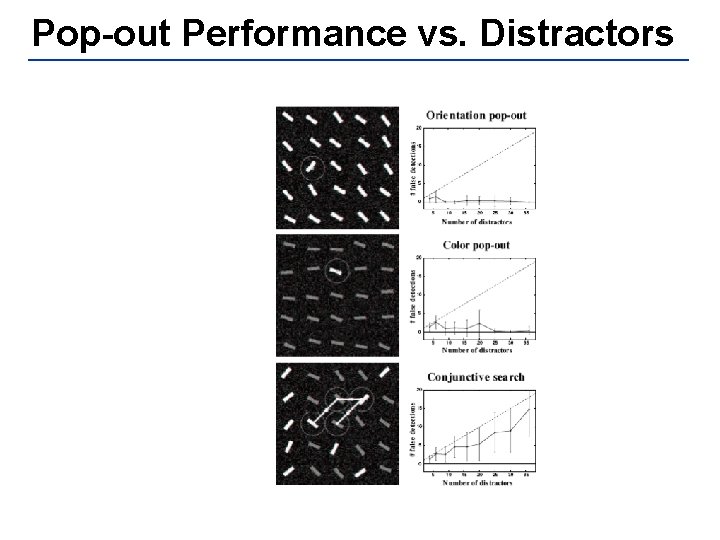

Pop-out Performance vs. Distractors

Demos: http://viscog.beckman.uiuc.edu/djs_lab/demos.html

![Model of Vision Pre-Attentive Stage [Rensink 2000] (figure from Itti 2002)](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-14.jpg)

Model of Vision Pre-Attentive Stage [Rensink 2000] (figure from Itti 2002)

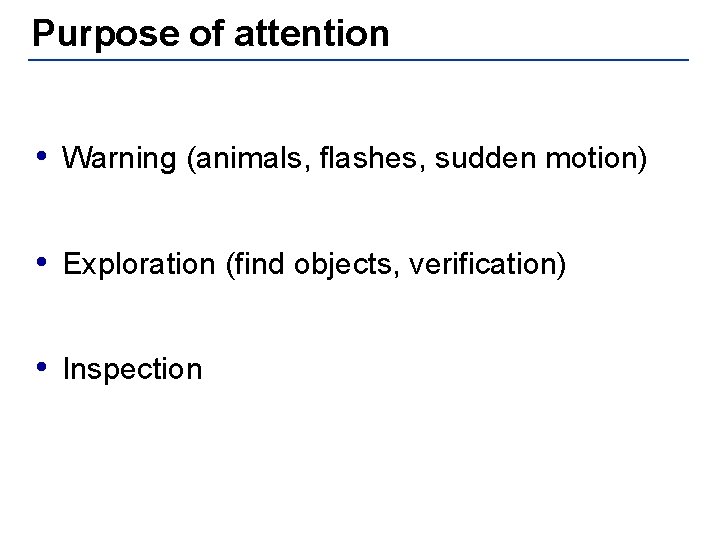

Purpose of attention

- Warning (animals, flashes, sudden motion)
- Exploration (find objects, verification)
- Inspection

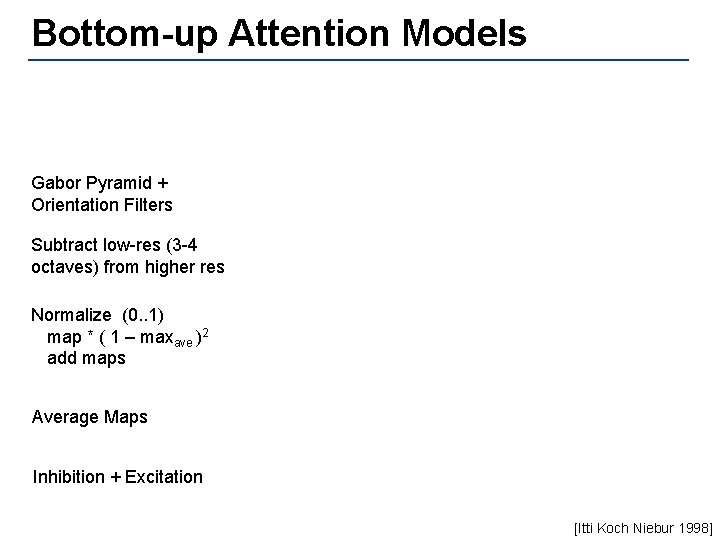

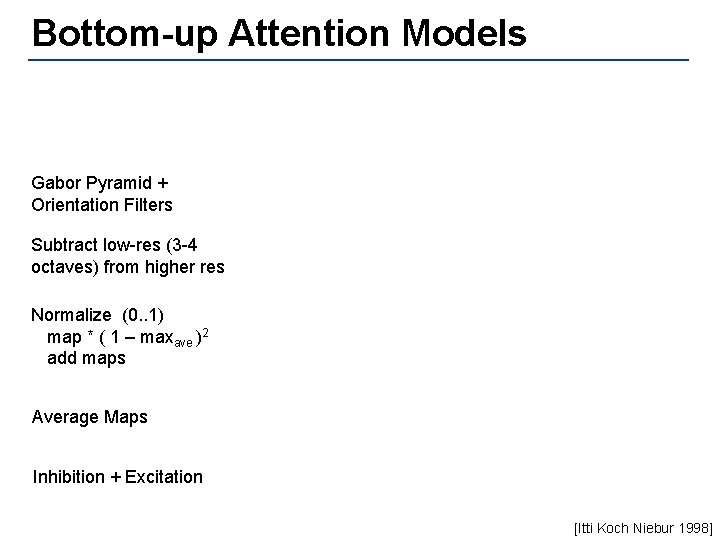

Bottom-up Attention Models

- Gabor pyramid + orientation filters
- Subtract low-res (3-4 octaves) from higher res
- Normalize (0..1), multiply map by (1 - maxave)^2, add maps
- Average maps
- Inhibition + excitation

[Itti Koch Niebur 1998]
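
The "subtract low-res from higher res" step can be sketched as a center-surround difference on a single map. This is only an illustration under stated assumptions: the map is a plain nested list, the pyramid is built by 2x2 block averaging rather than proper low-pass filtering, both dimensions are divisible by 2 to the power of `octaves`, and the names `downsample`, `upsample`, and `center_surround` are hypothetical, not from the paper.

```python
def downsample(img):
    """Halve resolution by averaging non-overlapping 2x2 blocks."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w)]
            for y in range(h)]


def upsample(img, factor):
    """Nearest-neighbour upsampling by an integer factor."""
    h, w = len(img) * factor, len(img[0]) * factor
    return [[img[y // factor][x // factor] for x in range(w)] for y in range(h)]


def center_surround(img, octaves=3):
    """Absolute difference between a map and a copy `octaves` levels coarser.

    Assumes both image dimensions are divisible by 2 ** octaves.
    """
    coarse = img
    for _ in range(octaves):
        coarse = downsample(coarse)
    surround = upsample(coarse, 2 ** octaves)
    return [[abs(c - s) for c, s in zip(crow, srow)]
            for crow, srow in zip(img, surround)]


# A 16x16 map with one bright pixel: the response is largest at that pixel.
image = [[0.0] * 16 for _ in range(16)]
image[5][9] = 1.0
response = center_surround(image, octaves=3)
```

In the full model this difference would be taken per feature channel and for several scale pairs before the maps are normalized and added.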

![Bottom-Up: Normalization](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-17.jpg)

Bottom-Up: Normalization

- Normalize map values to fixed range [0..1]
- Compute average local maximum m
- Multiply map by (1 - m)^2

[Itti Koch Niebur 1998]
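
The three normalization steps can be sketched as below. Two assumptions are worth flagging: the average `m_bar` is taken over the local maxima other than the global one (so a lone peak is kept rather than zeroed), and a local maximum is any pixel strictly greater than its 8 neighbours. The function names are illustrative.

```python
def local_maxima(m):
    """Return values of pixels strictly greater than all of their neighbours."""
    h, w = len(m), len(m[0])
    peaks = []
    for y in range(h):
        for x in range(w):
            neighbours = [m[j][i]
                          for j in range(max(0, y - 1), min(h, y + 2))
                          for i in range(max(0, x - 1), min(w, x + 2))
                          if (j, i) != (y, x)]
            if neighbours and all(m[y][x] > n for n in neighbours):
                peaks.append(m[y][x])
    return peaks


def normalize_map(m):
    """Rescale to [0..1], then multiply by (1 - m_bar)^2."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    if hi == lo:                       # flat map: nothing stands out
        return [[0.0] * len(row) for row in m]
    scaled = [[(v - lo) / (hi - lo) for v in row] for row in m]
    others = local_maxima(scaled)
    if 1.0 in others:                  # assumption: exclude the global maximum
        others.remove(1.0)
    m_bar = sum(others) / len(others) if others else 0.0
    weight = (1.0 - m_bar) ** 2
    return [[v * weight for v in row] for row in scaled]


# One isolated peak is preserved; four equal peaks suppress each other.
single = [[0.0] * 5 for _ in range(5)]
single[2][2] = 4.0
many = [[0.0] * 5 for _ in range(5)]
for y, x in [(1, 1), (1, 3), (3, 1), (3, 3)]:
    many[y][x] = 4.0
single_out = normalize_map(single)
many_out = normalize_map(many)
```

The effect is that a map with one strong response keeps its weight when maps are added, while a map with many comparable responses contributes little.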

![Bottom-Up: Predicted Fixations [Itti Koch Niebur 1998]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-18.jpg)

Bottom-Up: Predicted Fixations [Itti Koch Niebur 1998]

Updates to Bottom-Up Model

- Cross-orientation suppression
- Long-range contour interactions
- Eccentricity-dependent processing (e^-x)
  - Goal: better prediction of subsequent fixations

[Peters Iyer Itti Koch 2005]

![Experiments with Newer Model [Peters Iyer Itti Koch 2005]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-20.jpg)

Experiments with Newer Model [Peters Iyer Itti Koch 2005]

![Experiments with Newer Model: Normalized Scanpath Salience, Inter-observer Salience [Peters Iyer Itti Koch 2005]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-21.jpg)

Experiments with Newer Model Normalized Scanpath Salience Inter-observer Salience [Peters Iyer Itti Koch 2005]
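
As a rough sketch of the normalized scanpath salience score named on this slide: the model's saliency map is rescaled to zero mean and unit standard deviation, and the score is the mean of the rescaled values at the human fixation locations, so 0 corresponds to chance. The function name, the `(row, col)` fixation format, and the use of the population standard deviation are assumptions made for illustration.

```python
import math


def normalized_scanpath_salience(saliency, fixations):
    """Mean z-scored saliency at the fixated (row, col) locations."""
    values = [v for row in saliency for v in row]
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0.0:                     # flat map carries no information
        return 0.0
    return sum((saliency[y][x] - mean) / std for y, x in fixations) / len(fixations)


# Toy 2x2 map with a single salient pixel at (1, 1).
toy_map = [[0.0, 0.0], [0.0, 4.0]]
on_target = normalized_scanpath_salience(toy_map, [(1, 1)])
off_target = normalized_scanpath_salience(toy_map, [(0, 0)])
```

Fixations that land on salient pixels give a positive score, and fixations on below-average pixels give a negative one.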

![Almost No Benefit to More Complicated Models [Peters Iyer Itti Koch 2005]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-22.jpg)

Almost No Benefit to More Complicated Models [Peters Iyer Itti Koch 2005]

![“Eccentricity-Dependent Filtering” Helps No EDF [Peters Iyer Itti Koch 2005]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-23.jpg)

“Eccentricity-Dependent Filtering” Helps No EDF [Peters Iyer Itti Koch 2005]

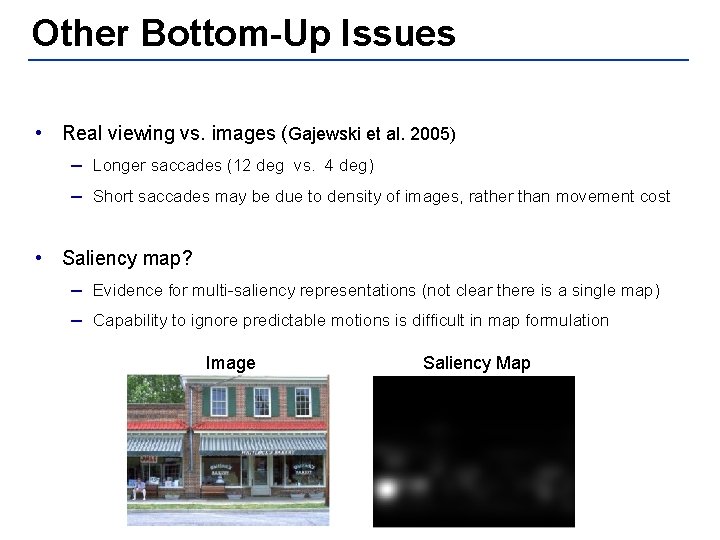

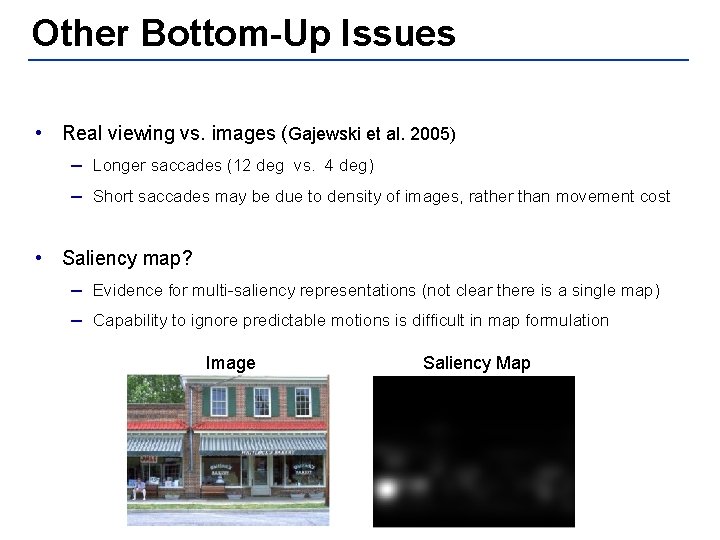

Other Bottom-Up Issues

- Real viewing vs. images (Gajewski et al. 2005)
  - Longer saccades (12 deg vs. 4 deg)
  - Short saccades may be due to density of images, rather than movement cost
- Saliency map?
  - Evidence for multi-saliency representations (not clear there is a single map)
  - Capability to ignore predictable motions is difficult in map formulation

(Figure labels: Image, Saliency Map)

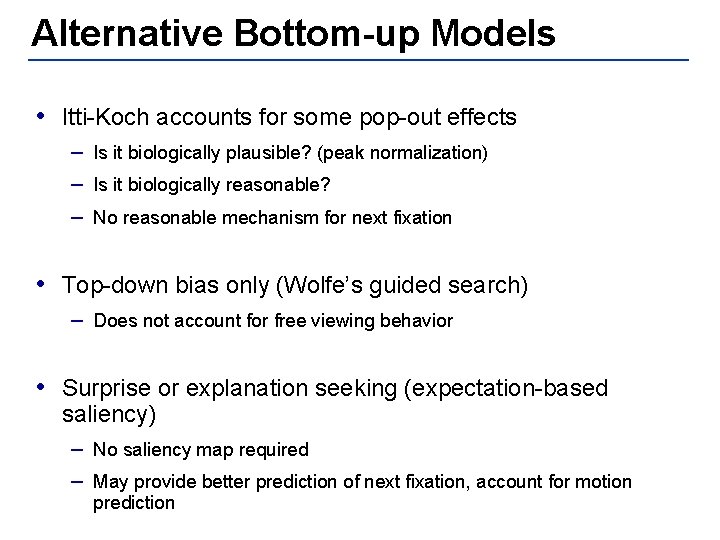

Alternative Bottom-up Models

- Itti-Koch accounts for some pop-out effects
  - Is it biologically plausible? (peak normalization)
  - Is it biologically reasonable?
  - No reasonable mechanism for next fixation
- Top-down bias only (Wolfe’s guided search)
  - Does not account for free viewing behavior
- Surprise or explanation seeking (expectation-based saliency)
  - No saliency map required
  - May provide better prediction of next fixation, account for motion prediction

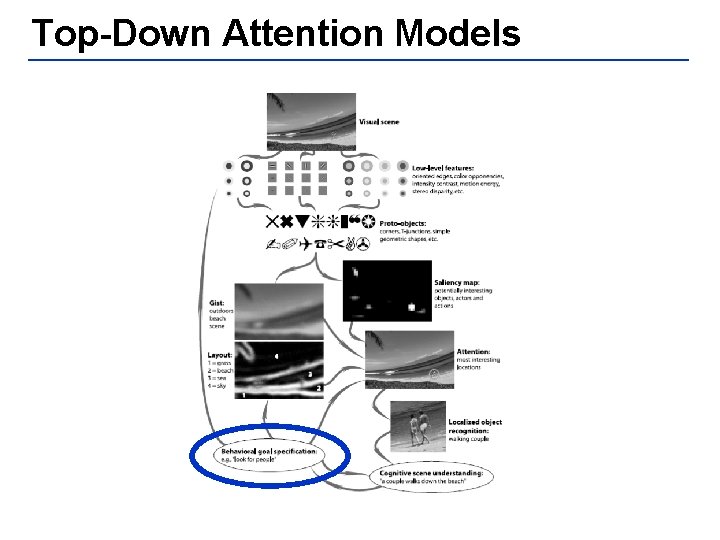

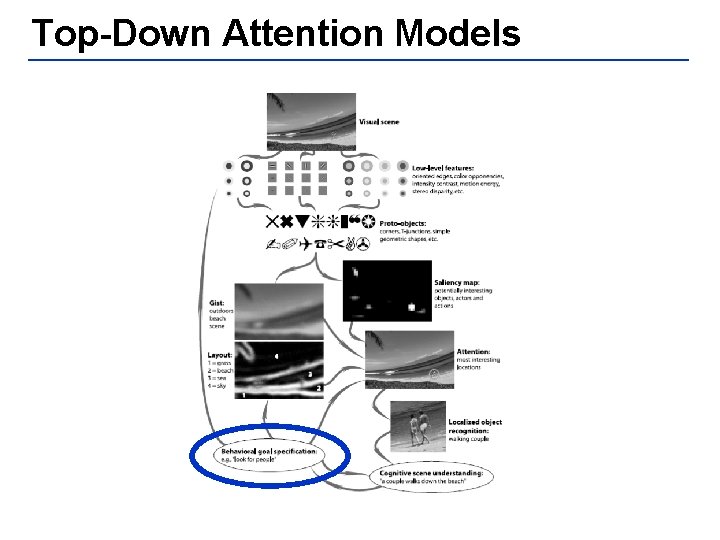

Top-Down Attention Models

Top-Down Attention Models

- Feature weighting
  - Verbal
  - Visual
- Location prior
  - From memory of scene (direct or indirect)
  - From scene information and semantics

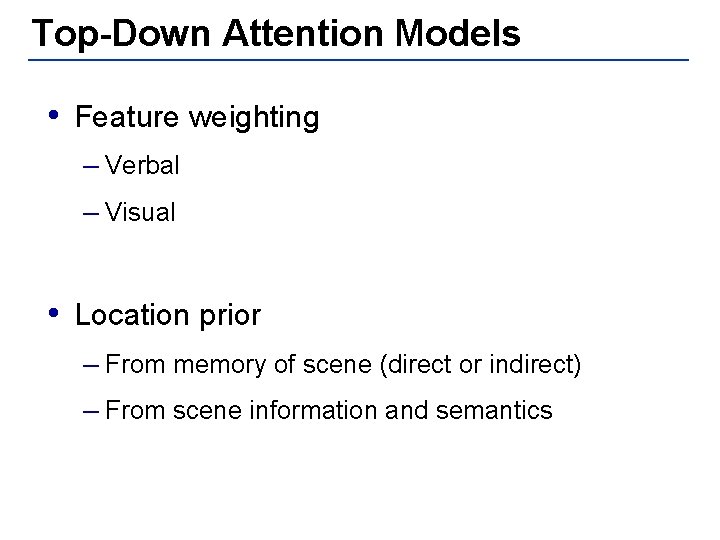

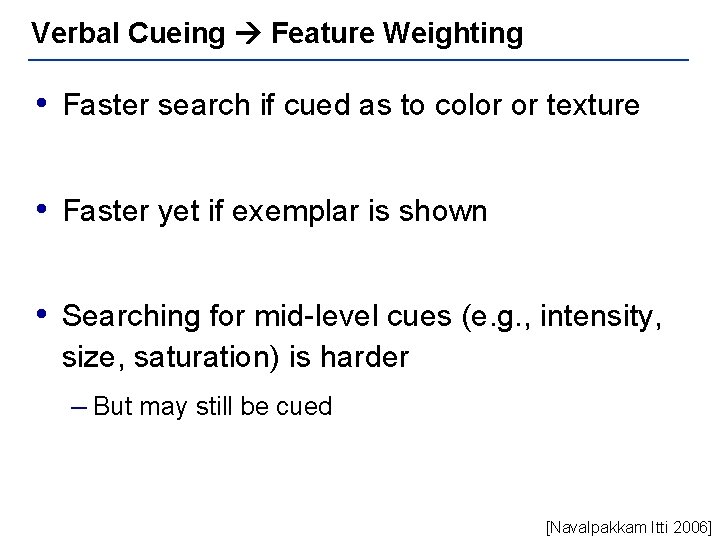

Verbal Cueing Feature Weighting

- Faster search if cued as to color or texture
- Faster yet if exemplar is shown
- Searching for mid-level cues (e.g., intensity, size, saturation) is harder
  - But may still be cued

[Navalpakkam Itti 2006]

![Verbal Cueing Feature Weighting [Navalpakkam Itti 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-29.jpg)

Verbal Cueing Feature Weighting [Navalpakkam Itti 2006]

![Verbal Cueing Feature Weighting [Navalpakkam Itti 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-30.jpg)

Verbal Cueing Feature Weighting [Navalpakkam Itti 2006]

![Verbal Cueing Feature Weighting [Navalpakkam Itti 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-31.jpg)

Verbal Cueing Feature Weighting [Navalpakkam Itti 2006]

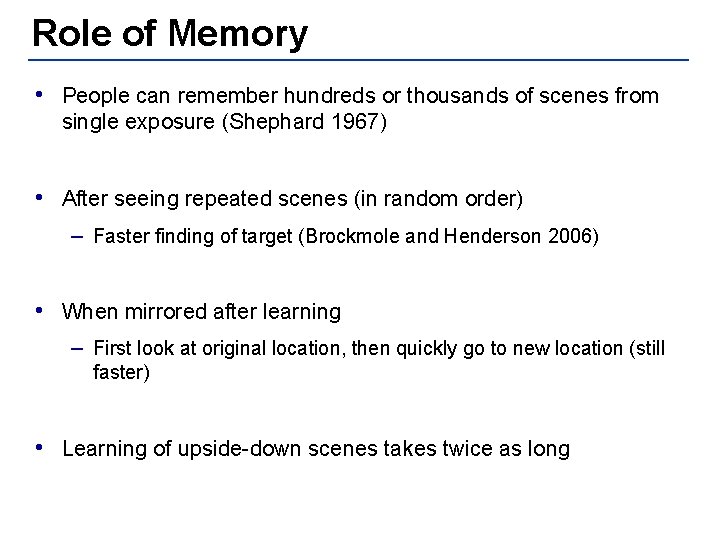

Role of Memory

- People can remember hundreds or thousands of scenes from single exposure (Shepard 1967)
- After seeing repeated scenes (in random order)
  - Faster finding of target (Brockmole and Henderson 2006)
- When mirrored after learning
  - First look at original location, then quickly go to new location (still faster)
- Learning of upside-down scenes takes twice as long

![Role of Memory [Brockmole and Henderson 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-33.jpg)

Role of Memory [Brockmole and Henderson 2006]

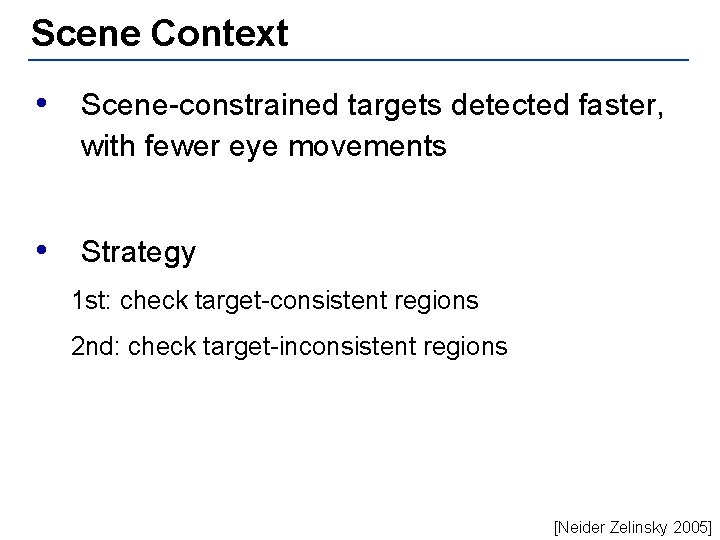

Scene Context

- Scene-constrained targets detected faster, with fewer eye movements
- Strategy
  - 1st: check target-consistent regions
  - 2nd: check target-inconsistent regions

[Neider Zelinsky 2005]

![Scene Context: Target Presence, Target Absence [Neider Zelinsky 2005]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-35.jpg)

Scene Context Target Presence Target Absence [Neider Zelinsky 2005]

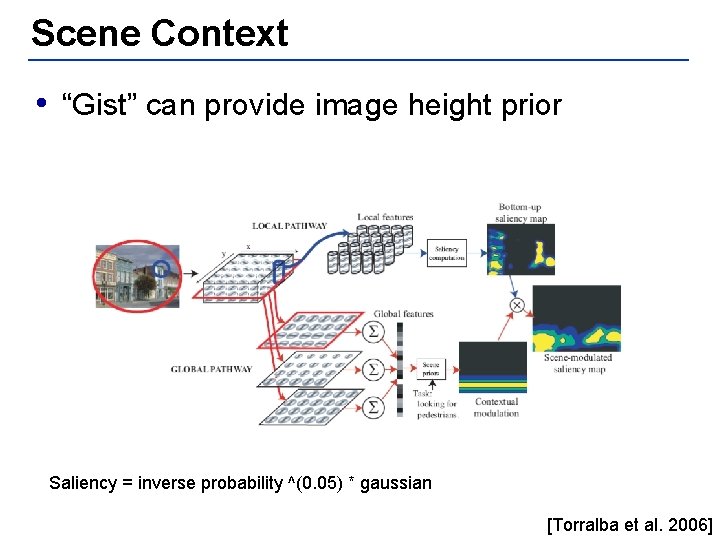

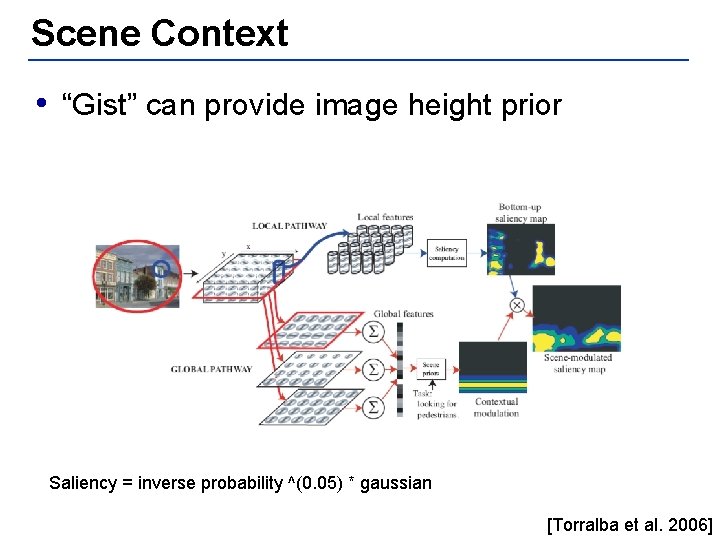

Scene Context

- “Gist” can provide image height prior

Saliency = (inverse probability)^0.05 * Gaussian

[Torralba et al. 2006]
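
A minimal sketch of the combination written on this slide, assuming `local_prob` holds a strictly positive probability for each pixel's local features and that the gist-based prior is a single unnormalized Gaussian over image row. The names `contextual_saliency`, `mu_y`, and `sigma_y` are illustrative and not taken from the paper.

```python
import math


def contextual_saliency(local_prob, mu_y, sigma_y, gamma=0.05):
    """(1 / p) ** gamma times a Gaussian prior on the row index."""
    result = []
    for y, row in enumerate(local_prob):
        # Height prior: largest on the row where the target is expected.
        prior = math.exp(-0.5 * ((y - mu_y) / sigma_y) ** 2)
        result.append([(p ** -gamma) * prior for p in row])
    return result


# Two equally rare pixels: one on the expected row (2), one far from it (0).
local_prob = [[0.5] * 3 for _ in range(5)]
local_prob[0][1] = 0.01
local_prob[2][1] = 0.01
sal = contextual_saliency(local_prob, mu_y=2.0, sigma_y=1.0)
```

With an exponent as small as 0.05 the rarity term varies slowly, so in this toy case even a common pixel on the expected row outscores a rare pixel two standard deviations away from it.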

![Scene Context Gist [Torralba et al. 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-37.jpg)

Scene Context Gist [Torralba et al. 2006]

![Scene Context [Torralba et al. 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-38.jpg)

Scene Context [Torralba et al. 2006]

![Scene Context [Torralba et al. 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-39.jpg)

Scene Context [Torralba et al. 2006]

![Scene Context [Torralba et al. 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-40.jpg)

Scene Context [Torralba et al. 2006]

![Scene Context [Torralba et al. 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-41.jpg)

Scene Context [Torralba et al. 2006]

![Scene Context: People Search [Torralba et al. 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-42.jpg)

Scene Context: People Search [Torralba et al. 2006]

![Scene Context: Object Search [Torralba et al. 2006]](https://slidetodoc.com/presentation_image_h/b9316758052b20bc03c8d6c7480a5685/image-43.jpg)

Scene Context: Object Search [Torralba et al. 2006]

Bottom-up + Top-down Attention

- Method 1: Weight individual features
- Method 2: Saliency .* Bias
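
The two combination methods can be contrasted in a few lines. This is a sketch under assumptions: feature maps and weights are dictionaries keyed by made-up feature names, maps are nested lists of equal size, ".*" is read as an element-wise product, and `weight_features`, `apply_bias`, and `most_salient` are hypothetical helper names.

```python
def weight_features(feature_maps, weights):
    """Method 1: weighted sum of per-feature maps."""
    first = next(iter(feature_maps.values()))
    h, w = len(first), len(first[0])
    return [[sum(weights[name] * fmap[y][x] for name, fmap in feature_maps.items())
             for x in range(w)]
            for y in range(h)]


def apply_bias(saliency, bias):
    """Method 2: element-wise product of a saliency map and a location bias."""
    return [[s * b for s, b in zip(srow, brow)]
            for srow, brow in zip(saliency, bias)]


def most_salient(m):
    """(row, col) of the largest value in the map."""
    return max(((y, x) for y in range(len(m)) for x in range(len(m[0]))),
               key=lambda p: m[p[0]][p[1]])


features = {
    "color": [[1.0, 0.0], [0.0, 0.0]],
    "orientation": [[0.0, 0.0], [0.0, 1.0]],
}
# Method 1: cueing orientation moves the winner from the color item to the oriented one.
color_cued = most_salient(weight_features(features, {"color": 0.8, "orientation": 0.2}))
orient_cued = most_salient(weight_features(features, {"color": 0.2, "orientation": 0.8}))

# Method 2: a location prior that discounts the top-left corner.
saliency = [[1.0, 0.0], [0.0, 0.9]]
bias = [[0.1, 1.0], [1.0, 1.0]]
biased_winner = most_salient(apply_bias(saliency, bias))
```

Method 1 changes which features count before the maps are merged, while Method 2 leaves the bottom-up map intact and reweights locations afterwards.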

Conclusions

- Artificial static scenes and pop-out well explained by existing models
- Little recent progress in bottom-up models (stuck with Itti-Koch model)
- Only simplistic scene information modeled

Sources

- Saliency
  - Itti, Koch, Niebur (1998). A model of saliency-based visual attention for rapid scene analysis.
  - Itti, Koch (2001). Computational Modelling of Visual Attention.
  - Itti (2002). Modeling Primate Visual Attention.
  - Itti (2002). Visual Attention.
  - Navalpakkam, Arbib, Itti (2004). Attention and Scene Understanding.
  - Peters, Iyer, Itti, Koch (2005). Components of bottom-up gaze allocation in natural images.
- Role of memory
  - Chun, Jiang (1998). Contextual cueing: implicit learning and memory of visual context guides spatial attention.
  - Chun, Jiang (2003). Implicit, long-term spatial contextual memory.
  - Brockmole, Henderson (2006). Recognition and attention guidance during contextual cueing in real-world scenes: Evidence from eye movements.
- Top-Down Attention
  - Neider, Zelinsky (2005). Scene context guides eye movements during visual search.
  - Navalpakkam, Itti (2006). Top-down attention selection is fine grained.
  - Torralba, Oliva, Castelhano, Henderson (2006). Contextual guidance of eye movements and attention in real-world scenes: the role of global features on object search.

Sources

- Others (used)
  - Rensink, O’Regan, Clark (1997). To see or not to see: the need for attention to perceive changes in scenes.
  - Liversedge, Findlay (2000). Saccadic eye movements and cognition.
  - Rensink (2000). The dynamic representation of scenes.
  - Delorme, Rousselet, Mace, Fabre-Thorpe (2004). Interaction of top-down and bottom-up processing in the fast visual analysis of natural scenes.
  - Gajewski, Pearson, Mack, Bartlett, Henderson (2005). Human gaze control in real world search.
  - http://www.diku.dk/~panic/eyegaze/node13.html
- Others (not used but potentially interesting)
  - Itti, Koch, Braun (2000). Revisiting spatial vision: toward a unifying model.
  - Epstein (2005). The cortical basis of visual scene processing.
  - Baldi, Itti (2005). Attention: Bits versus Wows.