Geometric Context from a Single Image Derek Hoiem

Geometric Context from a Single Image. Derek Hoiem, Alexei A. Efros, Martial Hebert, Carnegie Mellon University. June 20, 2017. Presented by Hao Yang. 1

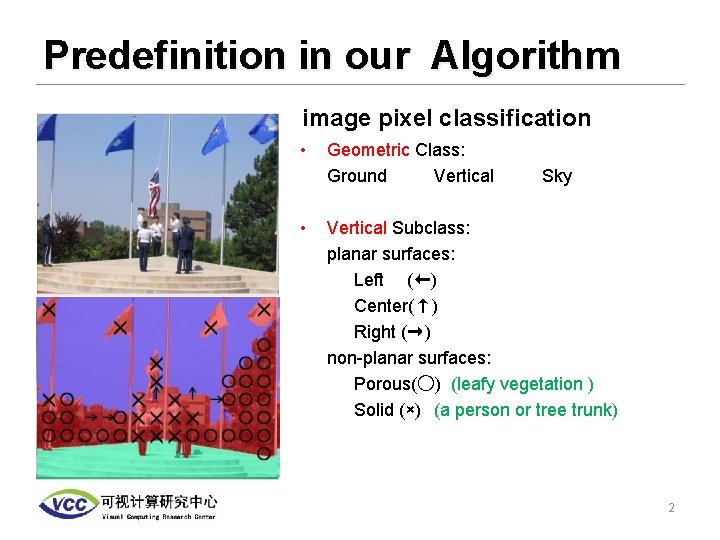

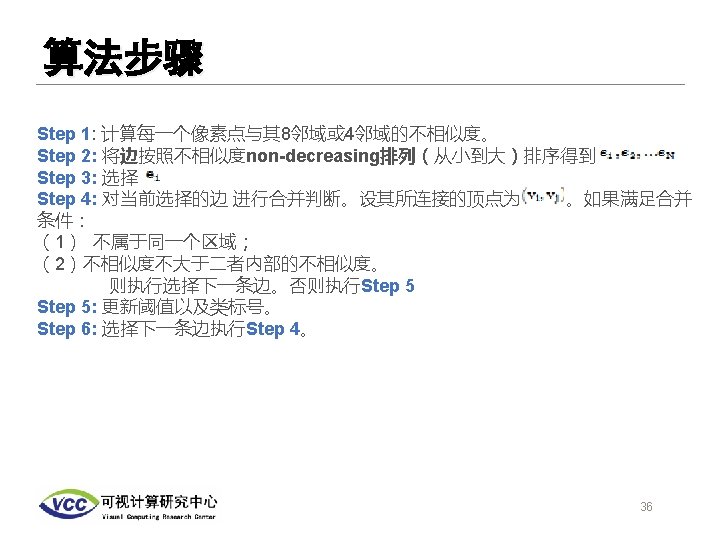

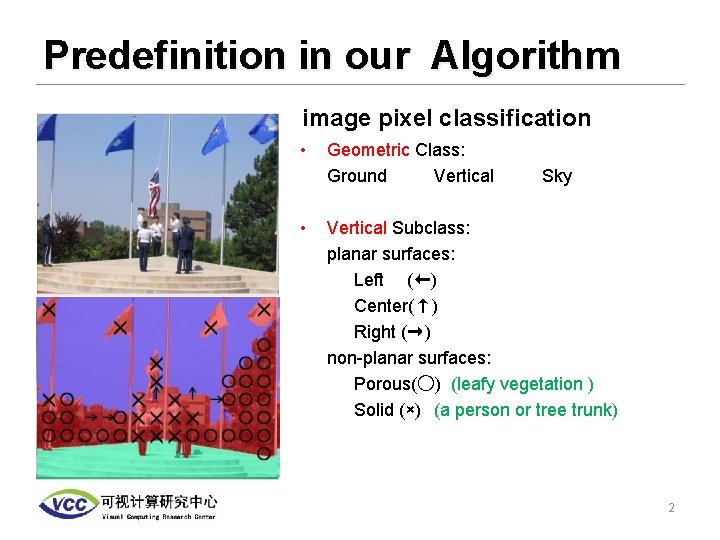

Definitions in our Algorithm: image pixel classification
• Geometric class: Ground, Vertical, Sky
• Vertical subclass, planar surfaces: Left (←), Center (↑), Right (→)
• Vertical subclass, non-planar surfaces: Porous (○), e.g. leafy vegetation; Solid (×), e.g. a person or tree trunk. 2

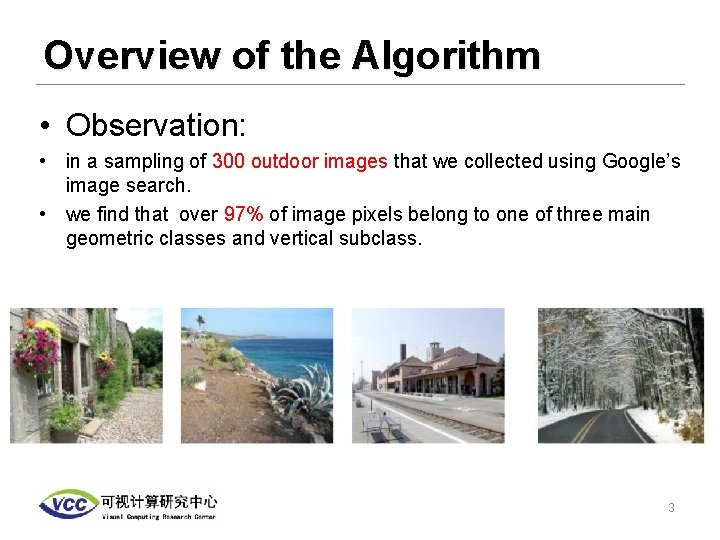

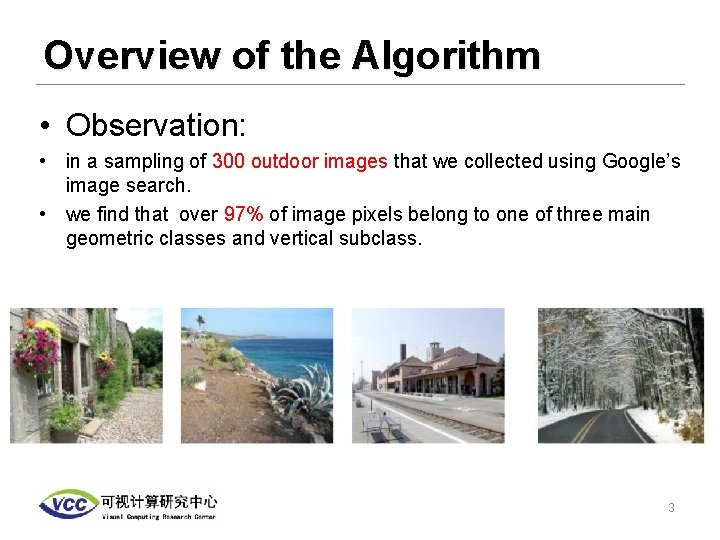

Overview of the Algorithm • Observation: in a sample of 300 outdoor images that we collected using Google’s image search, we find that over 97% of image pixels belong to one of the three main geometric classes (including the vertical subclasses). 3

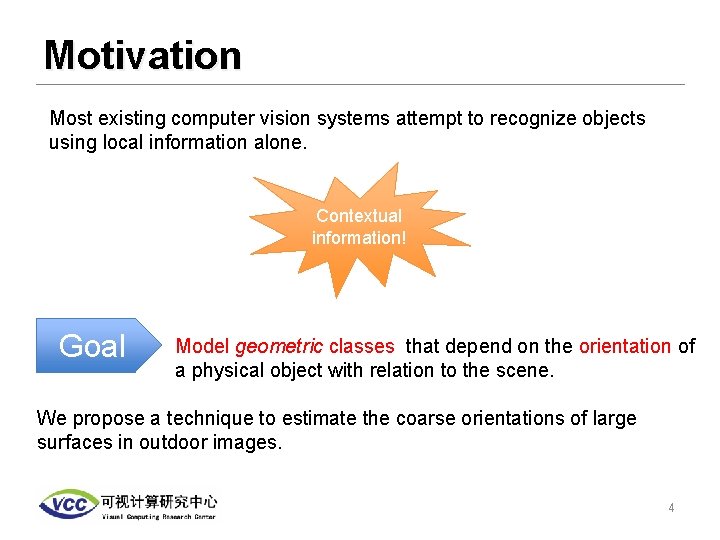

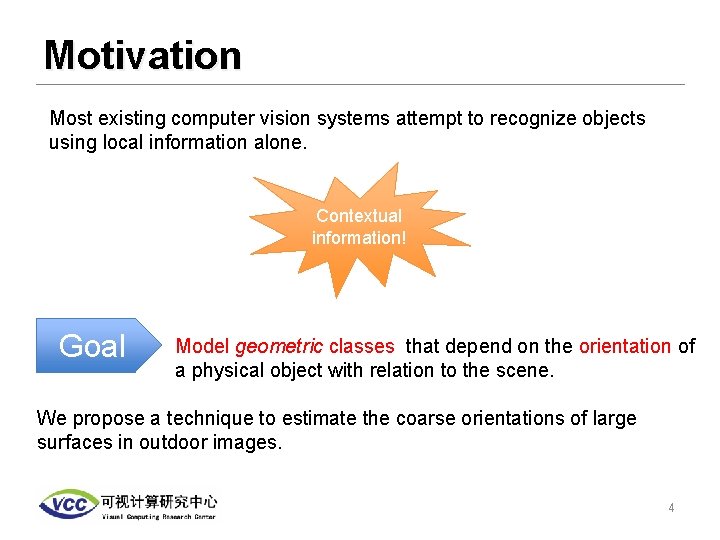

Motivation: Most existing computer vision systems attempt to recognize objects using local information alone. Contextual information! Goal: Model geometric classes that depend on the orientation of a physical object relative to the scene. We propose a technique to estimate the coarse orientations of large surfaces in outdoor images. 4

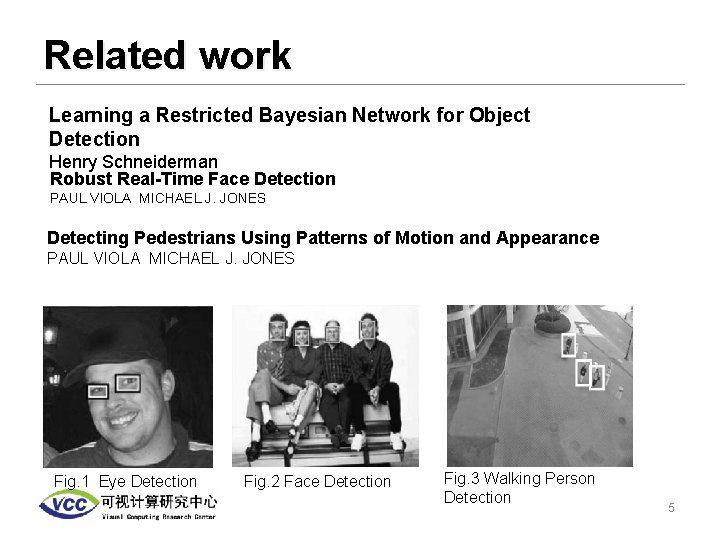

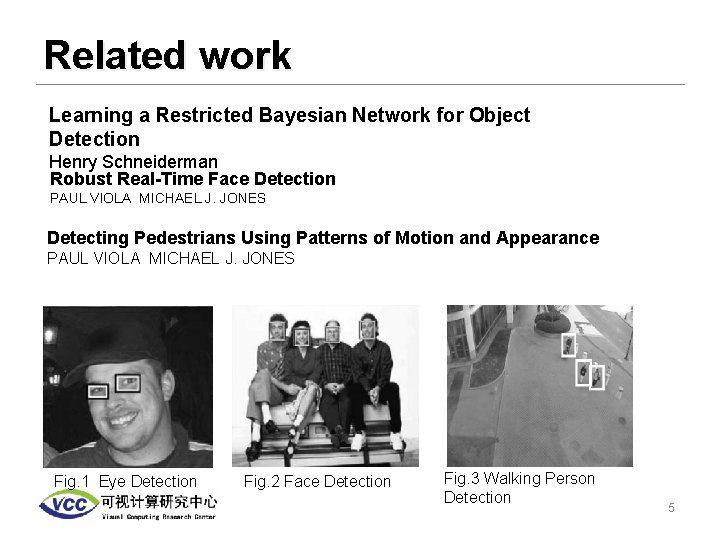

Related work
• Learning a Restricted Bayesian Network for Object Detection (Henry Schneiderman)
• Robust Real-Time Face Detection (Paul Viola, Michael J. Jones)
• Detecting Pedestrians Using Patterns of Motion and Appearance (Paul Viola, Michael J. Jones)
Figures: Fig. 1 Eye detection; Fig. 2 Face detection; Fig. 3 Walking person detection. 5

Related work: look outside the box. [Figure: from local information to global information, toward the level of human performance.] 6

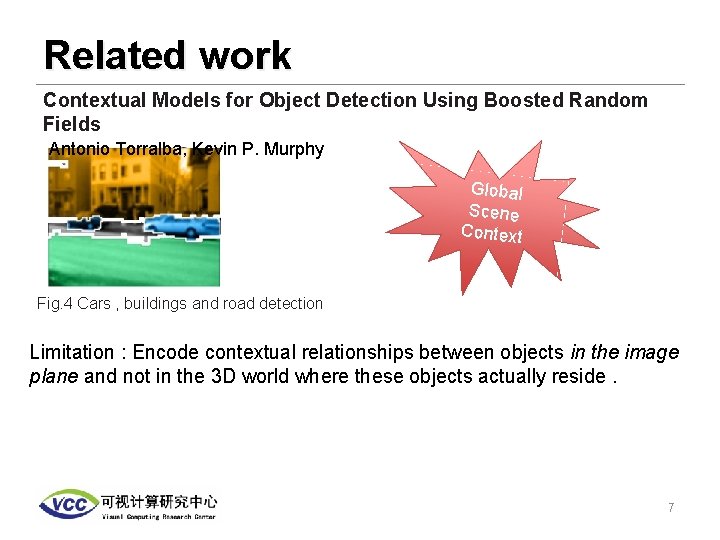

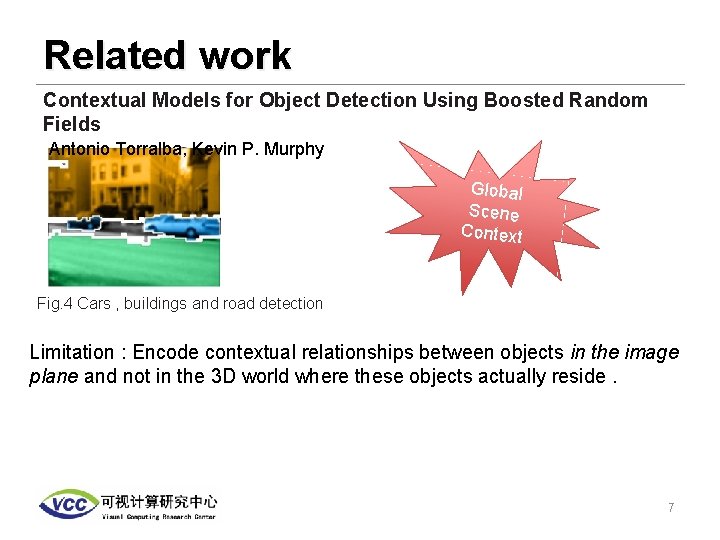

Related work: Contextual Models for Object Detection Using Boosted Random Fields (Antonio Torralba, Kevin P. Murphy). Global scene context. Fig. 4: Cars, buildings, and road detection. Limitation: encodes contextual relationships between objects in the image plane, not in the 3D world where these objects actually reside. 7

Outdoor image • Focus on outdoor images: interesting, challenging, and lacking human-imposed Manhattan structure. 8

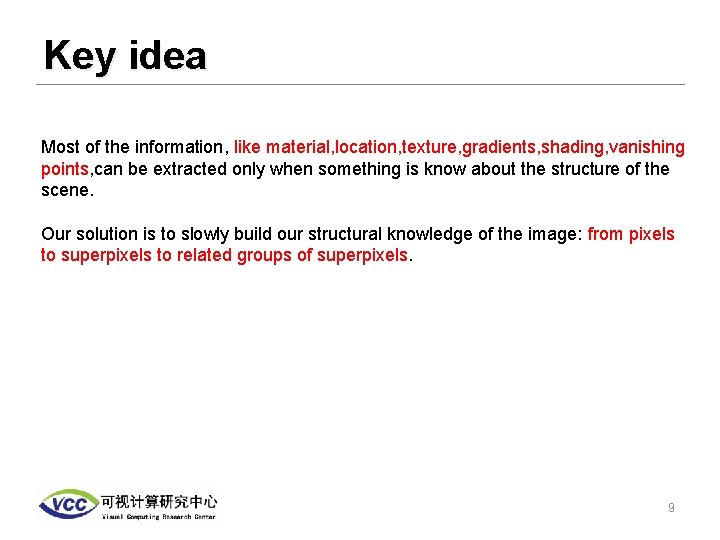

Key idea: Most of the information, such as material, location, texture, gradients, shading, and vanishing points, can be extracted only when something is known about the structure of the scene. Our solution is to build our structural knowledge of the image gradually: from pixels to superpixels to related groups of superpixels. 9

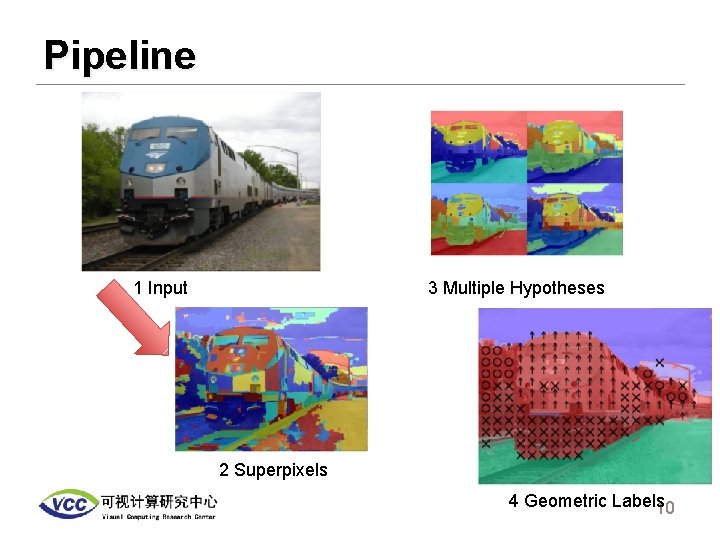

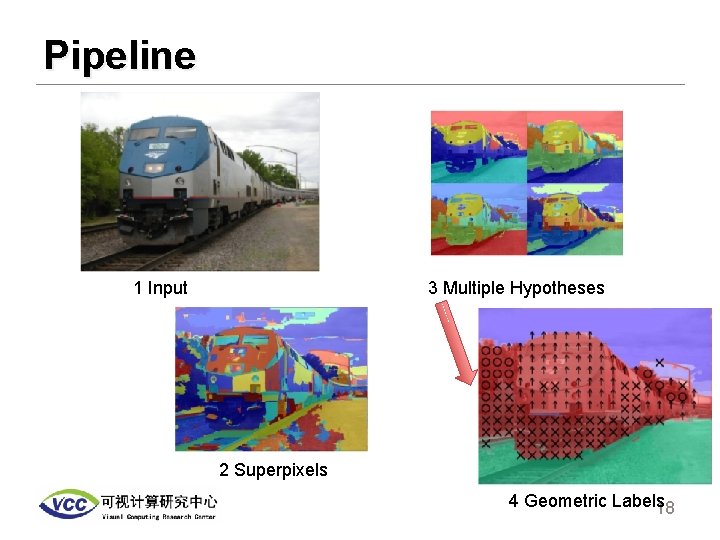

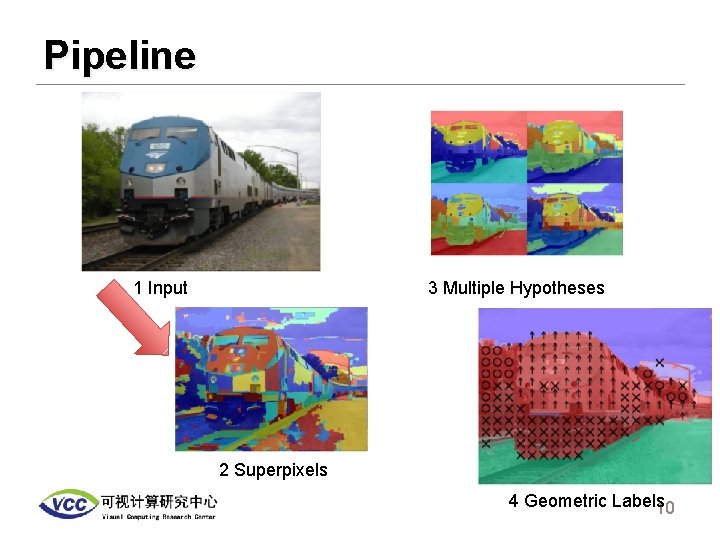

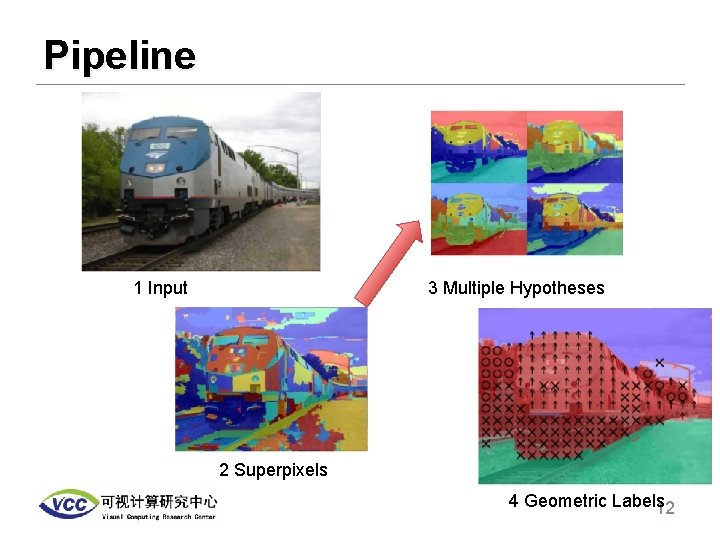

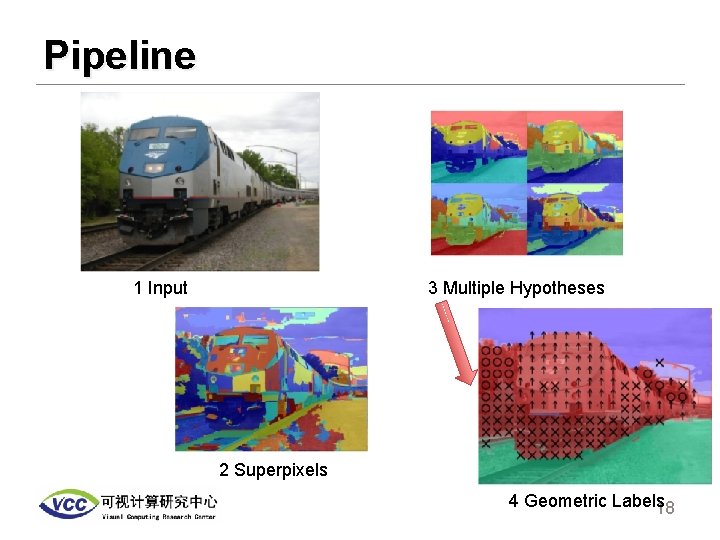

Pipeline: 1 Input → 2 Superpixels → 3 Multiple Hypotheses → 4 Geometric Labels. 10

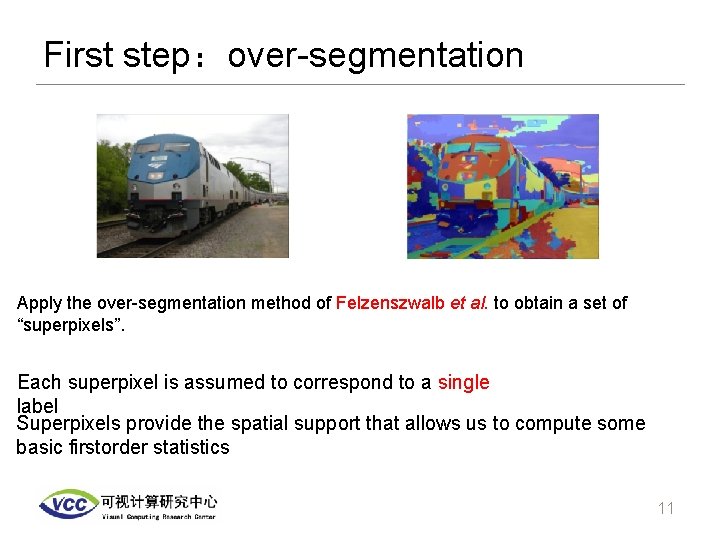

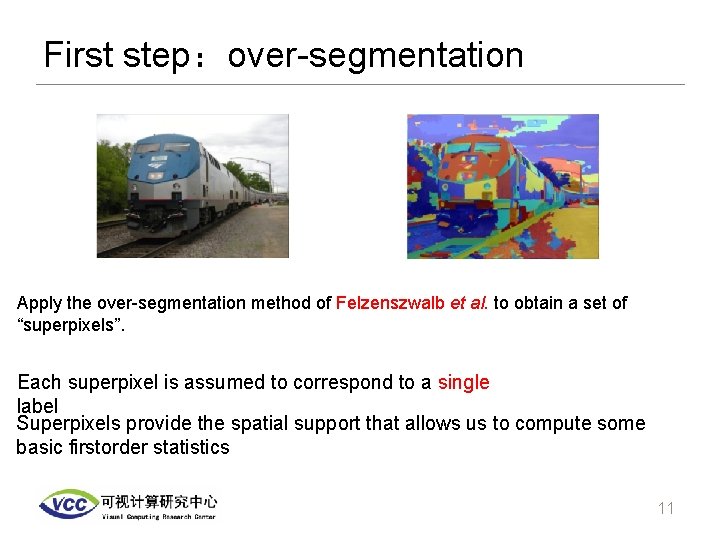

First step: over-segmentation. Apply the over-segmentation method of Felzenszwalb et al. to obtain a set of “superpixels”. Each superpixel is assumed to correspond to a single label. Superpixels provide the spatial support that allows us to compute some basic first-order statistics. 11
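The idea behind graph-based over-segmentation can be sketched in a few lines. The code below is a simplified illustration in the spirit of the Felzenszwalb-style method, not the implementation used in the talk: it works on a tiny grayscale grid, skips the smoothing and minimum-size post-processing steps, and the function name `segment` and the parameter `k` are assumptions made for this example.

```python
def segment(image, k=1.0):
    """Over-segment a 2D grayscale grid (list of rows) into labeled components."""
    h, w = len(image), len(image[0])
    parent = list(range(h * w))      # union-find forest over pixels
    size = [1] * (h * w)             # pixels per component (valid at roots)
    internal = [0.0] * (h * w)       # largest edge weight merged into a component

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]   # path halving
            a = parent[a]
        return a

    # 4-connected edges weighted by absolute intensity difference.
    edges = []
    for r in range(h):
        for c in range(w):
            if c + 1 < w:
                edges.append((abs(image[r][c] - image[r][c + 1]), r * w + c, r * w + c + 1))
            if r + 1 < h:
                edges.append((abs(image[r][c] - image[r + 1][c]), r * w + c, (r + 1) * w + c))
    edges.sort()

    for weight, a, b in edges:
        ra, rb = find(a), find(b)
        if ra == rb:
            continue
        # Merge only if the edge is not much heavier than the variation
        # already present inside both components (k sets the tolerance).
        if weight <= min(internal[ra] + k / size[ra], internal[rb] + k / size[rb]):
            parent[rb] = ra
            size[ra] += size[rb]
            internal[ra] = max(internal[ra], internal[rb], weight)

    return [[find(r * w + c) for c in range(w)] for r in range(h)]


# Two flat patches separated by a strong edge yield two superpixels.
toy = [[0, 0, 9, 9],
       [0, 0, 9, 9]]
labels = segment(toy, k=1.0)
```

A larger `k` tolerates heavier edges and therefore produces fewer, larger superpixels.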

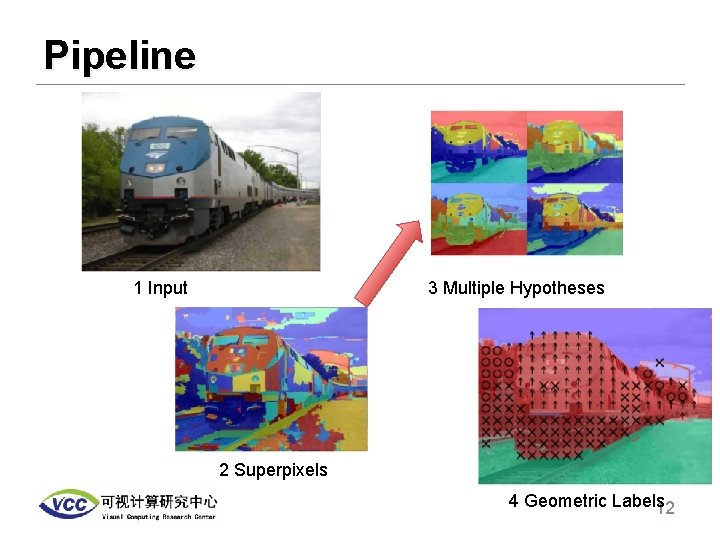

Pipeline: 1 Input → 2 Superpixels → 3 Multiple Hypotheses → 4 Geometric Labels. 12

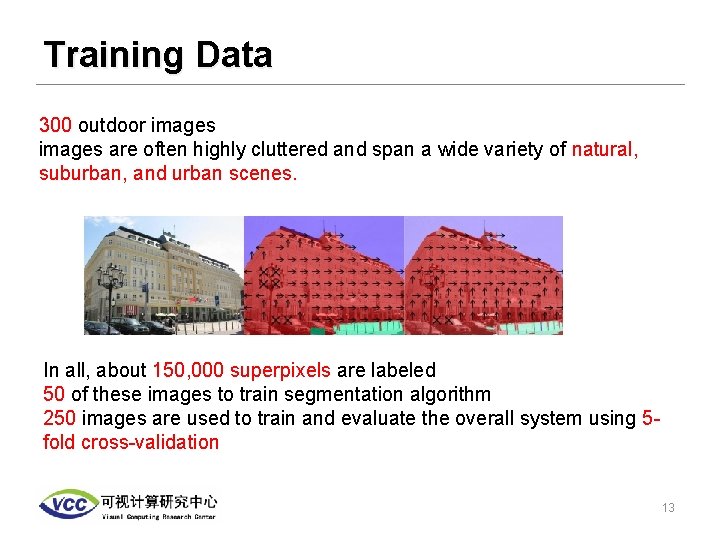

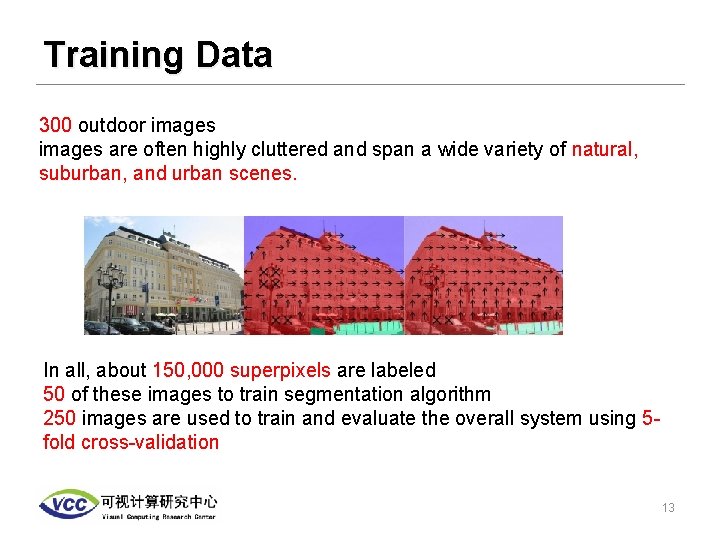

Training Data: The 300 outdoor images are often highly cluttered and span a wide variety of natural, suburban, and urban scenes. In all, about 150,000 superpixels are labeled. 50 of these images are used to train the segmentation algorithm. The remaining 250 images are used to train and evaluate the overall system using 5-fold cross-validation. 13

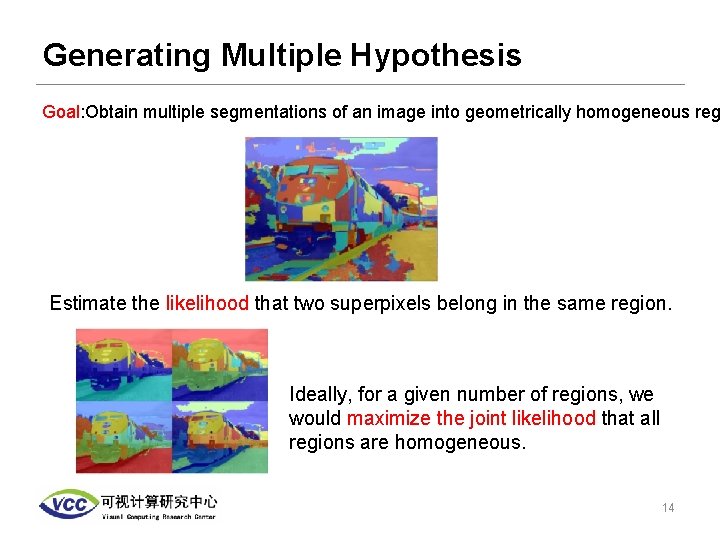

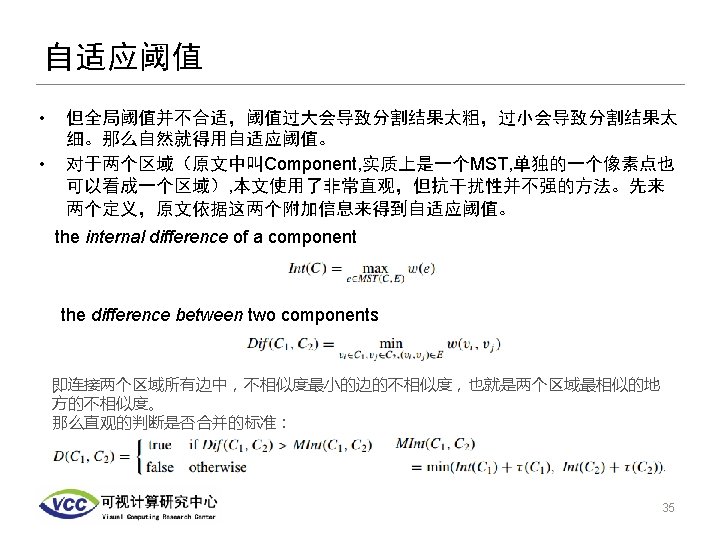

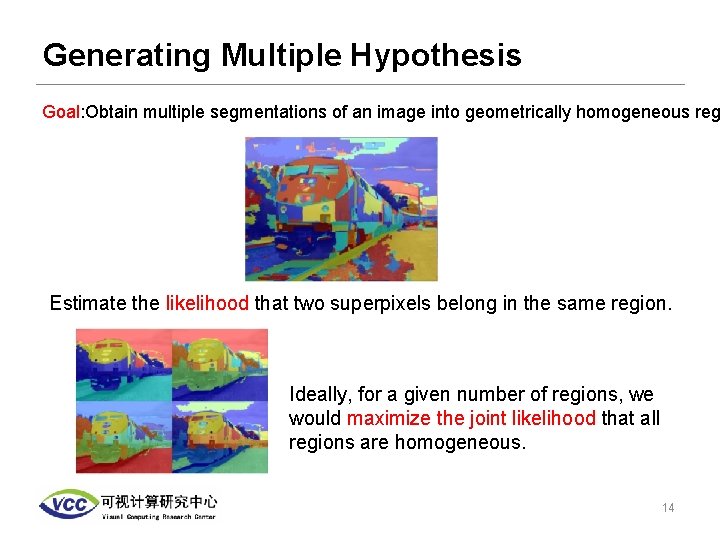

Generating Multiple Hypotheses. Goal: Obtain multiple segmentations of an image into geometrically homogeneous regions. Estimate the likelihood that two superpixels belong in the same region. Ideally, for a given number of regions, we would maximize the joint likelihood that all regions are homogeneous. 14

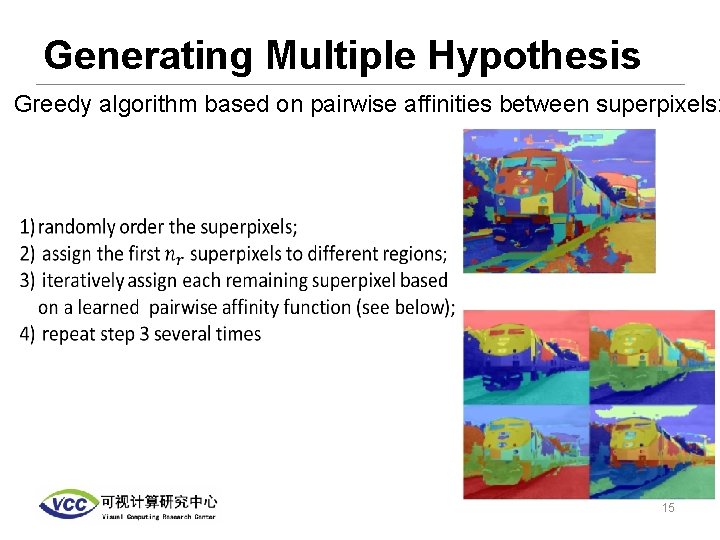

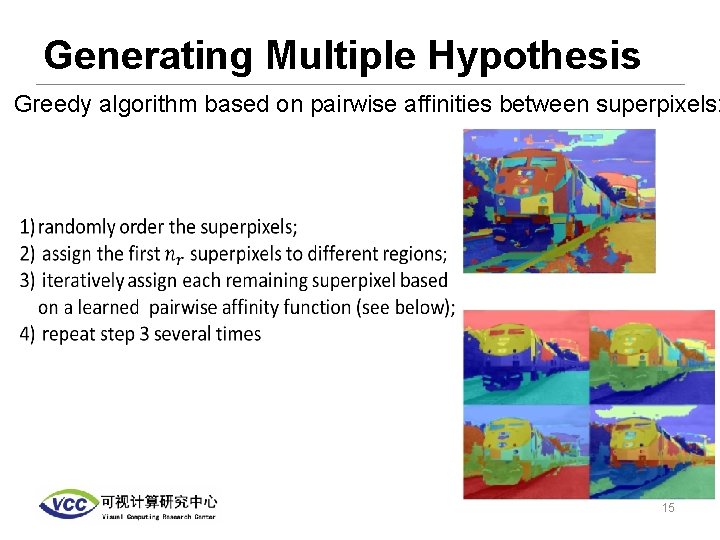

Generating Multiple Hypotheses: a greedy algorithm based on pairwise affinities between superpixels. 15
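One plausible reading of the greedy grouping is sketched below: shuffle the superpixels, seed one region with each of the first few, then assign every remaining superpixel to the region it fits best. This is a simplified illustration rather than the exact procedure from the talk. The function `group_superpixels`, the callback `same_label_prob`, and the toy features are all assumptions introduced for this example.

```python
import math
import random


def group_superpixels(n_superpixels, n_regions, same_label_prob, seed=0):
    """Greedily partition superpixel indices into n_regions groups."""
    rng = random.Random(seed)
    order = list(range(n_superpixels))
    rng.shuffle(order)

    # The first n_regions superpixels in the random order seed the regions.
    regions = [[s] for s in order[:n_regions]]

    for s in order[n_regions:]:
        def mean_log_affinity(region):
            # Clamp to avoid log(0) for very dissimilar pairs.
            logs = [math.log(max(same_label_prob(s, t), 1e-9)) for t in region]
            return sum(logs) / len(logs)

        # Attach s to the region whose members it resembles most on average.
        max(regions, key=mean_log_affinity).append(s)
    return regions


# Toy affinity: superpixels with similar 1-D features are likely same-label.
features = [0.0, 0.1, 0.2, 5.0, 5.1, 5.2]
affinity = lambda i, j: math.exp(-abs(features[i] - features[j]))

# Different region counts give different segmentation hypotheses.
hypotheses = [group_superpixels(len(features), n, affinity) for n in (2, 3)]
```

Because the seeds are random, a single run can be poor; producing several hypotheses with different region counts is what lets later stages weigh them against each other.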

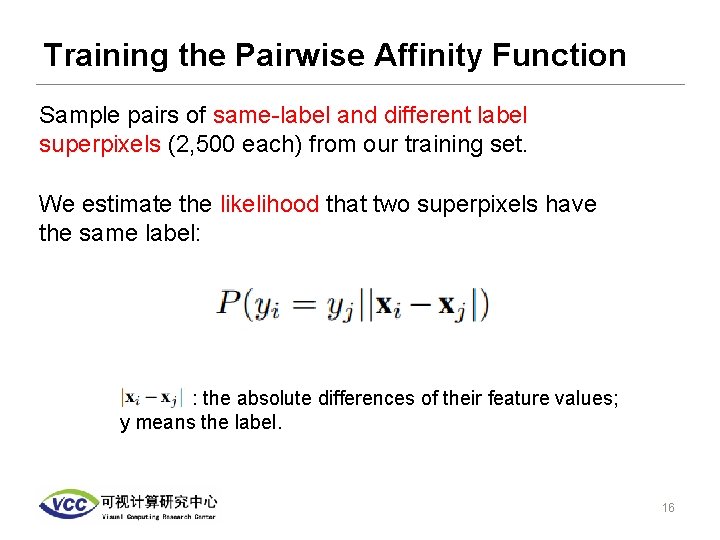

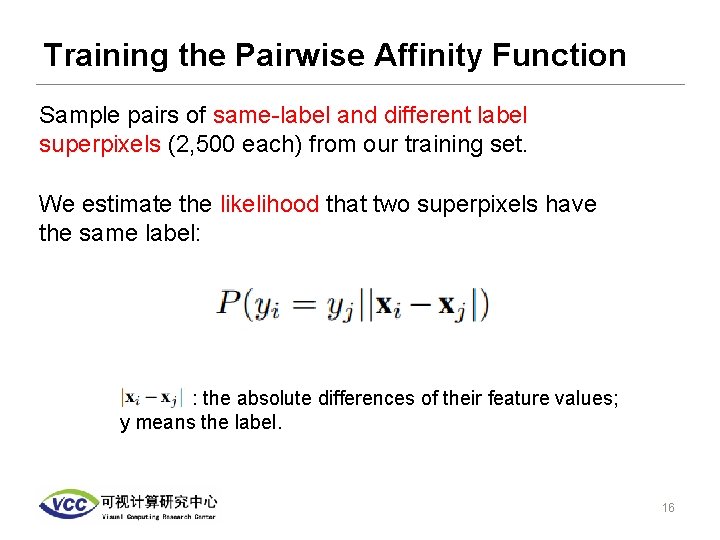

Training the Pairwise Affinity Function: Sample pairs of same-label and different-label superpixels (2,500 each) from our training set. We estimate the likelihood that two superpixels have the same label, $P(y_i = y_j \mid |x_i - x_j|)$, where $|x_i - x_j|$ is the absolute difference of their feature values and $y$ is the label. 16
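To make the estimate concrete, the sketch below learns a same-label probability from sampled pairs using per-feature histograms of absolute feature differences, combined as a sum of log-likelihood ratios. This naive histogram estimator is an assumption chosen for brevity and is not claimed to be the learner used in the talk. `fit_affinity`, `n_bins`, and `max_diff` are hypothetical names, and equal numbers of same-label and different-label pairs are assumed, so the prior cancels.

```python
import math


def fit_affinity(same_pairs, diff_pairs, n_bins=4, max_diff=1.0):
    """Each pair is (features_a, features_b). Returns prob_same(a, b)."""
    n_feat = len(same_pairs[0][0])

    def bin_of(d):
        return min(int(d / max_diff * n_bins), n_bins - 1)

    def histogram(pairs):
        # Add-one smoothing keeps every bin probability non-zero.
        counts = [[1.0] * n_bins for _ in range(n_feat)]
        for a, b in pairs:
            for k in range(n_feat):
                counts[k][bin_of(abs(a[k] - b[k]))] += 1
        return [[c / sum(row) for c in row] for row in counts]

    p_same = histogram(same_pairs)
    p_diff = histogram(diff_pairs)

    def prob_same(a, b):
        llr = 0.0
        for k in range(n_feat):
            j = bin_of(abs(a[k] - b[k]))
            llr += math.log(p_same[k][j] / p_diff[k][j])
        return 1.0 / (1.0 + math.exp(-llr))   # logistic of the summed ratio

    return prob_same


# Same-label pairs have small feature differences, different-label pairs large.
same = [([0.1], [0.15]), ([0.8], [0.82]), ([0.5], [0.45])]
diff = [([0.1], [0.9]), ([0.2], [0.8]), ([0.0], [0.7])]
prob_same = fit_affinity(same, diff)
```

The returned function can serve directly as the pairwise affinity when grouping superpixels into regions.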

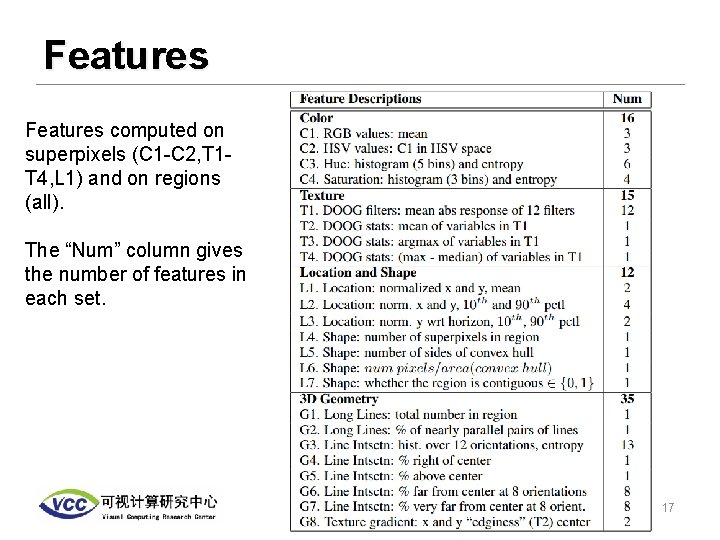

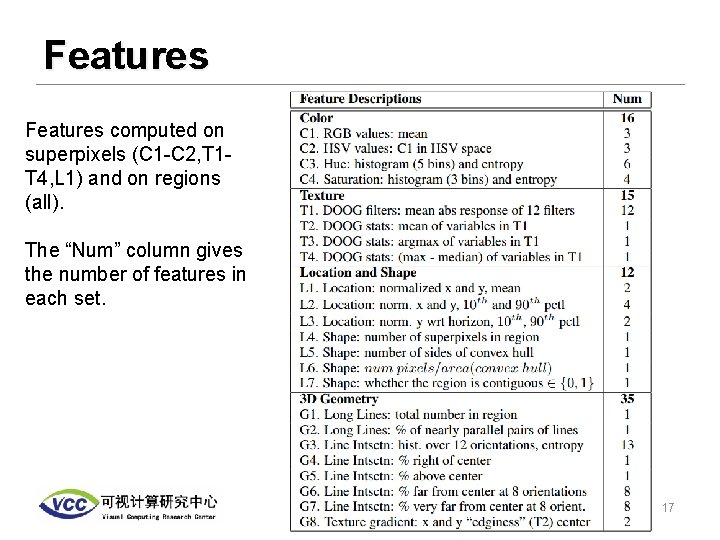

Features computed on superpixels (C1-C2, T1-T4, L1) and on regions (all). The “Num” column gives the number of features in each set. 17

Pipeline: 1 Input → 2 Superpixels → 3 Multiple Hypotheses → 4 Geometric Labels. 18

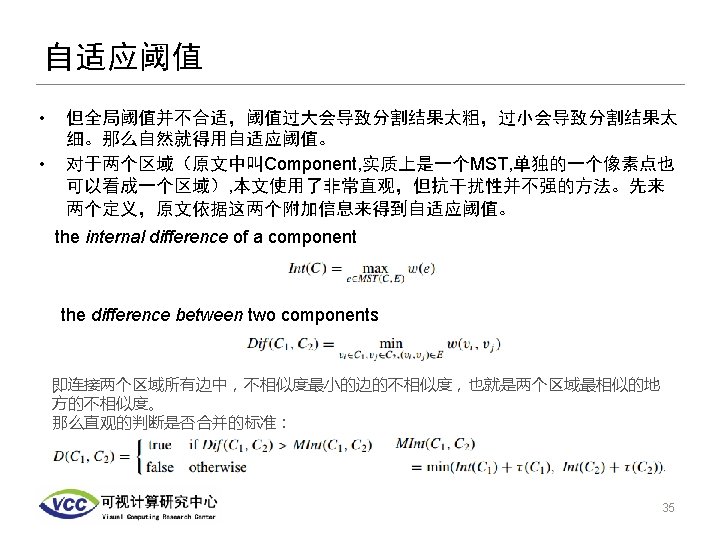

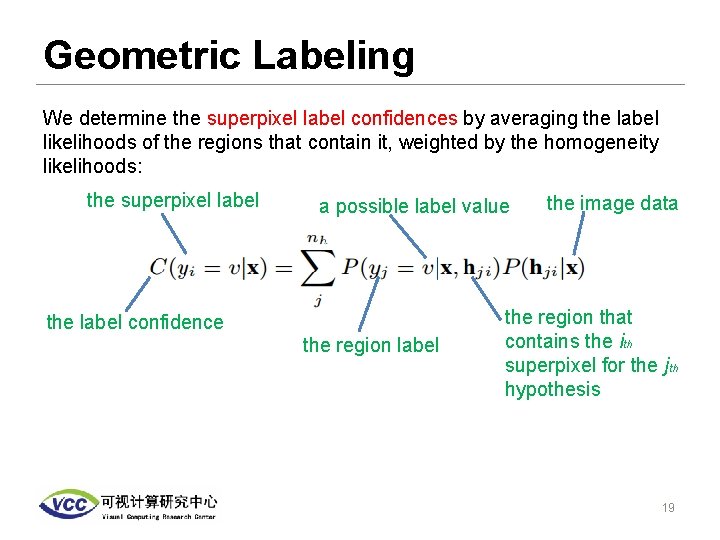

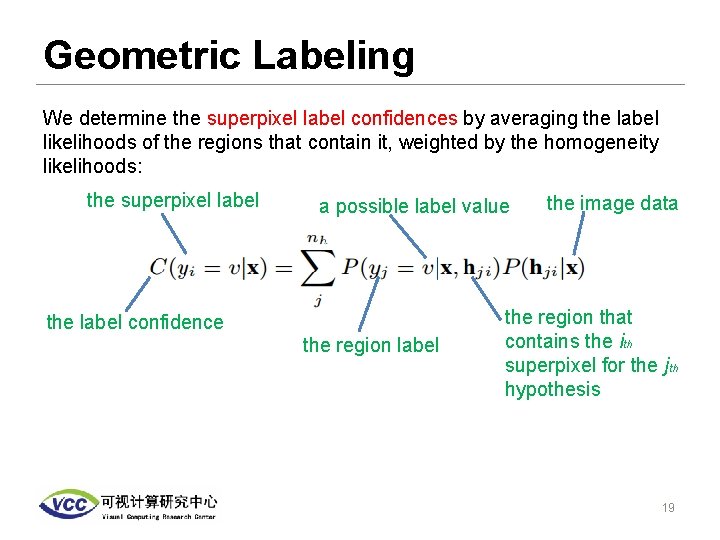

Geometric Labeling: We determine the superpixel label confidences by averaging the label likelihoods of the regions that contain it, weighted by the homogeneity likelihoods:

$$C(y_i = v \mid x) = \sum_{j} P(y_j = v \mid x, h_{ji})\, P(h_{ji} \mid x)$$

where $y_i$ is the superpixel label, $v$ is a possible label value, $C$ is the label confidence, $y_j$ is the region label, $x$ is the image data, and $h_{ji}$ is the region that contains the $i$th superpixel for the $j$th hypothesis. 19
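The weighted averaging can be illustrated with a small sketch. For each superpixel, it sums over hypotheses the label likelihood of the containing region times that region's homogeneity likelihood, then normalizes so the confidences sum to one. The data layout (`region_of`, `label_lik`, `homog_lik`) and the final normalization are assumptions made for this example, and the likelihood values are invented toy numbers.

```python
def label_confidences(hypotheses, labels):
    """Combine region-level likelihoods into per-superpixel label confidences.

    Each hypothesis is a dict with
      'region_of': list mapping superpixel index -> region id,
      'label_lik': region id -> {label: likelihood of that label},
      'homog_lik': region id -> likelihood that the region is homogeneous.
    """
    n = len(hypotheses[0]["region_of"])
    conf = [{v: 0.0 for v in labels} for _ in range(n)]
    for hyp in hypotheses:
        for i in range(n):
            region = hyp["region_of"][i]
            weight = hyp["homog_lik"][region]
            for v in labels:
                conf[i][v] += hyp["label_lik"][region][v] * weight
    # Normalize so each superpixel's confidences sum to one.
    for c in conf:
        total = sum(c.values())
        if total > 0:
            for v in c:
                c[v] /= total
    return conf


labels = ["ground", "sky"]
hypotheses = [
    {   # Hypothesis 1: both superpixels in one, not very homogeneous, region.
        "region_of": [0, 0],
        "label_lik": {0: {"ground": 0.8, "sky": 0.2}},
        "homog_lik": {0: 0.5},
    },
    {   # Hypothesis 2: each superpixel in its own homogeneous region.
        "region_of": [0, 1],
        "label_lik": {0: {"ground": 0.6, "sky": 0.4},
                      1: {"ground": 0.1, "sky": 0.9}},
        "homog_lik": {0: 1.0, 1: 1.0},
    },
]
conf = label_confidences(hypotheses, labels)
```

In this toy case the second hypothesis dominates because its regions are judged more homogeneous, so the second superpixel ends up labeled sky even though the first hypothesis favored ground.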

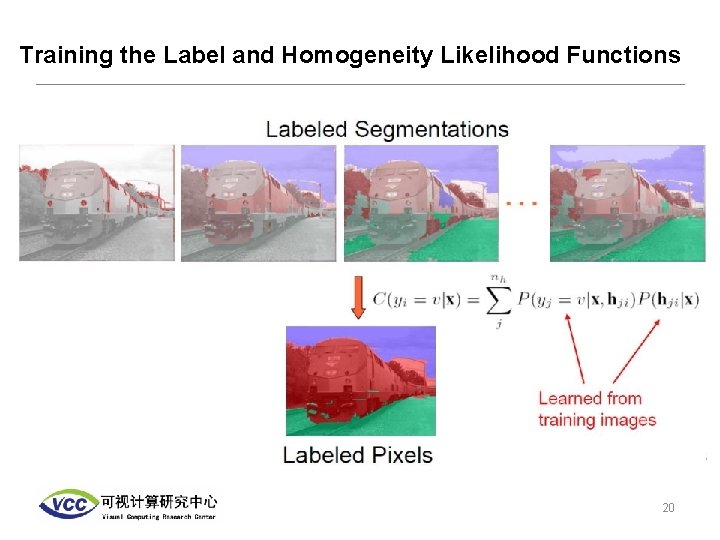

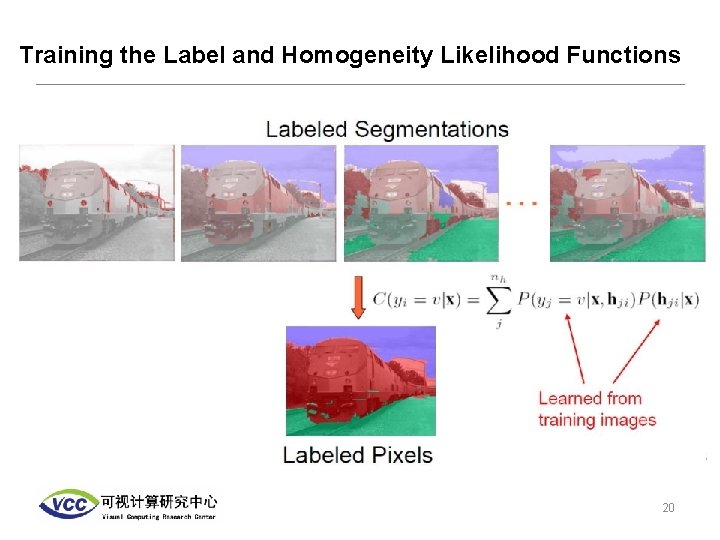

Training the Label and Homogeneity Likelihood Functions 20

![Applications: object detection improvement in Murphy et al.'s detector with our geometric context](https://slidetodoc.com/presentation_image/1be67db5066bdcb42864f1aede57b5be/image-21.jpg)

Applications • Object Detection: Improvement in Murphy et al.’s detector [20] with our geometric context. By adding a small set of context features, we reduce false positives while achieving the same detection rate. [20] K. Murphy, A. Torralba, and W. T. Freeman, “Graphical model for recognizing scenes and objects,” in Proc. NIPS, 2003. 21

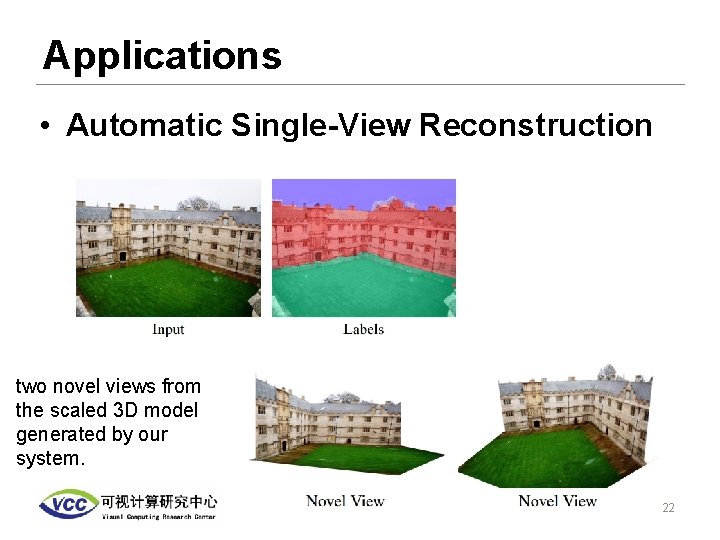

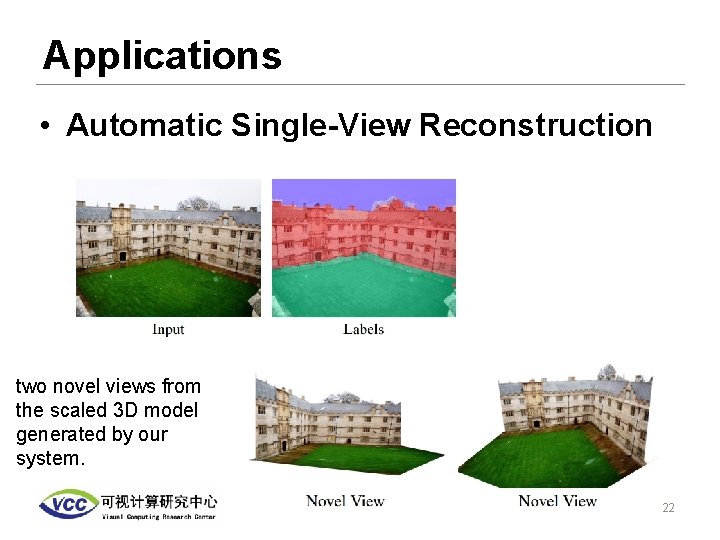

Applications • Automatic Single-View Reconstruction: two novel views from the scaled 3D model generated by our system. 22

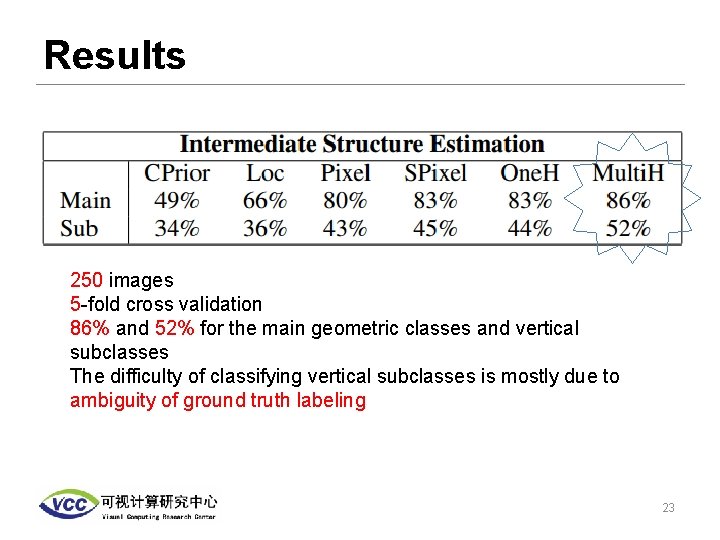

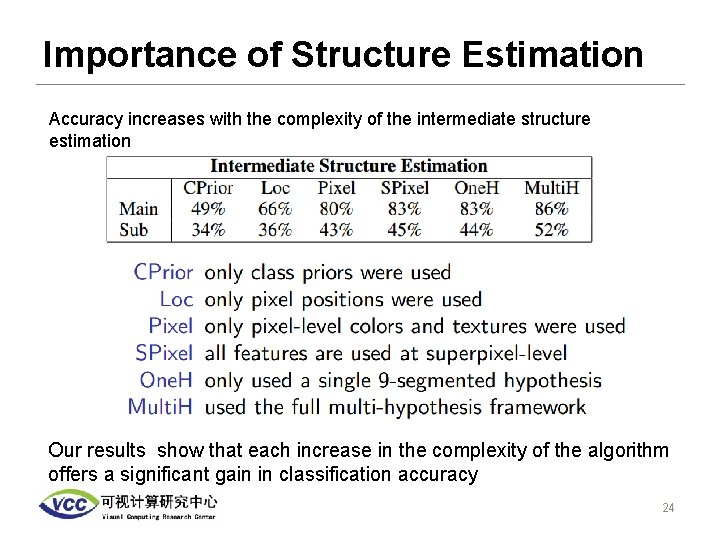

Results: On 250 images with 5-fold cross-validation, accuracy is 86% for the main geometric classes and 52% for the vertical subclasses. The difficulty of classifying vertical subclasses is mostly due to ambiguity in the ground-truth labeling. 23

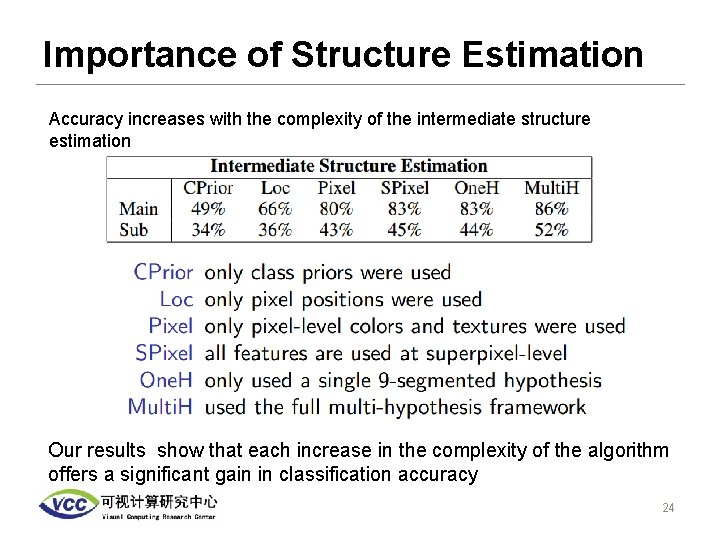

Importance of Structure Estimation: Accuracy increases with the complexity of the intermediate structure estimation. Our results show that each increase in the complexity of the algorithm offers a significant gain in classification accuracy. 24

Conclusion: Analyze objects in the image within the context of the 3D world. Our results show that such context can be estimated and usefully applied, even in outdoor images that lack human-imposed structure. 25

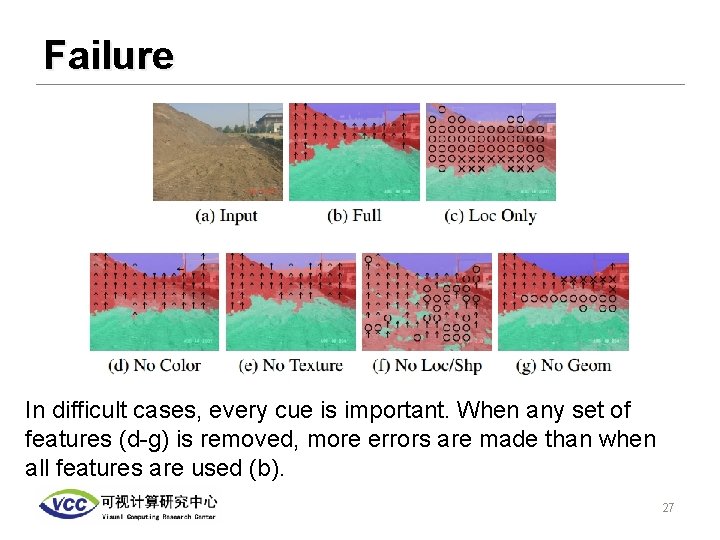

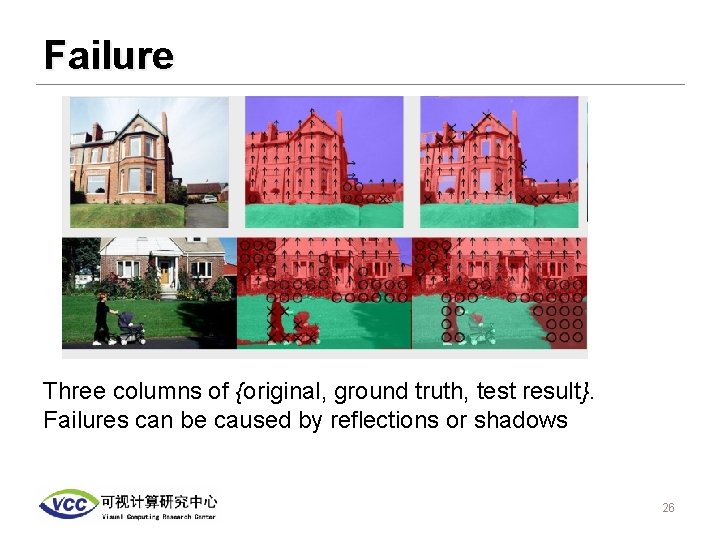

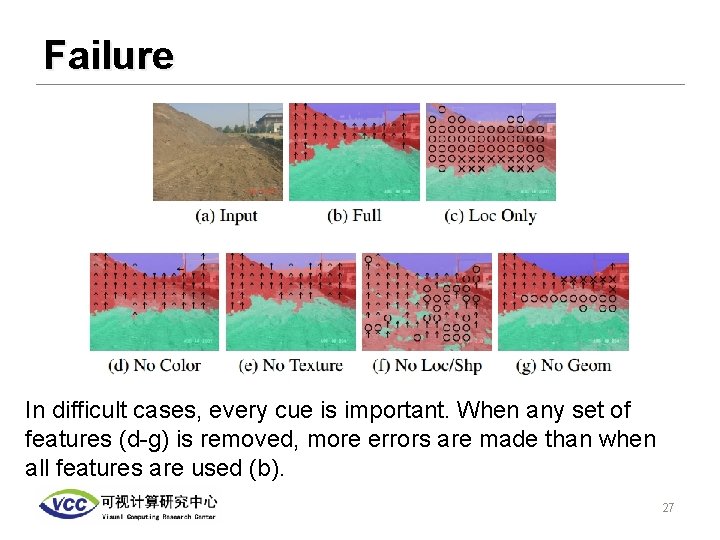

Failure: Three columns of {original, ground truth, test result}. Failures can be caused by reflections or shadows. 26

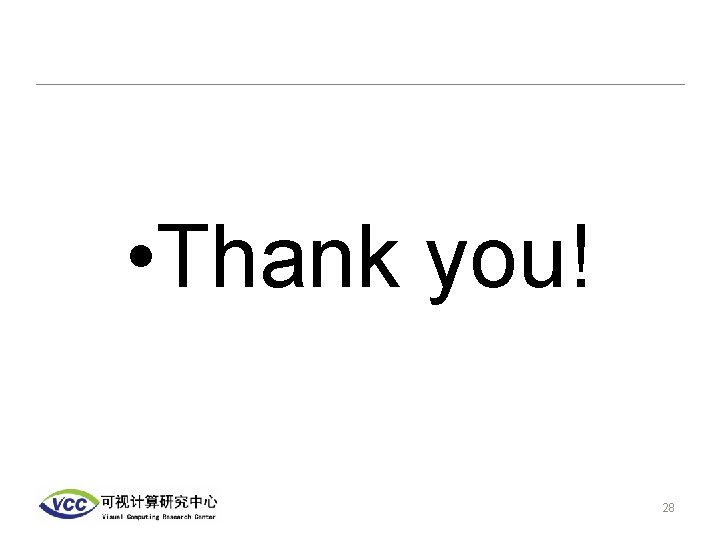

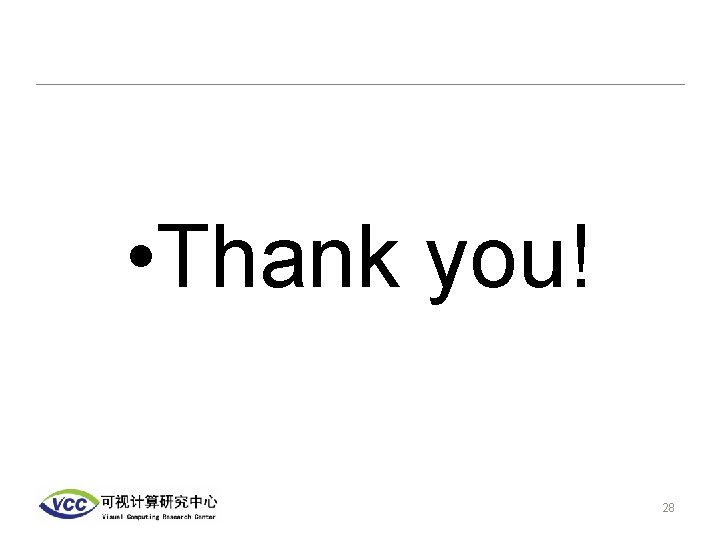

Failure: In difficult cases, every cue is important. When any set of features (d-g) is removed, more errors are made than when all features are used (b). 27

• Thank you! 28

Question 1 • How are the different hypotheses generated? Answer: We generate different hypotheses by varying the specified number of regions. 29

Question 2 • Can you explain the algorithm on page 15 again? Answer: In short, we randomly select some superpixels, then propagate from these superpixels by grouping them with nearby superpixels. The result is one hypothesis. 30

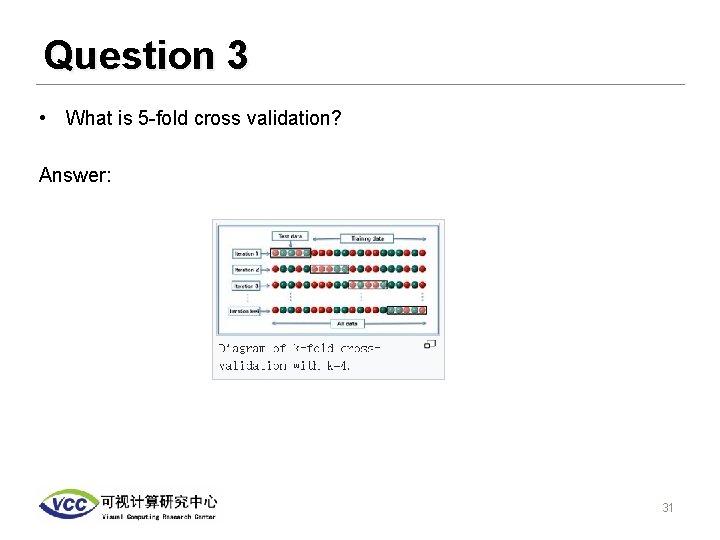

Question 3 • What is 5-fold cross-validation? Answer: 31
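In k-fold cross-validation the data are split into k equal parts; each part is held out once for testing while the model is trained on the remaining parts, and the k test scores are averaged. The sketch below shows only the splitting step for the 250-image setting mentioned earlier; the function name `k_fold_splits` and the shuffling seed are assumptions made for this example.

```python
import random


def k_fold_splits(n_items, k=5, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of (nearly) equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# With 250 images and k=5, each round trains on 200 images and tests on 50.
for train, test in k_fold_splits(250, k=5):
    print(len(train), len(test))
```

Every image is used for testing exactly once, so the averaged score reflects performance on all 250 images without ever testing on training data.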

Question 4 • Can you explain the method used to obtain the superpixels? Answer: Please look at the next four slides. 32