Using Data to Make Decisions Jacqueline F Kearns

- Slides: 28

Using Data to Make Decisions

Jacqueline F. Kearns, Ed.D.
NAAC – University of Kentucky
NAAC 2008

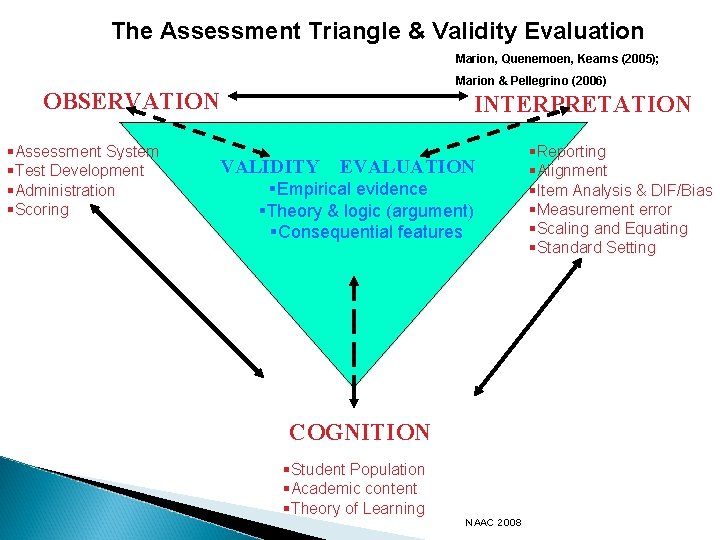

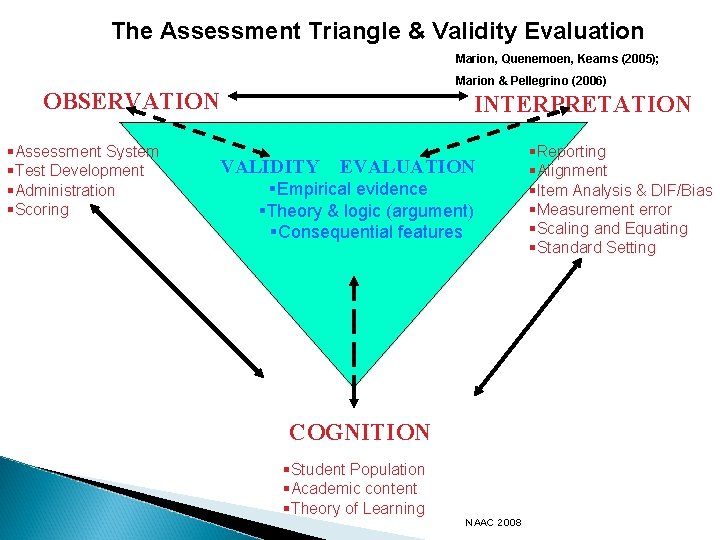

The Assessment Triangle & Validity Evaluation

Marion, Quenemoen, Kearns (2005); Marion & Pellegrino (2006)

- OBSERVATION
  - Assessment System
  - Test Development
  - Administration
  - Scoring
- INTERPRETATION
  - Reporting
  - Alignment
  - Item Analysis & DIF/Bias
  - Measurement error
  - Scaling and Equating
  - Standard Setting
- COGNITION
  - Student Population
  - Academic content
  - Theory of Learning
- VALIDITY EVALUATION
  - Empirical evidence
  - Theory & logic (argument)
  - Consequential features

This Presentation

- Discusses appropriate use of assessment data at multiple levels
  - State
  - District
  - School
  - Student
- Considers possible data-driven actions
  - State
  - District
  - School
  - Student

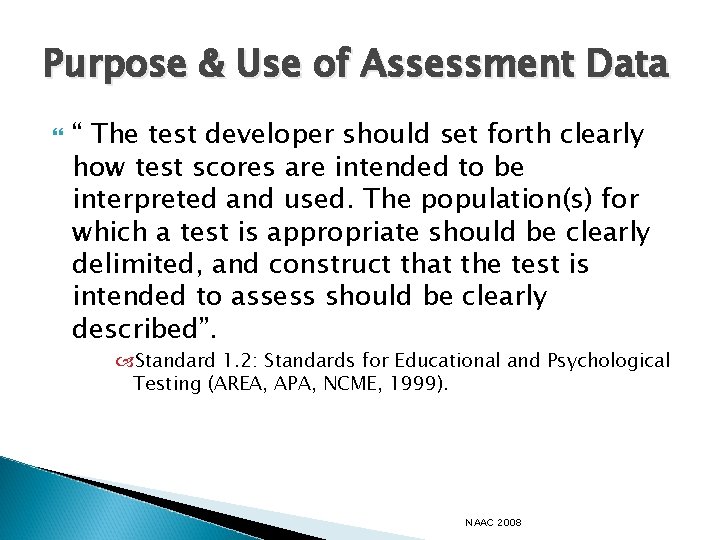

Purpose & Use of Assessment Data

“The test developer should set forth clearly how test scores are intended to be interpreted and used. The population(s) for which a test is appropriate should be clearly delimited, and the construct that the test is intended to assess should be clearly described.”

Standard 1.2: Standards for Educational and Psychological Testing (AERA, APA, NCME, 1999).

Purpose & Use of Assessments Under NCLB

- Measure student achievement in reading, mathematics, and science
- Hold schools/districts/states accountable for improving achievement results in reading, math, & science to
  - Inform professional development at school/district/state levels
  - Inform assessment/curriculum/instruction alignment
  - Inform and improve instructional practices for disaggregated groups
  - Report achievement results for individual students

Non-Purposes

- If a test is likely to be inappropriately used for certain purposes, then specific warnings against those purposes should be specified (Standard 1.3: Standards for Educational and Psychological Testing; AERA, APA, NCME, 1999).
  - Not all assessments used for large-scale achievement purposes are also appropriate for individual decision-making.
- Appropriate interpretive materials must be included in the score report.
- IEP teams must use multiple sources of data in making program decisions for an individual student.

Technical Quality (Peer Review Guidance, 2007)

- Specify and delineate purpose and use
- Measure the knowledge and skills described in its academic content standards and not knowledge, skills, or other characteristics that are not specified in the academic content standards or grade-level expectations
- Reflect the intended cognitive processes, with items and tasks at the appropriate grade level
- Determine consistency of scoring and reporting structures with the sub-domain structures of its academic content
- Determine whether test and item scores are related to outside variables as intended and not to irrelevant characteristics
- Determine consistency of decisions based on purpose and use
- Determine intended and unintended consequences

Mine the Data

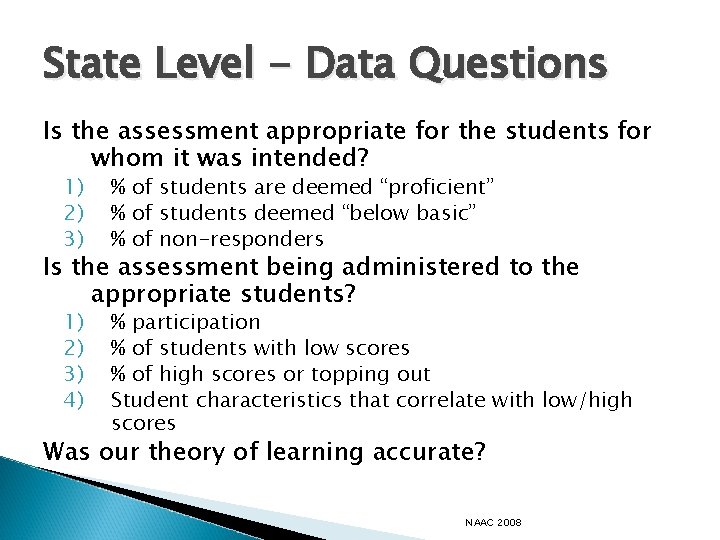

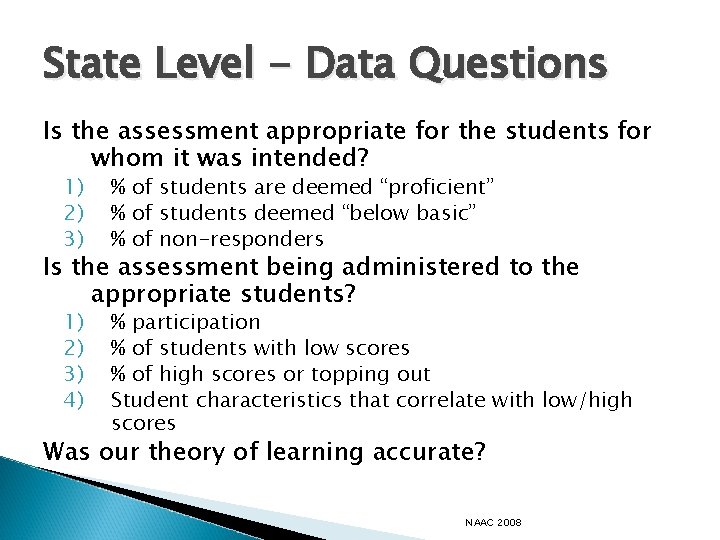

State Level - Data Questions

- Is the assessment appropriate for the students for whom it was intended?
  1. % of students deemed “proficient”
  2. % of students deemed “below basic”
  3. % of non-responders
- Is the assessment being administered to the appropriate students?
  1. % participation
  2. % of students with low scores
  3. % of high scores or topping out
  4. Student characteristics that correlate with low/high scores
- Was our theory of learning accurate?
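The percentages these questions ask for can be tallied directly from student-level records. The following is a minimal sketch, assuming one record per eligible student; the record layout, the level labels, and the `summarize` helper are all hypothetical and not part of the presentation.

```python
from collections import Counter

# Hypothetical records: one per eligible student. A level of None means the
# student did not participate. The level labels are assumptions for illustration.
records = [
    {"student": "A", "level": "proficient"},
    {"student": "B", "level": "below basic"},
    {"student": "C", "level": "non-responder"},
    {"student": "D", "level": "proficient"},
    {"student": "E", "level": None},
]


def summarize(records):
    """Return the participation rate and the percent of participants at each level."""
    eligible = len(records)
    tested = [r["level"] for r in records if r["level"] is not None]
    summary = {
        "participation": 100.0 * len(tested) / eligible if eligible else 0.0
    }
    # Percent at each level is computed over participating students only.
    for level, n in Counter(tested).items():
        summary[level] = 100.0 * n / len(tested)
    return summary


summary = summarize(records)
for name, pct in summary.items():
    print(f"{name}: {pct:.1f}%")
```

Whether non-participants belong in the denominator of each level percentage is a reporting choice; this sketch excludes them and reports participation separately.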

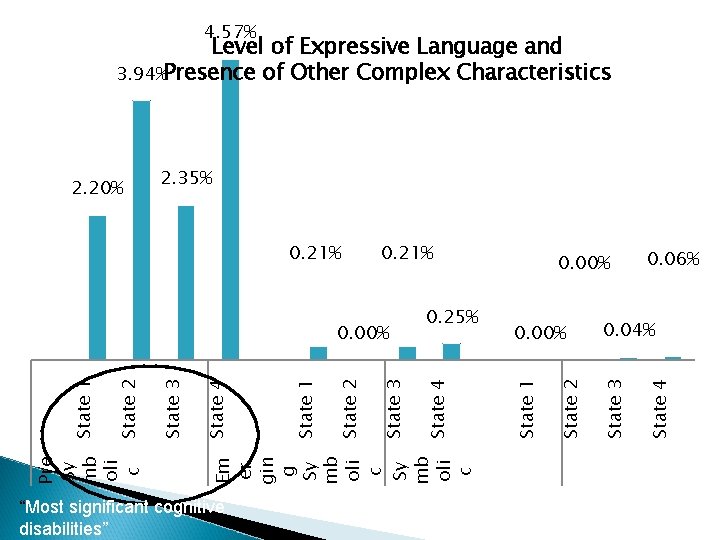

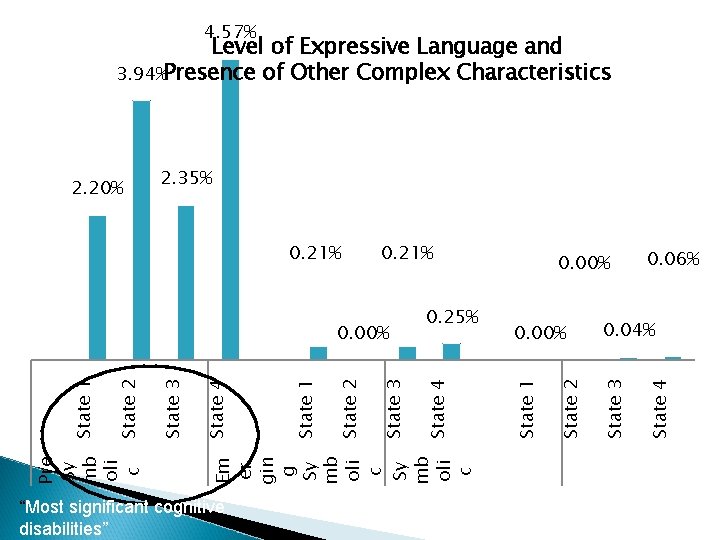

Level of Expressive Language and Presence of Other Complex Characteristics, “most significant cognitive disabilities”: chart comparing States 1–4; the categories shown include Pre-Symbolic and Emerging Symbolic.

Expressive Language by grade band: pie charts for the Elementary School, Middle School, and High School grade bands, each divided into categories 1–3.

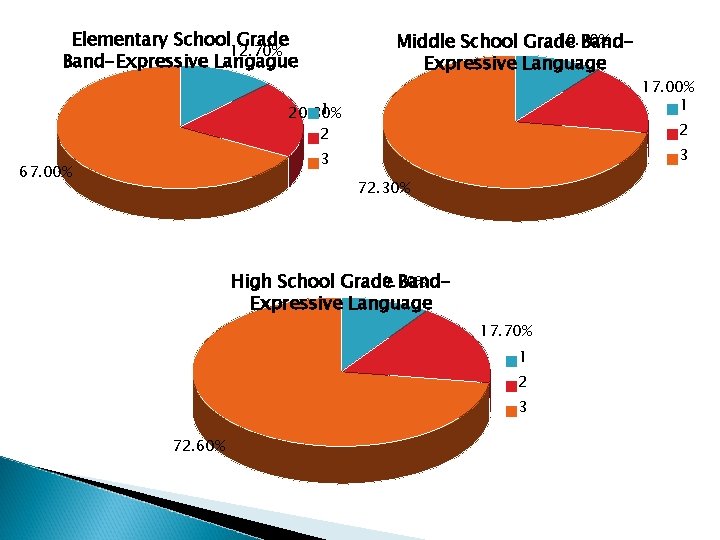

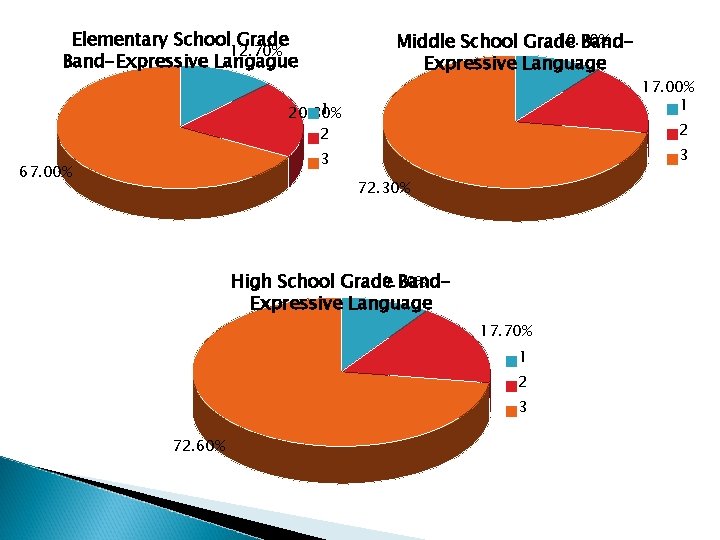

Use of Augmented Communication Systems

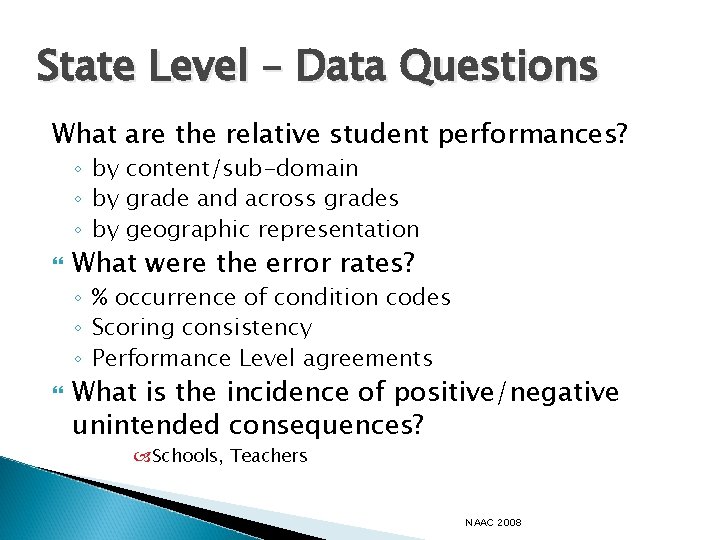

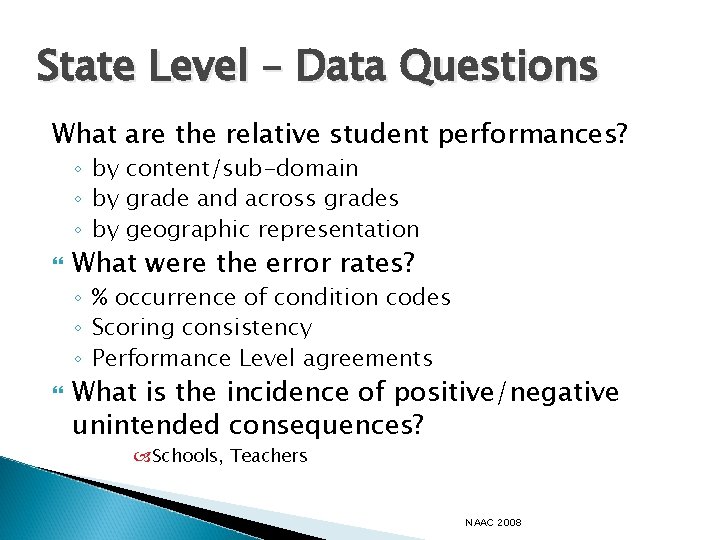

State Level – Data Questions

- What are the relative student performances?
  - by content/sub-domain
  - by grade and across grades
  - by geographic representation
- What were the error rates?
  - % occurrence of condition codes
  - Scoring consistency
  - Performance level agreements
- What is the incidence of positive/negative unintended consequences?
  - Schools, Teachers

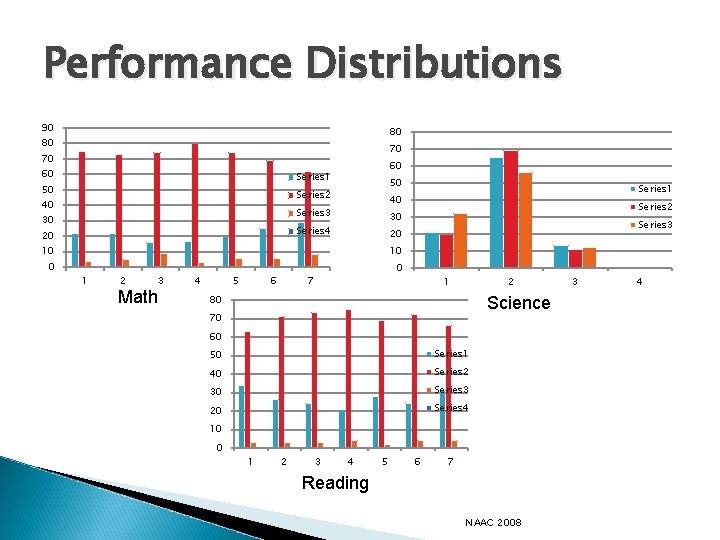

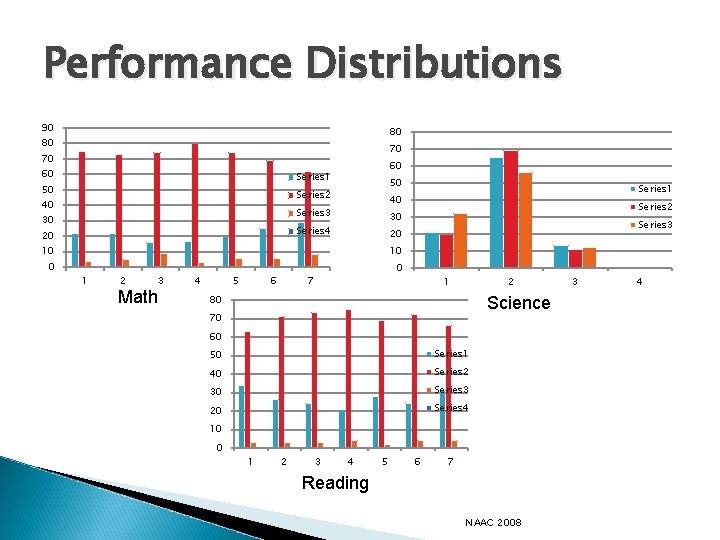

Performance Distributions: three charts (Math, Science, Reading), each plotting Series 1–4.

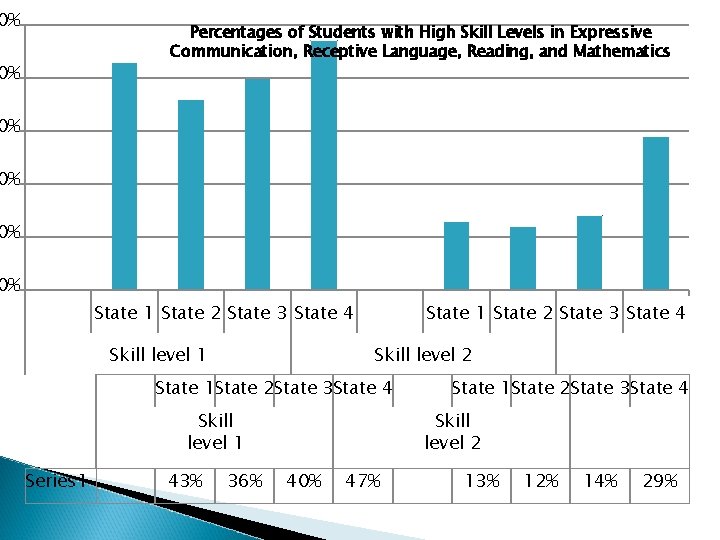

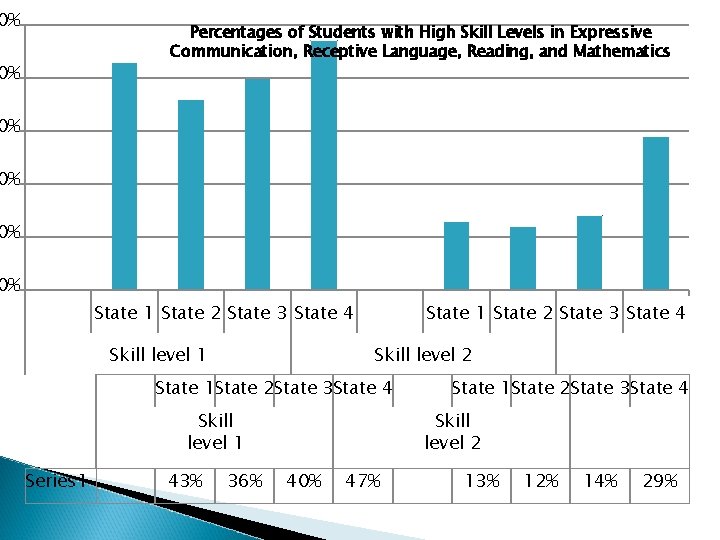

Percentages of Students with High Skill Levels in Expressive Communication, Receptive Language, Reading, and Mathematics: chart comparing States 1–4 by skill level.

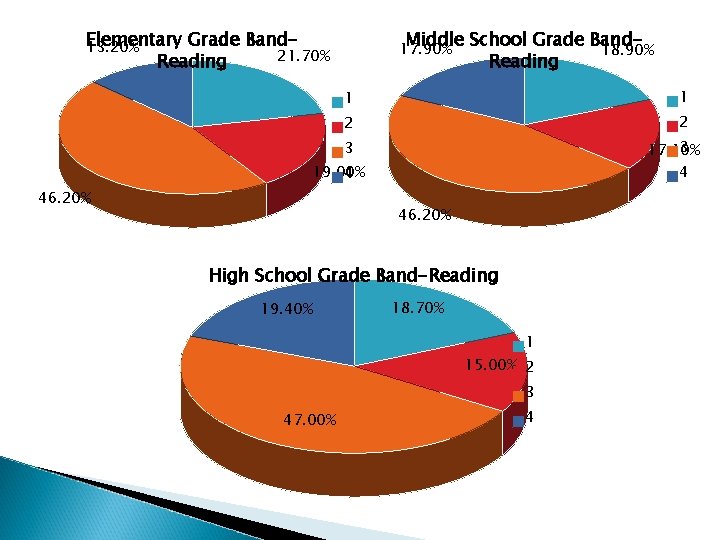

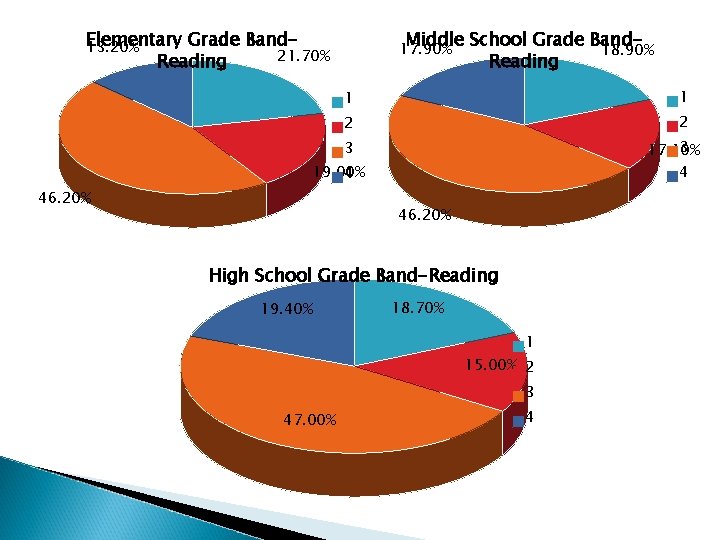

Reading by grade band: pie charts for the Elementary, Middle School, and High School grade bands, each divided into categories 1–4.

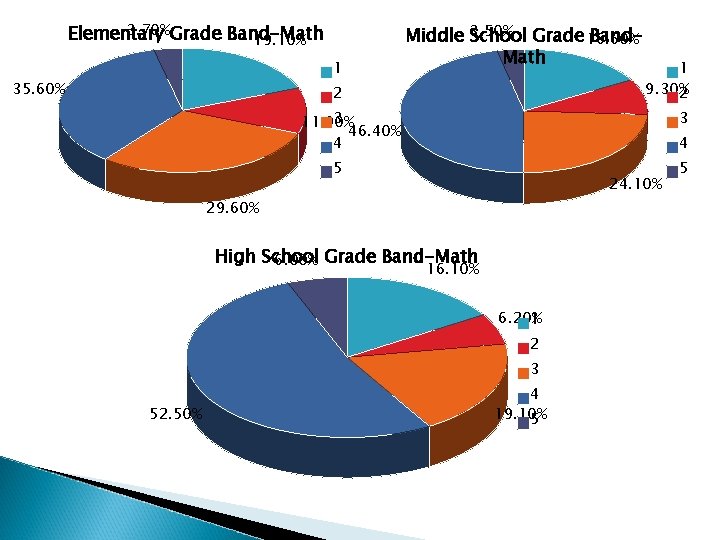

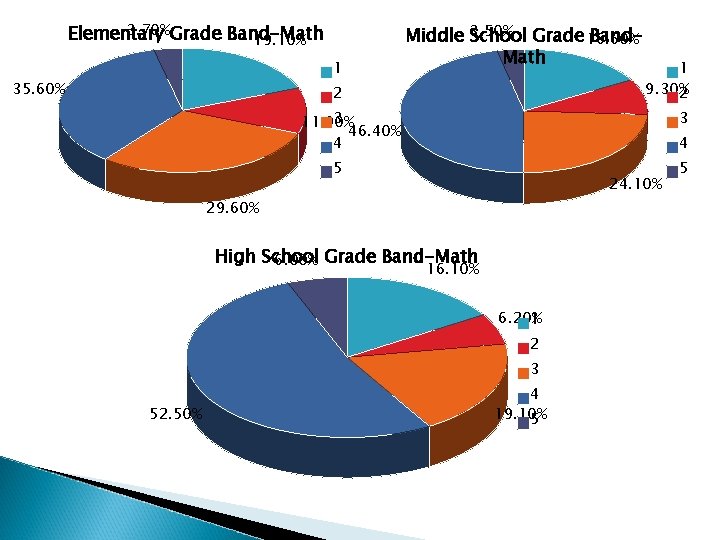

Math by grade band: pie charts for the Elementary, Middle School, and High School grade bands, each divided into categories 1–5.

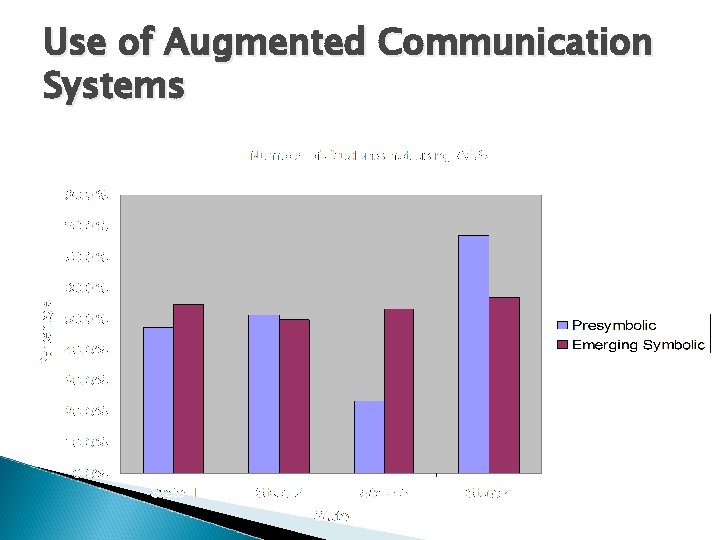

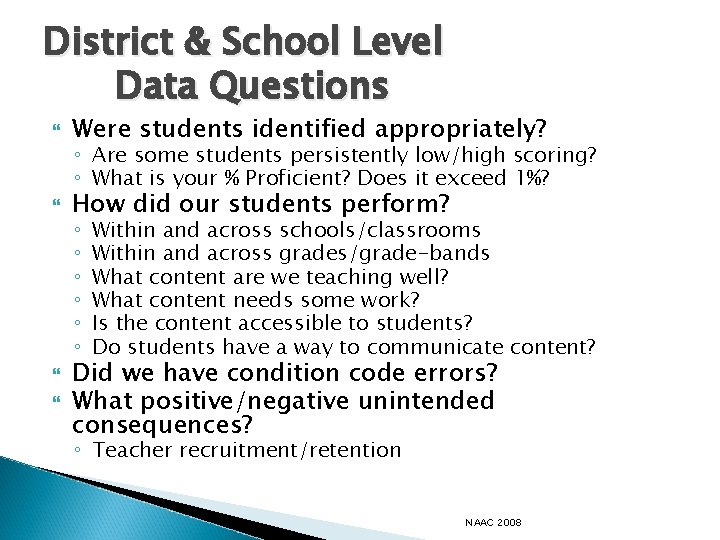

District & School Level Data Questions

- Were students identified appropriately?
- How did our students perform?
  - Are some students persistently low/high scoring?
  - What is your % Proficient? Does it exceed 1%?
  - Within and across schools/classrooms
  - Within and across grades/grade-bands
  - What content are we teaching well? What content needs some work?
- Is the content accessible to students?
- Do students have a way to communicate content?
- Did we have condition code errors?
- What positive/negative unintended consequences?
  - Teacher recruitment/retention
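The "% Proficient" check against 1% is simple arithmetic. The following sketch assumes the denominator is all students tested in the assessed grades, which the slide does not state; the function names and the example counts are hypothetical.

```python
def percent_proficient_alternate(proficient_alternate, all_tested):
    """Percent of all tested students who scored proficient on the alternate assessment.

    The choice of denominator (all students tested) is an assumption.
    """
    if all_tested <= 0:
        raise ValueError("all_tested must be positive")
    return 100.0 * proficient_alternate / all_tested


def exceeds_one_percent(proficient_alternate, all_tested, threshold=1.0):
    """True when the percent proficient is strictly above the threshold."""
    return percent_proficient_alternate(proficient_alternate, all_tested) > threshold


# Hypothetical district: 4,000 students tested, 52 proficient on the alternate assessment.
rate = percent_proficient_alternate(52, 4000)
flag = exceeds_one_percent(52, 4000)
print(f"{rate:.2f}% proficient; exceeds 1%: {flag}")
```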

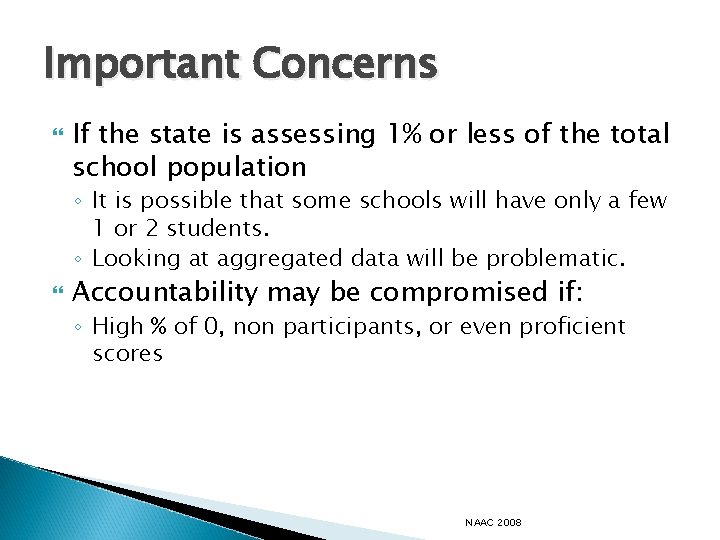

Important Concerns

- If the state is assessing 1% or less of the total school population
  - It is possible that some schools will have only 1 or 2 students.
  - Looking at aggregated data will be problematic.
- Accountability may be compromised if:
  - High % of 0, non-participants, or even proficient scores

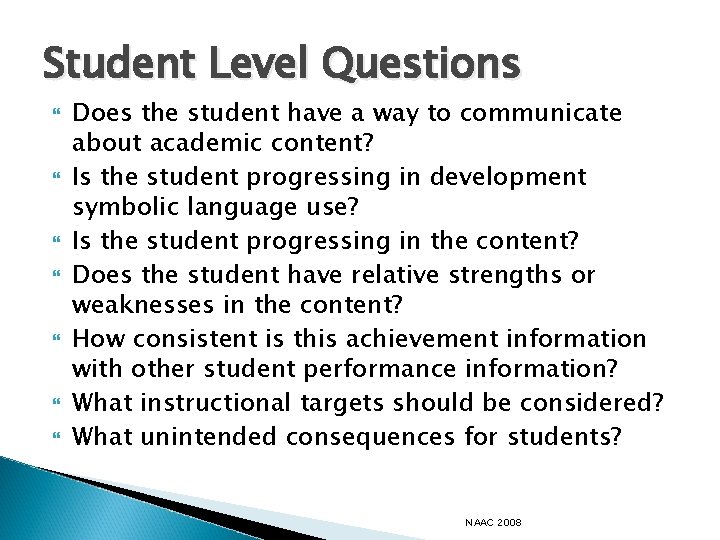

Student Level Questions

- Does the student have a way to communicate about academic content?
- Is the student progressing in the development of symbolic language use?
- Is the student progressing in the content?
- Does the student have relative strengths or weaknesses in the content?
- How consistent is this achievement information with other student performance information?
- What instructional targets should be considered?
- What unintended consequences for students?

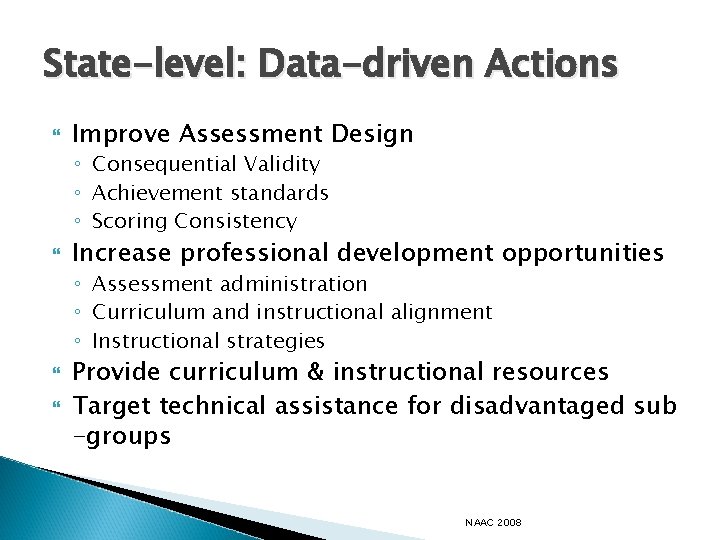

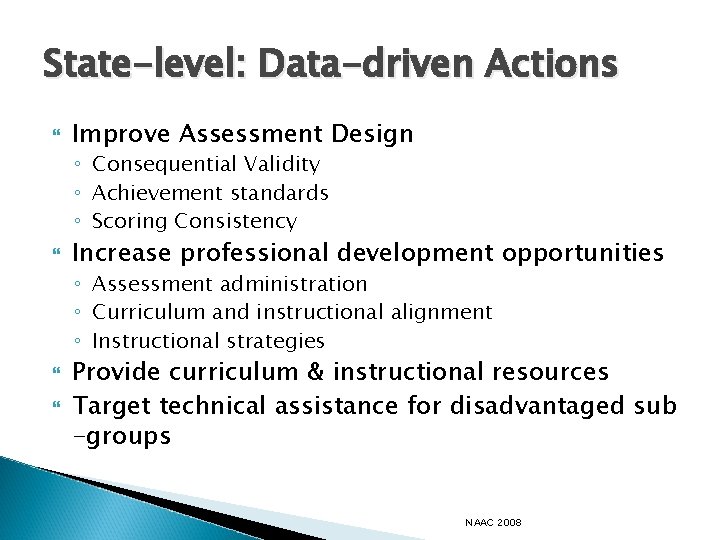

State-level: Data-driven Actions

- Improve Assessment Design
  - Consequential Validity
  - Achievement standards
  - Scoring Consistency
- Increase professional development opportunities
  - Assessment administration
  - Curriculum and instructional alignment
  - Instructional strategies
- Provide curriculum & instructional resources
- Target technical assistance for disadvantaged sub-groups

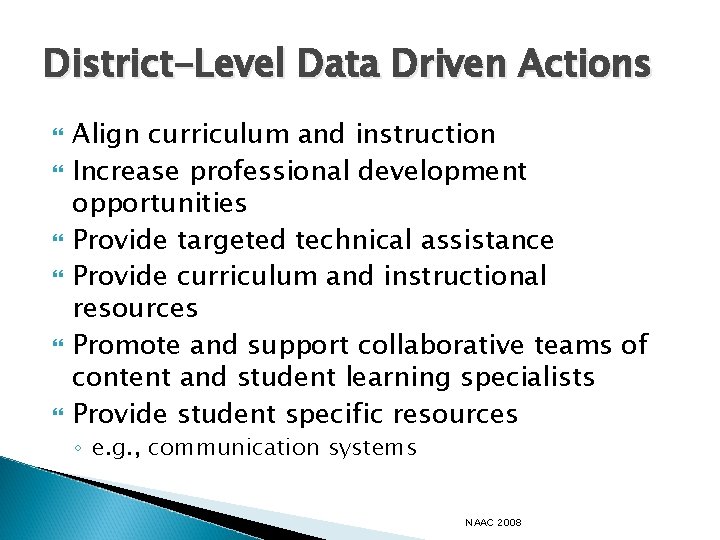

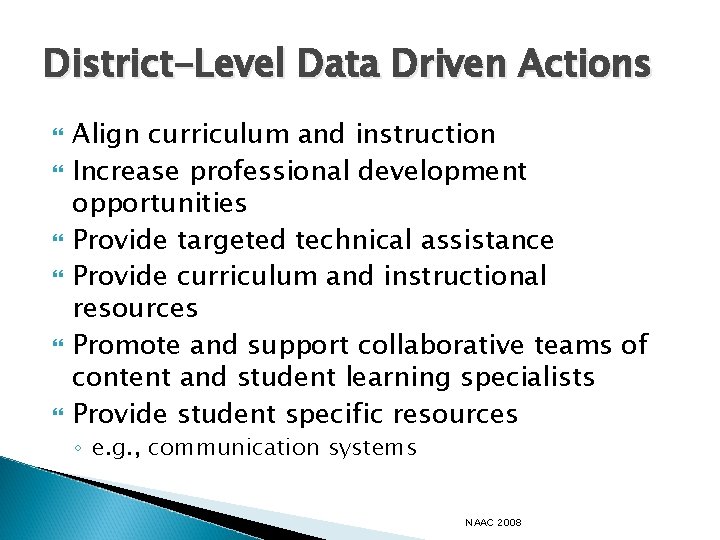

District-Level Data Driven Actions

- Align curriculum and instruction
- Increase professional development opportunities
- Provide targeted technical assistance
- Provide curriculum and instructional resources
- Promote and support collaborative teams of content and student learning specialists
- Provide student specific resources
  - e.g., communication systems

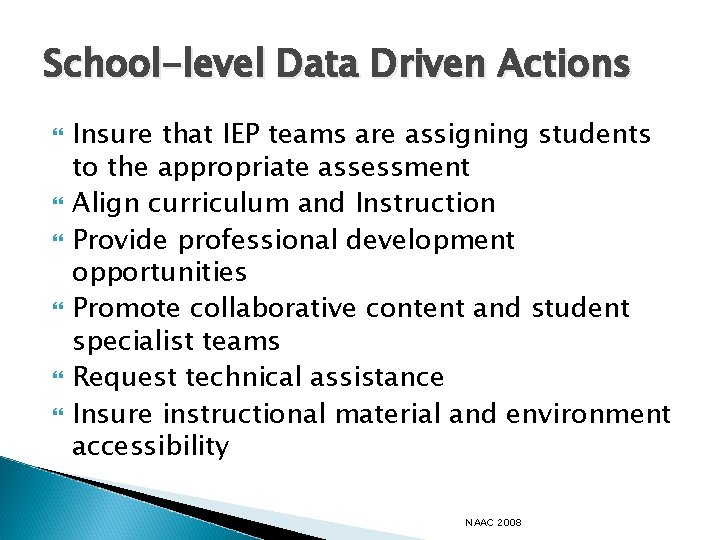

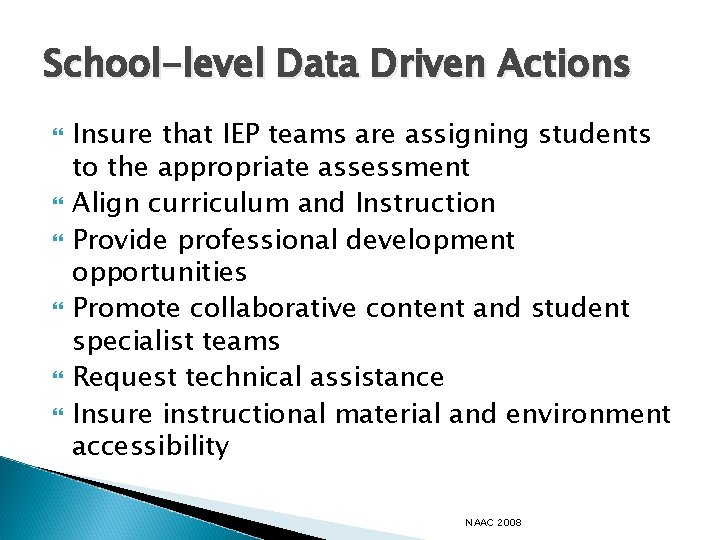

School-level Data Driven Actions

- Ensure that IEP teams are assigning students to the appropriate assessment
- Align curriculum and instruction
- Provide professional development opportunities
- Promote collaborative content and student specialist teams
- Request technical assistance
- Ensure instructional material and environment accessibility

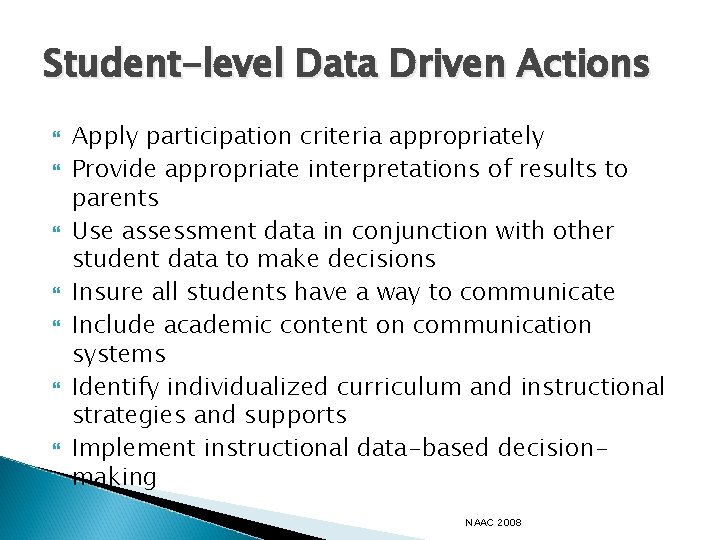

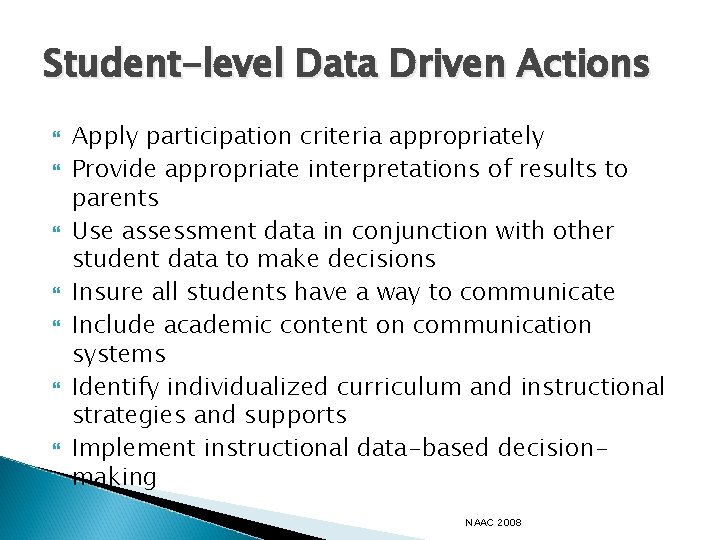

Student-level Data Driven Actions

- Apply participation criteria appropriately
- Provide appropriate interpretations of results to parents
- Use assessment data in conjunction with other student data to make decisions
- Ensure all students have a way to communicate
- Include academic content on communication systems
- Identify individualized curriculum and instructional strategies and supports
- Implement instructional data-based decision-making

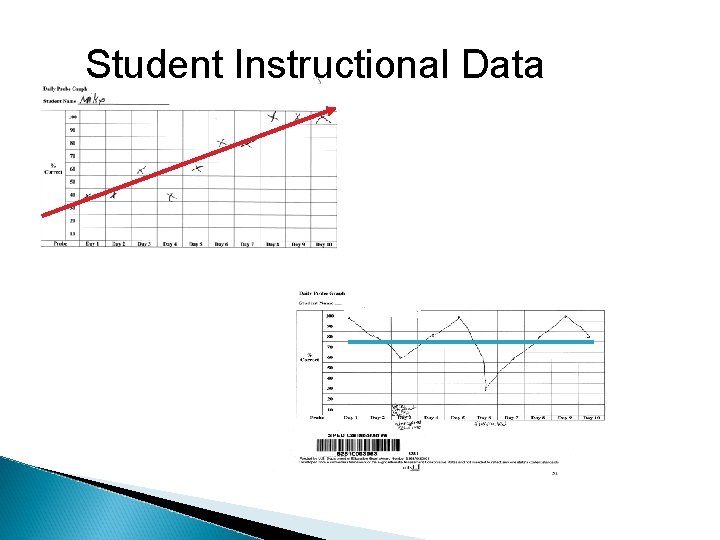

Student Instructional Data

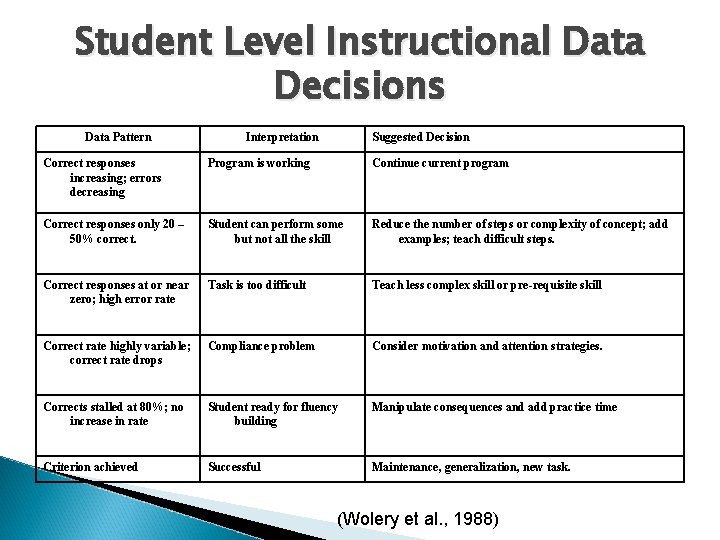

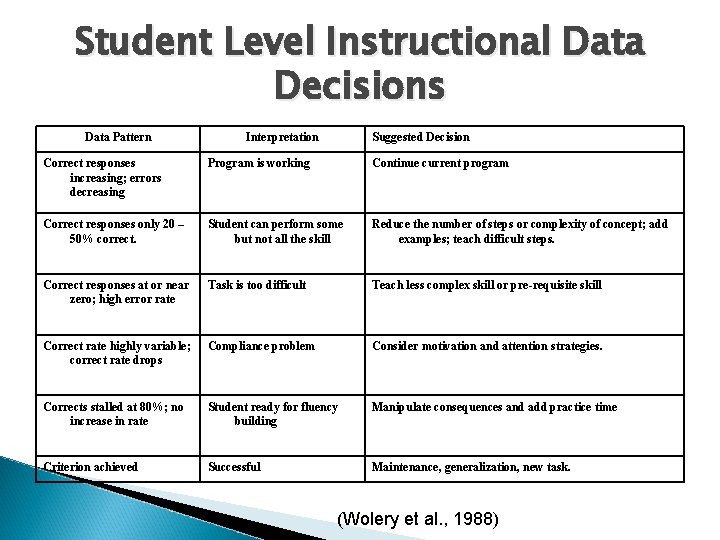

Student Level Instructional Data Decisions

| Data Pattern | Interpretation | Suggested Decision |
| --- | --- | --- |
| Correct responses increasing; errors decreasing | Program is working | Continue current program |
| Correct responses only 20–50% correct | Student can perform some but not all of the skill | Reduce the number of steps or complexity of concept; add examples; teach difficult steps |
| Correct responses at or near zero; high error rate | Task is too difficult | Teach less complex skill or pre-requisite skill |
| Correct rate highly variable; correct rate drops | Compliance problem | Consider motivation and attention strategies |
| Corrects stalled at 80%; no increase in rate | Student ready for fluency building | Manipulate consequences and add practice time |
| Criterion achieved | Successful | Maintenance, generalization, new task |

(Wolery et al., 1988)
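The decision rules above can be read as a lookup from a pattern in recent session data to a suggested decision. The sketch below is one illustrative way to encode them, not the method from Wolery et al.: only the 20-50% and 80% figures come from the slide, while the default criterion, the other cut-offs, the rule ordering, and the `suggest_decision` name are assumptions.

```python
from statistics import mean


def suggest_decision(percent_correct, criterion=90.0):
    """Map recent session scores (percent correct, oldest first) to a suggested decision.

    Only the 20-50% band and the 80% plateau come from the slide; every other
    cut-off, the default criterion, and the rule order are illustrative assumptions.
    """
    if len(percent_correct) < 3:
        raise ValueError("need at least three sessions to see a pattern")

    avg = mean(percent_correct)
    spread = max(percent_correct) - min(percent_correct)
    change = percent_correct[-1] - percent_correct[0]

    if percent_correct[-1] >= criterion:      # criterion achieved
        return "Maintenance, generalization, new task"
    if avg <= 5:                              # at or near zero (assumed cut-off)
        return "Teach less complex skill or pre-requisite skill"
    if spread >= 40 and change <= 0:          # highly variable and not improving (assumed)
        return "Consider motivation and attention strategies"
    if change >= 10:                          # clearly increasing (assumed cut-off)
        return "Continue current program"
    if 75 <= avg <= 85 and abs(change) < 5:   # stalled near 80%
        return "Manipulate consequences and add practice time"
    if 20 <= avg <= 50:                       # only 20-50% correct
        return "Reduce the number of steps or complexity of concept; add examples; teach difficult steps"
    return "No row matches; review the data with the team"


print(suggest_decision([30, 45, 60, 70]))
print(suggest_decision([80, 79, 81, 80]))
```

A rule set like this is only a prompt for discussion; the interpretation column still depends on knowing the student and the task.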

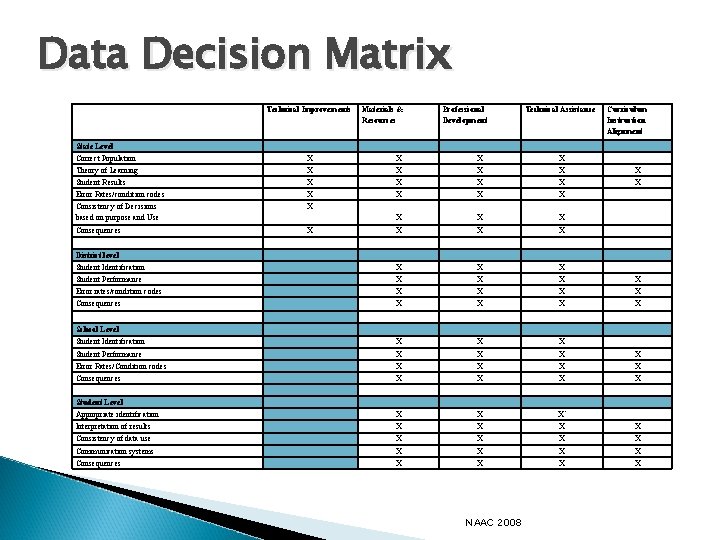

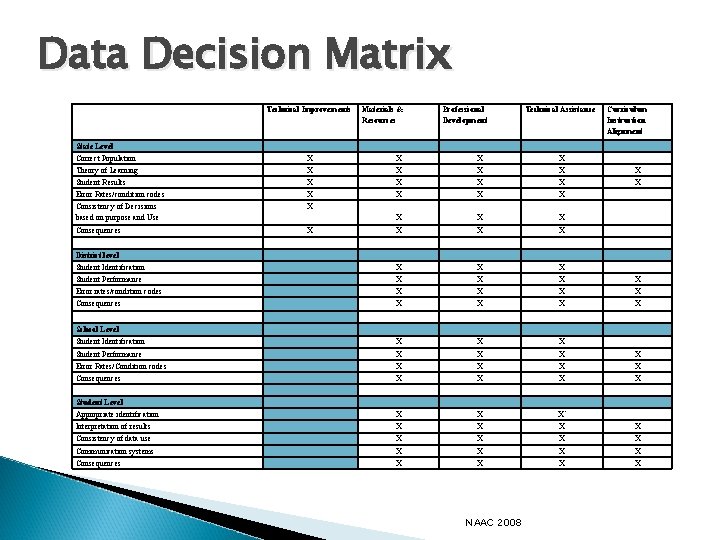

Data Decision Matrix

Columns: Technical Improvements; Materials & Resources; Professional Development; Technical Assistance; Curriculum Instruction Alignment

- State Level
  - Correct Population: X X
  - Theory of Learning: X X X
  - Student Results: X X X
  - Error Rates/Condition codes: X X
  - Consistency of Decisions based on purpose and use: X X
  - Consequences: X X
- District Level
  - Student Identification: X X X
  - Student Performance: X X
  - Error Rates/Condition codes: X X
  - Consequences: X X
- School Level
  - Student Identification: X X X
  - Student Performance: X X
  - Error Rates/Condition codes: X X
  - Consequences: X X
- Student Level
  - Appropriate identification: X X X
  - Interpretation of results: X X
  - Consistency of data use: X X
  - Communication systems: X X
  - Consequences: X X

WWW.NAACPartners.org

Jacqueline F. Kearns, Ed.D.
National Alternate Assessment Center