
- Slides: 22

This guy is wearing a haircut called a “Mullet”

Lecture 19: Unsupervised and One-Shot Learning

Gary Bradski and Sebastian Thrun

http://robots.stanford.edu/cs223b/index.html

Find the Mullets… One-Shot Learning

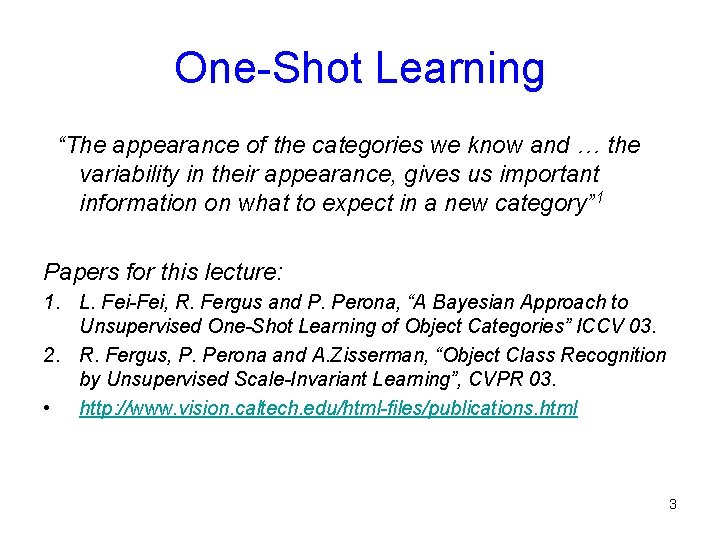

One-Shot Learning

“The appearance of the categories we know and … the variability in their appearance, gives us important information on what to expect in a new category” [1]

Papers for this lecture:

1. L. Fei-Fei, R. Fergus and P. Perona, “A Bayesian Approach to Unsupervised One-Shot Learning of Object Categories”, ICCV 03.
2. R. Fergus, P. Perona and A. Zisserman, “Object Class Recognition by Unsupervised Scale-Invariant Learning”, CVPR 03.

- http://www.vision.caltech.edu/html-files/publications.html

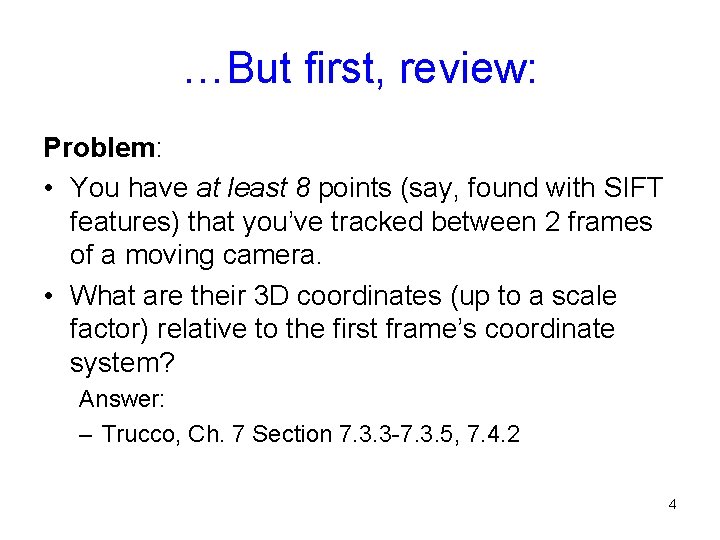

…But first, review:

Problem:

- You have at least 8 points (say, found with SIFT features) that you’ve tracked between 2 frames of a moving camera.
- What are their 3D coordinates (up to a scale factor) relative to the first frame’s coordinate system?

Answer:

- Trucco, Ch. 7, Sections 7.3.3-7.3.5, 7.4.2

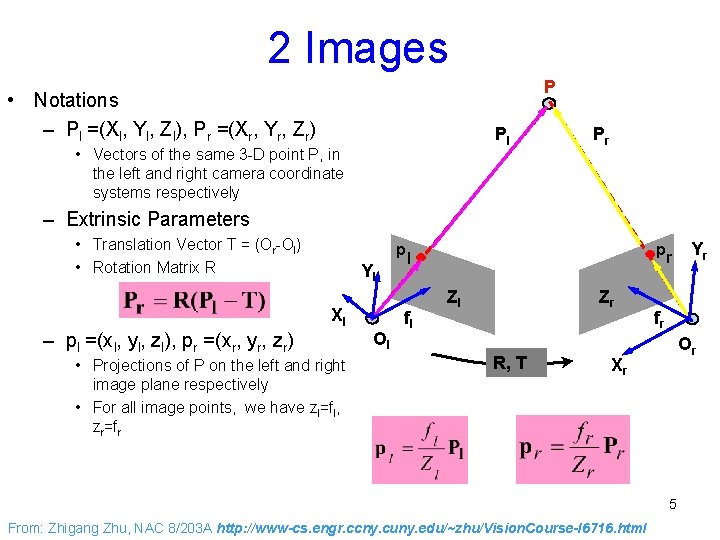

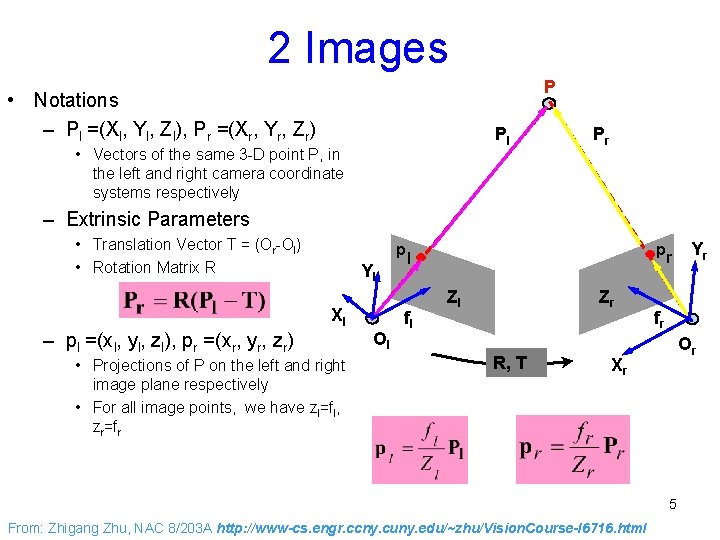

2 Images

- Notations
  - $P_l = (X_l, Y_l, Z_l)$, $P_r = (X_r, Y_r, Z_r)$
    - Vectors of the same 3-D point P, in the left and right camera coordinate systems respectively
  - Extrinsic parameters
    - Translation vector $T = (O_r - O_l)$
    - Rotation matrix $R$
  - $p_l = (x_l, y_l, z_l)$, $p_r = (x_r, y_r, z_r)$
    - Projections of P on the left and right image planes respectively
    - For all image points, we have $z_l = f_l$, $z_r = f_r$

From: Zhigang Zhu, NAC 8/203A http://www-cs.engr.ccny.cuny.edu/~zhu/VisionCourse-I6716.html

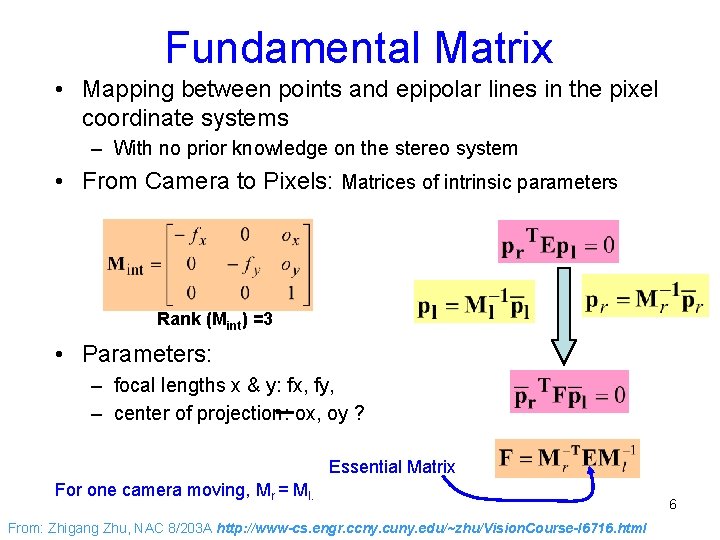

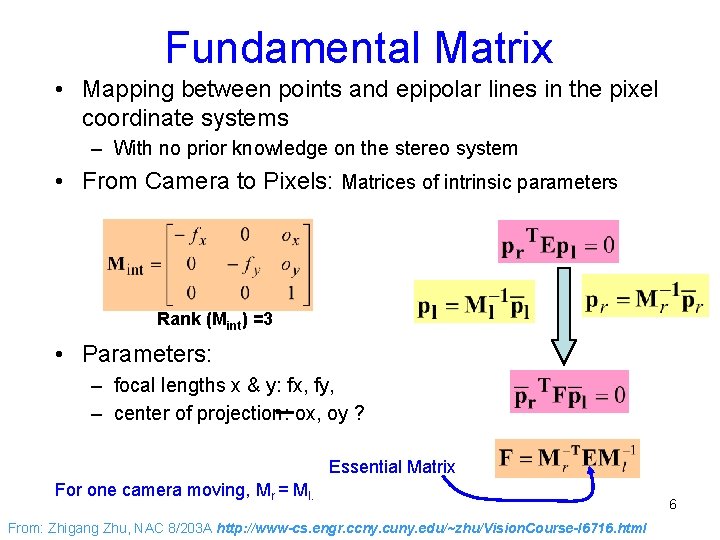

Fundamental Matrix

- Mapping between points and epipolar lines in the pixel coordinate systems
  - With no prior knowledge of the stereo system
- From camera to pixels: matrices of intrinsic parameters, Rank($M_{int}$) = 3
- Parameters:
  - focal lengths in x and y: $f_x$, $f_y$
  - center of projection: $o_x$, $o_y$
- Essential Matrix: for one moving camera, $M_r = M_l$.

From: Zhigang Zhu, NAC 8/203A http://www-cs.engr.ccny.cuny.edu/~zhu/VisionCourse-I6716.html

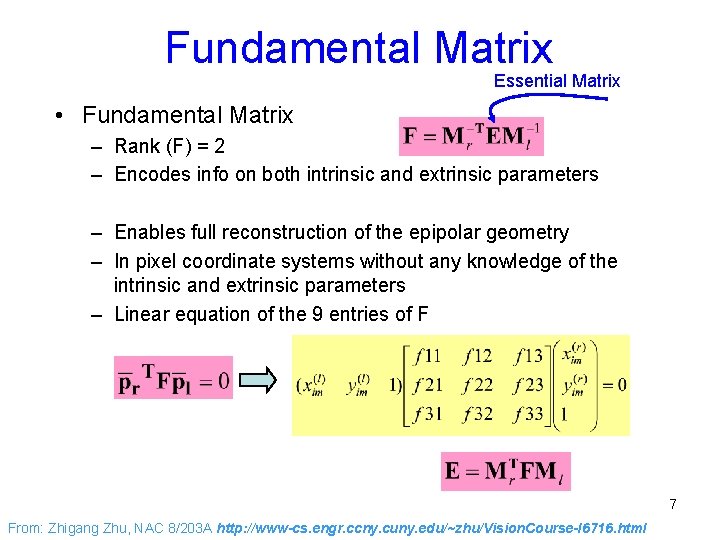

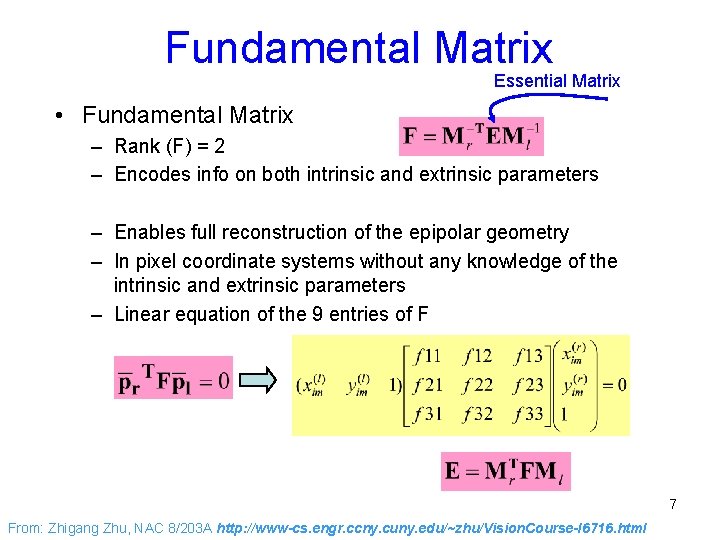

Fundamental Matrix and Essential Matrix

- Fundamental Matrix
  - Rank(F) = 2
  - Encodes information on both the intrinsic and extrinsic parameters
  - Enables full reconstruction of the epipolar geometry
  - Works in pixel coordinate systems without any knowledge of the intrinsic and extrinsic parameters
  - Linear equation in the 9 entries of F

From: Zhigang Zhu, NAC 8/203A http://www-cs.engr.ccny.cuny.edu/~zhu/VisionCourse-I6716.html

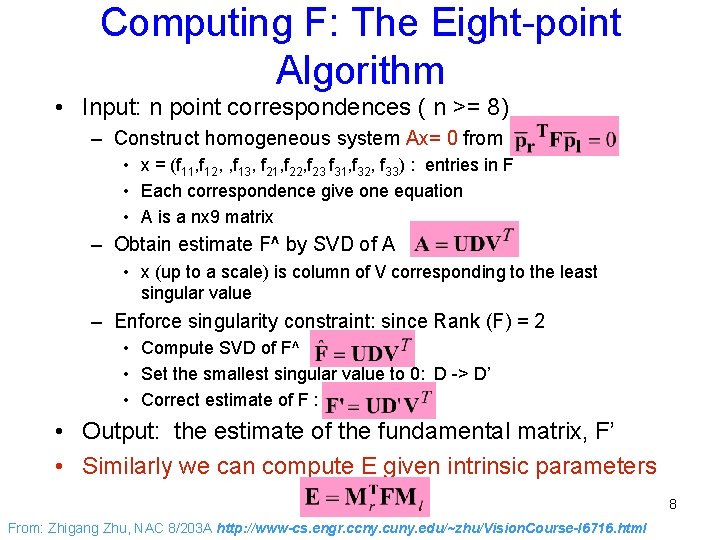

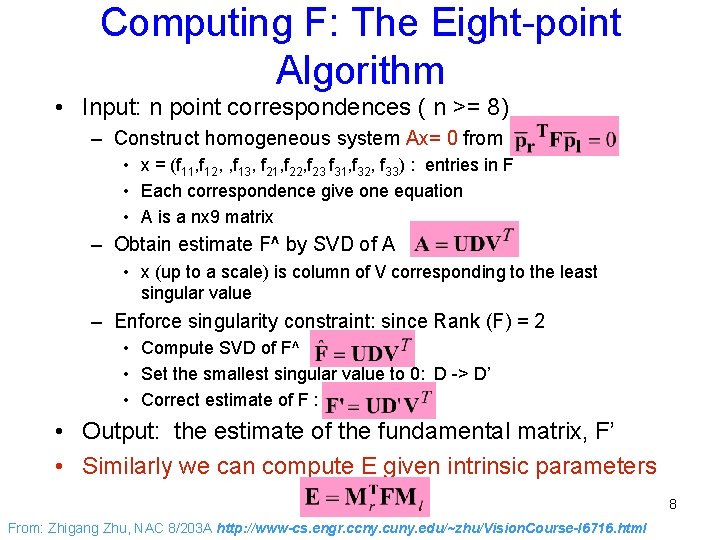

Computing F: The Eight-point Algorithm

- Input: n point correspondences (n >= 8)
  - Construct the homogeneous system $Ax = 0$ from the epipolar constraint $p_r^\top F p_l = 0$ (points in homogeneous pixel coordinates)
    - $x = (f_{11}, f_{12}, f_{13}, f_{21}, f_{22}, f_{23}, f_{31}, f_{32}, f_{33})$: the entries of F
    - Each correspondence gives one equation
    - A is an n x 9 matrix
  - Obtain the estimate $\hat{F}$ by SVD of A
    - x (up to a scale) is the column of V corresponding to the least singular value
  - Enforce the singularity constraint, since Rank(F) = 2
    - Compute the SVD of $\hat{F}$: $\hat{F} = U D V^\top$
    - Set the smallest singular value to 0: $D \to D'$
    - Corrected estimate of F: $F' = U D' V^\top$
- Output: the estimate of the fundamental matrix, $F'$
- Similarly, we can compute E given the intrinsic parameters

From: Zhigang Zhu, NAC 8/203A http://www-cs.engr.ccny.cuny.edu/~zhu/VisionCourse-I6716.html
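The steps on this slide can be sketched in a few lines of NumPy. This is a minimal illustration, not the course's reference implementation: the function name `eight_point` and the `(n, 2)` array layout are assumptions, and coordinate normalization (commonly recommended for real pixel data) is left out to keep the sketch short.

```python
import numpy as np

def eight_point(pl, pr):
    """Estimate F (up to scale) so that [xr, yr, 1] F [xl, yl, 1]^T = 0.

    pl, pr: (n, 2) arrays of matching points in the left and right images.
    Hypothetical helper for illustration only.
    """
    pl = np.asarray(pl, dtype=float)
    pr = np.asarray(pr, dtype=float)
    if pl.shape != pr.shape or pl.shape[0] < 8:
        raise ValueError("need at least 8 correspondences of equal shape")

    xl, yl = pl[:, 0], pl[:, 1]
    xr, yr = pr[:, 0], pr[:, 1]
    ones = np.ones_like(xl)

    # One row per correspondence; columns follow (f11, f12, f13, ..., f33).
    A = np.column_stack([xr * xl, xr * yl, xr,
                         yr * xl, yr * yl, yr,
                         xl, yl, ones])

    # The solution is the right singular vector of the least singular value.
    _, _, Vt = np.linalg.svd(A)
    F_hat = Vt[-1].reshape(3, 3)

    # Enforce Rank(F) = 2 by zeroing the smallest singular value.
    U, D, Vt_f = np.linalg.svd(F_hat)
    D[2] = 0.0
    return U @ np.diag(D) @ Vt_f
```

The returned matrix is defined only up to scale, so comparisons against another estimate should normalize both first.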

Reconstruction up to a Scale Factor

- Assumption and problem statement
  - Only the intrinsic parameters and more than 8 point correspondences are given
  - Compute the 3-D locations of the points from their projections, $p_l$ and $p_r$, as well as the extrinsic parameters
- Solution
  - Compute the essential matrix E from at least 8 correspondences
  - Estimate T (up to a scale and a sign) from E (= RS) using the orthogonality constraint on R, and then estimate R (see Trucco 7.4.2)
    - This yields four different estimates of the pair (T, R)
  - Reconstruct the depth of each point, and pick the correct sign of R and T
  - Result: reconstructed 3D points (up to a common scale)
  - The scale can be determined if the distance between two points in space is known

From: Zhigang Zhu, NAC 8/203A http://www-cs.engr.ccny.cuny.edu/~zhu/VisionCourse-I6716.html
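The four-candidate ambiguity and the depth test can be illustrated with a short sketch. Note the substitutions: this uses the common SVD-based factorization of E rather than the procedure in Trucco 7.4.2, and it assumes the convention $P_r = R P_l + t$ (Trucco writes $P_r = R(P_l - T)$). The function names are hypothetical.

```python
import numpy as np

def decompose_essential(E):
    """Return the four candidate (R, t) pairs for an essential matrix E."""
    U, _, Vt = np.linalg.svd(E)
    # Flip signs so both factors are proper rotations (E is known only up to sign).
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0]])
    R1, R2 = U @ W @ Vt, U @ W.T @ Vt
    t = U[:, 2]  # unit translation direction; its sign is still ambiguous
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]

def depths(R, t, xl, xr):
    """Least-squares depths (z_l, z_r) solving z_l * R @ xl + t = z_r * xr."""
    A = np.column_stack([R @ xl, -xr])
    z, *_ = np.linalg.lstsq(A, -t, rcond=None)
    return z

def pick_pose(E, xl, xr):
    """Pick the candidate that puts the most points in front of both cameras.

    xl, xr: (n, 3) arrays of homogeneous normalized image points (x, y, 1).
    """
    def score(candidate):
        R, t = candidate
        return sum(bool(np.all(depths(R, t, a, b) > 0)) for a, b in zip(xl, xr))
    return max(decompose_essential(E), key=score)
```

Because `t` comes back with unit length, the recovered depths share one unknown scale, which matches the "up to a common scale" result on the slide.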

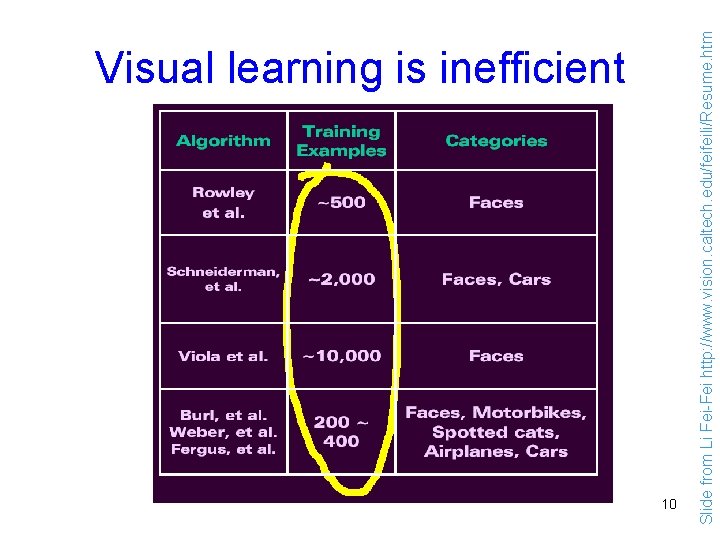

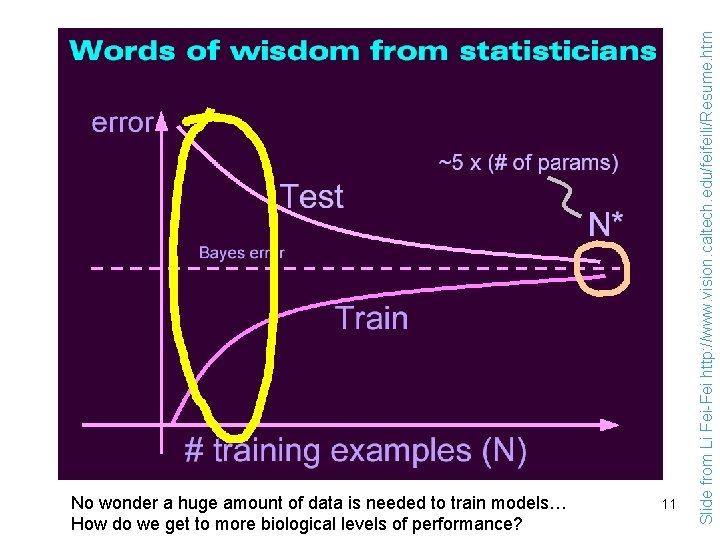

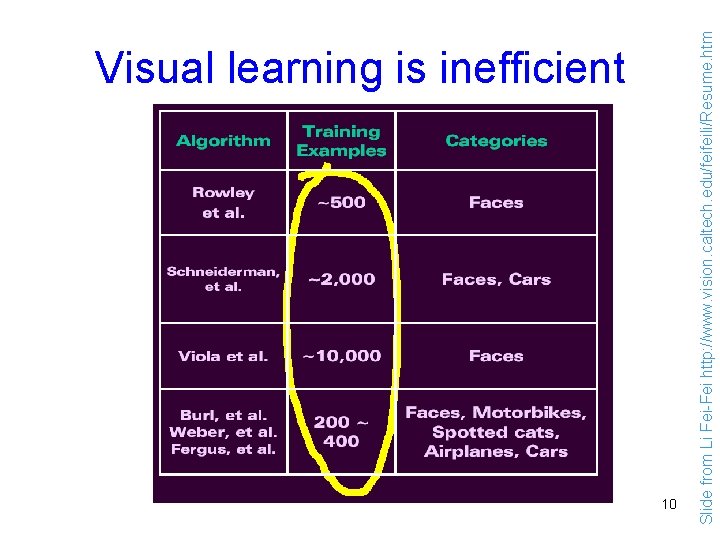

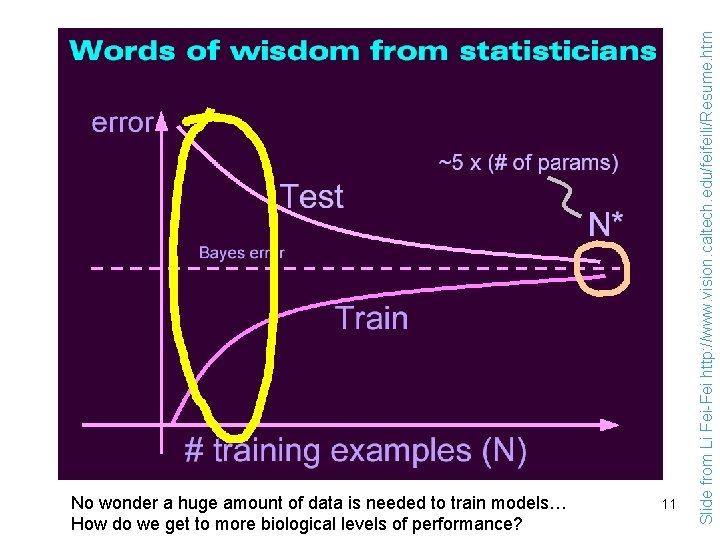

Visual learning is inefficient

Slide from Li Fei-Fei http://www.vision.caltech.edu/feifeili/Resume.htm

No wonder a huge amount of data is needed to train models… How do we get to more biological levels of performance?

Slide from Li Fei-Fei http://www.vision.caltech.edu/feifeili/Resume.htm

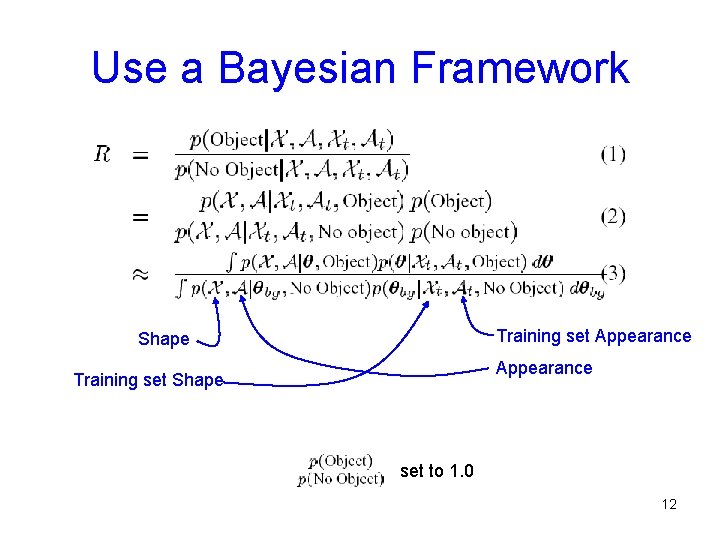

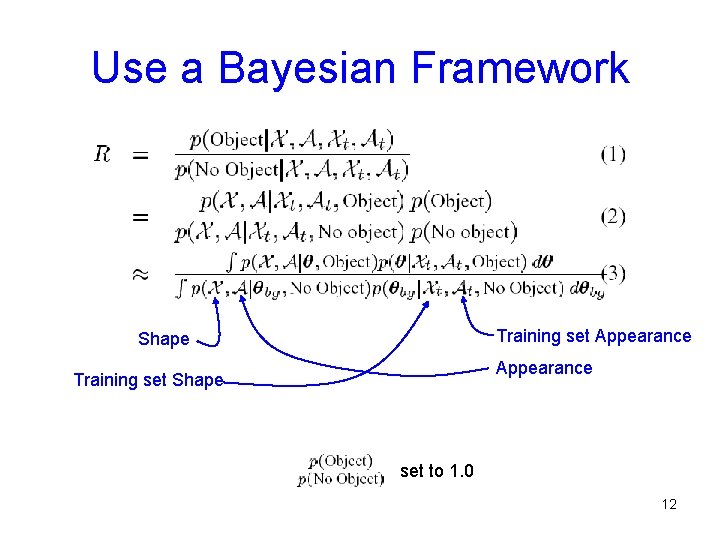

Use a Bayesian Framework

[Figure labels: training set, appearance, shape; one annotation reads "set to 1.0"]

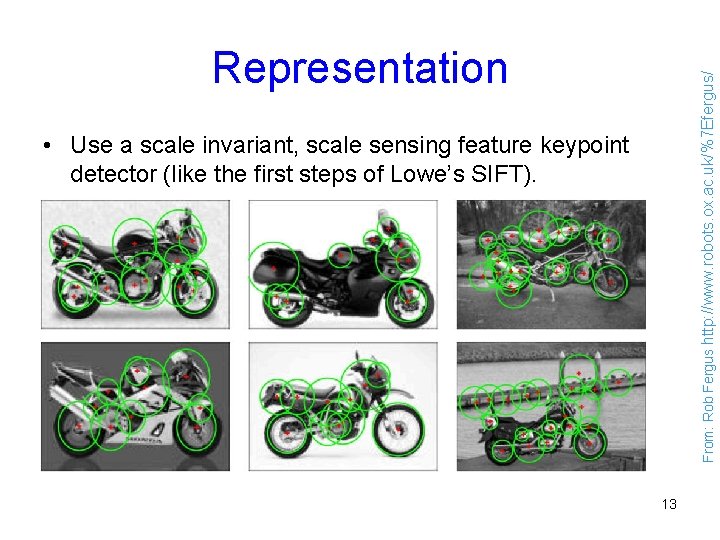

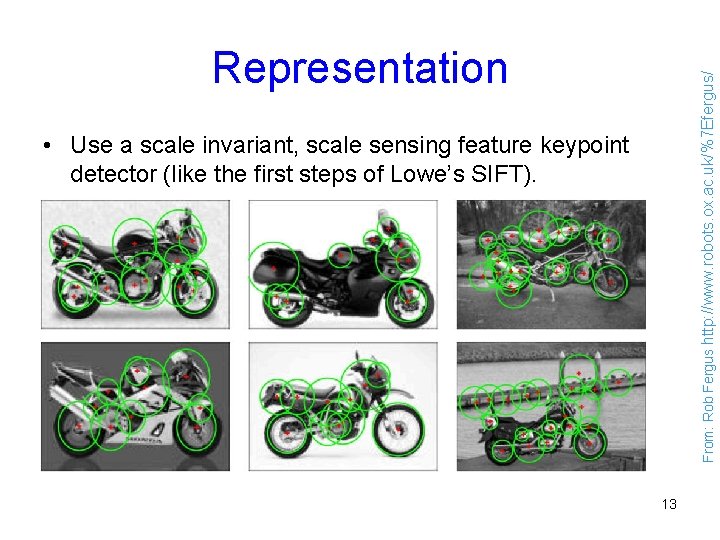

Representation

- Use a scale-invariant, scale-sensing feature keypoint detector (like the first steps of Lowe’s SIFT).

From: Rob Fergus http://www.robots.ox.ac.uk/%7Efergus/

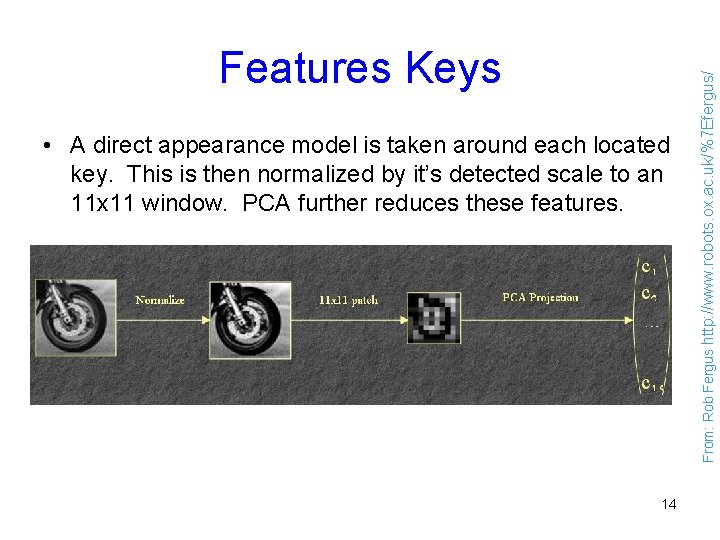

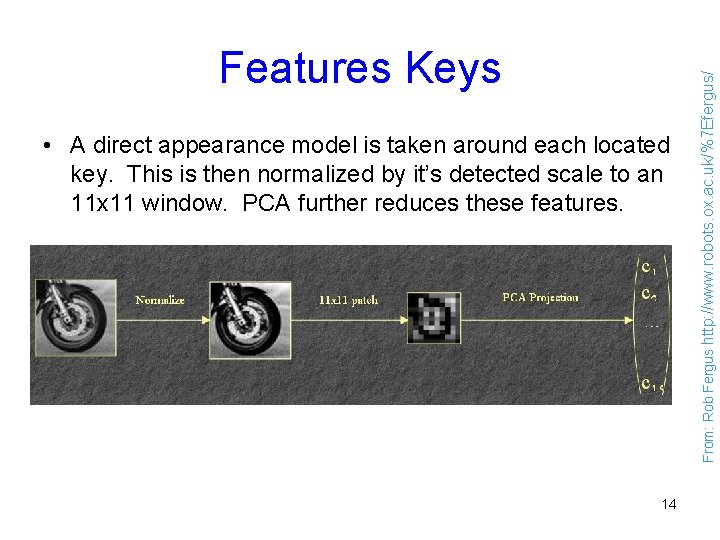

Features: Keys

- A direct appearance model is taken around each located key. This is then normalized by its detected scale to an 11 x 11 window. PCA further reduces these features.

From: Rob Fergus http://www.robots.ox.ac.uk/%7Efergus/
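A rough sketch of this feature pipeline is shown below. Several details are assumptions made for illustration and are not stated on the slide: the patch spans one detected scale on each side of the keypoint, resampling is nearest-neighbor, and the number of PCA components `k` is left to the caller. The function names are hypothetical.

```python
import numpy as np

def extract_patch(image, cx, cy, scale, size=11):
    """Sample a size x size window around (cx, cy), covering +/- scale pixels."""
    offsets = np.linspace(-scale, scale, size)
    # Nearest-neighbor sampling, clamped to the image borders.
    xs = np.clip(np.rint(cx + offsets).astype(int), 0, image.shape[1] - 1)
    ys = np.clip(np.rint(cy + offsets).astype(int), 0, image.shape[0] - 1)
    return image[np.ix_(ys, xs)].astype(float)

def pca_reduce(patches, k):
    """Project flattened patches onto their top-k principal components.

    patches: (n, size, size) array. Returns (coefficients, mean, basis).
    """
    X = patches.reshape(len(patches), -1)
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are the principal directions, strongest first.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    basis = Vt[:k]
    return Xc @ basis.T, mean, basis
```

Each 11 x 11 patch becomes a 121-dimensional vector before PCA, so the reduction to `k` coefficients is what keeps the appearance model small.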

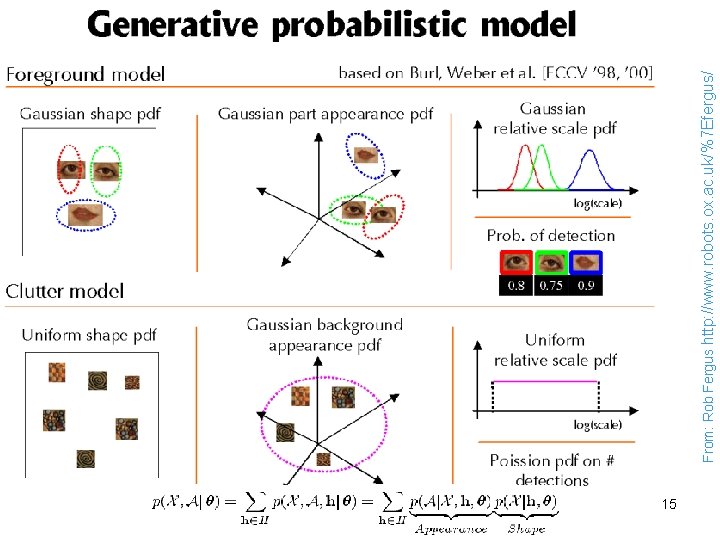

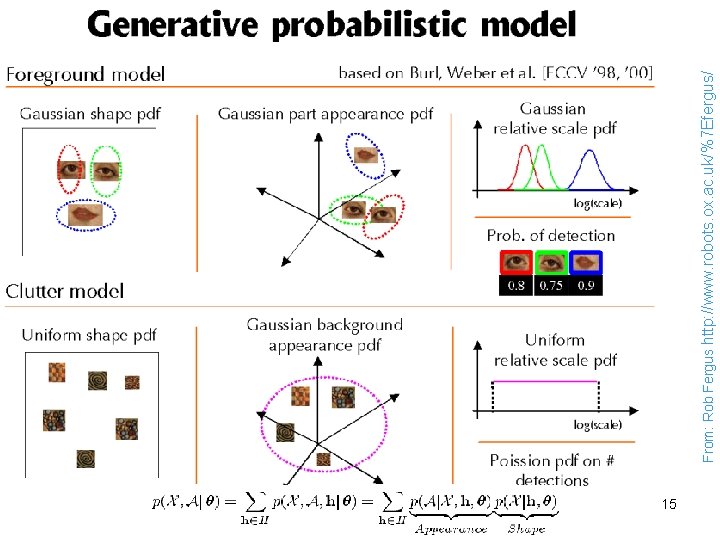

From: Rob Fergus http://www.robots.ox.ac.uk/%7Efergus/

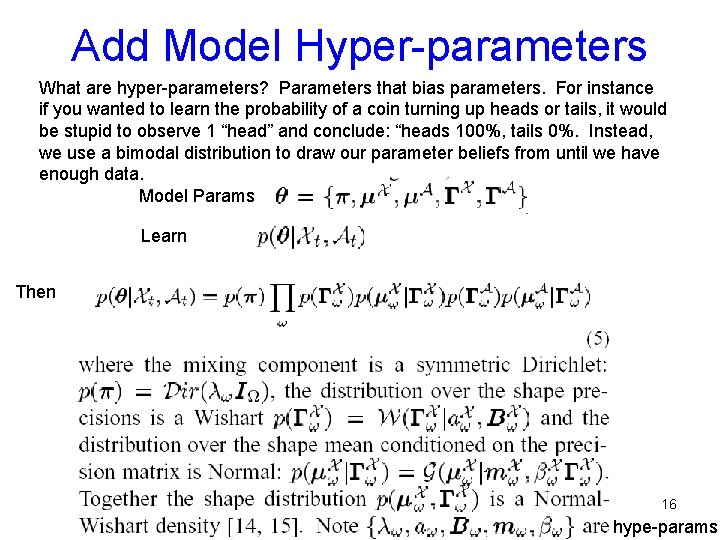

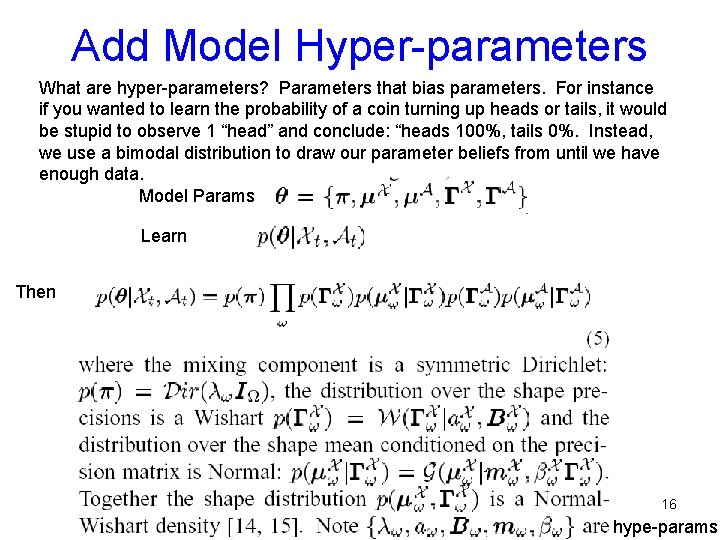

Add Model Hyper-parameters

What are hyper-parameters? Parameters that bias parameters. For instance, if you wanted to learn the probability of a coin turning up heads or tails, it would be stupid to observe 1 “head” and conclude: “heads 100%, tails 0%”. Instead, we use a bimodal distribution to draw our parameter beliefs from until we have enough data.

[Figure labels: hyper-params, then learn model params]
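The coin example can be made concrete with a small sketch. The Beta prior and the values `alpha = beta = 2` are assumptions chosen for illustration (the Beta family is convenient because its posterior has a closed form); the slide does not specify them.

```python
def mle_heads(heads, tails):
    """Maximum-likelihood estimate: trusts the observed counts completely."""
    return heads / (heads + tails)

def posterior_mean_heads(heads, tails, alpha=2.0, beta=2.0):
    """Posterior mean of P(heads) under an assumed Beta(alpha, beta) prior.

    alpha and beta are the hyper-parameters: they act like pseudo-counts
    that bias the estimate until real data outweighs them.
    """
    return (heads + alpha) / (heads + tails + alpha + beta)
```

After a single observed head, `mle_heads(1, 0)` gives 1.0 while `posterior_mean_heads(1, 0)` gives 0.6, and the two estimates move closer together as the number of flips grows.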

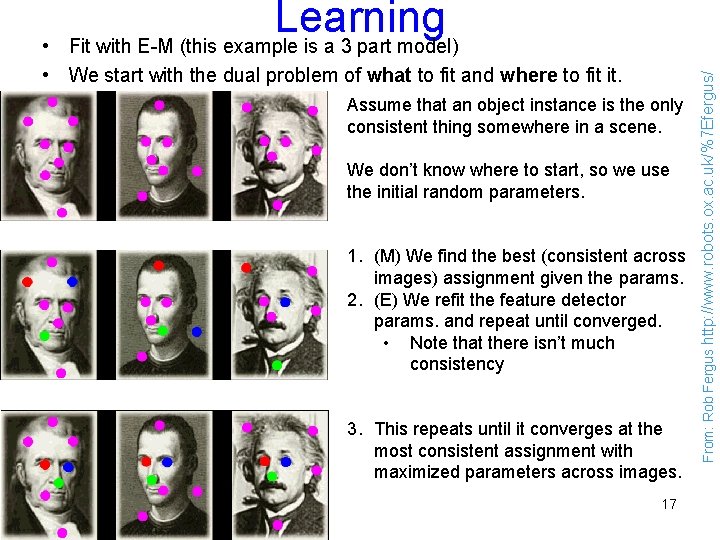

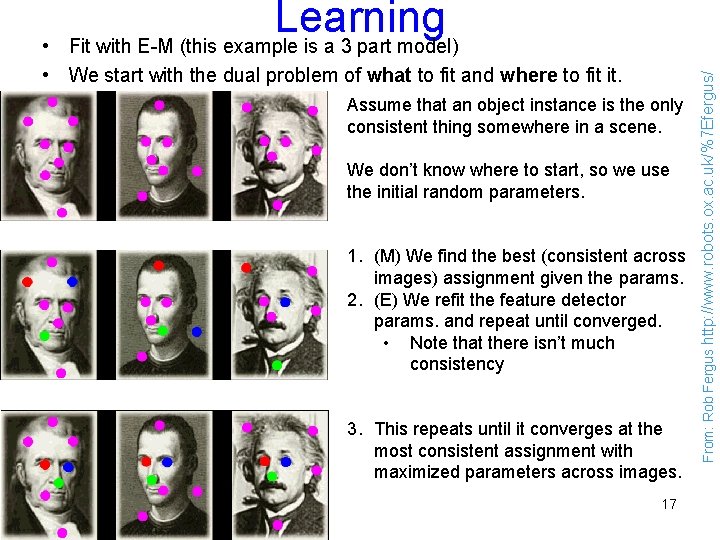

Learning: Fit with E-M (this example is a 3-part model)

- We start with the dual problem of what to fit and where to fit it. Assume that an object instance is the only consistent thing somewhere in a scene.
- We don’t know where to start, so we use initial random parameters.

1. (E) We find the best assignment (consistent across images) given the parameters.
2. (M) We refit the feature detector parameters.
   - Note that there isn’t much consistency
3. This repeats until it converges at the most consistent assignment, with maximized parameters across images.

From: Rob Fergus http://www.robots.ox.ac.uk/%7Efergus/
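The alternation between assigning and refitting can be seen in a much simpler setting than the part-based model on this slide. The sketch below is a toy stand-in, not the lecture's model: it fits two 1-D Gaussians, where in standard EM terminology the E-step computes soft assignments and the M-step refits the parameters. For reproducibility it starts from the data's minimum and maximum rather than from random parameters; the function name is hypothetical.

```python
import numpy as np

def em_two_gaussians(x, n_iter=50):
    """Fit a two-component 1-D Gaussian mixture with EM (toy illustration)."""
    x = np.asarray(x, dtype=float)
    mu = np.array([x.min(), x.max()])      # deterministic starting means
    sigma = np.array([x.std(), x.std()])   # broad starting widths
    pi = np.array([0.5, 0.5])              # equal starting mixing weights

    for _ in range(n_iter):
        # E-step: soft assignment of every point to each component.
        diff = (x[:, None] - mu) / sigma
        dens = pi * np.exp(-0.5 * diff ** 2) / (sigma * np.sqrt(2.0 * np.pi))
        resp = dens / dens.sum(axis=1, keepdims=True)

        # M-step: refit the parameters from the weighted points.
        nk = resp.sum(axis=0)
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk
        sigma = np.maximum(np.sqrt(var), 1e-6)  # guard against collapse
        pi = nk / len(x)

    return mu, sigma, pi
```

A fixed iteration count stands in for a convergence check; a fuller version would stop once the log-likelihood stops improving.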

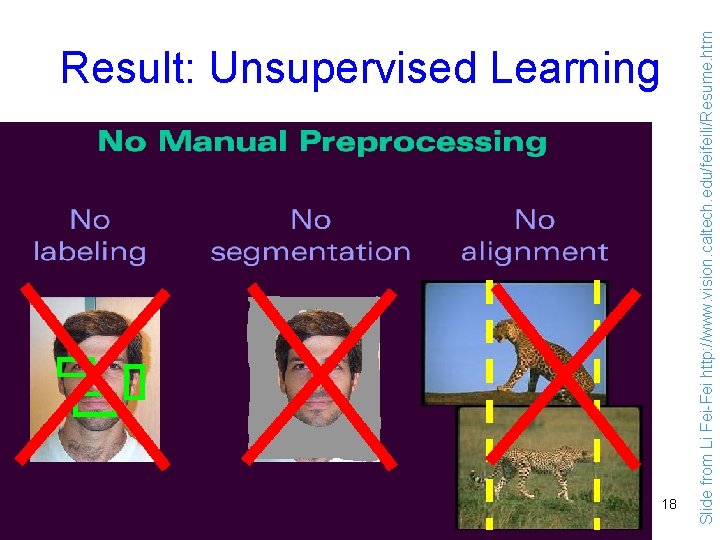

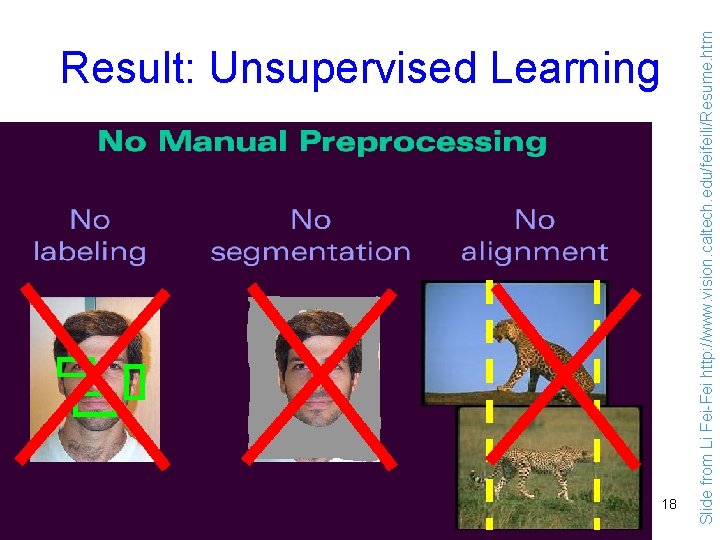

Result: Unsupervised Learning

Slide from Li Fei-Fei http://www.vision.caltech.edu/feifeili/Resume.htm

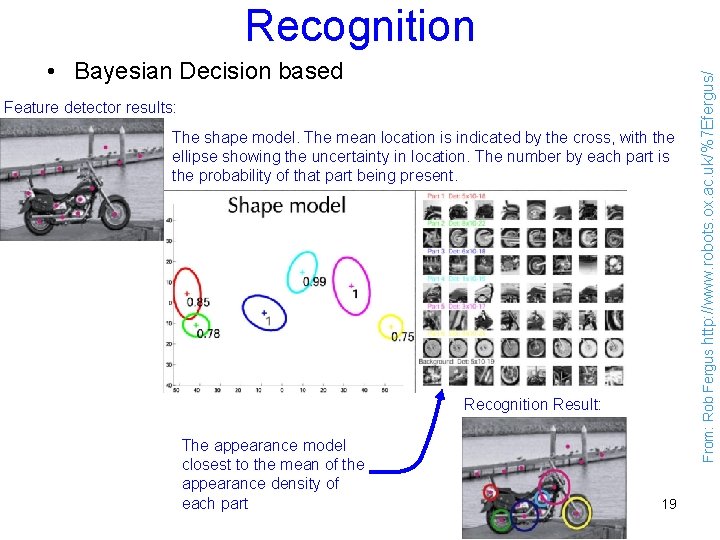

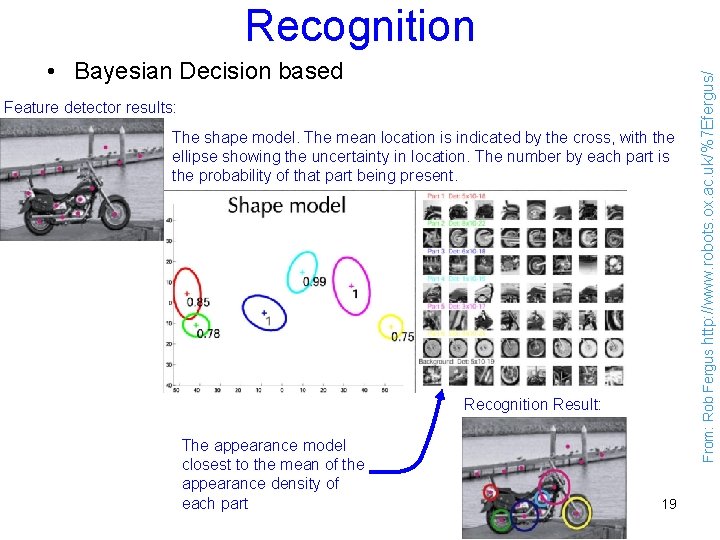

Recognition

- Bayesian decision based

Feature detector results:

- The shape model. The mean location is indicated by the cross, with the ellipse showing the uncertainty in location. The number by each part is the probability of that part being present.

Recognition result:

- The appearance model closest to the mean of the appearance density of each part

From: Rob Fergus http://www.robots.ox.ac.uk/%7Efergus/

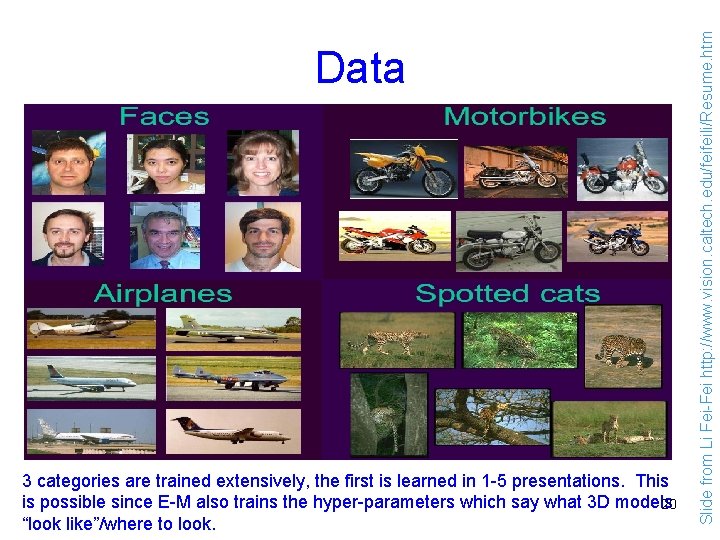

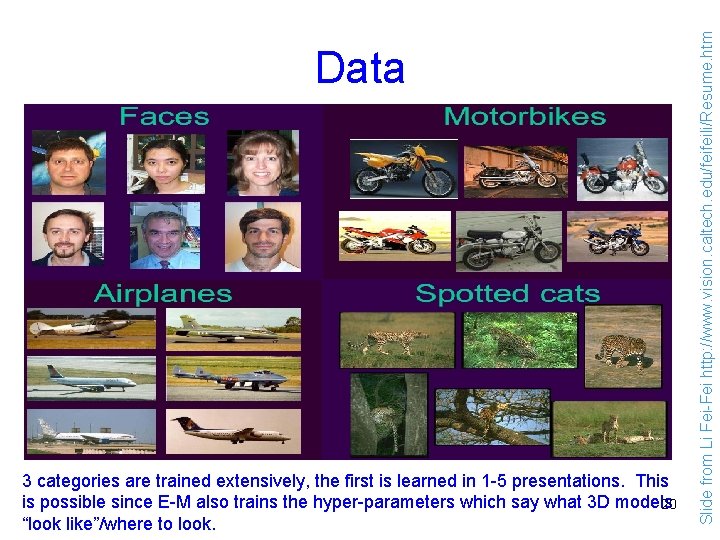

Data

Three categories are trained extensively; the first is learned in 1-5 presentations. This is possible since E-M also trains the hyper-parameters, which say what 3D models “look like” and where to look.

Slide from Li Fei-Fei http://www.vision.caltech.edu/feifeili/Resume.htm

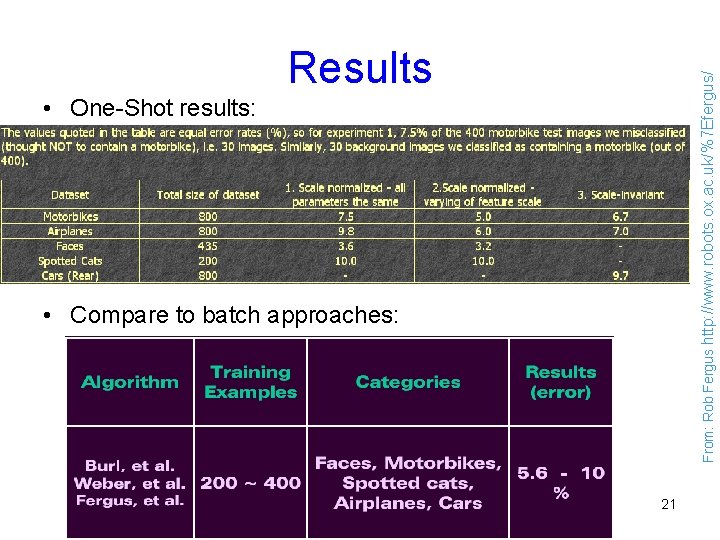

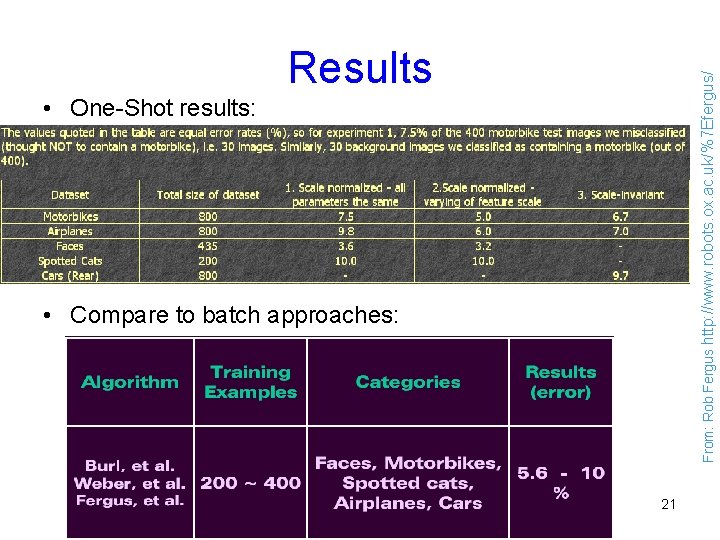

Results

- One-shot results:
- Compare to batch approaches:

From: Rob Fergus http://www.robots.ox.ac.uk/%7Efergus/

Using supervised classifiers for unsupervised learning.

- Will discuss in class.