The new search engine of SF

- Slides: 12

The new search engine of SF. Search engine optimization Nordic webseminar, Oslo, 7-8 February 2012. Markku Huttunen

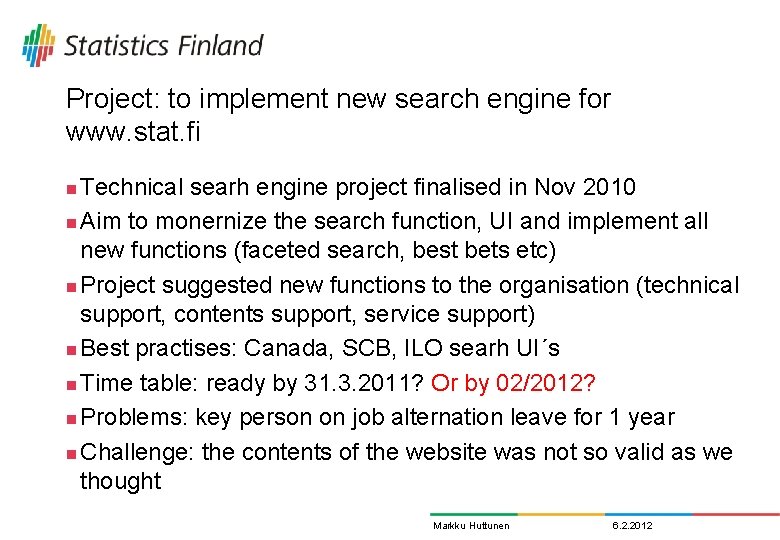

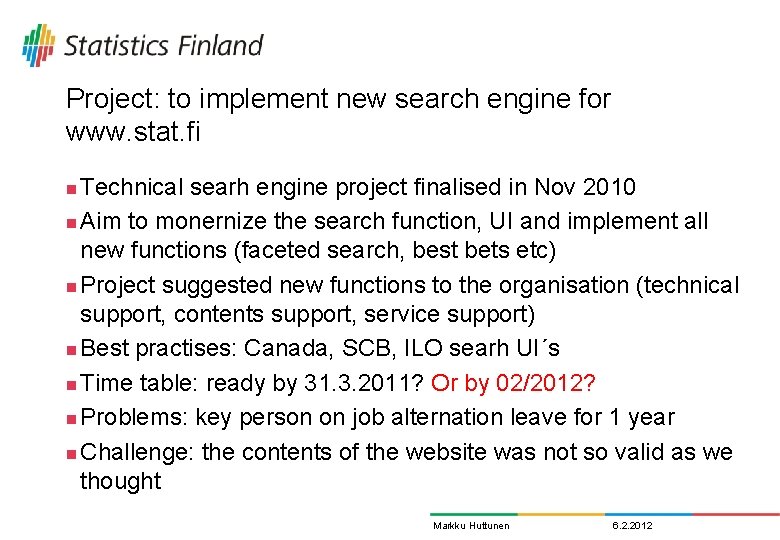

Project: to implement a new search engine for www.stat.fi

- The technical search engine project was finalised in Nov 2010
- Aim: to modernize the search function and the UI, and to implement all the new functions (faceted search, best bets, etc.)
- The project suggested new functions to the organisation (technical support, content support, service support)
- Best practices: the search UIs of Canada, SCB and ILO
- Timetable: ready by 31.3.2011? Or by 02/2012?
- Problem: a key person was on job alternation leave for 1 year
- Challenge: the content of the website was not as valid as we thought

Markku Huttunen 6. 2. 2012

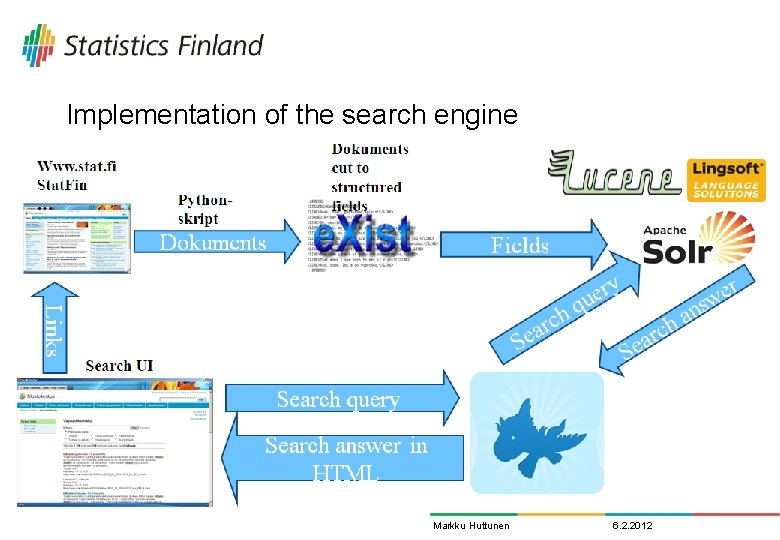

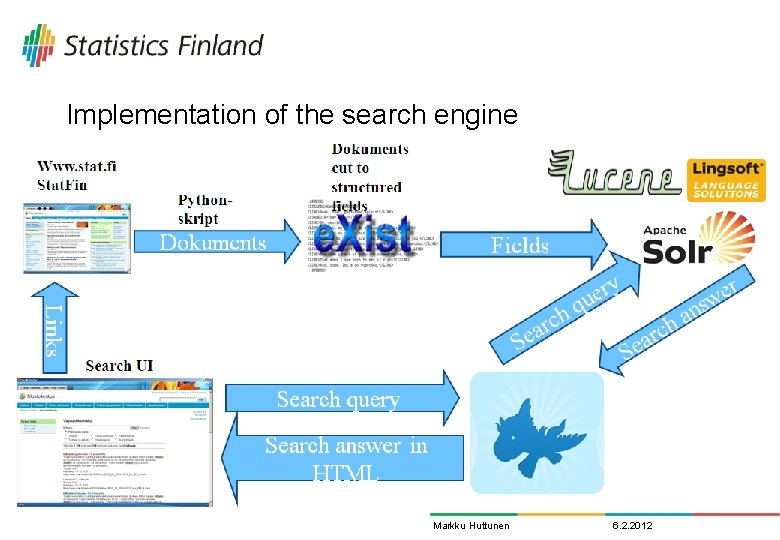

Implementation of the search engine

Information Services 24. 10. 2021 4

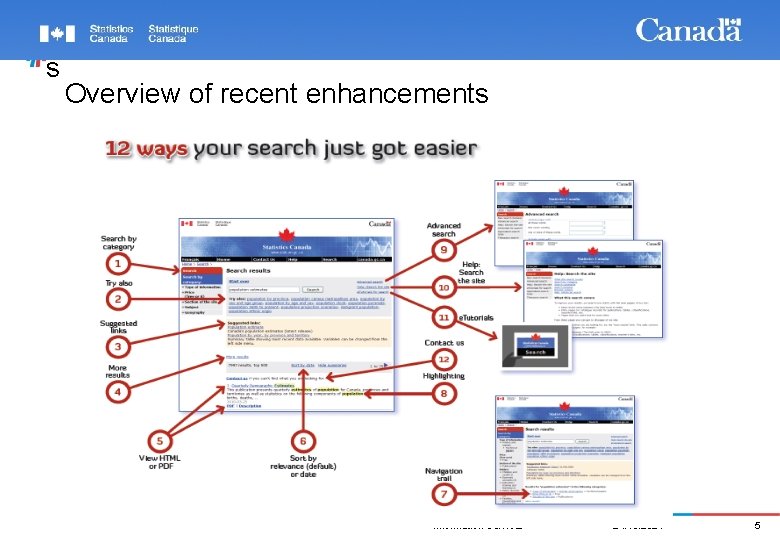

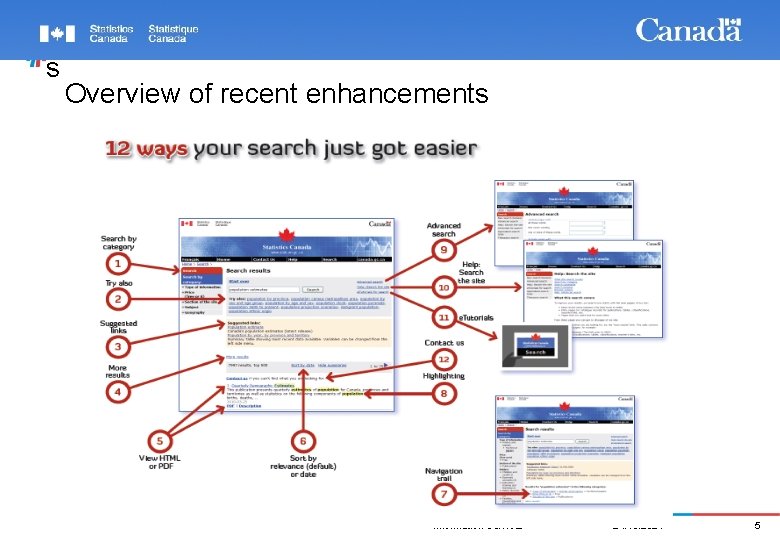

Overview of recent enhancements

The new search engine will have many faces…

- Deliver the free text search function to the website
- Deliver functionalities to the website
  - It should have something extra to offer compared with Google
  - A background tool to produce different listings based on document metadata
- The (technical) search engine is to be used in different functions: the website, the intranet, Findicator, the Tieto&Trendit magazine subsite and the XML meta database
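To make the idea of metadata-driven listings concrete, here is a minimal Python sketch that filters indexed documents by metadata fields and counts facet values, the basis of faceted search. The `Document` class, the `theme` and `doc_type` fields and the sample URLs are hypothetical; the slides do not describe SF's actual metadata schema or search engine product.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical indexed document: a URL, a title and free-form metadata."""
    url: str
    title: str
    metadata: dict = field(default_factory=dict)


def listing(docs, **filters):
    """Return the documents whose metadata matches every field=value filter."""
    return [d for d in docs
            if all(d.metadata.get(key) == value for key, value in filters.items())]


def facet_counts(docs, facet):
    """Count documents per value of one metadata field (one facet)."""
    return dict(Counter(d.metadata[facet] for d in docs if facet in d.metadata))


if __name__ == "__main__":
    docs = [
        Document("/example/pop-release", "Population release",
                 {"theme": "population", "doc_type": "release"}),
        Document("/example/cpi-release", "Consumer price index release",
                 {"theme": "prices", "doc_type": "release"}),
        Document("/example/pop-table", "Population table",
                 {"theme": "population", "doc_type": "table"}),
    ]
    # A "population" listing, then the document-type facet over all documents.
    print([d.title for d in listing(docs, theme="population")])
    print(facet_counts(docs, "doc_type"))
```

The same two operations serve both uses named on the slide: a listing page is a fixed filter, while a faceted search UI shows the counts next to each filter option.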

Optimizing the search index

- What files to index and what files to exclude from the index?
  - 140 000 HTML files, of which about 105 000 are indexed at the moment
  - Classifications: 40 000 files; concepts and definitions: 5 000 files
- What file types: HTML, PX, PDF, dynamic pages, (XML)
- We need a spider and rules for the spider
- The logic of our spider is more complex than Google's
  - The Google spider just follows the links and indexes everything except pages marked "noindex"
  - We give our spider a list of valid files to index, based on clear rules
- The timing of the search function
  - Should new statistical releases be available at 9.00 sharp from the search function too?
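The difference between the two spiders can be sketched in Python: instead of following every link, the spider is handed an explicit list of files that pass fixed rules. The rules below (allowed file types, excluded path prefixes, a set of pages flagged `noindex`) and all paths are hypothetical illustrations; the slides only state that such rules exist, not what they are.

```python
from pathlib import PurePosixPath

# Hypothetical rules: file types named on the slide, plus made-up excluded areas.
ALLOWED_SUFFIXES = {".html", ".htm", ".px", ".pdf"}
EXCLUDED_PREFIXES = ("/drafts/", "/tmp/")


def is_indexable(path, noindex=frozenset()):
    """Apply the explicit rules to one site path."""
    if path in noindex:                      # page explicitly excluded
        return False
    if path.startswith(EXCLUDED_PREFIXES):   # whole areas kept out of the index
        return False
    return PurePosixPath(path).suffix.lower() in ALLOWED_SUFFIXES


def build_index_list(paths, noindex=frozenset()):
    """Return the sorted list of valid files handed to the spider."""
    return sorted(p for p in paths if is_indexable(p, noindex))


if __name__ == "__main__":
    site = ["/stats/a.html", "/stats/b.px", "/drafts/c.html",
            "/img/logo.png", "/stats/d.pdf", "/stats/old.html"]
    print(build_index_list(site, noindex=frozenset({"/stats/old.html"})))
    # prints ['/stats/a.html', '/stats/b.px', '/stats/d.pdf']
```

Dynamic pages usually have no file suffix, so a real rule set would need to list them separately rather than rely on the suffix check.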

Optimizing the relevance of the results

- The contents and structures of the files
  - The structure of the documents is actively used, instead of indexing just the contents of the whole `<body>xyz</body>`
  - For example, tables are recognized within the body, so we can search tables that are inside a longer text document
  - In the search results we can deliver tables from the documents they are extracted from
- Problem: there have been several problems with the quality of the files
  - The structure and the metadata of the files have to be repaired
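Recognizing tables inside a document body can be sketched with Python's standard `html.parser` module: each `<table>` is pulled out as rows of cell text and paired with the URL of its source page, so a search hit on the table can link back to the document it came from. This is an illustrative sketch, not SF's implementation, and the function names are hypothetical; it ignores nested tables, `colspan`/`rowspan` and omitted end tags, which is exactly where poor file quality would hurt.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect each <table> in a page as a list of rows of cell text."""

    def __init__(self):
        super().__init__()
        self.tables = []      # finished tables
        self._table = None    # table currently being parsed
        self._row = None      # row currently being parsed
        self._cell = None     # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self._table = []
        elif tag == "tr" and self._table is not None:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Text outside table cells (the surrounding article) is ignored here.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self._table.append(self._row)
            self._row = None
        elif tag == "table" and self._table is not None:
            self.tables.append(self._table)
            self._table = None


def extract_tables(url, html):
    """Return (source_url, table) pairs so a hit can link back to its document."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return [(url, table) for table in parser.tables]
```

Each extracted table can then be indexed as its own search document while keeping the source URL as a field.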

Optimizing the results using the human factor

- The top 20 searches were analyzed (Google and SF search)
  - The top 20 searches account for the largest share of search incidents
  - The top search terms are constantly the same
- Based on the analysis we have decided the best answers to some of the most used search terms
  - For example, for the search term "population" the population theme page will be forced to be the first result link
- Try also
  - Based on a list of "synonyms", some hints are given: "inflation" -> "CPI"
- Key words
  - Suggested links to other relevant statistics are based on a list of 1 000 common key words assigned to all statistics
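The three human-curated mechanisms on this slide (forced best answers, "try also" hints and keyword-based suggestions) reduce to lookup tables maintained by editors. A minimal Python sketch; the table contents, URLs and function names are hypothetical, and the engine's own ranking is taken as a given list of result URLs.

```python
# Hypothetical editor-maintained tables; SF's actual lists are not in the slides.
BEST_BETS = {"population": "/example/population-theme"}
HINTS = {"inflation": "CPI"}


def normalize(term):
    """Lower-case and collapse whitespace so 'Population ' matches 'population'."""
    return " ".join(term.lower().split())


def apply_human_factor(query, engine_results):
    """Force the best bet to the top and return an optional 'try also' hint."""
    key = normalize(query)
    results = list(engine_results)
    best = BEST_BETS.get(key)
    if best is not None:
        # Drop any duplicate of the best bet further down, then pin it first.
        results = [best] + [url for url in results if url != best]
    return results, HINTS.get(key)


def related_statistics(name, keywords_by_stat, limit=5):
    """Suggest other statistics ranked by how many keywords they share with `name`."""
    own = keywords_by_stat.get(name, set())
    scored = [(len(own & kws), other)
              for other, kws in keywords_by_stat.items()
              if other != name and own & kws]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [other for _, other in scored[:limit]]


if __name__ == "__main__":
    print(apply_human_factor("Population", ["/example/x", "/example/population-theme"]))
    print(apply_human_factor("inflation", ["/example/y"]))
```

Keeping these tables outside the ranking code means editors can update them after each review of the top searches without touching the engine.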

Search engine optimization of the SF website

- The information architecture of the statistics pages is clear
  - A permanent home page for all statistics
- Our URLs are search engine friendly
  - And designed to be archived: Google loves old pages!
  - We don't change URLs when the publishing platform changes
  - Our URLs are short and human (and Google) readable
- Our main contents are search engine friendly
  - All statistical releases, tables, graphs etc. are archived with their original URLs
  - The structure of the statistical releases is standardised
  - All pages have an H1 main title, the title metadata tags are used, etc.
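Rules like these are easy to check automatically when pages are published. A minimal Python sketch using the standard `html.parser` and `urllib.parse` modules that flags pages without exactly one `<h1>`, with an empty `<title>`, or with a long or query-string URL; the function names and the 60-character URL limit are hypothetical choices, not SF rules.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class SeoAudit(HTMLParser):
    """Count <h1> elements and collect the text of <title>."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self.h1_count += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(url, html, max_url_length=60):
    """Return a list of problems; an empty list means the page passes these checks."""
    parser = SeoAudit()
    parser.feed(html)
    parser.close()
    problems = []
    if parser.h1_count != 1:
        problems.append(f"expected one <h1>, found {parser.h1_count}")
    if not parser.title.strip():
        problems.append("missing or empty <title>")
    if len(url) > max_url_length:  # hypothetical limit for "short" URLs
        problems.append(f"URL longer than {max_url_length} characters")
    if urlparse(url).query:        # e.g. ?id=123 is not human readable
        problems.append("URL contains a query string")
    return problems
```

Running such a check in the publishing pipeline keeps the standardised structure from drifting as new releases are added.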

Search engine optimization of the SF website (2)

- The 1 000 key words are listed on the statistics home pages
  - For Google as well as for human users
  - The key words are common library key words when possible (ALLÄRS, Allmän tesaurus på svenska, i.e. the general thesaurus in Swedish)
  - As part of the search engine work the key words were translated into English and Swedish; they will be published with the new search engine
  - The same key words are used by the SF search engine to provide more functionalities
- The file-based PX-Web database is search engine friendly
- We are trying to follow W3C standards whenever we can
- We use the "noindex" command
  - We have "tested" that Google really follows the command
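One way to run the kind of test mentioned on this slide is to first list which pages actually carry a robots `noindex` directive, then check that none of them show up in Google. A minimal Python sketch that reads `<meta name="robots">` and the Google-specific `<meta name="googlebot">` tags; it does not look at the `X-Robots-Tag` HTTP header, which can also carry `noindex`, and the function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lower-cases tag and attribute names; values can be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for token in attributes.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html):
    """True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


def pages_to_verify(pages):
    """Given {url: html}, list the URLs that should be absent from Google's index."""
    return sorted(url for url, html in pages.items() if has_noindex(html))
```

The resulting URL list is what a manual or scripted check against Google's results would then go through.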

Search engine optimization: what more could be done?

- The top 20 search terms should be analyzed to check what could be done better
- Keep the 50 000 monthly search terms alive as well
- We should open some of our contents to be redistributed (links)
- We have used SEO services only in a couple of limited cases
- We haven't used Google AdWords
- Shorten some of the titles of the statistical releases
- Standardize the statistical releases a bit more
- Shorten some of the statistical releases: better web writing
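Analyzing the top search terms starts from the search log. A minimal Python sketch, assuming the log is available as a plain list of query strings (a hypothetical format; `top_terms_share` is likewise a hypothetical name): it normalizes the terms, returns the n most frequent ones and the share of all searches they account for. The remaining share is the long tail of other monthly terms.

```python
from collections import Counter


def top_terms_share(queries, n=20):
    """Return the n most frequent normalized terms and their share of all searches."""
    # Normalize case and whitespace so "Population " and "population" count together.
    counts = Counter(" ".join(q.lower().split()) for q in queries if q.strip())
    total = sum(counts.values())
    top = counts.most_common(n)
    share = sum(count for _, count in top) / total if total else 0.0
    return top, share


if __name__ == "__main__":
    log = ["population", "Population", "CPI", "inflation",
           "population ", "cpi", "unemployment"]
    top, share = top_terms_share(log, n=2)
    print(top)  # [('population', 3), ('cpi', 2)]
    print(f"top terms: {share:.0%} of searches, long tail: {1 - share:.0%}")
```

Tracking the share month by month shows whether the top terms stay "constantly the same" and how much traffic the long tail carries.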
