Text/Document Categorization Part I
CSC 575 Intelligent Information Retrieval

- Slides: 20

Text Categorization
- Assigning documents to a fixed set of categories
  - Special case of classification in machine learning
- Applications:
  - Web pages / documents
    - Recommending
    - Automatic bookmarking
    - Yahoo-like directories
  - Micro-blogs / Social Media
    - Recommending posts
    - Categorizing items based on social tags
  - News articles
    - Personalized news recommendation
  - Email messages
    - Routing / Folderizing
    - Spam filtering

Classification Task
- Given:
  - A description of an instance, x ∈ X, where X is the instance language or feature space.
    - Typically, x is a row in a table, with the instance/feature space described in terms of features or attributes.
  - A fixed set of class or category labels: C = {c1, c2, …, cn}
- The classification task is to determine:
  - The class/category of x: c(x) ∈ C, where c(x) is a function whose domain is X and whose range is C.

Learning for Classification
- Training examples:
  - An instance x ∈ X, paired with its correct class label c(x): <x, c(x)>, for an unknown classification function c.
- Given a set of training examples, D:
  - Find a hypothesized classification function, h(x), such that h(x) = c(x) for all training instances (i.e., for all <x, c(x)> in D). This is called consistency.

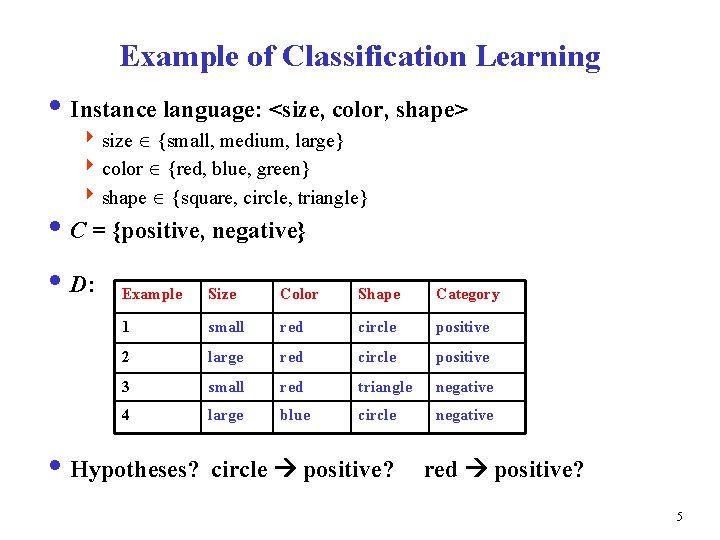

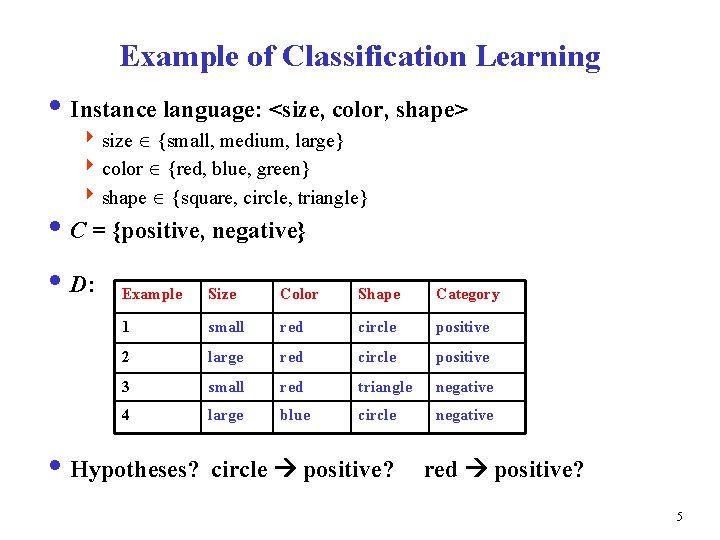

Example of Classification Learning
- Instance language: <size, color, shape>
  - size ∈ {small, medium, large}
  - color ∈ {red, blue, green}
  - shape ∈ {square, circle, triangle}
- C = {positive, negative}
- D:

| Example | Size | Color | Shape | Category |
|---|---|---|---|---|
| 1 | small | red | circle | positive |
| 2 | large | red | circle | positive |
| 3 | small | red | triangle | negative |
| 4 | large | blue | circle | negative |

- Hypotheses? circle → positive? red → positive?
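To make "consistency" concrete, here is a minimal sketch that tests candidate hypotheses against D. It assumes each hypothesis reads as "instances matching the condition are positive, all others negative"; the third candidate (red and circle) is an extra illustration not listed on the slide, and all names are hypothetical.

```python
# Training set D from the example: (size, color, shape) -> category
D = [
    (("small", "red", "circle"), "positive"),
    (("large", "red", "circle"), "positive"),
    (("small", "red", "triangle"), "negative"),
    (("large", "blue", "circle"), "negative"),
]


def is_consistent(h, examples):
    """True if h(x) == c(x) for every training pair <x, c(x)>."""
    return all(h(x) == c for x, c in examples)


# Assumption: a hypothesis labels matching instances positive, everything else negative.
hypotheses = {
    "circle -> positive": lambda x: "positive" if x[2] == "circle" else "negative",
    "red -> positive": lambda x: "positive" if x[1] == "red" else "negative",
    "red and circle -> positive": lambda x: (
        "positive" if x[1] == "red" and x[2] == "circle" else "negative"
    ),
}

for name, h in hypotheses.items():
    print(f"{name}: consistent = {is_consistent(h, D)}")
```

"circle -> positive" fails on example 4 (a blue circle is negative) and "red -> positive" fails on example 3 (a red triangle is negative); only the conjunction agrees with all four examples.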

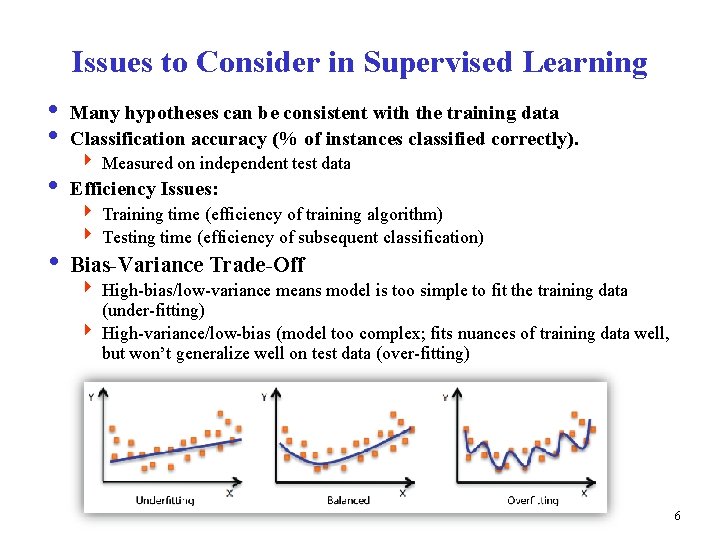

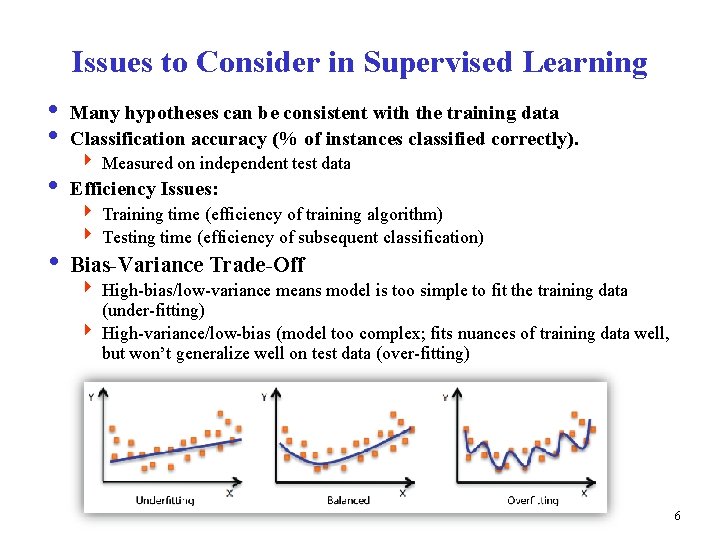

Issues to Consider in Supervised Learning
- Many hypotheses can be consistent with the training data.
- Classification accuracy (% of instances classified correctly)
  - Measured on independent test data
- Efficiency issues:
  - Training time (efficiency of the training algorithm)
  - Testing time (efficiency of subsequent classification)
- Bias-variance trade-off:
  - High bias / low variance: the model is too simple to fit the training data (under-fitting).
  - High variance / low bias: the model is too complex; it fits the nuances of the training data well but won't generalize well to test data (over-fitting).

Learning for Text Categorization
- Common algorithms for text/document categorization:
  - Nearest Neighbor (case based)
  - Relevance Feedback (Rocchio)
  - Bayesian (naïve)
  - Neural networks
  - Support Vector Machines (SVM)

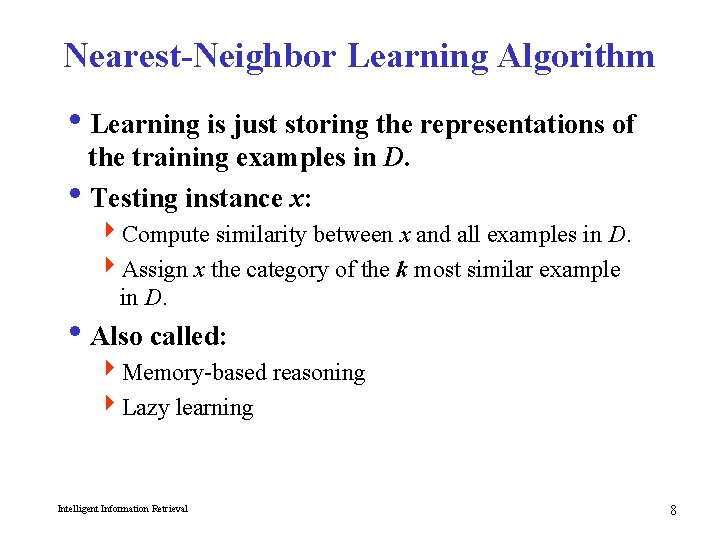

Nearest-Neighbor Learning Algorithm
- Learning is just storing the representations of the training examples in D.
- Testing instance x:
  - Compute the similarity between x and all examples in D.
  - Assign x the majority category of the k most similar examples in D (for k = 1, the category of the single most similar example).
- Also called:
  - Memory-based reasoning
  - Lazy learning
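The "lazy learning" idea can be sketched as a class whose `fit` only memorizes D and whose `predict` does all the work. This is a minimal illustration, assuming a pluggable similarity function (higher means more similar); the class name and the toy 2-D points are hypothetical.

```python
import math
from collections import Counter


class NearestNeighbor:
    """Lazy learner: training stores the examples; all computation happens at test time."""

    def __init__(self, similarity, k=1):
        self.similarity = similarity  # callable(a, b) -> float, larger = more similar
        self.k = k
        self.examples = []

    def fit(self, examples):
        # "Learning" is just memorizing the <x, c(x)> pairs.
        self.examples = list(examples)
        return self

    def predict(self, x):
        # Compare x against every stored example (a linear scan over D).
        ranked = sorted(self.examples, key=lambda ex: self.similarity(x, ex[0]), reverse=True)
        top_k = [label for _, label in ranked[: self.k]]
        # Majority category among the k most similar examples (ties: first seen wins).
        return Counter(top_k).most_common(1)[0][0]


# Hypothetical toy data: 2-D points labeled "A" or "B"; similarity = negative Euclidean distance.
train = [((0, 0), "A"), ((0, 1), "A"), ((1, 0), "A"), ((5, 5), "B"), ((5, 6), "B")]
knn = NearestNeighbor(similarity=lambda a, b: -math.dist(a, b), k=3).fit(train)
print(knn.predict((0.5, 0.5)))  # A
print(knn.predict((5, 5.4)))    # B
```

Turning a distance into a similarity by negating it keeps the "sort by decreasing similarity" logic identical for both kinds of metric.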

Similarity Metrics
- The nearest-neighbor method depends on a similarity (or distance) metric.
- The simplest for a continuous m-dimensional instance space is Euclidean distance.
- The simplest for an m-dimensional binary instance space is Hamming distance (the number of feature values that differ).
- For text, cosine similarity of TF-IDF weighted vectors is typically most effective.
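The three metrics named above can each be written in a few lines. A minimal sketch over plain Python sequences (function names are illustrative):

```python
import math


def euclidean_distance(a, b):
    # Straight-line distance in a continuous m-dimensional space.
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def hamming_distance(a, b):
    # Number of positions whose feature values differ.
    return sum(ai != bi for ai, bi in zip(a, b))


def cosine_similarity(a, b):
    # Cosine of the angle between the vectors; 0.0 if either vector is all zeros.
    dot = sum(ai * bi for ai, bi in zip(a, b))
    norm_a = math.sqrt(sum(ai * ai for ai in a))
    norm_b = math.sqrt(sum(bi * bi for bi in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


print(euclidean_distance((0, 0), (3, 4)))          # 5.0
print(hamming_distance((1, 0, 1, 1), (1, 1, 0, 1)))  # 2
print(cosine_similarity((1, 0), (2, 0)))           # 1.0
```

Note that the first two are distances (smaller is closer) while cosine is a similarity (larger is closer), so a nearest-neighbor implementation must sort in the matching direction.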

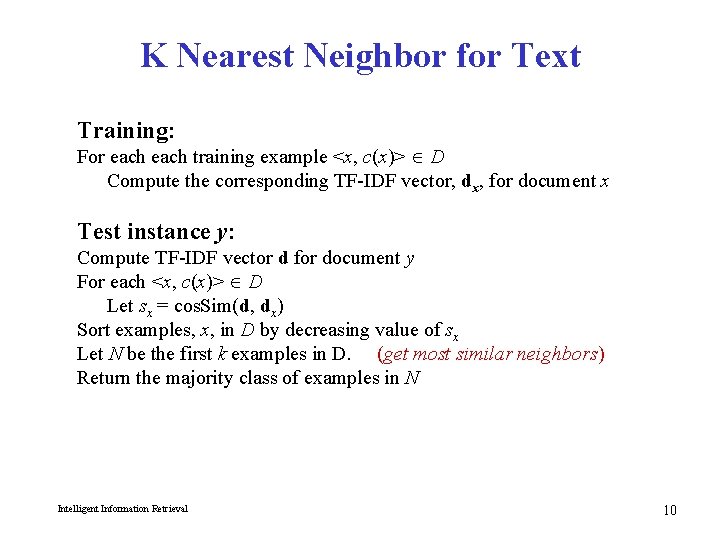

K Nearest Neighbor for Text
- Training:
  - For each training example <x, c(x)> ∈ D:
    - Compute the corresponding TF-IDF vector, dx, for document x.
- Test instance y:
  - Compute the TF-IDF vector d for document y.
  - For each <x, c(x)> ∈ D:
    - Let sx = cosSim(d, dx).
  - Sort the examples x in D by decreasing value of sx.
  - Let N be the first k examples in this sorted list (the most similar neighbors).
  - Return the majority class of the examples in N.
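The training and test steps above can be sketched in Python with sparse dictionaries as vectors. This is an illustration under stated assumptions, not a reference implementation: tokenization is a naive lowercase whitespace split, weights are raw TF times idf(t) = log(N / df(t)) computed on the training set, and the helper names and toy documents are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    # Assumption: lowercase + whitespace split; a real system would also
    # strip punctuation, remove stop words, and possibly stem.
    return text.lower().split()


def build_idf(token_lists):
    # idf(t) = log(N / df(t)), computed over the training documents only.
    n = len(token_lists)
    df = Counter()
    for tokens in token_lists:
        df.update(set(tokens))
    return {term: math.log(n / count) for term, count in df.items()}


def tfidf_vector(tokens, idf):
    # Raw term frequency times IDF; terms never seen in training are dropped.
    tf = Counter(tokens)
    return {t: f * idf[t] for t, f in tf.items() if t in idf}


def cos_sim(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def knn_text_classify(train, test_text, k=3):
    """train: list of (text, label) pairs. Returns the majority label of the k nearest docs."""
    # Training: compute the TF-IDF vector d_x for every training document x.
    token_lists = [tokenize(text) for text, _ in train]
    idf = build_idf(token_lists)
    train_vecs = [tfidf_vector(tokens, idf) for tokens in token_lists]
    # Test: vectorize y, score every training doc, sort by decreasing similarity.
    d = tfidf_vector(tokenize(test_text), idf)
    scored = sorted(
        ((cos_sim(d, dx), label) for dx, (_, label) in zip(train_vecs, train)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    neighbors = [label for _, label in scored[:k]]  # N = the k most similar examples
    return Counter(neighbors).most_common(1)[0][0]


# Hypothetical toy training set.
train_docs = [
    ("cheap pills buy now", "spam"),
    ("buy cheap watches now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("project meeting notes", "ham"),
]
print(knn_text_classify(train_docs, "buy cheap pills", k=3))       # spam
print(knn_text_classify(train_docs, "monday meeting notes", k=3))  # ham
```

For brevity this sketch re-runs the training step on every call; in practice the IDF table and training vectors would be computed once and reused for all test documents.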

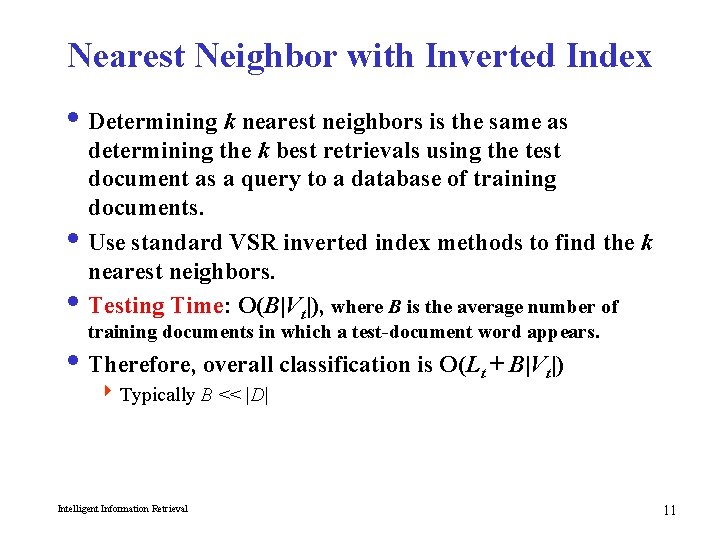

Nearest Neighbor with Inverted Index
- Determining the k nearest neighbors is the same as determining the k best retrievals when the test document is used as a query against a database of training documents.
- Use standard vector-space retrieval (VSR) inverted-index methods to find the k nearest neighbors.
- Testing time: O(B|Vt|), where B is the average number of training documents in which a test-document word appears and |Vt| is the number of distinct terms (vocabulary) of the test document.
- Therefore, overall classification is O(Lt + B|Vt|), where Lt is the number of tokens in the test document.
  - Typically B << |D|
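A minimal sketch of the inverted-index approach, assuming training documents are already sparse TF-IDF dicts (the function names and toy vectors are hypothetical). Only the postings lists of the test document's terms are visited, so training documents that share no term with it are never touched.

```python
import math
from collections import Counter, defaultdict


def build_inverted_index(train_vecs):
    """train_vecs: list of sparse {term: weight} dicts.
    Returns (term -> [(doc_id, weight)], list of document vector norms)."""
    index = defaultdict(list)
    norms = []
    for doc_id, vec in enumerate(train_vecs):
        for term, w in vec.items():
            index[term].append((doc_id, w))
        norms.append(math.sqrt(sum(w * w for w in vec.values())))
    return index, norms


def knn_via_index(query_vec, index, norms, labels, k=3):
    # Accumulate dot products posting by posting: each of the |Vt| query terms
    # touches about B postings, hence O(B|Vt|) work instead of O(|D||Vt|).
    scores = defaultdict(float)
    for term, qw in query_vec.items():
        for doc_id, w in index.get(term, []):
            scores[doc_id] += qw * w
    q_norm = math.sqrt(sum(w * w for w in query_vec.values()))
    # Cosine similarity for the candidate docs only, highest first.
    ranked = sorted(
        ((s / (q_norm * norms[doc]), doc) for doc, s in scores.items() if q_norm and norms[doc]),
        reverse=True,
    )
    top = [labels[doc] for _, doc in ranked[:k]]
    return Counter(top).most_common(1)[0][0] if top else None


# Hypothetical TF-IDF vectors for three training documents.
train_vecs = [{"cheap": 1.0, "pills": 2.0}, {"meeting": 1.5}, {"cheap": 0.5, "meeting": 0.5}]
labels = ["spam", "ham", "ham"]
index, norms = build_inverted_index(train_vecs)
print(knn_via_index({"pills": 1.0}, index, norms, labels, k=1))    # spam
print(knn_via_index({"meeting": 1.0}, index, norms, labels, k=3))  # ham
```

If fewer than k training documents share a term with the query, this sketch simply votes among the ones that do, and returns `None` when there are none.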

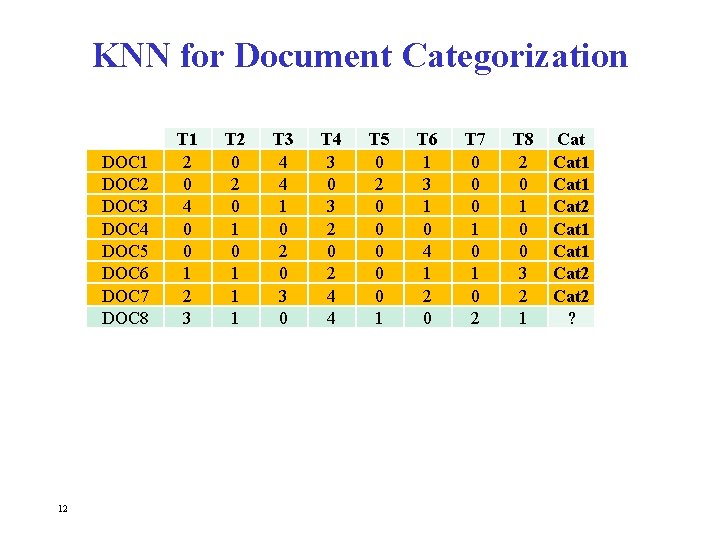

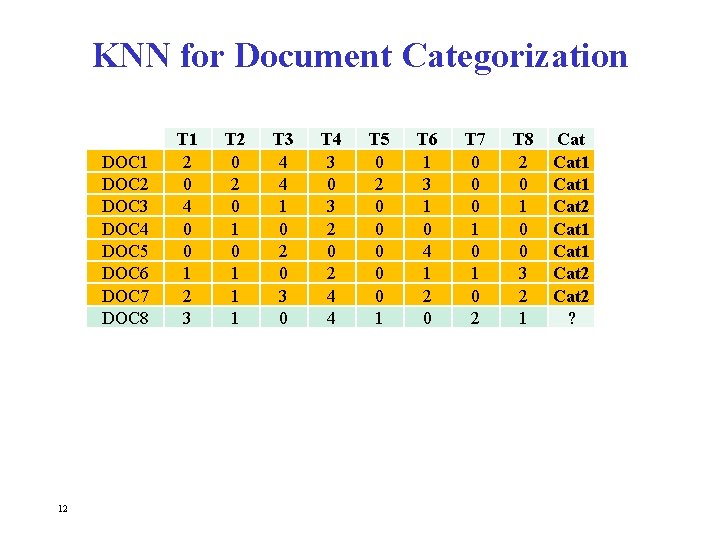

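KNN for Document Categorization

| | DOC 1 | DOC 2 | DOC 3 | DOC 4 | DOC 5 | DOC 6 | DOC 7 | DOC 8 |
|---|---|---|---|---|---|---|---|---|
| T1 | 2 | 0 | 4 | 0 | 0 | 1 | 2 | 3 |
| T2 | 0 | 2 | 0 | 1 | 0 | 1 | 1 | 1 |
| T3 | 4 | 4 | 1 | 0 | 2 | 0 | 3 | 0 |
| T4 | 3 | 0 | 3 | 2 | 0 | 2 | 4 | 4 |
| T5 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 1 |
| T6 | 1 | 3 | 1 | 0 | 4 | 1 | 2 | 0 |
| T7 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 2 |
| T8 | 2 | 0 | 1 | 0 | 0 | 3 | 2 | 1 |
| Cat | Cat 1 | Cat 1 | Cat 2 | Cat 1 | Cat 1 | Cat 2 | Cat 2 | ? |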

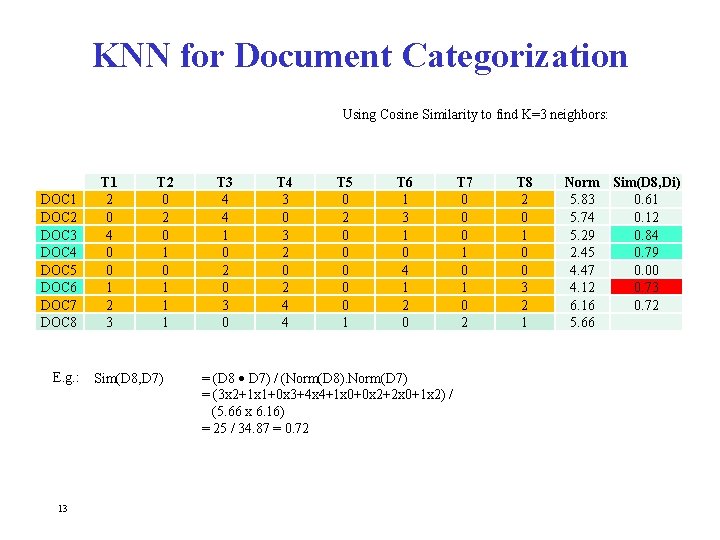

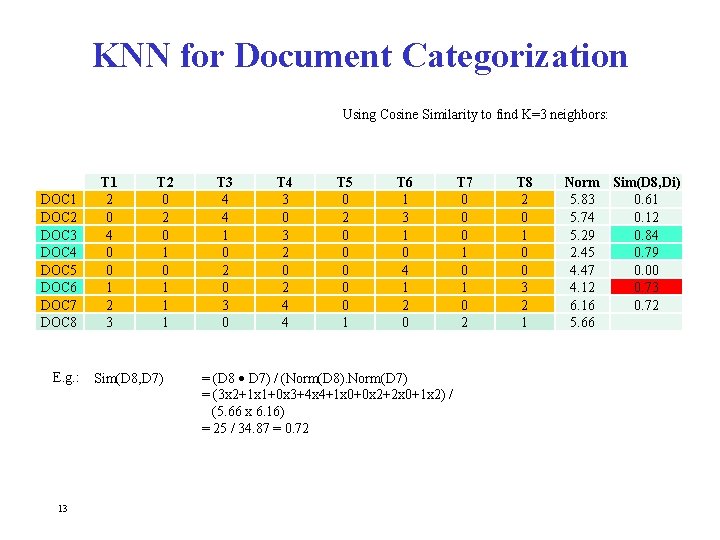

KNN for Document Categorization

Using cosine similarity to find K = 3 neighbors, e.g.:

$$\mathrm{Sim}(D_8, D_7) = \frac{D_8 \cdot D_7}{\mathrm{Norm}(D_8) \times \mathrm{Norm}(D_7)} = \frac{3 \times 2 + 1 \times 1 + 0 \times 3 + 4 \times 4 + 1 \times 0 + 0 \times 2 + 2 \times 0 + 1 \times 2}{5.66 \times 6.16} = \frac{25}{34.87} = 0.72$$

| | DOC 1 | DOC 2 | DOC 3 | DOC 4 | DOC 5 | DOC 6 | DOC 7 | DOC 8 |
|---|---|---|---|---|---|---|---|---|
| Norm | 5.83 | 5.74 | 5.29 | 2.45 | 4.47 | 4.12 | 6.16 | 5.66 |
| Sim(D8, Di) | 0.61 | 0.12 | 0.84 | 0.79 | 0.00 | 0.73 | 0.72 | |
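As a check on the arithmetic, this sketch recomputes the Norm and Sim(D8, Di) rows from the term counts of the example (vectors list T1 to T8 for each document, raw counts as on the slides) and picks the K = 3 nearest neighbors.

```python
import math

# Term counts T1..T8 for each training document in the example.
docs = {
    "DOC1": [2, 0, 4, 3, 0, 1, 0, 2],
    "DOC2": [0, 2, 4, 0, 2, 3, 0, 0],
    "DOC3": [4, 0, 1, 3, 0, 1, 0, 1],
    "DOC4": [0, 1, 0, 2, 0, 0, 1, 0],
    "DOC5": [0, 0, 2, 0, 0, 4, 0, 0],
    "DOC6": [1, 1, 0, 2, 0, 1, 1, 3],
    "DOC7": [2, 1, 3, 4, 0, 2, 0, 2],
}
doc8 = [3, 1, 0, 4, 1, 0, 2, 1]  # the document to classify


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def cos_sim(u, v):
    return sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))


for name, vec in docs.items():
    print(f"{name}: norm = {norm(vec):.2f}, Sim(D8, {name}) = {cos_sim(doc8, vec):.2f}")

# The K = 3 training documents most similar to DOC8.
top3 = sorted(docs, key=lambda name: cos_sim(doc8, docs[name]), reverse=True)[:3]
print("K=3 neighbors:", top3)
```

The three nearest neighbors come out as DOC3, DOC4 and DOC6; DOC7 (0.72) narrowly misses the cut behind DOC6 (0.73).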

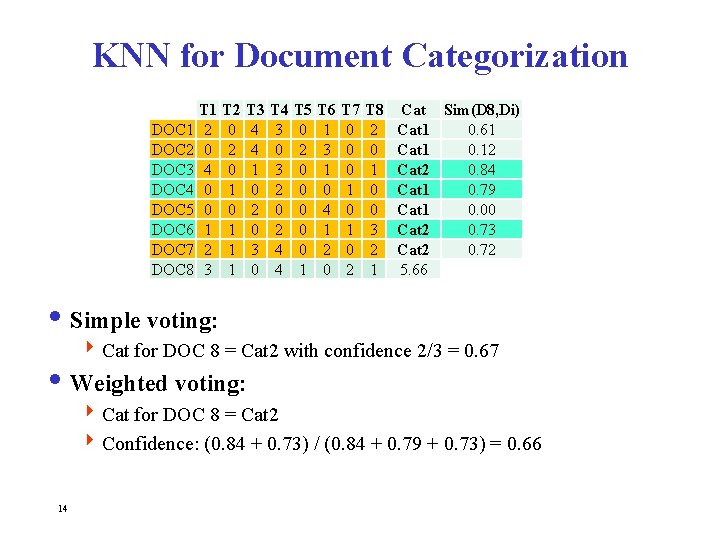

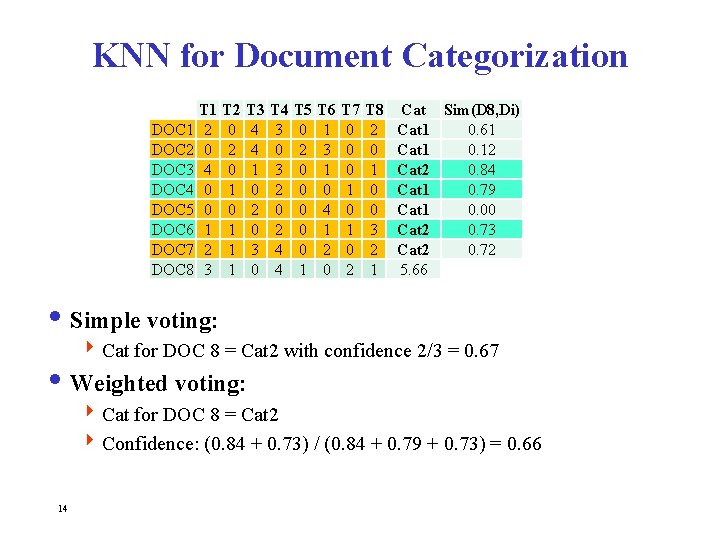

KNN for Document Categorization

| | DOC 1 | DOC 2 | DOC 3 | DOC 4 | DOC 5 | DOC 6 | DOC 7 |
|---|---|---|---|---|---|---|---|
| Cat | Cat 1 | Cat 1 | Cat 2 | Cat 1 | Cat 1 | Cat 2 | Cat 2 |
| Sim(D8, Di) | 0.61 | 0.12 | 0.84 | 0.79 | 0.00 | 0.73 | 0.72 |

- The K = 3 nearest neighbors of DOC 8 are DOC 3 (Cat 2), DOC 4 (Cat 1), and DOC 6 (Cat 2).
- Simple voting:
  - Cat for DOC 8 = Cat 2, with confidence 2/3 = 0.67
- Weighted voting:
  - Cat for DOC 8 = Cat 2
  - Confidence: (0.84 + 0.73) / (0.84 + 0.79 + 0.73) ≈ 0.66
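Both voting schemes differ only in how much each neighbor's vote counts. A minimal sketch using the similarities and categories from the table (the function name `knn_vote` is hypothetical):

```python
from collections import defaultdict

# Sim(D8, Di) and category of each training document, from the table.
scored = {
    "DOC1": (0.61, "Cat 1"),
    "DOC2": (0.12, "Cat 1"),
    "DOC3": (0.84, "Cat 2"),
    "DOC4": (0.79, "Cat 1"),
    "DOC5": (0.00, "Cat 1"),
    "DOC6": (0.73, "Cat 2"),
    "DOC7": (0.72, "Cat 2"),
}


def knn_vote(scored, k=3, weighted=False):
    """Return (category, confidence) from the k most similar training documents."""
    top = sorted(scored.values(), key=lambda pair: pair[0], reverse=True)[:k]
    totals = defaultdict(float)
    for sim, cat in top:
        # Simple voting: one vote per neighbor. Weighted voting: a vote worth its similarity.
        totals[cat] += sim if weighted else 1.0
    best = max(totals, key=totals.get)
    return best, totals[best] / sum(totals.values())


print(knn_vote(scored))                 # Cat 2 with confidence 2/3
print(knn_vote(scored, weighted=True))  # Cat 2 with confidence about 0.665
```

With the two-decimal similarities shown, the weighted confidence is 1.57 / 2.36 ≈ 0.665; computing with unrounded similarities gives ≈ 0.663.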

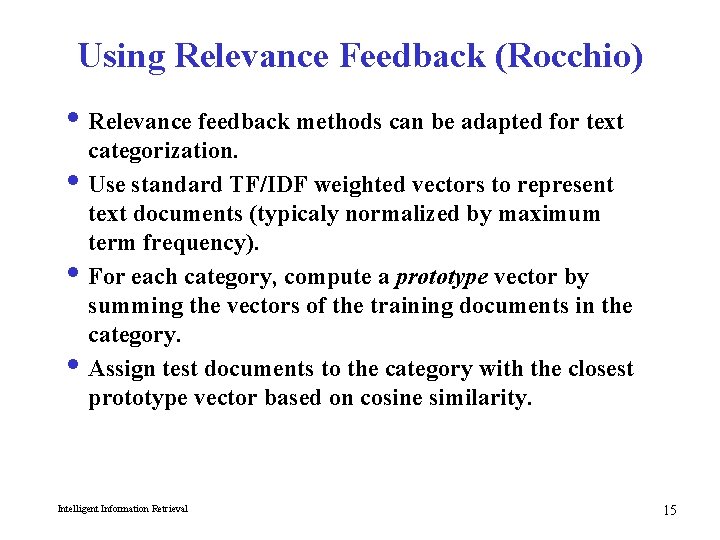

Using Relevance Feedback (Rocchio)
- Relevance feedback methods can be adapted for text categorization.
- Use standard TF-IDF weighted vectors to represent text documents (typically normalized by maximum term frequency).
- For each category, compute a prototype vector by summing the vectors of the training documents in the category.
- Assign test documents to the category with the closest prototype vector based on cosine similarity.

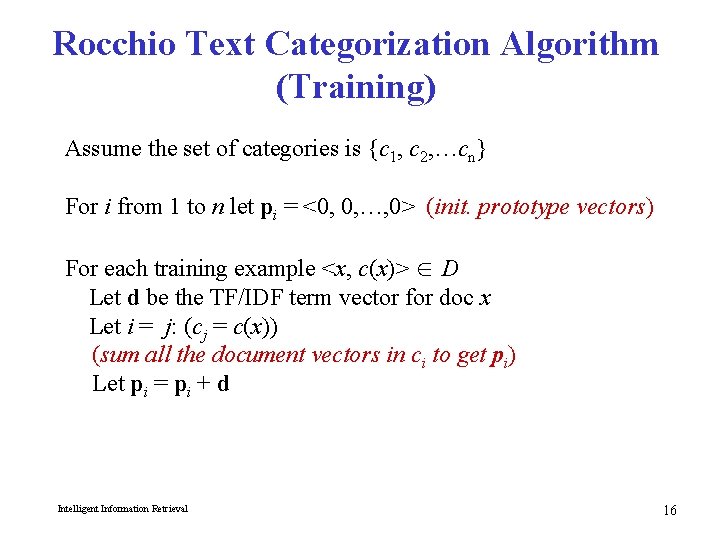

Rocchio Text Categorization Algorithm (Training)
- Assume the set of categories is {c1, c2, …, cn}.
- For i from 1 to n, let pi = <0, 0, …, 0> (initialize the prototype vectors).
- For each training example <x, c(x)> ∈ D:
  - Let d be the TF-IDF term vector for doc x.
  - Let i = j such that cj = c(x).
  - Let pi = pi + d (summing all the document vectors in ci gives pi).
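The training loop above can be sketched with sparse dictionaries as vectors. This assumes the TF-IDF vectors are already computed; the function name and the toy examples are hypothetical.

```python
from collections import defaultdict


def train_rocchio(examples):
    """examples: iterable of (vector, label) pairs, vectors as {term: weight} dicts.
    Returns one prototype per category: the sum of that category's document vectors."""
    prototypes = defaultdict(lambda: defaultdict(float))  # every p_i starts as the zero vector
    for vec, label in examples:
        proto = prototypes[label]  # p_i for the category c_i = c(x)
        for term, weight in vec.items():
            proto[term] += weight  # p_i = p_i + d
    return {label: dict(proto) for label, proto in prototypes.items()}


# Hypothetical TF-IDF vectors with their categories.
examples = [
    ({"cheap": 1.0, "pills": 2.0}, "spam"),
    ({"cheap": 3.0}, "spam"),
    ({"meeting": 1.0}, "ham"),
]
print(train_rocchio(examples))  # {'spam': {'cheap': 4.0, 'pills': 2.0}, 'ham': {'meeting': 1.0}}
```

Unlike the pseudocode, this sketch only creates prototypes for categories that appear in D; a category with no training examples would have an all-zero prototype, which cannot win under cosine similarity anyway.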

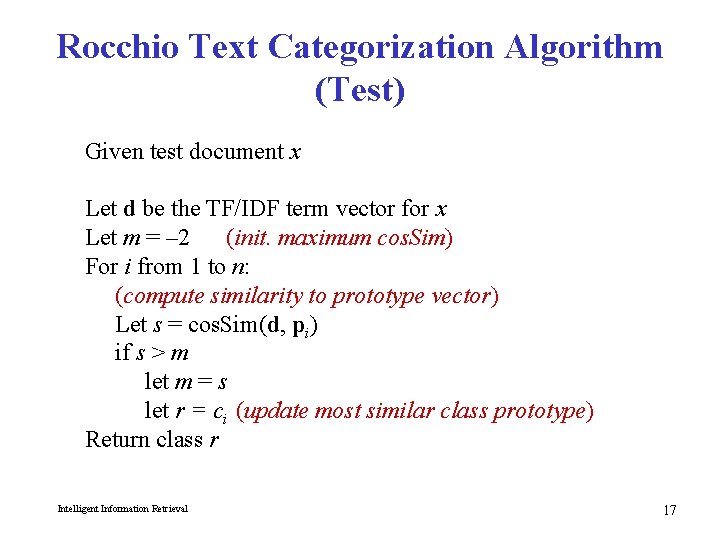

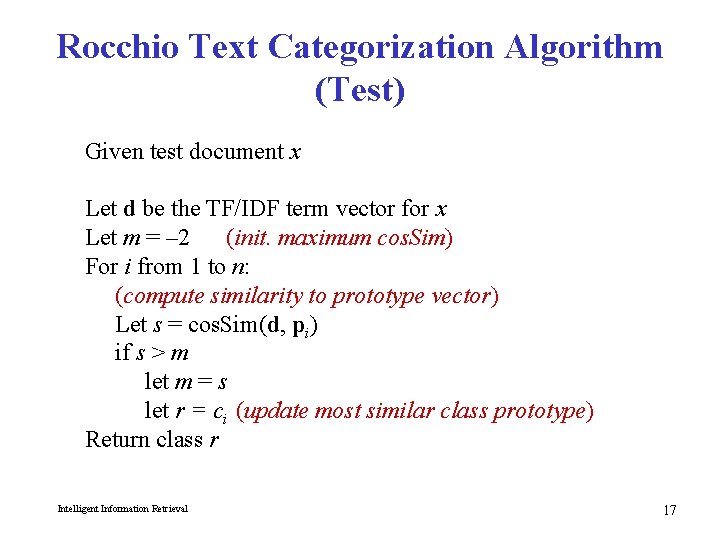

Rocchio Text Categorization Algorithm (Test)
- Given test document x:
  - Let d be the TF-IDF term vector for x.
  - Let m = -2 (initialize the maximum cosSim; any value below -1, the smallest possible cosine, works).
  - For i from 1 to n (compute the similarity to each prototype vector):
    - Let s = cosSim(d, pi).
    - If s > m, let m = s and let r = ci (update the most similar class prototype).
  - Return class r.
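The test procedure translates almost line for line. A minimal sketch, assuming prototypes are given as a mapping from category to sparse {term: weight} dict; the names and the toy prototypes are hypothetical.

```python
import math


def cos_sim(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def classify_rocchio(d, prototypes):
    """Return the category whose prototype has the highest cosine similarity to d."""
    m, r = -2.0, None  # -2 is below any cosine value, so the first prototype always replaces it
    for category, p in prototypes.items():
        s = cos_sim(d, p)
        if s > m:  # strict '>' keeps the earlier category on ties
            m, r = s, category
    return r


# Hypothetical prototypes (summed TF-IDF vectors per category).
prototypes = {"spam": {"cheap": 4.0, "pills": 2.0}, "ham": {"meeting": 1.0}}
print(classify_rocchio({"cheap": 1.0}, prototypes))    # spam
print(classify_rocchio({"meeting": 2.0}, prototypes))  # ham
```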

Rocchio Properties
- Does not guarantee a consistent hypothesis.
- Forms a simple generalization of the examples in each class (a prototype).
- The prototype vector does not need to be averaged or otherwise normalized for length, since cosine similarity is insensitive to vector length.
- Classification is based on similarity to class prototypes.
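The length-insensitivity claim is easy to check numerically: scaling a prototype by any positive factor (here 1/3, turning the sum into an average) leaves its cosine with a test vector unchanged. The class vectors and test vector below are hypothetical.

```python
import math


def cos_sim(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Hypothetical class with three document vectors.
class_docs = [[2, 0, 1], [1, 3, 0], [0, 1, 1]]
summed = [sum(col) for col in zip(*class_docs)]   # prototype as a sum
averaged = [x / len(class_docs) for x in summed]  # centroid: same direction, shorter vector
d = [1, 1, 0]                                     # hypothetical test document vector

print(cos_sim(d, summed), cos_sim(d, averaged))  # equal up to floating-point rounding
```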

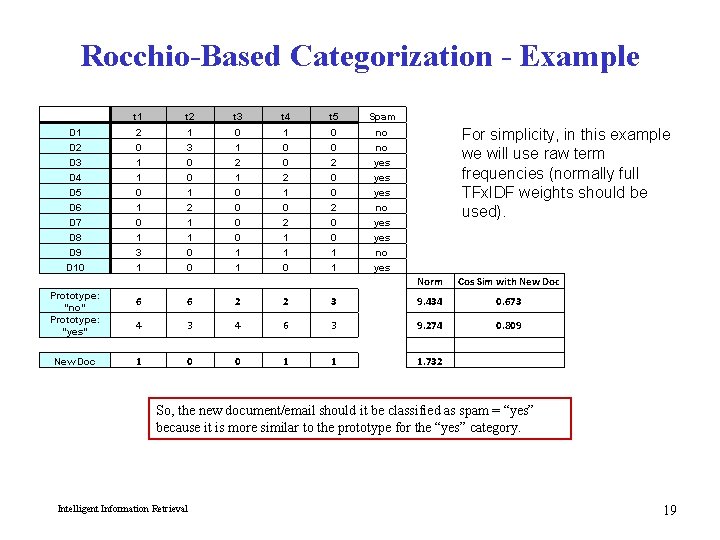

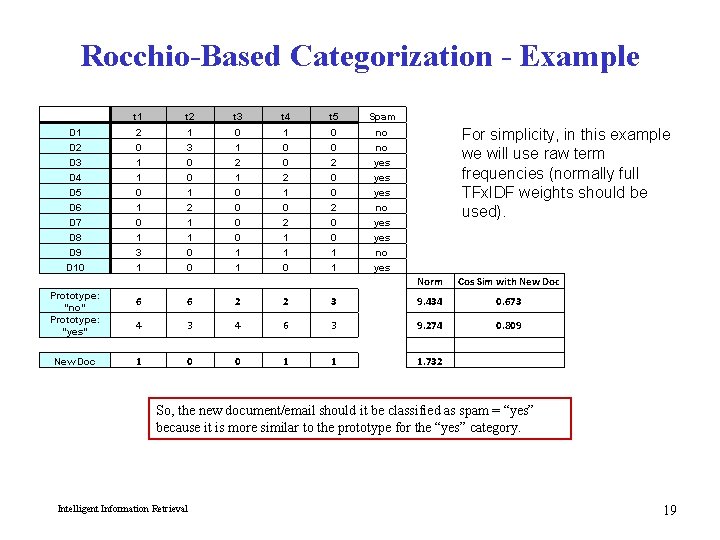

Rocchio-Based Categorization - Example
- Training data: documents D1 to D10, with term frequencies for t1 to t5 and a Spam label (yes/no).
- For simplicity, this example uses raw term frequencies (normally, full TF x IDF weights should be used).

| | t1 | t2 | t3 | t4 | t5 | Norm | Cos Sim with New Doc |
|---|---|---|---|---|---|---|---|
| Prototype: "no" | 6 | 6 | 2 | 2 | 3 | 9.434 | 0.673 |
| Prototype: "yes" | 4 | 3 | 4 | 6 | 3 | 9.274 | 0.809 |
| New Doc | 1 | 0 | 0 | 1 | 1 | 1.732 | |

- So the new document/email should be classified as Spam = "yes", because it is more similar to the prototype for the "yes" category.
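The final step of the example can be reproduced in a few lines: the sketch below takes the two prototype vectors (6, 6, 2, 2, 3) for "no" and (4, 3, 4, 6, 3) for "yes", plus the new document (1, 0, 0, 1, 1), all raw term frequencies over t1 to t5, and picks the prototype with the higher cosine similarity.

```python
import math

# Raw term-frequency prototypes over t1..t5 (sums of each category's training docs).
prototypes = {
    "no": [6, 6, 2, 2, 3],
    "yes": [4, 3, 4, 6, 3],
}
new_doc = [1, 0, 0, 1, 1]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def cos_sim(u, v):
    return sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))


for label, p in prototypes.items():
    print(f'Prototype "{label}": norm = {norm(p):.3f}, cos sim = {cos_sim(new_doc, p):.3f}')

spam = max(prototypes, key=lambda label: cos_sim(new_doc, prototypes[label]))
print("Spam =", spam)  # yes
```

The dot products are 11 for "no" and 13 for "yes"; after dividing by the norms, "yes" still wins (0.809 vs 0.673).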

Next: Part II, Bayesian Classification