STT 592 002 Intro to Statistical Learning 1

- Slides: 25

STT 592-002: Intro. to Statistical Learning

UNSUPERVISED LEARNING

Chapter 10

Disclaimer: This PPT is modified based on IOM 530: Intro. to Statistical Learning

Outline

- Principal Component Analysis (PCA)
- What is Clustering?
- K-Means Clustering
- Hierarchical Clustering

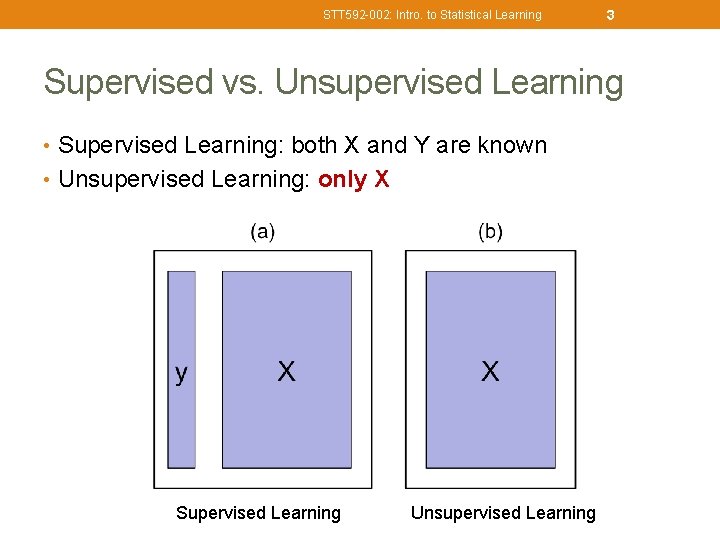

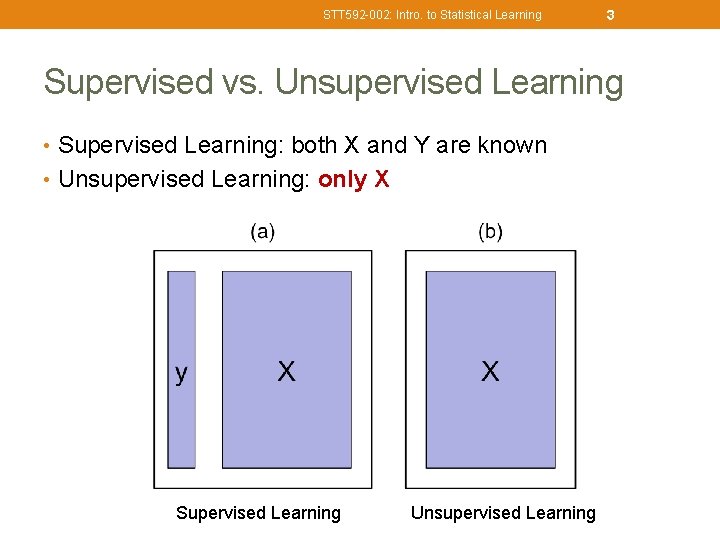

Supervised vs. Unsupervised Learning

- Supervised learning: both X and Y are known.
- Unsupervised learning: only X is known.

Figure panels: Supervised Learning; Unsupervised Learning.

Overview of Unsupervised Learning

We focus on two particular types of unsupervised learning:

- Principal Components Analysis (PCA): a tool used for data visualization or data pre-processing (dimension reduction) before supervised techniques are applied.
- Clustering: a broad class of methods for discovering unknown subgroups in data.

Challenge of Unsupervised Learning

- Unsupervised learning is more challenging than supervised learning.
- There is no simple goal for the analysis, such as predicting a response, so there is no classification error or MSE to judge the fit.
- It is more often part of an exploratory data analysis.
- It is hard to assess the results of unsupervised learning, because we do not have any ground truth.

Examples of Unsupervised Learning

- E.g.: A cancer researcher might assay gene expression levels in 100 patients with breast cancer, and look for subgroups among the breast cancer samples, or among the genes, in order to obtain a better understanding of the disease.
- E.g.: An online shopping site might identify groups of shoppers with similar browsing and purchase histories, as well as items of interest within each group. An individual shopper can then be preferentially shown the items they are likely to be interested in, based on the purchase histories of similar shoppers.
- E.g.: A search engine might choose which search results to display to a particular individual based on the click histories of other individuals with similar search patterns.

PRINCIPAL COMPONENT ANALYSIS (PCA)

Review Chap. 6.3, pages 231-233

PCA

- Idea: given a large set of correlated variables, principal components allow us to summarize this set with a smaller number of representative variables that together explain most of the original variability.
- Recall that PCA serves for:
  - Dimension reduction: data pre-processing before supervised techniques are applied
  - Lossy data compression
  - Feature extraction
  - Data visualization

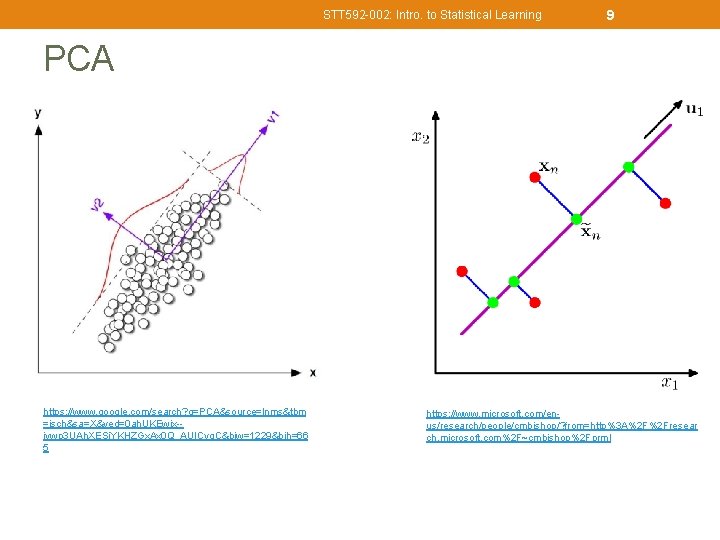

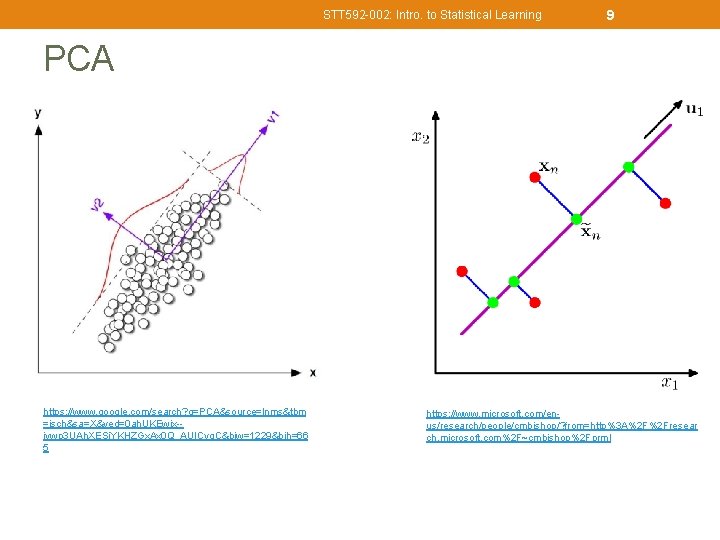

PCA

Sources:

- https://www.google.com/search?q=PCA&source=lnms&tbm=isch&sa=X&ved=0ahUKEwjx-iywp3UAhXESiYKHZGxAx0Q_AUICygC&biw=1229&bih=665
- https://www.microsoft.com/en-us/research/people/cmbishop/?from=http%3A%2F%2Fresearch.microsoft.com%2F~cmbishop%2Fprml

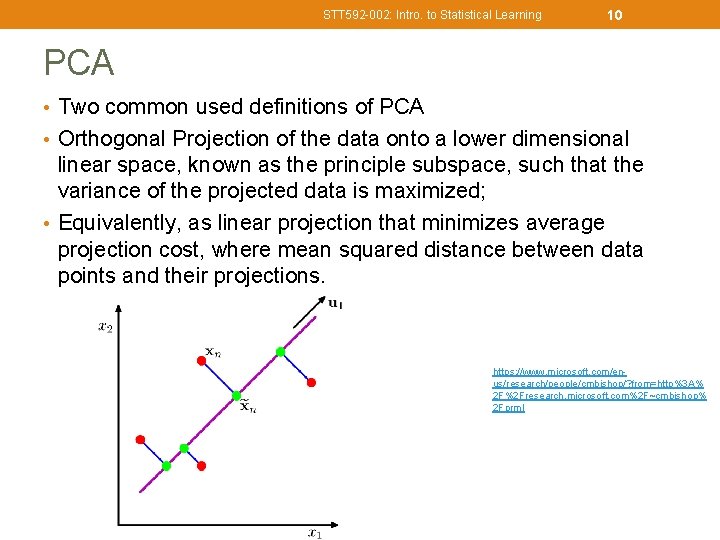

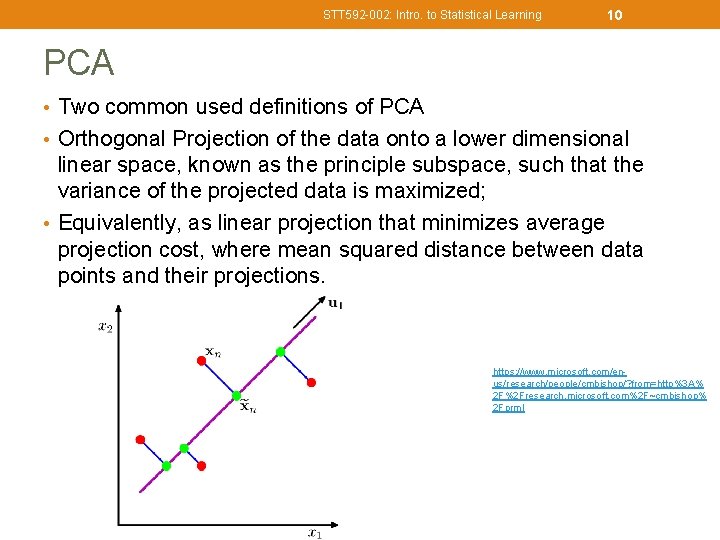

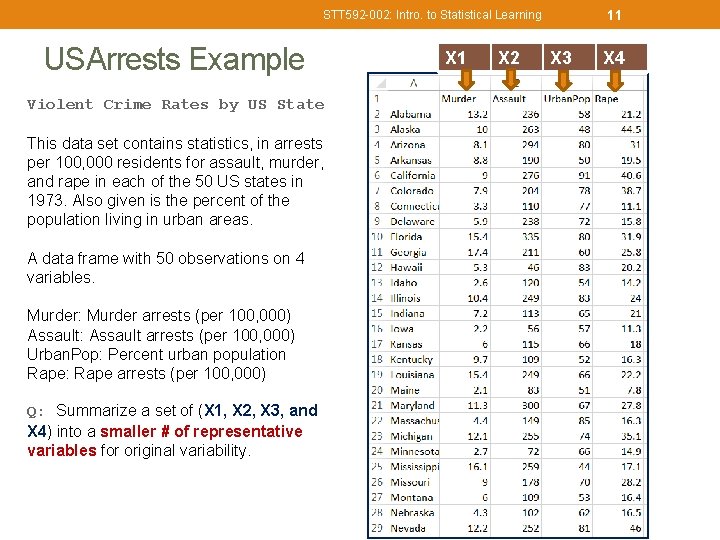

PCA

Two commonly used definitions of PCA:

- The orthogonal projection of the data onto a lower-dimensional linear space, known as the principal subspace, such that the variance of the projected data is maximized.
- Equivalently, the linear projection that minimizes the average projection cost, defined as the mean squared distance between the data points and their projections.

Source: https://www.microsoft.com/en-us/research/people/cmbishop/?from=http%3A%2F%2Fresearch.microsoft.com%2F~cmbishop%2Fprml
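Why the two definitions agree can be sketched for a single direction. Assume the observations $x_1, \dots, x_n$ have been centered and let $u$ be a unit vector (both symbols are introduced here for illustration and do not appear on the slide). By the Pythagorean theorem, each observation splits into its projection onto $u$ and the residual:

```latex
\frac{1}{n}\sum_{i=1}^{n}\lVert x_i\rVert^{2}
  \;=\;
  \underbrace{\frac{1}{n}\sum_{i=1}^{n}\bigl(u^{\top}x_i\bigr)^{2}}_{\text{variance of the projected data}}
  \;+\;
  \underbrace{\frac{1}{n}\sum_{i=1}^{n}\bigl\lVert x_i-(u^{\top}x_i)\,u\bigr\rVert^{2}}_{\text{mean squared projection cost}},
  \qquad \lVert u\rVert = 1 .
```

The left-hand side does not depend on $u$, so choosing $u$ to maximize the first term is the same as choosing it to minimize the second.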

USArrests Example

Violent Crime Rates by US State

This data set contains statistics, in arrests per 100,000 residents, for assault, murder, and rape in each of the 50 US states in 1973. Also given is the percent of the population living in urban areas.

A data frame with 50 observations on 4 variables:

- X1, Murder: murder arrests (per 100,000)
- X2, Assault: assault arrests (per 100,000)
- X3, UrbanPop: percent urban population
- X4, Rape: rape arrests (per 100,000)

Q: How can we summarize the set (X1, X2, X3, X4) with a smaller number of representative variables that capture the original variability?

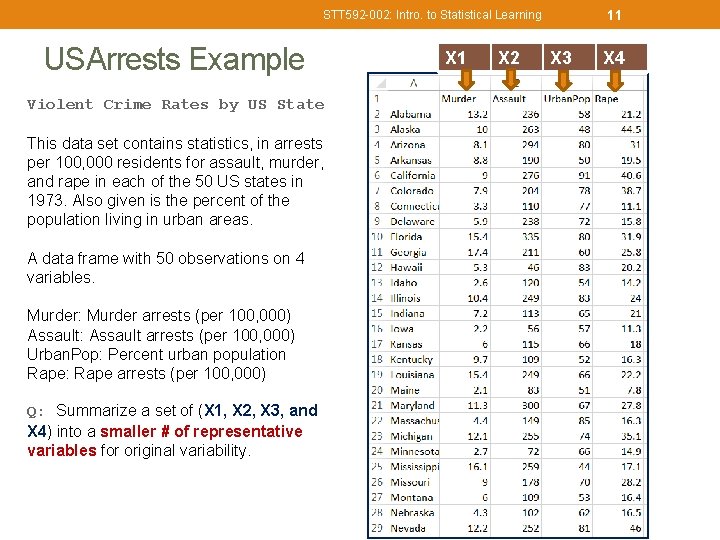

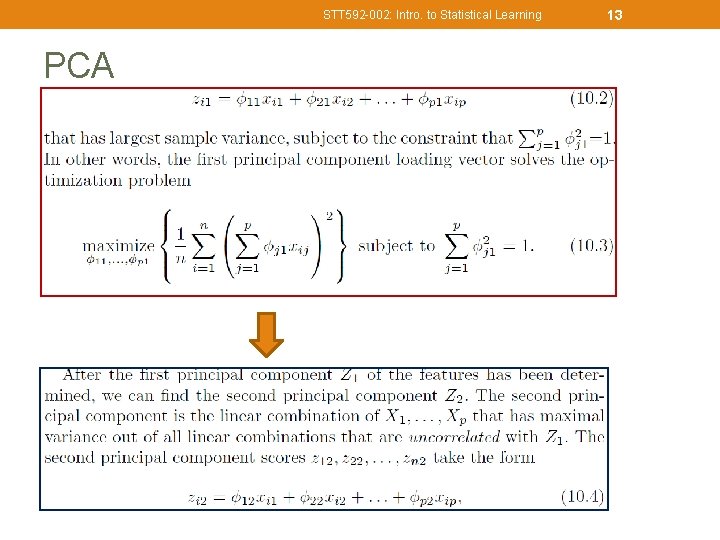

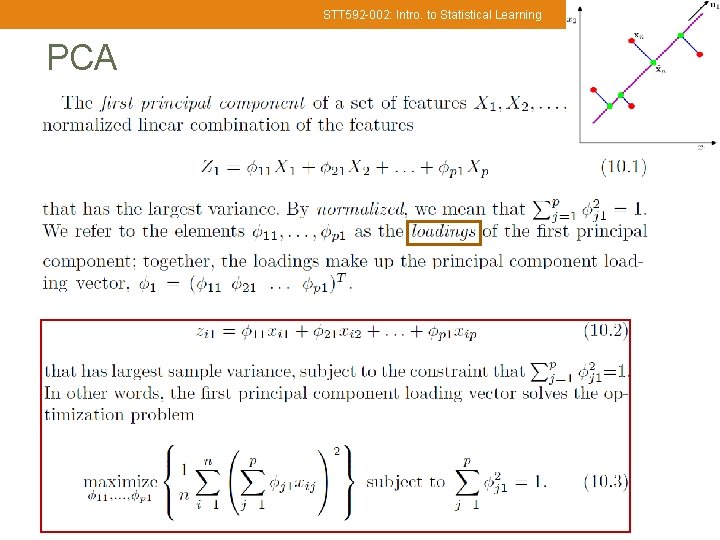

PCA

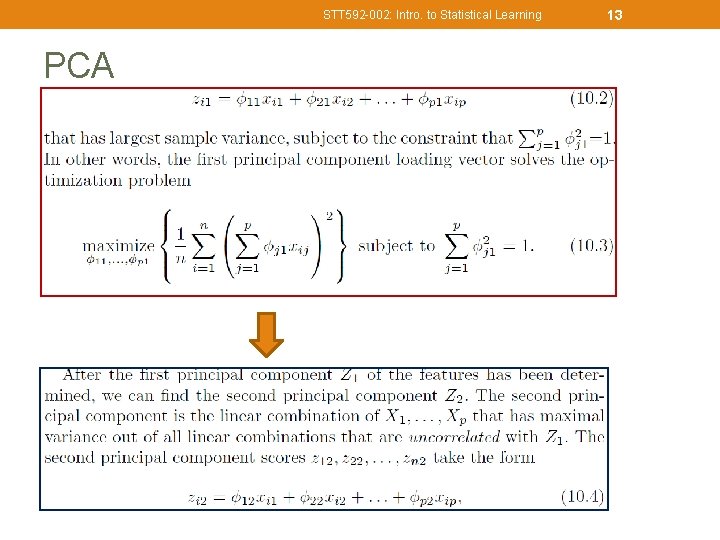

PCA

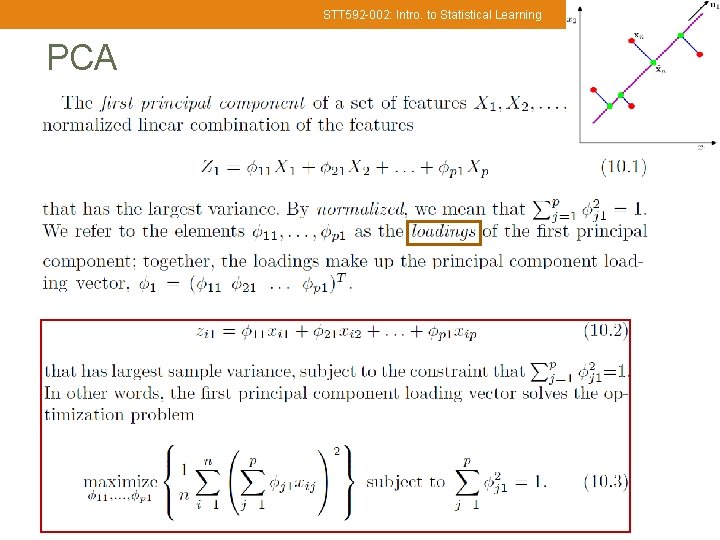

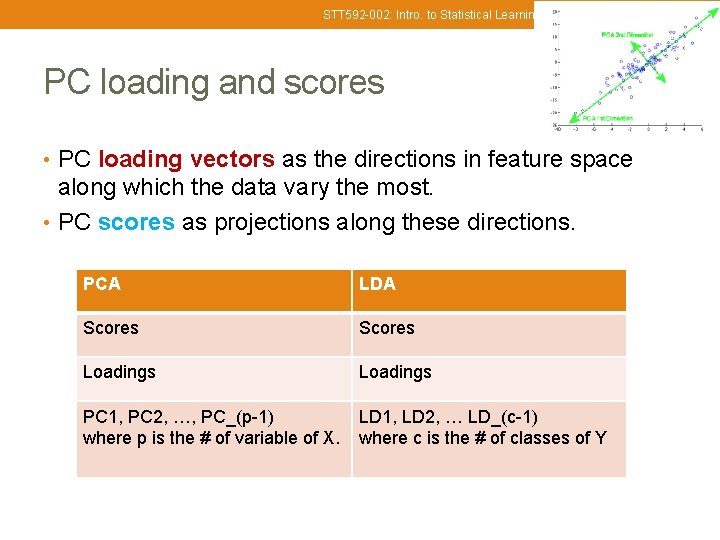

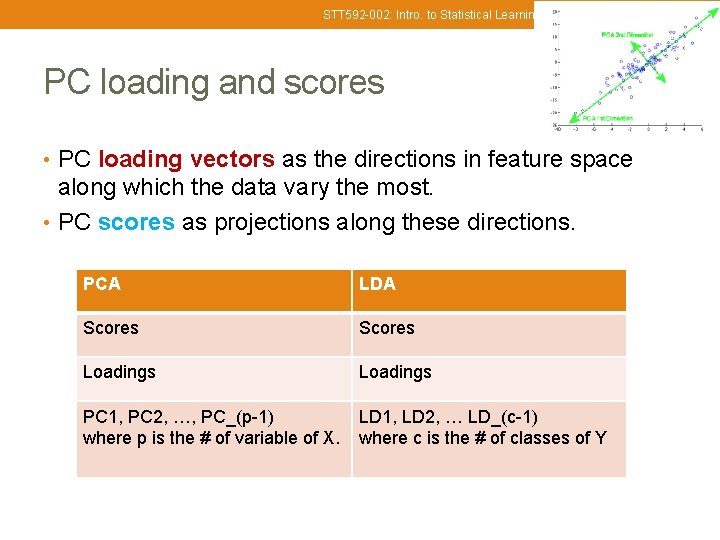

PC loadings and scores

- PC loading vectors are the directions in feature space along which the data vary the most.
- PC scores are the projections of the data along these directions.

PCA vs. LDA:

- PCA: PC1, PC2, …, up to min(n − 1, p) components, where p is the number of variables in X and n is the number of observations.
- LDA: LD1, LD2, …, LD_(c−1), where c is the number of classes of Y.

Figure labels: Scores; Loadings.
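The relationship between loadings and scores can be sketched in R. This is a minimal illustration, assuming base R's `prcomp()` and the built-in `USArrests` data; the object name `pr.out` echoes the slides' `pr.out_x1`, and the other names are chosen here for illustration.

```r
# PCA on the standardized USArrests data (each variable: mean 0, SD 1)
pr.out <- prcomp(USArrests, scale. = TRUE)

loadings <- pr.out$rotation  # one loading vector per column
scores   <- pr.out$x         # one row of PC scores per state

# Scores are the standardized data projected onto the loading vectors
X <- scale(USArrests)
manual_scores <- X %*% loadings

dim(loadings)                     # 4 4
dim(scores)                       # 50 4
max(abs(manual_scores - scores))  # numerically zero
colSums(loadings^2)               # each loading vector has unit length
```

Each column of `loadings` is a direction in the 4-dimensional feature space, and each column of `scores` holds the coordinates of the 50 states along that direction.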

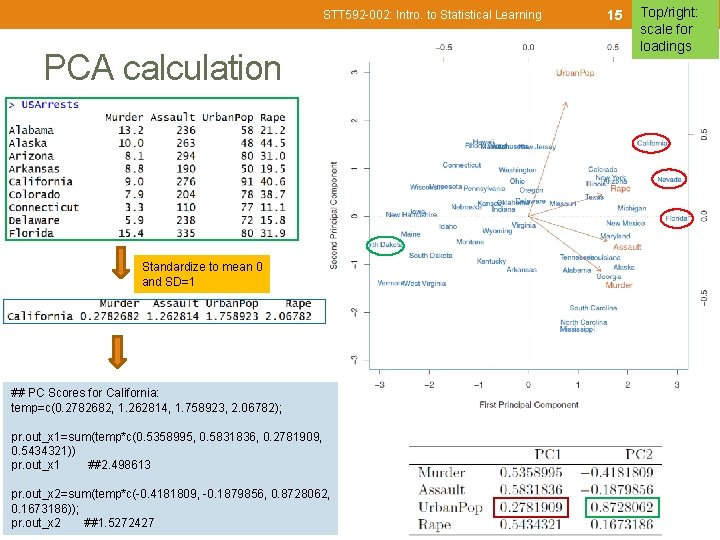

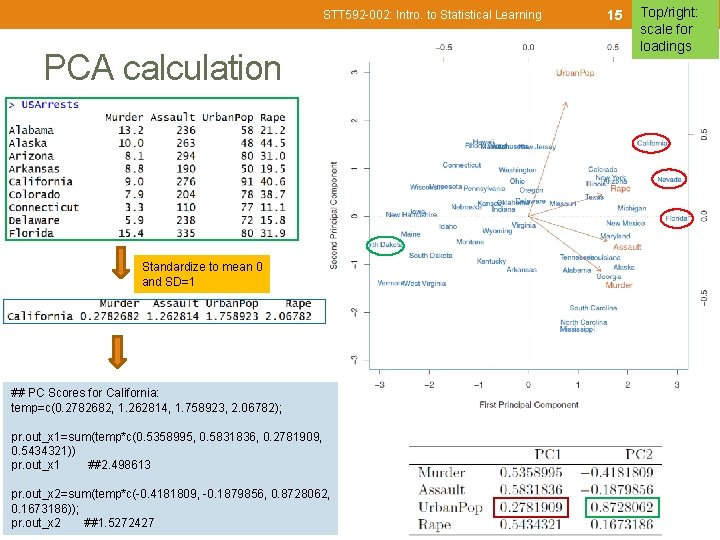

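PCA calculation

Standardize each variable to mean 0 and SD = 1.

    ## PC scores for California:
    ## temp holds California's standardized Murder, Assault, UrbanPop, Rape values
    temp <- c(0.2782682, 1.262814, 1.758923, 2.06782)
    ## Score on PC1: standardized values weighted by the PC1 loadings
    pr.out_x1 <- sum(temp * c(0.5358995, 0.5831836, 0.2781909, 0.5434321))
    pr.out_x1  ## 2.498613
    ## Score on PC2: standardized values weighted by the PC2 loadings
    pr.out_x2 <- sum(temp * c(-0.4181809, -0.1879856, 0.8728062, 0.1673186))
    pr.out_x2  ## 1.5272427

In the biplot, the top and right axes give the scale for the loadings.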
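The hard-coded numbers above can be reproduced from the raw data. The following is a sketch, assuming base R's `scale()` and `prcomp()`; the names `ca` and `phi` are illustrative. Because `prcomp()` may return either sign for a loading vector, the sketch orients the first two loading vectors to match the signs used on the slide.

```r
# Standardize USArrests and pull out California's row
X  <- scale(USArrests)      # each column: mean 0, SD 1
ca <- X["California", ]     # standardized Murder, Assault, UrbanPop, Rape

pr.out <- prcomp(USArrests, scale. = TRUE)
phi <- pr.out$rotation

# Orient PC1 so Murder loads positively and PC2 so UrbanPop loads positively,
# which is the sign convention of the loadings quoted on the slide
if (phi["Murder", "PC1"] < 0)   phi[, "PC1"] <- -phi[, "PC1"]
if (phi["UrbanPop", "PC2"] < 0) phi[, "PC2"] <- -phi[, "PC2"]

round(ca, 4)
sum(ca * phi[, "PC1"])  # about 2.4986
sum(ca * phi[, "PC2"])  # about 1.5272
```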

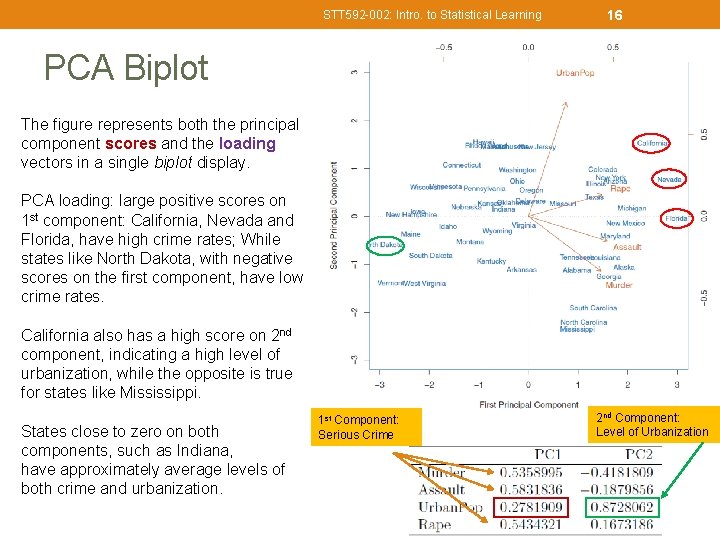

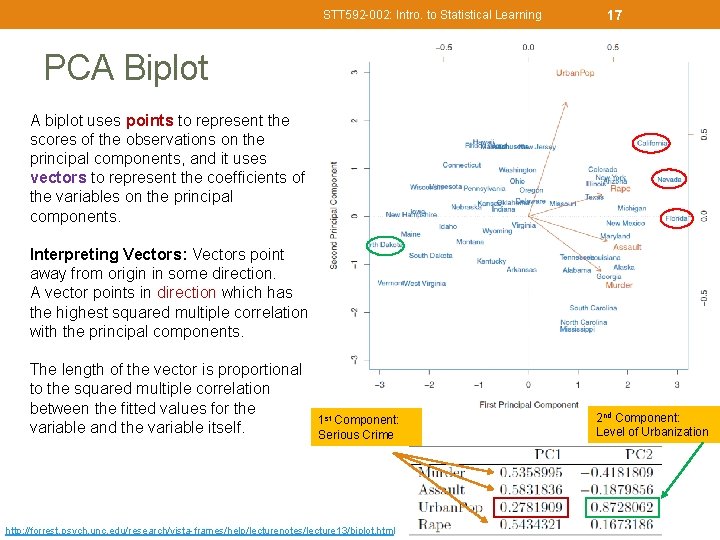

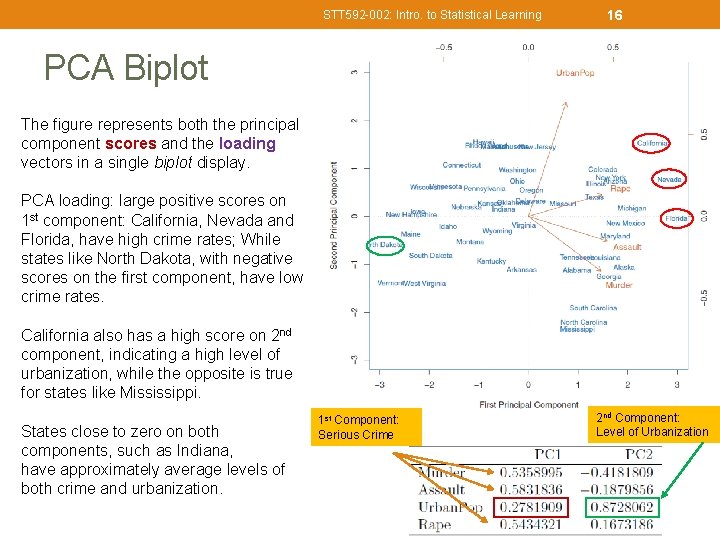

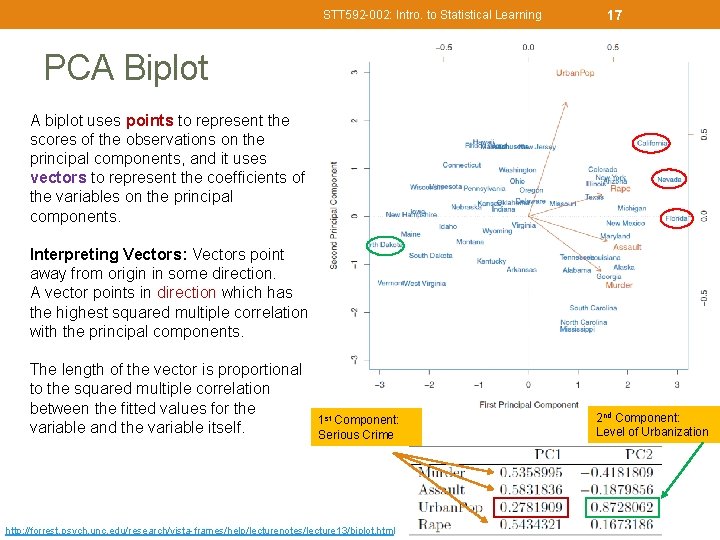

PCA Biplot

The figure represents both the principal component scores and the loading vectors in a single biplot display.

- States with large positive scores on the 1st component, such as California, Nevada and Florida, have high crime rates, while states like North Dakota, with negative scores on the 1st component, have low crime rates.
- California also has a high score on the 2nd component, indicating a high level of urbanization, while the opposite is true for states like Mississippi.
- States close to zero on both components, such as Indiana, have approximately average levels of both crime and urbanization.

Interpretation of the axes: 1st component: serious crime; 2nd component: level of urbanization.

PCA Biplot

A biplot uses points to represent the scores of the observations on the principal components, and it uses vectors to represent the coefficients of the variables on the principal components.

Interpreting vectors:

- Vectors point away from the origin in some direction.
- A vector points in the direction that has the highest squared multiple correlation with the principal components.
- The length of the vector is proportional to the squared multiple correlation between the fitted values for the variable and the variable itself.

Interpretation of the axes: 1st component: serious crime; 2nd component: level of urbanization.

Source: http://forrest.psych.unc.edu/research/vista-frames/help/lecturenotes/lecture13/biplot.html
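A biplot like the one described can be drawn with base R. This is a minimal sketch, assuming `prcomp()` and `biplot()` from the `stats` package; the sign flip is an illustrative step (not from the slides) that makes positive scores correspond to high crime and high urbanization, since `prcomp()` may return either sign.

```r
pr.out <- prcomp(USArrests, scale. = TRUE)

# Loading vectors are only defined up to sign. Flip PC1 and PC2 if needed so
# that Murder loads positively on PC1 and UrbanPop loads positively on PC2.
flip <- c(sign(pr.out$rotation["Murder", "PC1"]),
          sign(pr.out$rotation["UrbanPop", "PC2"]))
pr.out$rotation[, 1:2] <- sweep(pr.out$rotation[, 1:2], 2, flip, `*`)
pr.out$x[, 1:2]        <- sweep(pr.out$x[, 1:2], 2, flip, `*`)

# scale = 0 draws the arrows on the scale of the loadings
biplot(pr.out, scale = 0)

# Scores of the states mentioned in the text
round(pr.out$x[c("California", "North Dakota", "Mississippi", "Indiana"), 1:2], 2)
```

With this orientation, California should appear in the upper right of the plot, North Dakota on the left, Mississippi near the bottom, and Indiana close to the origin.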

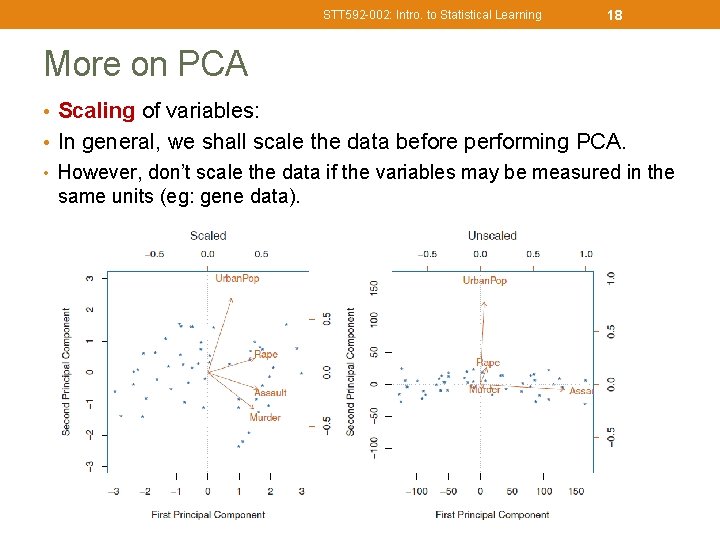

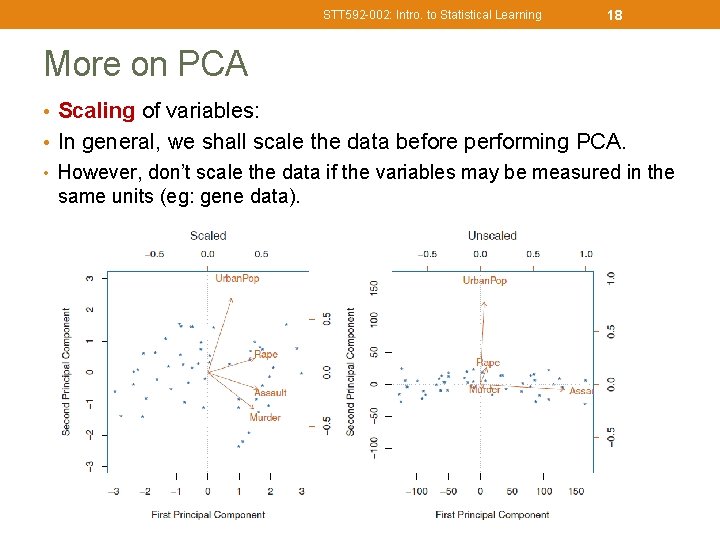

More on PCA

Scaling of variables:

- In general, we should scale the variables before performing PCA.
- However, we may choose not to scale the data when the variables are measured in the same units (e.g., gene expression data).
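The effect of scaling can be seen on USArrests, whose variables are measured in different units. A short sketch, assuming base R's `prcomp()`; the names `pr.raw` and `pr.scaled` are illustrative.

```r
# Variances differ by orders of magnitude because the units differ
apply(USArrests, 2, var)

pr.raw    <- prcomp(USArrests, scale. = FALSE)  # centered only
pr.scaled <- prcomp(USArrests, scale. = TRUE)   # centered and scaled to SD 1

# Without scaling, PC1 is almost entirely Assault, the highest-variance variable
round(abs(pr.raw$rotation[, "PC1"]), 3)

# With scaling, the three crime variables load with similar weight on PC1
round(abs(pr.scaled$rotation[, "PC1"]), 3)
```

Absolute values are shown because the sign of a loading vector is arbitrary.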

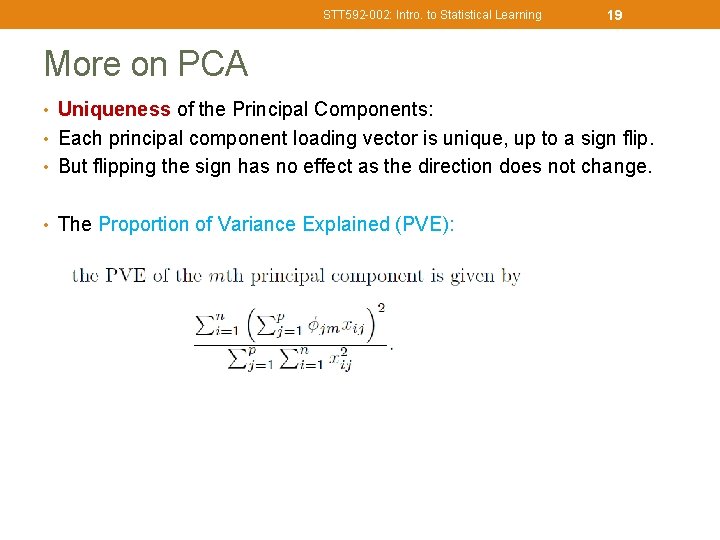

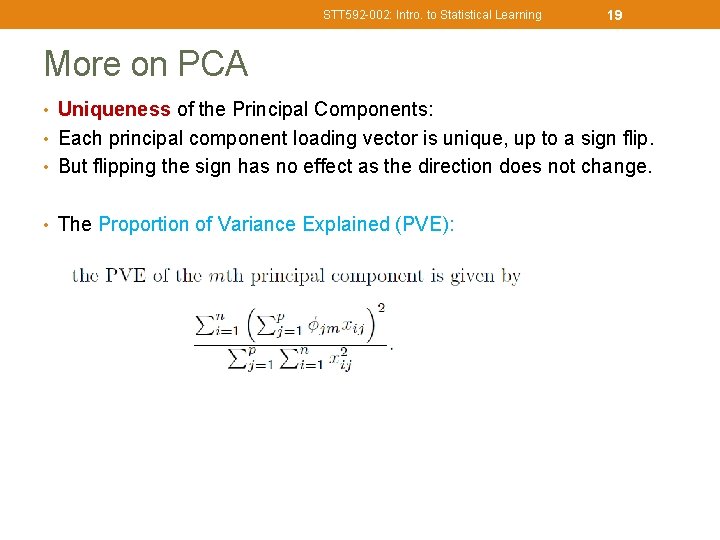

More on PCA

Uniqueness of the principal components:

- Each principal component loading vector is unique, up to a sign flip.
- Flipping the sign has no effect, as the direction does not change.

The Proportion of Variance Explained (PVE):
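The PVE formula appears only as an image on the slide. For reference, the standard definition can be written as follows, assuming centered variables $x_{ij}$, loadings $\phi_{jm}$ and scores $z_{im}$ (this notation is an assumption, since the slide's own symbols are not recoverable from the text):

```latex
\mathrm{PVE}_m
  \;=\;
  \frac{\sum_{i=1}^{n} z_{im}^{2}}{\sum_{j=1}^{p}\sum_{i=1}^{n} x_{ij}^{2}}
  \;=\;
  \frac{\sum_{i=1}^{n}\Bigl(\sum_{j=1}^{p}\phi_{jm}\,x_{ij}\Bigr)^{2}}
       {\sum_{j=1}^{p}\sum_{i=1}^{n} x_{ij}^{2}},
  \qquad
  \sum_{m=1}^{\min(n-1,\,p)} \mathrm{PVE}_m = 1 .
```

The numerator is the variance captured by the $m$th component and the denominator is the total variance in the data, so the PVEs are non-negative and sum to one.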

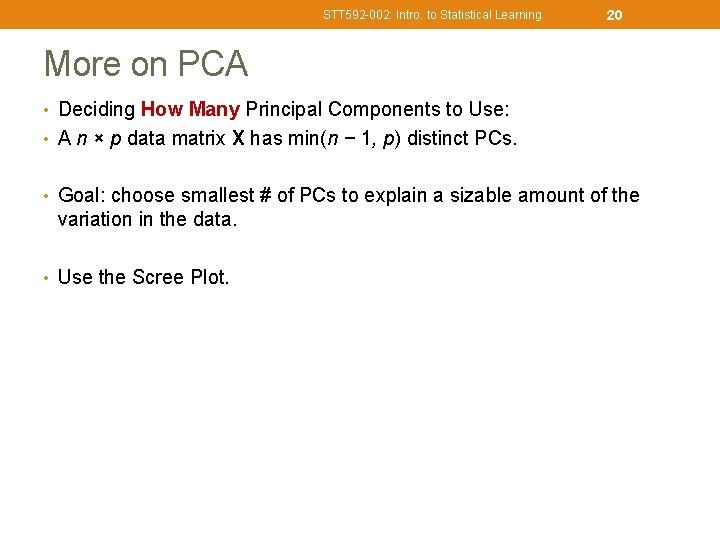

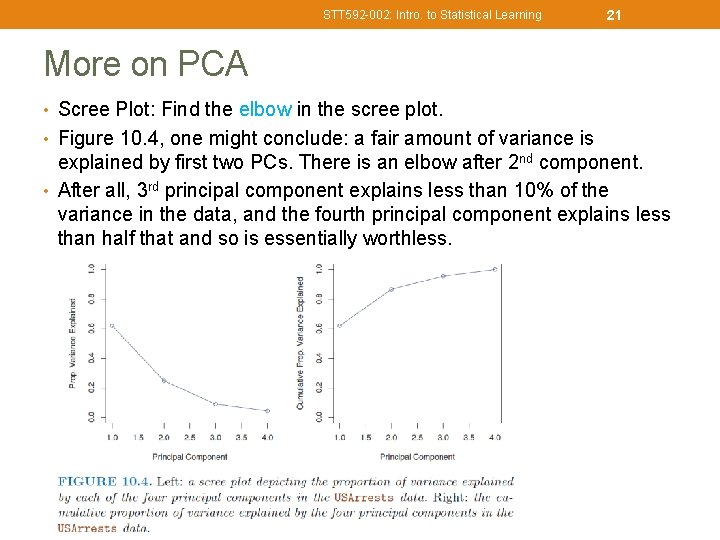

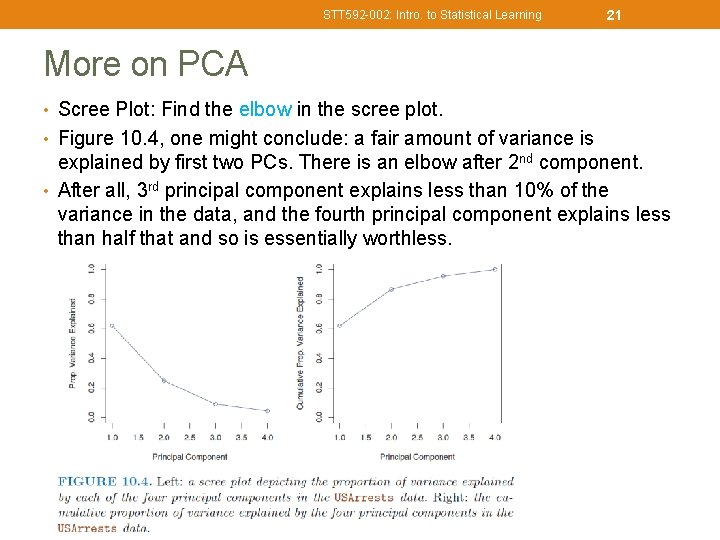

More on PCA

Deciding how many principal components to use:

- An n × p data matrix X has min(n − 1, p) distinct PCs.
- Goal: choose the smallest number of PCs that explains a sizable amount of the variation in the data.
- Use the scree plot.
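The min(n − 1, p) bound can be checked on a small simulated matrix. A sketch, assuming base R's `prcomp()`; the matrix and the tolerance `1e-8` are arbitrary choices made here for illustration.

```r
set.seed(1)
n <- 3; p <- 5
X <- matrix(rnorm(n * p), nrow = n, ncol = p)

pr.small <- prcomp(X)   # columns are centered by default
pr.small$sdev           # the last standard deviation is numerically zero

# Number of components with non-negligible variance
sum(pr.small$sdev > 1e-8)   # 2, i.e. min(n - 1, p)
```

Centering removes one degree of freedom from the n rows, which is why at most n − 1 components can carry variance when n ≤ p.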

More on PCA

- Scree plot: find the elbow in the scree plot.
- From Figure 10.4, one might conclude that a fair amount of variance is explained by the first two PCs, and that there is an elbow after the 2nd component.
- After all, the 3rd principal component explains less than 10% of the variance in the data, and the 4th explains less than half of that and so is essentially worthless.
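The PVE values behind such a scree plot can be computed directly from the `prcomp()` output. A minimal sketch on the standardized USArrests data; the names `pr.var` and `pve` are illustrative.

```r
pr.out <- prcomp(USArrests, scale. = TRUE)

pr.var <- pr.out$sdev^2          # variance explained by each PC
pve    <- pr.var / sum(pr.var)   # proportion of variance explained
round(pve, 3)                    # 0.620 0.247 0.089 0.043

# Scree plot: PVE of each component
plot(pve, type = "b", ylim = c(0, 1),
     xlab = "Principal Component",
     ylab = "Proportion of Variance Explained")

# Cumulative PVE
plot(cumsum(pve), type = "b", ylim = c(0, 1),
     xlab = "Principal Component",
     ylab = "Cumulative Proportion of Variance Explained")
```

The first two components together explain about 87% of the variance, which is consistent with placing the elbow after the 2nd component.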

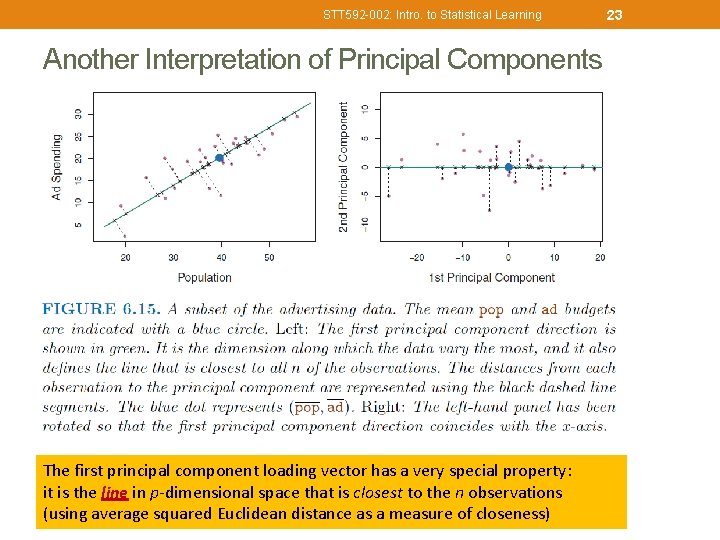

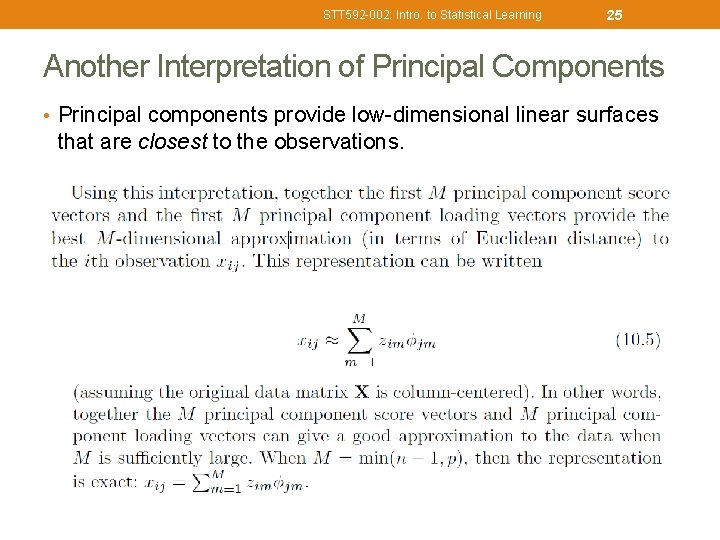

Another Interpretation of Principal Components

- Principal components provide low-dimensional linear surfaces that are closest to the observations.

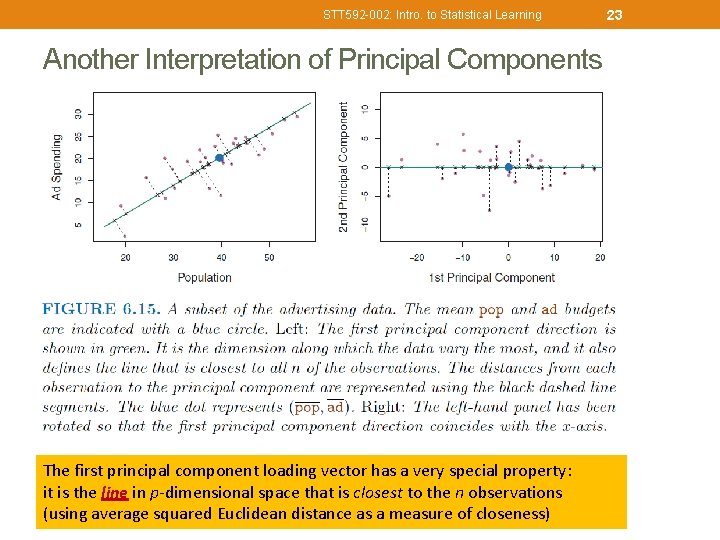

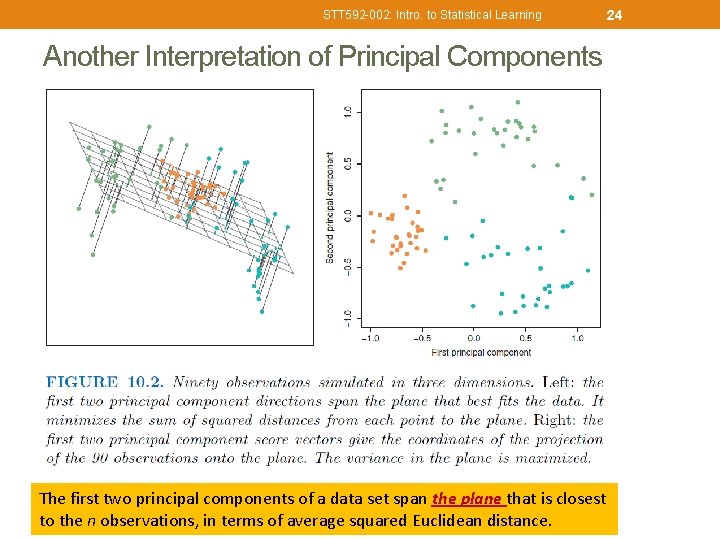

Another Interpretation of Principal Components

The first principal component loading vector has a very special property: it is the line in p-dimensional space that is closest to the n observations (using average squared Euclidean distance as a measure of closeness).

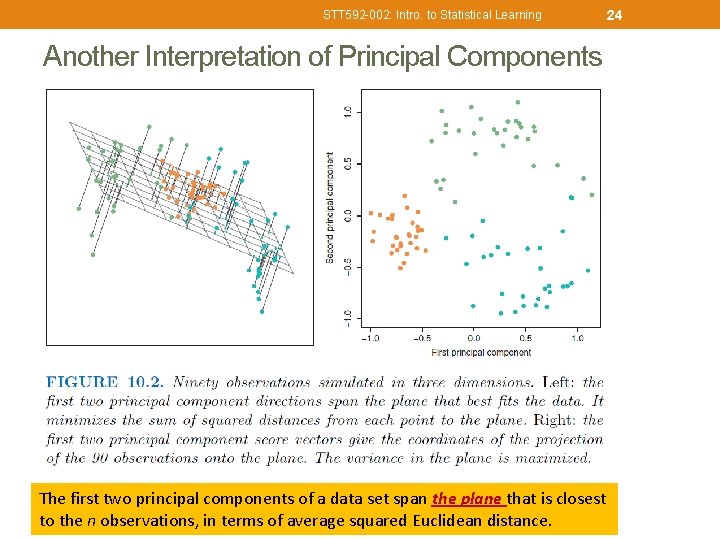

Another Interpretation of Principal Components

The first two principal components of a data set span the plane that is closest to the n observations, in terms of average squared Euclidean distance.

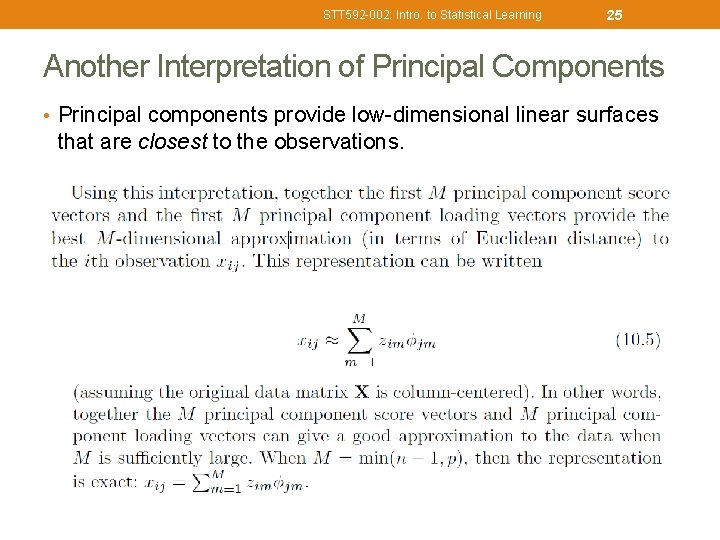

Another Interpretation of Principal Components

- Principal components provide low-dimensional linear surfaces that are closest to the observations.
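The closest-line and closest-plane interpretation can be checked numerically. The sketch below assumes the standardized USArrests data and base R's `prcomp()`; the helper `mean_sq_dist()` and the random comparison direction `u` are hypothetical names introduced here for illustration.

```r
X <- scale(USArrests)   # centered and scaled data matrix
pr.out <- prcomp(X)     # X is already standardized
phi <- pr.out$rotation

# Mean squared Euclidean distance from the rows of X to the subspace
# spanned by the orthonormal columns of V
mean_sq_dist <- function(X, V) {
  proj <- X %*% V %*% t(V)   # orthogonal projection of each row onto span(V)
  mean(rowSums((X - proj)^2))
}

d_pc1  <- mean_sq_dist(X, phi[, 1, drop = FALSE])  # line along PC1
d_pc12 <- mean_sq_dist(X, phi[, 1:2])              # plane spanned by PC1 and PC2

# Compare with a randomly chosen line through the origin
set.seed(1)
u <- rnorm(ncol(X))
u <- matrix(u / sqrt(sum(u^2)), ncol = 1)          # unit-length direction
d_rand <- mean_sq_dist(X, u)

c(pc1 = d_pc1, random_line = d_rand, pc1_pc2 = d_pc12)
```

The distance to the PC1 line should be no larger than the distance to the random line, and adding PC2 can only reduce the distance further.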