Storage Performance on SQL Server Joe Chang Coverage

- Slides: 75

Storage Performance on SQL Server Joe Chang

Coverage Emphasis is on Line of Business DB Different priorities for less critical apps Performance Fault-tolerance covered else where

Overview IO Performance Objectives The Complete Storage Environment Direct-Attach and SAN Storage Components Disk Performance SQL Server IO Characteristics Configuration Examples SSD

Old Rules Meet transaction throughput Disk Performance Criteria Read from Data, Write to Logs Separate Data and Log files? Disk Queue Depth < 2 per disk Prevalent use of SAN LUNs with unknown number of disks Latency (Avg Disk Sec/Read)

Storage Performance Criteria SELECT (Read) Query Data must be read into buffer cache if not already in cache – read from data INSERT/UPDATE/DELETE (Write) Query Data must be read into buffer cache Transaction must be written to log Buffer is marked as dirty, lazy writer handles Large Query (as necessary) Write and Read to tempdb

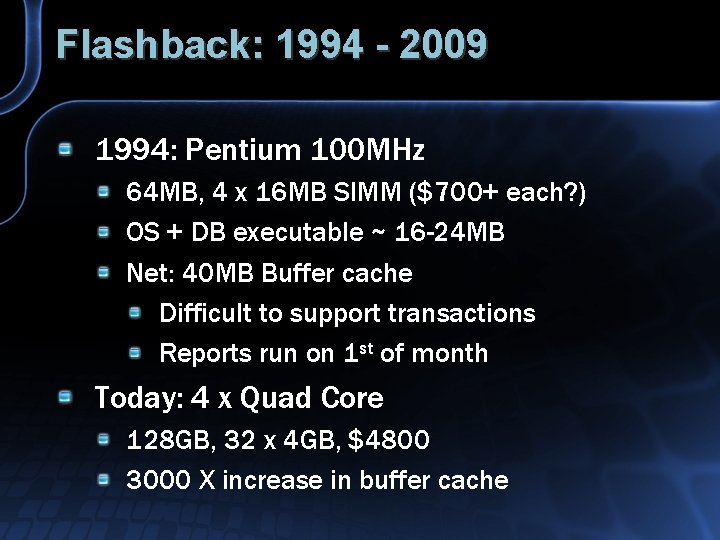

Flashback: 1994 - 2009 1994: Pentium 100 MHz 64 MB, 4 x 16 MB SIMM ($700+ each? ) OS + DB executable ~ 16 -24 MB Net: 40 MB Buffer cache Difficult to support transactions Reports run on 1 st of month Today: 4 x Quad Core 128 GB, 32 x 4 GB, $4800 3000 X increase in buffer cache

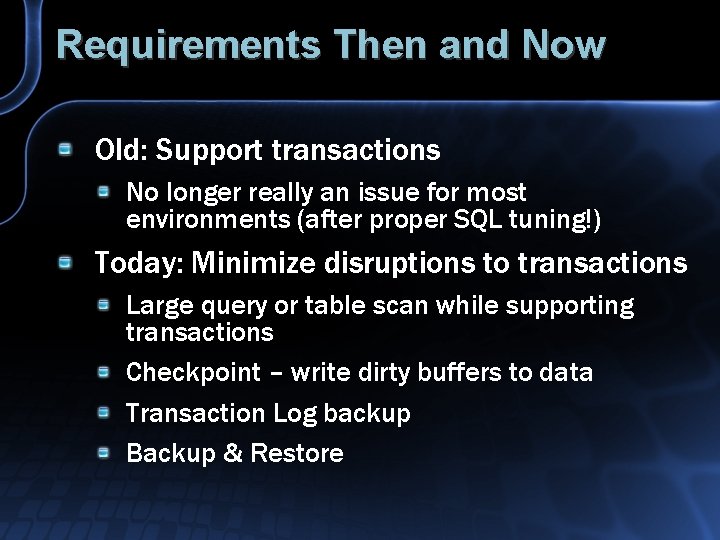

Requirements Then and Now Old: Support transactions No longer really an issue for most environments (after proper SQL tuning!) Today: Minimize disruptions to transactions Large query or table scan while supporting transactions Checkpoint – write dirty buffers to data Transaction Log backup Backup & Restore

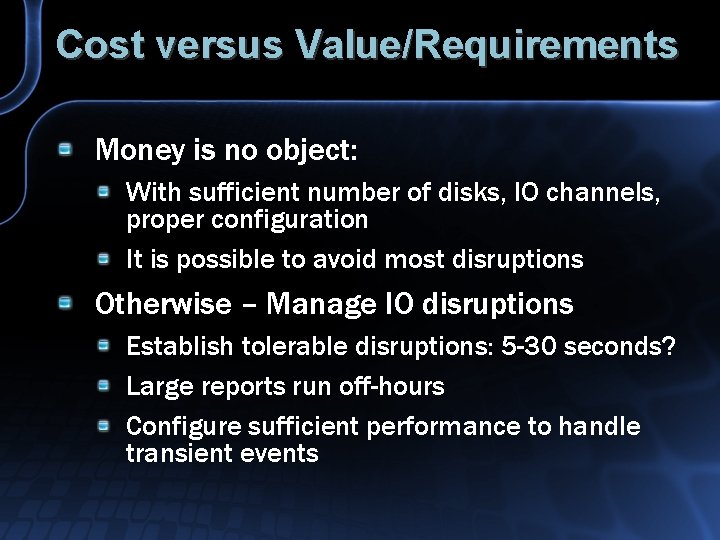

Cost versus Value/Requirements Money is no object: With sufficient number of disks, IO channels, proper configuration It is possible to avoid most disruptions Otherwise – Manage IO disruptions Establish tolerable disruptions: 5 -30 seconds? Large reports run off-hours Configure sufficient performance to handle transient events

Complete Storage Environment Direct Attach and SAN

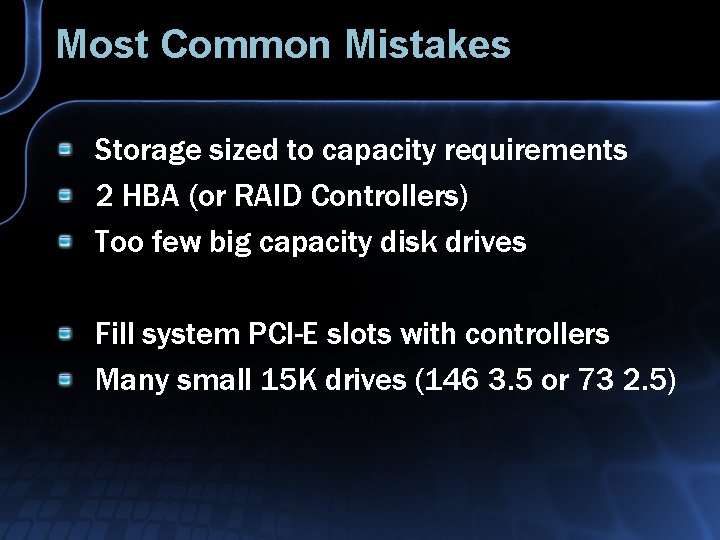

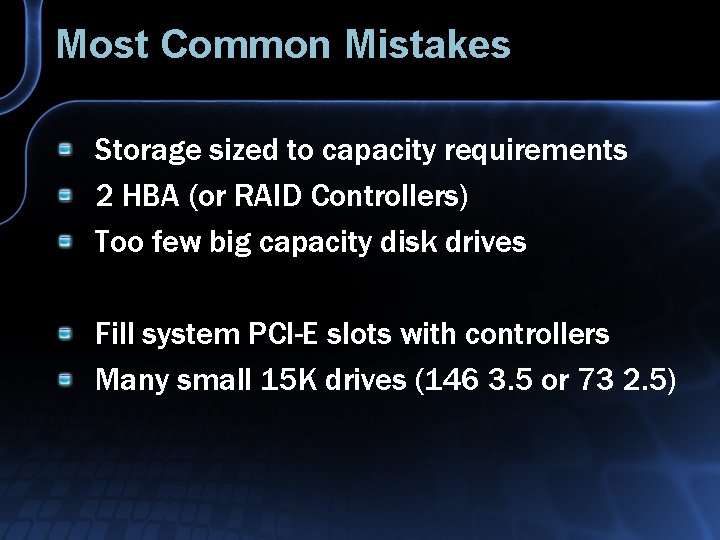

Most Common Mistakes Storage sized to capacity requirements 2 HBA (or RAID Controllers) Too few big capacity disk drives Fill system PCI-E slots with controllers Many small 15 K drives (146 3. 5 or 73 2. 5)

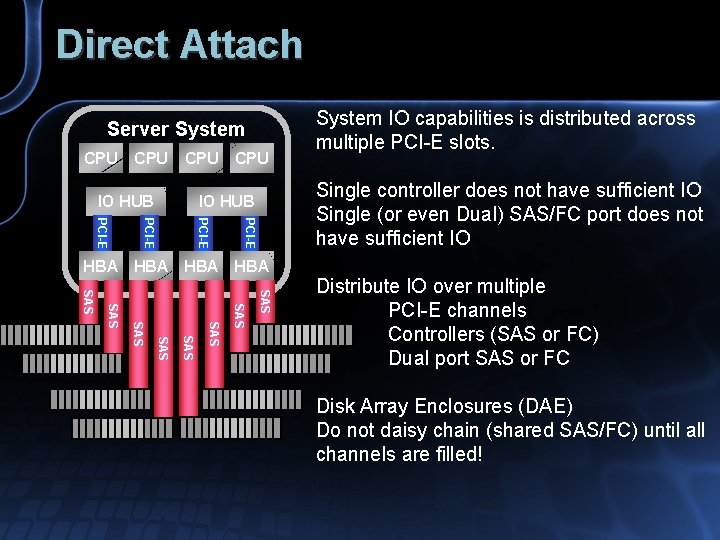

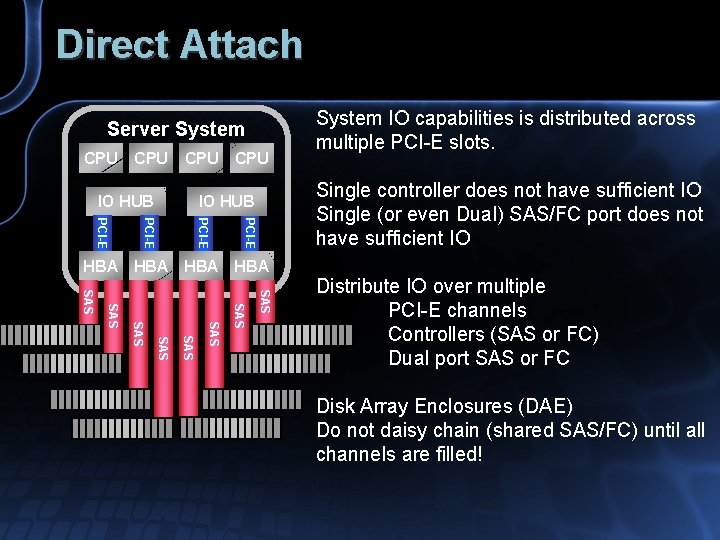

Direct Attach Server System CPU CPU PCI-E Single controller does not have sufficient IO Single (or even Dual) SAS/FC port does not have sufficient IO PCI-E IO HUB System IO capabilities is distributed across multiple PCI-E slots. HBA HBA SAS SAS Distribute IO over multiple PCI-E channels Controllers (SAS or FC) Dual port SAS or FC Disk Array Enclosures (DAE) Do not daisy chain (shared SAS/FC) until all channels are filled!

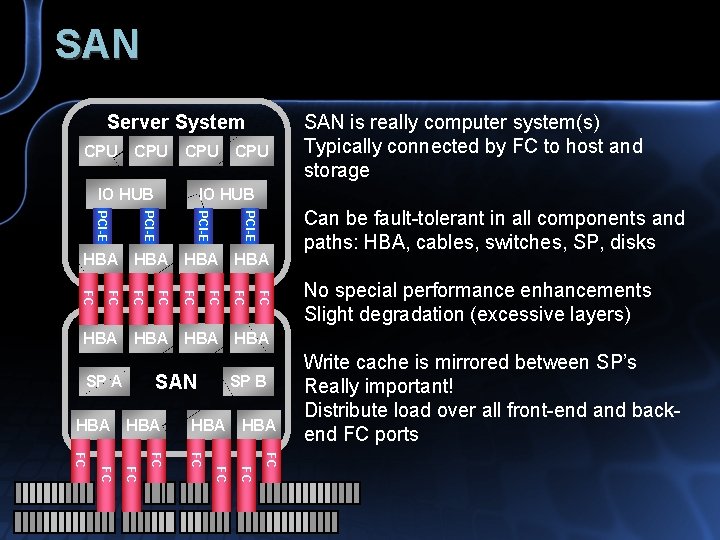

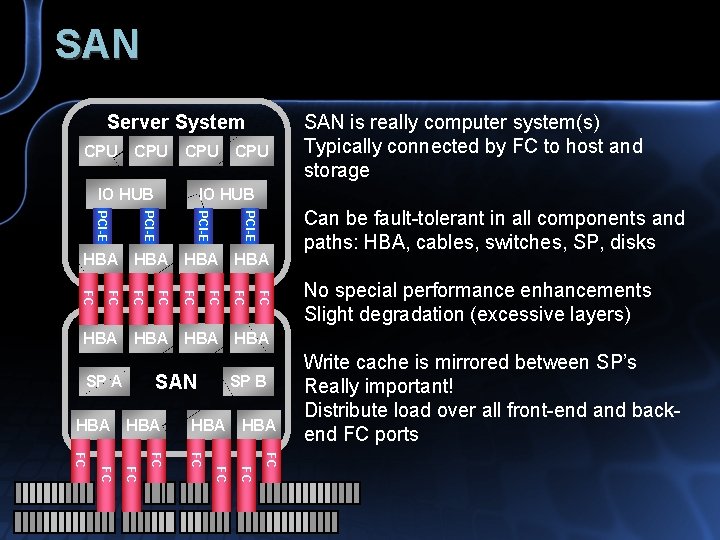

SAN Server System CPU CPU IO HUB CPU SAN is really computer system(s) Typically connected by FC to host and storage IO HUB PCI-E HBA HBA Can be fault-tolerant in all components and paths: HBA, cables, switches, SP, disks FC FC No special performance enhancements Slight degradation (excessive layers) HBA HBA SAN SP A SP B FC FC HBA HBA Write cache is mirrored between SP’s Really important! Distribute load over all front-end and backend FC ports

Direct Attach & SAN Direct Attach RAID Controller in Server Fault-tolerant disks, sometimes controller/path, 2 -node clusters SAN Host Bus Adapter, (switches) Service Processor Full component and path fault tolerance Multi-node clusters

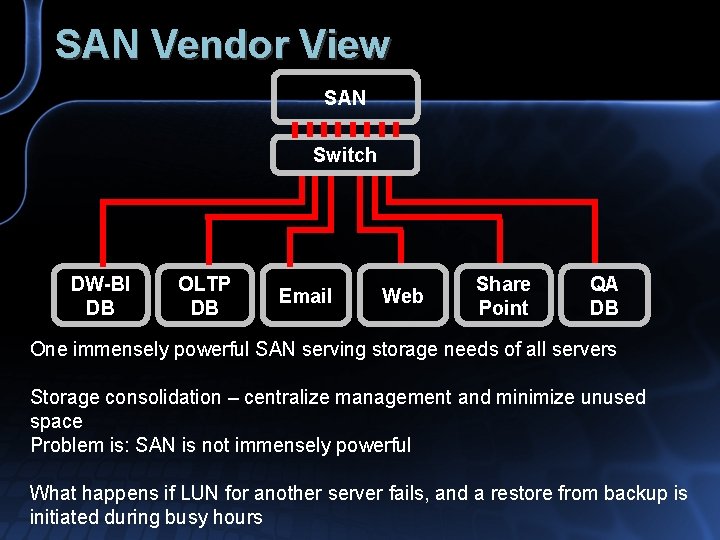

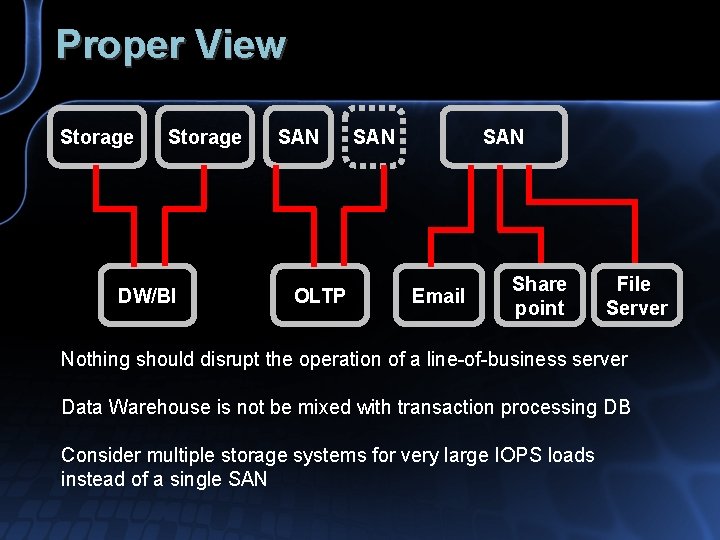

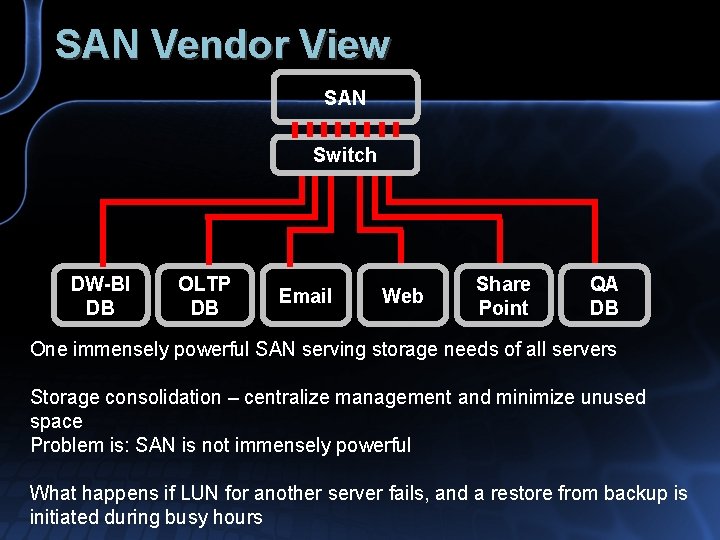

SAN Vendor View SAN Switch DW-BI DB OLTP DB Email Web Share Point QA DB One immensely powerful SAN serving storage needs of all servers Storage consolidation – centralize management and minimize unused space Problem is: SAN is not immensely powerful What happens if LUN for another server fails, and a restore from backup is initiated during busy hours

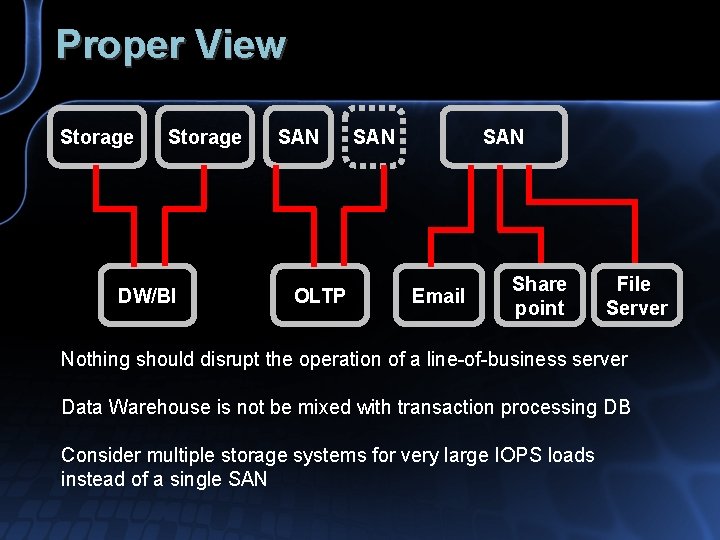

Proper View Storage DW/BI SAN OLTP SAN Email Share point File Server Nothing should disrupt the operation of a line-of-business server Data Warehouse is not be mixed with transaction processing DB Consider multiple storage systems for very large IOPS loads instead of a single SAN

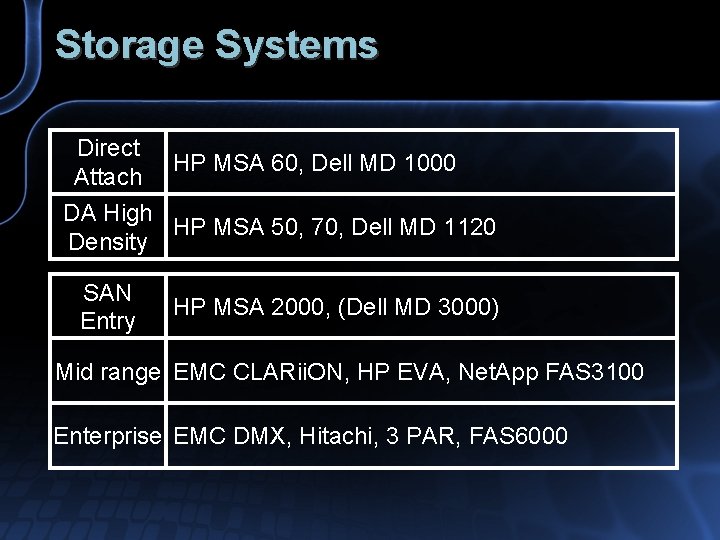

Storage Systems Direct HP MSA 60, Dell MD 1000 Attach DA High HP MSA 50, 70, Dell MD 1120 Density SAN Entry HP MSA 2000, (Dell MD 3000) Mid range EMC CLARii. ON, HP EVA, Net. App FAS 3100 Enterprise EMC DMX, Hitachi, 3 PAR, FAS 6000

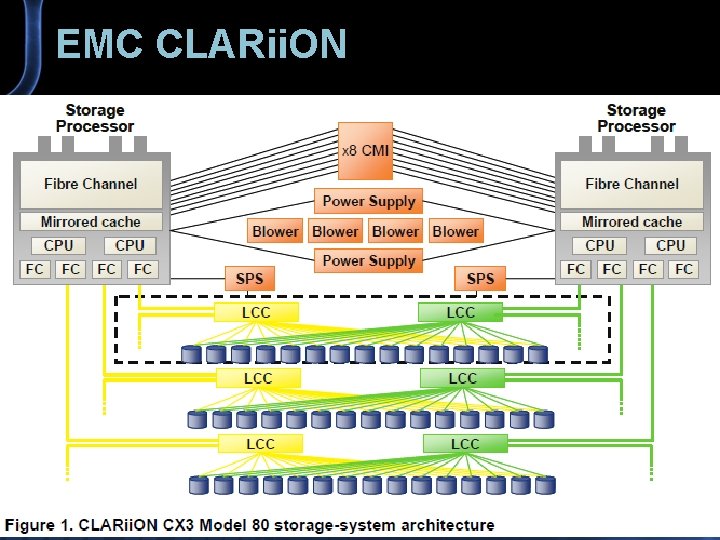

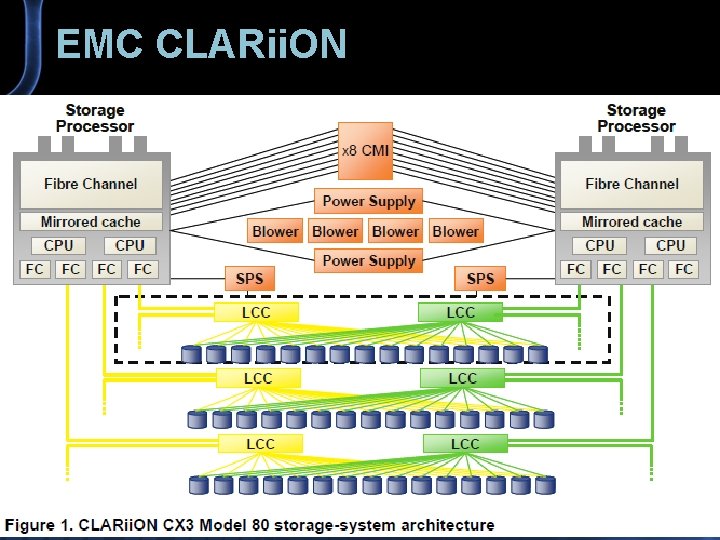

EMC CLARii. ON

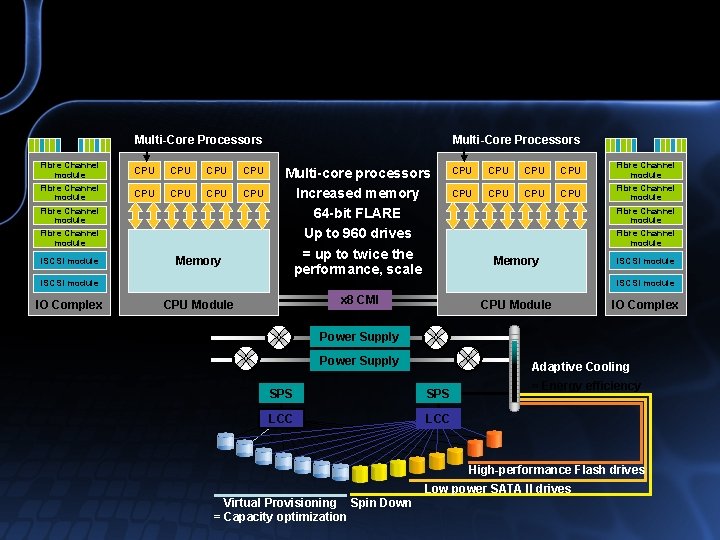

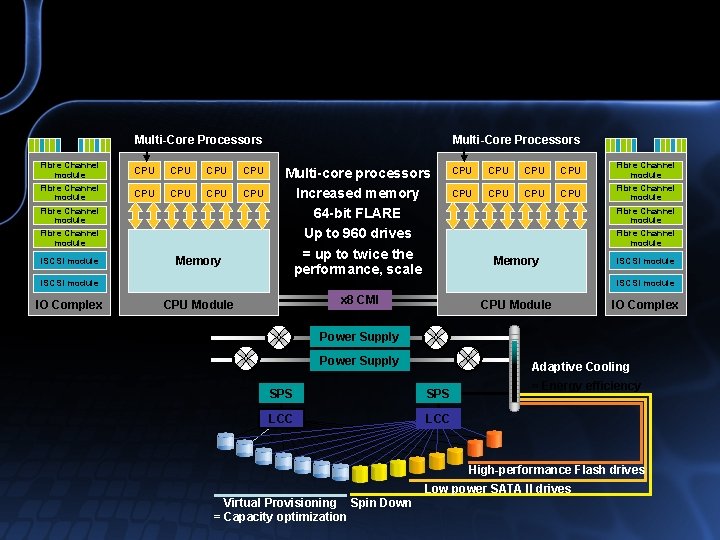

Multi-Core Processors Fibre Channel module CPU CPU Fibre Channel module i. SCSI module Memory Multi-Core Processors Multi-core processors Increased memory 64 -bit FLARE Up to 960 drives = up to twice the performance, scale CPU CPU Fibre Channel module Memory i. SCSI module IO Complex Fibre Channel module i. SCSI module x 8 CMI CPU Module IO Complex Power Supply Adaptive Cooling SPS LCC = Energy efficiency High-performance Flash drives Low power SATA II drives Virtual Provisioning Spin Down = Capacity optimization

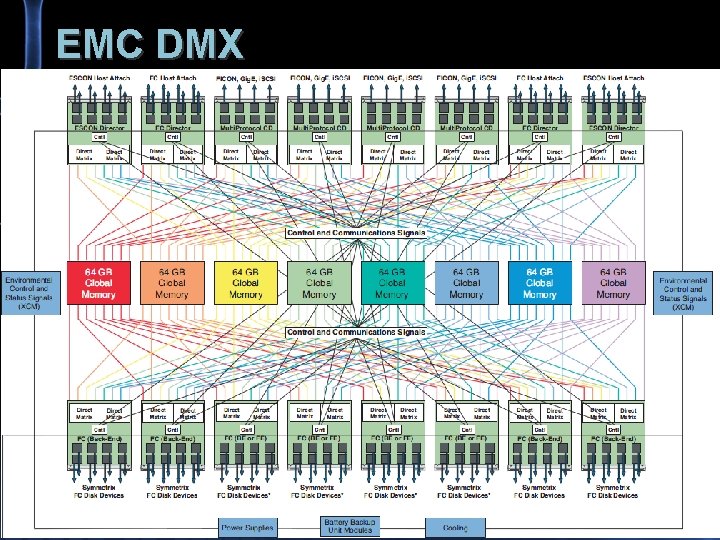

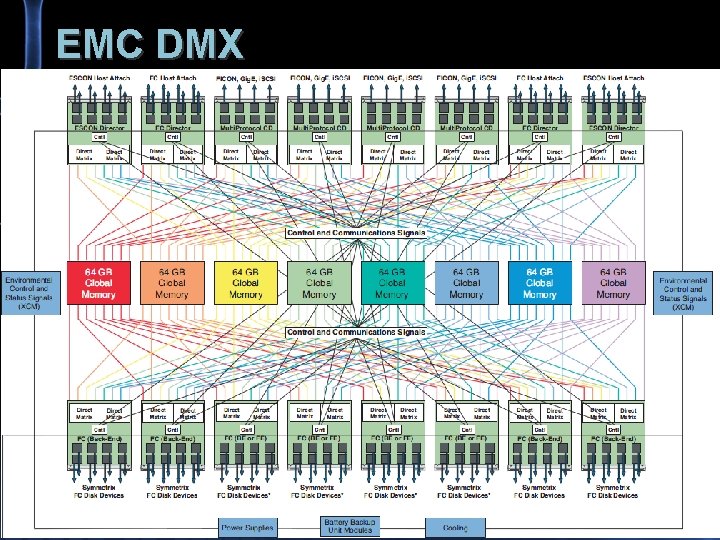

EMC DMX

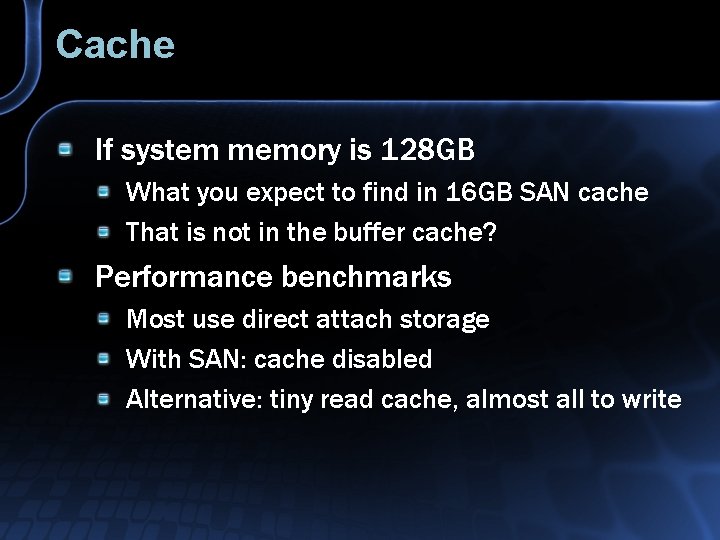

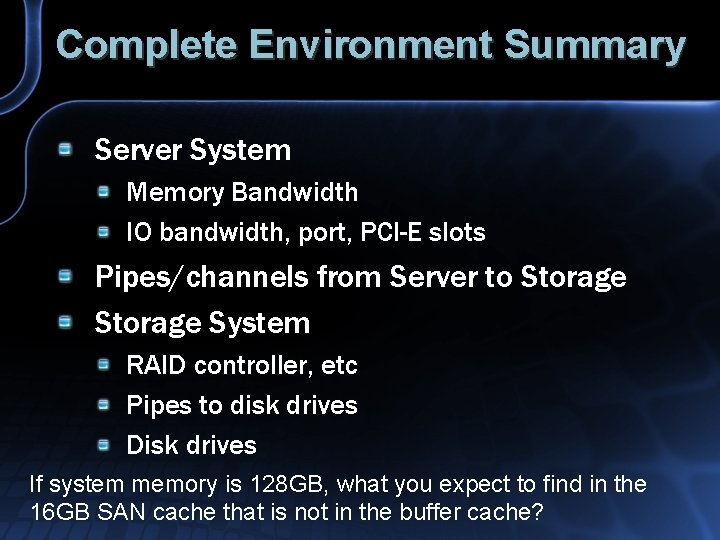

Cache If system memory is 128 GB What you expect to find in 16 GB SAN cache That is not in the buffer cache? Performance benchmarks Most use direct attach storage With SAN: cache disabled Alternative: tiny read cache, almost all to write

Complete Environment Summary Server System Memory Bandwidth IO bandwidth, port, PCI-E slots Pipes/channels from Server to Storage System RAID controller, etc Pipes to disk drives Disk drives If system memory is 128 GB, what you expect to find in the 16 GB SAN cache that is not in the buffer cache?

Storage Components

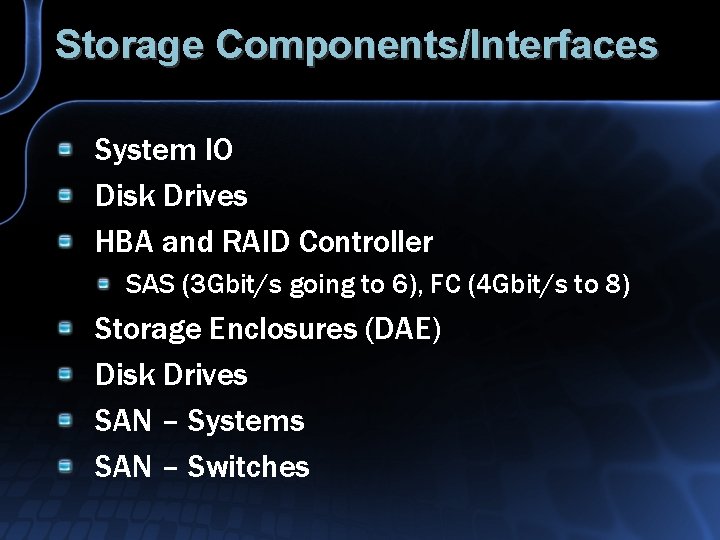

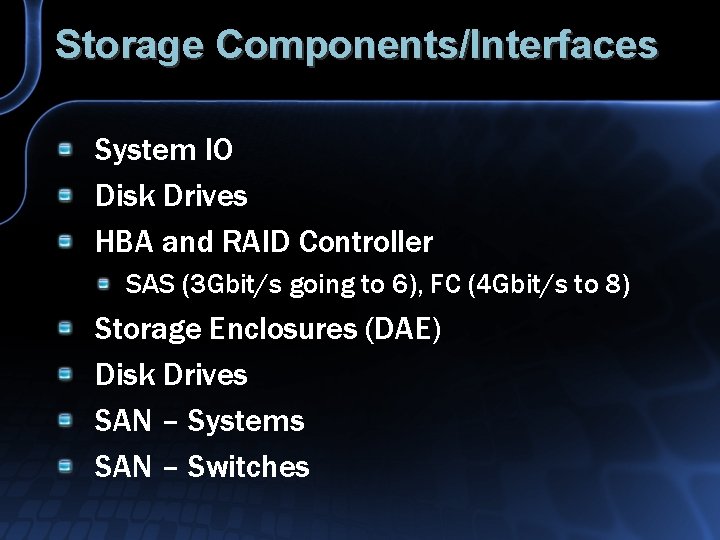

Storage Components/Interfaces System IO Disk Drives HBA and RAID Controller SAS (3 Gbit/s going to 6), FC (4 Gbit/s to 8) Storage Enclosures (DAE) Disk Drives SAN – Systems SAN – Switches

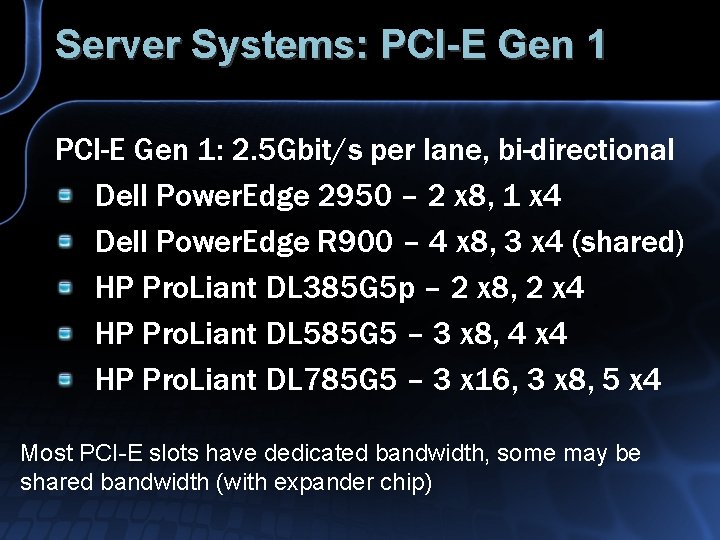

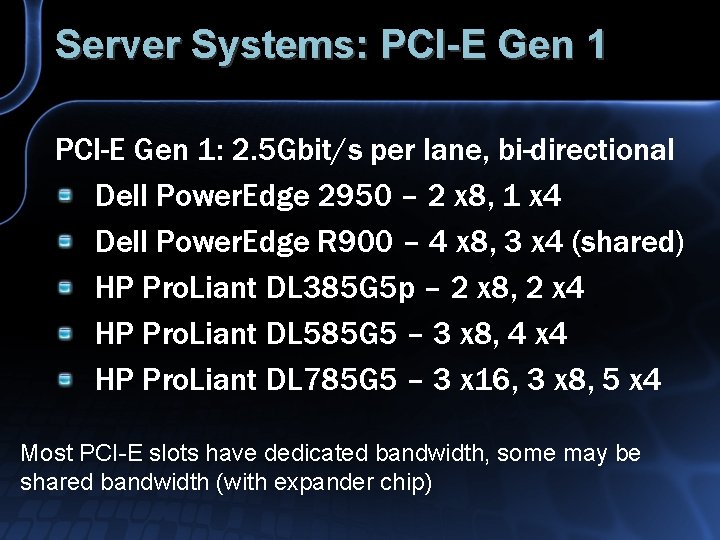

Server Systems: PCI-E Gen 1: 2. 5 Gbit/s per lane, bi-directional Dell Power. Edge 2950 – 2 x 8, 1 x 4 Dell Power. Edge R 900 – 4 x 8, 3 x 4 (shared) HP Pro. Liant DL 385 G 5 p – 2 x 8, 2 x 4 HP Pro. Liant DL 585 G 5 – 3 x 8, 4 x 4 HP Pro. Liant DL 785 G 5 – 3 x 16, 3 x 8, 5 x 4 Most PCI-E slots have dedicated bandwidth, some may be shared bandwidth (with expander chip)

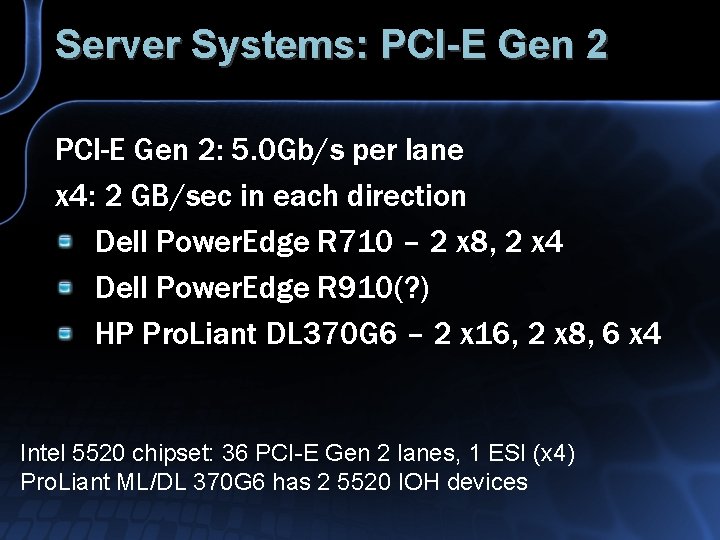

Server Systems: PCI-E Gen 2: 5. 0 Gb/s per lane x 4: 2 GB/sec in each direction Dell Power. Edge R 710 – 2 x 8, 2 x 4 Dell Power. Edge R 910(? ) HP Pro. Liant DL 370 G 6 – 2 x 16, 2 x 8, 6 x 4 Intel 5520 chipset: 36 PCI-E Gen 2 lanes, 1 ESI (x 4) Pro. Liant ML/DL 370 G 6 has 2 5520 IOH devices

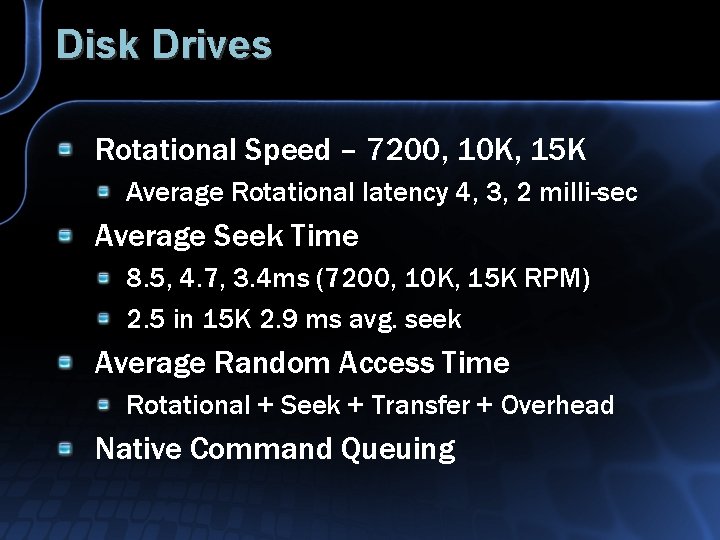

Disk Drives Rotational Speed – 7200, 10 K, 15 K Average Rotational latency 4, 3, 2 milli-sec Average Seek Time 8. 5, 4. 7, 3. 4 ms (7200, 10 K, 15 K RPM) 2. 5 in 15 K 2. 9 ms avg. seek Average Random Access Time Rotational + Seek + Transfer + Overhead Native Command Queuing

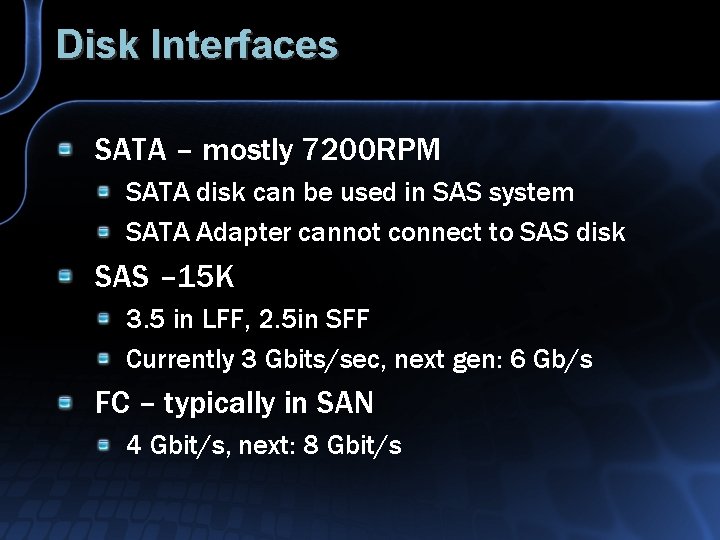

Disk Interfaces SATA – mostly 7200 RPM SATA disk can be used in SAS system SATA Adapter cannot connect to SAS disk SAS – 15 K 3. 5 in LFF, 2. 5 in SFF Currently 3 Gbits/sec, next gen: 6 Gb/s FC – typically in SAN 4 Gbit/s, next: 8 Gbit/s

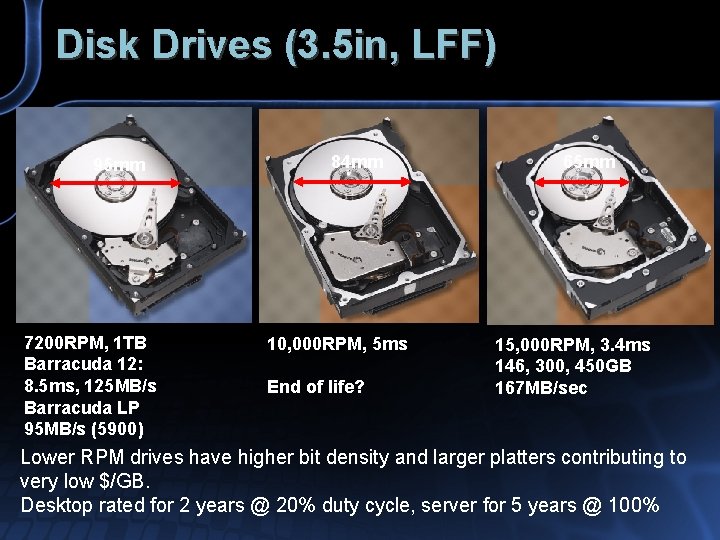

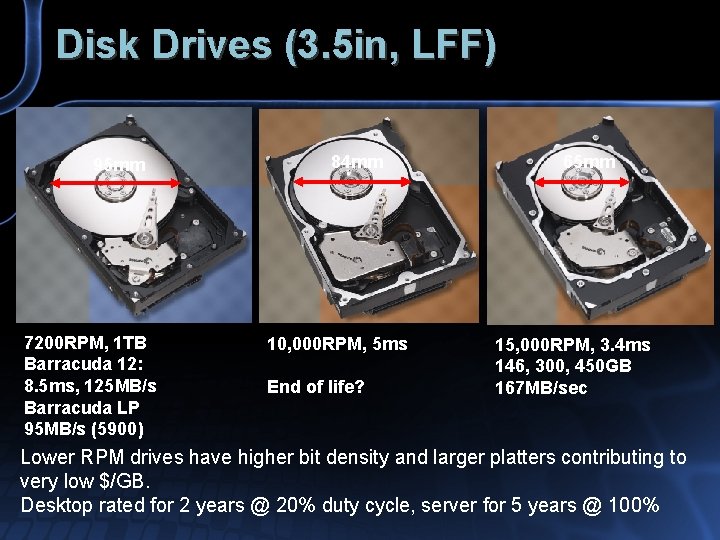

Disk Drives (3. 5 in, LFF) 95 mm 7200 RPM, 1 TB Barracuda 12: 8. 5 ms, 125 MB/s Barracuda LP 95 MB/s (5900) 84 mm 10, 000 RPM, 5 ms End of life? 65 mm 15, 000 RPM, 3. 4 ms 146, 300, 450 GB 167 MB/sec Lower RPM drives have higher bit density and larger platters contributing to very low $/GB. Desktop rated for 2 years @ 20% duty cycle, server for 5 years @ 100%

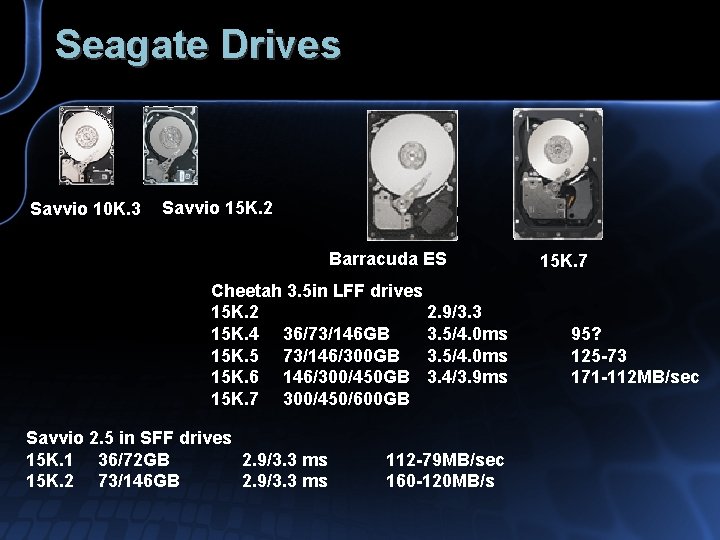

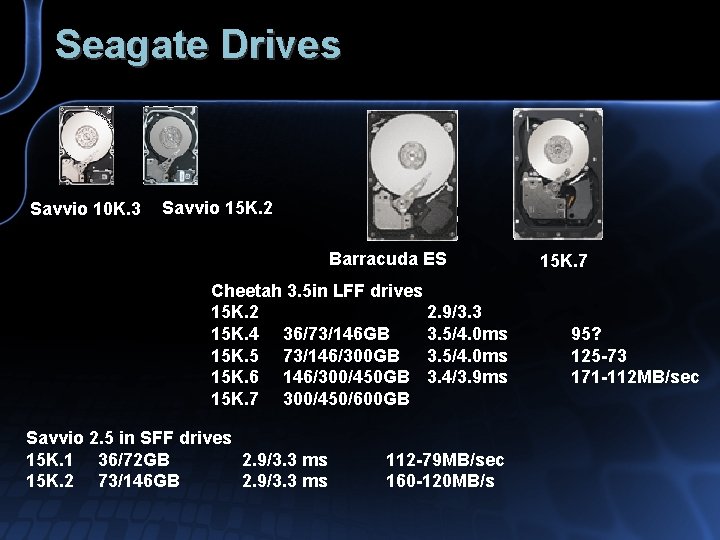

Seagate Drives Savvio 10 K. 3 Savvio 15 K. 2 Barracuda ES Cheetah 3. 5 in LFF drives 15 K. 2 2. 9/3. 3 15 K. 4 36/73/146 GB 3. 5/4. 0 ms 15 K. 5 73/146/300 GB 3. 5/4. 0 ms 15 K. 6 146/300/450 GB 3. 4/3. 9 ms 15 K. 7 300/450/600 GB Savvio 2. 5 in SFF drives 15 K. 1 36/72 GB 2. 9/3. 3 ms 15 K. 2 73/146 GB 2. 9/3. 3 ms 112 -79 MB/sec 160 -120 MB/s 15 K. 7 95? 125 -73 171 -112 MB/sec

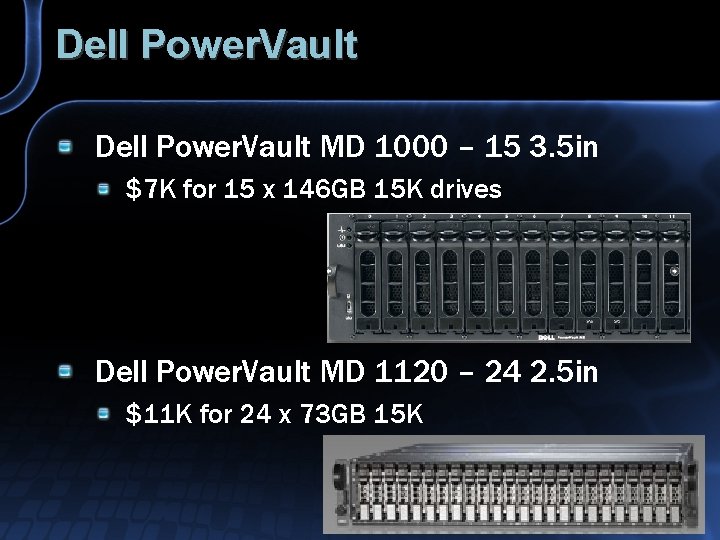

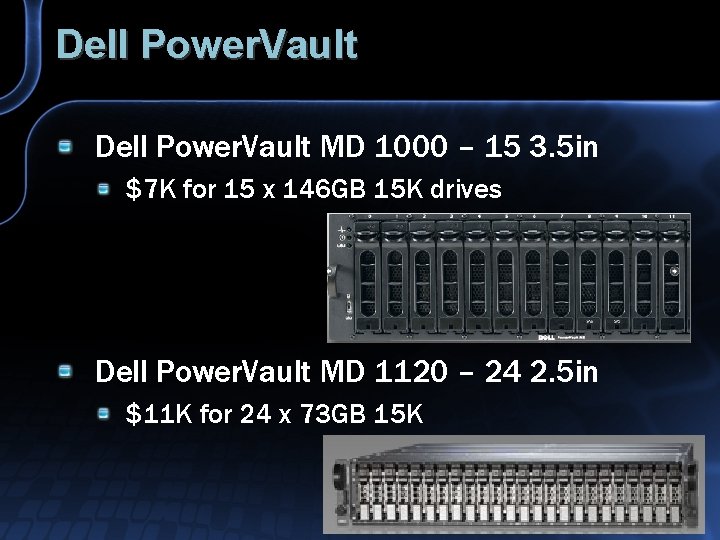

Dell Power. Vault MD 1000 – 15 3. 5 in $7 K for 15 x 146 GB 15 K drives Dell Power. Vault MD 1120 – 24 2. 5 in $11 K for 24 x 73 GB 15 K

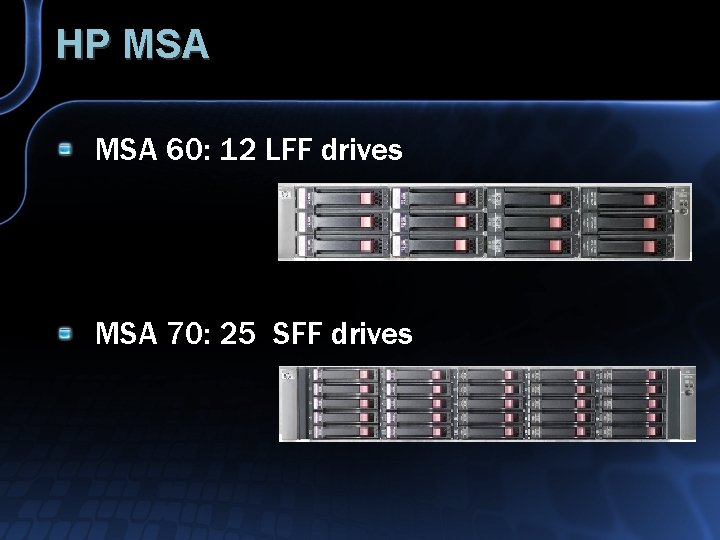

HP MSA 60: 12 LFF drives MSA 70: 25 SFF drives

Direct Attach Cluster Capable Dell Power. Vault MD 3000 – 15 3. 5 in 2 internal dual-port RAID controllers $11. 5 K for 15 x 146 G 15 K drives Listed as Direct Attach, but essentially an entry SAN

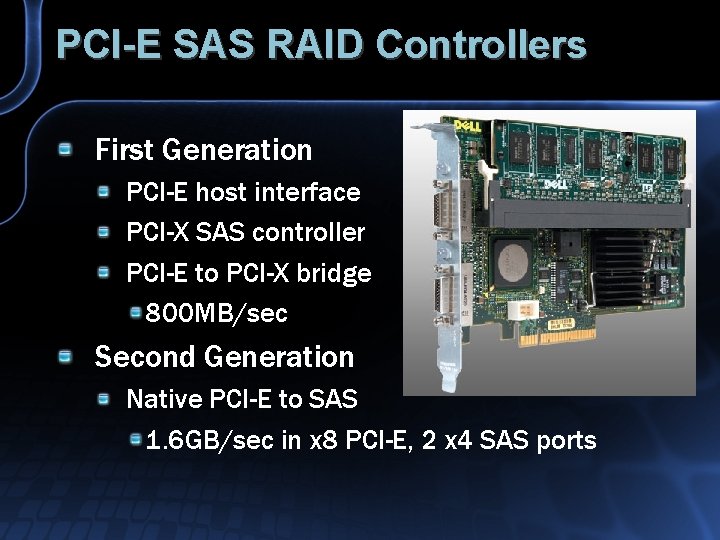

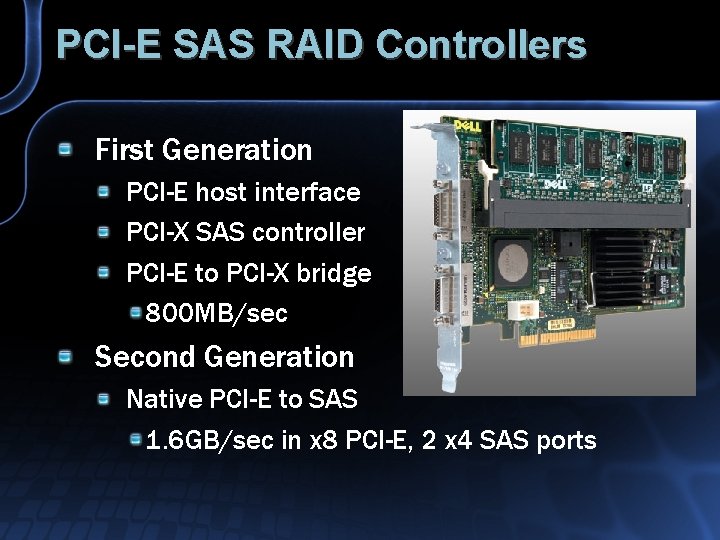

PCI-E SAS RAID Controllers First Generation PCI-E host interface PCI-X SAS controller PCI-E to PCI-X bridge 800 MB/sec Second Generation Native PCI-E to SAS 1. 6 GB/sec in x 8 PCI-E, 2 x 4 SAS ports

FC HBA QLogic QLE 2562 Dual port 8 Gbs FC, x 8 PCI-E Gen 2 QLogic QLE 2462 Dual Port 4 Gbs, x 4 PCI-E Gen 1 Qlogic QLE 2464 Quad port FC, x 8 PCI-E Gen 1 Emulex LPe 12002 Emulex LPe 11002/11004

Disk Performance

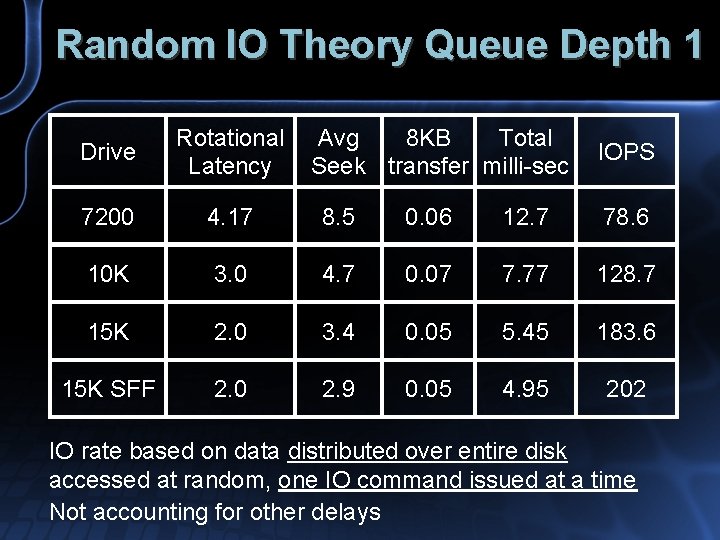

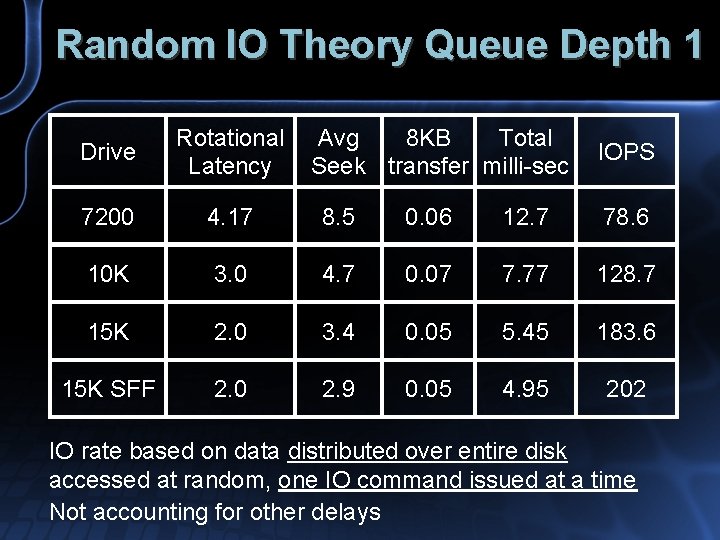

Random IO Theory Queue Depth 1 Drive Rotational Latency Avg 8 KB Total Seek transfer milli-sec 7200 4. 17 8. 5 0. 06 12. 7 78. 6 10 K 3. 0 4. 7 0. 07 7. 77 128. 7 15 K 2. 0 3. 4 0. 05 5. 45 183. 6 15 K SFF 2. 0 2. 9 0. 05 4. 95 202 IOPS IO rate based on data distributed over entire disk accessed at random, one IO command issued at a time Not accounting for other delays

Other Factors Short Stroke: Data is distributed over a fraction of the entire disk Average seek time is lower (track-to-track minimum) Command Queuing: More than one IO issued at a time, Disk can reorder individual IO accesses, lowering access time per IO

8 K Random IOPS vs Utilization IOPS for range of Queue depth and space utilization

Latency versus Queue Depth Latency versus Queue depth for range of space utilization

Disk Summary Frequently cited rules for random IO Applies to Queue Depth 1 Data spread across entire disk Key Factor Short-stroke High-Queue Depth SAN Complex SAN may hide SS and HQ behavior

SQL Server IO Patterns

SQL Server IO Transactional queries Read/Write Reporting / DW queries Checkpoints T-Log backups Differential/Full backups

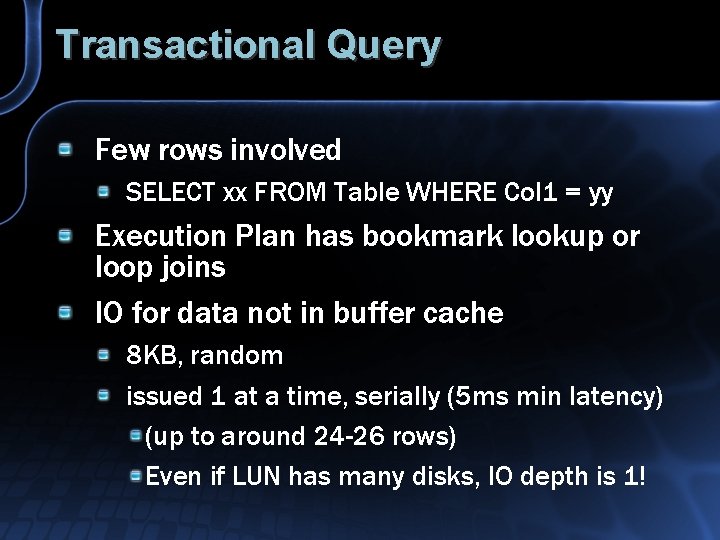

Transactional Query Few rows involved SELECT xx FROM Table WHERE Col 1 = yy Execution Plan has bookmark lookup or loop joins IO for data not in buffer cache 8 KB, random issued 1 at a time, serially (5 ms min latency) (up to around 24 -26 rows) Even if LUN has many disks, IO depth is 1!

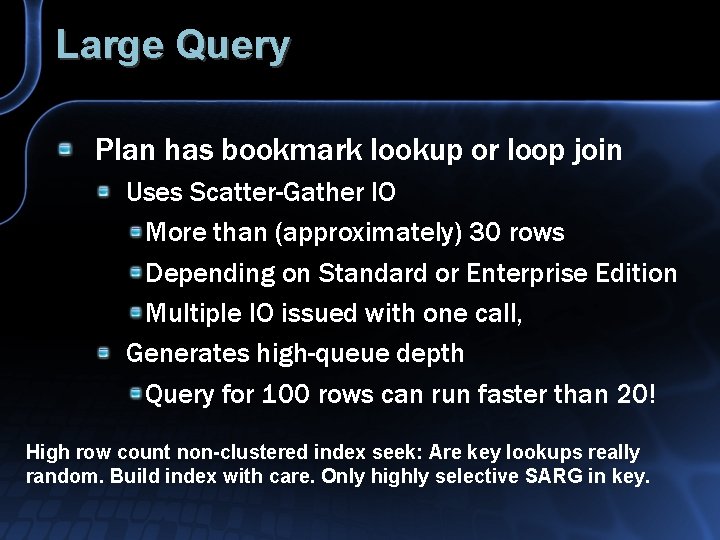

Large Query Plan has bookmark lookup or loop join Uses Scatter-Gather IO More than (approximately) 30 rows Depending on Standard or Enterprise Edition Multiple IO issued with one call, Generates high-queue depth Query for 100 rows can run faster than 20! High row count non-clustered index seek: Are key lookups really random. Build index with care. Only highly selective SARG in key.

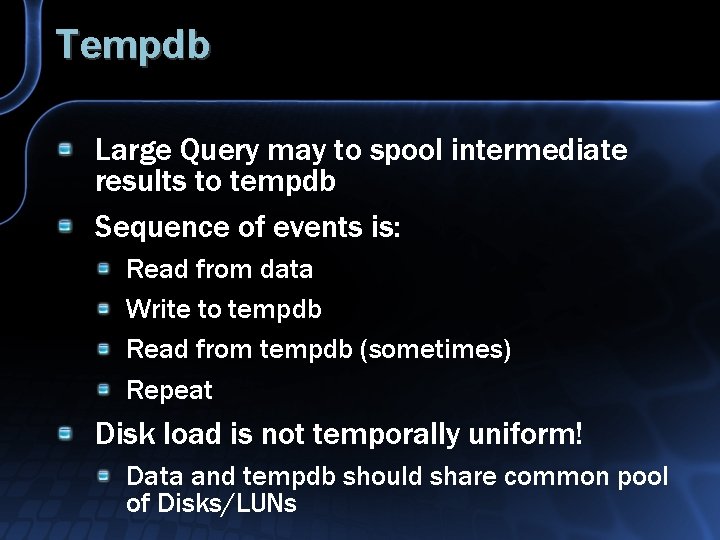

Tempdb Large Query may to spool intermediate results to tempdb Sequence of events is: Read from data Write to tempdb Read from tempdb (sometimes) Repeat Disk load is not temporally uniform! Data and tempdb should share common pool of Disks/LUNs

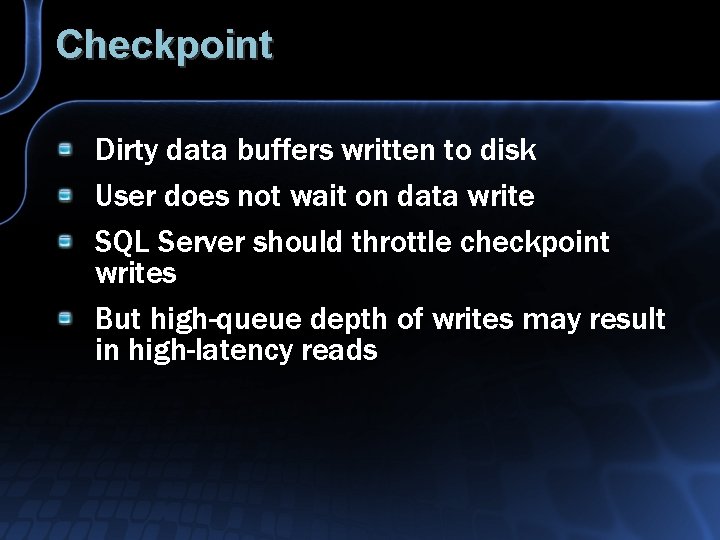

Checkpoint Dirty data buffers written to disk User does not wait on data write SQL Server should throttle checkpoint writes But high-queue depth of writes may result in high-latency reads

Log Backup Disrupts sequential log writes

Update Problem in SQL Server 2000 UPDATE uses non-clustered index Plan does not factor in key lookups Execution – fetch one row at a time ~5 -10 ms per key lookup

Storage Configuration Examples

General Strategy – Distribute IO across multiple PCI-E slots Distribute IO across multiple HBA/Controllers Distribute IO across many disk drives Daisy chain DAE only after High transaction (write) volume Dedicate HBA/controller, SAN SP, disk drives for logs?

LFF or SFF disks LFF 12 -15 disks per enclosure SFF 24 -25 disks per enclosure 15 disks on x 4 SAS, Total bandwidth: 800 MB/s, 53 MB/s per disk 24 disks on x 4 SAS, 33 MB/s

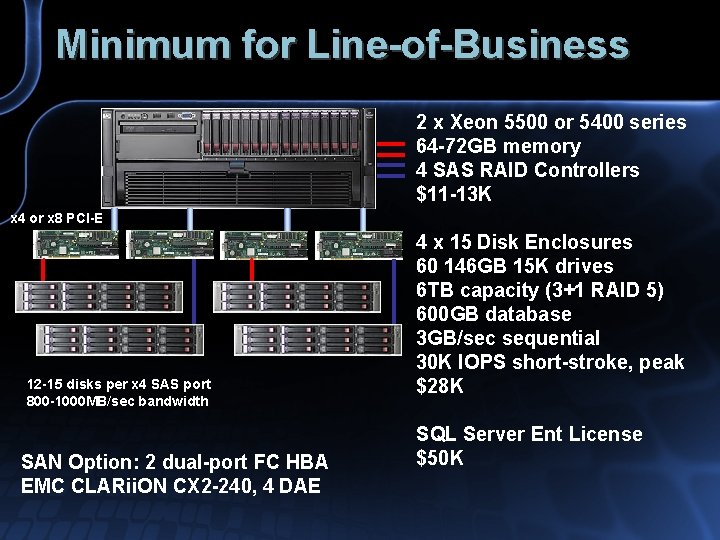

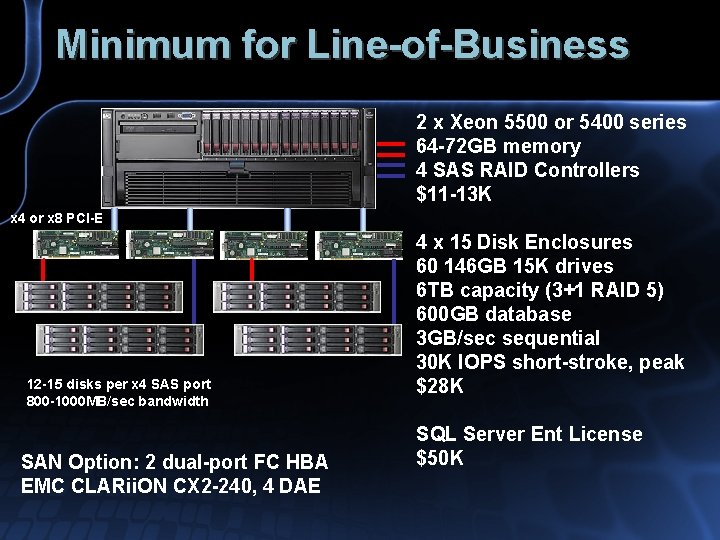

Minimum for Line-of-Business 2 x Xeon 5500 or 5400 series 64 -72 GB memory 4 SAS RAID Controllers $11 -13 K x 4 or x 8 PCI-E 12 -15 disks per x 4 SAS port 800 -1000 MB/sec bandwidth SAN Option: 2 dual-port FC HBA EMC CLARii. ON CX 2 -240, 4 DAE 4 x 15 Disk Enclosures 60 146 GB 15 K drives 6 TB capacity (3+1 RAID 5) 600 GB database 3 GB/sec sequential 30 K IOPS short-stroke, peak $28 K SQL Server Ent License $50 K

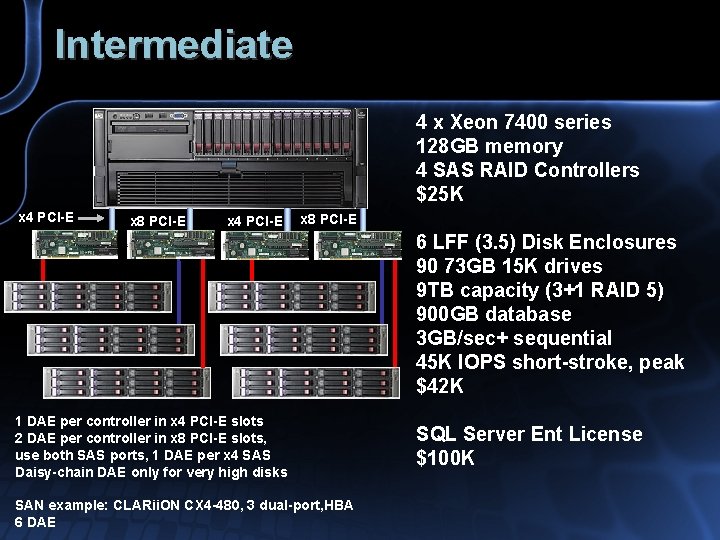

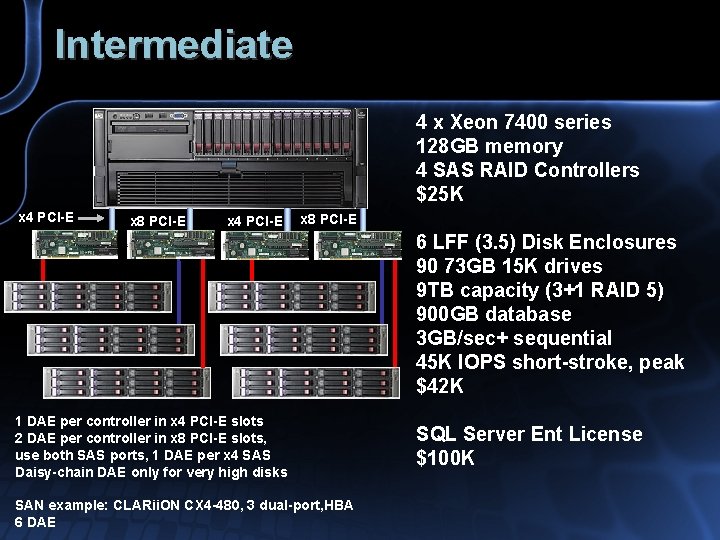

Intermediate 4 x Xeon 7400 series 128 GB memory 4 SAS RAID Controllers $25 K x 4 PCI-E x 8 PCI-E 6 LFF (3. 5) Disk Enclosures 90 73 GB 15 K drives 9 TB capacity (3+1 RAID 5) 900 GB database 3 GB/sec+ sequential 45 K IOPS short-stroke, peak $42 K 1 DAE per controller in x 4 PCI-E slots 2 DAE per controller in x 8 PCI-E slots, use both SAS ports, 1 DAE per x 4 SAS Daisy-chain DAE only for very high disks SAN example: CLARii. ON CX 4 -480, 3 dual-port, HBA 6 DAE SQL Server Ent License $100 K

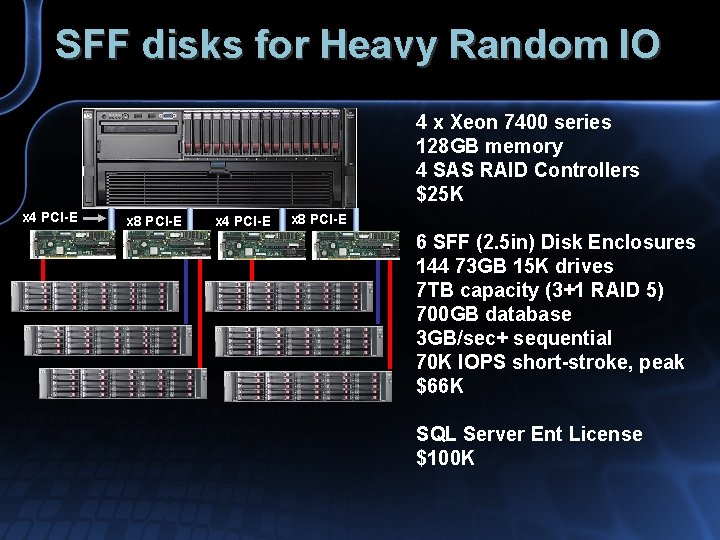

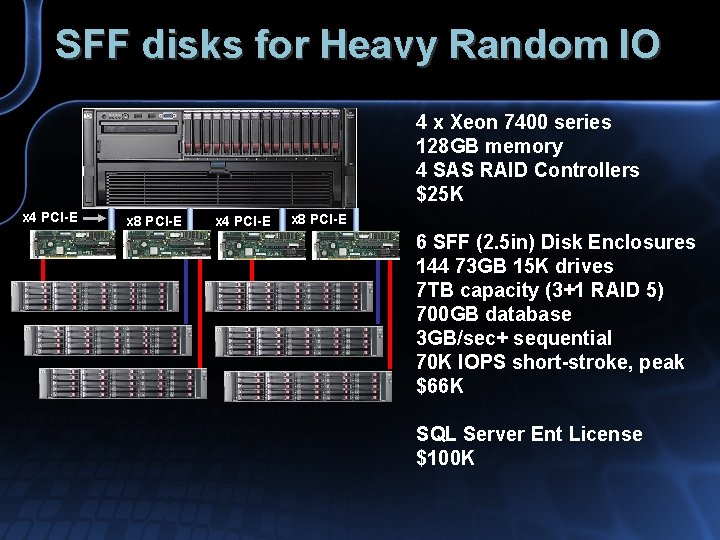

SFF disks for Heavy Random IO 4 x Xeon 7400 series 128 GB memory 4 SAS RAID Controllers $25 K x 4 PCI-E x 8 PCI-E 6 SFF (2. 5 in) Disk Enclosures 144 73 GB 15 K drives 7 TB capacity (3+1 RAID 5) 700 GB database 3 GB/sec+ sequential 70 K IOPS short-stroke, peak $66 K SQL Server Ent License $100 K

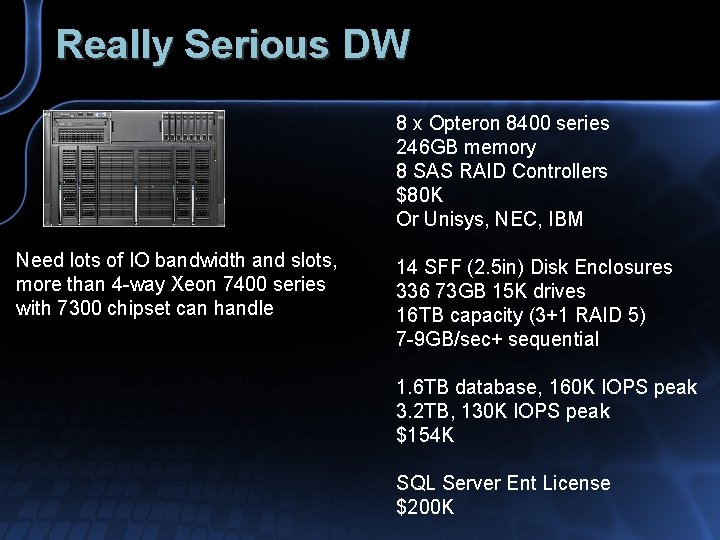

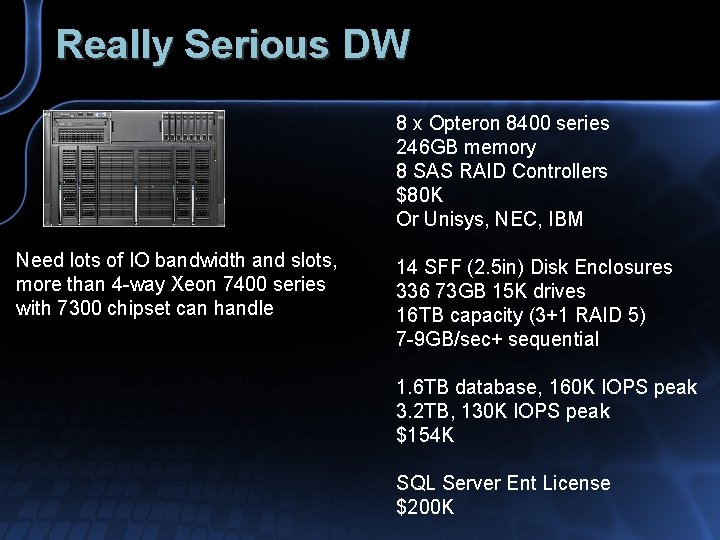

Really Serious DW 8 x Opteron 8400 series 246 GB memory 8 SAS RAID Controllers $80 K Or Unisys, NEC, IBM Need lots of IO bandwidth and slots, more than 4 -way Xeon 7400 series with 7300 chipset can handle 14 SFF (2. 5 in) Disk Enclosures 336 73 GB 15 K drives 16 TB capacity (3+1 RAID 5) 7 -9 GB/sec+ sequential 1. 6 TB database, 160 K IOPS peak 3. 2 TB, 130 K IOPS peak $154 K SQL Server Ent License $200 K

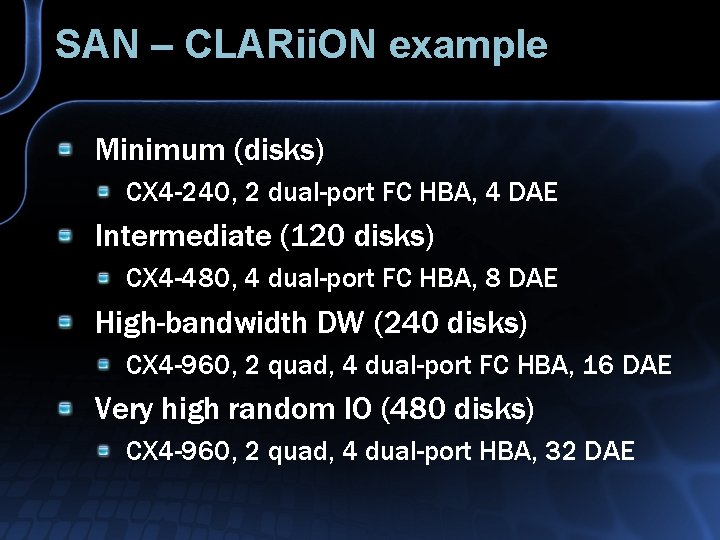

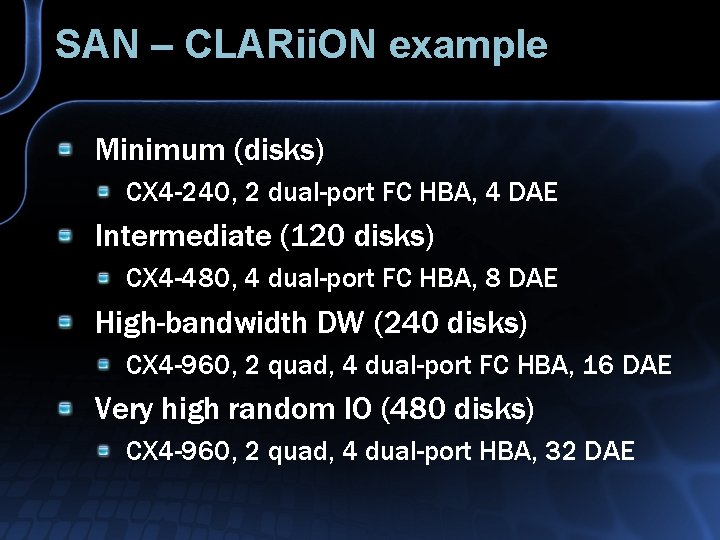

SAN – CLARii. ON example Minimum (disks) CX 4 -240, 2 dual-port FC HBA, 4 DAE Intermediate (120 disks) CX 4 -480, 4 dual-port FC HBA, 8 DAE High-bandwidth DW (240 disks) CX 4 -960, 2 quad, 4 dual-port FC HBA, 16 DAE Very high random IO (480 disks) CX 4 -960, 2 quad, 4 dual-port HBA, 32 DAE

Storage Performance Verification

What To Test Sequential Random low queue, high queue High row count Update with nonclustered index Checkpoint writes Full-stroke and Short-stroke

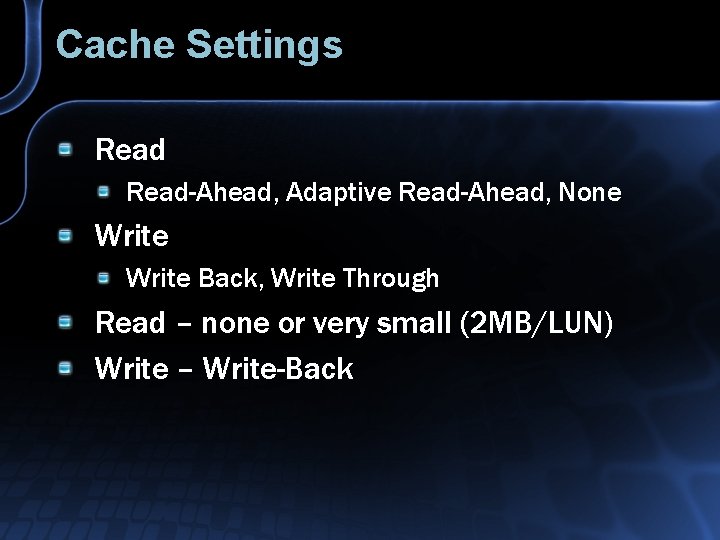

Cache Settings Read-Ahead, Adaptive Read-Ahead, None Write Back, Write Through Read – none or very small (2 MB/LUN) Write – Write-Back

SAN - HBA Settings Number. Of. Requests Default – 32? Prevents multiple hosts from overloading SAN Match to number of disks to control queue depth? Max. SGList

SSD

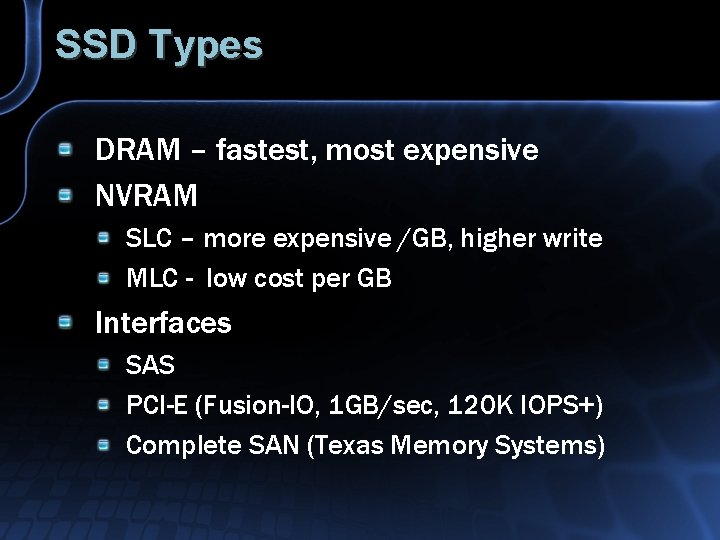

SSD Types DRAM – fastest, most expensive NVRAM SLC – more expensive /GB, higher write MLC - low cost per GB Interfaces SAS PCI-E (Fusion-IO, 1 GB/sec, 120 K IOPS+) Complete SAN (Texas Memory Systems)

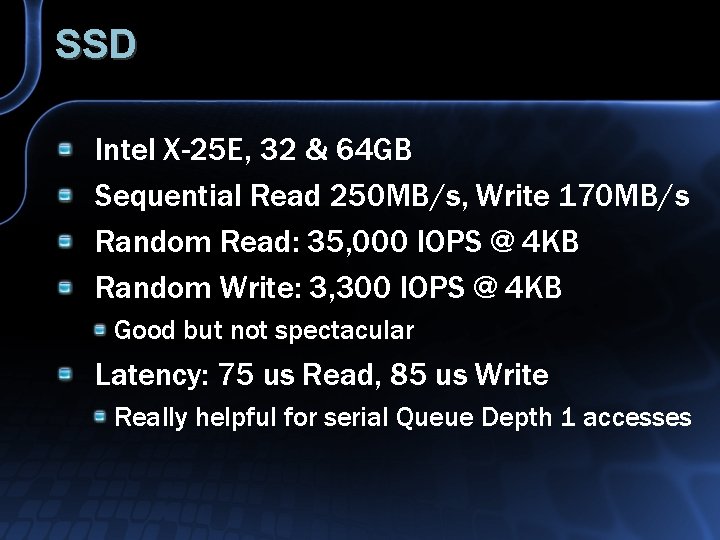

SSD Intel X-25 E, 32 & 64 GB Sequential Read 250 MB/s, Write 170 MB/s Random Read: 35, 000 IOPS @ 4 KB Random Write: 3, 300 IOPS @ 4 KB Good but not spectacular Latency: 75 us Read, 85 us Write Really helpful for serial Queue Depth 1 accesses

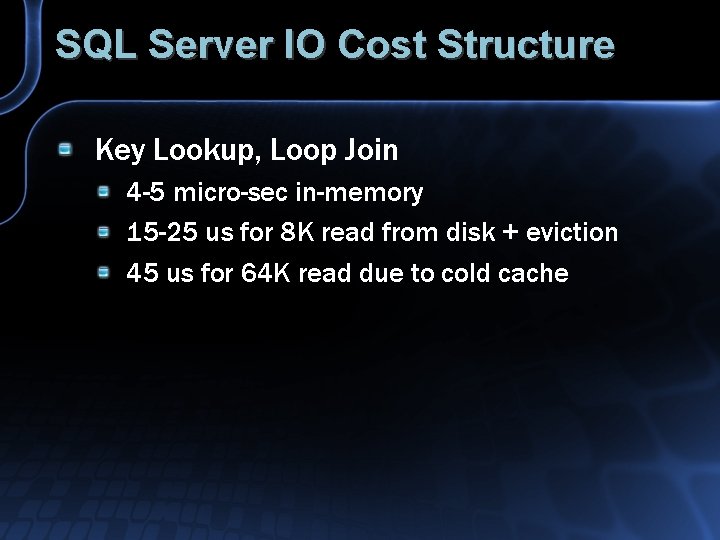

SQL Server IO Cost Structure Key Lookup, Loop Join 4 -5 micro-sec in-memory 15 -25 us for 8 K read from disk + eviction 45 us for 64 K read due to cold cache

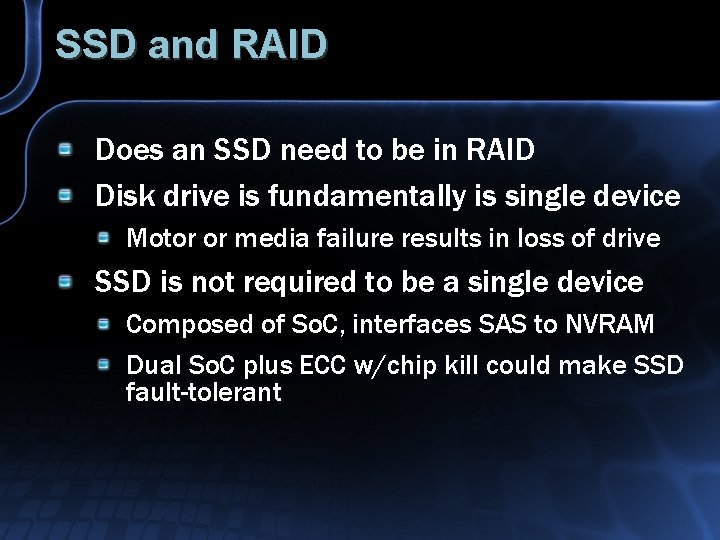

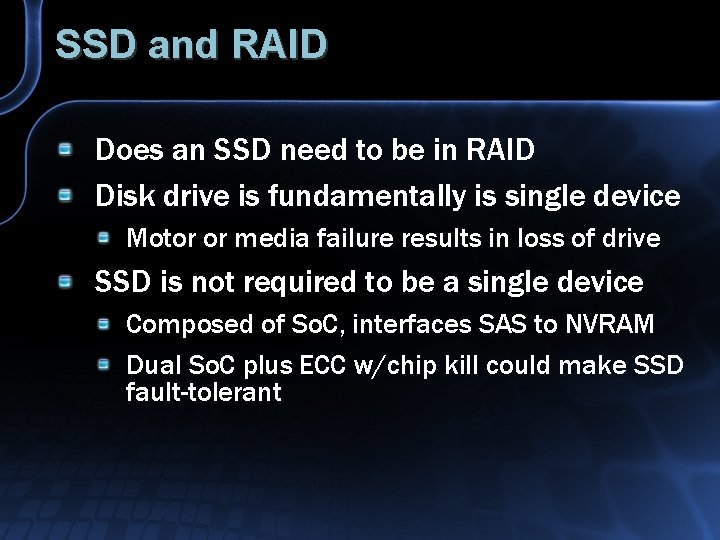

SSD and RAID Does an SSD need to be in RAID Disk drive is fundamentally is single device Motor or media failure results in loss of drive SSD is not required to be a single device Composed of So. C, interfaces SAS to NVRAM Dual So. C plus ECC w/chip kill could make SSD fault-tolerant

Additional Slides

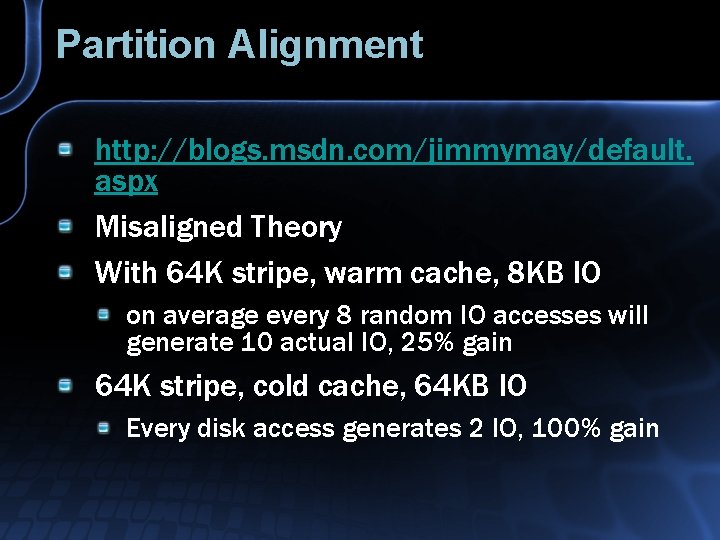

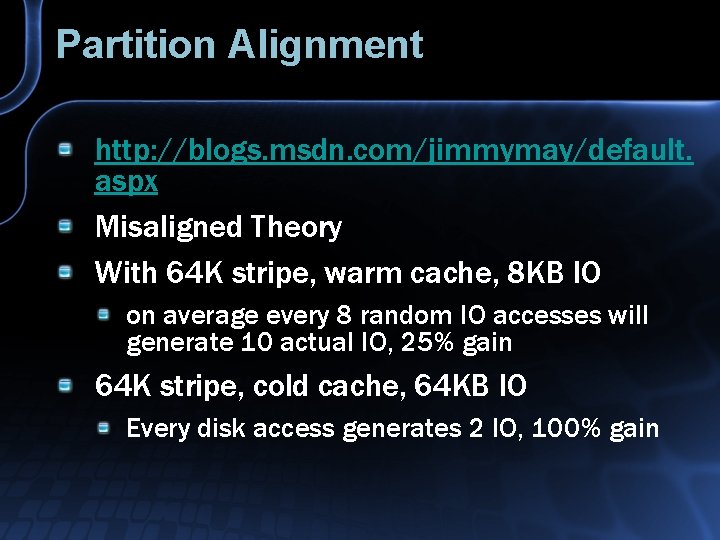

Partition Alignment http: //blogs. msdn. com/jimmymay/default. aspx Misaligned Theory With 64 K stripe, warm cache, 8 KB IO on average every 8 random IO accesses will generate 10 actual IO, 25% gain 64 K stripe, cold cache, 64 KB IO Every disk access generates 2 IO, 100% gain

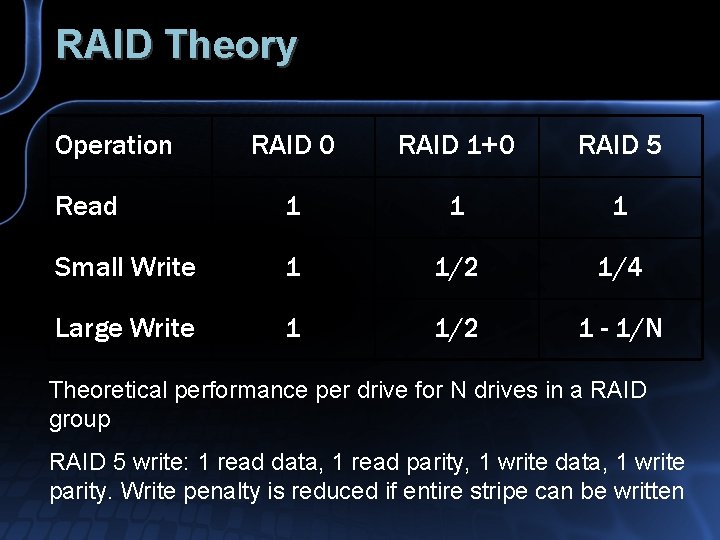

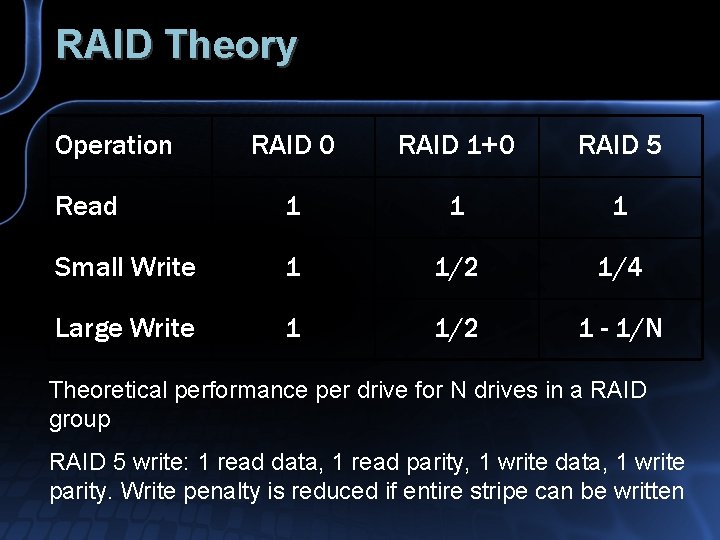

RAID Theory Operation RAID 0 RAID 1+0 RAID 5 Read 1 1 1 Small Write 1 1/2 1/4 Large Write 1 1/2 1 - 1/N Theoretical performance per drive for N drives in a RAID group RAID 5 write: 1 read data, 1 read parity, 1 write data, 1 write parity. Write penalty is reduced if entire stripe can be written

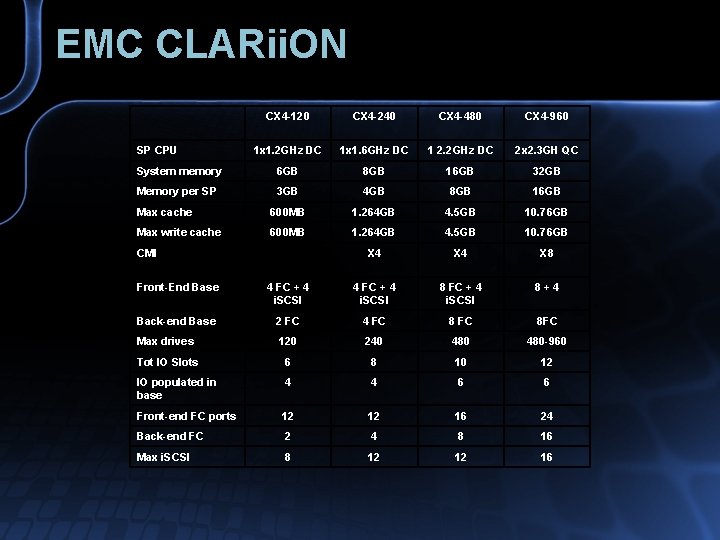

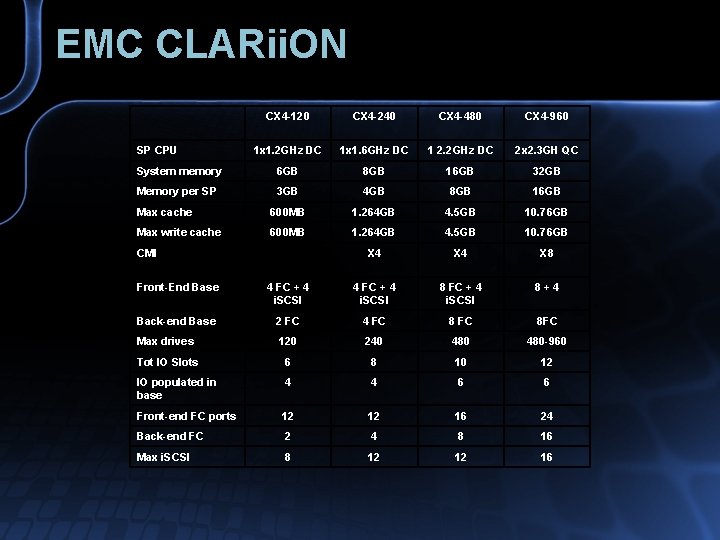

EMC CLARii. ON CX 4 -120 CX 4 -240 CX 4 -480 CX 4 -960 1 x 1. 2 GHz DC 1 x 1. 6 GHz DC 1 2. 2 GHz DC 2 x 2. 3 GH QC System memory 6 GB 8 GB 16 GB 32 GB Memory per SP 3 GB 4 GB 8 GB 16 GB Max cache 600 MB 1. 264 GB 4. 5 GB 10. 76 GB Max write cache 600 MB 1. 264 GB 4. 5 GB 10. 76 GB X 4 X 8 SP CPU CMI Front-End Base 4 FC + 4 i. SCSI 8+4 Back-end Base 2 FC 4 FC 8 FC Max drives 120 240 480 -960 Tot IO Slots 6 8 10 12 IO populated in base 4 4 6 6 Front-end FC ports 12 12 16 24 Back-end FC 2 4 8 16 Max i. SCSI 8 12 12 16

Net. App Write Anywhere File Layout (WAFL) Very different characteristics Overrides many standard database strategies No need to defragment See Net. App specific documents Index rebuild to clean up unused space may still be helpful

Enterprise SAN Massive cross-bar RAID groups RAID 5 3+1 or 7+1, RAID 10 2+2 or 4+4 Hyper Volume: 16 GB slices from RAID group LUNS created from Hyper Volumes Theory: Massive number disks, say 1000 disks, can do 150 K IOPS. Each server averages 10 K IOPS steady, with surges to 50 K. Many servers can share large SAN

Table Scan to Disk

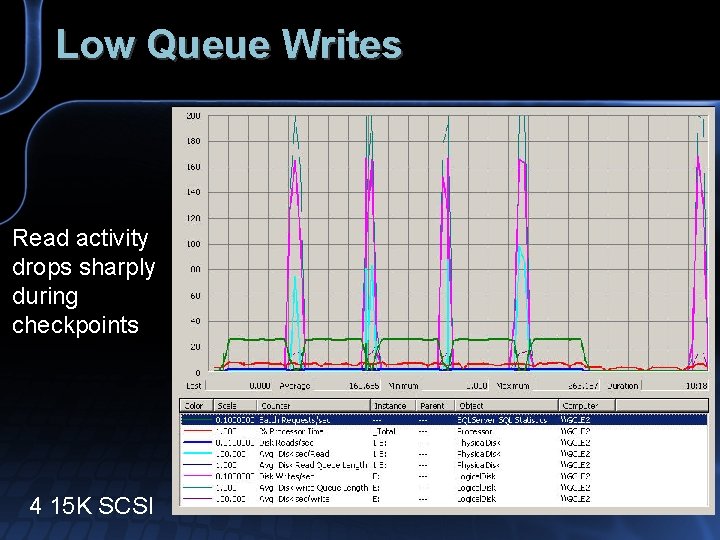

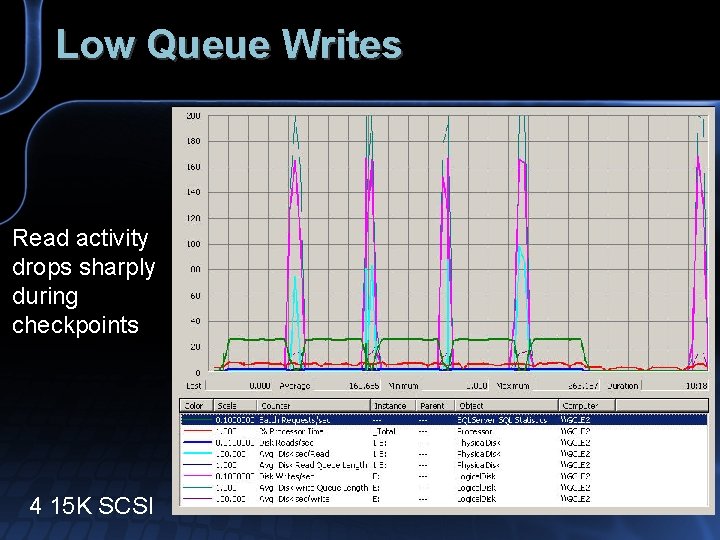

Low Queue Writes Read activity drops sharply during checkpoints 4 15 K SCSI

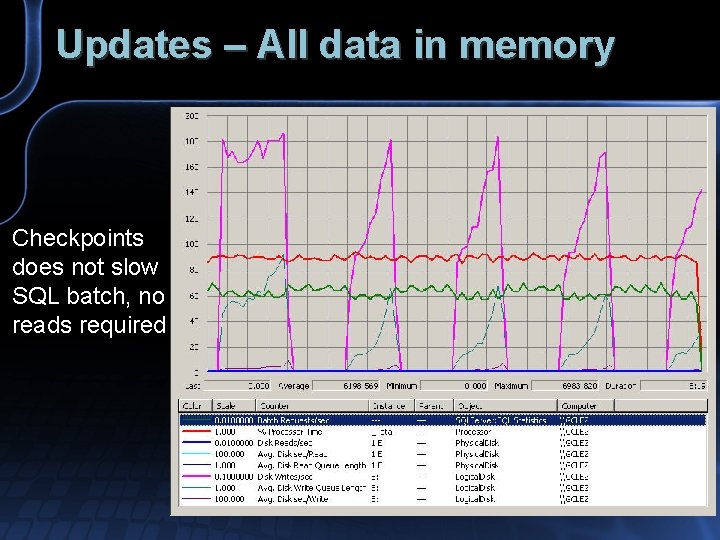

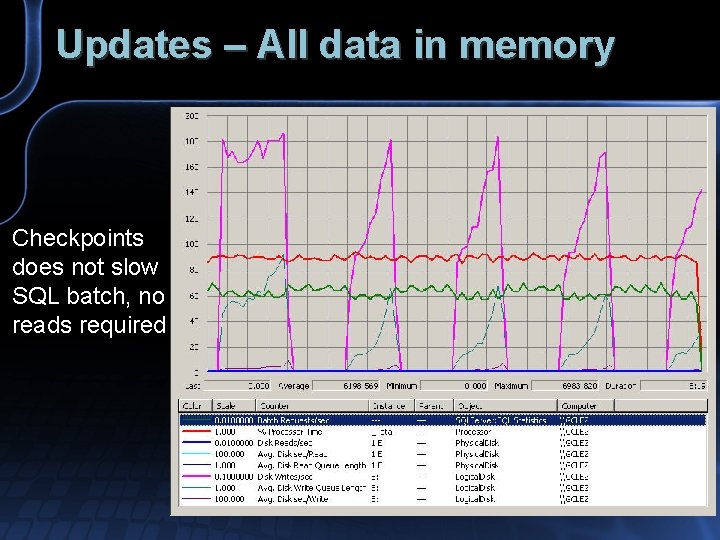

Updates – All data in memory Checkpoints does not slow SQL batch, no reads required

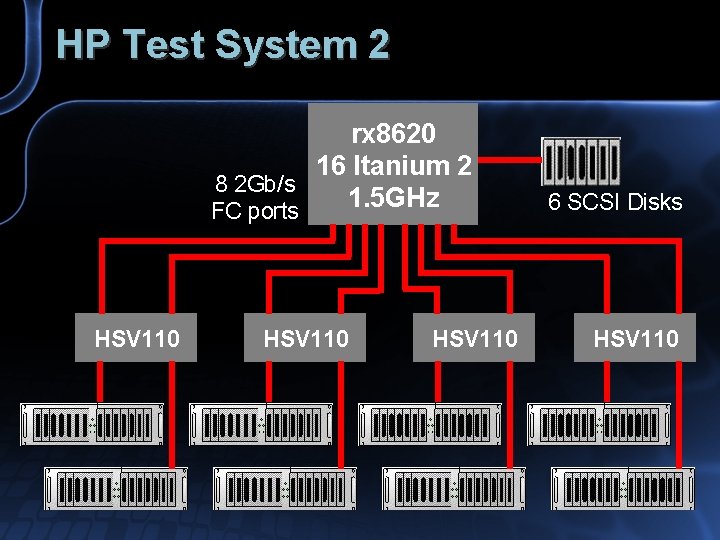

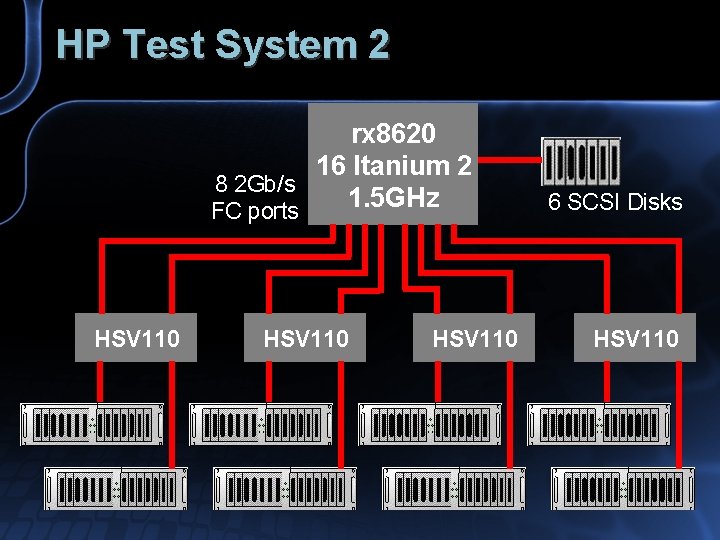

HP Test System 2 rx 8620 16 Itanium 2 8 2 Gb/s 1. 5 GHz FC ports HSV 110 6 SCSI Disks HSV 110