Data Warehouse Patterns and SQL Server Apr 2018

- Slides: 49

Data Warehouse Patterns and SQL Server (Apr 2018) Kevin S. Goff www. Kevin. SGoff. net Microsoft Data Platform (SQL Server) MVP

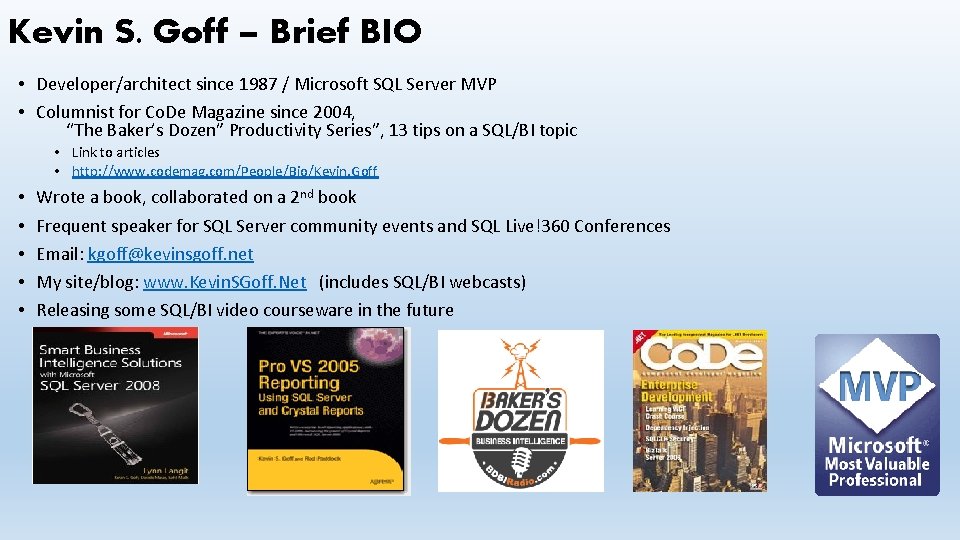

Kevin S. Goff – Brief BIO • Developer/architect since 1987 / Microsoft SQL Server MVP • Columnist for Co. De Magazine since 2004, “The Baker’s Dozen” Productivity Series”, 13 tips on a SQL/BI topic • Link to articles • http: //www. codemag. com/People/Bio/Kevin. Goff • • • Wrote a book, collaborated on a 2 nd book Frequent speaker for SQL Server community events and SQL Live!360 Conferences Email: kgoff@kevinsgoff. net My site/blog: www. Kevin. SGoff. Net (includes SQL/BI webcasts) Releasing some SQL/BI video courseware in the future

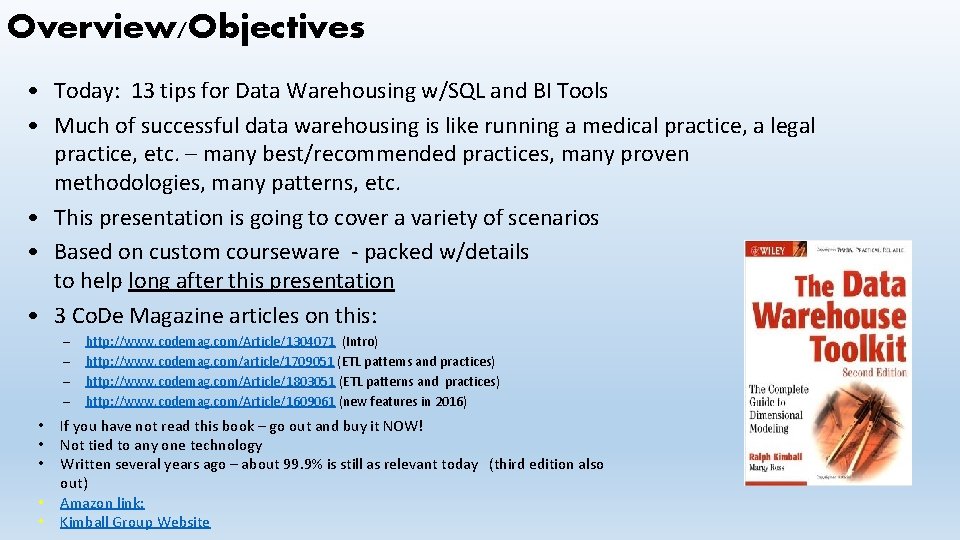

Overview/Objectives • Today: 13 tips for Data Warehousing w/SQL and BI Tools • Much of successful data warehousing is like running a medical practice, a legal practice, etc. – many best/recommended practices, many proven methodologies, many patterns, etc. • This presentation is going to cover a variety of scenarios • Based on custom courseware - packed w/details to help long after this presentation • 3 Co. De Magazine articles on this: – – • • • http: //www. codemag. com/Article/1304071 (Intro) http: //www. codemag. com/article/1709051 (ETL patterns and practices) http: //www. codemag. com/Article/1803051 (ETL patterns and practices) http: //www. codemag. com/Article/1609061 (new features in 2016) If you have not read this book – go out and buy it NOW! Not tied to any one technology Written several years ago – about 99. 9% is still as relevant today (third edition also out) Amazon link: Kimball Group Website

My most “controversial” topic • People either love this session (great evals) or they hate this session (not so great evals) • A ton of detail – many slides – hard to deliver in an hour • This presentation is a short step-means to a greater end • For some, a launching point into Data Warehouse Patterns • For others, maybe will fill in a few gaps • Regardless, those who will use this to continue the path of learning will get the most out of this

Some of the concepts to cover… • Granularity/Transaction Grain (level of detail of data) • Conformed/Common Dimensionality • Role playing dimensions • Hierarchies • Fact-Dimension Usage/Bus Matrix • Transactional vs Snapshot Fact Tables • Factless Fact Tables • Junk Dimensions • Star and Snowflake Schemas • Dimension Outriggers • Slowly changing dimensions • Early and Late Arriving Data • Capacity planning • Staging areas and operational data stores

Topics for today 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. Goals/overview of a data warehouse / analytic database Major components of a Data Warehouse (Facts and Dimensions) Cumulative Transactional Fact Tables Factless Fact Tables Periodic Snapshot Fact Tables Dimension Tables in General Type 2 Slowly Changing Dimensions Role-Playing Dimensions Junk Dimensions Dimension Outriggers Storing NULL values for Foreign Keys in Fact Tables - DON'T!!! Storing Ratios in Fact Tables - DON'T!!! ETL Checklists

1 -Goals of a Data Warehouse/Analytic Database Home • Data Warehouses are relational databases, comprised of two major items • Fact Tables and Dimension Tables • Fact Tables can be transactional, factless, or periodic snapshot • Fact/Dimension Models (“Dimensional Modeling”) involves structuring/relating fact/dimensions to reflect types of questions/information that users want from the data • Different ways/patterns for joining Facts and Dimensions • More often than not, Data Warehouses (or Data Marts) are flat and de-normalized • Sometimes companies will build analytic databases (i. e. OLAP cubes using Analysis Services) from Data Warehouses

1 -Goals of a Data Warehouse/Analytic Database “I want to look at order request volume by market, for the last three months, compared to the same time period a year ago” “Executives need to see revenue by month for this year, broken out by pricing components and by Material Types” “I want to look at the ratio of daily inventory counts with respect to weekly shipments, by auto program and part number, over the last year” “I want to see a weekly scorecard of sales and returns with respect to our goals and thresholds – by market, sales rep, and brand” What word do we see in common in each of these requests/”user stories”? Home

1 -Goals of a Data Warehouse/Analytic Database “I want to look at order request volume by market, for the last three months, compared to the same time period a year ago” “Executives need to see revenue by month for this year, broken out by pricing components and by Material Types” “I want to look at the ratio of daily inventory counts with respect to weekly shipments, by auto program and part number, over the last year” “I want to see a weekly scorecard of sales and returns with respect to our goals and thresholds – by market, sales rep, and brand” Answer: the word “BY” Facts (Measures): data collected as part of business process we want to aggregate/analyze Dimensions: business entities to describe/provide context for facts Home

1 -Goals of a Data Warehouse/Analytic Database “I want to look at order request volume by market, for the last three months, compared to the same time period a year ago” “Executives need to see revenue by month for this year, broken out by pricing components and by Material Types” “I want to look at the ratio of daily inventory counts with respect to weekly shipments, by auto program and part number, over the last year” “I want to see a weekly scorecard of sales and returns with respect to our goals and thresholds – by market, sales rep, and brand” Fact/Dimension Modeling: • Process of organizing facts and dimensions to facilitate “easy” access for users to answer these questions. • Along the way, we need to identify the grain at which facts/measures exist • How facts relate to dimensions • How they are collected • How we can aggregate them. Home

1 -Goals of a Data Warehouse/Analytic Database A few key points Home • Data Warehouse methodology is different than Relational Normalized Methodology • Data Warehouses and Data Marts are generally more de-normalized and flat • Why? • Transaction systems utilizing normalized methodologies need to get data into the database as efficiently as possible, and to validate PK/FK constraints • Data Warehouse/Data Mart systems – more interested in getting data “out” of the system • A key process in data warehousing – shaping data into Fact/Dimension models • Once you shape data into Fact/Dimension structures, can use many tools • (“If you build it, they will come”) • Discovery process is challenging – requirements from many areas

1 -Goals of a Data Warehouse/Analytic Database • Think of a customer order • The order is for a product(s), and that product has attributes (brand, category, business groupings) • The order has an order date, a due date, a request date, a promise date, etc. • The order is for a specific customer, and the sales agreement for the order has a specific ship-to • The order is for a specific steel grade, material type, industry part number, part dimensions, etc. Home

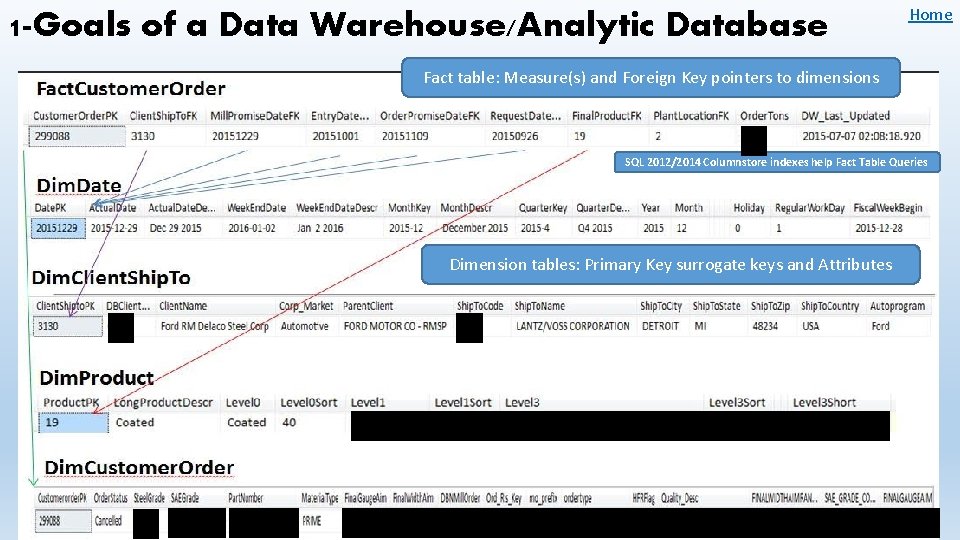

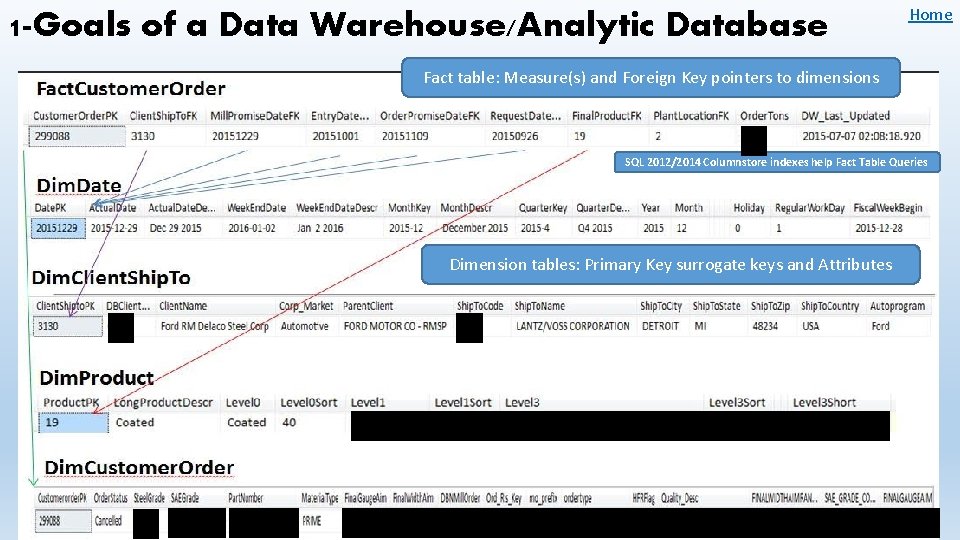

1 -Goals of a Data Warehouse/Analytic Database Home Fact table: Measure(s) and Foreign Key pointers to dimensions SQL 2012/2014 Columnstore indexes help Fact Table Queries Dimension tables: Primary Key surrogate keys and Attributes

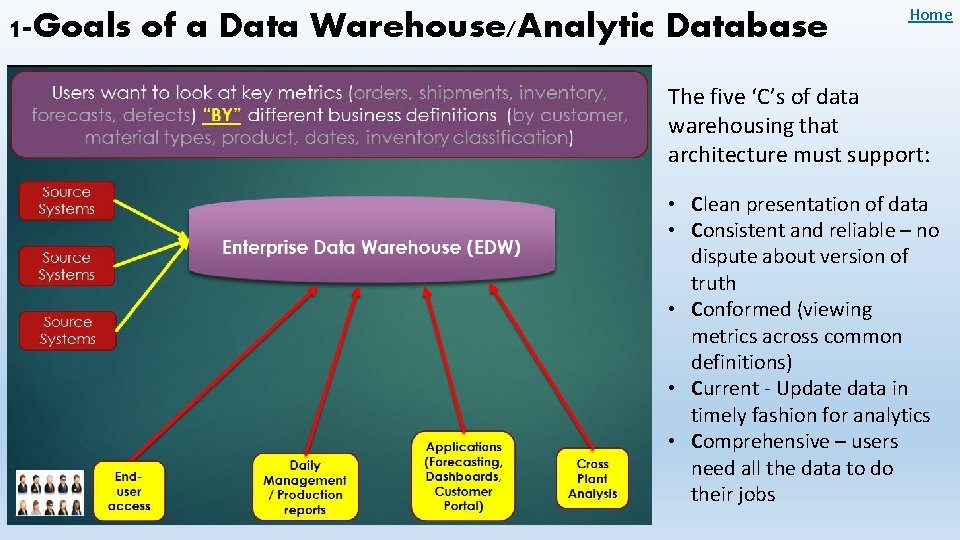

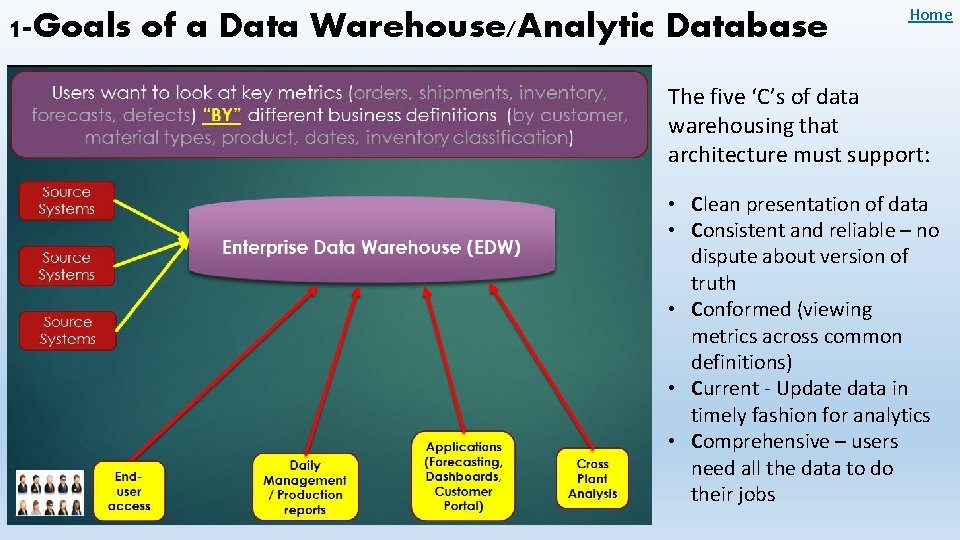

1 -Goals of a Data Warehouse/Analytic Database Home The five ‘C’s of data warehousing that architecture must support: • Clean presentation of data • Consistent and reliable – no dispute about version of truth • Conformed (viewing metrics across common definitions) • Current - Update data in timely fashion for analytics • Comprehensive – users need all the data to do their jobs

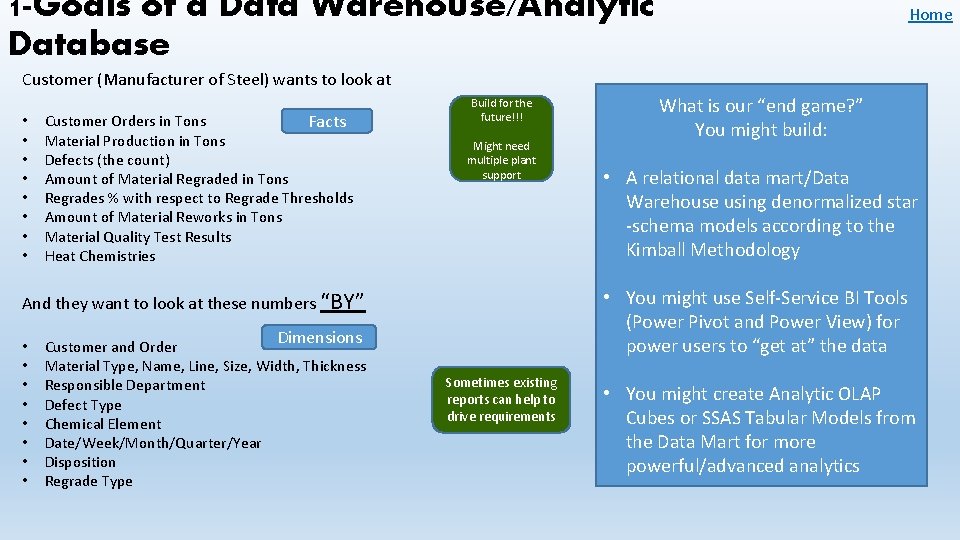

1 -Goals of a Data Warehouse/Analytic Database Home Customer (Manufacturer of Steel) wants to look at • • Customer Orders in Tons Facts Material Production in Tons Defects (the count) Amount of Material Regraded in Tons Regrades % with respect to Regrade Thresholds Amount of Material Reworks in Tons Material Quality Test Results Heat Chemistries Build for the future!!! Might need multiple plant support Dimensions Customer and Order Material Type, Name, Line, Size, Width, Thickness Responsible Department Defect Type Chemical Element Date/Week/Month/Quarter/Year Disposition Regrade Type • A relational data mart/Data Warehouse using denormalized star -schema models according to the Kimball Methodology • You might use Self-Service BI Tools (Power Pivot and Power View) for power users to “get at” the data And they want to look at these numbers “BY” • • What is our “end game? ” You might build: Sometimes existing reports can help to drive requirements • You might create Analytic OLAP Cubes or SSAS Tabular Models from the Data Mart for more powerful/advanced analytics

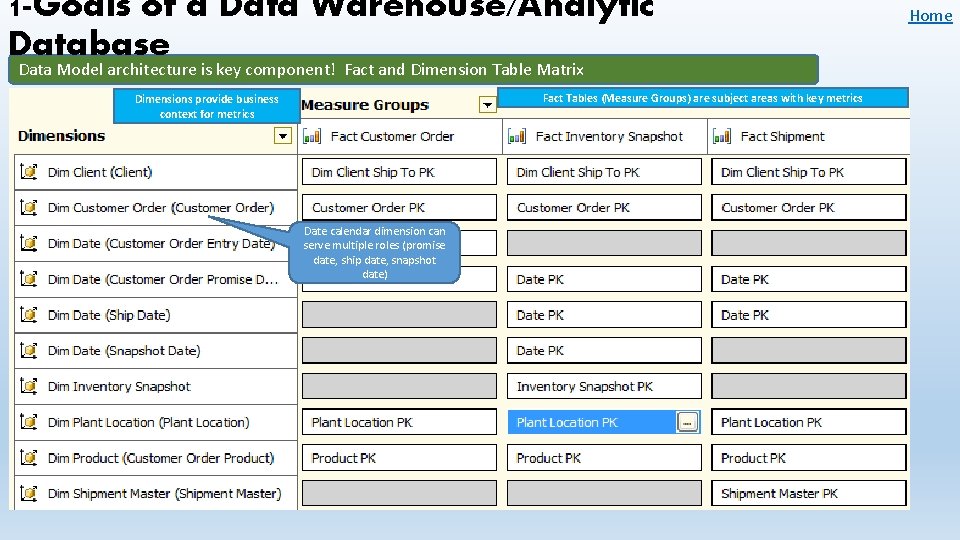

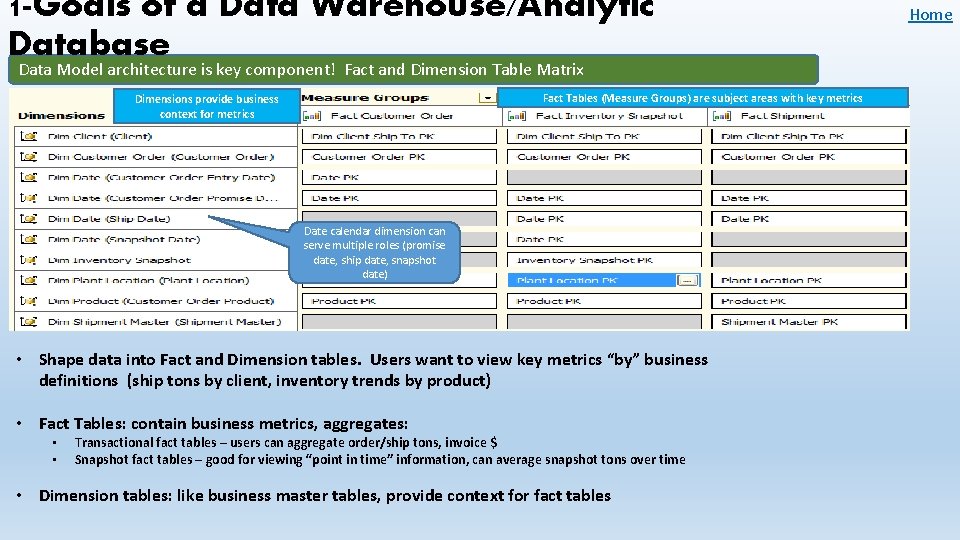

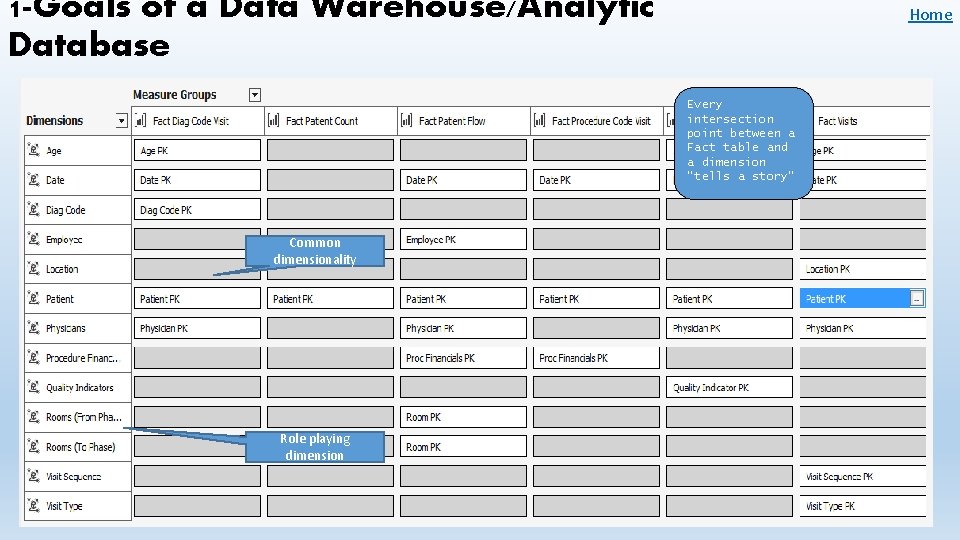

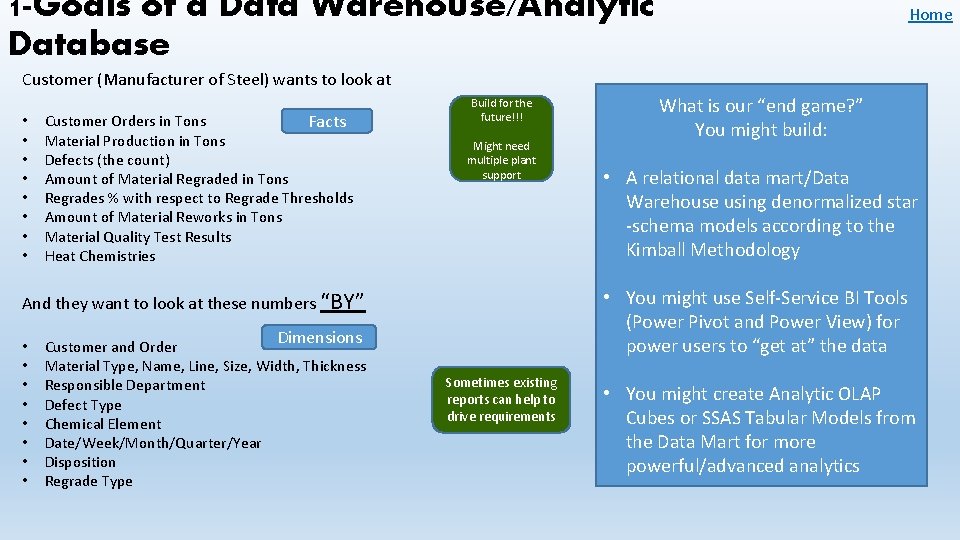

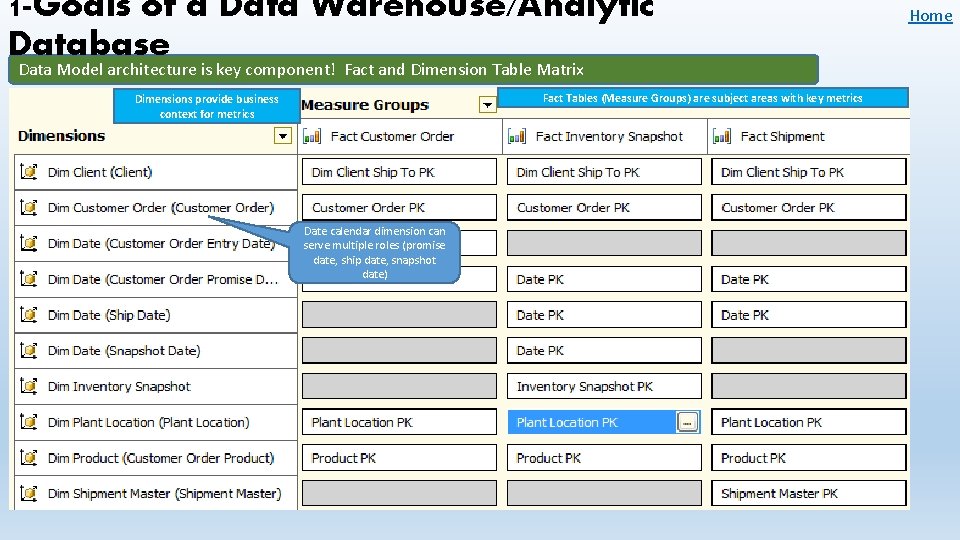

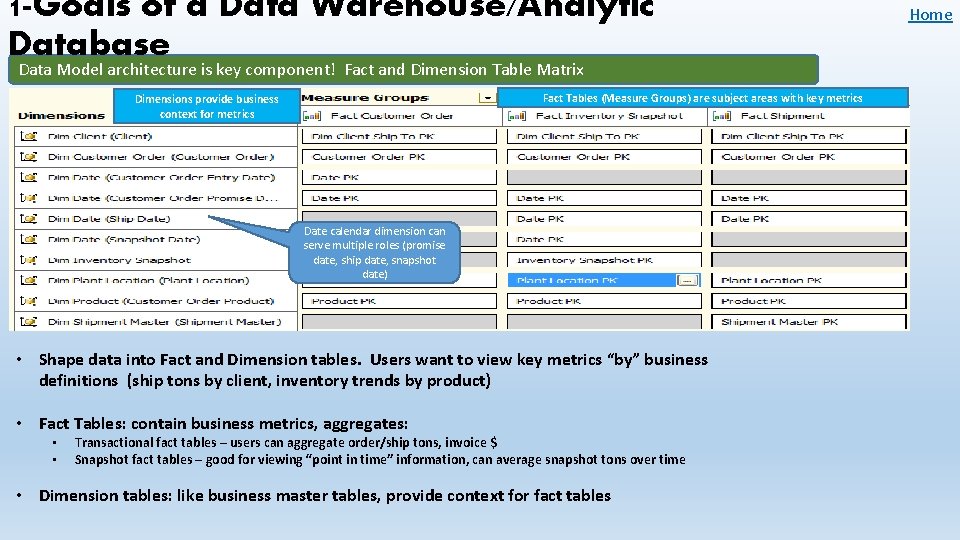

1 -Goals of a Data Warehouse/Analytic Database Data Model architecture is key component! Fact and Dimension Table Matrix Fact Tables (Measure Groups) are subject areas with key metrics Dimensions provide business context for metrics Date calendar dimension can serve multiple roles (promise date, ship date, snapshot date) Home

1 -Goals of a Data Warehouse/Analytic Database Data Model architecture is key component! Fact and Dimension Table Matrix Fact Tables (Measure Groups) are subject areas with key metrics Dimensions provide business context for metrics Date calendar dimension can serve multiple roles (promise date, ship date, snapshot date) • Shape data into Fact and Dimension tables. Users want to view key metrics “by” business definitions (ship tons by client, inventory trends by product) • Fact Tables: contain business metrics, aggregates: • • Transactional fact tables – users can aggregate order/ship tons, invoice $ Snapshot fact tables – good for viewing “point in time” information, can average snapshot tons over time • Dimension tables: like business master tables, provide context for fact tables Home

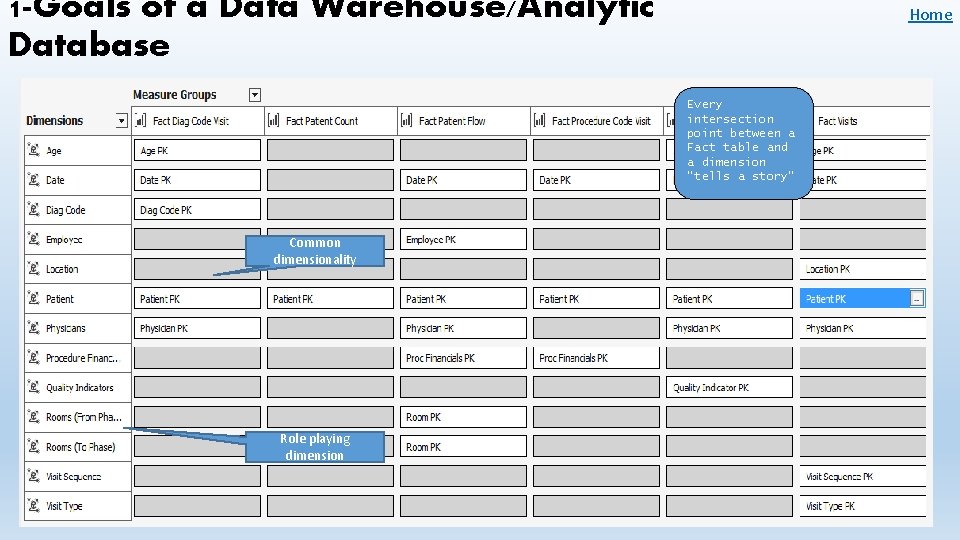

1 -Goals of a Data Warehouse/Analytic Database Home Every intersection point between a Fact table and a dimension “tells a story” Common dimensionality Role playing dimension

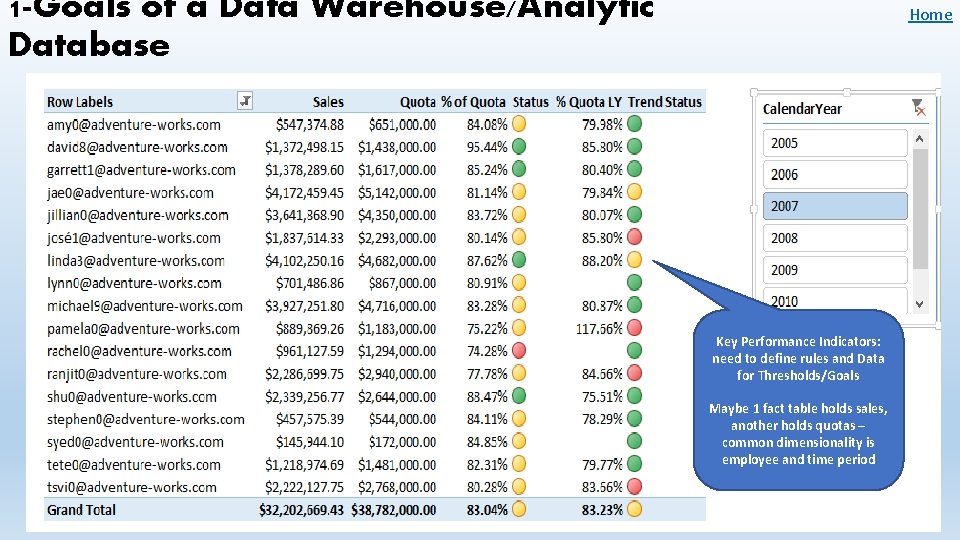

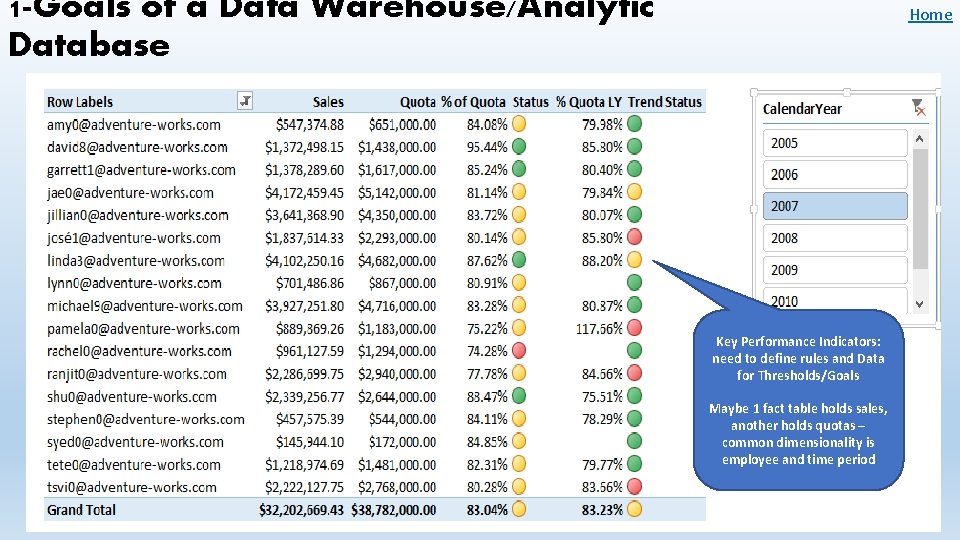

1 -Goals of a Data Warehouse/Analytic Database Home Key Performance Indicators: need to define rules and Data for Thresholds/Goals Maybe 1 fact table holds sales, another holds quotas – common dimensionality is employee and time period

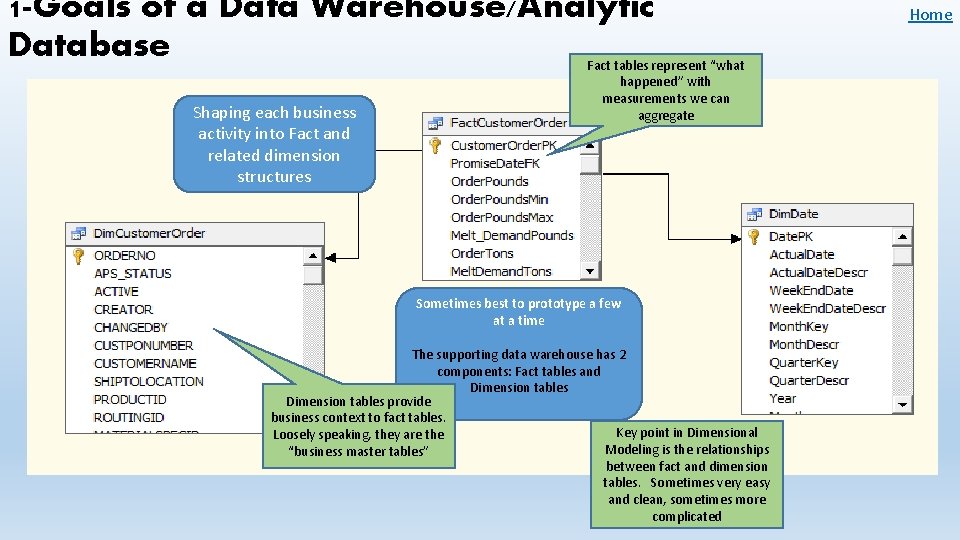

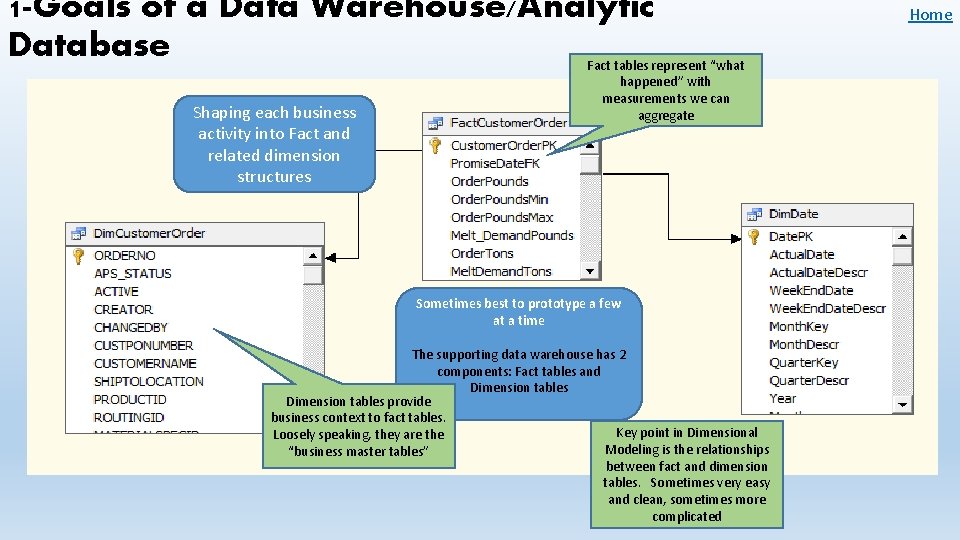

1 -Goals of a Data Warehouse/Analytic Database Shaping each business activity into Fact and related dimension structures Fact tables represent “what happened” with measurements we can aggregate Sometimes best to prototype a few at a time The supporting data warehouse has 2 components: Fact tables and Dimension tables provide business context to fact tables. Key point in Dimensional Loosely speaking, they are the Modeling is the relationships “business master tables” between fact and dimension tables. Sometimes very easy and clean, sometimes more complicated Home

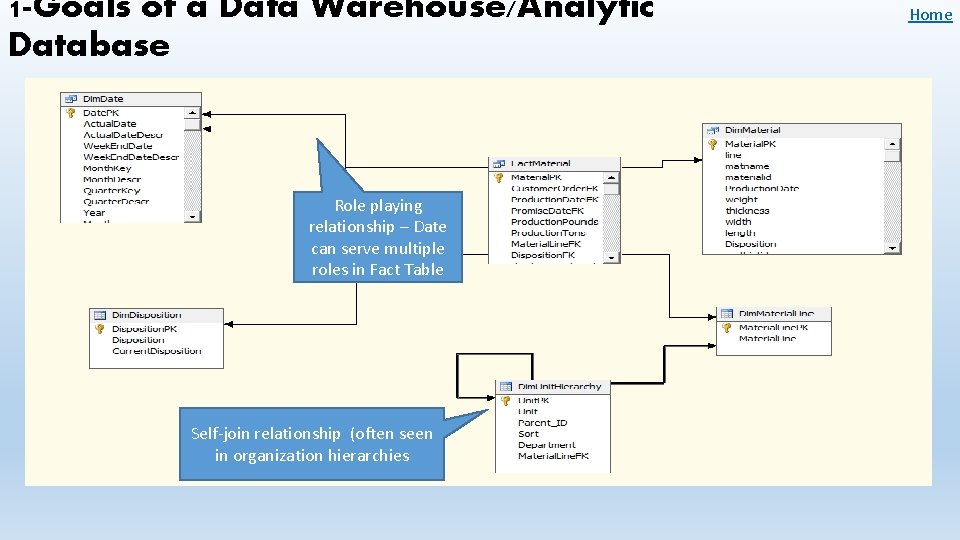

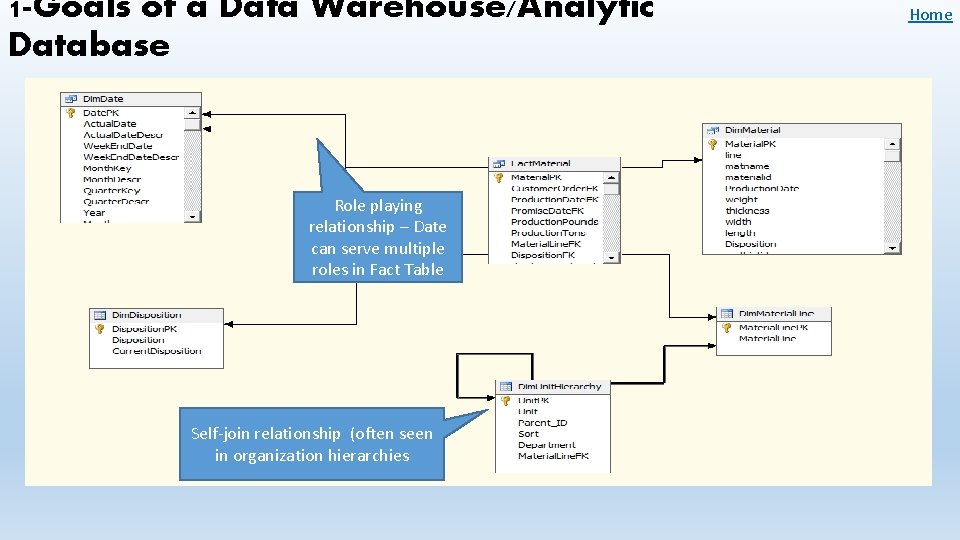

1 -Goals of a Data Warehouse/Analytic Database Role playing relationship – Date can serve multiple roles in Fact Table Self-join relationship (often seen in organization hierarchies Home

1 -Goals of a Data Warehouse/Analytic Database Factless Fact table – no measures, just a tally of “how many times something happened” Home

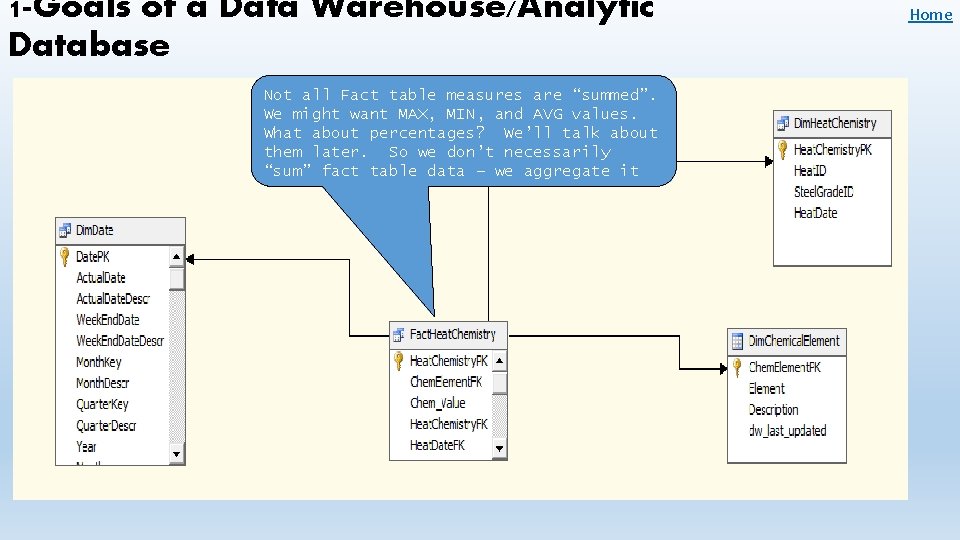

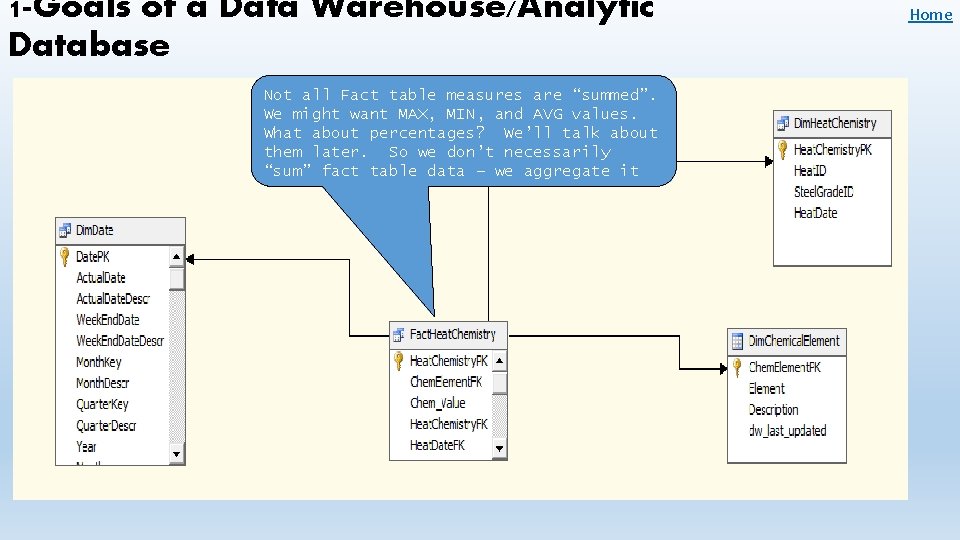

1 -Goals of a Data Warehouse/Analytic Database Not all Fact table measures are “summed”. We might want MAX, MIN, and AVG values. What about percentages? We’ll talk about them later. So we don’t necessarily “sum” fact table data – we aggregate it Home

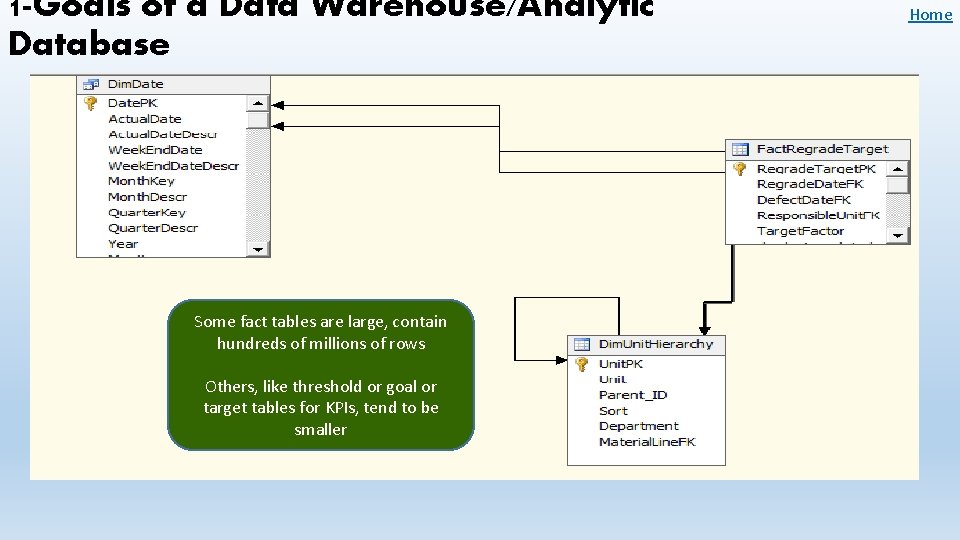

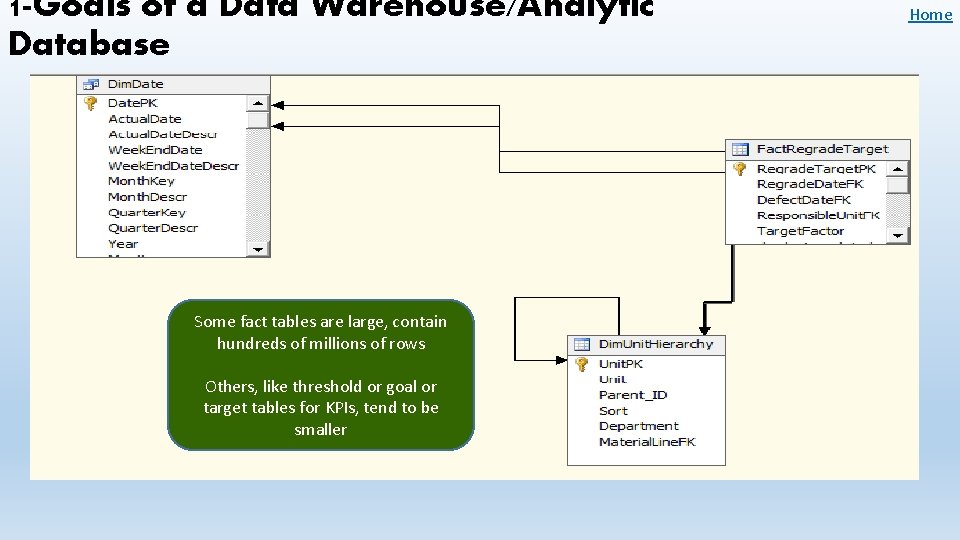

1 -Goals of a Data Warehouse/Analytic Database Some fact tables are large, contain hundreds of millions of rows Others, like threshold or goal or target tables for KPIs, tend to be smaller Home

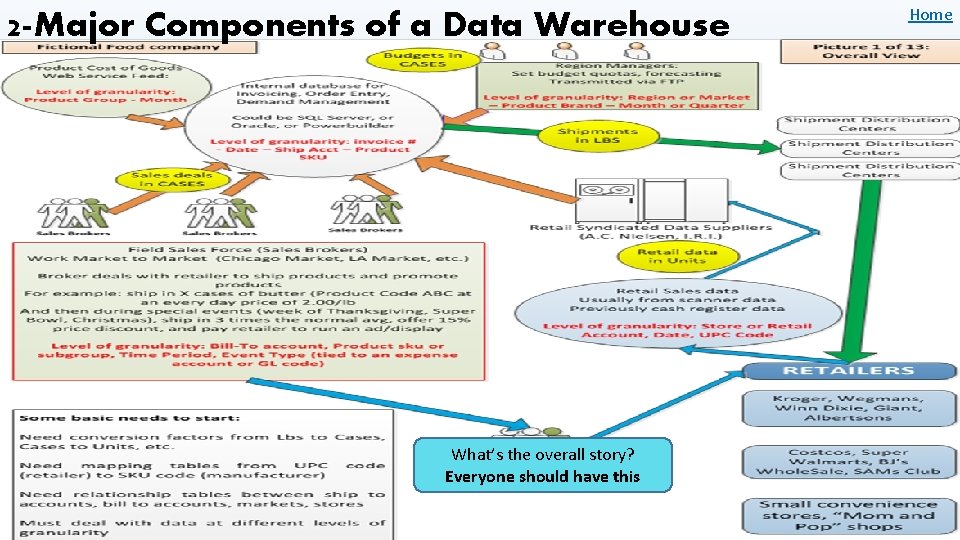

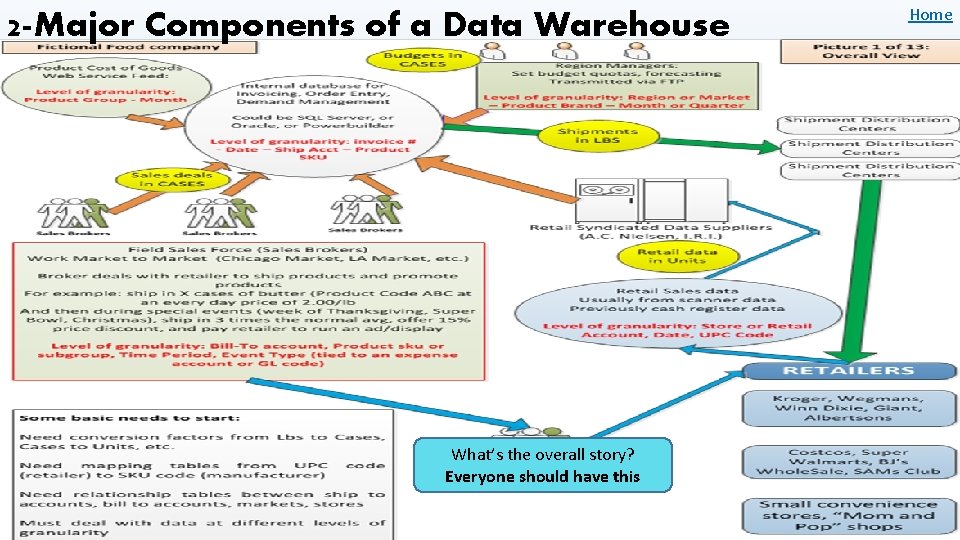

2 -Major Components of a Data Warehouse What’s the overall story? Everyone should have this Home

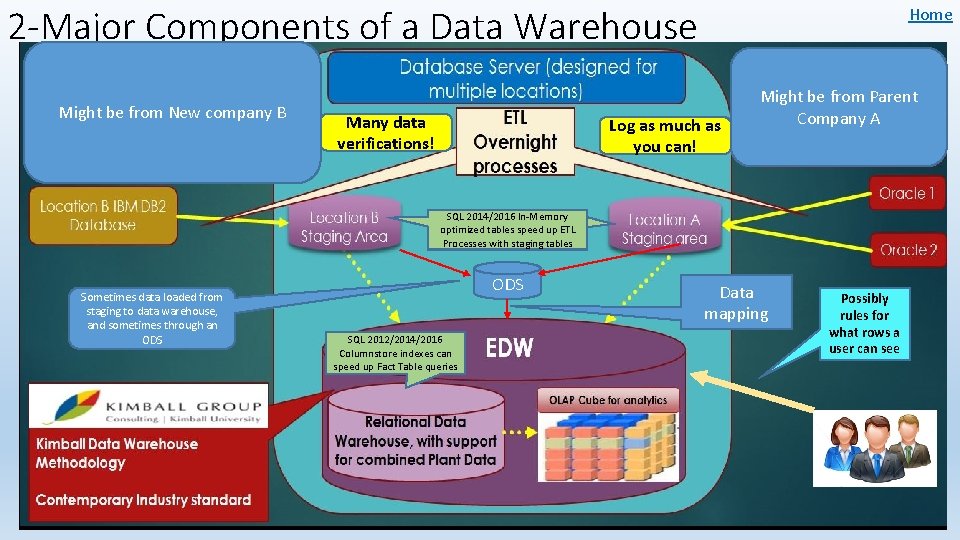

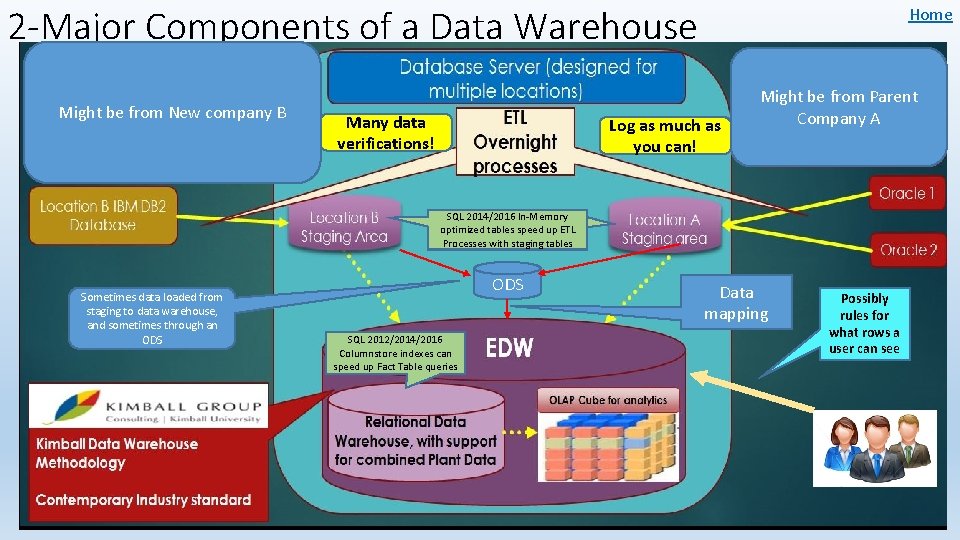

2 -Major Components of a Data Warehouse Might be from New company B Many data verifications! Home Log as much as you can! Might be from Parent Company A SQL 2014/2016 In-Memory SQL 2014 In-Memory optimized tables speed up ETL tables speed things up Processes with staging tables Sometimes data loaded from staging to data warehouse, and sometimes through an ODS SQL 2012/2014/2016 Columnstore indexes can speed up Fact Table queries Data mapping Possibly rules for what rows a user can see

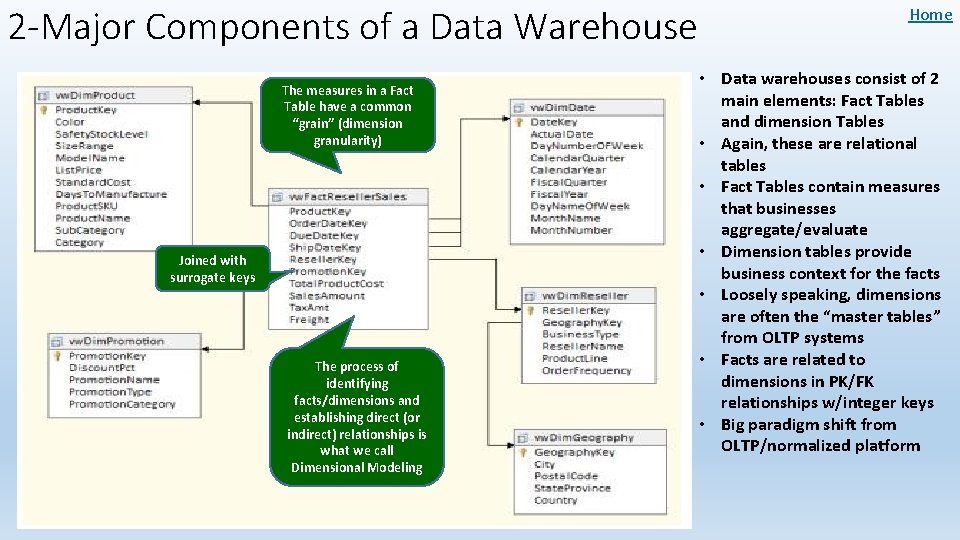

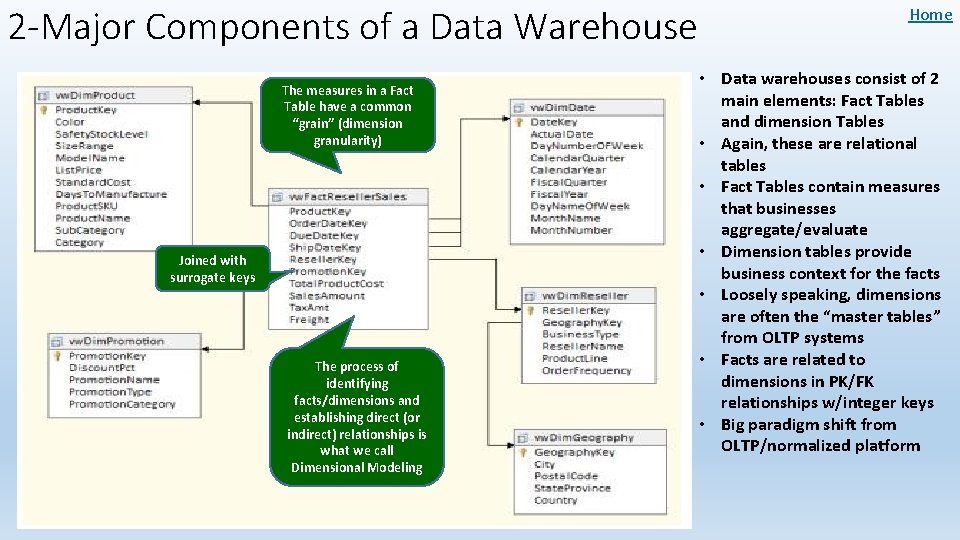

2 -Major Components of a Data Warehouse The measures in a Fact Table have a common “grain” (dimension granularity) Joined with surrogate keys The process of identifying facts/dimensions and establishing direct (or indirect) relationships is what we call Dimensional Modeling Home • Data warehouses consist of 2 main elements: Fact Tables and dimension Tables • Again, these are relational tables • Fact Tables contain measures that businesses aggregate/evaluate • Dimension tables provide business context for the facts • Loosely speaking, dimensions are often the “master tables” from OLTP systems • Facts are related to dimensions in PK/FK relationships w/integer keys • Big paradigm shift from OLTP/normalized platform

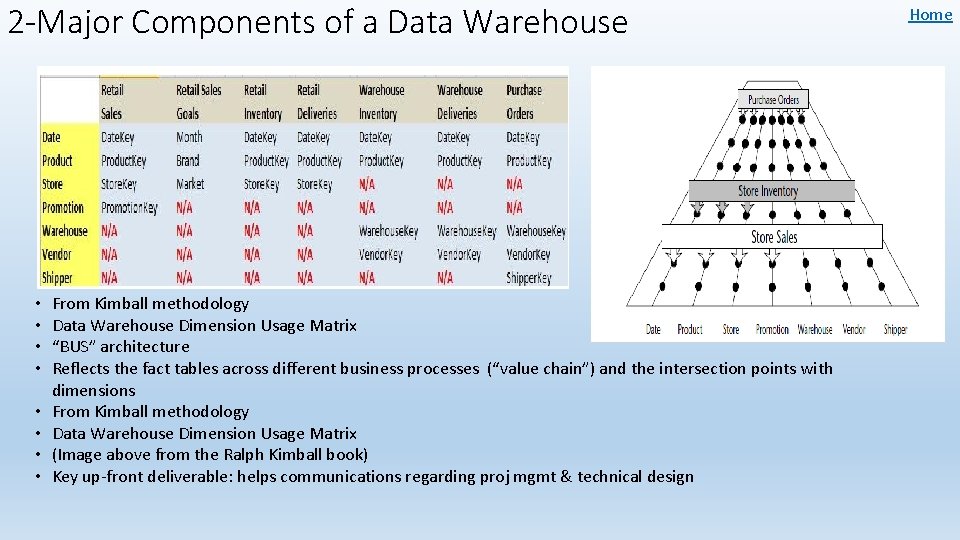

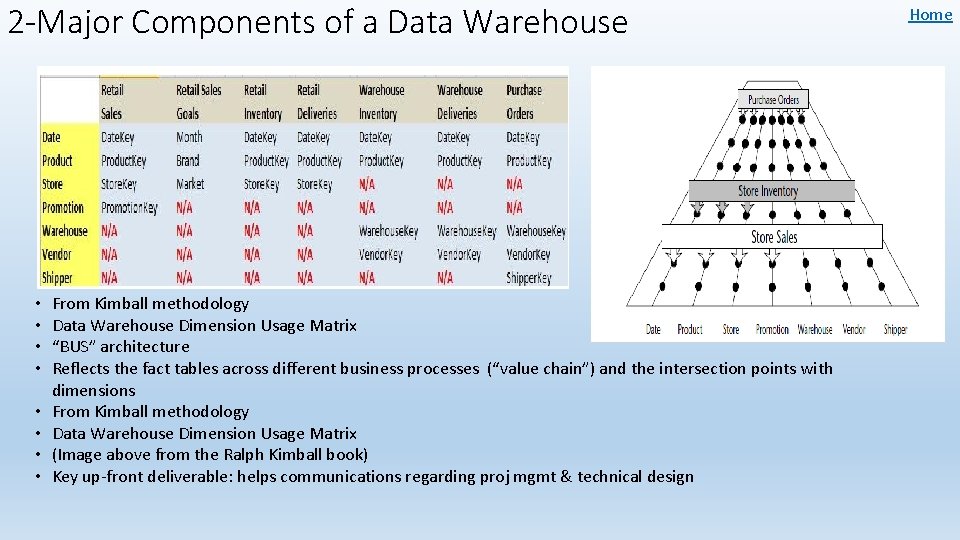

2 -Major Components of a Data Warehouse • • From Kimball methodology Data Warehouse Dimension Usage Matrix “BUS” architecture Reflects the fact tables across different business processes (“value chain”) and the intersection points with dimensions From Kimball methodology Data Warehouse Dimension Usage Matrix (Image above from the Ralph Kimball book) Key up-front deliverable: helps communications regarding proj mgmt & technical design Home

2 -Major Components of a Data Warehouse • So before you begin…. • Make sure you’ve shaped your data into star-schema Fact/Dimension tables, using surrogate integer keys • Fact tables ideally should only contain numeric measures (dollars, units sold) and foreign key integer values that relate to Business Dimension master tables • Database engine features like x. Velocity, Columnstore index can optimize these structures • Recommend: use the Kimball Methodology • Read this book, and read it again, and again! – Data Warehouse Toolkit: Complete Guide to Dimensional Modeling Home

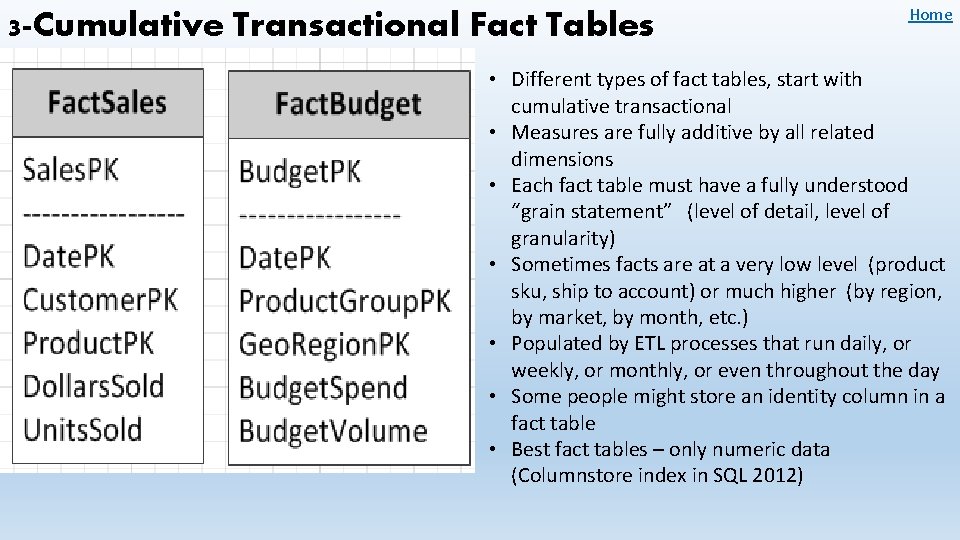

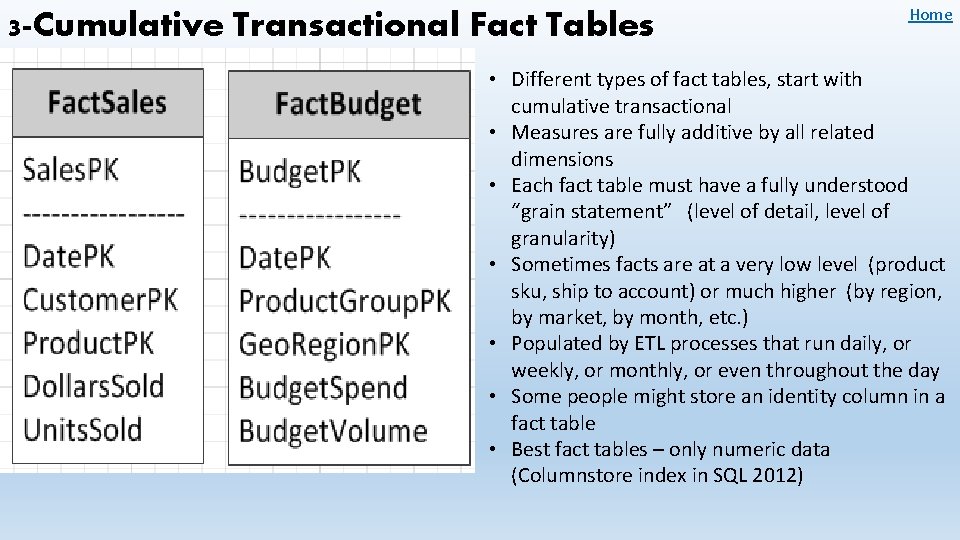

3 -Cumulative Transactional Fact Tables Home • Different types of fact tables, start with cumulative transactional • Measures are fully additive by all related dimensions • Each fact table must have a fully understood “grain statement” (level of detail, level of granularity) • Sometimes facts are at a very low level (product sku, ship to account) or much higher (by region, by market, by month, etc. ) • Populated by ETL processes that run daily, or weekly, or monthly, or even throughout the day • Some people might store an identity column in a fact table • Best fact tables – only numeric data (Columnstore index in SQL 2012)

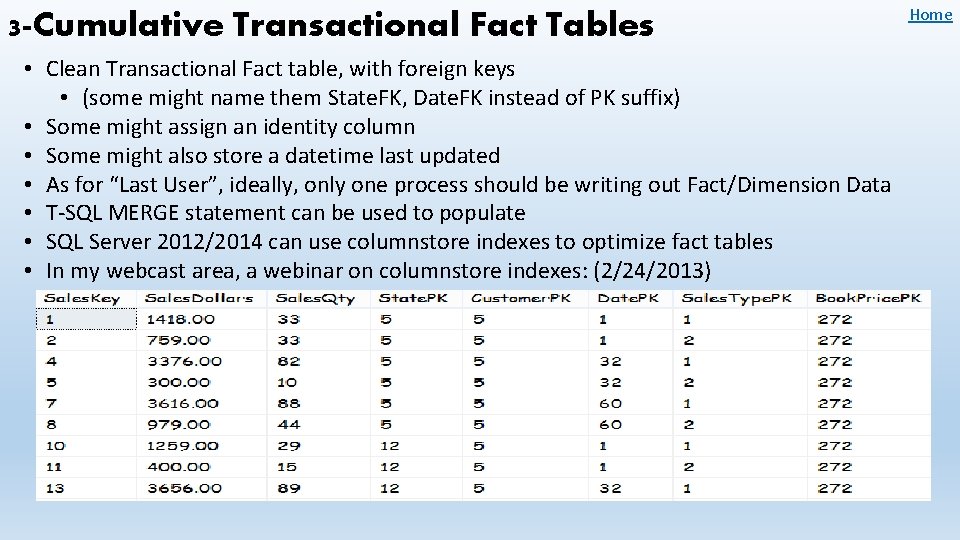

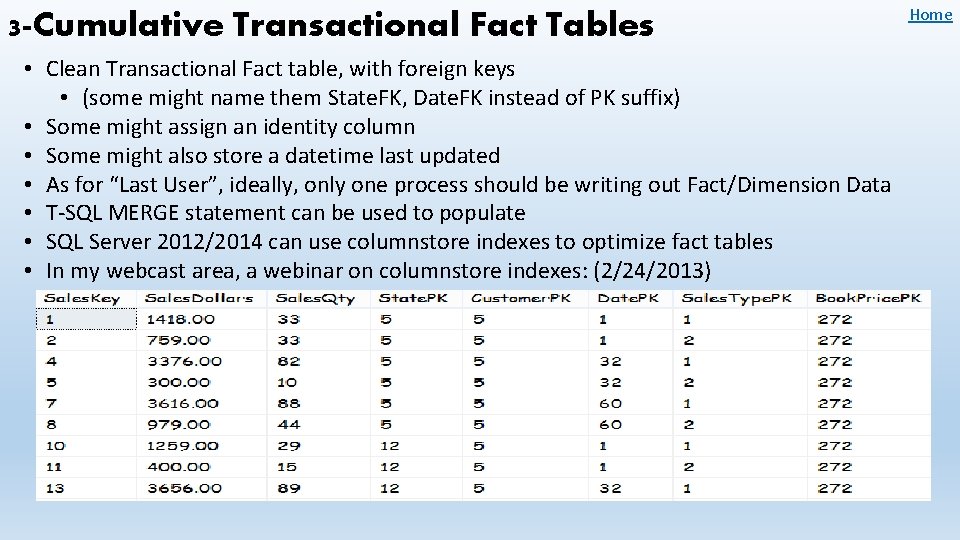

3 -Cumulative Transactional Fact Tables • Clean Transactional Fact table, with foreign keys • (some might name them State. FK, Date. FK instead of PK suffix) • Some might assign an identity column • Some might also store a datetime last updated • As for “Last User”, ideally, only one process should be writing out Fact/Dimension Data • T-SQL MERGE statement can be used to populate • SQL Server 2012/2014 can use columnstore indexes to optimize fact tables • In my webcast area, a webinar on columnstore indexes: (2/24/2013) Home

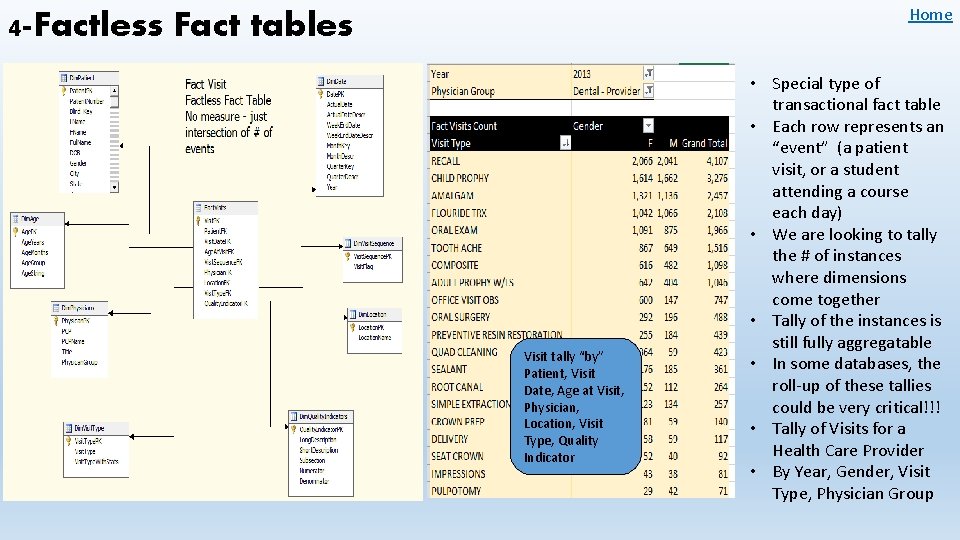

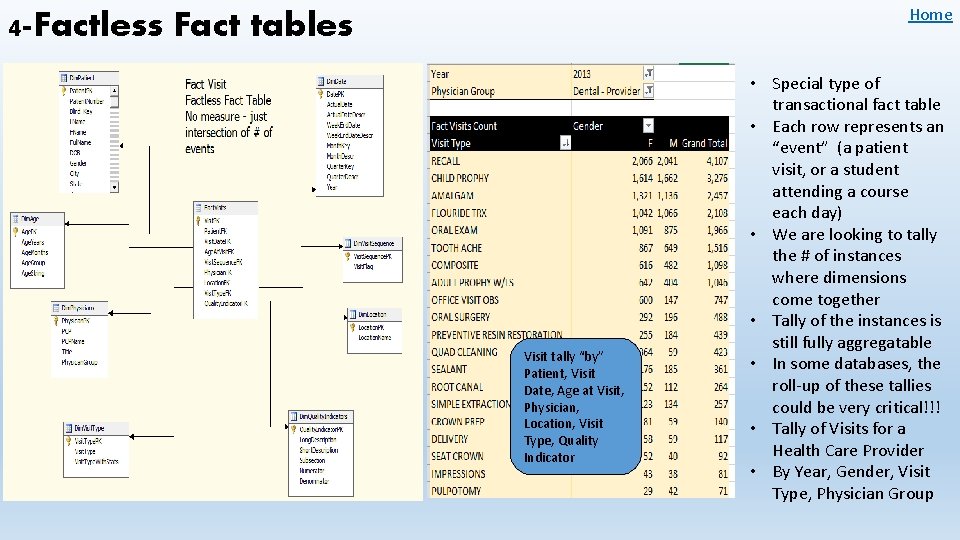

Home 4 -Factless Fact tables Visit tally “by” Patient, Visit Date, Age at Visit, Physician, Location, Visit Type, Quality Indicator • Special type of transactional fact table • Each row represents an “event” (a patient visit, or a student attending a course each day) • We are looking to tally the # of instances where dimensions come together • Tally of the instances is still fully aggregatable • In some databases, the roll-up of these tallies could be very critical!!! • Tally of Visits for a Health Care Provider • By Year, Gender, Visit Type, Physician Group

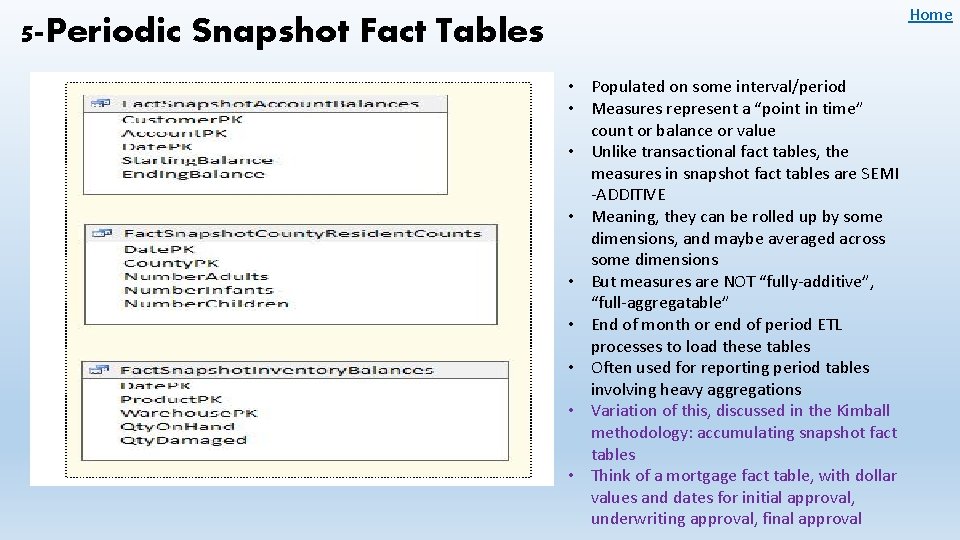

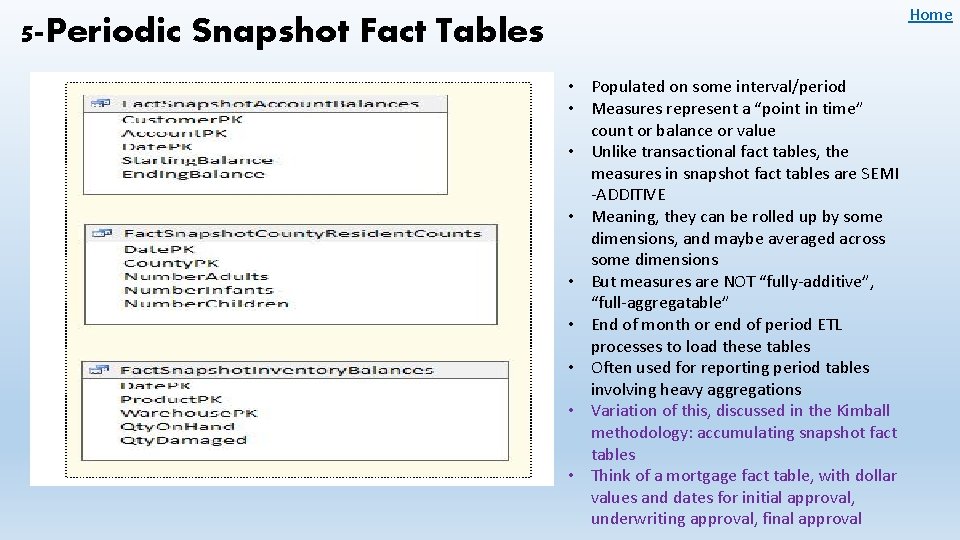

Home 5 -Periodic Snapshot Fact Tables • Populated on some interval/period • Measures represent a “point in time” count or balance or value • Unlike transactional fact tables, the measures in snapshot fact tables are SEMI -ADDITIVE • Meaning, they can be rolled up by some dimensions, and maybe averaged across some dimensions • But measures are NOT “fully-additive”, “full-aggregatable” • End of month or end of period ETL processes to load these tables • Often used for reporting period tables involving heavy aggregations • Variation of this, discussed in the Kimball methodology: accumulating snapshot fact tables • Think of a mortgage fact table, with dollar values and dates for initial approval, underwriting approval, final approval

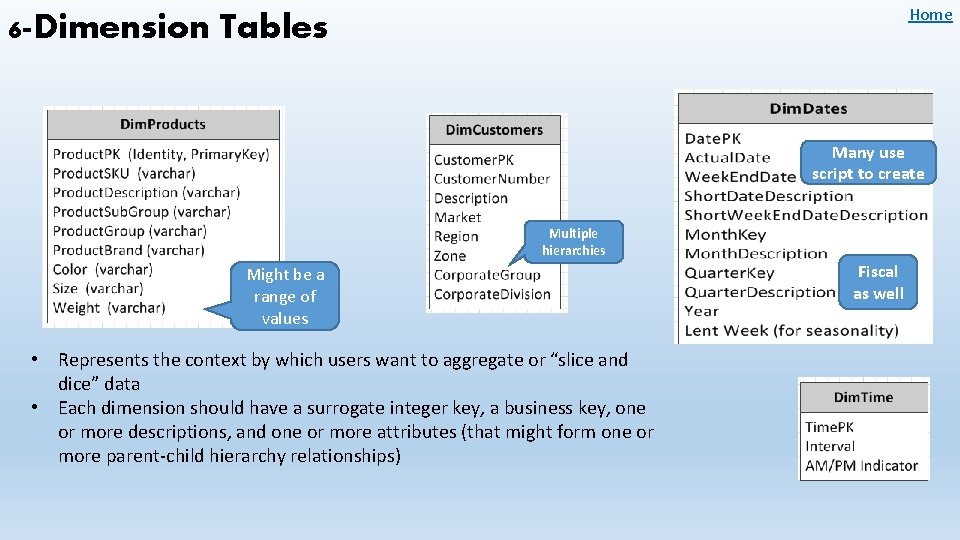

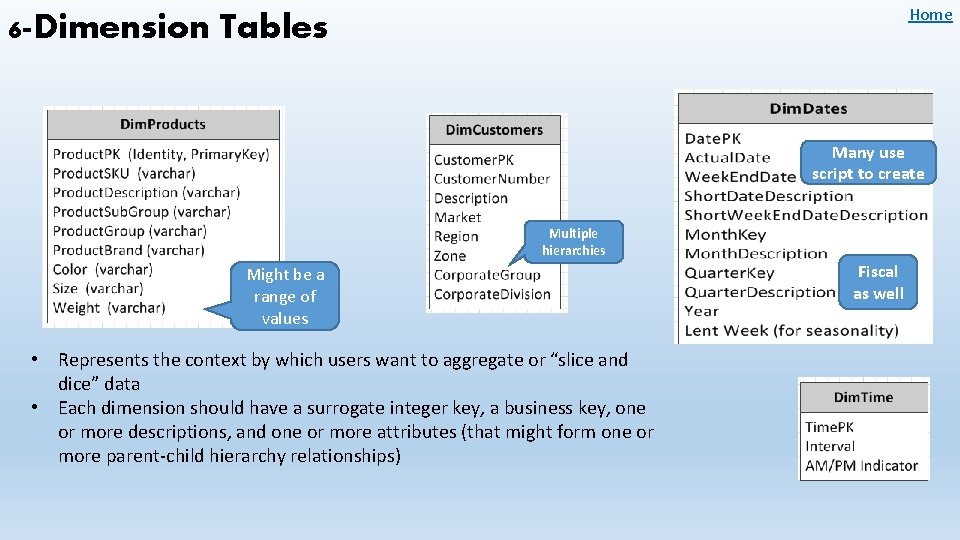

Home 6 -Dimension Tables Many use script to create Multiple hierarchies Might be a range of values • Represents the context by which users want to aggregate or “slice and dice” data • Each dimension should have a surrogate integer key, a business key, one or more descriptions, and one or more attributes (that might form one or more parent-child hierarchy relationships) Fiscal as well

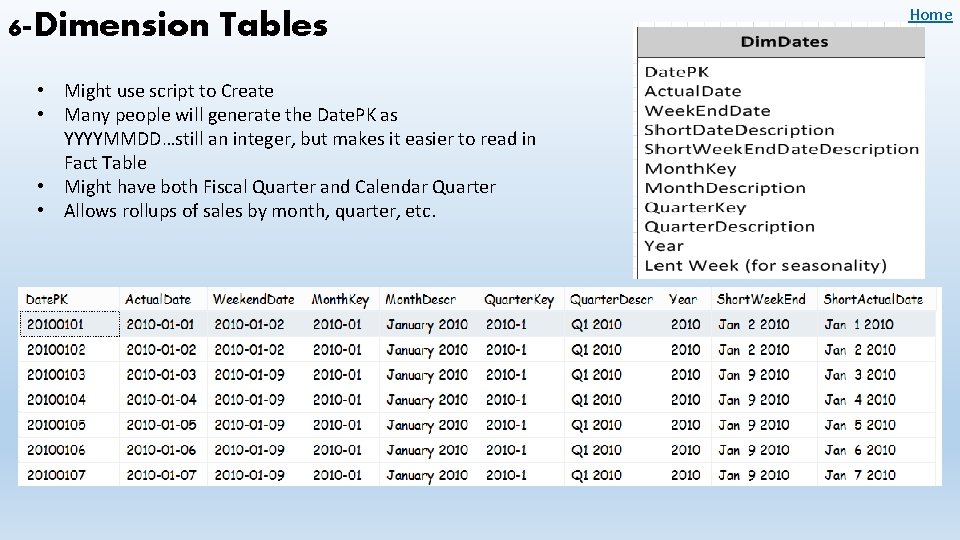

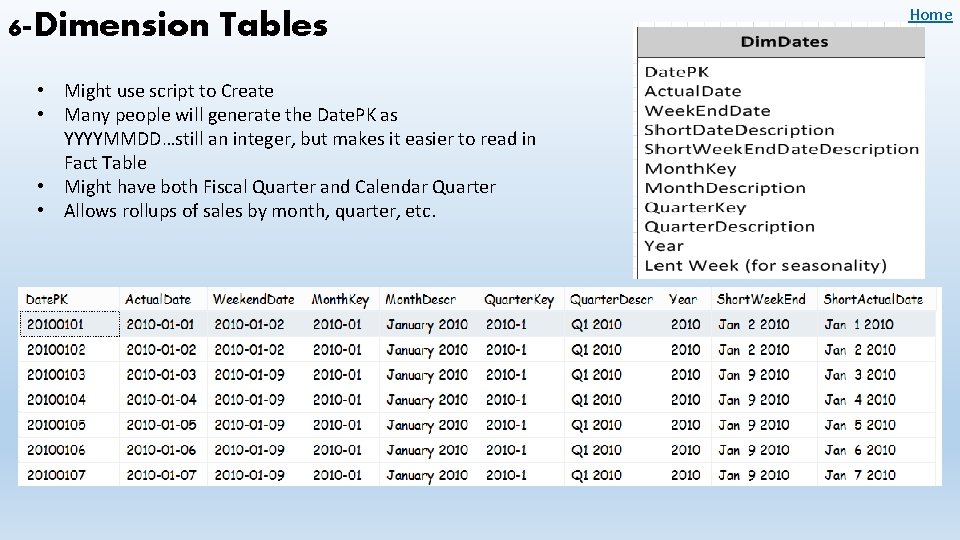

6 -Dimension Tables • Might use script to Create • Many people will generate the Date. PK as YYYYMMDD…still an integer, but makes it easier to read in Fact Table • Might have both Fiscal Quarter and Calendar Quarter • Allows rollups of sales by month, quarter, etc. Home

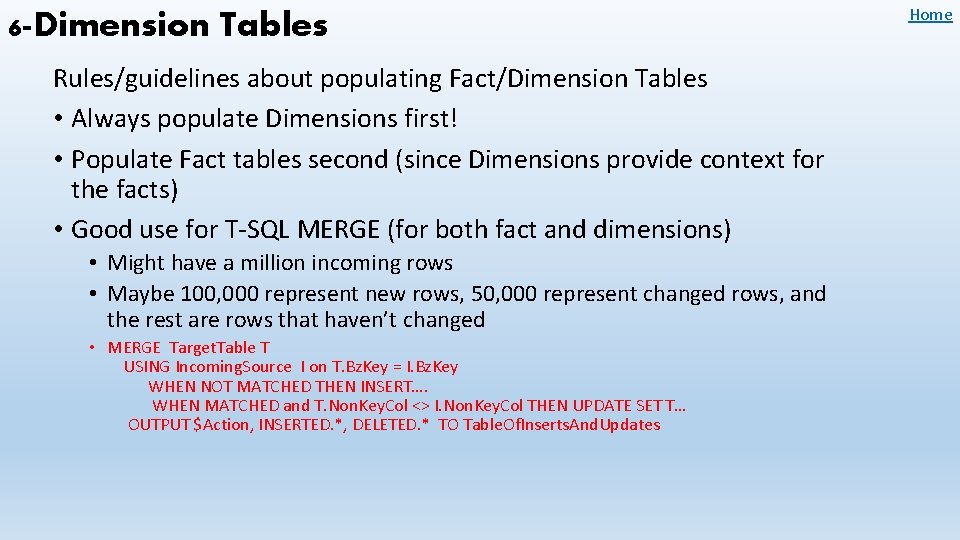

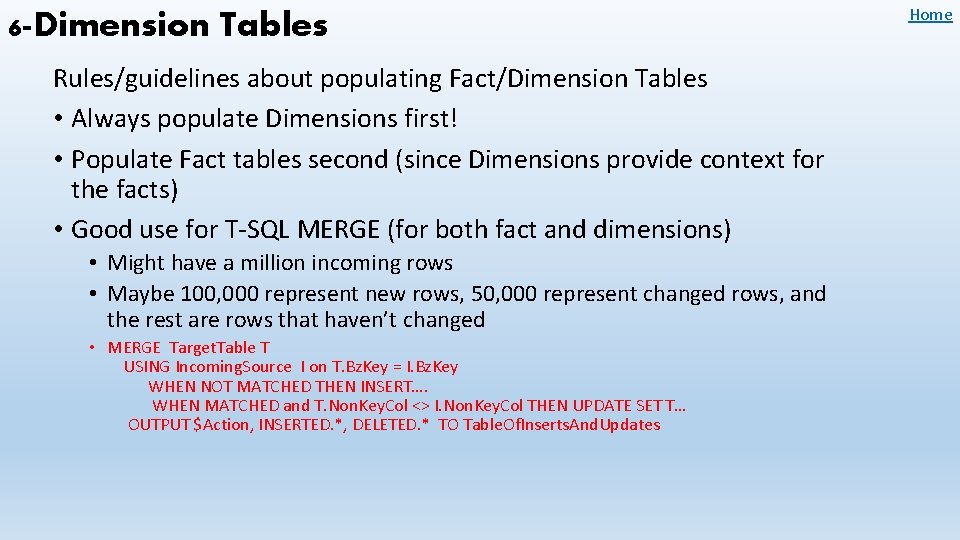

6 -Dimension Tables Rules/guidelines about populating Fact/Dimension Tables • Always populate Dimensions first! • Populate Fact tables second (since Dimensions provide context for the facts) • Good use for T-SQL MERGE (for both fact and dimensions) • Might have a million incoming rows • Maybe 100, 000 represent new rows, 50, 000 represent changed rows, and the rest are rows that haven’t changed • MERGE Target. Table T USING Incoming. Source I on T. Bz. Key = I. Bz. Key WHEN NOT MATCHED THEN INSERT…. WHEN MATCHED and T. Non. Key. Col <> I. Non. Key. Col THEN UPDATE SET T… OUTPUT $Action, INSERTED. *, DELETED. * TO Table. Of. Inserts. And. Updates Home

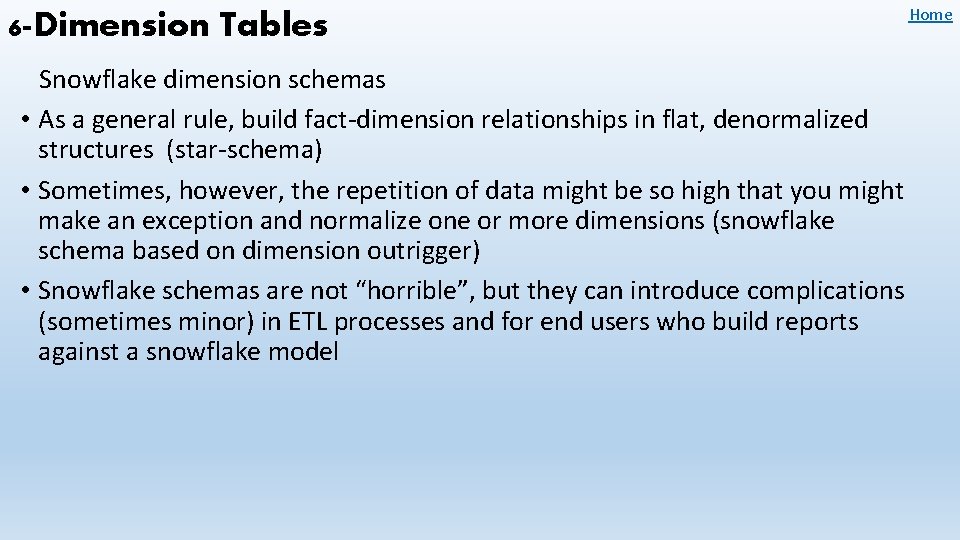

6 -Dimension Tables Snowflake dimension schemas • As a general rule, build fact-dimension relationships in flat, denormalized structures (star-schema) • Sometimes, however, the repetition of data might be so high that you might make an exception and normalize one or more dimensions (snowflake schema based on dimension outrigger) • Snowflake schemas are not “horrible”, but they can introduce complications (sometimes minor) in ETL processes and for end users who build reports against a snowflake model Home

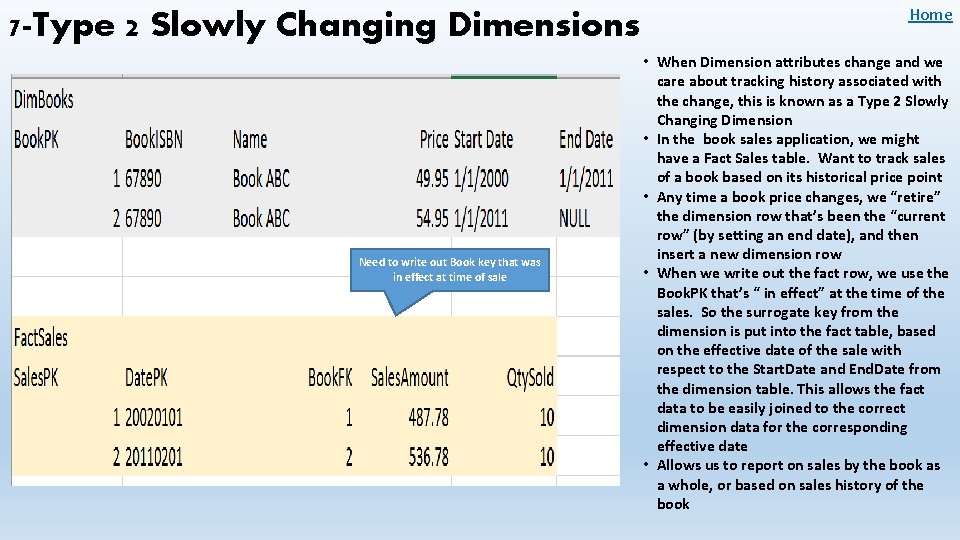

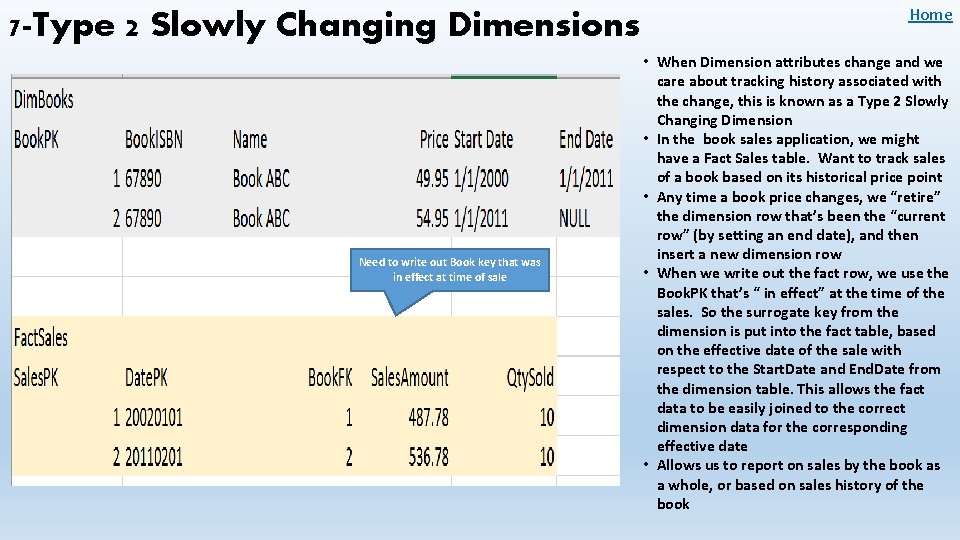

7 -Type 2 Slowly Changing Dimensions Need to write out Book key that was in effect at time of sale Home • When Dimension attributes change and we care about tracking history associated with the change, this is known as a Type 2 Slowly Changing Dimension • In the book sales application, we might have a Fact Sales table. Want to track sales of a book based on its historical price point • Any time a book price changes, we “retire” the dimension row that’s been the “current row” (by setting an end date), and then insert a new dimension row • When we write out the fact row, we use the Book. PK that’s “ in effect” at the time of the sales. So the surrogate key from the dimension is put into the fact table, based on the effective date of the sale with respect to the Start. Date and End. Date from the dimension table. This allows the fact data to be easily joined to the correct dimension data for the corresponding effective date • Allows us to report on sales by the book as a whole, or based on sales history of the book

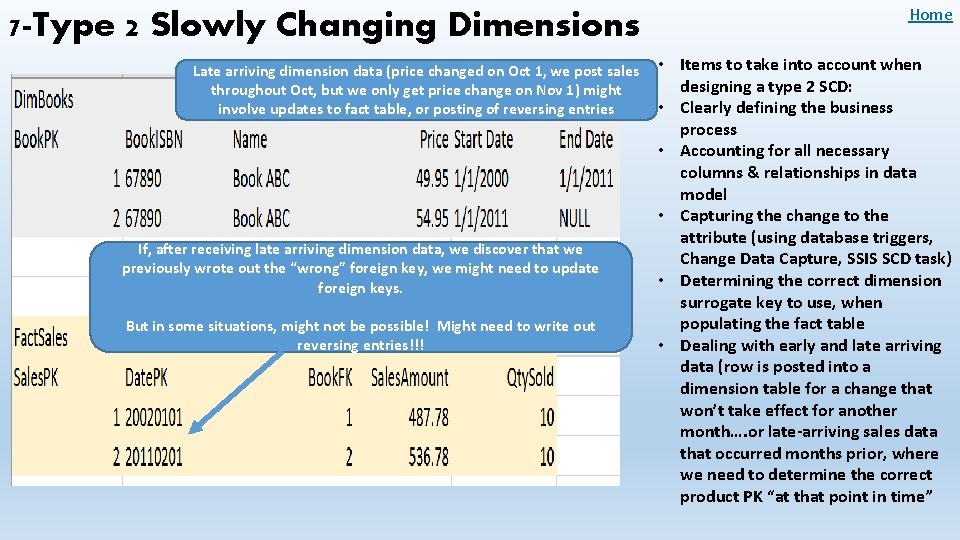

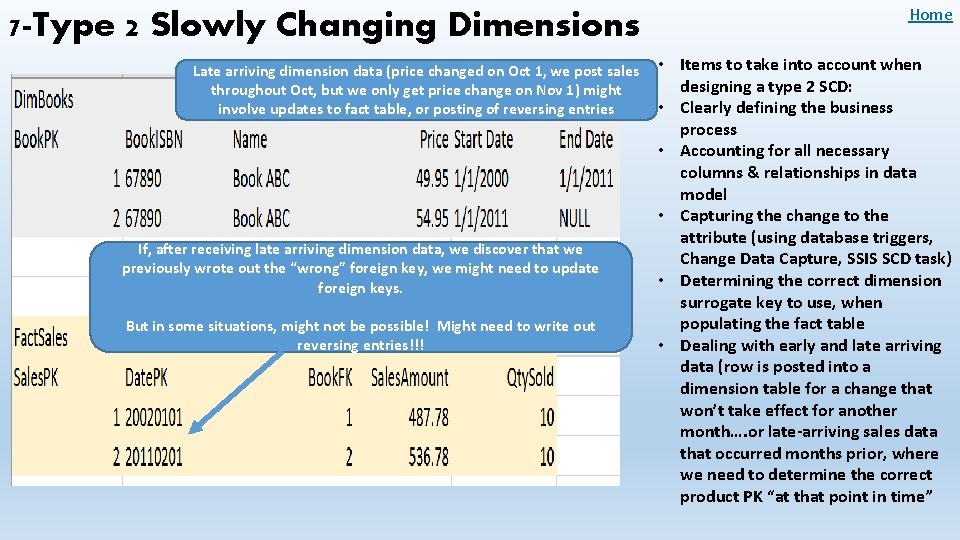

7 -Type 2 Slowly Changing Dimensions Home Late arriving dimension data (price changed on Oct 1, we post sales throughout Oct, but we only get price change on Nov 1) might involve updates to fact table, or posting of reversing entries • Items to take into account when designing a type 2 SCD: • Clearly defining the business process • Accounting for all necessary columns & relationships in data model • Capturing the change to the attribute (using database triggers, Change Data Capture, SSIS SCD task) • Determining the correct dimension surrogate key to use, when populating the fact table • Dealing with early and late arriving data (row is posted into a dimension table for a change that won’t take effect for another month…. or late-arriving sales data that occurred months prior, where we need to determine the correct product PK “at that point in time” If, after receiving late arriving dimension data, we discover that we previously wrote out the “wrong” foreign key, we might need to update foreign keys. But in some situations, might not be possible! Might need to write out reversing entries!!!

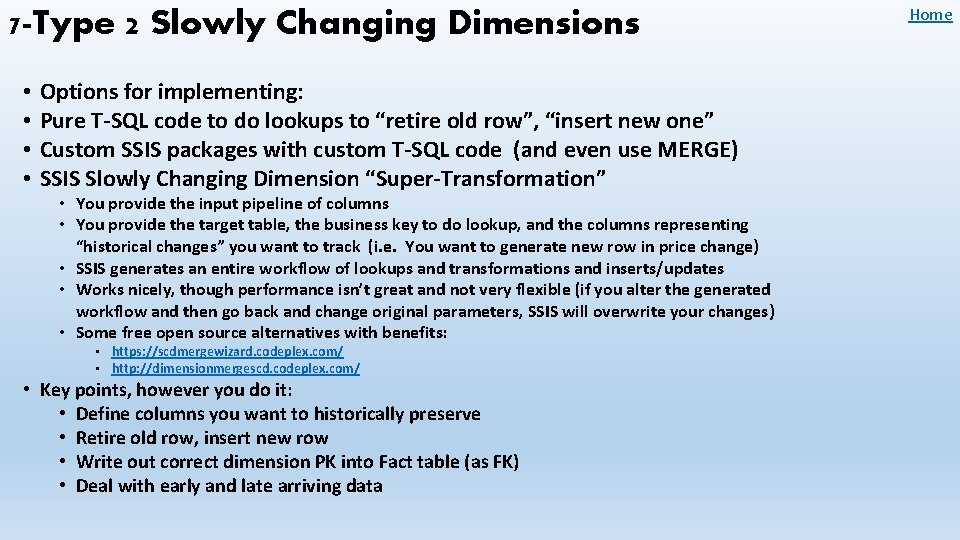

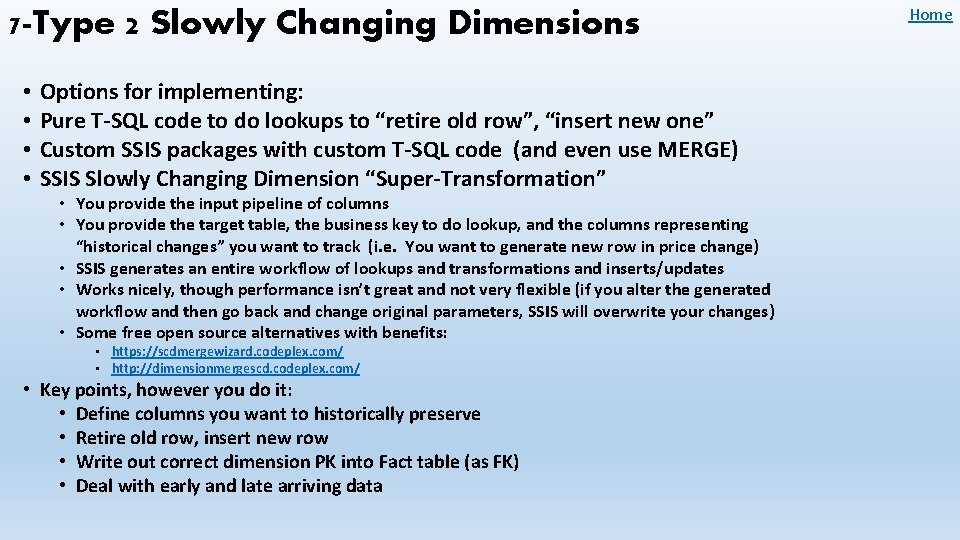

7 -Type 2 Slowly Changing Dimensions • • Options for implementing: Pure T-SQL code to do lookups to “retire old row”, “insert new one” Custom SSIS packages with custom T-SQL code (and even use MERGE) SSIS Slowly Changing Dimension “Super-Transformation” • You provide the input pipeline of columns • You provide the target table, the business key to do lookup, and the columns representing “historical changes” you want to track (i. e. You want to generate new row in price change) • SSIS generates an entire workflow of lookups and transformations and inserts/updates • Works nicely, though performance isn’t great and not very flexible (if you alter the generated workflow and then go back and change original parameters, SSIS will overwrite your changes) • Some free open source alternatives with benefits: • https: //scdmergewizard. codeplex. com/ • http: //dimensionmergescd. codeplex. com/ • Key points, however you do it: • Define columns you want to historically preserve • Retire old row, insert new row • Write out correct dimension PK into Fact table (as FK) • Deal with early and late arriving data Home

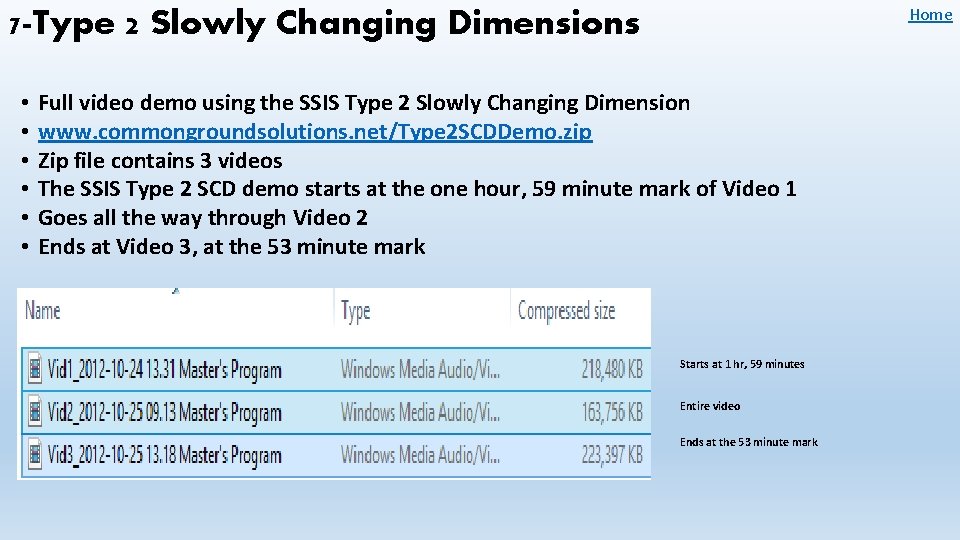

Home 7 -Type 2 Slowly Changing Dimensions • • • Full video demo using the SSIS Type 2 Slowly Changing Dimension www. commongroundsolutions. net/Type 2 SCDDemo. zip Zip file contains 3 videos The SSIS Type 2 SCD demo starts at the one hour, 59 minute mark of Video 1 Goes all the way through Video 2 Ends at Video 3, at the 53 minute mark Starts at 1 hr, 59 minutes Entire video Ends at the 53 minute mark

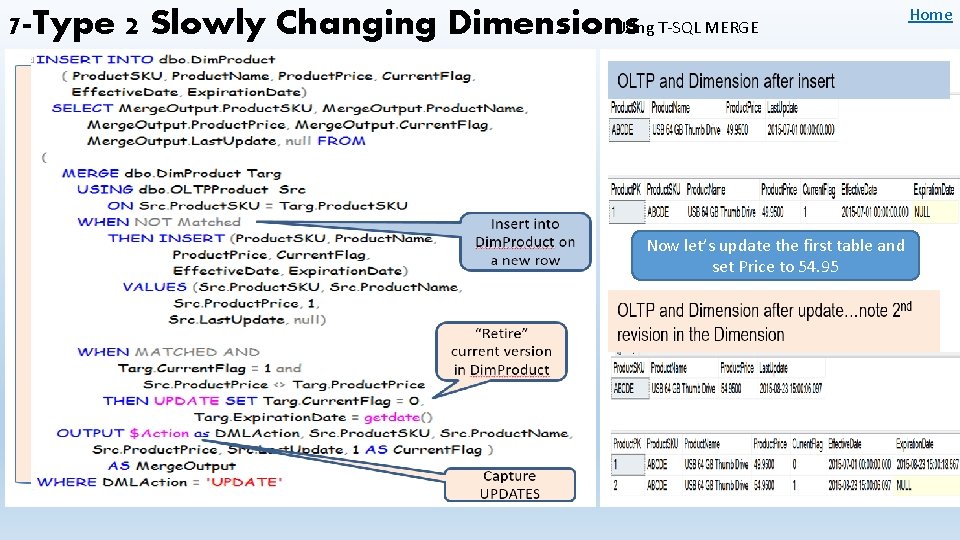

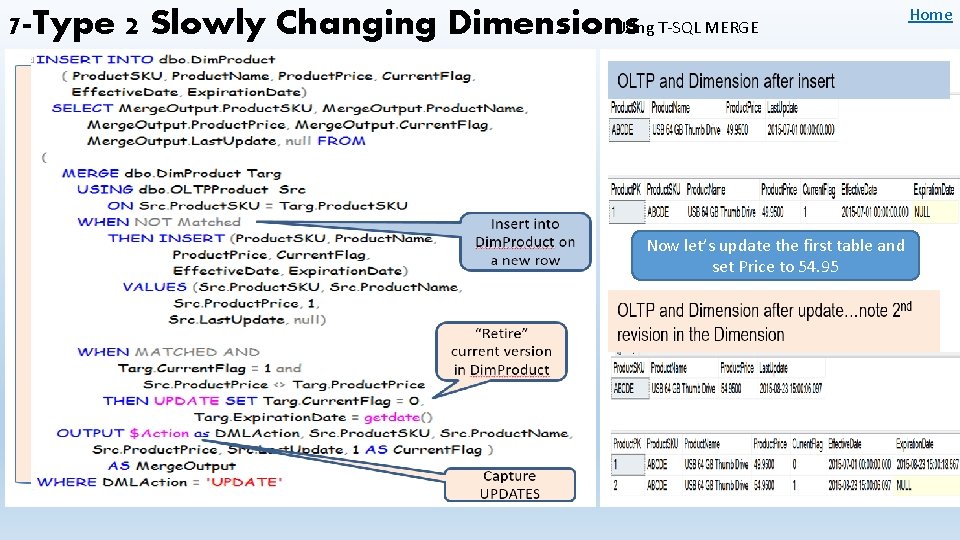

Using T-SQL MERGE 7 -Type 2 Slowly Changing Dimensions Now let’s update the first table and set Price to 54. 95 Home

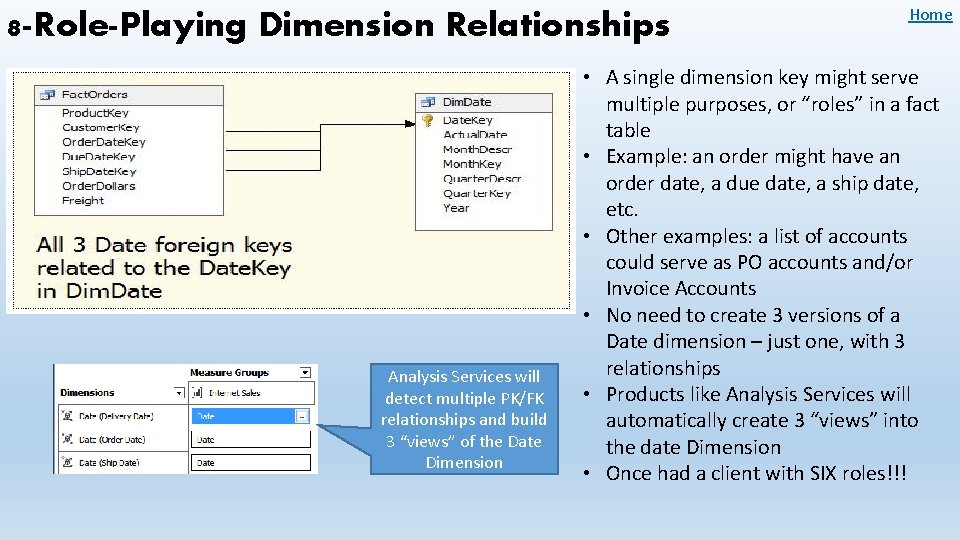

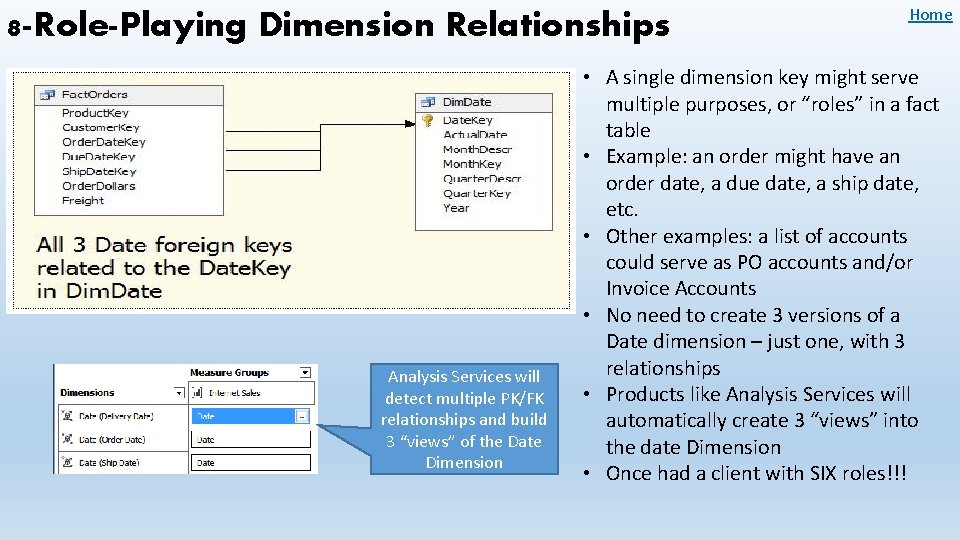

8 -Role-Playing Dimension Relationships Analysis Services will detect multiple PK/FK relationships and build 3 “views” of the Date Dimension Home • A single dimension key might serve multiple purposes, or “roles” in a fact table • Example: an order might have an order date, a due date, a ship date, etc. • Other examples: a list of accounts could serve as PO accounts and/or Invoice Accounts • No need to create 3 versions of a Date dimension – just one, with 3 relationships • Products like Analysis Services will automatically create 3 “views” into the date Dimension • Once had a client with SIX roles!!!

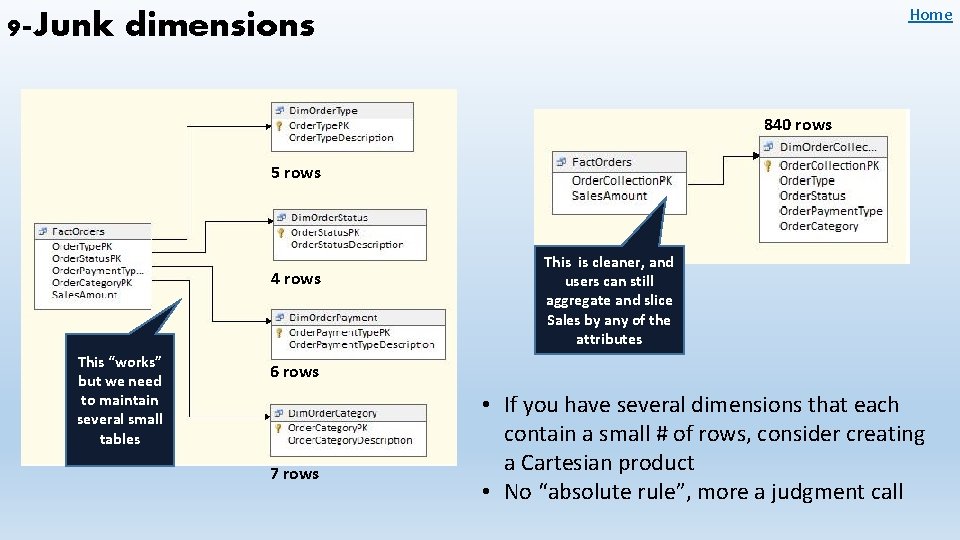

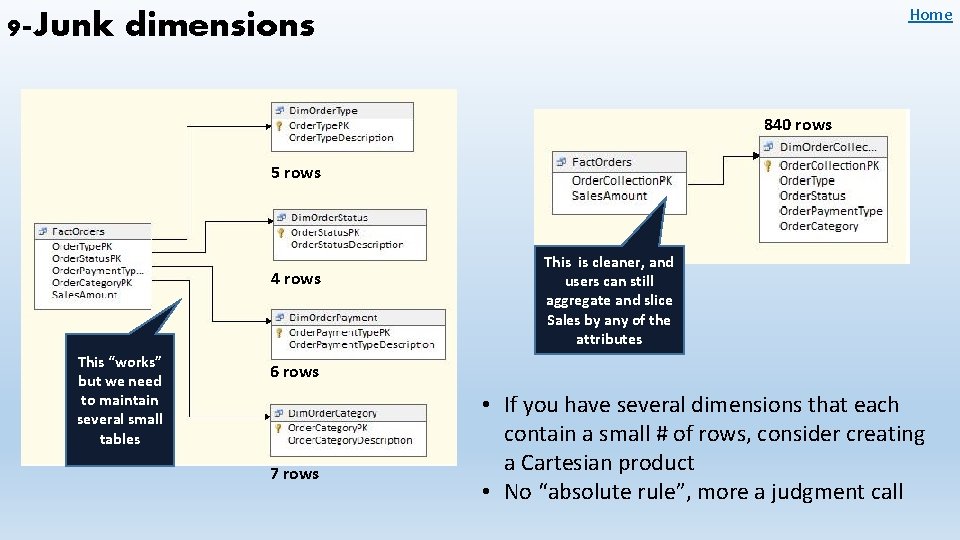

Home 9 -Junk dimensions 840 rows 5 rows 4 rows This “works” but we need to maintain several small tables This is cleaner, and users can still aggregate and slice Sales by any of the attributes 6 rows 7 rows • If you have several dimensions that each contain a small # of rows, consider creating a Cartesian product • No “absolute rule”, more a judgment call

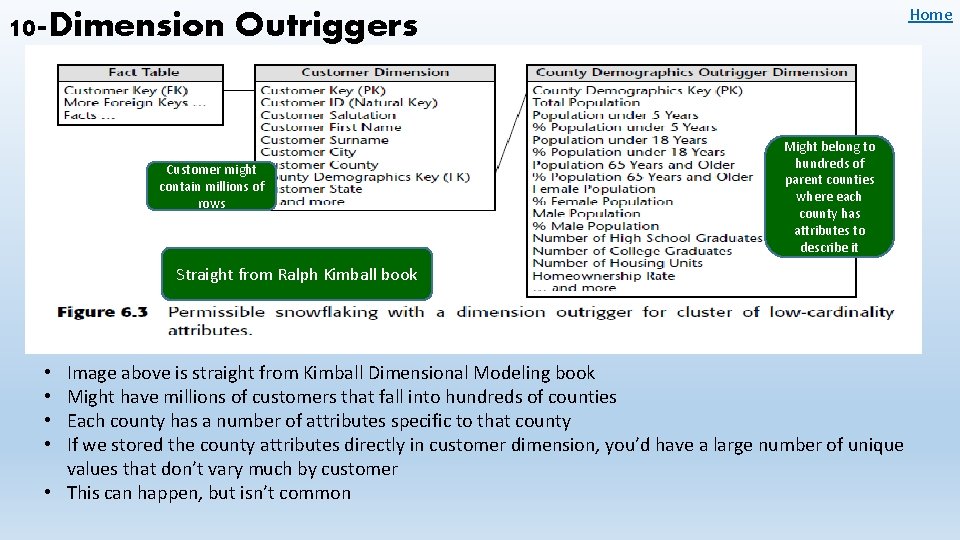

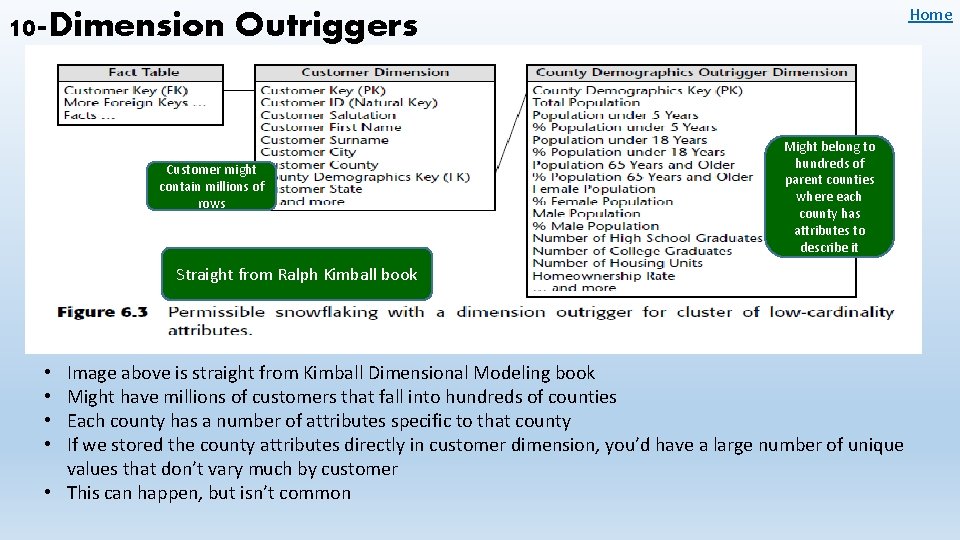

Home 10 -Dimension Outriggers Customer might contain millions of rows Might belong to hundreds of parent counties where each county has attributes to describe it Straight from Ralph Kimball book Image above is straight from Kimball Dimensional Modeling book Might have millions of customers that fall into hundreds of counties Each county has a number of attributes specific to that county If we stored the county attributes directly in customer dimension, you’d have a large number of unique values that don’t vary much by customer • This can happen, but isn’t common • •

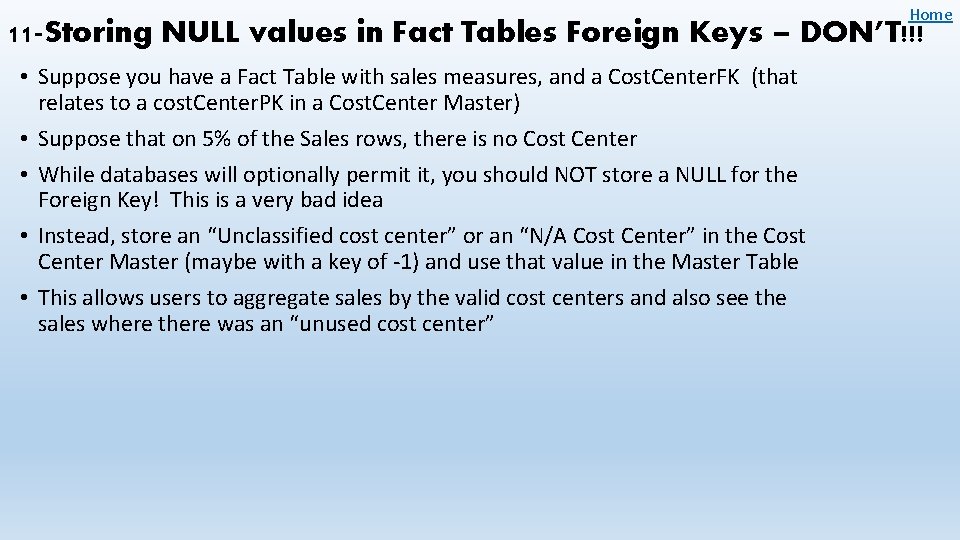

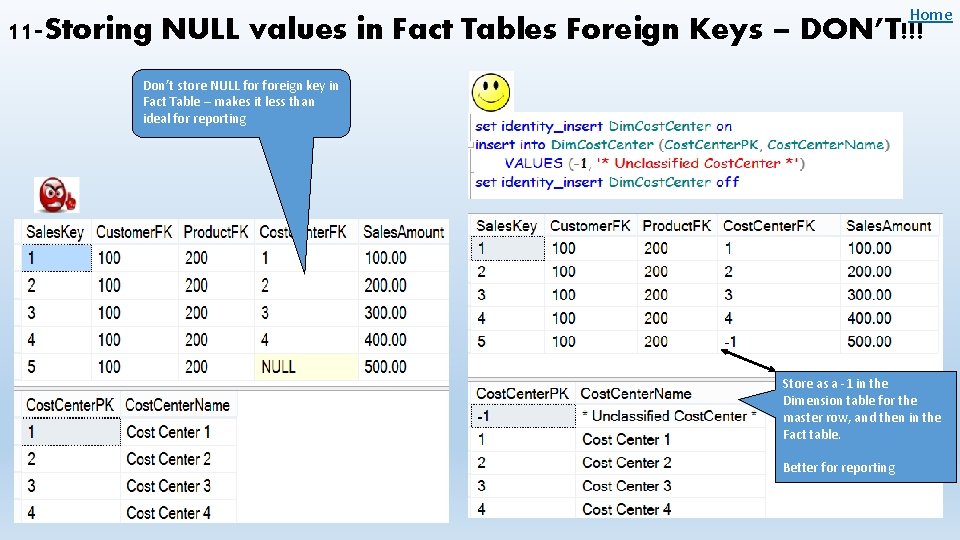

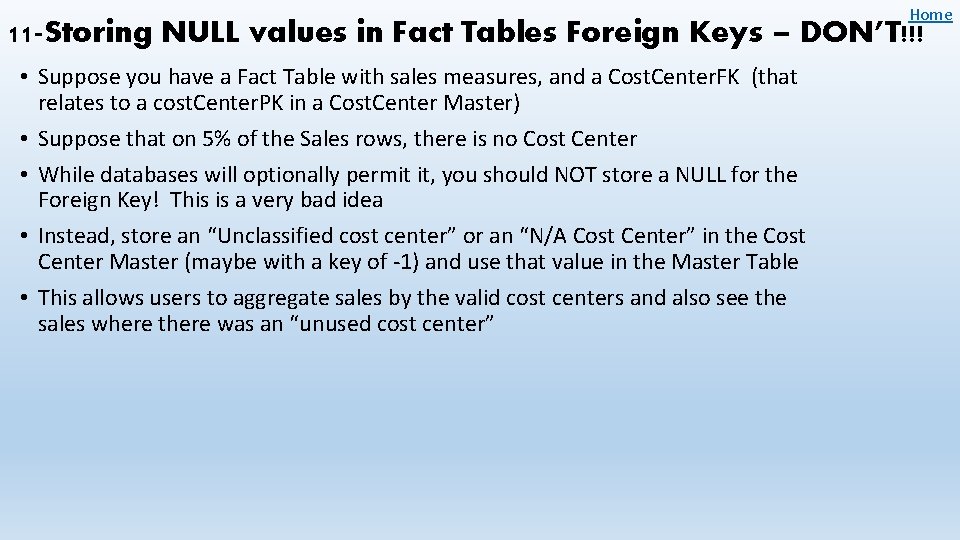

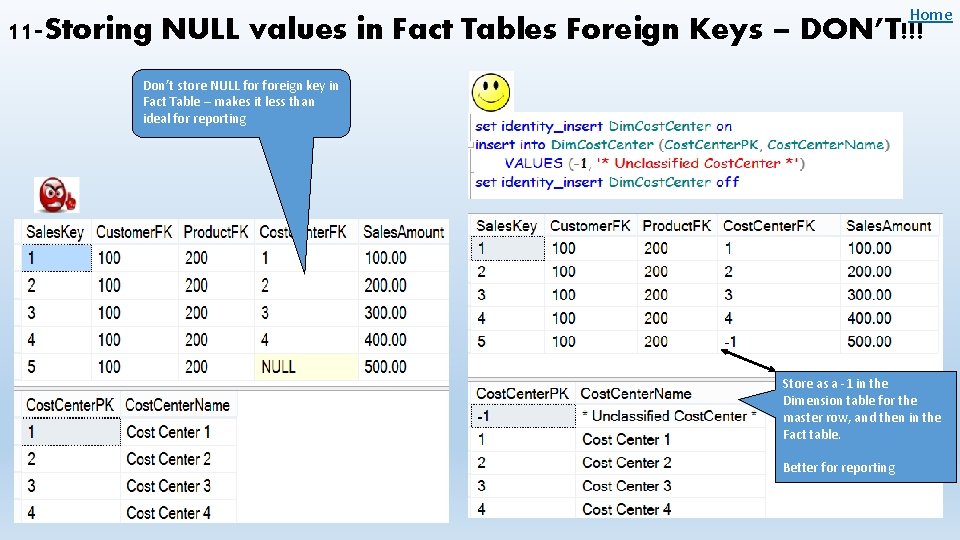

Home 11 -Storing NULL values in Fact Tables Foreign Keys – DON’T!!! • Suppose you have a Fact Table with sales measures, and a Cost. Center. FK (that relates to a cost. Center. PK in a Cost. Center Master) • Suppose that on 5% of the Sales rows, there is no Cost Center • While databases will optionally permit it, you should NOT store a NULL for the Foreign Key! This is a very bad idea • Instead, store an “Unclassified cost center” or an “N/A Cost Center” in the Cost Center Master (maybe with a key of -1) and use that value in the Master Table • This allows users to aggregate sales by the valid cost centers and also see the sales where there was an “unused cost center”

Home 11 -Storing NULL values in Fact Tables Foreign Keys – DON’T!!! Don’t store NULL foreign key in Fact Table – makes it less than ideal for reporting Store as a -1 in the Dimension table for the master row, and then in the Fact table. Better for reporting

12 -Storing ratios in Fact Tables – DON’T!!! • In fact tables, you can store measures that are either fully additive (sales) or partly additive (end of month inventory count) • You can also store measures that are derived from simple math (Net Revenue = Gross Revenue less Returns, less Damages, etc) • But DON’T store measures that represent percentages or ratios that are derived from division – calculate them “on the fly”. Why? Because they won’t aggregate to any sensible value • For Instance: • Store A $10 in returns, $20 in sales (return % of 50%) • Store B $10 in returns, $100 in sales (return % of 10%) • We want to roll up the returns % for the region – but we obviously want a “weighted” returns %. Storing the returns % is meaningless when we want to aggregate/roll-up. • Best practice: store the numbers that represent the numerator/denominator, and then calculate “on the fly” Home

13 -ETL Checklist for Data Warehousing • • • • Populating the end-result data model as early as possible Defining a high-level roadmap of physical data sources and processes Establishing necessary source data, profile source data, and source primary keys Extract logic (vet this process carefully, perform reviews) Understand necessary data mappings and how it relates to business rules Identify and account for any specific data type challenges Define primary keys in source data, and what happens when source systems delete data Use of the MERGE and composable DML and extract engines Collecting all activity in ETL logs Capturing periodic snapshots of data Source system verifications Messaging and alerts Scheduling and source dependencies Data Lineage Home