Status of the Tier 1

Luca dell'Agnello, 11 May 2012

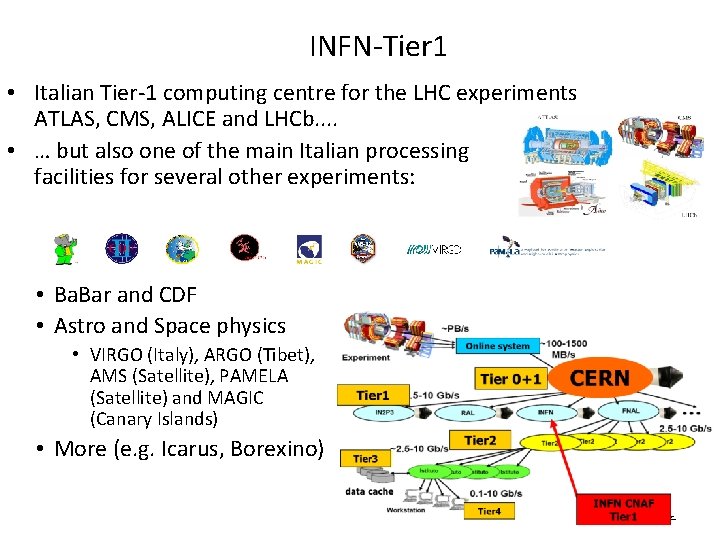

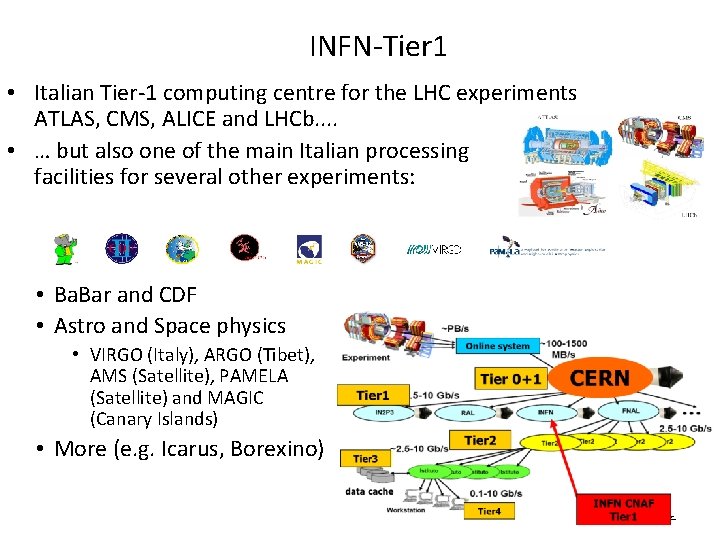

INFN-Tier 1

- Italian Tier-1 computing centre for the LHC experiments ATLAS, CMS, ALICE and LHCb
- ... but also one of the main Italian processing facilities for several other experiments:
  - BaBar and CDF
  - Astro and space physics: VIRGO (Italy), ARGO (Tibet), AMS (satellite), PAMELA (satellite) and MAGIC (Canary Islands)
  - More (e.g. Icarus, Borexino)

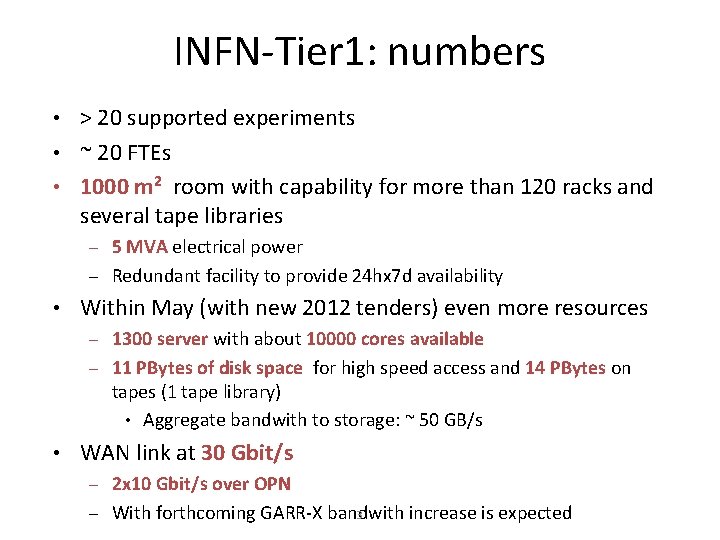

INFN-Tier 1: numbers

- > 20 supported experiments
- ~20 FTEs
- 1000 m² room with capacity for more than 120 racks and several tape libraries
  - 5 MVA electrical power
  - Redundant facility to provide 24x7 availability
- By the end of May (with the new 2012 tenders) even more resources
  - 1300 servers with about 10000 cores available
  - 11 PB of disk space for high-speed access and 14 PB on tape (1 tape library)
- Aggregate bandwidth to storage: ~50 GB/s
- WAN link at 30 Gbit/s
  - 2 x 10 Gbit/s over OPN
  - A bandwidth increase is expected with the forthcoming GARR-X

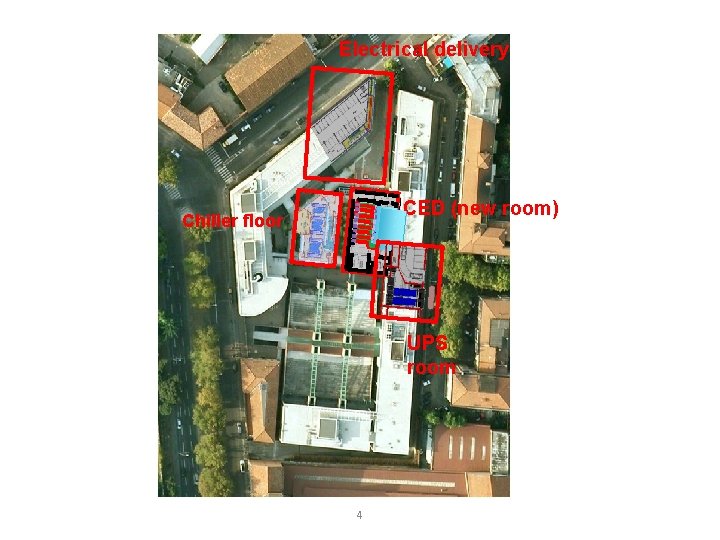

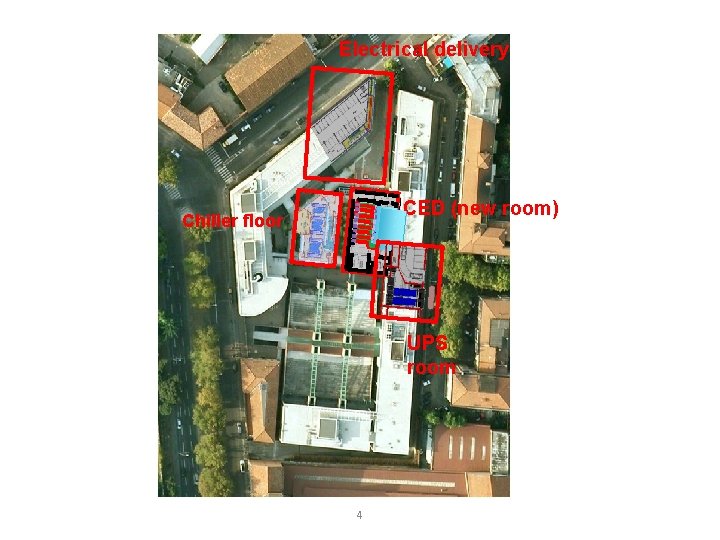

Facility layout (figure labels): electrical delivery, CED (new room), chiller floor, UPS room.

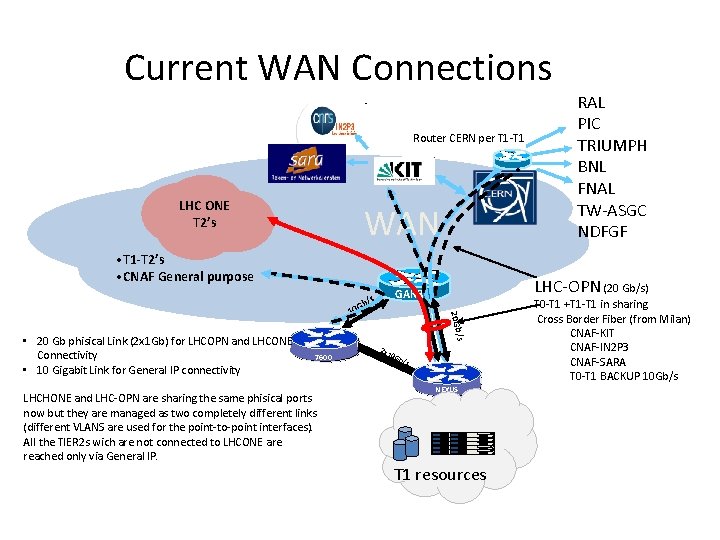

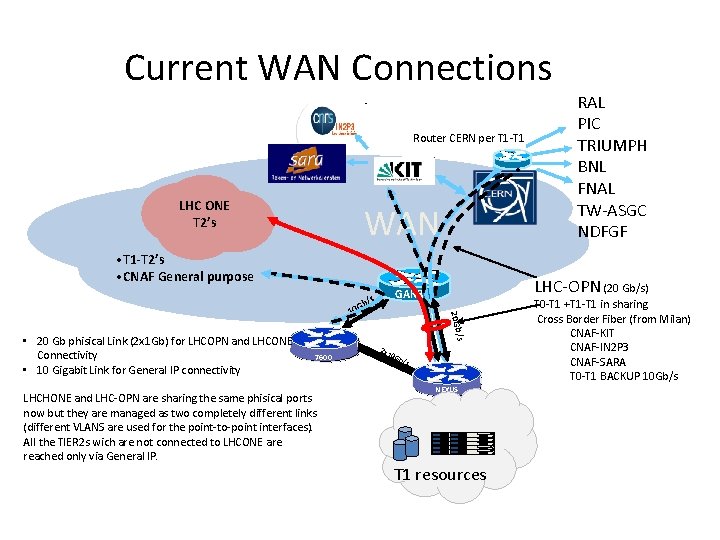

Current WAN Connections

- 20 Gb/s physical link (2 x 10 Gb/s) for LHCOPN and LHCONE connectivity
- 10 Gb/s link for general IP connectivity
- LHCONE and LHC-OPN currently share the same physical ports, but they are managed as two completely separate links (different VLANs are used for the point-to-point interfaces).
- All the Tier-2s that are not connected to LHCONE are reached only via general IP.

Diagram labels:

- T1 resources, NEXUS, 7600, GARR
- LHC-OPN (20 Gb/s, 2 x 10 Gb/s): T0-T1 and T1-T1 traffic in sharing, via the CERN router for T1-T1, towards RAL, PIC, TRIUMF, BNL, FNAL, TW-ASGC, NDGF
- LHCONE: T2s
- WAN (10 Gb/s): T1-T2s and CNAF general purpose
- Cross Border Fiber (from Milan): CNAF-KIT, CNAF-IN2P3, CNAF-SARA, T0-T1 backup (10 Gb/s)

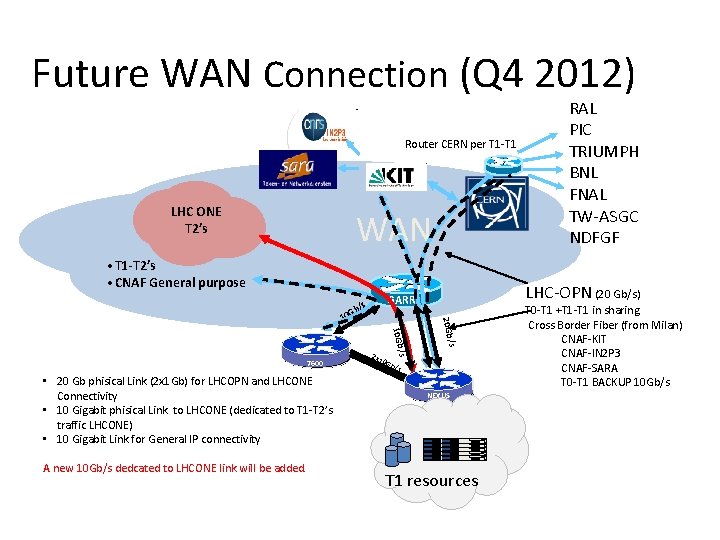

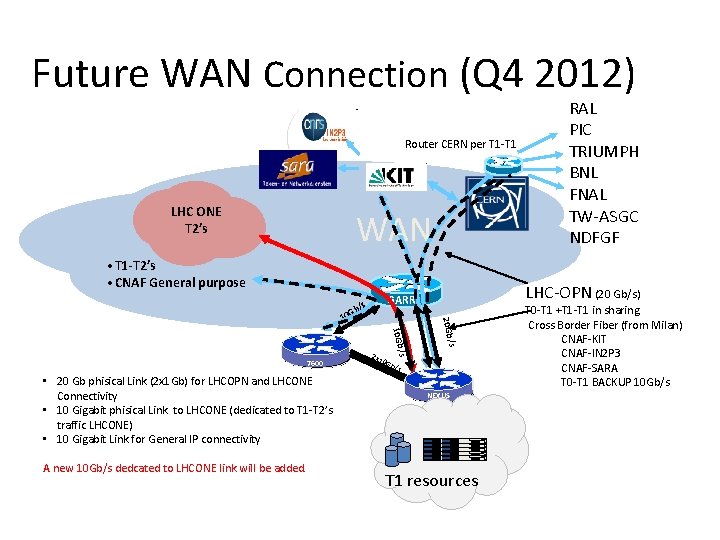

Future WAN Connection (Q4 2012)

A new 10 Gb/s link dedicated to LHCONE will be added.

- 20 Gb/s physical link (2 x 10 Gb/s) for LHCOPN and LHCONE connectivity
- 10 Gb/s physical link to LHCONE (dedicated to T1-T2 LHCONE traffic)
- 10 Gb/s link for general IP connectivity

Diagram labels:

- T1 resources, NEXUS, 7600, GARR
- LHC-OPN (20 Gb/s, 2 x 10 Gb/s): T0-T1 and T1-T1 traffic in sharing, via the CERN router for T1-T1, towards RAL, PIC, TRIUMF, BNL, FNAL, TW-ASGC, NDGF
- LHCONE (10 Gb/s): T2s
- WAN (10 Gb/s): T1-T2s and CNAF general purpose
- Cross Border Fiber (from Milan): CNAF-KIT, CNAF-IN2P3, CNAF-SARA, T0-T1 backup (10 Gb/s)
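As a quick cross-check of the figures on the two WAN slides, the short sketch below sums the link capacities before and after the planned upgrade. It assumes the shared LHCOPN/LHCONE link is 2 x 10 Gb/s and leaves out the T0-T1 backup path; the dictionary keys and the function name are illustrative, not taken from the slides.

```python
# Link capacities in Gbit/s as read from the slides (labels are illustrative).
current_links = {
    "LHCOPN + LHCONE (shared, 2 x 10 Gb/s)": 2 * 10,
    "General IP": 10,
}

# Planned for Q4 2012: one additional 10 Gb/s link dedicated to LHCONE.
future_links = dict(current_links)
future_links["LHCONE (dedicated, T1-T2 traffic)"] = 10


def total_capacity_gbps(links):
    """Sum the nominal capacity of all links, in Gbit/s."""
    return sum(links.values())


print("current:", total_capacity_gbps(current_links), "Gbit/s")
print("future: ", total_capacity_gbps(future_links), "Gbit/s")
```

The current total matches the 30 Gbit/s WAN figure quoted on the "numbers" slide; the future total is simply that figure plus the new dedicated link.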

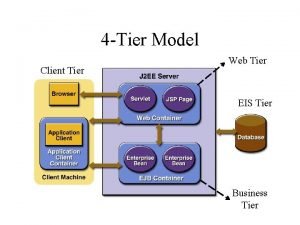

CNAF in the grid

- CNAF is part of the WLCG/EGI infrastructure, granting access to distributed computing and storage resources
  - Access to the computing farm via the EMI CREAM Compute Elements
  - Access to storage resources, on GEMSS, via the SRM end-points
  - Also "legacy" access (i.e. local access allowed)
- Some typical grid acronyms for storage:
  - SE (Storage Element): a Grid service that allows Grid users to store and manage files together with the space assigned to them.
  - SRM (Storage Resource Manager): a middleware component that provides dynamic space allocation and file management for shared storage components on the Grid. Essential for bulk operations on the tape system.

Middleware status

- Deployed several EMI nodes
  - UIs, CREAM CEs, Argus, BDII, FTS, StoRM, WNs
  - Legacy gLite 3.x almost completely phased out
  - Planning to completely migrate gLite 3.2 nodes to EMI by the summer
- ATLAS and LHCb switched to cvmfs for the software area
  - Tests ongoing on a cvmfs server for SuperB

Andrea Chierici, 24 Apr 2012

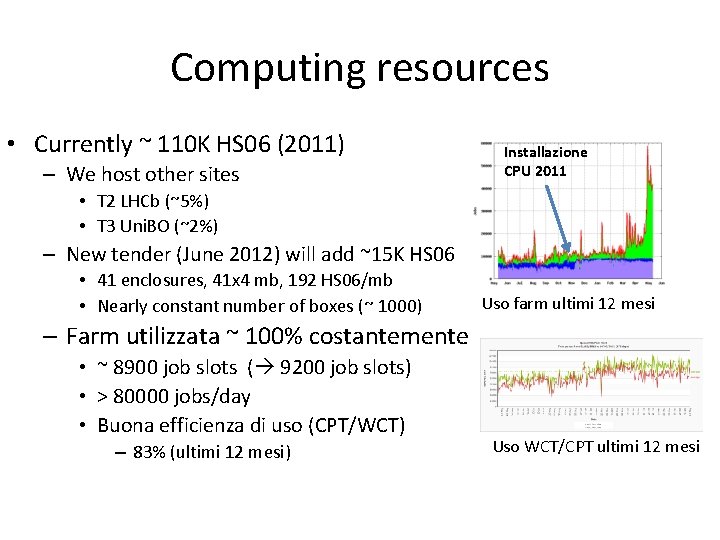

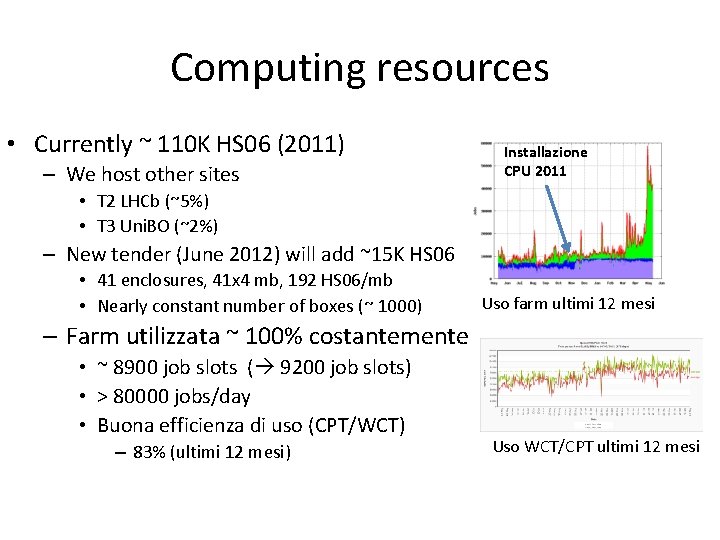

Computing resources

- Currently ~110K HS06 (2011)
  - We host other sites
    - T2 LHCb (~5%)
    - T3 UniBO (~2%)
  - New tender (June 2012) will add ~15K HS06
    - 41 enclosures, 41 x 4 mb, 192 HS06/mb
    - Nearly constant number of boxes (~1000)
  - Farm constantly ~100% utilized
    - ~8900 job slots (9200 job slots)
    - > 80000 jobs/day
    - Good usage efficiency (CPT/WCT): 83% (last 12 months)

Figure captions: 2011 CPU installation; farm usage, last 12 months; WCT/CPT usage, last 12 months.
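The two pieces of arithmetic on this slide can be sketched as follows: the gross HS06 of the new hardware (enclosures x motherboards x HS06 per motherboard) and the farm efficiency, defined as total CPU time over total wall-clock time (CPT/WCT). The job records are hypothetical, chosen only so that the result lands on the 83% quoted above; the function name is an assumption. How the slide's ~15K HS06 addition relates to the gross figure is not stated in the source.

```python
# Gross HS06 of the new tender, from the figures on the slide.
ENCLOSURES = 41
MOTHERBOARDS_PER_ENCLOSURE = 4
HS06_PER_MOTHERBOARD = 192

tender_hs06 = ENCLOSURES * MOTHERBOARDS_PER_ENCLOSURE * HS06_PER_MOTHERBOARD


def farm_efficiency(jobs):
    """Return total CPU time / total wall-clock time.

    `jobs` is an iterable of (cpu_seconds, wall_clock_seconds) pairs.
    """
    jobs = list(jobs)
    total_cpt = sum(cpt for cpt, _ in jobs)
    total_wct = sum(wct for _, wct in jobs)
    if total_wct <= 0:
        raise ValueError("total wall-clock time must be positive")
    return total_cpt / total_wct


# Hypothetical job records (CPU s, wall-clock s); not real CNAF accounting data.
sample_jobs = [(3000, 3600), (7000, 8400), (1620, 2000)]

print("tender HS06 (gross):", tender_hs06)
print(f"efficiency: {farm_efficiency(sample_jobs):.2f}")
```

Summing before dividing weights each job by its duration, which is the usual choice for a farm-wide figure; averaging per-job ratios would give short jobs the same weight as long ones.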

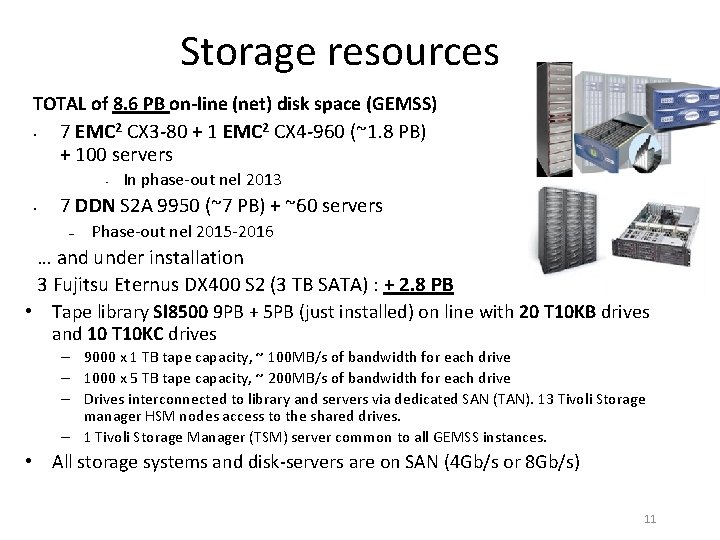

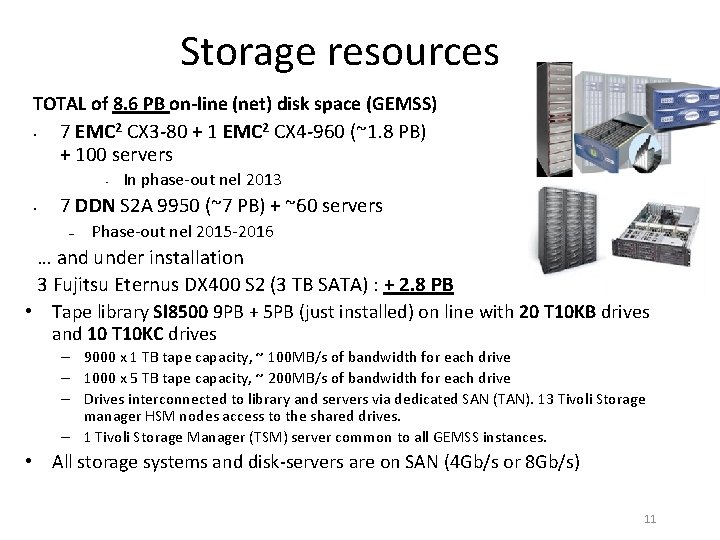

Storage resources

Total of 8.6 PB of on-line (net) disk space (GEMSS)

- 7 EMC² CX3-80 + 1 EMC² CX4-960 (~1.8 PB) + 100 servers
  - To be phased out in 2013
- 7 DDN S2A 9950 (~7 PB) + ~60 servers
  - Phase-out in 2015-2016
- ... and under installation: 3 Fujitsu Eternus DX400 S2 (3 TB SATA): +2.8 PB
- Tape library SL8500: 9 PB + 5 PB (just installed) on line, with 20 T10KB drives and 10 T10KC drives
  - 9000 x 1 TB tape capacity, ~100 MB/s of bandwidth for each drive
  - 1000 x 5 TB tape capacity, ~200 MB/s of bandwidth for each drive
  - Drives interconnected to the library and servers via a dedicated SAN (TAN). 13 Tivoli Storage Manager HSM nodes access the shared drives.
  - 1 Tivoli Storage Manager (TSM) server common to all GEMSS instances
- All storage systems and disk-servers are on SAN (4 Gb/s or 8 Gb/s)
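The tape figures above can be checked with a few lines of arithmetic. This sketch assumes the 1 TB cartridges go with the T10KB drives and the 5 TB cartridges with the T10KC drives, and uses decimal units (1 PB = 1000 TB); the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TapeGeneration:
    name: str
    cartridges: int
    tb_per_cartridge: int
    drives: int
    mb_per_s_per_drive: int

    @property
    def capacity_pb(self) -> float:
        # Decimal units: 1 PB = 1000 TB.
        return self.cartridges * self.tb_per_cartridge / 1000

    @property
    def drive_bandwidth_mb_s(self) -> int:
        # Nominal aggregate bandwidth if every drive streams at once.
        return self.drives * self.mb_per_s_per_drive


# Figures from the slide; the cartridge/drive pairing is an assumption.
generations = [
    TapeGeneration("T10KB", cartridges=9000, tb_per_cartridge=1,
                   drives=20, mb_per_s_per_drive=100),
    TapeGeneration("T10KC", cartridges=1000, tb_per_cartridge=5,
                   drives=10, mb_per_s_per_drive=200),
]

total_capacity_pb = sum(g.capacity_pb for g in generations)
total_bandwidth_mb_s = sum(g.drive_bandwidth_mb_s for g in generations)

print(f"tape capacity: {total_capacity_pb} PB")
print(f"nominal drive bandwidth: {total_bandwidth_mb_s} MB/s")
```

The capacity total reproduces the 9 PB + 5 PB = 14 PB quoted for the library. The bandwidth is only a nominal upper bound, since it ignores mount times and the SAN/TAN path.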

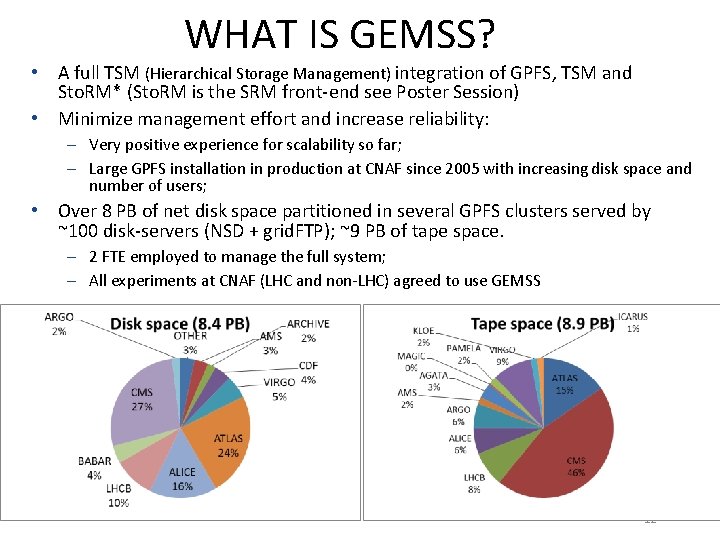

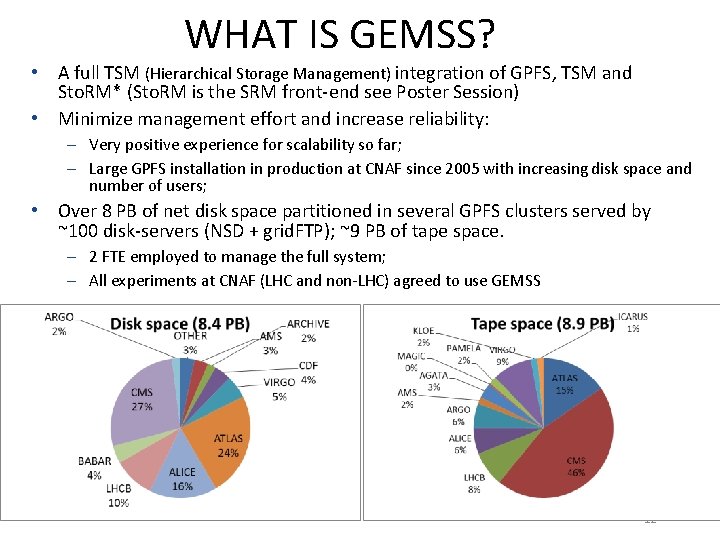

WHAT IS GEMSS?

- A full HSM (Hierarchical Storage Management) integration of GPFS, TSM and StoRM (StoRM is the SRM front-end; see Poster Session)
- Minimizes management effort and increases reliability:
  - Very positive experience with scalability so far
  - Large GPFS installation in production at CNAF since 2005, with increasing disk space and number of users
    - Over 8 PB of net disk space partitioned into several GPFS clusters served by ~100 disk-servers (NSD + GridFTP); ~9 PB of tape space
  - 2 FTEs employed to manage the full system
  - All experiments at CNAF (LHC and non-LHC) agreed to use GEMSS

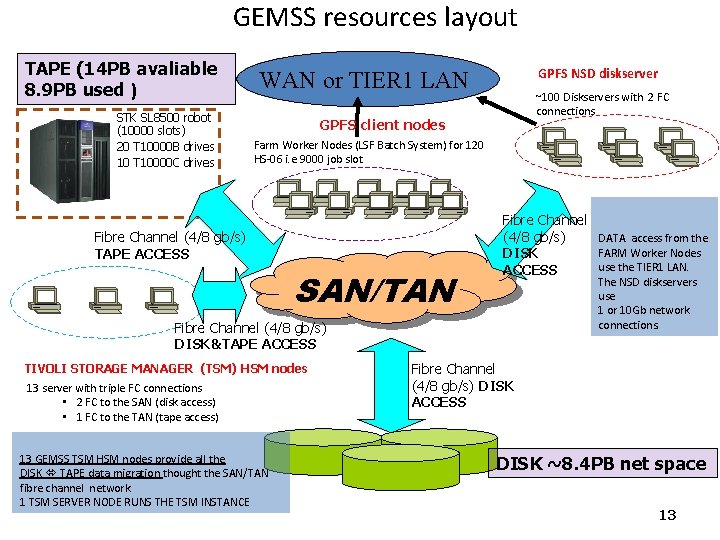

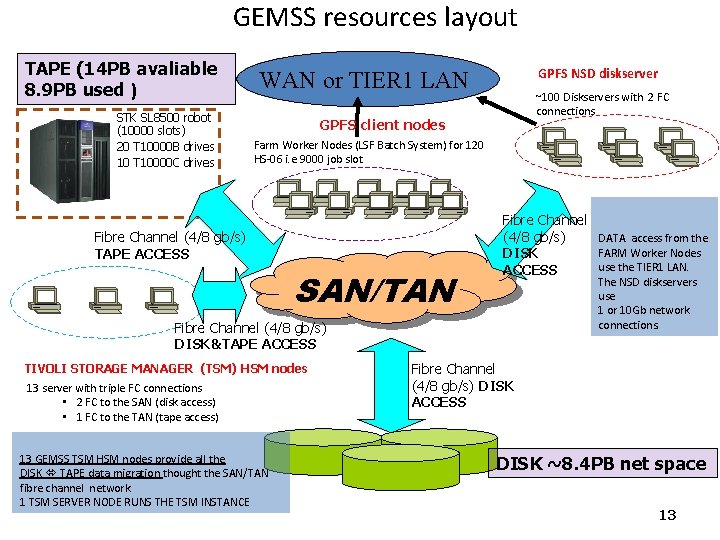

GEMSS resources layout

- DISK: ~8.4 PB net space
- TAPE: 14 PB available, 8.9 PB used
  - STK SL8500 robot (10000 slots)
  - 20 T10000B drives
  - 10 T10000C drives
- GPFS NSD disk-servers: ~100 disk-servers with 2 FC connections
  - Disk access over Fibre Channel (4/8 Gb/s) via the SAN
  - The NSD disk-servers use 1 or 10 Gb network connections (WAN or Tier 1 LAN)
- GPFS client nodes: farm worker nodes (LSF batch system) for 120 HS-06, i.e. 9000 job slots
  - Data access from the farm worker nodes uses the Tier 1 LAN
- Tivoli Storage Manager (TSM) HSM nodes: 13 servers with triple FC connections (4/8 Gb/s, disk and tape access)
  - 2 FC to the SAN (disk access)
  - 1 FC to the TAN (tape access)
  - The 13 GEMSS TSM HSM nodes provide all the disk/tape data migration through the SAN/TAN Fibre Channel network
- 1 TSM server node runs the TSM instance
- Tape access over Fibre Channel (4/8 Gb/s) via the SAN/TAN

Resources

CPU decommissioning

| Year | HS06 |
|------|-------|
| 2012 | 15519 |
| 2013 | 66255 |
| 2014 | 26884 |
| 2015 | 31488 |

Disk decommissioning

| Year | TB |
|------|------|
| 2012 | 0 |
| 2013 | 1800 |
| 2014 | 0 |
| 2015 | 5900 |

- CPU cost (2011): 13 EUR/HS06
  - With hidden costs (network cost etc. still to be added)
- Maintenance of old CPUs: 98 EUR/box (2 mb)
  - 1 "old" box: ~96 x 2 HS06
  - ~0.61 EUR/HS06
  - Evaluate a blade upgrade?
- Disk cost (2011): 261 EUR/TB
  - Without servers!
  - Extremely competitive price (repeatable?)
- Tape cost: ~40 EUR/TB (with trade-in)
  - 1 tape drive: ~15 kEUR
- Hardware renewal for services (extra WNs, extra disk-servers), maintenance (e.g. SAN)
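To give a feel for how these figures combine, the sketch below prices a like-for-like replacement of the capacity decommissioned each year. It reads the schedule as HS06 per year for CPU and TB per year for disk, and applies the 2011 unit costs (13 EUR/HS06, 261 EUR/TB) to every year. That flat-price assumption is purely illustrative and is not made on the slide; the function and variable names are hypothetical.

```python
# Decommissioning schedule as read from the slide.
CPU_DECOMMISSIONED_HS06 = {2012: 15519, 2013: 66255, 2014: 26884, 2015: 31488}
DISK_DECOMMISSIONED_TB = {2012: 0, 2013: 1800, 2014: 0, 2015: 5900}

# 2011 unit costs from the slide; reusing them for later years is an assumption.
CPU_COST_EUR_PER_HS06 = 13
DISK_COST_EUR_PER_TB = 261


def replacement_cost_eur(year):
    """Like-for-like replacement cost (CPU, disk) in EUR for a given year."""
    cpu = CPU_DECOMMISSIONED_HS06[year] * CPU_COST_EUR_PER_HS06
    disk = DISK_DECOMMISSIONED_TB[year] * DISK_COST_EUR_PER_TB
    return cpu, disk


for year in sorted(CPU_DECOMMISSIONED_HS06):
    cpu, disk = replacement_cost_eur(year)
    print(f"{year}: CPU {cpu:>9,} EUR, disk {disk:>9,} EUR, total {cpu + disk:>9,} EUR")
```

The estimate leaves out servers, network, tape and maintenance, all of which the slide lists as separate cost items.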

Vocabulary pyramid

Vocabulary pyramid Tiers of vocabulary words

Tiers of vocabulary words Sintesi 5 maggio

Sintesi 5 maggio 5 maggio analisi

5 maggio analisi Neoistituzionalismo

Neoistituzionalismo Isabelle di maggio

Isabelle di maggio La guerra di piero spiegazione

La guerra di piero spiegazione Maggio kattar reviews

Maggio kattar reviews Stato giuridico del docente 2020

Stato giuridico del docente 2020 Stato giuridico del docente

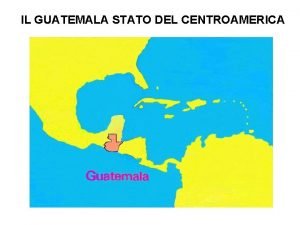

Stato giuridico del docente Stato del centroamerica

Stato del centroamerica Introduzione al vangelo di luca

Introduzione al vangelo di luca Passaggi di stato della materia

Passaggi di stato della materia Stato ideale per platone

Stato ideale per platone Lo stato ideale di platone

Lo stato ideale di platone