
Special topics on text mining [Part I: text classification] Hugo Jair Escalante, Aurelio Lopez, Manuel Montes and Luis Villaseñor

Classification algorithms and evaluation

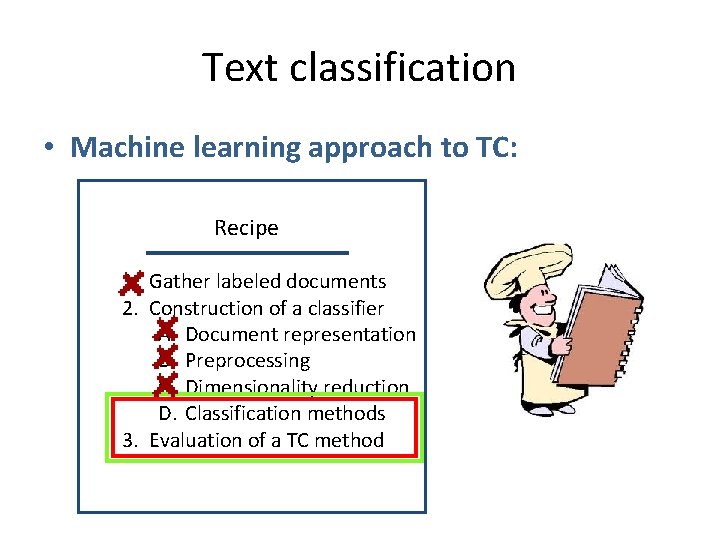

Text classification
- Machine learning approach to TC: recipe
  1. Gather labeled documents
  2. Construction of a classifier
     - A. Document representation
     - B. Preprocessing
     - C. Dimensionality reduction
     - D. Classification methods
  3. Evaluation of a TC method

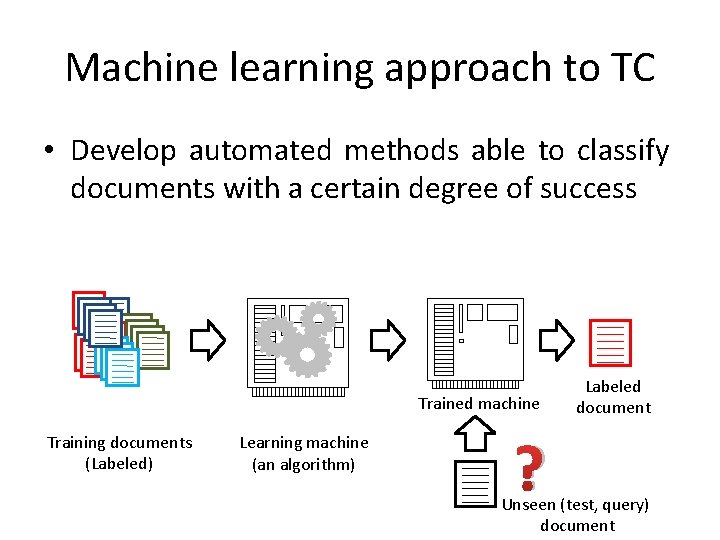

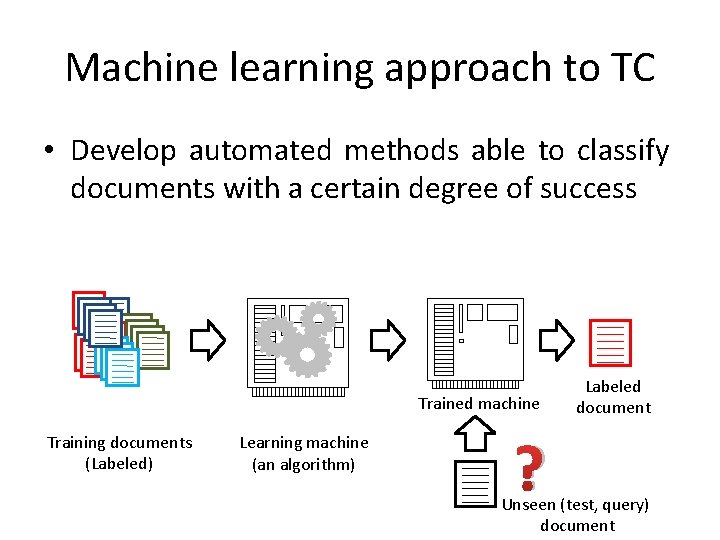

Machine learning approach to TC
- Develop automated methods able to classify documents with a certain degree of success

(figure: labeled training documents are given to a learning machine (an algorithm), which produces a trained machine; the trained machine takes an unseen (test, query) document and outputs a labeled document)

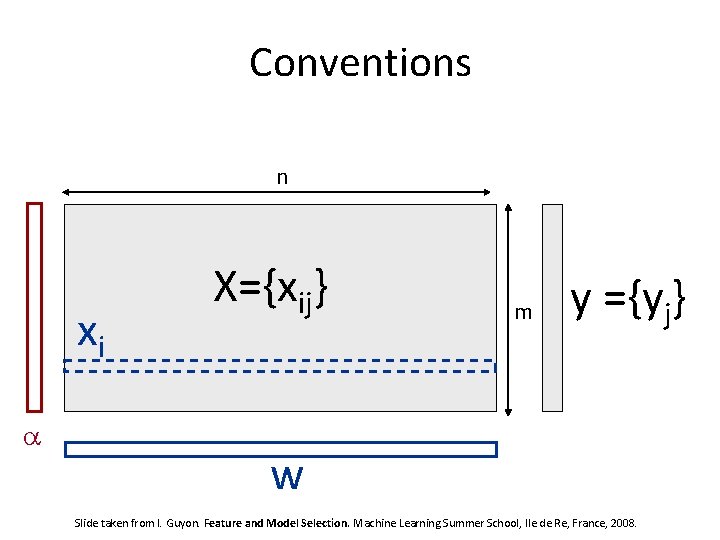

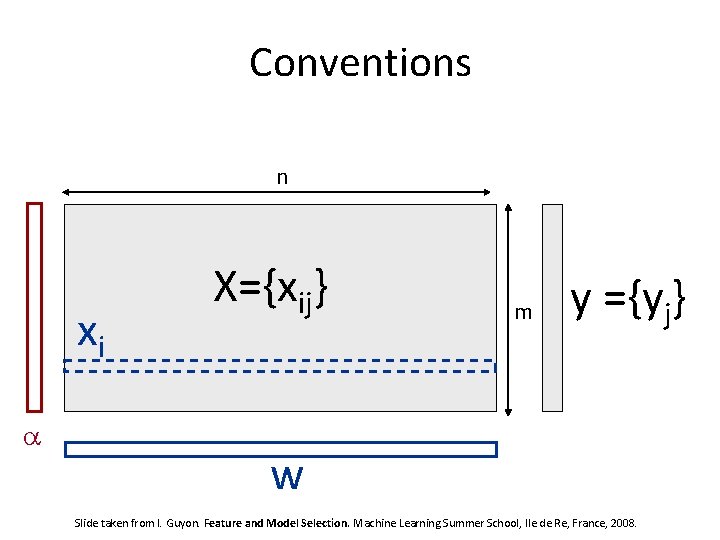

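Conventions (figure: data matrix $X = \{x_{ij}\}$ with m rows, one per example $\mathbf{x}_i$, and n columns, one per feature; target vector $\mathbf{y} = \{y_j\}$; weight vector $\mathbf{w}$; coefficients $\alpha$)

Slide taken from I. Guyon. Feature and Model Selection. Machine Learning Summer School, Ile de Re, France, 2008.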

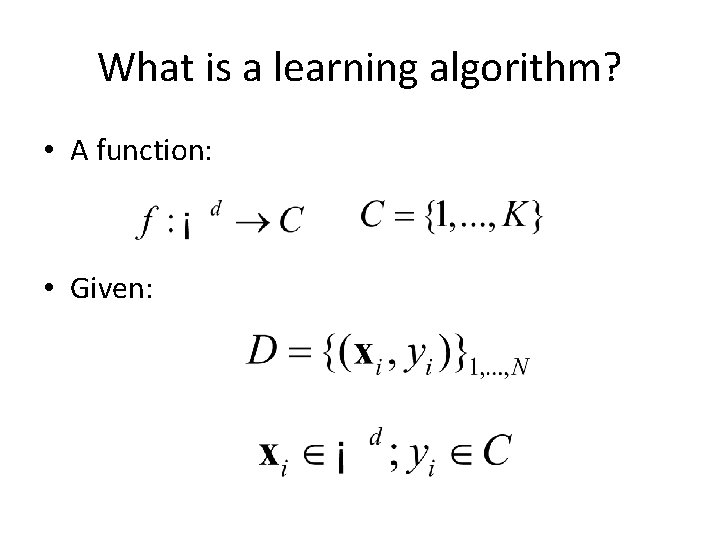

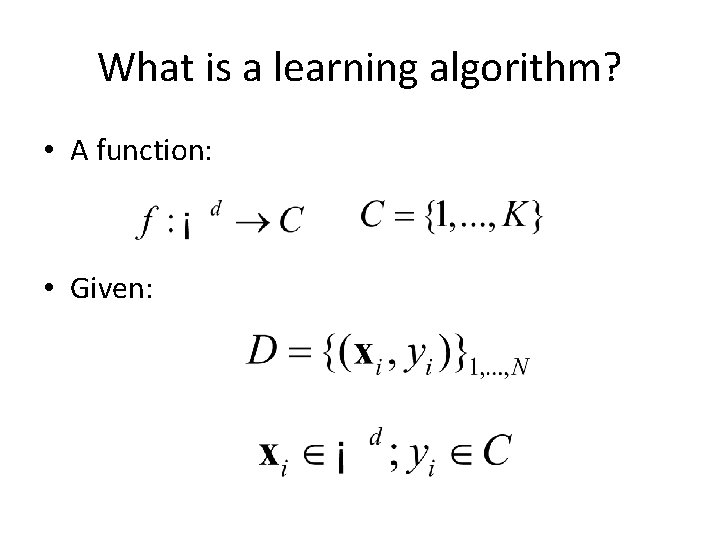

What is a learning algorithm? • A function: • Given:

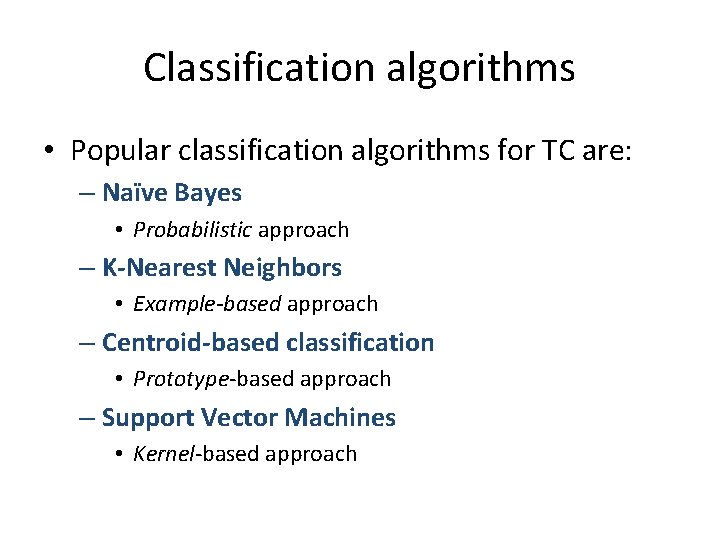

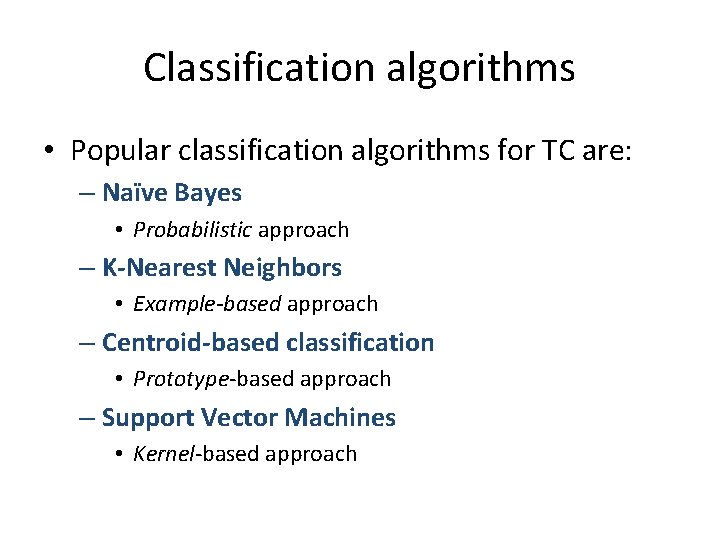

Classification algorithms
- Popular classification algorithms for TC are:
  - Naïve Bayes: probabilistic approach
  - K-Nearest Neighbors: example-based approach
  - Centroid-based classification: prototype-based approach
  - Support Vector Machines: kernel-based approach

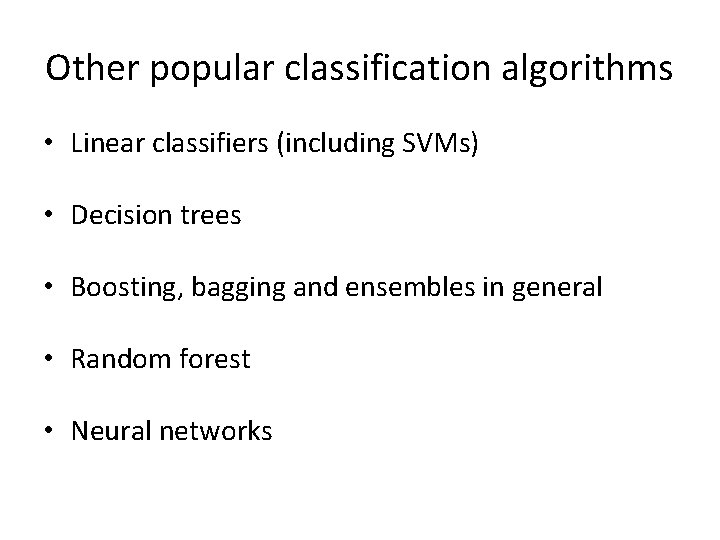

Other popular classification algorithms
- Linear classifiers (including SVMs)
- Decision trees
- Boosting, bagging and ensembles in general
- Random forest
- Neural networks

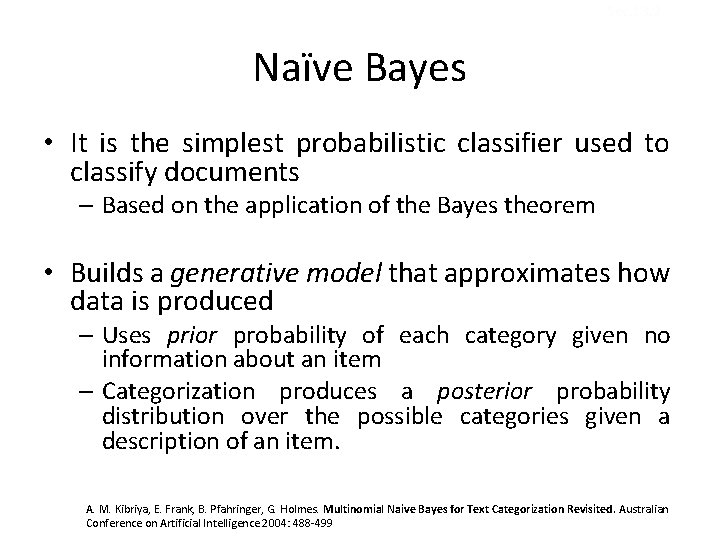

Sec. 13.2 Naïve Bayes
- It is one of the simplest probabilistic classifiers used to classify documents
  - Based on the application of Bayes' theorem
- Builds a generative model that approximates how data is produced
  - Uses the prior probability of each category given no information about an item
  - Categorization produces a posterior probability distribution over the possible categories given a description of an item.

A. M. Kibriya, E. Frank, B. Pfahringer, G. Holmes. Multinomial Naive Bayes for Text Categorization Revisited. Australian Conference on Artificial Intelligence 2004, pp. 488-499.

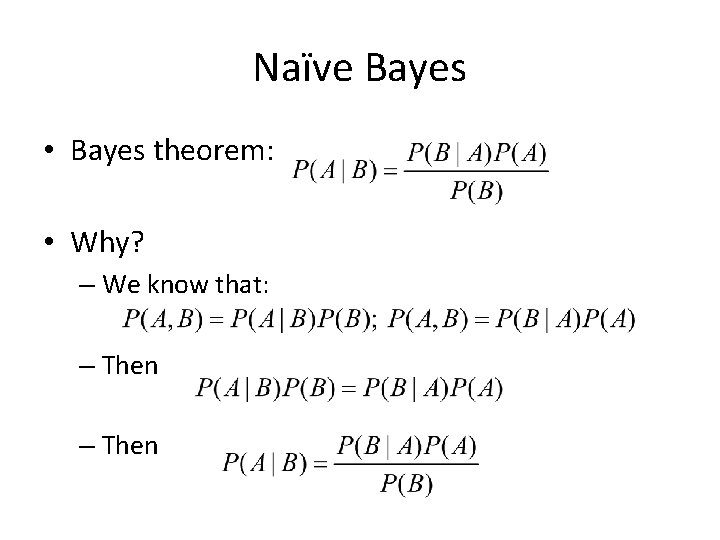

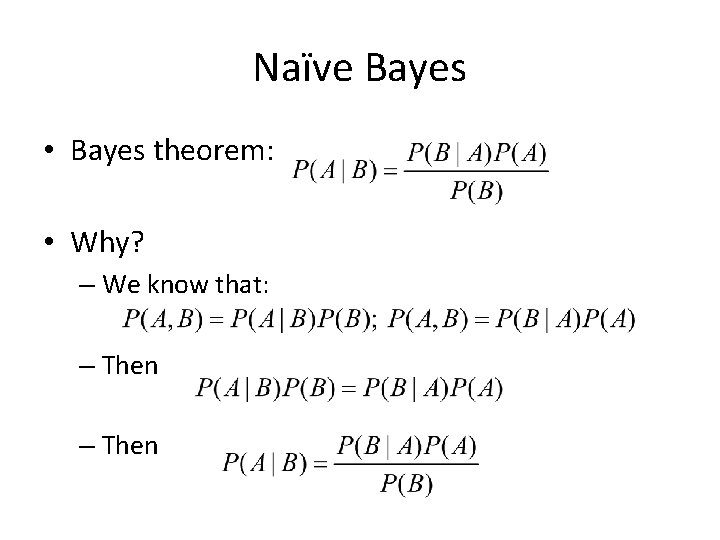

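Naïve Bayes
- Bayes theorem: $P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$
- Why?
  - We know that: $P(A, B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$
  - Then, dividing both sides by $P(B)$ gives the theorem.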

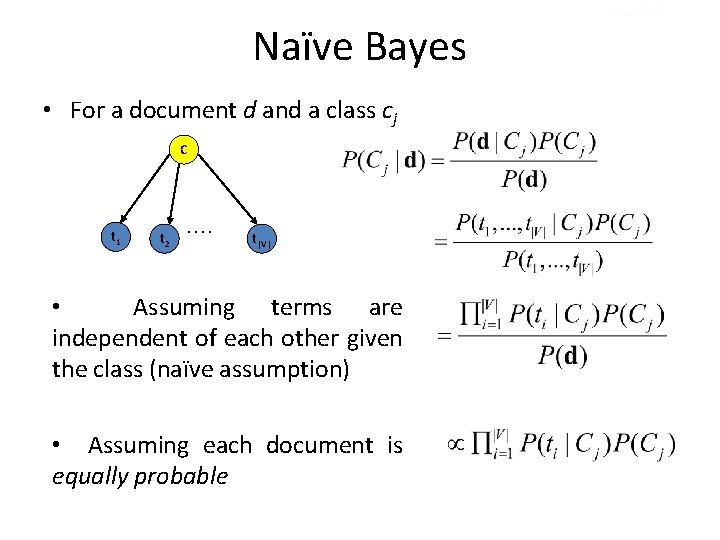

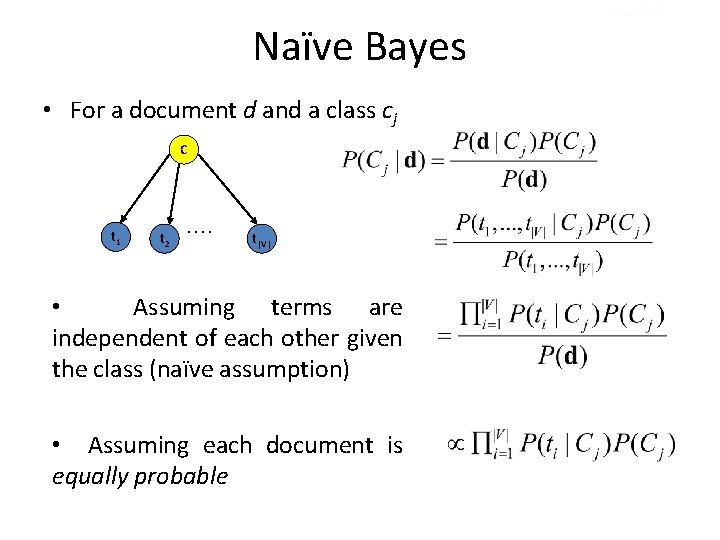

Naïve Bayes
- For a document d and a class $c_j$ (figure: class node C connected to term nodes $t_1, t_2, \dots, t_{|V|}$)
- Assuming terms are independent of each other given the class (the naïve assumption)
- Assuming each document is equally probable

Sec. 13.2

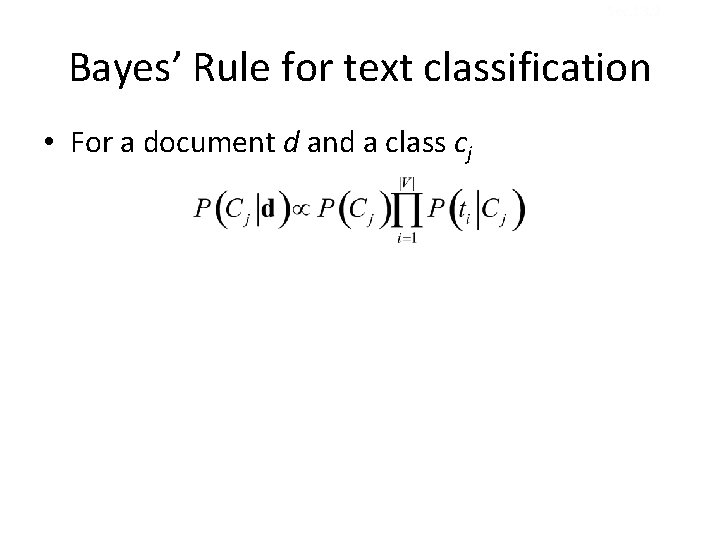

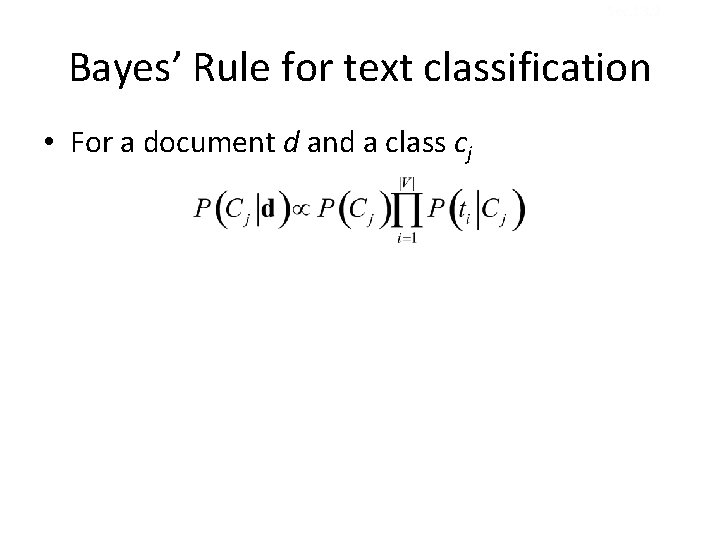

Sec. 13.2 Bayes’ Rule for text classification
- For a document d and a class $c_j$: $P(c_j \mid d) = \frac{P(d \mid c_j)\,P(c_j)}{P(d)}$

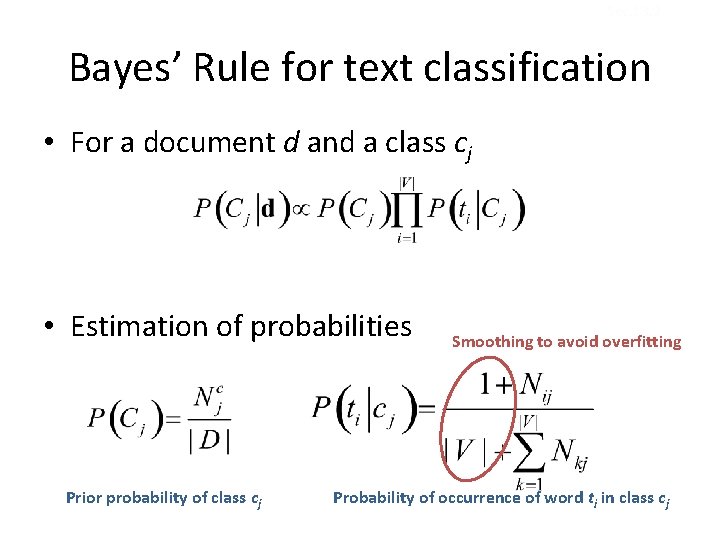

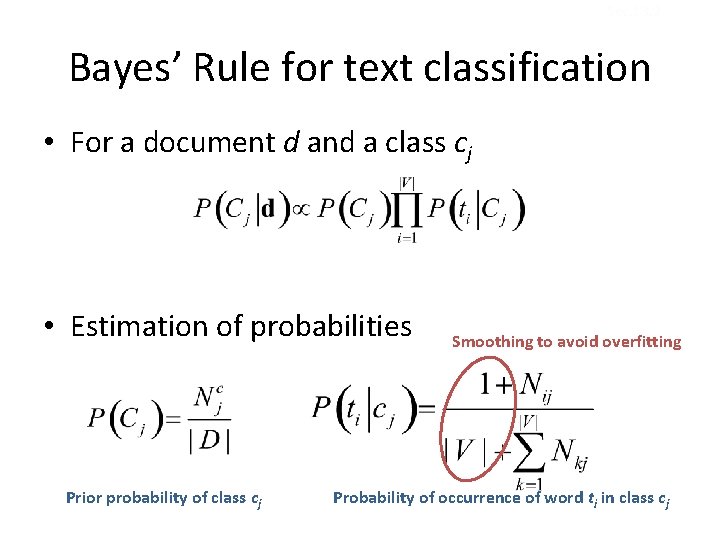

Sec. 13.2 Bayes’ Rule for text classification
- For a document d and a class $c_j$
- Estimation of probabilities:
  - Prior probability of class $c_j$
  - Probability of occurrence of word $t_i$ in class $c_j$, with smoothing to avoid overfitting (and zero probabilities for words unseen in the class)

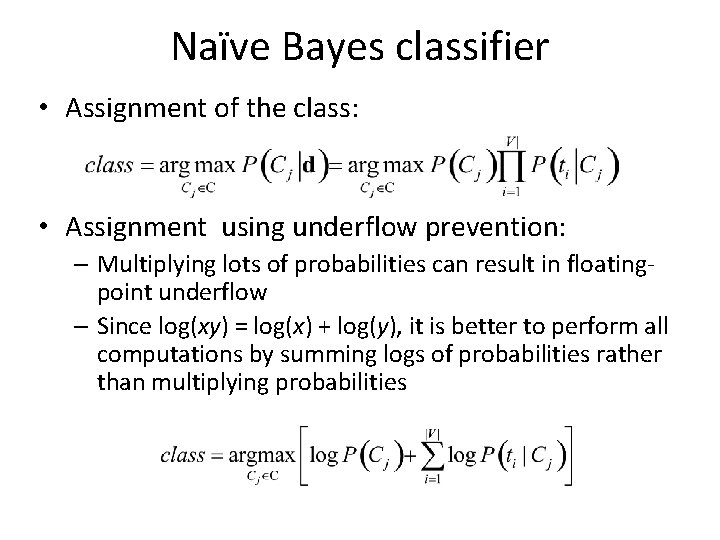

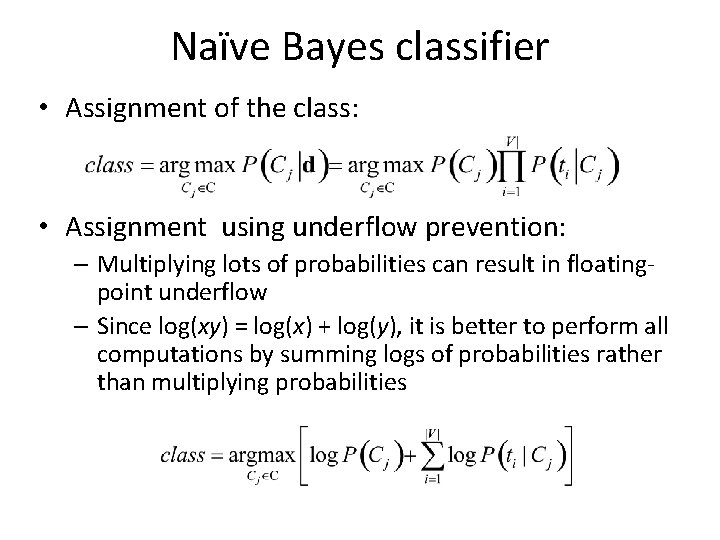

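Naïve Bayes classifier
- Assignment of the class: $c_{NB} = \arg\max_{c_j \in C} P(c_j) \prod_{t_i \in d} P(t_i \mid c_j)$
- Assignment using underflow prevention: $c_{NB} = \arg\max_{c_j \in C} \left[ \log P(c_j) + \sum_{t_i \in d} \log P(t_i \mid c_j) \right]$
  - Multiplying many probabilities can result in floating-point underflow
  - Since log(xy) = log(x) + log(y), it is better to perform all computations by summing logs of probabilities rather than multiplying probabilities; because log is monotonic, the class with the highest log score is also the most probable one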
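A minimal sketch of the classifier above, assuming documents are already tokenized into lists of terms and using add-one (Laplace) smoothing for $P(t_i \mid c_j)$. The function names are hypothetical, and the smoothing choice is an assumption, since the slide's exact estimator is not recoverable. Scores are sums of logs, so no product of many small probabilities is ever formed.

```python
import math
from collections import Counter, defaultdict

def train_multinomial_nb(docs, labels):
    """docs: list of token lists; labels: list of class names (hypothetical API)."""
    vocab = {t for doc in docs for t in doc}
    class_docs = Counter(labels)
    # Prior P(c_j): fraction of training documents labeled c_j
    log_prior = {c: math.log(n / len(docs)) for c, n in class_docs.items()}
    # Term occurrence counts per class
    term_counts = defaultdict(Counter)
    for doc, c in zip(docs, labels):
        term_counts[c].update(doc)
    log_cond = {}
    for c in class_docs:
        total = sum(term_counts[c].values())
        # Add-one (Laplace) smoothing (an assumption): unseen terms keep a nonzero probability
        log_cond[c] = {t: math.log((term_counts[c][t] + 1) / (total + len(vocab)))
                       for t in vocab}
    return vocab, log_prior, log_cond

def classify_nb(doc, vocab, log_prior, log_cond):
    # Sum log-probabilities instead of multiplying probabilities (underflow prevention);
    # tokens outside the training vocabulary are ignored
    scores = {c: log_prior[c] + sum(log_cond[c][t] for t in doc if t in vocab)
              for c in log_prior}
    return max(scores, key=scores.get)
```

For example, after training on a few spam and ham token lists, `classify_nb(["cheap", "offer"], *model)` compares the per-class log scores and returns the class with the larger one.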

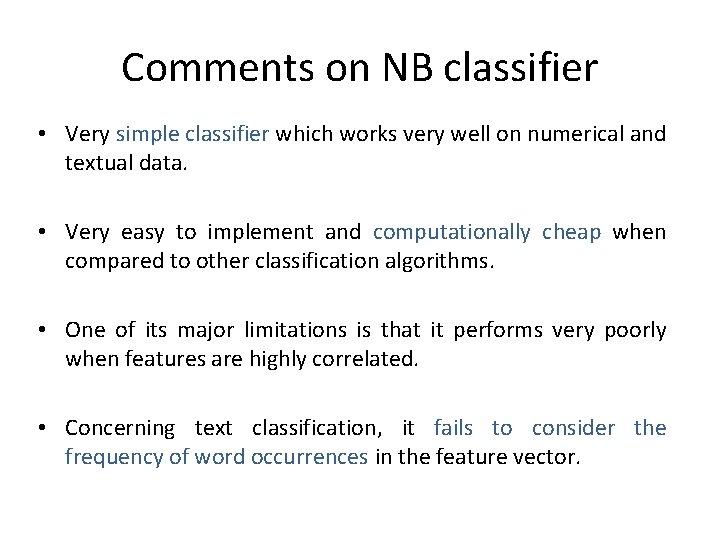

Comments on NB classifier
- Very simple classifier that works very well on numerical and textual data.
- Very easy to implement and computationally cheap compared to other classification algorithms.
- One of its major limitations is that it performs very poorly when features are highly correlated.
- Concerning text classification, it fails to consider the frequency of word occurrences in the feature vector.

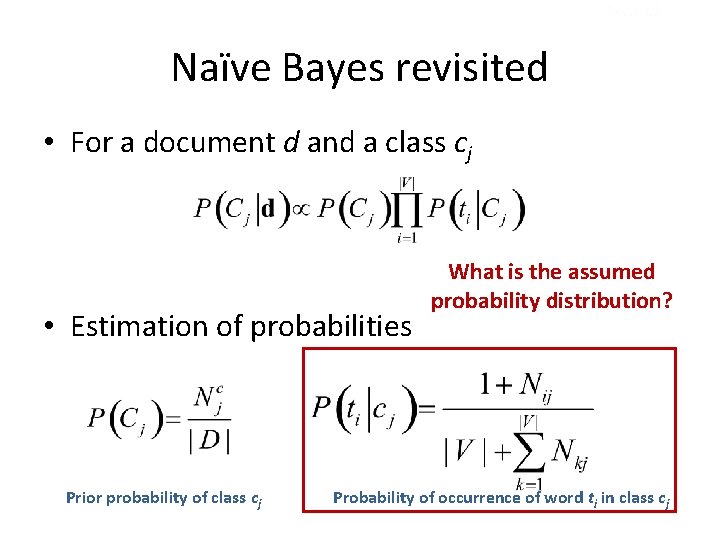

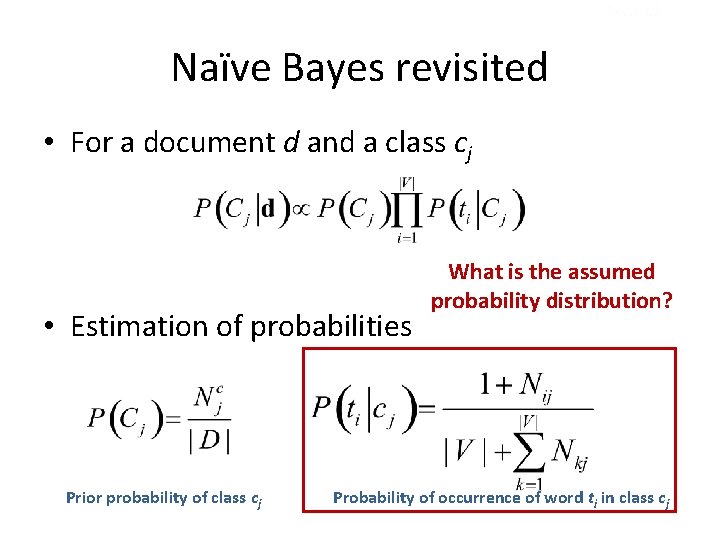

Sec. 13.2 Naïve Bayes revisited
- For a document d and a class $c_j$
- Estimation of probabilities:
  - Prior probability of class $c_j$
  - Probability of occurrence of word $t_i$ in class $c_j$: what is the assumed probability distribution?

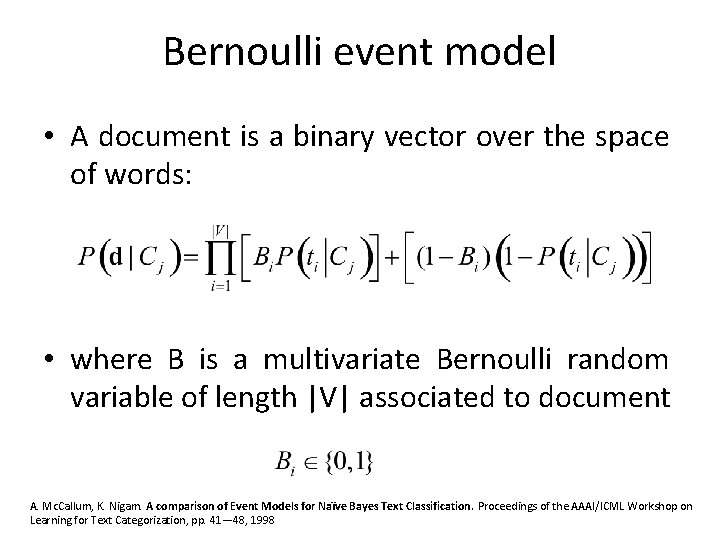

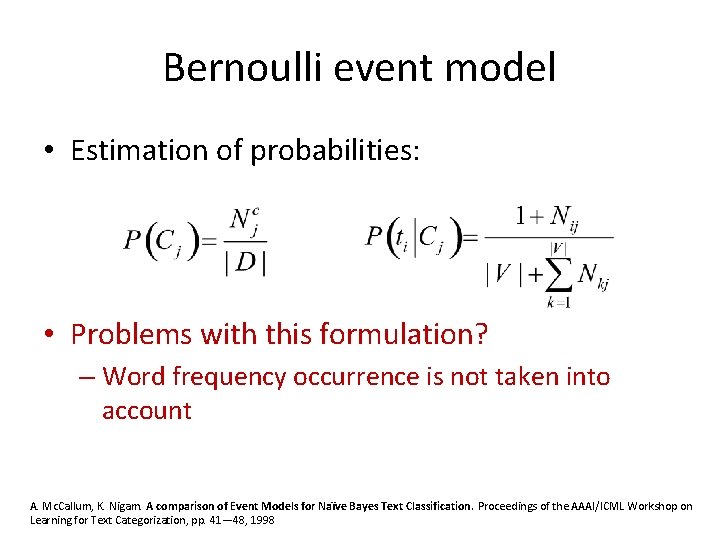

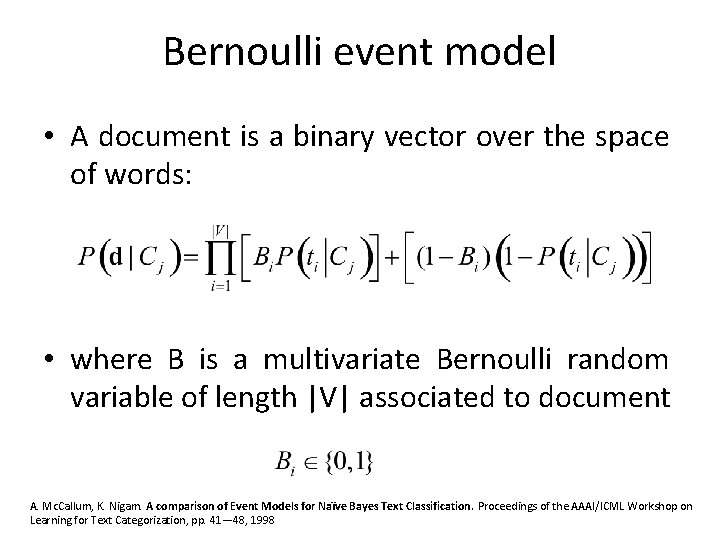

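Bernoulli event model
- A document is a binary vector over the space of words:

$$P(d \mid c_j) = \prod_{i=1}^{|V|} \left[ B_i\, P(t_i \mid c_j) + (1 - B_i)\,\bigl(1 - P(t_i \mid c_j)\bigr) \right]$$

- where B is a multivariate Bernoulli random variable of length |V| associated with document d ($B_i = 1$ if term $t_i$ occurs in d, and 0 otherwise)

A. McCallum, K. Nigam. A comparison of Event Models for Naïve Bayes Text Classification. Proceedings of the AAAI/ICML Workshop on Learning for Text Categorization, pp. 41-48, 1998.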

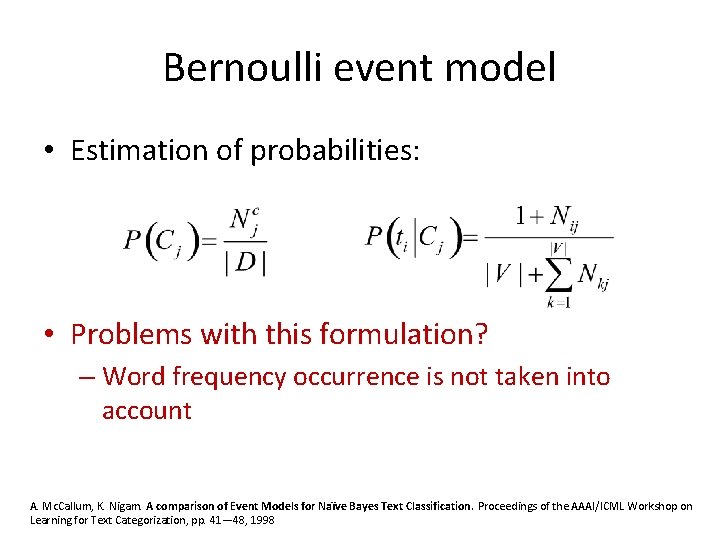

Bernoulli event model
- Estimation of probabilities:
- Problems with this formulation?
  - The frequency of word occurrences is not taken into account

A. McCallum, K. Nigam. A comparison of Event Models for Naïve Bayes Text Classification. Proceedings of the AAAI/ICML Workshop on Learning for Text Categorization, pp. 41-48, 1998.
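A sketch of the Bernoulli event model under stated assumptions: each document is reduced to the set of terms it contains, $P(t_i \mid c_j)$ is the Laplace-smoothed fraction of class documents that contain $t_i$ (the form used by McCallum and Nigam), and every vocabulary term, present or absent, contributes a factor. Function names are hypothetical. Because documents become sets, repeating a word does not change the score, which is exactly the problem noted above.

```python
import math

def train_bernoulli_nb(docs, labels, vocab):
    """docs: list of token lists; vocab: list of the |V| terms (hypothetical API)."""
    model = {}
    for c in set(labels):
        class_docs = [set(d) for d, y in zip(docs, labels) if y == c]
        n_c = len(class_docs)
        # P(t_i | c_j): smoothed fraction of class-c_j documents containing t_i
        p = {t: (1 + sum(t in d for d in class_docs)) / (2 + n_c) for t in vocab}
        model[c] = (math.log(n_c / len(docs)), p)
    return model

def classify_bernoulli(doc, model, vocab):
    present = set(doc)  # only presence/absence matters, not frequency
    scores = {}
    for c, (log_prior, p) in model.items():
        s = log_prior
        for t in vocab:
            # B_i = 1 contributes P(t_i|c_j); B_i = 0 contributes 1 - P(t_i|c_j)
            s += math.log(p[t]) if t in present else math.log(1 - p[t])
        scores[c] = s
    return max(scores, key=scores.get)
```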

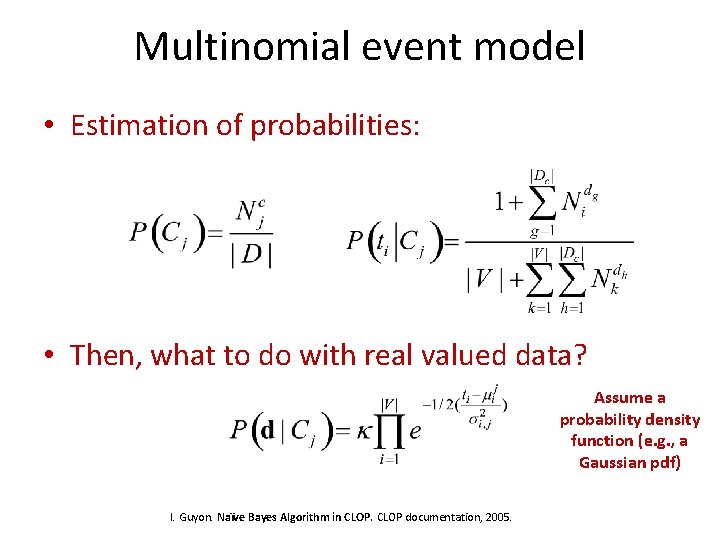

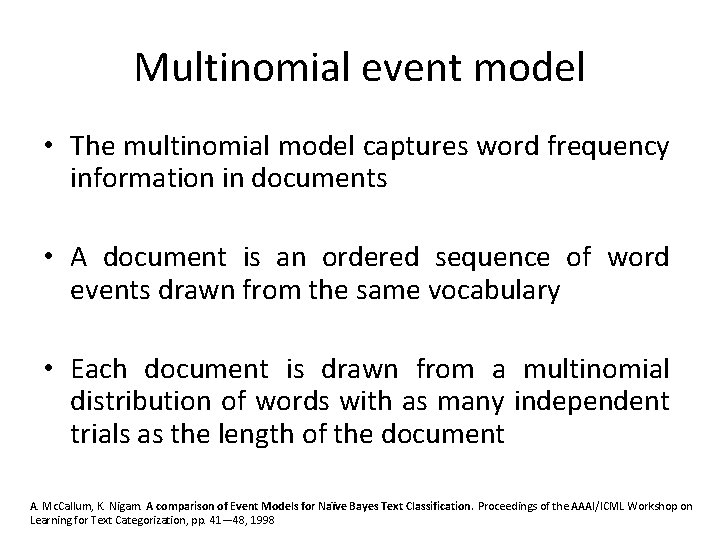

Multinomial event model
- The multinomial model captures word frequency information in documents
- A document is an ordered sequence of word events drawn from the same vocabulary
- Each document is drawn from a multinomial distribution of words with as many independent trials as the length of the document

A. McCallum, K. Nigam. A comparison of Event Models for Naïve Bayes Text Classification. Proceedings of the AAAI/ICML Workshop on Learning for Text Categorization, pp. 41-48, 1998.

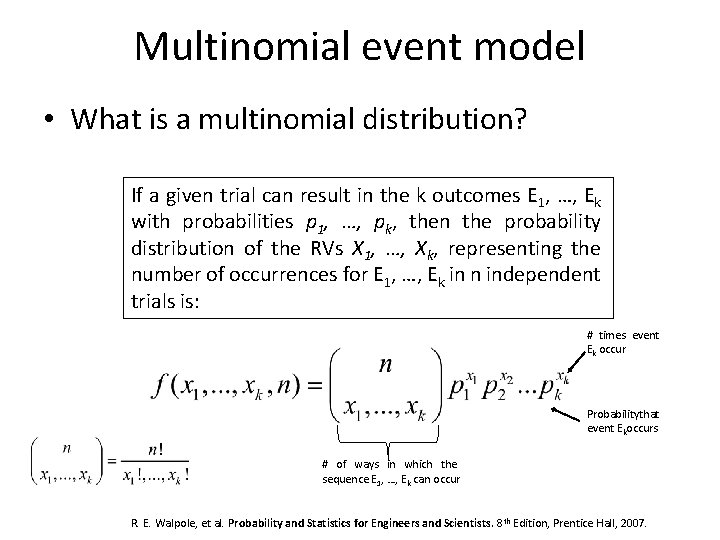

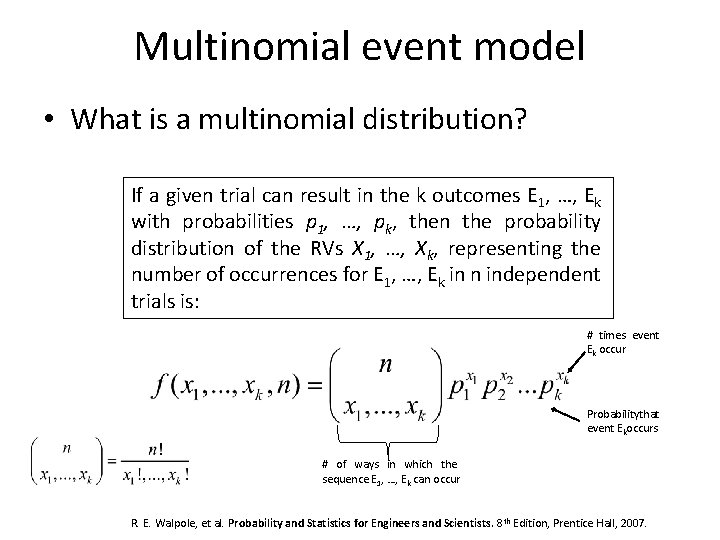

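Multinomial event model
- What is a multinomial distribution? If a given trial can result in the k outcomes $E_1, \dots, E_k$ with probabilities $p_1, \dots, p_k$, then the probability distribution of the random variables $X_1, \dots, X_k$, representing the number of occurrences of $E_1, \dots, E_k$ in n independent trials, is:

$$P(X_1 = x_1, \dots, X_k = x_k) = \frac{n!}{x_1!\,x_2! \cdots x_k!}\; p_1^{x_1} p_2^{x_2} \cdots p_k^{x_k}, \qquad \sum_{i=1}^{k} x_i = n$$

where $x_i$ is the number of times event $E_i$ occurs, $p_i$ is the probability that event $E_i$ occurs, and $\frac{n!}{x_1! \cdots x_k!}$ is the number of ways in which the sequence of outcomes can occur.

R. E. Walpole, et al. Probability and Statistics for Engineers and Scientists. 8th Edition, Prentice Hall, 2007.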
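The formula can be checked numerically with a short sketch (the function name is hypothetical). Note that the coefficient $n!/(x_1! \cdots x_k!)$ depends only on the counts, not on the probabilities, which is why it can be dropped when the same document is scored against different classes.

```python
from math import factorial, prod

def multinomial_pmf(counts, probs):
    """P(X_1 = x_1, ..., X_k = x_k) for n = sum(counts) independent trials."""
    n = sum(counts)
    # Number of distinct orderings of the outcomes: n! / (x_1! ... x_k!)
    coeff = factorial(n) // prod(factorial(x) for x in counts)
    # Probability of any one such ordering: p_1^x_1 ... p_k^x_k
    return coeff * prod(p ** x for p, x in zip(probs, counts))

# Two heads and one tail in three fair coin flips: 3 orderings * 0.5^3
print(multinomial_pmf([2, 1], [0.5, 0.5]))
```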

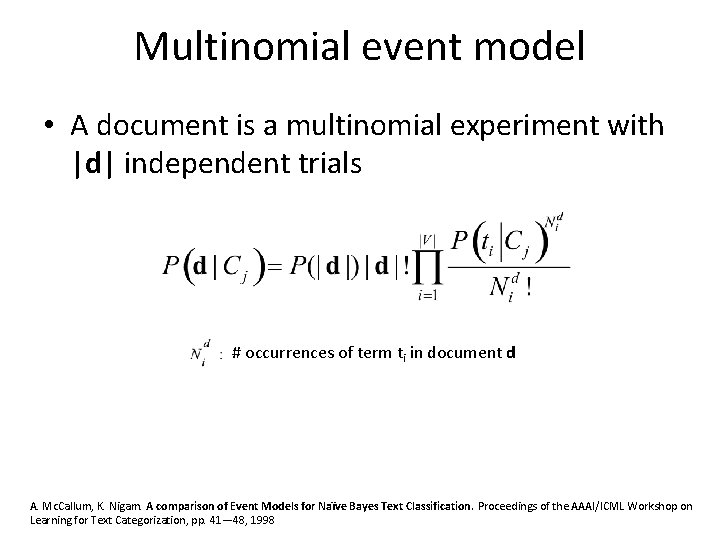

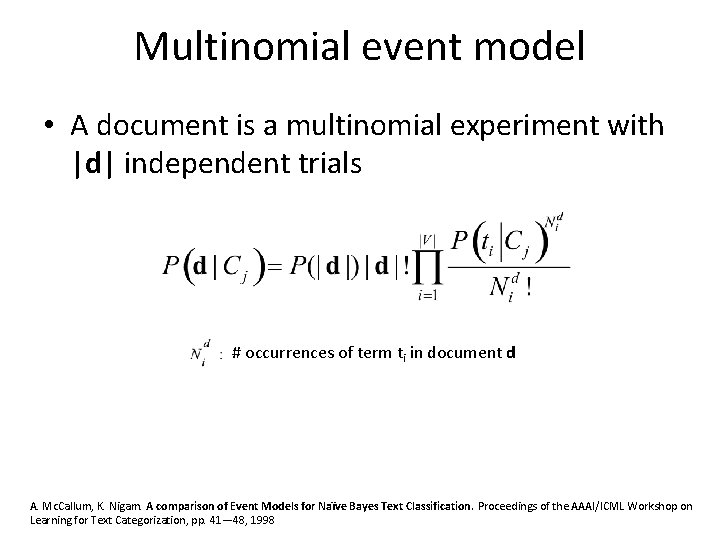

Multinomial event model
- A document is a multinomial experiment with |d| independent trials:

$$P(d \mid c_j) = \frac{|d|!}{\prod_{i=1}^{|V|} N_{id}!} \prod_{i=1}^{|V|} P(t_i \mid c_j)^{N_{id}}$$

where $N_{id}$ is the number of occurrences of term $t_i$ in document d.

A. McCallum, K. Nigam. A comparison of Event Models for Naïve Bayes Text Classification. Proceedings of the AAAI/ICML Workshop on Learning for Text Categorization, pp. 41-48, 1998.

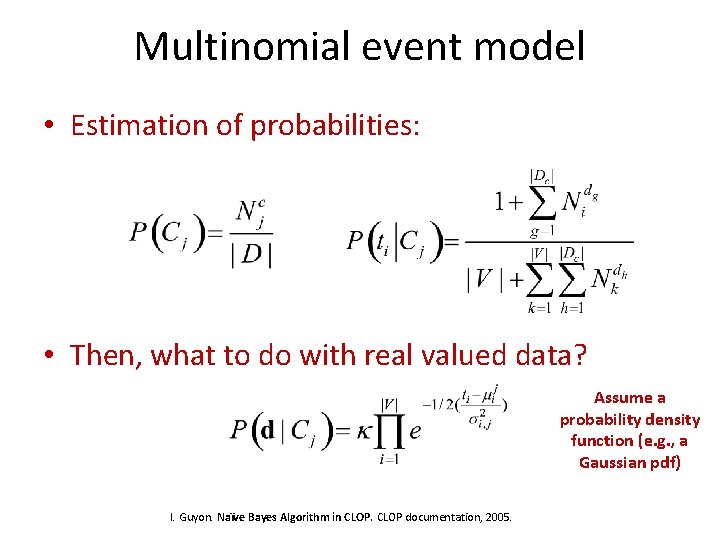

Multinomial event model
- Estimation of probabilities:
- Then, what to do with real-valued data? Assume a probability density function (e.g., a Gaussian pdf)

I. Guyon. Naïve Bayes Algorithm in CLOP documentation, 2005.
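For real-valued features, a minimal Gaussian naïve Bayes sketch, assuming one independent normal density per feature and class with maximum-likelihood mean and variance. The small `eps` added to each variance is an assumption that avoids division by zero for constant features, and all names are hypothetical.

```python
import math
from statistics import mean, pvariance

def train_gaussian_nb(X, y, eps=1e-9):
    """X: list of real-valued feature vectors; y: list of labels."""
    model = {}
    for c in set(y):
        rows = [x for x, label in zip(X, y) if label == c]
        cols = list(zip(*rows))  # one tuple of values per feature
        mu = [mean(col) for col in cols]
        # eps keeps every variance strictly positive (assumption)
        var = [pvariance(col) + eps for col in cols]
        model[c] = (math.log(len(rows) / len(X)), mu, var)
    return model

def gaussian_log_pdf(v, mu, var):
    # log of the normal density N(v; mu, var)
    return -0.5 * math.log(2 * math.pi * var) - (v - mu) ** 2 / (2 * var)

def classify_gaussian(x, model):
    # log prior + sum of per-feature log densities (naïve independence)
    scores = {c: lp + sum(gaussian_log_pdf(v, m, s) for v, m, s in zip(x, mu, var))
              for c, (lp, mu, var) in model.items()}
    return max(scores, key=scores.get)
```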

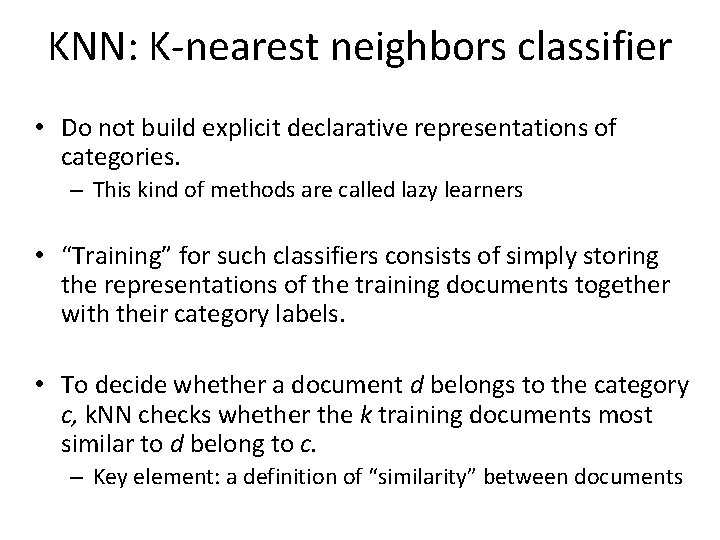

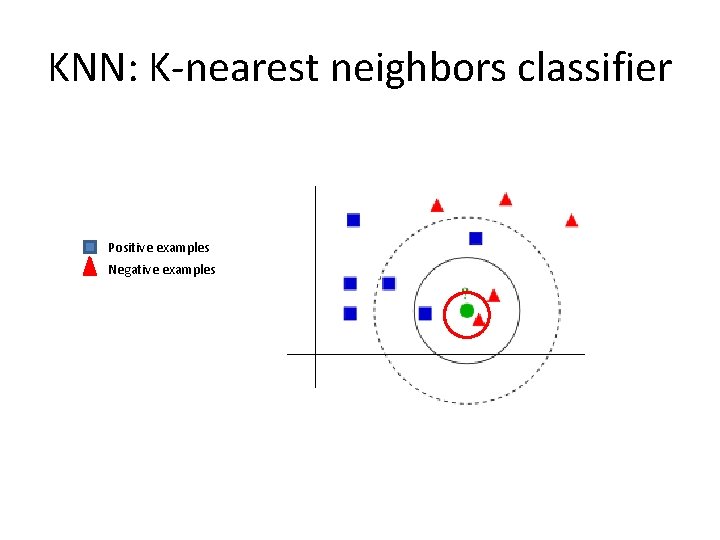

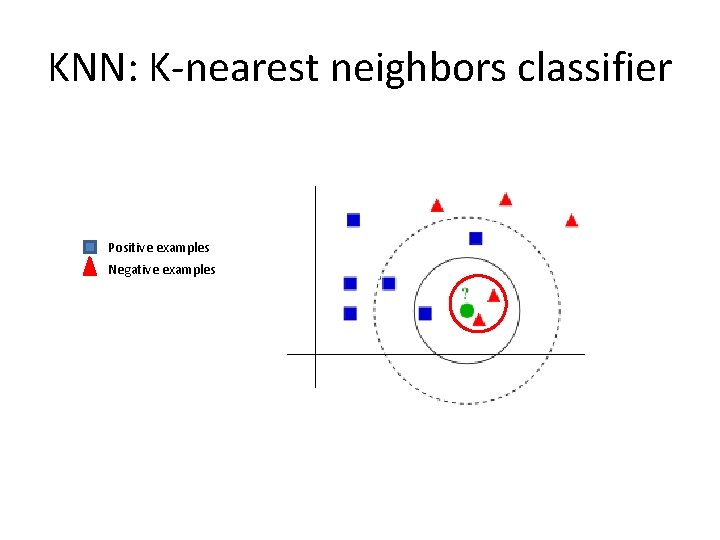

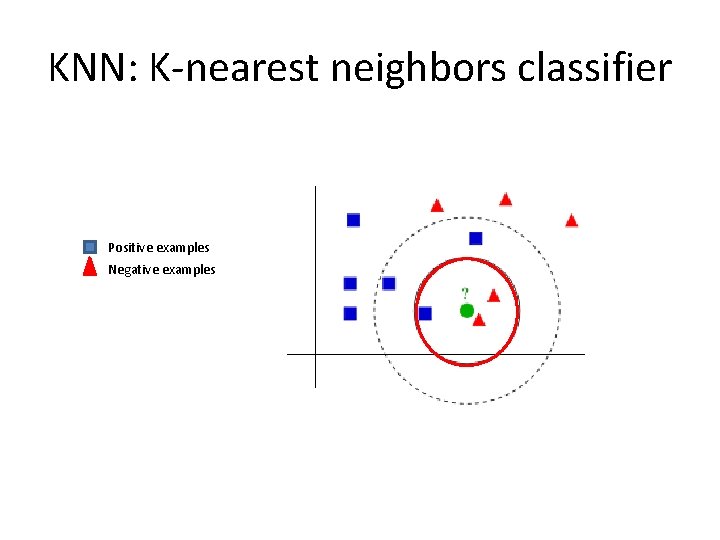

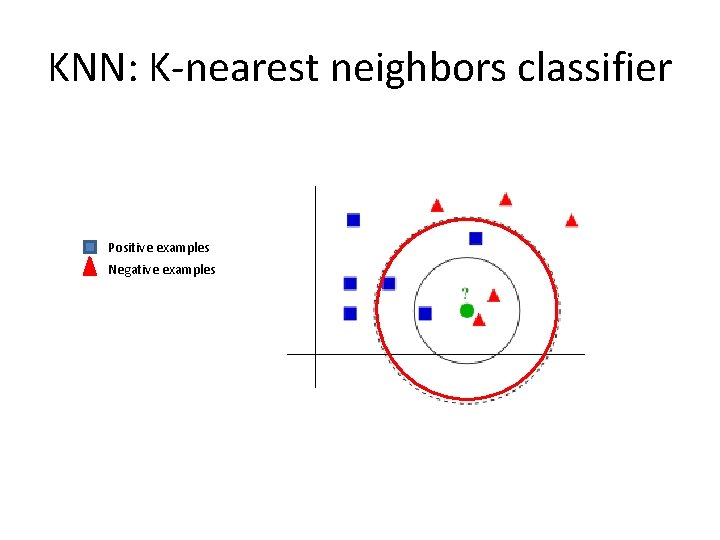

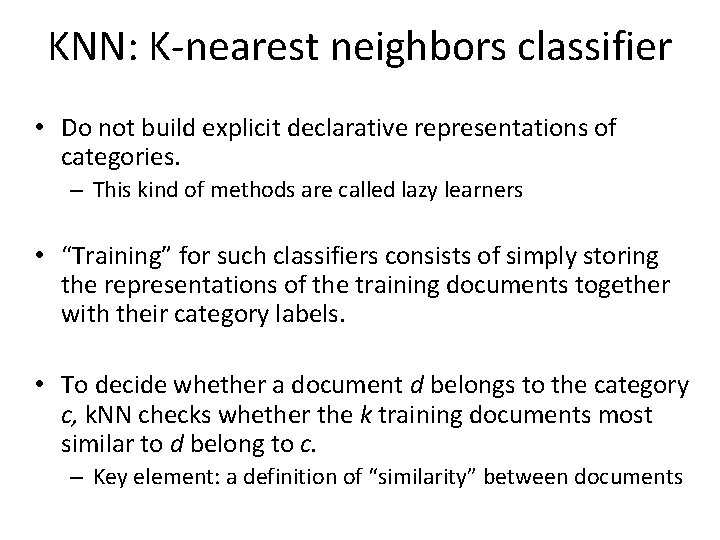

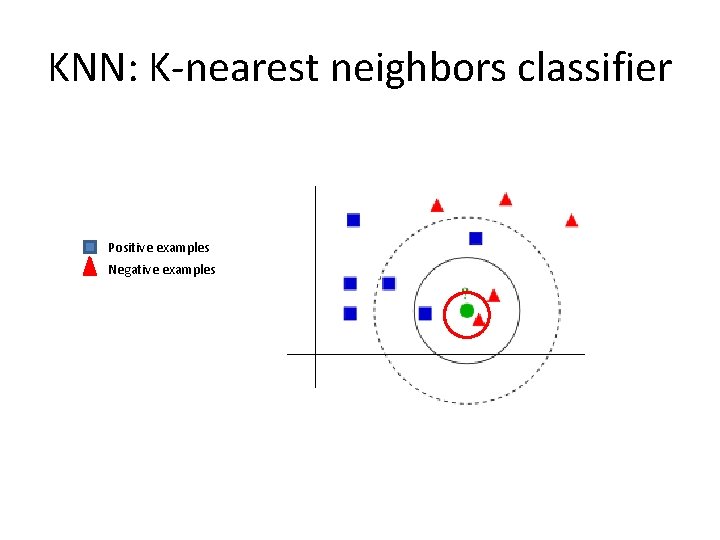

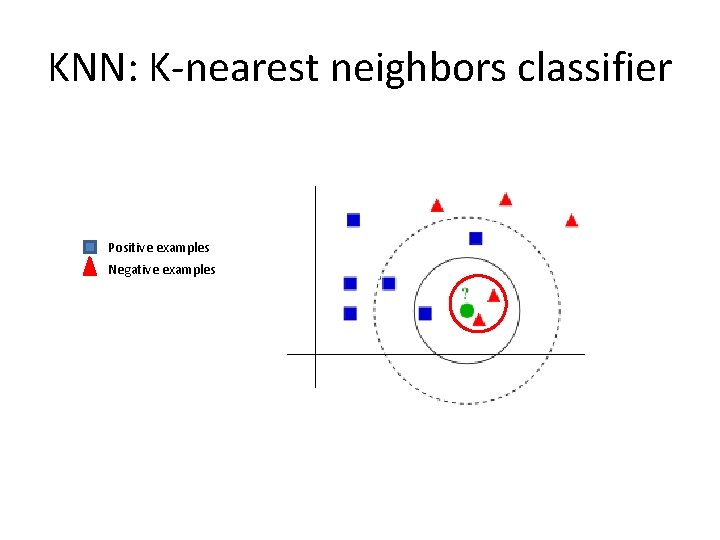

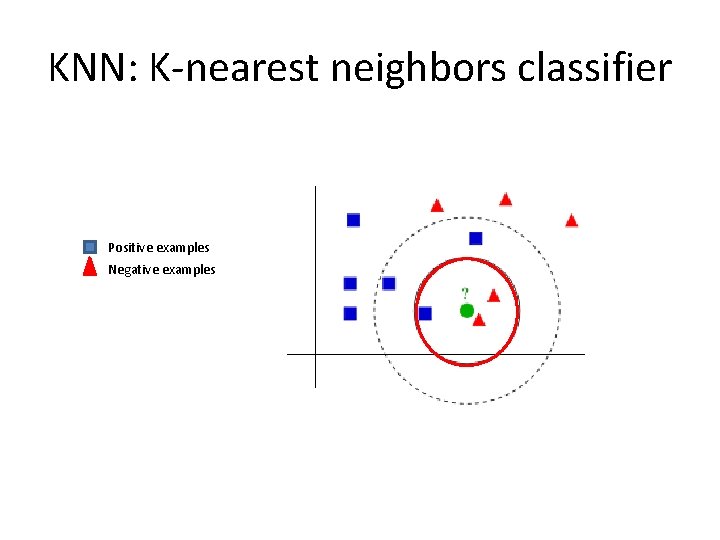

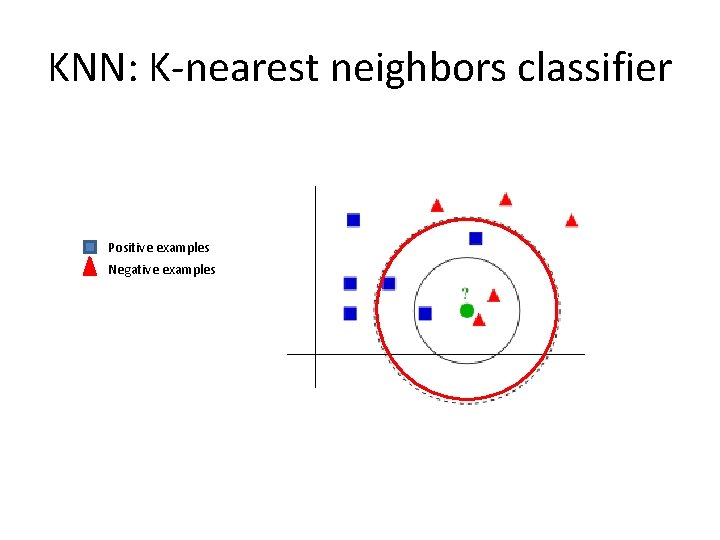

KNN: K-nearest neighbors classifier
- k-NN classifiers do not build explicit declarative representations of categories.
  - Methods of this kind are called lazy learners
- "Training" for such classifiers consists simply of storing the representations of the training documents together with their category labels.
- To decide whether a document d belongs to category c, k-NN checks whether the k training documents most similar to d belong to c.
  - Key element: a definition of "similarity" between documents

KNN: K-nearest neighbors classifier (figure: positive and negative training examples)


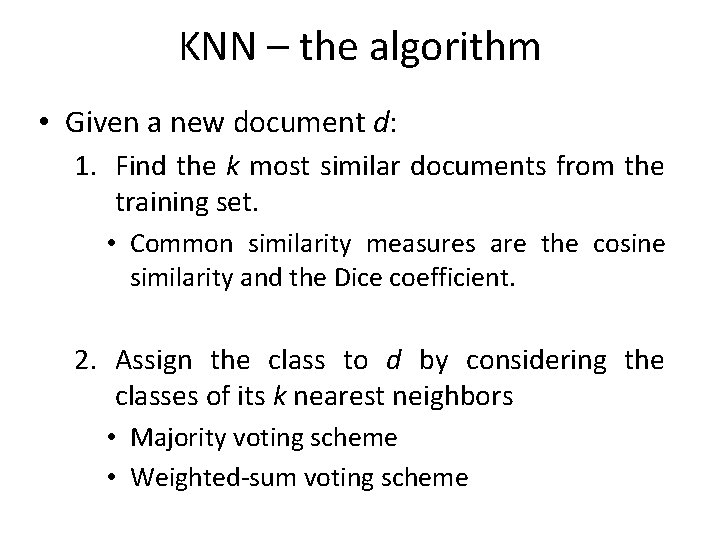

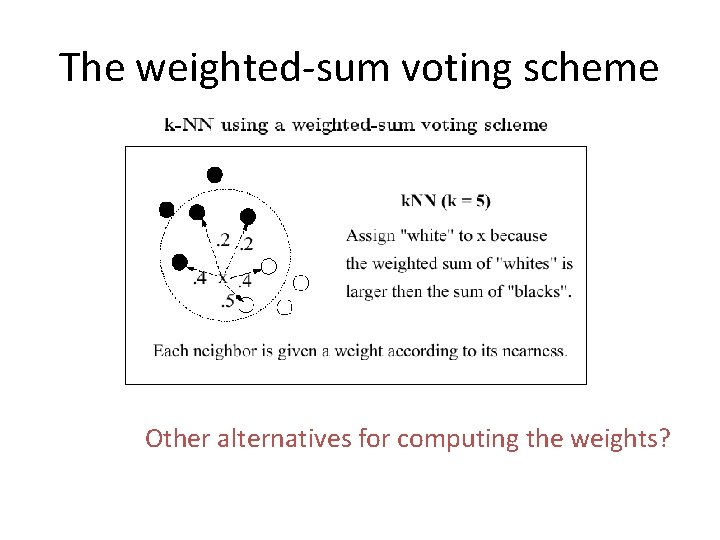

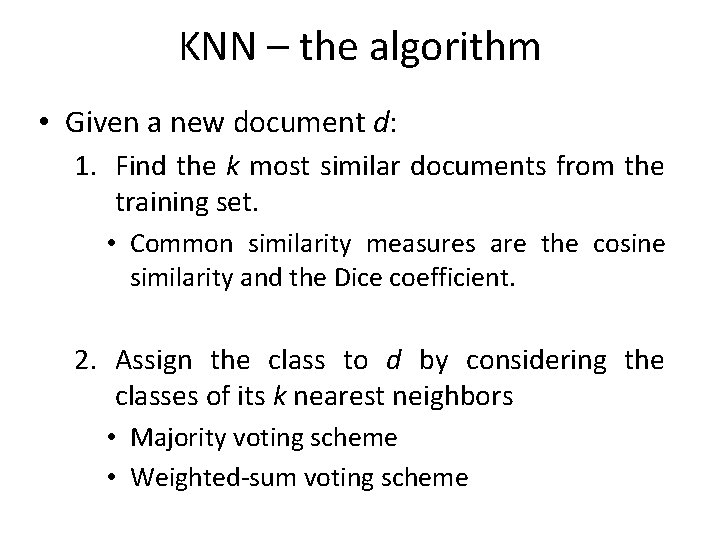

KNN – the algorithm
- Given a new document d:
  1. Find the k most similar documents in the training set.
     - Common similarity measures are the cosine similarity and the Dice coefficient.
  2. Assign a class to d by considering the classes of its k nearest neighbors:
     - Majority voting scheme
     - Weighted-sum voting scheme
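A minimal sketch of both voting schemes, assuming documents are sparse vectors stored as Python dicts mapping terms to weights (for example tf-idf) and using cosine similarity; the names are hypothetical.

```python
import math
from collections import Counter, defaultdict

def cosine(u, v):
    """u, v: dicts mapping term -> weight."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

def knn_classify(d, train, k=3, weighted=False):
    """train: list of (vector, label) pairs; d: vector of the new document."""
    # Step 1: the k training documents most similar to d
    neighbors = sorted(train, key=lambda ex: cosine(d, ex[0]), reverse=True)[:k]
    if not weighted:
        # Majority voting: each neighbor casts one vote
        votes = Counter(label for _, label in neighbors)
    else:
        # Weighted-sum voting: each vote counts as much as its similarity to d
        votes = defaultdict(float)
        for vec, label in neighbors:
            votes[label] += cosine(d, vec)
    # Step 2: on ties, max() returns the label encountered first
    return max(votes, key=votes.get)
```

With `weighted=True`, a class backed by a few very similar neighbors can outscore a class with more, but less similar, neighbors.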

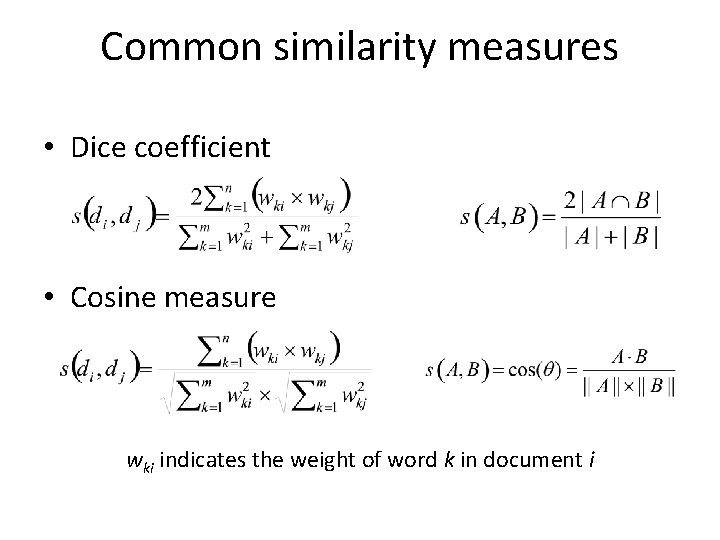

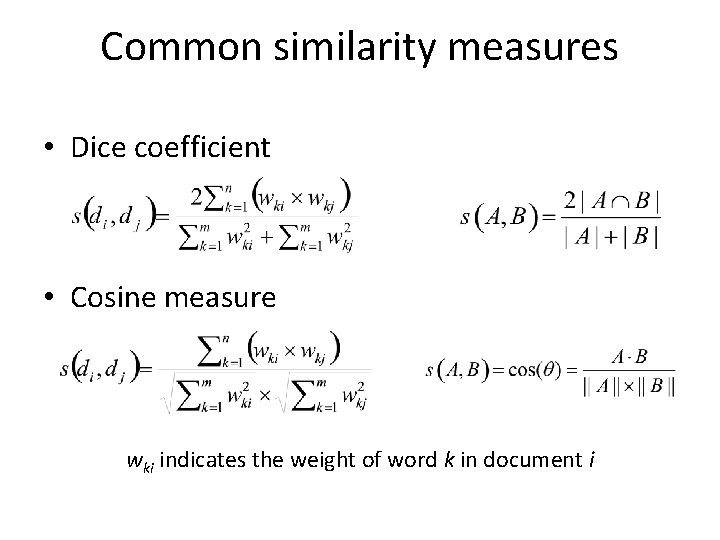

Common similarity measures
- Dice coefficient
- Cosine measure
- where $w_{ki}$ indicates the weight of word k in document i
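The slide's formulas did not survive extraction. One common way to write both measures with the term weights $w_{ki}$ is shown below; treat the exact form of the weighted Dice coefficient as an assumption rather than the slide's original.

```latex
\mathrm{Dice}(d_i, d_j) = \frac{2 \sum_{k} w_{ki}\, w_{kj}}{\sum_{k} w_{ki}^{2} + \sum_{k} w_{kj}^{2}},
\qquad
\cos(d_i, d_j) = \frac{\sum_{k} w_{ki}\, w_{kj}}{\sqrt{\sum_{k} w_{ki}^{2}}\;\sqrt{\sum_{k} w_{kj}^{2}}}
```

Both equal 1 for identical weight vectors and 0 for documents with no words in common.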

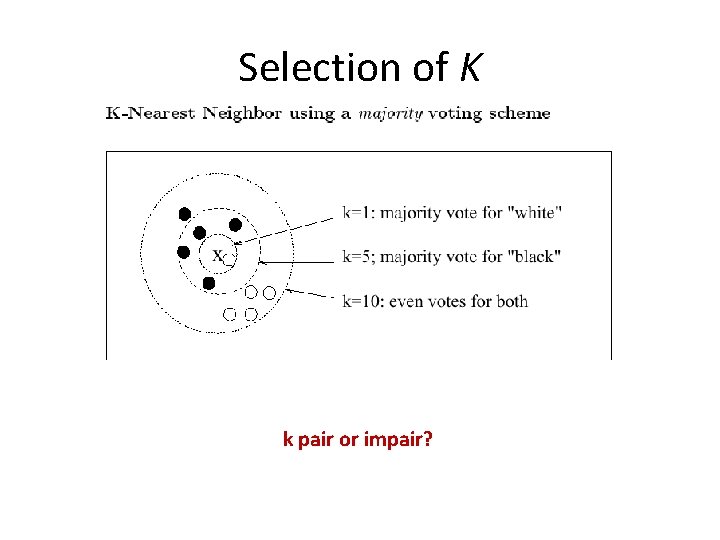

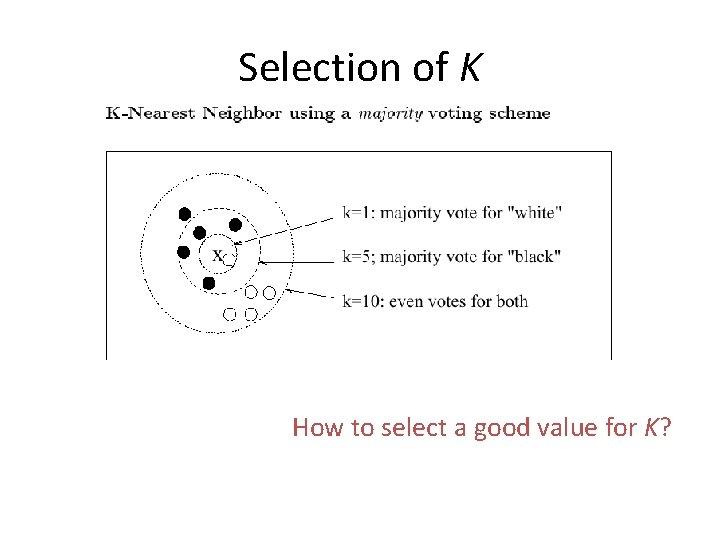

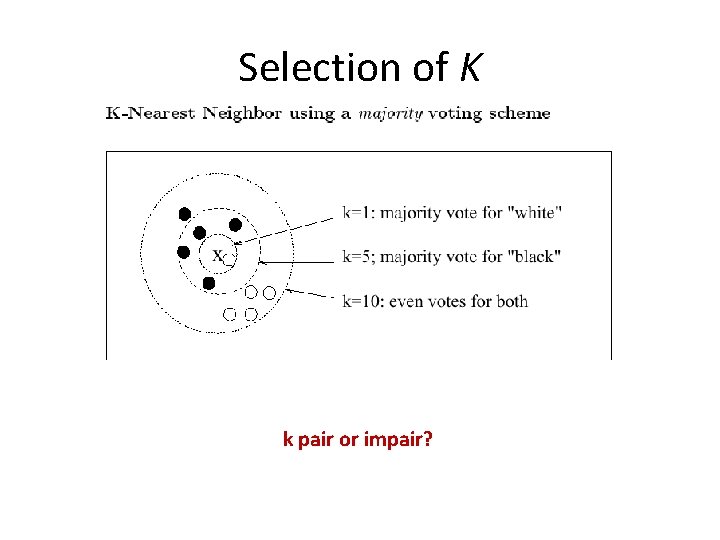

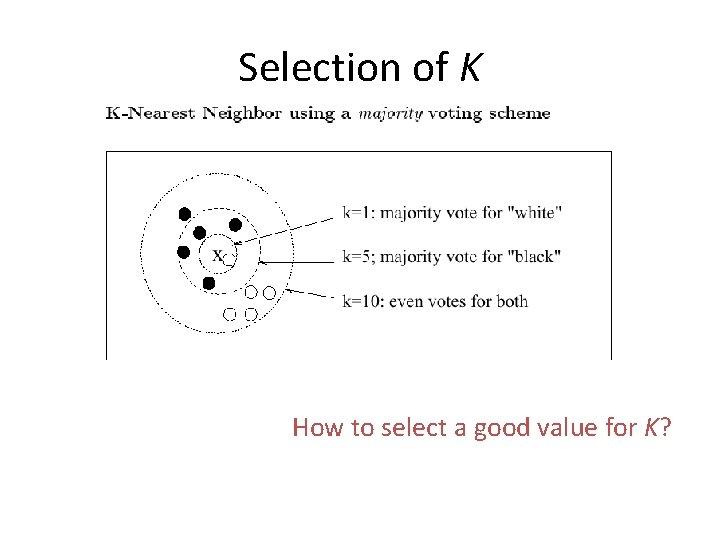

Selection of K
- Should k be even or odd?

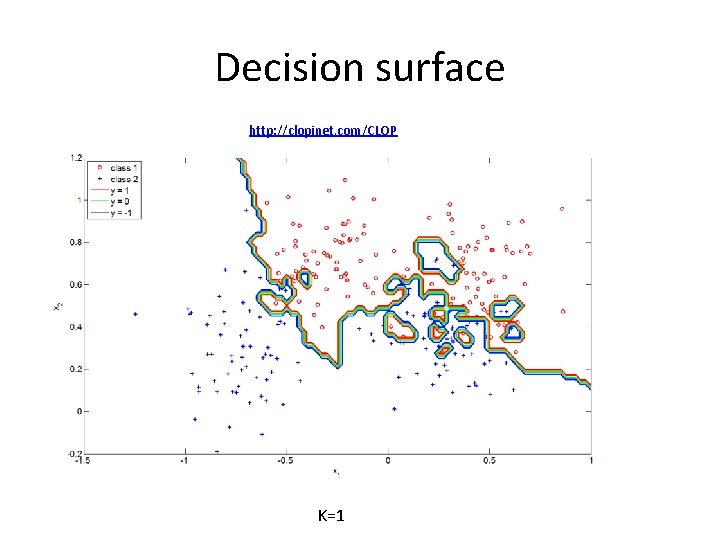

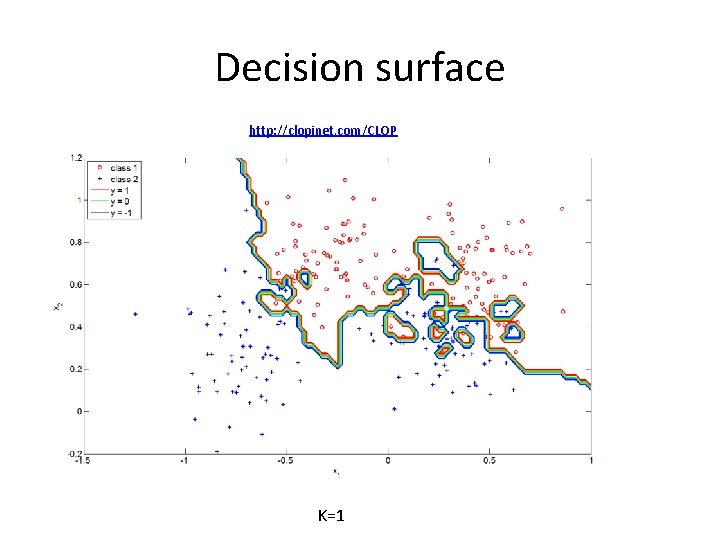

Decision surface, K=1 (http://clopinet.com/CLOP)

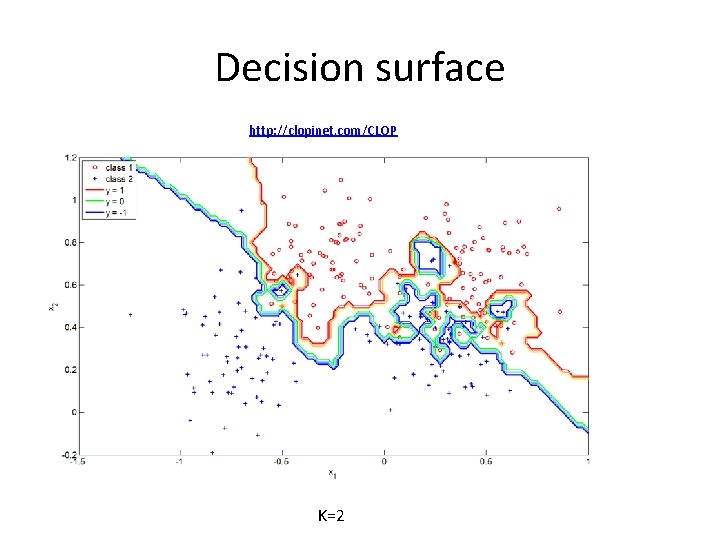

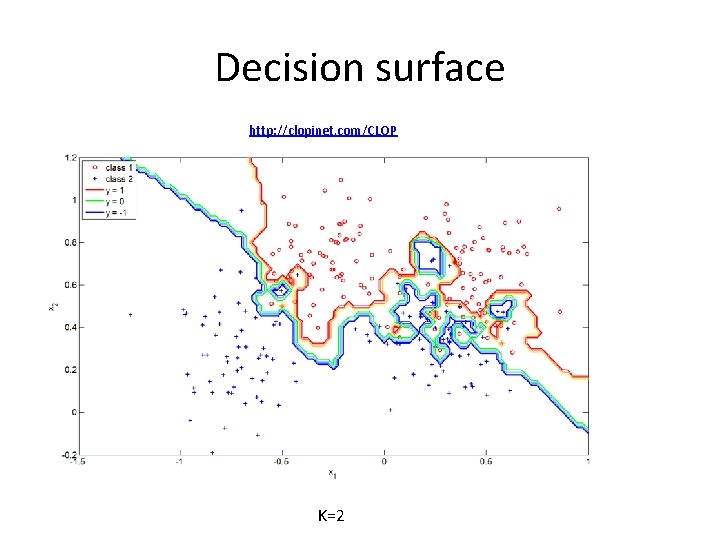

Decision surface, K=2 (http://clopinet.com/CLOP)

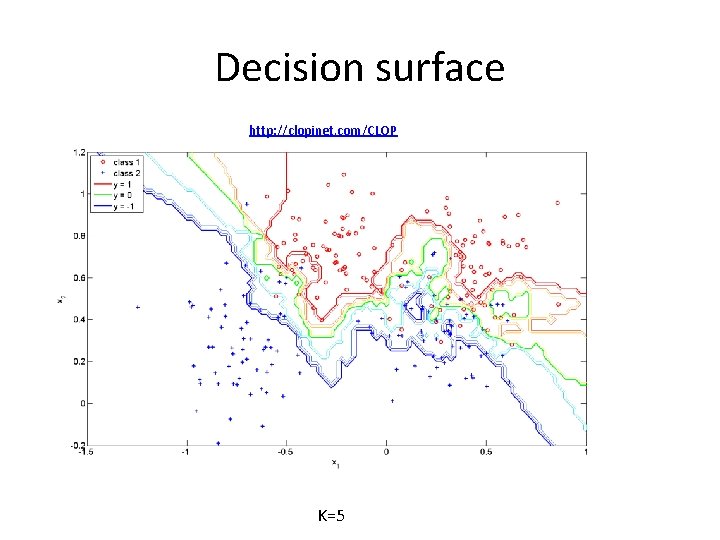

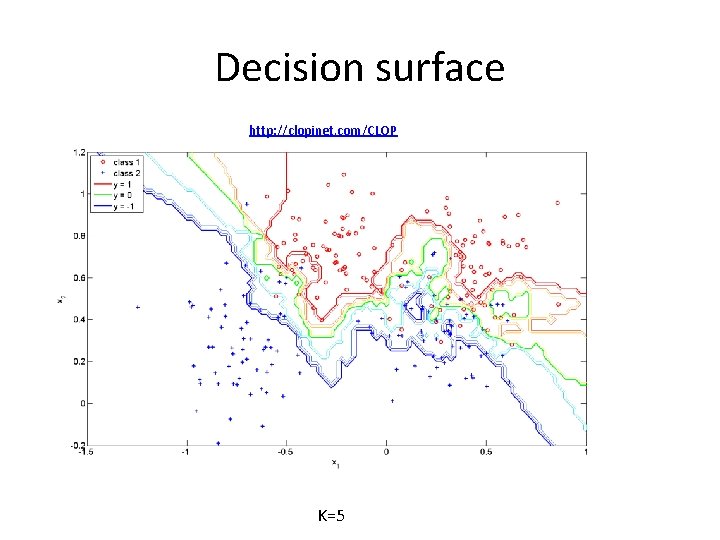

Decision surface, K=5 (http://clopinet.com/CLOP)

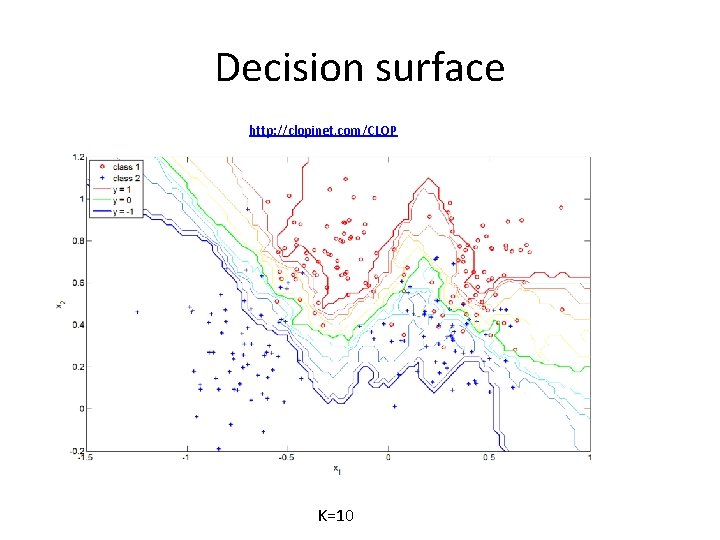

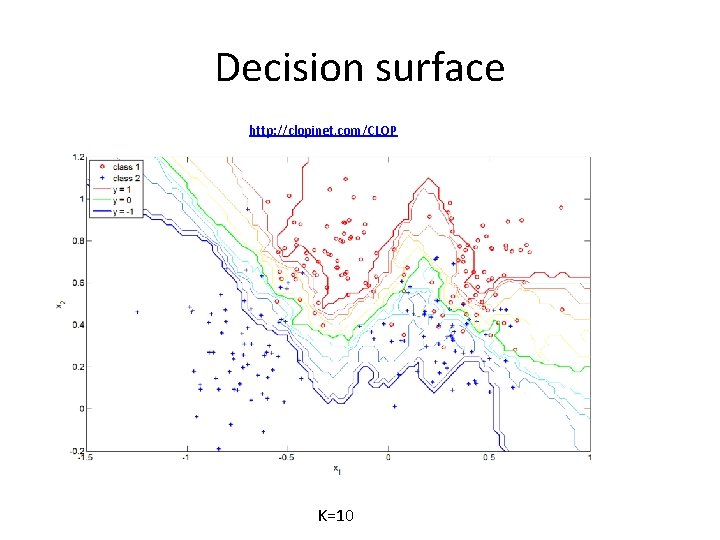

Decision surface, K=10 (http://clopinet.com/CLOP)

Selection of K
- How to select a good value for K?
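One common answer is to pick K by validation. The sketch below makes assumed choices (Euclidean distance on dense vectors instead of cosine, leave-one-out on the training set, odd candidate values so that two-class votes cannot tie) and returns the candidate with the best leave-one-out accuracy. All names are hypothetical.

```python
from collections import Counter

def euclidean_sq(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def knn_predict(x, data, k):
    """data: list of (vector, label); majority vote among the k nearest."""
    nearest = sorted(data, key=lambda ex: euclidean_sq(x, ex[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def select_k_loo(data, candidates=(1, 3, 5, 7)):
    """Pick the k with the highest leave-one-out accuracy on the training data."""
    best_k, best_acc = None, -1.0
    for k in candidates:
        hits = 0
        for i, (x, label) in enumerate(data):
            rest = data[:i] + data[i + 1:]  # hold out example i
            hits += knn_predict(x, rest, k) == label
        acc = hits / len(data)
        if acc > best_acc:  # strict '>' keeps the smallest k on ties
            best_k, best_acc = k, acc
    return best_k, best_acc
```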

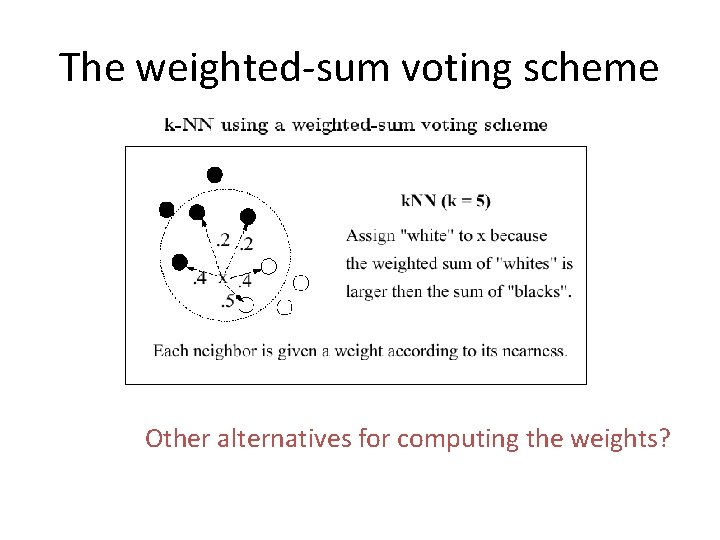

The weighted-sum voting scheme
- Other alternatives for computing the weights?

KNN - comments
- One of the best-performing text classifiers.
- It is robust in the sense that it does not require the categories to be linearly separable.
- The major drawback is the computational effort during classification.
- Another limitation is that its performance depends primarily on the choice of k and of the distance metric.

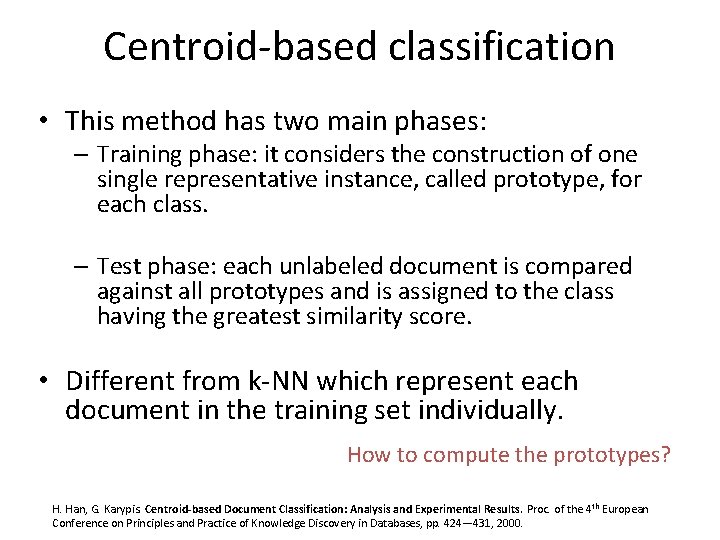

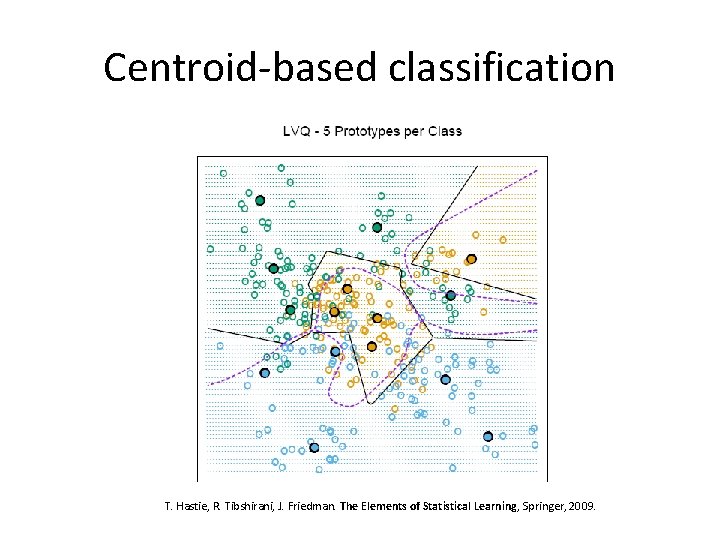

Centroid-based classification
- This method has two main phases:
  - Training phase: a single representative instance, called a prototype, is constructed for each class.
  - Test phase: each unlabeled document is compared against all prototypes and is assigned to the class with the greatest similarity score.
- This differs from k-NN, which represents each training document individually.
- How to compute the prototypes?

H. Han, G. Karypis. Centroid-based Document Classification: Analysis and Experimental Results. Proc. of the 4th European Conference on Principles and Practice of Knowledge Discovery in Databases, pp. 424-431, 2000.

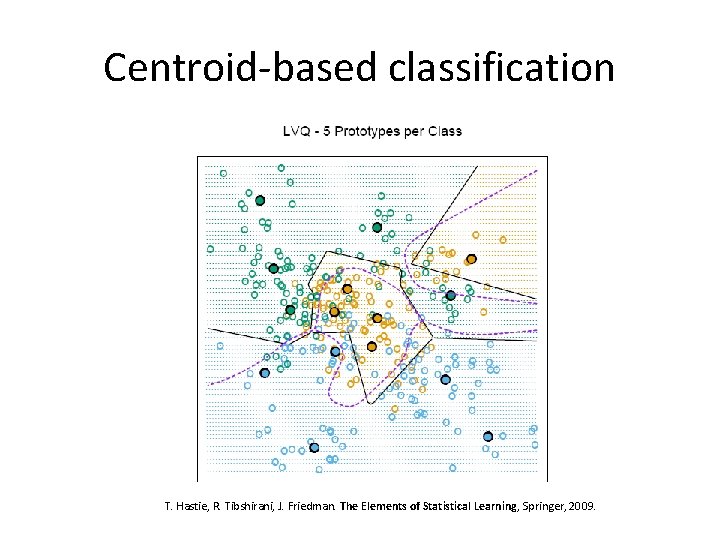

Centroid-based classification T. Hastie, R. Tibshirani, J. Friedman. The Elements of Statistical Learning, Springer, 2009.

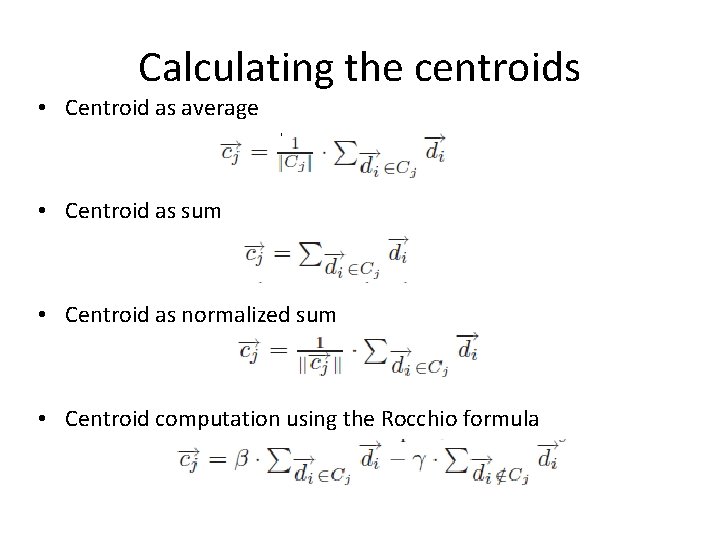

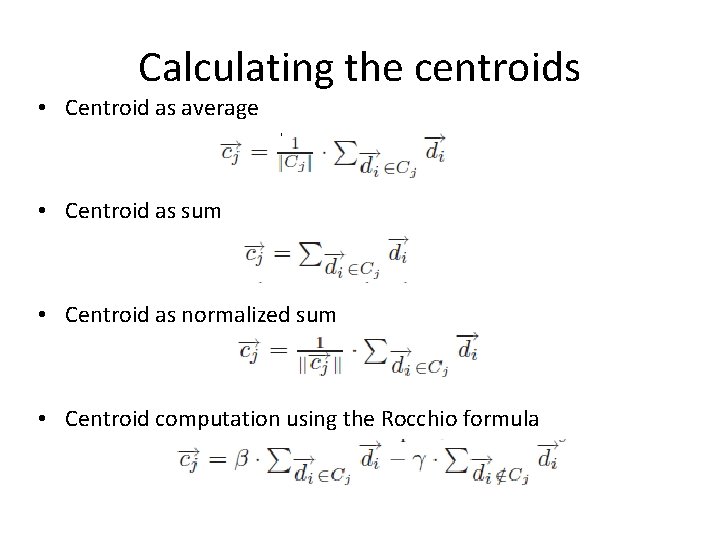

Calculating the centroids
- Centroid as average
- Centroid as sum
- Centroid as normalized sum
- Centroid computation using the Rocchio formula
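The four prototype definitions can be sketched as follows, assuming dense vectors and cosine similarity at test time. The Rocchio variant shown ($\beta$ times the class average minus $\gamma$ times the average of the other classes' documents) is one standard form; the defaults `beta=16`, `gamma=4` are illustrative assumptions, not values taken from the slides. Names are hypothetical.

```python
import math

def vec_sum(vectors, dim):
    total = [0.0] * dim
    for v in vectors:
        for i, x in enumerate(v):
            total[i] += x
    return total

def unit(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else v

def centroids(X, y, method="average", beta=16.0, gamma=4.0):
    """One prototype per class; X: list of equal-length vectors, y: labels."""
    dim = len(X[0])
    protos = {}
    for c in set(y):
        pos = [x for x, lab in zip(X, y) if lab == c]
        neg = [x for x, lab in zip(X, y) if lab != c]
        s = vec_sum(pos, dim)
        if method == "sum":
            protos[c] = s
        elif method == "average":
            protos[c] = [v / len(pos) for v in s]
        elif method == "normalized":
            protos[c] = unit(s)  # sum scaled to unit length
        elif method == "rocchio":
            # Reward the class average, penalize the average of the other classes
            n = vec_sum(neg, dim)
            protos[c] = [beta * p / len(pos) - (gamma * q / len(neg) if neg else 0.0)
                         for p, q in zip(s, n)]
        else:
            raise ValueError(method)
    return protos

def classify_centroid(x, protos):
    # Assign the class whose prototype has the highest cosine similarity to x
    def cos(a, b):
        return sum(p * q for p, q in zip(unit(a), unit(b)))
    return max(protos, key=lambda c: cos(x, protos[c]))
```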

Comments on centroid-based classification
- Computationally simple and fast model
  - Short training and testing times
- Good results in text classification
- Amenable to changes in the training set
- Can handle imbalanced document sets
- Disadvantages:
  - Inadequate for non-linear classification problems
  - Problem of inductive bias or model misfit
    - Classifiers are tuned to the contingent characteristics of the training data rather than the constitutive characteristics of the categories

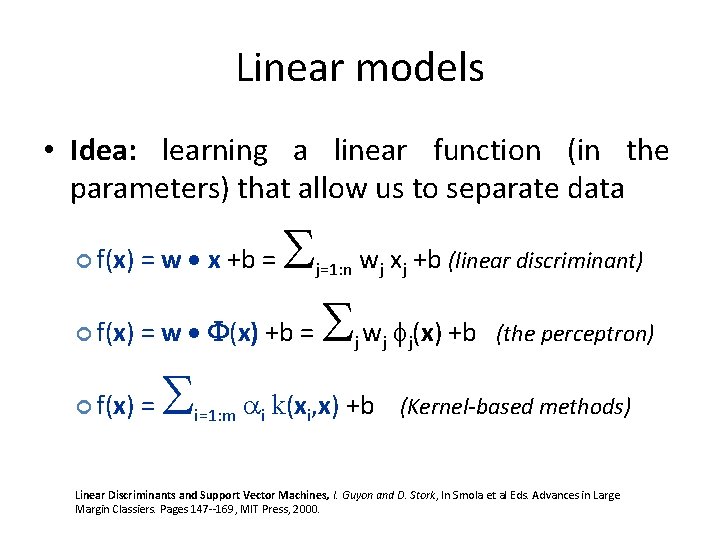

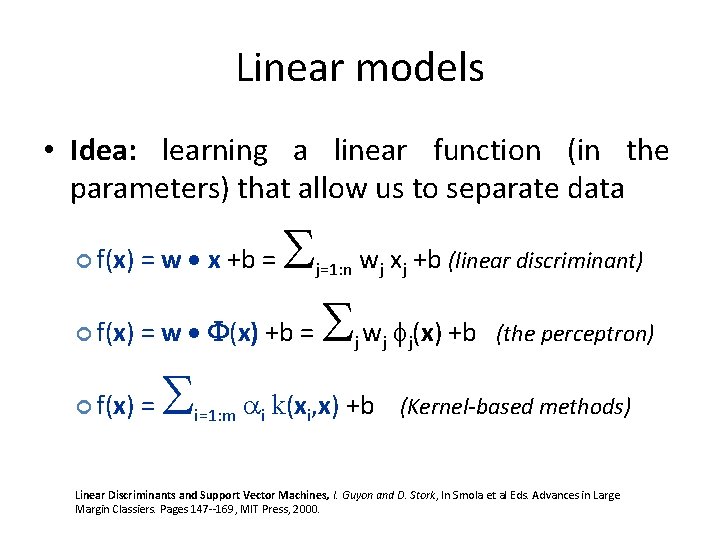

Linear models
- Idea: learning a function that is linear in the parameters and allows us to separate the data:
  - $f(\mathbf{x}) = \mathbf{w} \cdot \mathbf{x} + b = \sum_{j=1}^{n} w_j x_j + b$ (linear discriminant)
  - $f(\mathbf{x}) = \mathbf{w} \cdot \Phi(\mathbf{x}) + b = \sum_{j} w_j \phi_j(\mathbf{x}) + b$ (the perceptron)
  - $f(\mathbf{x}) = \sum_{i=1}^{m} \alpha_i\, k(\mathbf{x}_i, \mathbf{x}) + b$ (kernel-based methods)

I. Guyon, D. Stork. Linear Discriminants and Support Vector Machines. In A. Smola et al. (Eds.), Advances in Large Margin Classifiers, pp. 147-169, MIT Press, 2000.
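The slide gives only the model forms. A common way to learn $\mathbf{w}$ and $b$ for the first form (that is, with $\Phi$ taken as the identity) is the classic perceptron update rule, sketched here under the assumption of labels in $\{-1, +1\}$; the names are hypothetical. On linearly separable data the loop stops once an epoch makes no mistakes.

```python
def train_perceptron(X, y, epochs=20, lr=1.0):
    """X: list of feature vectors; y: labels in {-1, +1}. Learns f(x) = w.x + b."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in zip(X, y):
            f = sum(wj * xj for wj, xj in zip(w, x)) + b
            if target * f <= 0:  # misclassified, or exactly on the boundary
                # Move the hyperplane toward the correct side for this example
                w = [wj + lr * target * xj for wj, xj in zip(w, x)]
                b += lr * target
                errors += 1
        if errors == 0:  # converged: all training examples correctly separated
            break
    return w, b

def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else -1
```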

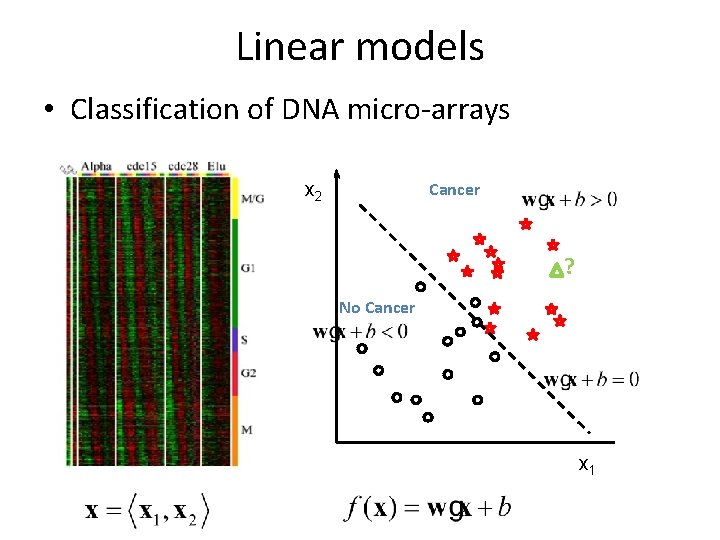

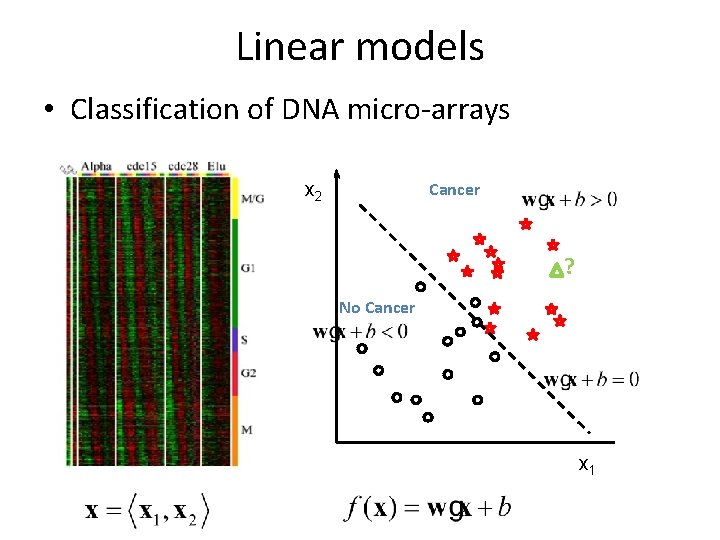

Linear models
- Example: classification of DNA microarrays (figure: examples in the (x1, x2) plane labeled Cancer and No Cancer, with unlabeled query points marked "?")

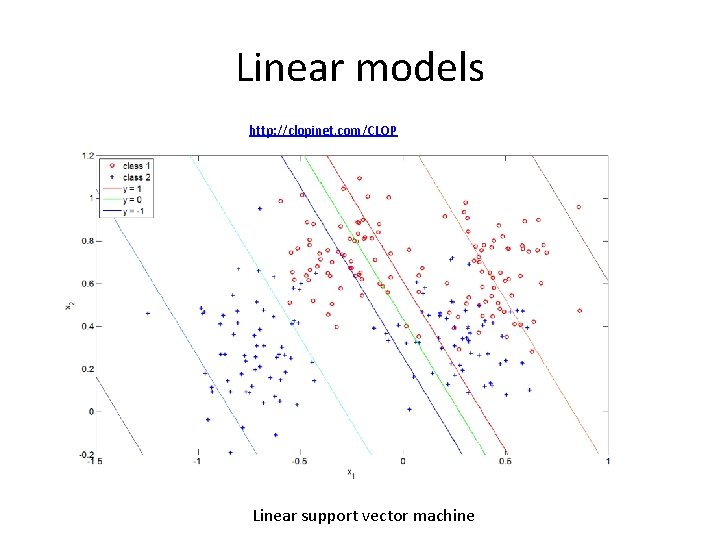

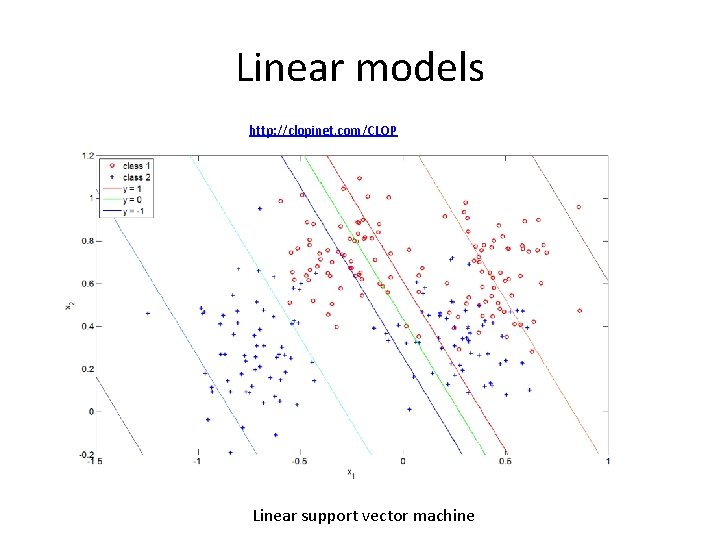

Linear models: linear support vector machine (http://clopinet.com/CLOP)

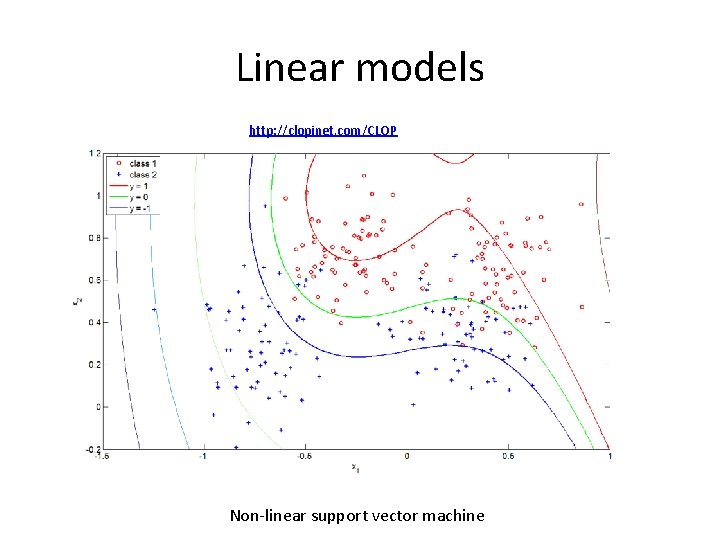

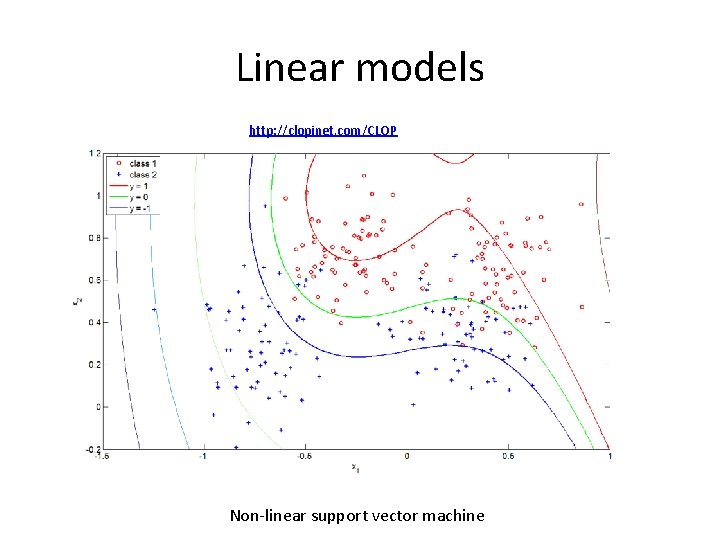

Linear models: non-linear support vector machine (http://clopinet.com/CLOP)

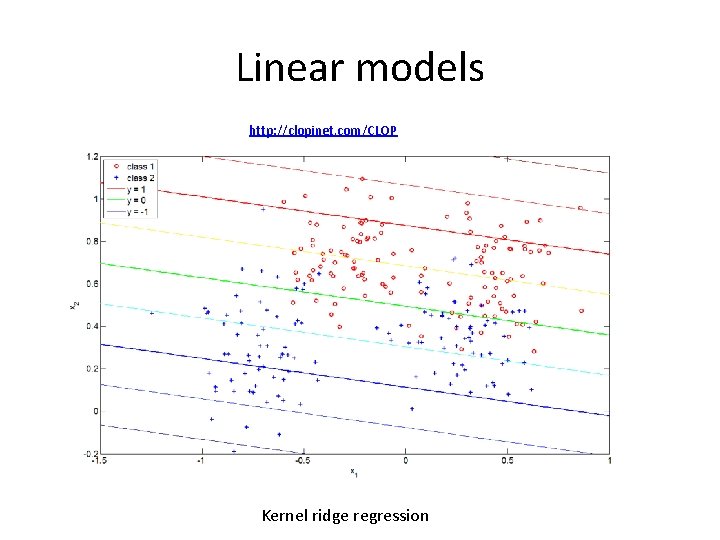

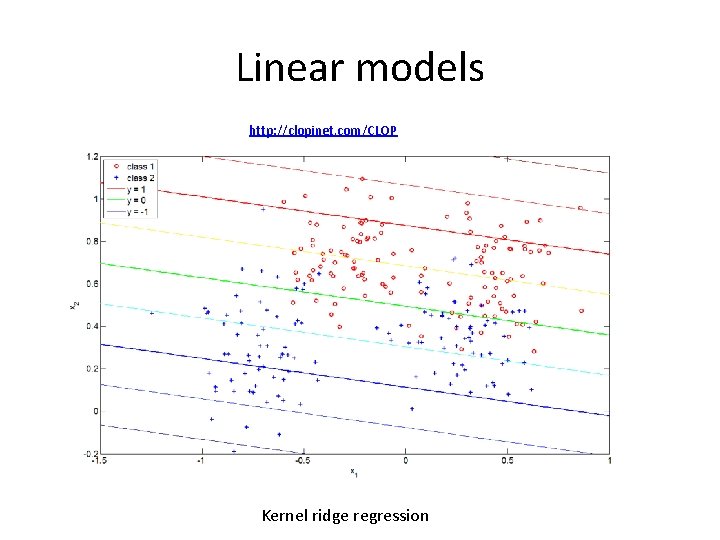

Linear models: kernel ridge regression (http://clopinet.com/CLOP)

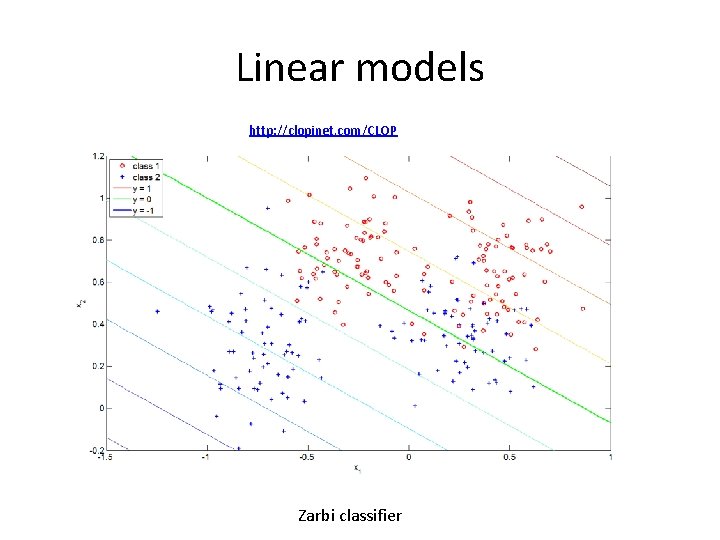

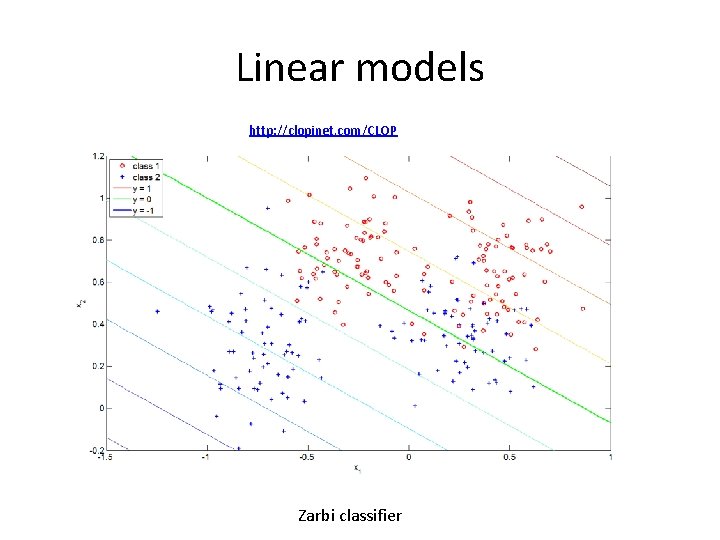

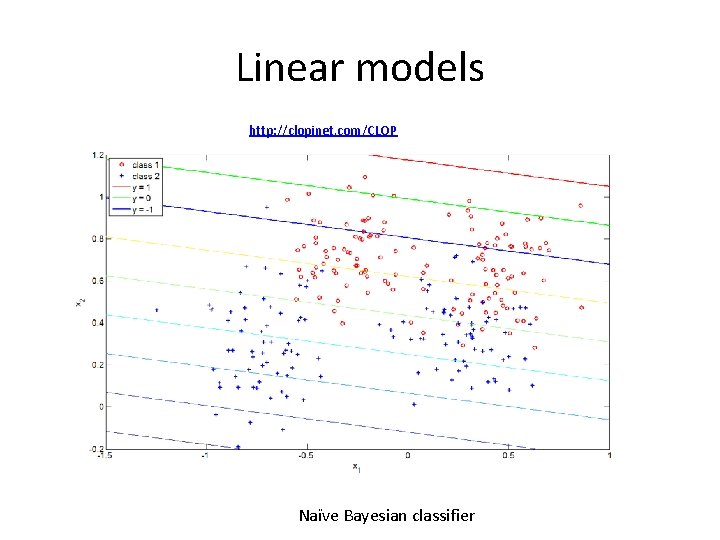

Linear models: Zarbi classifier (http://clopinet.com/CLOP)

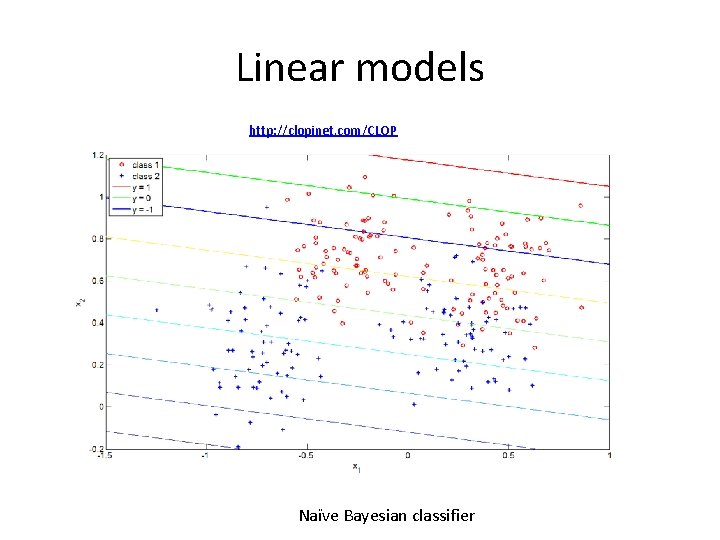

Linear models: naïve Bayesian classifier (http://clopinet.com/CLOP)

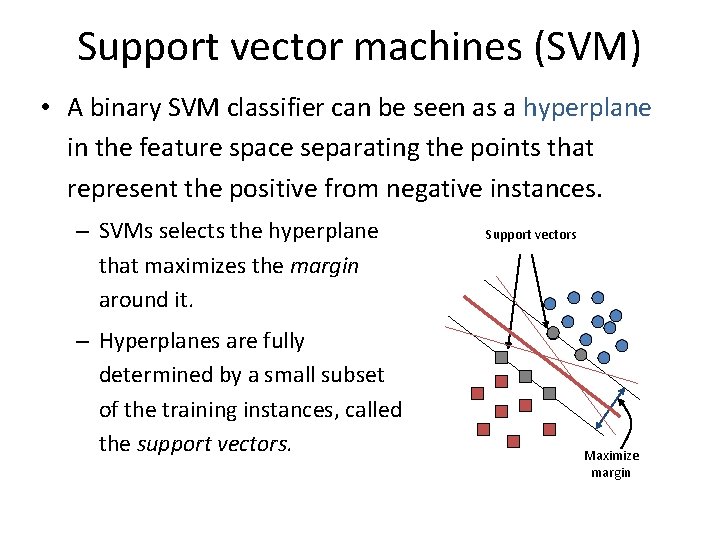

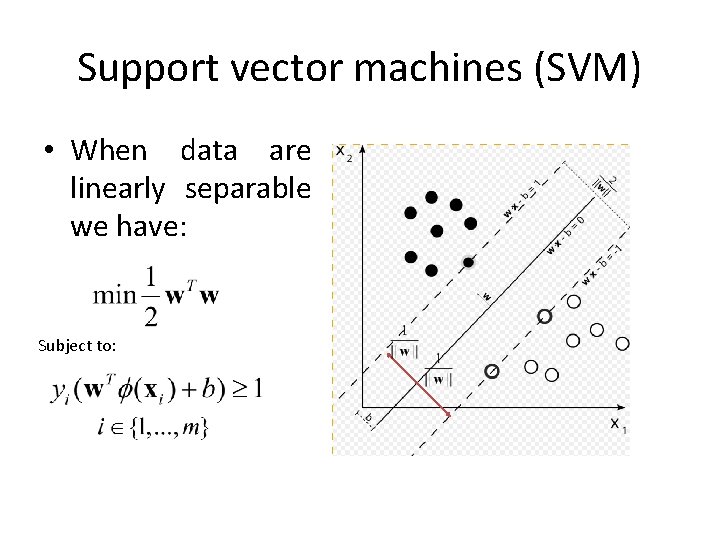

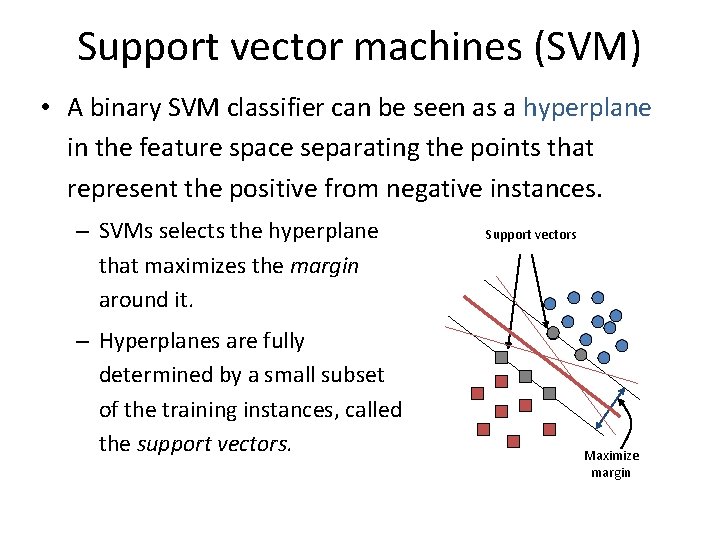

Support vector machines (SVM)
- A binary SVM classifier can be seen as a hyperplane in the feature space that separates the points representing positive instances from those representing negative instances.
  - SVMs select the hyperplane that maximizes the margin around it.
  - The hyperplane is fully determined by a small subset of the training instances, called the support vectors.

(figure labels: support vectors; maximize margin)

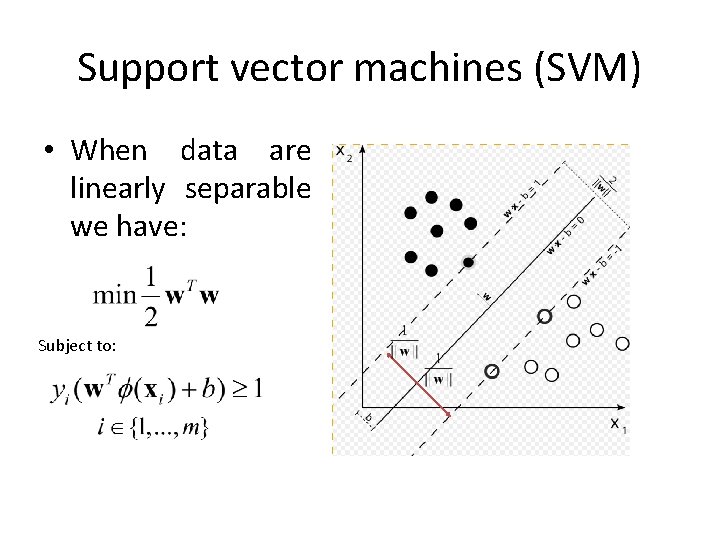

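Support vector machines (SVM)
- When data are linearly separable, we have:

$$\min_{\mathbf{w},\, b}\ \frac{1}{2}\|\mathbf{w}\|^2$$

subject to:

$$y_i\,(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1, \qquad i = 1, \dots, m, \quad y_i \in \{-1, +1\}$$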
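Solving the quadratic program above exactly needs an optimization library. As a self-contained illustration, the sketch below instead minimizes the soft-margin (hinge-loss) version with a Pegasos-style stochastic subgradient method; this is a swapped-in technique, not the formulation on the slide. The bias is handled by appending a constant feature, which also regularizes it (an assumption that differs slightly from the formulation above). Names and the parameter `lam` are hypothetical.

```python
def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Soft-margin linear SVM via stochastic subgradient descent (Pegasos-style).
    X: list of vectors; y: labels in {-1, +1}. Returns (w, b)."""
    # Append a constant 1.0 so the last weight acts as the bias b (assumption)
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    t = 0
    for _ in range(epochs):
        for x, target in zip(Xb, y):
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = target * sum(wj * xj for wj, xj in zip(w, x))
            # Shrink w: subgradient of the (lam / 2) * ||w||^2 term ...
            w = [(1 - eta * lam) * wj for wj in w]
            # ... and, if the hinge loss is active (margin < 1), move toward y * x
            if margin < 1:
                w = [wj + eta * target * xj for wj, xj in zip(w, x)]
    return w[:-1], w[-1]
```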

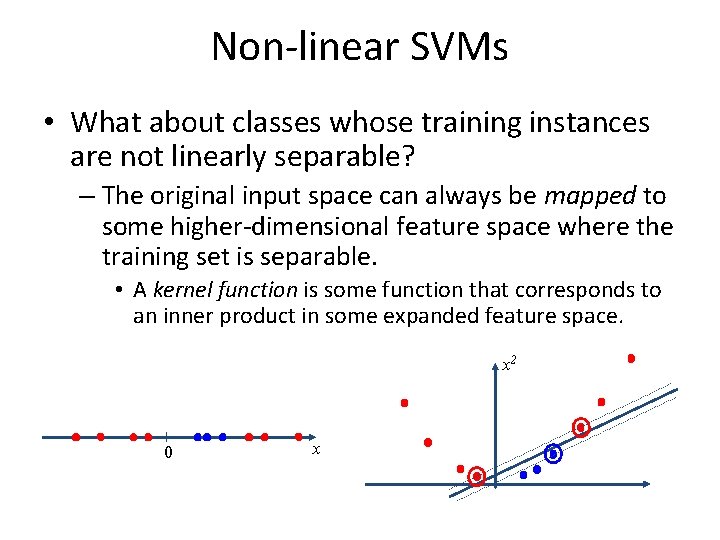

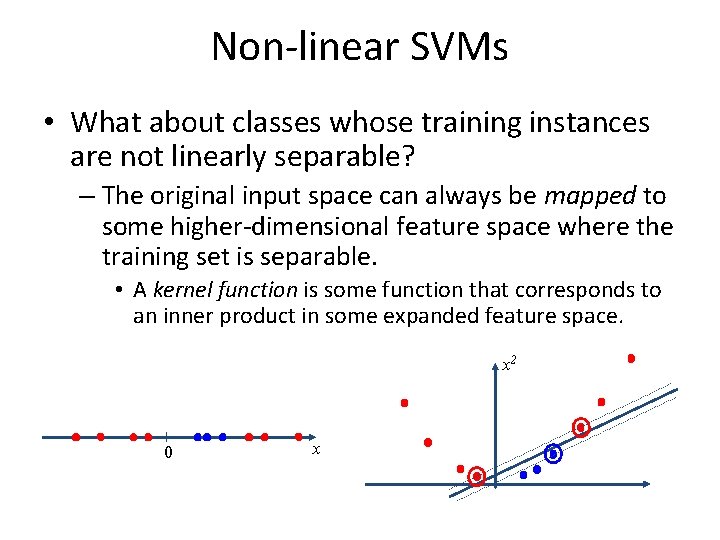

Non-linear SVMs
- What about classes whose training instances are not linearly separable?
  - The original input space can always be mapped to some higher-dimensional feature space where the training set is separable.
- A kernel function is a function that corresponds to an inner product in some expanded feature space.

(figure: one-dimensional points on the x axis around 0, mapped to the (x, x²) plane)
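A small numeric check of the kernel idea, assuming 2-D inputs: the degree-2 polynomial kernel $k(\mathbf{x}, \mathbf{z}) = (\mathbf{x} \cdot \mathbf{z})^2$ equals the inner product of the explicit feature map $\phi(\mathbf{x}) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$, so an SVM can work in the 3-D space while only ever computing the kernel. Function names are hypothetical.

```python
import math

def phi(x):
    """Explicit feature map for 2-D input: (x1^2, sqrt(2) * x1 * x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: k(x, z) = (x . z)^2."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

x, z = (1.0, 2.0), (3.0, -1.0)
# Same value (up to rounding), without constructing phi(x) or phi(z) on the left
print(poly_kernel(x, z), dot(phi(x), phi(z)))
```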

SVM – discussion
- The support vector machine (SVM) algorithm is very fast and effective for text classification problems.
  - Flexibility in choosing a similarity function
    - By means of a kernel function
  - Sparseness of the solution when dealing with large data sets
    - Only support vectors are used to specify the separating hyperplane
  - Ability to handle large feature spaces
    - Complexity does not depend on the dimensionality of the feature space

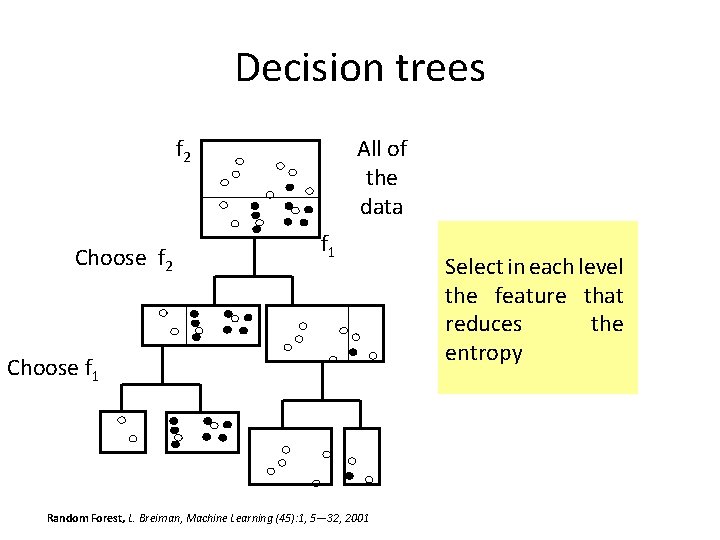

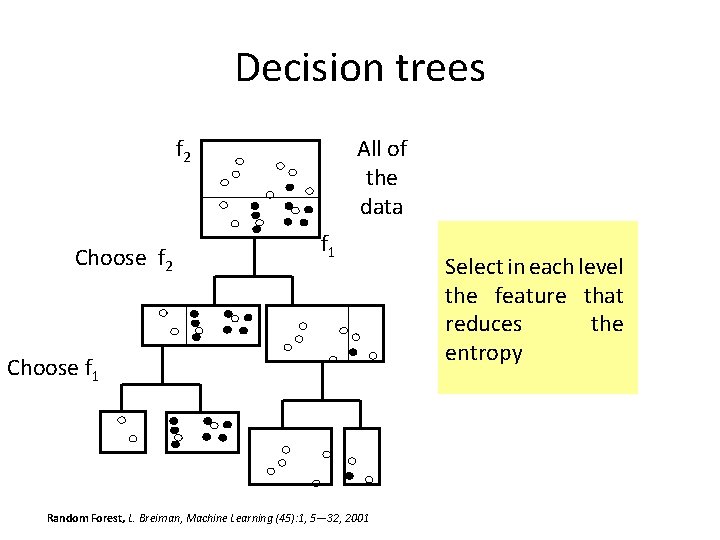

Decision trees
- At each level, select the feature that most reduces the entropy (figure: starting from all of the data, the tree chooses feature f1, then feature f2)

L. Breiman. Random Forests. Machine Learning 45(1):5-32, 2001.

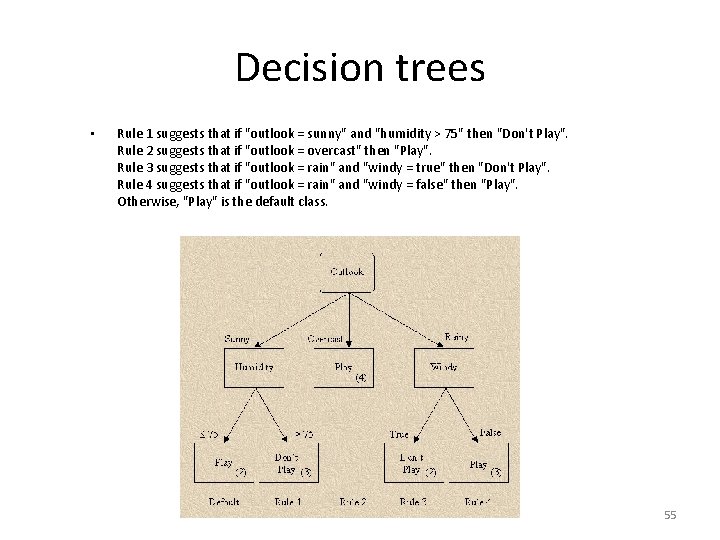

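Decision trees

| Outlook | Temperature | Humidity | Windy | Play (positive) / Don't Play (negative) |
|---|---|---|---|---|
| sunny | 85 | 85 | false | Don't Play |
| sunny | 80 | 90 | true | Don't Play |
| overcast | 83 | 78 | false | Play |
| rain | 70 | 96 | false | Play |
| rain | 68 | 80 | false | Play |
| rain | 65 | 70 | true | Don't Play |
| overcast | 64 | 65 | true | Play |
| sunny | 72 | 95 | false | Don't Play |
| sunny | 69 | 70 | false | Play |
| rain | 75 | 80 | false | Play |
| sunny | 75 | 70 | true | Play |
| overcast | 72 | 90 | true | Play |
| overcast | 81 | 75 | false | Play |
| rain | 71 | 80 | true | Don't Play |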

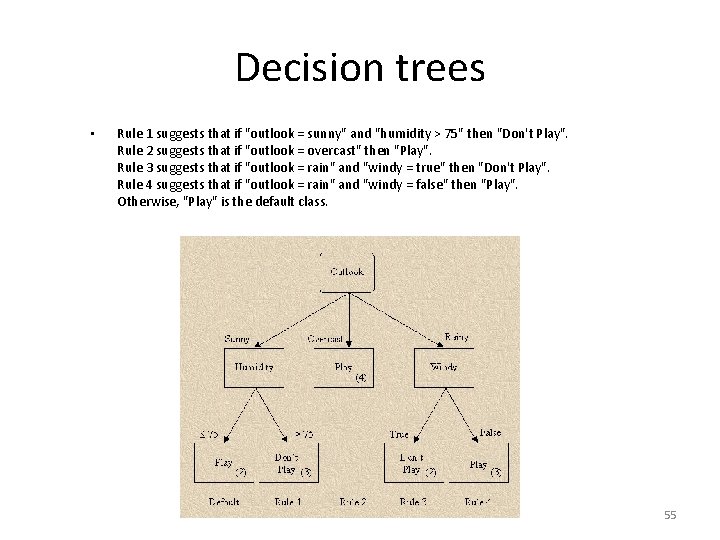

Decision trees
- Rule 1: if "outlook = sunny" and "humidity > 75", then "Don't Play".
- Rule 2: if "outlook = overcast", then "Play".
- Rule 3: if "outlook = rain" and "windy = true", then "Don't Play".
- Rule 4: if "outlook = rain" and "windy = false", then "Play".
- Otherwise, "Play" is the default class.
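The entropy criterion can be checked on the weather table. The sketch below, an illustration under stated assumptions, reads the three merged "rain" rows of the extracted slide as (70, 96, false, Play), (68, 80, false, Play) and (65, 70, true, Don't Play), which is consistent with the rules. It computes the information gain of a categorical attribute and verifies that the four rules reproduce every label. On this data, splitting on outlook gives a gain of about 0.247 bits, versus about 0.048 for windy. Names are hypothetical.

```python
import math
from collections import Counter

# Weather data from the table above: (outlook, temperature, humidity, windy, class)
DATA = [
    ("sunny", 85, 85, False, "Don't Play"), ("sunny", 80, 90, True, "Don't Play"),
    ("overcast", 83, 78, False, "Play"),    ("rain", 70, 96, False, "Play"),
    ("rain", 68, 80, False, "Play"),        ("rain", 65, 70, True, "Don't Play"),
    ("overcast", 64, 65, True, "Play"),     ("sunny", 72, 95, False, "Don't Play"),
    ("sunny", 69, 70, False, "Play"),       ("rain", 75, 80, False, "Play"),
    ("sunny", 75, 70, True, "Play"),        ("overcast", 72, 90, True, "Play"),
    ("overcast", 81, 75, False, "Play"),    ("rain", 71, 80, True, "Don't Play"),
]

def entropy(labels):
    n = len(labels)
    return -sum(k / n * math.log2(k / n) for k in Counter(labels).values())

def info_gain(rows, col):
    """Entropy reduction obtained by splitting on the categorical column `col`."""
    remainder = 0.0
    for value in set(r[col] for r in rows):
        subset = [r[-1] for r in rows if r[col] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy([r[-1] for r in rows]) - remainder

def rules(outlook, humidity, windy):
    """The four rules listed above, with "Play" as the default class."""
    if outlook == "sunny" and humidity > 75:
        return "Don't Play"
    if outlook == "overcast":
        return "Play"
    if outlook == "rain":
        return "Don't Play" if windy else "Play"
    return "Play"

print(info_gain(DATA, 0), info_gain(DATA, 3))  # outlook vs. windy
```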

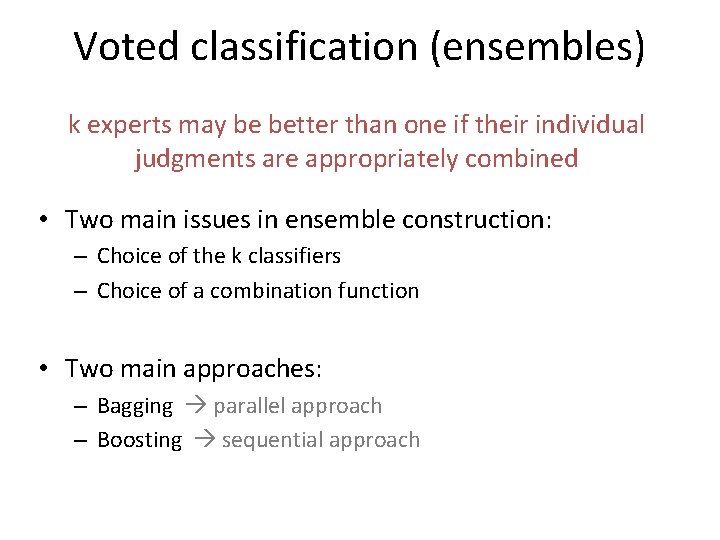

Voted classification (ensembles)
- k experts may be better than one if their individual judgments are appropriately combined
- Two main issues in ensemble construction:
  - Choice of the k classifiers
  - Choice of a combination function
- Two main approaches:
  - Bagging: parallel approach
  - Boosting: sequential approach

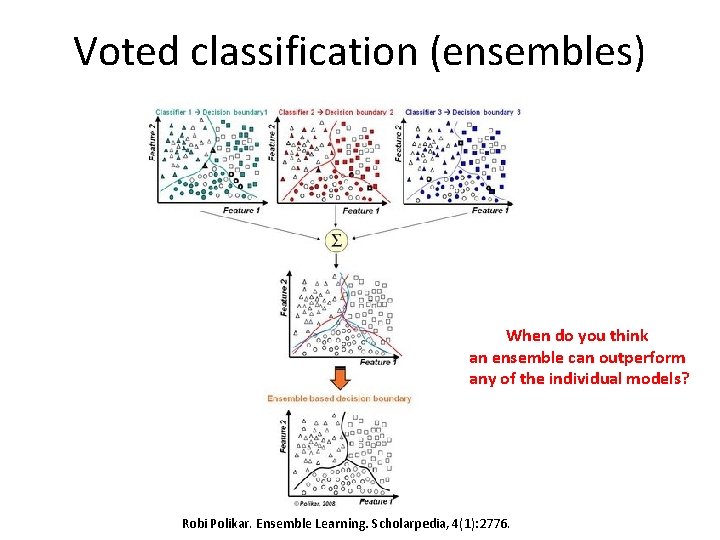

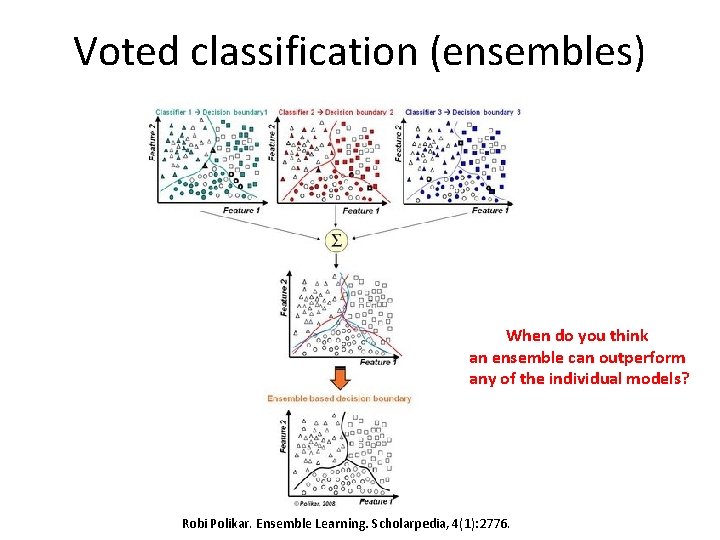

Voted classification (ensembles)
- When do you think an ensemble can outperform any of the individual models?

Robi Polikar. Ensemble Learning. Scholarpedia, 4(1): 2776.

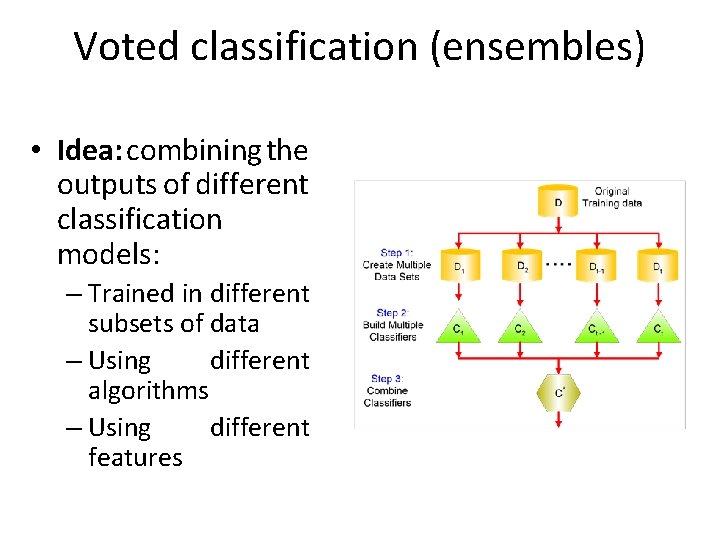

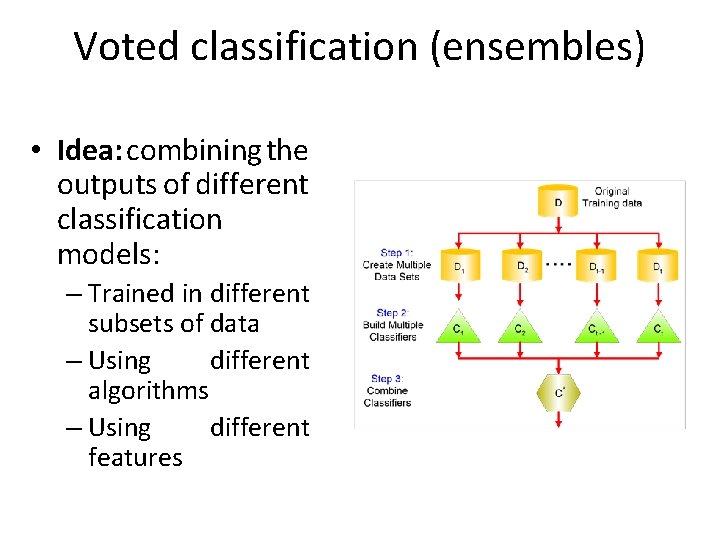

Voted classification (ensembles)
- Idea: combining the outputs of different classification models:
  - Trained on different subsets of the data
  - Using different algorithms
  - Using different features

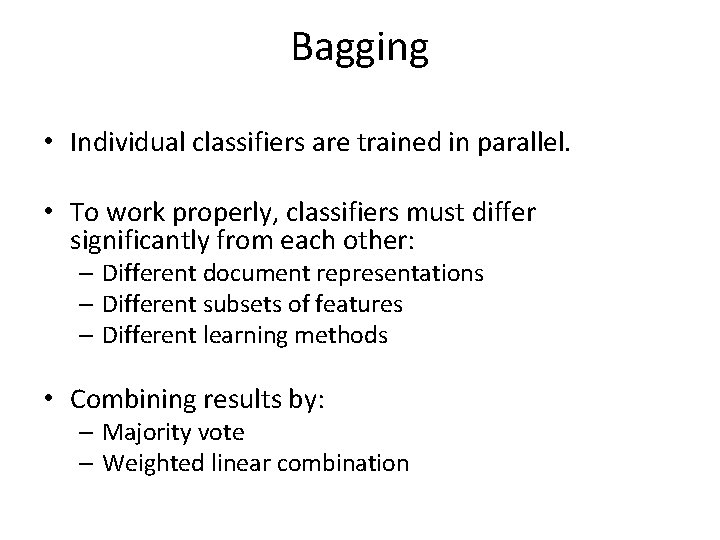

Bagging
- Individual classifiers are trained in parallel.
- To work properly, the classifiers must differ significantly from each other:
  - Different document representations
  - Different subsets of features
  - Different learning methods
- Results are combined by:
  - Majority vote
  - Weighted linear combination
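A sketch of the classic bootstrap variant of bagging (Breiman's bagging), which is one way to obtain the differing classifiers the slide calls for: each model is trained on a different resample of the training set, and the outputs are combined by majority vote. The base learner (a single threshold on a 1-D feature) and all names are hypothetical choices for illustration.

```python
import random
from collections import Counter

def train_stump(X, y):
    """Hypothetical base learner: best single threshold on a 1-D feature."""
    best = None
    for thr in sorted(set(X)):
        for sign in (1, -1):
            preds = [sign if x >= thr else -sign for x in X]
            err = sum(p != t for p, t in zip(preds, y))
            if best is None or err < best[0]:
                best = (err, thr, sign)
    _, thr, sign = best
    return lambda x: sign if x >= thr else -sign

def bagging(X, y, n_models=15, seed=0):
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n draws with replacement, so each model sees different data
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(train_stump([X[i] for i in idx], [y[i] for i in idx]))

    def predict(x):
        # Combine the individual predictions by majority vote
        return Counter(m(x) for m in models).most_common(1)[0][0]
    return predict
```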

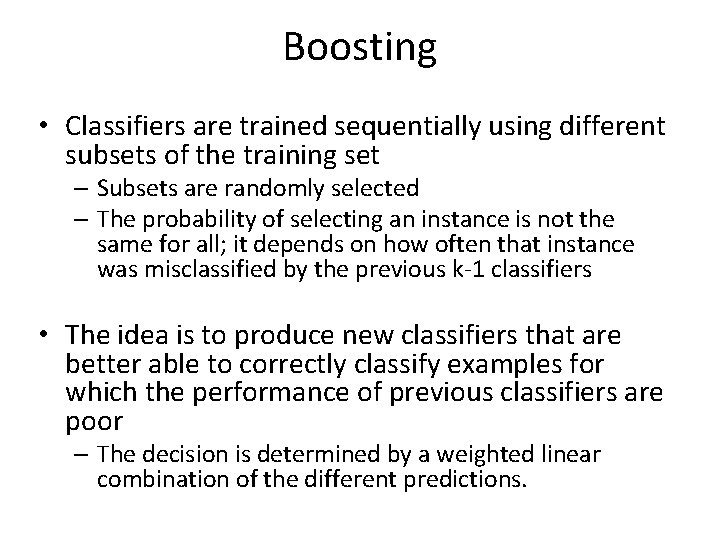

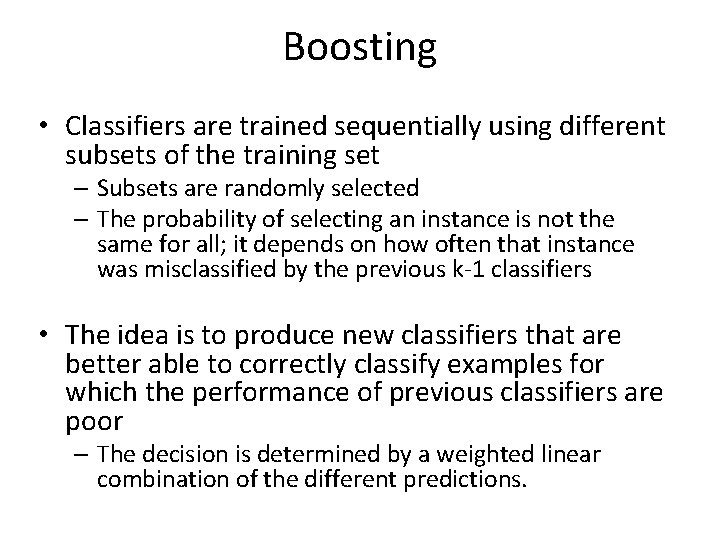

Boosting
- Classifiers are trained sequentially using different subsets of the training set
  - Subsets are randomly selected
  - The probability of selecting an instance is not the same for all instances; it depends on how often that instance was misclassified by the previous k-1 classifiers
- The idea is to produce new classifiers that are better able to correctly classify the examples on which the previous classifiers perform poorly
  - The decision is determined by a weighted linear combination of the individual predictions.

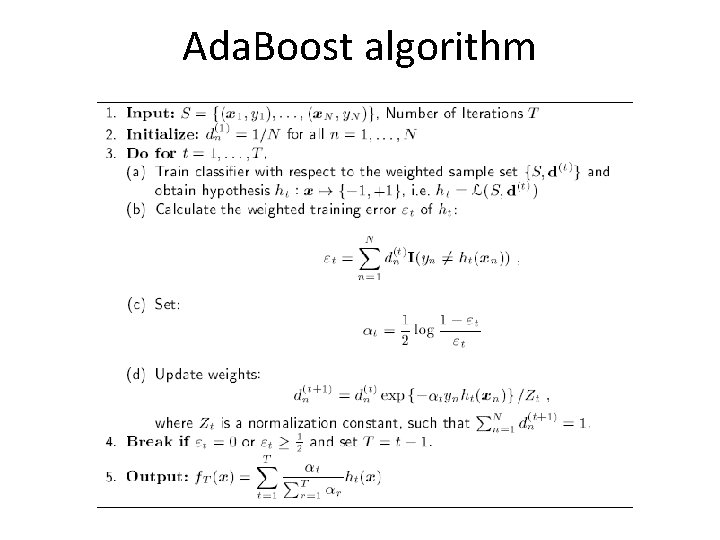

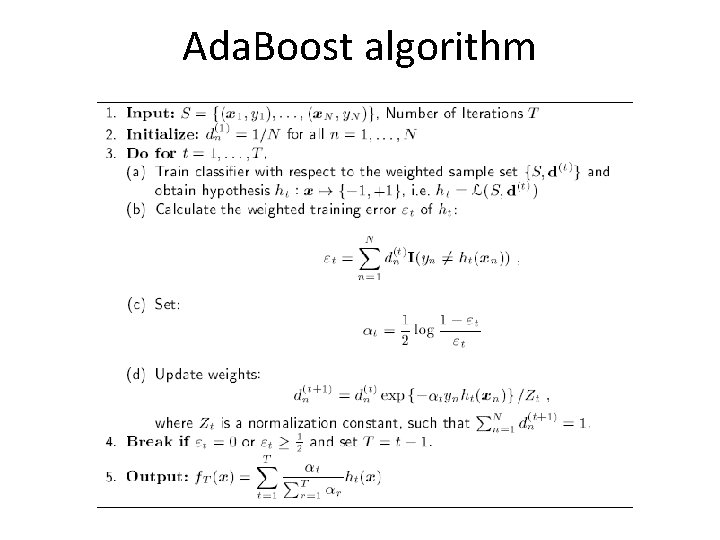

AdaBoost algorithm
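The AdaBoost pseudocode was an image on the slide. The sketch below is standard discrete AdaBoost with decision stumps on a 1-D feature and labels in $\{-1, +1\}$; unlike the resampling description above, it reweights the training instances directly, a common alternative to resampling. Names are hypothetical.

```python
import math

def weighted_stump(X, y, w):
    """Threshold on a 1-D feature minimizing the weighted error."""
    best = None
    for thr in sorted(set(X)):
        for sign in (1, -1):
            err = sum(wi for x, t, wi in zip(X, y, w) if (sign if x >= thr else -sign) != t)
            if best is None or err < best[0]:
                best = (err, thr, sign)
    return best

def adaboost(X, y, rounds=10):
    """Discrete AdaBoost; y in {-1, +1}. Returns a list of (alpha, thr, sign)."""
    n = len(X)
    w = [1.0 / n] * n  # start with uniform instance weights
    ensemble = []
    for _ in range(rounds):
        err, thr, sign = weighted_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)     # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)   # more accurate stumps get larger votes
        ensemble.append((alpha, thr, sign))
        # Increase the weight of misclassified instances, decrease the others
        w = [wi * math.exp(-alpha * t * (sign if x >= thr else -sign))
             for x, t, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, x):
    # Final decision: sign of the weighted linear combination of stump outputs
    score = sum(a * (s if x >= thr else -s) for a, thr, s in ensemble)
    return 1 if score >= 0 else -1
```

On the toy labels `+ + - - - + + +` over x = 1..8, no single stump is correct everywhere, but three boosting rounds combine three stumps into a classifier that fits all eight points.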

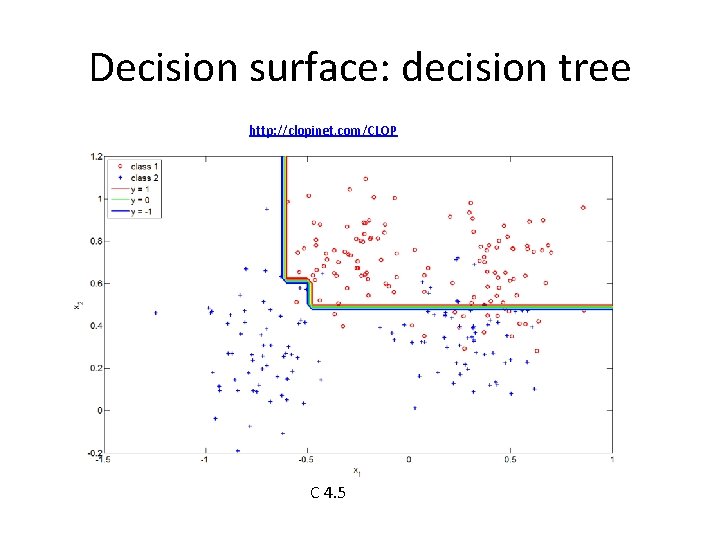

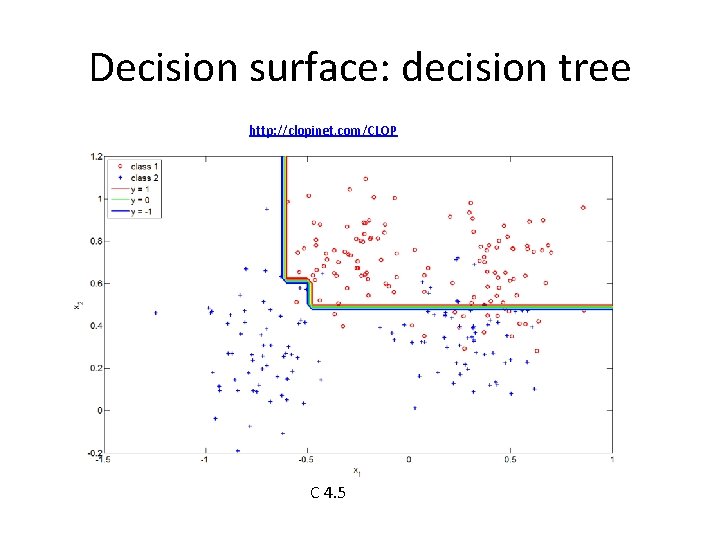

Decision surface: decision tree, C4.5 (http://clopinet.com/CLOP)

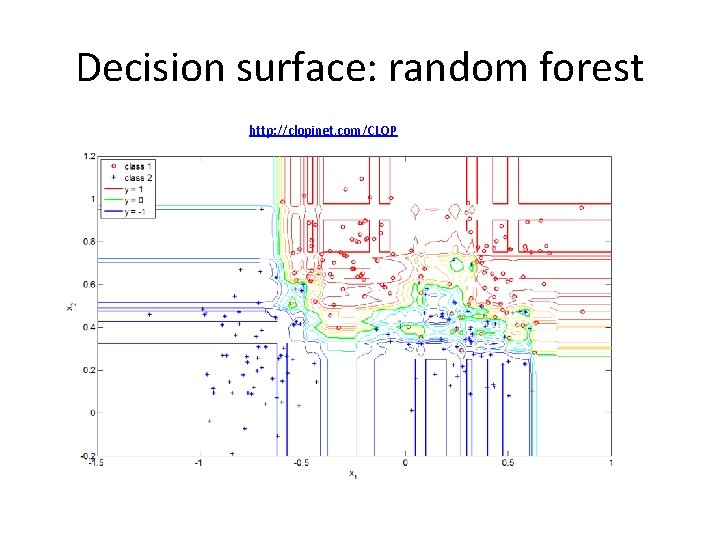

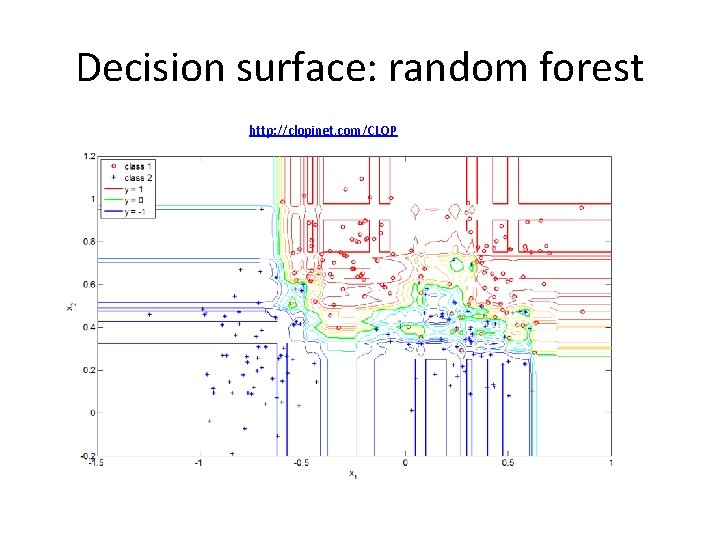

Decision surface: random forest (http://clopinet.com/CLOP)

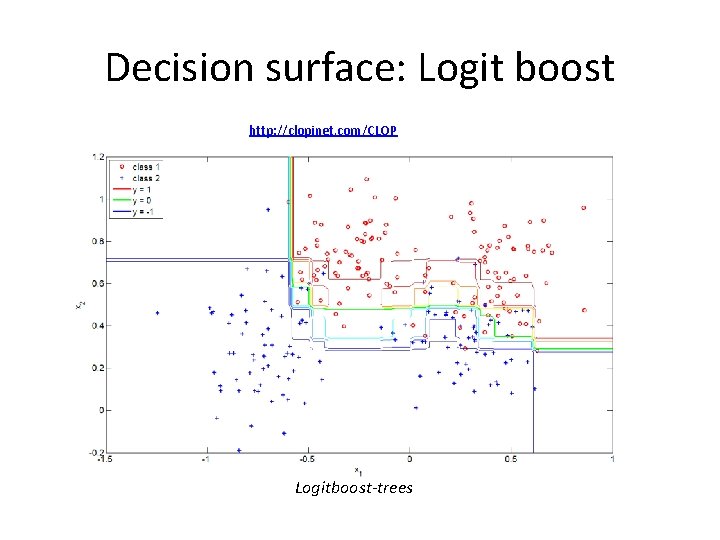

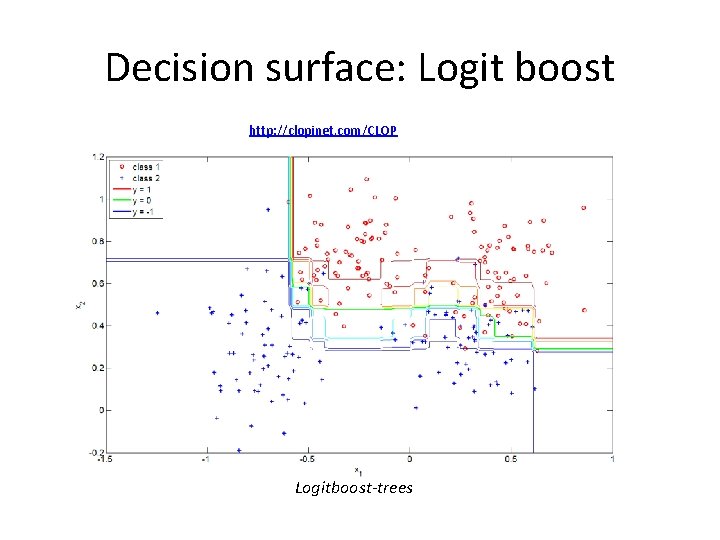

Decision surface: LogitBoost, Logitboost-trees (http://clopinet.com/CLOP)

Evaluation of text classification
- What to evaluate?
- How to carry out this evaluation?
  - Which elements (information) are required?
- How to know which is the best classifier for a given task?
  - Which things are important to perform a fair comparison?

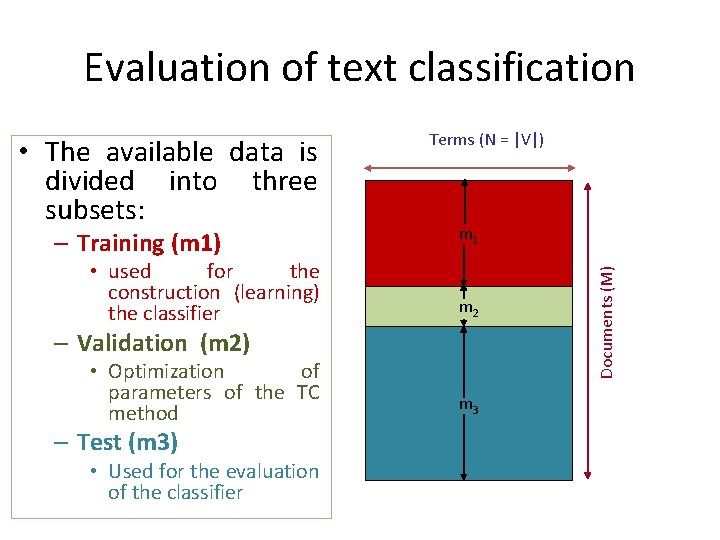

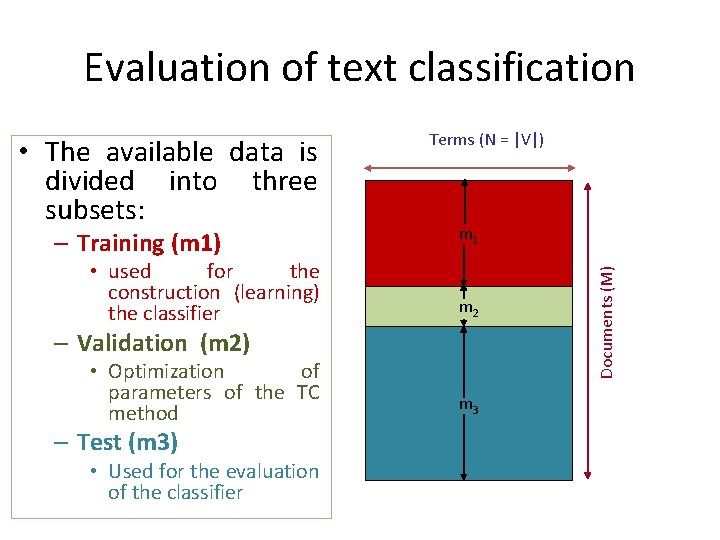

Evaluation of text classification
- The available data is divided into three subsets:
  - Training (m1): used for the construction (learning) of the classifier
  - Validation (m2): optimization of the parameters of the TC method
  - Test (m3): used for the evaluation of the classifier

(figure: the documents × terms matrix, with M documents and N = |V| terms, split by rows into m1, m2 and m3)

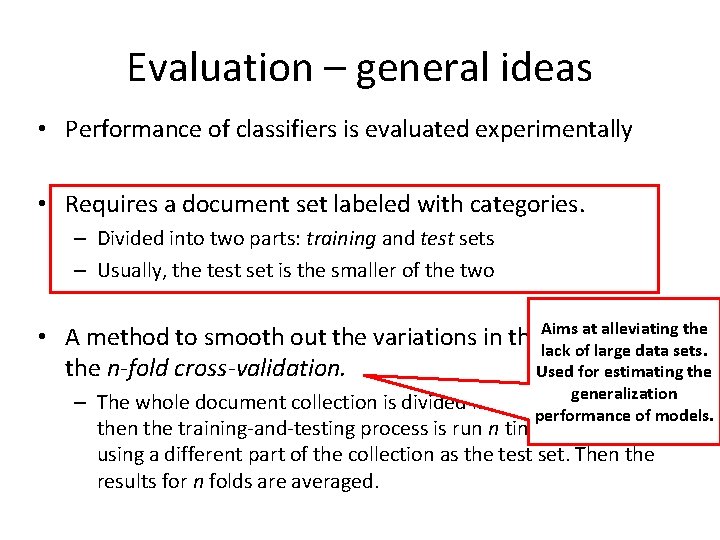

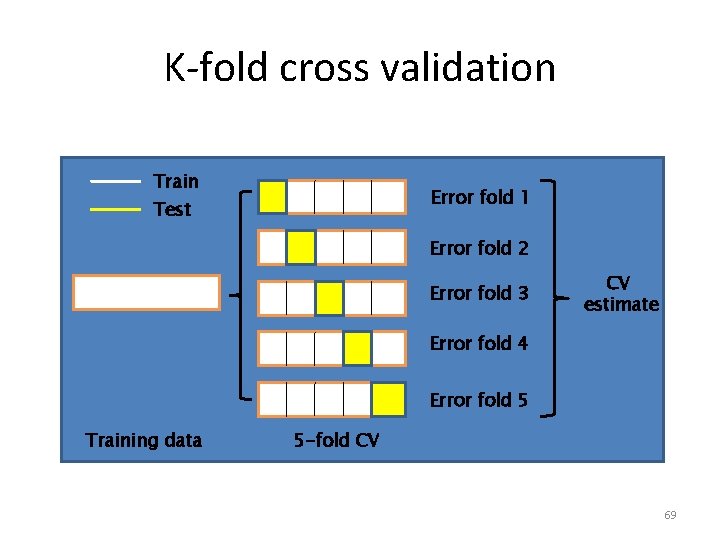

Evaluation – general ideas
- The performance of classifiers is evaluated experimentally
- Requires a document set labeled with categories.
  - Divided into two parts: training and test sets
  - Usually, the test set is the smaller of the two
- A method to smooth out the variations in the corpus is n-fold cross-validation.
  - The whole document collection is divided into n equal parts, and then the training-and-testing process is run n times, each time using a different part of the collection as the test set. Then the results for the n folds are averaged.
  - Aims at alleviating the lack of large data sets.
  - Used for estimating the generalization performance of models.

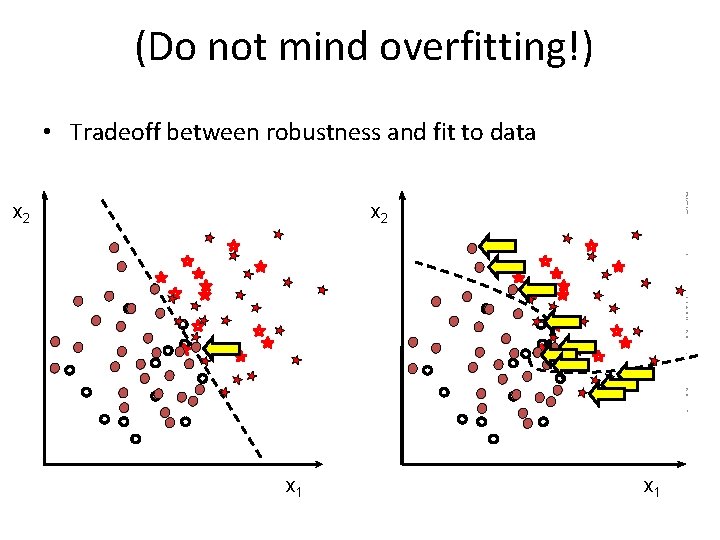

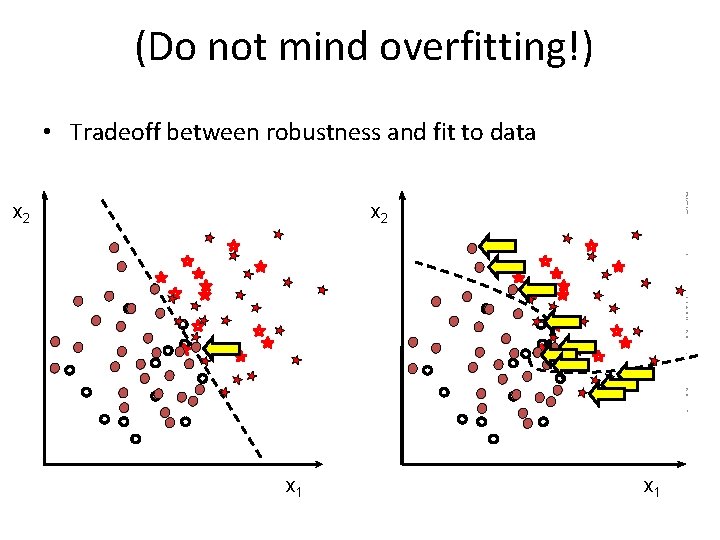

(Beware of overfitting!)
- Tradeoff between robustness and fit to the data (figure: data in the (x1, x2) plane)

PISIS research group, UANL, Monterrey, Mexico, 27 November 2009

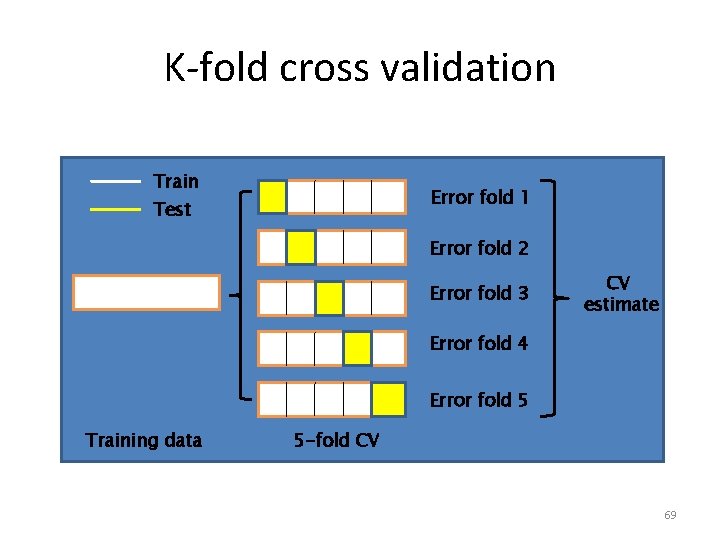

K-fold cross-validation (figure: 5-fold CV on the training data; in each round one fold is held out as the test part and the rest is used for training, and the errors of folds 1 to 5 are combined into the CV estimate)
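A minimal sketch of n-fold cross-validation as described above, with hypothetical names; `train_fn` and `predict_fn` stand for any learner. It assumes the documents have already been shuffled, since contiguous folds over sorted data would give biased estimates.

```python
def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of (nearly) equal size."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for s in sizes:
        folds.append(list(range(start, start + s)))
        start += s
    return folds

def cross_val_error(X, y, train_fn, predict_fn, k=5):
    """Average test error over k folds; each fold is used exactly once as the test set."""
    errors = []
    for test_idx in k_fold_indices(len(X), k):
        test = set(test_idx)
        train_X = [x for i, x in enumerate(X) if i not in test]
        train_y = [t for i, t in enumerate(y) if i not in test]
        model = train_fn(train_X, train_y)
        wrong = sum(predict_fn(model, X[i]) != y[i] for i in test_idx)
        errors.append(wrong / len(test_idx))
    return sum(errors) / k  # the CV estimate
```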

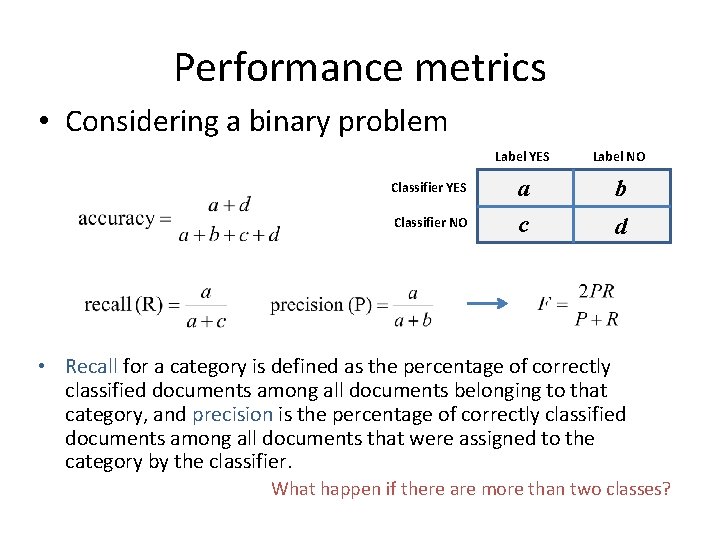

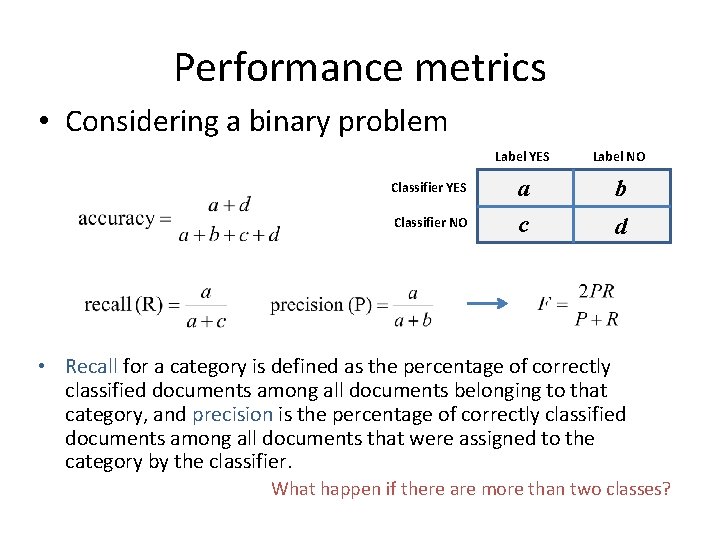

Performance metrics
- Considering a binary problem:

| | Label YES | Label NO |
|---|---|---|
| Classifier YES | a | b |
| Classifier NO | c | d |

- Recall for a category is defined as the percentage of correctly classified documents among all documents belonging to that category, and precision is the percentage of correctly classified documents among all documents that were assigned to the category by the classifier: recall = a / (a + c) and precision = a / (a + b).
- What happens if there are more than two classes?

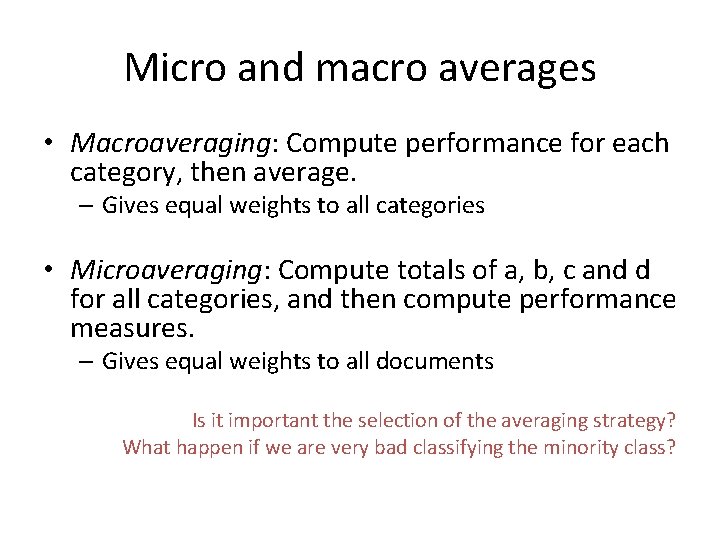

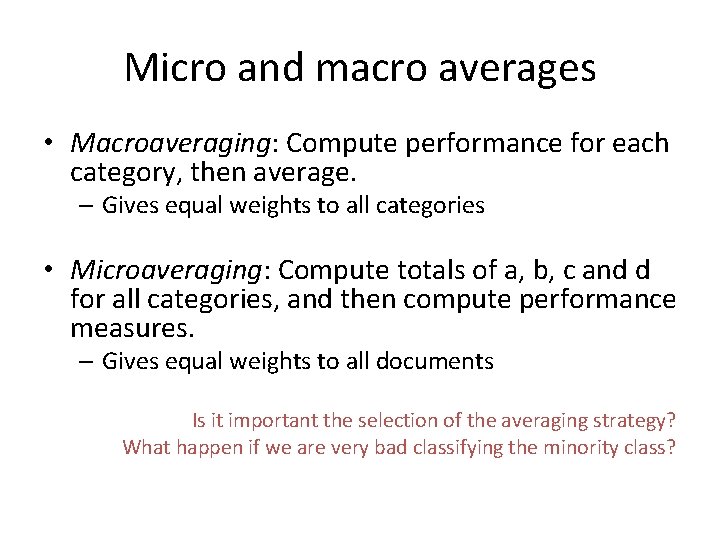

Micro and macro averages
- Macroaveraging: compute performance for each category, then average.
  - Gives equal weight to all categories
- Microaveraging: compute totals of a, b, c and d over all categories, and then compute the performance measures.
  - Gives equal weight to all documents
- Does the choice of averaging strategy matter? What happens if we perform very poorly on the minority class?
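A sketch of the measures from the contingency table (a = assigned and correct, b = assigned but wrong, c = missed), plus both averaging strategies. Names are hypothetical, and macro-F1 is computed here as the mean of per-category F1 values, which is one of two conventions in use.

```python
def prf(a, b, c):
    """Precision, recall and F1 from contingency counts a, b, c."""
    p = a / (a + b) if a + b else 0.0
    r = a / (a + c) if a + c else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

def macro_micro(tables):
    """tables: dict category -> (a, b, c, d). Returns (macro, micro) (P, R, F1) tuples."""
    per_cat = [prf(a, b, c) for a, b, c, _ in tables.values()]
    # Macro: compute per category, then average (each category counts equally)
    macro = tuple(sum(m[i] for m in per_cat) / len(per_cat) for i in range(3))
    # Micro: pool a, b, c over categories first (each document decision counts equally)
    A = sum(t[0] for t in tables.values())
    B = sum(t[1] for t in tables.values())
    C = sum(t[2] for t in tables.values())
    return macro, prf(A, B, C)
```

With a large category at P = R = 0.9 and a small one at P = 0.5, R = 0.1, macro-averaged recall drops to 0.5, while micro-averaged recall stays at 91/110 ≈ 0.83, which illustrates the question about the minority class.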

Comparison of different classifiers
- Direct comparison
  - Classifiers are compared by testing them on the same collection of documents and under the same background conditions.
  - This is the more reliable method
- Indirect comparison
  - Two classifiers that have been tested on different collections, possibly under different background conditions, can be compared if both were compared with a common baseline.

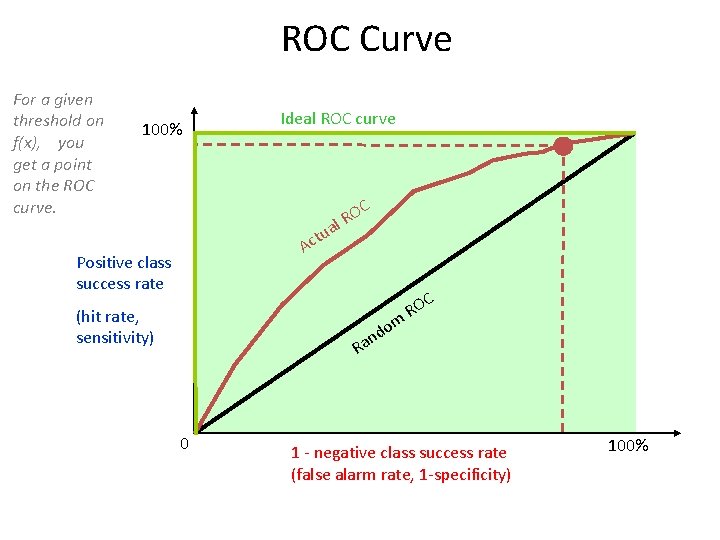

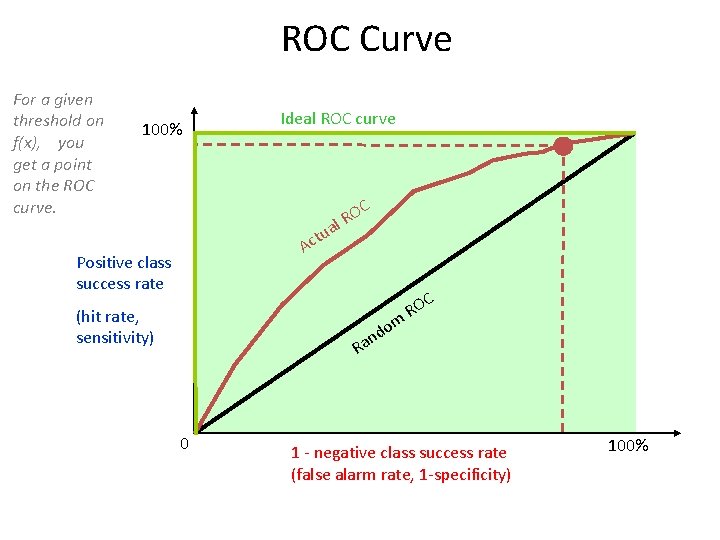

ROC Curve
- For a given threshold on f(x), you get a point on the ROC curve.

(figure: y axis, positive class success rate (hit rate, sensitivity), from 0 to 100%; x axis, 1 - negative class success rate (false alarm rate, 1 - specificity), from 0 to 100%; curves shown: ideal ROC curve, actual ROC curve, random ROC curve)

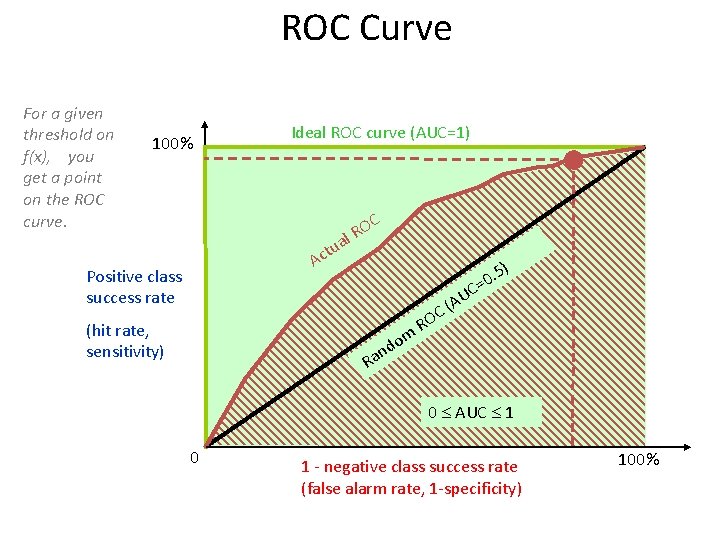

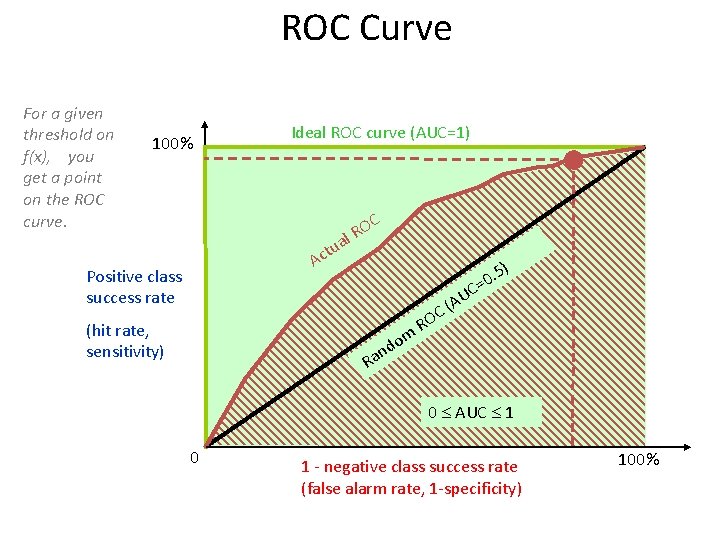

ROC Curve
- For a given threshold on f(x), you get a point on the ROC curve.

(figure: same axes as the previous slide; ideal ROC curve with AUC = 1, actual ROC curve, random ROC curve with AUC = 0.5; in general 0 ≤ AUC ≤ 1)
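A sketch that sweeps the threshold on f(x) over the observed scores to obtain the ROC points, then integrates them with the trapezoidal rule. Labels are assumed to be 1 (positive) and 0 (negative), and names are hypothetical. A perfect ranking gives AUC = 1, and an uninformative one (all scores equal) gives 0.5.

```python
def roc_points(scores, labels):
    """(false alarm rate, hit rate) for every threshold on f(x); labels in {0, 1}."""
    pos = sum(labels)
    neg = len(labels) - pos
    pairs = sorted(zip(scores, labels), reverse=True)  # highest scores first
    points, tp, fp = [(0.0, 0.0)], 0, 0
    for i, (s, l) in enumerate(pairs):
        tp += l
        fp += 1 - l
        # Emit a point only once all examples sharing this score are counted
        if i == len(pairs) - 1 or pairs[i + 1][0] != s:
            points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x2 - x1) * (y1 + y2) / 2 for (x1, y1), (x2, y2) in zip(points, points[1:]))
```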

Want to learn more?
- C. Bishop. Pattern Recognition and Machine Learning. Springer, 2006.
- T. Hastie, R. Tibshirani, J. Friedman. The Elements of Statistical Learning. Springer, 2009.
- R. O. Duda, P. Hart, D. Stork. Pattern Classification. Wiley, 2001.
- I. Guyon, et al. Feature Extraction: Foundations and Applications. Springer, 2006.
- T. Mitchell. Machine Learning. McGraw-Hill.

Assignment #3
- Read a paper describing a classification approach or algorithm for TC (it can be one of those available on the course page or another one of your choice)
- Prepare a presentation of at most 10 minutes in which you describe the proposed/adopted approach (*different from those seen in class*). The presentation must cover the following aspects:
  - A. Underlying and intuitive idea of the approach
  - B. Formal description
  - C. Benefits and limitations (compared to the schemes seen in class)
  - D. Your idea(s) to improve the presented approach

Suggested readings on text classification
- X. Ning, G. Karypis. The Set Classification Problem and Solution Methods. Proc. of International Conference on Data Mining Workshops, IEEE, 2008.
- S. Baccianella, A. Esuli, F. Sebastiani. Using Micro-Documents for Feature Selection: The Case of Ordinal Text Classification. Proceedings of the 2nd Italian Information Retrieval Workshop, 2011.
- J. Wang, J. D. Zucker. Solving the Multiple-Instance Problem: A Lazy Learning Approach. Proc. of ICML 200.
- A. Sun, E. P. Lim, Y. Liu. On Strategies for imbalanced text classification using SVM: a comparative study. Decision Support Systems, Vol. 48, pp. 191-201, 2009.