
Soft Decision Decoding of RS Codes Using Adaptive Parity Check Matrices

Jing Jiang and Krishna R. Narayanan
Wireless Communication Group, Department of Electrical Engineering, Texas A&M University

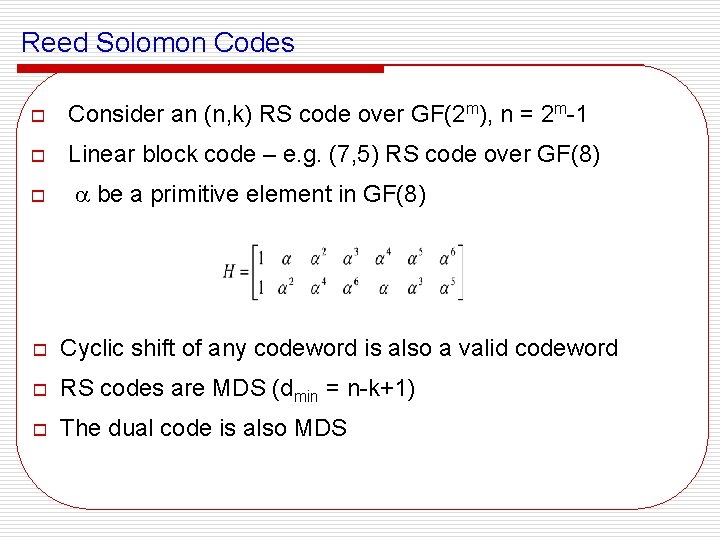

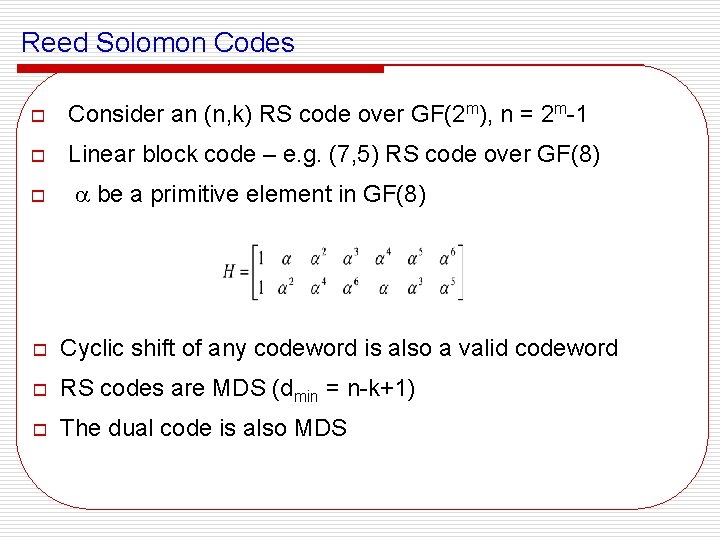

Reed Solomon Codes

- Consider an (n, k) RS code over $\mathrm{GF}(2^m)$, with $n = 2^m - 1$.
- Linear block code, e.g., the (7, 5) RS code over GF(8).
- Let $\alpha$ be a primitive element in GF(8).
- A cyclic shift of any codeword is also a valid codeword.
- RS codes are MDS ($d_{\min} = n - k + 1$).
- The dual code is also MDS.

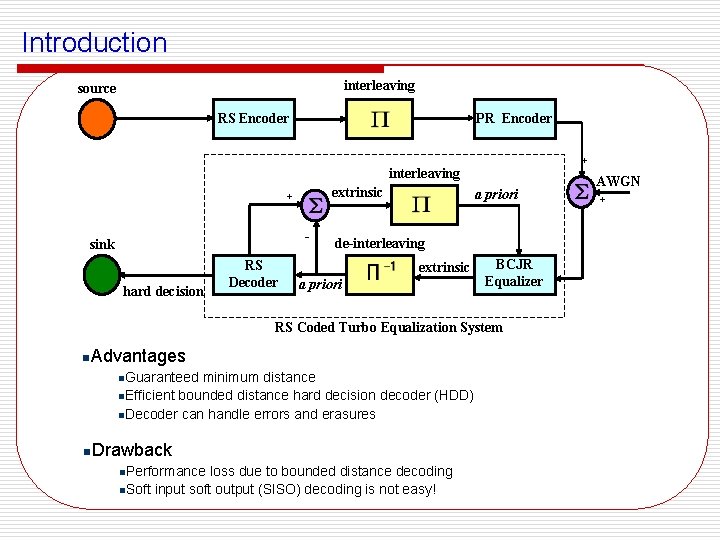

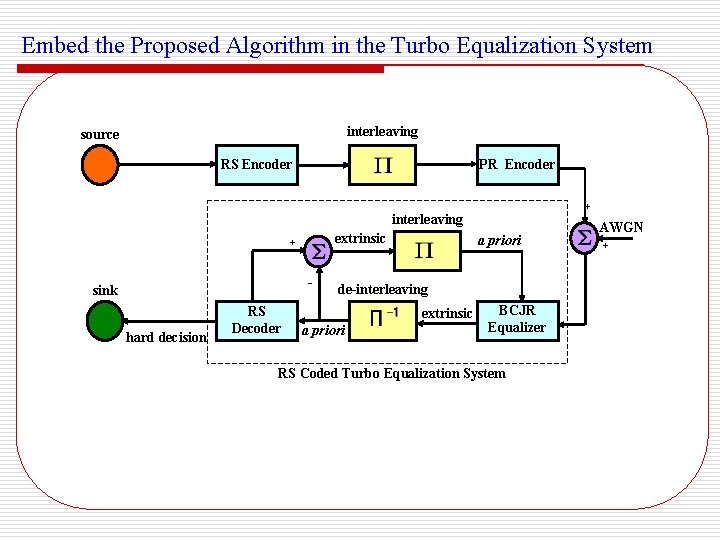

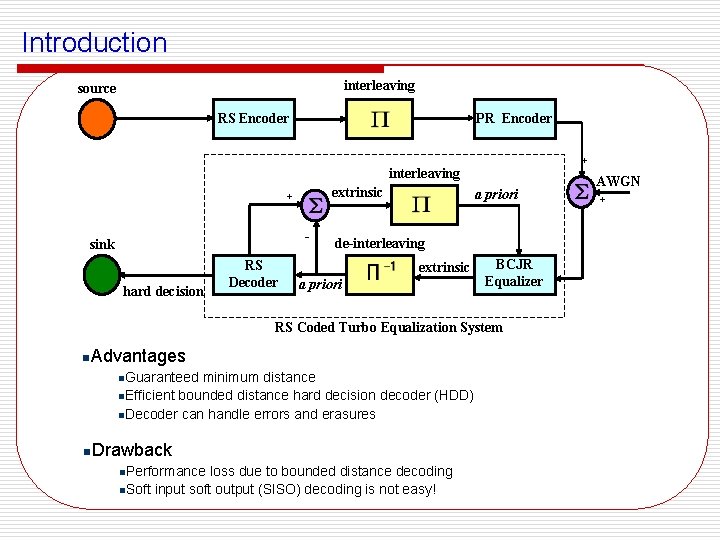

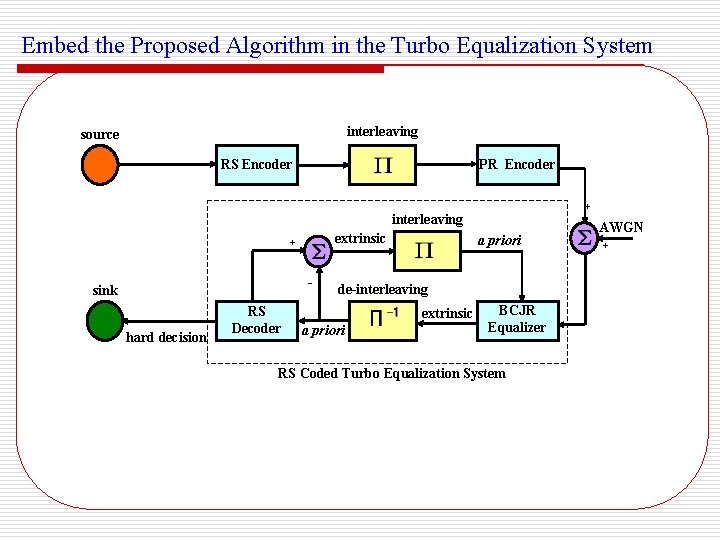
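To make the (7, 5) example concrete, here is a minimal Python sketch of GF(8) arithmetic and the RS(7, 5) code. It assumes GF(8) is generated by the primitive polynomial $x^3 + x + 1$ (the slides do not say which one is used) and uses the non-systematic encoder $c(x) = m(x)\,g(x)$ with $g(x) = (x - \alpha)(x - \alpha^2)$; the message and all function names are illustrative. It checks that a codeword and its cyclic shift both have zero syndromes $S_i = c(\alpha^i)$.

```python
# Minimal GF(8) arithmetic and a check of RS(7, 5) properties.
# Assumption: GF(8) is built from the primitive polynomial x^3 + x + 1.
PRIM_POLY = 0b1011  # x^3 + x + 1
M = 3
Q = 1 << M          # field size 8
N = Q - 1           # RS length n = 2^m - 1 = 7

# exp/log tables: EXP[i] = alpha^i written as a 3-bit integer
EXP = [0] * (2 * N)
LOG = [0] * Q
x = 1
for i in range(N):
    EXP[i] = x
    LOG[x] = i
    x <<= 1
    if x & Q:
        x ^= PRIM_POLY
for i in range(N, 2 * N):
    EXP[i] = EXP[i - N]

def gf_mul(a, b):
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]

def poly_mul(p, q):
    """Multiply polynomials with GF(8) coefficients (lowest degree first)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] ^= gf_mul(a, b)  # addition in GF(2^m) is XOR
    return out

def syndromes(c, n_minus_k):
    """S_i = c(alpha^i) for i = 1..n-k; all zero iff c is a codeword."""
    out = []
    for i in range(1, n_minus_k + 1):
        s = 0
        for j, cj in enumerate(c):
            s ^= gf_mul(cj, EXP[(i * j) % N])
        out.append(s)
    return out

# Generator polynomial g(x) = (x - alpha)(x - alpha^2); minus equals plus in GF(2^m)
K = 5
g = [1]
for i in range(1, N - K + 1):
    g = poly_mul(g, [EXP[i], 1])

msg = [1, 0, 3, 5, 7]                     # arbitrary (hypothetical) message symbols
codeword = poly_mul(msg, g)               # c(x) = m(x) g(x)
shifted = codeword[-1:] + codeword[:-1]   # cyclic shift: x * c(x) mod (x^7 - 1)

print(len(codeword), syndromes(codeword, N - K), syndromes(shifted, N - K))
```

Running it prints `7 [0, 0] [0, 0]`: both the codeword and its cyclic shift satisfy every parity check.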

Introduction

[Figure: RS coded turbo equalization system: source, RS encoder, interleaver, PR encoder, AWGN channel, BCJR equalizer, de-interleaver, and RS decoder with hard decision output to the sink; the equalizer and the RS decoder exchange extrinsic and a priori information]

- Advantages:
  - Guaranteed minimum distance
  - Efficient bounded-distance hard decision decoder (HDD)
  - The decoder can handle both errors and erasures
- Drawbacks:
  - Performance loss due to bounded-distance decoding
  - Soft-input soft-output (SISO) decoding is not easy!

Presentation Outline

- Existing soft decision decoding techniques
- Iterative decoding based on adaptive parity check matrices
- Variations of the generic algorithm
- Applications over various channels
- Conclusion and future work

Existing Soft Decoding Techniques

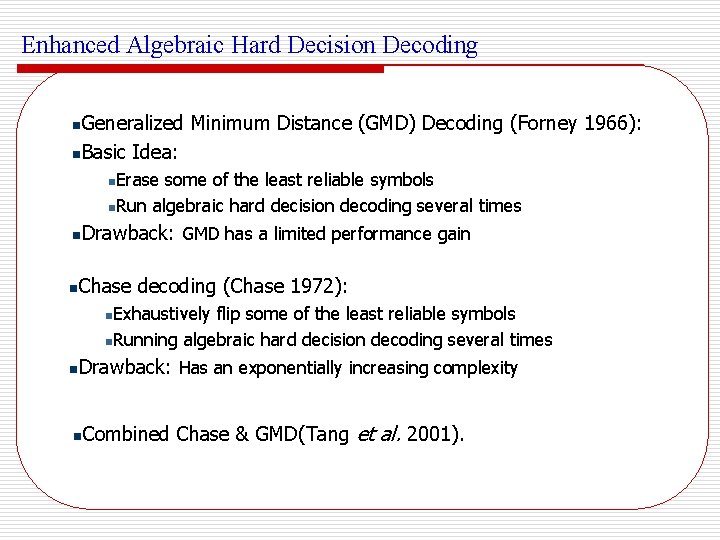

Enhanced Algebraic Hard Decision Decoding

- Generalized Minimum Distance (GMD) decoding (Forney 1966):
  - Basic idea: erase some of the least reliable symbols and run algebraic hard decision decoding several times.
  - Drawback: GMD has a limited performance gain.
- Chase decoding (Chase 1972):
  - Basic idea: exhaustively flip some of the least reliable symbols and run algebraic hard decision decoding several times.
  - Drawback: the complexity increases exponentially.
- Combined Chase & GMD (Tang et al. 2001).

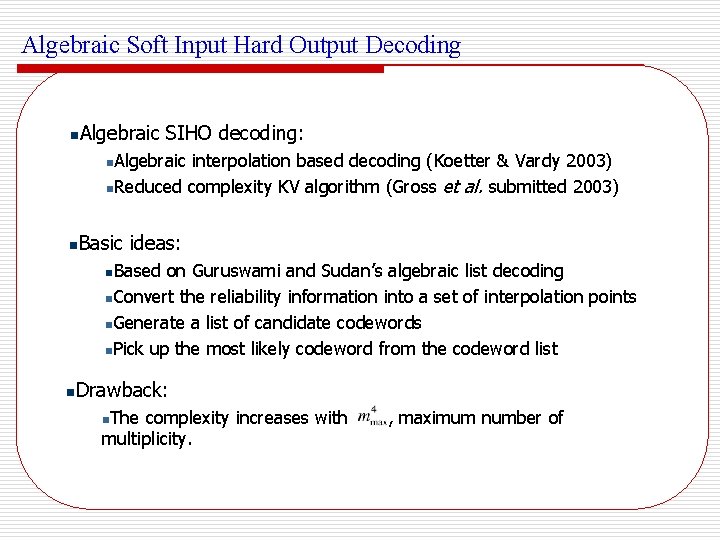
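The Chase idea can be sketched in a few lines of Python. To keep the sketch self-contained, it assumes a binary (7, 4) Hamming code whose single-error-correcting syndrome decoder stands in for the algebraic RS hard decision decoder, and it flips bits rather than symbols; `hdd`, `metric`, and `chase_decode` are hypothetical names. Each of the $2^p$ test patterns over the $p$ least reliable positions is decoded, and the candidate with the largest correlation with the received LLRs is kept, which is where the exponential growth in $p$ comes from.

```python
from itertools import product

# Toy stand-in: (7, 4) Hamming code; column j (1-based) of H is j in binary
H = [[(j >> r) & 1 for j in range(1, 8)] for r in range(3)]

def hdd(y):
    """Bounded-distance hard decision decoder: corrects a single bit error via the syndrome."""
    s = sum((sum(h * b for h, b in zip(row, y)) % 2) << r for r, row in enumerate(H))
    c = list(y)
    if s:
        c[s - 1] ^= 1
    return c

def metric(c, llr):
    """Correlation metric; larger means more likely (bit 0 <-> positive LLR)."""
    return sum((1 - 2 * b) * l for b, l in zip(c, llr))

def chase_decode(llr, p):
    """Try all 2^p flip patterns on the p least reliable positions; keep the best HDD output."""
    hard = [0 if l >= 0 else 1 for l in llr]
    lrp = sorted(range(len(llr)), key=lambda i: abs(llr[i]))[:p]
    best = None
    for pattern in product([0, 1], repeat=p):
        test = list(hard)
        for pos, flip in zip(lrp, pattern):
            test[pos] ^= flip
        cand = hdd(test)
        if best is None or metric(cand, llr) > metric(best, llr):
            best = cand
    return best

llr = [2.0, -0.2, 1.5, -0.3, 2.2, 1.8, 2.5]      # all-zero codeword sent, two bits in error
print(hdd([0 if l >= 0 else 1 for l in llr]))   # -> [0, 1, 0, 1, 0, 1, 0] (miscorrection)
print(chase_decode(llr, 2))                     # -> [0, 0, 0, 0, 0, 0, 0]
```

In this toy case the two errors exceed the HDD's correction radius, but one of the four test patterns removes them both.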

Algebraic Soft Input Hard Output Decoding

- Algebraic SIHO decoding:
  - Algebraic interpolation-based decoding (Koetter & Vardy 2003)
  - Reduced-complexity KV algorithm (Gross et al., submitted 2003)
- Basic ideas:
  - Based on Guruswami and Sudan’s algebraic list decoding
  - Convert the reliability information into a set of interpolation points
  - Generate a list of candidate codewords
  - Pick the most likely codeword from the codeword list
- Drawback: the complexity increases with the multiplicity.
  - …, maximum number of …

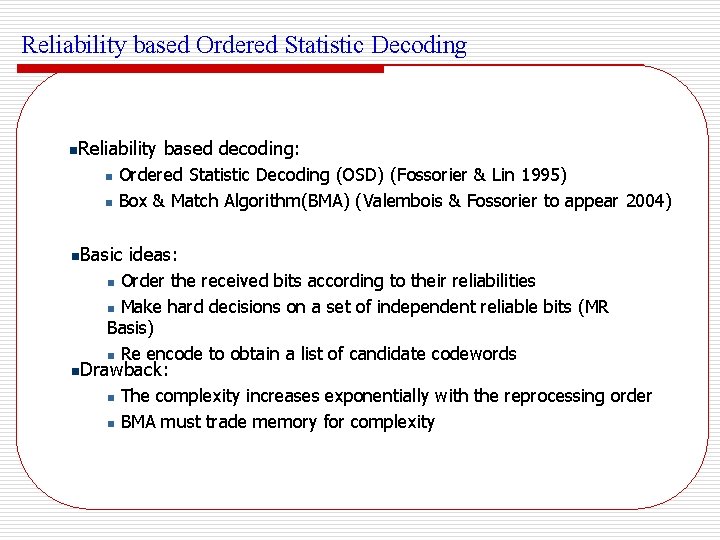
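The step that converts reliability information into interpolation points can be illustrated with a greedy multiplicity assignment in the spirit of Koetter and Vardy's algorithm: repeatedly give one more unit of multiplicity to the entry with the largest $\pi_{i,j}/(m_{i,j}+1)$. This Python sketch assumes a fixed budget `s` of multiplicity units and a toy 2 × 2 reliability matrix; the names and values are hypothetical. The interpolation cost $\tfrac{1}{2}\sum m_{i,j}(m_{i,j}+1)$ grows quadratically with the multiplicities, which is the complexity drawback listed on the slide.

```python
def kv_multiplicity(pi, s):
    """Greedy multiplicity assignment.
    pi[i][j]: probability that code position j equals field element i.
    s: total number of multiplicity units to distribute."""
    q, n = len(pi), len(pi[0])
    mult = [[0] * n for _ in range(q)]
    prio = [row[:] for row in pi]            # priority pi[i][j] / (mult[i][j] + 1)
    cells = [(i, j) for i in range(q) for j in range(n)]
    for _ in range(s):
        i, j = max(cells, key=lambda ij: prio[ij[0]][ij[1]])  # first maximum wins ties
        mult[i][j] += 1
        prio[i][j] = pi[i][j] / (mult[i][j] + 1)
    return mult

def interpolation_cost(mult):
    """Number of linear constraints on the interpolation polynomial: sum of m(m+1)/2."""
    return sum(m * (m + 1) // 2 for row in mult for m in row)

pi = [[0.9, 0.5],   # toy reliability matrix: rows = field elements,
      [0.1, 0.5]]   # columns = code positions (hypothetical values)
mult = kv_multiplicity(pi, 3)
print(mult, interpolation_cost(mult))   # -> [[1, 1], [0, 1]] 3
```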

Reliability-based Ordered Statistic Decoding

- Reliability-based decoding:
  - Ordered Statistic Decoding (OSD) (Fossorier & Lin 1995)
  - Box and Match Algorithm (BMA) (Valembois & Fossorier, to appear 2004)
- Basic ideas:
  - Order the received bits according to their reliabilities.
  - Make hard decisions on a set of independent reliable bits (the Most Reliable Basis, MRB).
  - Re-encode to obtain a list of candidate codewords.
- Drawbacks:
  - The complexity increases exponentially with the reprocessing order.
  - BMA must trade memory for complexity.

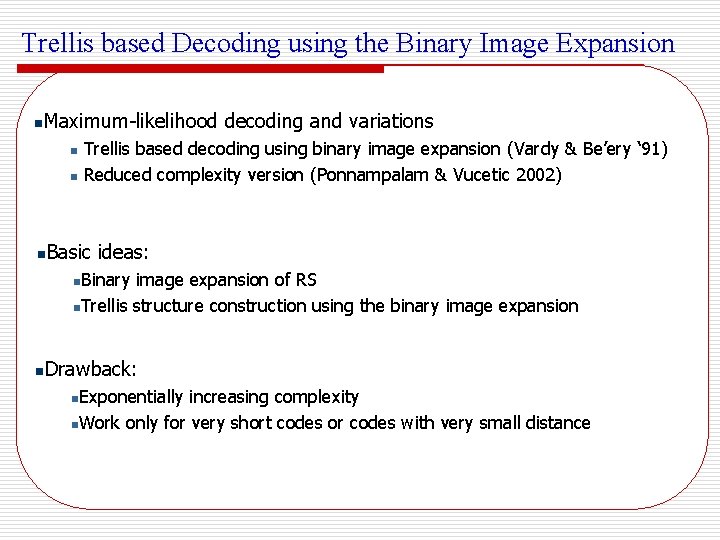
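A minimal sketch of order-0 OSD in Python, assuming the binary (7, 4) Hamming code as a toy stand-in for the binary image of an RS code: sort the bits by reliability, run Gaussian elimination over GF(2) on the generator matrix to find the MRB, and re-encode the hard decisions on the MRB. Higher reprocessing orders would additionally flip up to i MRB bits before re-encoding, which causes the exponential growth. `osd0` and the matrices are illustrative.

```python
# (7, 4) Hamming code (toy stand-in): generator matrix G and parity check matrix H
G = [[1, 1, 1, 0, 0, 0, 0],
     [1, 0, 0, 1, 1, 0, 0],
     [0, 1, 0, 1, 0, 1, 0],
     [1, 1, 0, 1, 0, 0, 1]]
H = [[(j >> r) & 1 for j in range(1, 8)] for r in range(3)]  # used only to check results

def osd0(G, llr):
    """Order-0 OSD: re-encode the hard decisions on the most reliable basis (MRB)."""
    k, n = len(G), len(G[0])
    order = sorted(range(n), key=lambda j: -abs(llr[j]))  # most reliable first
    g = [row[:] for row in G]
    mrb, r = [], 0
    for col in order:
        if r == k:
            break
        piv = next((i for i in range(r, k) if g[i][col]), None)
        if piv is None:
            continue          # dependent on earlier MRB columns: skip this bit
        g[r], g[piv] = g[piv], g[r]
        for i in range(k):
            if i != r and g[i][col]:
                g[i] = [a ^ b for a, b in zip(g[i], g[r])]
        mrb.append(col)
        r += 1
    hard = [0 if l >= 0 else 1 for l in llr]
    c = [0] * n
    for i, col in enumerate(mrb):     # row i of g has its only MRB 1 in column mrb[i]
        if hard[col]:
            c = [a ^ b for a, b in zip(c, g[i])]
    return c, mrb

c, mrb = osd0(G, [2.0, -0.2, 1.5, -0.3, 2.2, 1.8, 2.5])
print(c, mrb)   # -> [0, 0, 0, 0, 0, 0, 0] [6, 4, 0, 5]
```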

Trellis-based Decoding Using the Binary Image Expansion

- Maximum-likelihood decoding and variations:
  - Trellis-based decoding using the binary image expansion (Vardy & Be’ery ’91)
  - Reduced-complexity version (Ponnampalam & Vucetic 2002)
- Basic ideas:
  - Binary image expansion of RS codes
  - Trellis structure constructed from the binary image expansion
- Drawbacks:
  - Exponentially increasing complexity
  - Works only for very short codes or codes with very small distance

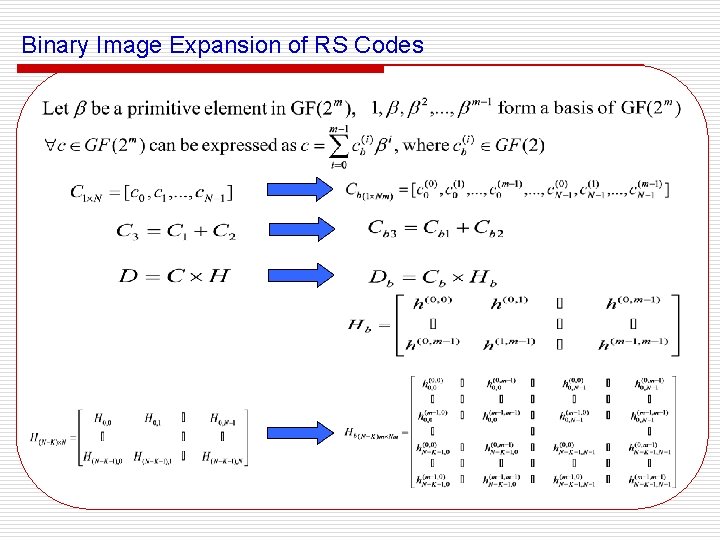

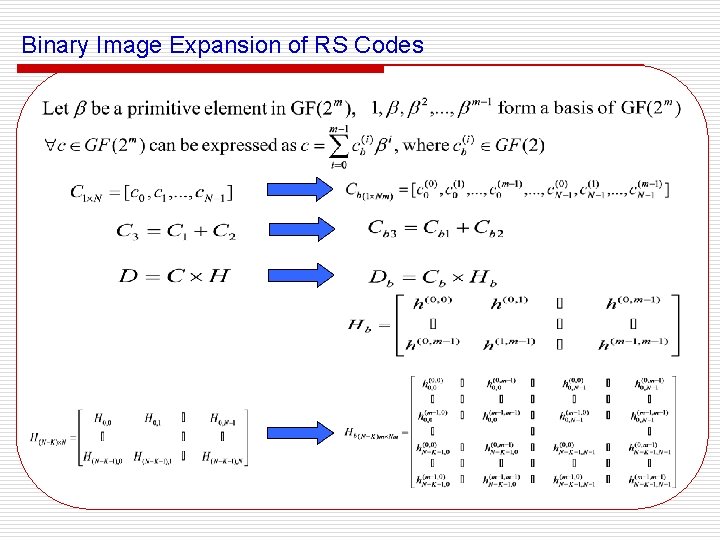

Binary Image Expansion of RS Codes

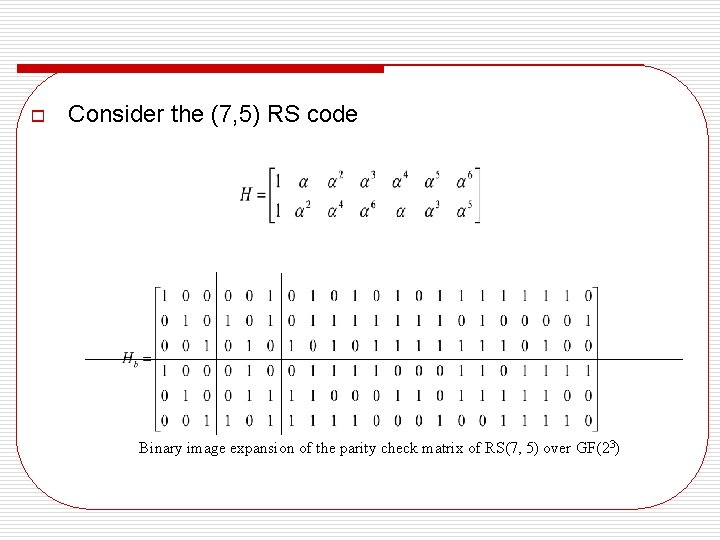

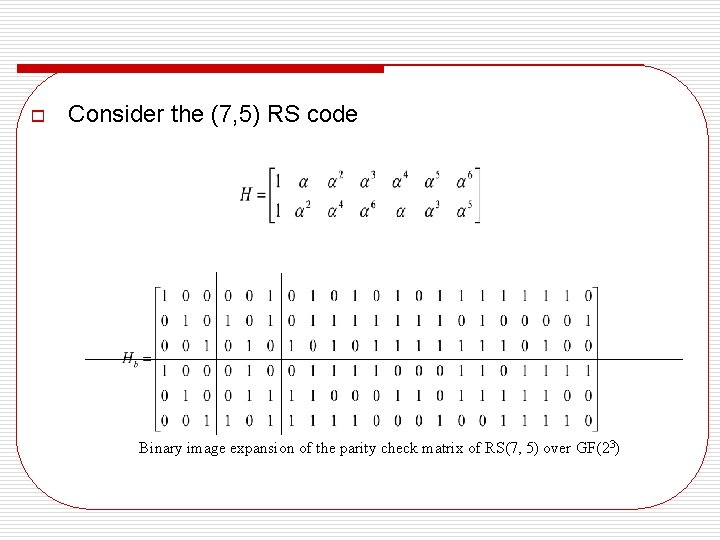

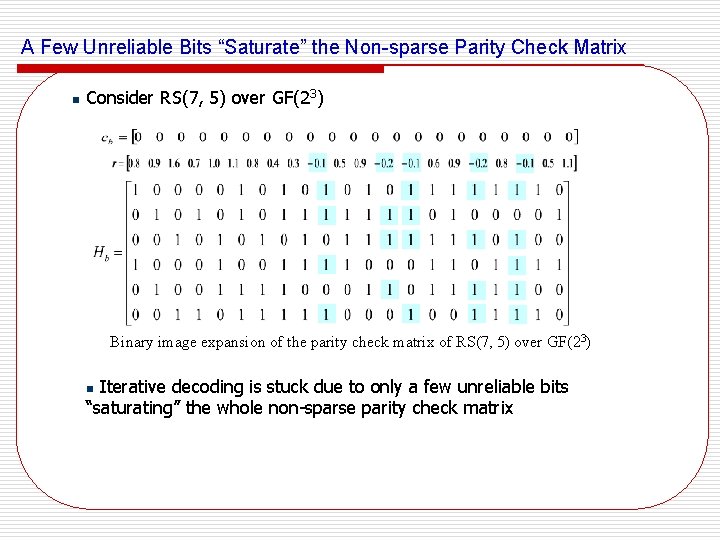

- Consider the (7, 5) RS code.

[Figure: binary image expansion of the parity check matrix of RS(7, 5) over $\mathrm{GF}(2^3)$]

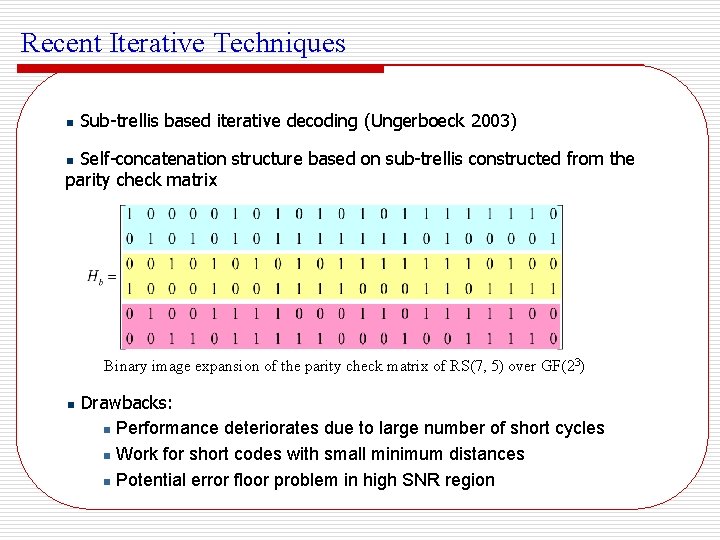

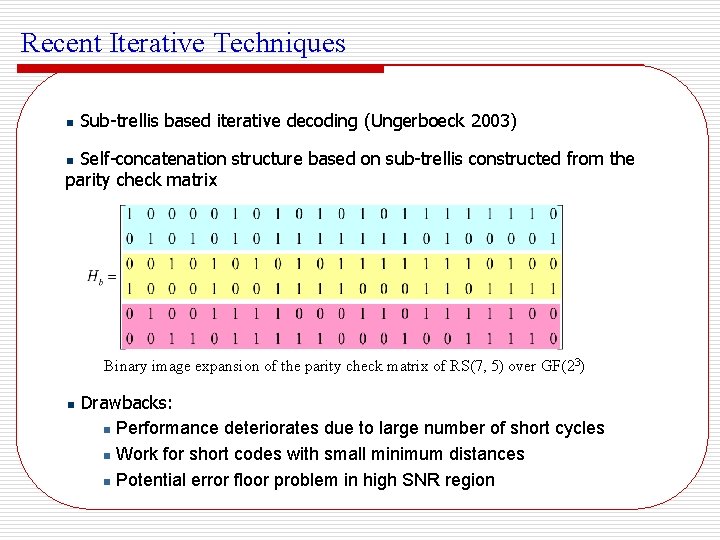
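The binary image expansion can be reproduced with a short Python sketch: each $\mathrm{GF}(2^3)$ entry $h$ of the parity check matrix is replaced by the 3 × 3 binary matrix of the map $a \mapsto h a$, so the binary matrix applied to the bits of a word gives the bits of its symbol-level syndrome. The primitive polynomial $x^3 + x + 1$, the form $H_{ij} = \alpha^{(i+1)j}$, and the function names are assumptions for illustration. Since every entry of this H is nonzero, each binary row has at least one 1 in each of the 7 symbol blocks, which shows why the binary H is dense.

```python
PRIM_POLY, M = 0b1011, 3          # assumed: GF(8) generated by x^3 + x + 1
N = (1 << M) - 1
EXP, LOG = [0] * (2 * N), [0] * (N + 1)
x = 1
for i in range(N):
    EXP[i], LOG[x] = x, i
    x <<= 1
    if x >> M:
        x ^= PRIM_POLY
for i in range(N, 2 * N):
    EXP[i] = EXP[i - N]

def gf_mul(a, b):
    return 0 if a == 0 or b == 0 else EXP[LOG[a] + LOG[b]]

def mult_matrix(h):
    """m x m binary matrix of the GF(2)-linear map a -> h*a;
    column t holds the bits of h * alpha^t (bit b = coefficient of alpha^b)."""
    return [[(gf_mul(h, EXP[t]) >> b) & 1 for t in range(M)] for b in range(M)]

def binary_image(H):
    """Replace every GF(2^m) entry of H by its m x m multiplication matrix."""
    Hb = []
    for row in H:
        blocks = [mult_matrix(h) for h in row]
        for b in range(M):
            Hb.append([bit for blk in blocks for bit in blk[b]])
    return Hb

def to_bits(symbols):
    return [(s >> b) & 1 for s in symbols for b in range(M)]

# RS(7, 5) parity check matrix over GF(8): H[i][j] = alpha^((i+1) j)
H = [[EXP[((i + 1) * j) % N] for j in range(N)] for i in range(2)]
Hb = binary_image(H)                       # 6 x 21 binary matrix
for r in Hb:
    print("".join(map(str, r)))

c = [3, 6, 1, 0, 0, 0, 0]                  # g(x) = alpha^3 + alpha^4 x + x^2 is a codeword
print([sum(a * b for a, b in zip(r, to_bits(c))) % 2 for r in Hb])   # -> [0, 0, 0, 0, 0, 0]
```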

Recent Iterative Techniques

- Sub-trellis-based iterative decoding (Ungerboeck 2003):
  - Self-concatenation structure based on sub-trellises constructed from the parity check matrix
  - [Figure: binary image expansion of the parity check matrix of RS(7, 5) over $\mathrm{GF}(2^3)$]
- Drawbacks:
  - Performance deteriorates due to the large number of short cycles
  - Works only for short codes with small minimum distances
  - Potential error floor in the high-SNR region

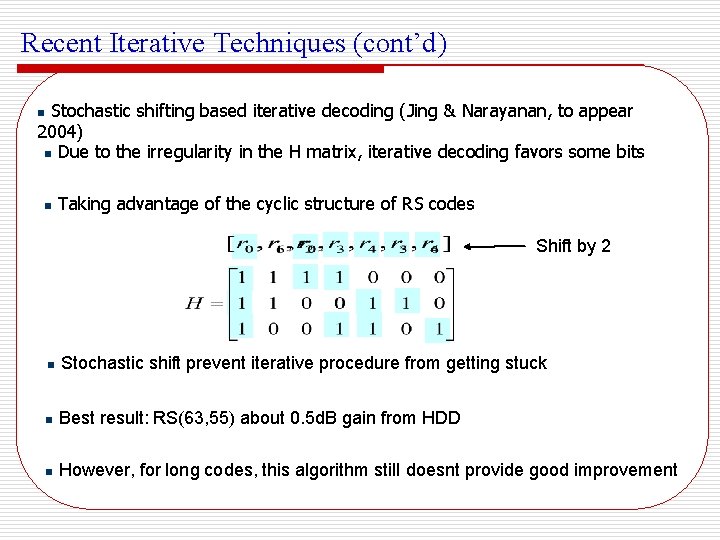

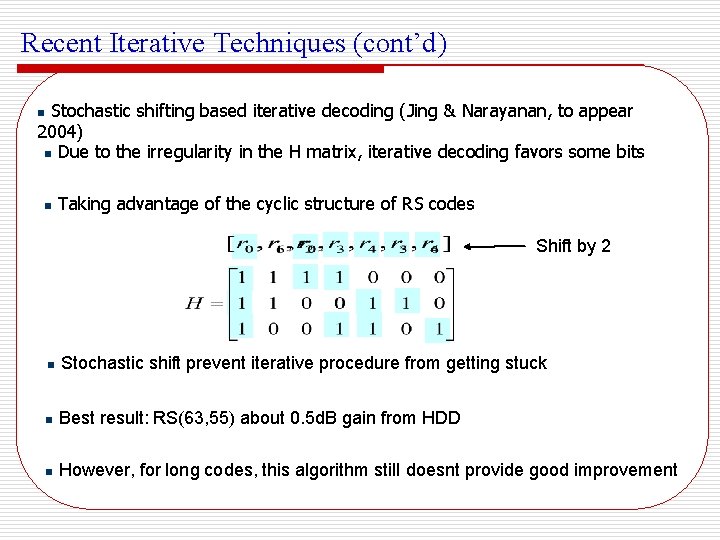

Recent Iterative Techniques (cont’d)

- Stochastic-shifting-based iterative decoding (Jiang & Narayanan, to appear 2004):
  - Due to the irregularity of the H matrix, iterative decoding favors some bits.
  - Take advantage of the cyclic structure of RS codes. [Figure: example of a cyclic shift by 2]
  - Stochastic shifts prevent the iterative procedure from getting stuck.
  - Best result: about 0.5 dB gain over HDD for RS(63, 55).
  - However, for long codes this algorithm still does not provide a good improvement.

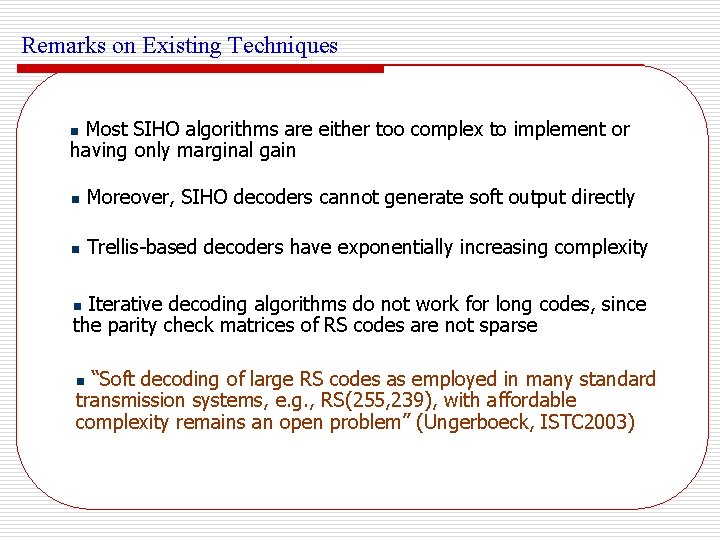

Remarks on Existing Techniques

- Most SIHO algorithms are either too complex to implement or have only marginal gain.
- Moreover, SIHO decoders cannot generate soft output directly.
- Trellis-based decoders have exponentially increasing complexity.
- Iterative decoding algorithms do not work for long codes, since the parity check matrices of RS codes are not sparse.
- “Soft decoding of large RS codes as employed in many standard transmission systems, e.g., RS(255, 239), with affordable complexity remains an open problem” (Ungerboeck, ISTC 2003)

Questions

- Q: Why doesn’t iterative decoding work for codes with non-sparse parity check matrices?
- Q: Can we get some idea from the failure of the iterative decoder?

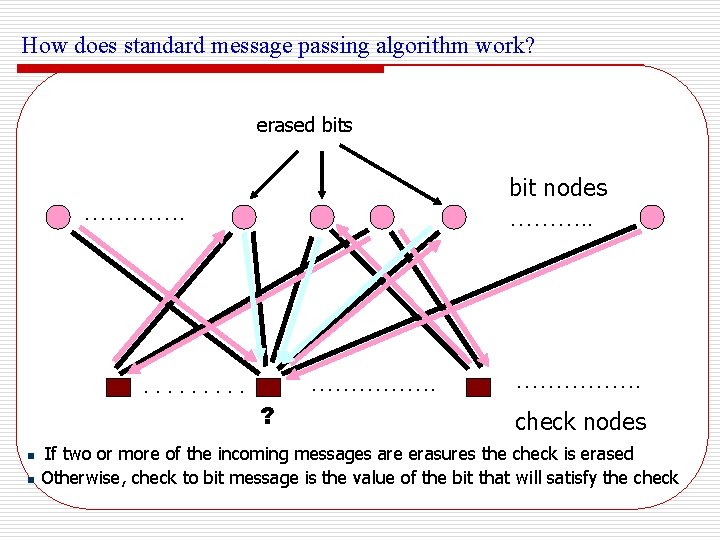

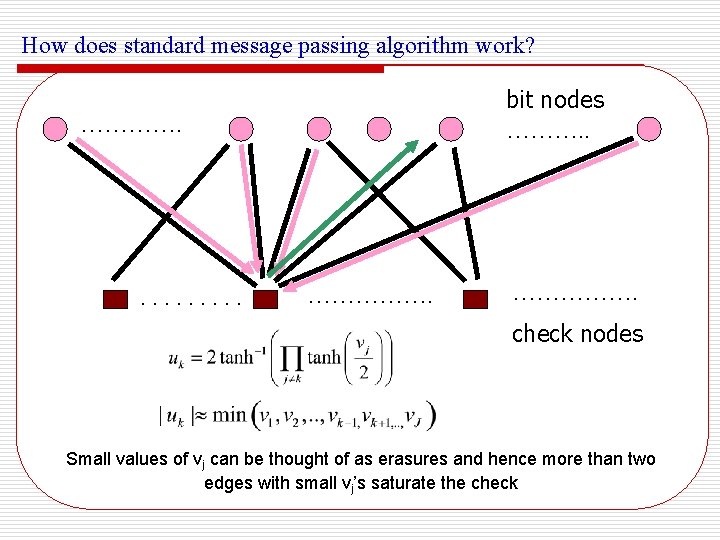

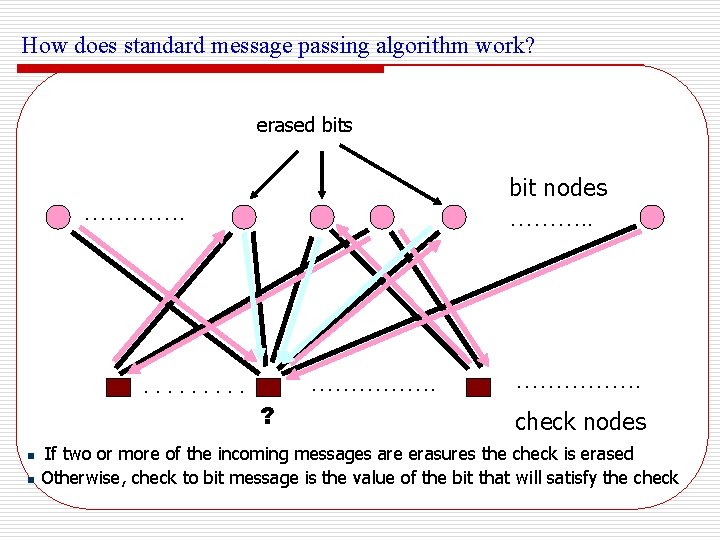

How Does the Standard Message Passing Algorithm Work?

[Figure: Tanner graph with bit nodes (some of them erased) connected to check nodes]

- If two or more of the incoming messages are erasures, the check is erased.
- Otherwise, the check-to-bit message is the value of the bit that will satisfy the check.

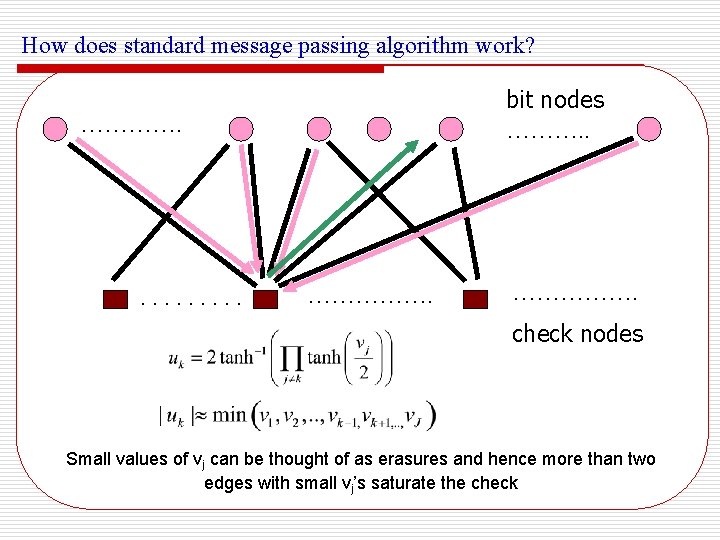
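The erasure rules above amount to the following peeling procedure. This Python sketch assumes the (7, 4) Hamming code as a toy example, with `None` marking an erased bit; `peel_erasures` is a hypothetical name. With bits 4, 5, and 6 (0-based) erased, every check sees at least two erasures, so decoding gets stuck even though those three columns of H are linearly independent and the erasures could be resolved by solving the parity equations.

```python
H = [[(j >> r) & 1 for j in range(1, 8)] for r in range(3)]   # (7, 4) Hamming code

def peel_erasures(H, y):
    """Iterative erasure decoding on the Tanner graph of H.
    y: list of 0, 1, or None (erasure). A check with exactly one erased neighbour
    sends it the value that satisfies the check; a check with two or more erased
    neighbours sends only erasures, so it cannot help."""
    y = list(y)
    progress = True
    while progress and None in y:
        progress = False
        for row in H:
            nbrs = [j for j, h in enumerate(row) if h]
            erased = [j for j in nbrs if y[j] is None]
            if len(erased) == 1:
                y[erased[0]] = sum(y[j] for j in nbrs if y[j] is not None) % 2
                progress = True
    return y

print(peel_erasures(H, [None, None, 0, 0, 0, 0, 0]))   # -> [0, 0, 0, 0, 0, 0, 0]
print(peel_erasures(H, [0, 0, 0, 0, None, None, None]))  # stuck: erasures remain
```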

How Does the Standard Message Passing Algorithm Work? (cont’d)

[Figure: Tanner graph with bit nodes and check nodes]

- Small values of $|v_j|$ can be thought of as erasures; hence two or more edges with small $|v_j|$ saturate the check.

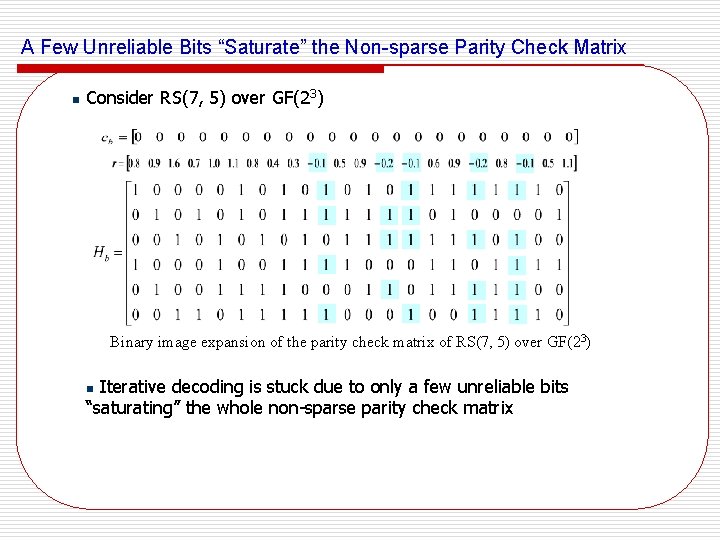
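The saturation effect can be checked numerically with the sum-product (tanh rule) check-node update, $m_e = 2\,\operatorname{atanh}\big(\prod_{j \ne e} \tanh(v_j/2)\big)$. In this Python sketch the LLR values are arbitrary illustrations: with one weak input, the weak edge still receives a strong message; with two weak inputs, every outgoing message is close to zero, exactly as if the check were erased.

```python
import math

def check_to_bit(v):
    """Sum-product (tanh rule) check-node update: the message on edge e is
    2 * atanh(prod_{j != e} tanh(v_j / 2)), computed from all other incoming LLRs."""
    out = []
    for e in range(len(v)):
        p = 1.0
        for j, vj in enumerate(v):
            if j != e:
                p *= math.tanh(vj / 2)
        p = max(min(p, 1 - 1e-12), -1 + 1e-12)   # keep atanh finite
        out.append(2 * math.atanh(p))
    return out

one_weak = check_to_bit([8.0, 8.0, 8.0, 0.1])
two_weak = check_to_bit([8.0, 8.0, 0.1, 0.1])
print([round(m, 2) for m in one_weak])   # weak edge gets a strong message; others stay near 0.1
print([round(m, 2) for m in two_weak])   # every outgoing message is close to 0
```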

A Few Unreliable Bits “Saturate” the Non-sparse Parity Check Matrix

- Consider RS(7, 5) over $\mathrm{GF}(2^3)$.

[Figure: binary image expansion of the parity check matrix of RS(7, 5) over $\mathrm{GF}(2^3)$]

- Iterative decoding gets stuck because only a few unreliable bits “saturate” the whole non-sparse parity check matrix.

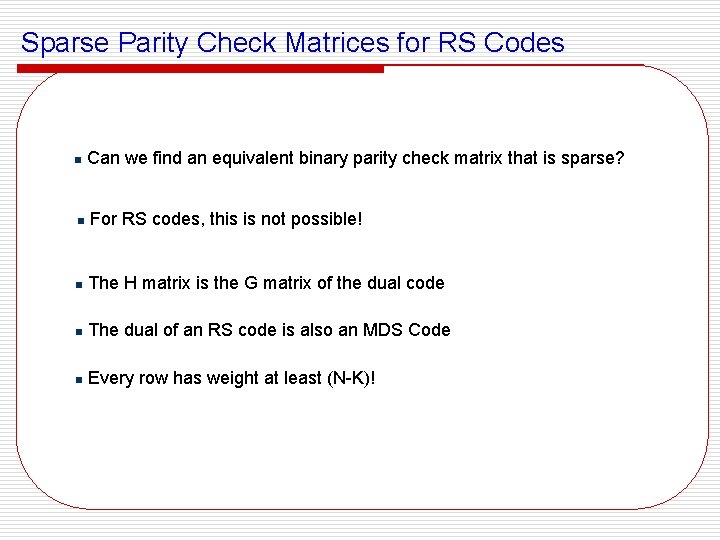

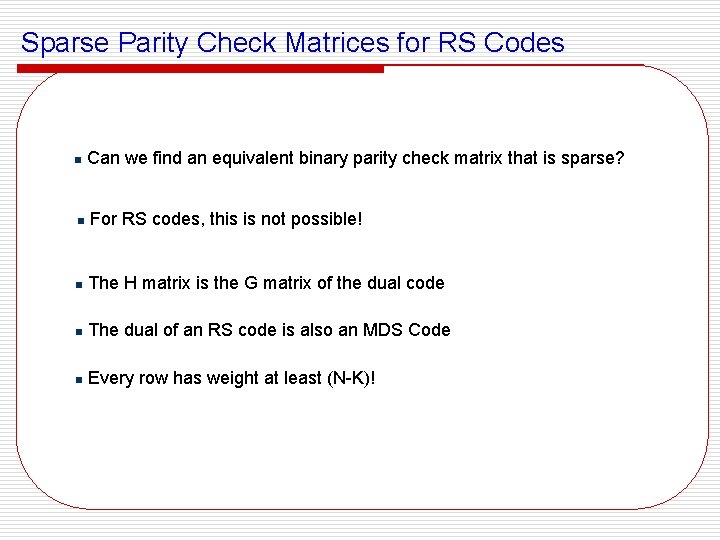

Sparse Parity Check Matrices for RS Codes

- Can we find an equivalent binary parity check matrix that is sparse?
- For RS codes, this is not possible:
  - The H matrix is the G matrix of the dual code.
  - The dual of an RS code is also an MDS code.
  - Every row has weight at least (N-K).

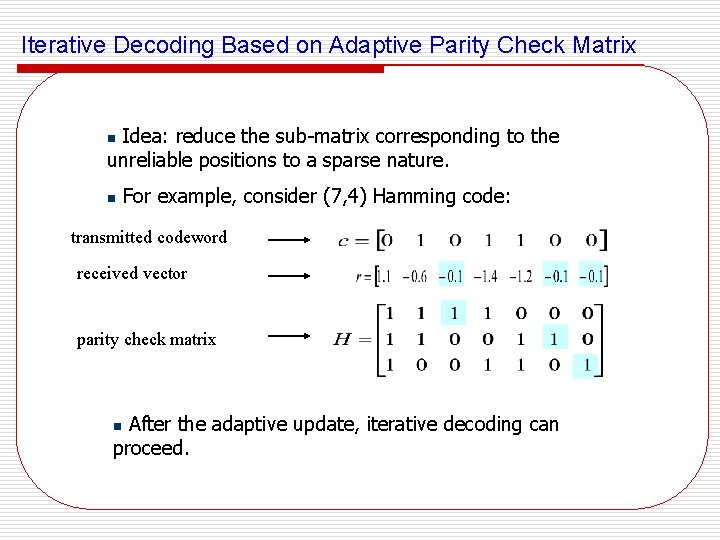

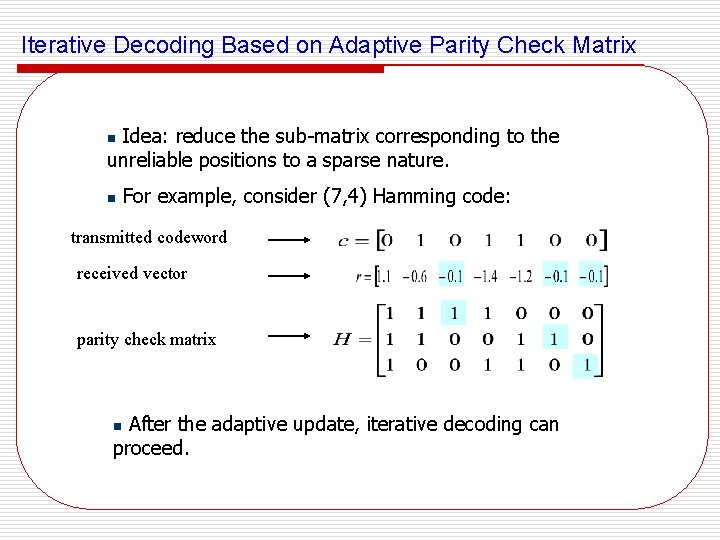

Iterative Decoding Based on Adaptive Parity Check Matrix

- Idea: reduce the sub-matrix corresponding to the unreliable positions to a sparse form.
- For example, consider the (7, 4) Hamming code: [Figure: transmitted codeword, received vector, and parity check matrix]
- After the adaptive update, iterative decoding can proceed.

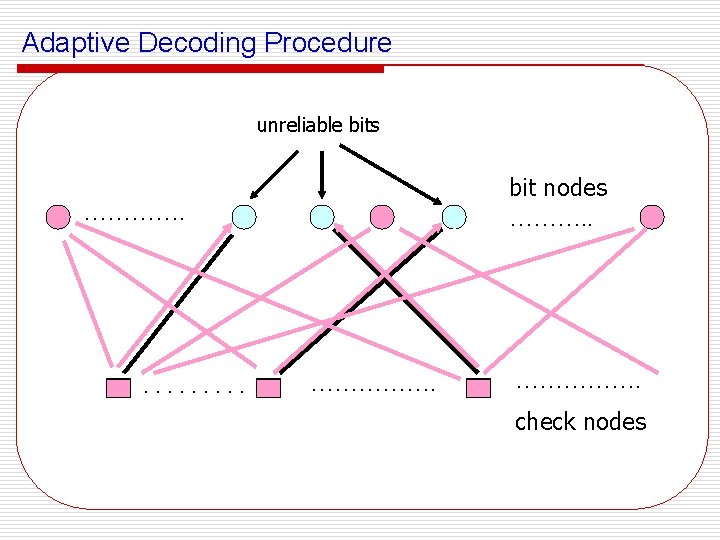

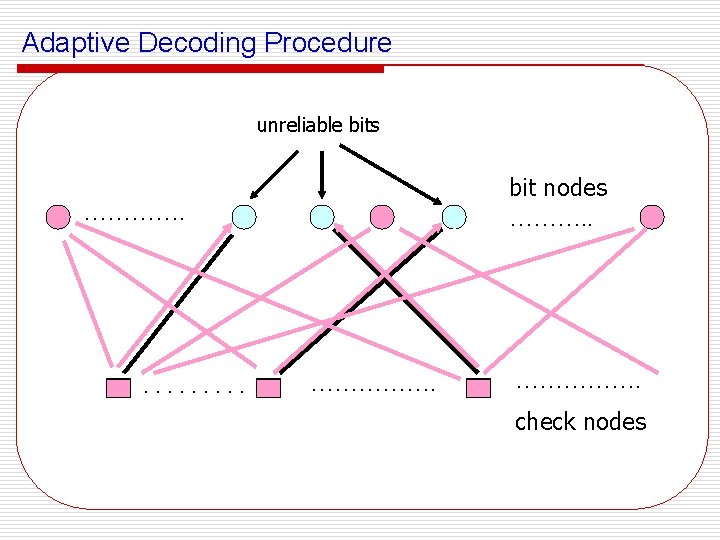

Adaptive Decoding Procedure

[Figure: Tanner graph with bit nodes (unreliable bits highlighted) and check nodes]

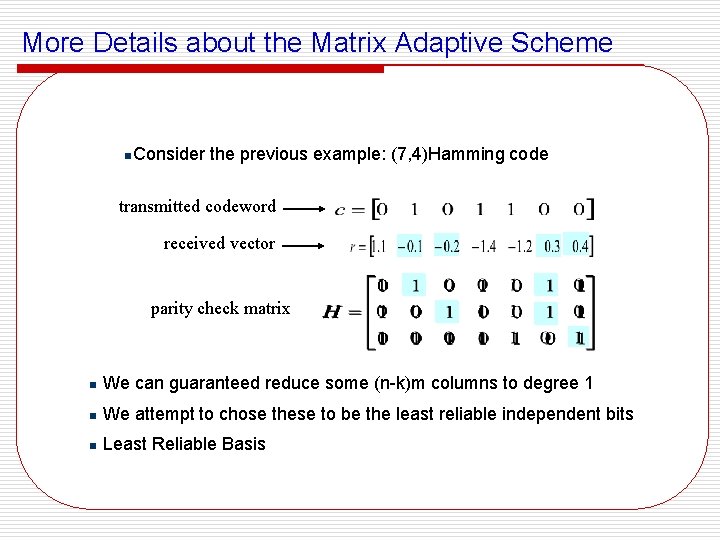

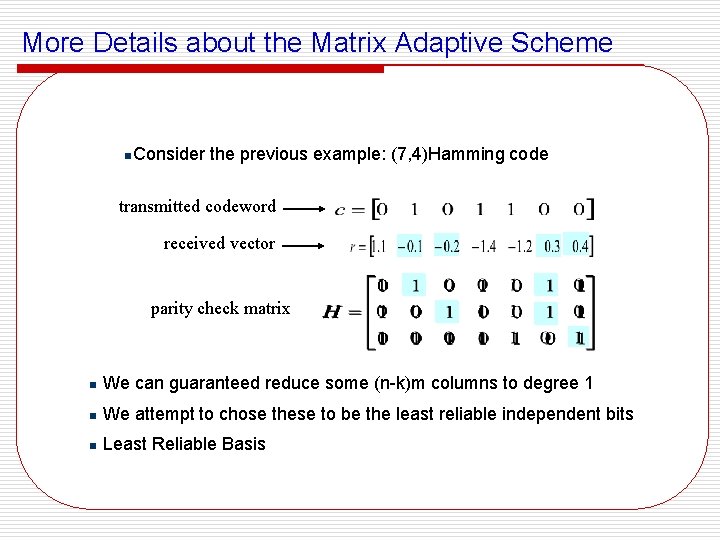

More Details about the Matrix Adaptive Scheme

- Consider the previous example, the (7, 4) Hamming code: [Figure: transmitted codeword, received vector, and parity check matrix]
- We can always reduce some (n-k)m columns to degree 1.
- We attempt to choose these to be the least reliable independent bits: the Least Reliable Basis (LRB).

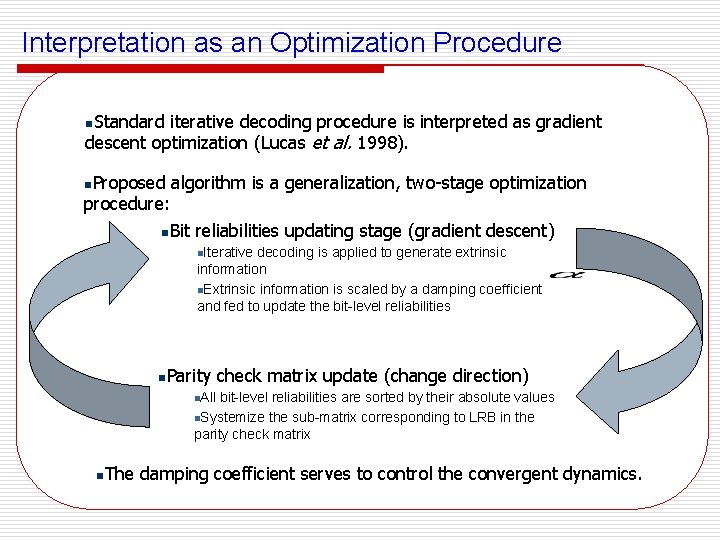

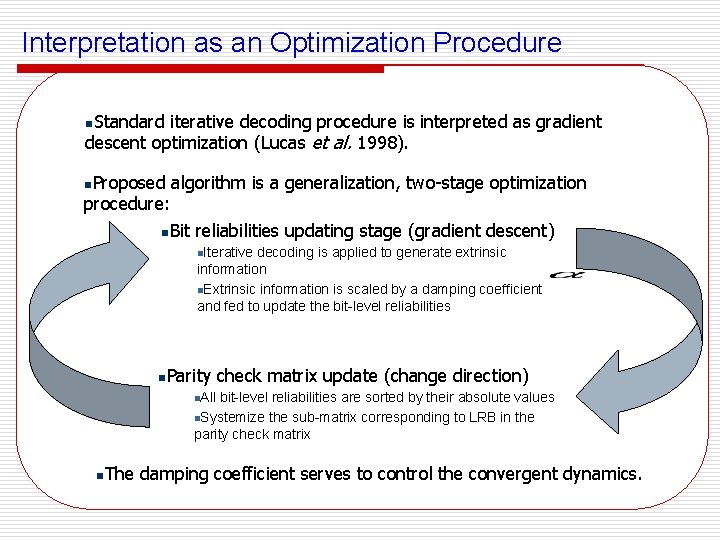
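The matrix adaptation step can be sketched as Gaussian elimination over GF(2), visiting columns in order of increasing |LLR|. This Python sketch assumes the (7, 4) Hamming code as a toy example; `adapt_parity_check` is a hypothetical name. A column that depends on already-reduced columns is skipped, so the reduced set is the least reliable *independent* bits (the LRB). Because only row operations are used, the null space of H, i.e., the code, is unchanged.

```python
H = [[(j >> r) & 1 for j in range(1, 8)] for r in range(3)]   # (7, 4) Hamming code

def adapt_parity_check(H, llr):
    """Row-reduce H over GF(2) so the LRB columns become degree-1 (unit) columns."""
    h = [row[:] for row in H]
    n_rows = len(h)
    order = sorted(range(len(llr)), key=lambda j: abs(llr[j]))  # least reliable first
    lrb, r = [], 0
    for col in order:
        if r == n_rows:
            break
        piv = next((i for i in range(r, n_rows) if h[i][col]), None)
        if piv is None:
            continue  # column depends on columns already reduced: try the next bit
        h[r], h[piv] = h[piv], h[r]
        for i in range(n_rows):
            if i != r and h[i][col]:
                h[i] = [a ^ b for a, b in zip(h[i], h[r])]
        lrb.append(col)
        r += 1
    return h, lrb

llr = [2.0, 1.8, 2.1, 1.9, -0.1, 0.2, -0.3]   # bits 4, 5, 6 are the least reliable
h, lrb = adapt_parity_check(H, llr)
print(lrb)   # -> [4, 5, 6]
for row in h:
    print(row)
```

After adaptation each check contains exactly one LRB bit, so an unreliable bit no longer shares a check with other unreliable bits.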

Interpretation as an Optimization Procedure

- The standard iterative decoding procedure can be interpreted as a gradient descent optimization (Lucas et al. 1998).
- The proposed algorithm is a generalization: a two-stage optimization procedure.
  - Bit reliability updating stage (gradient descent):
    - Iterative decoding is applied to generate extrinsic information.
    - The extrinsic information is scaled by a damping coefficient and fed back to update the bit-level reliabilities.
  - Parity check matrix updating stage (change of direction):
    - All bit-level reliabilities are sorted by their absolute values.
    - The sub-matrix corresponding to the LRB in the parity check matrix is systemized.
- The damping coefficient serves to control the convergence dynamics.

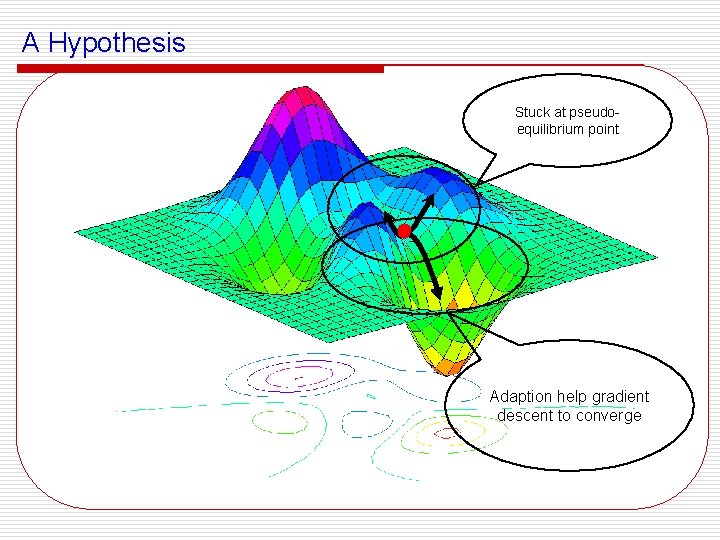

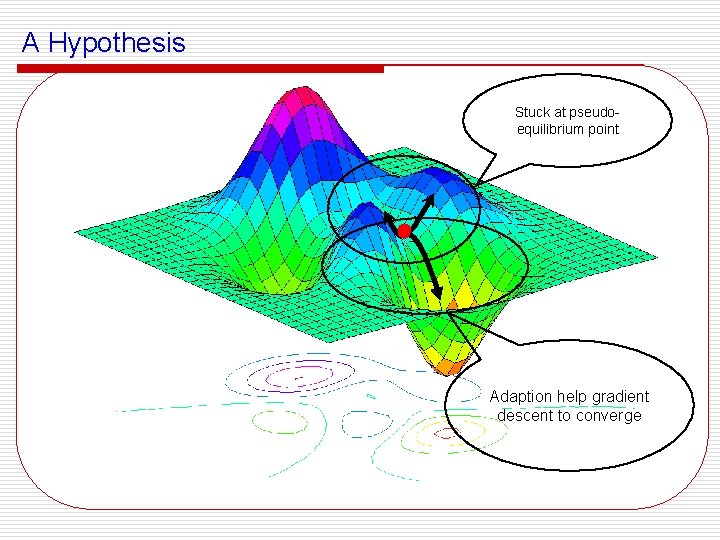
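Putting the two stages together, a minimal Python sketch of the loop might look as follows. It assumes one round of sum-product on the adapted matrix per outer iteration, a damping coefficient `alpha = 0.2`, the update $L \leftarrow L + \alpha L_{\text{ext}}$, and a stopping rule that halts once the hard decision satisfies every check; these settings, the (7, 4) Hamming toy code, and the function names are illustrative assumptions, not the authors' exact configuration.

```python
import math

# (7, 4) Hamming code as a toy stand-in for the binary image of an RS parity check matrix
H = [[(j >> r) & 1 for j in range(1, 8)] for r in range(3)]

def adapt(H, L):
    """Systemize the columns of the least reliable independent bits (GF(2) row reduction)."""
    h = [row[:] for row in H]
    r = 0
    for col in sorted(range(len(L)), key=lambda j: abs(L[j])):
        if r == len(h):
            break
        piv = next((i for i in range(r, len(h)) if h[i][col]), None)
        if piv is None:
            continue
        h[r], h[piv] = h[piv], h[r]
        for i in range(len(h)):
            if i != r and h[i][col]:
                h[i] = [a ^ b for a, b in zip(h[i], h[r])]
        r += 1
    return h

def extrinsic(h, L):
    """One sum-product round: each check sends a tanh-rule message to each of its bits,
    computed from the other bits' current LLRs; a bit's extrinsic LLR is the sum."""
    ext = [0.0] * len(L)
    for row in h:
        nbrs = [j for j, v in enumerate(row) if v]
        for j in nbrs:
            p = 1.0
            for k in nbrs:
                if k != j:
                    p *= math.tanh(L[k] / 2)
            p = max(min(p, 1 - 1e-12), -1 + 1e-12)   # keep atanh finite
            ext[j] += 2 * math.atanh(p)
    return ext

def hard_decision(L):
    return [0 if v >= 0 else 1 for v in L]

def satisfies(H, c):
    return all(sum(a * b for a, b in zip(row, c)) % 2 == 0 for row in H)

def adp_decode(H, llr, alpha=0.2, max_iter=20):
    L = list(llr)
    for _ in range(max_iter):
        c = hard_decision(L)
        if satisfies(H, c):
            return c, L
        h = adapt(H, L)                                          # matrix updating stage
        L = [v + alpha * e for v, e in zip(L, extrinsic(h, L))]  # damped reliability update
    return hard_decision(L), L

c, L = adp_decode(H, [2.0, 1.8, 2.1, 1.9, -0.4, 0.5, 0.6])
print(c)   # -> [0, 0, 0, 0, 0, 0, 0]
```

A small `alpha` moves the reliabilities slowly, so the LRB (and hence the adapted matrix) is recomputed before any single update can push the decoder far in one direction.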

A Hypothesis

[Figure: iterative decoding gets stuck at a pseudo-equilibrium point; adaptation helps gradient descent to converge]

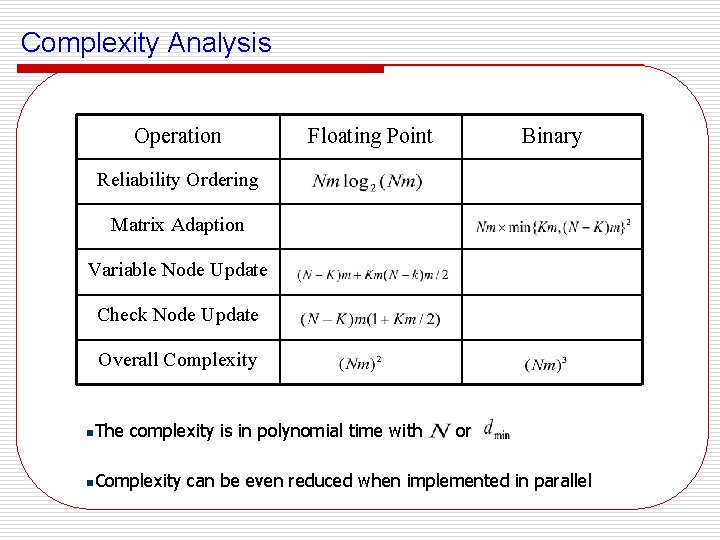

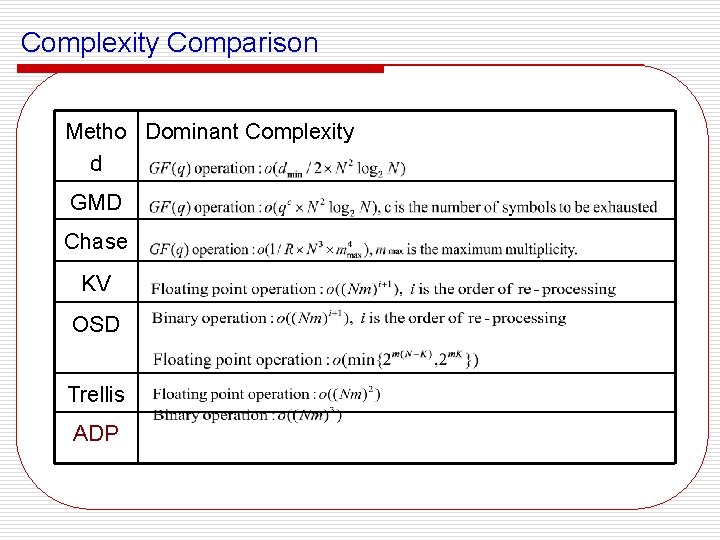

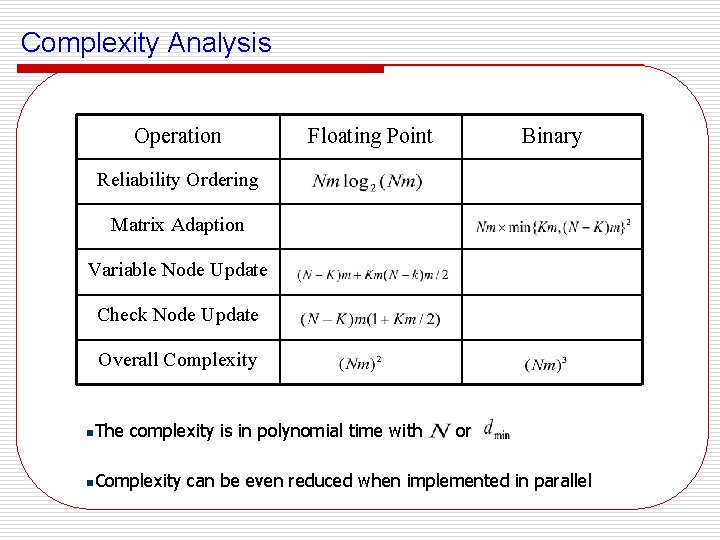

Complexity Analysis

[Table: floating-point and binary operation counts for reliability ordering, matrix adaptation, variable node update, check node update, and the overall complexity]

- The overall complexity is polynomial in n.
- The complexity can be reduced even further when implemented in parallel.

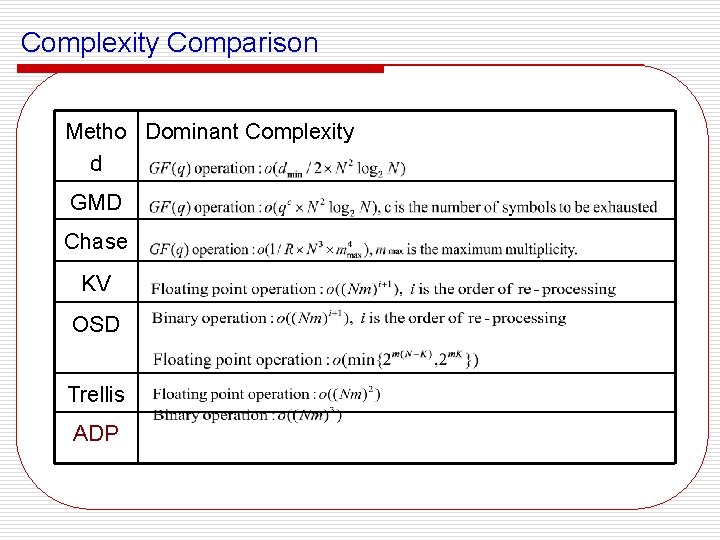

Complexity Comparison

[Table: dominant complexity of GMD, Chase, KV, OSD, Trellis, and ADP decoding]

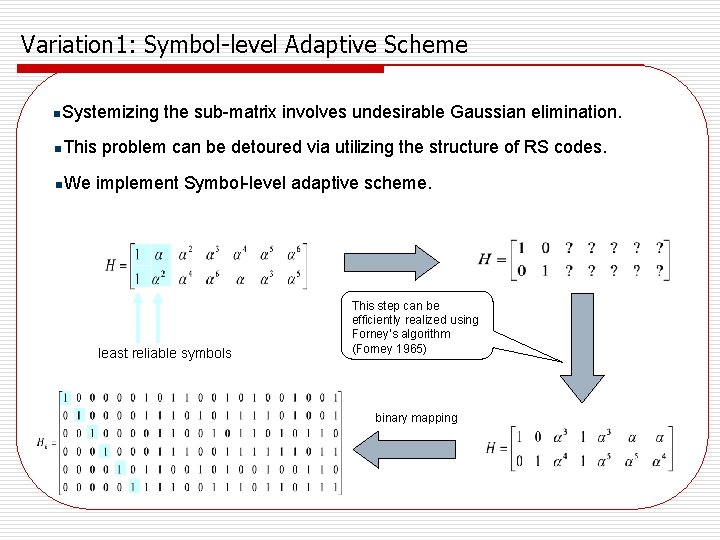

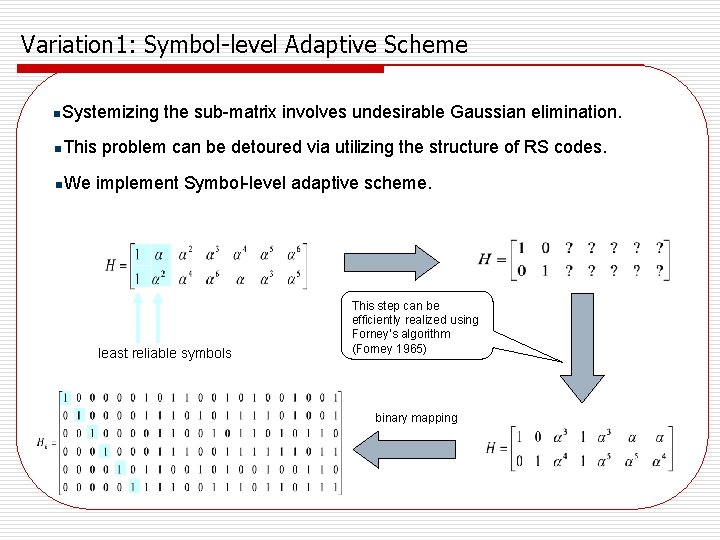

Variation 1: Symbol-level Adaptive Scheme

- Systemizing the sub-matrix involves an undesirable Gaussian elimination.
- This problem can be circumvented by utilizing the structure of RS codes.
- We implement a symbol-level adaptive scheme: [Figure: the sub-matrix of the least reliable symbols is systemized at the symbol level, followed by binary mapping]
- This step can be efficiently realized using Forney’s algorithm (Forney 1965).

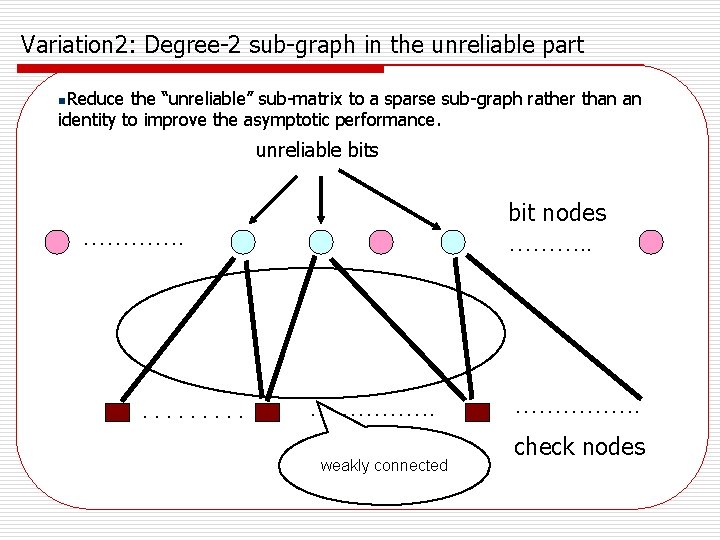

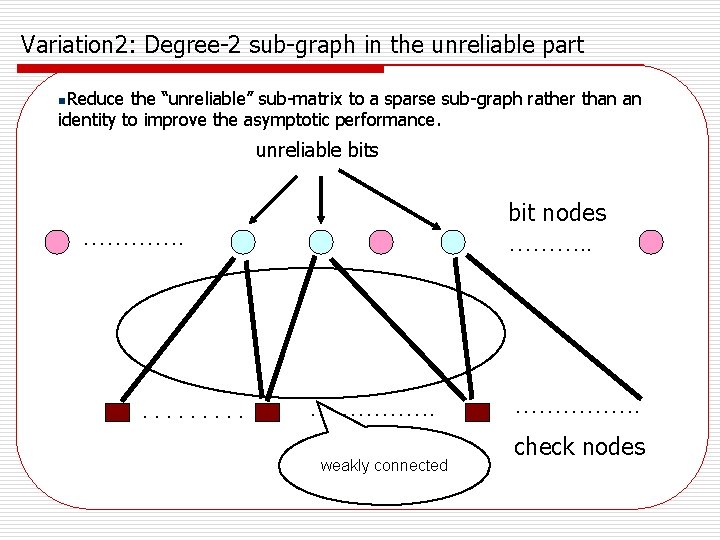
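To show what the symbol-level adapted matrix looks like, the following Python sketch systemizes the columns of the n − k least reliable symbols of the RS(7, 5) parity check matrix and then applies the binary mapping. The slides realize this step with Forney's algorithm; the sketch instead uses plain Gaussian elimination over GF(8), purely for illustration. The primitive polynomial $x^3 + x + 1$, the symbol reliabilities, and the function names are assumptions. Because multiplication by 1 maps to the 3 × 3 identity and by 0 to the zero matrix, all 3(n − k) = 6 bits of the two least reliable symbols end up with degree-1 columns.

```python
PRIM_POLY, M = 0b1011, 3          # assumed: GF(8) from x^3 + x + 1
N = (1 << M) - 1
EXP, LOG = [0] * (2 * N), [0] * (N + 1)
x = 1
for i in range(N):
    EXP[i], LOG[x] = x, i
    x <<= 1
    if x >> M:
        x ^= PRIM_POLY
for i in range(N, 2 * N):
    EXP[i] = EXP[i - N]

def mul(a, b):
    return 0 if a == 0 or b == 0 else EXP[LOG[a] + LOG[b]]

def inv(a):
    return EXP[(N - LOG[a]) % N]

def symbol_level_adapt(H, sym_rel):
    """Row-reduce H over GF(8) so the columns of the n-k least reliable symbols form
    an identity. Any n-k columns of an RS parity check matrix are independent
    (MDS property), so a pivot always exists."""
    h = [row[:] for row in H]
    chosen = sorted(range(len(sym_rel)), key=lambda j: sym_rel[j])[:len(h)]
    for r, col in enumerate(chosen):
        piv = next(i for i in range(r, len(h)) if h[i][col])
        h[r], h[piv] = h[piv], h[r]
        s = inv(h[r][col])
        h[r] = [mul(s, v) for v in h[r]]             # make the pivot equal to 1
        for i in range(len(h)):
            if i != r and h[i][col]:
                f = h[i][col]
                h[i] = [a ^ mul(f, b) for a, b in zip(h[i], h[r])]
    return h, chosen

def binary_image(H):
    """Binary mapping: each entry h becomes the m x m matrix of a -> h*a."""
    Hb = []
    for row in H:
        blocks = [[[(mul(h, EXP[t]) >> b) & 1 for t in range(M)] for b in range(M)] for h in row]
        for b in range(M):
            Hb.append([bit for blk in blocks for bit in blk[b]])
    return Hb

H = [[EXP[((i + 1) * j) % N] for j in range(N)] for i in range(2)]   # RS(7, 5)
sym_rel = [0.9, 0.2, 0.8, 0.95, 0.1, 0.7, 0.85]   # hypothetical symbol reliabilities
Hs, chosen = symbol_level_adapt(H, sym_rel)
Hb = binary_image(Hs)
print(chosen)   # -> [4, 1]
for r in Hb:
    print("".join(map(str, r)))
```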

Variation 2: Degree-2 sub-graph in the unreliable part

- Reduce the “unreliable” sub-matrix to a sparse sub-graph rather than an identity, to improve the asymptotic performance.

[Figure: unreliable bit nodes weakly connected to check nodes through a degree-2 sub-graph]

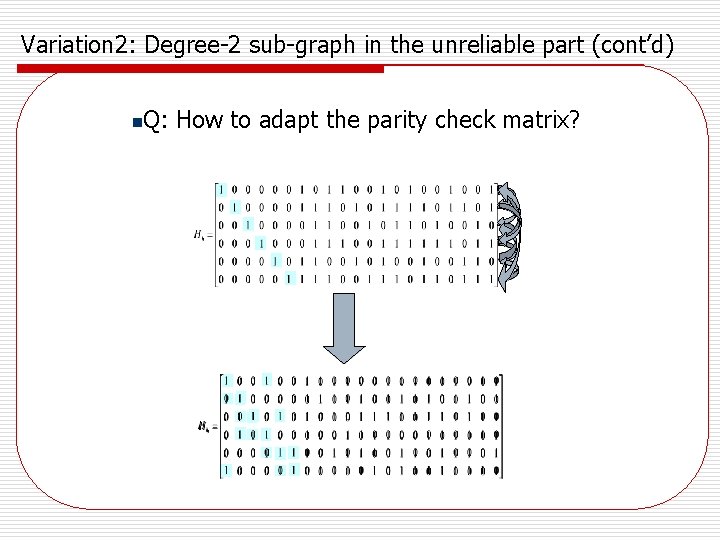

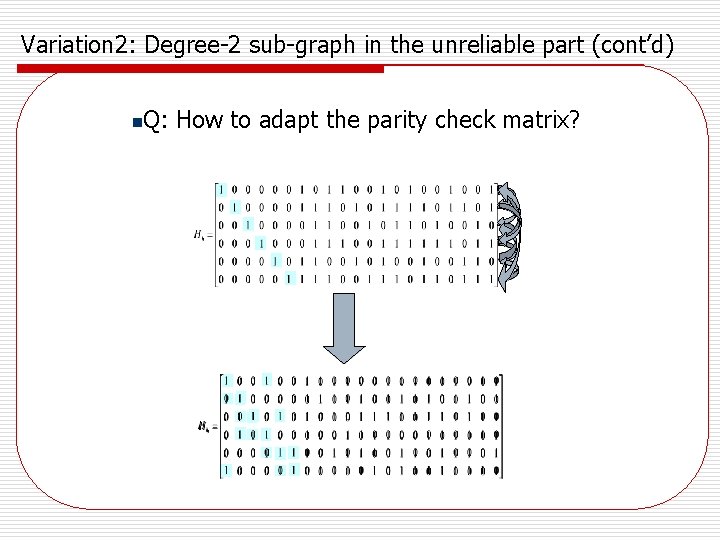

Variation 2: Degree-2 sub-graph in the unreliable part (cont’d)

- Q: How should the parity check matrix be adapted?

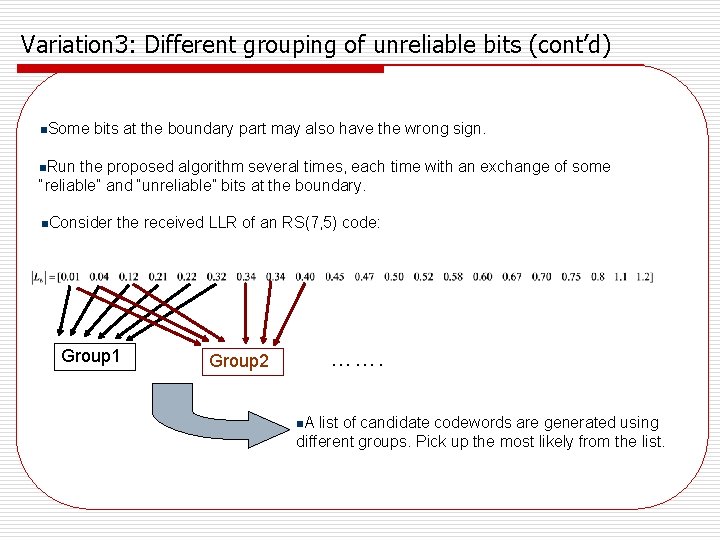

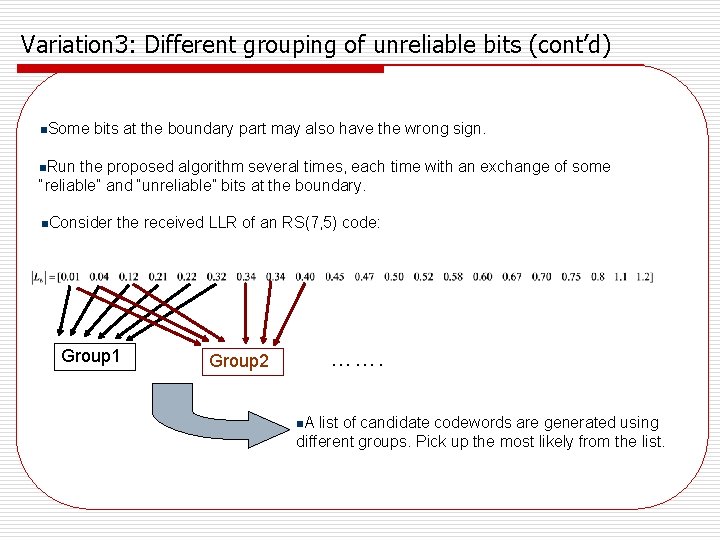

Variation 3: Different grouping of unreliable bits (cont’d)

- Some bits at the boundary may also have the wrong sign.
- Run the proposed algorithm several times, each time exchanging some “reliable” and “unreliable” bits at the boundary.
- Consider the received LLRs of an RS(7, 5) code: [Figure: received LLRs sorted by reliability and split into Group 1, Group 2, …]
- A list of candidate codewords is generated using the different groups; pick the most likely one from the list.

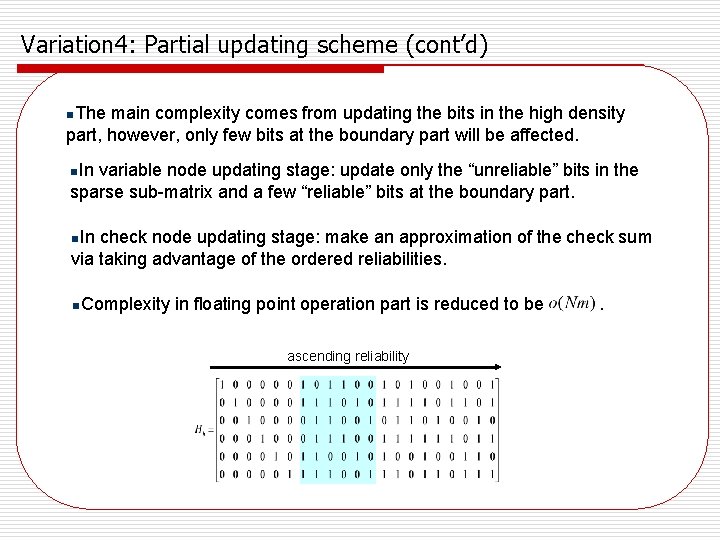

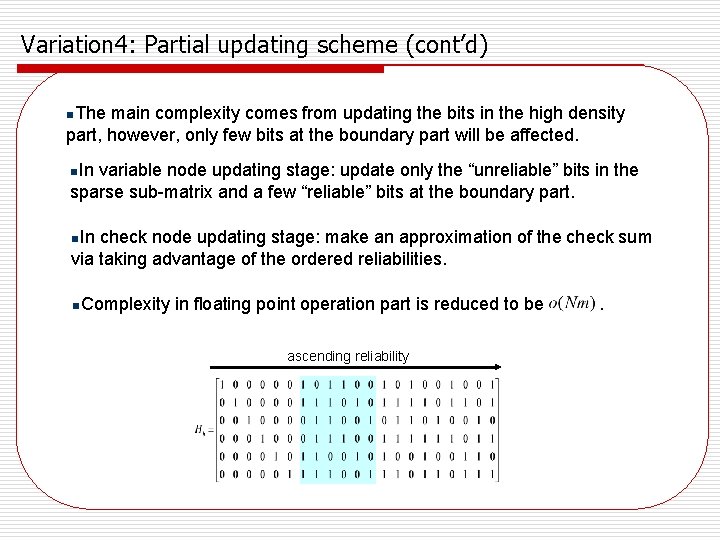
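The slide does not specify exactly which boundary bits are exchanged. This Python sketch assumes a simple rule in which group g swaps the g most reliable bits of the default unreliable set with the g least reliable bits of the reliable set, and then picks the candidate maximizing $\sum_j (1 - 2c_j) L_j$, the maximum-likelihood choice within the list for BPSK over AWGN. The 7-bit LLRs, the candidate list, and the function names are hypothetical toy values.

```python
def boundary_groups(llr, n_unrel, n_swap_max):
    """Hypothetical grouping rule: return the unreliable index set for each group."""
    order = sorted(range(len(llr)), key=lambda j: abs(llr[j]))   # ascending reliability
    groups = []
    for g in range(n_swap_max + 1):
        # drop the g most reliable bits of the default unreliable set and
        # add the g least reliable bits of the default reliable set
        unrel = order[:n_unrel - g] + order[n_unrel:n_unrel + g]
        groups.append(sorted(unrel))
    return groups

def most_likely(candidates, llr):
    """ML choice within the list: maximize the correlation sum_j (1 - 2 c_j) L_j."""
    return max(candidates, key=lambda c: sum((1 - 2 * b) * l for b, l in zip(c, llr)))

llr = [2.0, -0.3, 1.5, 0.4, -2.2, 0.1, 1.8]
print(boundary_groups(llr, 3, 1))   # -> [[1, 3, 5], [1, 2, 5]]
cands = [[0] * 7, [0, 1, 0, 0, 1, 0, 1], [1, 0, 0, 1, 1, 0, 0]]  # e.g. outputs of different runs
print(most_likely(cands, llr))      # -> [0, 1, 0, 0, 1, 0, 1]
```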

Variation 4: Partial updating scheme (cont’d)

- The main complexity comes from updating the bits in the high-density part; however, only a few bits at the boundary will be affected.
- In the variable node updating stage: update only the “unreliable” bits in the sparse sub-matrix and a few “reliable” bits at the boundary.
- In the check node updating stage: approximate the check sum by taking advantage of the ordered reliabilities.
- The complexity of the floating-point operations is reduced accordingly. [Figure: bits arranged in ascending reliability]

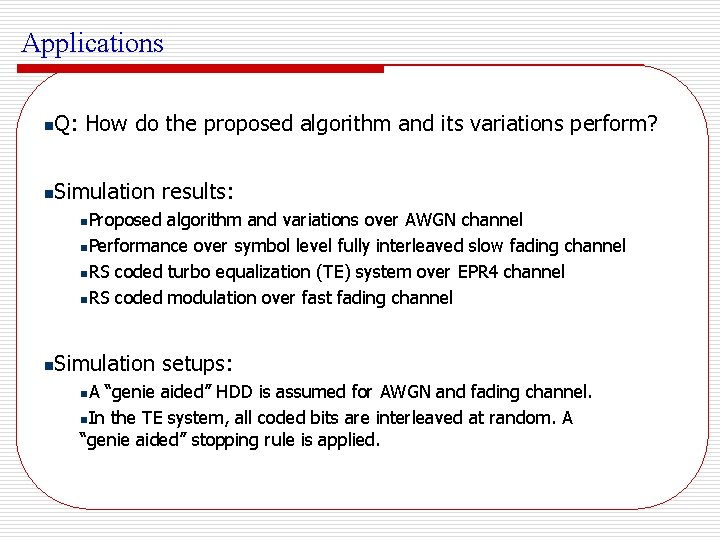
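The slide does not state which check-sum approximation is used. One common choice that benefits from ordered reliabilities, assumed here, is the min-sum approximation: the outgoing magnitude is the smallest |LLR| among the other edges, so only the two smallest magnitudes per check are needed, and these can be read off directly when bits are already sorted by reliability. `min_sum_check` is a hypothetical name.

```python
def min_sum_check(llrs_in):
    """Min-sum check-node update (assumed approximation): sign = product of the other
    signs, magnitude = smallest |LLR| among the other edges. Needs at least two edges."""
    mags = [abs(v) for v in llrs_in]
    i1 = min(range(len(mags)), key=mags.__getitem__)       # least reliable edge
    m1 = mags[i1]
    m2 = min(m for j, m in enumerate(mags) if j != i1)      # second smallest magnitude
    total_sign = 1
    for v in llrs_in:
        total_sign *= -1 if v < 0 else 1
    out = []
    for j, v in enumerate(llrs_in):
        s = total_sign * (-1 if v < 0 else 1)              # remove this edge's own sign
        out.append(s * (m2 if j == i1 else m1))
    return out

print(min_sum_check([8.0, -8.0, 8.0, 0.1]))   # -> [-0.1, 0.1, -0.1, -8.0]
```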

Applications

- Q: How do the proposed algorithm and its variations perform?
- Simulation results:
  - Proposed algorithm and variations over the AWGN channel
  - Performance over a symbol-level fully interleaved slow fading channel
  - RS coded turbo equalization (TE) system over the EPR4 channel
  - RS coded modulation over a fast fading channel
- Simulation setups:
  - A “genie-aided” HDD is assumed for the AWGN and fading channels.
  - In the TE system, all coded bits are interleaved at random. A “genie-aided” stopping rule is applied.

Additive White Gaussian Noise (AWGN) Channel

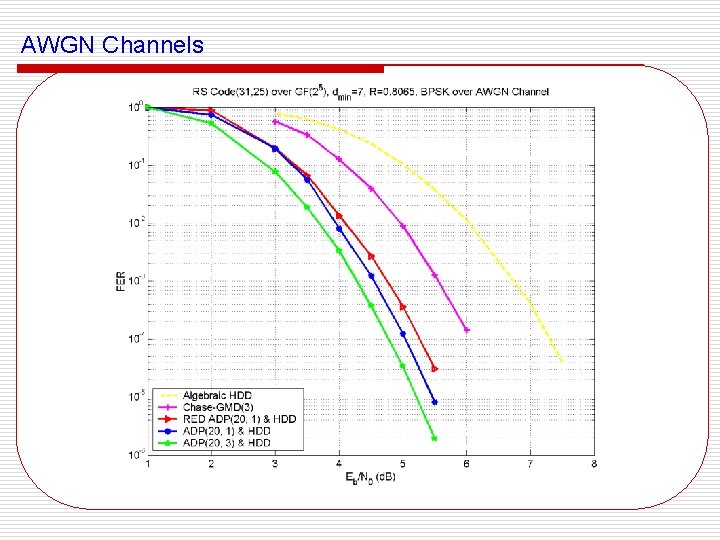

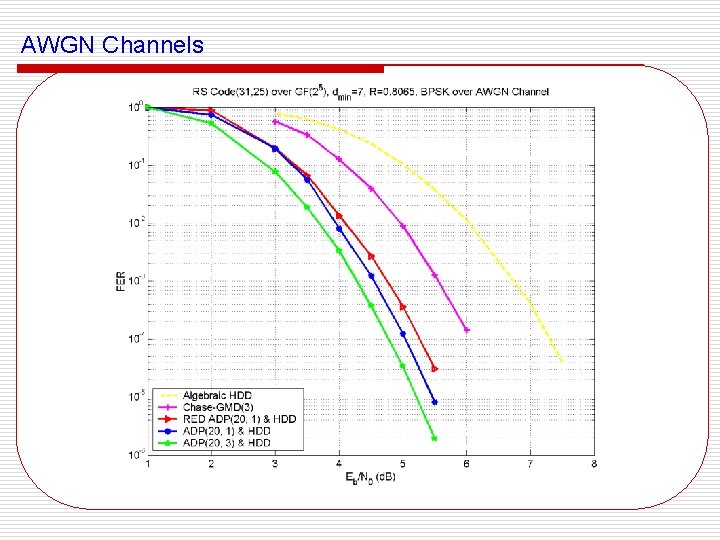

AWGN Channels

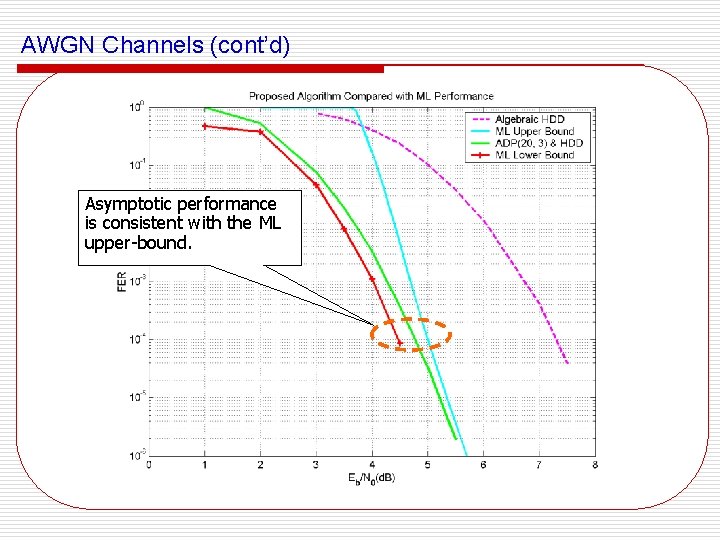

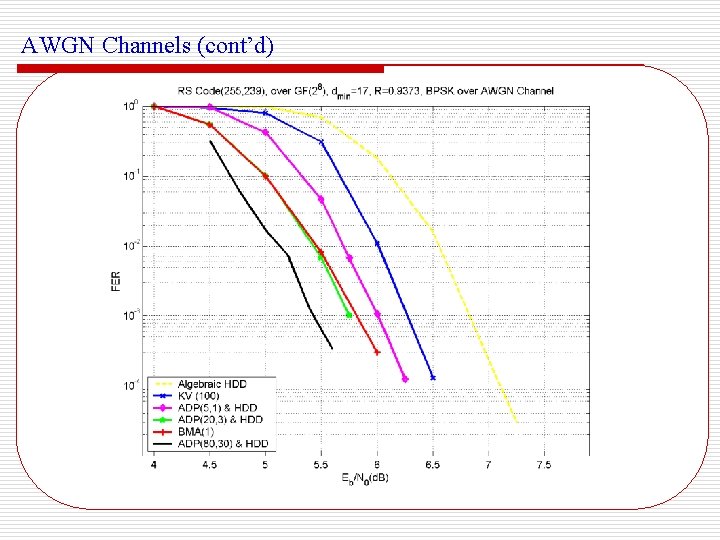

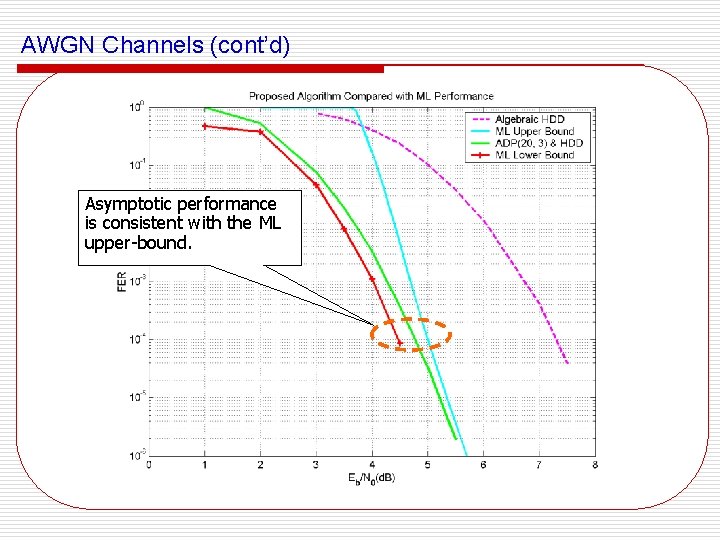

AWGN Channels (cont’d)

Asymptotic performance is consistent with the ML upper bound.

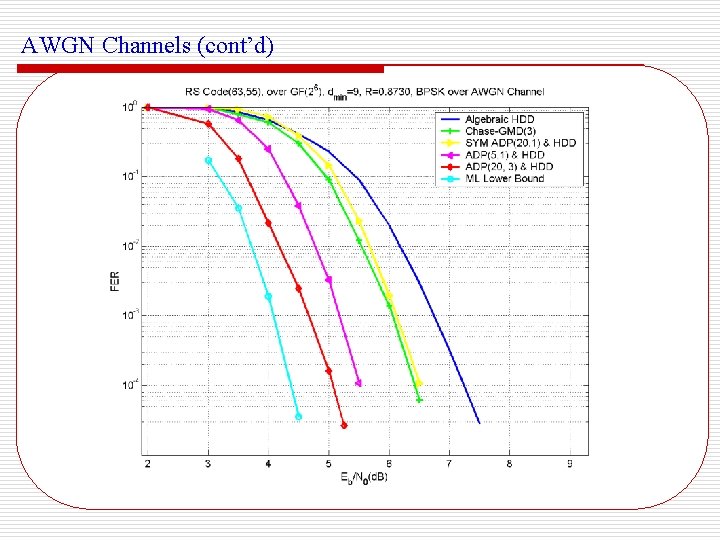

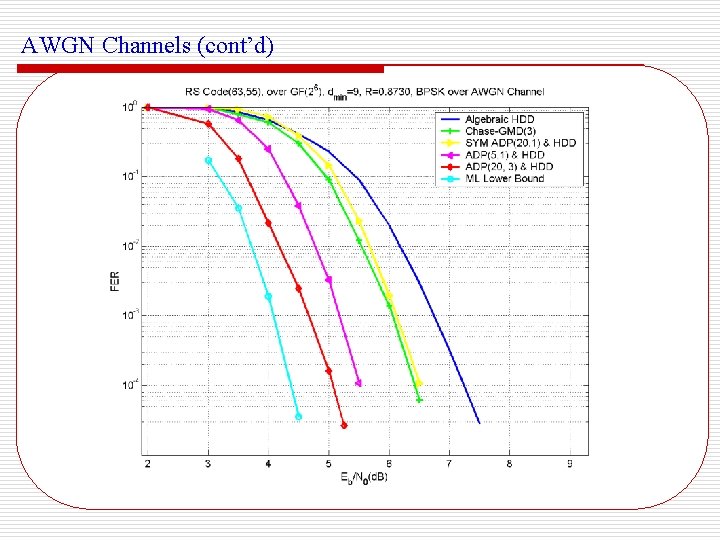

AWGN Channels (cont’d)

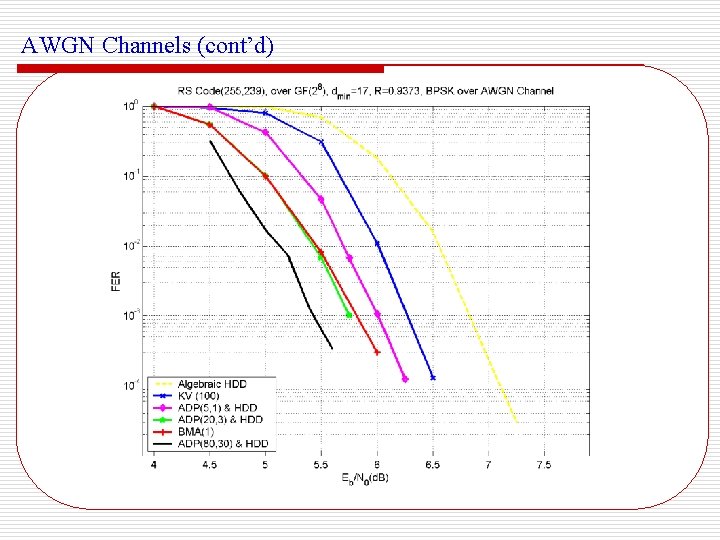

AWGN Channels (cont’d)

Remarks

- The proposed scheme performs near ML for medium-length codes.
- The symbol-level adaptive updating scheme provides a non-trivial gain.
- Partial updating incurs little penalty with a great reduction in complexity.
- For long codes, the proposed scheme is still far from ML decoding.
- Q: How does it work over other channels?

Interleaved Slow Fading Channel

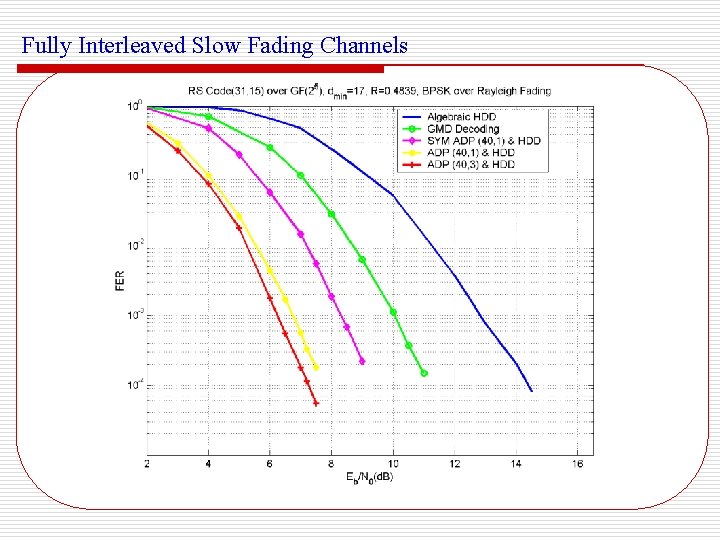

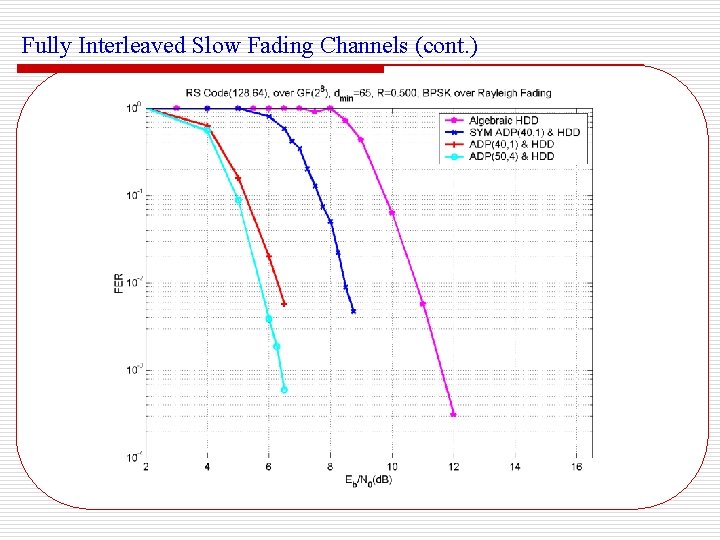

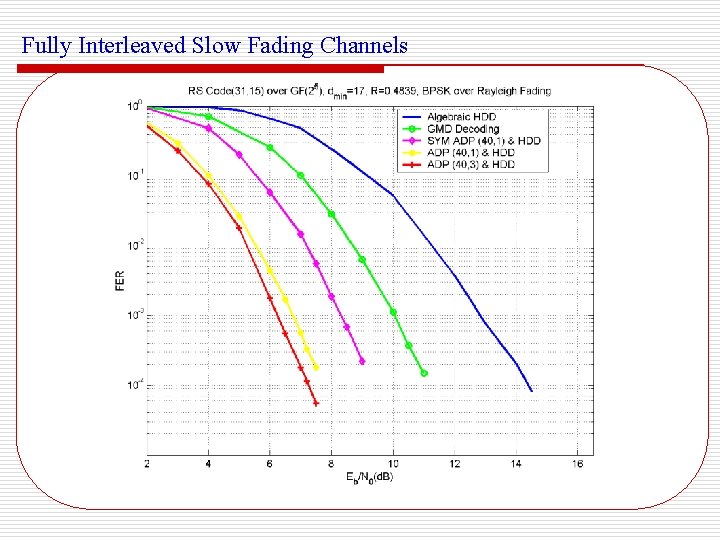

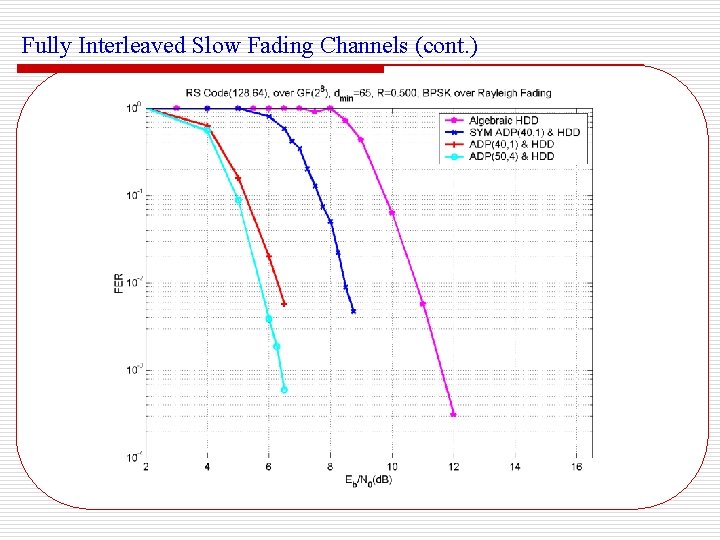

Fully Interleaved Slow Fading Channels

Fully Interleaved Slow Fading Channels (cont’d)

Turbo Equalization Systems

Embed the Proposed Algorithm in the Turbo Equalization System

[Figure: RS coded turbo equalization system: source, RS encoder, interleaver, PR encoder, AWGN channel, BCJR equalizer, de-interleaver, and RS decoder with hard decision output to the sink; the equalizer and the RS decoder exchange extrinsic and a priori information]

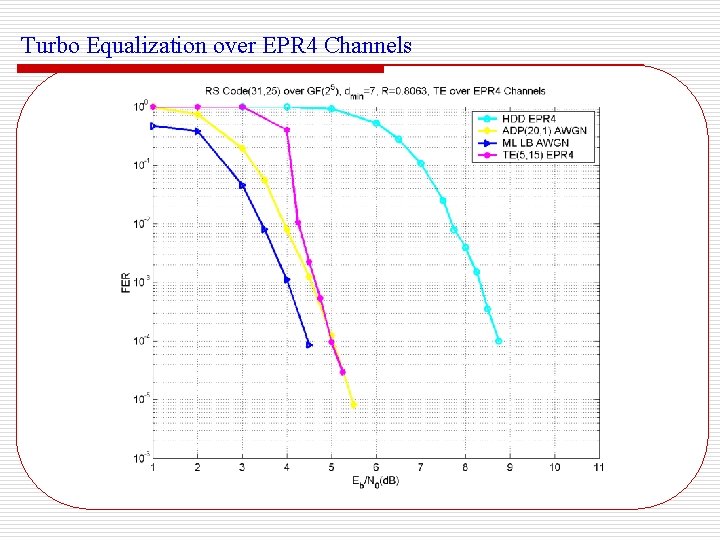

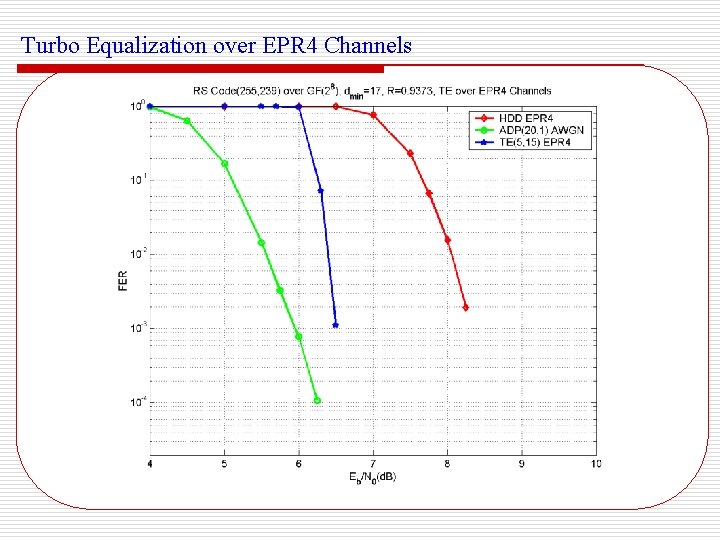

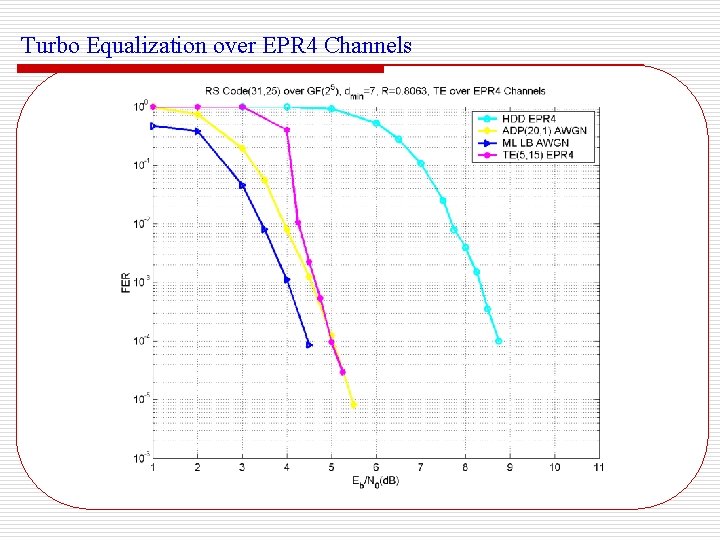

Turbo Equalization over EPR4 Channels

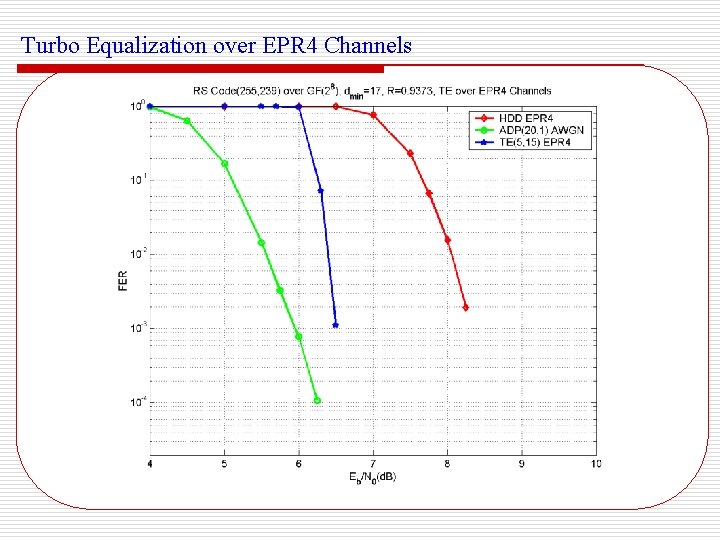

Turbo Equalization over EPR4 Channels (cont’d)

RS Coded Modulation

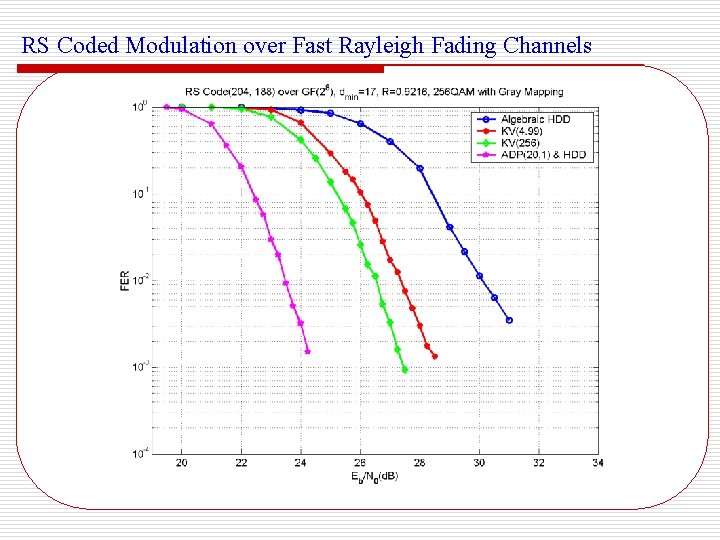

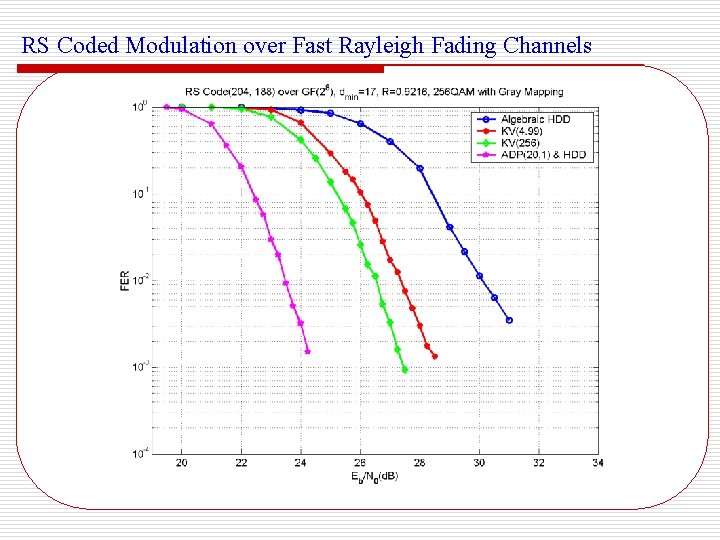

RS Coded Modulation over Fast Rayleigh Fading Channels

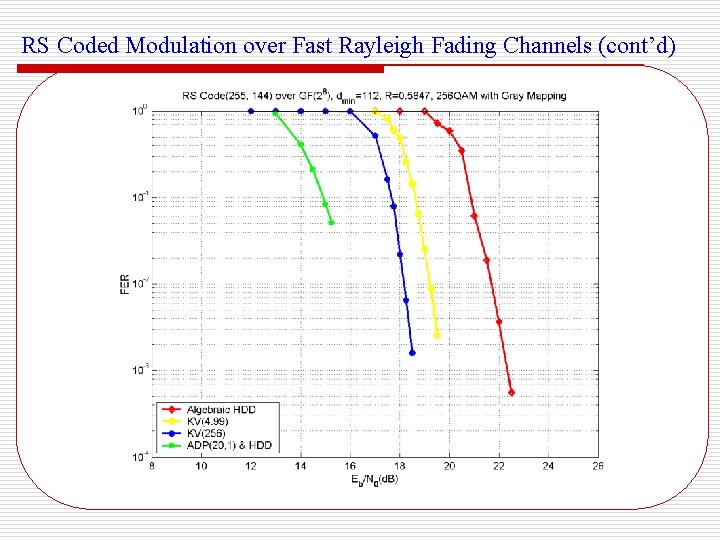

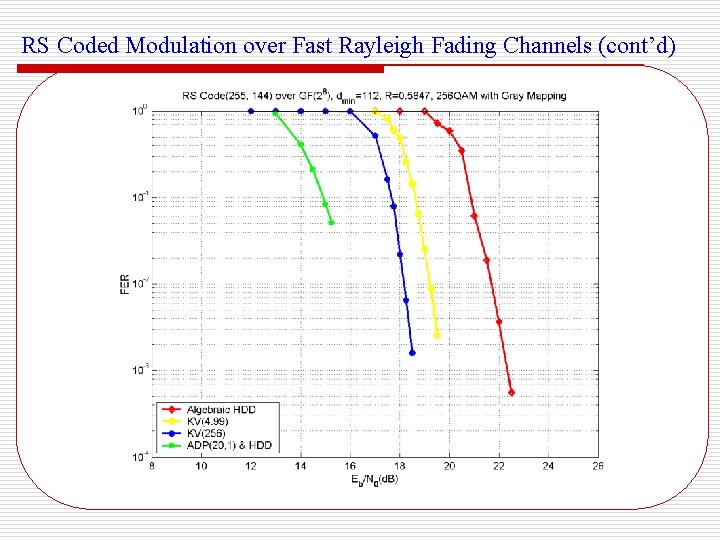

RS Coded Modulation over Fast Rayleigh Fading Channels (cont’d)

Remarks

- A more noticeable gain is observed over fading channels, especially for the symbol-level adaptive scheme.
- In the RS coded modulation scheme, utilizing bit-level soft information seems to provide more gain.
- The proposed TE scheme can combat ISI and performs almost identically to the AWGN case.
- The proposed algorithm has a potential “error floor” problem.
- However, simulating down to even lower FERs is infeasible.
- Asymptotic performance analysis is still under investigation.

Conclusion and Future Work

- Iterative decoding of RS codes based on adaptive parity check matrices works favorably for practical codes over various channels.
- The proposed algorithm and its variations provide a wide range of complexity-performance tradeoffs for different applications.
- Further work under investigation:
  - Asymptotic performance bound.
  - Understanding how this algorithm works from an information-theoretic perspective, e.g., the entropy of ordered statistics.
  - Improving the generic algorithm using more sophisticated optimization schemes, e.g., the conjugate gradient method.

Thank you!