Sentiment Analysis CMPT 733

- Slides: 30


Outline

- What is sentiment analysis?
- Overview of approach
- Feature Representation
  - Term Frequency – Inverse Document Frequency (TF-IDF)
  - Word2Vec
    - Skip-gram
- Model Training
  - Linear Regression
- Assignment 2

Computing Science/Apala Guha


What is sentiment analysis?

- Wikipedia: Aims to determine the attitude of a speaker or a writer with respect to some topic or the overall contextual polarity of a document.
- Examples:
  - "Full of zany characters and richly applied satire, and some great plot twists": is this a positive or negative review?
  - Public opinion on the stock market mined from Tweets
  - What do people think about a political candidate or issue?
  - Can we predict election outcomes or market performance from sentiment analysis?


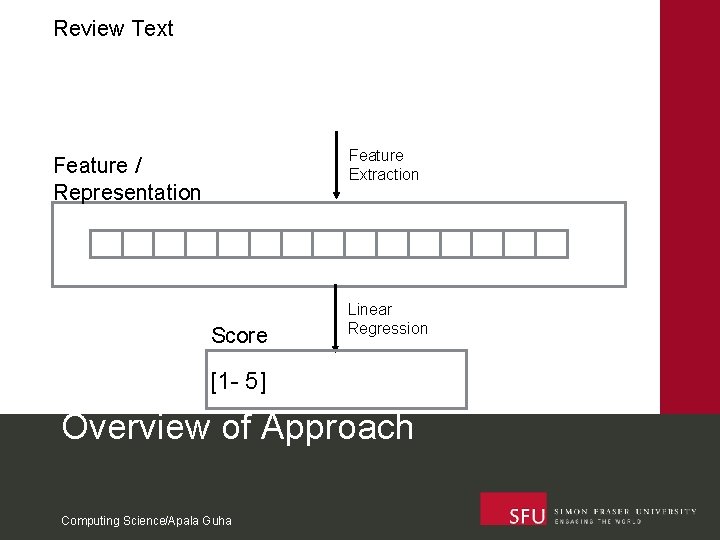

Overview of Approach

- Running example: sentiment analysis of Amazon reviews
- Amazon reviews consist of both a text and a rating
- We learn the relationship between the text content and the rating

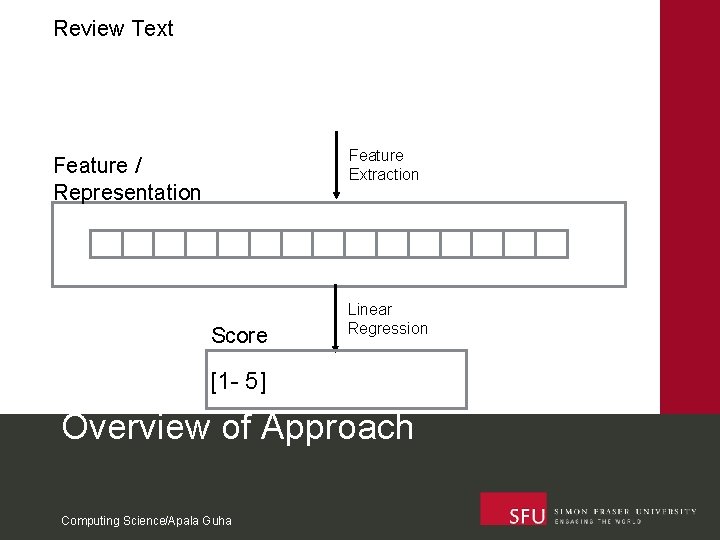

Overview of Approach

Review text ("I purchased one of these from Walmart …….") → Feature Extraction → Feature Representation → Linear Regression → Score [1-5]


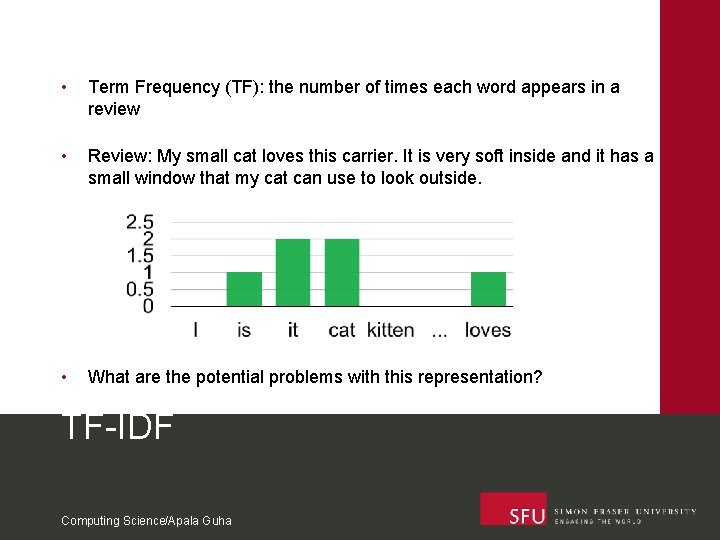

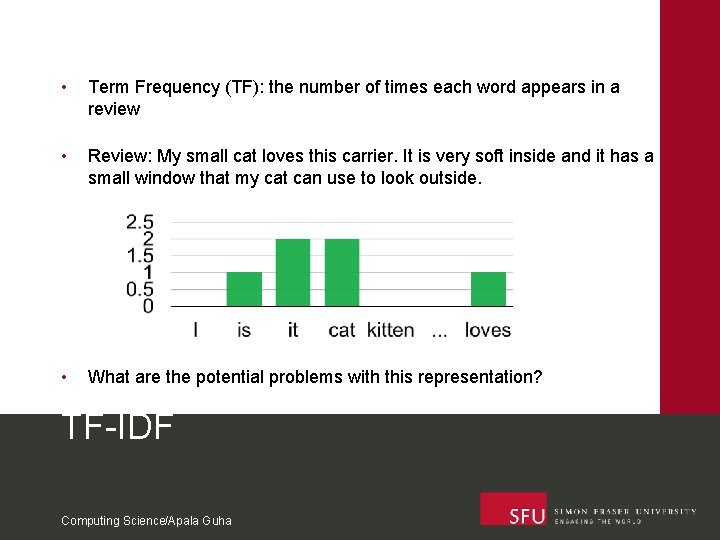

TF-IDF

- Term Frequency (TF): the number of times each word appears in a review
- Review: "My small cat loves this carrier. It is very soft inside and it has a small window that my cat can use to look outside."
- What are the potential problems with this representation?
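As a rough illustration of raw term frequency, the sketch below counts words in the example review. The tokenizer (lowercasing and keeping runs of letters and apostrophes) and the function name `term_frequencies` are assumptions made for this example, not part of the slides.

```python
import re
from collections import Counter

def term_frequencies(text):
    """Count how many times each lowercased word appears in a text."""
    # Assumed tokenizer: lowercase, keep runs of letters and apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens)

review = ("My small cat loves this carrier. It is very soft inside and it has "
          "a small window that my cat can use to look outside.")
counts = term_frequencies(review)

# Words that occur more than once in the review.
repeated = {word: n for word, n in counts.items() if n > 1}
print(repeated)
```

Note that uninformative words such as "it" and "my" are counted as often as "cat" and "small", which hints at one problem with raw counts.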

TF-IDF

- Raw term frequency will give too much weight to terms used in long reviews
  - We should give equal importance to each review
- Some words are ubiquitous but carry little meaning
  - These words will receive unnecessary importance
  - Common words usually occur 1-2 orders of magnitude more often than uncommon words
- We need to suppress less significant, ubiquitous words while enhancing more significant, rare words

TF-IDF

- tf(term, review) = termFreqInDoc(term) / totalTermsInReview(review)
  - How does this solve the problem of variable-length reviews?
- idf(term) = log((totalReviews + 1) / (termFreqInCorpus(term) + 1))
  - How does this solve the problem of ubiquitous versus rare words?
- tf-idf(term, review) = tf(term, review) * idf(term)
  - How does this overall reflect the importance of a particular term in a particular review?
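The three formulas can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: `termFreqInCorpus(term)` is interpreted here as the number of reviews that contain the term (document frequency), which is one possible reading of the slide; the tokenizer and the three sample reviews are made up for illustration.

```python
import math
import re

def tokenize(text):
    # Assumed tokenizer: lowercase, keep runs of letters and apostrophes.
    return re.findall(r"[a-z']+", text.lower())

def tf(term, tokens):
    """Occurrences of the term divided by the length of the review."""
    return tokens.count(term) / len(tokens)

def idf(term, corpus_tokens):
    """log((totalReviews + 1) / (reviewsContainingTerm + 1)).

    Assumption: termFreqInCorpus is read as the number of reviews
    that contain the term at least once.
    """
    containing = sum(1 for tokens in corpus_tokens if term in tokens)
    return math.log((len(corpus_tokens) + 1) / (containing + 1))

def tf_idf(term, tokens, corpus_tokens):
    return tf(term, tokens) * idf(term, corpus_tokens)

# Hypothetical mini-corpus, for illustration only.
reviews = [
    "My cat loves this carrier",
    "This carrier is small",
    "My dog loves this toy",
]
corpus = [tokenize(r) for r in reviews]
scores = {term: tf_idf(term, corpus[0], corpus) for term in set(corpus[0])}
print(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))
```

In this toy corpus "this" appears in every review, so its idf is log(1) = 0 and it drops out, while "cat" appears in only one review and receives the highest score in the first review.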

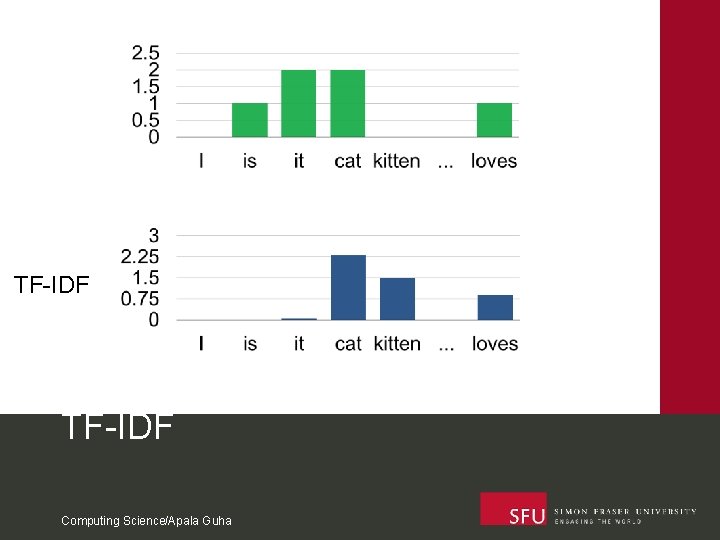

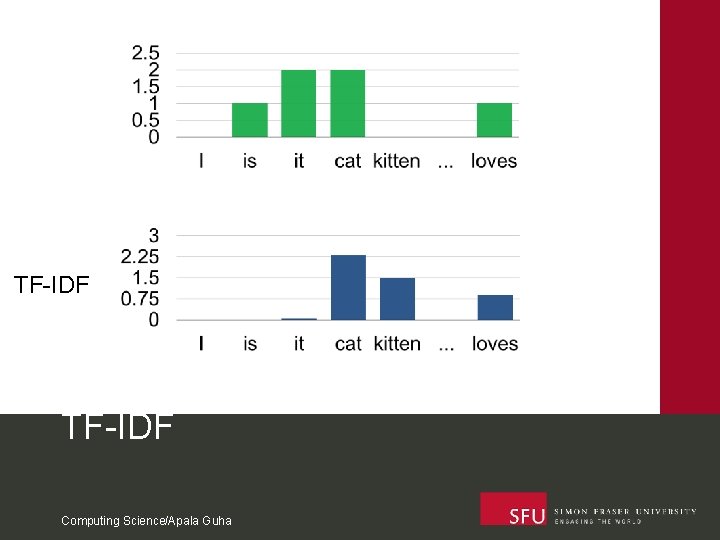

TF-IDF

TF-IDF

Can you spot any problems with the TF-IDF representation?

TF-IDF

- Pays no attention to word semantics
  - Words with similar meanings are considered separately
  - Words having different meanings in different contexts are considered to be the same
- It would be nice to incorporate some word semantics information into the feature representation


Word2Vec

- The semantics of a word are based on its context, i.e. nearby words.
- Example:
  - I love having cereal in the morning for breakfast.
  - My breakfast is usually jam with butter.
  - The best part of my day is morning’s fresh coffee with a hot breakfast.
- ‘cereal’, ‘jam’, ‘butter’, and ‘coffee’ are related.
- We need to represent each word such that similar words have similar representations.

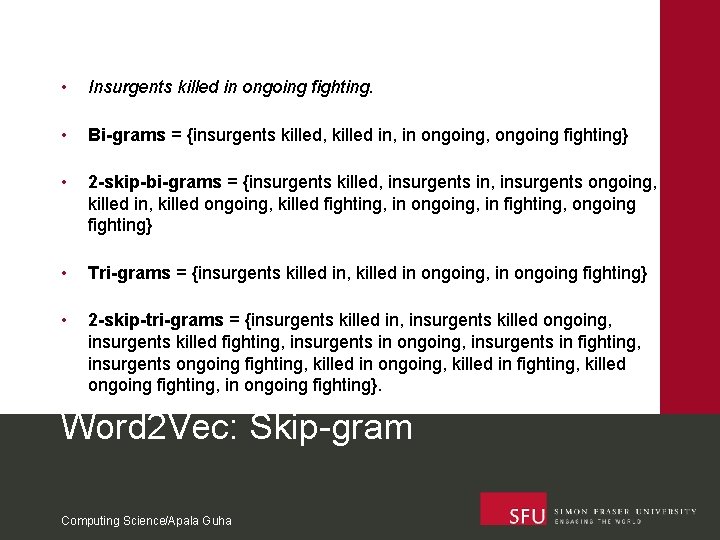

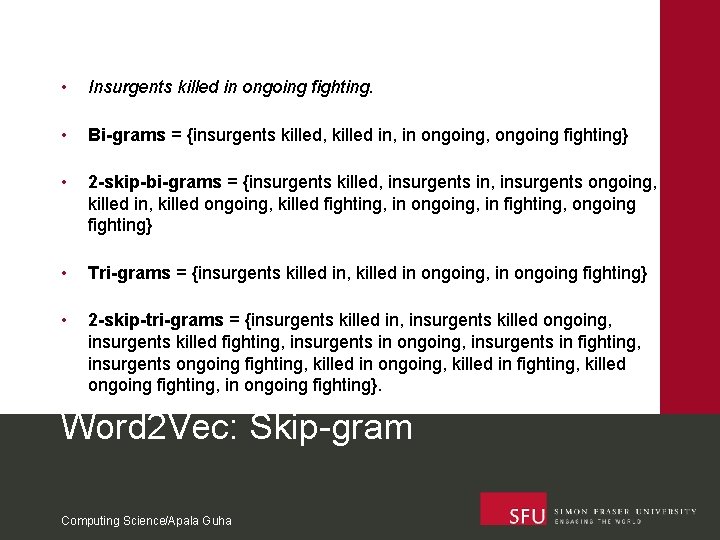

Word2Vec: Skip-gram

- Sentence: Insurgents killed in ongoing fighting.
- Bi-grams = {insurgents killed, killed in, in ongoing, ongoing fighting}
- 2-skip-bi-grams = {insurgents killed, insurgents in, insurgents ongoing, killed in, killed ongoing, killed fighting, in ongoing, in fighting, ongoing fighting}
- Tri-grams = {insurgents killed in, killed in ongoing, in ongoing fighting}
- 2-skip-tri-grams = {insurgents killed in, insurgents killed ongoing, insurgents killed fighting, insurgents in ongoing, insurgents in fighting, insurgents ongoing fighting, killed in ongoing, killed in fighting, killed ongoing fighting, in ongoing fighting}
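The sets above can be reproduced with a short generator. This sketch assumes that "k-skip" means at most k tokens are skipped in total across the whole n-gram, which is consistent with the lists on the slide; the function name `skip_grams` is made up for this example.

```python
from itertools import combinations

def skip_grams(tokens, n, k):
    """Return all n-grams that skip at most k tokens in total.

    Assumption: the skip budget k applies to the whole n-gram,
    i.e. (last index - first index) - (n - 1) <= k.
    """
    grams = []
    for idx in combinations(range(len(tokens)), n):
        skipped = idx[-1] - idx[0] - (n - 1)
        if skipped <= k:
            grams.append(" ".join(tokens[i] for i in idx))
    return grams

tokens = "insurgents killed in ongoing fighting".split()
bigrams = skip_grams(tokens, n=2, k=0)
two_skip_bigrams = skip_grams(tokens, n=2, k=2)
two_skip_trigrams = skip_grams(tokens, n=3, k=2)
print(two_skip_bigrams)
```

With k = 0 the function returns ordinary n-grams, so regular bi-grams and tri-grams are the special case with no skips.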

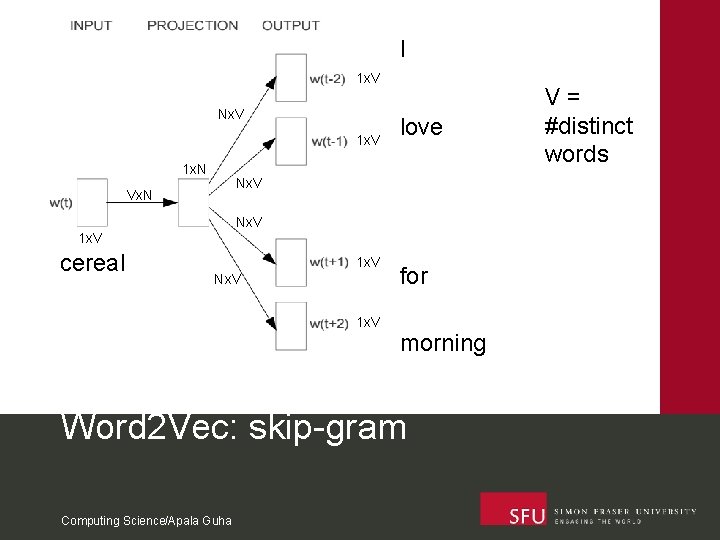

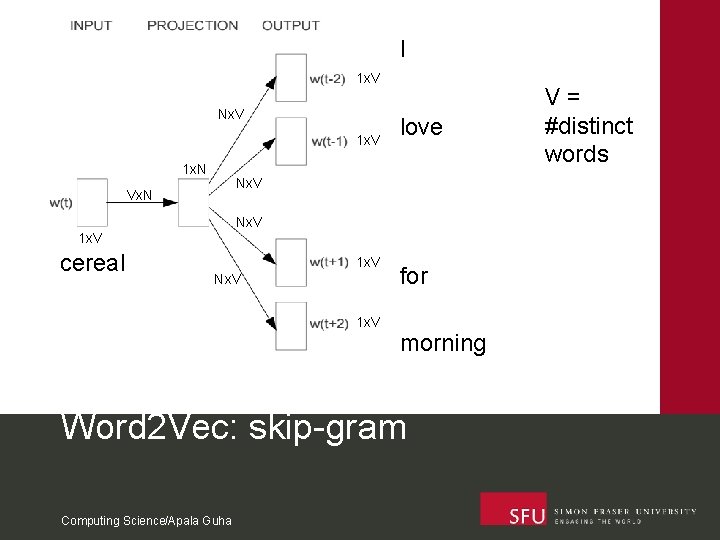

Word2Vec: skip-gram

- A neural network is trained on the context of each word.
- It predicts the context given a word.
- The representation learned while predicting the context (the 1xN projection of the word) is used as the feature representation of that word in a review.
- We need to combine the feature vectors of the words in a review to get the overall feature vector of the review.
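To make "predicts the context given a word" concrete, the sketch below lists the (input word, context word) training pairs a skip-gram model would see for one sentence. The window size of 2 and the function name `skipgram_pairs` are assumptions chosen for illustration.

```python
def skipgram_pairs(tokens, window):
    """Pair every word with each neighbour at most `window` positions away."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

tokens = "i love having cereal in morning for breakfast".split()
pairs = skipgram_pairs(tokens, window=2)  # window=2 is an assumed setting

# Context words the network is asked to predict when the input is 'cereal'.
cereal_context = [context for center, context in pairs if center == "cereal"]
print(cereal_context)
```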

Word2Vec: skip-gram

Figure: skip-gram network for the words I, love, cereal, for, morning. Layer dimensions: 1xV word vectors at the input and outputs, a VxN input weight matrix, a 1xN projection, and NxV output weight matrices. V = number of distinct words.

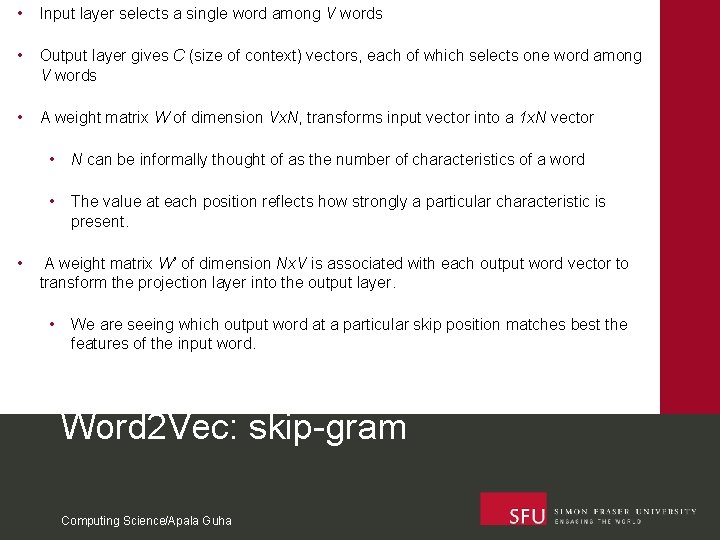

Word2Vec: skip-gram

- Input layer selects a single word among V words
- Output layer gives C (size of context) vectors, each of which selects one word among V words
- A weight matrix W of dimension VxN transforms the input vector into a 1xN vector
  - N can be informally thought of as the number of characteristics of a word
  - The value at each position reflects how strongly a particular characteristic is present
- A weight matrix W’ of dimension NxV is associated with each output word vector to transform the projection layer into the output layer
- We are seeing which output word at a particular skip position best matches the features of the input word
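The matrix shapes can be traced with a tiny forward pass. This is a sketch under assumptions, not the lecture's implementation: weights are random rather than trained, a single output matrix `W_out` is shared by all C context positions (as in the standard word2vec formulation), and the vocabulary, N = 3, and all names are illustrative.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(word_index, W, W_out):
    """One-hot (1xV) -> projection (1xN) -> probabilities over V words."""
    V, N = len(W), len(W[0])
    one_hot = [1.0 if i == word_index else 0.0 for i in range(V)]
    # (1xV) times (VxN): this simply selects one row of W.
    hidden = [sum(one_hot[i] * W[i][n] for i in range(V)) for n in range(N)]
    # (1xN) times (NxV): one score per vocabulary word.
    scores = [sum(hidden[n] * W_out[n][v] for n in range(N)) for v in range(V)]
    return hidden, softmax(scores)

vocab = ["i", "love", "cereal", "for", "morning"]  # hypothetical vocabulary
V, N = len(vocab), 3                               # N = 3 is an assumed size
rng = random.Random(0)
W = [[rng.uniform(-0.5, 0.5) for _ in range(N)] for _ in range(V)]      # VxN
W_out = [[rng.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(N)]  # NxV

hidden, probs = forward(vocab.index("cereal"), W, W_out)
print(len(hidden), len(probs))
```

Because the input is one-hot, the projection is just the row of W belonging to the input word; after training, that row serves as the word's N-dimensional feature vector.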

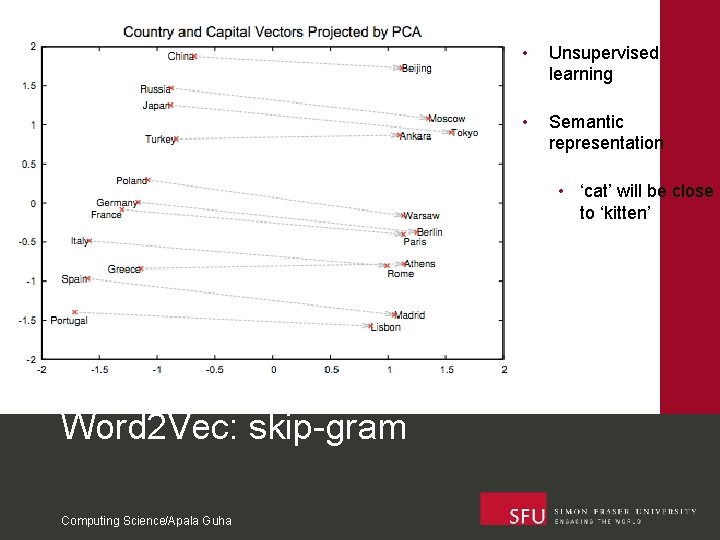

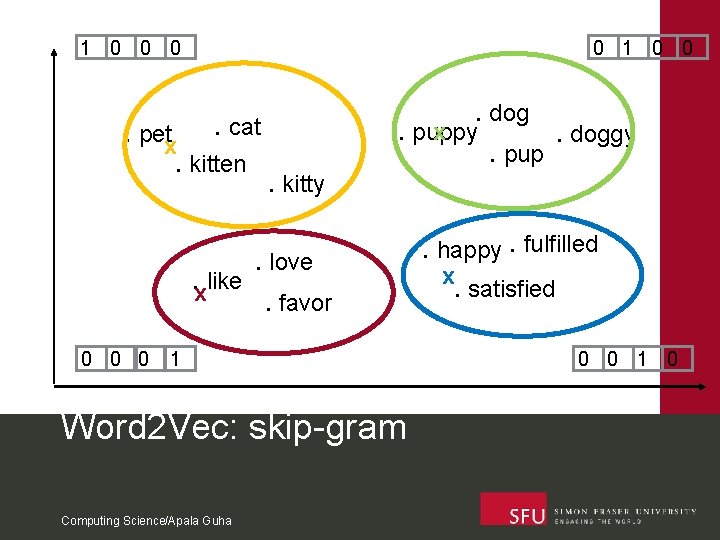

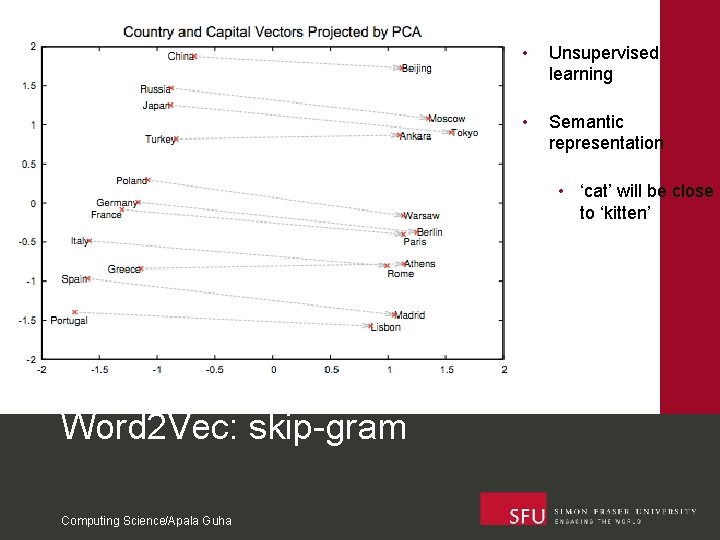

Word2Vec: skip-gram

- Unsupervised learning
- Semantic representation
  - ‘cat’ will be close to ‘kitten’
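"Close" is commonly measured with cosine similarity between word vectors. The sketch below uses hand-made 3-dimensional vectors purely for illustration; they are hypothetical values, not output of a trained Word2Vec model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical vectors, chosen by hand for illustration only.
vectors = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "carrier": [0.1, 0.2, 0.9],
}

sim_cat_kitten = cosine_similarity(vectors["cat"], vectors["kitten"])
sim_cat_carrier = cosine_similarity(vectors["cat"], vectors["carrier"])
print(sim_cat_kitten > sim_cat_carrier)
```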

Word2Vec: skip-gram

Suggest some ways to combine feature vectors of the words appearing in a review to get the overall feature vector of the review.

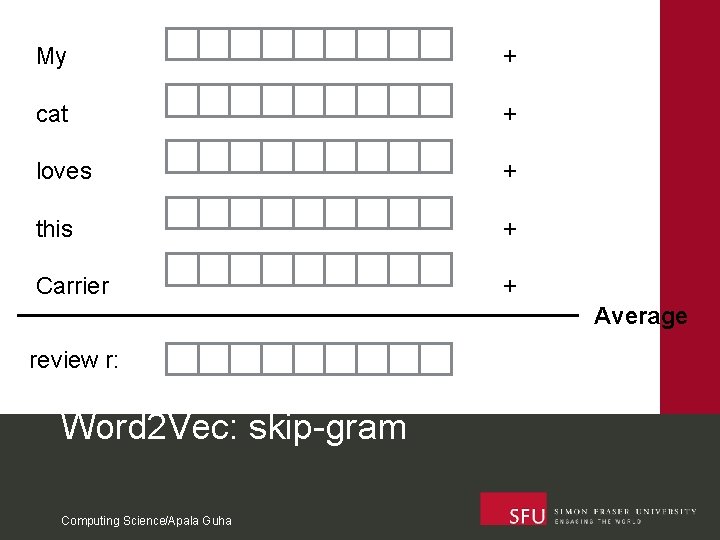

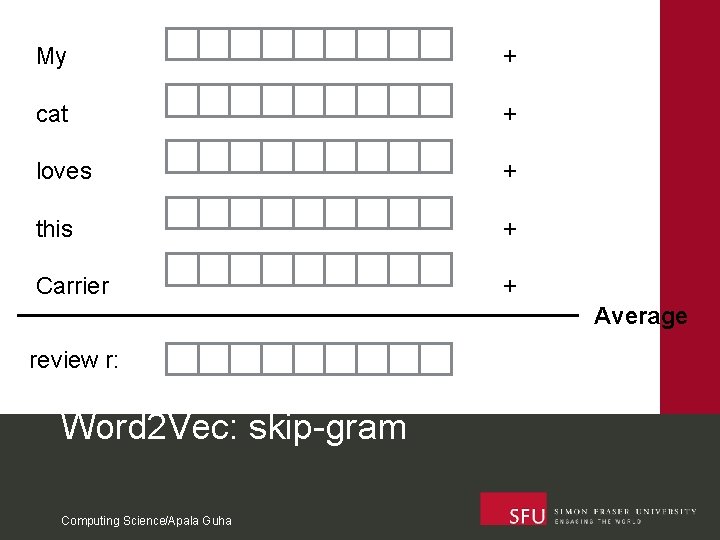

Word2Vec: skip-gram

Figure: the feature vector of review r is the average of the Word2Vec vectors of its words (My + cat + loves + this + carrier + ...).

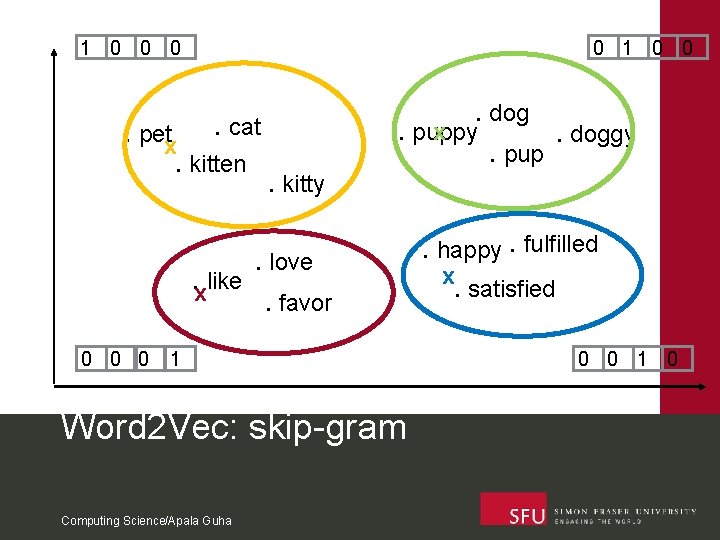

Word2Vec: skip-gram

Figure: words clustered in Word2Vec space, with each cluster assigned a one-hot code. Clusters shown: {dog, puppy, doggy, pup}, {cat, pet, kitten, kitty}, {love, like, favor}, {happy, fulfilled, satisfied}.

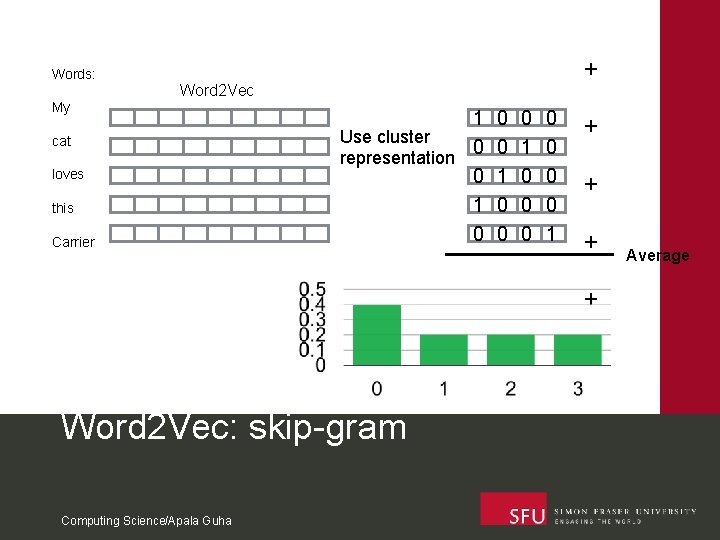

Word2Vec: skip-gram

Figure: each word of the review (My, cat, loves, this, carrier) is mapped through Word2Vec to the one-hot code of its cluster, and the codes are averaged to give the review's feature vector.

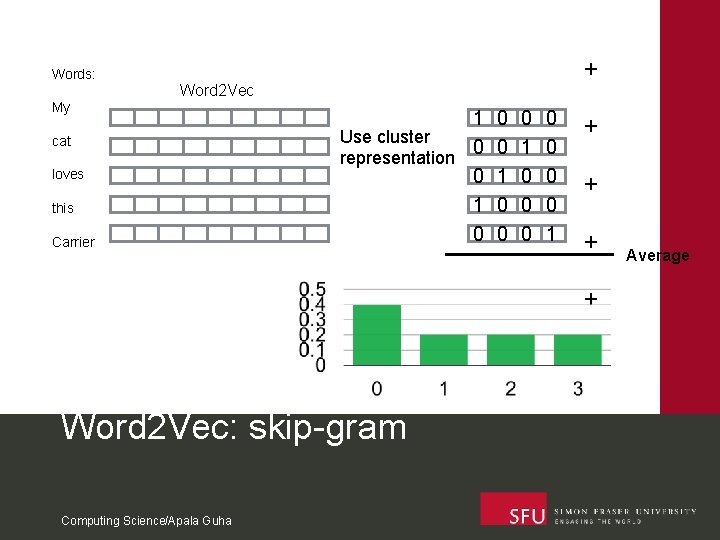

Word2Vec: skip-gram

- We need to represent an overall review with a feature vector, not just individual words
- We could average the feature vectors of the individual words in the review
- Or we could cluster words in the corpus, and use the degree of presence of different clusters in a review as the feature vector
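Both options can be sketched in a few lines. Everything here is illustrative: the 2-dimensional word vectors and the word-to-cluster assignments are hypothetical stand-ins for a trained Word2Vec model and a clustering of its vectors, and words missing from the vocabulary are simply ignored, which is an assumption.

```python
def average_vector(words, vectors, dim):
    """Average the vectors of the words that have a vector."""
    known = [vectors[w] for w in words if w in vectors]
    if not known:
        return [0.0] * dim
    return [sum(column) / len(known) for column in zip(*known)]

def cluster_presence(words, cluster_of, num_clusters):
    """Fraction of the review's clustered words that fall in each cluster."""
    counts = [0] * num_clusters
    for w in words:
        if w in cluster_of:
            counts[cluster_of[w]] += 1
    matched = sum(counts)
    return [c / matched for c in counts] if matched else [0.0] * num_clusters

# Hypothetical word vectors and cluster ids, for illustration only.
vectors = {"cat": [1.0, 0.0], "loves": [0.0, 1.0], "carrier": [1.0, 1.0]}
cluster_of = {"cat": 0, "kitten": 0, "dog": 1, "puppy": 1, "loves": 2, "like": 2}

review = "my cat loves this carrier".split()
avg = average_vector(review, vectors, dim=2)
presence = cluster_presence(review, cluster_of, num_clusters=3)
print(avg, presence)
```

Averaging keeps the feature vector at the Word2Vec dimension N, while the cluster option yields one feature per cluster, so the number of clusters becomes a tunable parameter.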


Model Training

- Find the relationship between review feature vectors and rating scores
  - Train a linear regression model
  - Use the model to predict the rating of a test review
- Broader classification such as positive/negative is also possible by applying a threshold to the predicted rating
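The training and prediction steps can be sketched with ordinary least squares in plain Python. This is a minimal sketch, assuming tiny made-up feature vectors and ratings; the normal-equation solver, the function names, and the threshold of 3 for "positive" are all assumptions for illustration, and a real assignment would more likely use a library implementation.

```python
def fit_linear_regression(X, y):
    """Least squares via the normal equations (X^T X) w = X^T y.

    Returns [bias, w1, w2, ...]. Solved with Gauss-Jordan elimination,
    which is adequate only for small, well-conditioned problems.
    """
    rows = [[1.0] + list(x) for x in X]  # prepend a bias feature
    d = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    A = [[sum(r[i] * r[j] for r in rows) for j in range(d)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(d)]
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("features are linearly dependent")
        for r in range(d):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][d] / A[i][i] for i in range(d)]

def predict(weights, x):
    return weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))

# Hypothetical review feature vectors and ratings, for illustration only.
X_train = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y_train = [1.0, 3.0, 3.0, 5.0]

weights = fit_linear_regression(X_train, y_train)
rating = predict(weights, [1.0, 0.5])
label = "positive" if rating >= 3.0 else "negative"  # assumed threshold
print(round(rating, 2), label)
```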


Assignment 2

- TF-IDF representation
- Train Linear Regression model
- Train Word2Vec representation
- Extract average Word2Vec for each review
- Cluster Word2Vec features