Semantic Representation and Formal Transformation
Kevin Knight, USC/ISI


MURI meeting, CMU, Nov 3-4, 2011

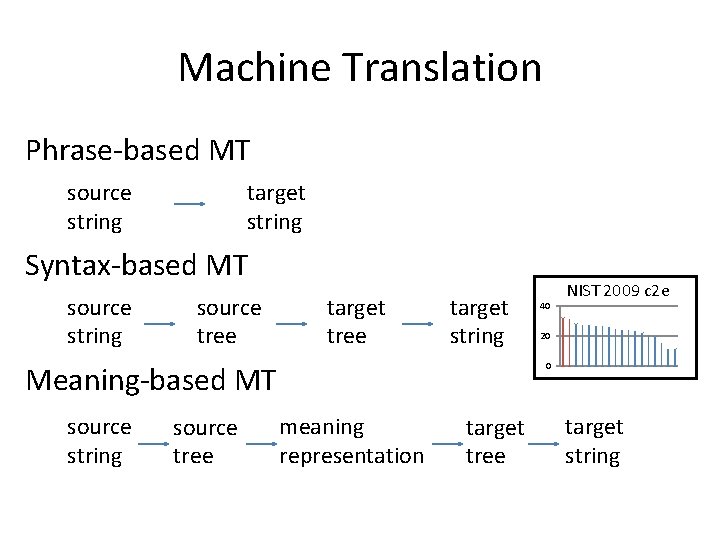

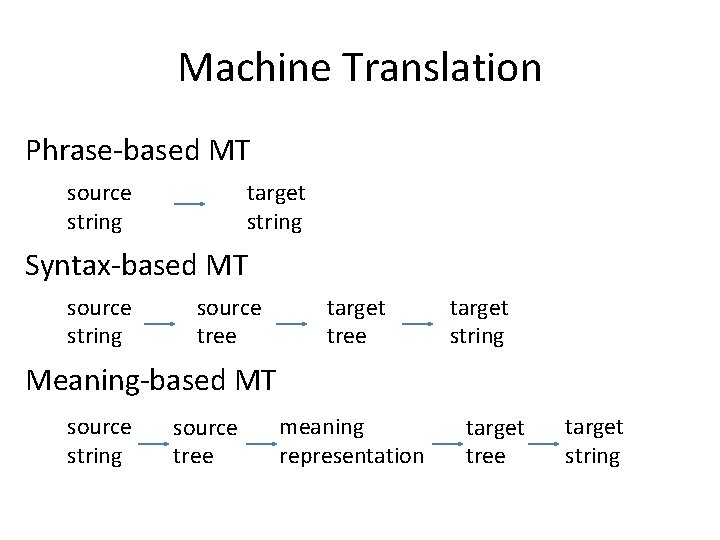

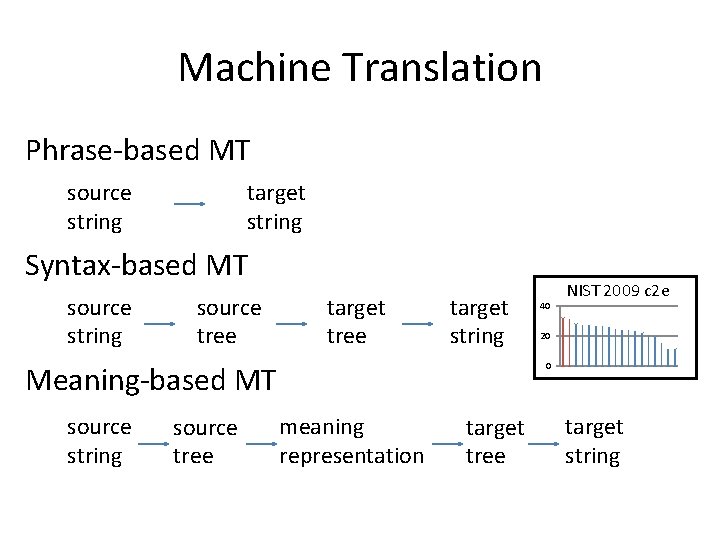

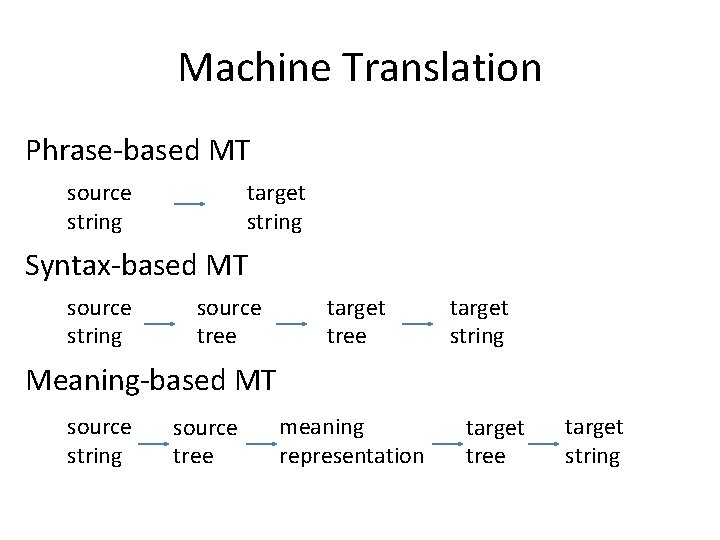

Machine Translation
• Phrase-based MT: source string → target string
• Syntax-based MT: source string → source tree → target string
• Meaning-based MT: source string → source tree → meaning representation → target tree → target string
[Chart: NIST 2009, c2e]


Meaning-based MT
• Too big for this MURI:
– What content goes into the meaning representation? (linguistics, annotation)
– How are meaning representations probabilistically generated, transformed, scored, and ranked? (automata theory, efficient algorithms)
– How can a full MT system be built and tested? (engineering, language modeling, features, training)
• Language-independent theory, but driven by practical desires.

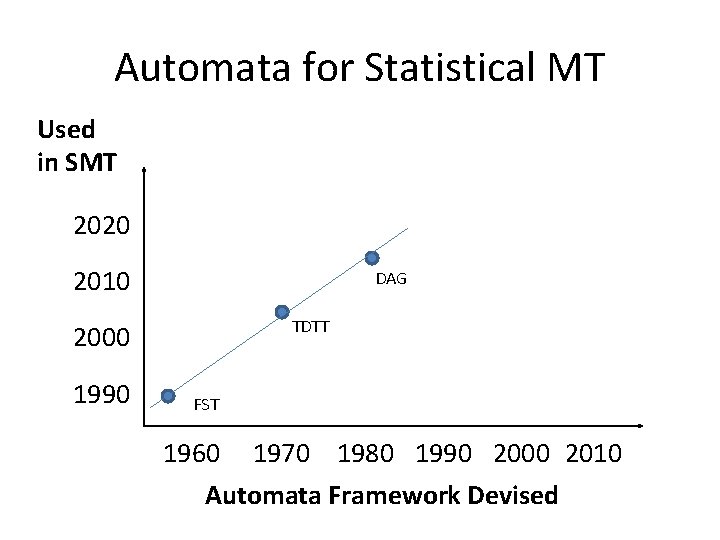

Automata Frameworks
• How to represent and manipulate linguistic representations?
• Linguistics, NLP, and automata theory used to be together (1960s, 70s)
– Context-free grammars were invented to model human language
– Tree transducers were invented to model transformational grammar
• They drifted apart
• Renewed connections around MT (this century)
• Role: greatly simplify systems!

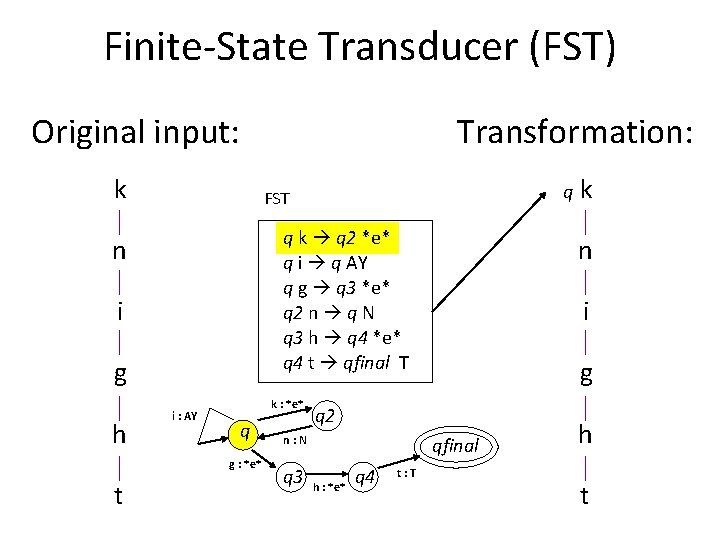

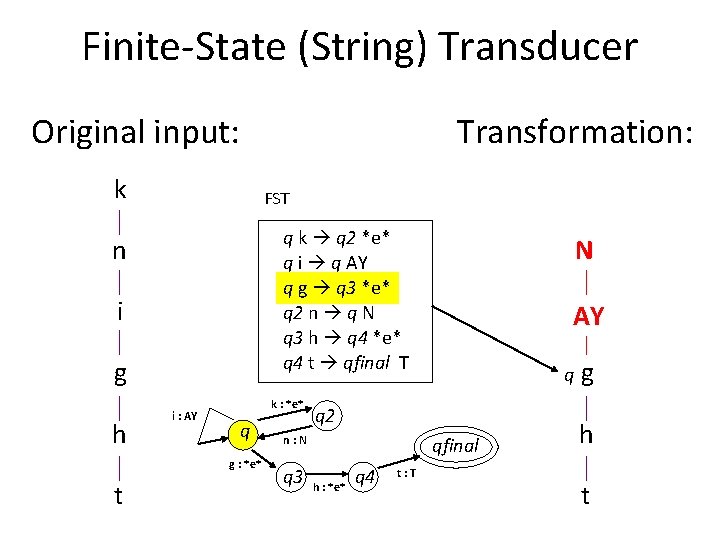

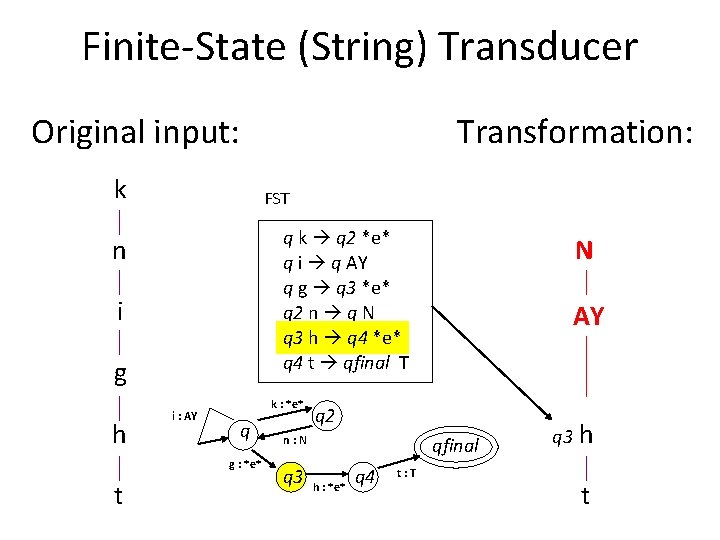

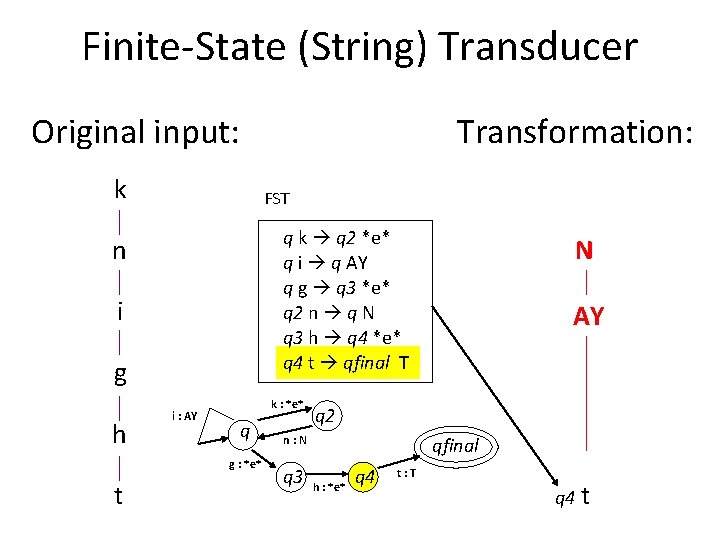

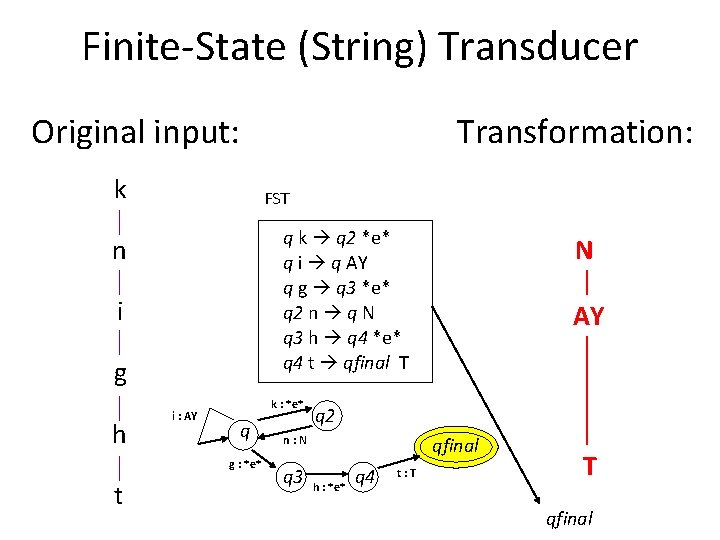

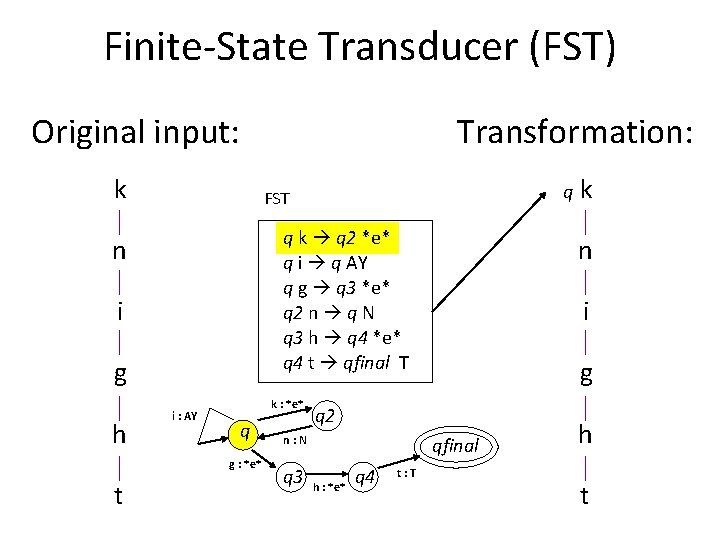

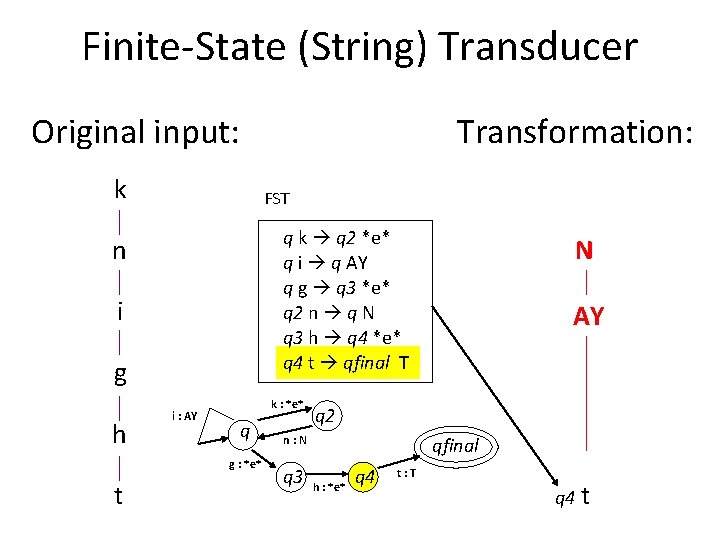

Finite-State Transducer (FST)
Original input: k n i g h t
Transitions (state, input : output → next state):
• q, k : *e* → q2
• q2, n : N → q
• q, i : AY → q
• q, g : *e* → q3
• q3, h : *e* → q4
• q4, t : T → qfinal
Transformation: q k n i g h t

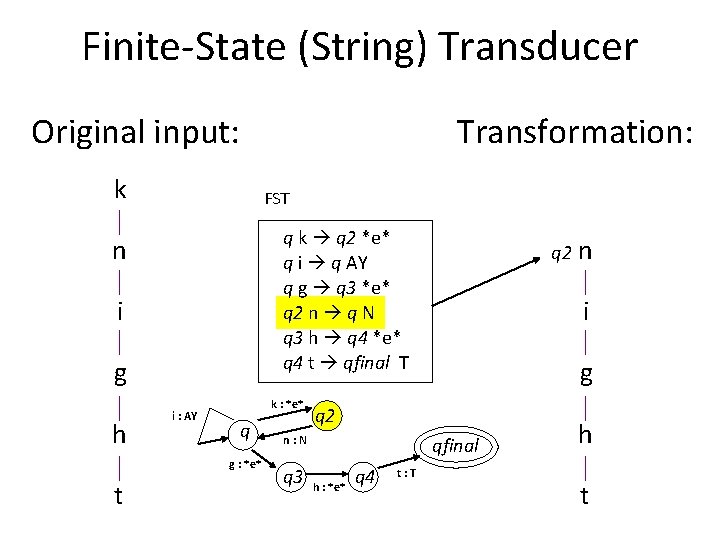

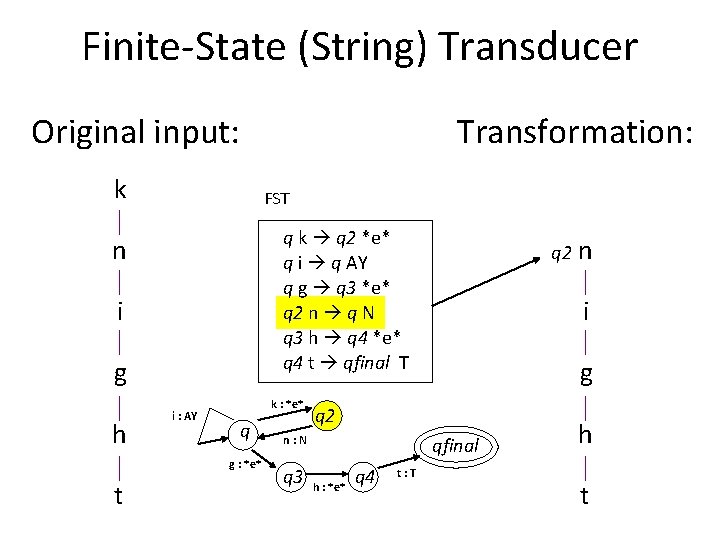

Finite-State (String) Transducer
Original input: k n i g h t
Transformation (after k : *e*): q2 n i g h t

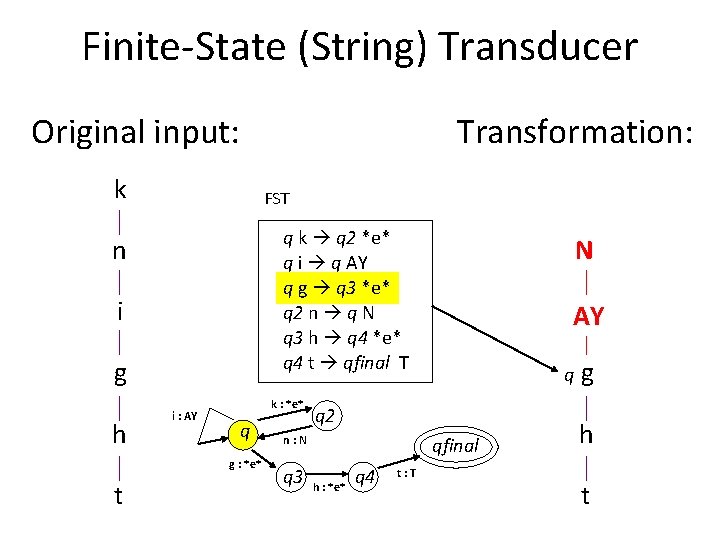

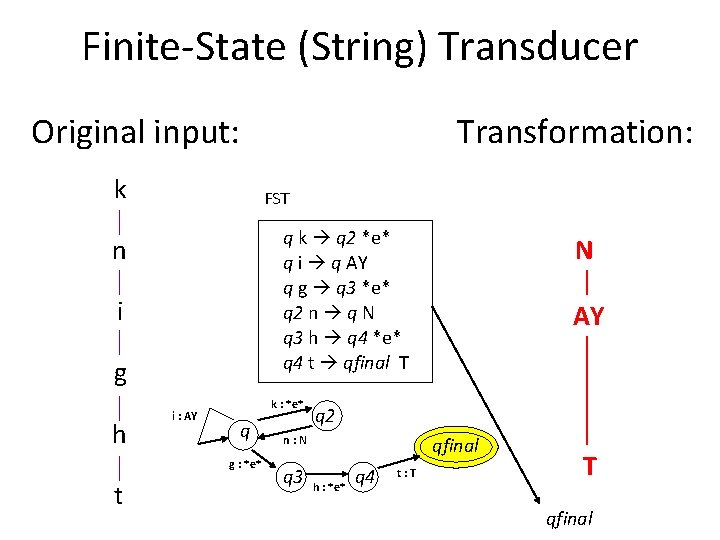

Finite-State (String) Transducer
Original input: k n i g h t
Transformation (after n : N): N q i g h t

Finite-State (String) Transducer
Original input: k n i g h t
Transformation (after i : AY): N AY q g h t

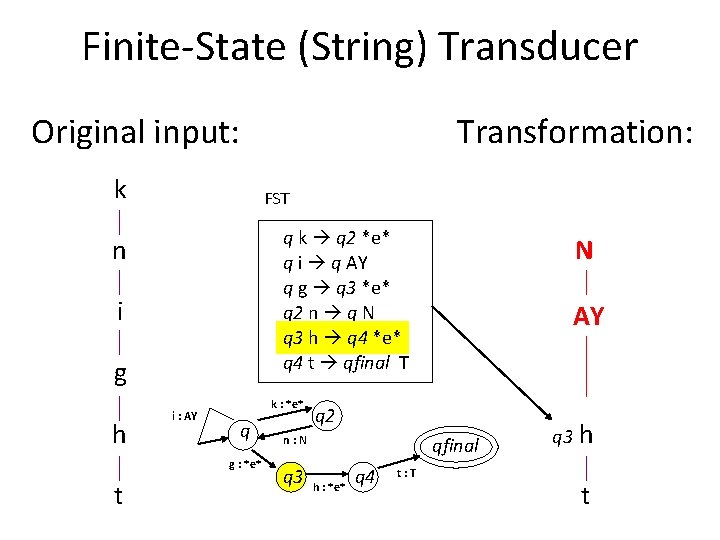

Finite-State (String) Transducer
Original input: k n i g h t
Transformation (after g : *e*): N AY q3 h t

Finite-State (String) Transducer
Original input: k n i g h t
Transformation (after h : *e*): N AY q4 t

Finite-State (String) Transducer
Original input: k n i g h t
Transformation (after t : T): N AY T qfinal
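The run above can be replayed mechanically. Below is a minimal Python sketch, assuming the six transitions read off the slides and treating *e* as empty output. The dictionary layout and the name `transduce` are illustrative choices, not Carmel's file format or API. Each configuration is printed in the slides' notation: output so far, current state, remaining input.

```python
# The slide's FST as a dict: (state, input symbol) -> (output symbols, next state).
# *e* (empty output) is represented by an empty tuple.
TRANSITIONS = {
    ("q", "k"): ((), "q2"),
    ("q2", "n"): (("N",), "q"),
    ("q", "i"): (("AY",), "q"),
    ("q", "g"): ((), "q3"),
    ("q3", "h"): ((), "q4"),
    ("q4", "t"): (("T",), "qfinal"),
}
FINAL_STATES = {"qfinal"}


def transduce(word, start="q"):
    """Run the deterministic FST; return (output or None, list of configurations)."""
    state, output = start, []
    trace = [" ".join([state, *word])]
    for i, symbol in enumerate(word):
        step = TRANSITIONS.get((state, symbol))
        if step is None:
            return None, trace  # no transition for this symbol: input rejected
        emitted, state = step
        output.extend(emitted)
        # configuration: output so far, current state, unread input
        trace.append(" ".join([*output, state, *word[i + 1:]]))
    return (output if state in FINAL_STATES else None), trace


if __name__ == "__main__":
    result, steps = transduce("knight")
    for line in steps:
        print(line)
    print("output:", result)
```

Running it prints the seven configurations from `q k n i g h t` to `N AY T qfinal`, followed by the output `['N', 'AY', 'T']`. This machine is deterministic. A weighted FST would allow several transitions per (state, symbol) pair, each with a weight.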

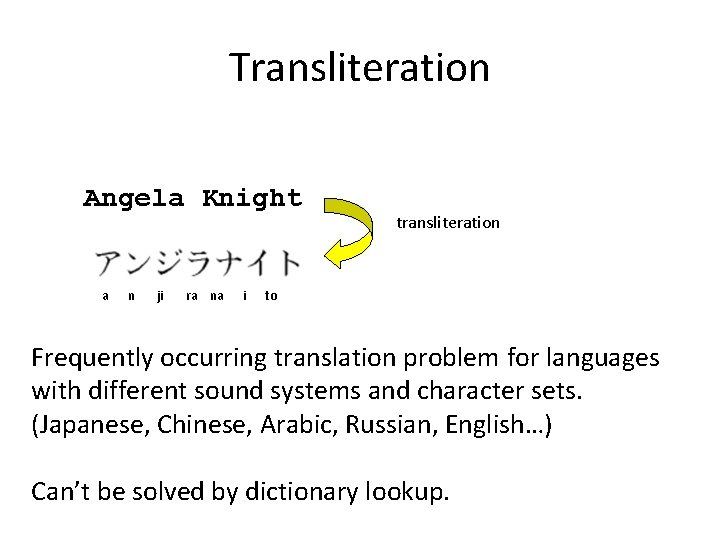

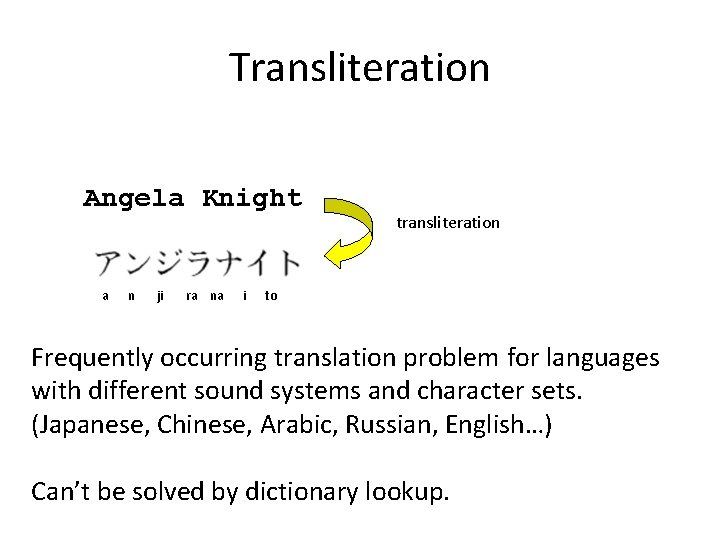

Transliteration
Angela Knight → a n ji ra na i to (Japanese katakana)
• A frequently occurring translation problem for languages with different sound systems and character sets (Japanese, Chinese, Arabic, Russian, English, …)
• Can't be solved by dictionary lookup.

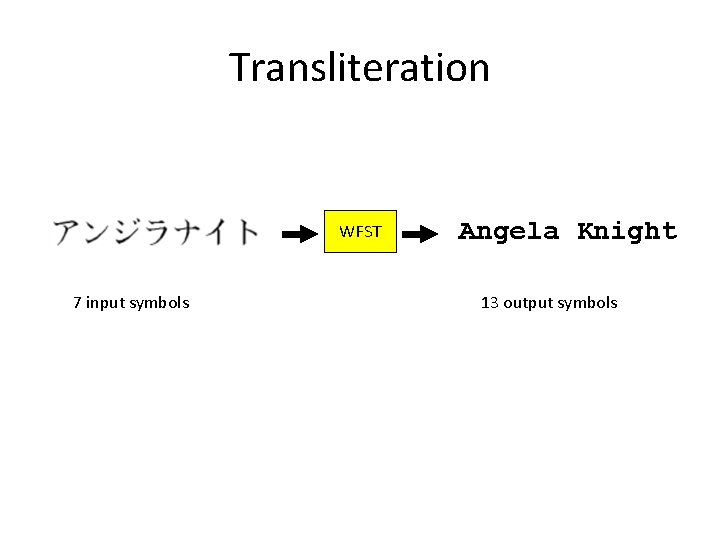

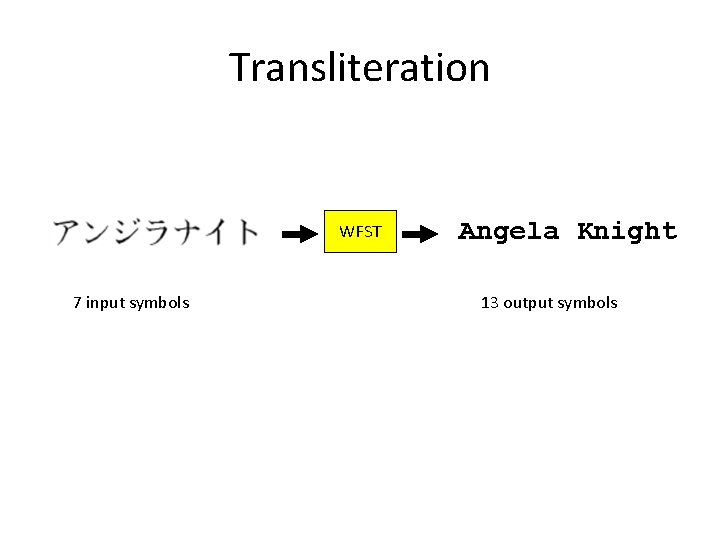

Transliteration WFST: 7 input symbols (a n ji ra na i to) → 13 output symbols (Angela Knight)

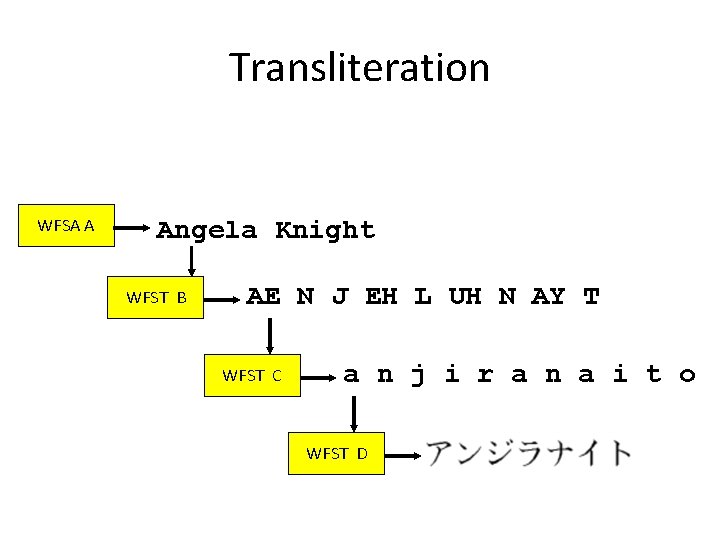

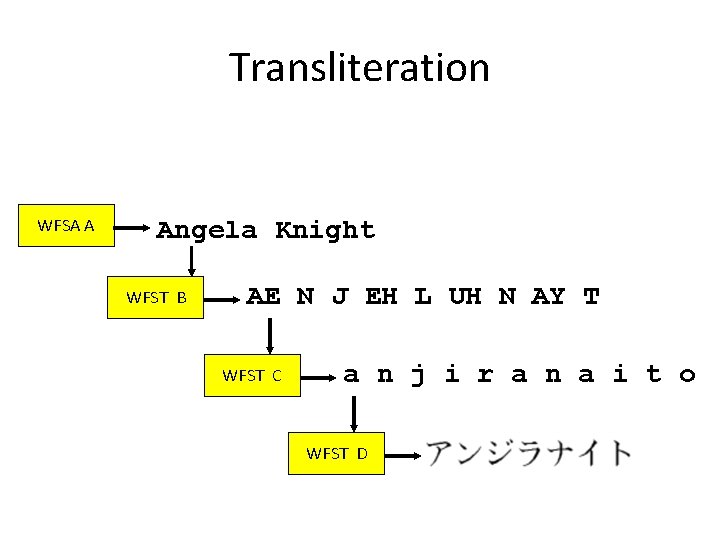

Transliteration (cascade of weighted automata)
• WFSA A: Angela Knight
• WFST B: AE N J EH L UH N AY T
• WFST C: a n j i r a n a i t o
• WFST D

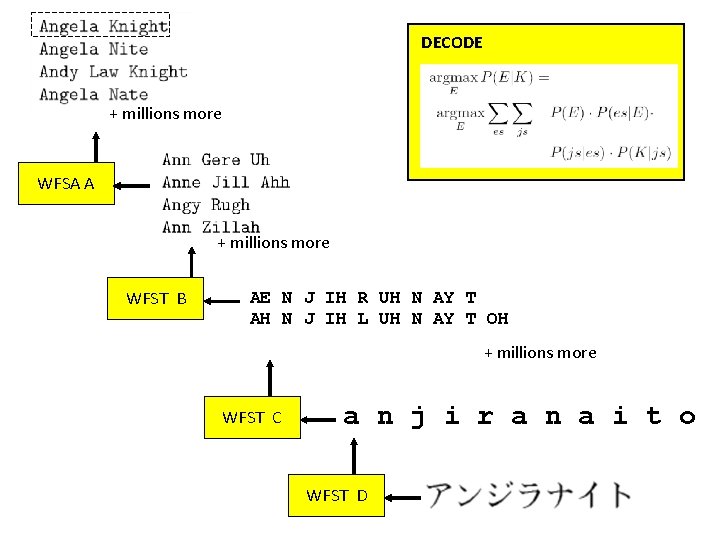

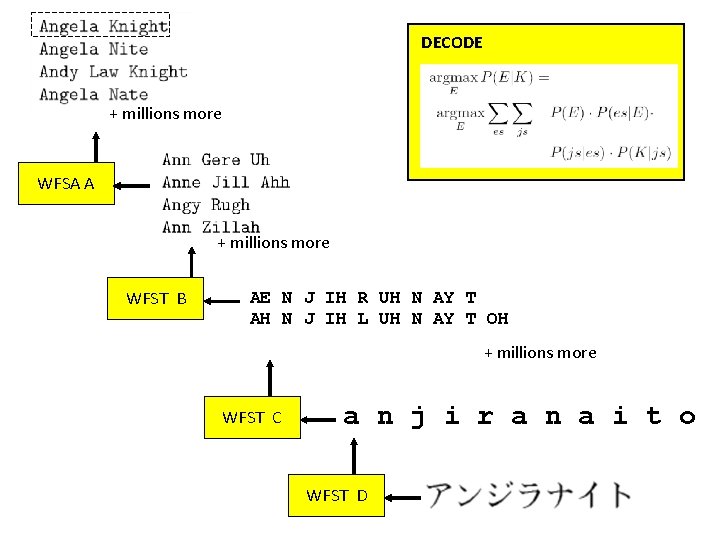

DECODE (many candidates survive at each level)
• WFSA A: + millions more
• WFST B: AE N J IH R UH N AY T, AH N J IH L UH N AY T OH, + millions more
• WFST C: a n j i r a n a i t o
• WFST D
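Decoding inverts the cascade. Given observed Japanese sounds j, it looks for the English words w that maximize P(w) · P(e | w) · P(j | e) over English sound sequences e. The sketch below is brute-force noisy-channel decoding over a toy cascade. It stands in for what a toolkit does with WFST composition and Viterbi search. Each machine is flattened to a table over whole strings, and every probability (as well as the candidate "angela night") is made up for illustration.

```python
# Toy stand-ins for the cascade (all probabilities are made up for illustration).
WFSA_A = {"angela knight": 0.01, "angela night": 0.002}  # P(w)
WFST_B = {  # P(e | w): English words -> English sounds
    "angela knight": {"AE N J EH L UH N AY T": 0.9, "AE N J IH R UH N AY T": 0.1},
    "angela night": {"AE N J EH L UH N AY T": 0.9, "AE N J IH R UH N AY T": 0.1},
}
WFST_C = {  # P(j | e): English sounds -> Japanese sounds
    "AE N J EH L UH N AY T": {"a n j i r a n a i t o": 0.3},
    "AE N J IH R UH N AY T": {"a n j i r a n a i t o": 0.4},
}


def decode(japanese):
    """Return (best English words, best English sounds, score) for the observed Japanese."""
    best = (None, None, 0.0)
    for words, p_w in WFSA_A.items():
        for sounds, p_e in WFST_B.get(words, {}).items():
            p_j = WFST_C.get(sounds, {}).get(japanese, 0.0)
            score = p_w * p_e * p_j  # product of the three machines' weights
            if score > best[2]:
                best = (words, sounds, score)
    return best


if __name__ == "__main__":
    print(decode("a n j i r a n a i t o"))
```

Here both word candidates have the same pronunciations, so WFSA A's preference decides the result. A real decoder never enumerates candidates like this, because the slide's "millions more" are packed into the composed machine.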

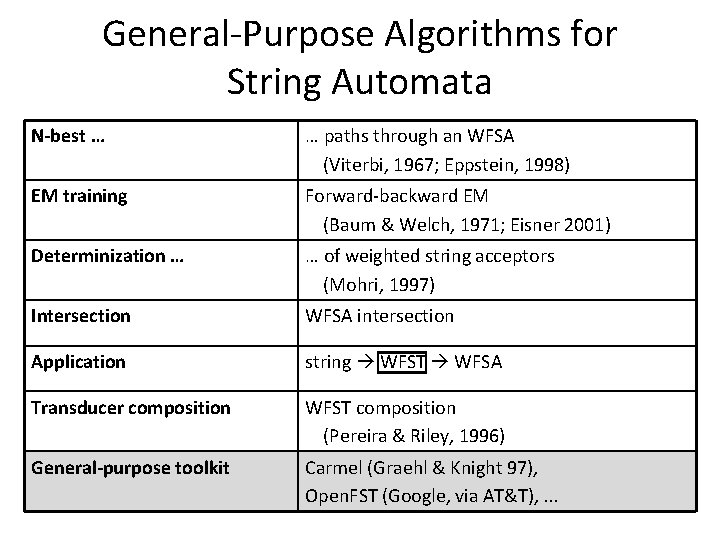

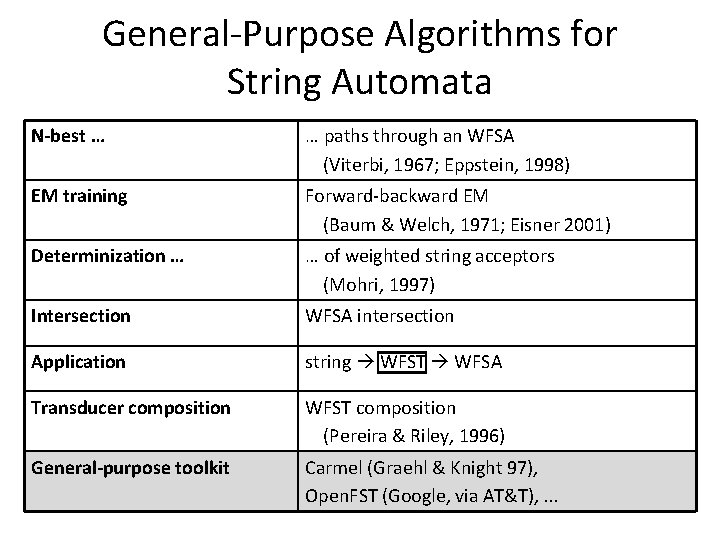

General-Purpose Algorithms for String Automata

| Task | String automata |
|---|---|
| N-best | … paths through a WFSA (Viterbi, 1967; Eppstein, 1998) |
| EM training | Forward-backward EM (Baum & Welch, 1971; Eisner 2001) |
| Determinization | … of weighted string acceptors (Mohri, 1997) |
| Intersection | WFSA intersection |
| Application | string ∘ WFST → WFSA |
| Transducer composition | WFST composition (Pereira & Riley, 1996) |
| General-purpose toolkit | Carmel (Graehl & Knight 97), OpenFST (Google, via AT&T), … |
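The 1-best case of the N-best row can be solved with a Viterbi-style dynamic program. Below is a minimal sketch, assuming an acyclic WFSA given as a list of arcs together with a topological order of its states. Both inputs are hypothetical and do not follow any toolkit's format. The code works in negative log space, so products of probabilities become sums of costs, and all arc probabilities must be positive. Eppstein's algorithm, cited above, extends this to the N best paths.

```python
import math
from collections import defaultdict


def viterbi_best_path(arcs, order, start, final):
    """arcs: (src, symbol, dst, prob) tuples of an acyclic WFSA; order: states, topologically sorted.
    Returns (symbols on the best start->final path, its probability), or (None, 0.0)."""
    outgoing = defaultdict(list)
    for arc in arcs:
        outgoing[arc[0]].append(arc)
    # best[state] = (cost = -log prob of best path so far, previous state, symbol read)
    best = {start: (0.0, None, None)}
    for state in order:
        if state not in best:
            continue  # unreachable from start
        cost = best[state][0]
        for _, symbol, dst, prob in outgoing[state]:
            new_cost = cost - math.log(prob)
            if dst not in best or new_cost < best[dst][0]:
                best[dst] = (new_cost, state, symbol)
    if final not in best:
        return None, 0.0
    # Follow backpointers from final back to start.
    path, state = [], final
    while state != start:
        _, prev, symbol = best[state]
        path.append(symbol)
        state = prev
    return path[::-1], math.exp(-best[final][0])


if __name__ == "__main__":
    # Hypothetical word lattice with made-up probabilities.
    lattice = [
        (0, "angela", 1, 0.6), (0, "anjela", 1, 0.4),
        (1, "knight", 2, 0.5), (1, "night", 2, 0.3), (1, "nite", 2, 0.2),
    ]
    print(viterbi_best_path(lattice, order=[0, 1, 2], start=0, final=2))
```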

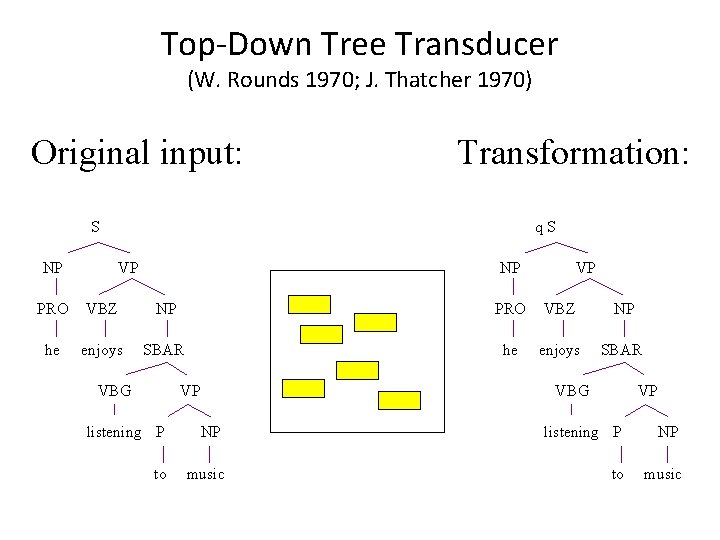

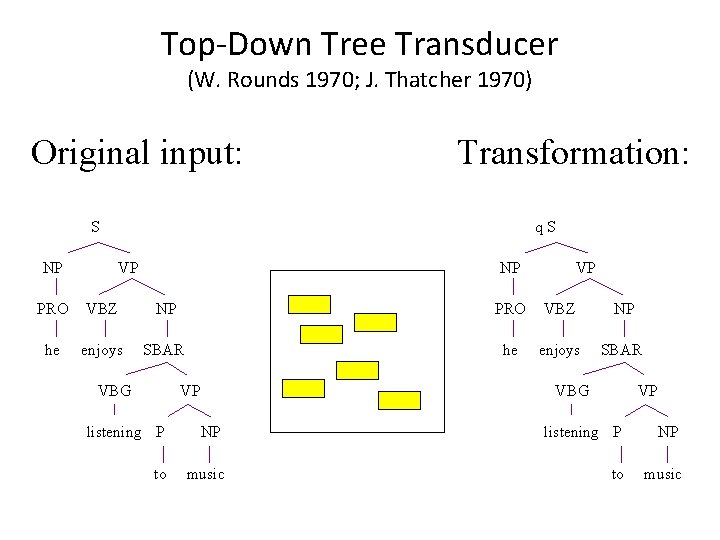

Top-Down Tree Transducer (W. Rounds 1970; J. Thatcher 1970)
Original input: (S (NP (PRO he)) (VP (VBZ enjoys) (NP (SBAR (VBG listening) (VP (P to) (NP music))))))
Transformation: q.(S …), with the start state q at the root of the input tree

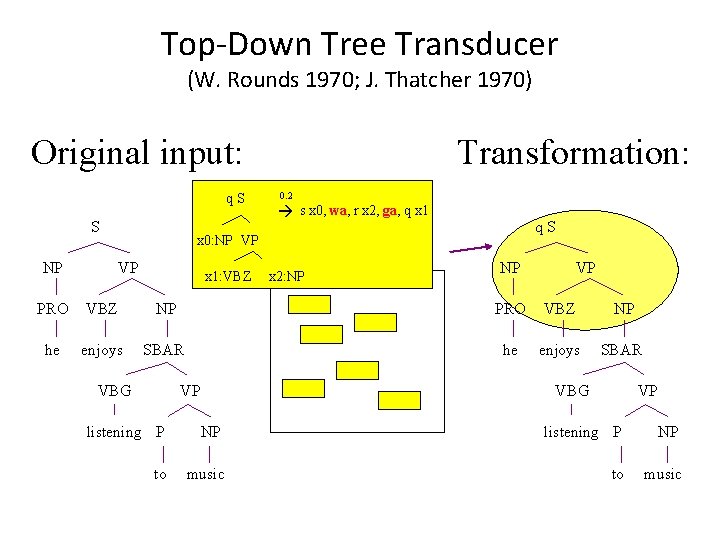

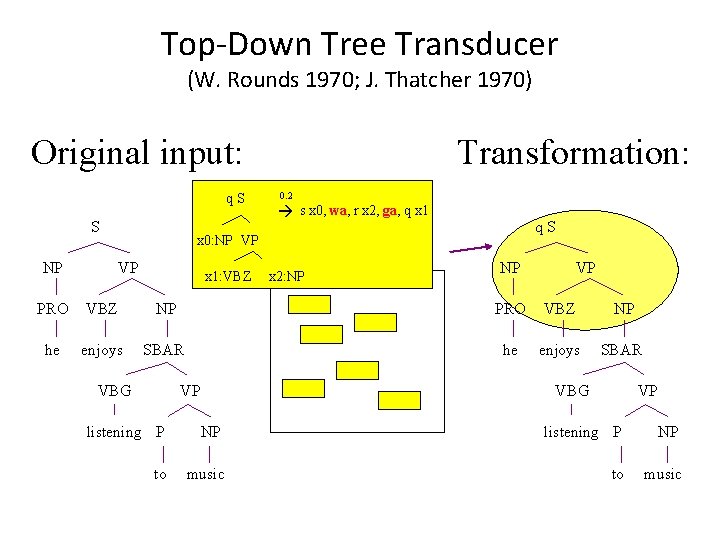

Top-Down Tree Transducer (W. Rounds 1970; J. Thatcher 1970)
Rule (weight 0.2): q.S(x0:NP, VP(x1:VBZ, x2:NP)) → s.x0, wa, r.x2, ga, q.x1

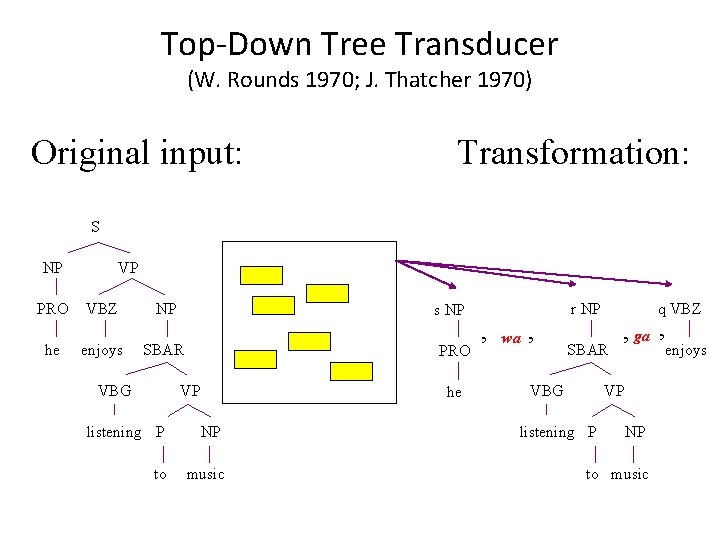

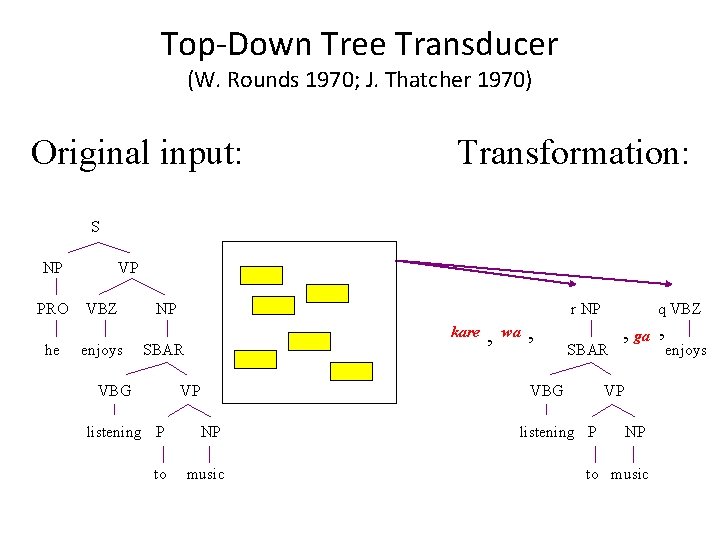

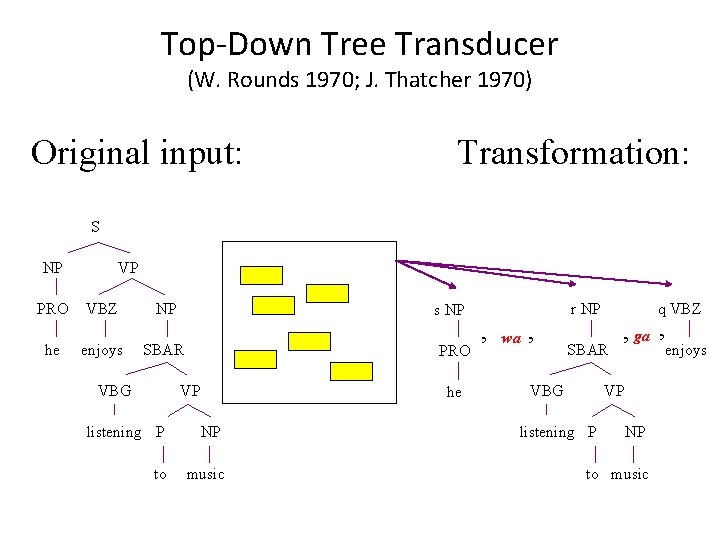

Top-Down Tree Transducer (W. Rounds 1970; J. Thatcher 1970)
Transformation: s.(NP (PRO he)), wa, r.(NP (SBAR (VBG listening) (VP (P to) (NP music)))), ga, q.(VBZ enjoys)

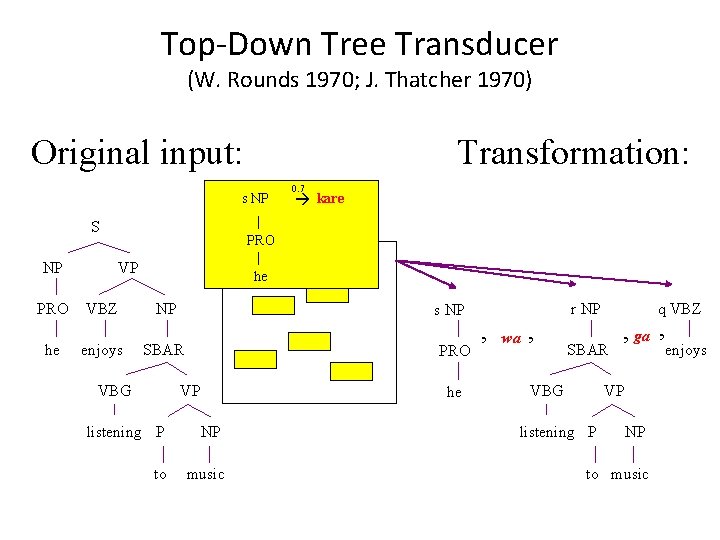

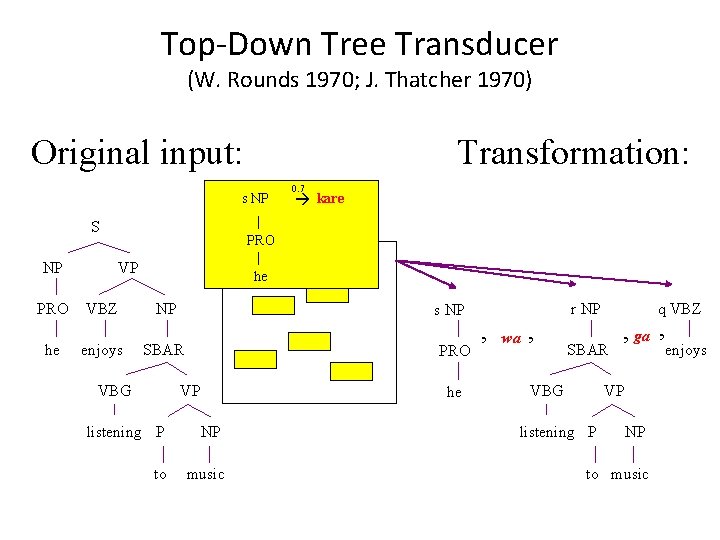

Top-Down Tree Transducer (W. Rounds 1970; J. Thatcher 1970)
Rule (weight 0.7): s.NP(PRO(he)) → kare

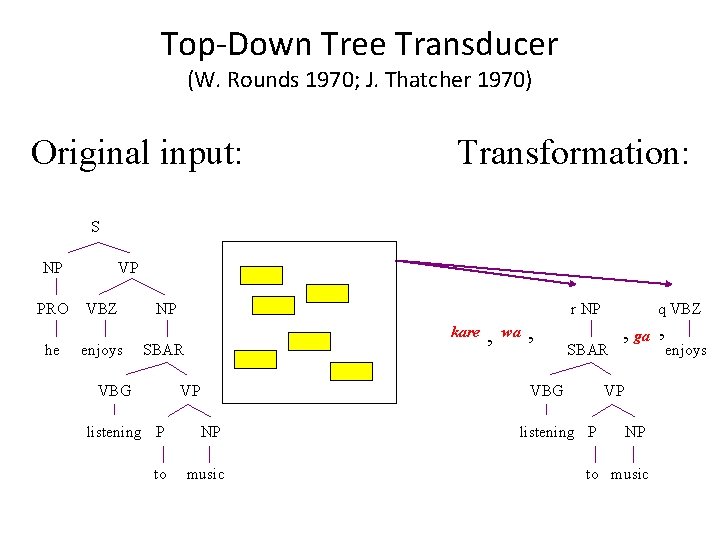

Top-Down Tree Transducer (W. Rounds 1970; J. Thatcher 1970)
Transformation: kare, wa, r.(NP (SBAR (VBG listening) (VP (P to) (NP music)))), ga, q.(VBZ enjoys)

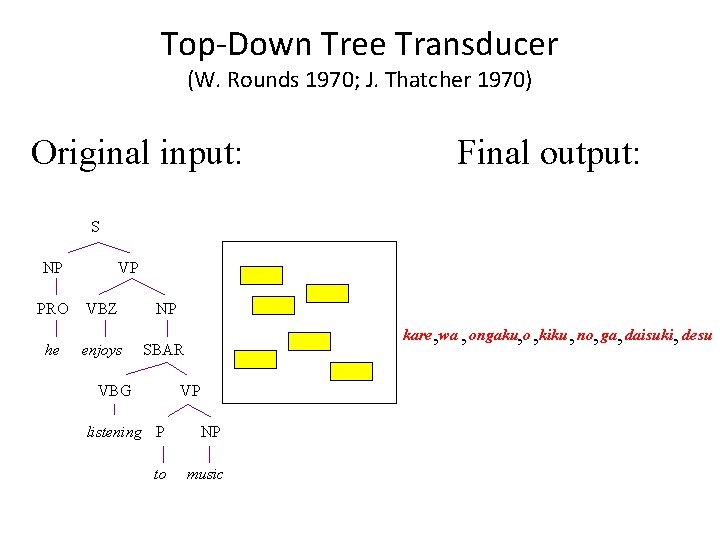

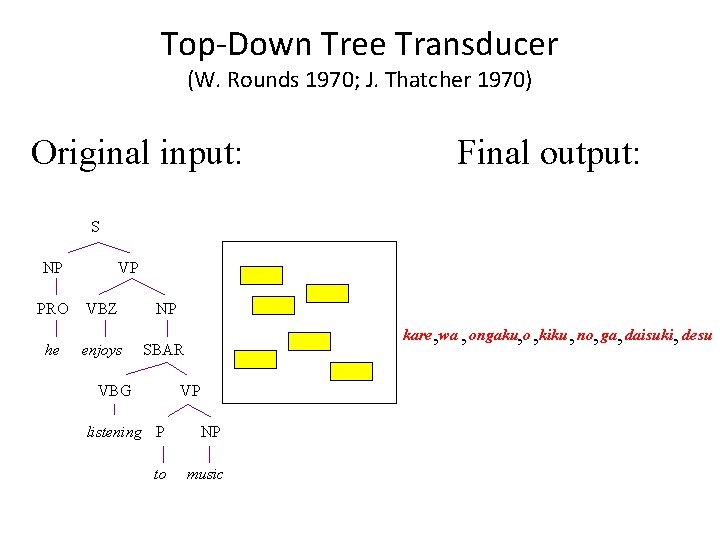

Top-Down Tree Transducer (W. Rounds 1970; J. Thatcher 1970)
Original input: he enjoys listening to music
Final output: kare, wa, ongaku, o, kiku, no, ga, daisuki, desu
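Below is a minimal Python sketch of top-down, first-match rewriting, assuming trees are encoded as tuples. Only the two weighted rules (0.2 and 0.7) come from the slides. The rules for r.NP(SBAR …), s.NP(music) and q.VBZ(enjoys) are hypothetical: they are added with weight 1.0 so that the derivation reaches the slides' final output. A real weighted transducer would apply every matching rule and keep all derivations, not just the first.

```python
# Trees are tuples (label, child, ...); words are plain strings.
INPUT = ("S", ("NP", ("PRO", "he")),
         ("VP", ("VBZ", "enjoys"),
                ("NP", ("SBAR", ("VBG", "listening"),
                                ("VP", ("P", "to"), ("NP", "music"))))))


def label(t):
    return t[0] if isinstance(t, tuple) else t


# A rule inspects a subtree and returns (weight, right-hand side) or None.
# RHS items are output words or (state, subtree) pairs to rewrite recursively.
def q_S(t):  # slide rule, weight 0.2: q.S(x0:NP, VP(x1:VBZ, x2:NP)) -> s.x0 wa r.x2 ga q.x1
    if label(t) == "S" and len(t) == 3 and label(t[1]) == "NP" and label(t[2]) == "VP":
        vp = t[2]
        if len(vp) == 3 and label(vp[1]) == "VBZ" and label(vp[2]) == "NP":
            return 0.2, [("s", t[1]), "wa", ("r", vp[2]), "ga", ("q", vp[1])]
    return None


def s_he(t):  # slide rule, weight 0.7: s.NP(PRO(he)) -> kare
    return (0.7, ["kare"]) if t == ("NP", ("PRO", "he")) else None


# Hypothetical rules (not on the slides) that finish the example.
def r_listening(t):  # r.NP(SBAR(VBG(listening), VP(P(to), x0:NP))) -> s.x0 o kiku no
    if label(t) == "NP" and len(t) == 2 and label(t[1]) == "SBAR":
        sbar = t[1]
        if (len(sbar) == 3 and sbar[1] == ("VBG", "listening") and label(sbar[2]) == "VP"
                and len(sbar[2]) == 3 and sbar[2][1] == ("P", "to")):
            return 1.0, [("s", sbar[2][2]), "o", "kiku", "no"]
    return None


def s_music(t):  # s.NP(music) -> ongaku
    return (1.0, ["ongaku"]) if t == ("NP", "music") else None


def q_enjoys(t):  # q.VBZ(enjoys) -> daisuki desu
    return (1.0, ["daisuki", "desu"]) if t == ("VBZ", "enjoys") else None


RULES = {"q": [q_S, q_enjoys], "s": [s_he, s_music], "r": [r_listening]}


def transduce(state, tree):
    """Rewrite state.tree top-down with the first matching rule; return (weight, words)."""
    for rule in RULES.get(state, []):
        match = rule(tree)
        if match is None:
            continue
        weight, rhs = match
        words = []
        for item in rhs:
            if isinstance(item, tuple):  # (state, subtree): recurse into a child
                w, sub = transduce(*item)
                weight *= w
                words.extend(sub)
            else:
                words.append(item)
        return weight, words
    raise ValueError(f"no rule for {state}.{label(tree)}")


if __name__ == "__main__":
    weight, words = transduce("q", INPUT)
    print(round(weight, 4), " ".join(words))
```

The derivation's weight is the product of the rule weights used, 0.2 × 0.7 = 0.14.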

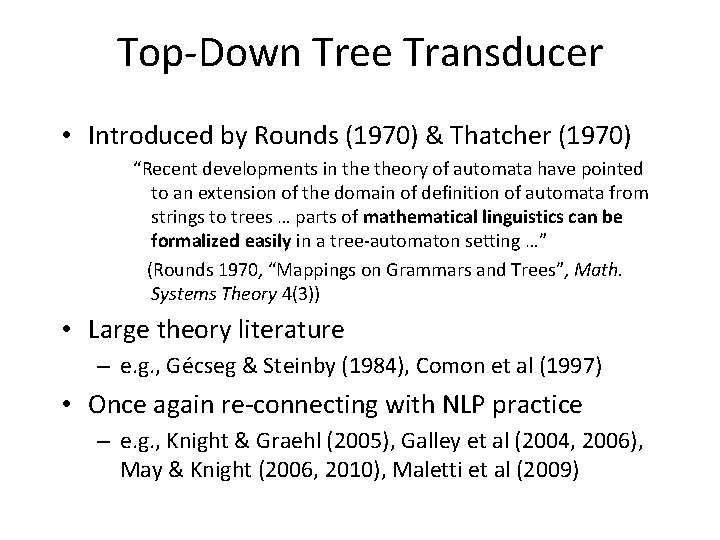

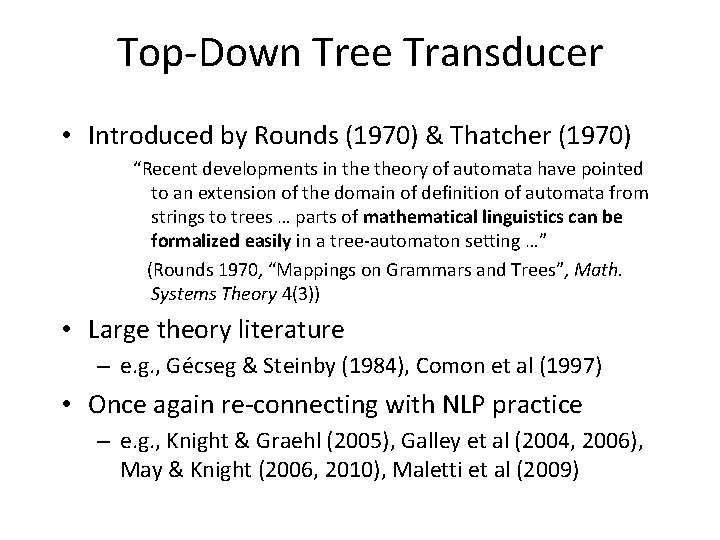

Top-Down Tree Transducer
• Introduced by Rounds (1970) & Thatcher (1970): “Recent developments in theory of automata have pointed to an extension of the domain of definition of automata from strings to trees … parts of mathematical linguistics can be formalized easily in a tree-automaton setting …” (Rounds 1970, “Mappings on Grammars and Trees”, Math. Systems Theory 4(3))
• Large theory literature, e.g., Gécseg & Steinby (1984), Comon et al. (1997)
• Once again re-connecting with NLP practice, e.g., Knight & Graehl (2005), Galley et al. (2004, 2006), May & Knight (2006, 2010), Maletti et al. (2009)

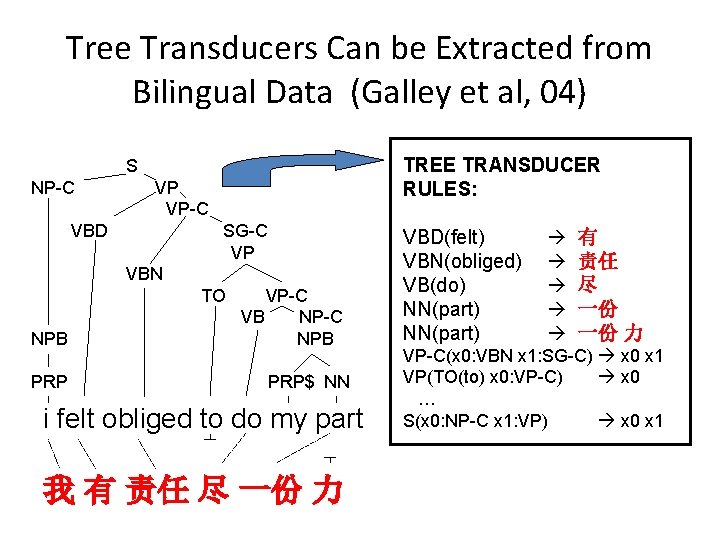

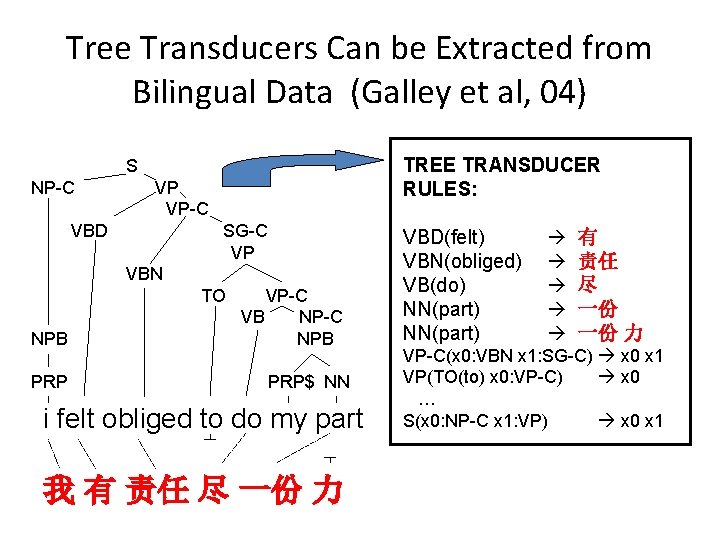

Tree Transducers Can be Extracted from Bilingual Data (Galley et al., 04)
English: i felt obliged to do my part (parse tree over S, NP-C, VP, VP-C, VBD, SG-C, VBN, TO, NPB, PRP, VB, PRP$, NN)
Chinese: 我 有 责任 尽 一份 力
TREE TRANSDUCER RULES:
• Lexical: VBD(felt), VBN(obliged), VB(do), NN(part) ↔ 有, 责任, 尽, 一份, 一份 力
• VP-C(x0:VBN x1:SG-C) → x0 x1
• VP(TO(to) x0:VP-C) → x0
• …
• S(x0:NP-C x1:VP) → x0 x1

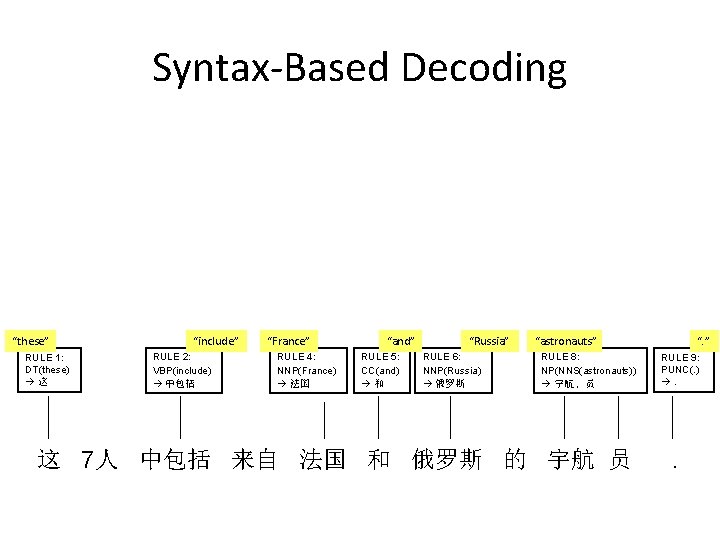

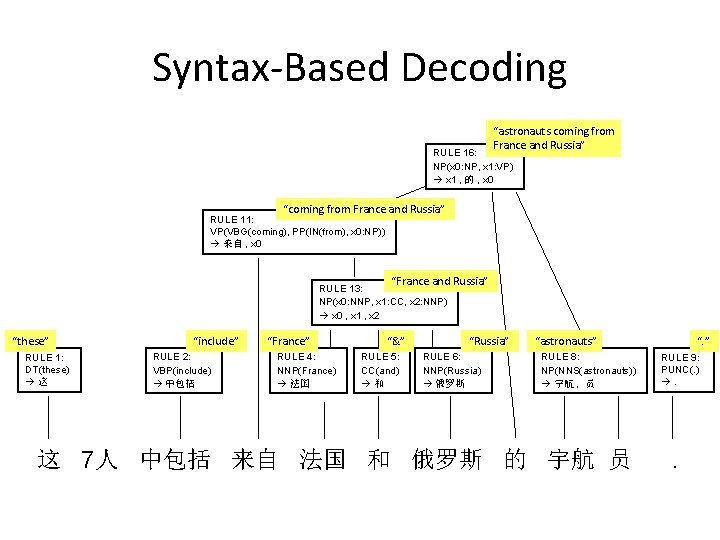

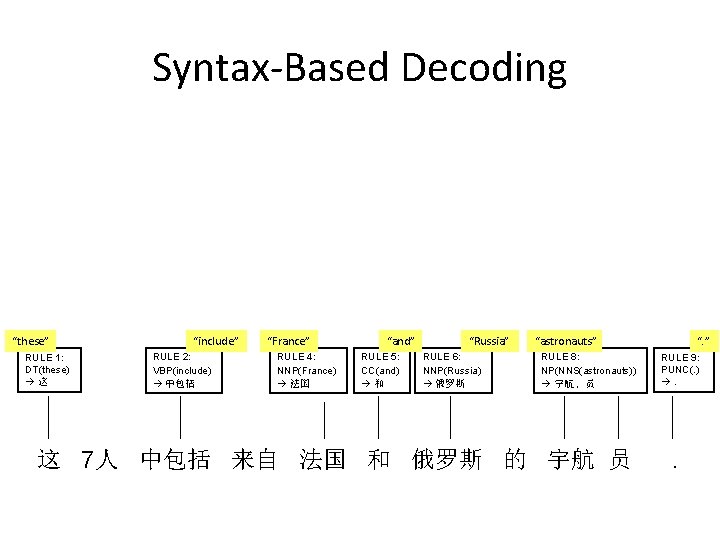

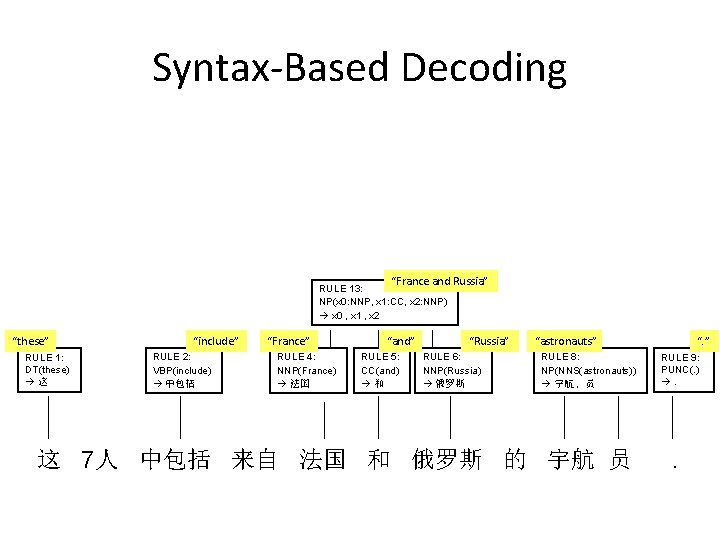

Syntax-Based Decoding
Chinese input: 这 7人 中包括 来自 法国 和 俄罗斯 的 宇航 员 .
• RULE 1: DT(these) → 这 (“these”)
• RULE 2: VBP(include) → 中包括 (“include”)
• RULE 4: NNP(France) → 法国 (“France”)
• RULE 5: CC(and) → 和 (“and”)
• RULE 6: NNP(Russia) → 俄罗斯 (“Russia”)
• RULE 8: NP(NNS(astronauts)) → 宇航, 员 (“astronauts”)
• RULE 9: PUNC(.) → . (“.”)

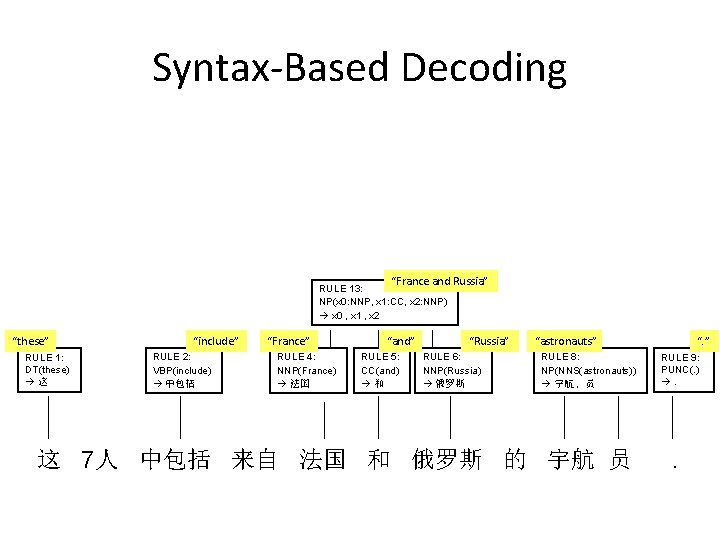

Syntax-Based Decoding
Chinese input: 这 7人 中包括 来自 法国 和 俄罗斯 的 宇航 员 .
• RULE 13: NP(x0:NNP, x1:CC, x2:NNP) → x0, x1, x2 (“France and Russia”)
• Lexical RULES 1, 2, 4, 5, 6, 8, 9 as before

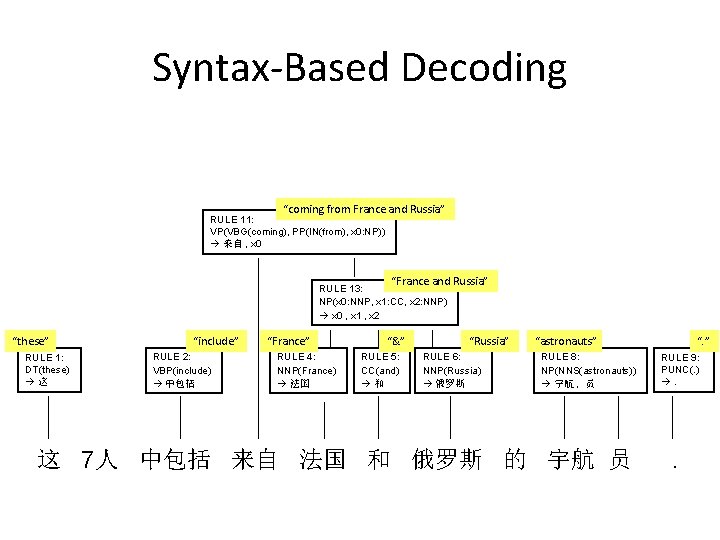

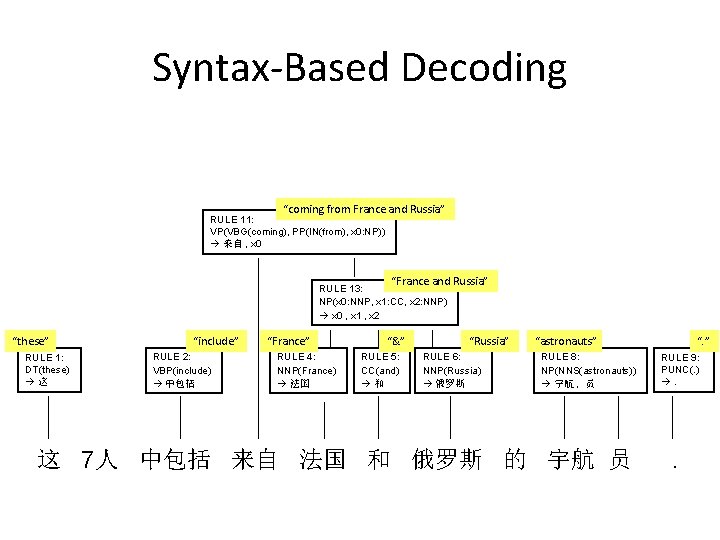

Syntax-Based Decoding
Chinese input: 这 7人 中包括 来自 法国 和 俄罗斯 的 宇航 员 .
• RULE 11: VP(VBG(coming), PP(IN(from), x0:NP)) → 来自, x0 (“coming from France and Russia”)
• RULE 13 and lexical RULES 1, 2, 4, 5, 6, 8, 9 as before

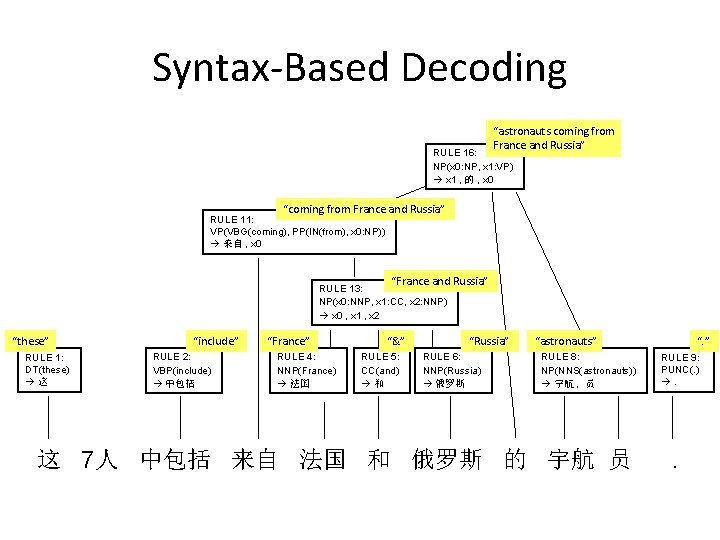

Syntax-Based Decoding
Chinese input: 这 7人 中包括 来自 法国 和 俄罗斯 的 宇航 员 .
• RULE 16: NP(x0:NP, x1:VP) → x1, 的, x0 (“astronauts coming from France and Russia”)
• RULES 11, 13 and lexical RULES 1, 2, 4, 5, 6, 8, 9 as before

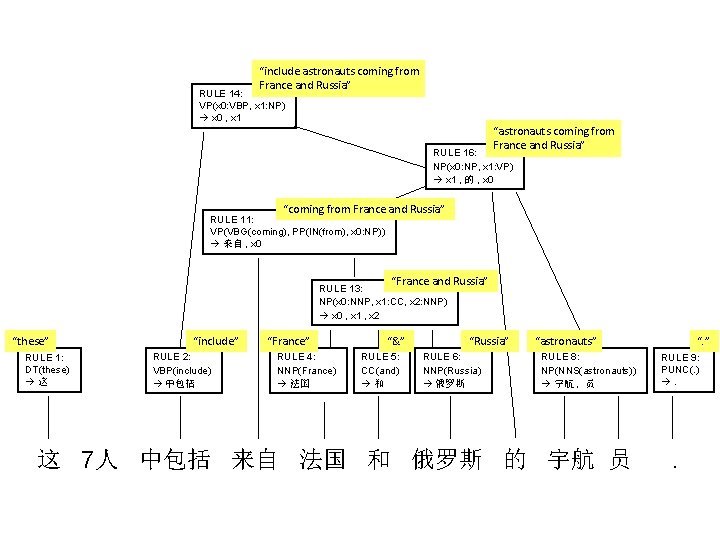

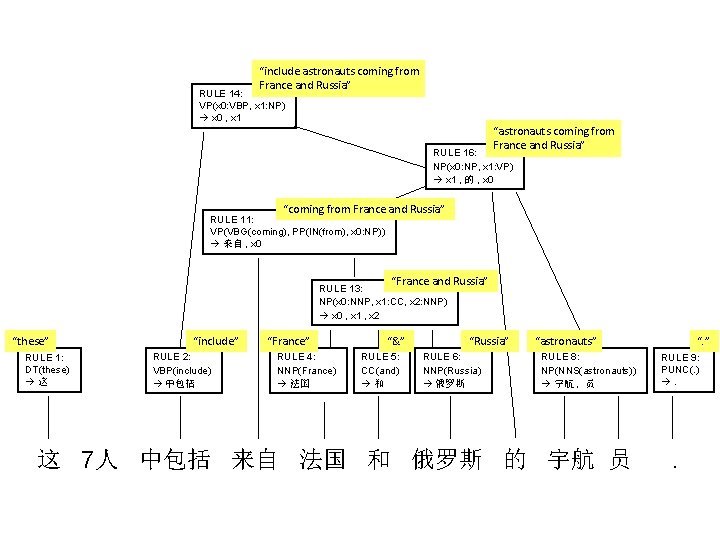

Syntax-Based Decoding
Chinese input: 这 7人 中包括 来自 法国 和 俄罗斯 的 宇航 员 .
• RULE 14: VP(x0:VBP, x1:NP) → x0, x1 (“include astronauts coming from France and Russia”)
• RULES 16, 11, 13 and lexical RULES 1, 2, 4, 5, 6, 8, 9 as before

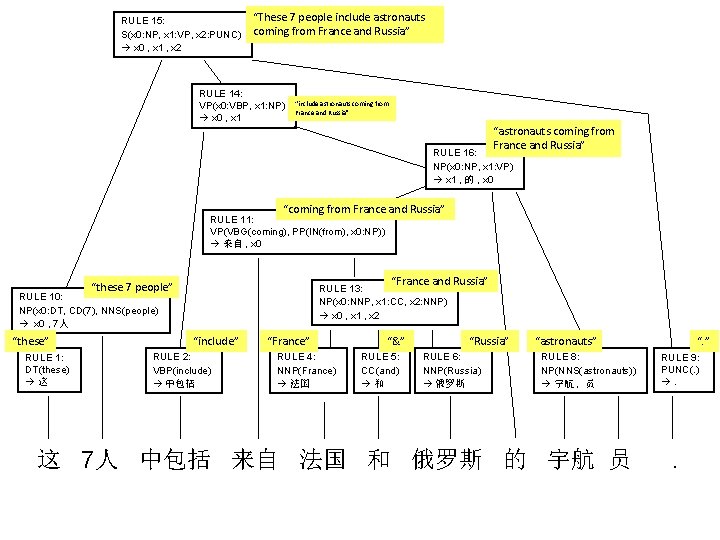

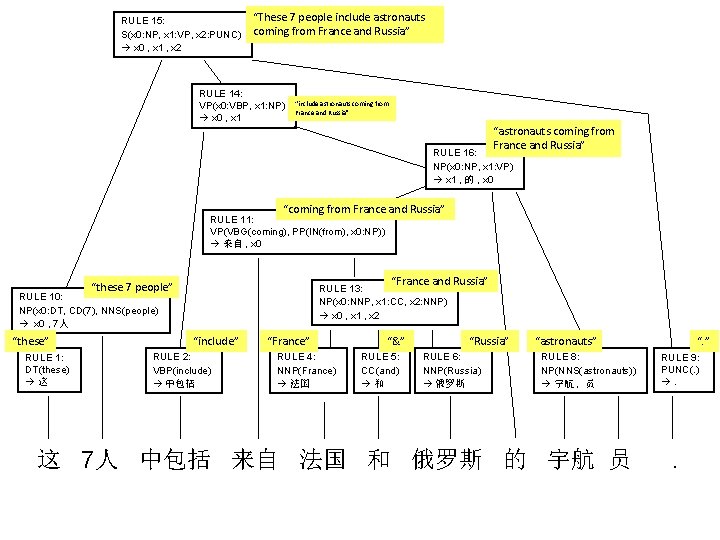

Syntax-Based Decoding
Chinese input: 这 7人 中包括 来自 法国 和 俄罗斯 的 宇航 员 .
• RULE 15: S(x0:NP, x1:VP, x2:PUNC) → x0, x1, x2 (“These 7 people include astronauts coming from France and Russia”)
• RULE 10: NP(x0:DT, CD(7), NNS(people)) → x0, 7人 (“these 7 people”)
• RULE 14: VP(x0:VBP, x1:NP) → x0, x1 (“include astronauts coming from France and Russia”)
• RULE 16: NP(x0:NP, x1:VP) → x1, 的, x0 (“astronauts coming from France and Russia”)
• RULE 11: VP(VBG(coming), PP(IN(from), x0:NP)) → 来自, x0 (“coming from France and Russia”)
• RULE 13: NP(x0:NNP, x1:CC, x2:NNP) → x0, x1, x2 (“France and Russia”)
• RULE 1: DT(these) → 这 (“these”)
• RULE 2: VBP(include) → 中包括 (“include”)
• RULE 4: NNP(France) → 法国 (“France”)
• RULE 5: CC(and) → 和 (“and”)
• RULE 6: NNP(Russia) → 俄罗斯 (“Russia”)
• RULE 8: NP(NNS(astronauts)) → 宇航, 员 (“astronauts”)
• RULE 9: PUNC(.) → . (“.”)

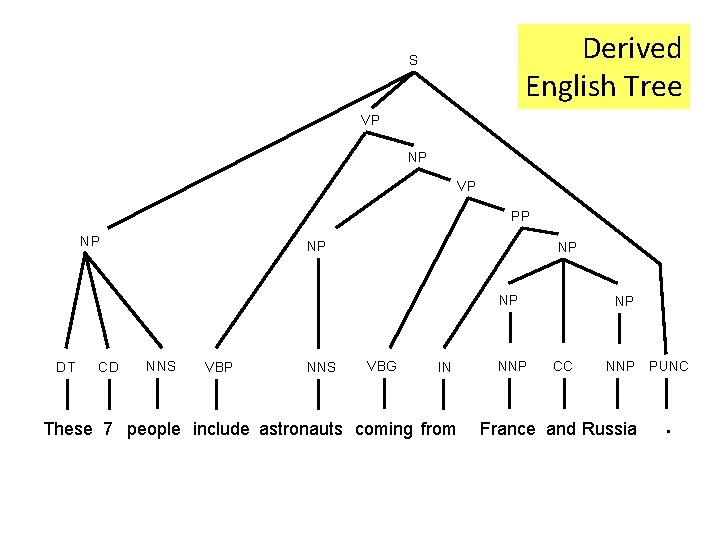

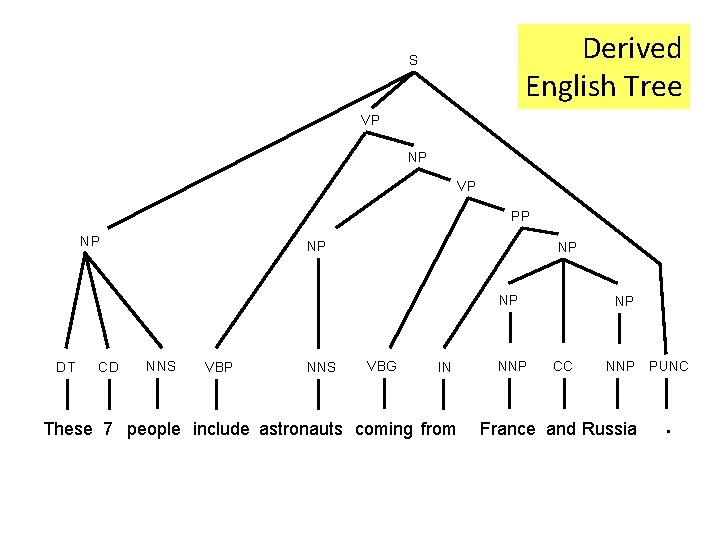

Derived English Tree
(S (NP (DT These) (CD 7) (NNS people)) (VP (VBP include) (NP (NP (NNS astronauts)) (VP (VBG coming) (PP (IN from) (NP (NNP France) (CC and) (NNP Russia)))))) (PUNC .))
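Read left to right, each rule maps an English subtree to a Chinese string. Below is a minimal sketch, assuming tuples for trees, lowercase words, and a hypothetical `Var` class for the x0, x1, … variables. It runs the thirteen rules forward over the derived English tree and checks that the result is the Chinese input. A decoder works in the opposite direction: it matches rule right-hand sides against the Chinese string bottom-up and builds the English tree, usually inside a packed forest of alternatives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Var:
    """A rule variable such as x0:NNP: matches any subtree whose root has this label."""
    index: int
    label: str


# (left-hand-side tree pattern, right-hand side); ints in the RHS refer to variables x0, x1, ...
RULES = [
    (("DT", "these"), ["这"]),                                                      # RULE 1
    (("VBP", "include"), ["中包括"]),                                               # RULE 2
    (("NNP", "France"), ["法国"]),                                                  # RULE 4
    (("CC", "and"), ["和"]),                                                        # RULE 5
    (("NNP", "Russia"), ["俄罗斯"]),                                                # RULE 6
    (("NP", ("NNS", "astronauts")), ["宇航", "员"]),                                # RULE 8
    (("PUNC", "."), ["."]),                                                         # RULE 9
    (("NP", Var(0, "DT"), ("CD", "7"), ("NNS", "people")), [0, "7人"]),             # RULE 10
    (("VP", ("VBG", "coming"), ("PP", ("IN", "from"), Var(0, "NP"))), ["来自", 0]),  # RULE 11
    (("NP", Var(0, "NNP"), Var(1, "CC"), Var(2, "NNP")), [0, 1, 2]),                # RULE 13
    (("VP", Var(0, "VBP"), Var(1, "NP")), [0, 1]),                                  # RULE 14
    (("S", Var(0, "NP"), Var(1, "VP"), Var(2, "PUNC")), [0, 1, 2]),                 # RULE 15
    (("NP", Var(0, "NP"), Var(1, "VP")), [1, "的", 0]),                             # RULE 16
]


def match(pattern, tree, env):
    """Match a rule pattern against a tree, binding variables in env."""
    if isinstance(pattern, Var):
        if isinstance(tree, tuple) and tree[0] == pattern.label:
            env[pattern.index] = tree
            return True
        return False
    if isinstance(pattern, str):
        return pattern == tree
    return (isinstance(tree, tuple) and len(tree) == len(pattern) and tree[0] == pattern[0]
            and all(match(p, t, env) for p, t in zip(pattern[1:], tree[1:])))


def to_chinese(tree):
    """Apply the first matching rule at the root, then translate variable subtrees recursively."""
    for pattern, rhs in RULES:
        env = {}
        if match(pattern, tree, env):
            out = []
            for item in rhs:
                out.extend(to_chinese(env[item]) if isinstance(item, int) else [item])
            return out
    raise ValueError(f"no rule matches {tree!r}")


ENGLISH = ("S",
           ("NP", ("DT", "these"), ("CD", "7"), ("NNS", "people")),
           ("VP", ("VBP", "include"),
                  ("NP", ("NP", ("NNS", "astronauts")),
                         ("VP", ("VBG", "coming"),
                                ("PP", ("IN", "from"),
                                       ("NP", ("NNP", "France"), ("CC", "and"), ("NNP", "Russia")))))),
           ("PUNC", "."))

if __name__ == "__main__":
    print(" ".join(to_chinese(ENGLISH)))
```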

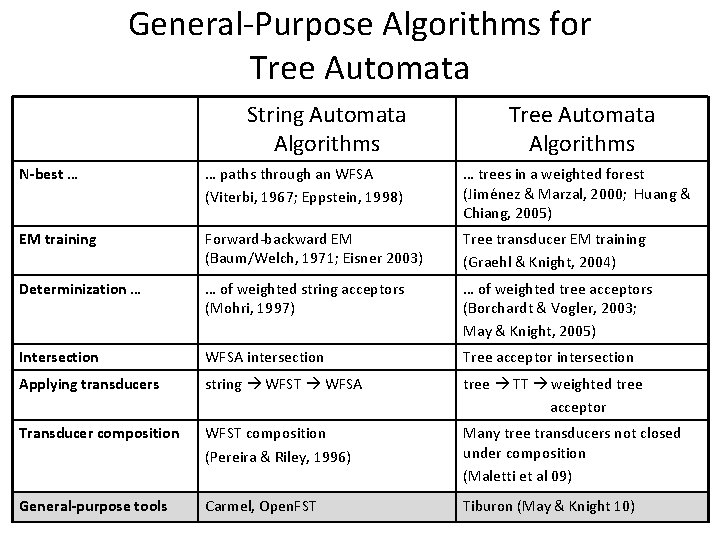

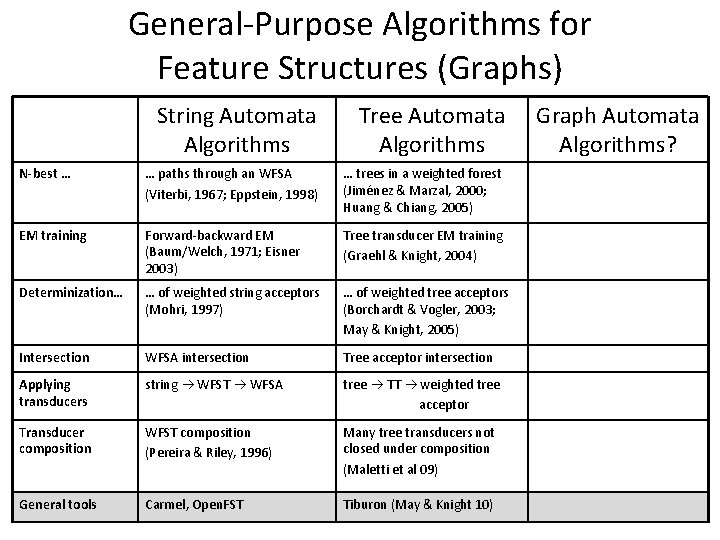

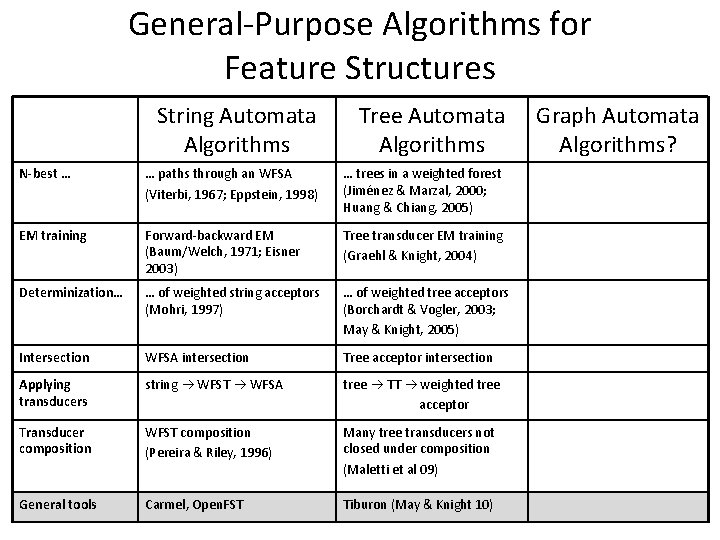

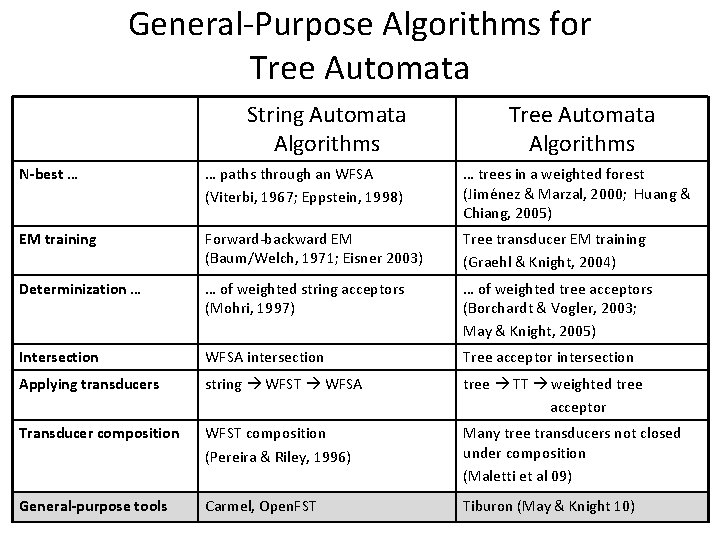

General-Purpose Algorithms for Tree Automata

| Task | String automata algorithms | Tree automata algorithms |
|---|---|---|
| N-best | … paths through a WFSA (Viterbi, 1967; Eppstein, 1998) | … trees in a weighted forest (Jiménez & Marzal, 2000; Huang & Chiang, 2005) |
| EM training | Forward-backward EM (Baum/Welch, 1971; Eisner 2003) | Tree transducer EM training (Graehl & Knight, 2004) |
| Determinization | … of weighted string acceptors (Mohri, 1997) | … of weighted tree acceptors (Borchardt & Vogler, 2003; May & Knight, 2005) |
| Intersection | WFSA intersection | Tree acceptor intersection |
| Applying transducers | string ∘ WFST → WFSA | tree ∘ TT → weighted tree acceptor |
| Transducer composition | WFST composition (Pereira & Riley, 1996) | Many tree transducers not closed under composition (Maletti et al 09) |
| General-purpose tools | Carmel, OpenFST | Tiburon (May & Knight 10) |
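The N-best row for trees generalizes Viterbi from paths to trees. A weighted forest is a hypergraph, and each hyperedge connects a node to the list of child nodes it is built from. Below is a minimal sketch of the 1-best case, assuming an acyclic forest given together with a bottom-up node order. The toy forest and its probabilities are made up. The k-best algorithms cited above (Jiménez & Marzal; Huang & Chiang) extend the same bottom-up pass.

```python
import math
from collections import defaultdict


def best_tree(hyperedges, order, goal):
    """Viterbi over an acyclic weighted forest (hypergraph).
    hyperedges: (head, label, tails, prob); order: nodes listed with tails before heads.
    Returns (probability of the best tree rooted at goal, that tree), or (0.0, None)."""
    incoming = defaultdict(list)
    for edge in hyperedges:
        incoming[edge[0]].append(edge)
    best = {}  # node -> (best probability, label, tails) of its best incoming hyperedge
    for node in order:
        for _, label, tails, prob in incoming[node]:
            if not all(t in best for t in tails):
                continue  # some child has no derivation
            score = prob * math.prod(best[t][0] for t in tails)
            if node not in best or score > best[node][0]:
                best[node] = (score, label, tails)

    def build(node):
        _, label, tails = best[node]
        return (label, *(build(t) for t in tails)) if tails else label

    if goal not in best:
        return 0.0, None
    return best[goal][0], build(goal)


if __name__ == "__main__":
    forest = [
        ("N1", "he", [], 1.0),
        ("V1", "enjoys", [], 0.6), ("V1", "likes", [], 0.4),
        ("O1", "music", [], 1.0),
        ("VP", "VP", ["V1", "O1"], 1.0),
        ("S", "S", ["N1", "VP"], 0.9),
        ("S", "S", ["VP"], 0.1),
    ]
    print(best_tree(forest, ["N1", "V1", "O1", "VP", "S"], "S"))
```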

Machine Translation
• Phrase-based MT: source string → target string
• Syntax-based MT: source string → source tree → target string
• Meaning-based MT: source string → source tree → meaning representation → target tree → target string

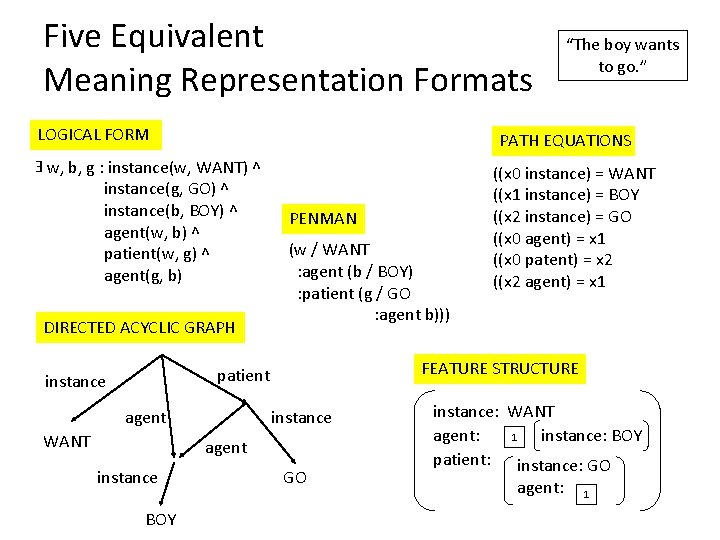

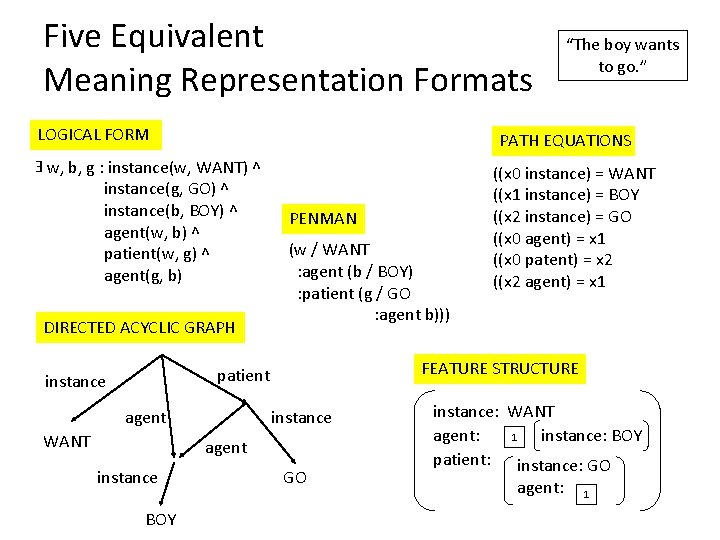

Five Equivalent Meaning Representation Formats, for “The boy wants to go.”
LOGICAL FORM: ∃ w, b, g : instance(w, WANT) ∧ instance(g, GO) ∧ instance(b, BOY) ∧ agent(w, b) ∧ patient(w, g) ∧ agent(g, b)
PATH EQUATIONS: (x0 instance) = WANT, (x1 instance) = BOY, (x2 instance) = GO, (x0 agent) = x1, (x0 patient) = x2, (x2 agent) = x1
DIRECTED ACYCLIC GRAPH: root -instance-> WANT, root -agent-> node 1, root -patient-> node 2; node 1 -instance-> BOY; node 2 -instance-> GO, node 2 -agent-> node 1
PENMAN: (w / WANT :agent (b / BOY) :patient (g / GO :agent b))
FEATURE STRUCTURE: [instance: WANT, agent: [1] [instance: BOY], patient: [instance: GO, agent: [1]]]
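Converting between these formats is mechanical. Below is a minimal sketch, assuming a simplified PENMAN syntax with no quoted strings containing spaces and no special handling of inverse roles such as :agent-of (they stay as literal role names). It parses the PENMAN form into (relation, source, target) triples, which are exactly the conjuncts of the logical form. The name `penman_to_triples` is illustrative, not a library function.

```python
import re

# Tokens: parentheses, "/", roles like ":agent", and symbols (variables, concepts, constants).
TOKEN = re.compile(r"[()/]|:[^\s()]+|[^\s()/:]+")


def penman_to_triples(text):
    """Parse simplified PENMAN into (relation, source, target) triples."""
    tokens = TOKEN.findall(text)
    pos = 0
    triples = []

    def take(expected=None):
        nonlocal pos
        tok = tokens[pos]
        if expected is not None and tok != expected:
            raise ValueError(f"expected {expected!r}, got {tok!r}")
        pos += 1
        return tok

    def node():
        take("(")
        var = take()
        take("/")
        triples.append(("instance", var, take()))  # the node's concept
        while tokens[pos] != ")":
            role = take()[1:]  # drop the leading ":"
            # a nested node, or a bare variable (re-entrancy) / constant
            target = node() if tokens[pos] == "(" else take()
            triples.append((role, var, target))
        take(")")
        return var

    node()
    return triples


if __name__ == "__main__":
    penman = "(w / WANT :agent (b / BOY) :patient (g / GO :agent b))"
    logical_form = {("instance", "w", "WANT"), ("instance", "g", "GO"), ("instance", "b", "BOY"),
                    ("agent", "w", "b"), ("patient", "w", "g"), ("agent", "g", "b")}
    print(set(penman_to_triples(penman)) == logical_form)  # same conjuncts in both formats
```

The re-entrant `b` under GO becomes the triple agent(g, b). This single shared node is what the DAG and the feature structure's tag [1] express.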

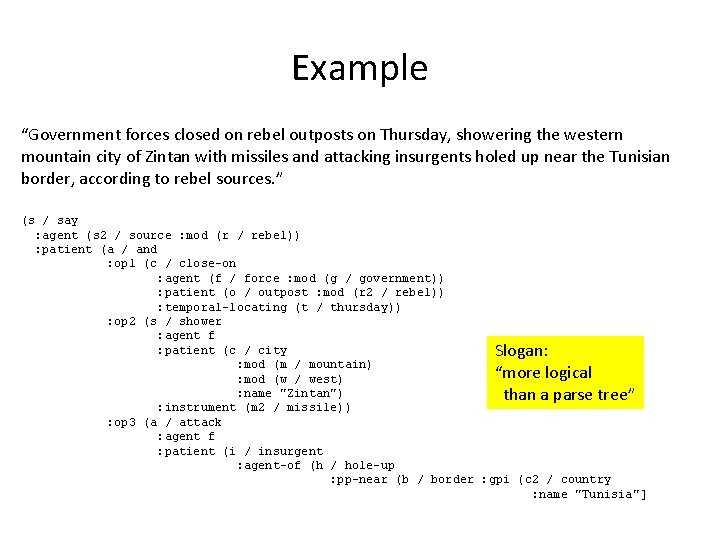

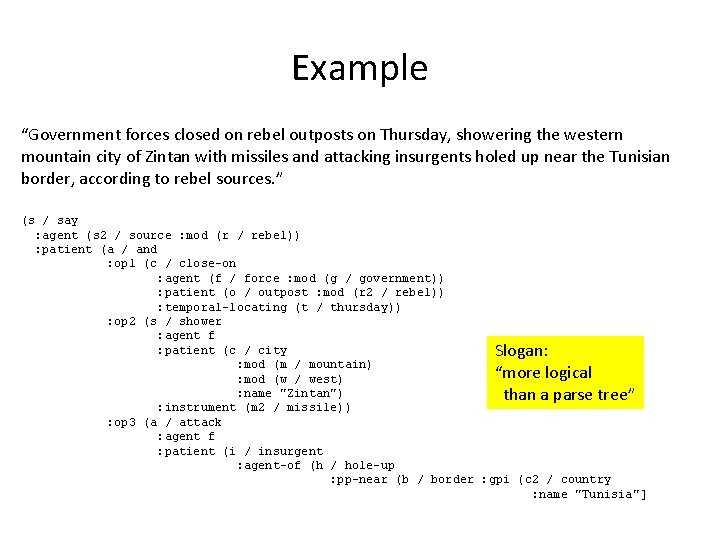

Example
“Government forces closed on rebel outposts on Thursday, showering the western mountain city of Zintan with missiles and attacking insurgents holed up near the Tunisian border, according to rebel sources.”

    (s / say
       :agent (s2 / source
          :mod (r / rebel))
       :patient (a / and
          :op1 (c / close-on
             :agent (f / force
                :mod (g / government))
             :patient (o / outpost
                :mod (r2 / rebel))
             :temporal-locating (t / thursday))
          :op2 (s3 / shower
             :agent f
             :patient (c3 / city
                :mod (m / mountain)
                :mod (w / west)
                :name "Zintan")
             :instrument (m2 / missile))
          :op3 (a2 / attack
             :agent f
             :patient (i / insurgent
                :agent-of (h / hole-up
                   :pp-near (b / border
                      :gpi (c2 / country
                         :name "Tunisia")))))))

Slogan: “more logical than a parse tree”

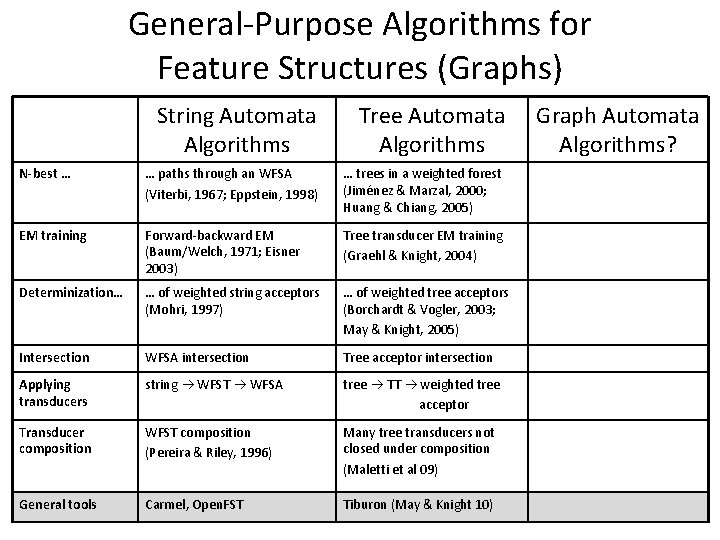

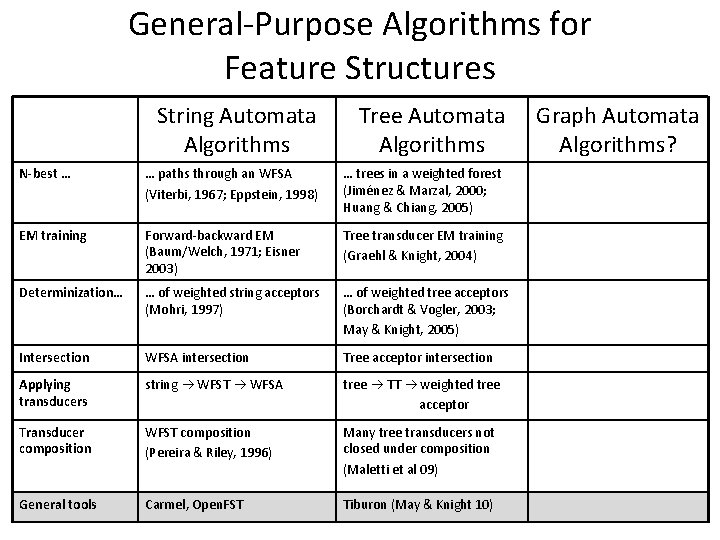

General-Purpose Algorithms for Feature Structures (Graphs)

| Task | String automata algorithms | Tree automata algorithms | Graph automata algorithms? |
|---|---|---|---|
| N-best | … paths through a WFSA (Viterbi, 1967; Eppstein, 1998) | … trees in a weighted forest (Jiménez & Marzal, 2000; Huang & Chiang, 2005) | |
| EM training | Forward-backward EM (Baum/Welch, 1971; Eisner 2003) | Tree transducer EM training (Graehl & Knight, 2004) | |
| Determinization | … of weighted string acceptors (Mohri, 1997) | … of weighted tree acceptors (Borchardt & Vogler, 2003; May & Knight, 2005) | |
| Intersection | WFSA intersection | Tree acceptor intersection | |
| Applying transducers | string ∘ WFST → WFSA | tree ∘ TT → weighted tree acceptor | |
| Transducer composition | WFST composition (Pereira & Riley, 1996) | Many tree transducers not closed under composition (Maletti et al 09) | |
| General tools | Carmel, OpenFST | Tiburon (May & Knight 10) | |

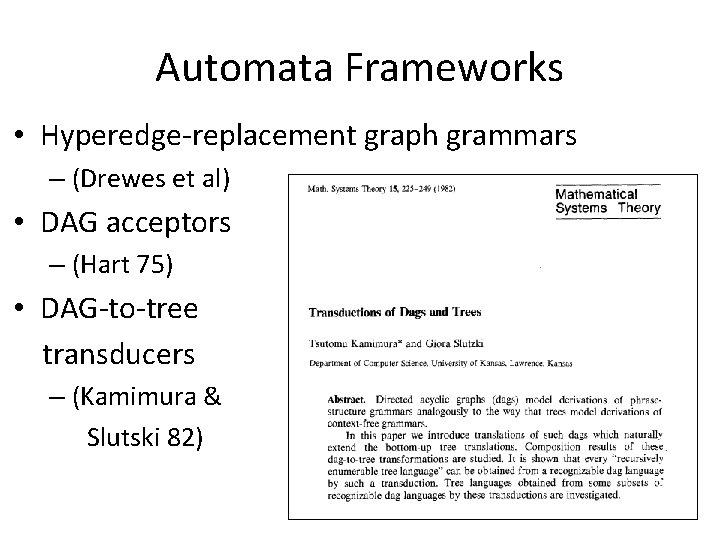

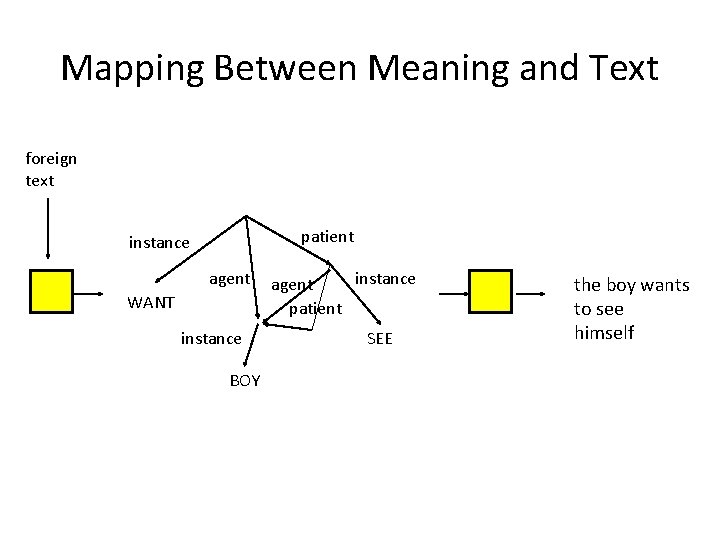

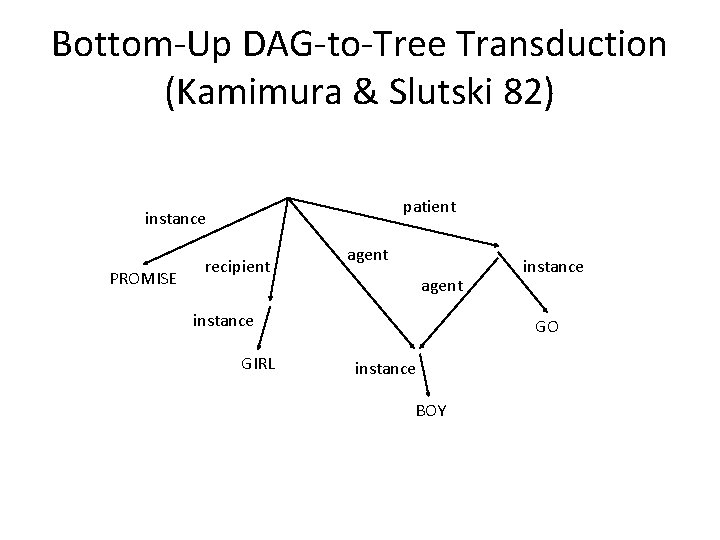

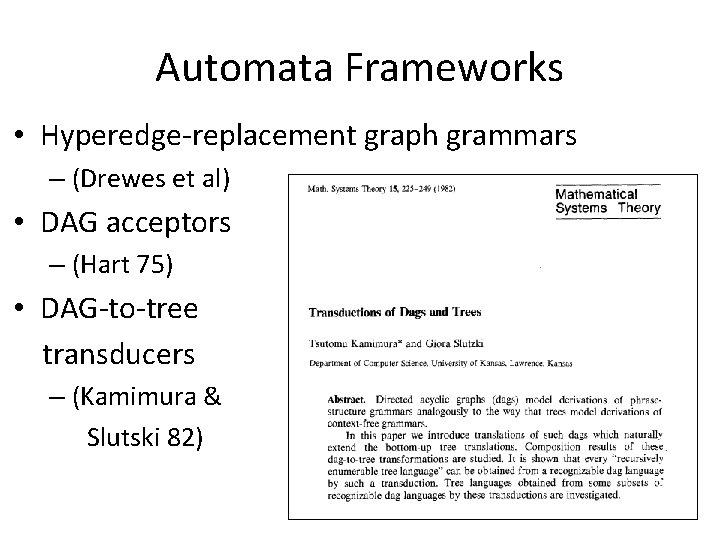

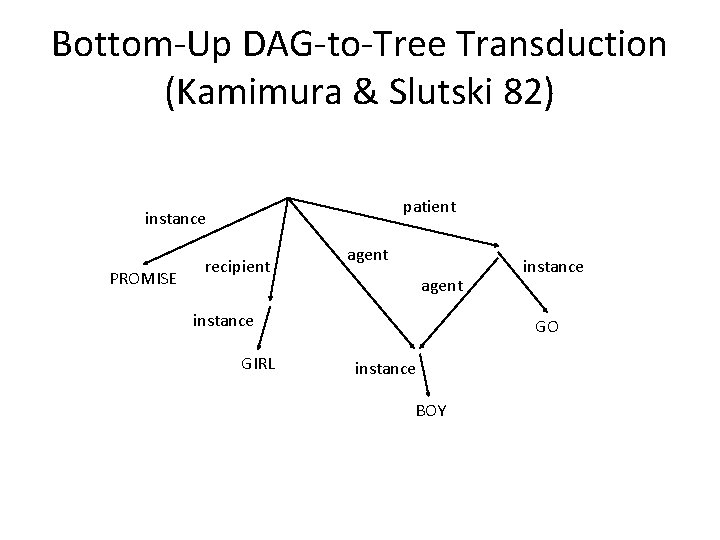

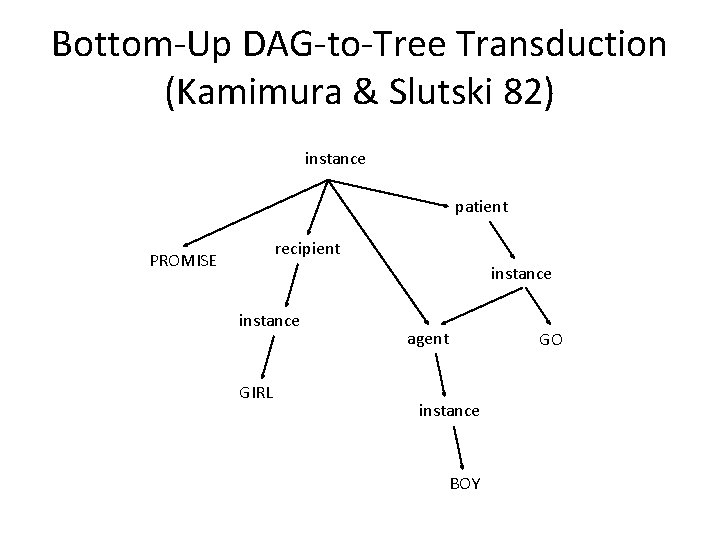

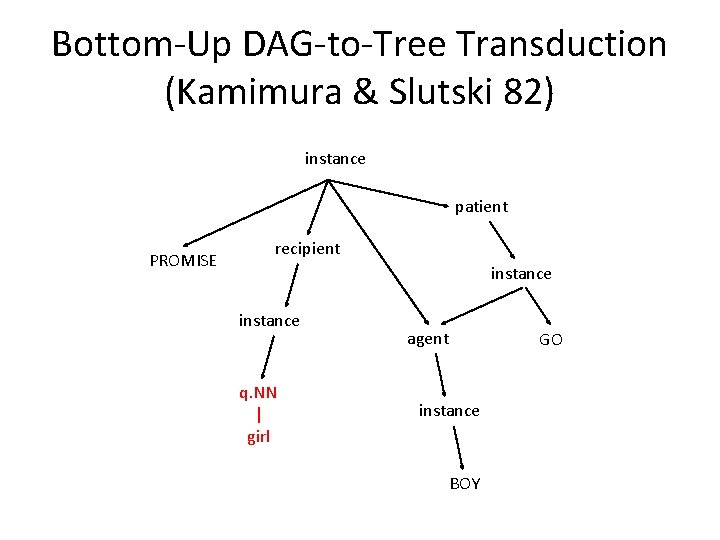

Automata Frameworks
• Hyperedge-replacement graph grammars (Drewes et al.)
• DAG acceptors (Hart 75)
• DAG-to-tree transducers (Kamimura & Slutski 82)

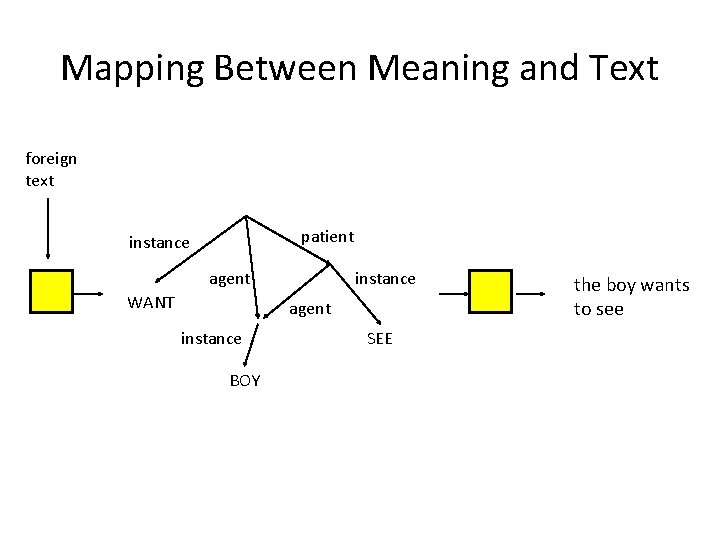

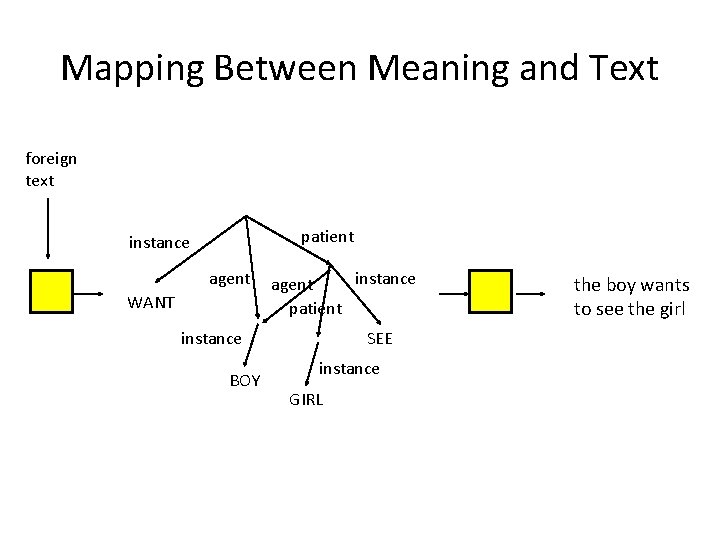

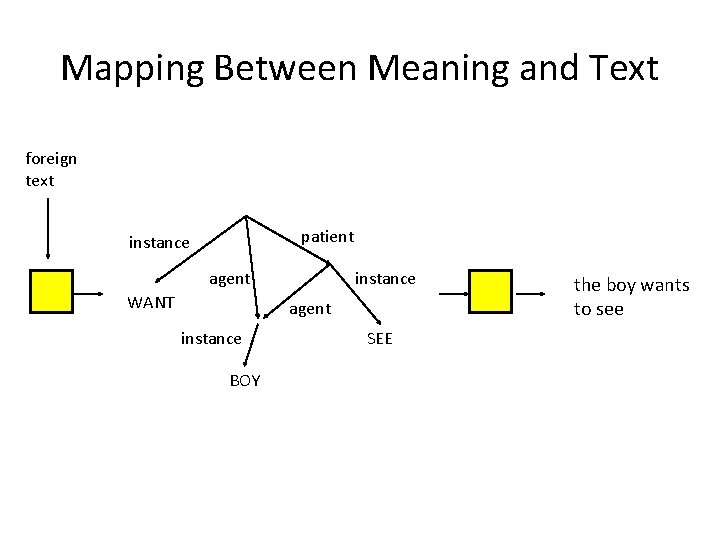

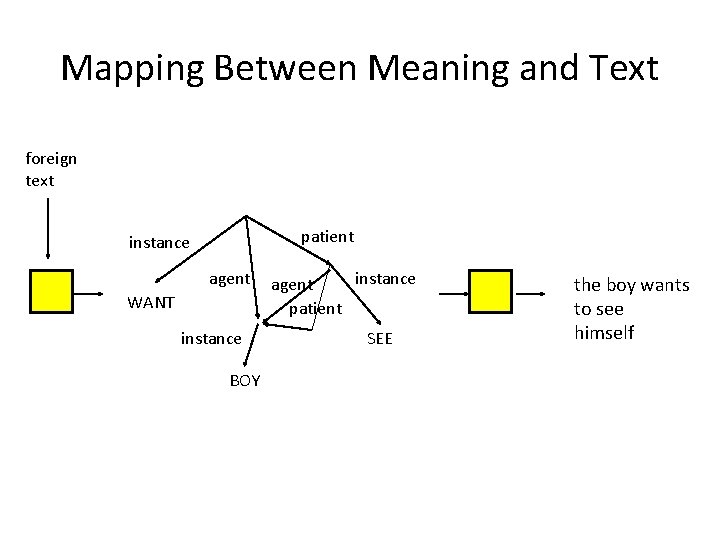

Mapping Between Meaning and Text (English and foreign text)
Meaning: (w / WANT :agent (b / BOY) :patient (s / SEE :agent b))
English: the boy wants to see

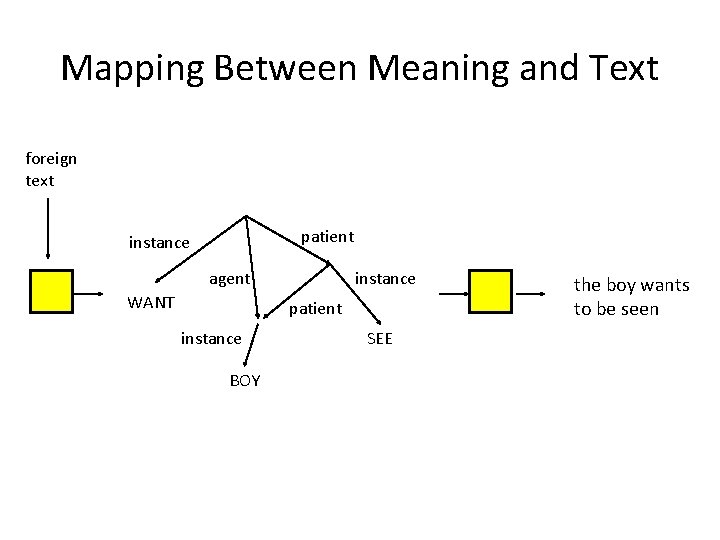

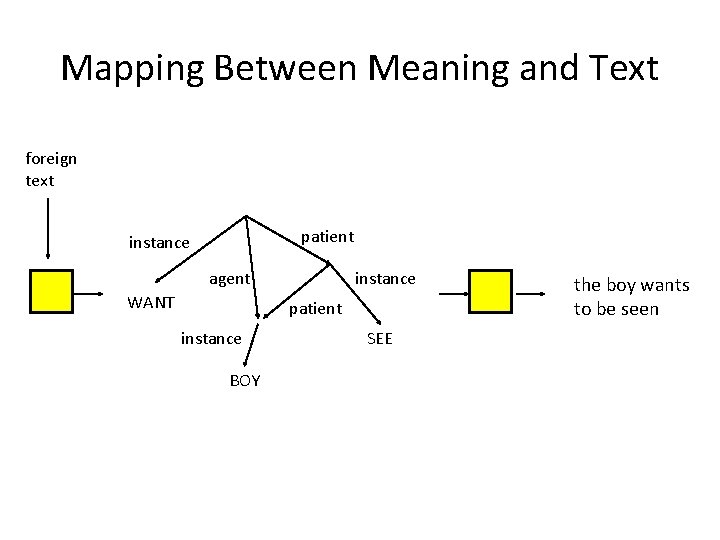

Mapping Between Meaning and Text (English and foreign text)
Meaning: (w / WANT :agent (b / BOY) :patient (s / SEE :patient b))
English: the boy wants to be seen

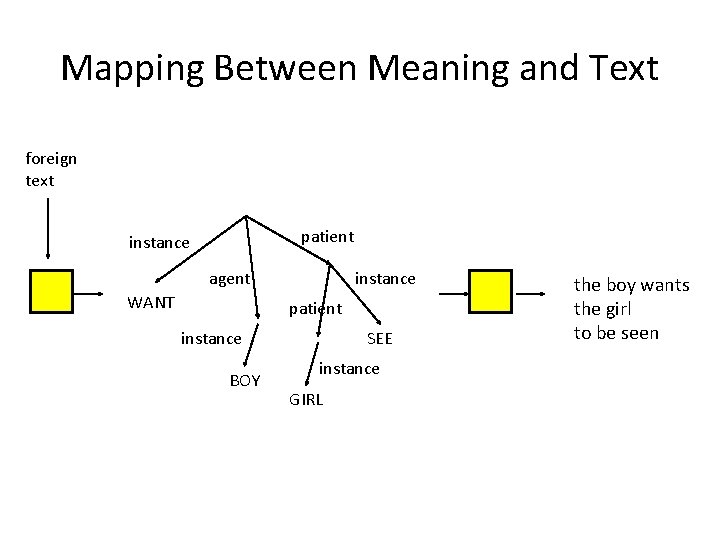

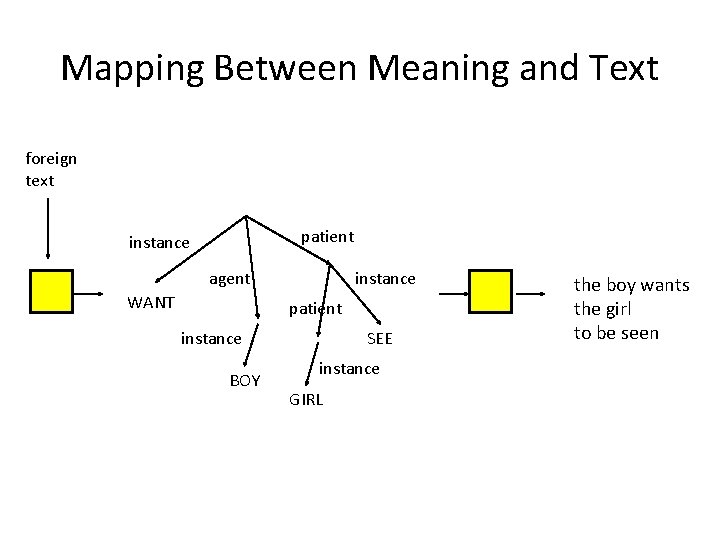

Mapping Between Meaning and Text (English and foreign text)
Meaning: (w / WANT :agent (b / BOY) :patient (s / SEE :patient (g / GIRL)))
English: the boy wants the girl to be seen

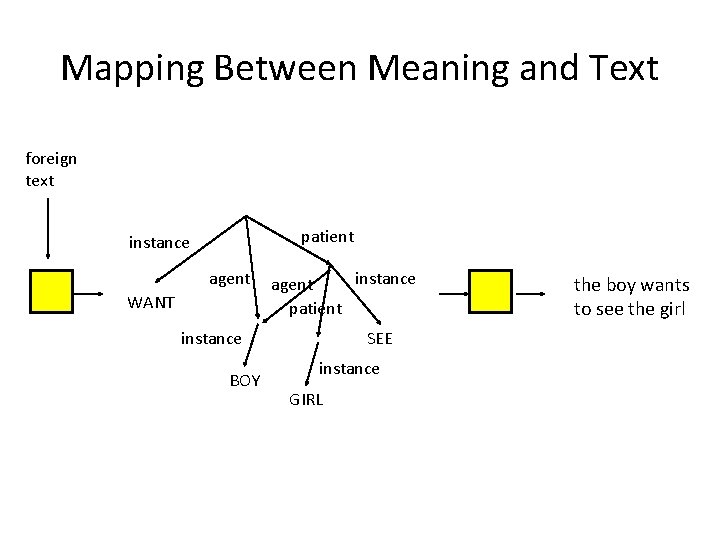

Mapping Between Meaning and Text (English and foreign text)
Meaning: (w / WANT :agent (b / BOY) :patient (s / SEE :agent b :patient (g / GIRL)))
English: the boy wants to see the girl

Mapping Between Meaning and Text (English and foreign text)
Meaning: (w / WANT :agent (b / BOY) :patient (s / SEE :agent b :patient b))
English: the boy wants to see himself

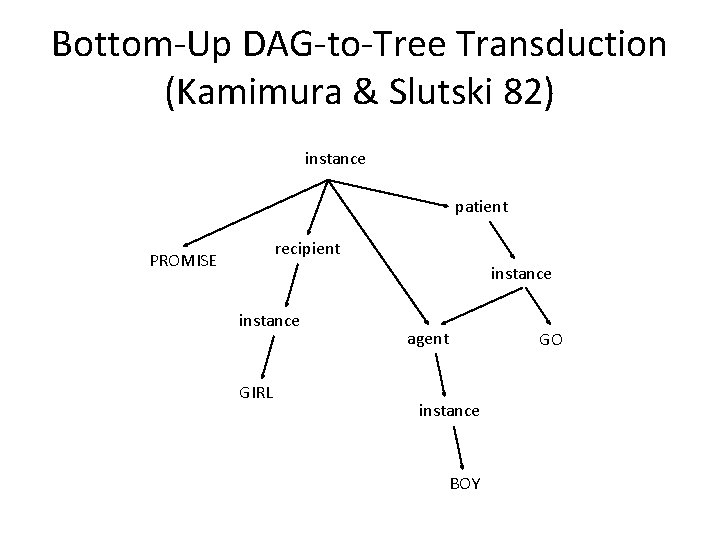

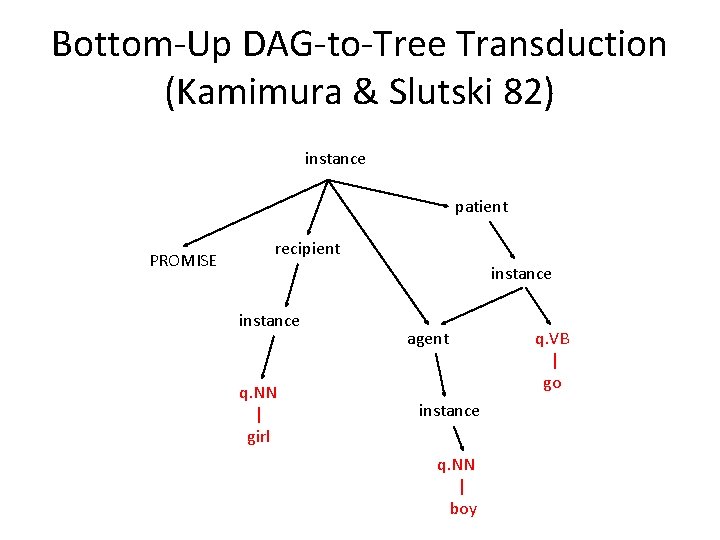

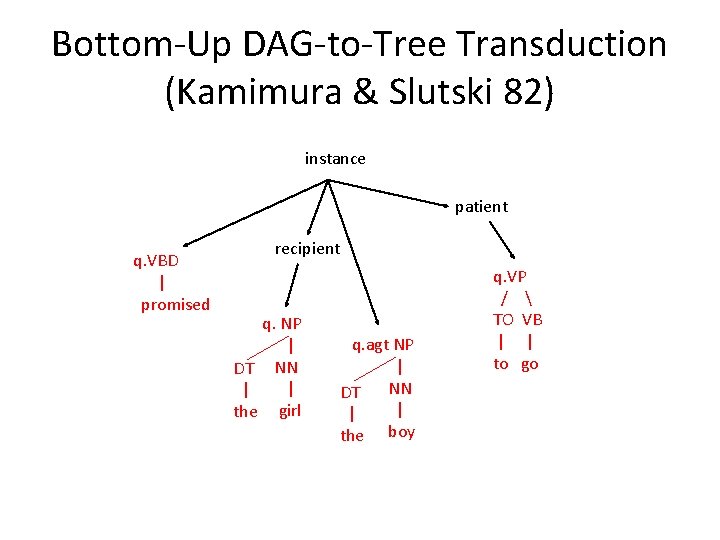

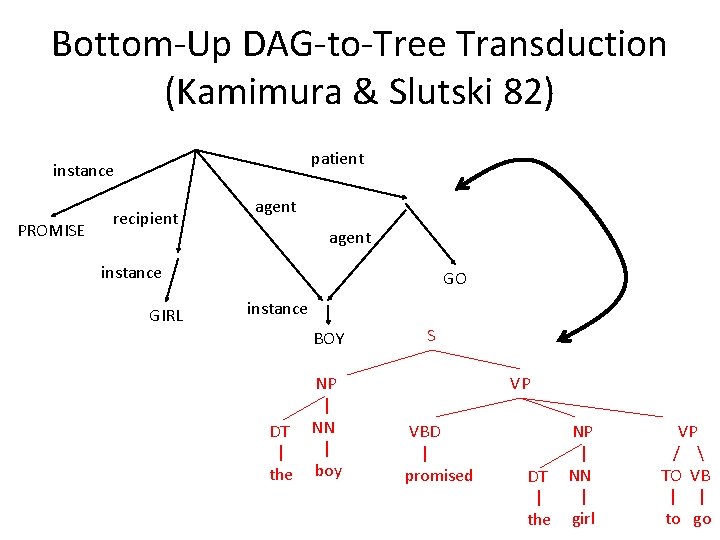

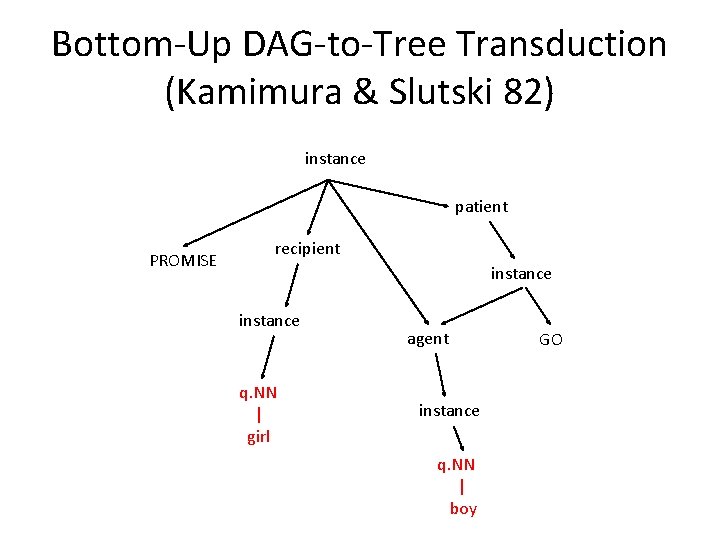

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Input graph: (p / PROMISE :agent (b / BOY) :recipient (g / GIRL) :patient (o / GO :agent b))


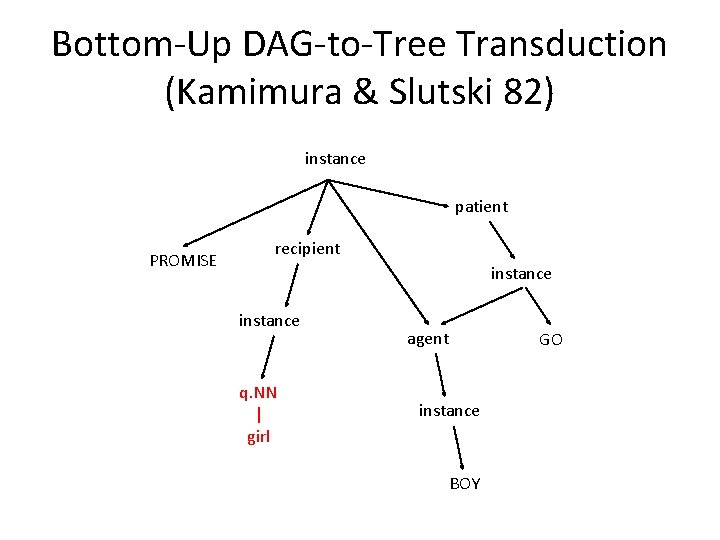

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: GIRL ⇒ q.(NN girl)

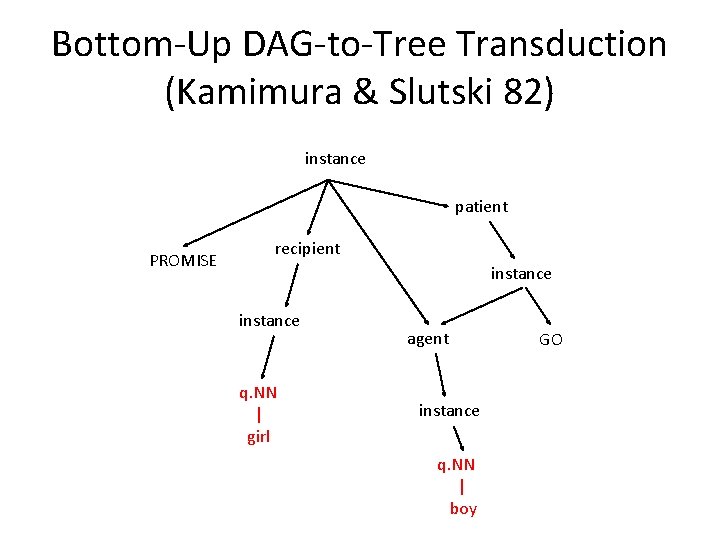

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: BOY ⇒ q.(NN boy)

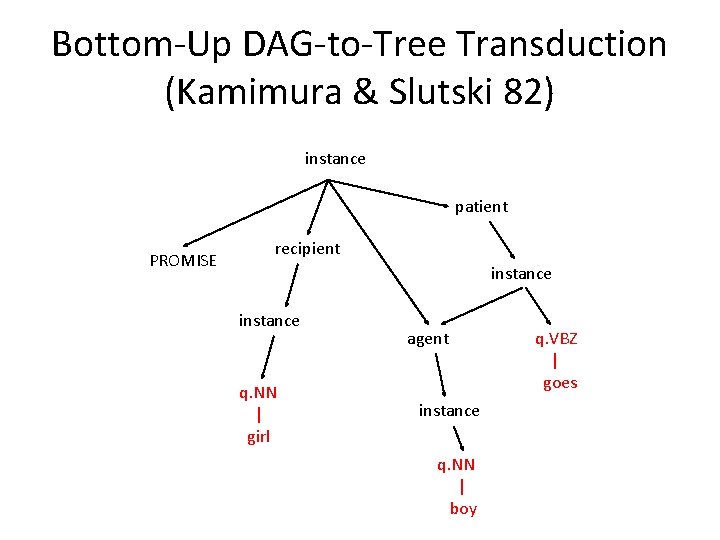

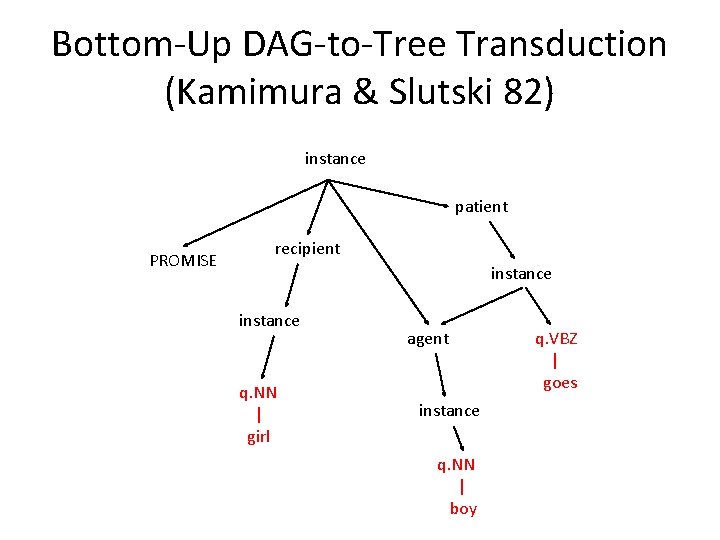

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: GO ⇒ q.(VBZ goes)

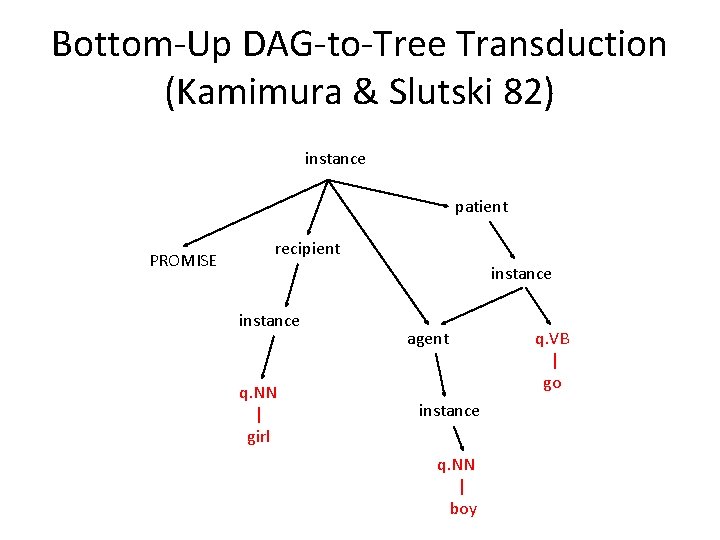

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: GO ⇒ q.(VB go), in place of q.(VBZ goes)

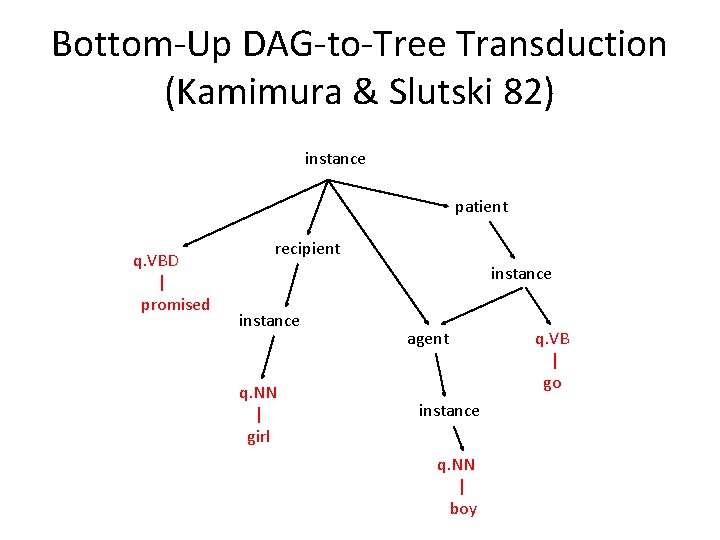

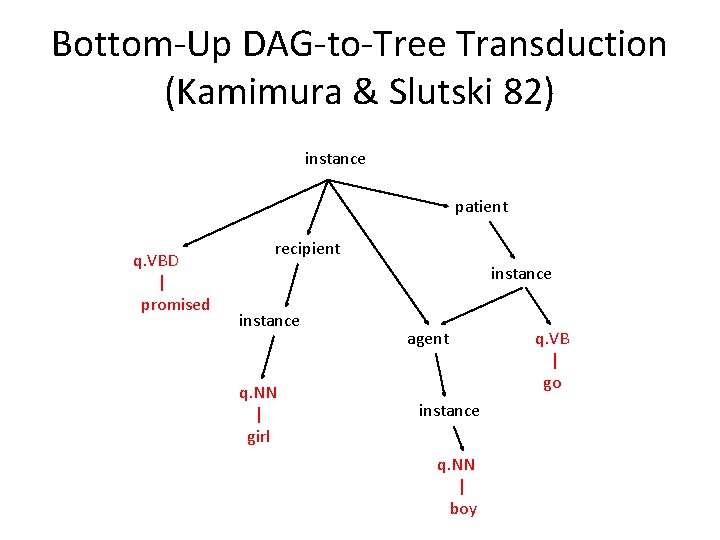

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: PROMISE ⇒ q.(VBD promised)

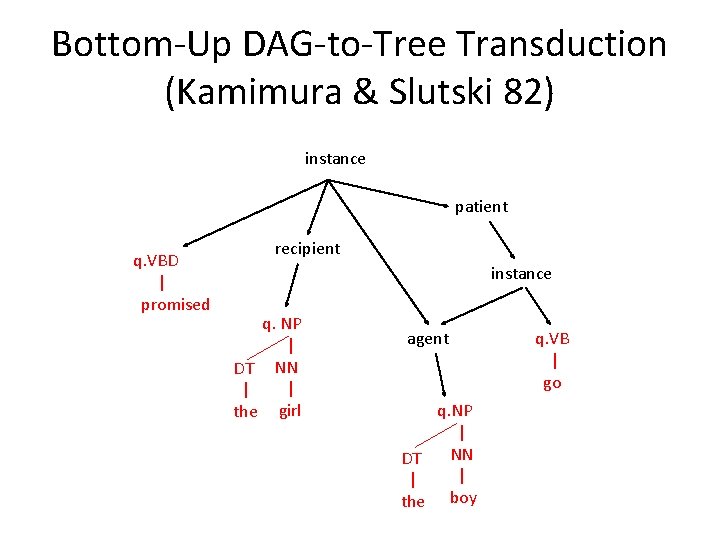

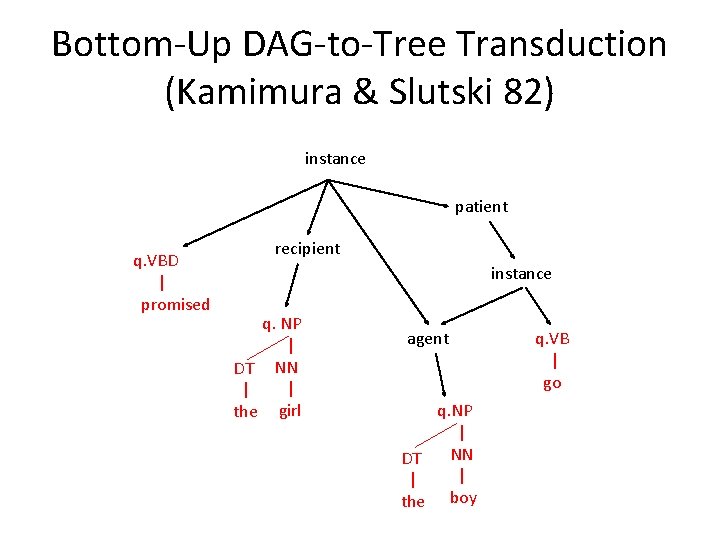

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: q.(NN girl) ⇒ q.(NP (DT the) (NN girl)); q.(NN boy) ⇒ q.(NP (DT the) (NN boy))

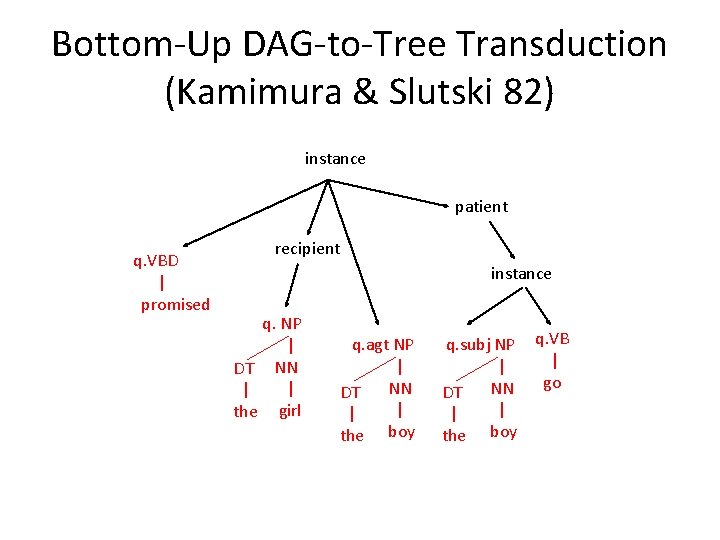

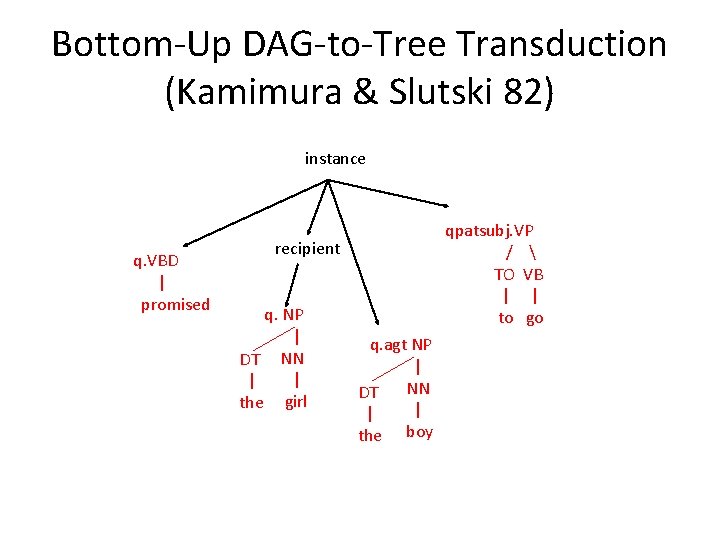

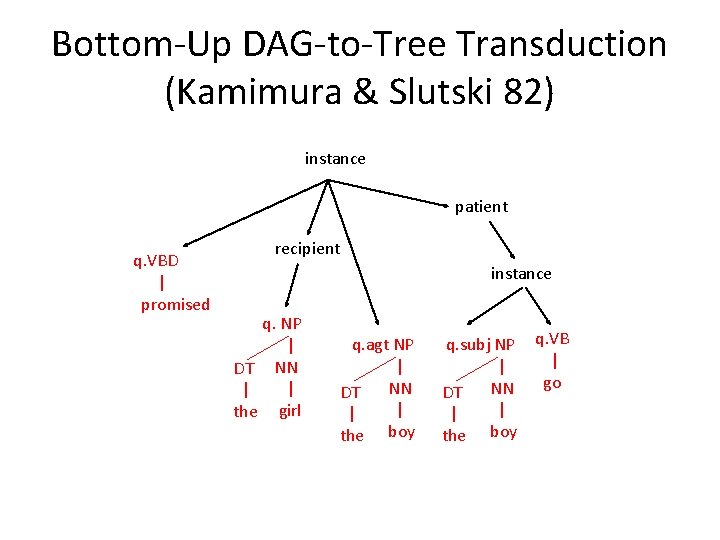

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: the shared BOY node is passed along both of its incoming agent edges: q.agt (NP (DT the) (NN boy)) toward PROMISE, and q.subj (NP (DT the) (NN boy)) toward GO, which is still q.(VB go)

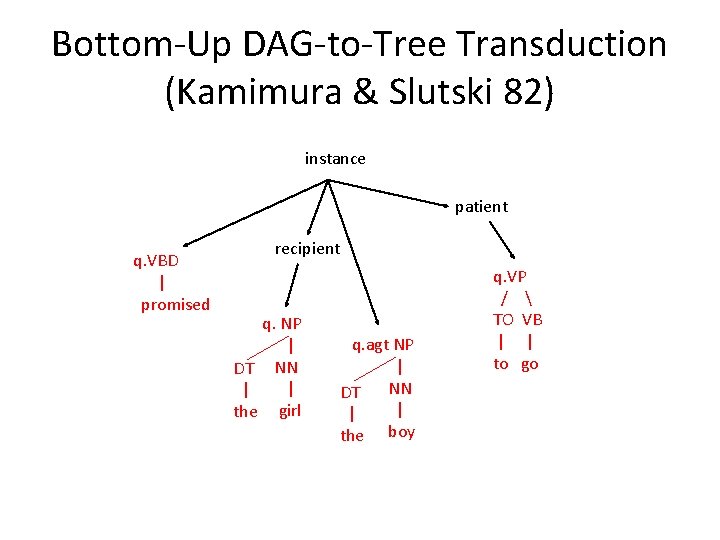

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: GO with its q.subj argument ⇒ q.(VP (TO to) (VB go)); the subject copy is not spelled out

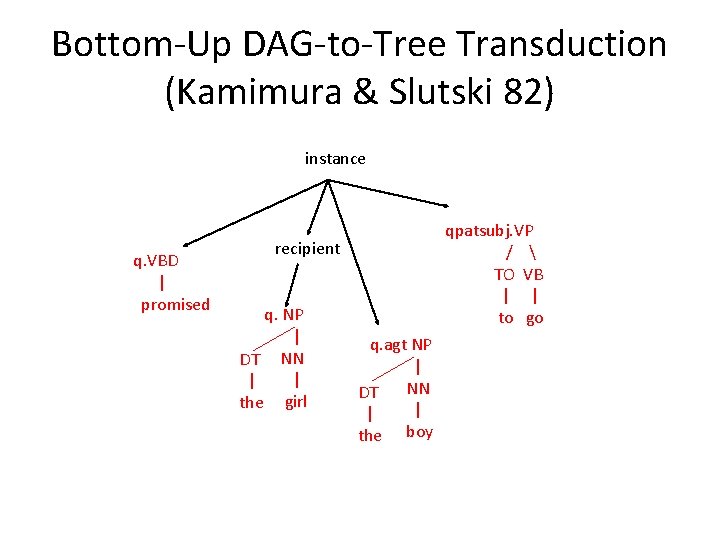

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: PROMISE's patient edge ⇒ q.patsubj (VP (TO to) (VB go))

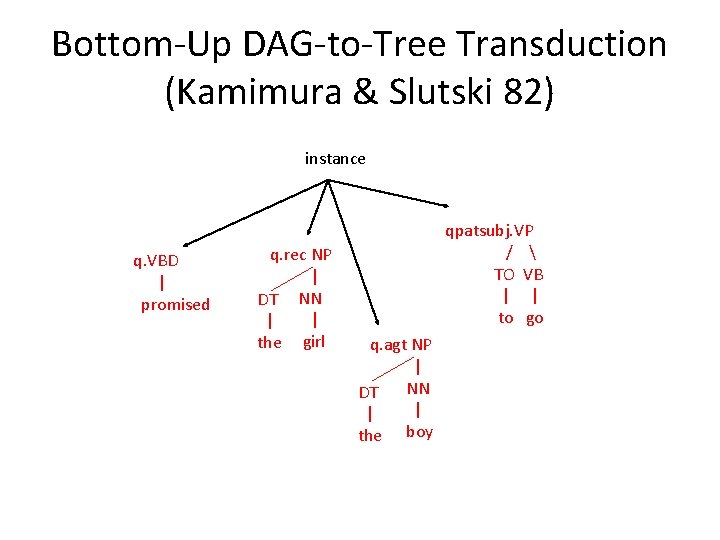

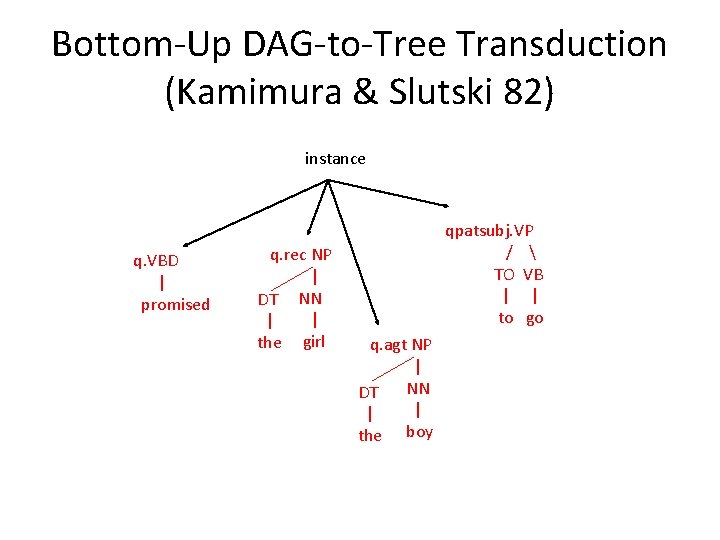

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Step: PROMISE's recipient edge ⇒ q.rec (NP (DT the) (NN girl)); remaining: q.(VBD promised), q.patsubj (VP (TO to) (VB go)), q.agt (NP (DT the) (NN boy))

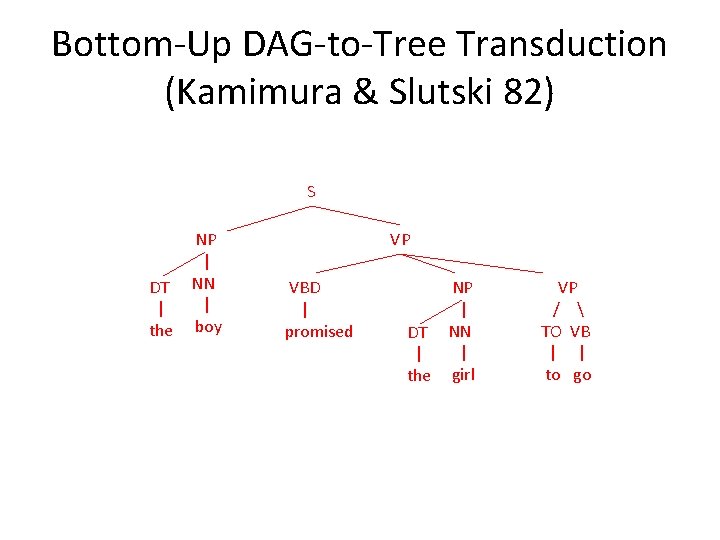

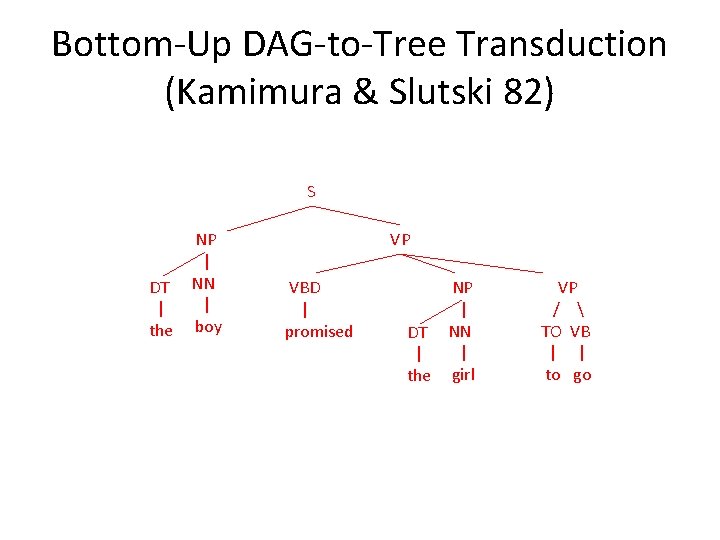

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
Result: (S (NP (DT the) (NN boy)) (VP (VBD promised) (NP (DT the) (NN girl)) (VP (TO to) (VB go))))

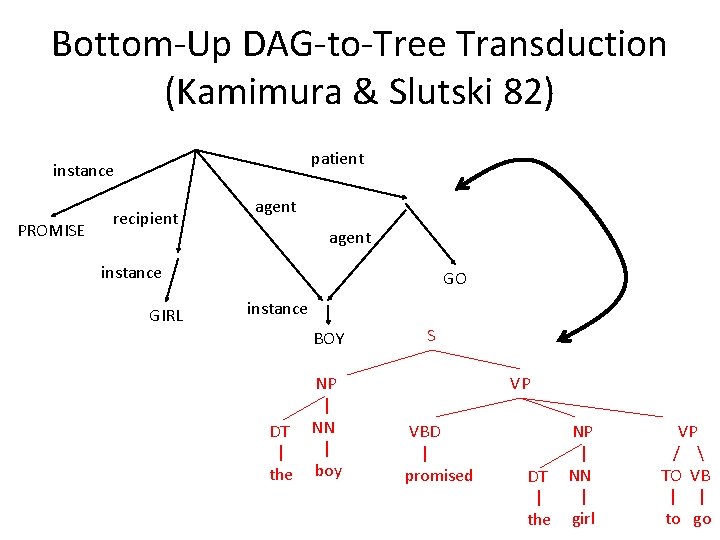

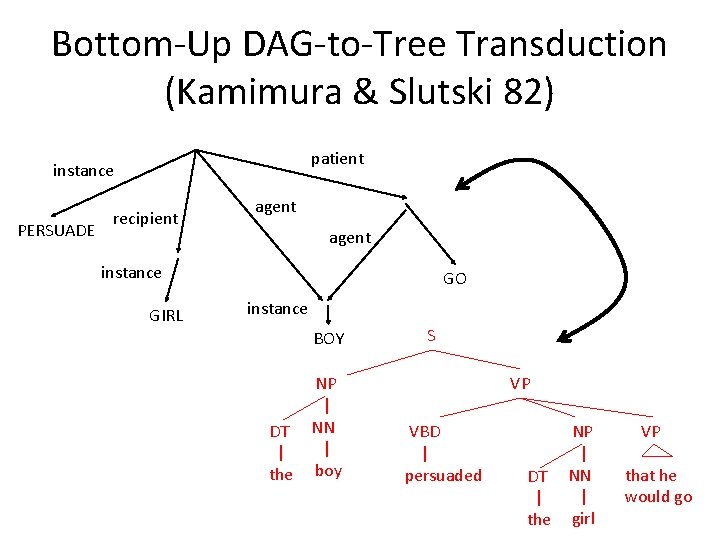

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
(p / PROMISE :agent (b / BOY) :recipient (g / GIRL) :patient (o / GO :agent b))
⇒ (S (NP (DT the) (NN boy)) (VP (VBD promised) (NP (DT the) (NN girl)) (VP (TO to) (VB go))))

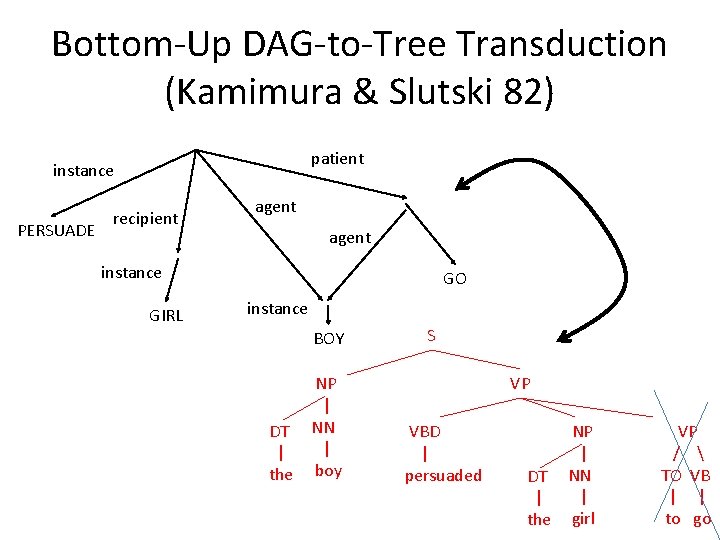

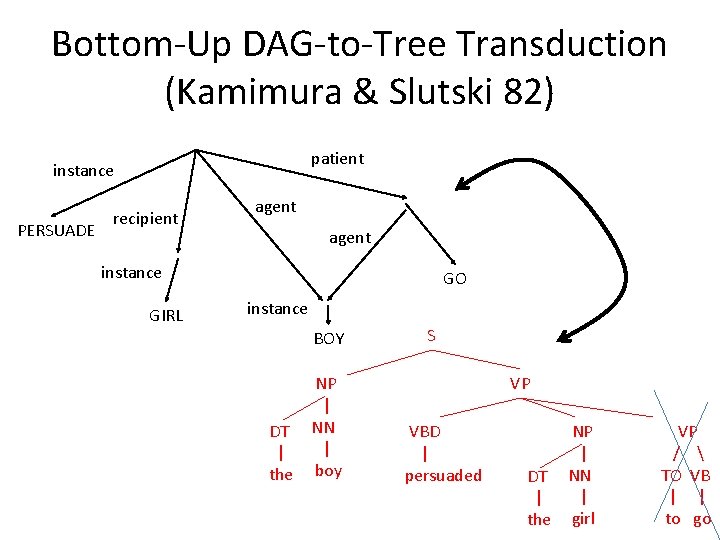

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
PERSUADE graph (agent BOY, recipient GIRL, patient GO)
⇒ (S (NP (DT the) (NN boy)) (VP (VBD persuaded) (NP (DT the) (NN girl)) (VP (TO to) (VB go))))

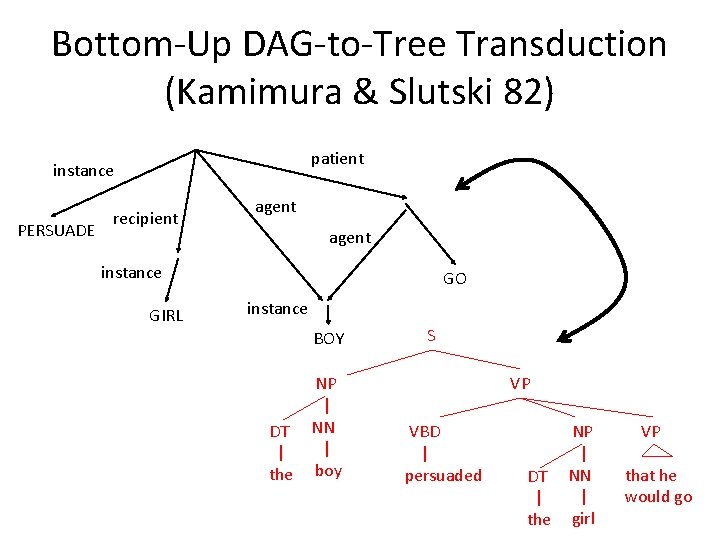

Bottom-Up DAG-to-Tree Transduction (Kamimura & Slutski 82)
PERSUADE graph (agent BOY, recipient GIRL, patient GO)
⇒ (S (NP (DT the) (NN boy)) (VP (VBD persuaded) (NP (DT the) (NN girl)) (VP that he would go)))
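Below is a minimal Python sketch of the bottom-up idea for the PROMISE graph, assuming a dictionary encoding of the graph. The four rules are hypothetical simplifications of the slides' states (q.NN/q.NP, q.subj, q.patsubj), not Kamimura & Slutski's formal definition. Children are processed before parents. The shared BOY node feeds both PROMISE and GO, and GO reports which subject it left unexpressed so that PROMISE can check subject control.

```python
from graphlib import TopologicalSorter

# The slide's PROMISE graph: node -> (concept, {role: child node}); node "b" is shared.
GRAPH = {
    "p": ("PROMISE", {"agent": "b", "recipient": "g", "patient": "o"}),
    "o": ("GO", {"agent": "b"}),
    "b": ("BOY", {}),
    "g": ("GIRL", {}),
}
NOUNS = {"BOY": "boy", "GIRL": "girl"}  # hypothetical lexical rules: concept -> NN word


def dag_to_tree(graph, root):
    """Bottom-up: each node gets (tree, id of the subject it dropped, or None)."""
    # TopologicalSorter takes node -> predecessors; children are predecessors, so they come first.
    order = TopologicalSorter({n: set(edges.values()) for n, (_, edges) in graph.items()}).static_order()
    done = {}
    for node in order:
        concept, edges = graph[node]
        if concept in NOUNS:
            done[node] = (("NP", ("DT", "the"), ("NN", NOUNS[concept])), None)
        elif concept == "GO":
            # like q.subj: the agent is not spelled out; the infinitive records which node it was
            done[node] = (("VP", ("TO", "to"), ("VB", "go")), edges["agent"])
        elif concept == "PROMISE":
            vp, dropped_subject = done[edges["patient"]]
            # like q.patsubj: PROMISE needs its patient's missing subject to be its own agent
            if dropped_subject != edges["agent"]:
                raise ValueError("no rule: the infinitive's subject is not the promiser")
            done[node] = (("S", done[edges["agent"]][0],
                           ("VP", ("VBD", "promised"), done[edges["recipient"]][0], vp)), None)
        else:
            raise ValueError(f"no rule for {concept}")
    return done[root][0]


def bracket(tree):
    """Render a tuple tree in bracket notation."""
    if isinstance(tree, str):
        return tree
    return "(" + " ".join([tree[0]] + [bracket(child) for child in tree[1:]]) + ")"


if __name__ == "__main__":
    print(bracket(dag_to_tree(GRAPH, "p")))
```

If GO's agent is changed to the girl, the PROMISE rule fails with `ValueError`. Handling PERSUADE, where the unexpressed subject is the recipient, would need a rule of its own.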


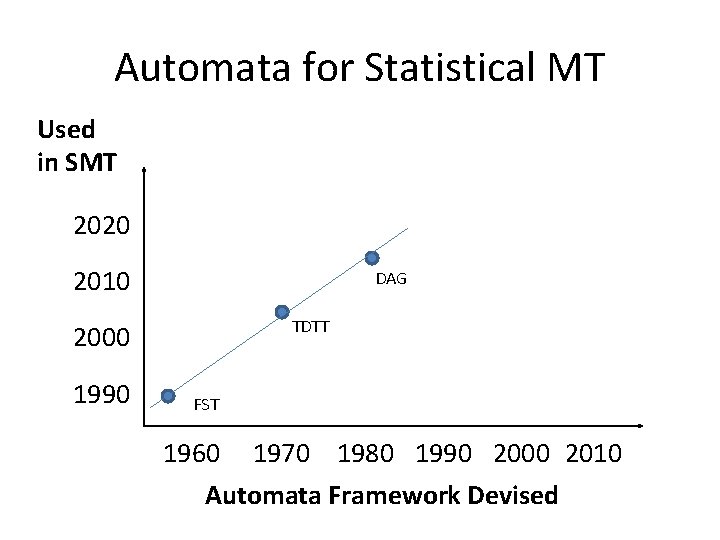

Automata for Statistical MT
[Chart: year each automata framework was devised (x-axis, 1960 to 2010) vs. year it was used in SMT (y-axis, 1990 to 2020); frameworks plotted: FST, TDTT, DAG]

end

Kevin knight usc

Kevin knight usc Characteristics of informal curriculum

Characteristics of informal curriculum Unit 1 formal informal and non formal education

Unit 1 formal informal and non formal education How formal education differ from als

How formal education differ from als Formal transformation

Formal transformation Formal transformation model

Formal transformation model Gawain and the green knight hero's journey

Gawain and the green knight hero's journey Sir gawain and the green knight fitt 3

Sir gawain and the green knight fitt 3 Sir gawain's shield

Sir gawain's shield Who is the antagonist in sir gawain and the green knight

Who is the antagonist in sir gawain and the green knight Is sir gawain and the green knight a medieval romance

Is sir gawain and the green knight a medieval romance Sir gawain and the green knight plot diagram

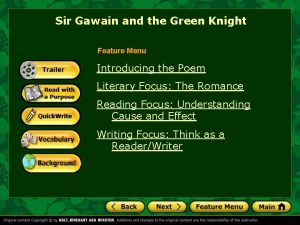

Sir gawain and the green knight plot diagram Sir gawain and the green knight vocabulary

Sir gawain and the green knight vocabulary Sir gawain and the green knight medieval romance

Sir gawain and the green knight medieval romance Knights and samurai venn diagram

Knights and samurai venn diagram Sir gawain and the green knight fitt 2 summary

Sir gawain and the green knight fitt 2 summary Slidetodoc.com

Slidetodoc.com Sir gawain and the green knight plot diagram

Sir gawain and the green knight plot diagram Sir gawain medieval romance

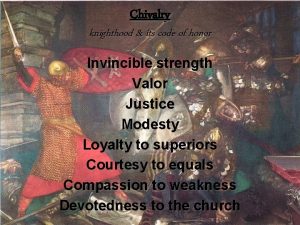

Sir gawain medieval romance The ideal knight respected and vigorously defended his

The ideal knight respected and vigorously defended his Sir gawain and the green knight hero's journey

Sir gawain and the green knight hero's journey Registro culto formal

Registro culto formal Kurikulum formal

Kurikulum formal Contoh kerangka karangan dalam bentuk grafik

Contoh kerangka karangan dalam bentuk grafik Maksud komunikasi sehala

Maksud komunikasi sehala Falasi formal dan tidak formal

Falasi formal dan tidak formal Definisi motivasi

Definisi motivasi Aap1 linguagem e oralidade

Aap1 linguagem e oralidade Fungsi manajemen paud

Fungsi manajemen paud Williams ortezi

Williams ortezi Braddom's physical medicine and rehabilitation

Braddom's physical medicine and rehabilitation Nikki clay xxx

Nikki clay xxx 164chairman

164chairman What does a knight look like

What does a knight look like Help the knight

Help the knight Knight in feudal society war games

Knight in feudal society war games The knight archetype

The knight archetype Da vinci robotic knight

Da vinci robotic knight Silver knight award requirements

Silver knight award requirements Ginkgo bioworks

Ginkgo bioworks The knight's tale middle english

The knight's tale middle english The green knight

The green knight Samurai vs knight venn diagram

Samurai vs knight venn diagram Kingdomality types

Kingdomality types Clarington hyundai

Clarington hyundai Knight honor code

Knight honor code Who was the roundest knight at the round table

Who was the roundest knight at the round table אסף פלר

אסף פלר Opening knight players

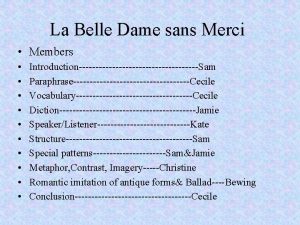

Opening knight players La belle dame sans merci conclusion

La belle dame sans merci conclusion Devin

Devin Help the knight

Help the knight Mr. knight learned the art of watchmaking

Mr. knight learned the art of watchmaking Parson character in canterbury tales

Parson character in canterbury tales Canterbury tales knight description

Canterbury tales knight description The dark knight cinematography analysis

The dark knight cinematography analysis The combination of qualities expected of an ideal knight

The combination of qualities expected of an ideal knight Otto dix prague street

Otto dix prague street Lashante knight

Lashante knight Simile metaphor personification hyperbole

Simile metaphor personification hyperbole Coach k vs coach knight

Coach k vs coach knight Santa celia biografía

Santa celia biografía Sister knight

Sister knight Euler cycle

Euler cycle Nazi party

Nazi party