Second order cone programming approaches for handling missing and uncertain data

- Slides: 18

Second order cone programming approaches for handling missing and uncertain data

P. K. Shivaswamy, C. Bhattacharyya and A. J. Smola

Discussion led by Qi An, Mar 30th, 2007

Outline

- Missing and uncertain data problem
- Problem formulation
- Classification with uncertainty
- Extensions
- Experimental results
- Conclusions

Missing data problem

- Consider a classification or regression problem with missing data (values are missing only in the features).
  - Traditional method: simple imputation
  - Proposed method: robust estimation
- Formulate the classification or regression problem as an optimization problem, which is possible as long as we know the first and second moments of the data.

Classification problem with missing data

- Compute the sample mean and covariance of each class (binary here) from the available observations.
- Impute the missing entries with their conditional mean given the observed entries.
- The classification problem can then be formulated as an optimization problem, as shown on the next slide.

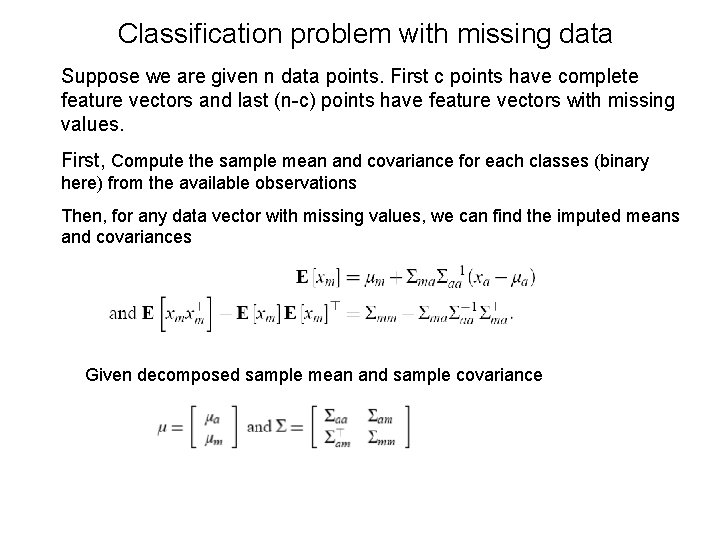

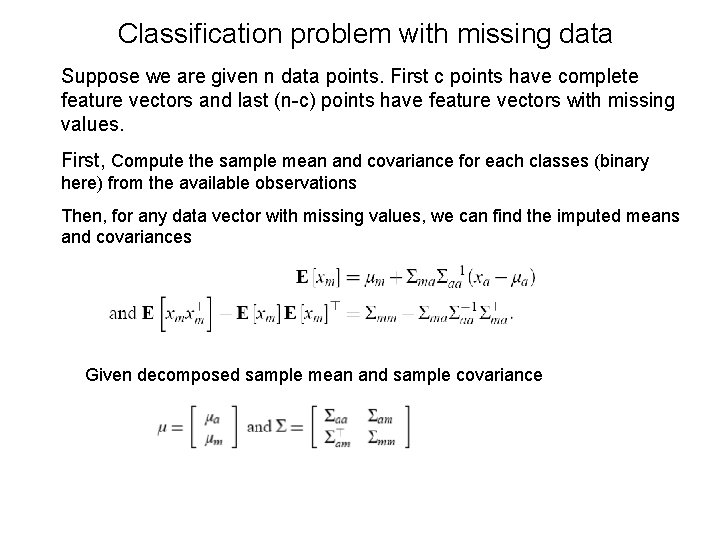

Classification problem with missing data

Suppose we are given n data points, where the first c points have complete feature vectors and the last (n − c) points have feature vectors with missing values. First, compute the sample mean and covariance of each class (binary here) from the available observations. Then, for any data vector with missing values, we can find its imputed mean and covariance, given the sample mean and sample covariance decomposed into blocks for the observed and missing features:

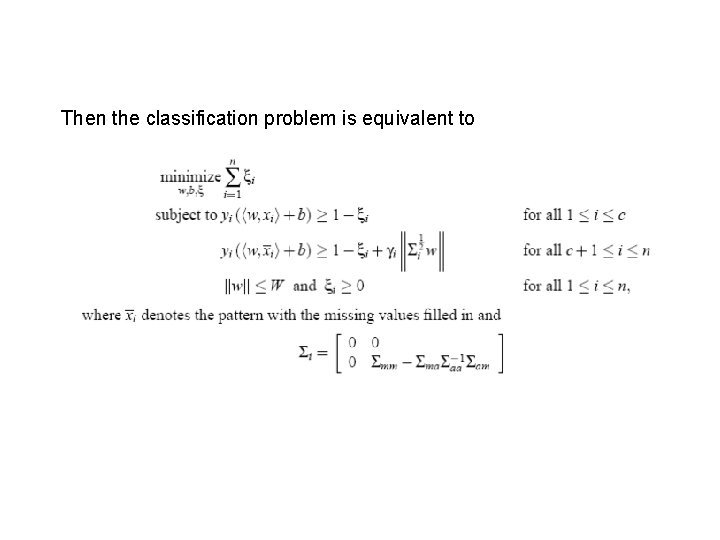

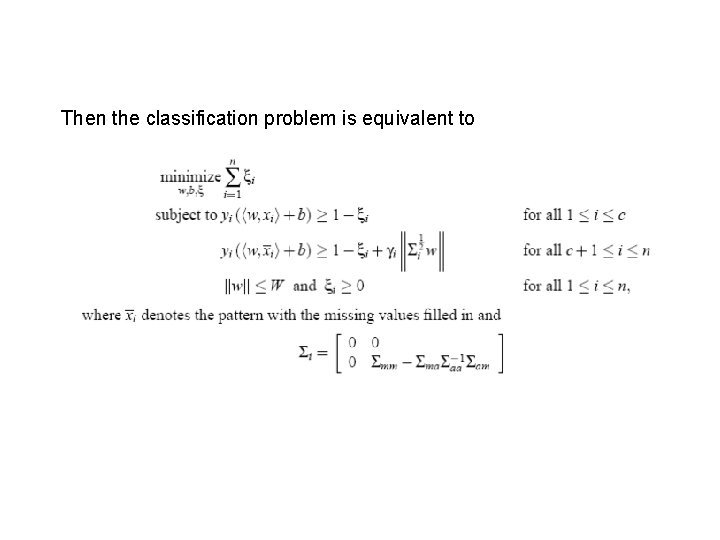
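The per-class moments and the imputed mean and covariance can be sketched in NumPy. This is a minimal illustration, not the authors' code: it assumes the standard Gaussian conditioning formulas (the slides give the exact expressions only as images) and, for simplicity, estimates each class's moments from its complete rows only. The names `class_moments` and `impute_conditional` are hypothetical.

```python
import numpy as np


def class_moments(X):
    """Sample mean and covariance of one class.

    Simplifying assumption: only rows with no NaN entries are used.
    """
    complete = X[~np.isnan(X).any(axis=1)]
    return complete.mean(axis=0), np.cov(complete, rowvar=False)


def impute_conditional(x, mu, sigma):
    """Imputed mean and covariance of a vector x whose NaN entries are missing.

    Observed entries keep their values and get zero variance. Missing entries
    get the Gaussian conditional mean  mu_m + S_mo S_oo^{-1} (x_o - mu_o)
    and conditional covariance        S_mm - S_mo S_oo^{-1} S_om.
    """
    m = np.isnan(x)                      # missing features
    o = ~m                               # observed features
    x_bar = x.astype(float)              # astype returns a copy
    cov = np.zeros_like(sigma, dtype=float)
    if not m.any():                      # nothing to impute
        return x_bar, cov
    if not o.any():                      # nothing observed: use the class moments
        return mu.astype(float), sigma.astype(float)
    S_oo = sigma[np.ix_(o, o)]
    S_mo = sigma[np.ix_(m, o)]
    S_mm = sigma[np.ix_(m, m)]
    K = np.linalg.solve(S_oo, S_mo.T).T  # S_mo S_oo^{-1}, without forming the inverse
    x_bar[m] = mu[m] + K @ (x[o] - mu[o])
    cov[np.ix_(m, m)] = S_mm - K @ S_mo.T
    return x_bar, cov


# Example: class with mean (0, 1) and correlated features; second feature missing.
mu = np.array([0.0, 1.0])
sigma = np.array([[2.0, 1.0], [1.0, 2.0]])
x_bar, cov = impute_conditional(np.array([1.0, np.nan]), mu, sigma)
print(x_bar)  # observed 1.0 kept, missing feature imputed as 1.5
print(cov)    # conditional variance 1.5 in the missing slot, zeros elsewhere
```

Using `np.linalg.solve` rather than an explicit inverse is the usual, numerically safer choice for the S_mo S_oo^{-1} factor.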

Then the classification problem is equivalent to

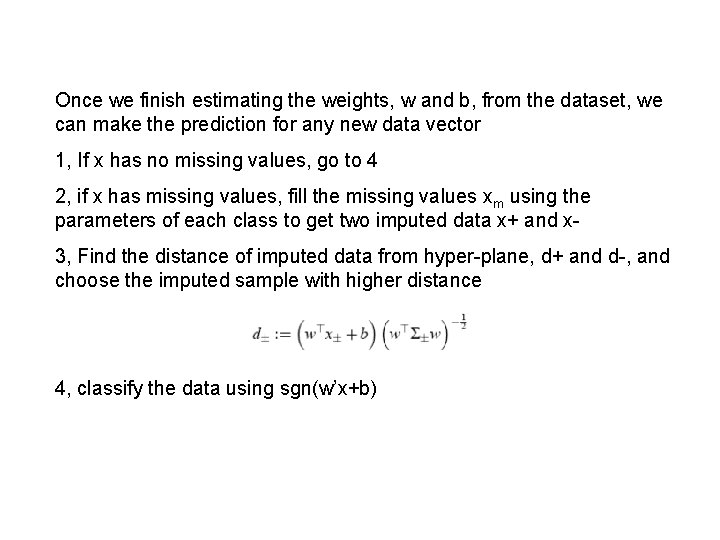
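The formulation itself survives only as an image. As a hedged reconstruction, assuming it takes the norm-constrained second order cone form used later in these slides: x̄_i and Σ_i are the imputed mean and covariance of the i-th incomplete point, γ_i is a constant set by the desired confidence level, and W bounds the norm of w. Complete points keep the ordinary SVM constraint, while each incomplete point must clear the margin with extra room proportional to its uncertainty along w.

```latex
\[
\begin{aligned}
\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\quad & \sum_{i=1}^{n} \xi_i \\
\text{s.t.}\quad & y_i\,(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1 - \xi_i, && i = 1,\dots,c,\\
& y_i\,(\mathbf{w}^\top \bar{\mathbf{x}}_i + b) \ge 1 - \xi_i + \gamma_i\,\bigl\|\Sigma_i^{1/2}\mathbf{w}\bigr\|_2, && i = c+1,\dots,n,\\
& \xi_i \ge 0,\quad i = 1,\dots,n, \qquad \|\mathbf{w}\|_2 \le W.
\end{aligned}
\]
```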

Once w and b have been estimated from the dataset, we can predict the label of any new data vector x:

1. If x has no missing values, go to step 4.
2. If x has missing values, fill in the missing entries x_m using the parameters of each class to obtain two imputed vectors, x+ and x−.
3. Compute the distances d+ and d− of the imputed vectors from the hyperplane, and keep the imputed vector with the larger distance.
4. Classify the data using sgn(w'x + b).

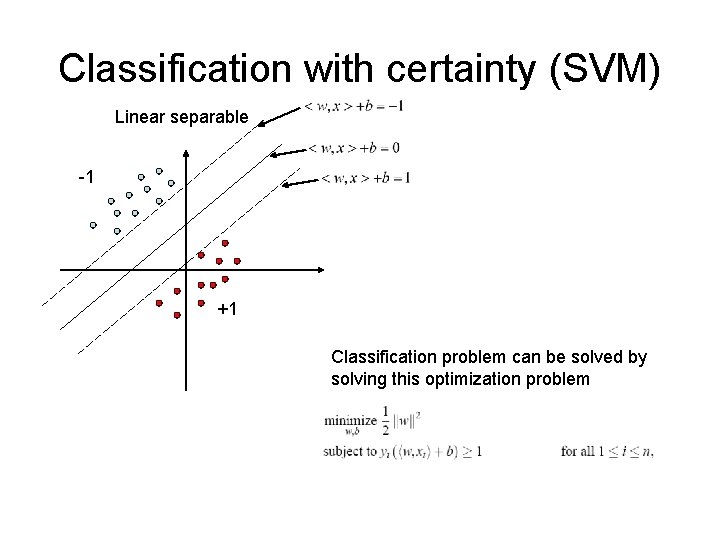

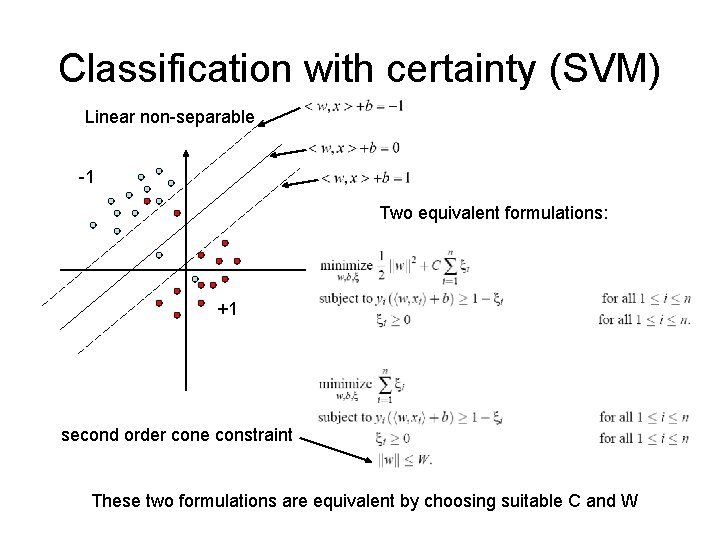

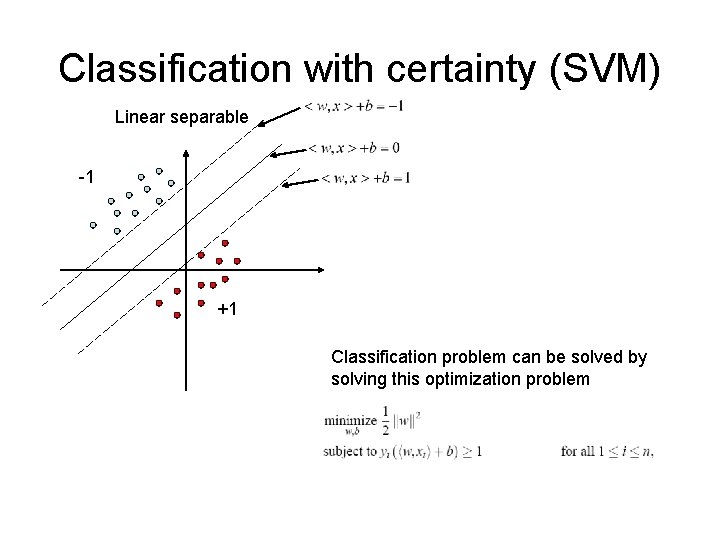
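The four-step prediction rule can be sketched as follows. This is a minimal illustration under stated assumptions: each class is summarized by a (mean, covariance) pair, missing values are filled with the Gaussian conditional mean, and ties in step 3 or a zero decision value in step 4 are resolved toward +1 as an arbitrary choice. The names `predict` and `impute_conditional_mean` are hypothetical.

```python
import numpy as np


def impute_conditional_mean(x, mu, sigma):
    """Fill the NaN entries of x with the Gaussian conditional mean under (mu, sigma)."""
    m = np.isnan(x)
    x_filled = x.astype(float)
    if not m.any():
        return x_filled
    o = ~m
    if not o.any():                     # nothing observed: use the class mean
        return mu.astype(float)
    K = np.linalg.solve(sigma[np.ix_(o, o)], sigma[np.ix_(o, m)]).T  # S_mo S_oo^{-1}
    x_filled[m] = mu[m] + K @ (x[o] - mu[o])
    return x_filled


def predict(x, w, b, params_pos, params_neg):
    """Steps 1-4 of the prediction rule; params_* are (mu, sigma) for each class."""
    if np.isnan(x).any():
        # Step 2: impute once with each class's parameters.
        x_pos = impute_conditional_mean(x, *params_pos)
        x_neg = impute_conditional_mean(x, *params_neg)
        # Step 3: distance of each imputed vector from the hyperplane w'x + b = 0.
        norm_w = np.linalg.norm(w)
        d_pos = abs(w @ x_pos + b) / norm_w
        d_neg = abs(w @ x_neg + b) / norm_w
        x = x_pos if d_pos >= d_neg else x_neg
    # Step 4 (step 1 jumps here when nothing is missing): sign of the decision function.
    return 1 if w @ x + b >= 0 else -1


w, b = np.array([1.0, 1.0]), -1.0
params_pos = (np.array([2.0, 2.0]), np.eye(2))
params_neg = (np.array([-2.0, -2.0]), np.eye(2))
print(predict(np.array([2.0, 2.0]), w, b, params_pos, params_neg))   # complete vector
print(predict(np.array([0.5, np.nan]), w, b, params_pos, params_neg))  # second feature missing
```

In the second call the two imputations give decision values 1.5 and −2.5; the negative-class imputation lies farther from the hyperplane, so the point is labeled −1.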

Classification with certainty (SVM): linearly separable case

With two linearly separable classes, labeled −1 and +1, the classification problem can be solved by solving the following optimization problem.

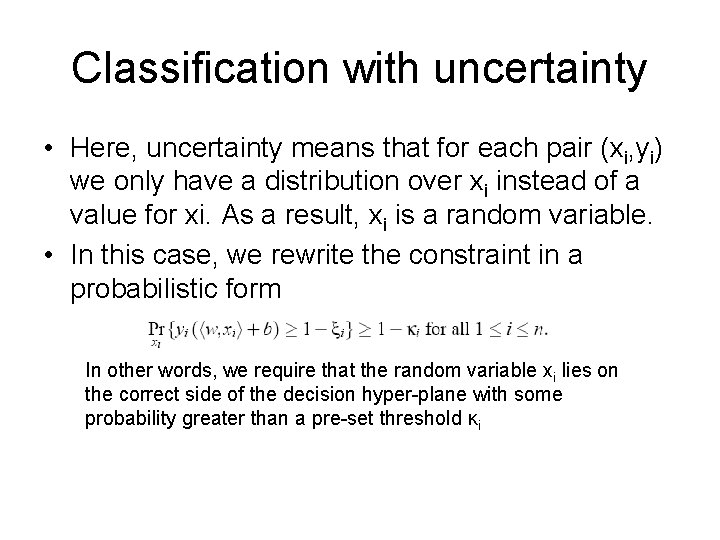

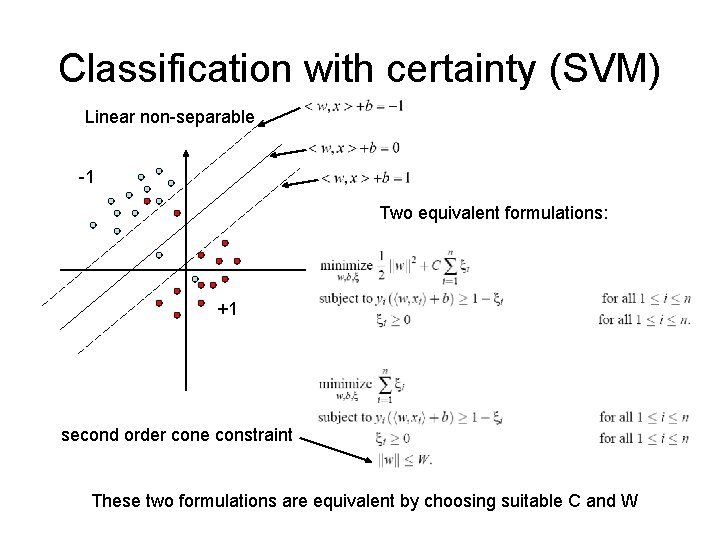
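For reference, the standard hard-margin SVM problem, which we assume is what the images on this slide show, is:

```latex
\[
\min_{\mathbf{w},\,b}\ \tfrac{1}{2}\|\mathbf{w}\|_2^2
\quad\text{s.t.}\quad y_i\,(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1,\qquad i = 1,\dots,n.
\]
```

The margin between the two classes is 2/‖w‖₂, so minimizing ‖w‖ maximizes the margin.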

Classification with certainty (SVM): linearly non-separable case

When the classes −1 and +1 are not linearly separable, there are two equivalent formulations; the second one uses a second order cone constraint. The two formulations are equivalent for suitable choices of C and W.

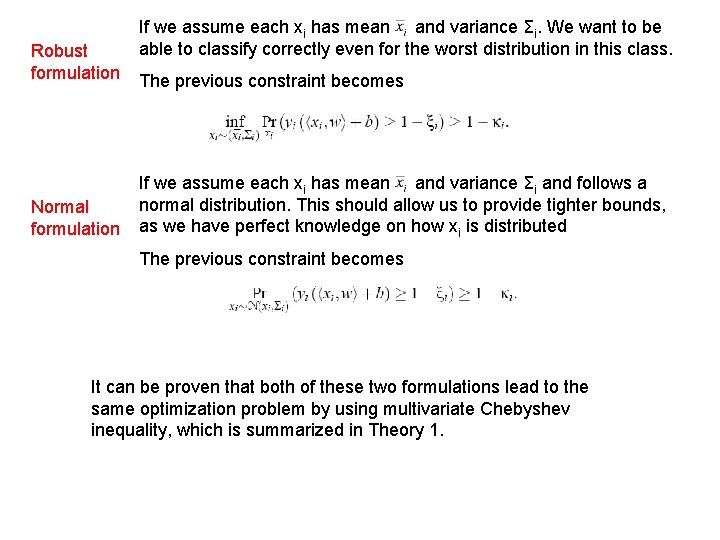

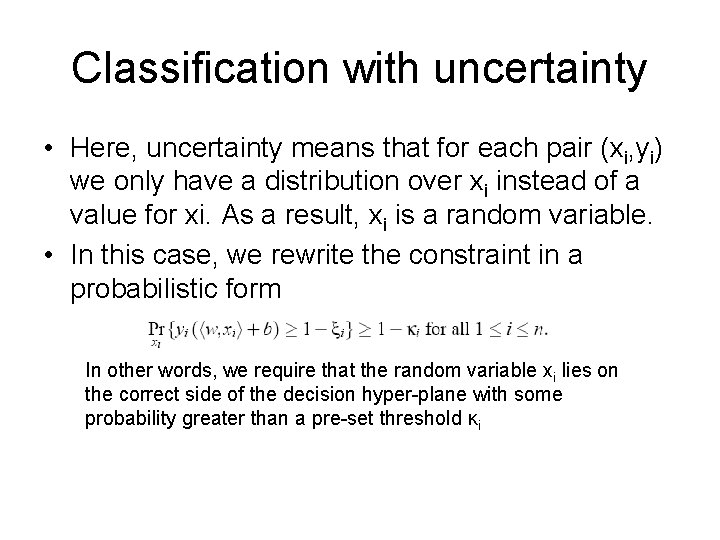
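Assuming the two formulations on this slide are the standard soft-margin SVM (a) and its norm-constrained counterpart (b), consistent with the parameters C and W named in the text, they can be written as:

```latex
\[
\text{(a)}\quad \min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \tfrac{1}{2}\|\mathbf{w}\|_2^2 + C\sum_{i=1}^{n}\xi_i
\quad\text{s.t.}\quad y_i\,(\mathbf{w}^\top\mathbf{x}_i + b) \ge 1 - \xi_i,\ \ \xi_i \ge 0,
\]
\[
\text{(b)}\quad \min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \sum_{i=1}^{n}\xi_i
\quad\text{s.t.}\quad y_i\,(\mathbf{w}^\top\mathbf{x}_i + b) \ge 1 - \xi_i,\ \ \xi_i \ge 0,\ \ \|\mathbf{w}\|_2 \le W.
\]
```

The constraint ‖w‖₂ ≤ W in (b) is the second order cone constraint. In both problems, for fixed (w, b) the optimal slack is ξ_i = max(0, 1 − y_i(wᵀx_i + b)), i.e. the hinge loss.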

Classification with uncertainty

- Here, uncertainty means that for each pair (x_i, y_i) we only have a distribution over x_i instead of a value for x_i. As a result, x_i is a random variable.
- In this case, we rewrite the constraint in a probabilistic form. In other words, we require that the random variable x_i lie on the correct side of the decision hyperplane with probability at least a preset threshold κ_i.

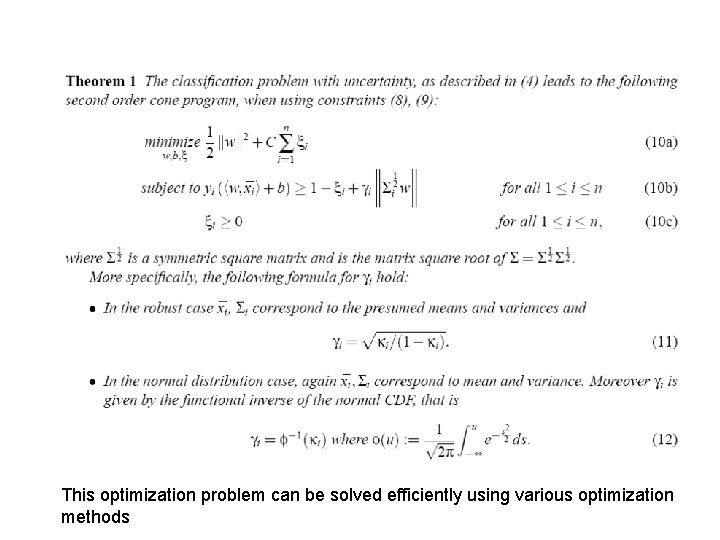

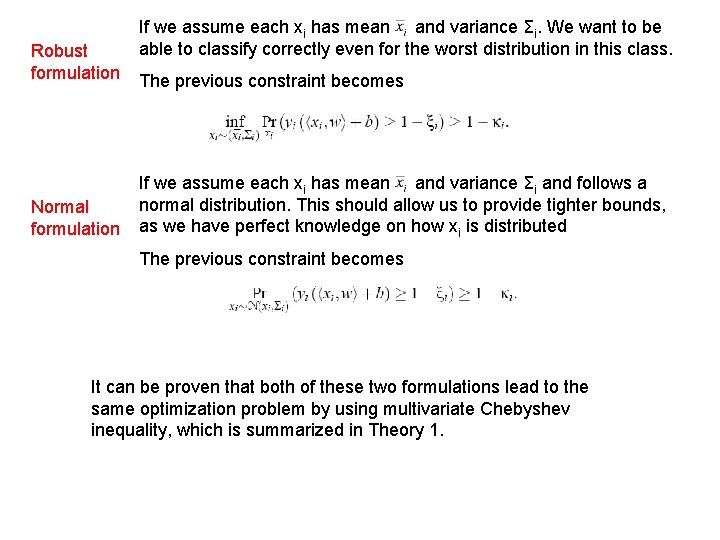

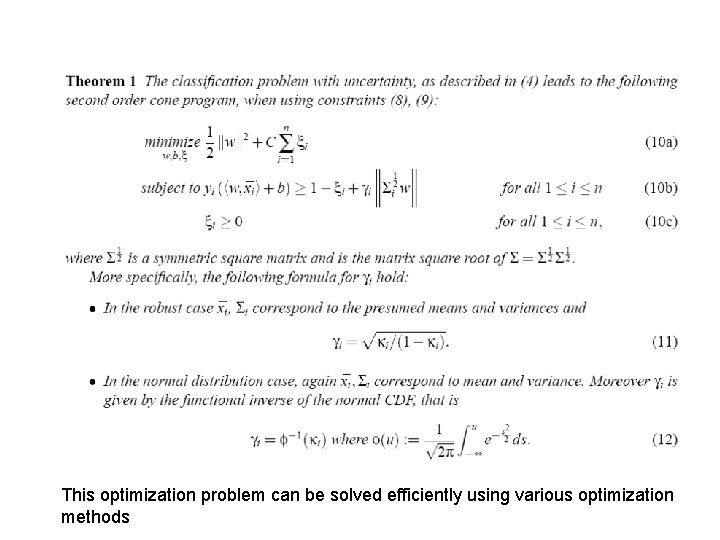
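To make the probabilistic constraint concrete, the sketch below evaluates Pr{y_i(wᵀx_i + b) ≥ 1 − ξ_i} for a Gaussian x_i in two ways: in closed form (the score y(wᵀx + b) is itself Gaussian with variance wᵀΣw) and by Monte Carlo sampling. The Gaussian assumption, the example numbers, and the function names are illustrative choices, not taken from the slides.

```python
import math

import numpy as np


def margin_prob_closed_form(w, b, y, mean, cov, xi=0.0):
    """Pr{ y (w'x + b) >= 1 - xi } for x ~ N(mean, cov).

    The score y (w'x + b) is Gaussian with mean y (w'mean + b) and
    variance w' cov w (multiplying by y = +/-1 leaves the variance unchanged).
    """
    mu_s = y * (w @ mean + b)
    sd_s = math.sqrt(w @ cov @ w)
    z = (mu_s - (1.0 - xi)) / sd_s
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z


def margin_prob_monte_carlo(w, b, y, mean, cov, xi=0.0, n=200_000, seed=0):
    """Same probability estimated from n samples of x."""
    rng = np.random.default_rng(seed)
    X = rng.multivariate_normal(mean, cov, size=n)
    return np.mean(y * (X @ w + b) >= 1.0 - xi)


w = np.array([1.0, 0.5])
mean = np.array([2.0, 1.0])
cov = np.array([[1.0, 0.3], [0.3, 0.5]])
p_cf = margin_prob_closed_form(w, 0.0, 1, mean, cov)
p_mc = margin_prob_monte_carlo(w, 0.0, 1, mean, cov)
print(p_cf, p_mc)  # the two estimates should agree to about two decimal places
```

A point satisfies the probabilistic constraint when this probability is at least κ_i.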

Robust formulation: assume only that each x_i has mean x̄_i and covariance Σ_i. We want to classify correctly even for the worst distribution in this class, so the previous constraint must hold for every distribution with this mean and covariance.

Normal formulation: assume that each x_i has mean x̄_i and covariance Σ_i and follows a normal distribution. Because we then know exactly how x_i is distributed, this should give tighter bounds; the previous constraint is evaluated under this normal distribution.

Using the multivariate Chebyshev inequality, summarized in Theorem 1, it can be proven that both formulations lead to an optimization problem of the same form, differing only in a constant that depends on κ_i.

The resulting problem is a second order cone program (SOCP), which can be solved efficiently with standard SOCP solvers such as interior-point methods.

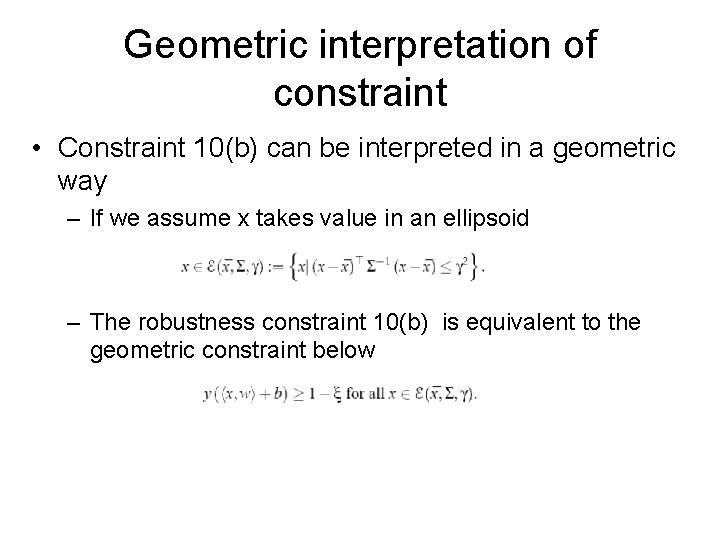
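Both formulations reduce the probabilistic constraint to y_i(wᵀx̄_i + b) ≥ 1 − ξ_i + γ_i‖Σ_i^{1/2}w‖. The sketch below uses the two standard values of γ_i: √(κ_i/(1 − κ_i)) from the worst-case (Chebyshev) bound, and Φ⁻¹(κ_i), the standard normal quantile, for the Gaussian case. Treating these as the constants in the slide's Theorem 1 is our assumption; the function names are hypothetical.

```python
import math
from statistics import NormalDist

import numpy as np


def gamma_robust(kappa):
    """Worst case over all distributions with the given mean and covariance."""
    return math.sqrt(kappa / (1.0 - kappa))


def gamma_normal(kappa):
    """Gaussian case: the standard normal quantile Phi^{-1}(kappa)."""
    return NormalDist().inv_cdf(kappa)


def soc_constraint_holds(w, b, y, mean, cov, xi, gamma):
    """Check y (w'mean + b) >= 1 - xi + gamma * ||cov^{1/2} w||,
    using ||cov^{1/2} w|| = sqrt(w' cov w)."""
    lhs = y * (w @ mean + b)
    rhs = 1.0 - xi + gamma * math.sqrt(w @ cov @ w)
    return lhs >= rhs


for kappa in (0.6, 0.9, 0.99):
    print(kappa, gamma_robust(kappa), gamma_normal(kappa))

# A point at distance 3 from the hyperplane with unit covariance:
w, mean, cov = np.array([1.0, 0.0]), np.array([3.0, 0.0]), np.eye(2)
print(soc_constraint_holds(w, 0.0, 1, mean, cov, 0.0, gamma_robust(0.9)))  # needs 1 + 3 = 4
print(soc_constraint_holds(w, 0.0, 1, mean, cov, 0.0, gamma_normal(0.9)))  # needs about 2.28
```

For every κ the Gaussian constant is smaller than the robust one, which is the tighter bound mentioned for the normal formulation: the same point can satisfy the normal constraint while violating the robust one.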

Geometric interpretation of the constraint

- Constraint 10(b) can be interpreted geometrically.
  - Assume x takes values in an ellipsoid.
  - The robustness constraint 10(b) is then equivalent to the geometric constraint below.

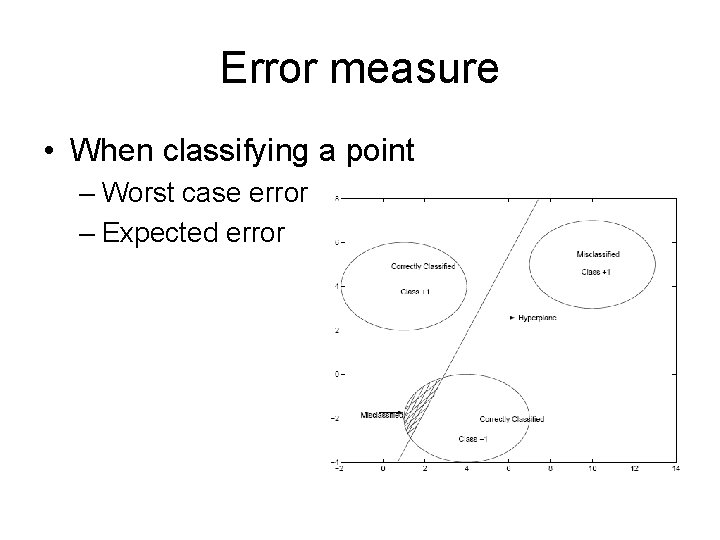

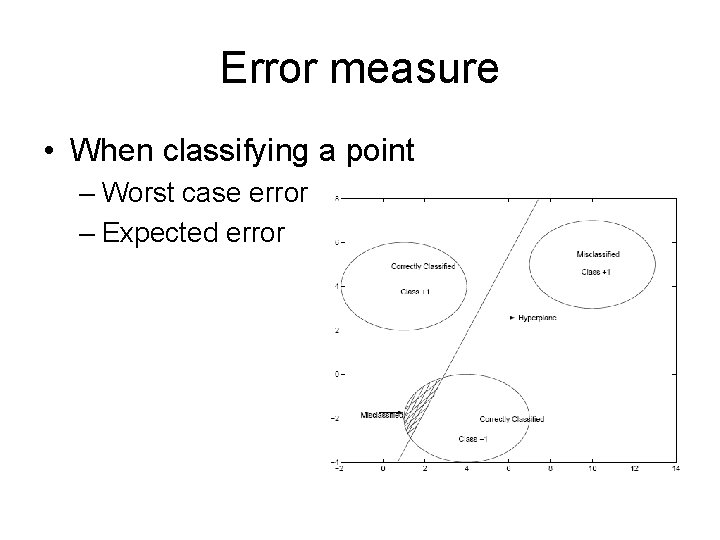
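The geometric equivalence rests on one identity: over the ellipsoid {x : (x − x̄)ᵀΣ⁻¹(x − x̄) ≤ γ²}, the smallest value of y(wᵀx + b) is y(wᵀx̄ + b) − γ‖Σ^{1/2}w‖. Requiring every point of the ellipsoid to satisfy y(wᵀx + b) ≥ 1 − ξ is therefore the same as the second order cone constraint. The sketch checks the identity numerically by sampling the ellipsoid boundary; the ellipsoid parametrization (center x̄, shape Σ, radius γ) and the function names are our assumptions.

```python
import numpy as np


def worst_case_margin(w, b, y, mean, cov, gamma):
    """Closed form of the minimum of y (w'x + b) over the ellipsoid
    {x : (x - mean)' cov^{-1} (x - mean) <= gamma^2}."""
    return y * (w @ mean + b) - gamma * np.sqrt(w @ cov @ w)


def worst_case_margin_sampled(w, b, y, mean, cov, gamma, n=100_000, seed=0):
    """Brute-force check: write boundary points as x = mean + gamma * L u with
    cov = L L' and ||u|| = 1, then take the smallest sampled value.
    (A linear function attains its minimum over an ellipsoid on the boundary.)"""
    rng = np.random.default_rng(seed)
    L = np.linalg.cholesky(cov)
    U = rng.standard_normal((n, len(mean)))
    U /= np.linalg.norm(U, axis=1, keepdims=True)  # unit vectors u
    X = mean + gamma * U @ L.T                      # each row is (mean + gamma L u)'
    return np.min(y * (X @ w + b))


w, b = np.array([1.0, 2.0]), -0.5
mean = np.array([1.0, 1.0])
cov = np.array([[2.0, 0.5], [0.5, 1.0]])
print(worst_case_margin(w, b, 1, mean, cov, 1.5))
print(worst_case_margin_sampled(w, b, 1, mean, cov, 1.5))  # slightly above, never below
```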

Error measure

- When classifying a point, two error measures are used:
  - Worst-case error
  - Expected error
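The slide only names the two measures. A minimal sketch under assumed definitions (our reading, not confirmed by the slide): the worst-case error of a point is 1 if any point of its uncertainty ellipsoid lies strictly on the wrong side of the hyperplane and 0 otherwise, and the expected error is the misclassification probability when x is Gaussian with the given mean and covariance. The function names and the ellipsoid radius `gamma` are hypothetical.

```python
import math

import numpy as np


def worst_case_error(w, b, y, mean, cov, gamma):
    """Assumed definition: 1 if some x with (x - mean)' cov^{-1} (x - mean) <= gamma^2
    has y (w'x + b) < 0, else 0. Uses the closed-form minimum over the ellipsoid."""
    worst = y * (w @ mean + b) - gamma * math.sqrt(w @ cov @ w)
    return 0 if worst >= 0 else 1


def expected_error(w, b, y, mean, cov):
    """Assumed definition: Pr{ y (w'x + b) < 0 } for x ~ N(mean, cov), i.e. Phi(-z)."""
    z = y * (w @ mean + b) / math.sqrt(w @ cov @ w)
    return 0.5 * (1.0 - math.erf(z / math.sqrt(2.0)))


w, mean, cov = np.array([1.0, 0.0]), np.array([2.0, 0.0]), np.eye(2)
print(worst_case_error(w, 0.0, 1, mean, cov, gamma=1.0))  # ellipsoid stays on the correct side
print(worst_case_error(w, 0.0, 1, mean, cov, gamma=3.0))  # ellipsoid crosses the hyperplane
print(expected_error(w, 0.0, 1, mean, cov))               # Phi(-2), about 0.023
```

The worst-case measure is all-or-nothing per point, while the expected error degrades smoothly as the mean approaches the hyperplane.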

Extensions

- The optimization problem can be extended to:
  - Regression problems
  - Multiclass classification and regression
  - Different types of constraints
  - Kernelized formulations

Returning to the missing-feature problem:

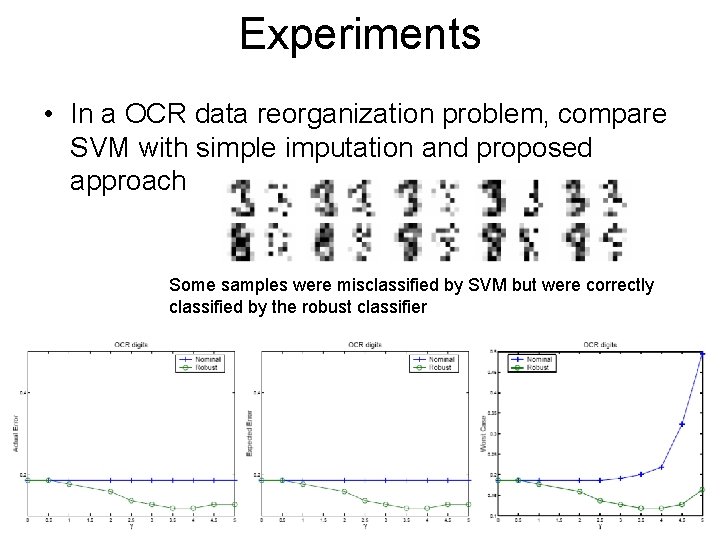

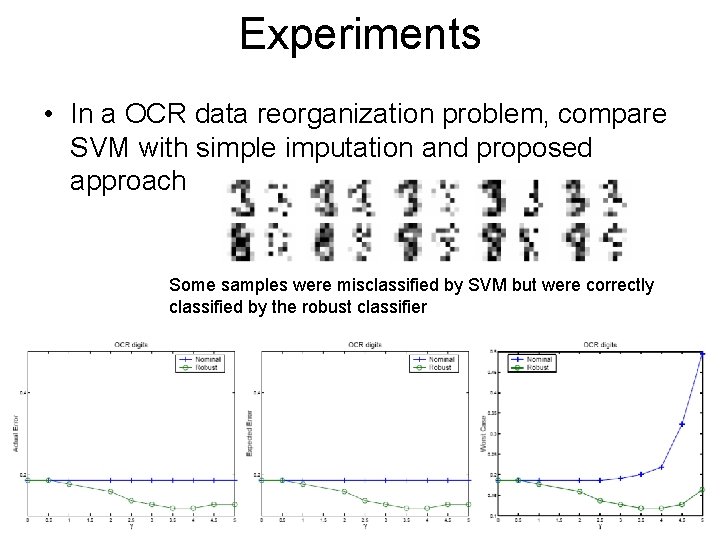

Experiments

- In an OCR (optical character recognition) problem, an SVM with simple imputation is compared with the proposed approach.
- Some samples were misclassified by the SVM but classified correctly by the robust classifier.

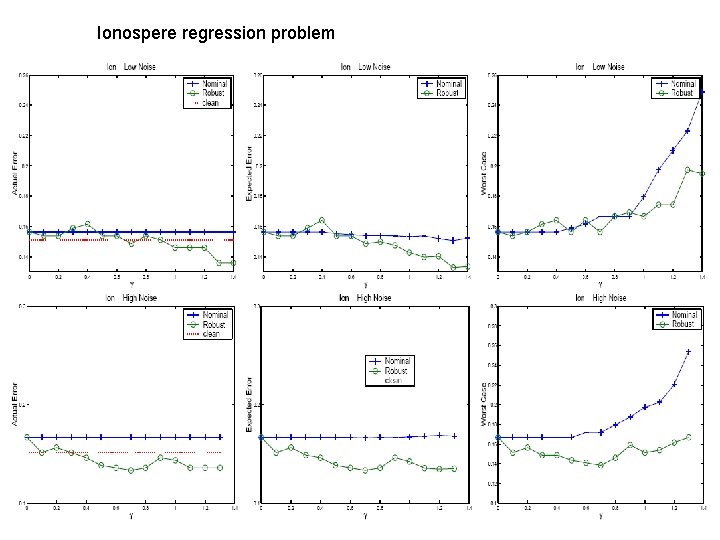

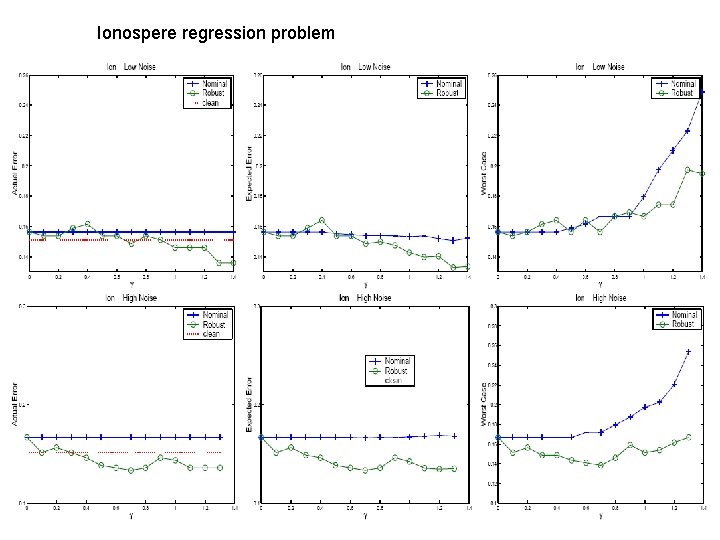

Ionosphere regression problem

Conclusions

- This paper proposes a second order cone programming formulation for designing robust linear prediction functions.
- The approach can handle uncertainty in the data vectors in both classification and regression settings.
- It applies to any uncertainty distribution whose first two moments can be computed.