Review: Dijkstra's Single Source Shortest Paths Algorithm

- Slides: 24

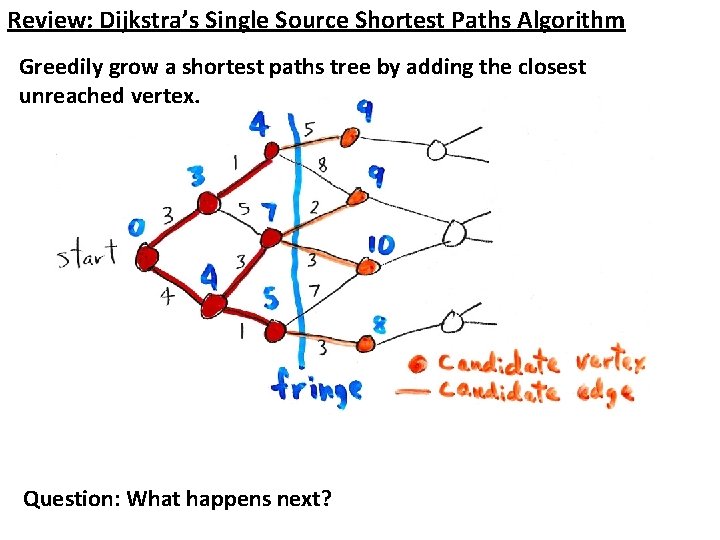

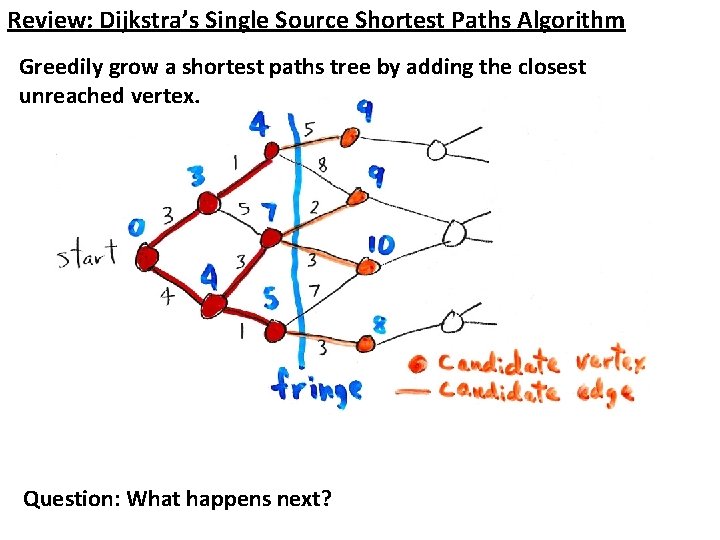

Review: Dijkstra's Single Source Shortest Paths Algorithm

Greedily grow a shortest paths tree by adding the closest unreached vertex.

Question: What happens next?
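The greedy step above can be sketched in Python as follows. This is a minimal illustration, not the course's reference implementation: the function name `dijkstra`, the adjacency-list format, and `example_graph` are all assumptions made for the example, and edge weights are assumed to be non-negative.

```python
import heapq

def dijkstra(graph, source):
    """Return (dist, parent) for shortest paths from source.

    graph: dict mapping vertex -> list of (neighbor, non-negative weight).
    """
    dist = {source: 0}
    parent = {source: None}          # parent pointers form the shortest paths tree
    reached = set()
    frontier = [(0, source)]         # heap of (tentative distance, vertex)
    while frontier:
        d, u = heapq.heappop(frontier)
        if u in reached:
            continue                 # stale heap entry, u was already added
        reached.add(u)               # greedy step: u is the closest unreached vertex
        for v, w in graph.get(u, []):
            nd = d + w
            if v not in dist or nd < dist[v]:
                dist[v] = nd
                parent[v] = u
                heapq.heappush(frontier, (nd, v))
    return dist, parent

# Hypothetical example graph (not taken from the slides).
example_graph = {
    "a": [("b", 4), ("c", 1)],
    "b": [("d", 1)],
    "c": [("b", 2), ("d", 5)],
    "d": [],
}
dist, parent = dijkstra(example_graph, "a")
```

On this small graph the tree reaches `c` first, then `b` through `c`, then `d` through `b`.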

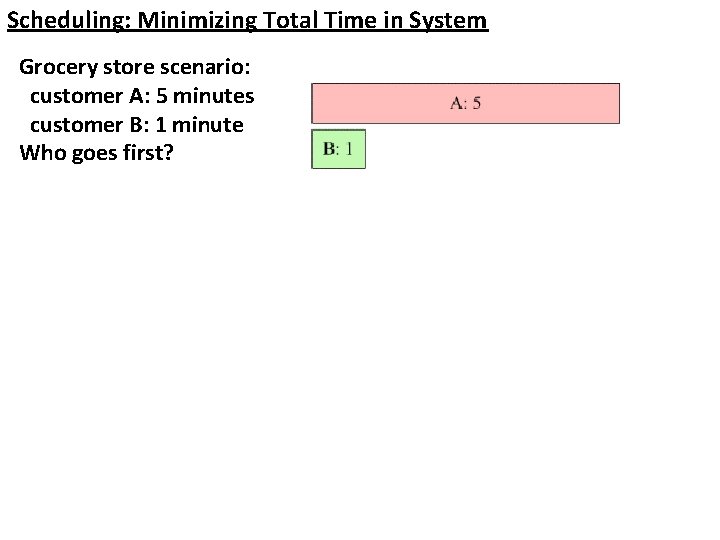

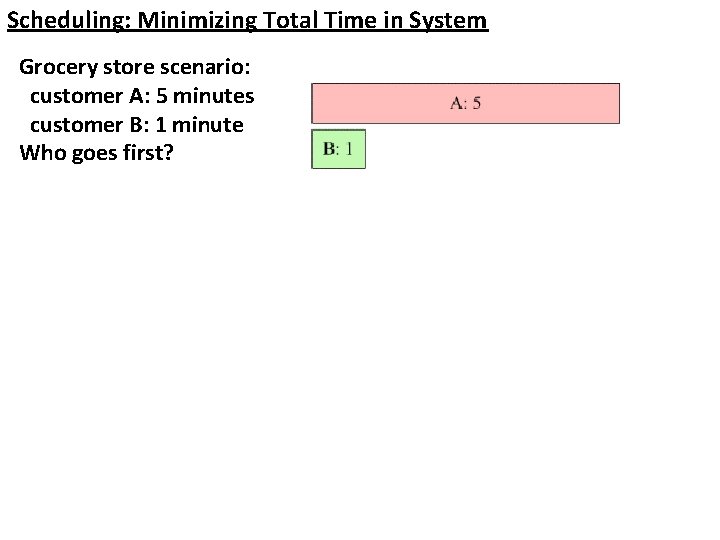


Scheduling: Minimizing Total Time in System

Grocery store scenario:

- customer A: 5 minutes
- customer B: 1 minute

Who goes first?

General problem: You have a bunch of jobs with varying times. Find a scheduling order that minimizes the total time in system.

Greedy algorithm?
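To make "total time in system" concrete, here is a small sketch that adds up each job's completion time for a given order. The helper names `total_time_in_system` and `shortest_job_first` are illustrative assumptions, as is the choice to measure time in system as waiting time plus service time.

```python
def total_time_in_system(job_times):
    """Sum of completion times when jobs run in the given order."""
    clock = 0
    total = 0
    for t in job_times:
        clock += t       # this job finishes at time `clock`
        total += clock   # so it spent `clock` minutes in the system
    return total

def shortest_job_first(job_times):
    """Greedy order: always run the shortest remaining job next."""
    return sorted(job_times)

a_first = total_time_in_system([5, 1])  # customer A, then customer B
b_first = total_time_in_system([1, 5])  # customer B, then customer A
```

With A first the total is 5 + 6 = 11 minutes; with B first it is 1 + 6 = 7 minutes, which is the order `shortest_job_first` produces.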

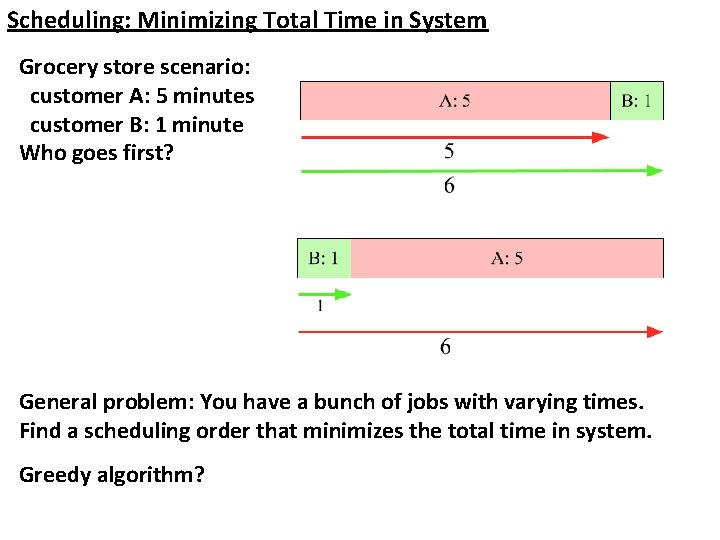

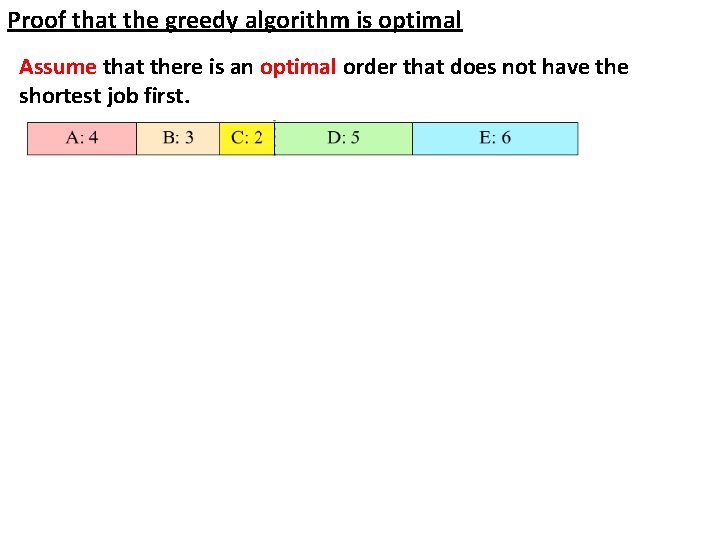

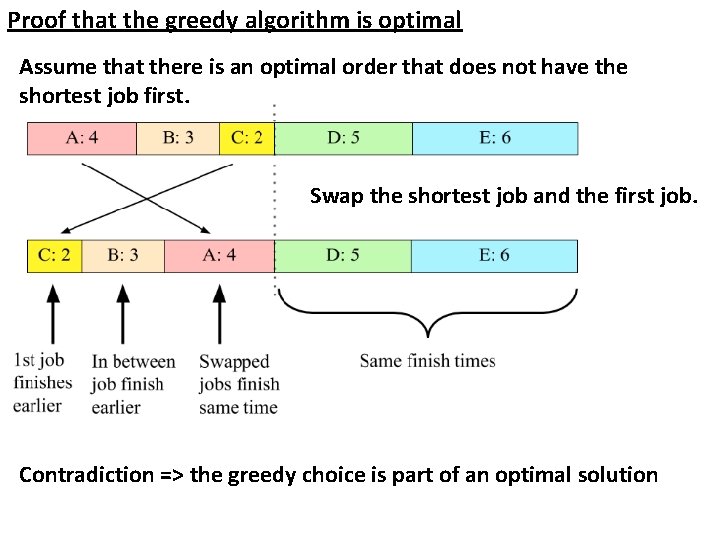

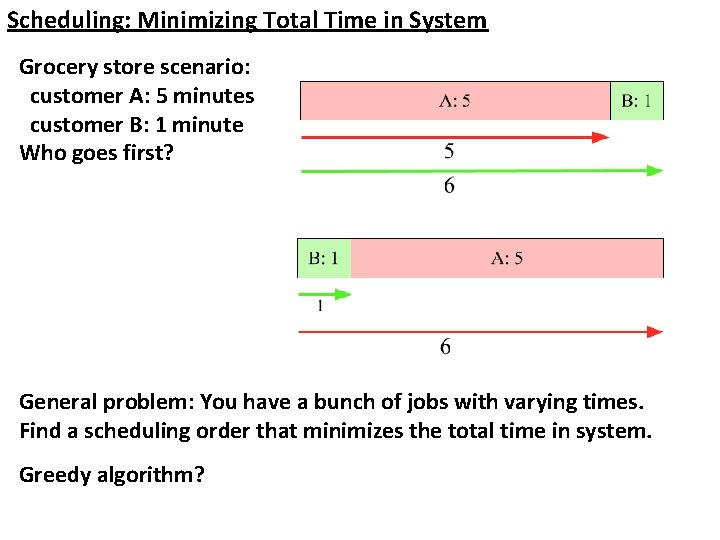

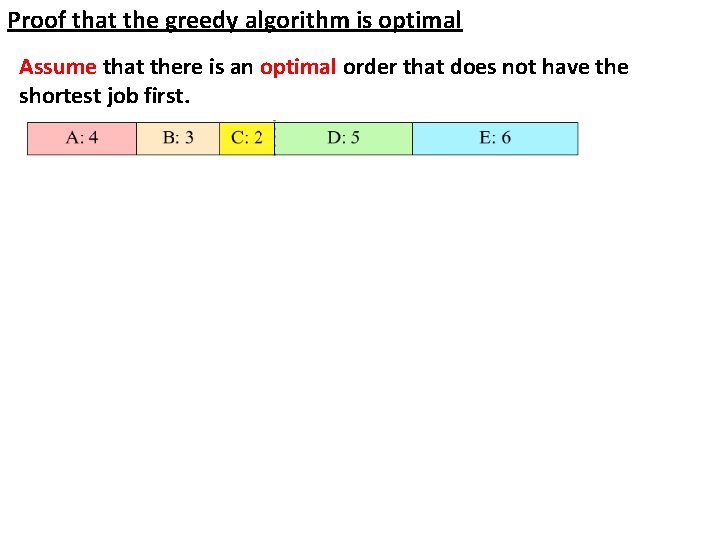

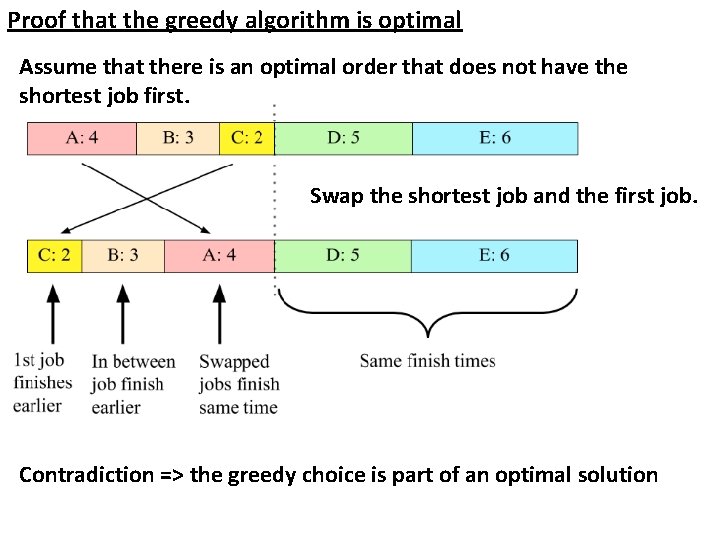


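Proof that the greedy algorithm is optimal

Assume that there is an optimal order that does not have the shortest job first. Swap the shortest job and the first job. Every job that ran before the shortest job's old position now finishes earlier, and no job finishes later, so the total time in system decreases.

Contradiction ⇒ the greedy choice is part of an optimal solution.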
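The swap can be written out explicitly. The notation below is an assumption introduced for illustration: jobs run in the order $1, \dots, n$ with times $t_1, \dots, t_n$, the shortest job sits at position $k > 1$ with $t_k < t_1$, and $C_i$ is the completion time of the job in position $i$.

```latex
\begin{align*}
T &= \sum_{i=1}^{n} C_i, \qquad C_i = \sum_{m=1}^{i} t_m \\
\text{after the swap:}\quad
C_i' &= C_i - (t_1 - t_k) \quad \text{for } 1 \le i < k, \\
C_i' &= C_i \quad \text{for } k \le i \le n, \\
T' &= T - (k-1)(t_1 - t_k) < T .
\end{align*}
```

Since $k \ge 2$ and $t_1 > t_k$, the swapped order is strictly better, which contradicts the assumed optimality.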

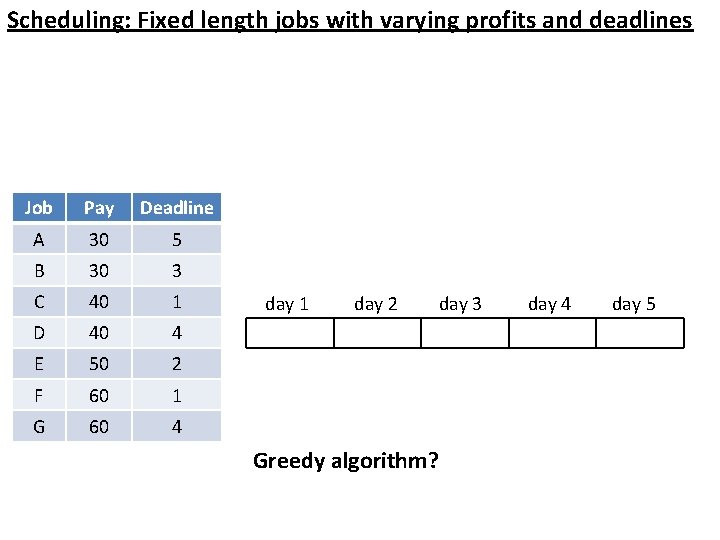

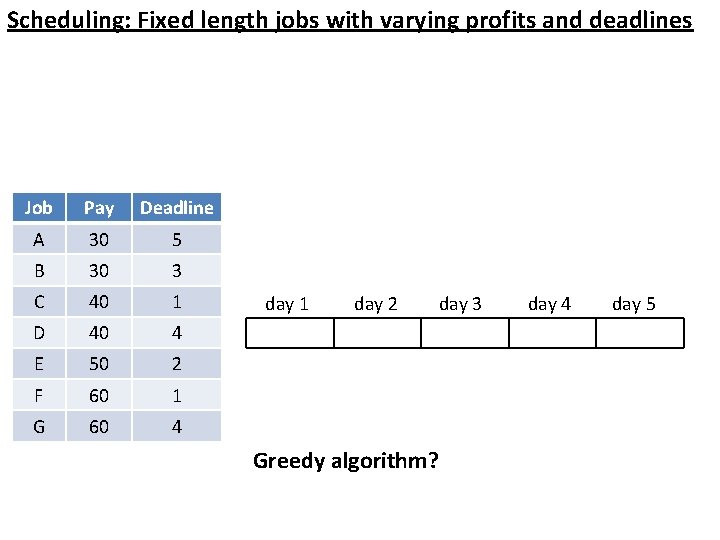

Scheduling: Fixed length jobs with varying profits and deadlines

| Job | Pay | Deadline |
|---|---|---|
| A | 30 | 5 |
| B | 30 | 3 |
| C | 40 | 1 |
| D | 40 | 4 |
| E | 50 | 2 |
| F | 60 | 1 |
| G | 60 | 4 |

Slots to fill: day 1, day 2, day 3, day 4, day 5.

Greedy algorithm?
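The slide leaves the greedy rule as a question. One common candidate, shown here purely as an assumption and not as the answer given in the lecture, is to consider jobs from highest pay to lowest and place each one in the latest free day on or before its deadline. The function name `schedule_by_profit` is hypothetical, and each job is assumed to take exactly one day.

```python
def schedule_by_profit(jobs, num_days):
    """jobs: list of (name, pay, deadline). Returns (schedule, total_pay)."""
    slots = [None] * (num_days + 1)   # slots[d] is the job done on day d; index 0 unused
    # sorted() is stable, so jobs with equal pay keep their listed order
    for name, pay, deadline in sorted(jobs, key=lambda job: -job[1]):
        # try the latest free day that still meets the deadline
        for day in range(min(deadline, num_days), 0, -1):
            if slots[day] is None:
                slots[day] = (name, pay)
                break                 # job placed; jobs that never fit are dropped
    schedule = {day: slots[day][0] for day in range(1, num_days + 1) if slots[day]}
    total_pay = sum(slot[1] for slot in slots if slot)
    return schedule, total_pay

jobs = [("A", 30, 5), ("B", 30, 3), ("C", 40, 1), ("D", 40, 4),
        ("E", 50, 2), ("F", 60, 1), ("G", 60, 4)]
schedule, total_pay = schedule_by_profit(jobs, 5)
```

Under this rule the table's jobs fill days 1 to 5 with F, E, D, G, A for a total pay of 240, and jobs B and C are left out.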

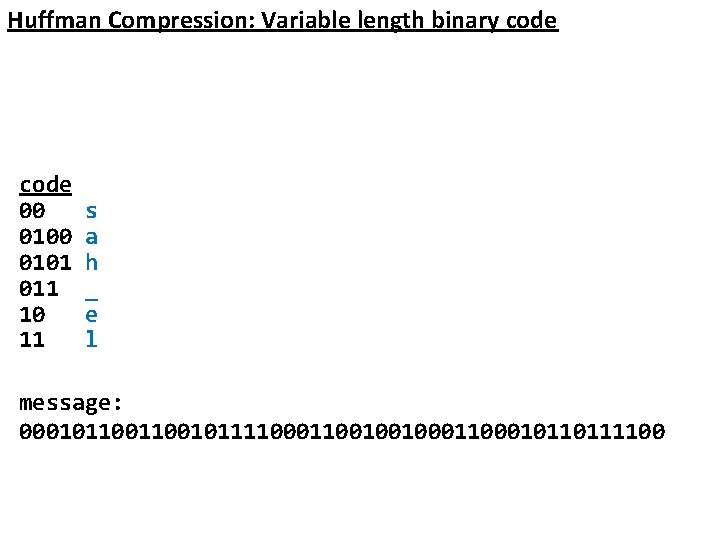

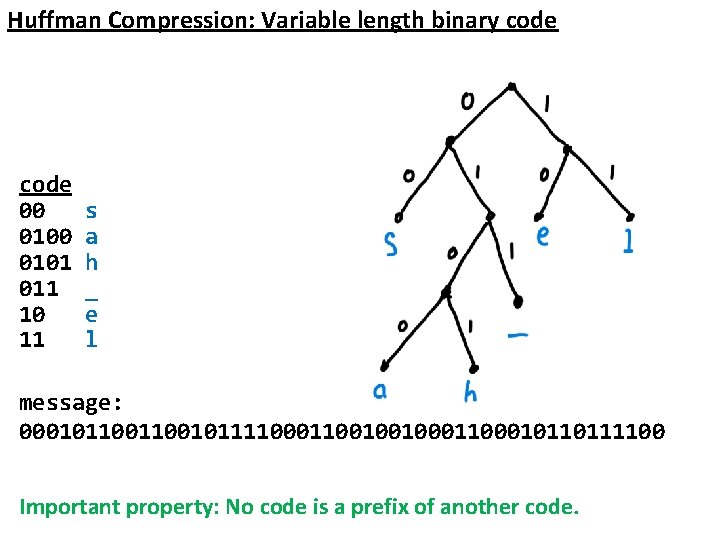

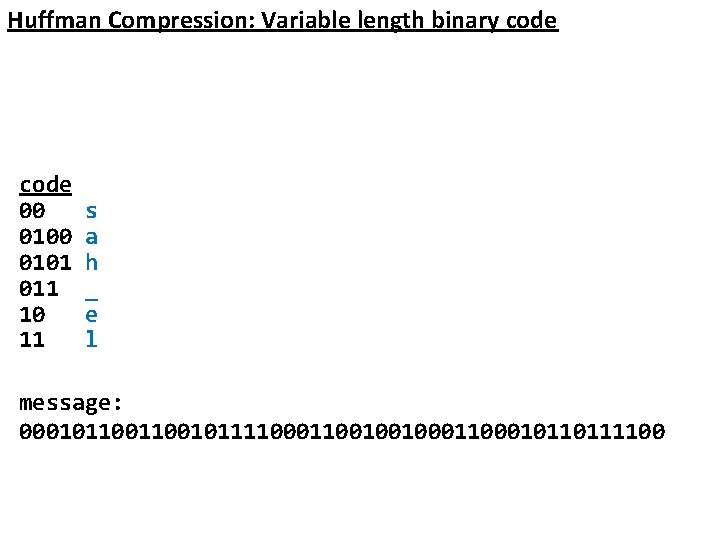

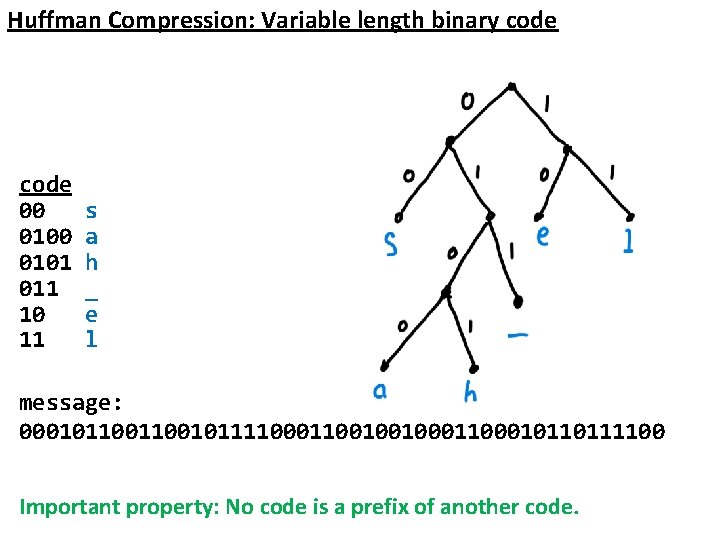


Huffman Compression: Variable length binary code

Codes: 00 0101 011 10 11

Characters: s a h _ e l

Message: 0001011001011110001100100100010110111100

Important property: No code is a prefix of another code.

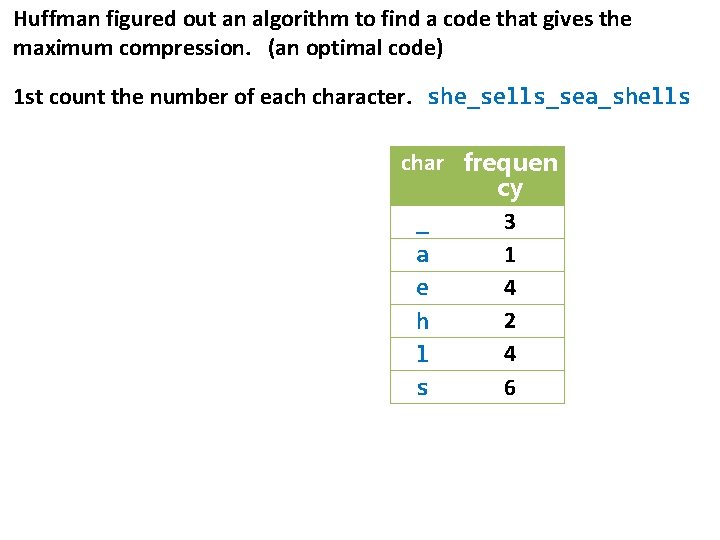

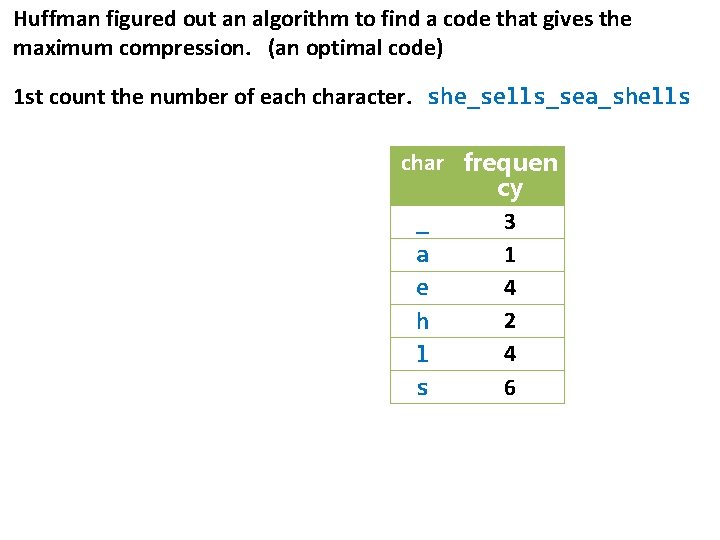


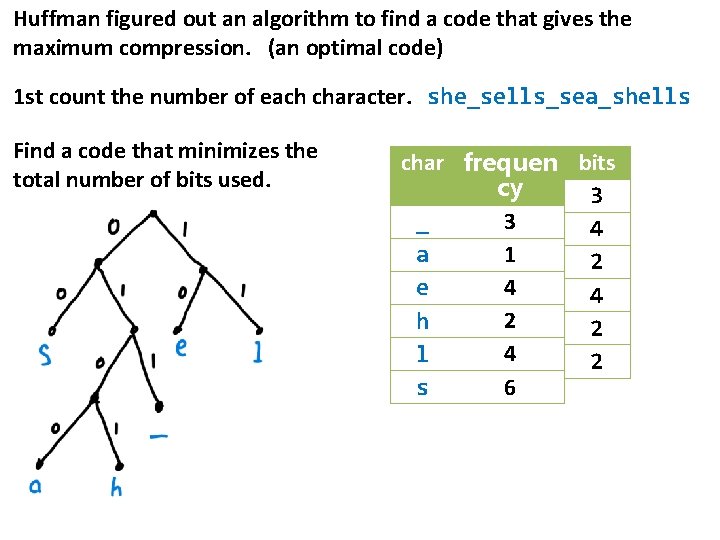

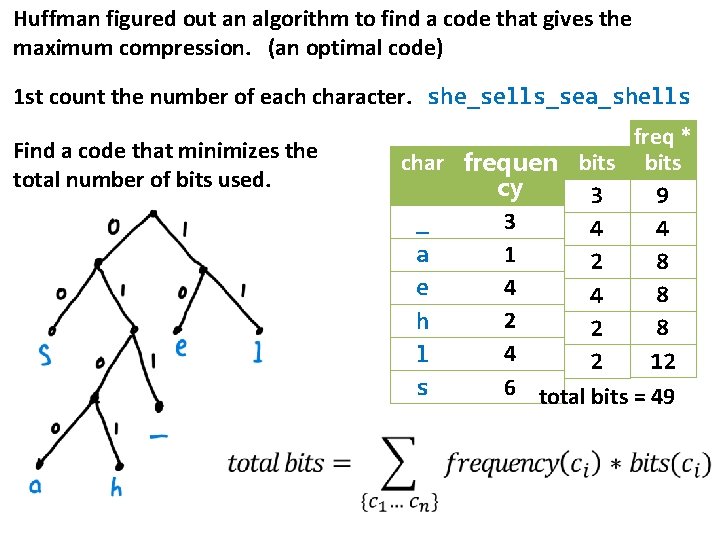

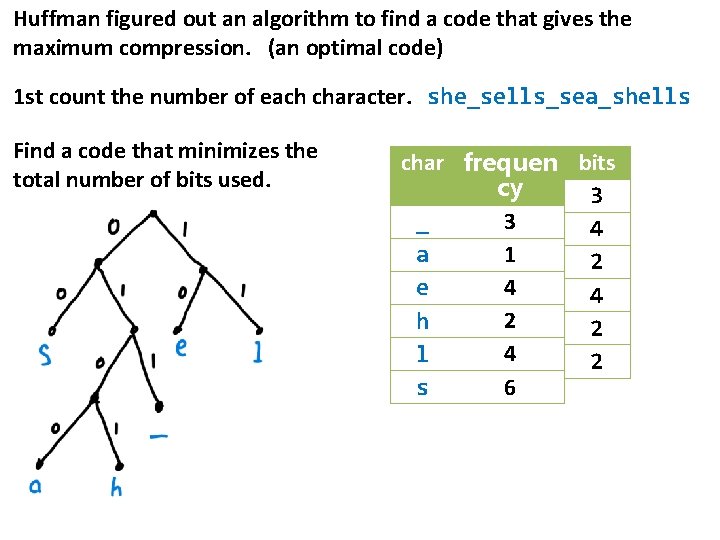

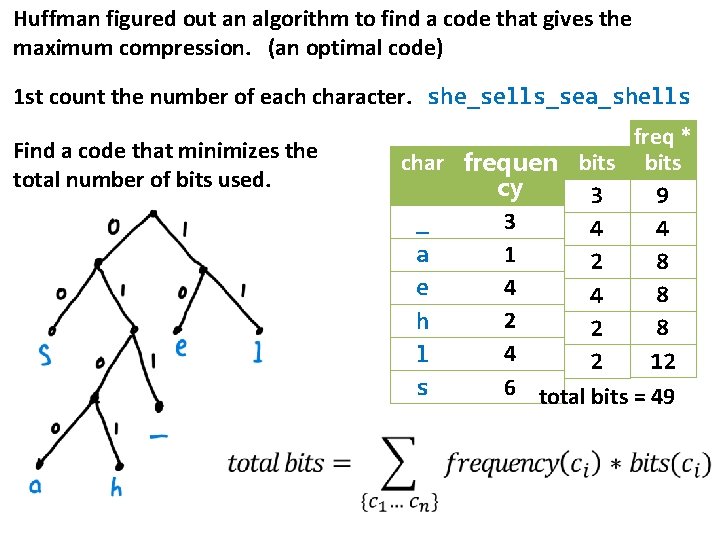


Huffman figured out an algorithm to find a code that gives the maximum compression (an optimal code).

First, count the number of each character in the message: she_sells_sea_shells

Find a code that minimizes the total number of bits used.

| char | frequency | bits | freq * bits |
|---|---|---|---|
| _ | 3 | 3 | 9 |
| a | 1 | 4 | 4 |
| e | 4 | 2 | 8 |
| h | 2 | 4 | 8 |
| l | 4 | 2 | 8 |
| s | 6 | 2 | 12 |

total bits = 49
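The table's arithmetic can be reproduced with a few lines of Python. This is only a check of the numbers above; the helper name `total_bits` is an assumption, and the code lengths are copied from the table's "bits" column.

```python
from collections import Counter

def total_bits(text, code_lengths):
    """Sum of frequency * code length over the distinct characters of text."""
    freq = Counter(text)
    return sum(freq[ch] * code_lengths[ch] for ch in freq)

message = "she_sells_sea_shells"
lengths = {"_": 3, "a": 4, "e": 2, "h": 4, "l": 2, "s": 2}  # "bits" column
freq = Counter(message)
bits_used = total_bits(message, lengths)
```

For comparison, a fixed-length code for six distinct characters needs 3 bits per character, or 60 bits for this 20-character message.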

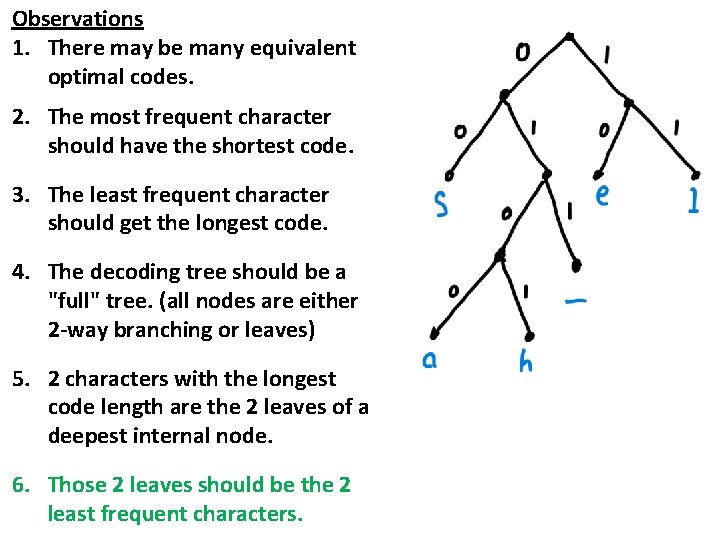

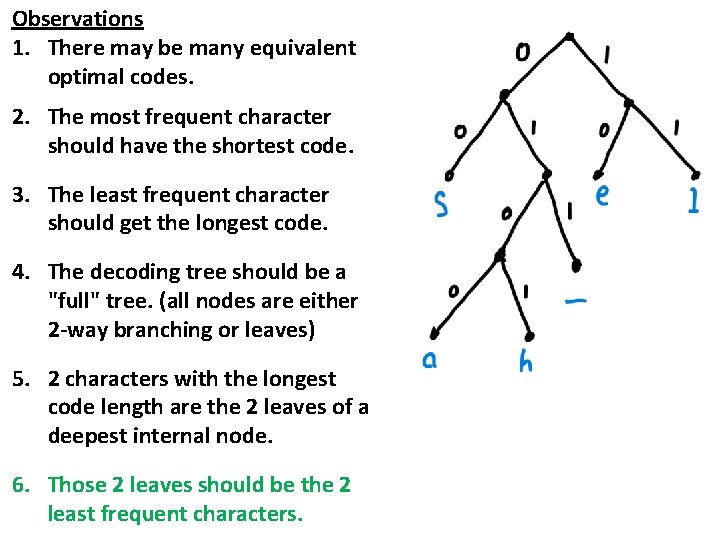

Observations

1. There may be many equivalent optimal codes.
2. The most frequent character should have the shortest code.
3. The least frequent character should get the longest code.
4. The decoding tree should be a "full" tree (all nodes are either 2-way branching or leaves).
5. Two characters with the longest code length are the two leaves of a deepest internal node.
6. Those two leaves should be the two least frequent characters.

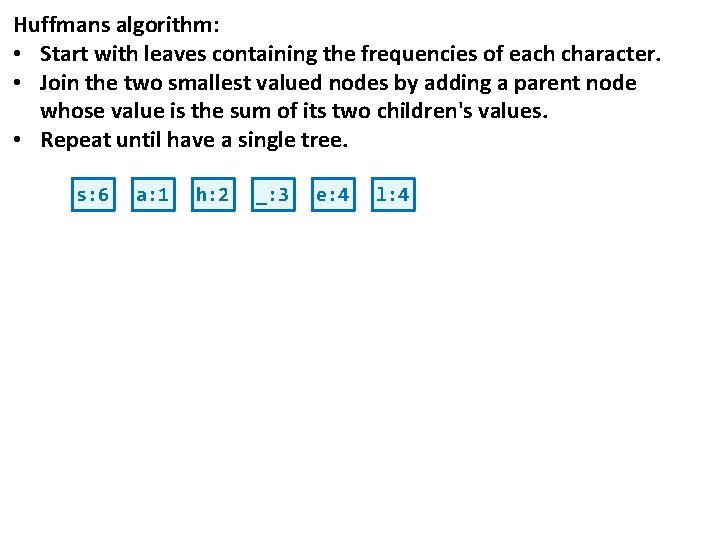

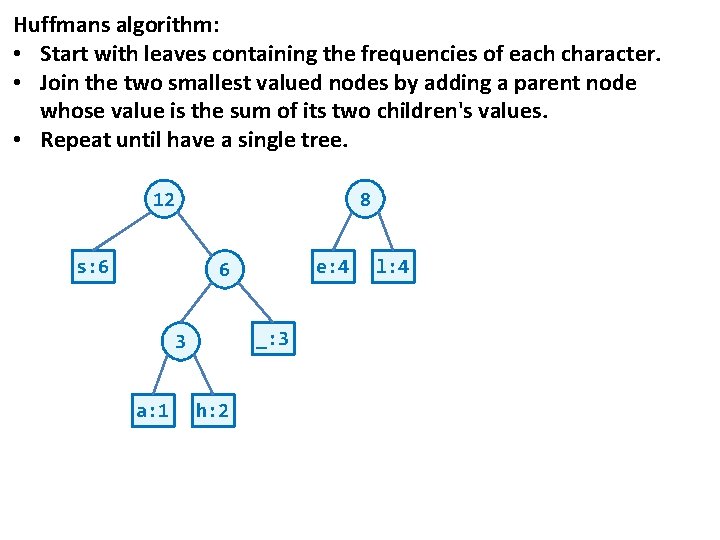

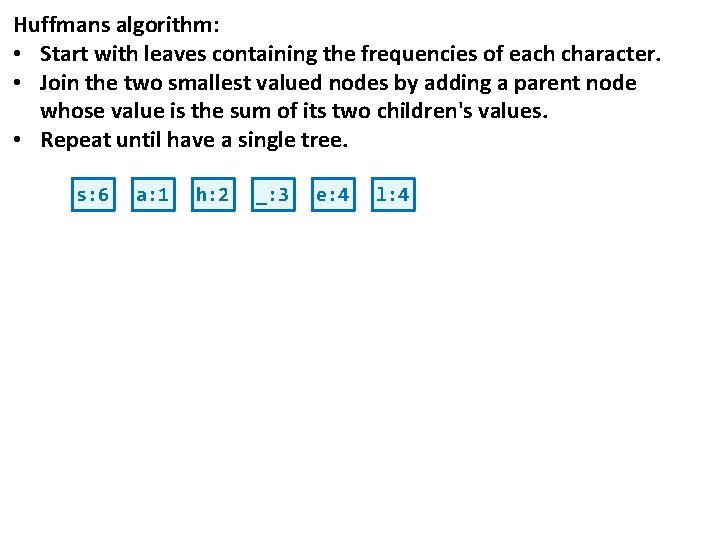

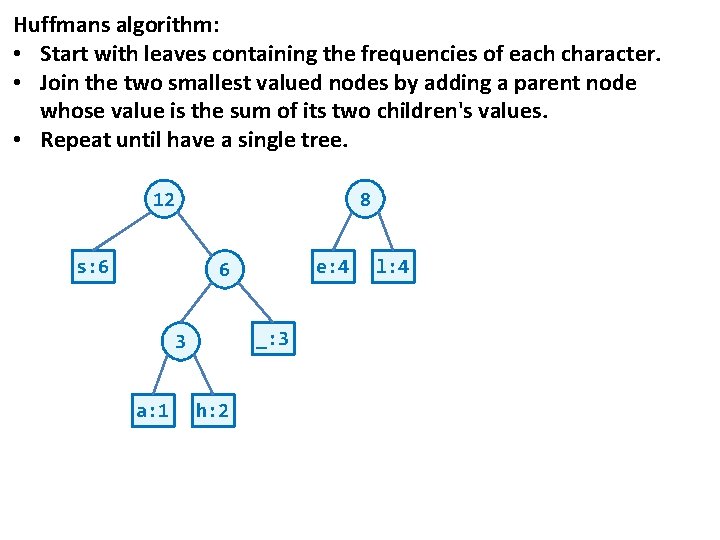

Huffman's algorithm:

- Start with leaves containing the frequencies of each character.
- Join the two smallest valued nodes by adding a parent node whose value is the sum of its two children's values.
- Repeat until there is a single tree.

Starting leaves: s: 6, e: 4, l: 4, _: 3, h: 2, a: 1

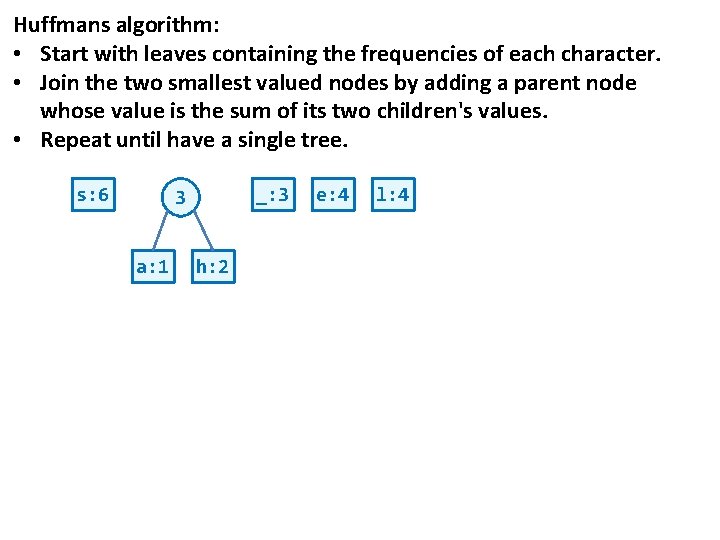

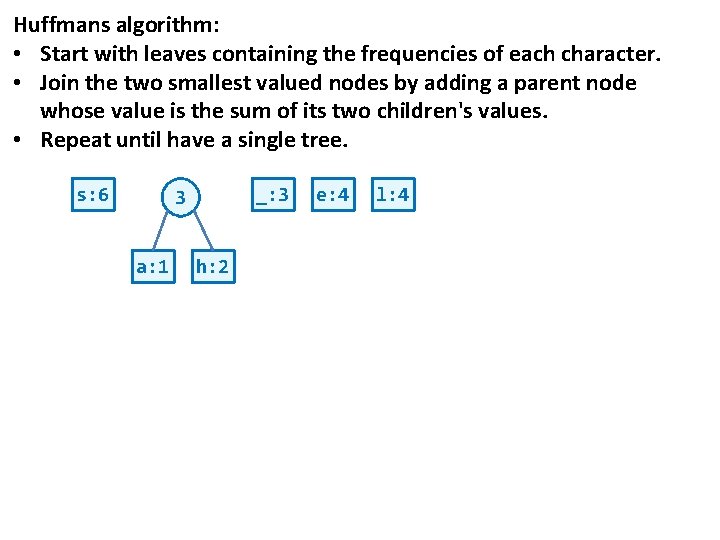

Huffman's algorithm, step 1: join a: 1 and h: 2 under a new parent node with value 3. Nodes remaining: s: 6, e: 4, l: 4, _: 3, 3 (a, h).

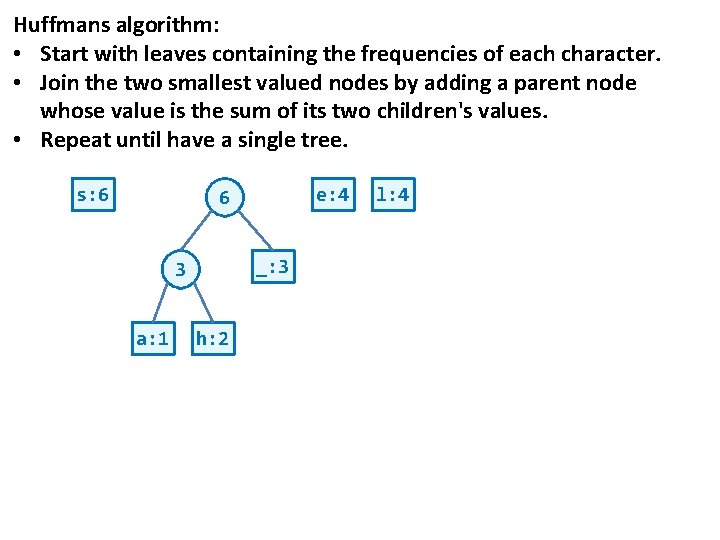

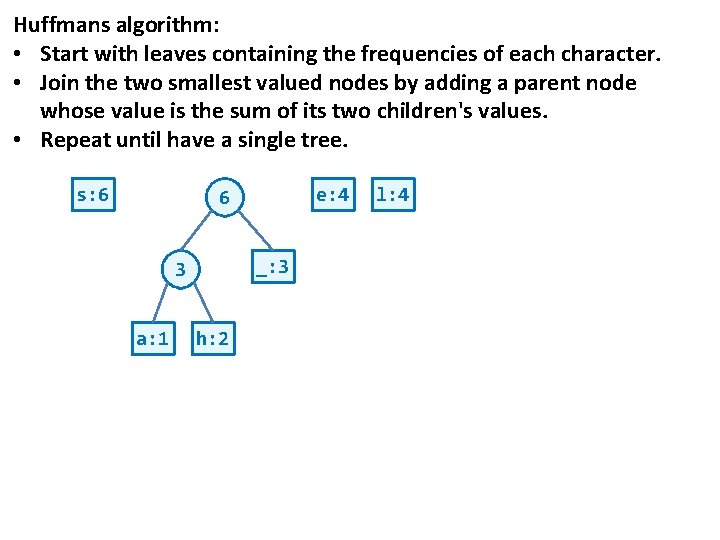

Huffman's algorithm, step 2: join _: 3 and the node 3 (a, h) under a new parent node with value 6. Nodes remaining: s: 6, 6 (_, a, h), e: 4, l: 4.

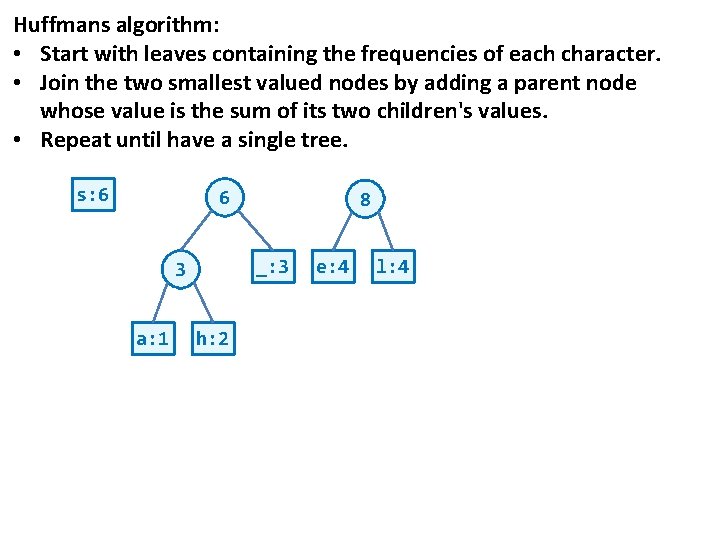

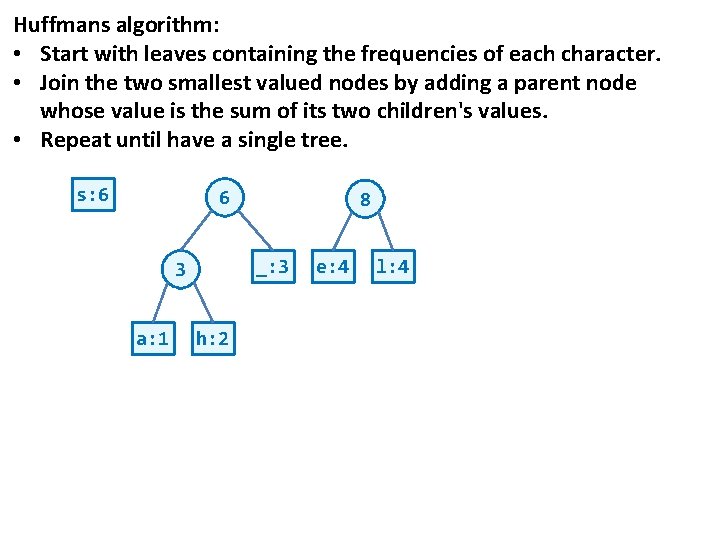

Huffman's algorithm, step 3: join e: 4 and l: 4 under a new parent node with value 8. Nodes remaining: s: 6, 6 (_, a, h), 8 (e, l).

Huffman's algorithm, step 4: join s: 6 and the node 6 (_, a, h) under a new parent node with value 12. Nodes remaining: 12 (s, _, a, h), 8 (e, l).

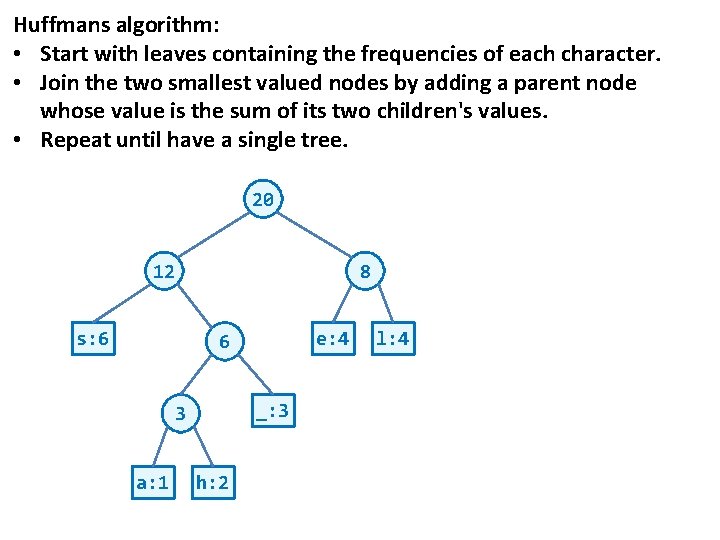

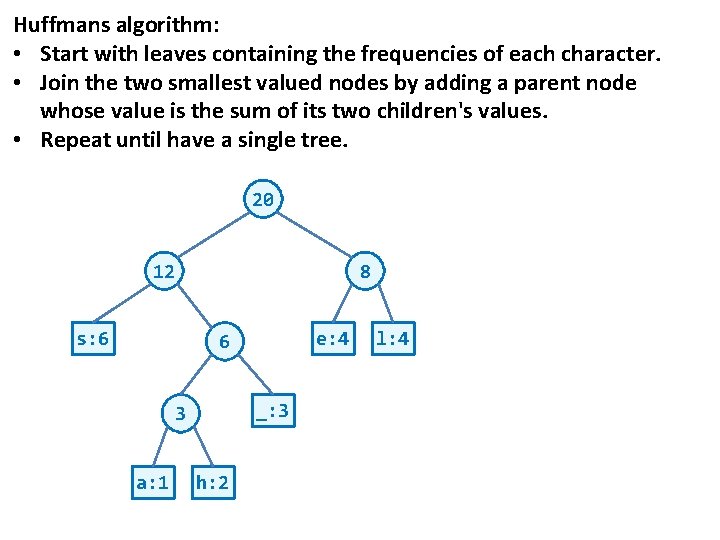

Huffman's algorithm, step 5: join 12 and 8 under a new parent node with value 20. A single tree remains, so the merging stops.

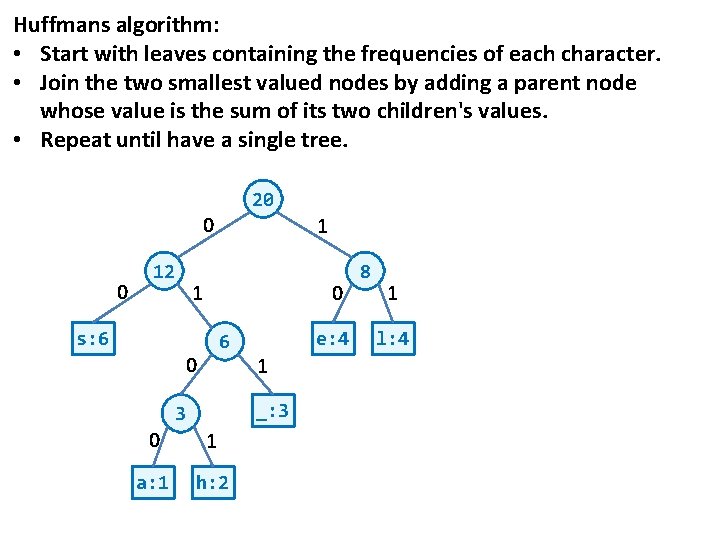

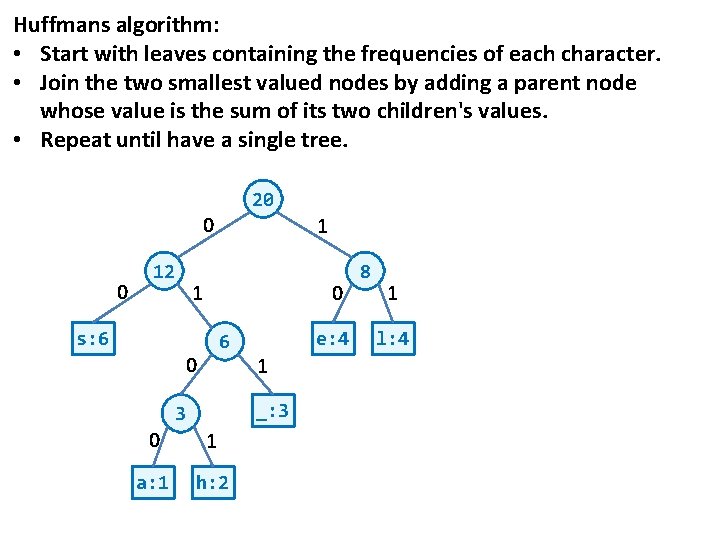

Huffman's algorithm, final step: label the two edges below each internal node 0 and 1. Each character's code is the sequence of labels on the path from the root (20) to its leaf, so s, e, and l get 2-bit codes, _ gets a 3-bit code, and a and h get 4-bit codes.

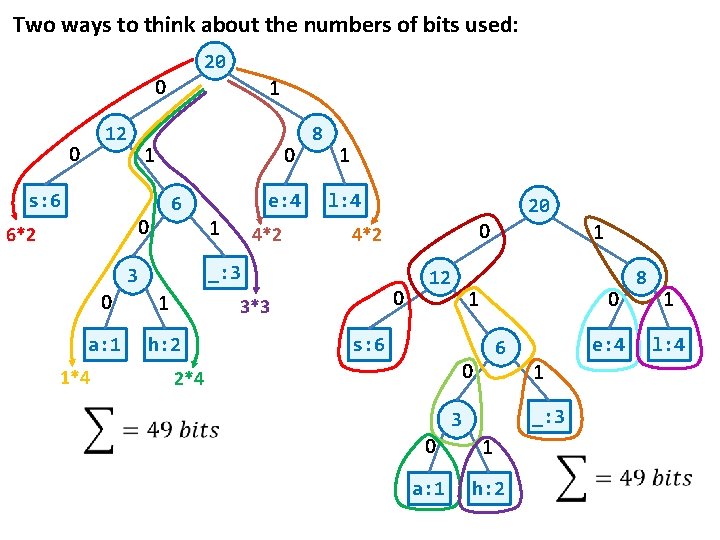

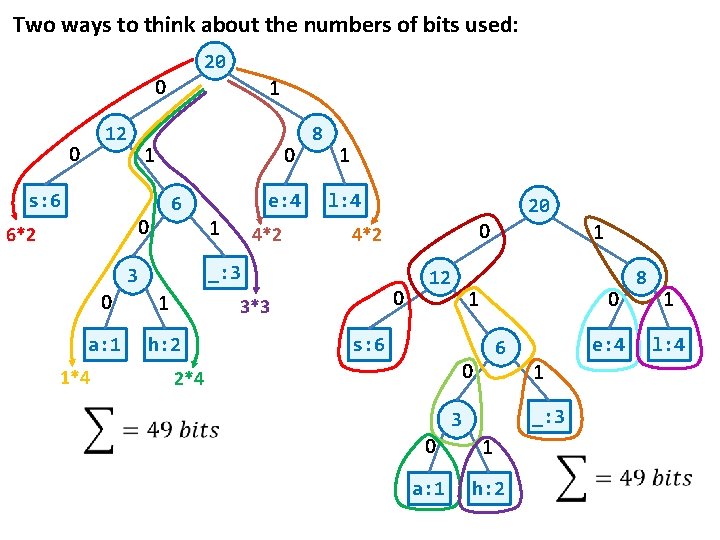

Two ways to think about the number of bits used:

1. Sum frequency * code length over the leaves: 6*2 + 4*2 + 4*2 + 3*3 + 1*4 + 2*4 = 49.
2. Sum the values of the internal nodes, since each merge adds one bit to every character beneath the new parent: 3 + 6 + 8 + 12 + 20 = 49.
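Both counts can be checked on the tree built in the preceding slides. The sketch below encodes that tree as nested pairs; the representation and the helper names (`is_leaf`, `weight`, `bits_by_leaves`, `bits_by_internal_nodes`) are assumptions made for this illustration.

```python
# Internal nodes are (left, right) pairs; leaves are (char, frequency).
tree = ((("s", 6), (("_", 3), (("a", 1), ("h", 2)))), (("e", 4), ("l", 4)))

def is_leaf(node):
    return isinstance(node[0], str)

def weight(node):
    """Frequency of a leaf, or the sum of the children's weights."""
    return node[1] if is_leaf(node) else weight(node[0]) + weight(node[1])

def bits_by_leaves(node, depth=0):
    """First view: sum of frequency * depth over all leaves."""
    if is_leaf(node):
        return node[1] * depth
    return bits_by_leaves(node[0], depth + 1) + bits_by_leaves(node[1], depth + 1)

def bits_by_internal_nodes(node):
    """Second view: sum of the values stored at the internal nodes."""
    if is_leaf(node):
        return 0
    return (weight(node)
            + bits_by_internal_nodes(node[0])
            + bits_by_internal_nodes(node[1]))

by_leaves = bits_by_leaves(tree)
by_internal = bits_by_internal_nodes(tree)
```

The two functions agree because a leaf at depth d lies beneath exactly d internal nodes, so its frequency is counted d times in the second sum.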

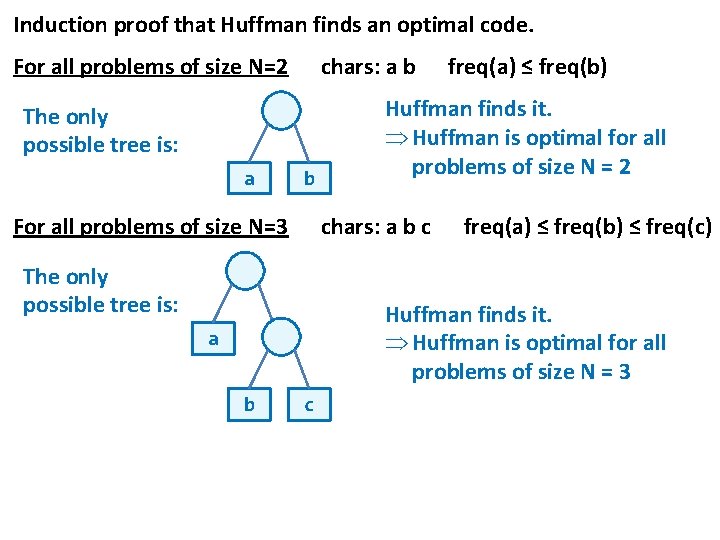

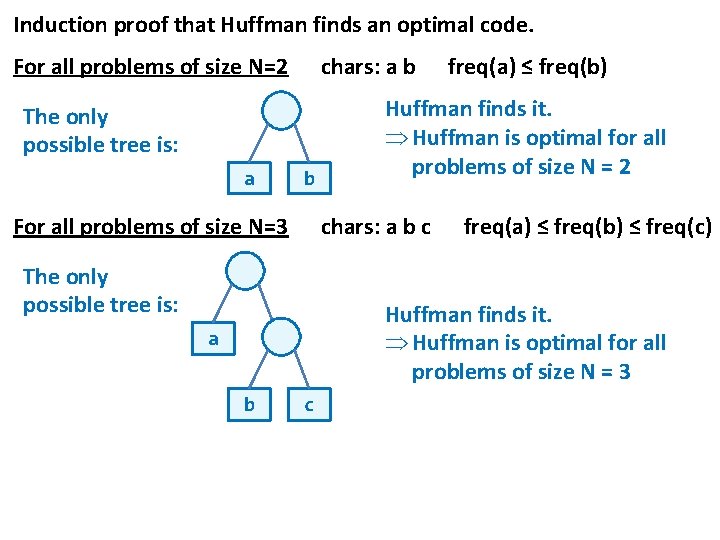

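Induction proof that Huffman finds an optimal code.

For all problems of size N = 2 (chars: a, b): the only possible tree is a root with the two leaves a and b. Huffman finds it. ⇒ Huffman is optimal for all problems of size N = 2.

For all problems of size N = 3 (chars: a, b, c, with freq(a) ≤ freq(b) ≤ freq(c)): the only possible tree shape has one leaf at depth 1 and two leaves at depth 2, and the total is smallest when the two least frequent characters, a and b, are the deeper pair. Huffman finds it. ⇒ Huffman is optimal for all problems of size N = 3.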

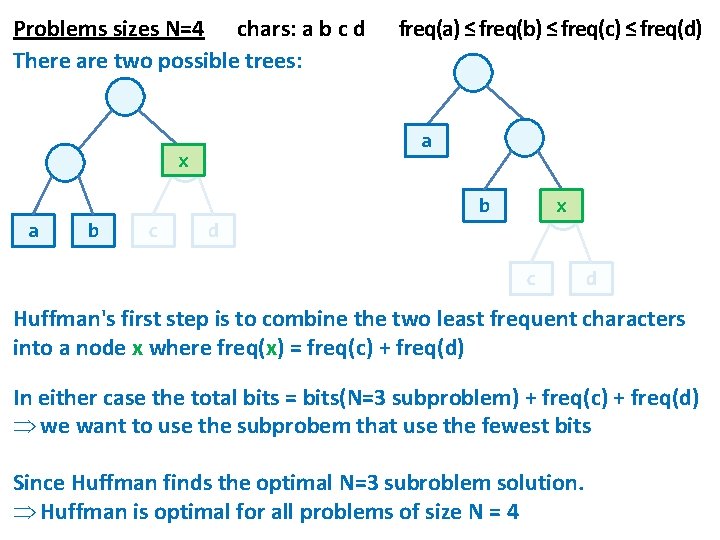

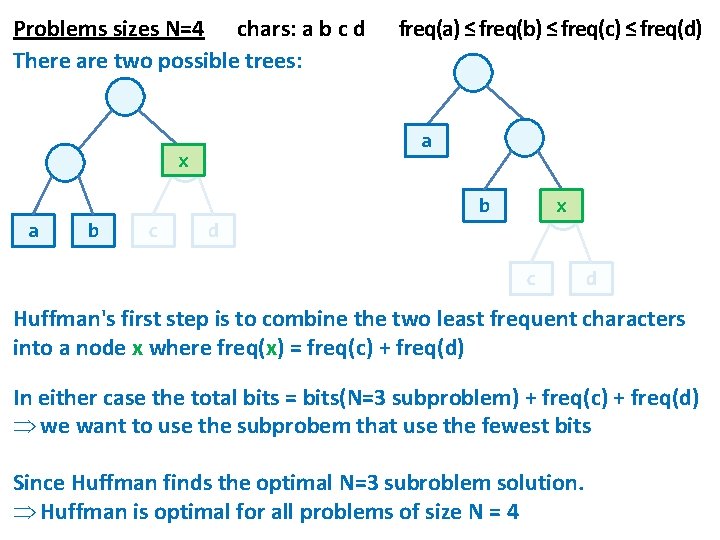

Problems of size N = 4 (chars: a, b, c, d, ordered here so that freq(a) ≥ freq(b) ≥ freq(c) ≥ freq(d), which makes c and d the two least frequent):

There are two possible tree shapes.

Huffman's first step is to combine the two least frequent characters into a node x, where freq(x) = freq(c) + freq(d).

In either case, total bits = bits(N = 3 subproblem) + freq(c) + freq(d)

⇒ we want to use the subproblem that uses the fewest bits.

Since Huffman finds the optimal solution to the N = 3 subproblem,

⇒ Huffman is optimal for all problems of size N = 4.

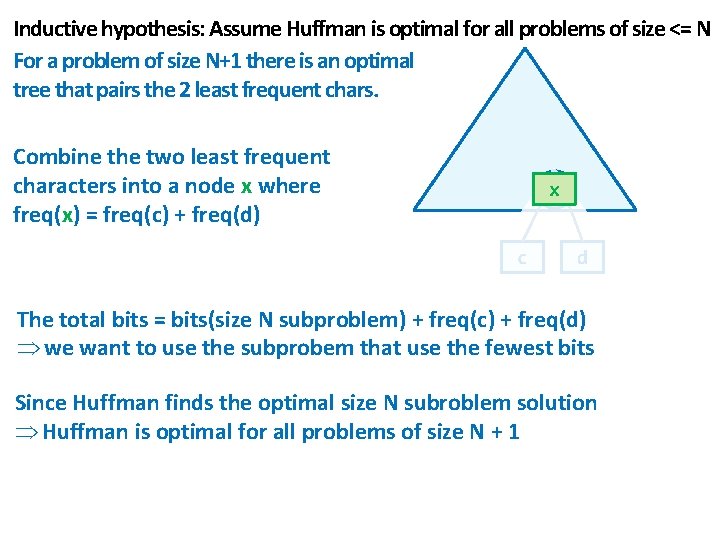

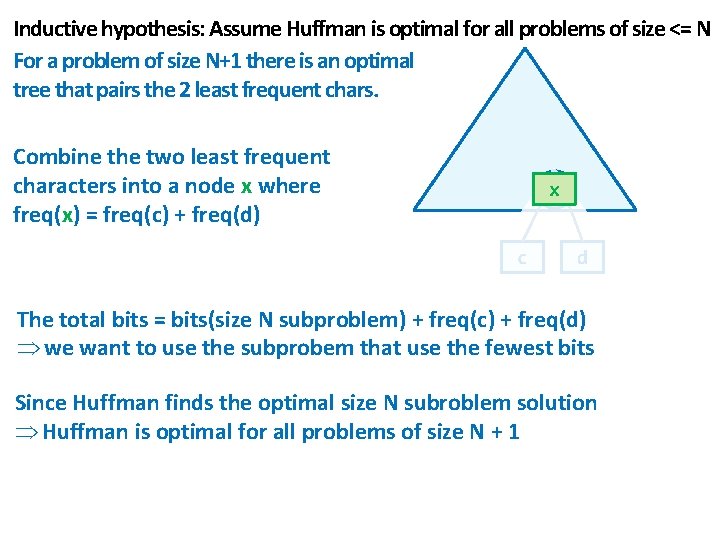

Inductive hypothesis: Assume Huffman is optimal for all problems of size ≤ N.

For a problem of size N + 1, there is an optimal tree that pairs the two least frequent chars.

Combine the two least frequent characters, c and d, into a node x, where freq(x) = freq(c) + freq(d).

The total bits = bits(size N subproblem) + freq(c) + freq(d)

⇒ we want to use the subproblem that uses the fewest bits.

Since Huffman finds the optimal solution to the size N subproblem,

⇒ Huffman is optimal for all problems of size N + 1.
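The identity used in the inductive step can be derived in one line. The notation is an assumption introduced here: $B(T)$ is the total bits of tree $T$, $T'$ is the size-$N$ tree obtained by replacing the sibling leaves $c$ and $d$ with the single leaf $x$, $\ell$ is the depth of $x$ in $T'$, and $R$ is the contribution of all other leaves, which is the same in both trees.

```latex
\begin{align*}
B(T)  &= R + \bigl(\mathrm{freq}(c) + \mathrm{freq}(d)\bigr)(\ell + 1) \\
      &= R + \mathrm{freq}(x)\,\ell + \mathrm{freq}(c) + \mathrm{freq}(d) \\
      &= B(T') + \mathrm{freq}(c) + \mathrm{freq}(d).
\end{align*}
```

The added term does not depend on the shape of $T'$, so minimizing $B(T)$ over trees that pair $c$ and $d$ is the same as minimizing $B(T')$.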

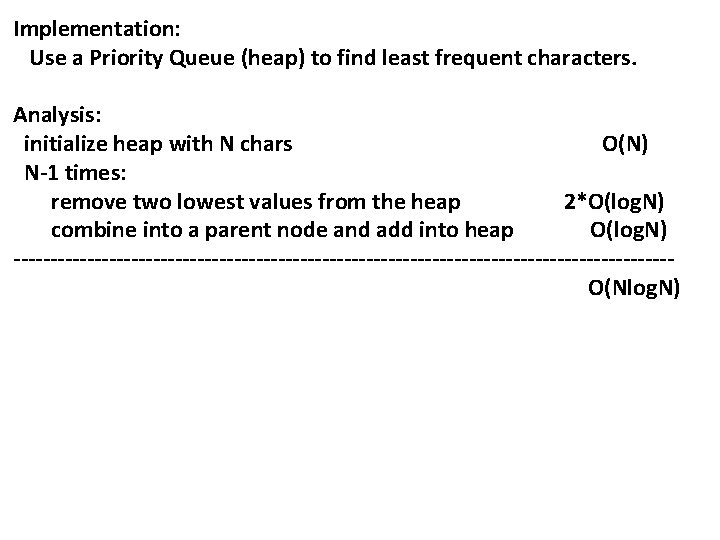

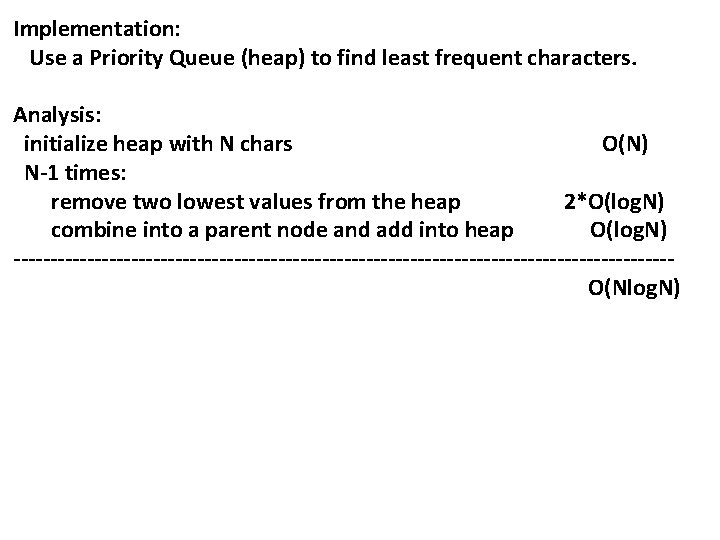

Implementation: Use a priority queue (heap) to find the least frequent characters.

Analysis:

| Step | Cost |
|---|---|
| Initialize heap with N chars | O(N) |
| N-1 times: remove the two lowest values from the heap | 2 * O(log N) each |
| N-1 times: combine into a parent node and add it into the heap | O(log N) each |
| Total | O(N log N) |
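The heap-based approach can be sketched with Python's `heapq` module. This is one possible implementation under stated assumptions, not the lecture's own code: the function name `huffman_code`, the tuple-based tree, the tie-breaking counter, and the choice of "0" for the first child are all illustrative. Ties may be broken differently than in the slides, so the individual codes can differ while the total number of bits stays optimal.

```python
import heapq
from collections import Counter
from itertools import count

def huffman_code(text):
    """Return a dict mapping each character of text to its binary code string."""
    freq = Counter(text)
    tiebreak = count()   # unique second field so heap entries never compare trees
    heap = [(f, next(tiebreak), ch) for ch, f in freq.items()]
    heapq.heapify(heap)                      # O(N)
    if not heap:
        return {}
    if len(heap) == 1:
        return {heap[0][2]: "0"}             # a lone character still needs one bit
    while len(heap) > 1:                     # N - 1 merges
        f1, _, first = heapq.heappop(heap)   # O(log N)
        f2, _, second = heapq.heappop(heap)  # O(log N)
        heapq.heappush(heap, (f1 + f2, next(tiebreak), (first, second)))
    codes = {}

    def assign(node, prefix):
        if isinstance(node, tuple):          # internal node: (first child, second child)
            assign(node[0], prefix + "0")
            assign(node[1], prefix + "1")
        else:                                # leaf: a single character
            codes[node] = prefix

    assign(heap[0][2], "")
    return codes

message = "she_sells_sea_shells"
codes = huffman_code(message)
encoded_length = sum(len(codes[ch]) for ch in message)
```

For the running example the encoded length comes out to 49 bits, matching the total computed from the table earlier.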