Polychotomizers: One-Hot Vectors, Softmax, and Cross-Entropy (Mark Hasegawa-Johnson)

- Slides: 55

Polychotomizers: One-Hot Vectors, Softmax, and Cross-Entropy. Mark Hasegawa-Johnson, 3/9/2019. CC-BY 3.0: You are free to share and adapt these slides if you cite the original.

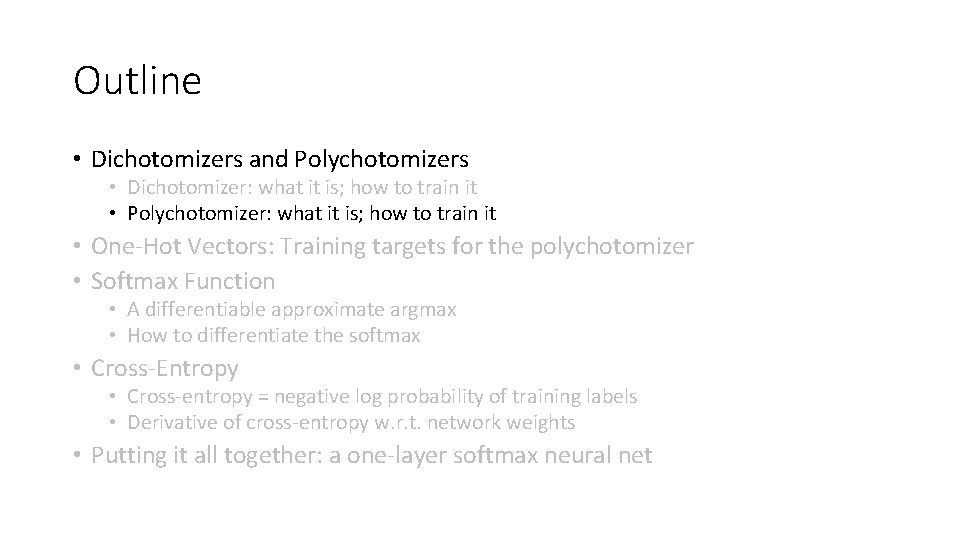

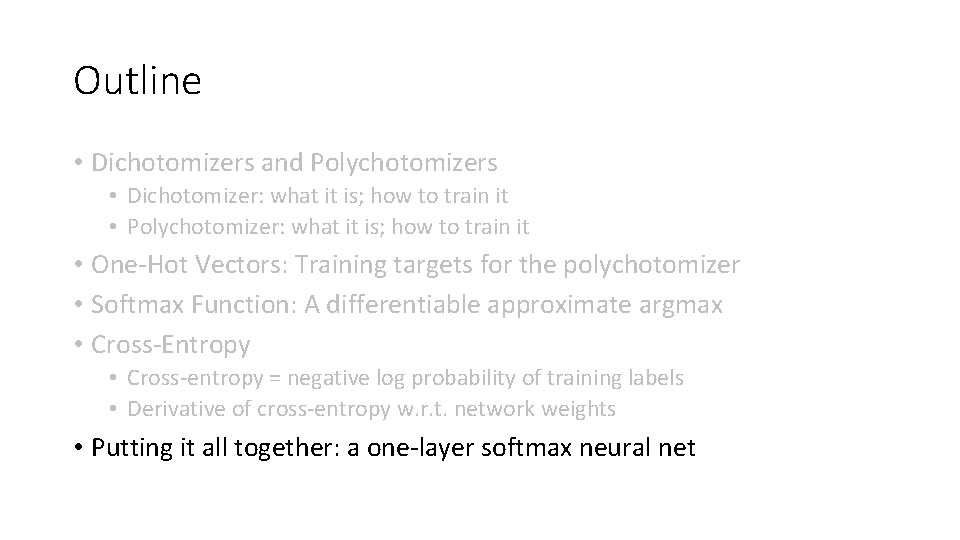

Outline
- Dichotomizers and Polychotomizers
  - Dichotomizer: what it is; how to train it
  - Polychotomizer: what it is; how to train it
- One-Hot Vectors: Training targets for the polychotomizer
- Softmax Function
  - A differentiable approximate argmax
  - How to differentiate the softmax
- Cross-Entropy
  - Cross-entropy = negative log probability of training labels
  - Derivative of cross-entropy w.r.t. network weights
- Putting it all together: a one-layer softmax neural net

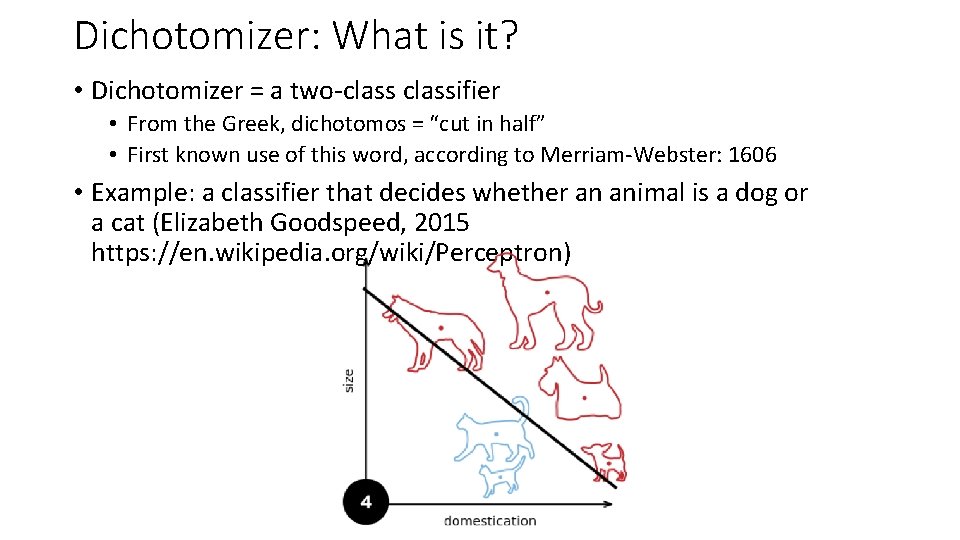

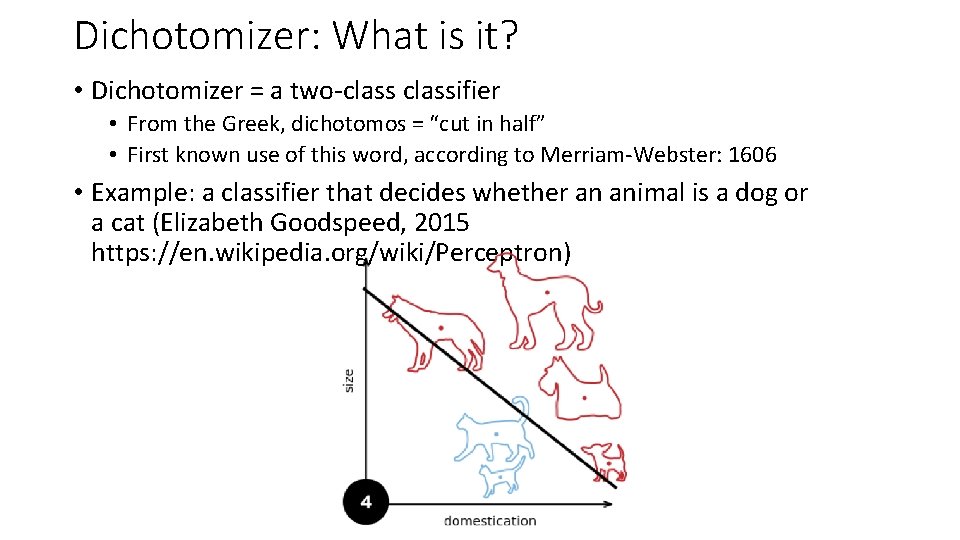

Dichotomizer: What is it?
- Dichotomizer = a two-classifier
- From the Greek, dichotomos = “cut in half”
- First known use of this word, according to Merriam-Webster: 1606
- Example: a classifier that decides whether an animal is a dog or a cat (Elizabeth Goodspeed, 2015, https://en.wikipedia.org/wiki/Perceptron)

Dichotomizer: Example •

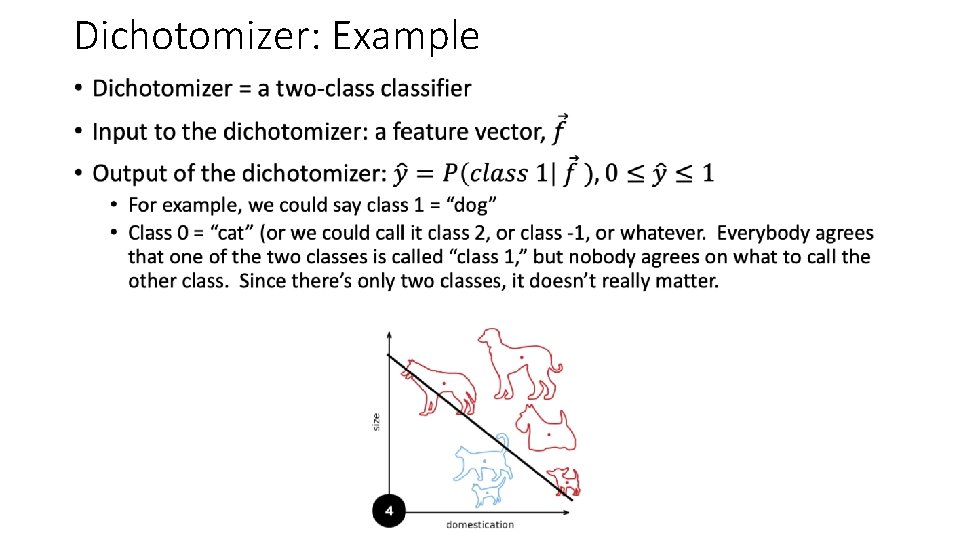

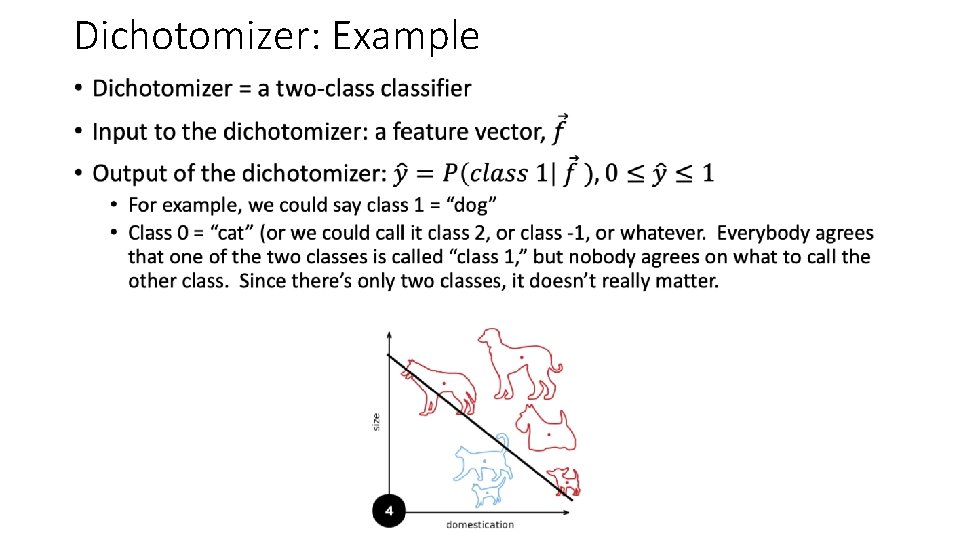

Dichotomizer: Example •

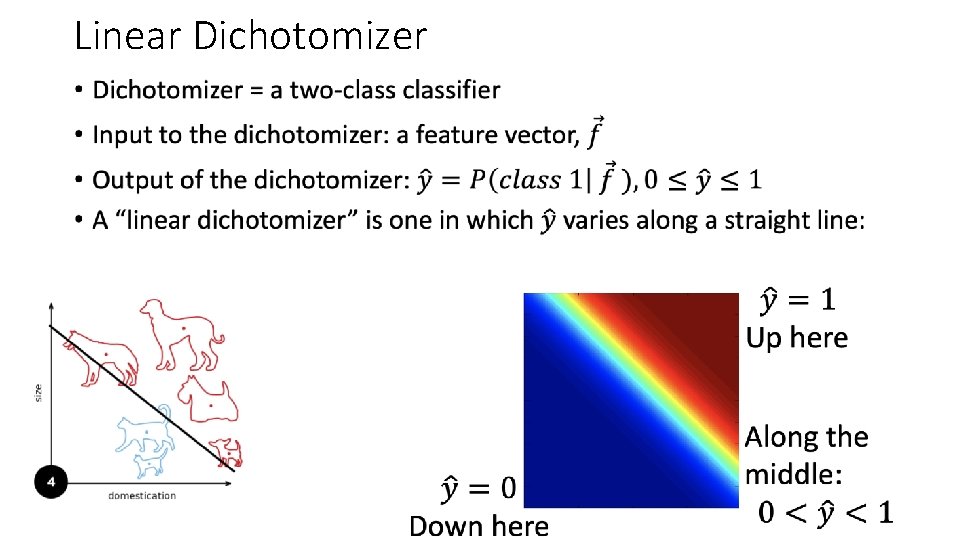

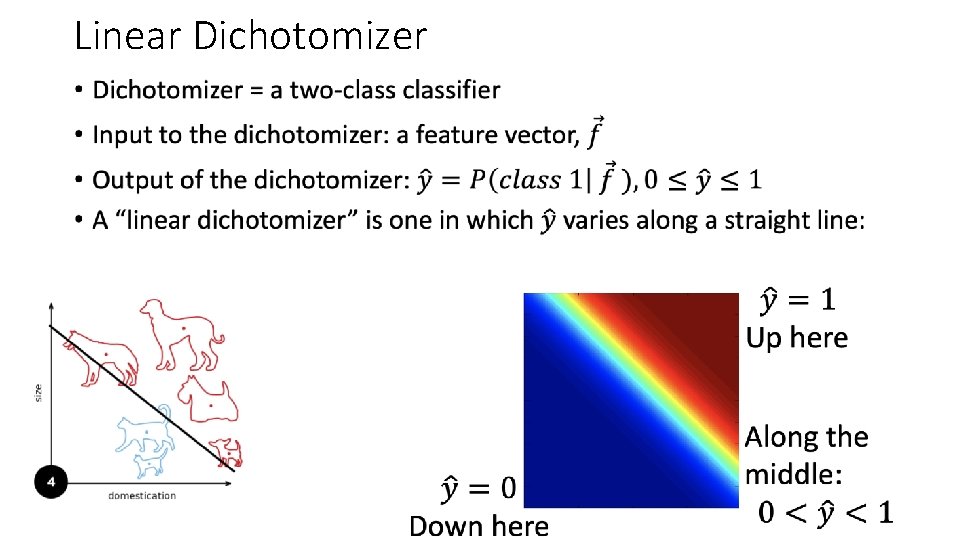

Linear Dichotomizer •

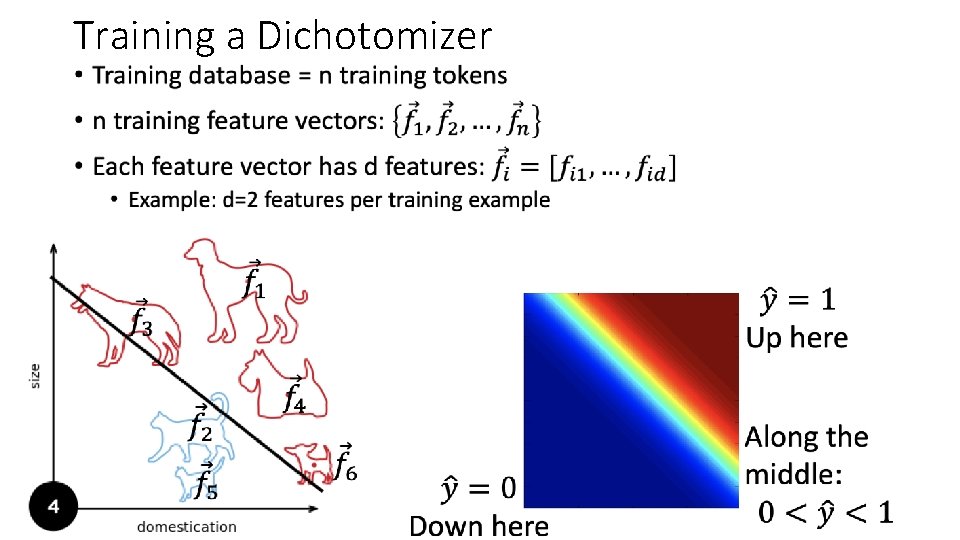

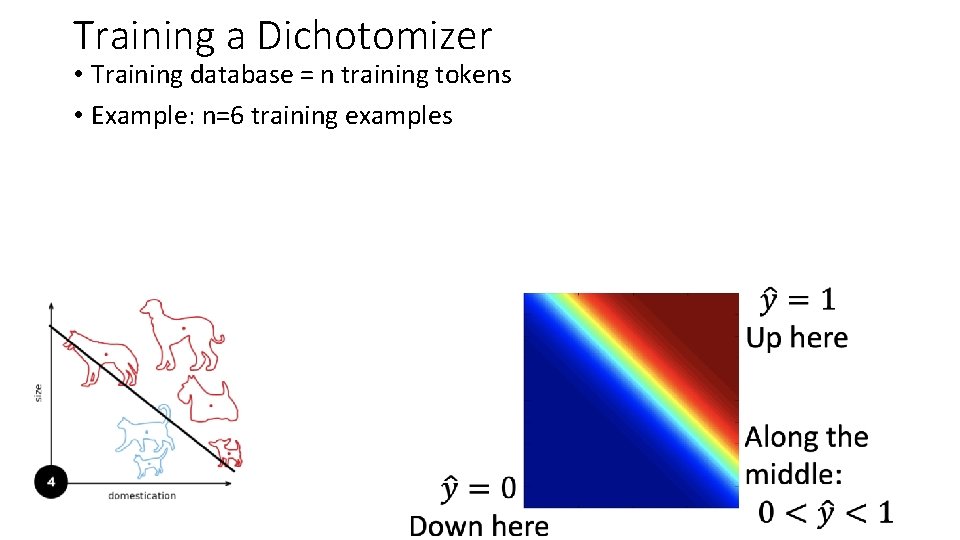

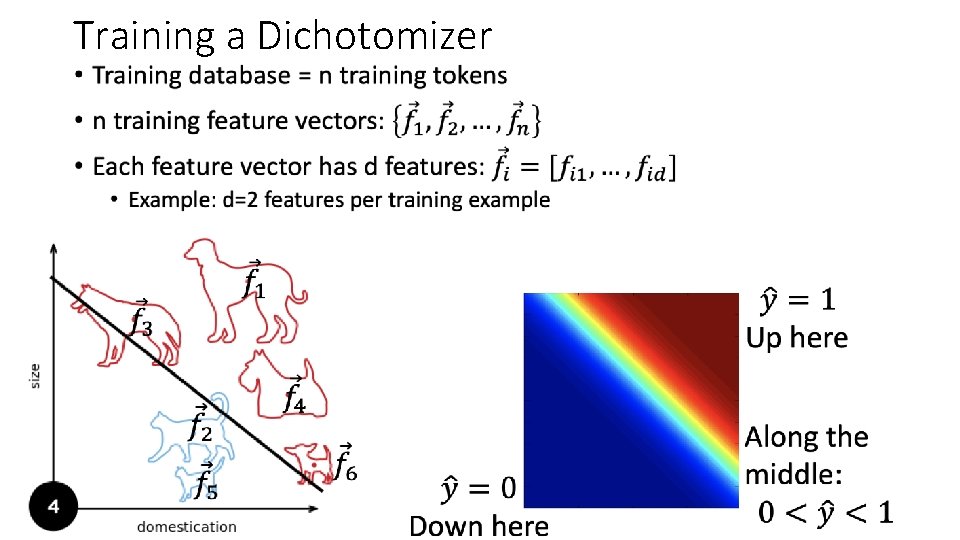

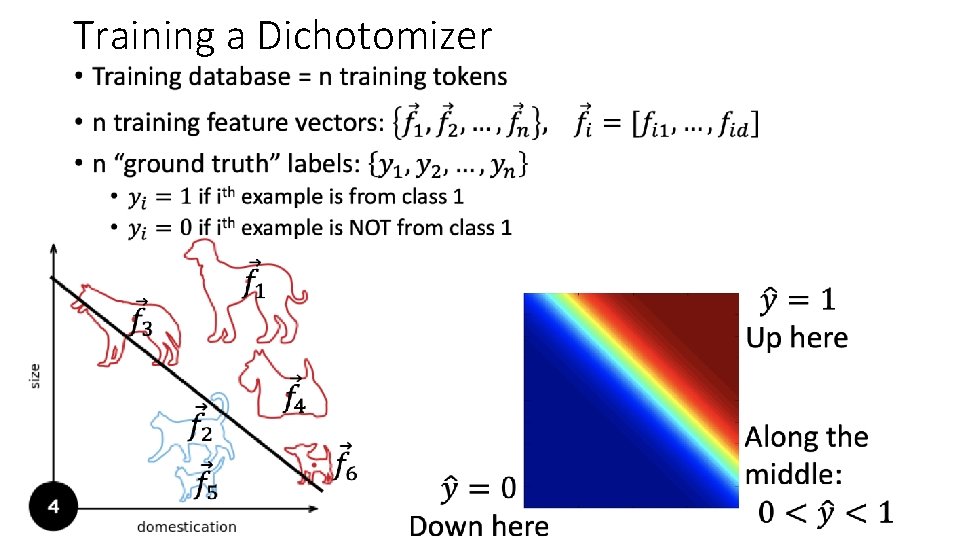

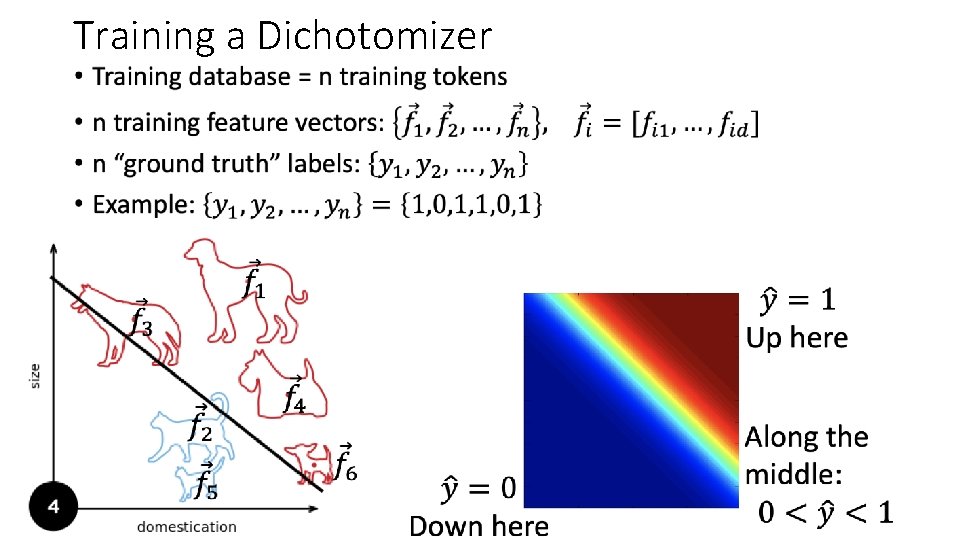

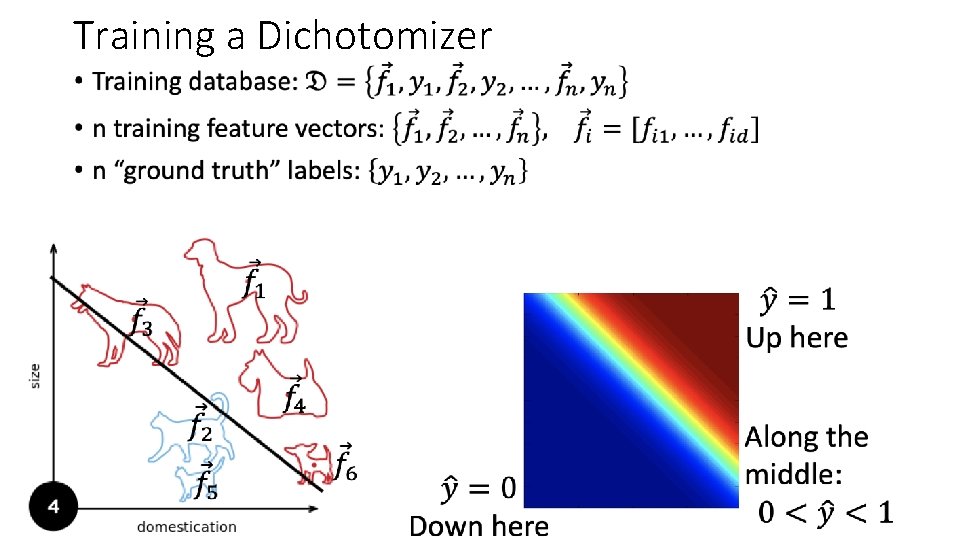

Training a Dichotomizer
- Training database = n training tokens
- Example: n=6 training examples

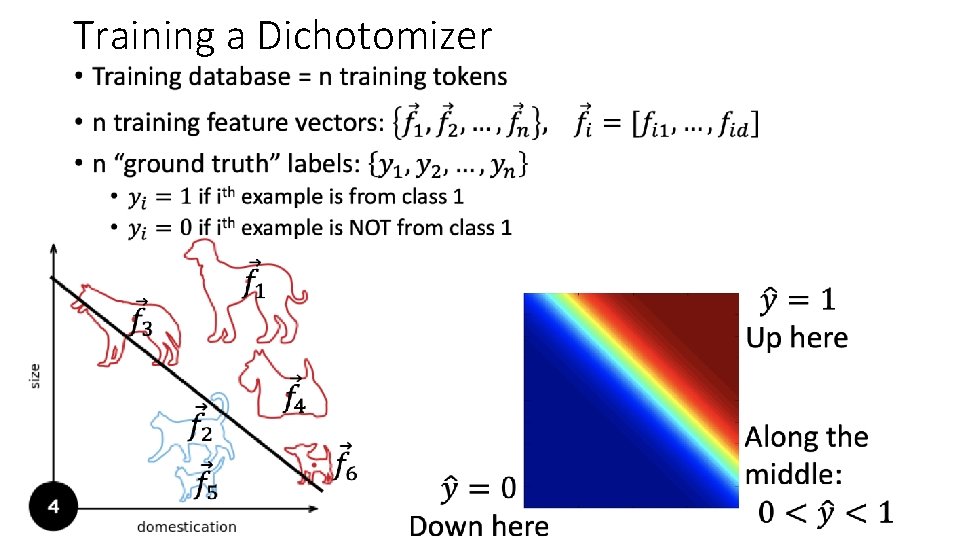

Training a Dichotomizer •

Training a Dichotomizer •

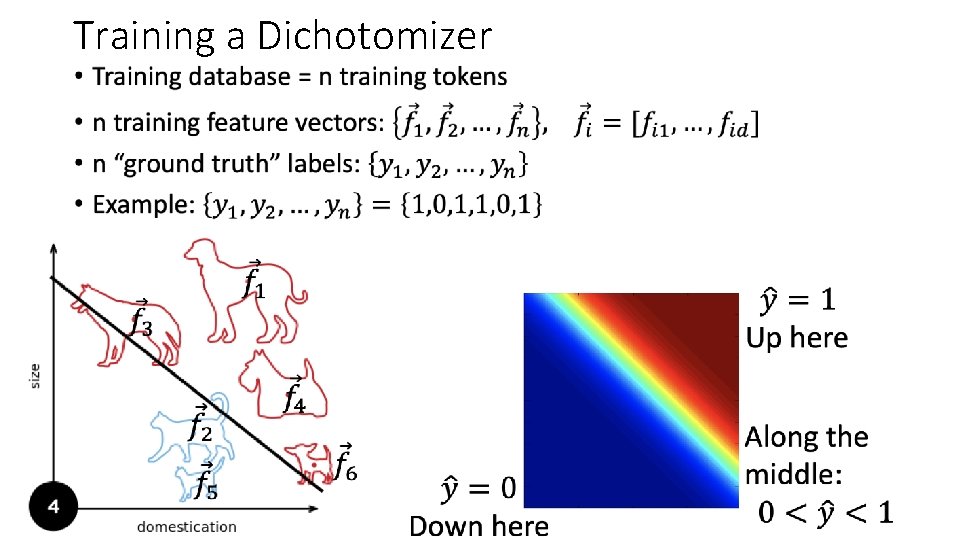

Training a Dichotomizer •

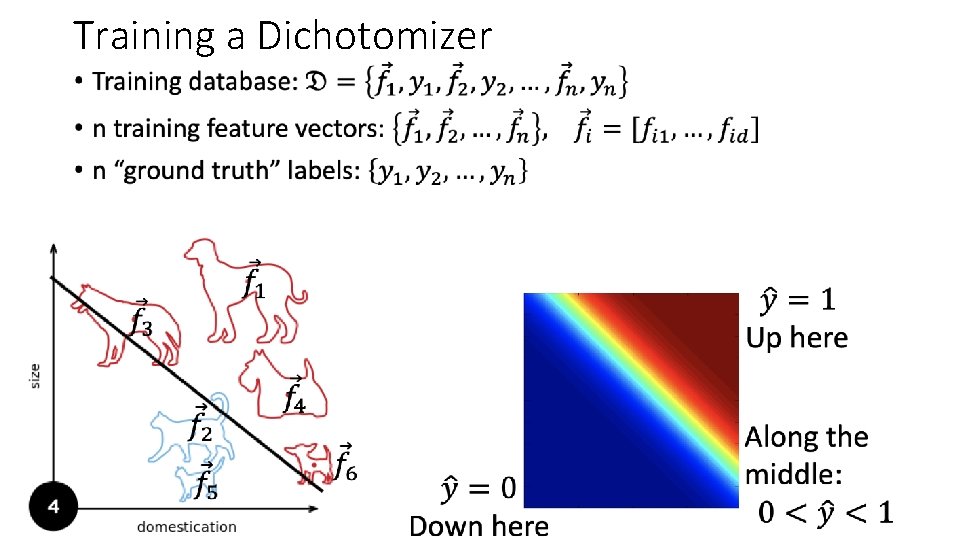

Training a Dichotomizer •
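The bodies of the training slides did not survive extraction, so the following is only a minimal sketch of one common way to train a linear dichotomizer: the classic perceptron rule. The function names `predict` and `train_perceptron`, the learning rate, and the toy database of n = 6 tokens are all assumptions made for illustration, not taken from the slides.

```python
def predict(w, b, x):
    """Linear dichotomizer: +1 on one side of the hyperplane, -1 on the other."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score > 0 else -1


def train_perceptron(data, epochs=100, lr=1.0):
    """data is a list of (x, label) pairs with label in {+1, -1}."""
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, label in data:
            if predict(w, b, x) != label:
                # Move the hyperplane toward the misclassified token.
                w = [wi + lr * label * xi for wi, xi in zip(w, x)]
                b += lr * label
                mistakes += 1
        if mistakes == 0:  # every training token is classified correctly
            break
    return w, b


# Hypothetical, linearly separable database with n = 6 training tokens.
data = [((2.0, 1.0), 1), ((3.0, 2.0), 1), ((2.5, 3.0), 1),
        ((-1.0, -2.0), -1), ((-2.0, -1.0), -1), ((-3.0, -2.5), -1)]
w, b = train_perceptron(data)
```

The loop stops early once a full pass makes no mistakes, which only happens when the training tokens are linearly separable.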

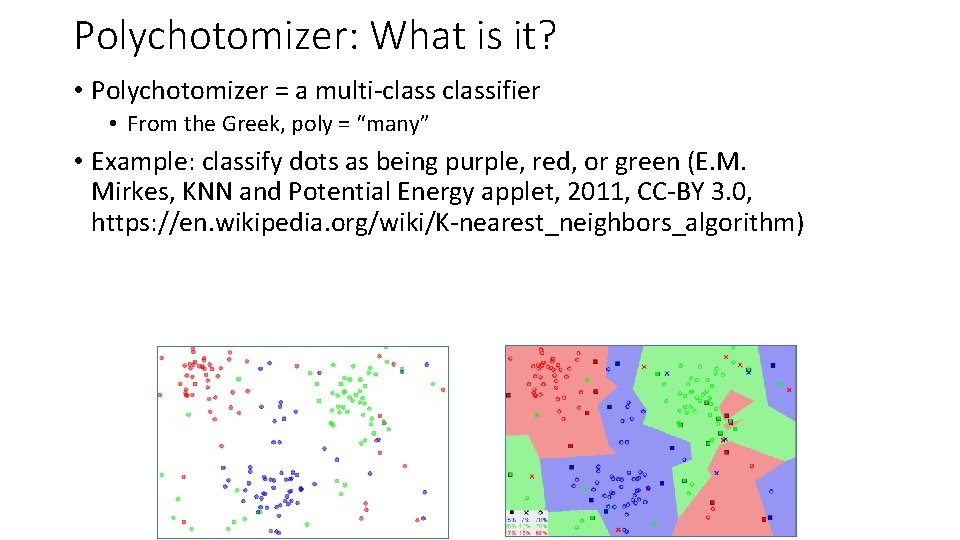

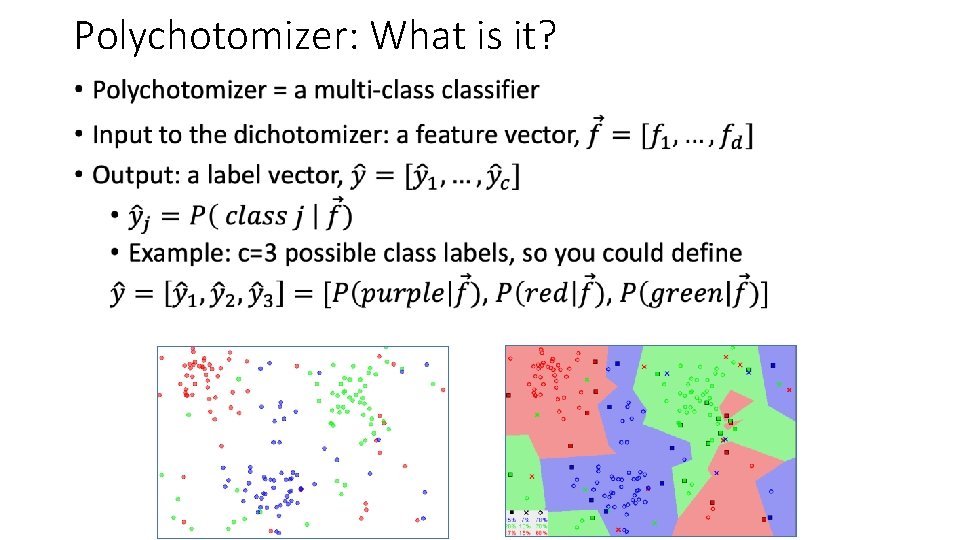

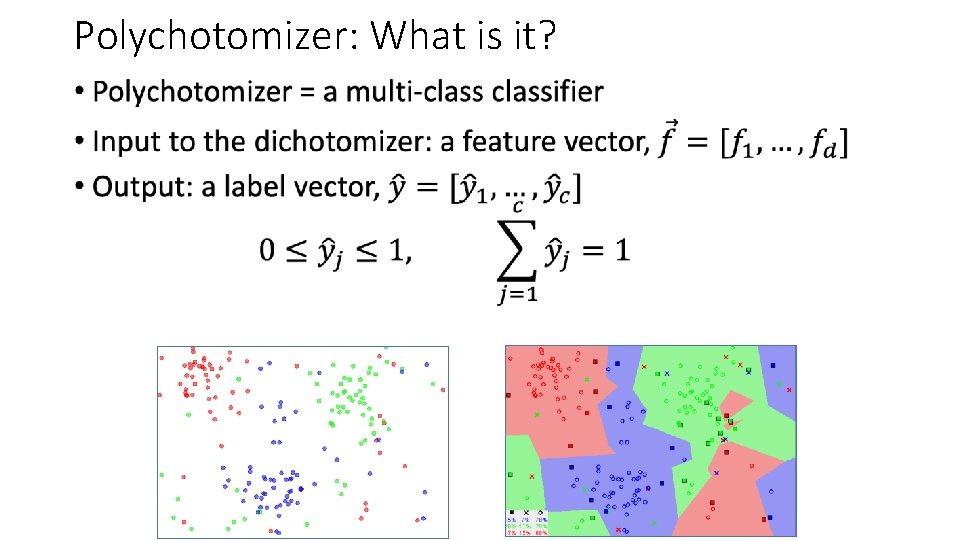

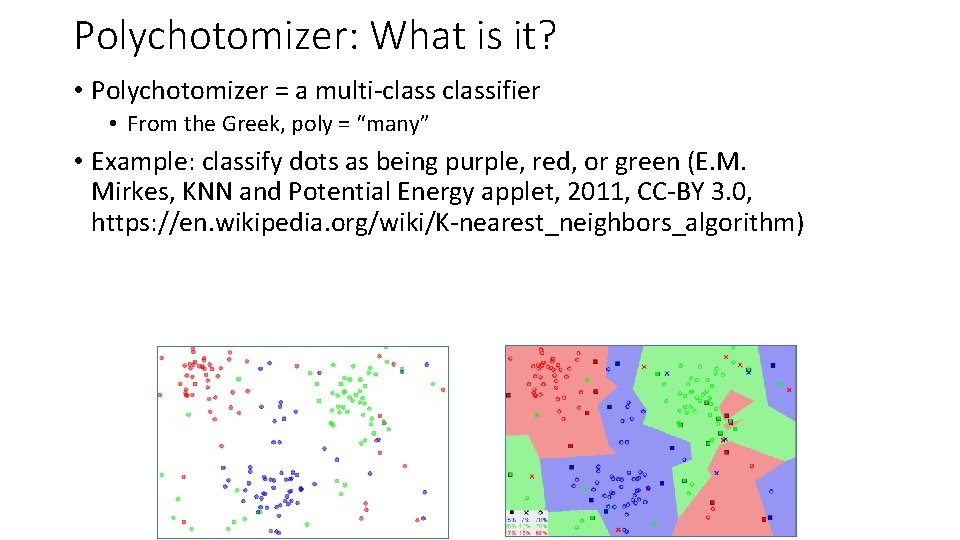

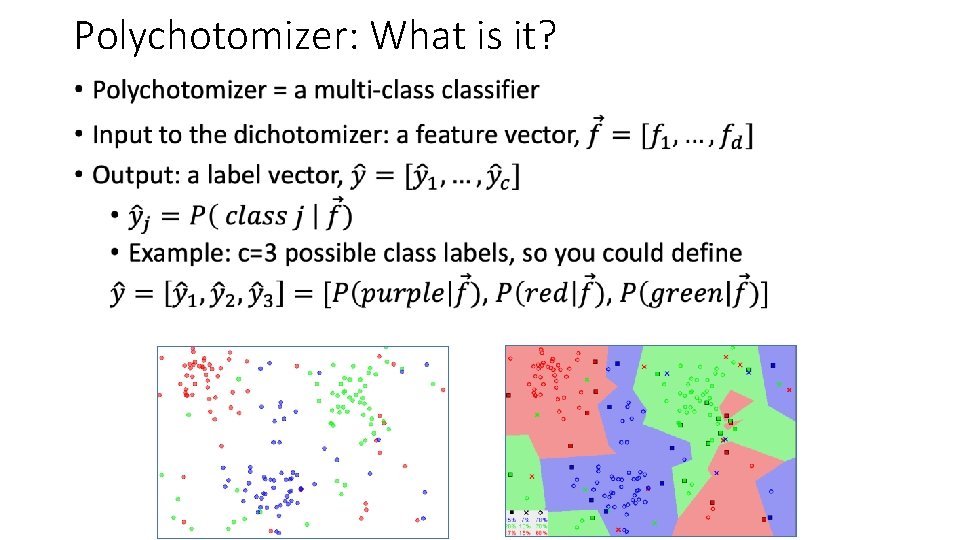

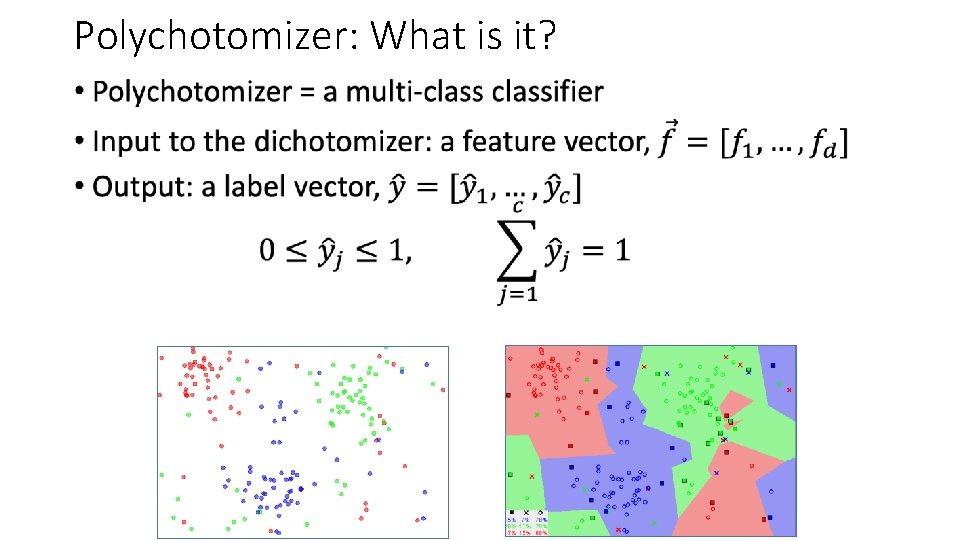

Polychotomizer: What is it?
- Polychotomizer = a multi-classifier
- From the Greek, poly = “many”
- Example: classify dots as being purple, red, or green (E. M. Mirkes, KNN and Potential Energy applet, 2011, CC-BY 3.0, https://en.wikipedia.org/wiki/K-nearest_neighbors_algorithm)

Polychotomizer: What is it? •

Polychotomizer: What is it? •

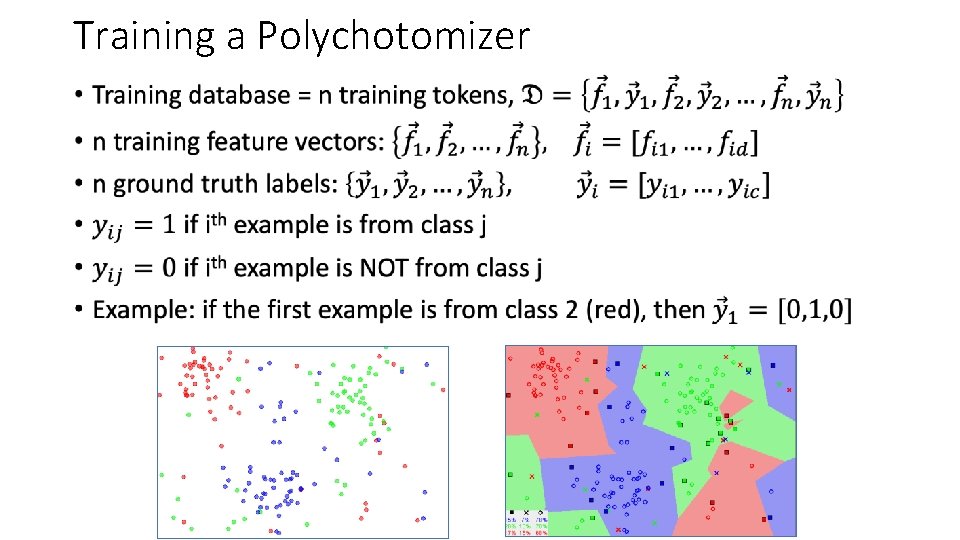

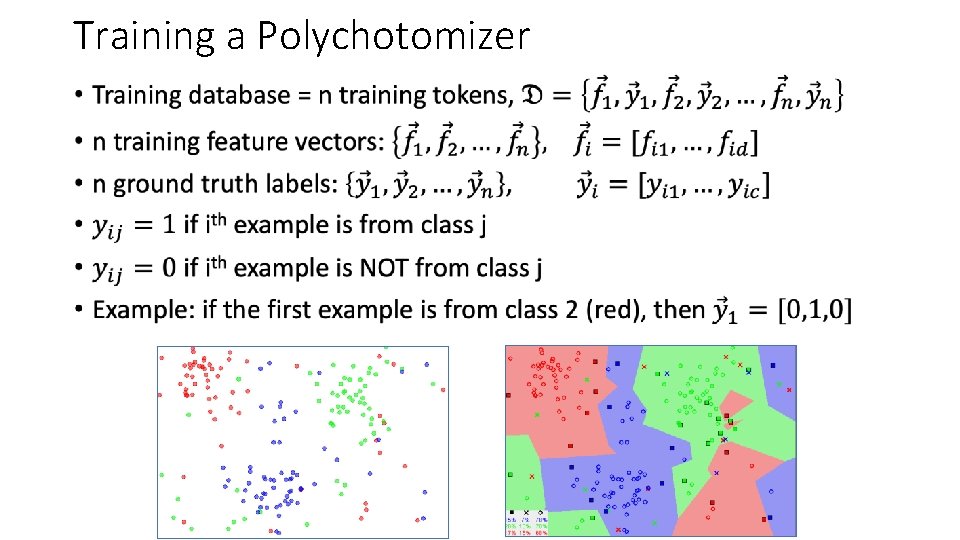

Training a Polychotomizer •

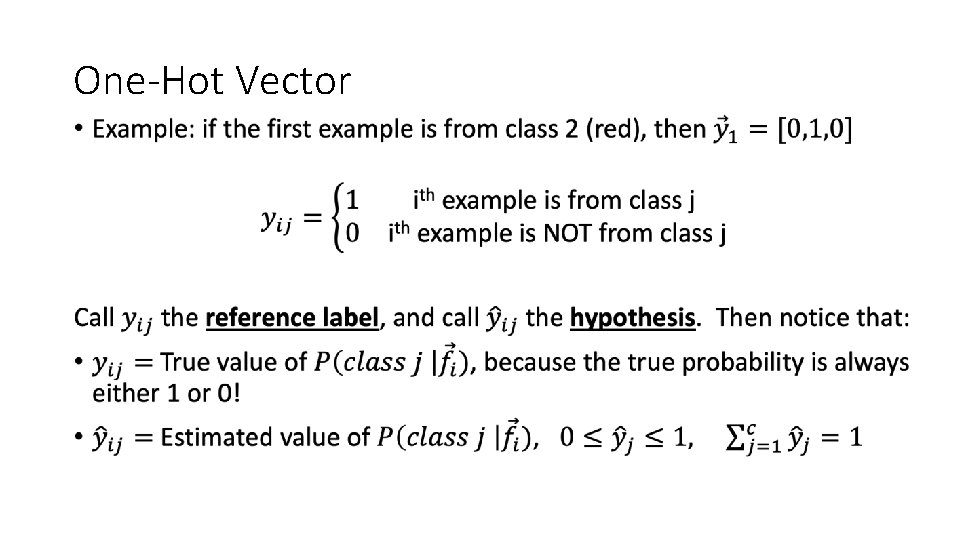

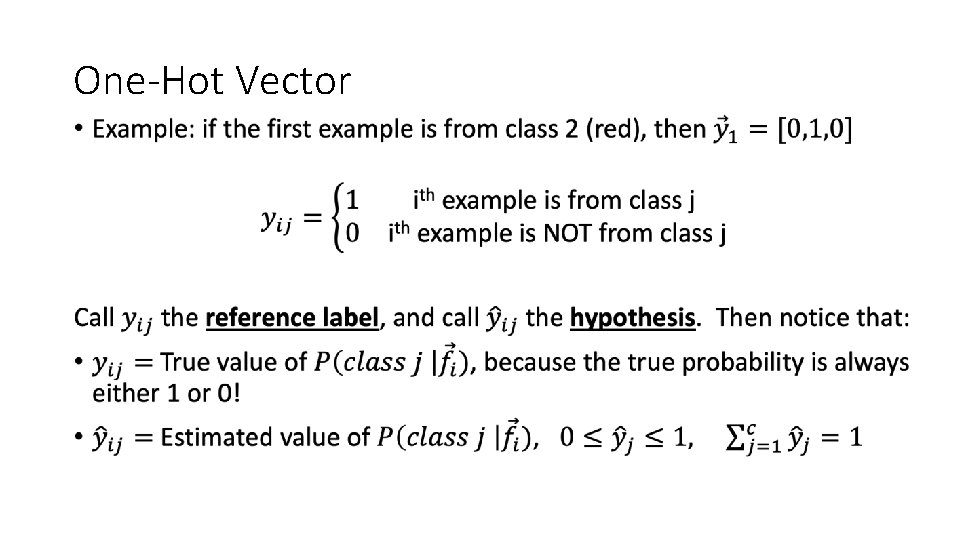

One-Hot Vector •

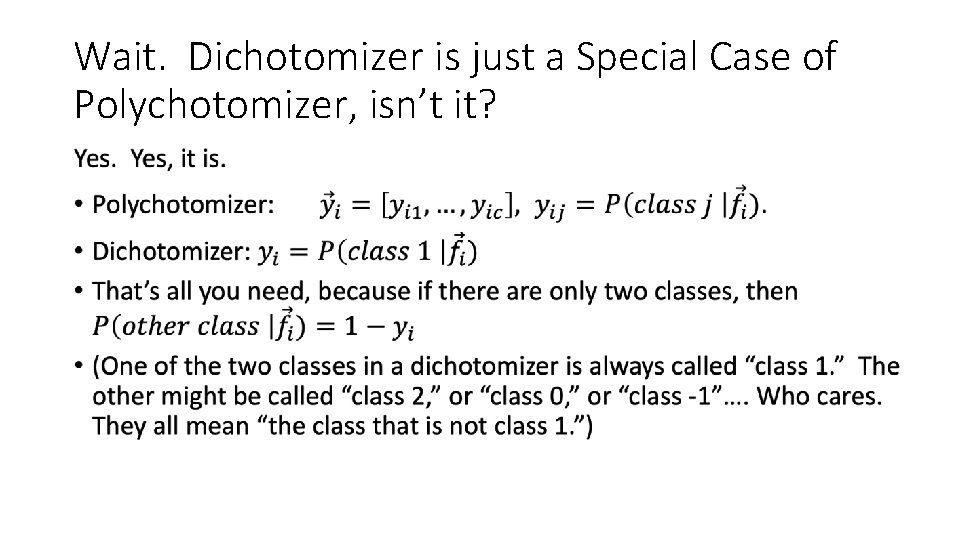

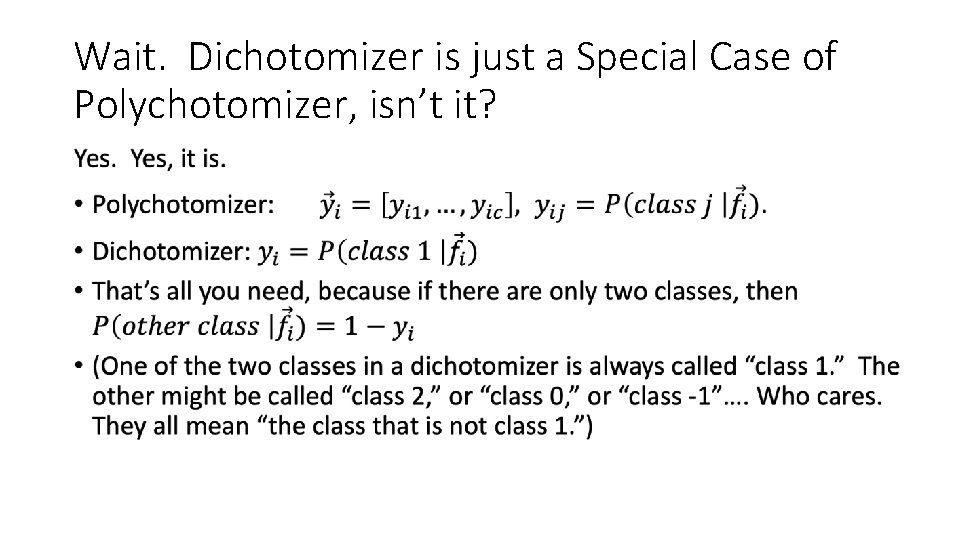
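As a small illustration of a one-hot training target, the sketch below encodes a class label as a vector with a single 1 in the position of the correct class and 0 everywhere else. The helper name `one_hot` and the ordering of the three classes (purple, red, green, borrowed from the earlier polychotomizer example) are assumptions.

```python
def one_hot(label, num_classes):
    """Return a list with 1.0 at index `label` and 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if c == label else 0.0 for c in range(num_classes)]


# Assumed class ordering, for illustration only.
classes = ["purple", "red", "green"]
target = one_hot(classes.index("red"), len(classes))
```

With two classes the same encoding yields a two-element target, which is one way to see the dichotomizer as a special case of the polychotomizer.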

Wait. Dichotomizer is just a Special Case of Polychotomizer, isn’t it? •

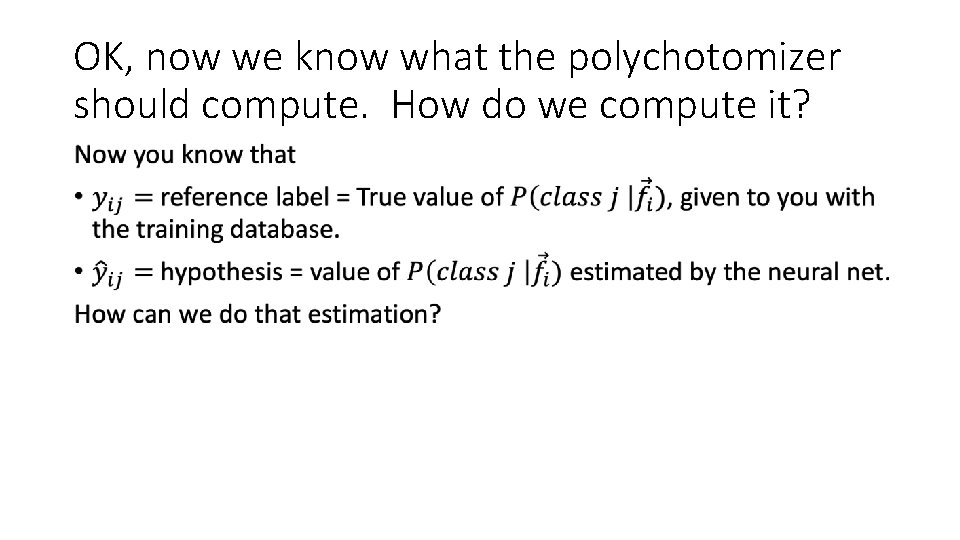

OK, now we know what the polychotomizer should compute. How do we compute it? •

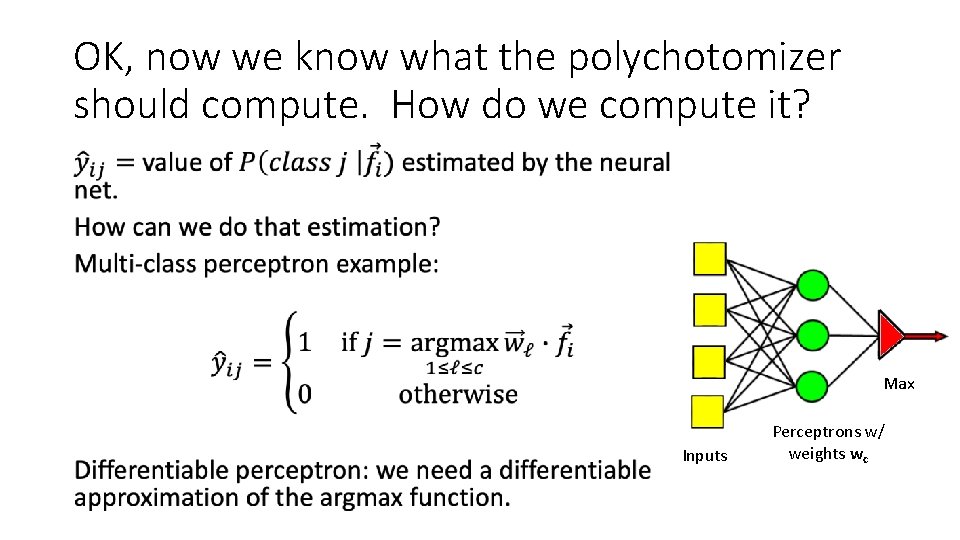

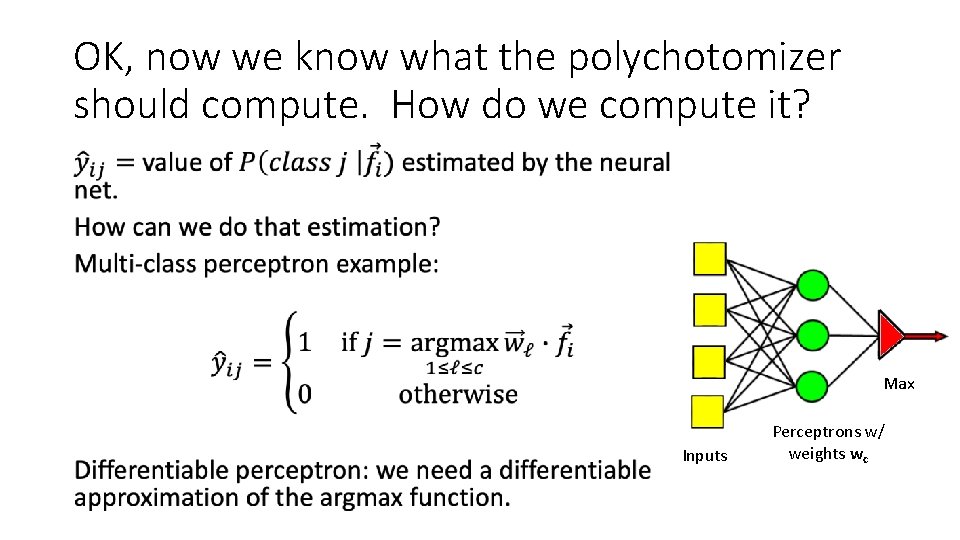

OK, now we know what the polychotomizer should compute. How do we compute it? (Diagram labels: inputs; perceptrons with weights w_c; max.)

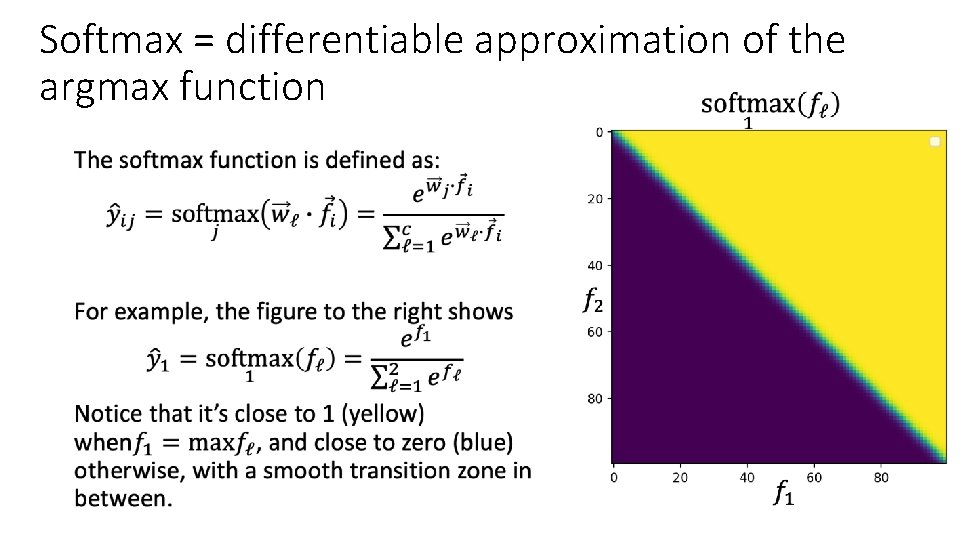

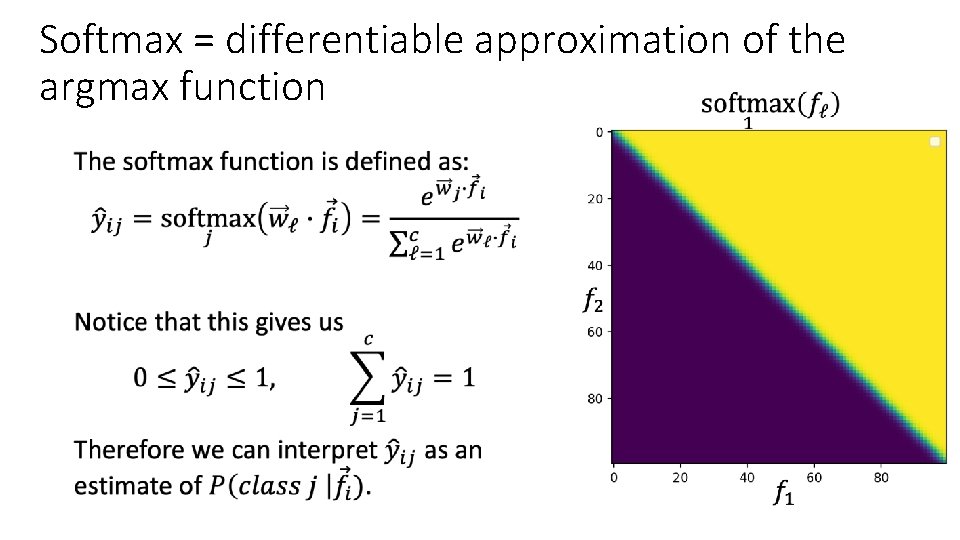

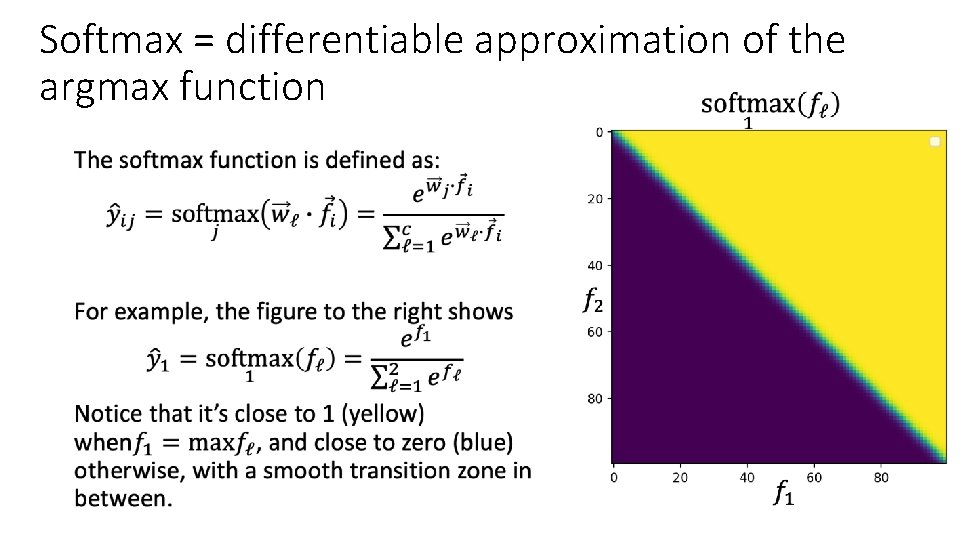

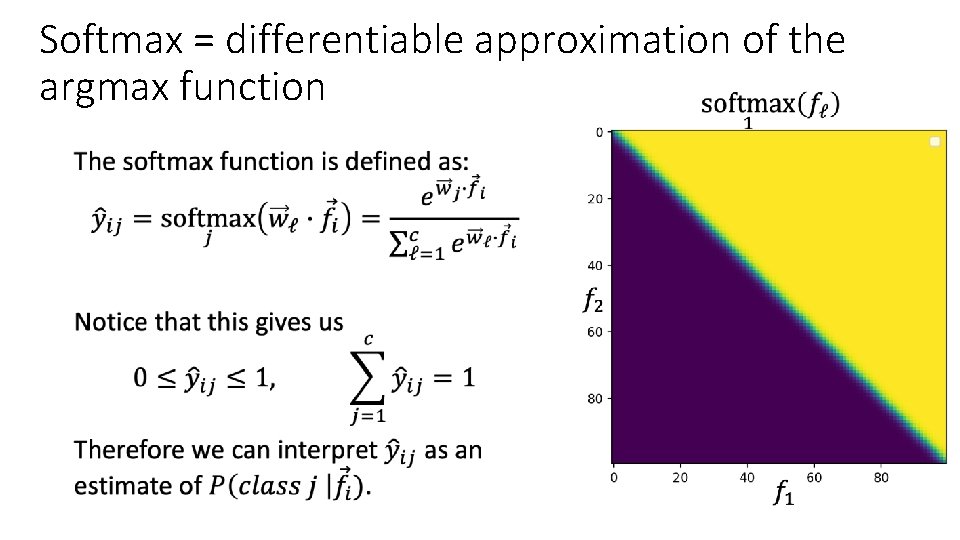

Softmax = differentiable approximation of the argmax function •

Softmax = differentiable approximation of the argmax function •
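To make the "approximate argmax" idea concrete, here is a minimal sketch in plain Python. It computes the standard softmax (exponentiate, then normalize) and compares it with a hard one-hot argmax. The names `softmax` and `argmax_one_hot`, the example scores, and the scaling factor 50 are assumptions chosen for illustration.

```python
import math


def softmax(z):
    """Exponentiate and normalize; subtracting max(z) avoids overflow."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def argmax_one_hot(z):
    """Hard argmax, written as a one-hot vector (not differentiable)."""
    best = max(range(len(z)), key=lambda i: z[i])
    return [1.0 if i == best else 0.0 for i in range(len(z))]


z = [1.0, 2.0, 0.5]                      # hypothetical class scores
soft = softmax(z)                        # smooth: every class gets some probability
sharp = softmax([50.0 * v for v in z])   # scaled scores: nearly one-hot
hard = argmax_one_hot(z)                 # the limit the softmax approaches
```

Scaling the scores up pushes the softmax output toward the one-hot argmax, while the unscaled output stays smooth, which is what makes gradient-based training possible.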

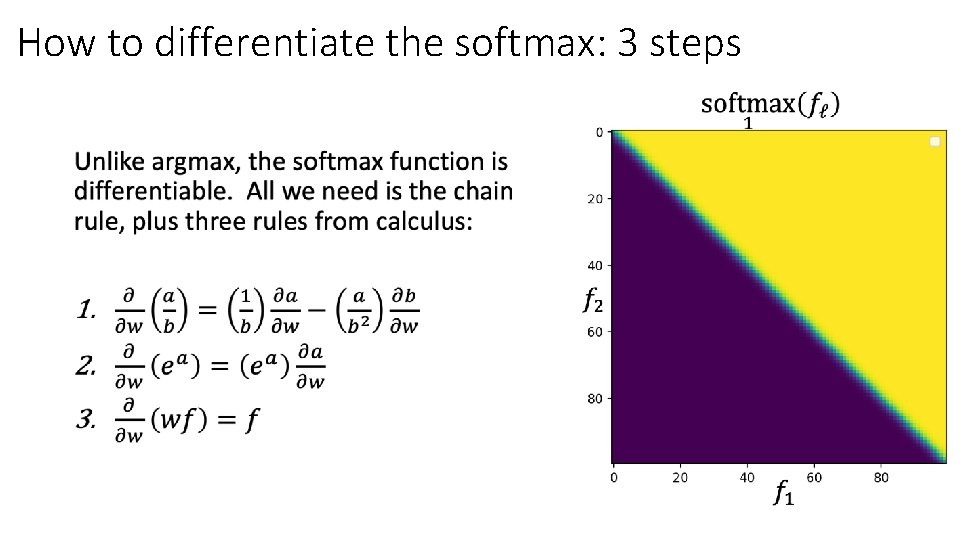

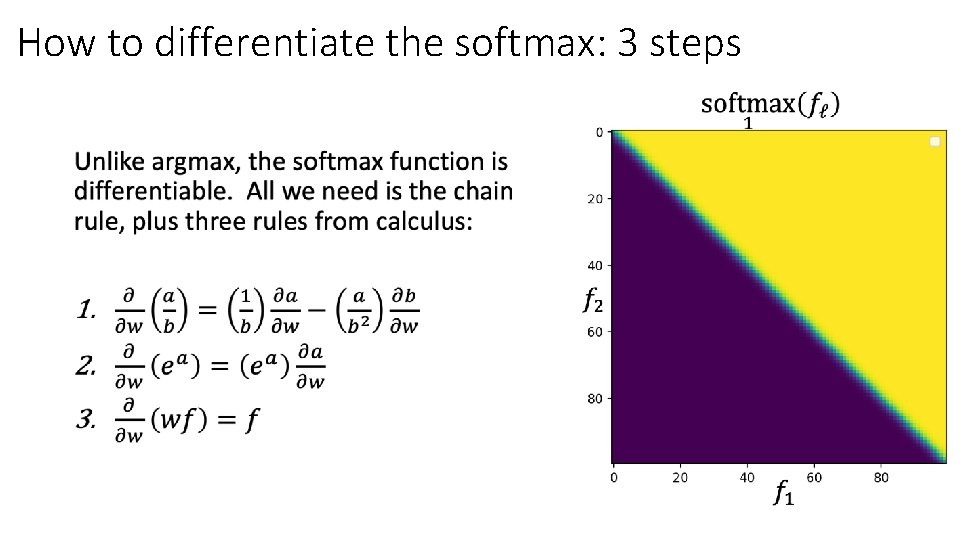

How to differentiate the softmax: 3 steps •

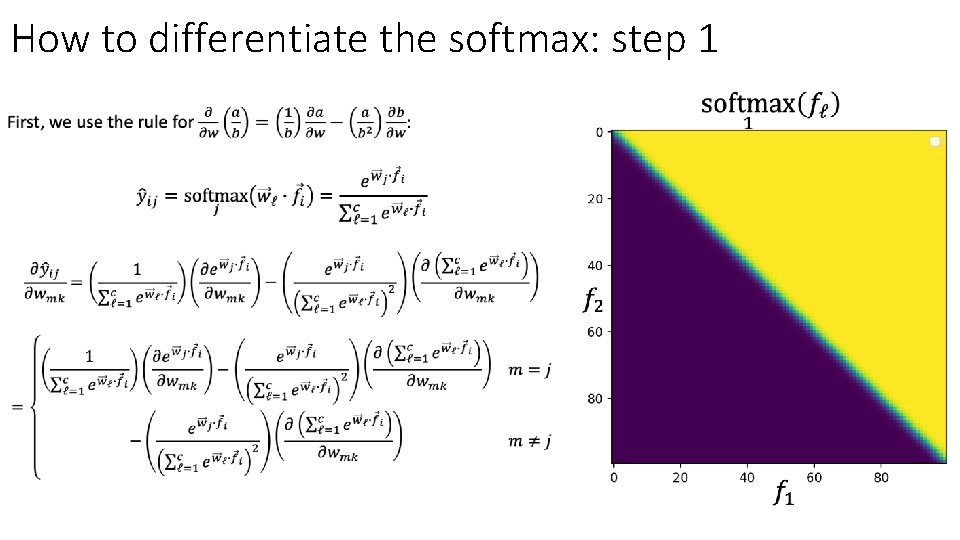

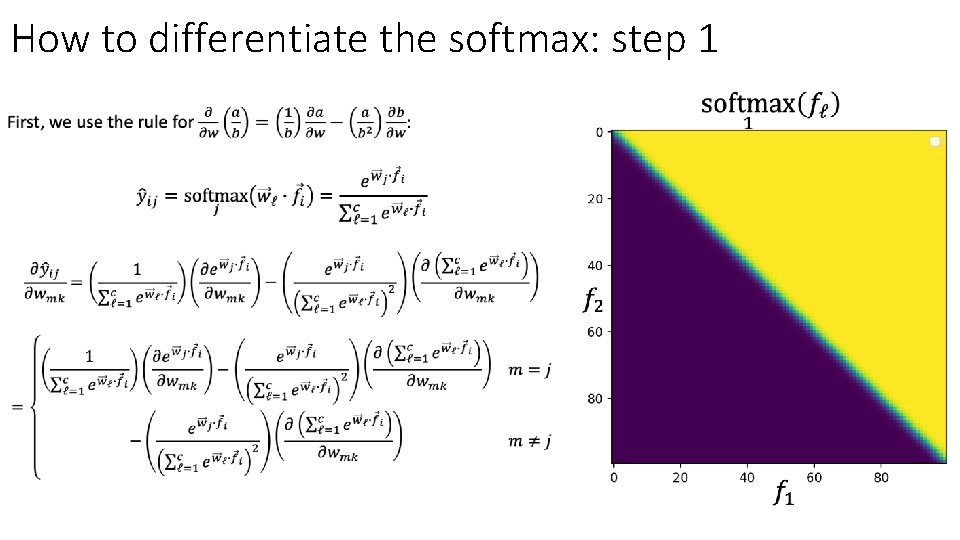

How to differentiate the softmax: step 1 •

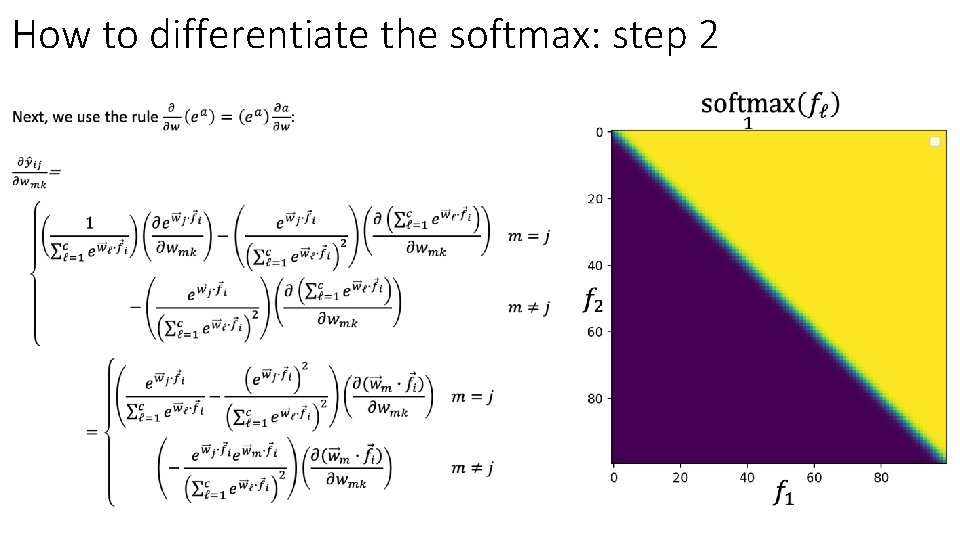

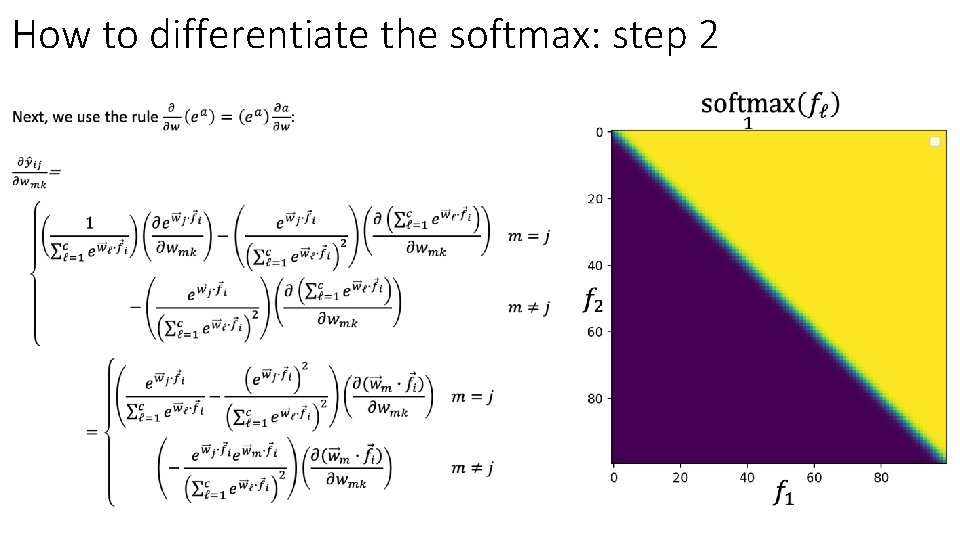

How to differentiate the softmax: step 2 •

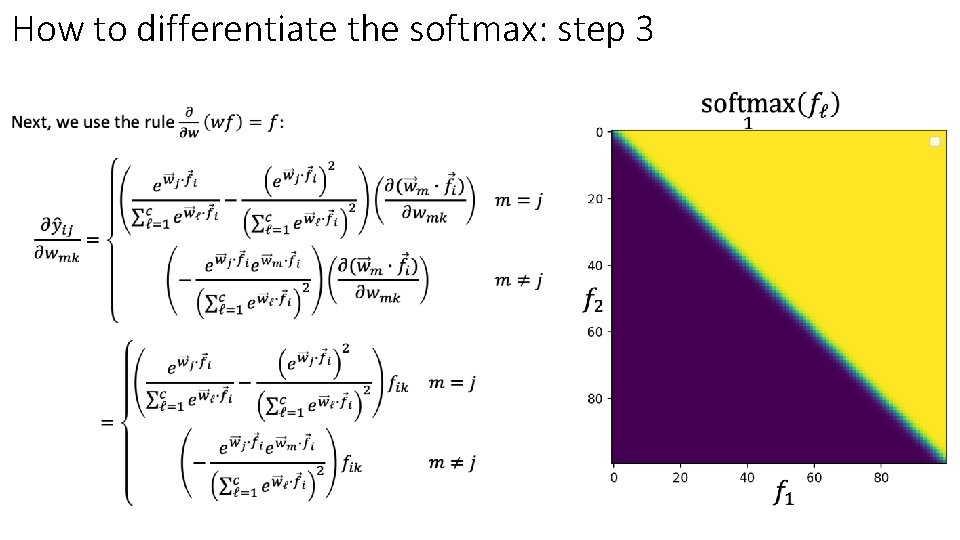

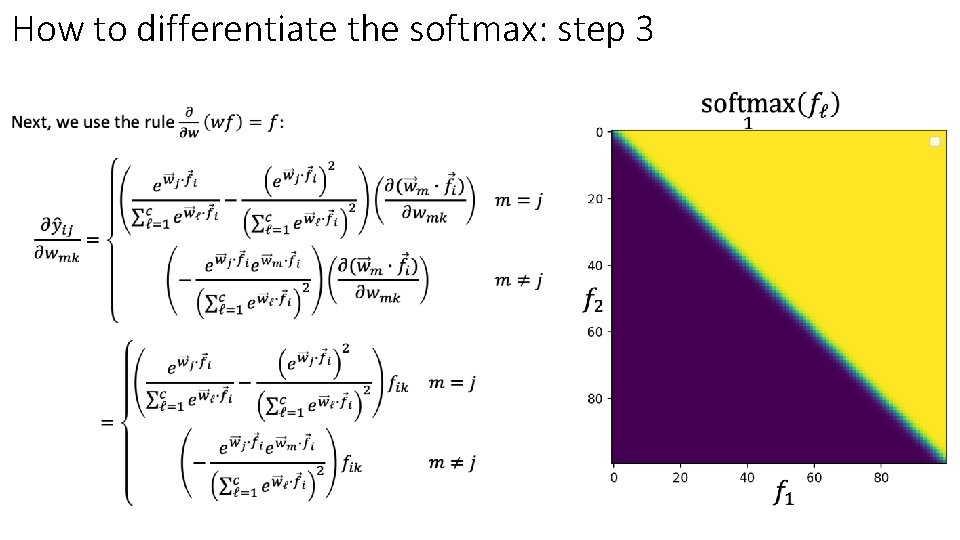

How to differentiate the softmax: step 3 •

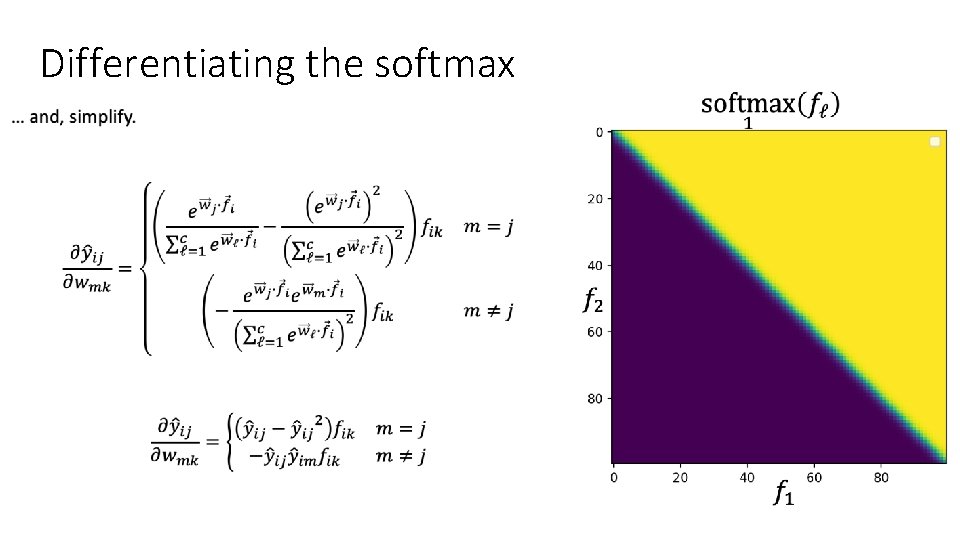

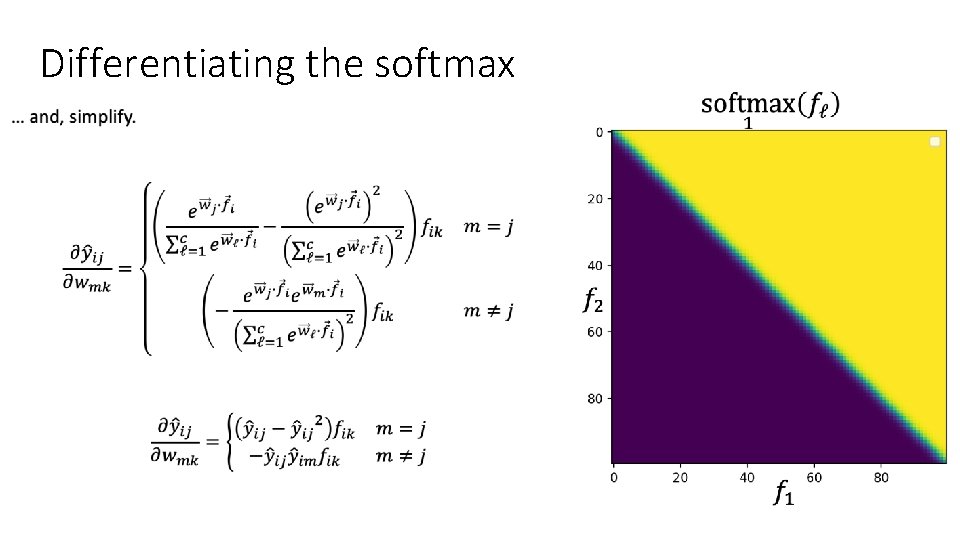

Differentiating the softmax •
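The slide equations did not survive extraction, so the following is a sketch under assumed notation: $z_j$ is the input to the softmax for class $j$, $y_i$ is its output for class $i$, and $\delta_{ij}$ is 1 when $i = j$ and 0 otherwise. The quotient-rule route shown here may not match the three steps used on the slides, but the final result is the standard softmax derivative.

$$
y_i = \frac{e^{z_i}}{\sum_{k} e^{z_k}}, \qquad
\frac{\partial y_i}{\partial z_j}
= \frac{\delta_{ij}\, e^{z_i} \sum_{k} e^{z_k} - e^{z_i} e^{z_j}}{\left(\sum_{k} e^{z_k}\right)^{2}}
= y_i \left(\delta_{ij} - y_j\right)
$$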

Recap: how to differentiate the softmax •
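One way to sanity-check a softmax derivative is to compare the analytic form with finite differences. The sketch below assumes the standard result, that the derivative of output i with respect to input j is y_i (δ_ij − y_j); the function names and the test point are hypothetical.

```python
import math


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_jacobian(z):
    """Analytic Jacobian: J[i][j] = y_i * (delta_ij - y_j)."""
    y = softmax(z)
    n = len(z)
    return [[y[i] * ((1.0 if i == j else 0.0) - y[j]) for j in range(n)]
            for i in range(n)]


def numeric_jacobian(z, eps=1e-6):
    """Central finite differences, one input coordinate at a time."""
    n = len(z)
    jac = [[0.0] * n for _ in range(n)]
    for j in range(n):
        z_plus, z_minus = list(z), list(z)
        z_plus[j] += eps
        z_minus[j] -= eps
        y_plus, y_minus = softmax(z_plus), softmax(z_minus)
        for i in range(n):
            jac[i][j] = (y_plus[i] - y_minus[i]) / (2.0 * eps)
    return jac


z = [0.2, -1.0, 0.7]  # arbitrary test point
analytic = softmax_jacobian(z)
numeric = numeric_jacobian(z)
max_err = max(abs(analytic[i][j] - numeric[i][j])
              for i in range(3) for j in range(3))
```

Each column of the Jacobian sums to zero, because the softmax outputs always sum to one no matter how a single input changes.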

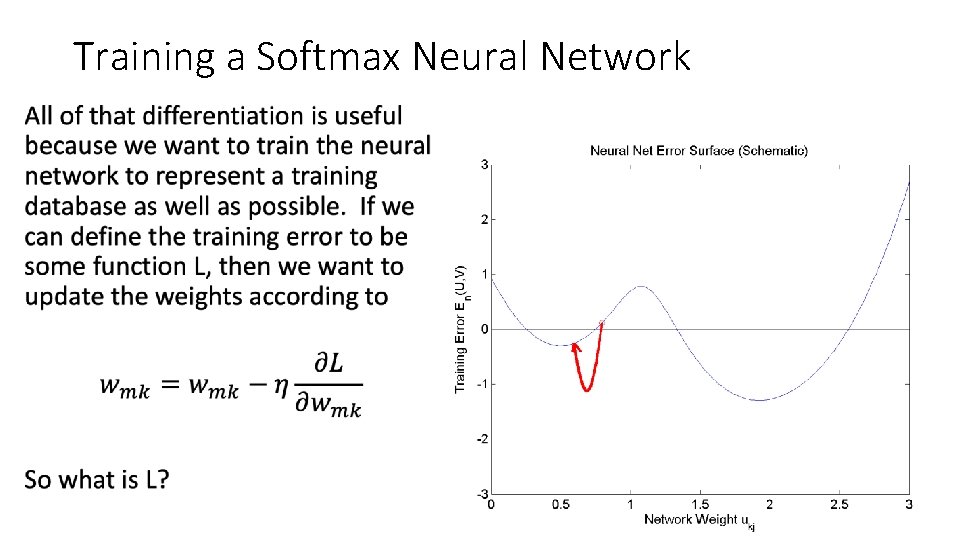

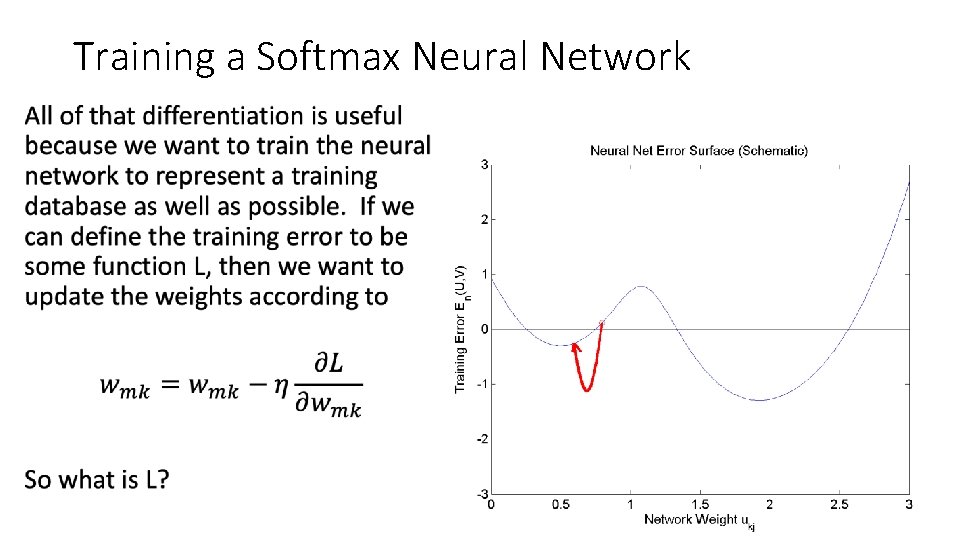

Training a Softmax Neural Network •

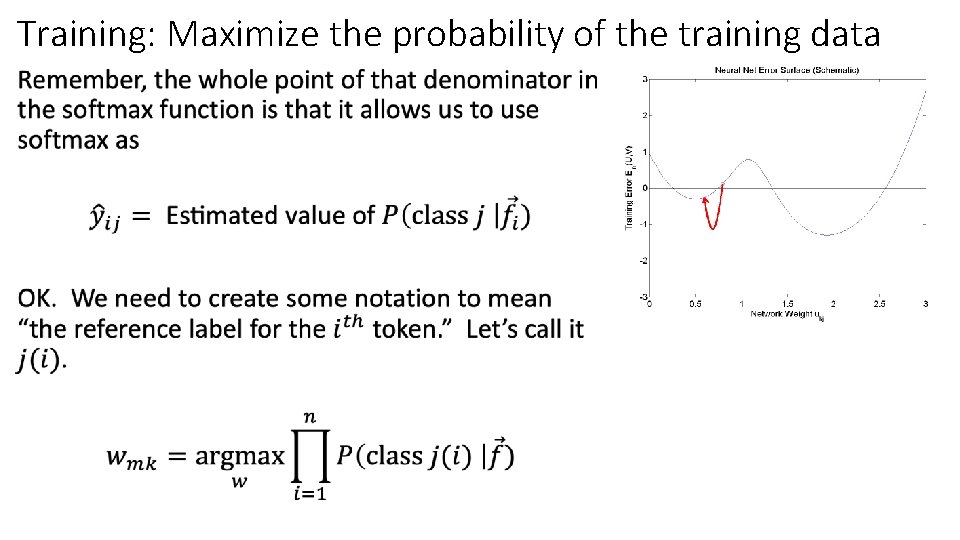

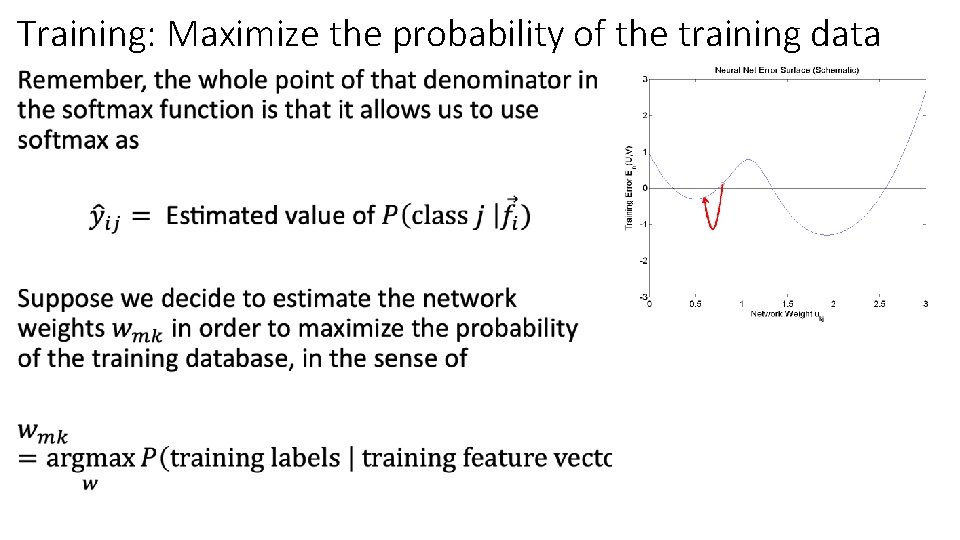

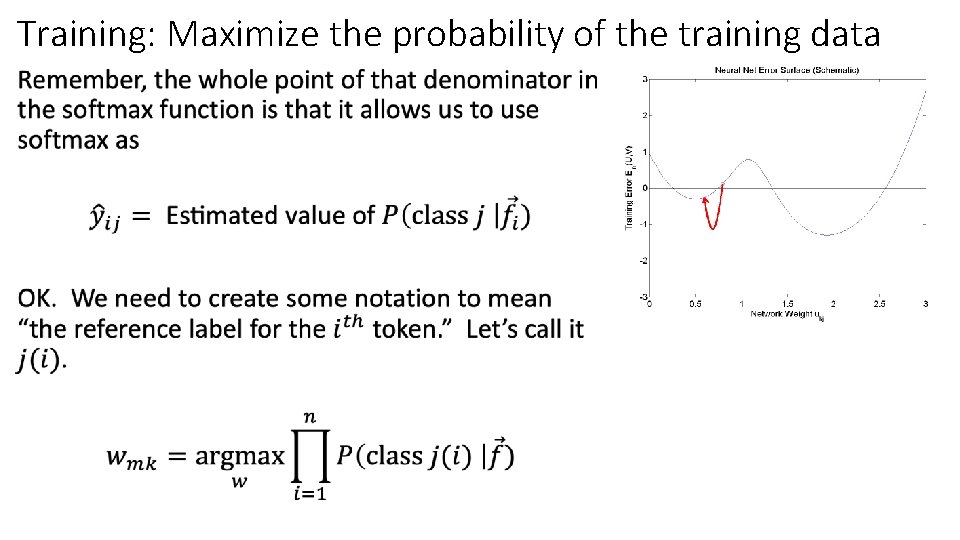

Training: Maximize the probability of the training data •

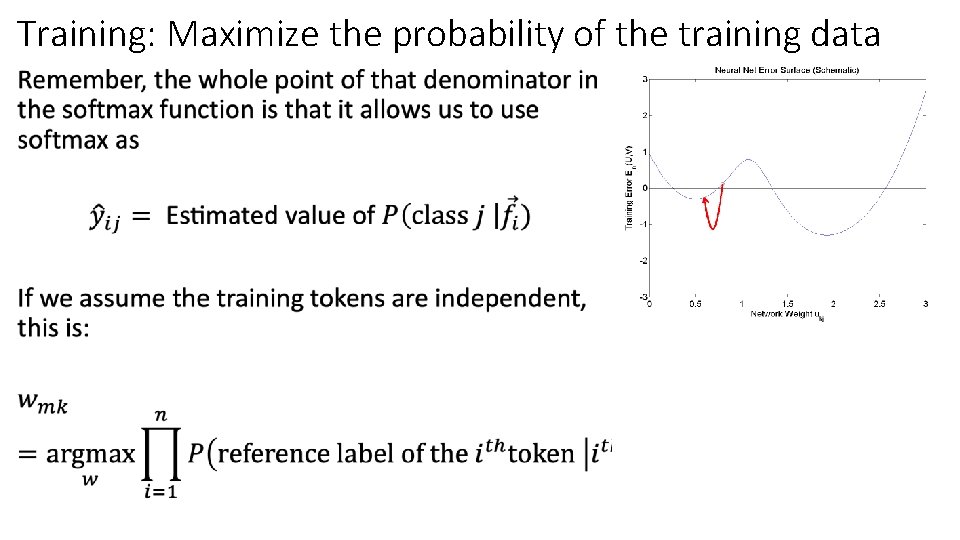

Training: Maximize the probability of the training data •

Training: Maximize the probability of the training data •

Training: Maximize the probability of the training data •

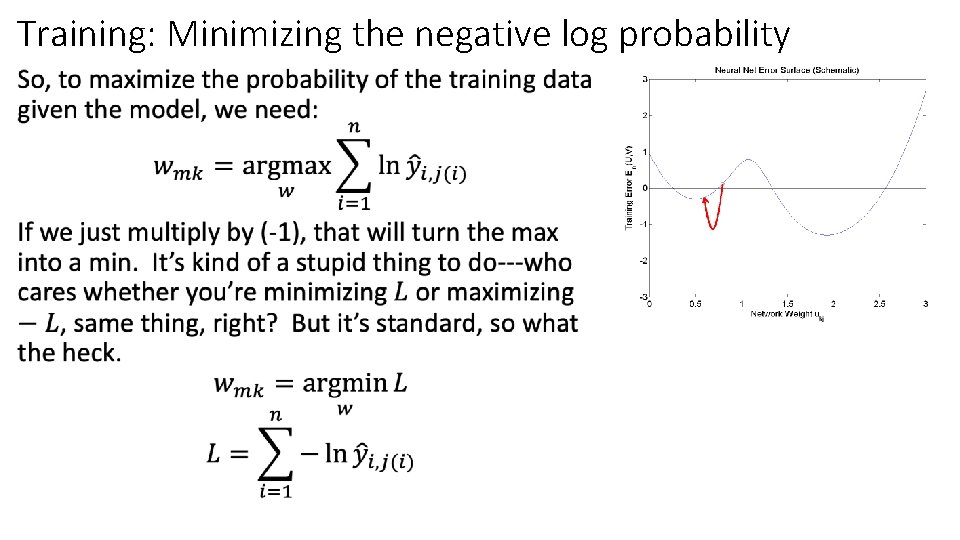

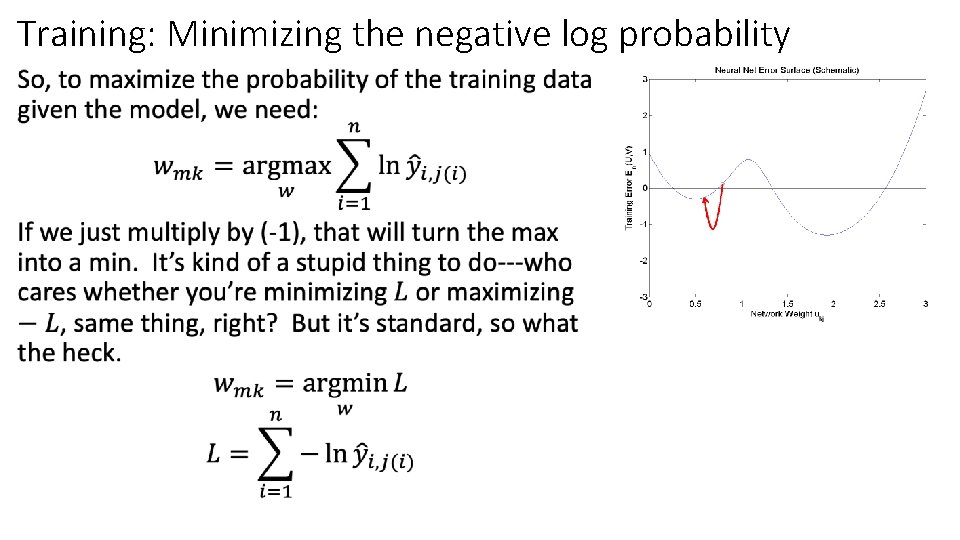

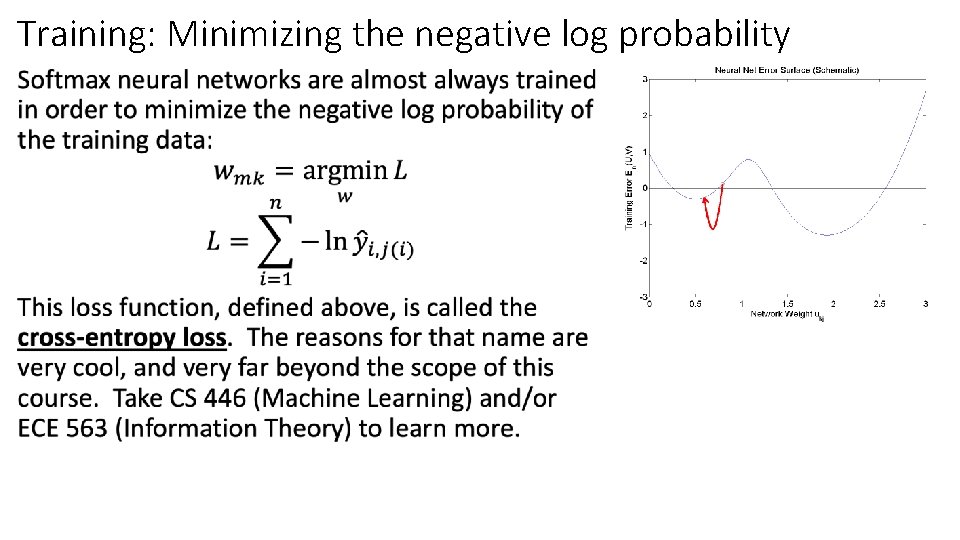

Training: Minimizing the negative log probability •

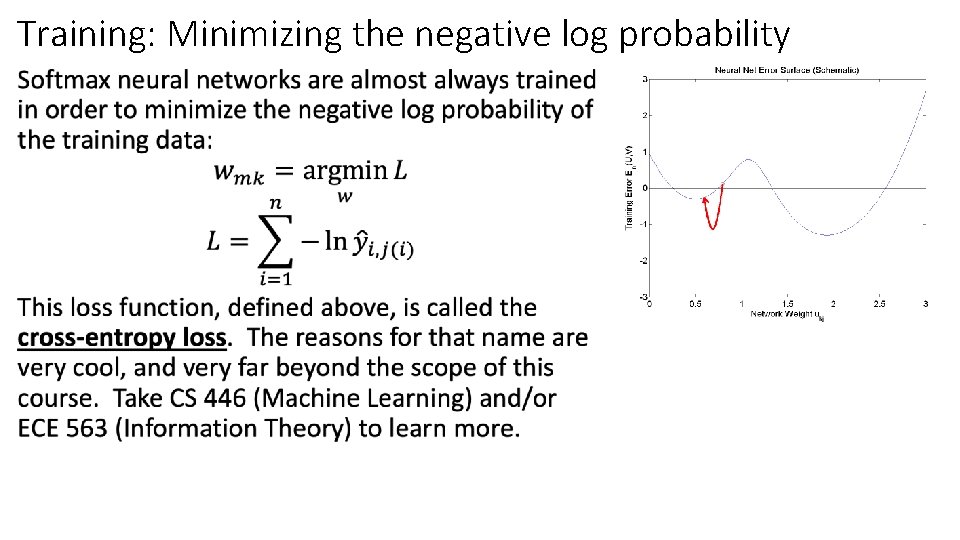

Training: Minimizing the negative log probability •
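The outline describes cross-entropy as the negative log probability of the training labels. A minimal sketch of that quantity, assuming one-hot targets and rows of class probabilities (the function name `cross_entropy` and the numbers are made up for illustration):

```python
import math


def cross_entropy(targets, outputs):
    """Negative log probability of the labels.

    targets: one-hot rows; outputs: rows of class probabilities.
    Only the probability assigned to the correct class contributes.
    """
    return -sum(t * math.log(y)
                for t_row, y_row in zip(targets, outputs)
                for t, y in zip(t_row, y_row)
                if t > 0)


# Two hypothetical training tokens with three classes each.
targets = [[1, 0, 0], [0, 1, 0]]
outputs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
loss = cross_entropy(targets, outputs)

# The probability of the whole labelled set is the product 0.7 * 0.8,
# so maximizing it is the same as minimizing its negative log.
expected = -math.log(0.7 * 0.8)
```

Taking the log turns the product of per-token probabilities into a sum, which is easier to differentiate and avoids numerical underflow on large databases.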

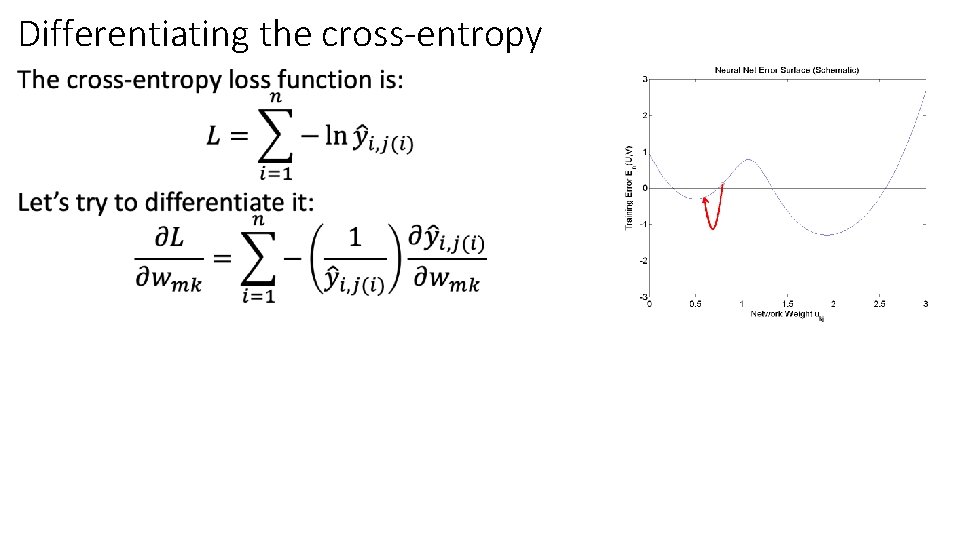

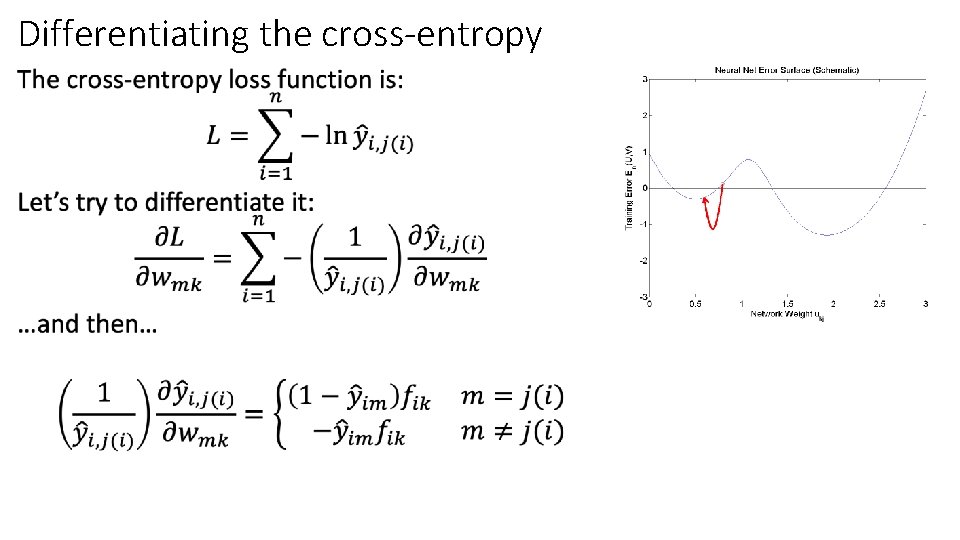

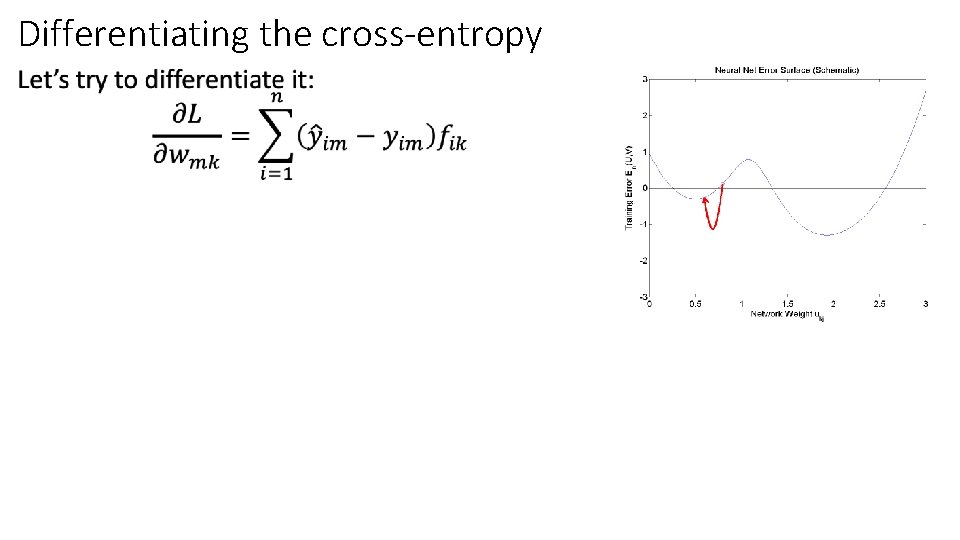

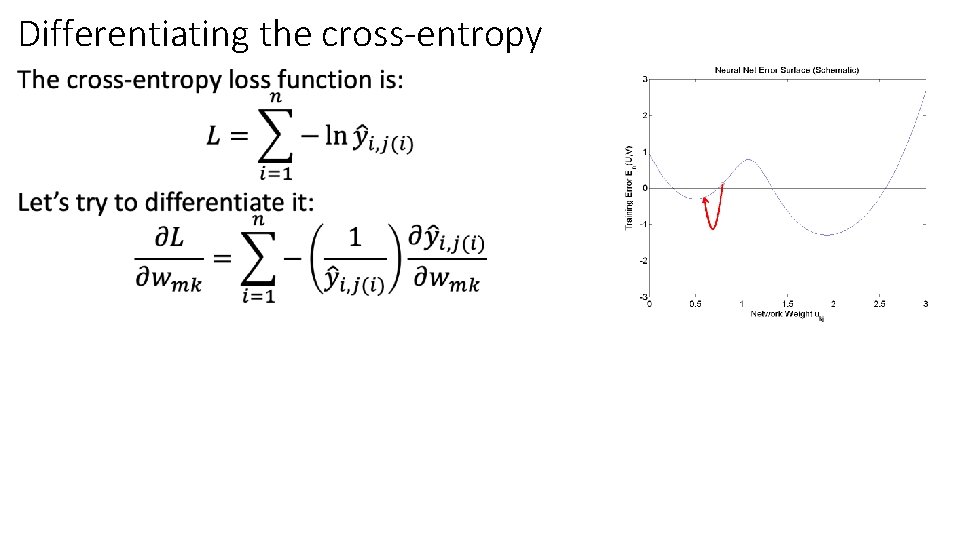

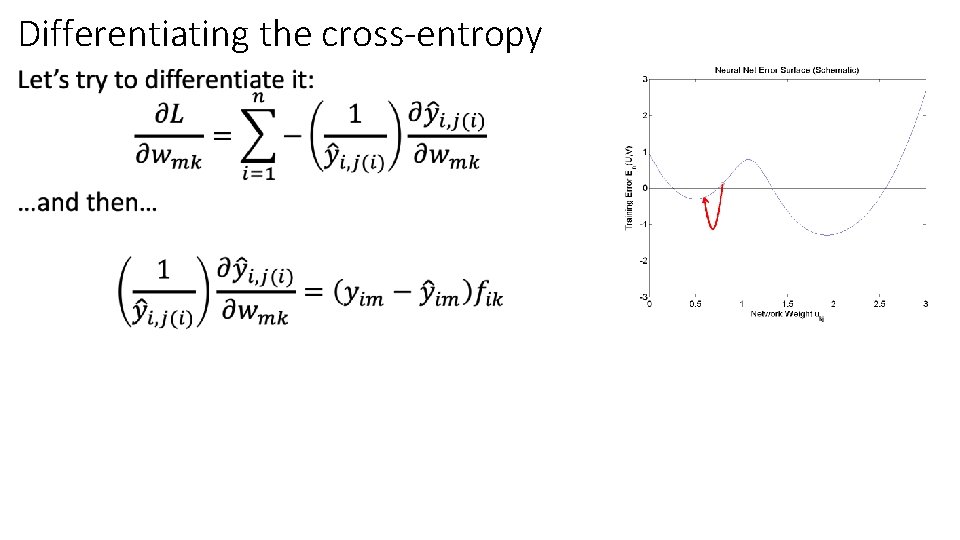

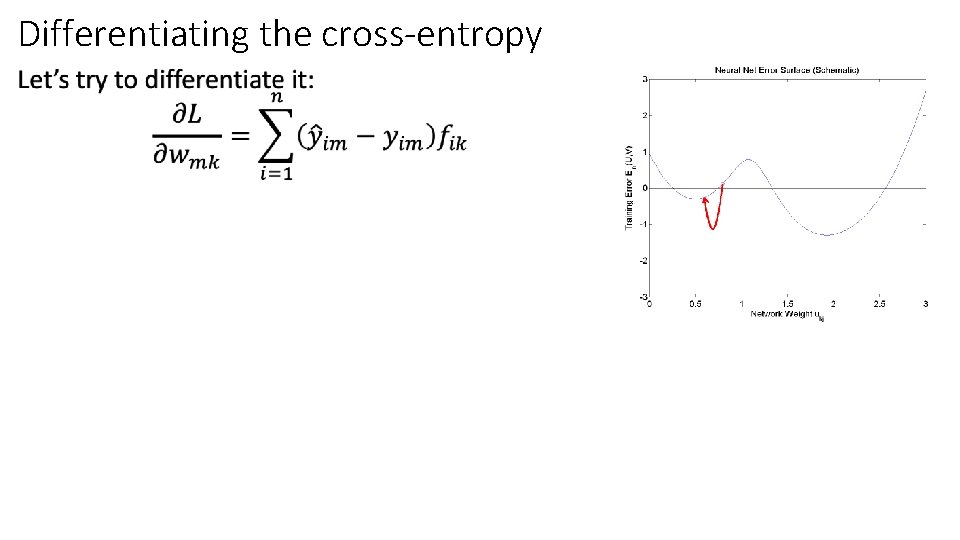

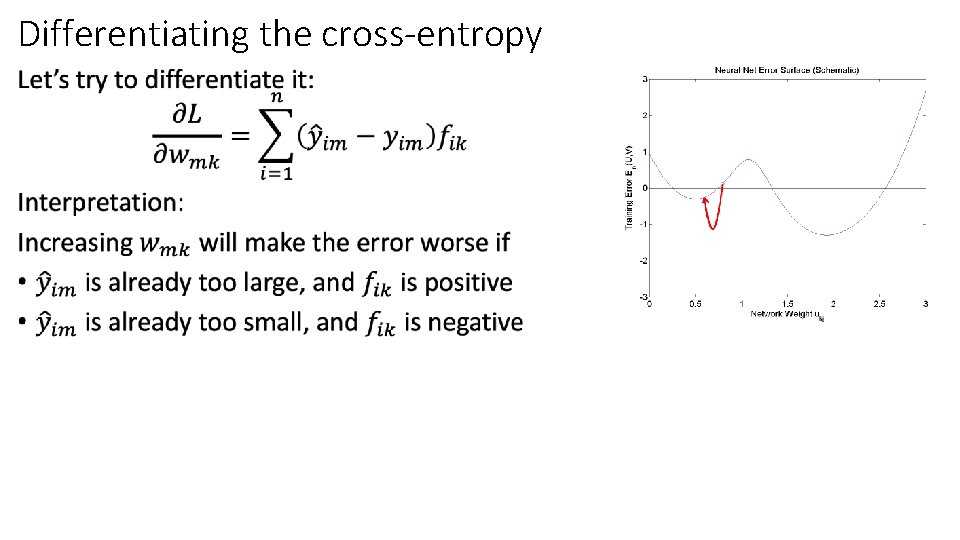

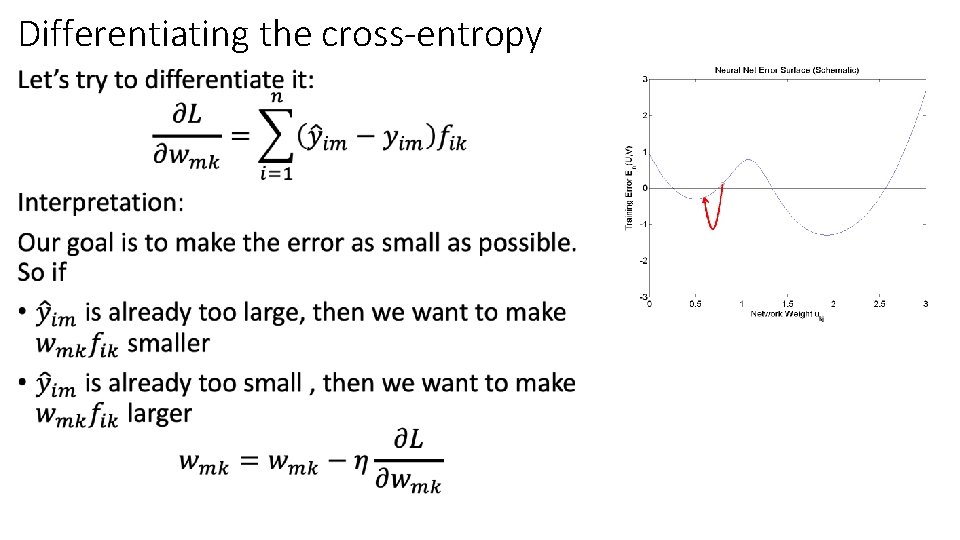

Differentiating the cross-entropy •

Differentiating the cross-entropy •

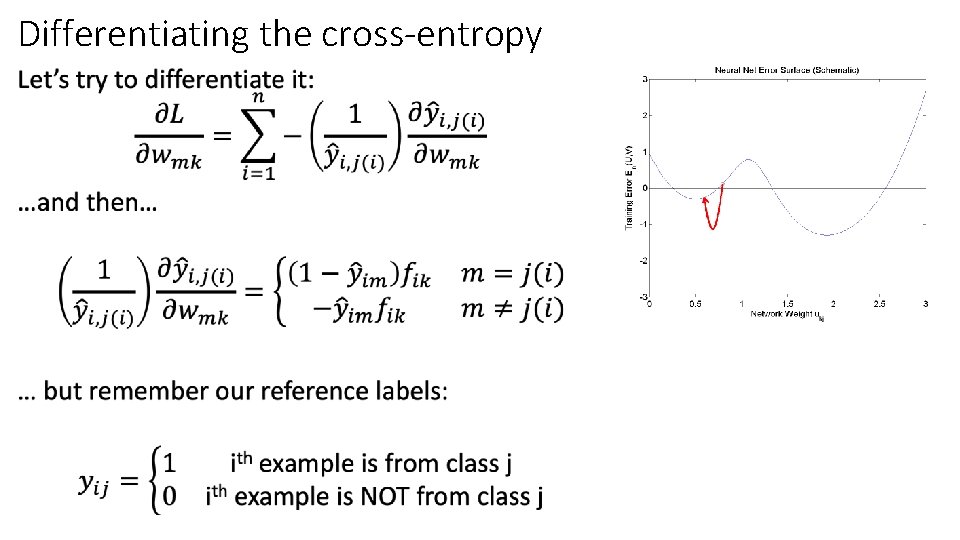

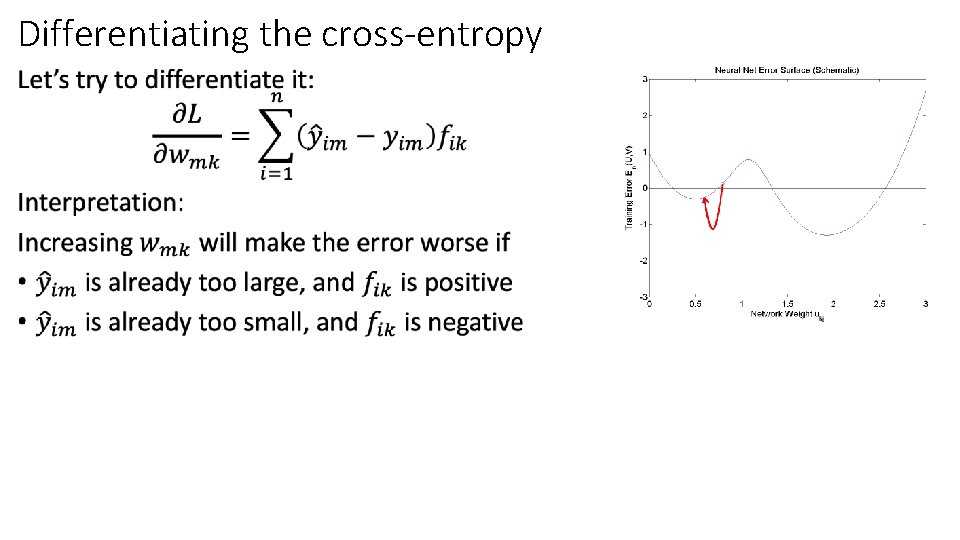

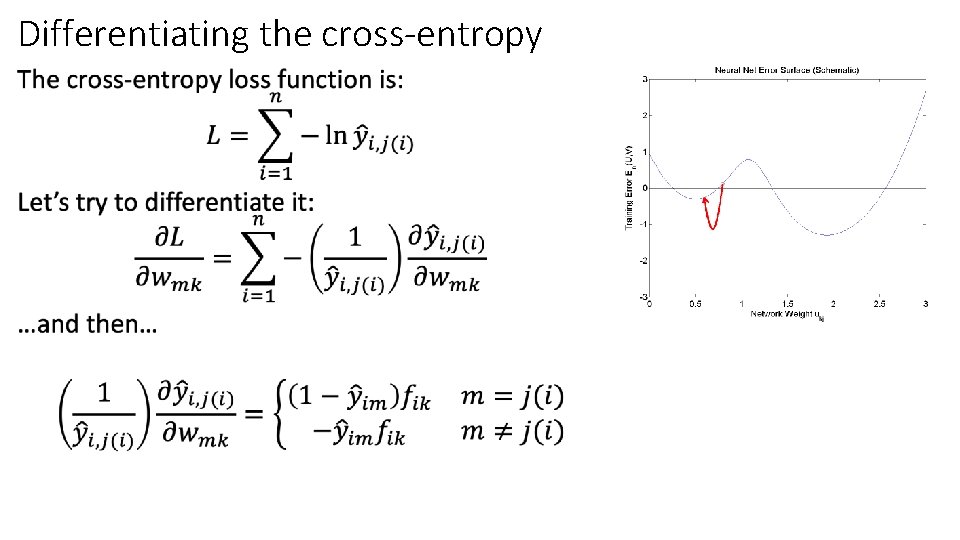

Differentiating the cross-entropy •

Differentiating the cross-entropy •

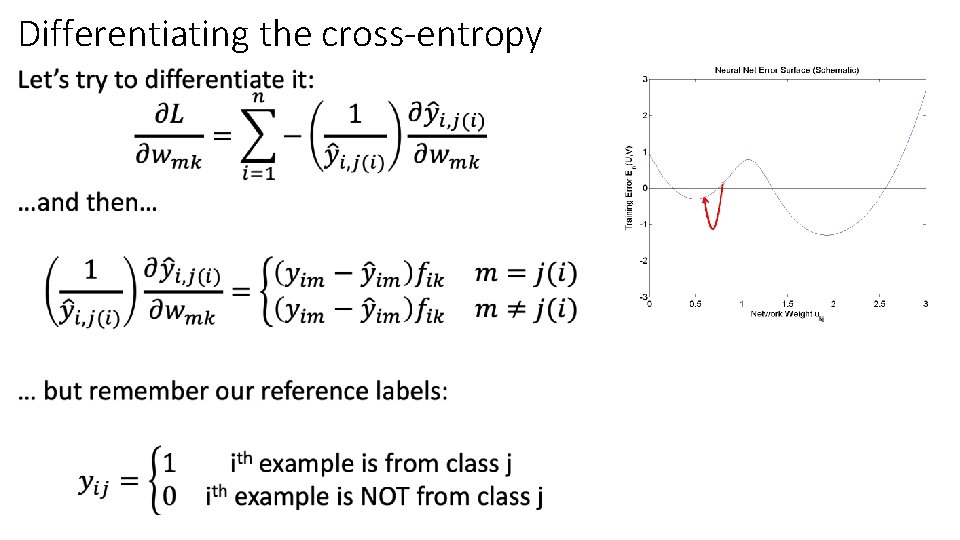

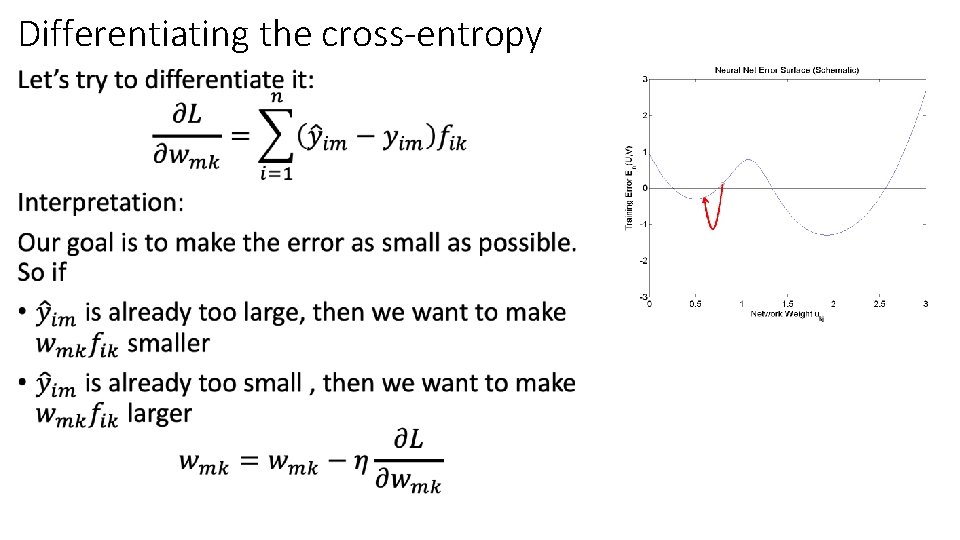

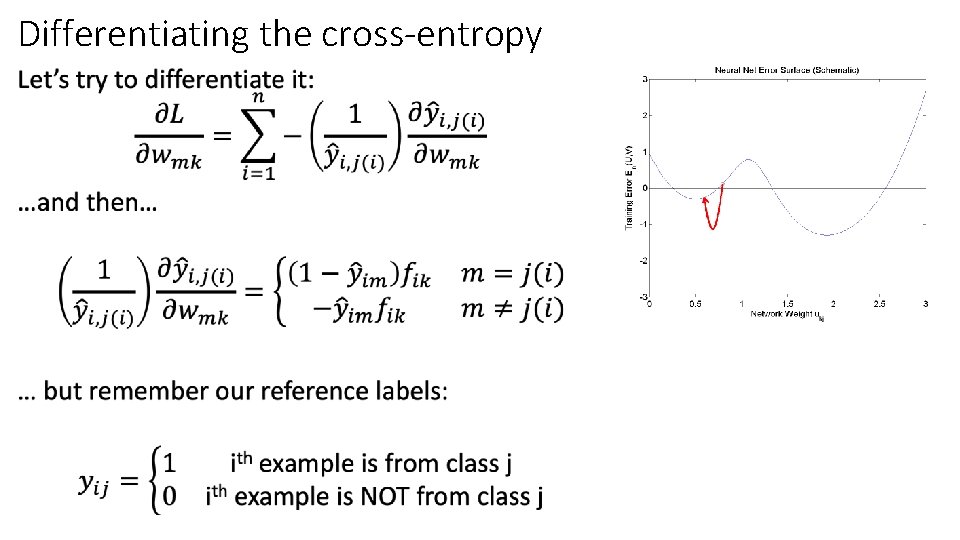

Differentiating the cross-entropy •

Differentiating the cross-entropy •

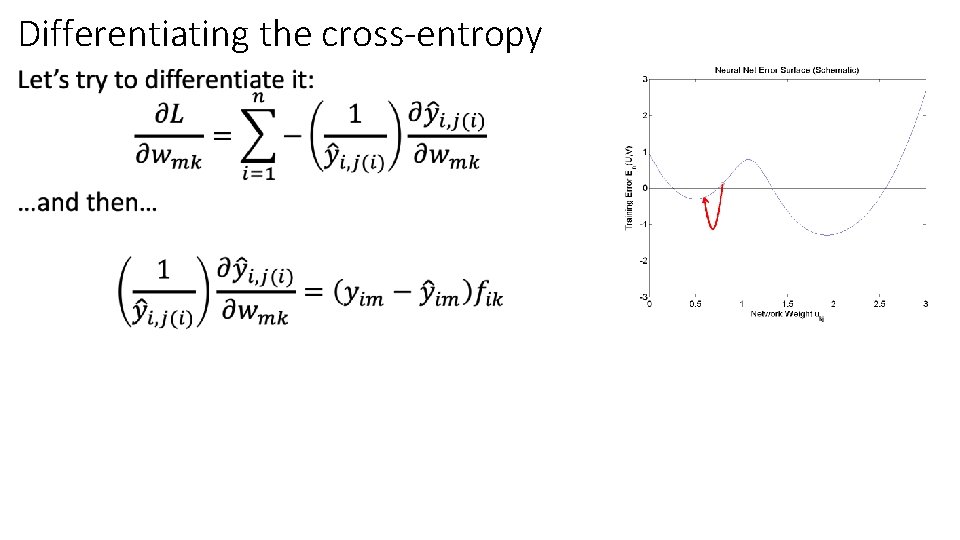

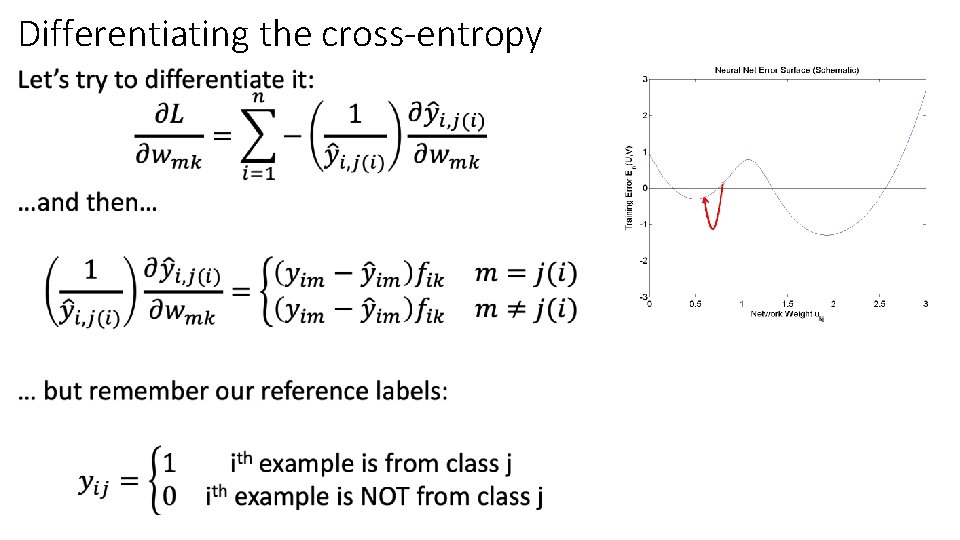

Differentiating the cross-entropy •

Differentiating the cross-entropy •
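Since the equations on these slides were lost in extraction, here is a sketch of the standard result under assumed notation: $x_i$ is the $i$-th training token, $t_{ic}$ its one-hot target, $w_c$ the weight vector for class $c$, $z_{ic} = w_c^{\top} x_i$ the softmax input, and $y_{ic}$ the softmax output. The symbol names are assumptions; the derivation uses the softmax derivative $\partial y_{ic} / \partial z_{ij} = y_{ic}(\delta_{cj} - y_{ij})$ and the fact that each one-hot target sums to one.

$$
L = -\sum_{i=1}^{n} \sum_{c} t_{ic} \ln y_{ic}, \qquad
y_{ic} = \frac{e^{z_{ic}}}{\sum_{k} e^{z_{ik}}}, \qquad
z_{ic} = w_c^{\top} x_i
$$

$$
\frac{\partial L}{\partial z_{ij}}
= -\sum_{c} \frac{t_{ic}}{y_{ic}}\, y_{ic} \left(\delta_{cj} - y_{ij}\right)
= y_{ij} - t_{ij}, \qquad
\frac{\partial L}{\partial w_j} = \sum_{i=1}^{n} \left(y_{ij} - t_{ij}\right) x_i
$$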

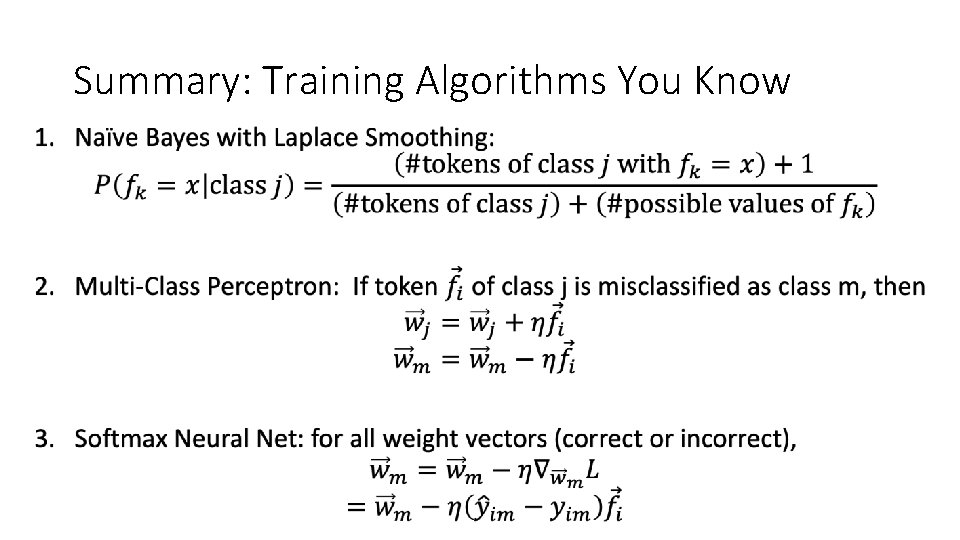

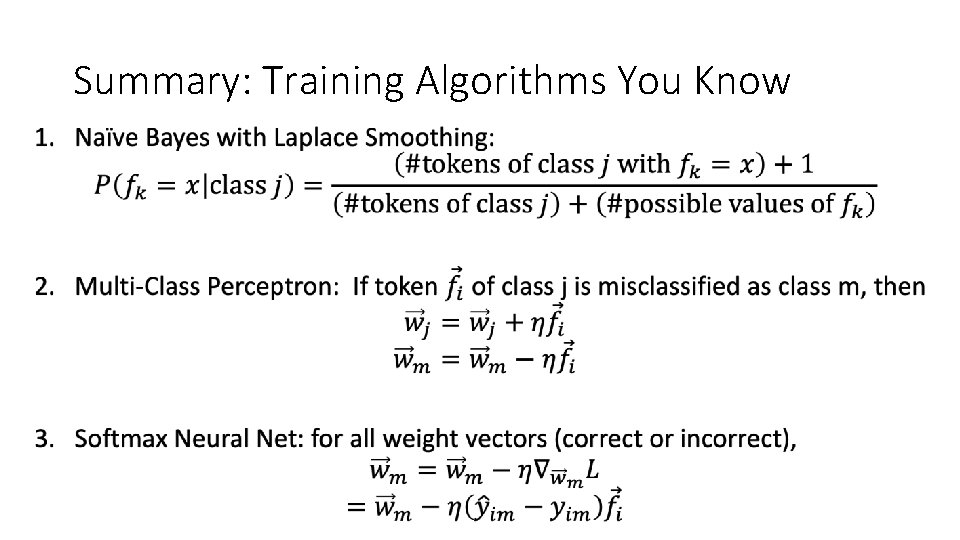

Summary: Training Algorithms You Know •

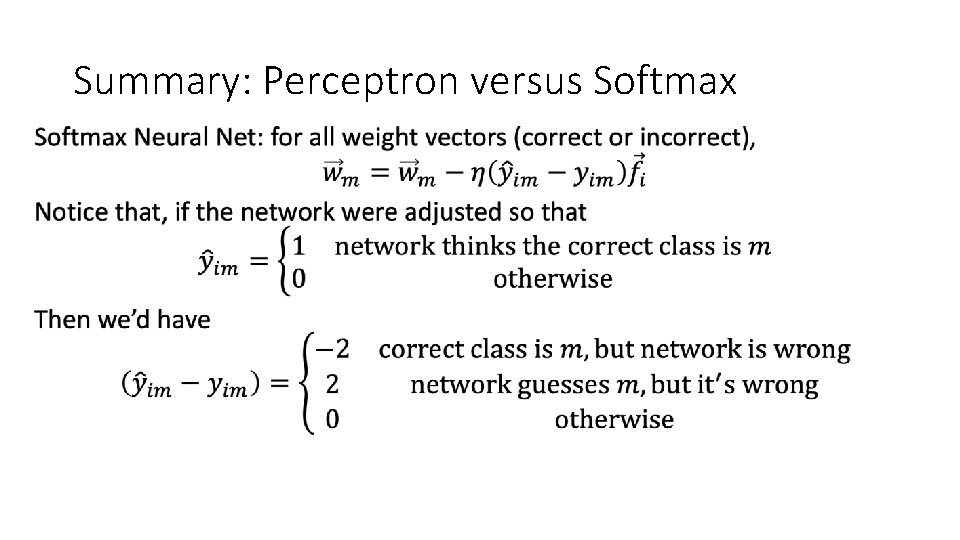

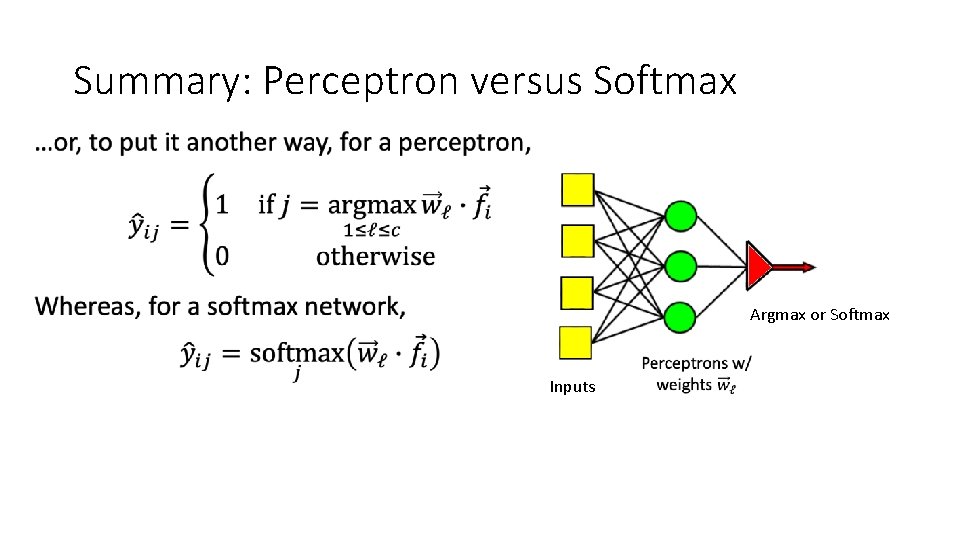

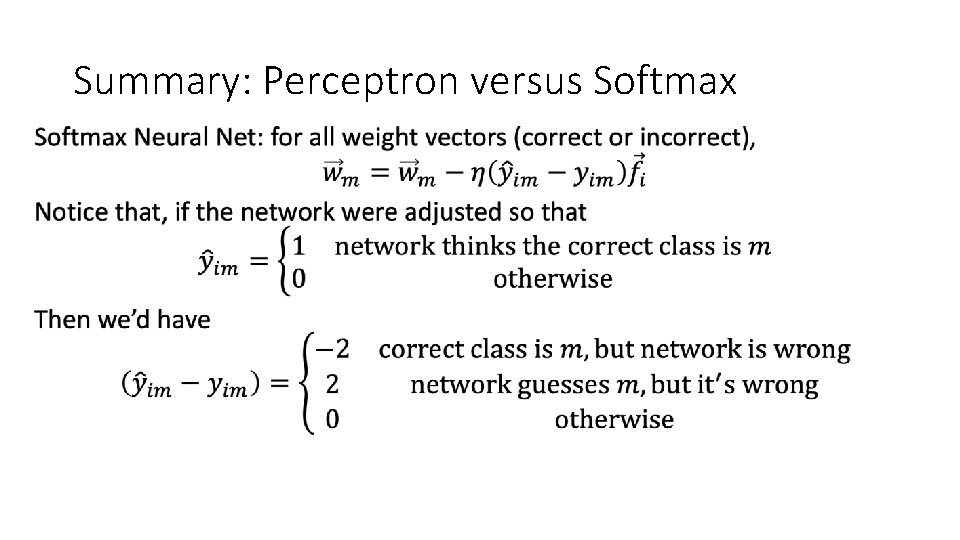

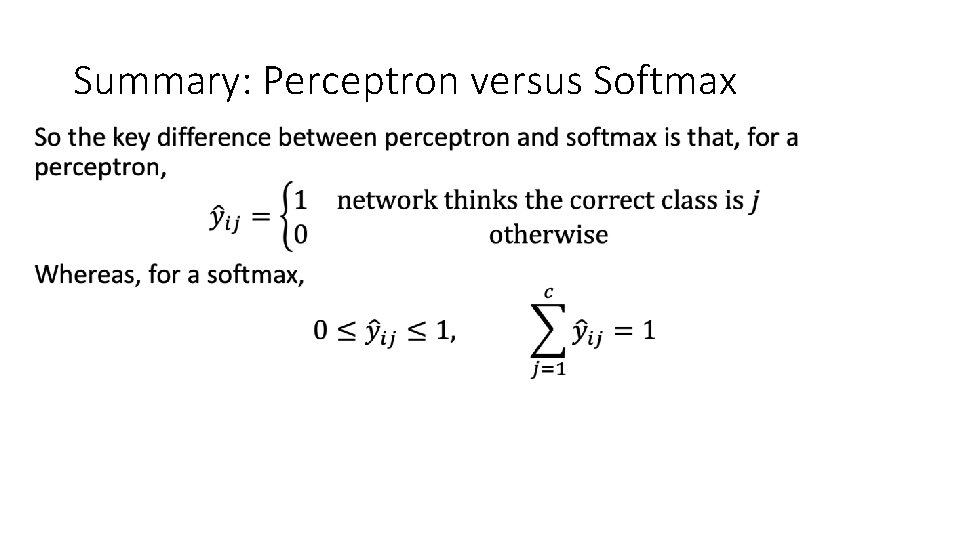

Summary: Perceptron versus Softmax •

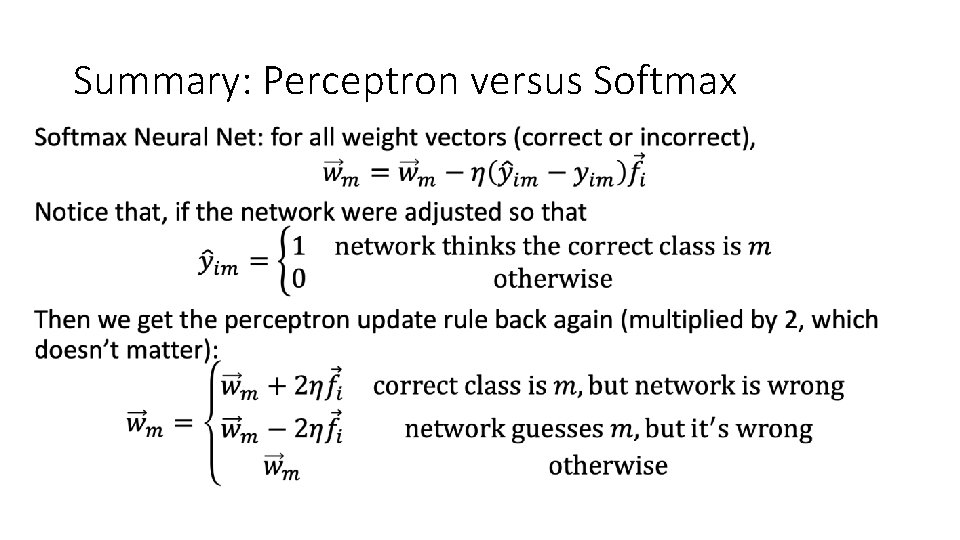

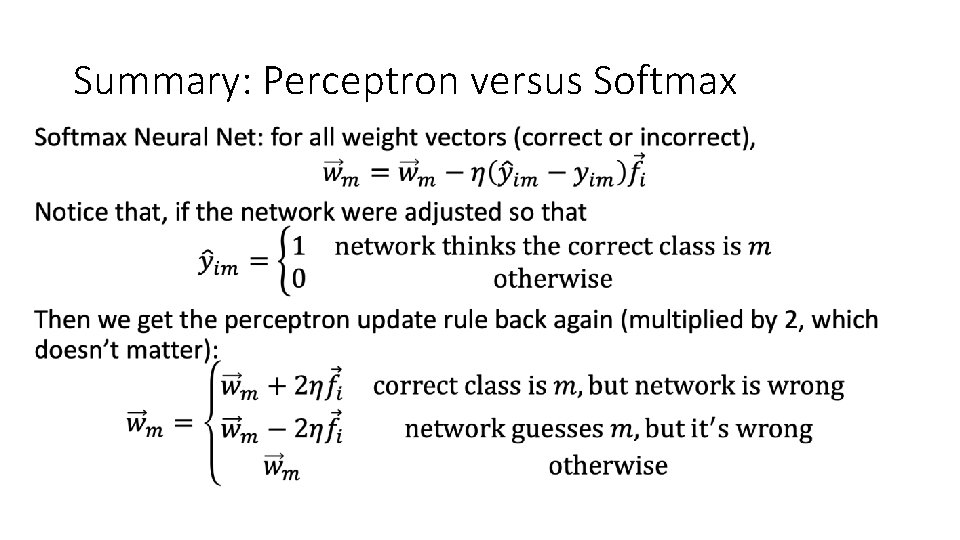

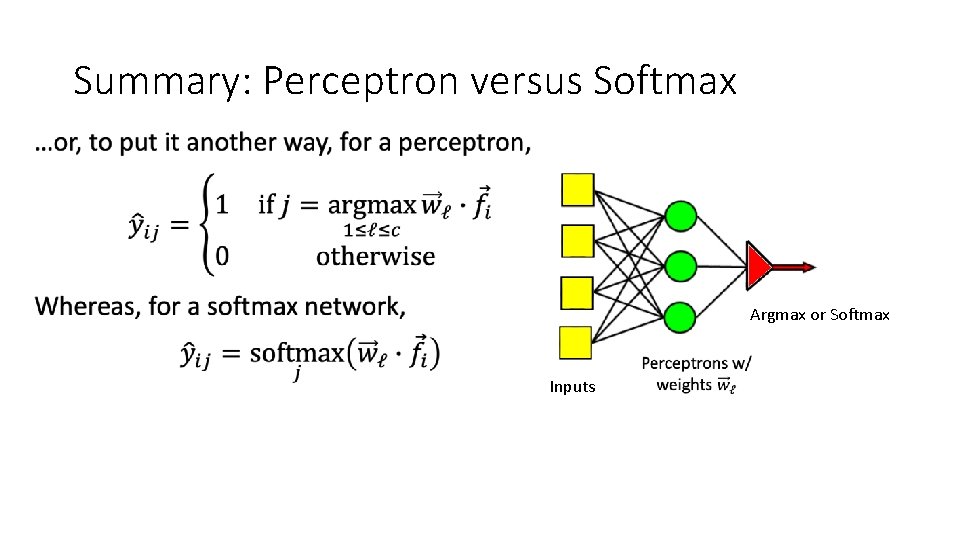

Summary: Perceptron versus Softmax •

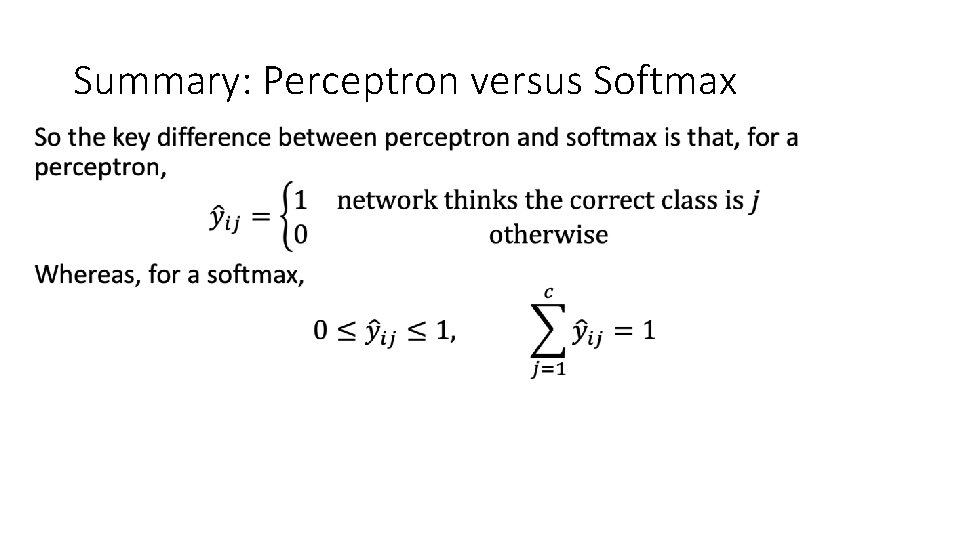

Summary: Perceptron versus Softmax •

Summary: Perceptron versus Softmax (Diagram labels: inputs; argmax or softmax.)
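Putting the pieces together, a one-layer softmax neural net can be sketched as plain batch gradient descent on the cross-entropy. This is an illustrative sketch only: it assumes the standard gradient (softmax output minus one-hot target, times the input), and the function names, learning rate, epoch count, and toy data are all hypothetical.

```python
import math


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def scores(W, b, x):
    """One linear unit per class: z_c = w_c . x + b_c."""
    return [sum(wk * xk for wk, xk in zip(W[c], x)) + b[c]
            for c in range(len(W))]


def train_softmax(xs, labels, num_classes, lr=0.5, epochs=200):
    dim, n = len(xs[0]), len(xs)
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        grad_W = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, label in zip(xs, labels):
            y = softmax(scores(W, b, x))
            for c in range(num_classes):
                err = y[c] - (1.0 if c == label else 0.0)  # output minus target
                grad_b[c] += err
                for k in range(dim):
                    grad_W[c][k] += err * x[k]
        # Gradient step on the average cross-entropy.
        for c in range(num_classes):
            b[c] -= lr * grad_b[c] / n
            for k in range(dim):
                W[c][k] -= lr * grad_W[c][k] / n
    return W, b


def classify(W, b, x):
    """At test time the hard argmax picks the most probable class."""
    z = scores(W, b, x)
    return max(range(len(z)), key=lambda c: z[c])


# Hypothetical three-class toy data, two tokens per class.
xs = [(0.0, 2.0), (0.2, 1.8), (2.0, 0.0), (1.8, 0.2), (-2.0, -2.0), (-1.8, -2.2)]
labels = [0, 0, 1, 1, 2, 2]
W, b = train_softmax(xs, labels, num_classes=3)
predictions = [classify(W, b, x) for x in xs]
```

Training uses the softmax because it is differentiable, while classification can use the argmax directly, since both pick the same most probable class.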

Cudnn softmax

Cudnn softmax Softmax

Softmax Softmax function

Softmax function Example of multimodal text

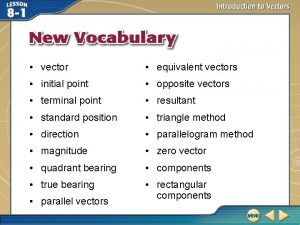

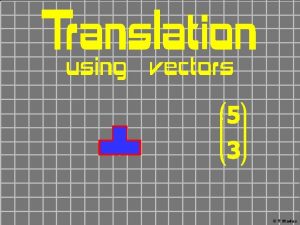

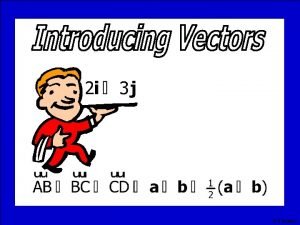

Example of multimodal text Directed line segment definition

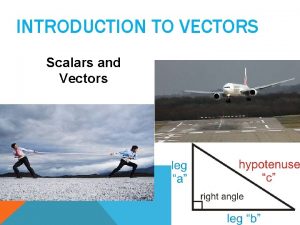

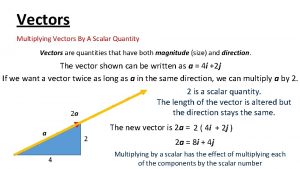

Directed line segment definition Vectors and scalars in physics

Vectors and scalars in physics Vector notation

Vector notation A bear searching for food wanders 35 meters east

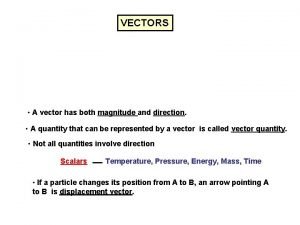

A bear searching for food wanders 35 meters east A vector has both magnitude and direction.

A vector has both magnitude and direction. The diagram shows a regular hexagon abcdef with centre o

The diagram shows a regular hexagon abcdef with centre o Sin 37

Sin 37 Define scalar quantity

Define scalar quantity Entropy is scalar or vector

Entropy is scalar or vector Linear algebra

Linear algebra Linearly dependent and independent vectors

Linearly dependent and independent vectors Vectors and the geometry of space

Vectors and the geometry of space Linearly dependent

Linearly dependent Vectors and the geometry of space

Vectors and the geometry of space Vectors form 3

Vectors form 3 A 100 lb weight hangs from two wires

A 100 lb weight hangs from two wires Vectors have magnitude and direction

Vectors have magnitude and direction Vectors trigonometry

Vectors trigonometry The diagram shows two vectors that point west and north.

The diagram shows two vectors that point west and north. Composition of vectors

Composition of vectors Mat lab

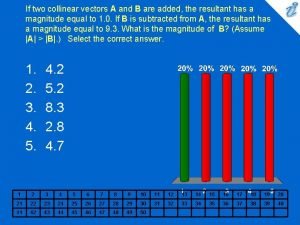

Mat lab If two collinear vectors a and b are added

If two collinear vectors a and b are added Chapter 12 vectors and the geometry of space solutions

Chapter 12 vectors and the geometry of space solutions Units physical quantities and vectors

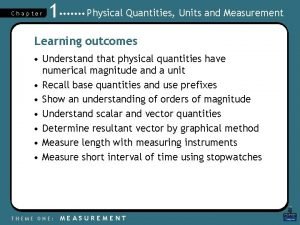

Units physical quantities and vectors Scalar quantity unit

Scalar quantity unit Magnitude of unit vector formula

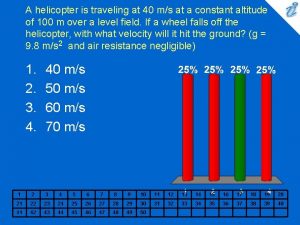

Magnitude of unit vector formula A helicopter is traveling at 40m /s

A helicopter is traveling at 40m /s Vectors and the geometry of space

Vectors and the geometry of space Dot product

Dot product Free fall

Free fall You are adding vectors of length 20 and 40 units

You are adding vectors of length 20 and 40 units Img srcx onerroralert1

Img srcx onerroralert1 Examples of vectors

Examples of vectors Adding multiple vectors

Adding multiple vectors Collinear vectors example

Collinear vectors example Vectors in 2 dimensions

Vectors in 2 dimensions Vectors

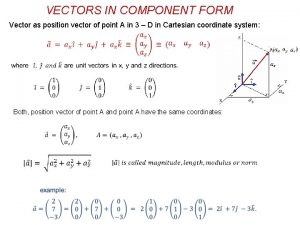

Vectors Component form vectors

Component form vectors Decomposing vectors

Decomposing vectors Ap physics vectors

Ap physics vectors Vector equation of line

Vector equation of line Equivalent vectors

Equivalent vectors Chickering's vectors

Chickering's vectors Madas vectors

Madas vectors Madas vectors

Madas vectors Madas vectors

Madas vectors What is this?

What is this? The direction of the resultant of unlike parallel forces is

The direction of the resultant of unlike parallel forces is Adding velocity vectors

Adding velocity vectors Ap physics vectors test

Ap physics vectors test Graphical method physics

Graphical method physics Orthogonal vectors

Orthogonal vectors