CS 4501 Introduction to Computer Vision Softmax Classifier




CS 4501: Introduction to Computer Vision
Softmax Classifier + Generalization
Various slides from previous courses by: D. A. Forsyth (Berkeley / UIUC), I. Kokkinos (Ecole Centrale / UCL), S. Lazebnik (UNC / UIUC), S. Seitz (MSR / Facebook), J. Hays (Brown / Georgia Tech), A. Berg (Stony Brook / UNC), D. Samaras (Stony Brook), J. M. Frahm (UNC), V. Ordonez (UVA), Steve Seitz (UW).

Last Class
• Introduction to Machine Learning
• Unsupervised Learning: Clustering (e.g., k-means clustering)
• Supervised Learning: Classification (e.g., k-nearest neighbors)

Today's Class
• Softmax Classifier (Linear Classifiers)
• Generalization / Overfitting / Regularization
• Global Features

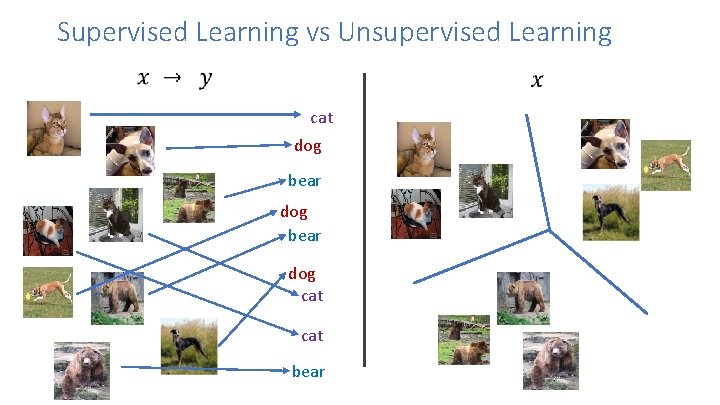

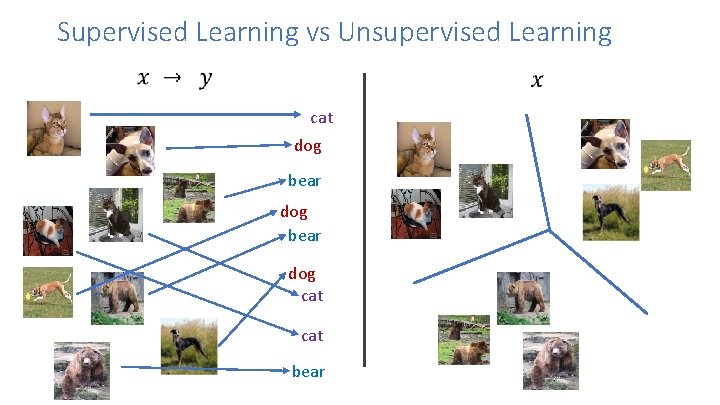


Supervised Learning vs Unsupervised Learning [figure: Classification, where images carry the labels cat, dog, bear; Clustering, where the same kinds of images are grouped without labels]

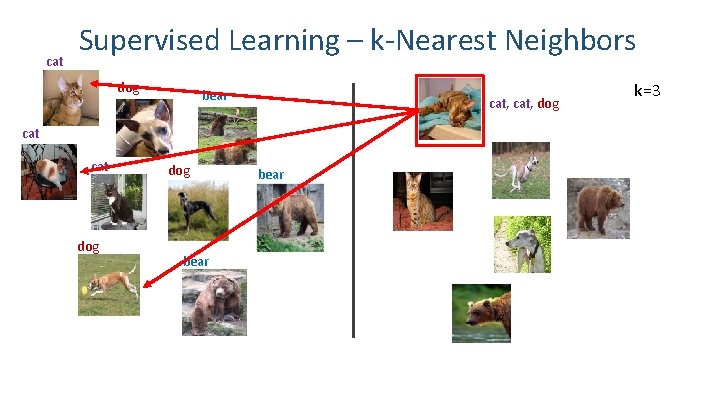

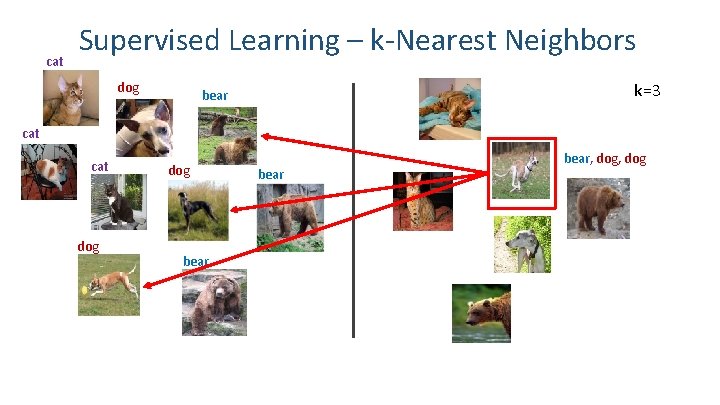

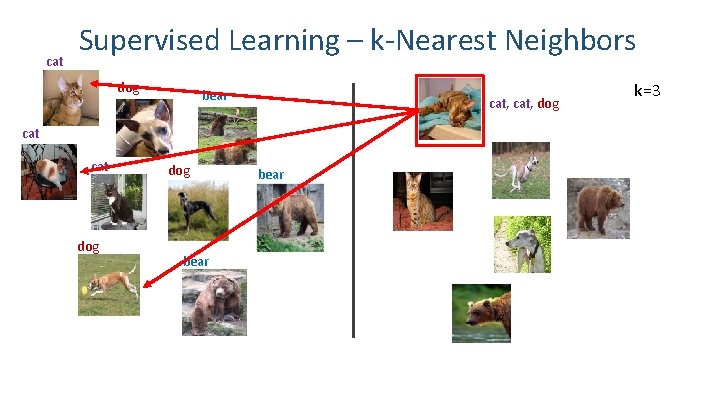

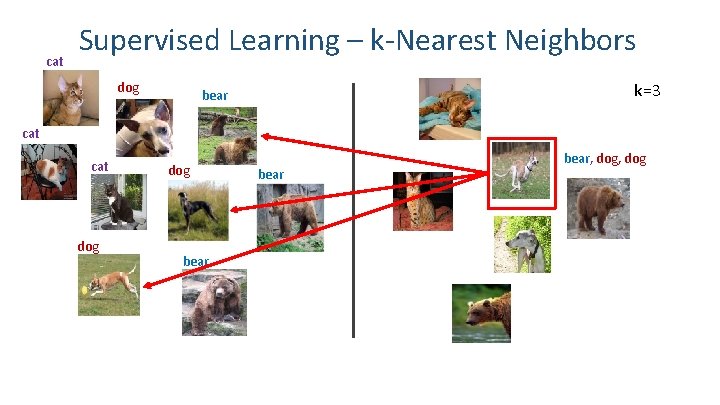

Supervised Learning – k-Nearest Neighbors [figure, k = 3: a test point among labeled cat, dog, and bear examples receives the majority label of its 3 nearest neighbors; two test points are shown]
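A minimal sketch of the k = 3 vote described above, assuming Euclidean distance and made-up 2-D feature vectors (the function name `knn_predict` and the data are hypothetical, for illustration only):

```python
from collections import Counter

import numpy as np


def knn_predict(train_x, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training points (Euclidean distance)."""
    dists = np.linalg.norm(train_x - query, axis=1)  # distance to every training point
    nearest = np.argsort(dists)[:k]                  # indices of the k closest points
    votes = Counter(train_y[i] for i in nearest)     # count labels among the neighbors
    return votes.most_common(1)[0][0]                # most frequent label (ties: first encountered)


# Hypothetical 2-D features, purely for illustration.
train_x = np.array([[0.0, 0.0], [0.2, 0.1], [1.0, 1.0], [1.1, 0.9], [2.0, 0.0], [2.1, 0.2]])
train_y = ["cat", "cat", "dog", "dog", "bear", "bear"]

print(knn_predict(train_x, train_y, np.array([0.3, 0.2])))  # neighbors: cat, cat, dog
```

Changing `k` or the distance computation changes the answer, which is exactly the choice the next slide asks about.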

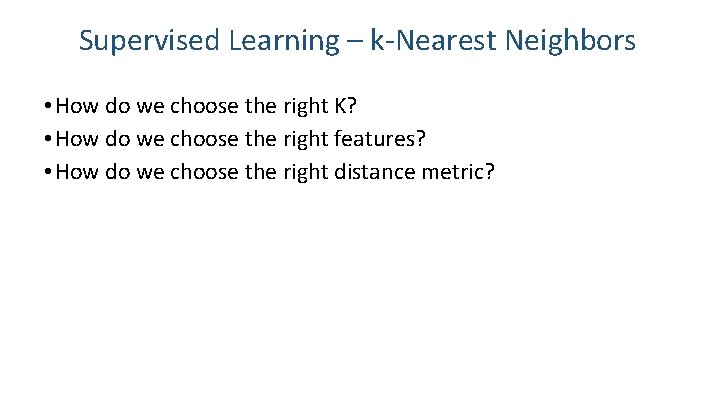

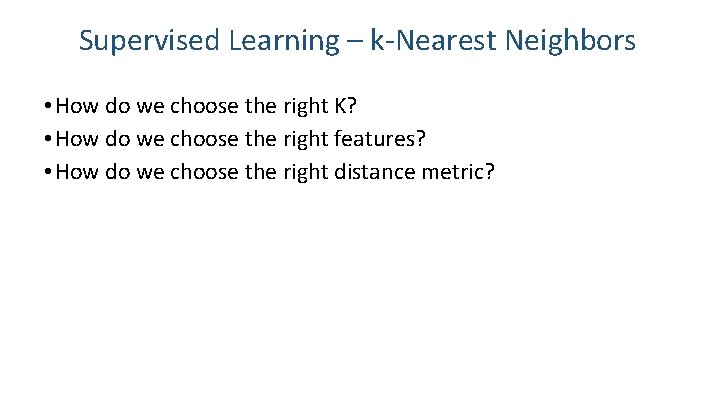


Supervised Learning – k-Nearest Neighbors
• How do we choose the right K?
• How do we choose the right features?
• How do we choose the right distance metric?
Answer: just choose the combination that works best, BUT not on the test data. Instead, split the training data into a "training set" and a "validation set" (also called a "development set").
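The validation procedure above can be sketched as follows, assuming synthetic 3-class data, an 80/20 split of the training data, and a small hypothetical grid of K values; only K is tuned here, but features and distance metrics would be compared the same way:

```python
import numpy as np


def knn_predict(train_x, train_y, query, k):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    nearest = np.argsort(np.linalg.norm(train_x - query, axis=1))[:k]
    labels, counts = np.unique(train_y[nearest], return_counts=True)
    return labels[np.argmax(counts)]


def accuracy(train_x, train_y, eval_x, eval_y, k):
    preds = np.array([knn_predict(train_x, train_y, q, k) for q in eval_x])
    return float(np.mean(preds == eval_y))


# Hypothetical data: 3 classes (0, 1, 2) drawn around different centers.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])
y = rng.integers(0, 3, size=150)
x = centers[y] + rng.normal(scale=1.0, size=(150, 2))

# Split the *training* data: 120 examples to fit, 30 held out for validation.
perm = rng.permutation(150)
train_idx, val_idx = perm[:120], perm[120:]

# Pick K using validation accuracy only; a test set is never touched here.
candidates = [1, 3, 5, 7]
val_acc = {k: accuracy(x[train_idx], y[train_idx], x[val_idx], y[val_idx], k) for k in candidates}
best_k = max(val_acc, key=val_acc.get)
print(val_acc, "best K:", best_k)
```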

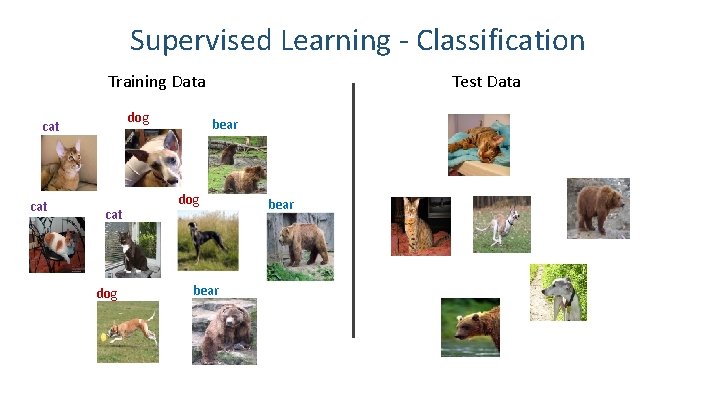

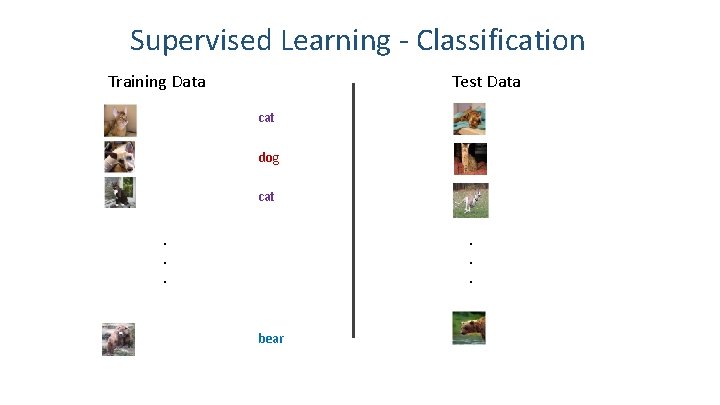

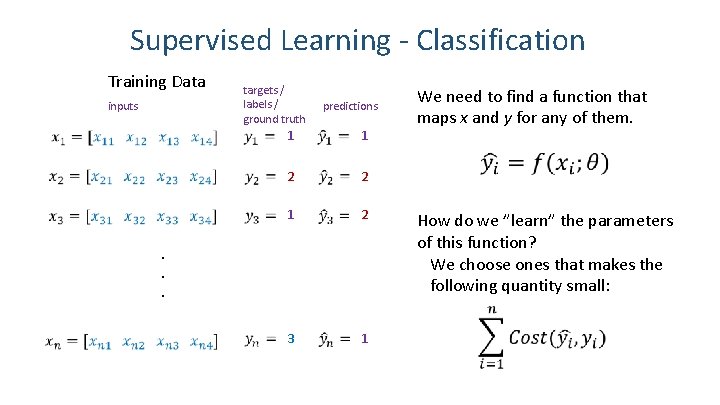

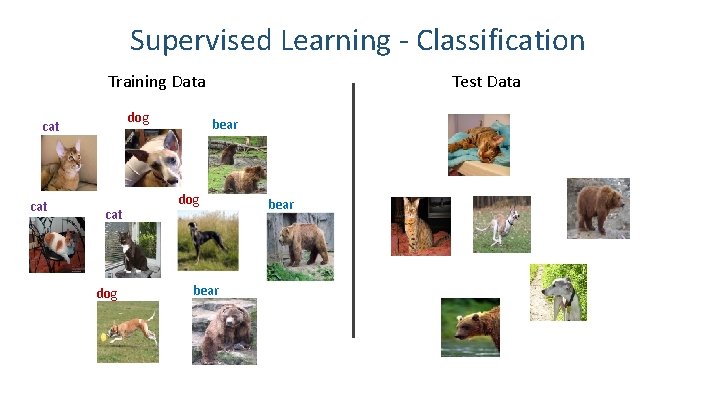

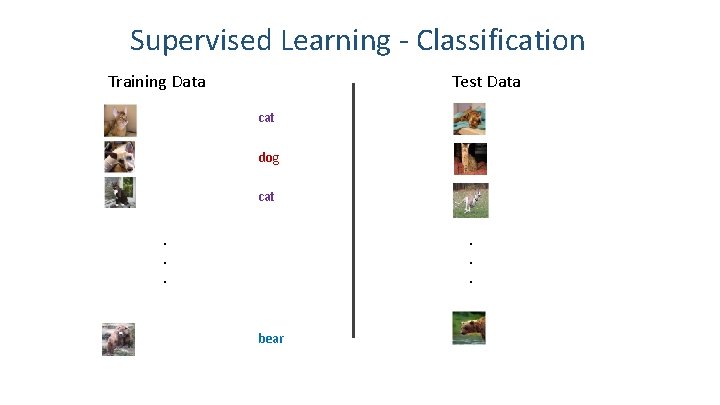

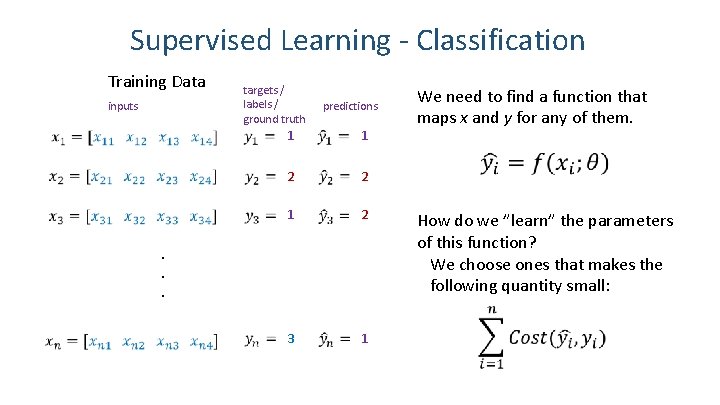

Supervised Learning – Classification [figures: training data (images labeled cat, dog, bear, ...) and test data]

Supervised Learning – Classification
Training data: inputs $x_i$, targets / labels / ground truth $y_i$ (class indices such as 1, 2, 3), and predictions $\hat{y}_i$.
We need to find a function $\hat{y}_i = f(x_i; \theta)$ that maps each $x_i$ to its $y_i$, for any of them. How do we "learn" the parameters $\theta$ of this function? We choose the ones that make the following quantity small:
$$\sum_{i=1}^{n} \text{Cost}(\hat{y}_i, y_i)$$
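To make the quantity concrete, here is a small sketch that assumes a 0-1 cost (0 for a correct prediction, 1 otherwise); both the cost choice and the label lists are hypothetical, not taken from the slide:

```python
def zero_one_cost(y_hat, y):
    """Hypothetical choice of Cost: 0 if the prediction is right, 1 otherwise."""
    return 0 if y_hat == y else 1


def total_cost(predictions, targets, cost=zero_one_cost):
    """Sum of Cost(y_hat_i, y_i) over the training set; learning tries to make this small."""
    return sum(cost(y_hat, y) for y_hat, y in zip(predictions, targets))


targets = [1, 2, 1, 3]      # hypothetical ground-truth class indices
predictions = [1, 2, 2, 3]  # hypothetical outputs of f(x_i; theta)
print(total_cost(predictions, targets))  # one mistake
```

A 0-1 cost only counts mistakes and does not change smoothly with $\theta$, so it gives no useful derivative; the softmax classifier instead uses a differentiable loss on predicted probabilities.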

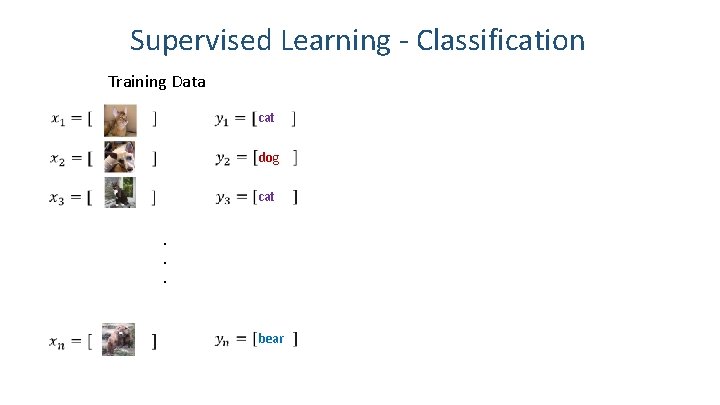

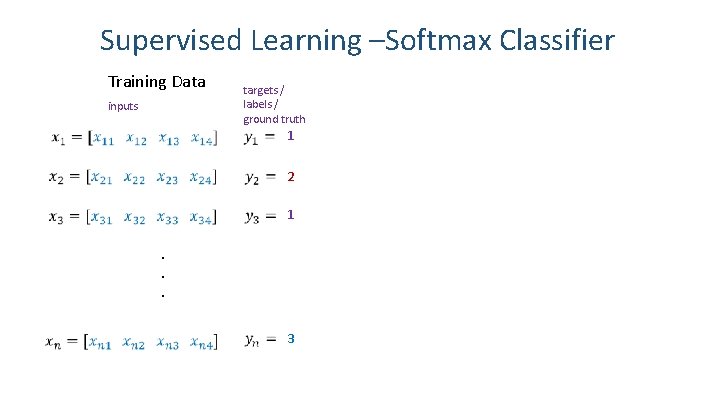

Supervised Learning – Softmax Classifier
Training data: inputs $x_i$ and targets / labels / ground truth $y_i$ (1, 2, 1, 3, ...)

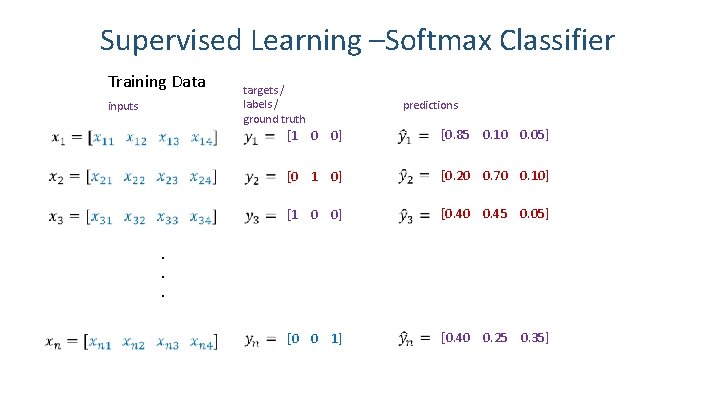

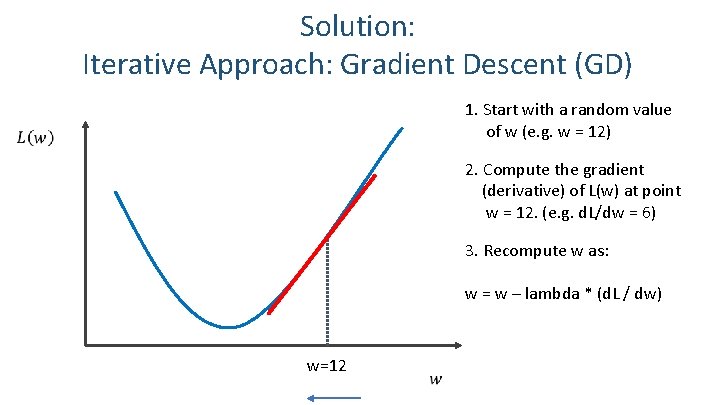

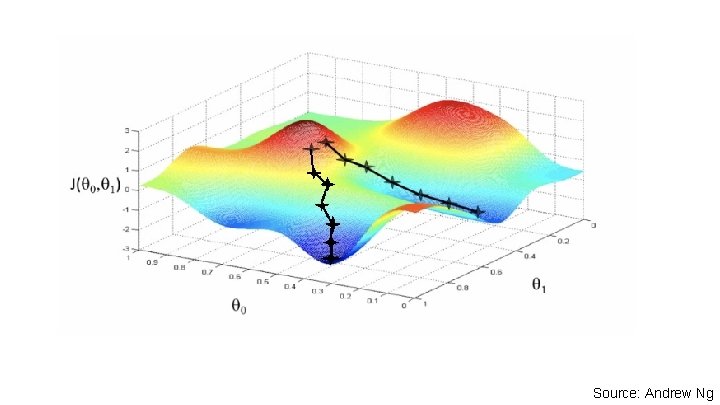

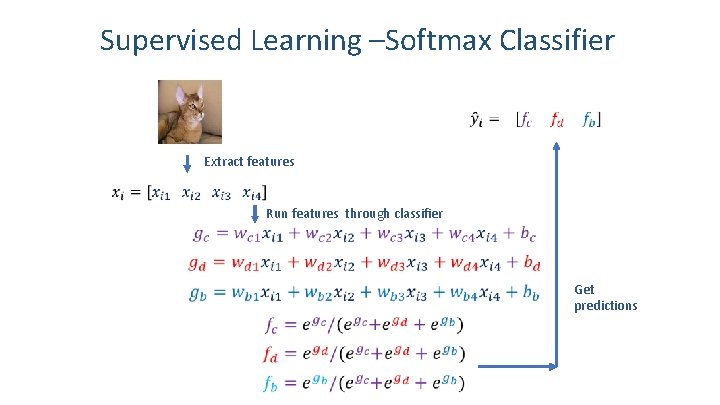

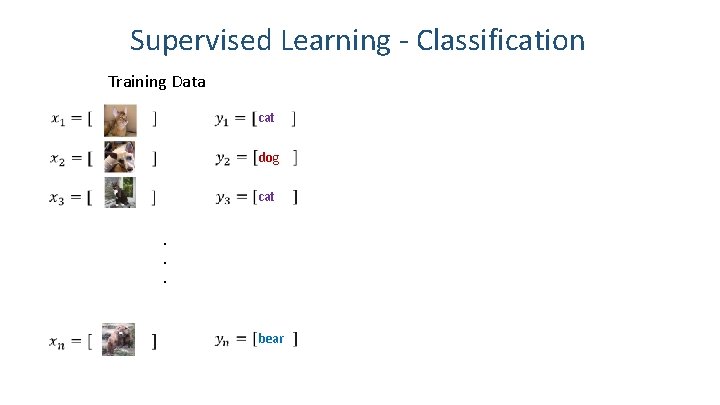

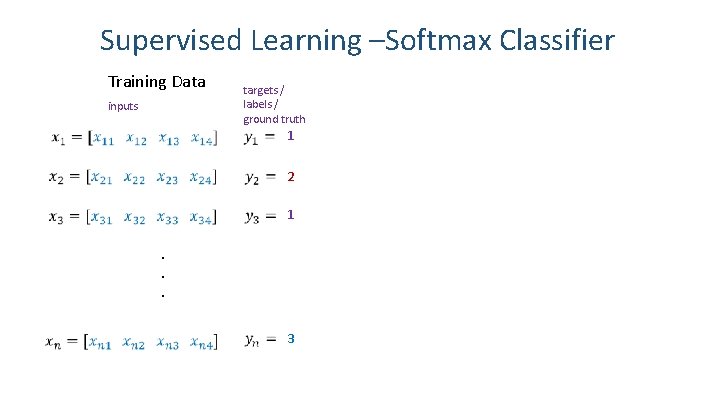

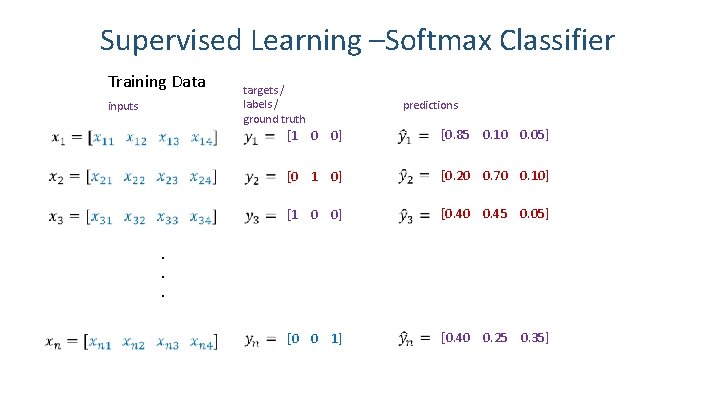

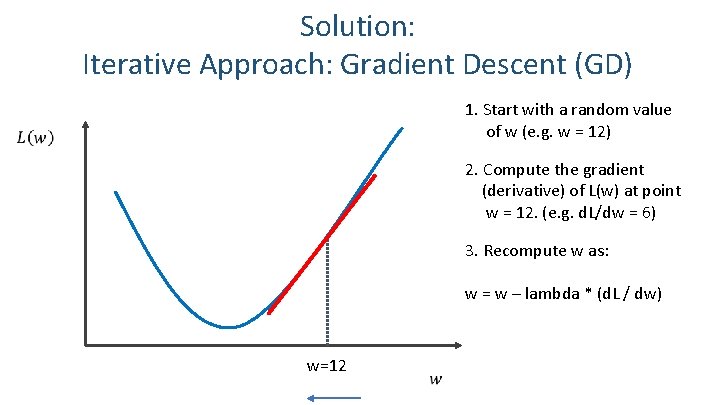

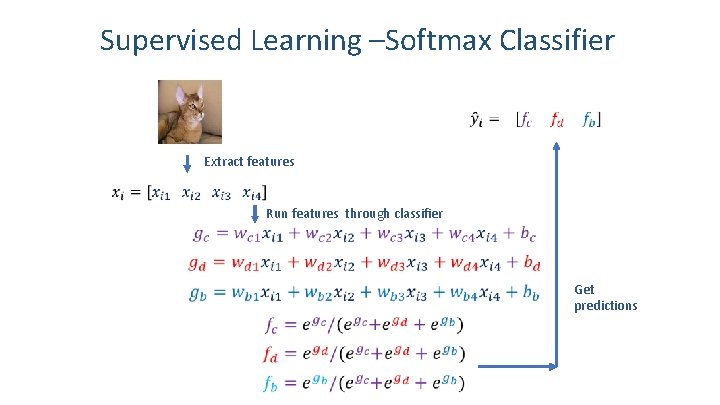

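Supervised Learning – Softmax Classifier
Training data: inputs $x_i$, targets / labels / ground truth $y_i$ (one-hot vectors), and predictions $\hat{y}_i$:

| Target $y_i$ | Prediction $\hat{y}_i$ |
|---|---|
| [1 0 0] | [0.85 0.10 0.05] |
| [0 1 0] | [0.20 0.70 0.10] |
| [1 0 0] | [0.40 0.45 0.05] |
| [0 0 1] | [0.40 0.25 0.35] |
| ... | ... |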
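A short sketch of how the one-hot targets relate to the class indices 1, 2, 1, 3 and how a predicted class is read off each probability vector (argmax); the prediction values are copied from the slide, everything else is illustrative:

```python
import numpy as np

labels = np.array([1, 2, 1, 3])   # class indices from the training data (1-based)
targets = np.eye(3)[labels - 1]   # one-hot rows: [1 0 0], [0 1 0], [1 0 0], [0 0 1]
predictions = np.array([[0.85, 0.10, 0.05],
                        [0.20, 0.70, 0.10],
                        [0.40, 0.45, 0.05],
                        [0.40, 0.25, 0.35]])  # values copied from the slide

predicted_class = predictions.argmax(axis=1) + 1  # back to 1-based class indices
accuracy = np.mean(predicted_class == labels)
print(predicted_class, accuracy)
```

The last two rows are misclassified: the correct class receives some probability, but not the largest share.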

![Supervised Learning – Softmax Classifier (slide 17)](https://slidetodoc.com/presentation_image/9b79ee957f6b1e5d878fa02203368958/image-17.jpg)

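Supervised Learning – Softmax Classifier
For an input $x_i$ with target $y_i = [1\ 0\ 0]$, the classifier computes one score per class, $g = w x_i + b$, and turns the scores into predicted probabilities with the softmax function: $\hat{y}_{i,c} = e^{g_c} / \sum_{j} e^{g_j}$.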
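A minimal sketch of the softmax classifier's forward pass, assuming `w` has one row per class and `x` is a feature vector; the parameter values are made up. Subtracting the maximum score before exponentiating is a standard numerical-stability trick that leaves the probabilities unchanged:

```python
import numpy as np


def softmax(g):
    """Turn a vector of class scores into probabilities that sum to 1."""
    e = np.exp(g - np.max(g))  # subtracting the max avoids overflow; result is unchanged
    return e / e.sum()


def predict(w, b, x):
    """Softmax classifier: linear scores g = w x + b, then softmax."""
    return softmax(w @ x + b)


# Hypothetical parameters for 3 classes and 2 input features.
w = np.array([[2.0, 0.0],
              [0.0, 1.0],
              [-1.0, 0.5]])
b = np.array([0.0, 0.5, 0.0])
x = np.array([1.0, 0.5])

y_hat = predict(w, b, x)
print(y_hat.round(3), y_hat.sum())
```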

![How do we find a good w and b? (slide 18)](https://slidetodoc.com/presentation_image/9b79ee957f6b1e5d878fa02203368958/image-18.jpg)

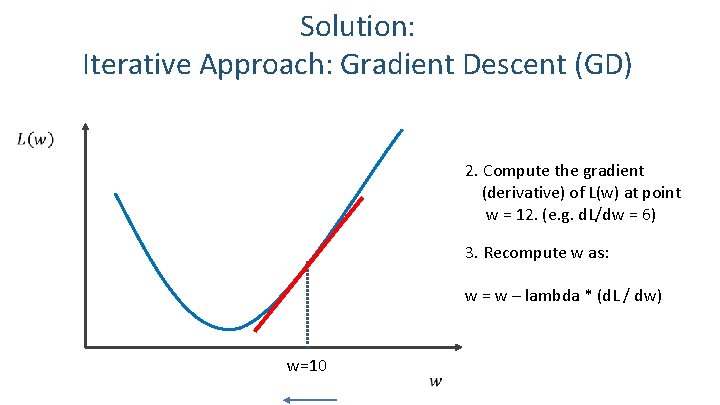

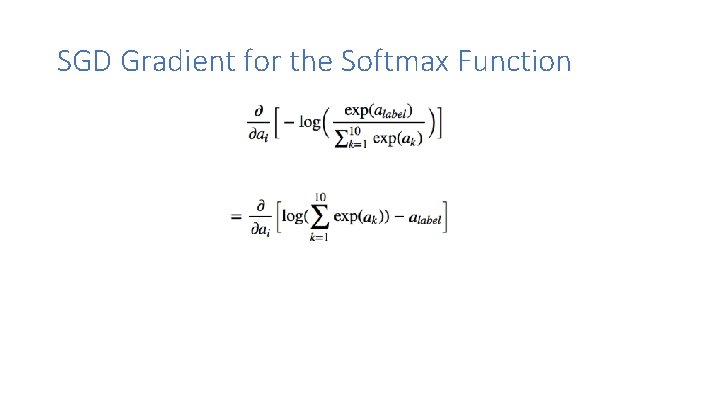

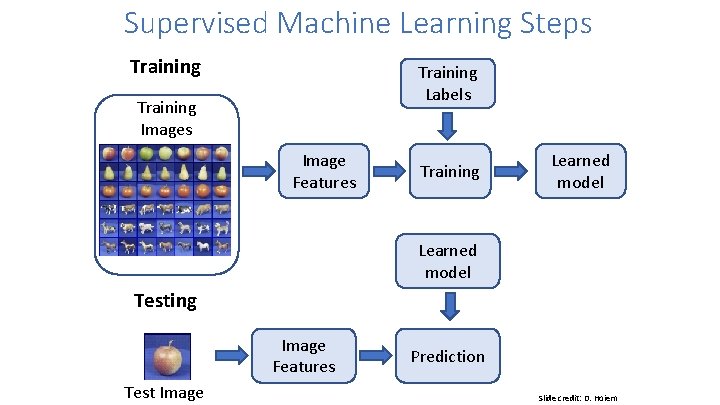

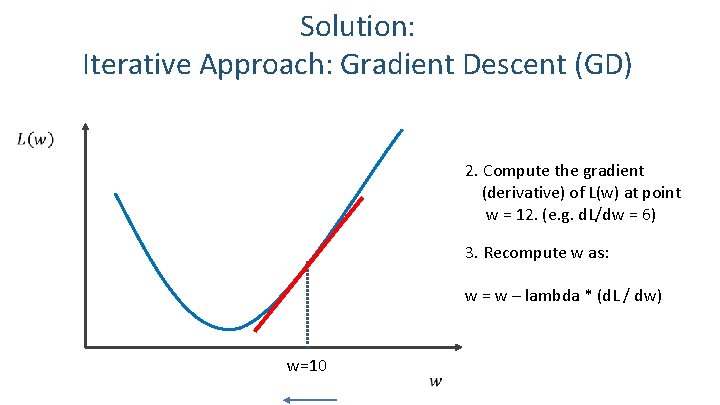

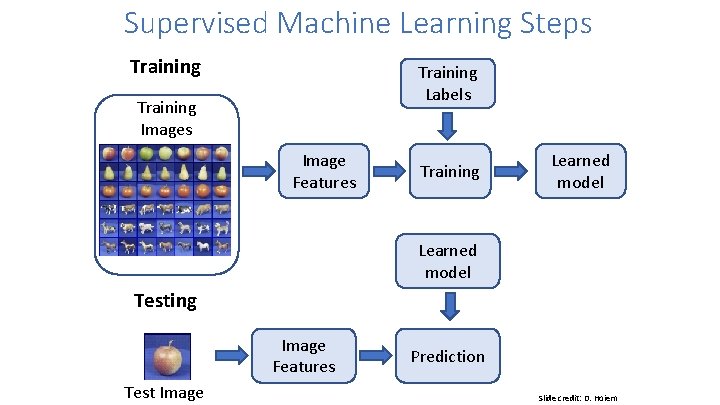

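How do we find a good w and b? [1 0 0]
We need to find $w$ and $b$ that minimize the following function $L$:
$$L(w, b) = \sum_{i=1}^{n} -\log \hat{y}_{i,\text{label}_i}(w, b)$$
Why? Each term is the negative log of the probability assigned to the correct class, so minimizing $L$ pushes every correct-class probability toward 1.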
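As a worked instance, this sketch evaluates $L$ on the example predictions from the earlier slide (labels converted to 0-based column indices); the helper name `softmax_loss` is hypothetical:

```python
import numpy as np


def softmax_loss(predictions, labels):
    """L = sum_i -log(y_hat_i[label_i]): negative log-probability of each correct class."""
    rows = np.arange(len(labels))
    return float(-np.log(predictions[rows, labels]).sum())


# Example predictions from the earlier slide; labels 1, 2, 1, 3 written 0-based.
predictions = np.array([[0.85, 0.10, 0.05],
                        [0.20, 0.70, 0.10],
                        [0.40, 0.45, 0.05],
                        [0.40, 0.25, 0.35]])
labels = np.array([0, 1, 0, 2])

print(softmax_loss(predictions, labels))
```

The confident, correct first row contributes little (-log 0.85 ≈ 0.16), while the misclassified rows contribute the most, which is the pressure that training exploits.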

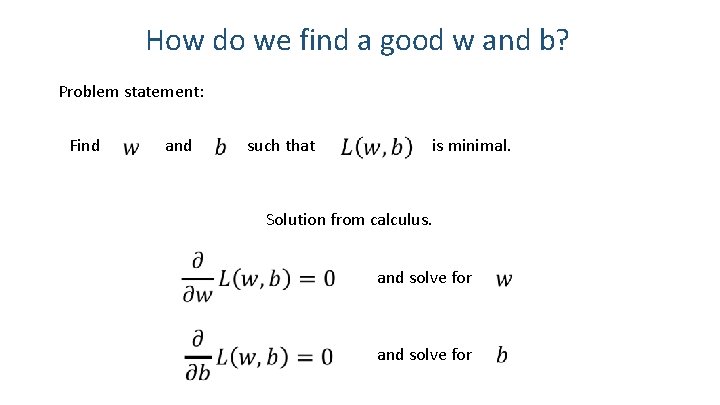

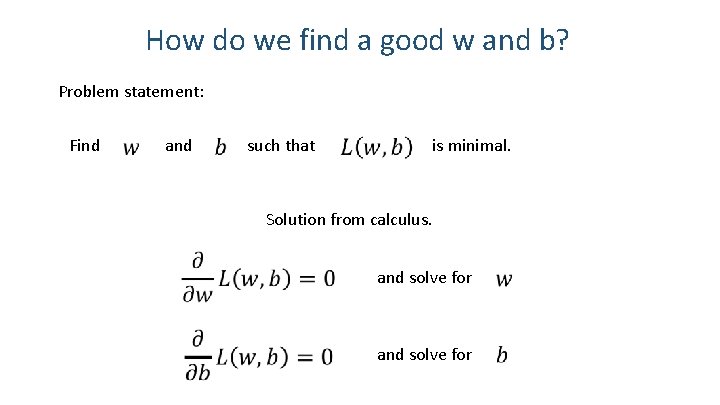

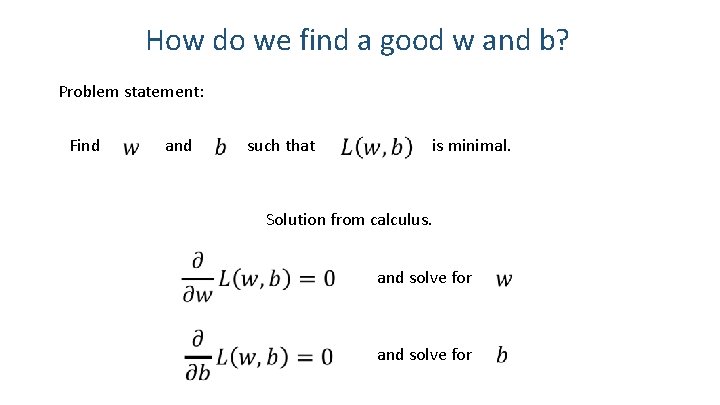

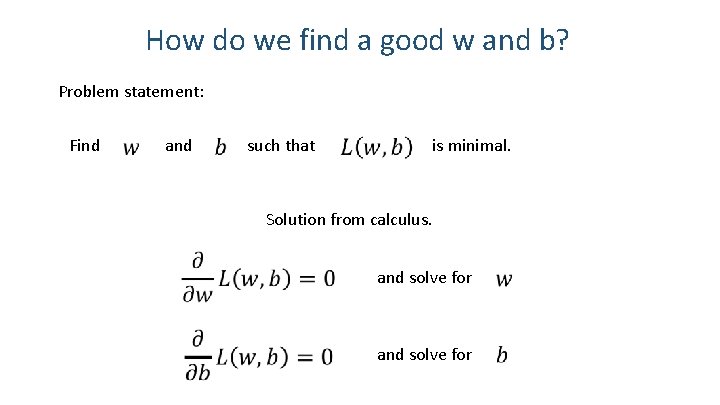

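How do we find a good w and b? Problem statement: find $w$ and $b$ such that $L(w, b)$ is minimal. Solution from calculus: set $\frac{\partial L}{\partial w} = 0$ and $\frac{\partial L}{\partial b} = 0$, and solve for $w$ and $b$.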

Source: https://courses.lumenlearning.com/businesscalc1/chapter/reading-curve-sketching/
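A small worked instance of the calculus approach, using a hypothetical loss chosen only because its derivatives are easy to solve (it is not the softmax loss), $L(w, b) = (w - 2)^2 + (b + 1)^2$:

$$
\frac{\partial L}{\partial w} = 2(w - 2) = 0 \;\Rightarrow\; w = 2,
\qquad
\frac{\partial L}{\partial b} = 2(b + 1) = 0 \;\Rightarrow\; b = -1.
$$

For the softmax loss, the equations obtained by setting the derivatives to zero have no closed-form solution like this, which motivates the iterative approach that follows.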


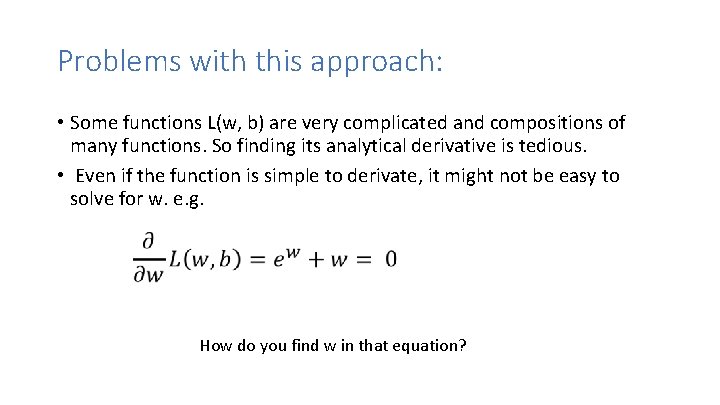

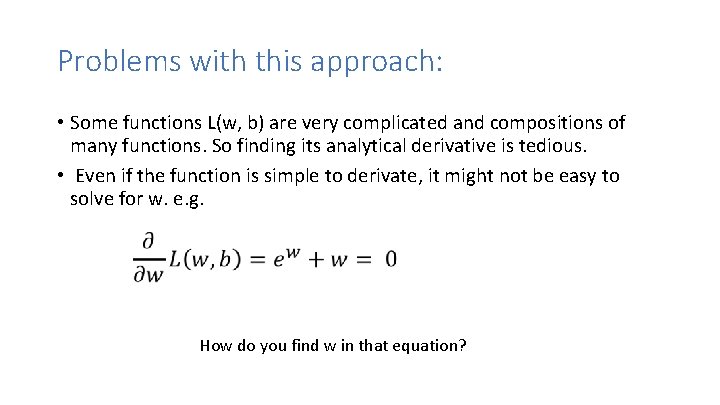

Problems with this approach:
• Some functions L(w, b) are very complicated compositions of many functions, so finding their analytical derivatives is tedious.
• Even if the function is simple to differentiate, it might not be easy to solve for w; e.g., how do you find w in that equation?

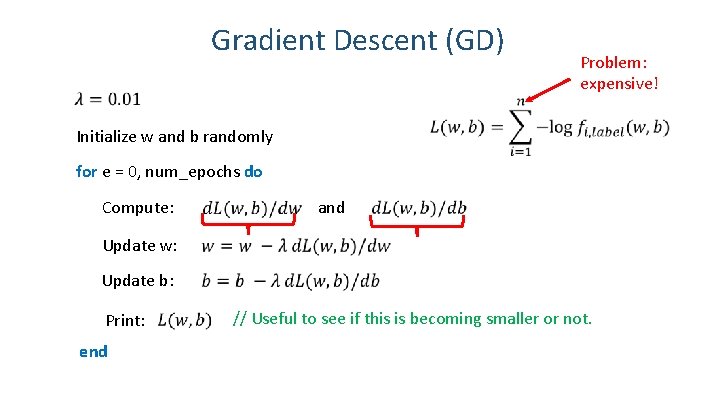

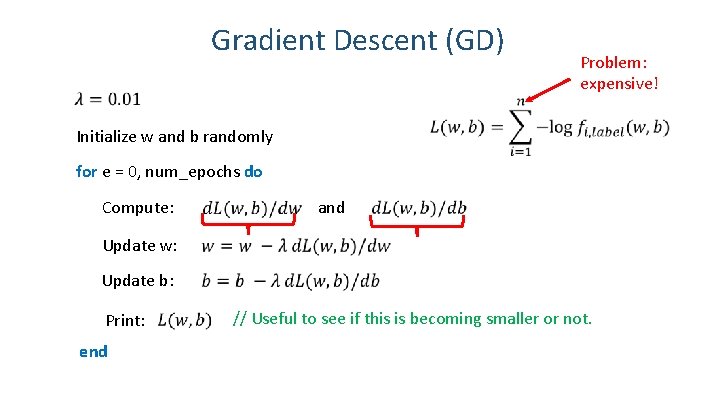

Solution: Iterative Approach: Gradient Descent (GD)
1. Start with a random value of w (e.g., w = 12).
2. Compute the gradient (derivative) of L(w) at the point w = 12 (e.g., dL/dw = 6).
3. Recompute w as: w = w - lambda * (dL/dw).
[figure: L(w) with the current point at w = 12]

Solution: Iterative Approach: Gradient Descent (GD)
2. Compute the gradient (derivative) of L(w) at the point w = 12 (e.g., dL/dw = 6).
3. Recompute w as: w = w - lambda * (dL/dw).
[figure: after the update, w = 10; steps 2 and 3 are then repeated from the new point]
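A sketch of the 1-D example, assuming the hypothetical loss $L(w) = (w - 9)^2$, picked because its derivative $2(w - 9)$ equals 6 at $w = 12$. The slide's move from w = 12 to w = 10 with dL/dw = 6 implies a step size lambda = 1/3:

```python
def gradient_descent_1d(dL_dw, w0, lam, steps):
    """Repeat w = w - lam * dL/dw, returning every visited value of w."""
    history = [w0]
    w = w0
    for _ in range(steps):
        w = w - lam * dL_dw(w)
        history.append(w)
    return history


def dL_dw(w):
    """Derivative of the hypothetical loss L(w) = (w - 9)^2."""
    return 2.0 * (w - 9.0)


# lam = 1/3 reproduces the slide's first step: 12 - (1/3) * 6 = 10.
history = gradient_descent_1d(dL_dw, w0=12.0, lam=1.0 / 3.0, steps=20)
print(history[:4])  # starts at 12.0, then 10.0, and keeps approaching the minimum at w = 9
```

Too large a lambda would overshoot the minimum (here, lambda > 1 diverges), while too small a lambda makes progress slow.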

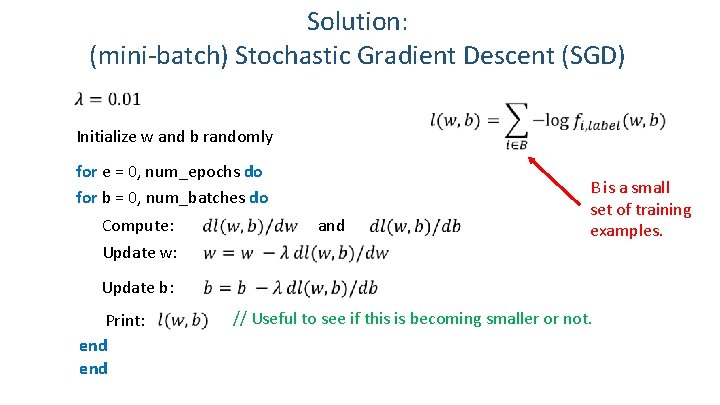

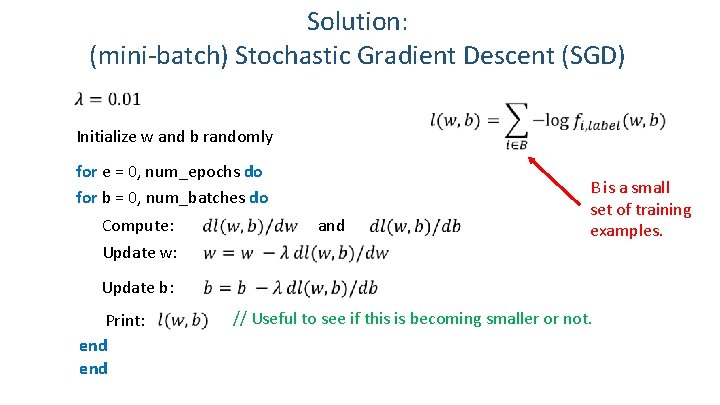

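Gradient Descent (GD)
Initialize w and b randomly
for e = 0, num_epochs do
  Compute: $\partial L(w, b)/\partial w$ and $\partial L(w, b)/\partial b$
  Update w: $w = w - \lambda \, \partial L(w, b)/\partial w$
  Update b: $b = b - \lambda \, \partial L(w, b)/\partial b$
  Print: $L(w, b)$ // Useful to see if this is becoming smaller or not.
end
Problem: expensive! Every single update requires the gradient of L over all n training examples.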
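A minimal NumPy sketch of the loop above for the softmax classifier, assuming one-hot targets and the standard gradient $\partial L/\partial g = \hat{y} - y$ for softmax with the negative log-likelihood loss; the data, learning rate, and epoch count are hypothetical choices:

```python
import numpy as np


def softmax_rows(g):
    e = np.exp(g - g.max(axis=1, keepdims=True))  # row-wise, numerically stable
    return e / e.sum(axis=1, keepdims=True)


def loss_and_grads(w, b, X, Y):
    """L(w, b) = sum_i -log y_hat_i[correct class] and its gradients.
    X: (n, d) inputs, Y: (n, C) one-hot targets, w: (C, d), b: (C,)."""
    P = softmax_rows(X @ w.T + b)          # (n, C) predicted probabilities
    loss = -np.sum(Y * np.log(P))
    dG = P - Y                             # dL/dg for every example
    return loss, dG.T @ X, dG.sum(axis=0)  # dL/dw is (C, d), dL/db is (C,)


def gradient_descent(X, Y, lam=0.001, num_epochs=200, seed=0):
    rng = np.random.default_rng(seed)
    w = 0.01 * rng.normal(size=(Y.shape[1], X.shape[1]))  # initialize w and b randomly
    b = 0.01 * rng.normal(size=Y.shape[1])
    losses = []
    for e in range(num_epochs):
        loss, dw, db = loss_and_grads(w, b, X, Y)  # uses ALL n examples: the expensive part
        w = w - lam * dw
        b = b - lam * db
        losses.append(loss)                        # "Print": track whether L(w, b) shrinks
    return w, b, losses


# Hypothetical toy data: 3 classes around different centers, one-hot targets.
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=150)
X = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])[labels] + rng.normal(size=(150, 2))
Y = np.eye(3)[labels]
w, b, losses = gradient_descent(X, Y)
print(losses[0], losses[-1])
```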

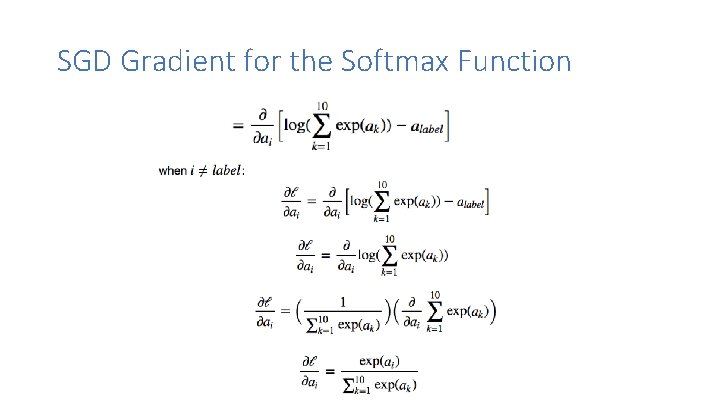

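Solution: (mini-batch) Stochastic Gradient Descent (SGD)
Initialize w and b randomly
for e = 0, num_epochs do
  for k = 0, num_batches do
    Compute: $\partial L_B(w, b)/\partial w$ and $\partial L_B(w, b)/\partial b$
    Update w: $w = w - \lambda \, \partial L_B(w, b)/\partial w$
    Update b: $b = b - \lambda \, \partial L_B(w, b)/\partial b$
    Print: $L_B(w, b)$ // Useful to see if this is becoming smaller or not.
  end
end
where $L_B(w, b) = \sum_{i \in B} -\log \hat{y}_{i,\text{label}_i}(w, b)$ and B is a small set of training examples (a mini-batch).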
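A sketch of mini-batch SGD for the same softmax loss, assuming a batch size of 16 and a reshuffle of the training set every epoch (a common choice, not stated on the slide); each update now touches only the examples in B:

```python
import numpy as np


def loss_and_grads(w, b, X, Y):
    """Softmax loss summed over the rows of X (one-hot targets Y) and its gradients."""
    G = X @ w.T + b
    P = np.exp(G - G.max(axis=1, keepdims=True))
    P /= P.sum(axis=1, keepdims=True)
    dG = P - Y
    return -np.sum(Y * np.log(P)), dG.T @ X, dG.sum(axis=0)


def sgd(X, Y, lam=0.01, num_epochs=20, batch_size=16, seed=0):
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    w = 0.01 * rng.normal(size=(Y.shape[1], X.shape[1]))  # initialize w and b randomly
    b = 0.01 * rng.normal(size=Y.shape[1])
    epoch_losses = []
    for e in range(num_epochs):
        order = rng.permutation(n)                # reshuffle every epoch (assumed here)
        for start in range(0, n, batch_size):
            B = order[start:start + batch_size]   # indices of one mini-batch
            _, dw, db = loss_and_grads(w, b, X[B], Y[B])
            w = w - lam * dw
            b = b - lam * db
        epoch_losses.append(loss_and_grads(w, b, X, Y)[0])  # full loss, for monitoring only
    return w, b, epoch_losses


# Hypothetical toy data: 3 classes around different centers.
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=150)
X = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])[labels] + rng.normal(size=(150, 2))
Y = np.eye(3)[labels]
w, b, epoch_losses = sgd(X, Y)
print(epoch_losses[0], epoch_losses[-1])
```

Computing the full loss once per epoch is only for monitoring; the updates themselves use mini-batches, which is what makes SGD cheaper per step than full-batch GD.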

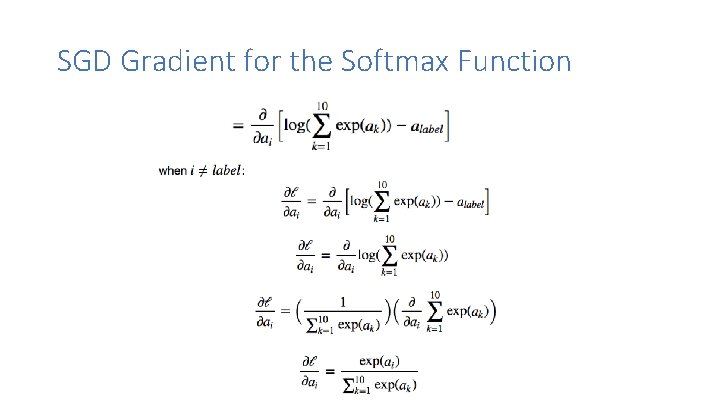

Source: Andrew Ng

Three more things
• How to compute the gradient
• Regularization
• Momentum updates

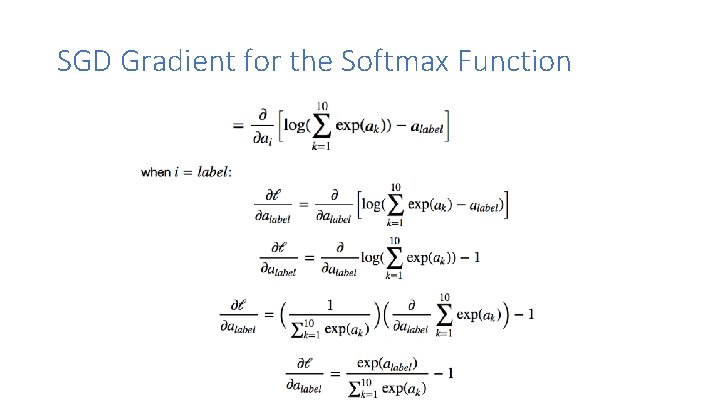

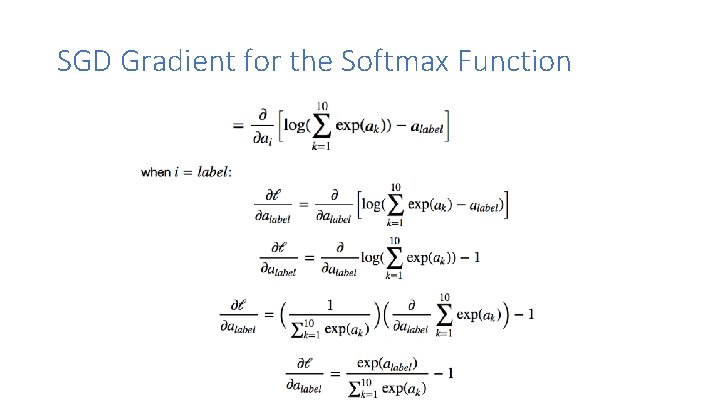

SGD Gradient for the Softmax Function
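A sketch of the gradient for one example, assuming scores $g = w x + b$ and the per-example loss $-\log \hat{y}_{\text{label}}$ (a standard result, not reproduced from the slide): $\partial L/\partial g_c = \hat{y}_c - y_c$, so $\partial L/\partial w = (\hat{y} - y)\,x^\top$ and $\partial L/\partial b = \hat{y} - y$. The code checks the analytic gradient against central finite differences, a common way to catch derivation bugs; the random parameters are hypothetical:

```python
import numpy as np


def loss(w, b, x, y):
    """-log of the softmax probability of the correct class; y is a one-hot vector."""
    g = w @ x + b
    log_probs = g - g.max() - np.log(np.sum(np.exp(g - g.max())))
    return -np.dot(y, log_probs)


def analytic_grads(w, b, x, y):
    g = w @ x + b
    p = np.exp(g - g.max())
    p /= p.sum()
    return np.outer(p - y, x), p - y  # dL/dw = (y_hat - y) x^T, dL/db = y_hat - y


def numeric_grad_w(w, b, x, y, eps=1e-6):
    """Central finite differences, one entry of w at a time."""
    grad = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        w_plus, w_minus = w.copy(), w.copy()
        w_plus[idx] += eps
        w_minus[idx] -= eps
        grad[idx] = (loss(w_plus, b, x, y) - loss(w_minus, b, x, y)) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
w, b = rng.normal(size=(3, 4)), rng.normal(size=3)
x, y = rng.normal(size=4), np.array([1.0, 0.0, 0.0])
dw, db = analytic_grads(w, b, x, y)
print(np.max(np.abs(dw - numeric_grad_w(w, b, x, y))))  # should be close to 0
```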


Supervised Learning – Softmax Classifier: extract features, run the features through the classifier, get predictions

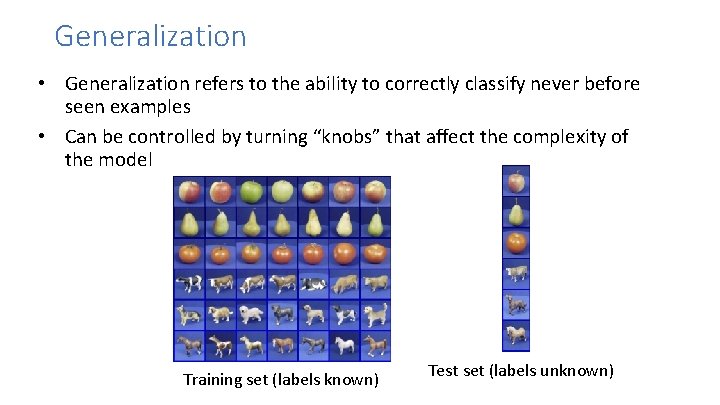

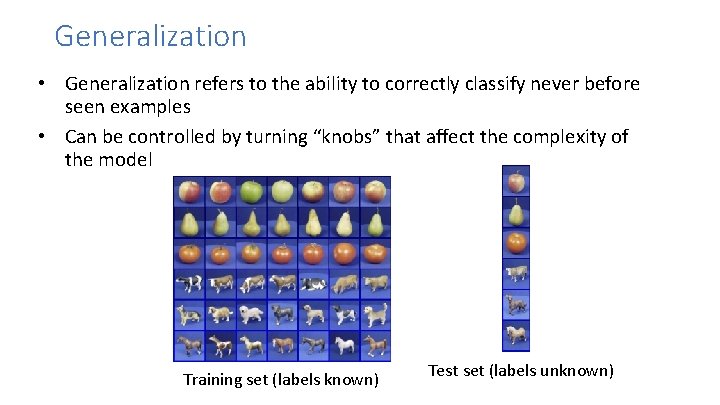

Supervised Machine Learning Steps [figure: Training: training images → image features → training (with training labels) → learned model. Testing: test image → image features → learned model → prediction] Slide credit: D. Hoiem
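A toy sketch of the training/testing pipeline in the diagram. The feature extractor (mean color per channel) and the learner (nearest class centroid, swapped in for brevity instead of the softmax classifier) are hypothetical stand-ins; the point is that the test image goes through the same feature extractor as the training images:

```python
import numpy as np


def extract_features(image):
    """Hypothetical global feature: mean intensity of each color channel (H x W x 3 -> 3)."""
    return image.reshape(-1, image.shape[-1]).mean(axis=0)


def train(train_images, train_labels):
    """Stand-in learner (nearest class centroid): store each class's mean feature vector."""
    feats = np.array([extract_features(im) for im in train_images])
    labels = np.array(train_labels)
    return {c: feats[labels == c].mean(axis=0) for c in np.unique(labels)}


def predict(model, test_image):
    """Testing: same feature extractor, then the learned model produces a prediction."""
    f = extract_features(test_image)
    return min(model, key=lambda c: np.linalg.norm(f - model[c]))


# Hypothetical 8x8 RGB "images": reddish vs bluish.
rng = np.random.default_rng(0)
red = [np.clip(rng.normal([0.8, 0.2, 0.2], 0.05, size=(8, 8, 3)), 0, 1) for _ in range(5)]
blue = [np.clip(rng.normal([0.2, 0.2, 0.8], 0.05, size=(8, 8, 3)), 0, 1) for _ in range(5)]
model = train(red + blue, ["red"] * 5 + ["blue"] * 5)
print(predict(model, np.full((8, 8, 3), [0.7, 0.3, 0.2])))
```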

Generalization
• Generalization refers to the ability to correctly classify never-before-seen examples.
• It can be controlled by turning "knobs" that affect the complexity of the model.
[figure: Training set (labels known); Test set (labels unknown)]

Overfitting vs. Underfitting
• Underfitting: high bias
• Overfitting: high variance
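A sketch of the complexity "knob", assuming a 1-D regression toy problem (noisy samples of a sine curve) and polynomial degree as the knob; this swaps in curve fitting for classification only because it is easy to visualize and check. Degree 1 tends to underfit (high bias, high training error); degree 9 passes through all 10 training points (near-zero training error) and tends to overfit (high variance):

```python
import numpy as np


def true_f(x):
    """Hypothetical underlying function the data come from."""
    return np.sin(2 * np.pi * x)


rng = np.random.default_rng(0)
x_train = np.linspace(0, 1, 10)
x_test = np.linspace(0.05, 0.95, 10)
y_train = true_f(x_train) + rng.normal(scale=0.2, size=10)
y_test = true_f(x_test) + rng.normal(scale=0.2, size=10)


def mse(coeffs, x, y):
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


results = {}
for degree in (1, 3, 9):  # the "knob" controlling model complexity
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(degree, results[degree])
```

Training error alone keeps falling as the degree grows; only the held-out error reveals whether the model generalizes.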

Questions?