Perceptive Context for Pervasive Computing Trevor Darrell Vision

- Slides: 36

Perceptive Context for Pervasive Computing
Trevor Darrell
Vision Interface Group, MIT AI Lab

MIT Project Oxygen
• A multi-laboratory effort at MIT to develop pervasive, human-centric computing
• Enabling people “to do more by doing less,” that is, to accomplish more with less work
• Bringing abundant computation and communication, as pervasive as free air, naturally into people’s lives

Human-centered Interfaces
• Free users from desktop and wired interfaces
• Allow natural gesture and speech commands
• Give computers awareness of users
• Work in open and noisy environments
  - Outdoors -- PDA next to construction site!
  - Indoors -- crowded meeting room
• Vision’s role: provide perceptive context

Perceptive Context
• Who is there? (presence, identity)
• What is going on? (activity)
• Where are they? (individual location)
• Which person said that? (audiovisual grouping)
• What are they looking / pointing at? (pose, gaze)

Vision Interface Group Projects
• Person identification at a distance from multiple cameras and multiple cues (face, gait)
• Tracking multiple people in indoor environments with large illumination variation and sparse stereo cues
• Vision-guided microphone array
• Joint statistical models for audiovisual fusion
• Face pose estimation: rigid motion estimation with long-term drift reduction


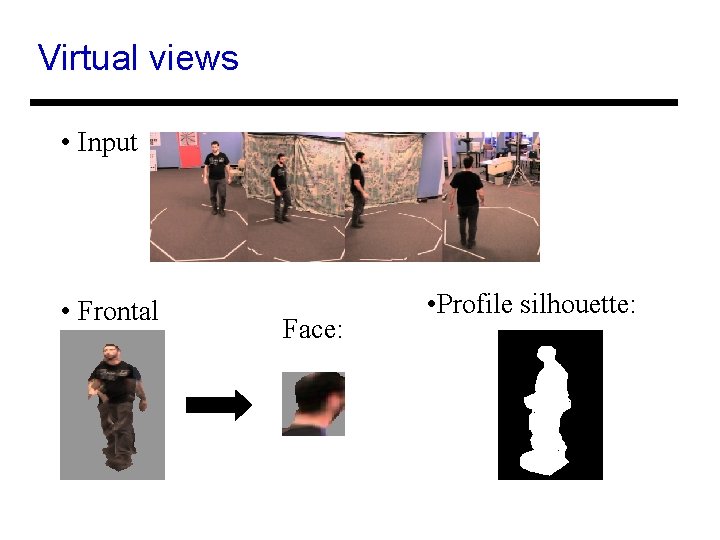

Person Identification at a distance
• Multiple cameras
• Face and gait cues
• Approach: canonical frame for each modality by placing the virtual camera at a desired viewpoint
  - Face: frontal view, fixed scale
  - Gait: profile silhouette
• Need to place virtual camera
  - explicit model estimation
  - search
  - motion-based heuristic trajectory
• We combine trajectory estimate and limited search

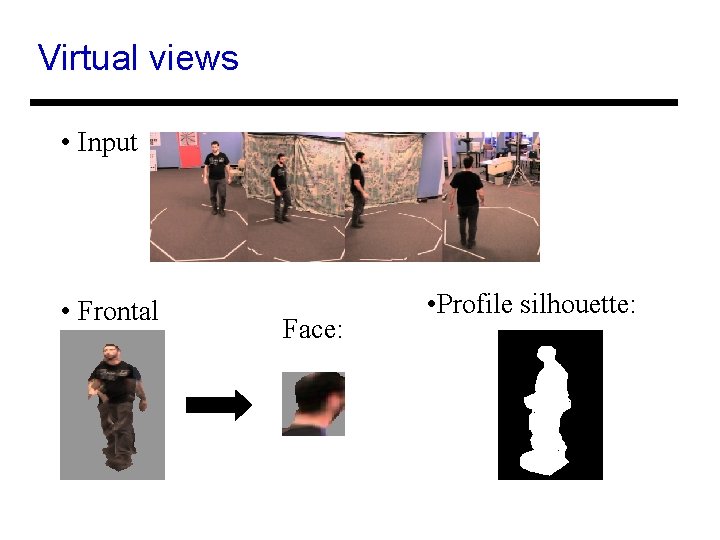

Virtual views • Input • Frontal Face: • Profile silhouette:

Examples: VH-generated views • Faces: • Gait:

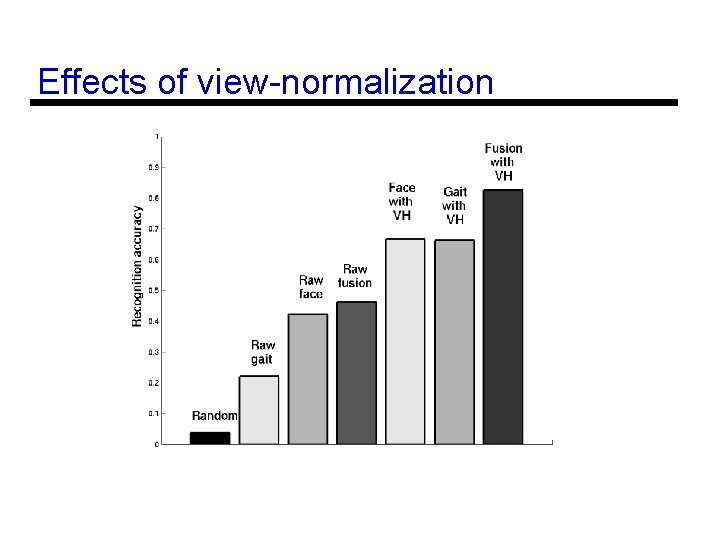

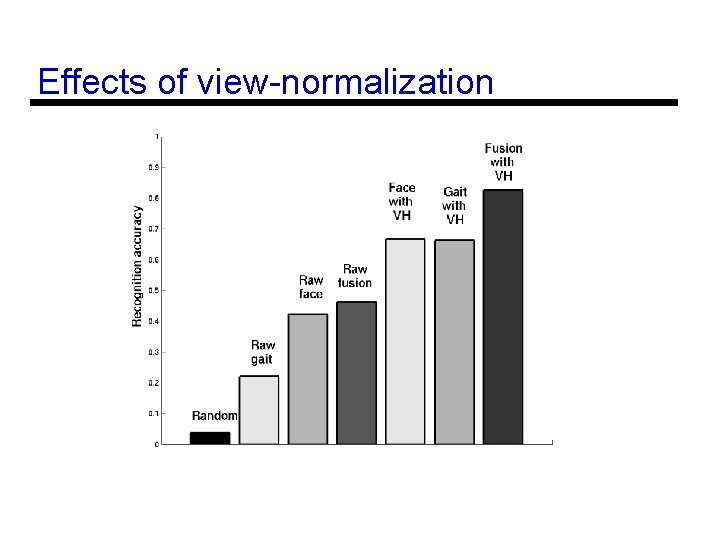

Effects of view-normalization


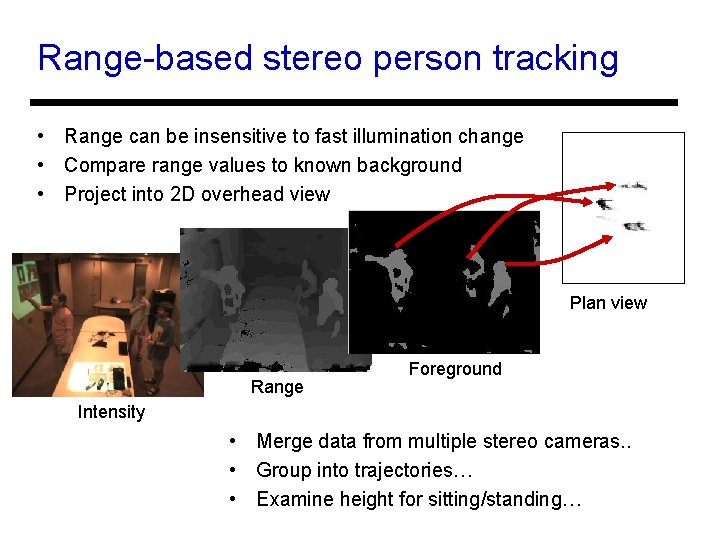

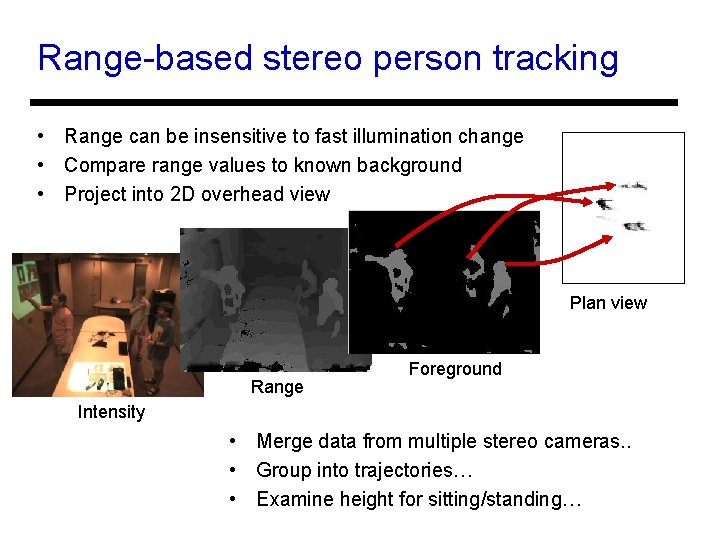

Range-based stereo person tracking
• Range can be insensitive to fast illumination change
• Compare range values to known background
• Project into 2D overhead view
• Merge data from multiple stereo cameras…
• Group into trajectories…
• Examine height for sitting/standing…
Figure labels: Plan view; Range; Foreground; Intensity
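The first three steps on this slide (compare range to a known background, keep the foreground, project it into an overhead view) can be sketched as follows. This is a minimal illustration and not the group's implementation: the function names, the 0.15 m tolerance, the grid extent and the Y-up coordinate convention are all assumptions made for the example.

```python
import numpy as np

def range_foreground(depth, background, tol=0.15):
    """Mask pixels with valid range that are closer than the background by more than tol (metres, assumed)."""
    valid = np.isfinite(depth) & np.isfinite(background) & (depth > 0)
    return valid & (background - depth > tol)

def plan_view(points_xyz, mask, cell=0.1, extent=(-2.0, 2.0, 0.0, 4.0)):
    """Accumulate foreground 3-D points onto a ground-plane grid.

    points_xyz: (H, W, 3) array of X, Y, Z per pixel, with Y pointing up (assumed).
    Returns (occupancy counts, maximum height) per grid cell.
    """
    x_min, x_max, z_min, z_max = extent
    nx = int(round((x_max - x_min) / cell))
    nz = int(round((z_max - z_min) / cell))
    pts = points_xyz[mask]
    ix = np.floor((pts[:, 0] - x_min) / cell).astype(int)
    iz = np.floor((pts[:, 2] - z_min) / cell).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iz >= 0) & (iz < nz)
    ix, iz, y = ix[keep], iz[keep], pts[keep, 1]
    occupancy = np.zeros((nz, nx), dtype=int)
    height = np.zeros((nz, nx))
    np.add.at(occupancy, (iz, ix), 1)    # count points falling in each cell
    np.maximum.at(height, (iz, ix), y)   # tallest point seen in each cell
    return occupancy, height
```

Plan-view maps from several stereo cameras could be summed once the cameras share a world frame, and the per-cell height map is the kind of cue a sitting/standing test might threshold.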

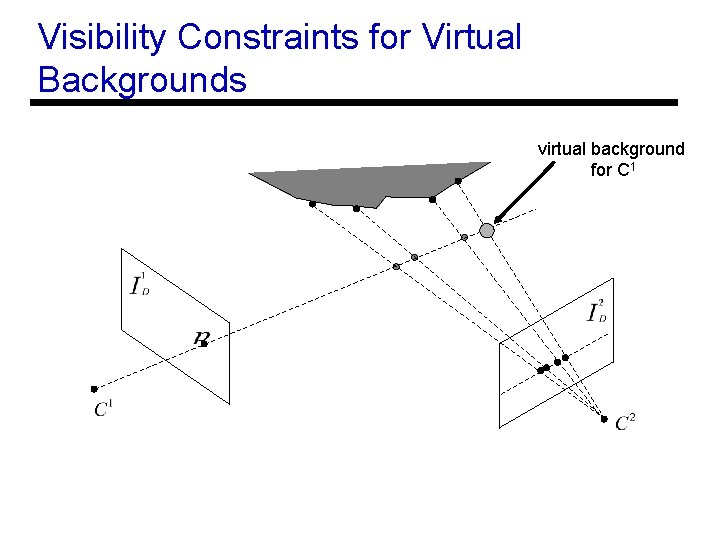

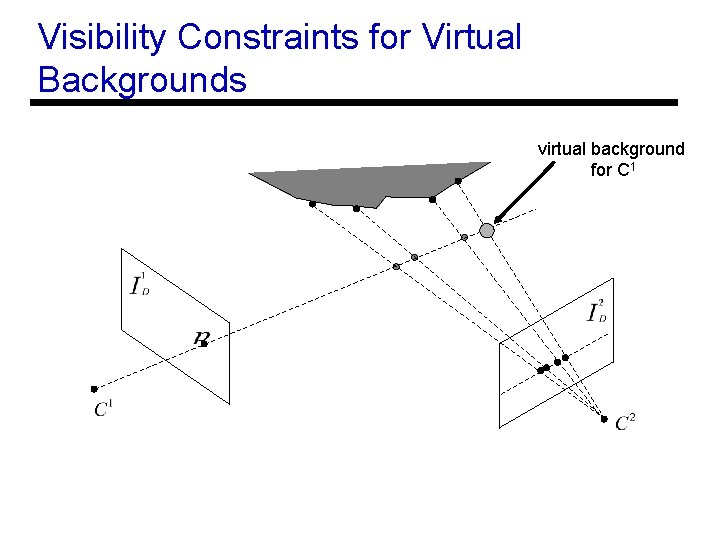

Visibility Constraints for Virtual Backgrounds
Figure label: virtual background for C1

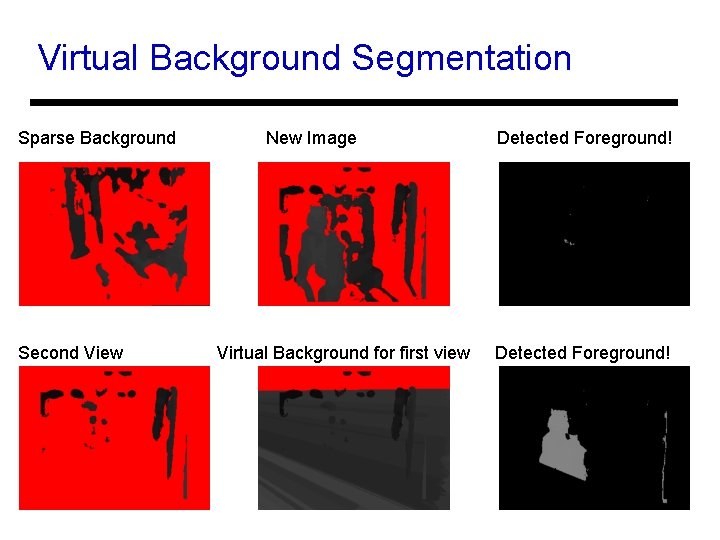

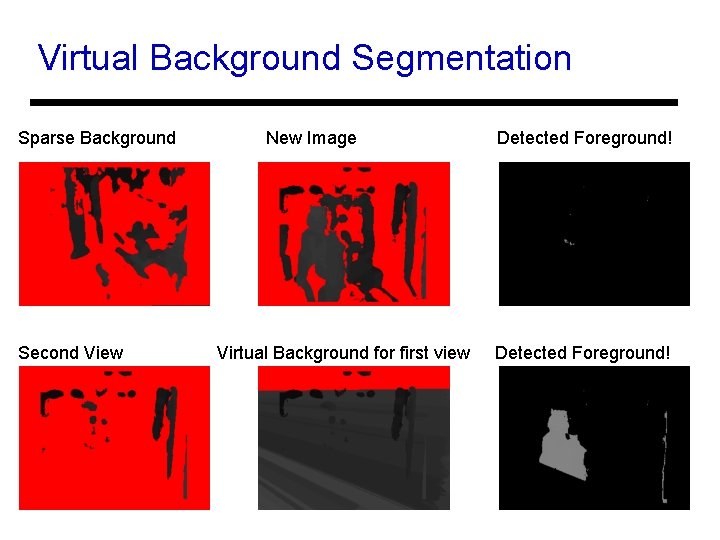

Virtual Background Segmentation
Figure labels: Sparse Background; Second View; New Image; Virtual Background for first view; Detected Foreground!

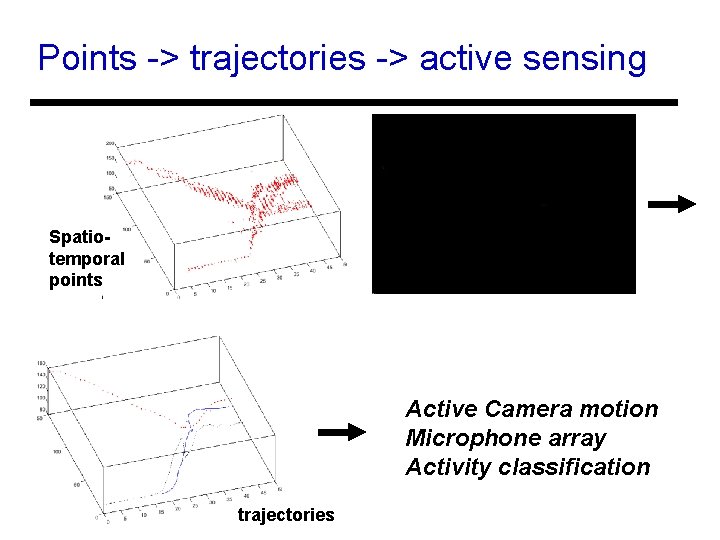

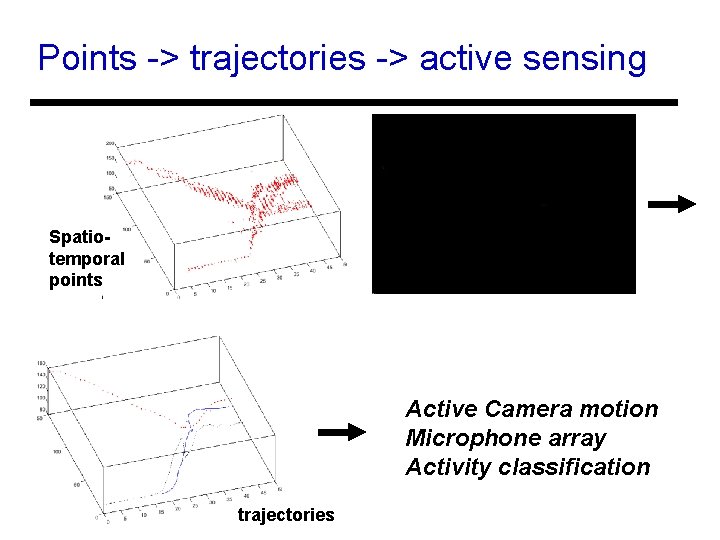

Points -> trajectories -> active sensing
Diagram labels: Spatiotemporal points; Active Camera motion; Microphone array; Activity classification; trajectories


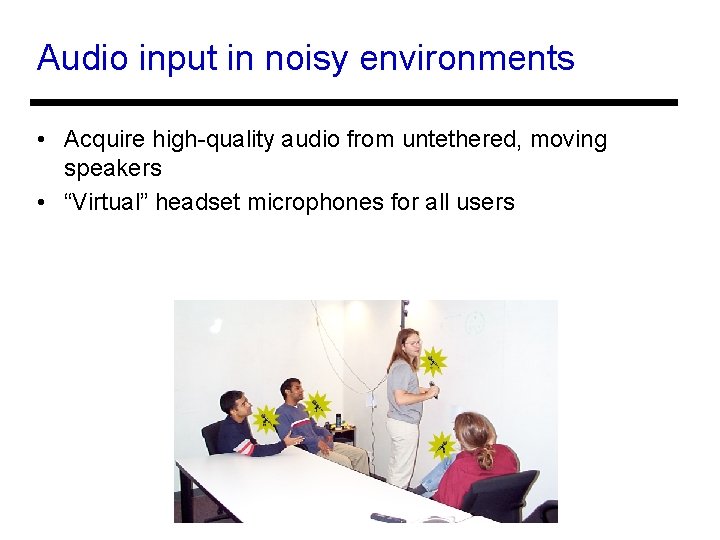

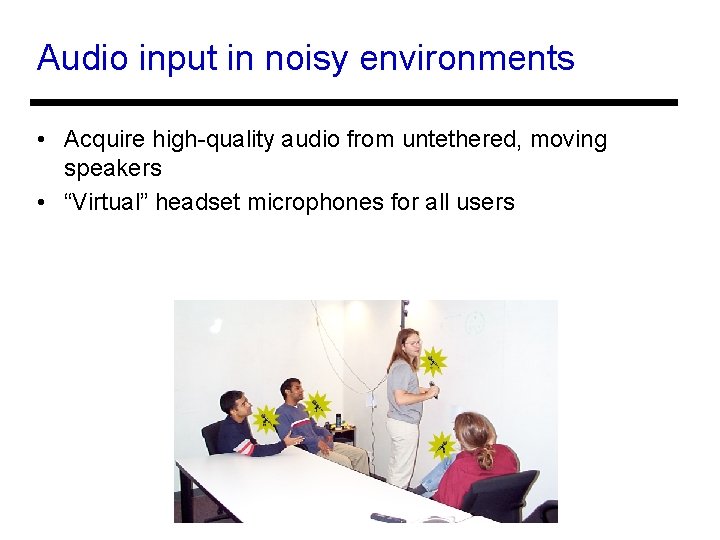

Audio input in noisy environments
• Acquire high-quality audio from untethered, moving speakers
• “Virtual” headset microphones for all users

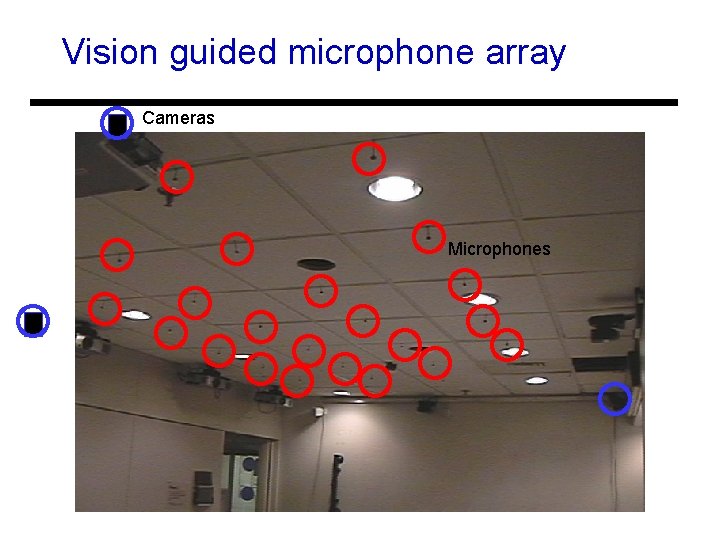

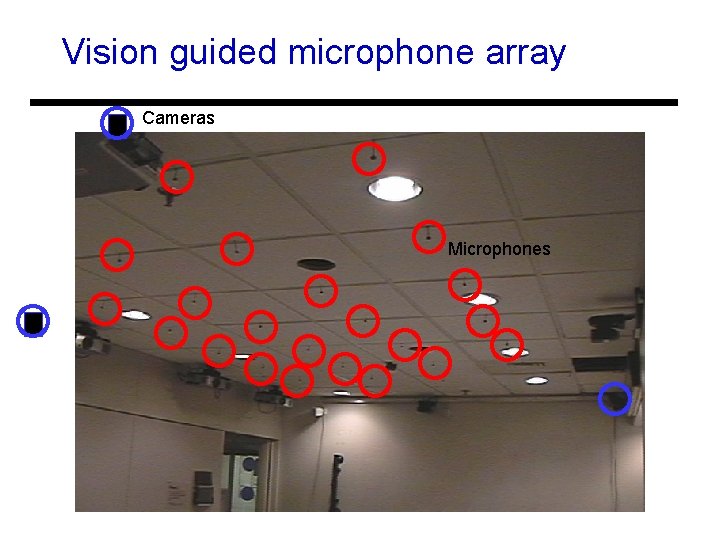

Vision-guided microphone array
Figure labels: Cameras; Microphones

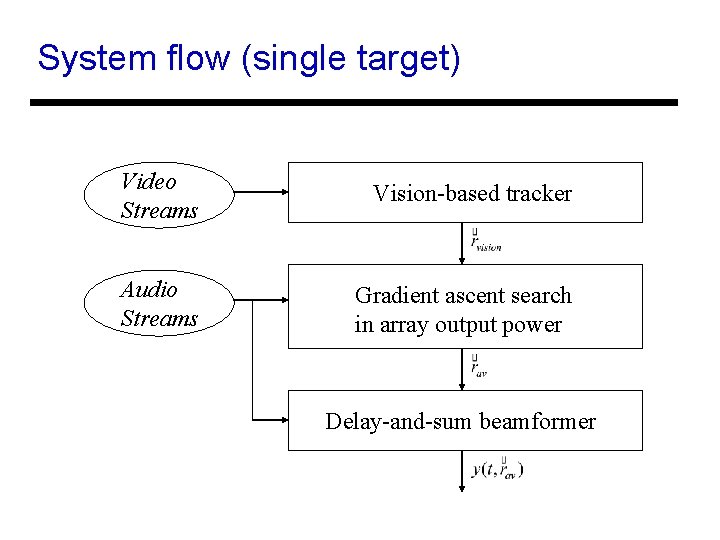

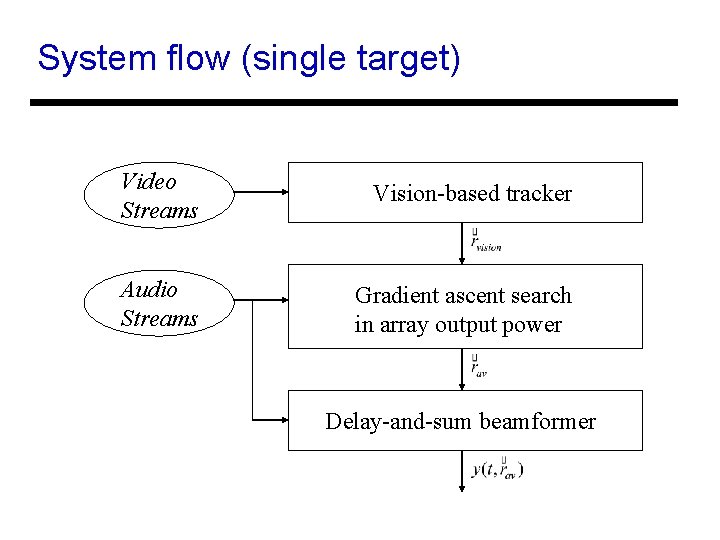

System flow (single target)
Diagram blocks: Video Streams; Vision-based tracker; Audio Streams; Gradient ascent search in array output power; Delay-and-sum beamformer
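A delay-and-sum beamformer steered by a tracked position can be sketched as below. This is an illustration under stated assumptions, not the system on the slide: the function names, the 343 m/s speed of sound, and the integer-sample delays are choices made for the example. Because integer delays make output power piecewise constant, `refine_target` uses a greedy local search as a stand-in for the gradient ascent named on the slide.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value

def steering_delays(mic_positions, target, fs):
    """Integer sample delays that time-align a source at `target` across microphones."""
    dist = np.linalg.norm(mic_positions - target, axis=1)
    # Delay closer microphones more, so every channel lines up with the farthest one.
    return np.round((dist.max() - dist) / SPEED_OF_SOUND * fs).astype(int)

def delay_and_sum(signals, delays):
    """Shift each channel by its delay and average. signals: (n_mics, n_samples)."""
    n_mics, n = signals.shape
    out = np.zeros(n)
    for channel, d in zip(signals, delays):
        out[d:] += channel[:n - d]
    return out / n_mics

def output_power(signals, mic_positions, target, fs):
    """Mean power of the beamformer output when steered at `target`."""
    y = delay_and_sum(signals, steering_delays(mic_positions, target, fs))
    return float(np.mean(y ** 2))

def refine_target(signals, mic_positions, start, fs, step=0.05, iters=20):
    """Greedy local search on output power, starting from the vision estimate."""
    best = np.asarray(start, dtype=float)
    best_power = output_power(signals, mic_positions, best, fs)
    for _ in range(iters):
        improved = False
        for axis in range(best.size):
            for sign in (-1.0, 1.0):
                candidate = best.copy()
                candidate[axis] += sign * step
                power = output_power(signals, mic_positions, candidate, fs)
                if power > best_power:
                    best, best_power, improved = candidate, power, True
        if not improved:
            break
    return best, best_power
```

In use, the vision tracker's position estimate would be passed as `start`, and the refined position would then steer `delay_and_sum` for the final audio output.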


Audio-visual Analysis
• Multi-modal approach to source separation
• Exploit joint statistics of image and audio signal
• Use non-parametric density estimation
• Audio-based image localization
• Image-based audio localization
• A/V Verification: is this audio and video from the same person?
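One simple way to exploit joint audio-visual statistics is to estimate the mutual information between a per-frame audio feature and a per-frame video feature. The sketch below uses a joint histogram as the non-parametric density estimate. It is only an illustration: the 1-D features, the bin count and the function names are assumptions, and the slides do not say which estimator or features the group used.

```python
import numpy as np

def mutual_information(a, v, bins=8):
    """Plug-in mutual information (bits) between two 1-D feature sequences via a joint histogram."""
    joint, _, _ = np.histogram2d(a, v, bins=bins)
    p = joint / joint.sum()
    pa = p.sum(axis=1, keepdims=True)   # marginal of the audio feature
    pv = p.sum(axis=0, keepdims=True)   # marginal of the video feature
    nz = p > 0                          # skip empty cells to avoid log(0)
    return float(np.sum(p[nz] * np.log2(p[nz] / (pa @ pv)[nz])))

def best_matching_video(audio_feat, video_feats, bins=8):
    """Return the index of the video feature sequence sharing the most information with the audio."""
    scores = [mutual_information(audio_feat, v, bins) for v in video_feats]
    return int(np.argmax(scores)), scores
```

For A/V verification, `audio_feat` might be frame-level audio energy and each entry of `video_feats` the motion energy in one detected face region; the face with the highest score is the likeliest speaker.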

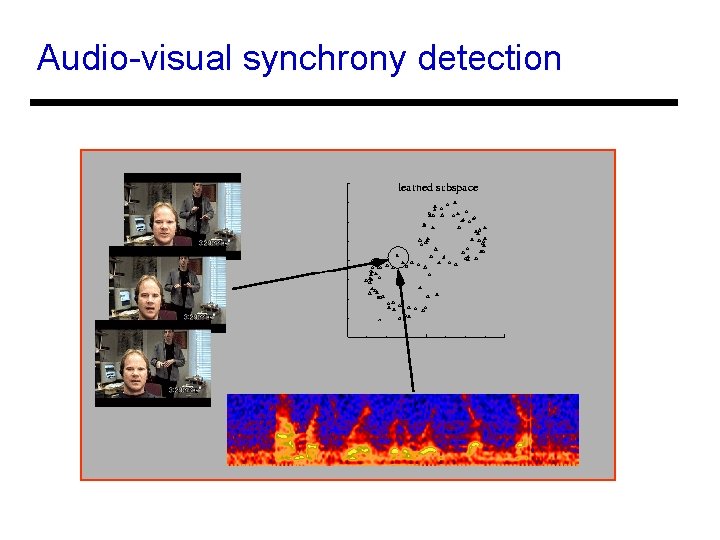

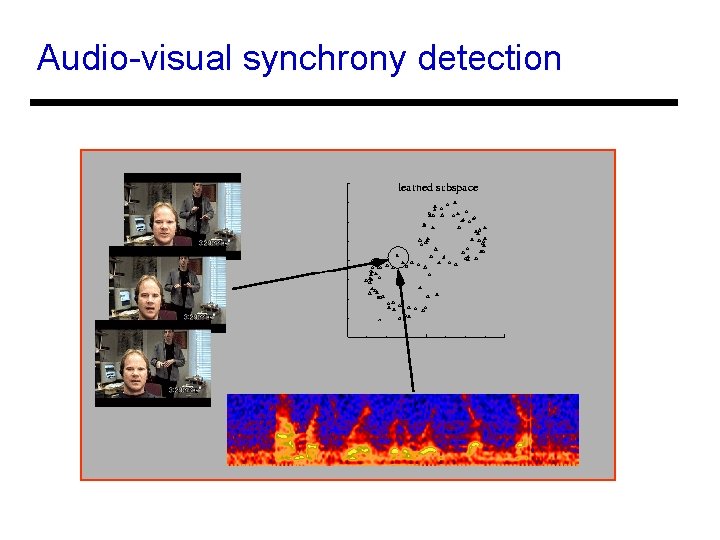

Audio-visual synchrony detection

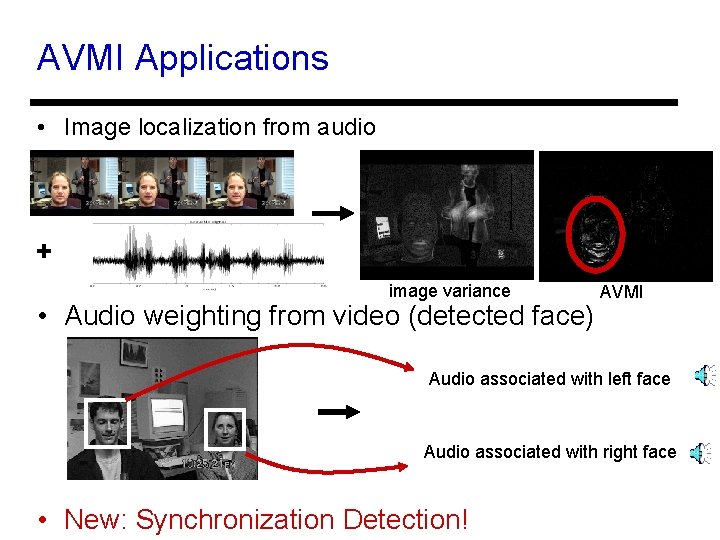

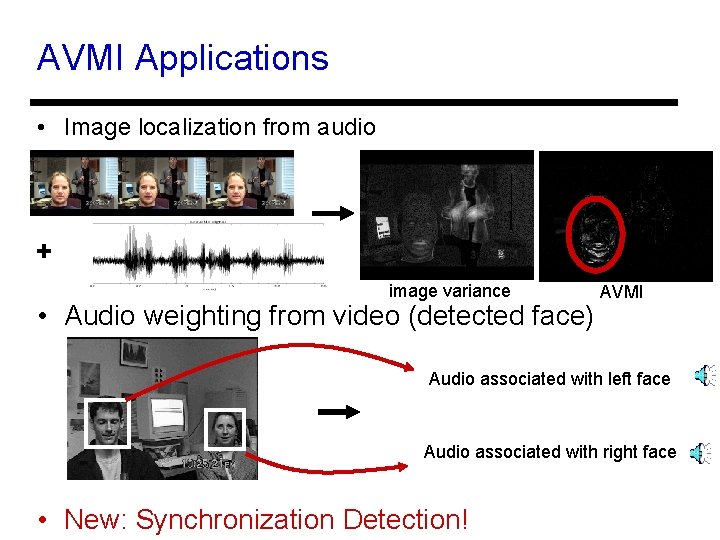

AVMI Applications
• Image localization from audio + image variance
• Audio weighting from video (detected face)
• New: Synchronization Detection!
Figure labels: AVMI; Audio associated with left face; Audio associated with right face

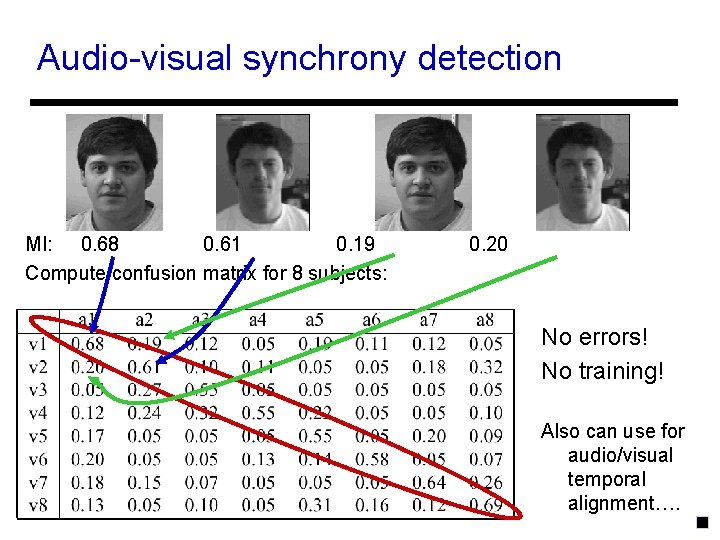

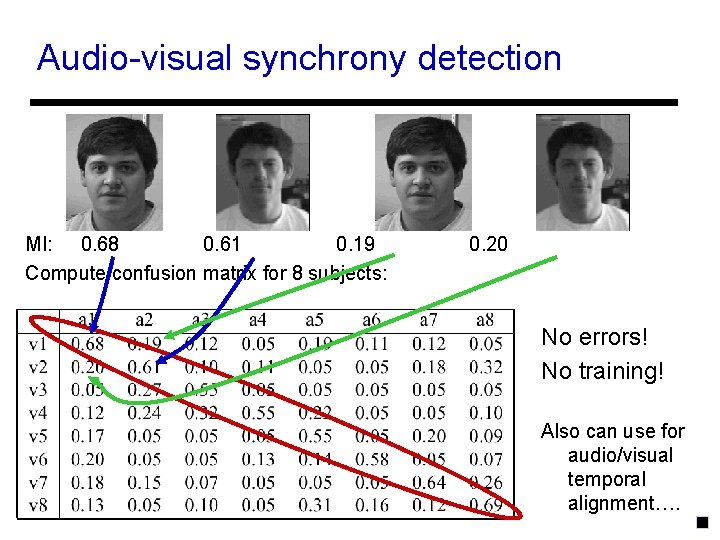

Audio-visual synchrony detection
• MI: 0.68, 0.61, 0.19, 0.20
• Compute confusion matrix for 8 subjects: No errors! No training!
• Also can use for audio/visual temporal alignment…


Face pose estimation • rigid motion estimation with long-term drift reduction

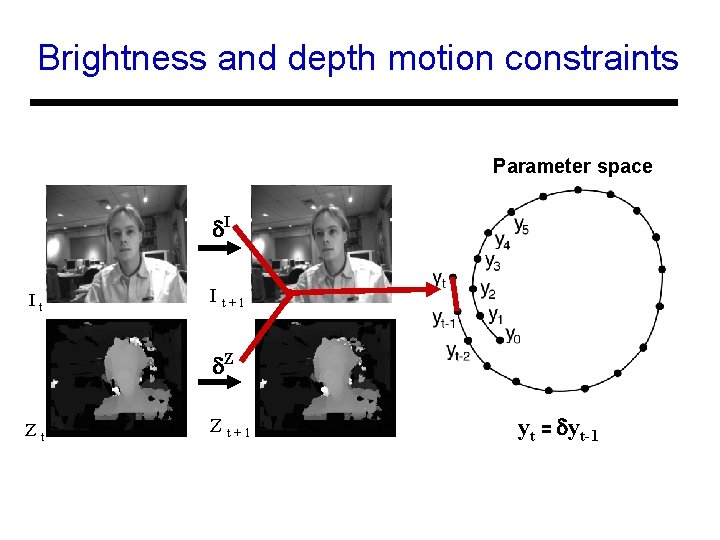

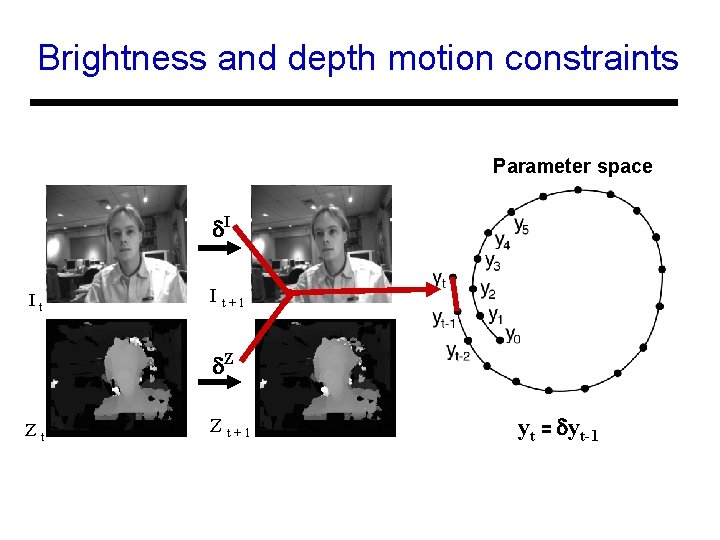

Brightness and depth motion constraints
Figure labels: I: I_t, I_{t+1}; Z: Z_t, Z_{t+1}; Parameter space: y_t = y_{t-1}
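For reference, the linearized brightness and depth constancy constraints usually written for this kind of intensity-plus-range tracking take the form below. The slide does not give the equations, so the symbols are assumptions: subscripts $x$, $y$, $t$ denote partial derivatives of the intensity image $I$ and depth image $Z$, $(v_x, v_y)$ is the image-plane velocity of a pixel, and $V_z$ is its velocity in depth.

```latex
\begin{align}
I_x v_x + I_y v_y + I_t &= 0 \\
Z_x v_x + Z_y v_y + Z_t &= V_z
\end{align}
```

Each pixel contributes both equations, and expressing $(v_x, v_y, V_z)$ through a rigid-motion model turns them into a linear system in the pose parameters.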

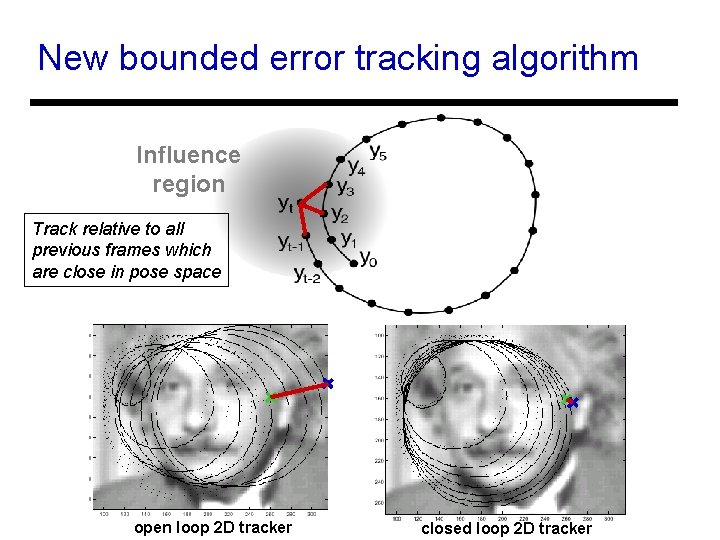

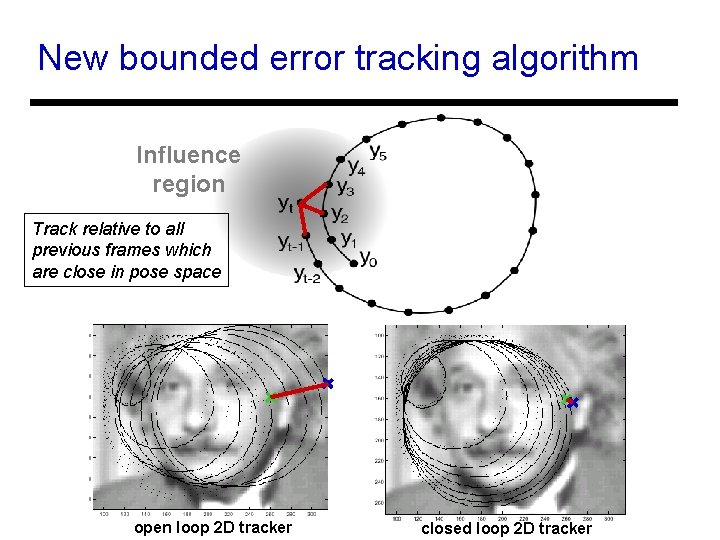

New bounded error tracking algorithm
• Track relative to all previous frames which are close in pose space
Figure labels: Influence region; open loop 2D tracker; closed loop 2D tracker
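Tracking against earlier frames that are close in pose space needs a way to choose those frames. The helper below is a hypothetical sketch of that selection step only. The Euclidean distance over pose parameters, the `radius` standing in for the influence region, and the cap on the number of frames are assumptions, not details from the slide.

```python
import numpy as np

def nearby_base_frames(current_pose, stored_poses, radius, max_frames=3):
    """Indices of stored frames whose pose lies within `radius` of the current pose, closest first."""
    stored = np.asarray(stored_poses, dtype=float)
    if stored.size == 0:
        return []
    dist = np.linalg.norm(stored - np.asarray(current_pose, dtype=float), axis=1)
    order = np.argsort(dist)
    return [int(i) for i in order if dist[i] <= radius][:max_frames]
```

Registering the current frame against several such base frames, rather than only the previous frame, is what would keep accumulated drift bounded when the head returns to a pose it has visited before.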

Closed-loop 3D tracker
Track user's head gaze for hands-free pointing…

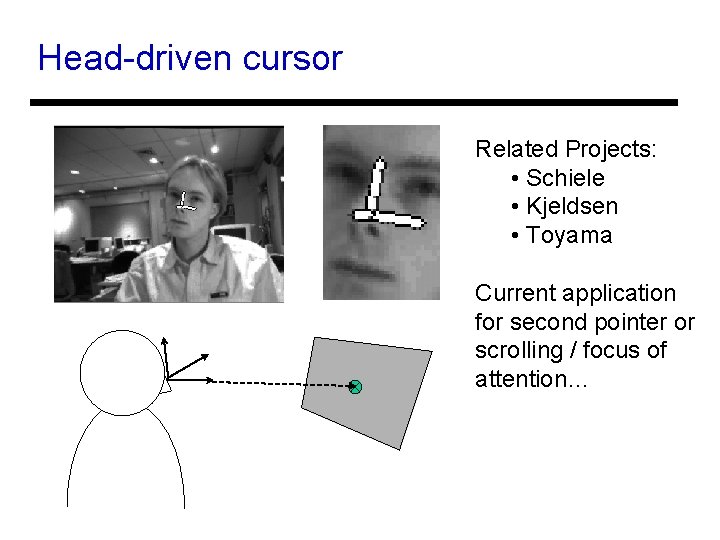

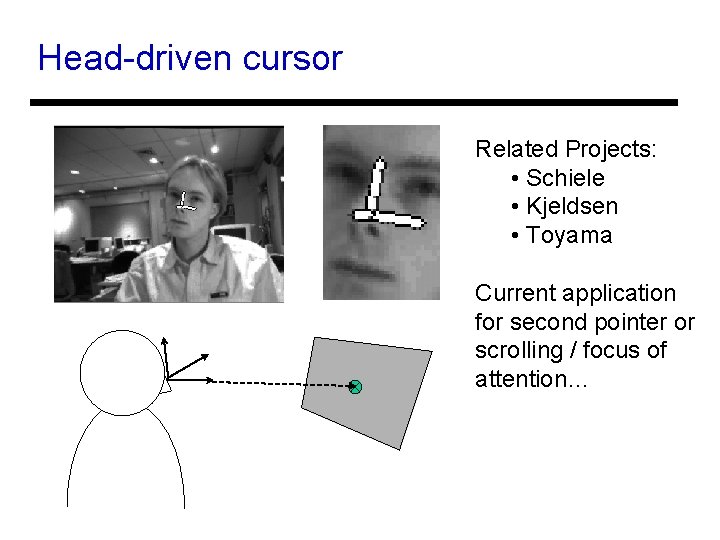

Head-driven cursor
Related Projects:
• Schiele
• Kjeldsen
• Toyama
Current application for second pointer or scrolling / focus of attention…

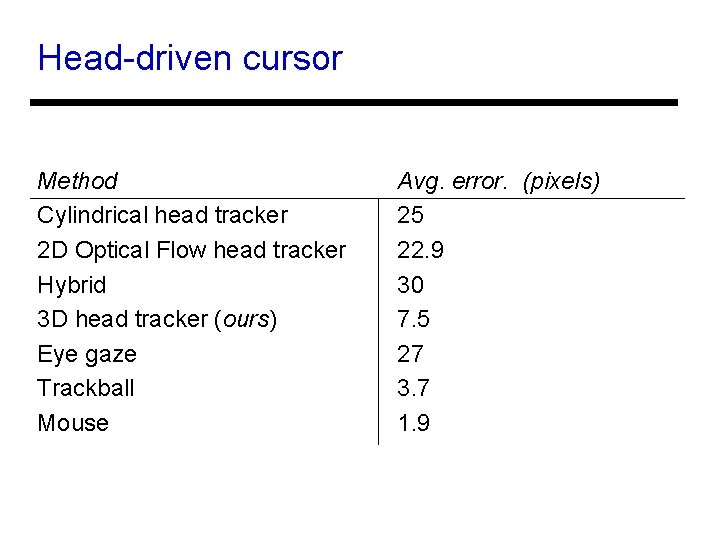

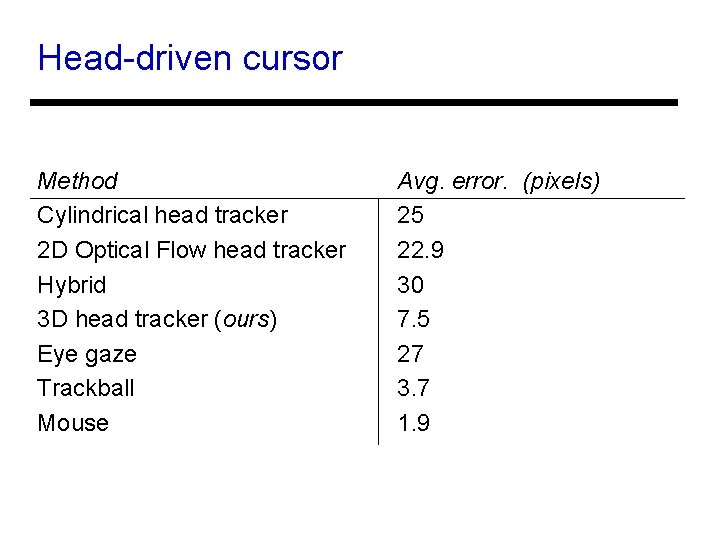

Head-driven cursor
Method: Cylindrical head tracker; 2D Optical Flow head tracker; Hybrid 3D head tracker (ours); Eye gaze; Trackball; Mouse
Avg. error (pixels): 25, 22.9, 30, 7.5, 27, 3.7, 1.9

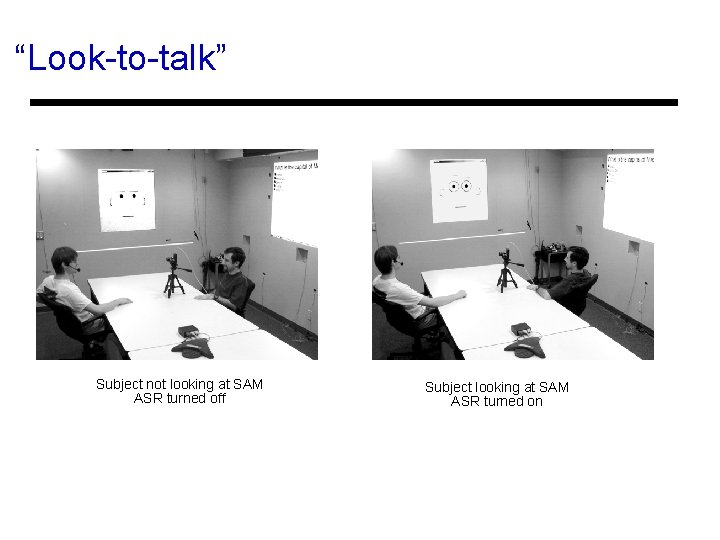

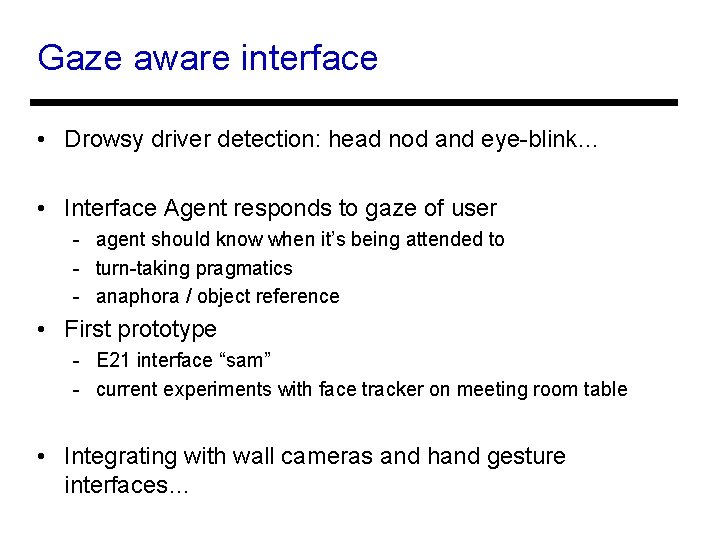

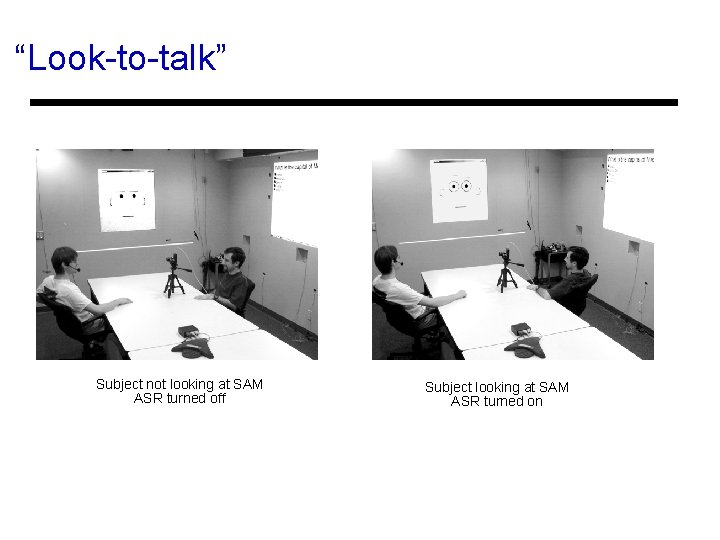

Gaze aware interface
• Drowsy driver detection: head nod and eye-blink…
• Interface Agent responds to gaze of user
  - agent should know when it’s being attended to
  - turn-taking pragmatics
  - anaphora / object reference
• First prototype
  - E21 interface “sam”
  - current experiments with face tracker on meeting room table
• Integrating with wall cameras and hand gesture interfaces…

“Look-to-talk”
• Subject not looking at SAM: ASR turned off
• Subject looking at SAM: ASR turned on

Vision Interface Group Projects
• Person identification at a distance from multiple cameras and multiple cues (face, gait)
• Tracking multiple people in indoor environments with large illumination variation and sparse stereo cues
• Vision-guided microphone array
• Joint statistical models for audiovisual fusion
• Face pose estimation: rigid motion estimation with long-term drift reduction
• Conclusion and contact info.

Conclusion: Perceptual Context
Take-home message: vision provides Perceptual Context to make applications aware of users…
So far: detection, ID, head pose, audio enhancement and synchrony verification…
Soon:
• gaze -- add eye tracking on pose-stabilized face
• pointing -- arm gestures for selection and navigation
• activity -- adapting outdoor activity classification [Grimson and Stauffer] to indoor domain…

Contact
Prof. Trevor Darrell
www.ai.mit.edu/projects/vip
• Person identification at a distance from multiple cameras and multiple cues (face, gait) - Greg Shakhnarovich
• Tracking multiple people in indoor environments with large illumination variation and sparse stereo cues - Neal Checka, Leonid Taycher, David Demirdjian
• Vision-guided microphone array - Kevin Wilson
• Joint statistical models for audiovisual fusion - John Fisher
• Face pose estimation: rigid motion estimation with long-term drift reduction - Louis Morency, Alice Oh, Kristen Grauman