Parallelization Strategies Laxmikant Kale

- Slides: 26


Overview • OpenMP Strategies • Need for adaptive strategies – Object migration based dynamic load balancing – Minimal modification strategies • Thread based techniques: ROCFLO, ... • Some future plans

OpenMP • Motivation: – Shared memory model often easy to program – Incremental optimization possible

ROCFLO via OpenMP • Parallelization of ROCFLO using a loop-parallel paradigm via OpenMP – Poor speedup compared with MPI version – Was locality the culprit? • Study conducted by Jay Hoeflinger – In collaboration with Fady Najjar

ROCFLO with MPI

The Methodology • Do OpenMP/MPI comparison experiments • Write an OpenMP version of ROCFLO – Start with the MPI version of ROCFLO – Duplicate the structure of the MPI code exactly (including message passing calls) – This removes locality as a problem • Measure performance – If any parts do not scale well, determine why

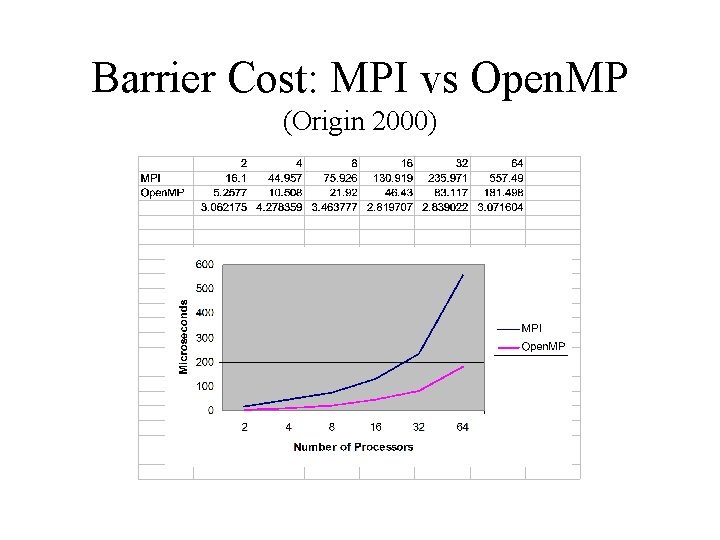

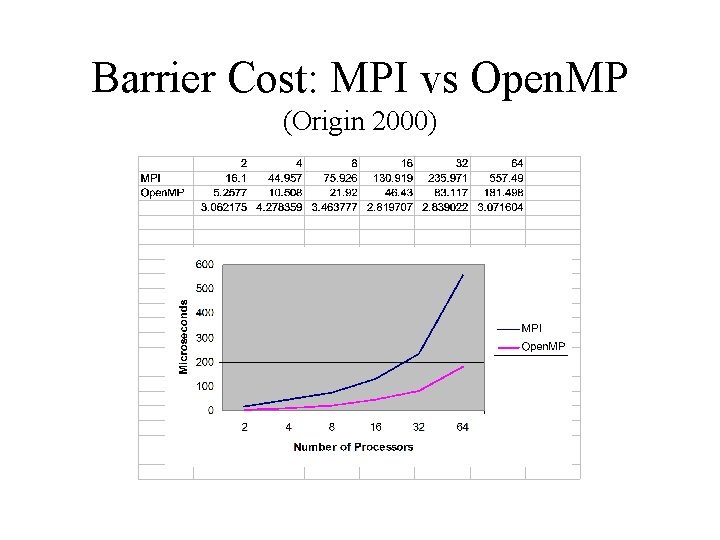

Barrier Cost: MPI vs OpenMP (Origin 2000)

So locality was not the whole problem! • The other problems turned out to be: – I/O, which doesn’t scale – ALLOCATE, which doesn’t scale – Our non-scaling reduction implementation – Our first-cut messaging infrastructure, which could be improved • Conclusion – An efficient loop-parallel version may be feasible, avoiding ALLOCATEs and using scalable I/O

Need for adaptive strategies • Computation structure changes over time: – Combustion • Adaptive techniques in application codes: – Adaptive refinement in structures or even fluid – Other codes such as crack propagation • Can affect the load balance dramatically – One can go from 90% efficiency to less than 25%
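To make the efficiency figures above concrete, here is a minimal sketch. It assumes efficiency is measured as average processor load divided by maximum processor load, a common definition that the slide does not state. The load values and the factor by which refinement increases one processor's work are made up for illustration.

```python
def parallel_efficiency(loads):
    """Average load divided by the maximum load (1.0 means perfectly balanced)."""
    return sum(loads) / (len(loads) * max(loads))


# Hypothetical per-processor work (arbitrary units) on 8 processors.
balanced = [9, 9, 9, 10, 9, 9, 9, 8]

# Assumption: adaptive refinement makes the work on one processor 7x heavier.
refined = balanced.copy()
refined[3] = 70

print(f"before refinement: {parallel_efficiency(balanced):.2f}")
print(f"after refinement:  {parallel_efficiency(refined):.2f}")
```

With these made-up numbers the efficiency falls from 0.90 to about 0.24: a single overloaded processor is enough to make every other processor wait.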

Multi-partition decompositions • Idea: decompose the problem into a number of partitions, – independent of the number of processors – # Partitions > # Processors • The system maps partitions to processors – The system should be able to map and re-map objects as needed
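The idea of a decomposition that is independent of the processor count can be sketched as follows. The function names `block_map` and `remap` are hypothetical and only illustrate the separation between "what the partitions are" and "where they currently live"; they are not part of any system named in these slides.

```python
def block_map(num_partitions, num_procs):
    """Initial map: contiguous runs of partitions go to the same processor."""
    return {p: p * num_procs // num_partitions for p in range(num_partitions)}


def remap(mapping, partition, new_proc):
    """Return a new map with one partition moved; the decomposition is unchanged."""
    updated = dict(mapping)
    updated[partition] = new_proc
    return updated


# The same 16 partitions can be placed on 4 processors or on 3.
mapping = block_map(16, 4)
mapping_on_3 = block_map(16, 3)

# Moving partition 15 to processor 0 changes only the map, not the partitions.
moved = remap(mapping, 15, 0)
print(mapping[15], "->", moved[15])
```

Because the application only ever sees partitions, changing the map (or the number of processors) requires no change to the decomposition itself.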

Load Balancing Framework • Aimed at handling... – Continuous (slow) load variation – Abrupt load variation (refinement) – Workstation clusters in multi-user mode • Measurement based – Exploits temporal persistence of computation and communication structures – Very accurate (compared with estimation) – Instrumentation possible via Charm++/Converse
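A measurement-based balancer can be sketched as below. This is an illustration under assumptions, not the framework's actual strategy: it assumes the load each object showed in the last phase predicts its load in the next one (temporal persistence), and it uses a simple greedy heuristic (heaviest object first, onto the least-loaded processor). The object names and load values are made up, and communication cost is ignored.

```python
import heapq


def greedy_rebalance(measured_load, num_procs):
    """Assign objects heaviest-first, each to the currently least-loaded processor."""
    heap = [(0.0, proc) for proc in range(num_procs)]
    heapq.heapify(heap)
    assignment = {}
    by_load = sorted(measured_load.items(), key=lambda kv: kv[1], reverse=True)
    for obj, load in by_load:
        total, proc = heapq.heappop(heap)   # least-loaded processor so far
        assignment[obj] = proc
        heapq.heappush(heap, (total + load, proc))
    return assignment


# Hypothetical loads measured during the previous phase (seconds per step).
measured = {"a": 8.0, "b": 1.0, "c": 1.0, "d": 1.0,
            "e": 1.0, "f": 2.0, "g": 2.0, "h": 4.0}

assignment = greedy_rebalance(measured, num_procs=2)

per_proc = [0.0, 0.0]
for obj, proc in assignment.items():
    per_proc[proc] += measured[obj]
print(per_proc)
```

The input to the balancer is measured data only; no analytical cost model of the application is needed, which is the point of the measurement-based approach.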

Charm++ • A parallel C++ library – Supports data driven objects – Many objects per processor, with method execution scheduled with availability of data – System supports automatic instrumentation and object migration – Works with other paradigms: MPI, OpenMP, ...

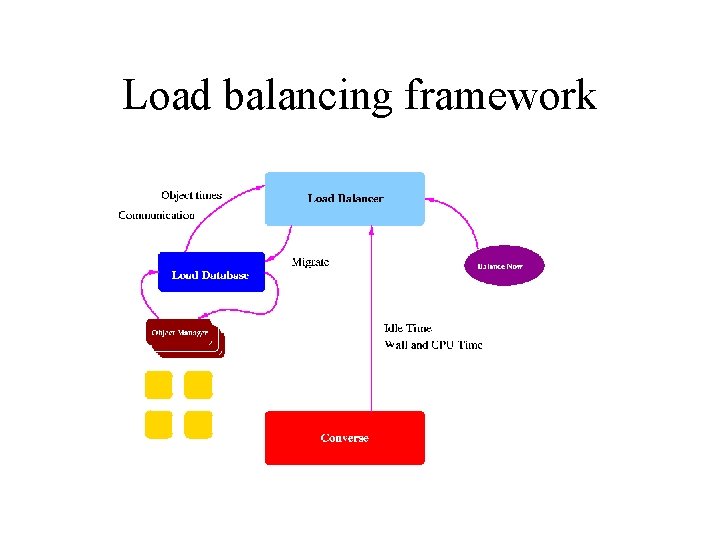

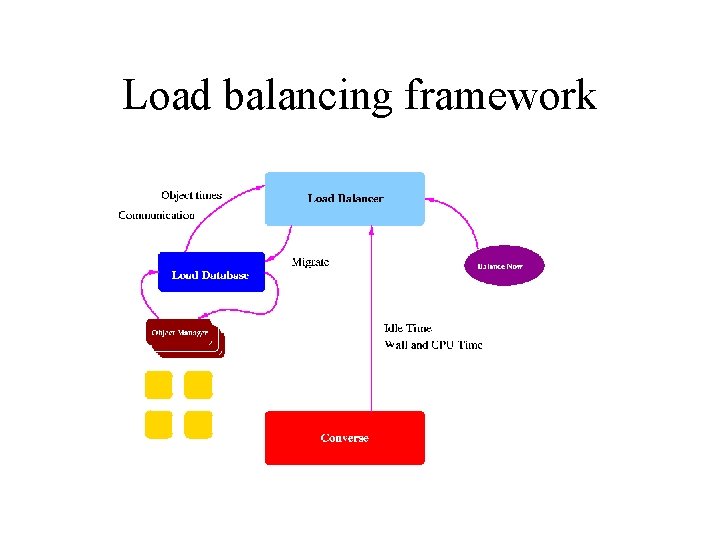

Load balancing framework

Load balancing demonstration • To test the abilities of the framework – A simple problem: Gauss-Jacobi iterations – Refine selected sub-domains • AppSpector: web-based tool – Submit parallel jobs – Monitor performance and application behavior – Interact with running jobs via GUI interfaces
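For readers unfamiliar with the test problem, here is a minimal sequential sketch of Jacobi iteration. It assumes a 1D Laplace problem with fixed boundary values, which is a simplification chosen for brevity; the slides do not specify the dimensionality or the equations used in the demonstration, and the refinement of sub-domains is not modeled.

```python
def jacobi_sweep(u):
    """One Jacobi sweep: each interior point becomes the average of its old neighbors."""
    new = u[:]
    for i in range(1, len(u) - 1):
        new[i] = 0.5 * (u[i - 1] + u[i + 1])
    return new


def jacobi_solve(u, tol=1e-8, max_iters=10_000):
    """Repeat sweeps until the largest pointwise change drops below tol."""
    for iteration in range(1, max_iters + 1):
        new = jacobi_sweep(u)
        change = max(abs(a - b) for a, b in zip(new, u))
        u = new
        if change < tol:
            return u, iteration
    return u, max_iters


# Boundary values 0 and 1 are held fixed; interior points start at 0.
solution, iterations = jacobi_solve([0.0, 0.0, 0.0, 0.0, 1.0])
print(solution, iterations)
```

Each sweep reads only old values, so sub-domains can be updated independently and exchange boundary values once per iteration. Refining a sub-domain increases its point count and therefore its work per sweep, which is what creates the imbalance the framework has to correct.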

Adaptivity with minimal modification • Current code base is parallel (MPI) – But doesn’t support adaptivity directly – Rewrite the code with objects? ... • Idea: support adaptivity with minimal changes to F90/MPI codes • Work by: – Milind Bhandarkar, Jay Hoeflinger, Eric de Sturler

Migratable threads approach • Change required: – Encapsulate global variables in modules • Dynamically allocatable • Intercept MPI calls – Implement them in a multithreaded layer • Run each original MPI process as a thread – User level thread • Migrate threads as needed by load balancing – Trickier problem than object migration
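The "intercept MPI calls and run each process as a user-level thread" idea can be illustrated with a toy model. This is only a sketch under strong assumptions: Python generators stand in for user-level threads, the tuples `("send", dest, payload)` and `("recv",)` are a hypothetical stand-in for intercepted MPI calls, and `rank_body` and `run` are made-up names. Thread migration itself is not shown.

```python
from collections import deque


def rank_body(rank, size):
    """A hypothetical 'MPI process': it yields requests instead of calling MPI."""
    right = (rank + 1) % size
    yield ("send", right, rank * 10)   # stands in for an intercepted send
    value = yield ("recv",)            # stands in for an intercepted receive
    return value


def run(size):
    """Run all ranks as cooperative threads inside one scheduler loop."""
    threads = {r: rank_body(r, size) for r in range(size)}
    mailbox = {r: deque() for r in range(size)}
    results = {}
    pending = {r: next(t) for r, t in threads.items()}  # first request of each rank
    while pending:
        progressed = False
        for r in list(pending):
            request = pending[r]
            try:
                if request[0] == "send":
                    _, dest, payload = request
                    mailbox[dest].append(payload)
                    pending[r] = threads[r].send(None)
                    progressed = True
                elif request[0] == "recv" and mailbox[r]:
                    pending[r] = threads[r].send(mailbox[r].popleft())
                    progressed = True
            except StopIteration as done:
                results[r] = done.value   # the rank finished
                del pending[r]
                progressed = True
        if not progressed:
            raise RuntimeError("all ranks are blocked in recv")
    return results


results = run(3)
print(results)
```

Here each rank sends `rank * 10` to its right neighbor in a ring and returns what it received. Because every rank's state lives inside its own thread object rather than in process-wide globals, the layer is free to decide where each thread runs, which is why the slide lists encapsulating global variables as the required change.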

Progress: • Test Fortran-90 - C++ interface • Encapsulation feasibility • Thread migration mechanics • ROCFLO study • Test code implementation • ROCFLO implementation

Another approach to adaptivity • Cleanly separate parallel and sequential code: – All parallel code in C++ – All application code in Fortran 90 sequential subroutines • Needs more restructuring of application codes – But is feasible, especially for new codes – Much easier to migrate – Improves modularity

Quantum espresso gpu installation

Quantum espresso gpu installation Working principle of draw frame machine

Working principle of draw frame machine 영국 beis

영국 beis Dr. urmila kulkarni-kale

Dr. urmila kulkarni-kale Kale chips benefits

Kale chips benefits Kale köşk han hamam anıt gibi yapı adları

Kale köşk han hamam anıt gibi yapı adları Kale heating and cooling

Kale heating and cooling Dr sachin kale

Dr sachin kale Abe kale

Abe kale Jak wygląda owsik w kale zdjęcia

Jak wygląda owsik w kale zdjęcia Zeynep üçok

Zeynep üçok Dr savur

Dr savur Radhabai kale mahila mahavidyalaya ahmednagar

Radhabai kale mahila mahavidyalaya ahmednagar Elegant coatings pvt ltd

Elegant coatings pvt ltd Sachin kale eye specialist

Sachin kale eye specialist Sonali kale

Sonali kale Dr. urmila kulkarni-kale

Dr. urmila kulkarni-kale Beyond kale and pedicures

Beyond kale and pedicures Dr sheetal kale

Dr sheetal kale Arti kale

Arti kale On delay and off delay timer symbol

On delay and off delay timer symbol Open hearts open hands

Open hearts open hands Overview manajemen keuangan

Overview manajemen keuangan Section 8-2 photosynthesis an overview answers

Section 8-2 photosynthesis an overview answers Overview figure

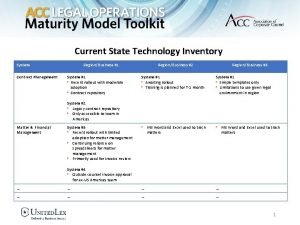

Overview figure Overview in research example

Overview in research example Overview of the current state of technology

Overview of the current state of technology