PARALLEL SOFTWARE

Parallel Programming Models

- Parallel programming models exist as an abstraction above hardware and memory architectures.
- There are several parallel programming models in common use:
  - Shared Memory (without threads)
  - Threads (pthread, Windows Threads, Java Threads, OpenMP, etc.)
  - Distributed Memory / Message Passing (MPI)

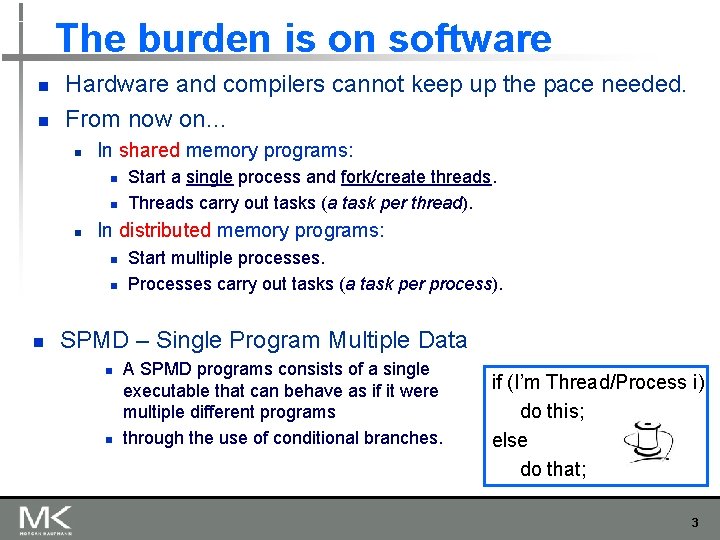

The burden is on software

- Hardware and compilers cannot keep up the pace needed.
- From now on…
  - In shared memory programs:
    - Start a single process and fork/create threads.
    - Threads carry out tasks (a task per thread).
  - In distributed memory programs:
    - Start multiple processes.
    - Processes carry out tasks (a task per process).
- SPMD: Single Program Multiple Data
  - An SPMD program consists of a single executable that can behave as if it were multiple different programs through the use of conditional branches.
  - if (I'm thread/process i) do this; else do that;

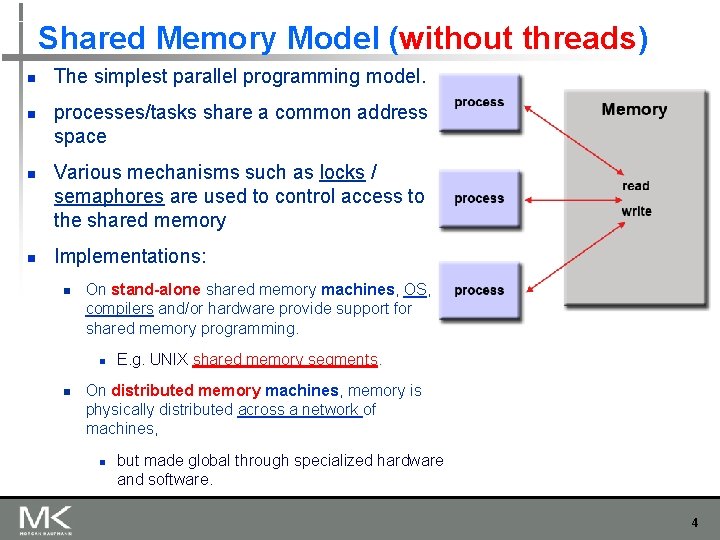

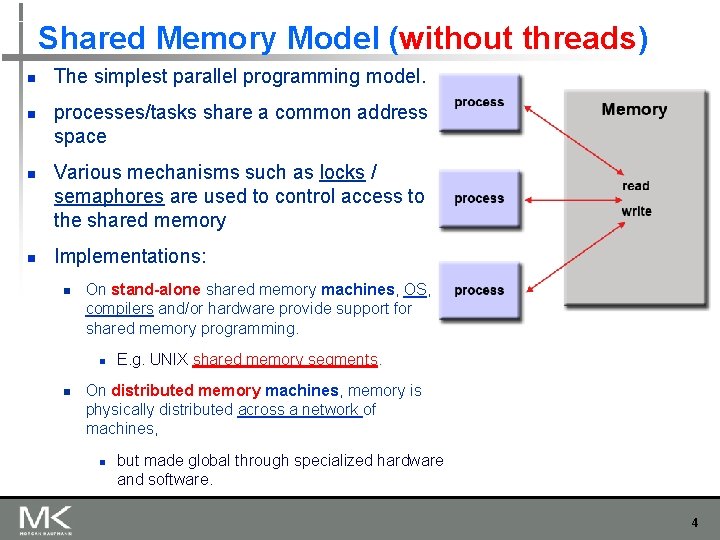

Shared Memory Model (without threads)

- The simplest parallel programming model.
- Processes/tasks share a common address space.
- Various mechanisms such as locks and semaphores are used to control access to the shared memory.
- Implementations:
  - On stand-alone shared memory machines, the OS, compilers and/or hardware provide support for shared memory programming.
    - E.g. UNIX shared memory segments.
  - On distributed memory machines, memory is physically distributed across a network of machines, but made global through specialized hardware and software.

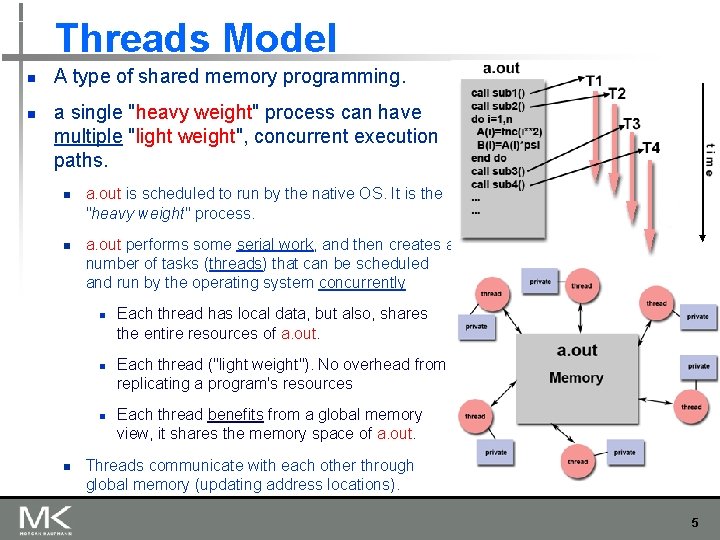

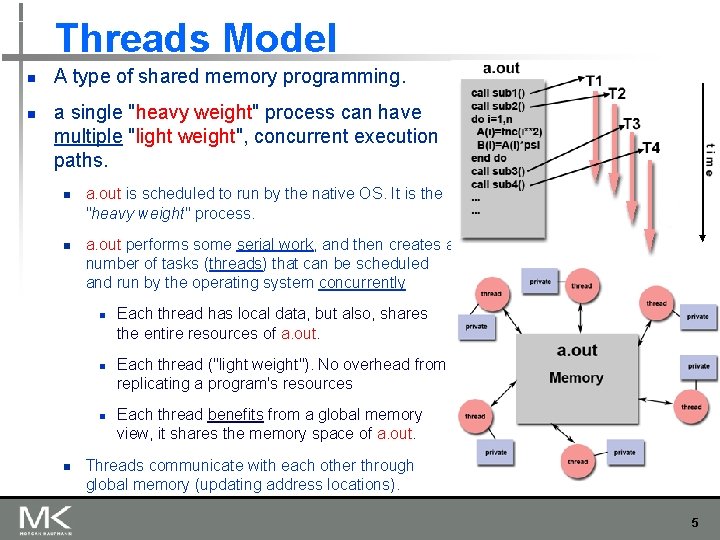

Threads Model

- A type of shared memory programming.
- A single "heavy weight" process can have multiple "light weight", concurrent execution paths.
  - a.out is scheduled to run by the native OS. It is the "heavy weight" process.
  - a.out performs some serial work, and then creates a number of tasks (threads) that can be scheduled and run by the operating system concurrently.
  - Each thread has local data, but also shares the entire resources of a.out.
  - Each thread is "light weight": there is no overhead from replicating a program's resources.
  - Each thread benefits from a global memory view because it shares the memory space of a.out.
- Threads communicate with each other through global memory (updating address locations).

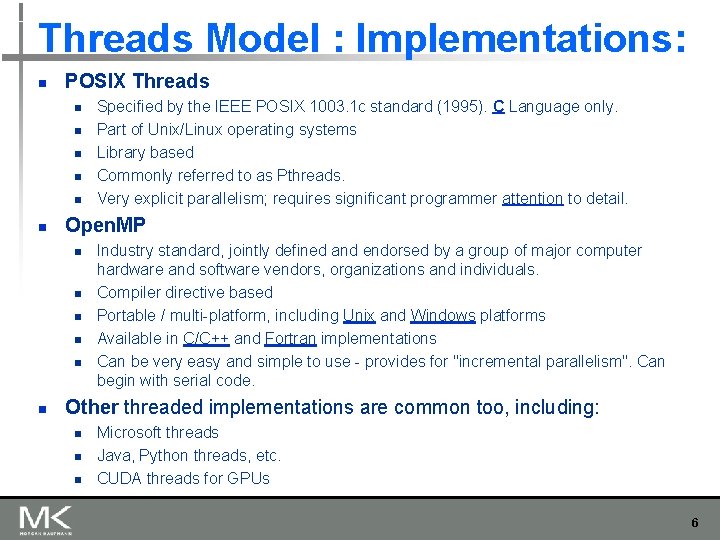

Threads Model: Implementations

- POSIX Threads
  - Specified by the IEEE POSIX 1003.1c standard (1995). C language only.
  - Part of Unix/Linux operating systems.
  - Library based.
  - Commonly referred to as Pthreads.
  - Very explicit parallelism; requires significant programmer attention to detail.
- OpenMP
  - Industry standard, jointly defined and endorsed by a group of major computer hardware and software vendors, organizations and individuals.
  - Compiler directive based.
  - Portable / multi-platform, including Unix and Windows platforms.
  - Available in C/C++ and Fortran implementations.
  - Can be very easy and simple to use; provides for "incremental parallelism". Can begin with serial code.
- Other threaded implementations are common too, including:
  - Microsoft threads
  - Java, Python threads, etc.
  - CUDA threads for GPUs

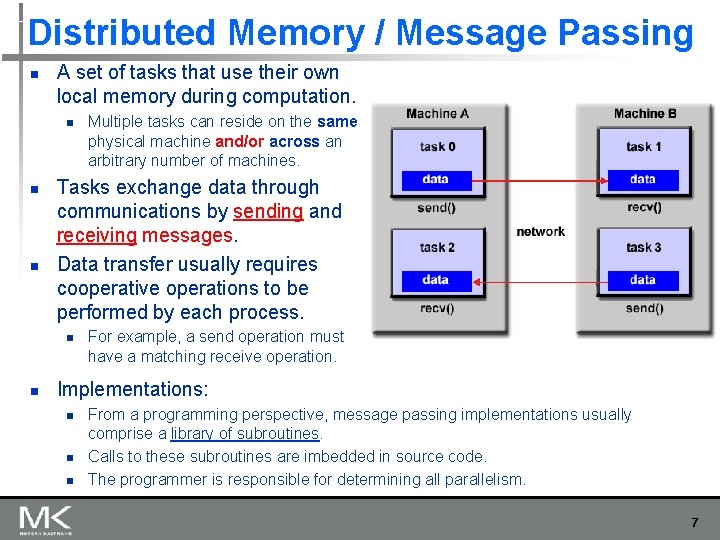

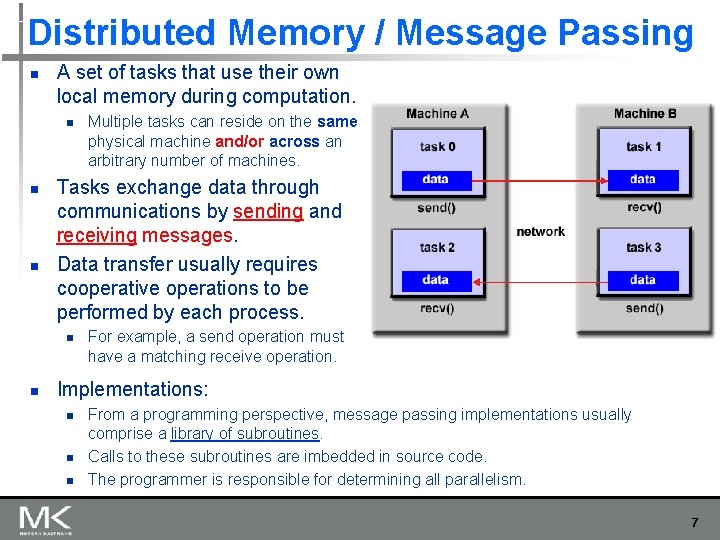

Distributed Memory / Message Passing

- A set of tasks that use their own local memory during computation.
  - Multiple tasks can reside on the same physical machine and/or across an arbitrary number of machines.
- Tasks exchange data through communications by sending and receiving messages.
- Data transfer usually requires cooperative operations to be performed by each process.
  - For example, a send operation must have a matching receive operation.
- Implementations:
  - From a programming perspective, message passing implementations usually comprise a library of subroutines.
  - Calls to these subroutines are embedded in source code.
  - The programmer is responsible for determining all parallelism.

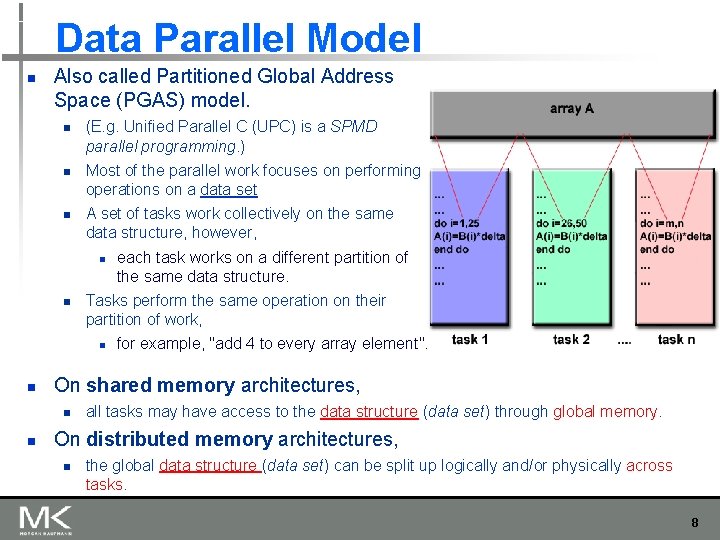

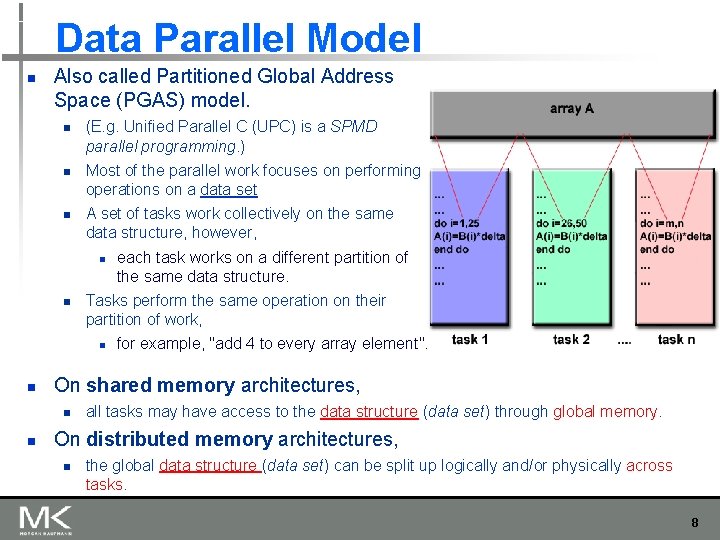

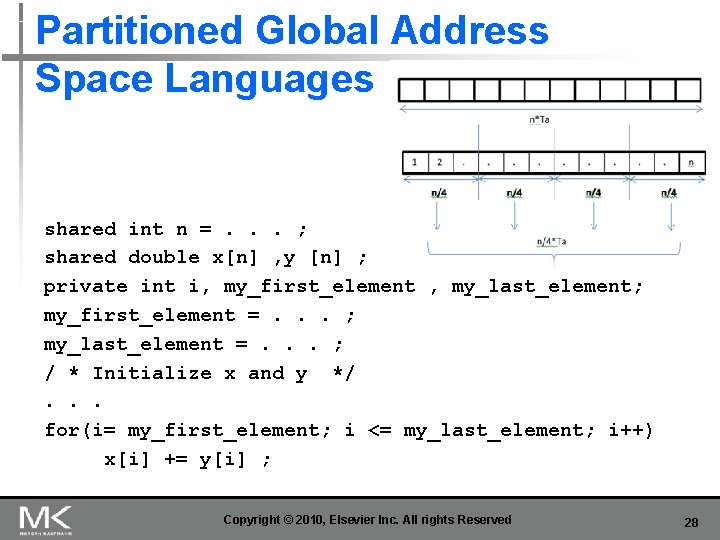

Data Parallel Model

- Also called the Partitioned Global Address Space (PGAS) model.
  - (E.g. Unified Parallel C (UPC) is an SPMD parallel programming language.)
- Most of the parallel work focuses on performing operations on a data set.
- A set of tasks work collectively on the same data structure; however, each task works on a different partition of the same data structure.
- Tasks perform the same operation on their partition of work,
  - for example, "add 4 to every array element".
- On shared memory architectures, all tasks may have access to the data structure (data set) through global memory.
- On distributed memory architectures, the global data structure (data set) can be split up logically and/or physically across tasks.

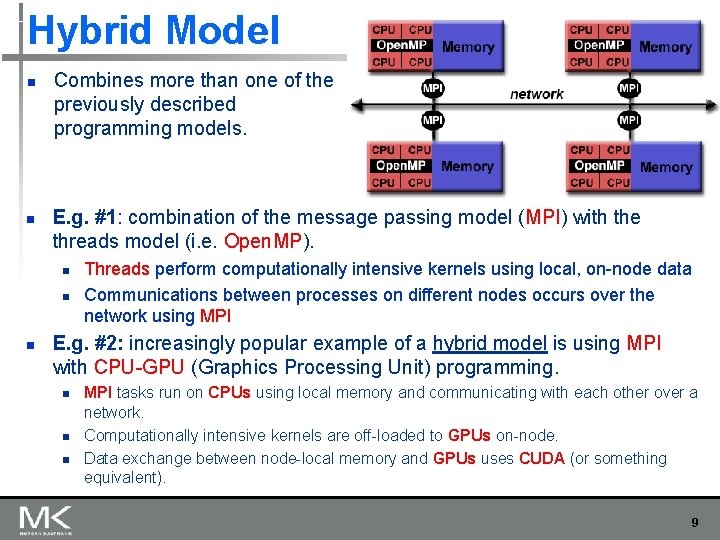

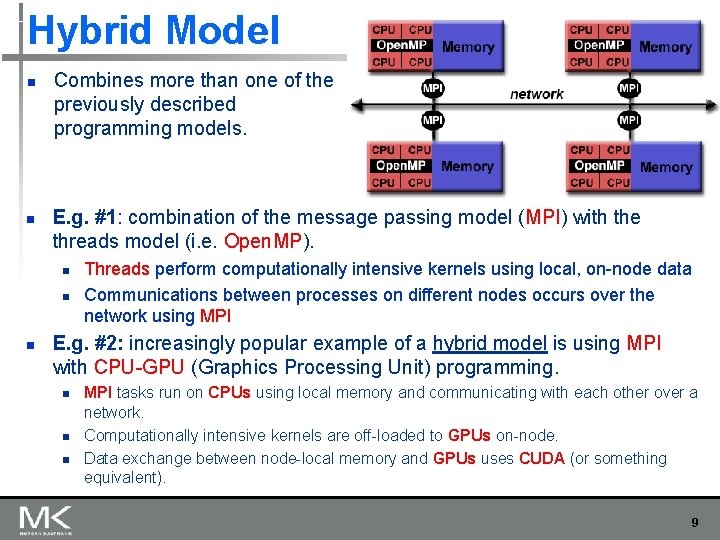

Hybrid Model

- Combines more than one of the previously described programming models.
- E.g. #1: combination of the message passing model (MPI) with the threads model (i.e. OpenMP).
  - Threads perform computationally intensive kernels using local, on-node data.
  - Communications between processes on different nodes occur over the network using MPI.
- E.g. #2: an increasingly popular example of a hybrid model is using MPI with CPU-GPU (Graphics Processing Unit) programming.
  - MPI tasks run on CPUs using local memory and communicate with each other over a network.
  - Computationally intensive kernels are off-loaded to GPUs on-node.
  - Data exchange between node-local memory and GPUs uses CUDA (or something equivalent).

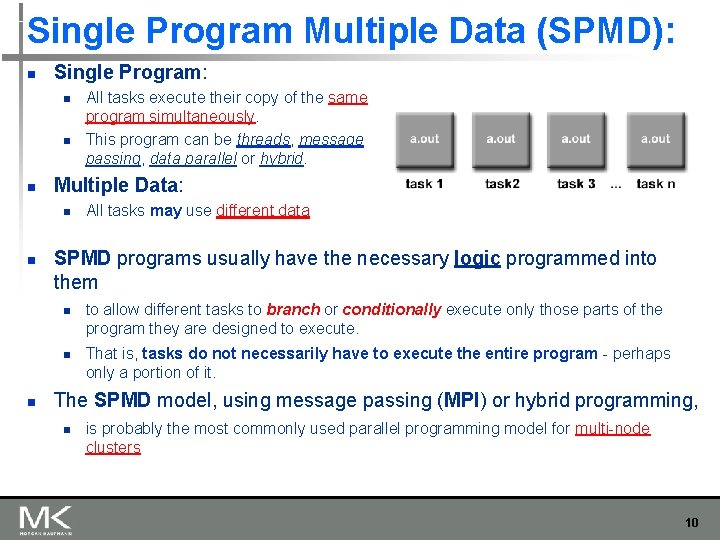

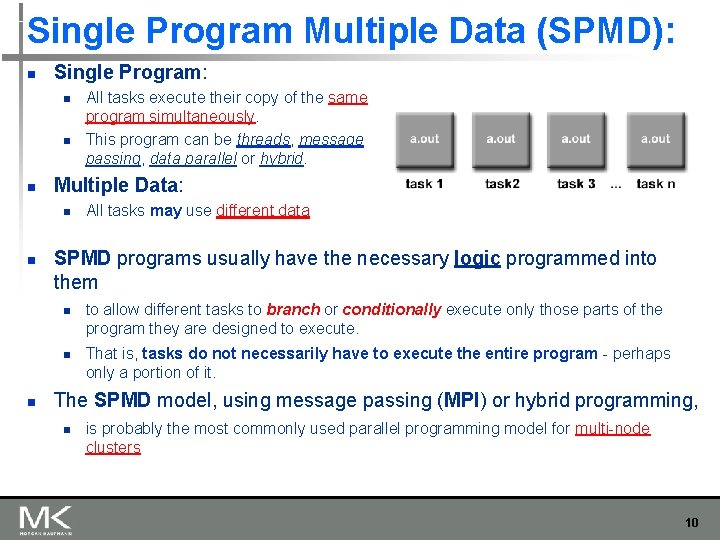

Single Program Multiple Data (SPMD)

- Single Program:
  - All tasks execute their copy of the same program simultaneously.
  - This program can be threads, message passing, data parallel or hybrid.
- Multiple Data:
  - All tasks may use different data.
- SPMD programs usually have the necessary logic programmed into them to allow different tasks to branch or conditionally execute only those parts of the program they are designed to execute.
  - That is, tasks do not necessarily have to execute the entire program, perhaps only a portion of it.
- The SPMD model, using message passing (MPI) or hybrid programming, is probably the most commonly used parallel programming model for multi-node clusters.

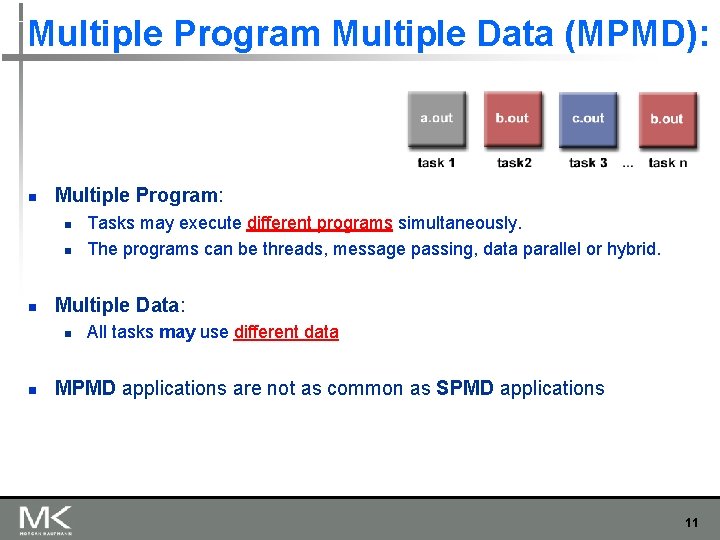

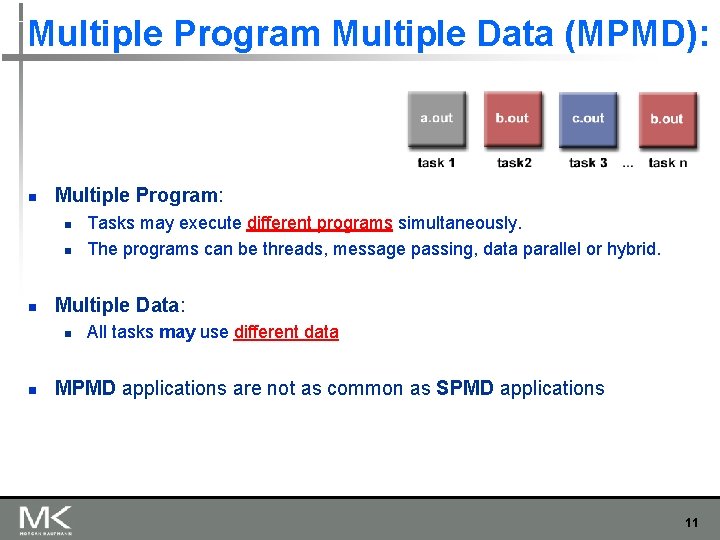

Multiple Program Multiple Data (MPMD)

- Multiple Program:
  - Tasks may execute different programs simultaneously.
  - The programs can be threads, message passing, data parallel or hybrid.
- Multiple Data:
  - All tasks may use different data.
- MPMD applications are not as common as SPMD applications.

DESIGNING PARALLEL PROGRAMS

- Understand the Problem and the Program
- Partitioning
- Communications
- Synchronization
- Data Dependencies
- Load Balancing
- Granularity
- Debugging

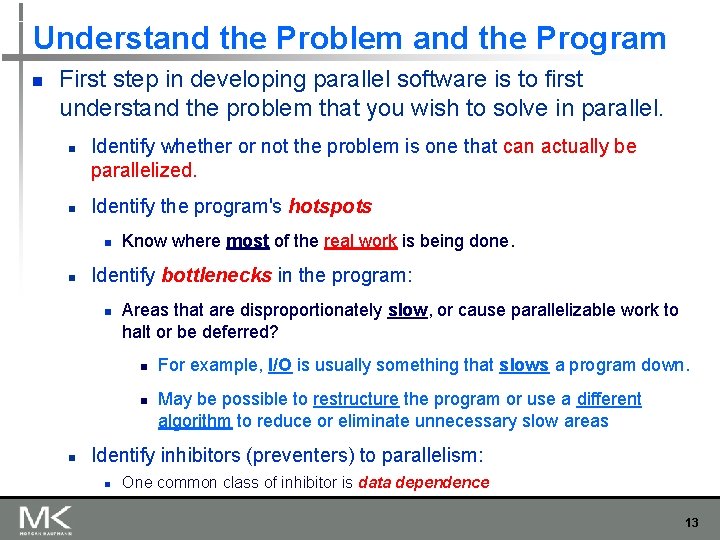

Understand the Problem and the Program

- The first step in developing parallel software is to understand the problem that you wish to solve in parallel.
  - Identify whether or not the problem is one that can actually be parallelized.
- Identify the program's hotspots:
  - Know where most of the real work is being done.
- Identify bottlenecks in the program:
  - Are there areas that are disproportionately slow, or that cause parallelizable work to halt or be deferred?
  - For example, I/O is usually something that slows a program down.
  - It may be possible to restructure the program or use a different algorithm to reduce or eliminate unnecessary slow areas.
- Identify inhibitors (preventers) to parallelism:
  - One common class of inhibitor is data dependence.

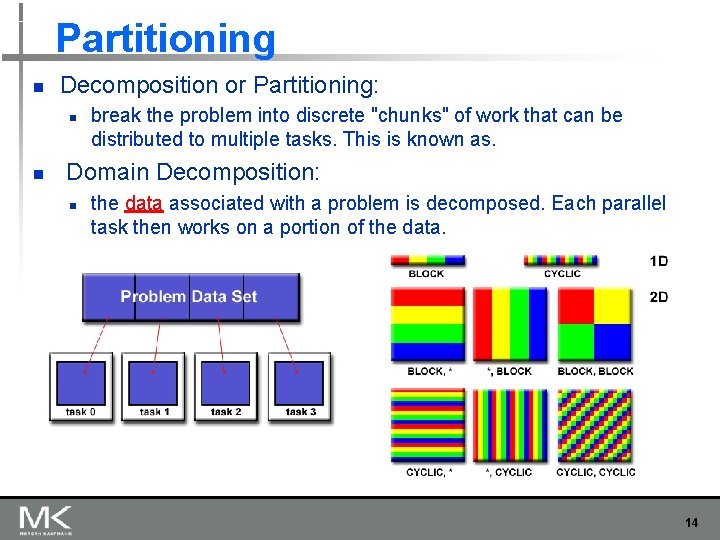

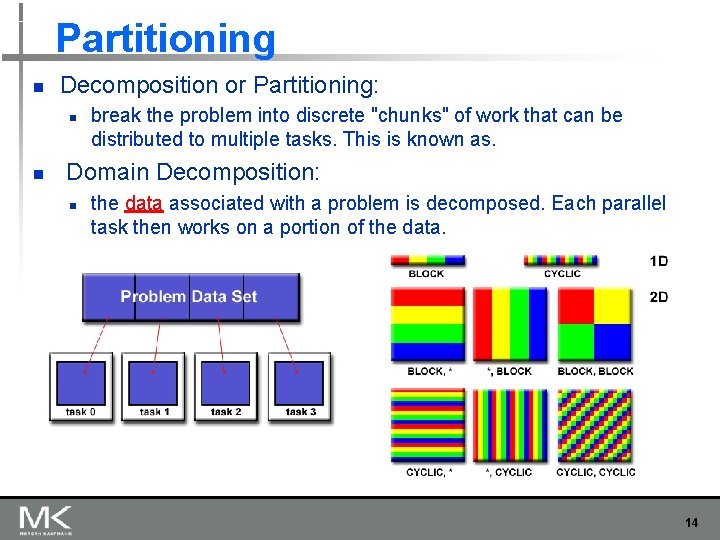

Partitioning

- Decomposition or Partitioning:
  - Breaking the problem into discrete "chunks" of work that can be distributed to multiple tasks is known as decomposition or partitioning.
- Domain Decomposition:
  - The data associated with a problem is decomposed. Each parallel task then works on a portion of the data.

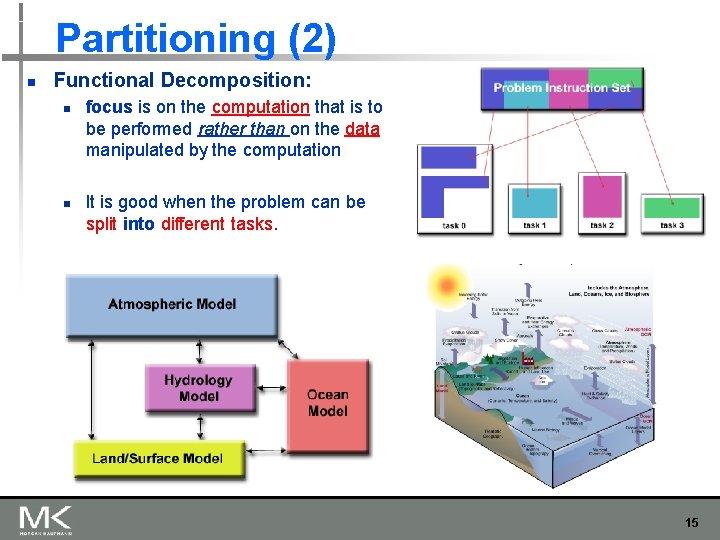

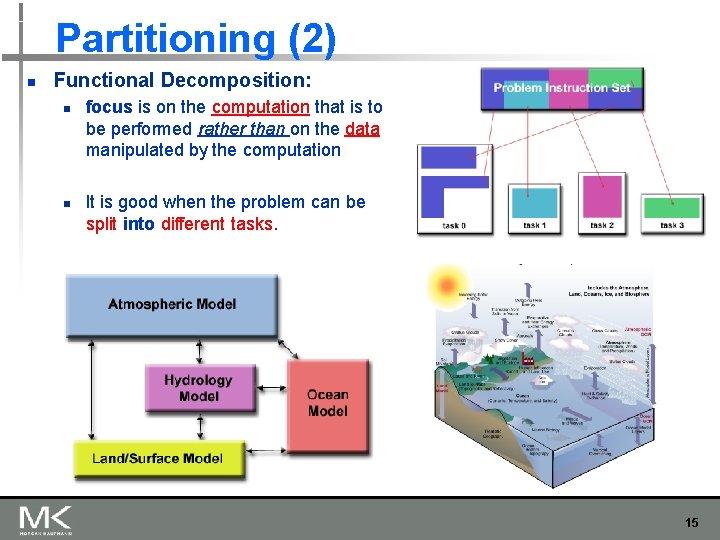

Partitioning (2)

- Functional Decomposition:
  - The focus is on the computation that is to be performed rather than on the data manipulated by the computation.
  - It works well when the problem can be split into different tasks.

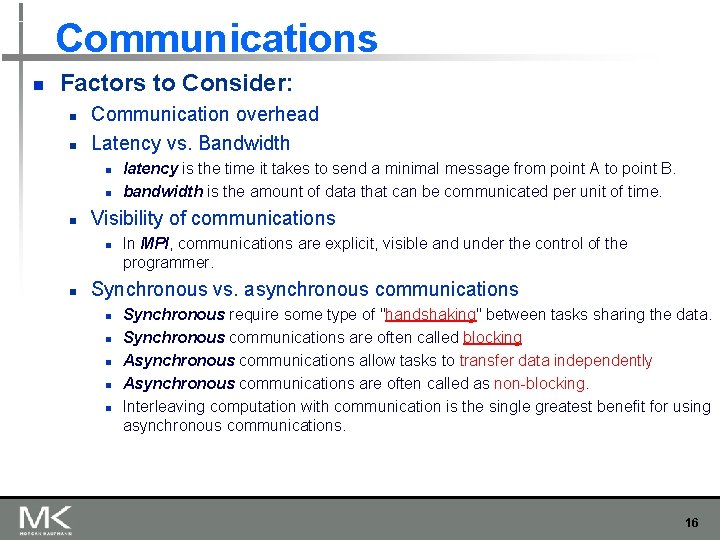

Communications

- Factors to Consider:
  - Communication overhead
  - Latency vs. Bandwidth
    - Latency is the time it takes to send a minimal message from point A to point B.
    - Bandwidth is the amount of data that can be communicated per unit of time.
  - Visibility of communications
    - In MPI, communications are explicit, visible and under the control of the programmer.
  - Synchronous vs. asynchronous communications
    - Synchronous communications require some type of "handshaking" between tasks sharing the data.
    - Synchronous communications are often called blocking.
    - Asynchronous communications allow tasks to transfer data independently.
    - Asynchronous communications are often called non-blocking.
    - Interleaving computation with communication is the single greatest benefit of using asynchronous communications.
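A common first-order way to combine latency and bandwidth into one estimate is a linear cost model. The model and the symbols below are an assumption added for illustration, not something stated on the slide: $\alpha$ is the latency in seconds, $\beta$ is the bandwidth in bytes per second, and $n$ is the message size in bytes.

$$
T_{\text{msg}}(n) \approx \alpha + \frac{n}{\beta}
$$

With hypothetical values $\alpha = 1\,\mu\text{s}$ and $\beta = 10^{10}$ bytes/s:

$$
T_{\text{msg}}(8) \approx 1\,\mu\text{s} + 0.0008\,\mu\text{s} \approx 1\,\mu\text{s},
\qquad
T_{\text{msg}}(10^{6}) \approx 1\,\mu\text{s} + 100\,\mu\text{s} = 101\,\mu\text{s}
$$

Under this model small messages are dominated by latency and large messages by bandwidth, which is why packing many small messages into one larger message often reduces communication overhead.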

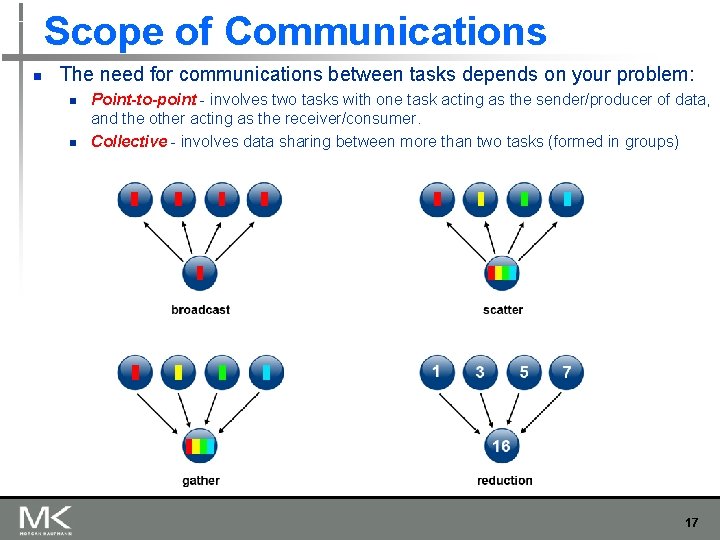

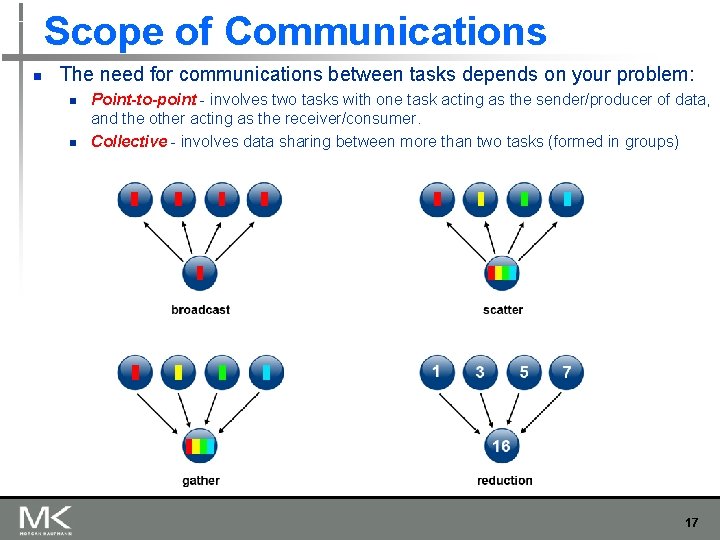

Scope of Communications

- The need for communications between tasks depends on your problem:
  - Point-to-point: involves two tasks, with one task acting as the sender/producer of data and the other acting as the receiver/consumer.
  - Collective: involves data sharing between more than two tasks (formed in groups).

Synchronization Types

- Barrier
  - Usually all tasks are involved.
  - Each task performs its work until it reaches the barrier. It then stops, or "blocks".
  - When the last task reaches the barrier, all tasks are synchronized.
- Lock / semaphore
  - Can involve any number of tasks.
  - Typically used to serialize (protect) access to global data or a section of code. Only one task at a time may use (own) the lock / semaphore / flag.
  - The first task to acquire the lock "sets" it. This task can then safely (serially) access the protected data or code.
  - Other tasks can attempt to acquire the lock but must wait until the task that owns the lock releases it.
  - Can be blocking or non-blocking.

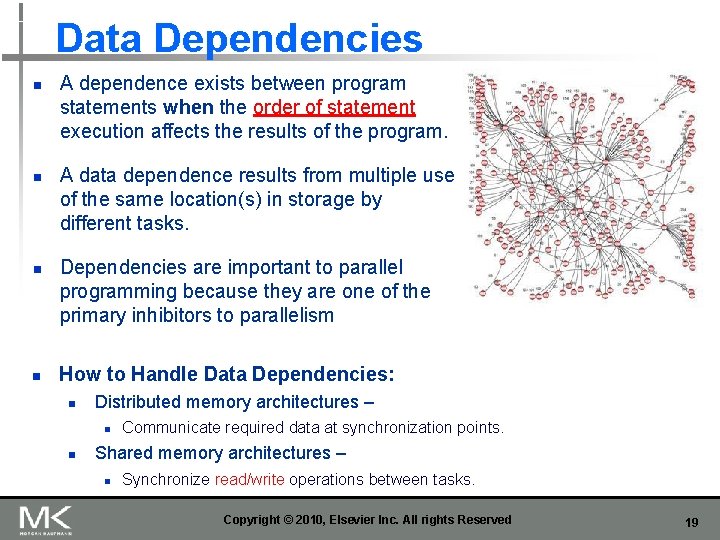

Data Dependencies

- A dependence exists between program statements when the order of statement execution affects the results of the program.
- A data dependence results from multiple use of the same location(s) in storage by different tasks.
- Dependencies are important to parallel programming because they are one of the primary inhibitors to parallelism.
- How to Handle Data Dependencies:
  - Distributed memory architectures: communicate required data at synchronization points.
  - Shared memory architectures: synchronize read/write operations between tasks.

Copyright © 2010, Elsevier Inc. All rights Reserved

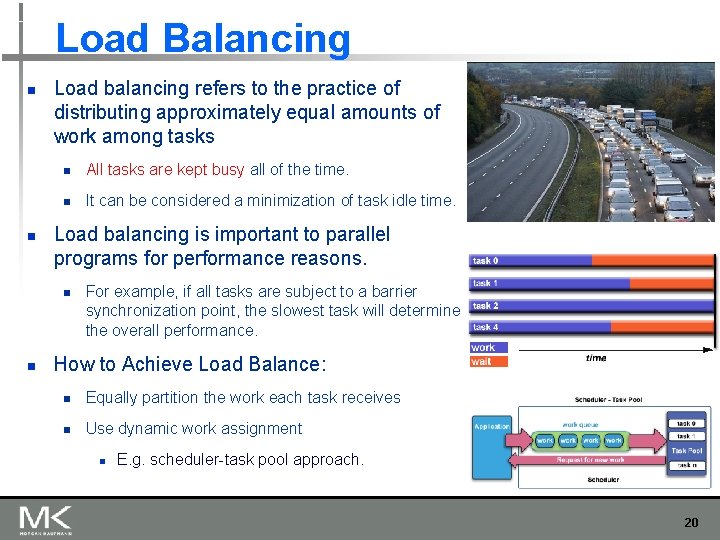

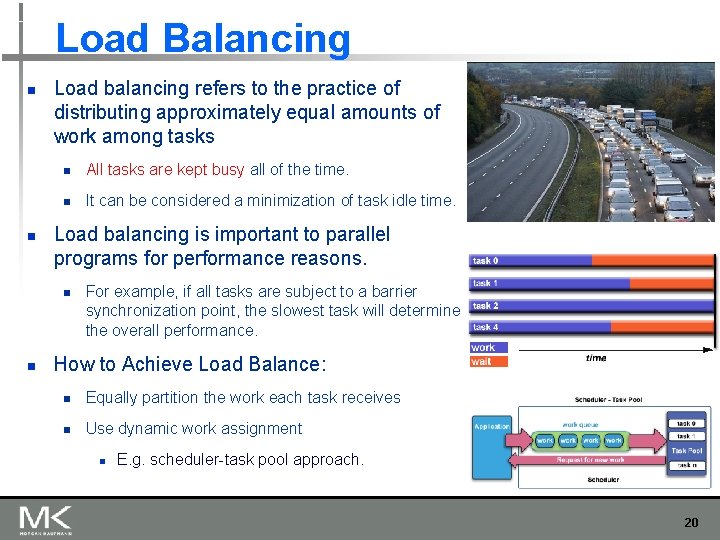

Load Balancing

- Load balancing refers to the practice of distributing approximately equal amounts of work among tasks so that all tasks are kept busy all of the time.
  - It can be considered a minimization of task idle time.
- Load balancing is important to parallel programs for performance reasons.
  - For example, if all tasks are subject to a barrier synchronization point, the slowest task will determine the overall performance.
- How to Achieve Load Balance:
  - Equally partition the work each task receives.
  - Use dynamic work assignment,
    - e.g. a scheduler-task pool approach.

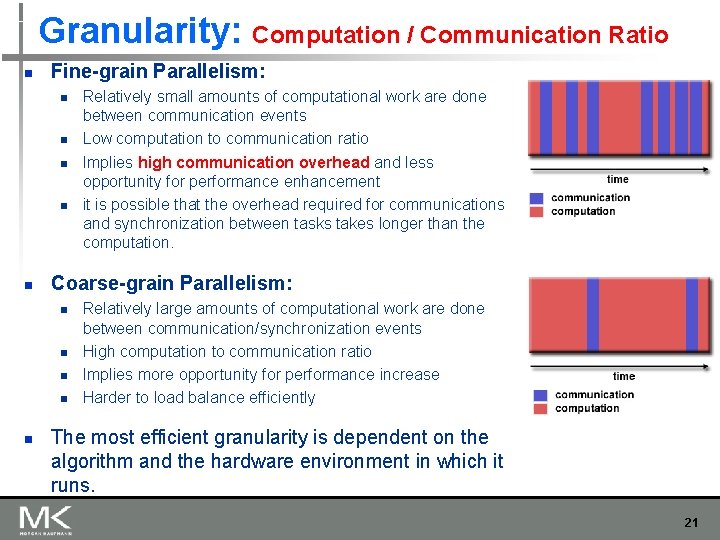

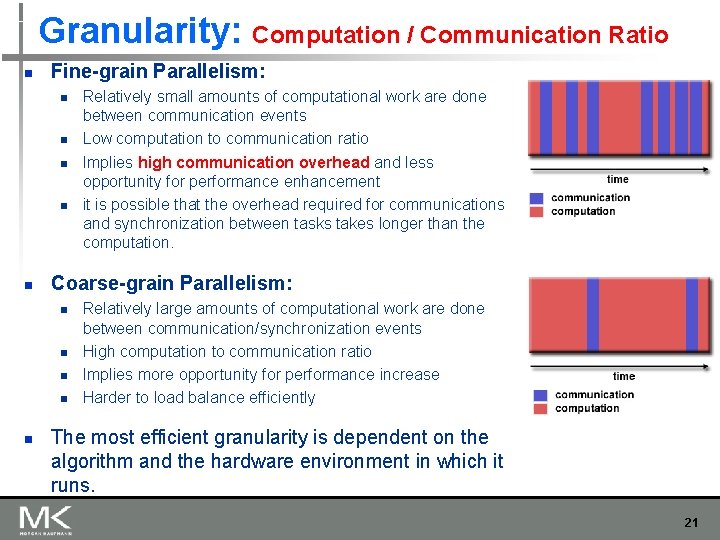

Granularity: Computation / Communication Ratio

- Fine-grain Parallelism:
  - Relatively small amounts of computational work are done between communication events.
  - Low computation to communication ratio.
  - Implies high communication overhead and less opportunity for performance enhancement.
  - It is possible that the overhead required for communications and synchronization between tasks takes longer than the computation.
- Coarse-grain Parallelism:
  - Relatively large amounts of computational work are done between communication/synchronization events.
  - High computation to communication ratio.
  - Implies more opportunity for performance increase.
  - Harder to load balance efficiently.
- The most efficient granularity is dependent on the algorithm and the hardware environment in which it runs.

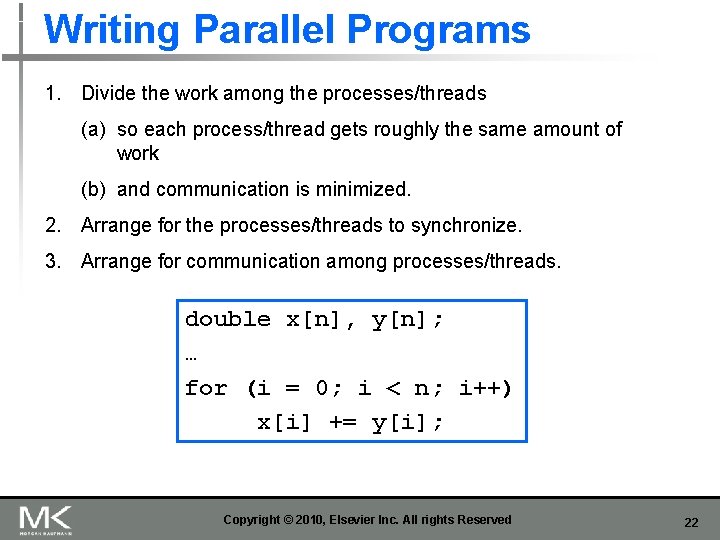

Writing Parallel Programs

1. Divide the work among the processes/threads (a) so each process/thread gets roughly the same amount of work (b) and communication is minimized.
2. Arrange for the processes/threads to synchronize.
3. Arrange for communication among processes/threads.

```c
double x[n], y[n];
…
for (i = 0; i < n; i++)
    x[i] += y[i];
```

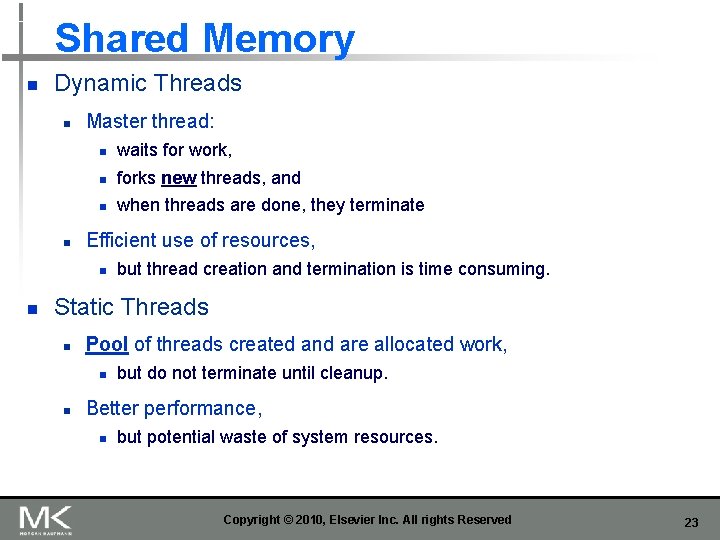

Shared Memory

- Dynamic Threads
  - The master thread waits for work and forks new threads; when the threads are done, they terminate.
  - Efficient use of resources, but thread creation and termination is time consuming.
- Static Threads
  - A pool of threads is created and allocated work, but the threads do not terminate until cleanup.
  - Better performance, but potential waste of system resources.

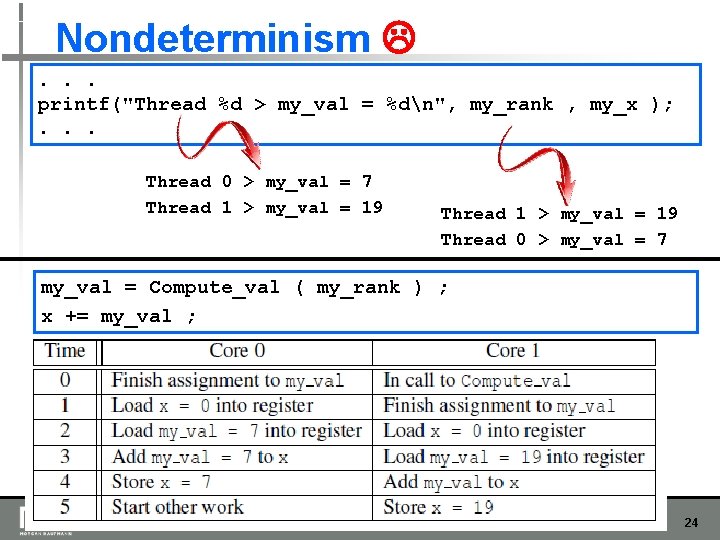

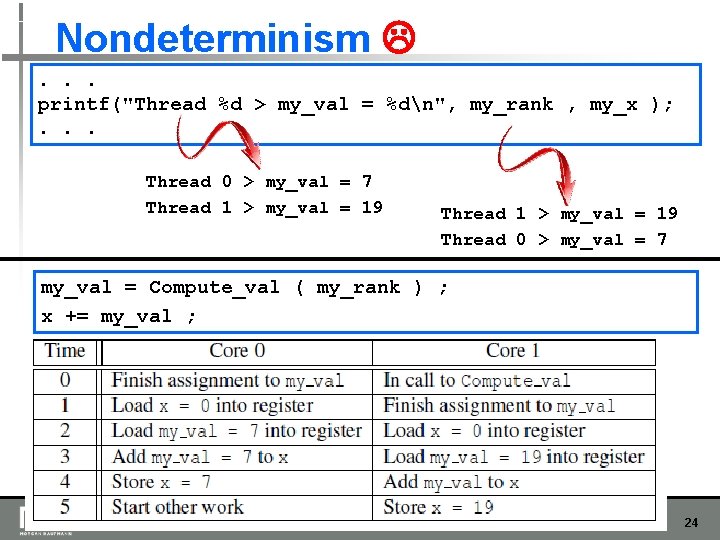

Nondeterminism

```c
. . .
printf("Thread %d > my_val = %d\n", my_rank, my_x);
. . .
```

The output can appear in either order:

```
Thread 0 > my_val = 7
Thread 1 > my_val = 19
```

```
Thread 1 > my_val = 19
Thread 0 > my_val = 7
```

```c
my_val = Compute_val(my_rank);
x += my_val;
```

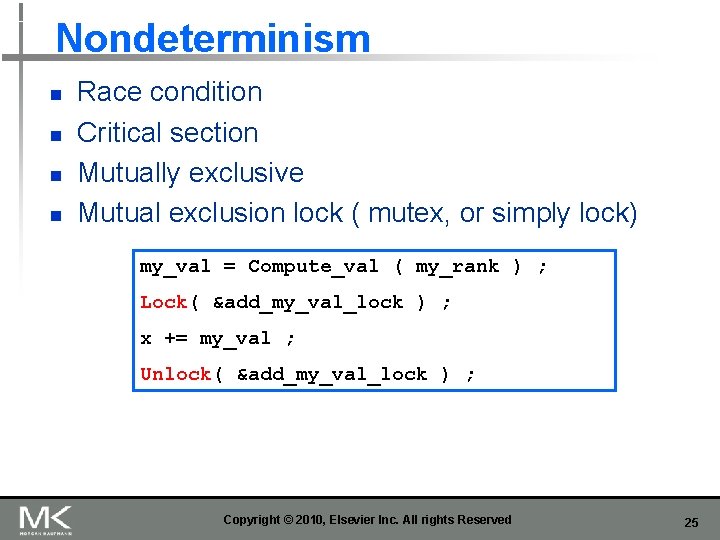

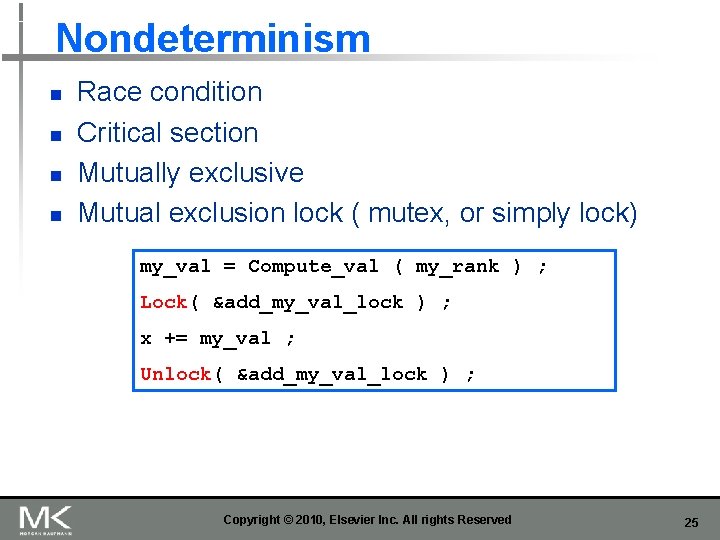

Nondeterminism

- Race condition
- Critical section
- Mutually exclusive
- Mutual exclusion lock (mutex, or simply lock)

```c
my_val = Compute_val(my_rank);
Lock(&add_my_val_lock);
x += my_val;
Unlock(&add_my_val_lock);
```

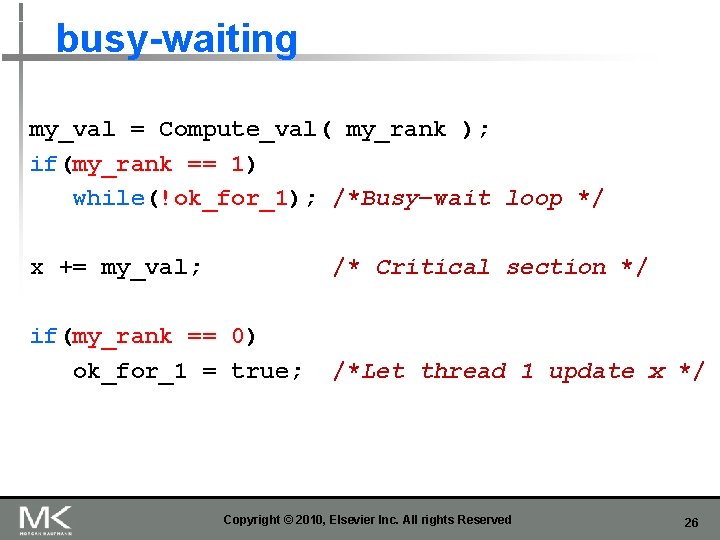

busy-waiting

```c
my_val = Compute_val(my_rank);
if (my_rank == 1)
    while (!ok_for_1);   /* Busy-wait loop */
x += my_val;             /* Critical section */
if (my_rank == 0)
    ok_for_1 = true;     /* Let thread 1 update x */
```

![Message Passing Interface MPI char message 1 0 0 Message Passing Interface (MPI) char message [ 1 0 0 ] ; . .](https://slidetodoc.com/presentation_image_h2/60446911e72e23a7882a5dff67f0cb17/image-27.jpg)

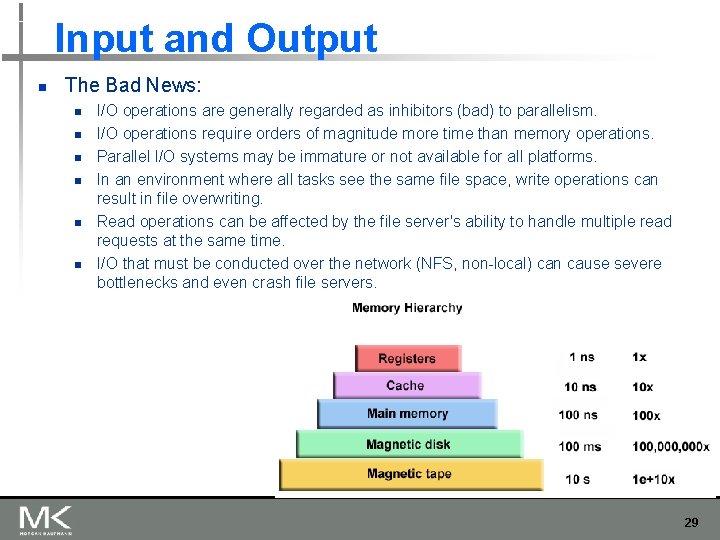

Message Passing Interface (MPI)

```c
char message[100];
. . .
my_rank = Get_rank();
if (my_rank == 1) {
    sprintf(message, "Greetings from process 1");
    Send(message, MSG_CHAR, 100, 0);
} else if (my_rank == 0) {
    Receive(message, MSG_CHAR, 100, 1);
    printf("Process 0 > Received: %s\n", message);
}
```

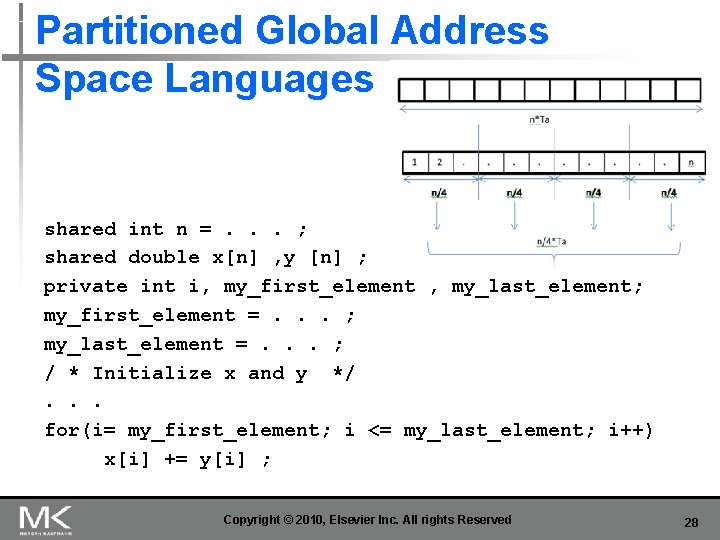

Partitioned Global Address Space Languages

```
shared int n = . . . ;
shared double x[n], y[n];
private int i, my_first_element, my_last_element;
my_first_element = . . . ;
my_last_element = . . . ;
/* Initialize x and y */
. . .
for (i = my_first_element; i <= my_last_element; i++)
    x[i] += y[i];
```

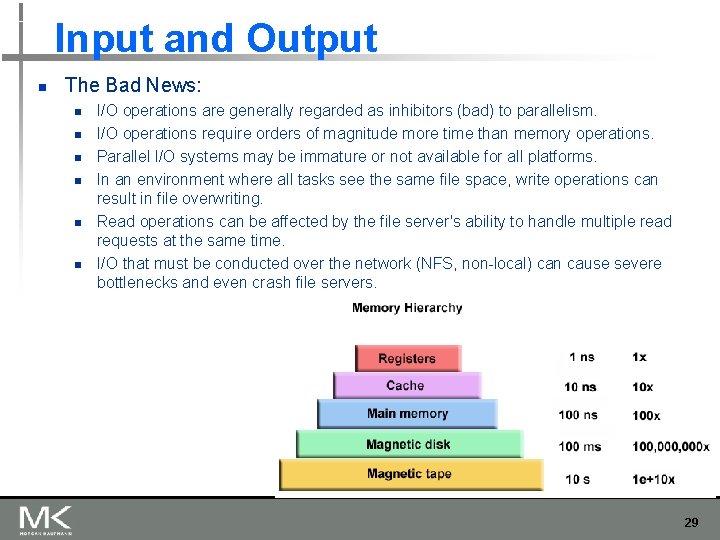

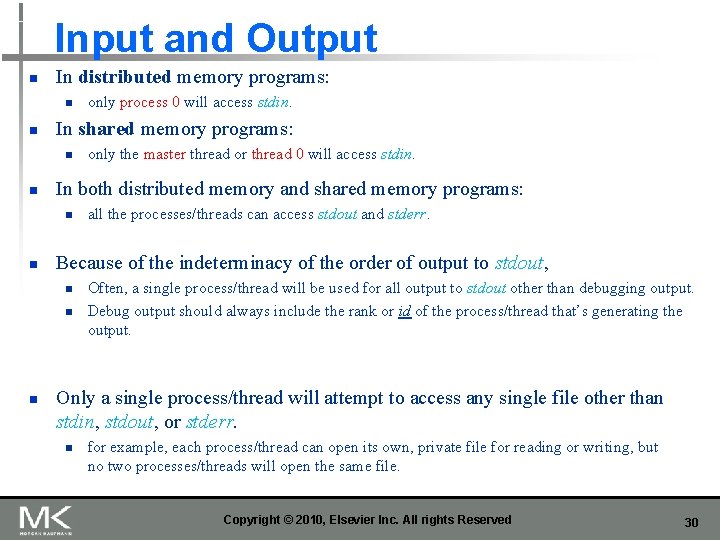

Input and Output

- The Bad News:
  - I/O operations are generally regarded as inhibitors to parallelism.
  - I/O operations require orders of magnitude more time than memory operations.
  - Parallel I/O systems may be immature or not available for all platforms.
  - In an environment where all tasks see the same file space, write operations can result in file overwriting.
  - Read operations can be affected by the file server's ability to handle multiple read requests at the same time.
  - I/O that must be conducted over the network (NFS, non-local) can cause severe bottlenecks and even crash file servers.

Input and Output

- In distributed memory programs, only process 0 will access stdin.
- In shared memory programs, only the master thread or thread 0 will access stdin.
- In both distributed memory and shared memory programs, all the processes/threads can access stdout and stderr.
- Because of the indeterminacy of the order of output to stdout, often a single process/thread will be used for all output to stdout other than debugging output.
- Debug output should always include the rank or id of the process/thread that's generating the output.
- Only a single process/thread will attempt to access any single file other than stdin, stdout, or stderr.
  - For example, each process/thread can open its own, private file for reading or writing, but no two processes/threads will open the same file.

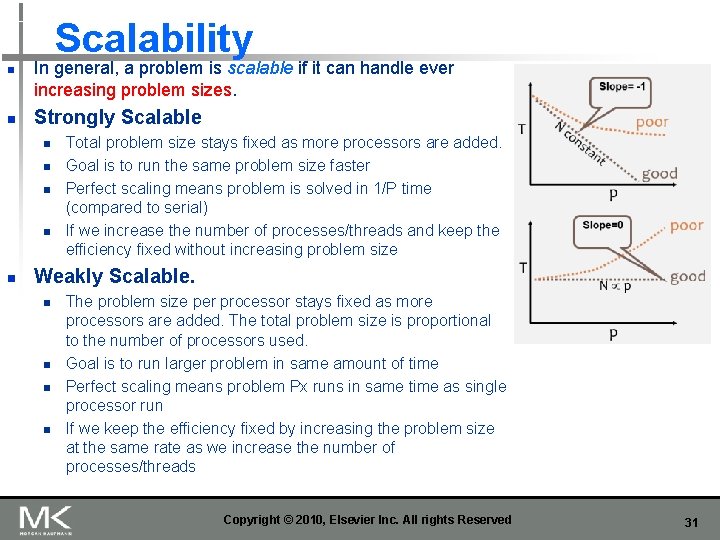

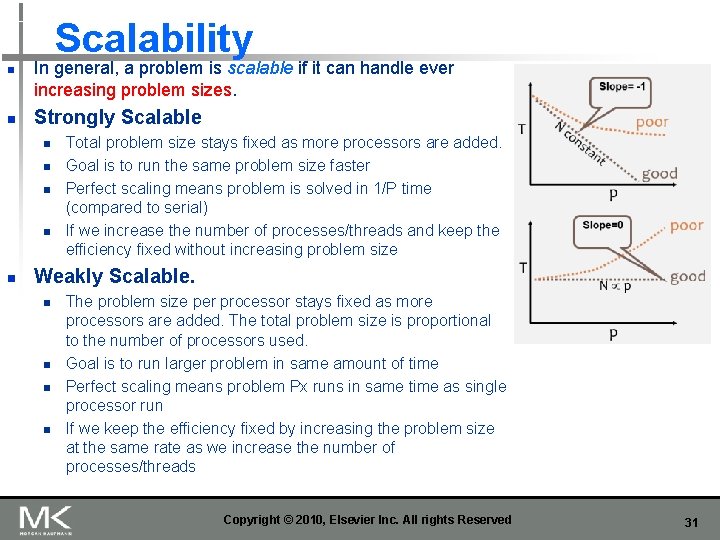

Scalability

- In general, a problem is scalable if it can handle ever increasing problem sizes.
- Strongly Scalable
  - Total problem size stays fixed as more processors are added.
  - Goal is to run the same problem size faster.
  - Perfect scaling means the problem is solved in 1/P of the serial time.
  - A program is strongly scalable if we can increase the number of processes/threads and keep the efficiency fixed without increasing the problem size.
- Weakly Scalable
  - The problem size per processor stays fixed as more processors are added. The total problem size is proportional to the number of processors used.
  - Goal is to run a larger problem in the same amount of time.
  - Perfect scaling means a problem P times larger runs in the same time as the single-processor run.
  - A program is weakly scalable if we can keep the efficiency fixed by increasing the problem size at the same rate as we increase the number of processes/threads.
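The slide uses "efficiency" without defining it. The usual textbook definitions, given here as an assumption about what is meant, use the serial run time $T_{\text{serial}}$, the parallel run time $T_{\text{parallel}}$, and the number of processes/threads $p$:

$$
S = \frac{T_{\text{serial}}}{T_{\text{parallel}}},
\qquad
E = \frac{S}{p} = \frac{T_{\text{serial}}}{p \cdot T_{\text{parallel}}}
$$

Perfect strong scaling corresponds to $T_{\text{parallel}} = T_{\text{serial}} / p$, so $S = p$ and $E = 1$. As a hypothetical worked example, with $T_{\text{serial}} = 100$ s, $p = 4$ and $T_{\text{parallel}} = 30$ s:

$$
S = \frac{100}{30} \approx 3.33,
\qquad
E = \frac{3.33}{4} \approx 0.83
$$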

Taking Timings

- What is time?
- Start to finish?
- A program segment of interest?
- CPU time?
- Wall clock time?