Parallel Programming with OpenMP (Open Multi-Processing)


Parallel Programming with OpenMP (Open Multi-Processing), Part #2

By Dr. Ziad Al-Sharif

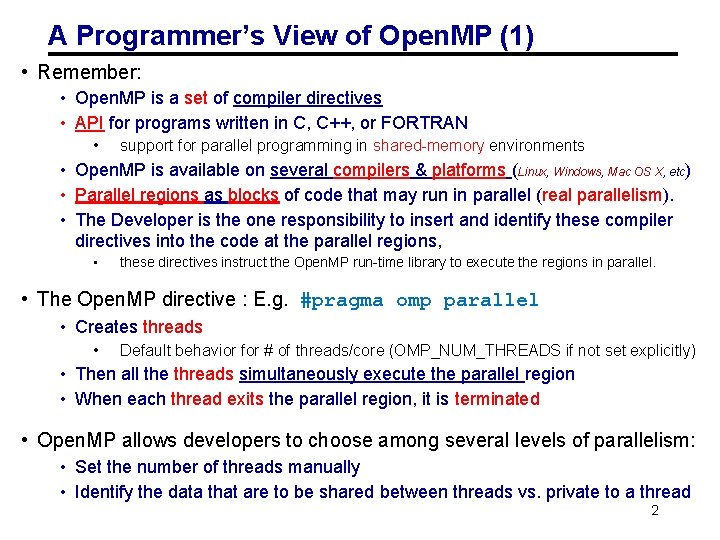

A Programmer's View of OpenMP (1)

- Remember:
  - OpenMP is a set of compiler directives and an API for programs written in C, C++, or Fortran.
  - It supports parallel programming in shared-memory environments.
  - OpenMP is available on several compilers and platforms (Linux, Windows, Mac OS X, etc.).
- Parallel regions are blocks of code that may run in parallel (real parallelism).
- The developer is responsible for identifying the parallel regions and inserting the compiler directives there; these directives instruct the OpenMP run-time library to execute the regions in parallel.
- The OpenMP directive, e.g. `#pragma omp parallel`:
  - Creates a team of threads. Unless the program sets the number of threads explicitly, it is taken from `OMP_NUM_THREADS`; when that is unset too, the default is typically one thread per core.
  - All the threads then execute the parallel region simultaneously.
  - When the threads reach the end of the parallel region they synchronize; the created threads finish and only the master thread continues.
- OpenMP lets developers control several aspects of the parallelism:
  - Set the number of threads manually.
  - Identify the data that is shared between threads vs. private to a thread.

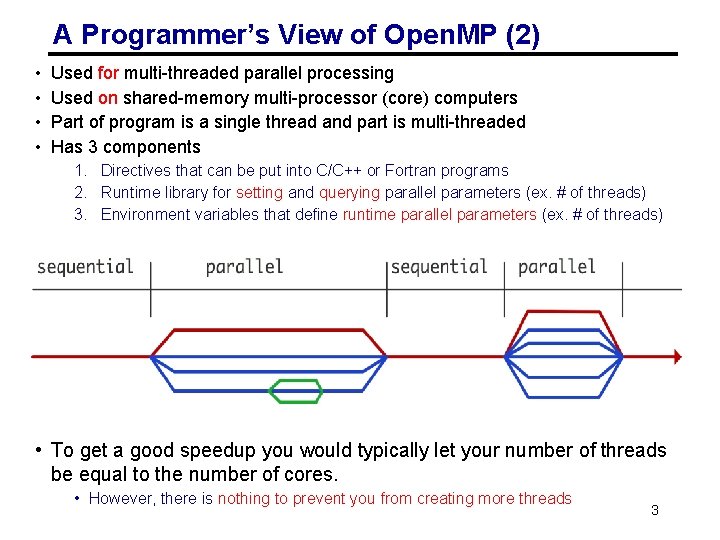

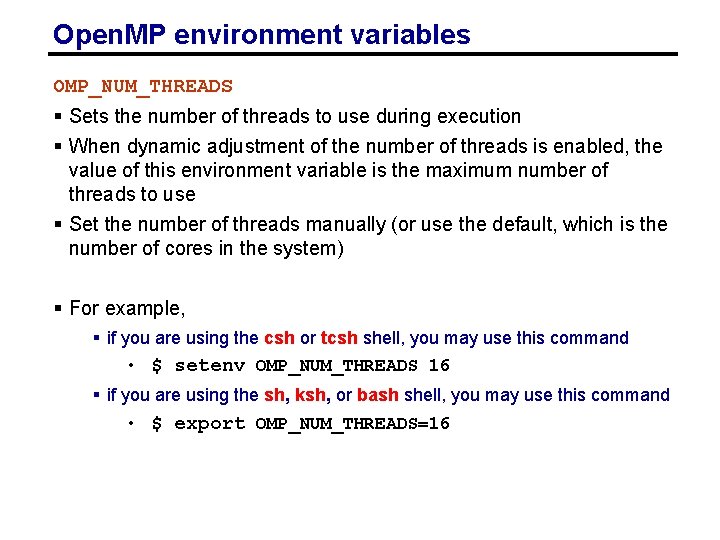

A Programmer's View of OpenMP (2)

- Used for multi-threaded parallel processing.
- Used on shared-memory multi-processor (multi-core) computers.
- Part of the program runs as a single thread and part is multi-threaded.
- Has 3 components:
  1. Directives that can be put into C/C++ or Fortran programs.
  2. A runtime library for setting and querying parallel parameters (e.g. the number of threads).
  3. Environment variables that define runtime parallel parameters (e.g. the number of threads).
- To get a good speedup you would typically set the number of threads equal to the number of cores.
- However, nothing prevents you from creating more threads than cores.

OpenMP environment variables

OMP_NUM_THREADS

- Sets the number of threads to use during execution.
- When dynamic adjustment of the number of threads is enabled, the value of this environment variable is the maximum number of threads to use.
- Set the number of threads manually, or use the default, which is implementation-defined and is usually the number of cores in the system.
- For example, if you are using the csh or tcsh shell:

      $ setenv OMP_NUM_THREADS 16

- If you are using the sh, ksh, or bash shell:

      $ export OMP_NUM_THREADS=16
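To see the effect of `OMP_NUM_THREADS` from inside a program, a small helper like the one below can be used. This is a minimal sketch, not part of the original slides: the function name `max_threads_available` is hypothetical, and `omp_get_max_threads()` is a standard OpenMP runtime routine that the slides do not list. The `_OPENMP` guard is an assumption made so the code also builds when OpenMP is switched off.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: upper bound on the team size of the next
   parallel region. With OpenMP enabled this reflects OMP_NUM_THREADS
   (or the implementation default); without OpenMP it is 1. */
int max_threads_available(void) {
    int n = 1;                      /* serial fallback */
#ifdef _OPENMP
    n = omp_get_max_threads();
#endif
    assert(n >= 1);                 /* a team always has at least one thread */
    return n;
}
```

Built with OpenMP enabled (for example `gcc -fopenmp`) and run after `export OMP_NUM_THREADS=16`, the helper would be expected to report 16 unless the program overrides the setting.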

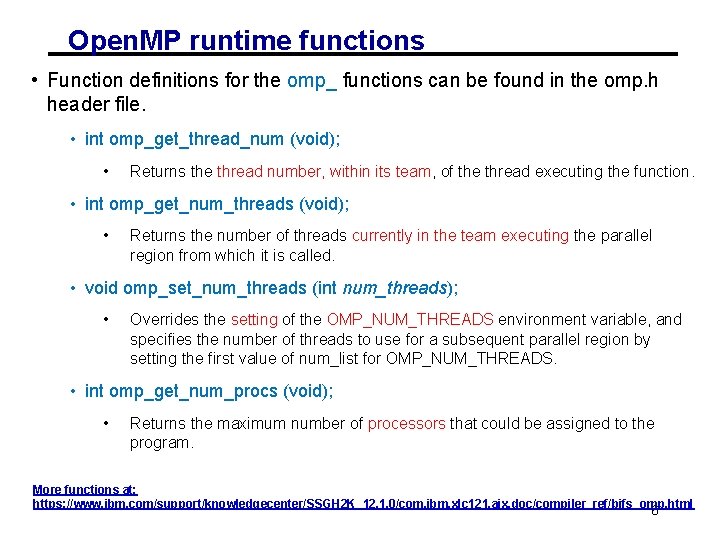

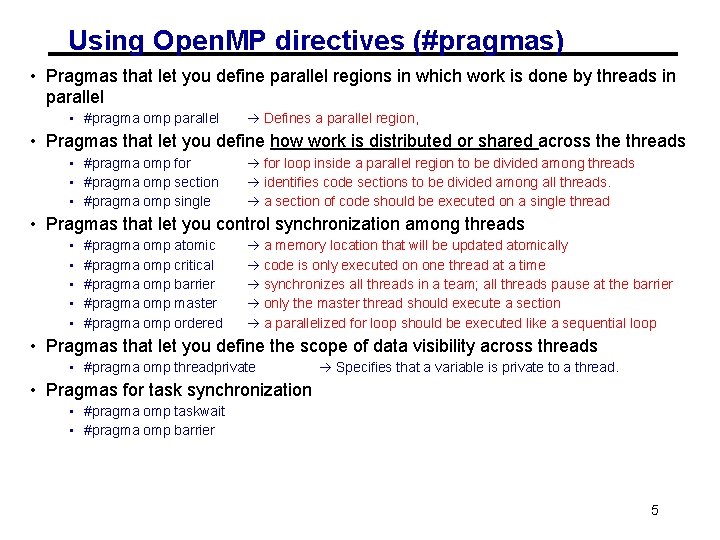

Using OpenMP directives (#pragmas)

- Pragmas that let you define parallel regions in which work is done by threads in parallel:
  - `#pragma omp parallel`: defines a parallel region.
- Pragmas that let you define how work is distributed or shared across the threads:
  - `#pragma omp for`: a for loop inside a parallel region is divided among the threads.
  - `#pragma omp section`: identifies code sections (grouped inside a `#pragma omp sections` block) to be divided among the threads.
  - `#pragma omp single`: a section of code is executed on a single thread.
- Pragmas that let you control synchronization among threads:
  - `#pragma omp atomic`: a memory location is updated atomically.
  - `#pragma omp critical`: the code is executed by only one thread at a time.
  - `#pragma omp barrier`: synchronizes all threads in a team; all threads pause at the barrier.
  - `#pragma omp master`: only the master thread executes the section.
  - `#pragma omp ordered`: part of a parallelized for loop is executed in sequential loop order.
- Pragmas that let you define the scope of data visibility across threads:
  - `#pragma omp threadprivate`: specifies that a variable is private to a thread.
- Pragmas for task synchronization:
  - `#pragma omp taskwait`
  - `#pragma omp barrier`
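As an illustration of how a work-sharing pragma and a synchronization pragma combine, the sketch below counts the even entries of an array. The function name `count_even` and the example itself are assumptions for illustration, not from the slides. The shared counter is updated under `#pragma omp atomic`, so concurrent increments cannot be lost.

```c
#include <assert.h>

/* Hypothetical example: count the even values in an array.
   Iterations are divided among the threads; the shared counter
   is protected by an atomic update. */
int count_even(const int *values, int n) {
    assert(n >= 0);
    int count = 0;                  /* shared by all threads */
    #pragma omp parallel for
    for (int i = 0; i < n; i++) {
        if (values[i] % 2 == 0) {
            #pragma omp atomic
            count++;                /* one atomic read-modify-write */
        }
    }
    return count;
}
```

An atomic update per element is simple but can be slow under contention; it is used here only to show the directive in context.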

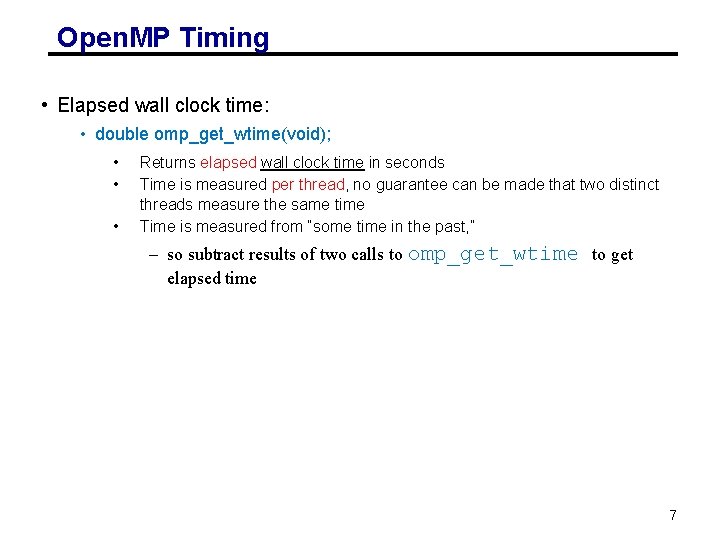

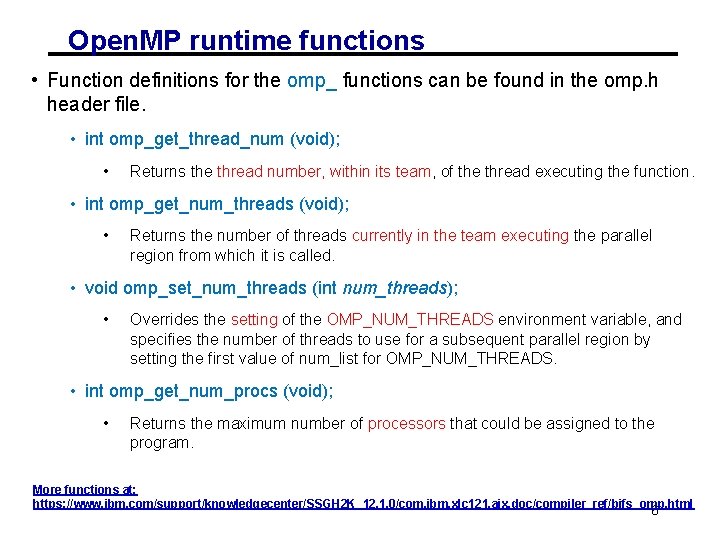

OpenMP runtime functions

- Declarations for the `omp_` functions can be found in the `omp.h` header file.
- `int omp_get_thread_num(void);`
  - Returns the thread number, within its team, of the thread executing the function.
- `int omp_get_num_threads(void);`
  - Returns the number of threads currently in the team executing the parallel region from which it is called.
- `void omp_set_num_threads(int num_threads);`
  - Overrides the setting of the OMP_NUM_THREADS environment variable, and specifies the number of threads to use for a subsequent parallel region by setting the first value of num_list for OMP_NUM_THREADS.
- `int omp_get_num_procs(void);`
  - Returns the maximum number of processors that could be assigned to the program.

More functions at: https://www.ibm.com/support/knowledgecenter/SSGH2K_12.1.0/com.ibm.xlc121.aix.doc/compiler_ref/bifs_omp.html
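The following sketch shows how `omp_set_num_threads` and `omp_get_num_threads` relate: the first requests a team size, the second reports the size actually granted inside the region. The function name `observed_team_size` is hypothetical, and the `_OPENMP` guard is an assumption so the code also compiles without OpenMP.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: request a team size and return the size
   that the parallel region actually ran with. */
int observed_team_size(int requested) {
    assert(requested > 0);
    int size = 1;                          /* serial fallback */
#ifdef _OPENMP
    omp_set_num_threads(requested);        /* applies to later parallel regions */
    #pragma omp parallel
    {
        #pragma omp single
        size = omp_get_num_threads();      /* written by exactly one thread */
    }
#endif
    return size;
}
```

The runtime may grant fewer threads than requested, so the returned value is at most `requested`, not necessarily equal to it.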

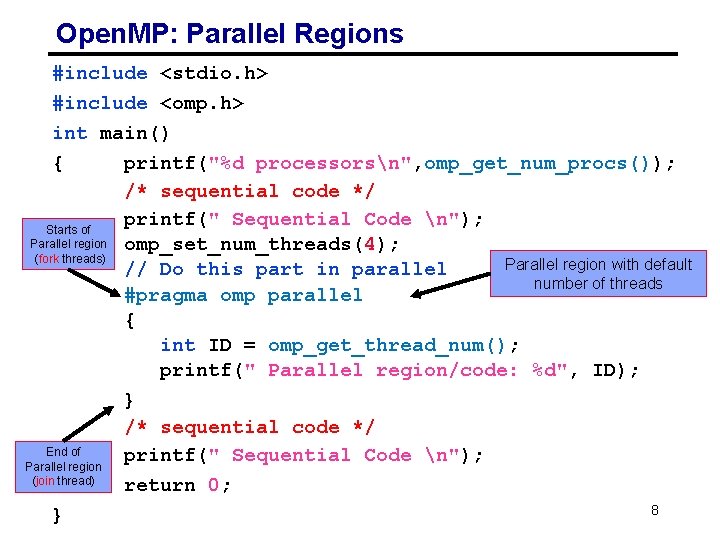

OpenMP Timing

- Elapsed wall clock time: `double omp_get_wtime(void);`
  - Returns the elapsed wall clock time in seconds.
  - Time is measured per thread; there is no guarantee that two distinct threads measure the same time.
  - Time is measured from "some time in the past", so subtract the results of two calls to `omp_get_wtime` to get the elapsed time.
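The "subtract two calls" pattern can be sketched as follows. The names `now_seconds` and `time_sum` are hypothetical, and the `clock()` fallback is an assumption added so the code still builds without OpenMP; note that the fallback measures CPU time, not wall clock time.

```c
#include <assert.h>
#include <stddef.h>
#include <time.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Current time in seconds, measured from an arbitrary point in the past. */
static double now_seconds(void) {
#ifdef _OPENMP
    return omp_get_wtime();
#else
    return (double)clock() / CLOCKS_PER_SEC;   /* CPU-time fallback */
#endif
}

/* Sums 0 + 1 + ... + (n-1) into *result and returns the elapsed time. */
double time_sum(long n, double *result) {
    assert(result != NULL);
    double start = now_seconds();              /* first call */
    double sum = 0.0;
    for (long i = 0; i < n; i++)
        sum += (double)i;
    *result = sum;
    return now_seconds() - start;              /* second call minus first */
}
```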

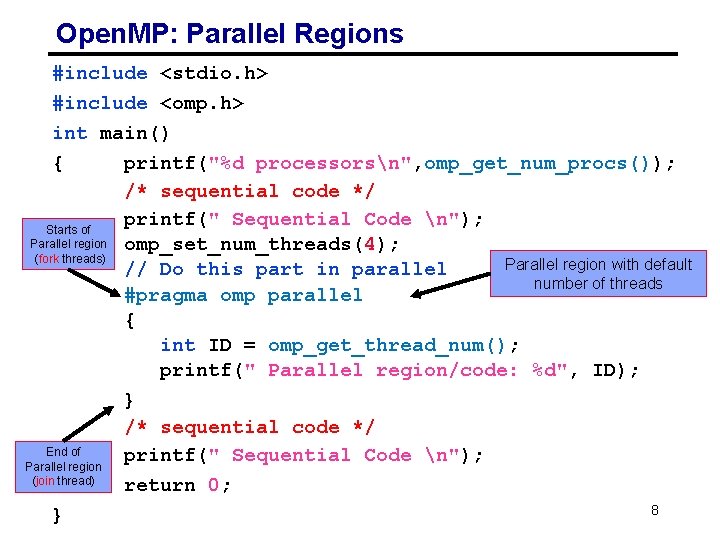

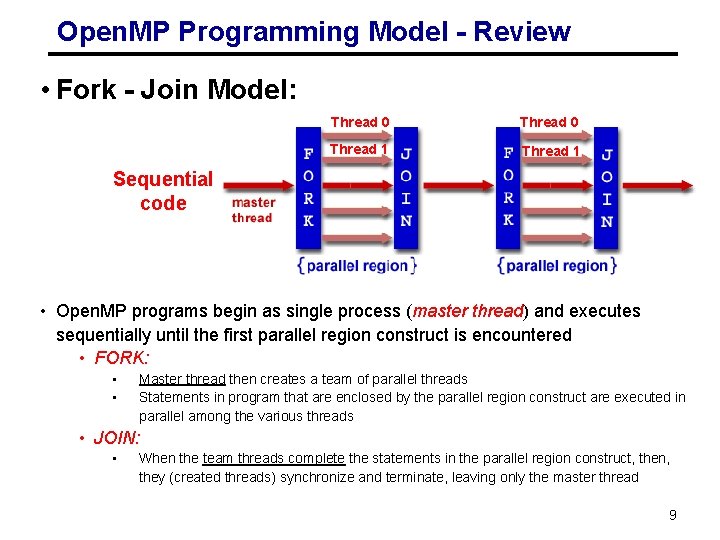

OpenMP: Parallel Regions

    #include <stdio.h>
    #include <omp.h>

    int main() {
        printf("%d processors\n", omp_get_num_procs());

        /* sequential code */
        printf("Sequential Code\n");

        /* Request 4 threads for the region below; without this call
           the region runs with the default number of threads. */
        omp_set_num_threads(4);

        /* Start of parallel region (fork threads): do this part in parallel */
        #pragma omp parallel
        {
            int ID = omp_get_thread_num();
            printf("Parallel region/code: %d\n", ID);
        }
        /* End of parallel region (join threads) */

        /* sequential code */
        printf("Sequential Code\n");
        return 0;
    }

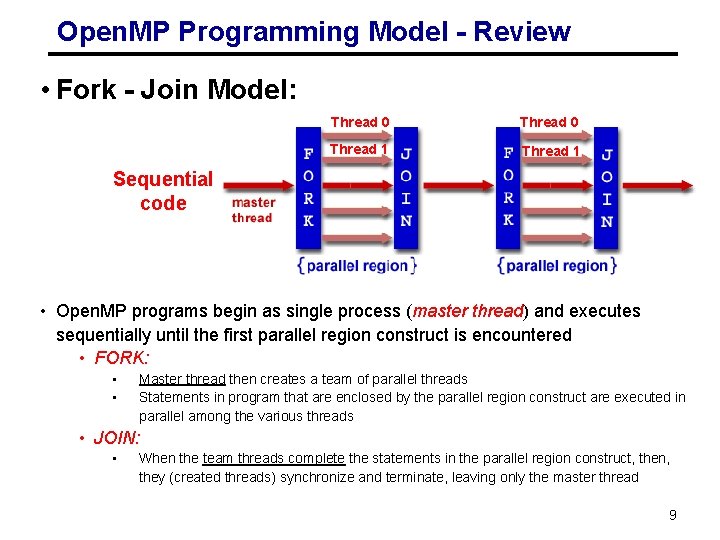

OpenMP Programming Model - Review

- Fork-Join Model:
  - An OpenMP program begins as a single process (the master thread) and executes sequentially until the first parallel region construct is encountered.
- FORK:
  - The master thread then creates a team of parallel threads.
  - The statements enclosed by the parallel region construct are executed in parallel among the various threads.
- JOIN:
  - When the team threads complete the statements in the parallel region construct, the created threads synchronize and terminate, leaving only the master thread.

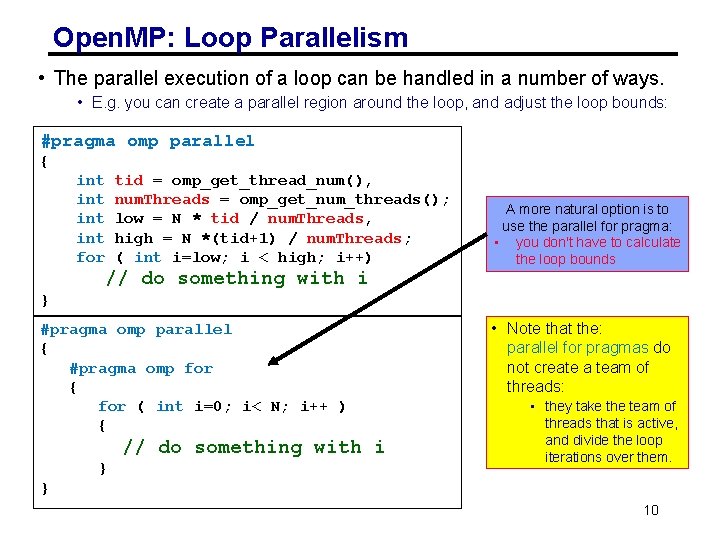

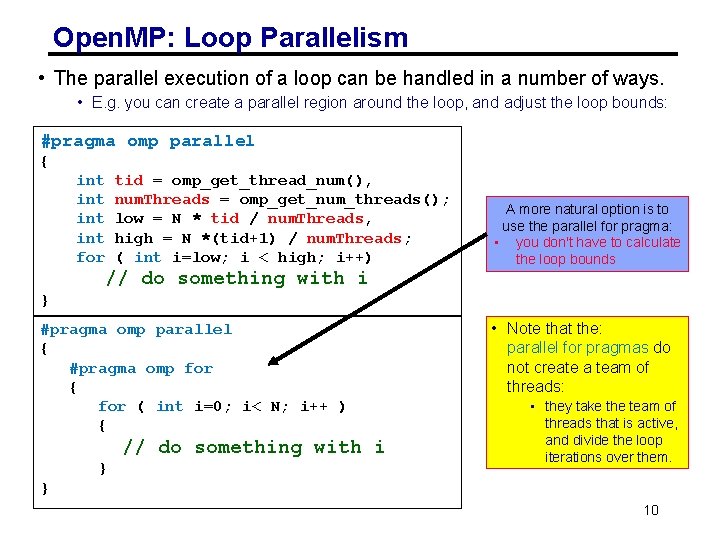

OpenMP: Loop Parallelism

- The parallel execution of a loop can be handled in a number of ways.
- E.g. you can create a parallel region around the loop and adjust the loop bounds:

      #pragma omp parallel
      {
          int tid = omp_get_thread_num(), numThreads = omp_get_num_threads();
          int low = N * tid / numThreads, high = N * (tid + 1) / numThreads;
          for (int i = low; i < high; i++) {
              // do something with i
          }
      }

- A more natural option is to use the `for` pragma inside the parallel region, so you don't have to calculate the loop bounds:

      #pragma omp parallel
      {
          #pragma omp for
          for (int i = 0; i < N; i++) {
              // do something with i
          }
      }

- Note that the `for` pragma does not create a team of threads: it takes the team of threads that is active and divides the loop iterations over them.
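The manual bound calculation can be checked in isolation. The sketch below factors it into a hypothetical helper, `chunk_bounds`, which is not part of the slides; the `long long` cast is an added assumption to avoid overflow of `n * tid` for large `n`.

```c
#include <assert.h>

/* Hypothetical helper: half-open range [low, high) of iterations
   owned by thread `tid` out of `num_threads`, using the same
   formula as the manual loop-splitting example. */
void chunk_bounds(int n, int tid, int num_threads, int *low, int *high) {
    assert(num_threads > 0 && tid >= 0 && tid < num_threads);
    *low  = (int)((long long)n * tid / num_threads);
    *high = (int)((long long)n * (tid + 1) / num_threads);
}
```

For `n = 10` and 4 threads this gives the ranges [0, 2), [2, 5), [5, 7) and [7, 10): consecutive chunks share their boundary, so every iteration is covered exactly once even when `n` is not a multiple of the thread count.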

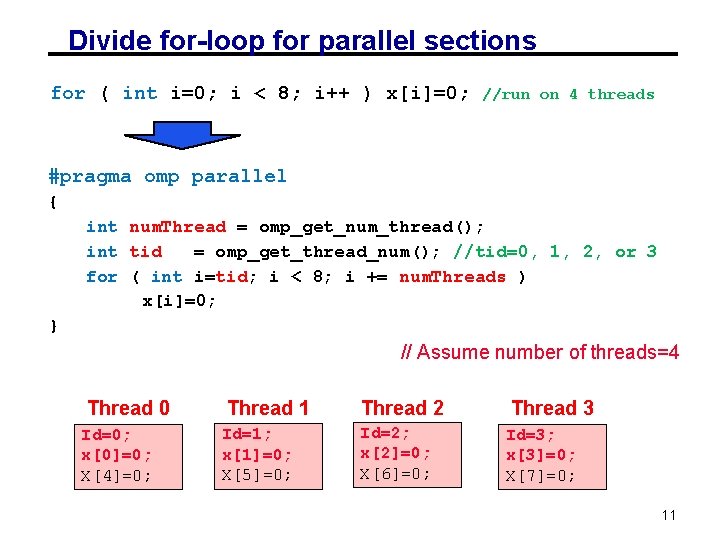

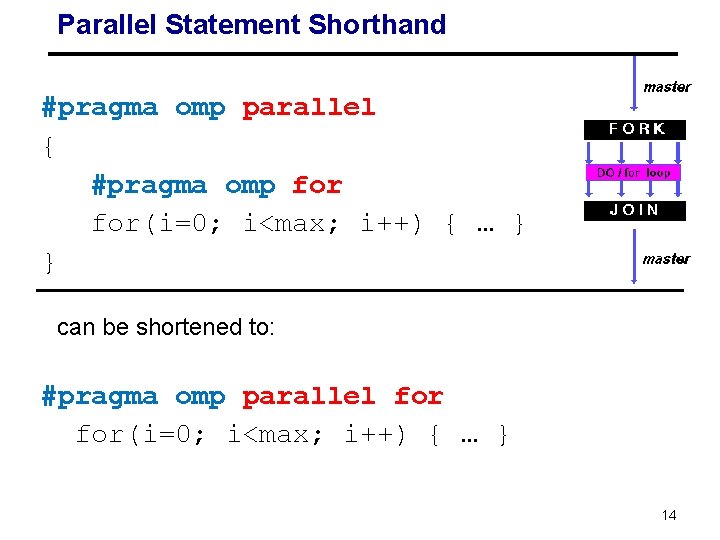

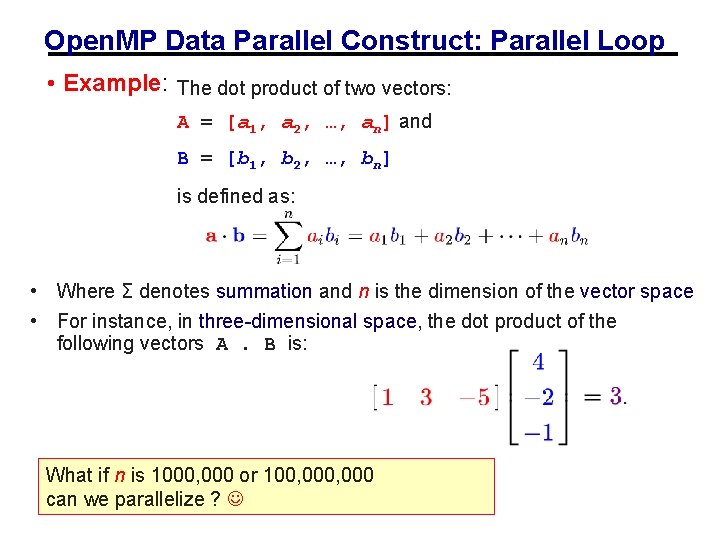

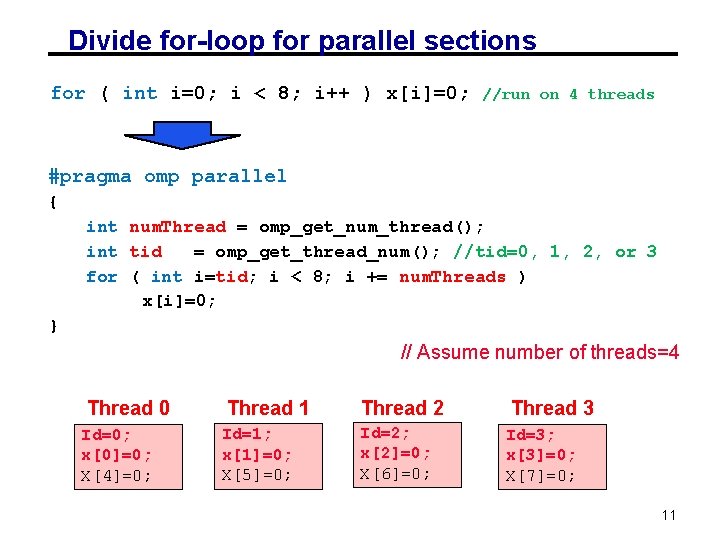

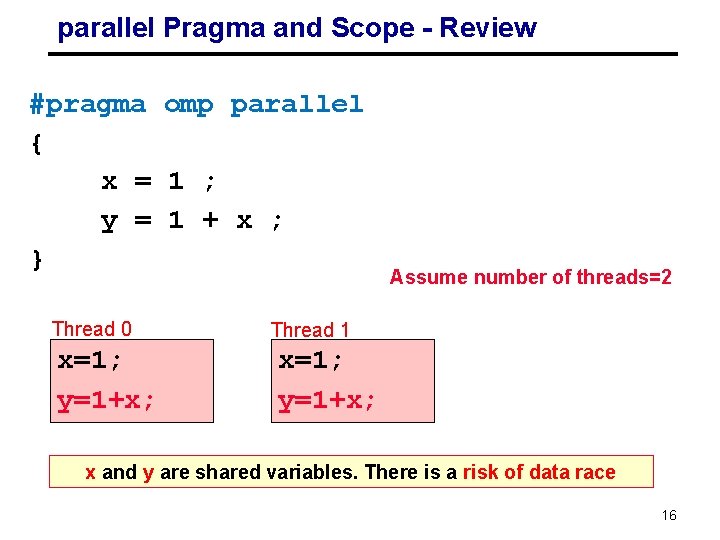

Divide for-loop for parallel sections

Sequential loop:

    for (int i = 0; i < 8; i++)
        x[i] = 0;

The same loop divided by hand, run on 4 threads:

    #pragma omp parallel
    {
        int numThreads = omp_get_num_threads();
        int tid = omp_get_thread_num();   // tid = 0, 1, 2, or 3
        for (int i = tid; i < 8; i += numThreads)
            x[i] = 0;
    }

Assuming the number of threads is 4:

- Thread 0 (Id=0): x[0]=0; x[4]=0;
- Thread 1 (Id=1): x[1]=0; x[5]=0;
- Thread 2 (Id=2): x[2]=0; x[6]=0;
- Thread 3 (Id=3): x[3]=0; x[7]=0;
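To make the cyclic assignment visible, the sketch below records which thread handled each index instead of writing zeros. The function name `record_cyclic_owners` is hypothetical, and the `_OPENMP` guard with its serial fallback values is an assumption so the code also builds without OpenMP.

```c
#include <assert.h>
#include <stddef.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: owner[i] receives the id of the thread that
   handled index i under a cyclic distribution. Returns the team size. */
int record_cyclic_owners(int *owner, int n) {
    assert(owner != NULL || n == 0);
    int team = 1;
    #pragma omp parallel
    {
        int tid = 0, num_threads = 1;      /* serial fallback values */
#ifdef _OPENMP
        tid = omp_get_thread_num();
        num_threads = omp_get_num_threads();
#endif
        if (tid == 0)
            team = num_threads;            /* only thread 0 writes the shared variable */
        for (int i = tid; i < n; i += num_threads)
            owner[i] = tid;                /* each index is written by exactly one thread */
    }
    return team;
}
```

Whatever team size the runtime grants, index `i` ends up owned by thread `i % team`, which matches the per-thread listing above when the team has 4 threads.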

![Use pragma parallel for](https://slidetodoc.com/presentation_image_h2/432653c50eea2742dfd59e0121503ec9/image-12.jpg)

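Use pragma parallel for

Sequential loop:

    for (int i = 0; i < 8; i++)
        x[i] = 0;

With the combined pragma:

    #pragma omp parallel for
    for (int i = 0; i < 8; i++)
        x[i] = 0;

The OpenMP runtime system automatically divides the loop iterations among the available team of threads, for example:

- Id=0: x[0]=0; x[4]=0;
- Id=1: x[1]=0; x[5]=0;
- Id=2: x[2]=0; x[6]=0;
- Id=3: x[3]=0; x[7]=0;

Which iterations each thread receives depends on the loop schedule; the cyclic assignment listed here corresponds to `schedule(static, 1)`.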

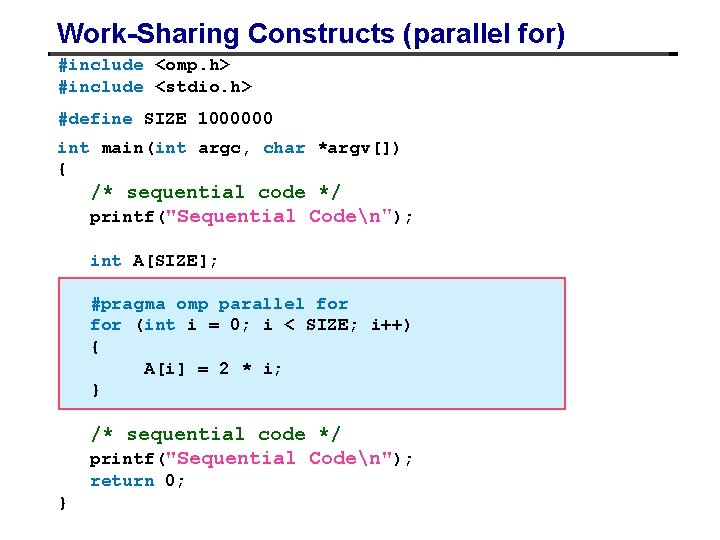

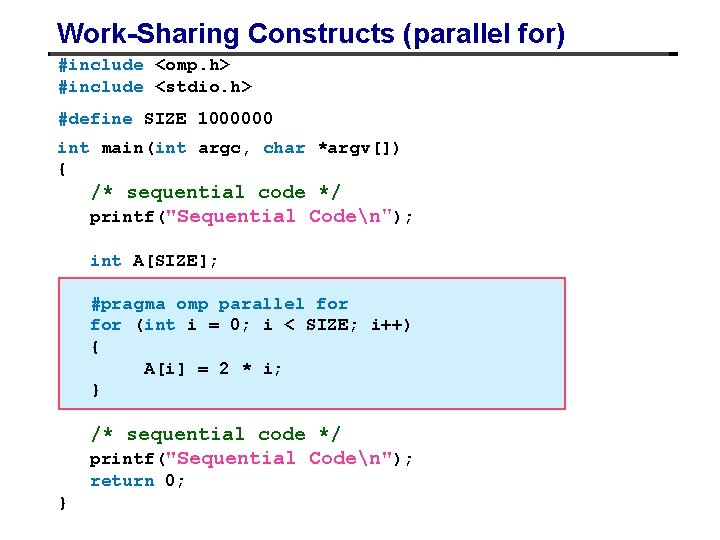

Work-Sharing Constructs (parallel for)

    #include <omp.h>
    #include <stdio.h>

    #define SIZE 1000000

    int main(int argc, char *argv[]) {
        /* static storage: a 1,000,000-element array may not fit on the stack */
        static int A[SIZE];

        /* sequential code */
        printf("Sequential Code\n");

        /* the iterations are divided among the threads */
        #pragma omp parallel for
        for (int i = 0; i < SIZE; i++) {
            A[i] = 2 * i;
        }

        /* sequential code */
        printf("Sequential Code\n");
        return 0;
    }

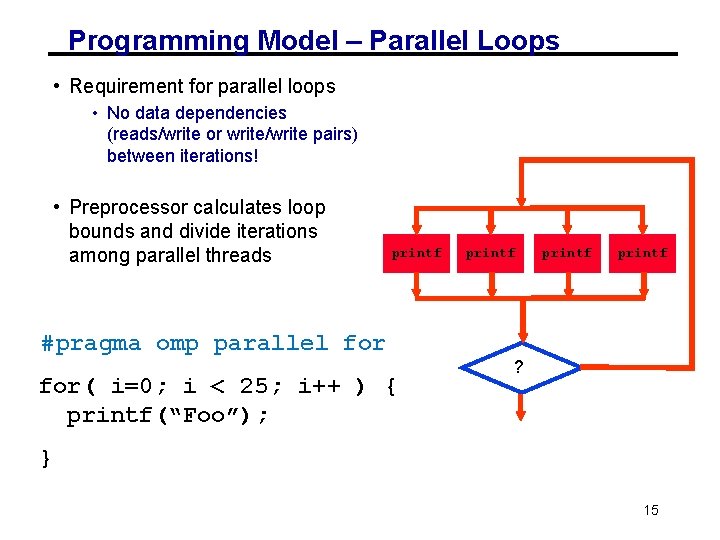

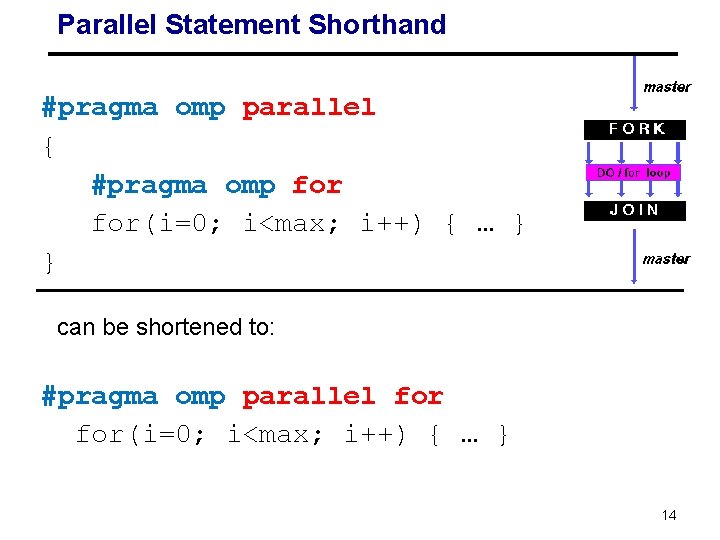

Parallel Statement Shorthand

    #pragma omp parallel
    {
        #pragma omp for
        for (i = 0; i < max; i++) { … }
    }

can be shortened to:

    #pragma omp parallel for
    for (i = 0; i < max; i++) { … }

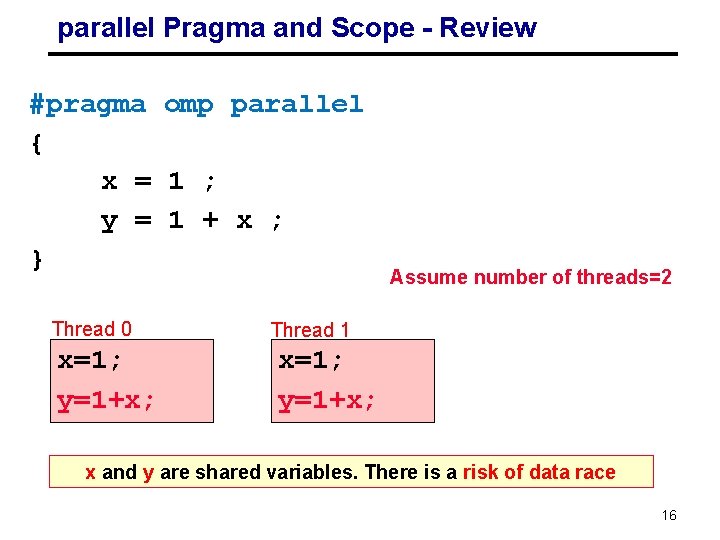

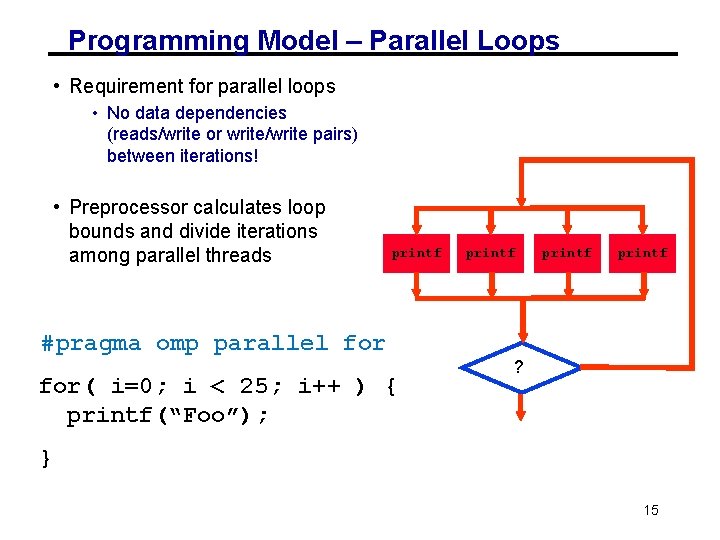

Programming Model - Parallel Loops

- Requirement for parallel loops:
  - No data dependencies (read/write or write/write pairs) between iterations!
- The compiler and runtime calculate the loop bounds and divide the iterations among the parallel threads.

      #pragma omp parallel for
      for (i = 0; i < 25; i++) {
          printf("Foo");
      }

- The 25 `printf` calls are distributed over the threads, so the order in which they execute is not determined.
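The dependency requirement can be illustrated with two loops. Both functions below are hypothetical examples, not taken from the slides: the first has independent iterations and is safe to parallelize, while the second carries a dependence from one iteration to the next and is therefore left serial.

```c
#include <assert.h>

/* Independent iterations: out[i] depends only on in[i]. */
void scale_by_two(const int *in, int *out, int n) {
    assert(n >= 0);
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        out[i] = 2 * in[i];
}

/* Loop-carried dependence: iteration i reads a[i-1], which the
   previous iteration wrote. Adding "parallel for" here would be
   a data race, so the loop stays serial. */
void prefix_sum_serial(int *a, int n) {
    assert(n >= 0);
    for (int i = 1; i < n; i++)
        a[i] += a[i - 1];
}
```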

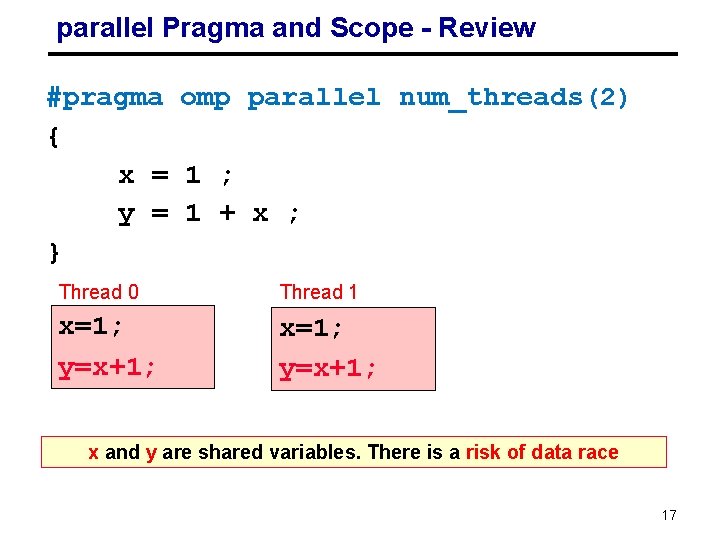

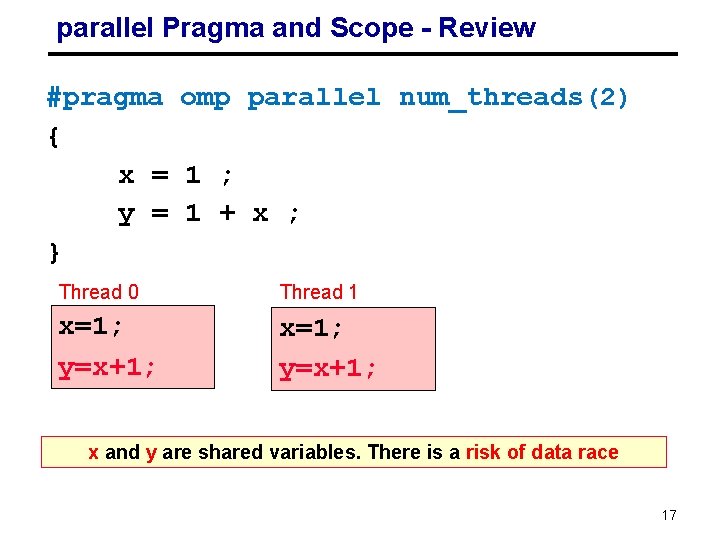

parallel Pragma and Scope - Review

    #pragma omp parallel
    {
        x = 1;
        y = 1 + x;
    }

Assume the number of threads is 2:

- Thread 0: x=1; y=1+x;
- Thread 1: x=1; y=1+x;

x and y are shared variables. There is a risk of a data race.

parallel Pragma and Scope - Review

    #pragma omp parallel num_threads(2)
    {
        x = 1;
        y = 1 + x;
    }

- Thread 0: x=1; y=1+x;
- Thread 1: x=1; y=1+x;

x and y are shared variables. There is a risk of a data race.
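One way to remove the race, sketched here under assumptions, is to declare the variables inside the parallel region, which makes them private to each thread. The function name `compute_y_without_race` is hypothetical, and the `critical` section that publishes the result is an illustrative choice rather than the slides' method.

```c
#include <assert.h>

/* Hypothetical example: x and y are declared inside the region,
   so every thread works on its own private copies. */
int compute_y_without_race(void) {
    int result = 0;                 /* shared */
    #pragma omp parallel num_threads(2)
    {
        int x = 1;                  /* private to this thread */
        int y = 1 + x;              /* private to this thread */
        assert(y == 2);             /* no other thread can modify x or y */
        #pragma omp critical
        result = y;                 /* one thread at a time writes the shared variable */
    }
    return result;
}
```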

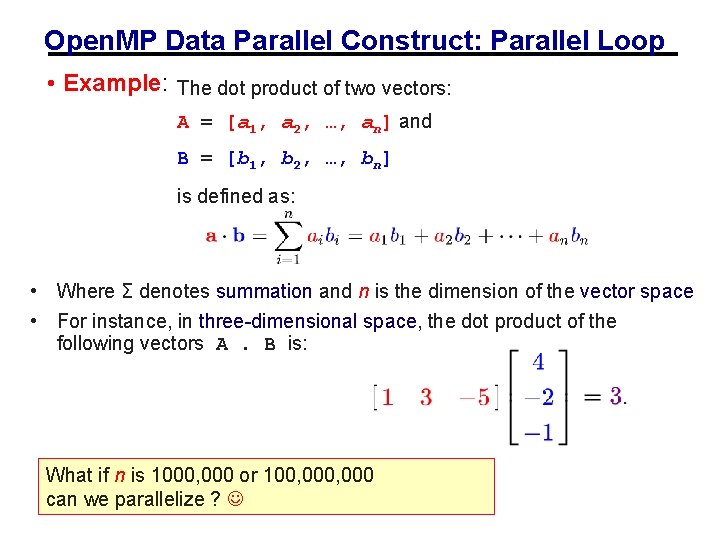

OpenMP Data Parallel Construct: Parallel Loop

- Example: the dot product of two vectors $A = [a_1, a_2, \dots, a_n]$ and $B = [b_1, b_2, \dots, b_n]$ is defined as:

  $$A \cdot B = \sum_{i=1}^{n} a_i b_i = a_1 b_1 + a_2 b_2 + \dots + a_n b_n$$

- Here $\Sigma$ denotes summation and $n$ is the dimension of the vector space.
- For instance, in three-dimensional space the dot product $A \cdot B$ is $a_1 b_1 + a_2 b_2 + a_3 b_3$.

What if n is 1,000,000 or 100,000: can we parallelize the computation?
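A possible parallel version of the dot product is sketched below. It relies on the `reduction(+ : sum)` clause, which the summary lists as a topic for next time, so treat it as a preview under that assumption; the function name `dot_product` is hypothetical. Each thread accumulates a private partial sum, and the partial sums are combined when the loop ends.

```c
#include <assert.h>

/* Hypothetical example: dot product of two n-element vectors.
   The reduction clause gives every thread a private copy of sum
   and adds the copies together at the end of the loop. */
double dot_product(const double *a, const double *b, int n) {
    assert(n >= 0);
    double sum = 0.0;
    #pragma omp parallel for reduction(+ : sum)
    for (int i = 0; i < n; i++)
        sum += a[i] * b[i];
    return sum;
}
```

Without the reduction clause, `sum` would be a shared variable updated by all threads at once, which is exactly the kind of data race described in the scope review.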

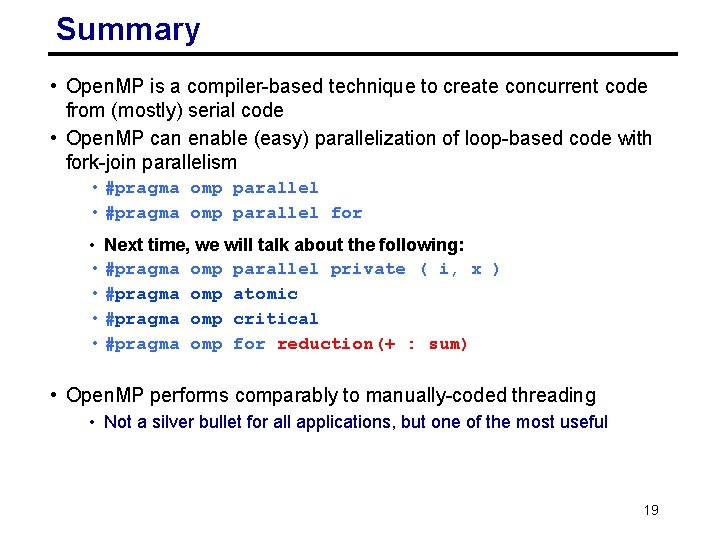

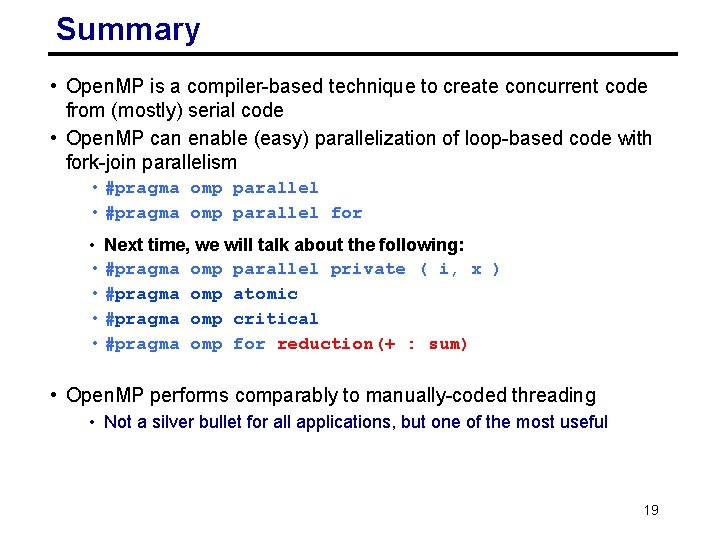

Summary

- OpenMP is a compiler-based technique to create concurrent code from (mostly) serial code.
- OpenMP can enable (easy) parallelization of loop-based code with fork-join parallelism:
  - `#pragma omp parallel for`
- Next time, we will talk about the following:
  - `#pragma omp parallel private(i, x)`
  - `#pragma omp atomic`
  - `#pragma omp critical`
  - `#pragma omp for reduction(+ : sum)`
- OpenMP performs comparably to manually-coded threading.
- It is not a silver bullet for all applications, but it is one of the most useful.