Out of sample extension of PCA Kernel PCA

- Slides: 22

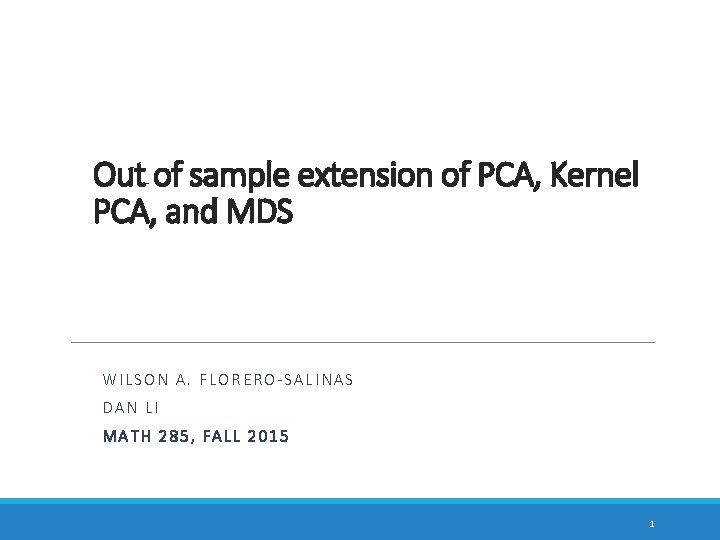

Out of sample extension of PCA, Kernel PCA, and MDS

Wilson A. Florero-Salinas, Dan Li

MATH 285, Fall 2015

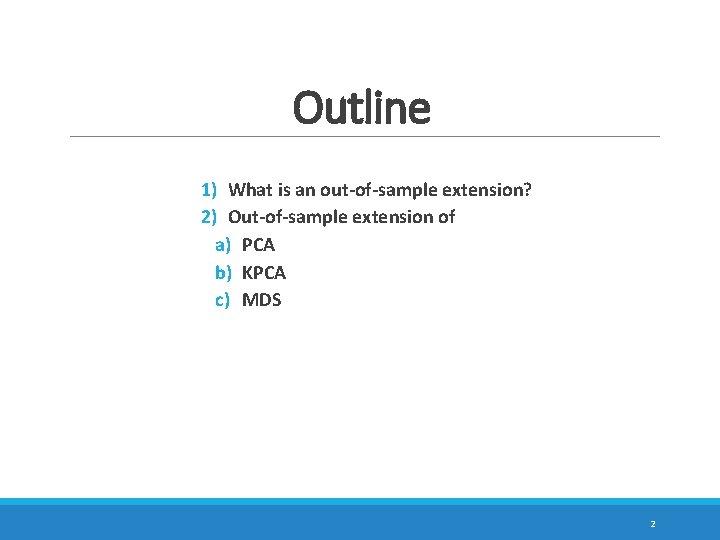

Outline

1) What is an out-of-sample extension?
2) Out-of-sample extension of
   a) PCA
   b) KPCA
   c) MDS

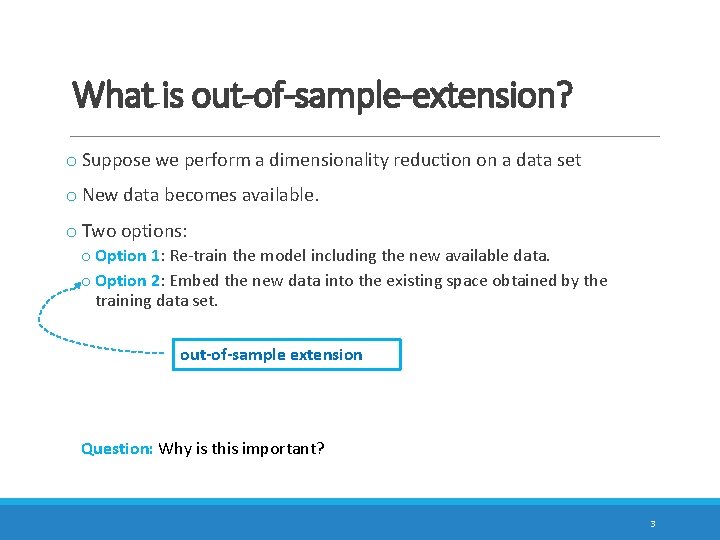

What is an out-of-sample extension?

- Suppose we perform dimensionality reduction on a data set.
- New data becomes available.
- Two options:
  - Option 1: Retrain the model, including the newly available data.
  - Option 2: Embed the new data into the existing space obtained from the training data set. This is the out-of-sample extension.

Question: Why is this important?

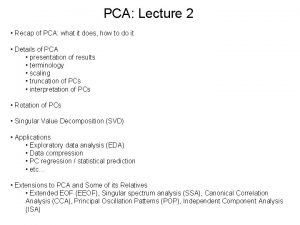

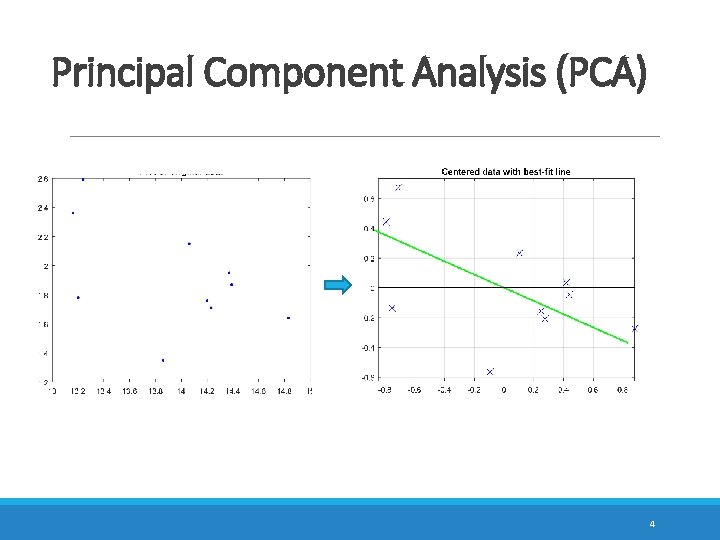

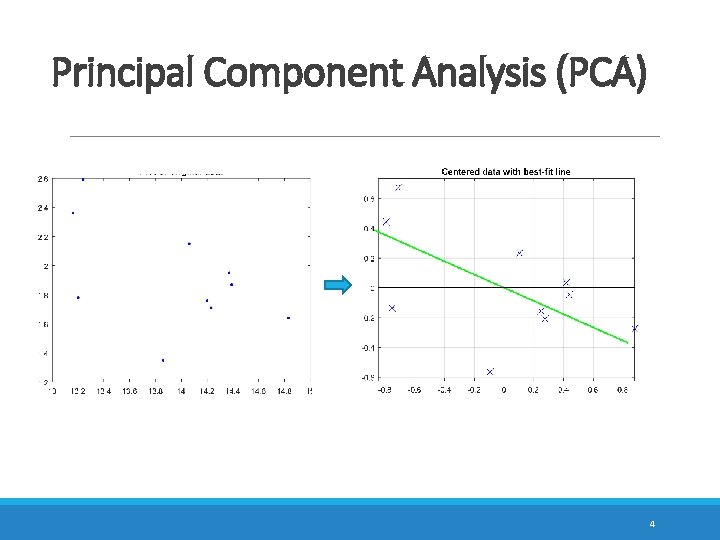

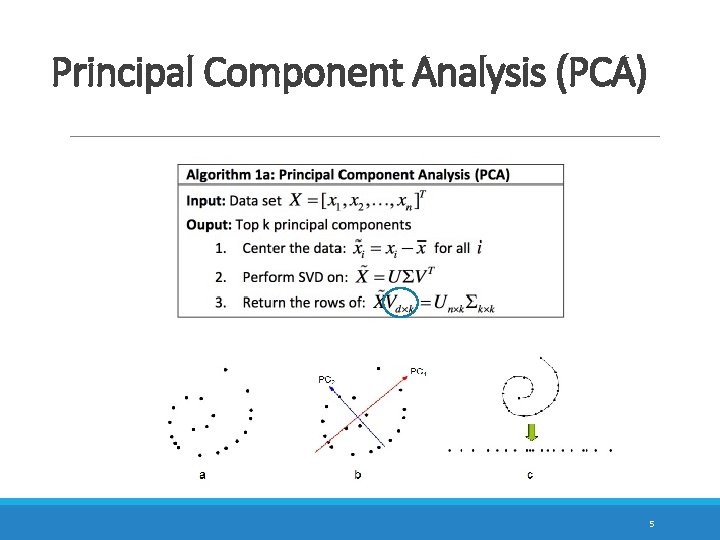

Principal Component Analysis (PCA)

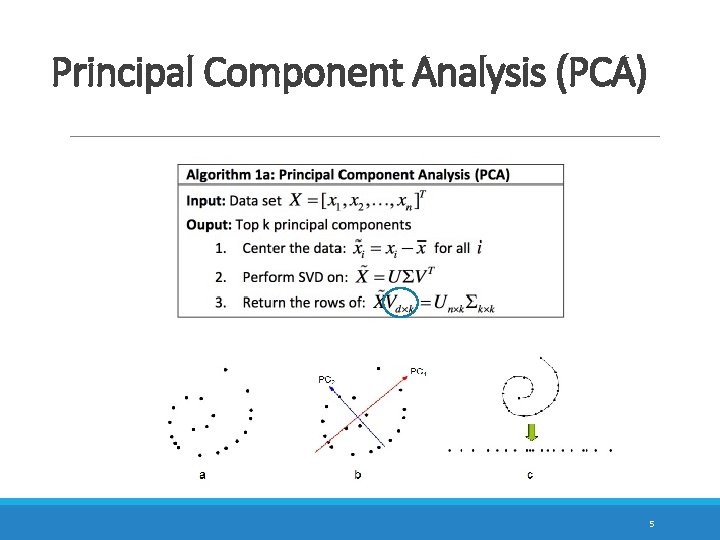

Principal Component Analysis (PCA)

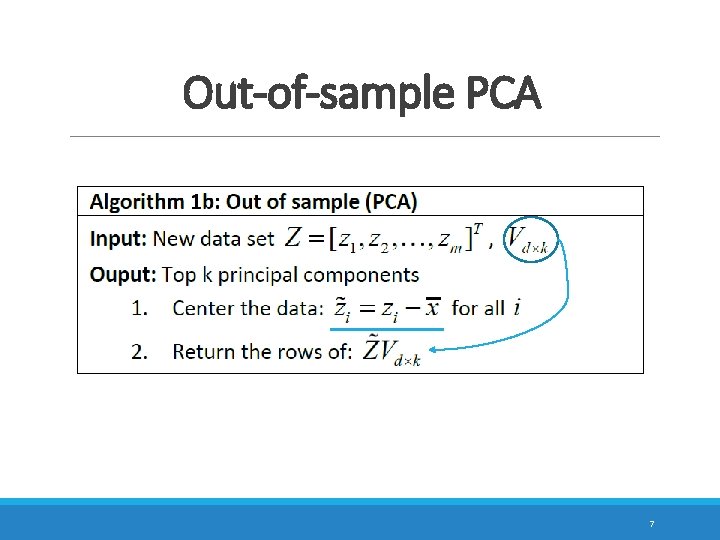

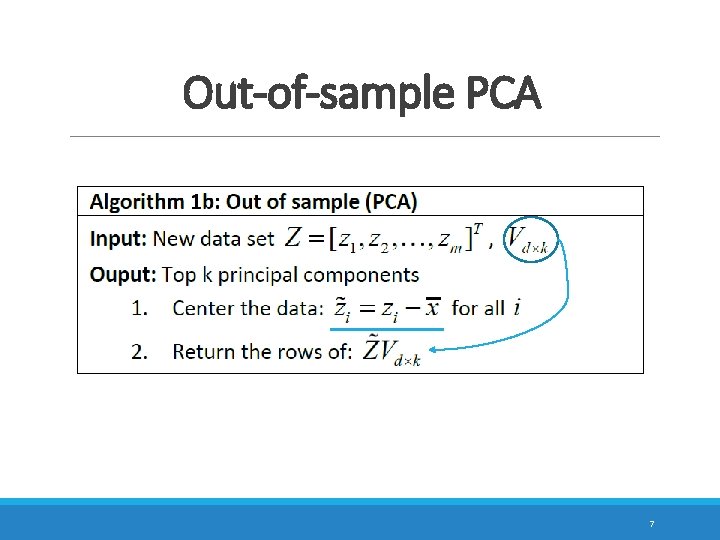

Out-of-sample PCA

- Suppose new data becomes available.

Out-of-sample PCA
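For linear PCA, the usual out-of-sample extension is to center the new points with the training mean and project them onto the principal directions learned from the training set. The following is a minimal NumPy sketch under that assumption; the function names `fit_pca` and `transform_pca` and the random data are hypothetical and do not come from the slides.

```python
import numpy as np

def fit_pca(X_train, k):
    """Fit PCA on training rows; return the training mean and top-k directions."""
    mean = X_train.mean(axis=0)
    Xc = X_train - mean
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return mean, Vt[:k].T  # shapes (d,) and (d, k)

def transform_pca(X_new, mean, components):
    """Embed points using the TRAINING mean and directions (no re-fitting)."""
    return (X_new - mean) @ components

# Hypothetical data: 100 training points and 10 new points in 5 dimensions.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(100, 5))
X_new = rng.normal(size=(10, 5))

mean, components = fit_pca(X_train, k=2)
Z_train = transform_pca(X_train, mean, components)  # training embedding
Z_new = transform_pca(X_new, mean, components)      # out-of-sample embedding
```

Note that the new points are not used to recompute the mean or the directions, so the training embedding stays unchanged.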

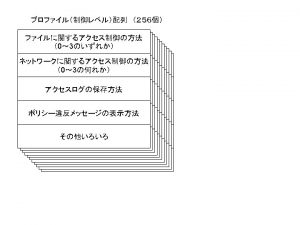

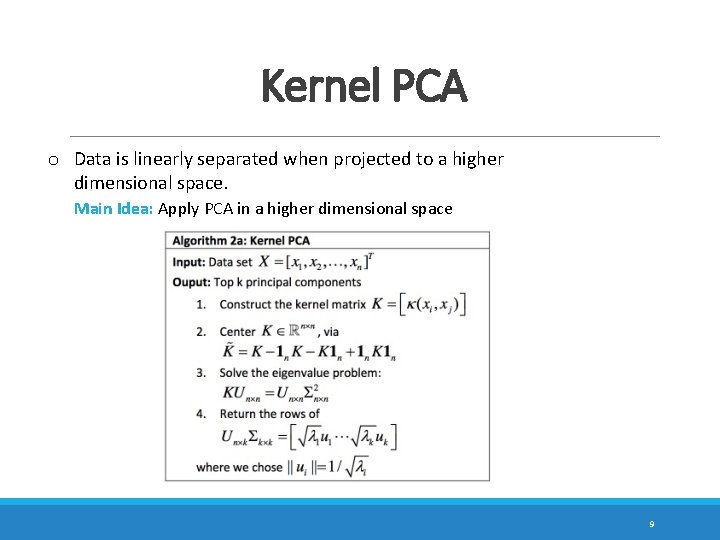

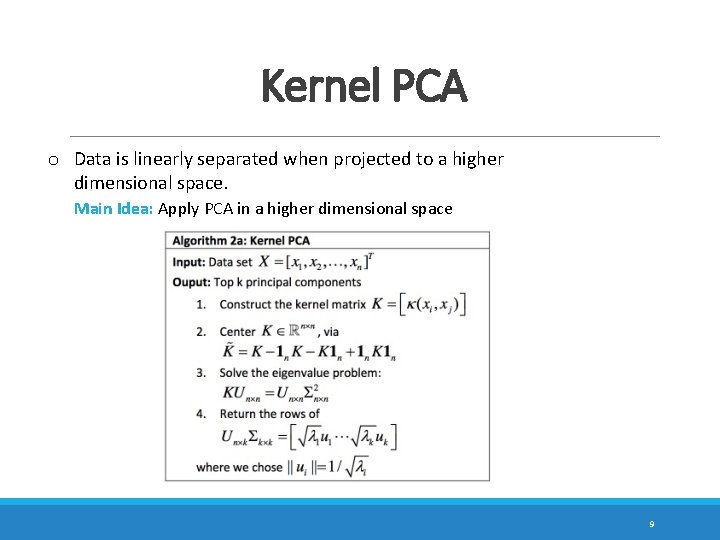

Kernel PCA

Main idea: Apply PCA in the feature space.

- Center the data in the feature space, too.
- Apply PCA.
- Solve the eigenvalue problem.
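In the standard kernel PCA formulation, the centering and eigenvalue steps are usually written as below. The notation is assumed here rather than taken from the slides: $K_{ij} = k(x_i, x_j)$ is the training kernel matrix, $\mathbf{1}_n$ is the $n \times n$ matrix with every entry equal to $1/n$, and $\lambda_j$ is an eigenvalue of the feature-space covariance.

$$
\tilde{K} = K - \mathbf{1}_n K - K \mathbf{1}_n + \mathbf{1}_n K \mathbf{1}_n,
\qquad
\tilde{K}\,\alpha_j = n\lambda_j\,\alpha_j,
\qquad
\alpha_j^\top \tilde{K}\,\alpha_j = 1 .
$$

The last condition rescales each coefficient vector $\alpha_j$ so that the corresponding principal direction has unit norm in the feature space.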

Kernel PCA

- Data that is not linearly separable in the original space may become linearly separable when mapped to a higher-dimensional space.

Main idea: Apply PCA in a higher-dimensional space.

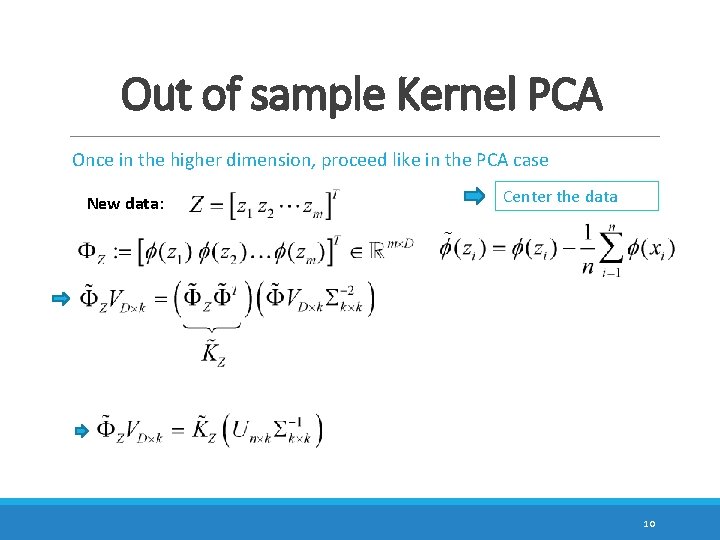

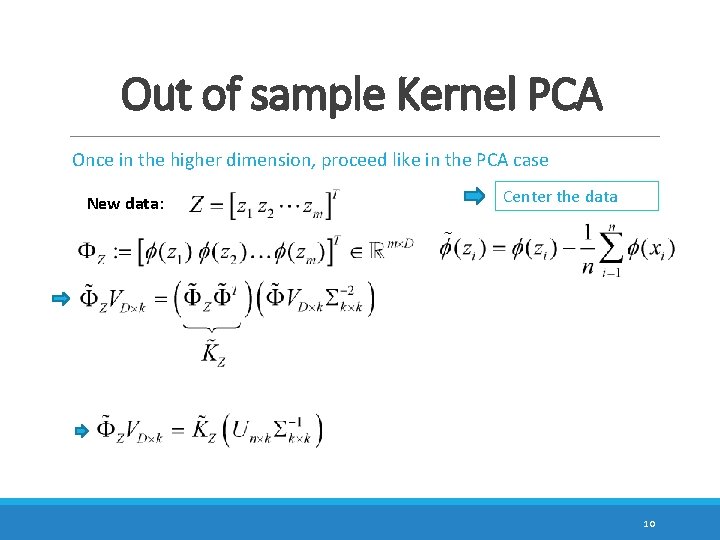

Out of sample Kernel PCA

- Once in the higher-dimensional space, proceed as in the PCA case.
- New data: center the data.

Out of sample Kernel PCA

- Project the new data into the feature space obtained from the training data set.
- Apply the kernel trick.
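The two steps above can be sketched in NumPy as follows. This is a minimal illustration, not the presenters' code: the RBF kernel, the `gamma` value, the function names `rbf_kernel`, `fit_kpca`, and `transform_kpca`, and the random data are all assumptions. The key point is that the kernel values between new and training points are centered with training statistics only.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """Gaussian (RBF) kernel matrix between the rows of A and the rows of B."""
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def fit_kpca(X_train, k, gamma):
    n = X_train.shape[0]
    K = rbf_kernel(X_train, X_train, gamma)
    one_n = np.full((n, n), 1.0 / n)
    # Center the kernel matrix, i.e. center the data in feature space.
    Kc = K - one_n @ K - K @ one_n + one_n @ K @ one_n
    evals, evecs = np.linalg.eigh(Kc)        # eigenvalues in ascending order
    idx = np.argsort(evals)[::-1][:k]        # keep the k largest
    evals, evecs = evals[idx], evecs[:, idx]
    alphas = evecs / np.sqrt(evals)          # unit-norm directions in feature space
    return {"X": X_train, "K": K, "alphas": alphas,
            "gamma": gamma, "Z_train": Kc @ alphas}

def transform_kpca(model, X_new):
    """Out-of-sample embedding: kernel trick plus centering with training stats."""
    K, X = model["K"], model["X"]
    K_new = rbf_kernel(X_new, X, model["gamma"])          # shape (m, n)
    K_new_c = (K_new
               - K_new.mean(axis=1, keepdims=True)        # mean over training points
               - K.mean(axis=0, keepdims=True)            # training column means
               + K.mean())                                # training grand mean
    return K_new_c @ model["alphas"]

# Hypothetical data: 60 training points and 5 new points in 2 dimensions.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(60, 2))
X_new = rng.normal(size=(5, 2))

model = fit_kpca(X_train, k=2, gamma=0.5)
Z_new = transform_kpca(model, X_new)
```

As a sanity check, passing the training points themselves through `transform_kpca` reproduces the stored training embedding `model["Z_train"]`.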

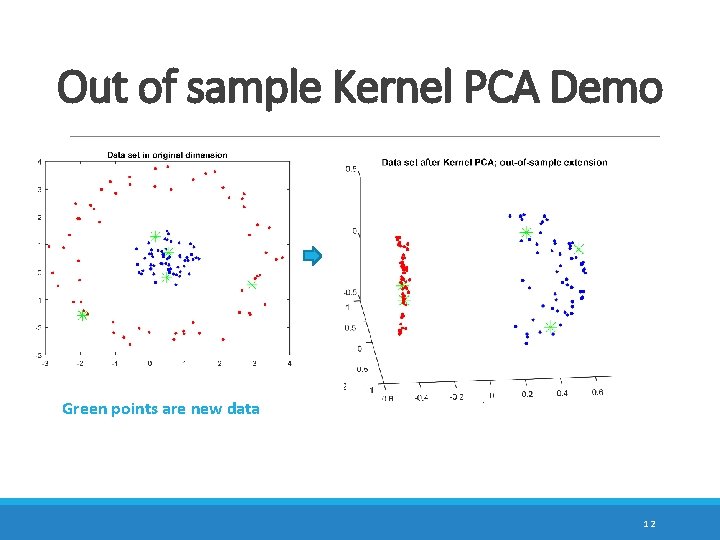

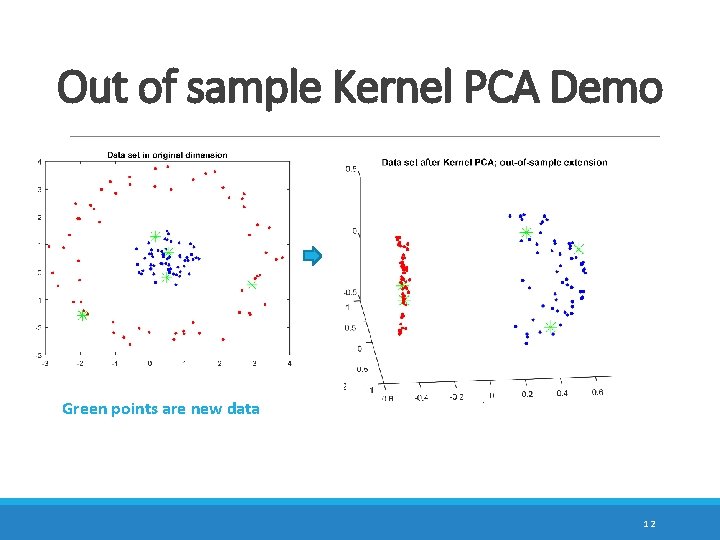

Out of sample Kernel PCA Demo

Green points are new data.

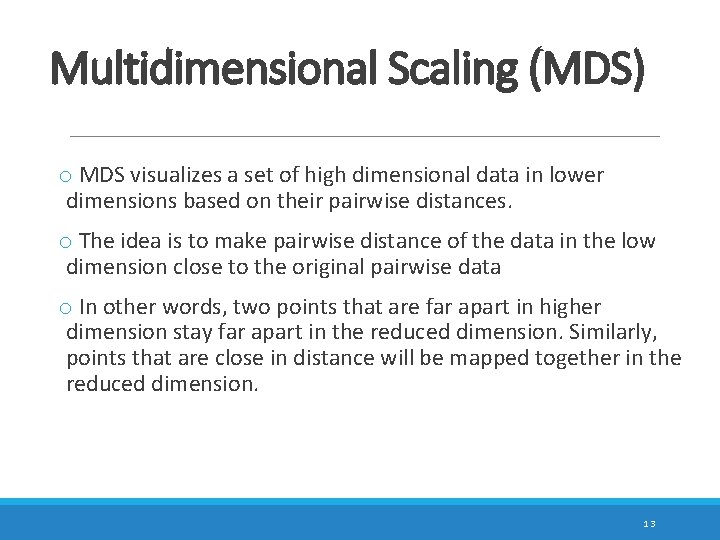

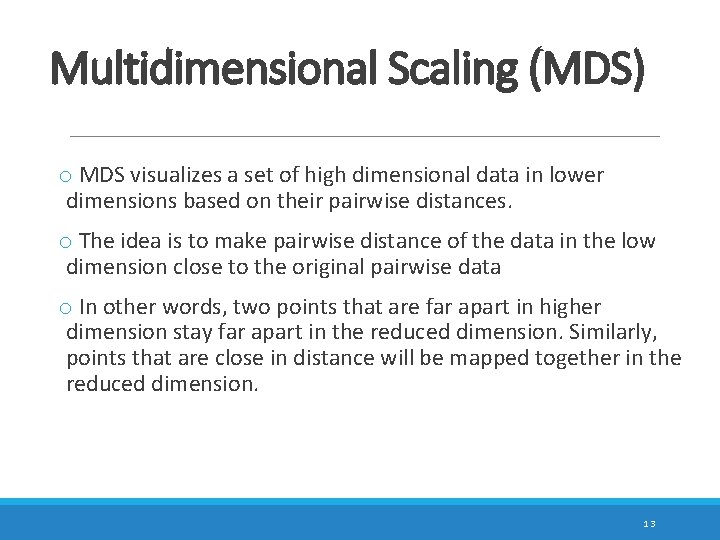

Multidimensional Scaling (MDS)

- MDS visualizes a set of high-dimensional data in lower dimensions based on their pairwise distances.
- The idea is to make the pairwise distances of the data in the low-dimensional space close to the original pairwise distances.
- In other words, two points that are far apart in the higher dimension stay far apart in the reduced dimension. Similarly, points that are close together will be mapped close together in the reduced dimension.

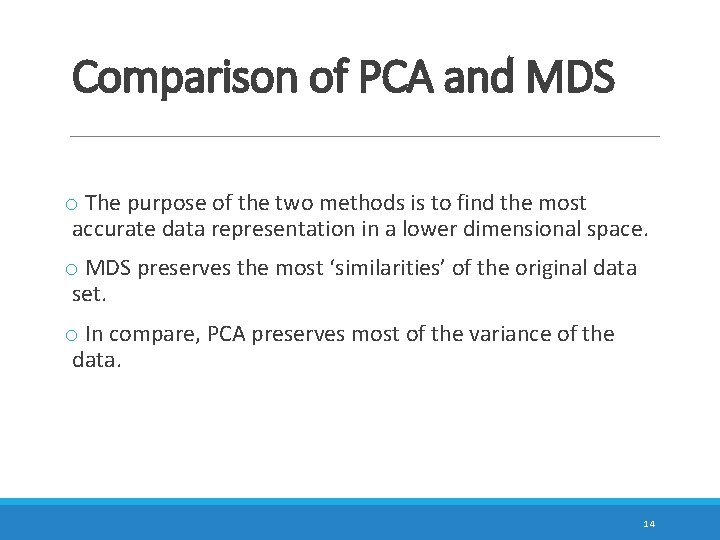

Comparison of PCA and MDS

- The purpose of both methods is to find the most accurate representation of the data in a lower-dimensional space.
- MDS preserves most of the 'similarities' of the original data set.
- In comparison, PCA preserves most of the variance of the data.

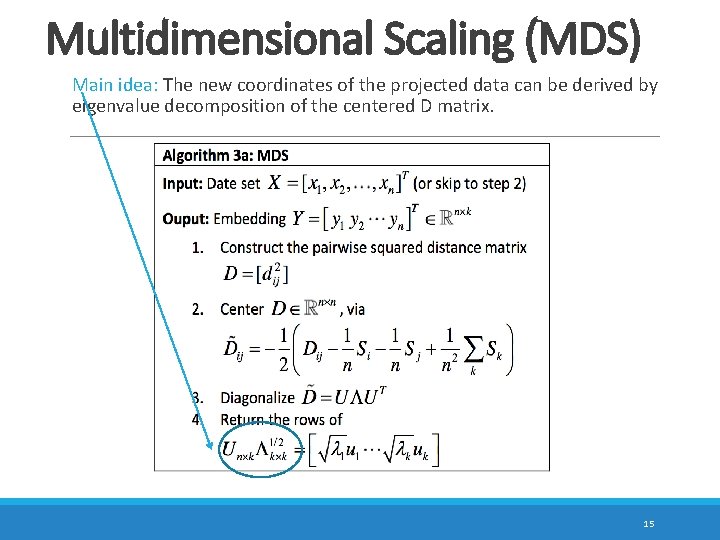

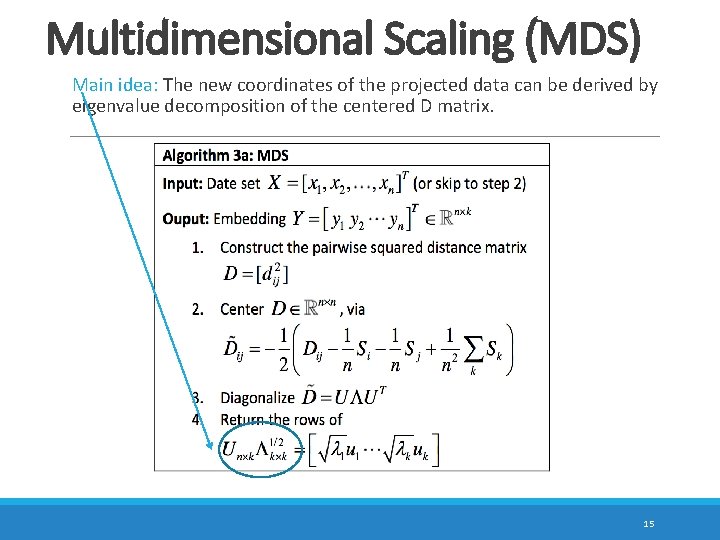

Multidimensional Scaling (MDS)

Main idea: The new coordinates of the projected data can be derived by eigenvalue decomposition of the centered D matrix.
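For classical (metric) MDS, this is commonly written as the double-centering construction below. The notation is assumed rather than taken from the slides: $D = [d_{ij}^2]$ holds the squared pairwise distances of the $n$ training points, $\mathbf{1}$ is the all-ones vector, and $V_k$, $\Lambda_k$ hold the top $k$ eigenvectors and eigenvalues.

$$
J = I - \tfrac{1}{n}\mathbf{1}\mathbf{1}^\top,
\qquad
B = -\tfrac{1}{2}\, J D J = V \Lambda V^\top,
\qquad
Y = V_k \Lambda_k^{1/2} .
$$

The rows of $Y$ are the $k$-dimensional coordinates. When the distances are exactly Euclidean, $B$ is the Gram matrix of the mean-centered points, which is why its eigendecomposition recovers a configuration with the same pairwise distances.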

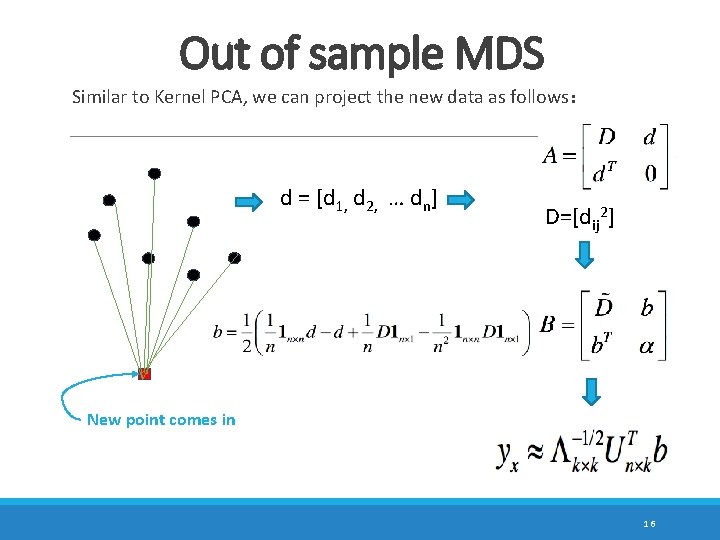

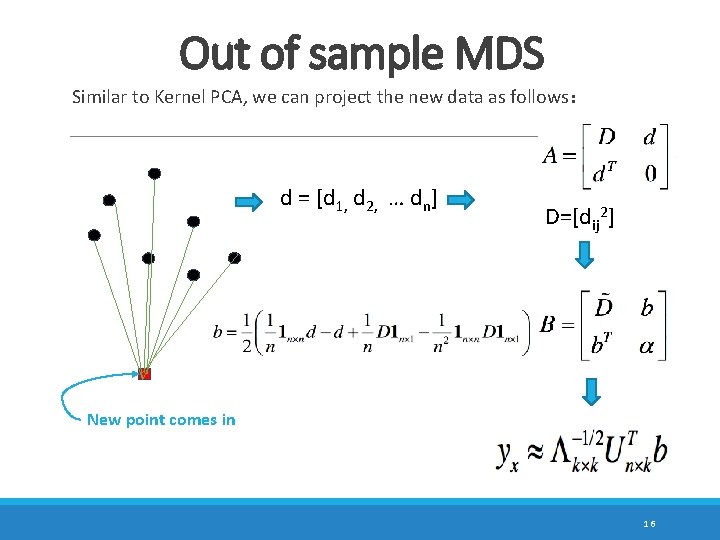

Out of sample MDS

Similar to Kernel PCA, we can project the new data as follows:

- $d = [d_1, d_2, \dots, d_n]$
- $D = [d_{ij}^2]$
- A new point comes in.

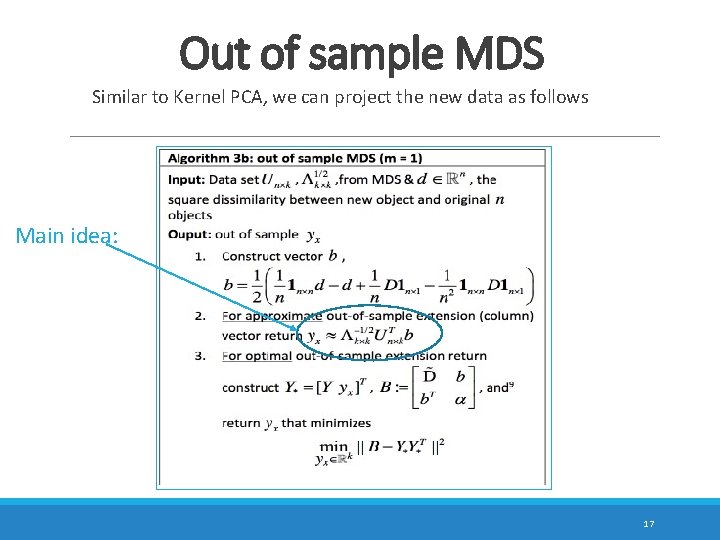

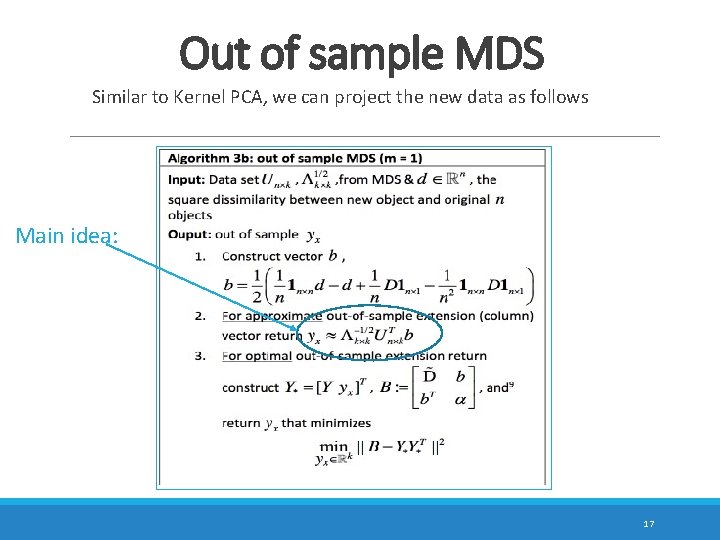

Out of sample MDS

Similar to Kernel PCA, we can project the new data as follows.

Main idea:
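A minimal NumPy sketch of one standard out-of-sample rule for classical MDS follows; it is not necessarily the exact formula shown on the slide. It assumes that both `D2` and `d2_new` hold squared distances, and the function names `fit_classical_mds` and `embed_new_point` and the random data are hypothetical. The new point is embedded using only its distances to the training points and the eigenpairs from training.

```python
import numpy as np

def fit_classical_mds(D2, k):
    """Classical MDS on an (n, n) matrix of SQUARED pairwise distances."""
    n = D2.shape[0]
    J = np.eye(n) - np.full((n, n), 1.0 / n)   # centering matrix
    B = -0.5 * J @ D2 @ J                      # double-centered Gram matrix
    evals, evecs = np.linalg.eigh(B)           # eigenvalues in ascending order
    idx = np.argsort(evals)[::-1][:k]          # keep the k largest
    evals, evecs = evals[idx], evecs[:, idx]
    Y = evecs * np.sqrt(evals)                 # training coordinates, shape (n, k)
    return Y, evals, evecs, D2.mean(axis=1)    # also return training row means

def embed_new_point(d2_new, evals, evecs, row_means):
    """Embed new point(s) from squared distances to the n training points.

    d2_new has shape (n,) for one point or (m, n) for m points.
    """
    b = -0.5 * (d2_new - row_means)            # inner products up to a constant
    # The constant drops out because the kept eigenvectors are orthogonal to 1.
    return (b @ evecs) / np.sqrt(evals)

# Hypothetical data: 30 training points and 1 new point in 3 dimensions.
rng = np.random.default_rng(0)
X = rng.normal(size=(31, 3))
X_train, x_new = X[:30], X[30]

D2 = ((X_train[:, None, :] - X_train[None, :, :]) ** 2).sum(-1)
d2_new = ((X_train - x_new) ** 2).sum(1)

Y, evals, evecs, row_means = fit_classical_mds(D2, k=3)
y_new = embed_new_point(d2_new, evals, evecs, row_means)
```

In this example the data is exactly Euclidean and `k` equals the true dimension, so the embedded new point reproduces its original distances to the training points. With a smaller `k` or non-Euclidean dissimilarities, the distances are only approximated.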

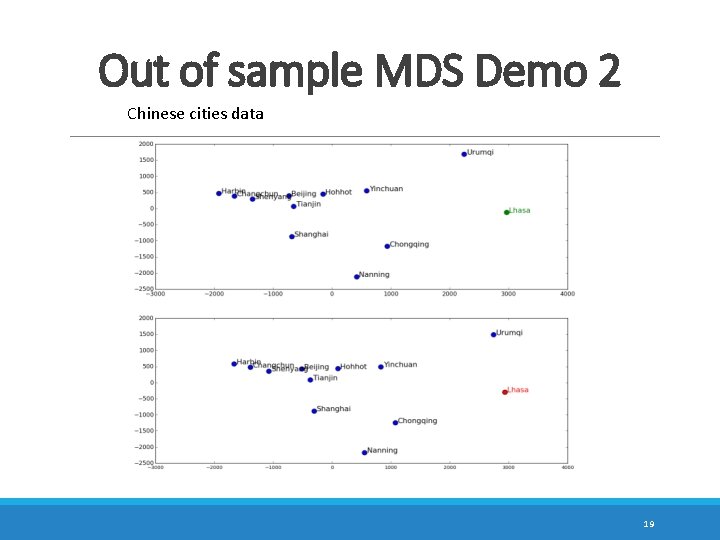

Out of sample MDS Demo 1: Chinese cities data

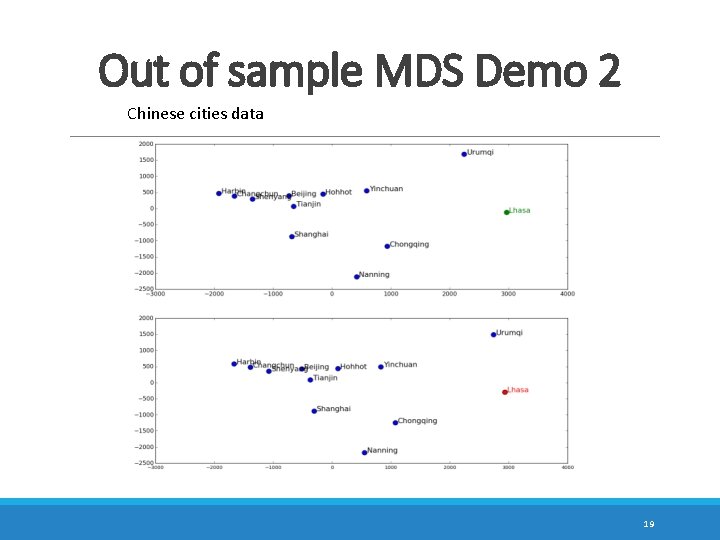

Out of sample MDS Demo 2: Chinese cities data

Questions?

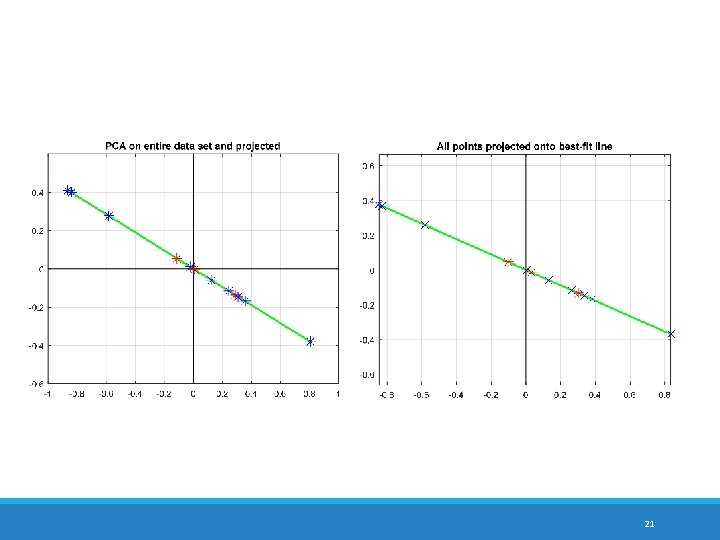

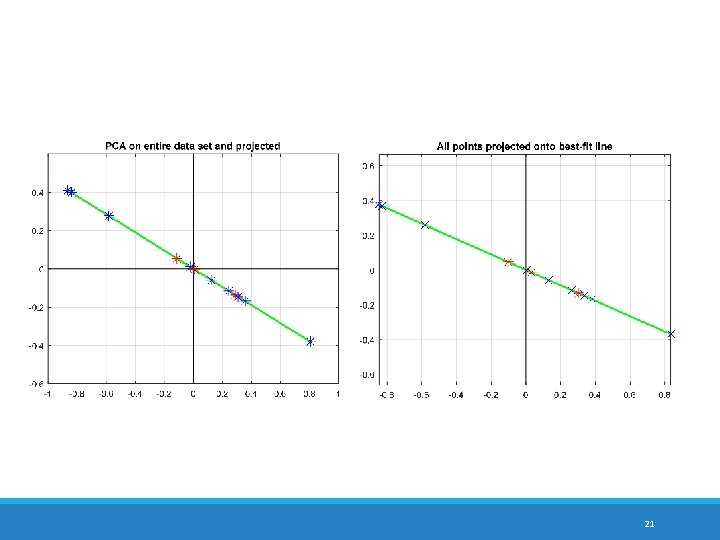


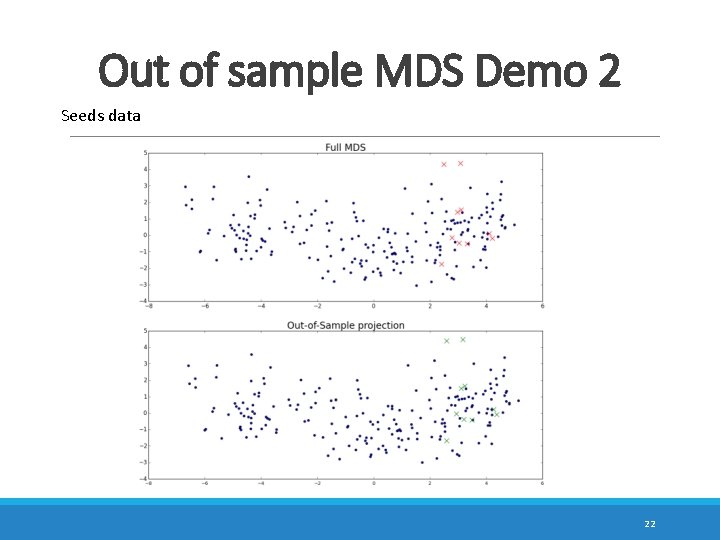

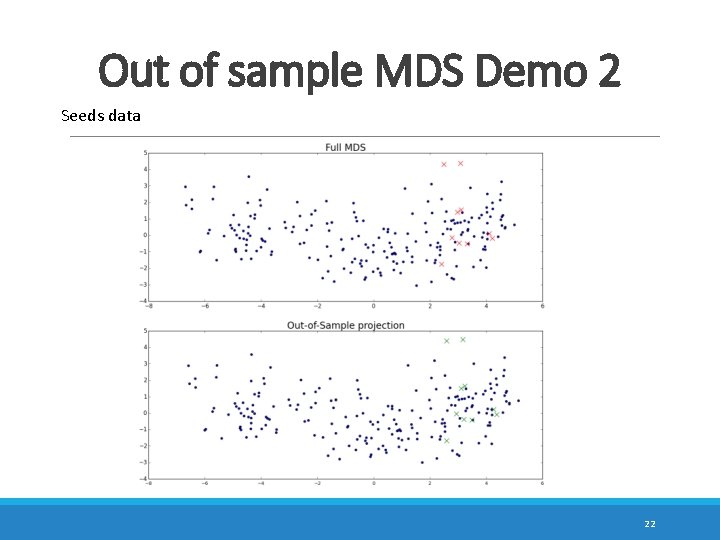

Out of sample MDS Demo 2: Seeds data

Kernel pca

Kernel pca Windows vista kernel extension

Windows vista kernel extension Personification in the song one thing by one direction

Personification in the song one thing by one direction Flanker brand strategy

Flanker brand strategy Extension work activities

Extension work activities Put out the light, and then put out the light

Put out the light, and then put out the light Out, out— robert frost

Out, out— robert frost Out of sight out of mind psychology

Out of sight out of mind psychology Out out allusion

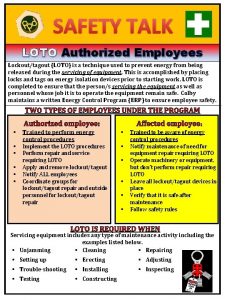

Out out allusion Loto safety talk

Loto safety talk Personification in the road not taken

Personification in the road not taken Time

Time Matthew 11 28-30 msg

Matthew 11 28-30 msg Lock out tag out pictures

Lock out tag out pictures Out, damned spot! out, i say!

Out, damned spot! out, i say! Harmony not discord

Harmony not discord Makna out of sight out of mind

Makna out of sight out of mind Log out tag out deutsch

Log out tag out deutsch Out-of-sample

Out-of-sample Demerits of cluster sampling

Demerits of cluster sampling Theoretical sampling

Theoretical sampling Volunteer sample vs convenience sample

Volunteer sample vs convenience sample Multi-stage sampling example

Multi-stage sampling example