NDN Day at Caltech: Towards an R&D Program


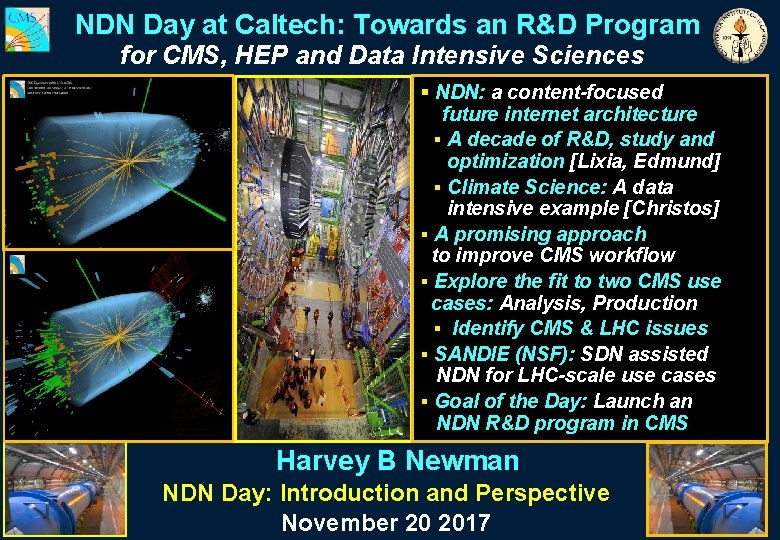

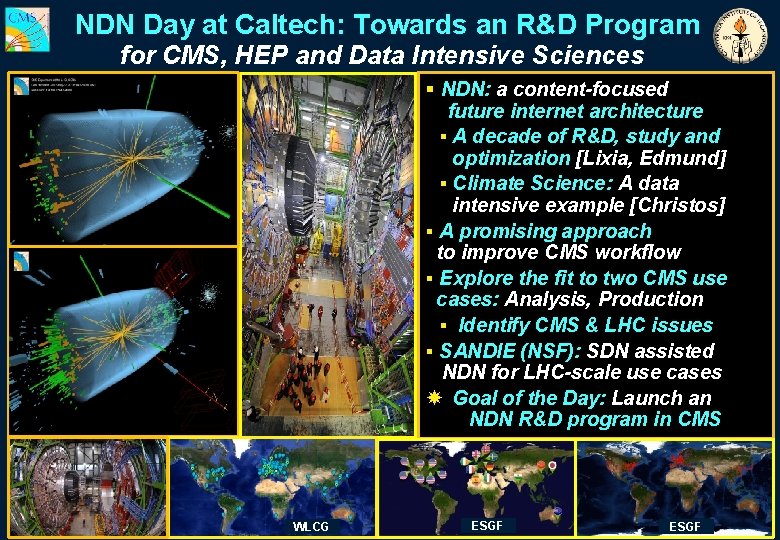

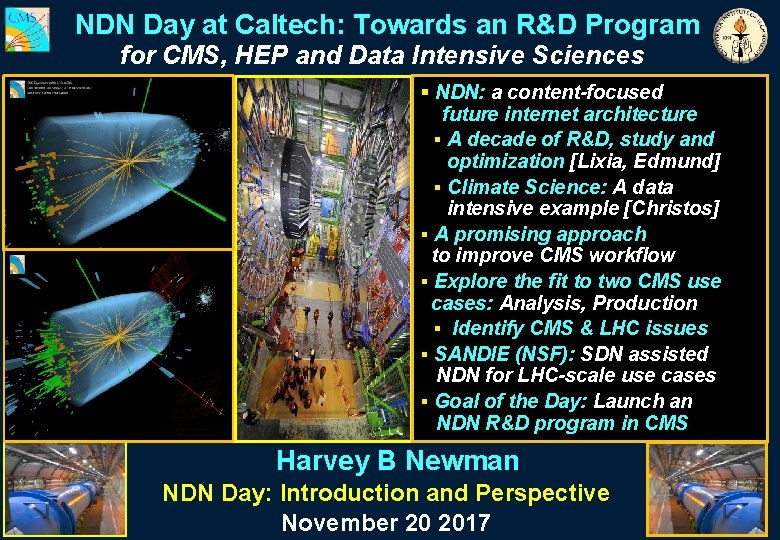

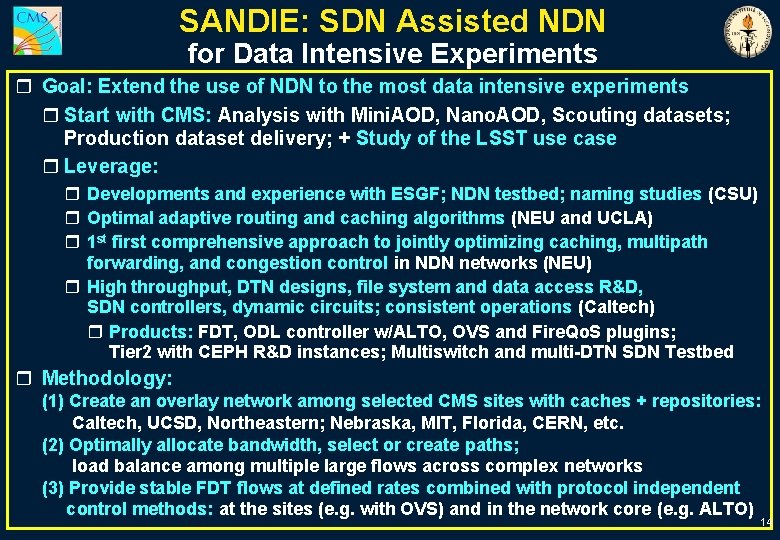

NDN Day at Caltech: Towards an R&D Program for CMS, HEP and Data Intensive Sciences

- NDN: a content-focused future internet architecture
- A decade of R&D, study and optimization [Lixia, Edmund]
- Climate Science: a data intensive example [Christos]
- A promising approach to improve CMS workflow
- Explore the fit to two CMS use cases: Analysis, Production
- Identify CMS & LHC issues
- SANDIE (NSF): SDN assisted NDN for LHC-scale use cases
- Goal of the Day: launch an NDN R&D program in CMS

Harvey B Newman, NDN Day: Introduction and Perspective, November 20, 2017

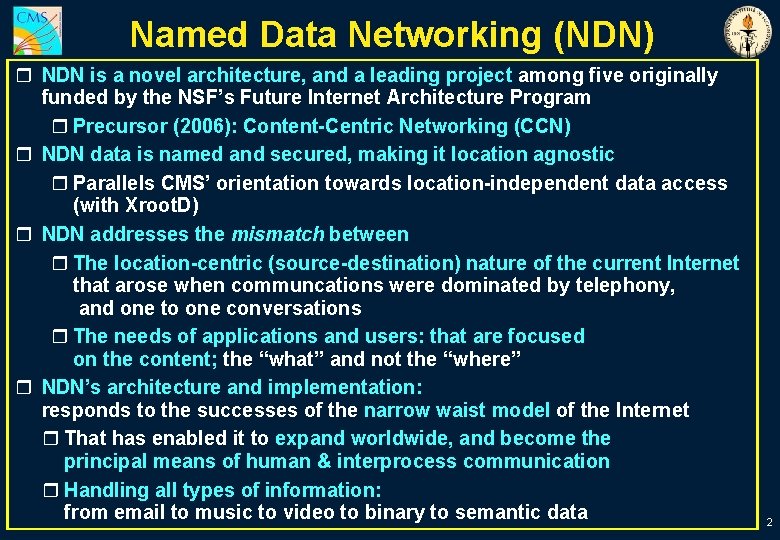

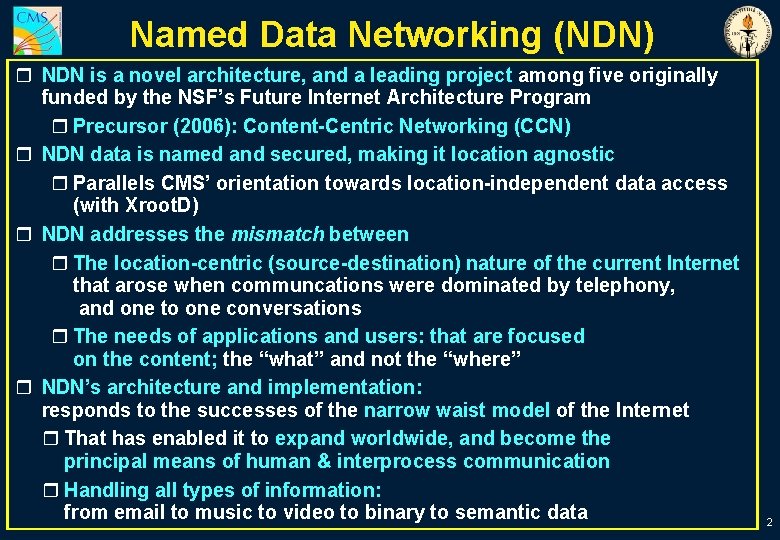

Named Data Networking (NDN)

- NDN is a novel architecture, and a leading project among five originally funded by the NSF’s Future Internet Architecture Program
  - Precursor (2006): Content-Centric Networking (CCN)
- NDN data is named and secured, making it location agnostic
  - This parallels CMS’ orientation towards location-independent data access (with XRootD)
- NDN addresses the mismatch between:
  - the location-centric (source-destination) nature of the current Internet, which arose when communications were dominated by telephony and one-to-one conversations, and
  - the needs of applications and users, which are focused on the content: the “what” and not the “where”
- NDN’s architecture and implementation respond to the successes of the Internet’s narrow waist model,
  - which has enabled the Internet to expand worldwide and become the principal means of human and interprocess communication,
  - handling all types of information: from email to music to video to binary to semantic data

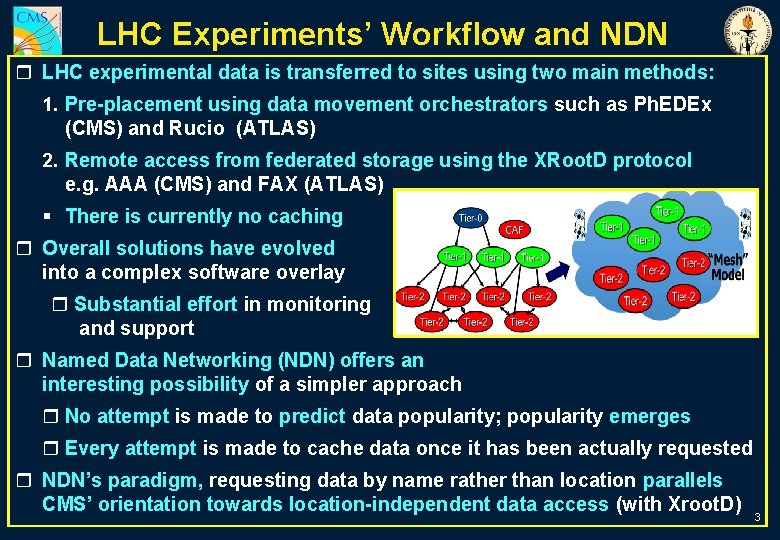

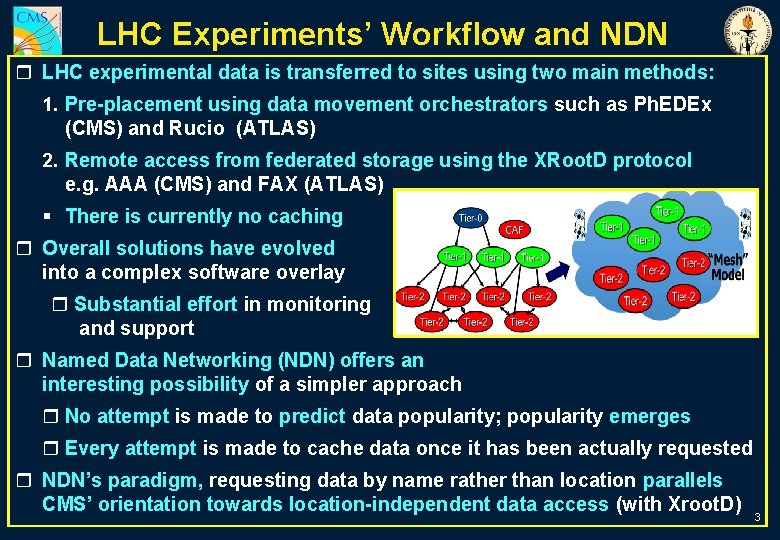

LHC Experiments’ Workflow and NDN

- LHC experimental data is transferred to sites using two main methods:
  1. Pre-placement using data movement orchestrators such as PhEDEx (CMS) and Rucio (ATLAS)
  2. Remote access from federated storage using the XRootD protocol, e.g. AAA (CMS) and FAX (ATLAS)
     - There is currently no caching
- Overall, these solutions have evolved into a complex software overlay
  - requiring substantial effort in monitoring and support
- Named Data Networking (NDN) offers the interesting possibility of a simpler approach
  - No attempt is made to predict data popularity; popularity emerges
  - Every attempt is made to cache data once it has actually been requested
- NDN’s paradigm of requesting data by name rather than by location parallels CMS’ orientation towards location-independent data access (with XRootD)
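The "popularity emerges" idea can be illustrated with a cache that stores whatever has actually been requested and evicts the least recently used item, so frequently requested data tends to stay resident without any prediction step. This is a minimal sketch assuming LRU eviction and a capacity counted in objects; the class and method names are hypothetical, and real NDN content stores may use other replacement policies.

```python
from collections import OrderedDict


class ContentStore:
    """Hypothetical cache-on-request store: no popularity prediction, LRU eviction."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity  # number of objects (assumption), not bytes
        self._items: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, name: str):
        """Return cached data for `name` and mark it recently used, else None."""
        if name not in self._items:
            return None
        self._items.move_to_end(name)
        return self._items[name]

    def insert(self, name: str, data: bytes) -> None:
        """Cache data that has actually been requested and delivered."""
        self._items[name] = data
        self._items.move_to_end(name)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used item

    def names(self) -> list:
        return list(self._items)
```

For example, with capacity 2, inserting `/a` and `/b`, reading `/a`, then inserting `/c` evicts `/b`: the item that was requested again survives.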

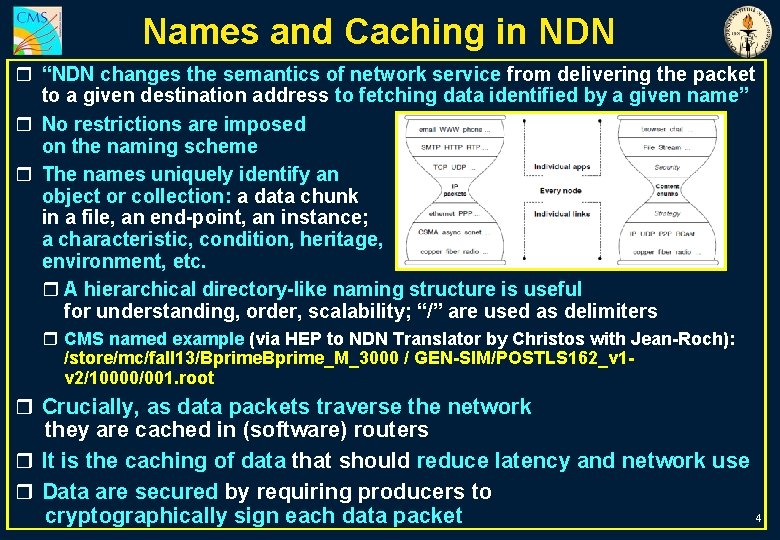

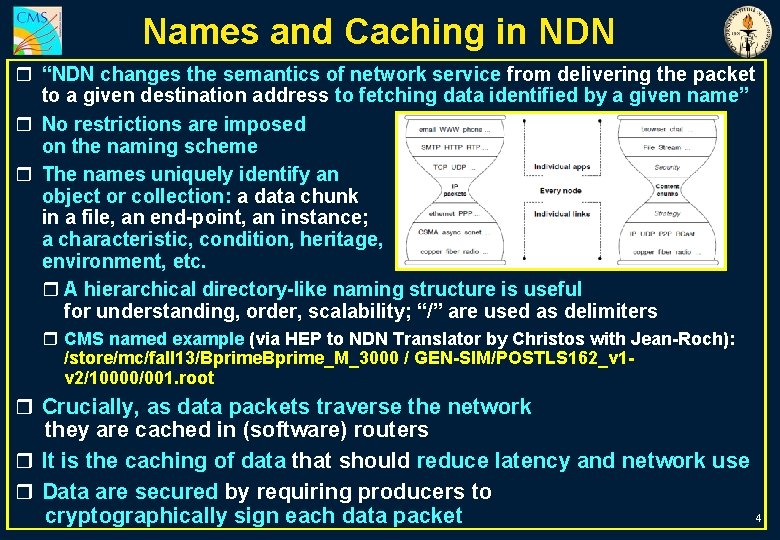

Names and Caching in NDN

- “NDN changes the semantics of network service from delivering the packet to a given destination address to fetching data identified by a given name”
- No restrictions are imposed on the naming scheme
- The names uniquely identify an object or collection: a data chunk in a file, an end-point, an instance; a characteristic, condition, heritage, environment, etc.
- A hierarchical, directory-like naming structure is useful for understanding, order and scalability; “/” is used as the delimiter
- CMS naming example (via the HEP-to-NDN translator by Christos with Jean-Roch): `/store/mc/fall13/Bprime_M_3000/GEN-SIM/POSTLS162_v1v2/10000/001.root`
- Crucially, as data packets traverse the network they are cached in (software) routers
  - It is the caching of data that should reduce latency and network use
- Data are secured by requiring producers to cryptographically sign each data packet
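A hierarchical name is just a sequence of “/”-delimited components, and a file is fetched as a series of named chunks. The sketch below splits a name into components and generates one name per fixed-size chunk. The `seg=<n>` suffix and the segment size are assumptions made for illustration, not the naming scheme used by the HEP-to-NDN translator.

```python
import math


def name_components(name: str) -> list:
    """Split a hierarchical NDN-style name on '/' delimiters."""
    return [c for c in name.split("/") if c]


def segment_names(name: str, file_size: int, segment_size: int) -> list:
    """Name every fixed-size data chunk of a file.

    The 'seg=<n>' component is a hypothetical convention for this sketch.
    """
    n_segments = max(1, math.ceil(file_size / segment_size))
    base = "/" + "/".join(name_components(name))
    return [f"{base}/seg={i}" for i in range(n_segments)]
```

With an assumed 8192-byte segment size, a 2 GB (2,000,000,000-byte) ROOT file would map to 244,141 segment names, which is why forwarding and caching operate per chunk rather than per file.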

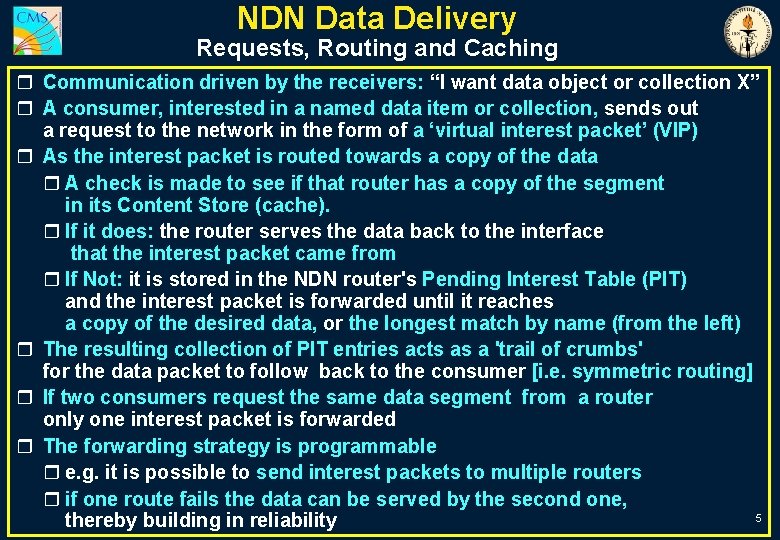

NDN Data Delivery: Requests, Routing and Caching

- Communication is driven by the receivers: “I want data object or collection X”
- A consumer interested in a named data item or collection sends a request to the network in the form of an interest packet
- As the interest packet is routed towards a copy of the data, each router checks whether it has a copy of the segment in its Content Store (cache):
  - If it does, the router serves the data back to the interface that the interest packet came from
  - If not, the interest is recorded in the router’s Pending Interest Table (PIT) and forwarded, by longest (left-to-right) name-prefix match, until it reaches a copy of the desired data
- The resulting collection of PIT entries acts as a “trail of crumbs” for the data packet to follow back to the consumer [i.e. symmetric routing]
- If two consumers request the same data segment from a router, only one interest packet is forwarded
- The forwarding strategy is programmable
  - e.g. it is possible to send interest packets to multiple routers
  - if one route fails, the data can be served by the second one, thereby building in reliability
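The per-router steps above (Content Store check, PIT aggregation, a single upstream interest, data returning along the PIT “crumbs” and being cached on the way back) can be sketched as a toy state machine. This is a simplified illustration and not NFD’s implementation. Faces are plain strings here, and there is no FIB, strategy or PIT expiry.

```python
class Router:
    """Toy NDN forwarding pipeline for a single router (simplified)."""

    def __init__(self) -> None:
        self.content_store: dict = {}   # name -> cached data
        self.pit: dict = {}             # name -> set of downstream faces waiting
        self.sent_upstream: list = []   # interests forwarded toward the data
        self.delivered: list = []       # (face, name) pairs sent downstream

    def on_interest(self, name: str, in_face: str) -> str:
        if name in self.content_store:          # 1. Content Store hit: answer locally
            self.delivered.append((in_face, name))
            return "cs-hit"
        if name in self.pit:                    # 2. already pending: aggregate
            self.pit[name].add(in_face)
            return "aggregated"
        self.pit[name] = {in_face}              # 3. new PIT entry; forward once
        self.sent_upstream.append(name)
        return "forwarded"

    def on_data(self, name: str, data: bytes) -> None:
        faces = self.pit.pop(name, None)
        if faces is None:
            return                              # unsolicited data: drop it
        self.content_store[name] = data         # cache on the way back
        for face in sorted(faces):              # follow the trail of crumbs
            self.delivered.append((face, name))
```

Two consumers asking for the same segment produce one upstream interest. When the data arrives, both are served and a third request is answered from the Content Store.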

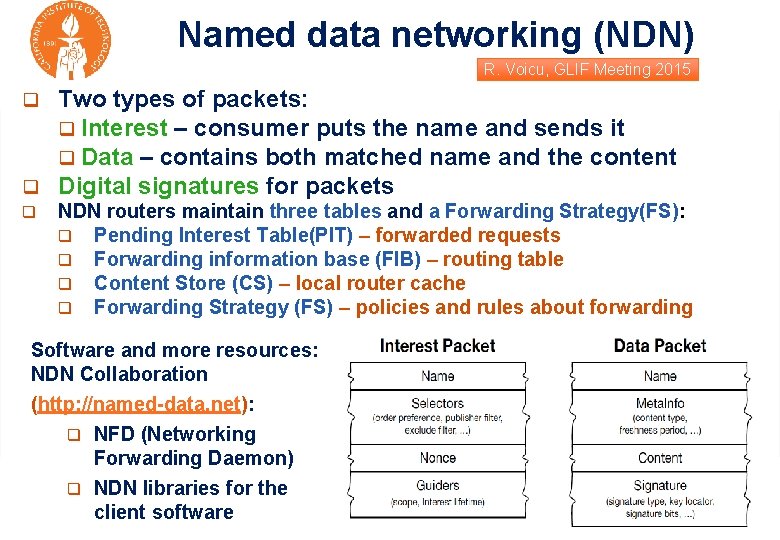

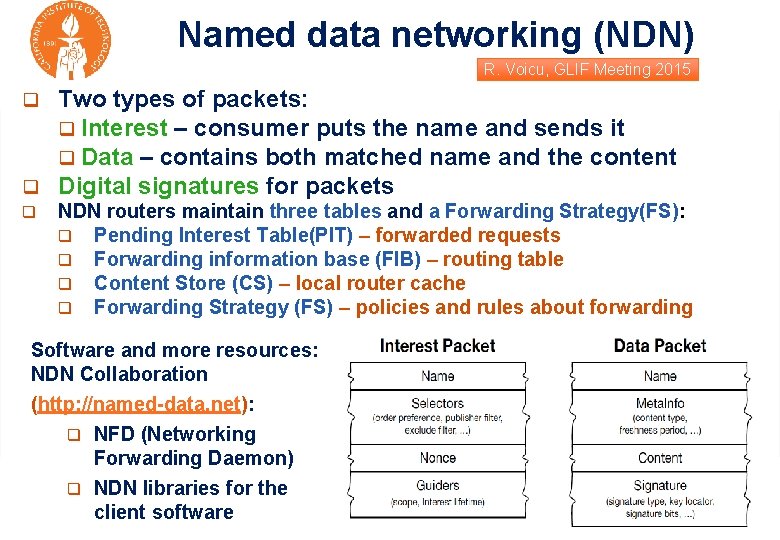

Named Data Networking (NDN) (R. Voicu, GLIF Meeting 2015)

- Two types of packets:
  - Interest: the consumer puts the name in it and sends it
  - Data: contains both the matched name and the content
  - Digital signatures for packets
- NDN routers maintain three tables and a Forwarding Strategy (FS):
  - Pending Interest Table (PIT): forwarded requests
  - Forwarding Information Base (FIB): routing table
  - Content Store (CS): local router cache
  - Forwarding Strategy (FS): policies and rules about forwarding
- Software and more resources from the NDN Collaboration (http://named-data.net):
  - NFD (NDN Forwarding Daemon)
  - NDN libraries for the client software
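The FIB is consulted with a longest-prefix match over name components, not characters, so `/stor` does not match `/store`. The sketch below keeps a list of next hops per prefix so that a multipath strategy could choose among them. The class and face names are hypothetical, and NFD’s actual FIB is a name tree with per-nexthop costs.

```python
def components(name: str) -> tuple:
    """Split a name into its '/'-delimited components."""
    return tuple(c for c in name.split("/") if c)


class Fib:
    """Hypothetical Forwarding Information Base: name prefix -> next hops."""

    def __init__(self) -> None:
        self._routes: dict = {}

    def add_route(self, prefix: str, nexthop: str) -> None:
        self._routes.setdefault(components(prefix), []).append(nexthop)

    def lookup(self, name: str) -> list:
        """Return next hops for the longest registered prefix of `name`."""
        comps = components(name)
        for length in range(len(comps), -1, -1):  # longest prefix first
            hops = self._routes.get(comps[:length])
            if hops:
                return hops
        return []  # no route (not even a default "/" route)
```

Registering `/` as a default route and `/store/mc` more specifically means `/store/mc/fall13/...` goes to the `/store/mc` next hops, while unrelated names fall back to the default.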

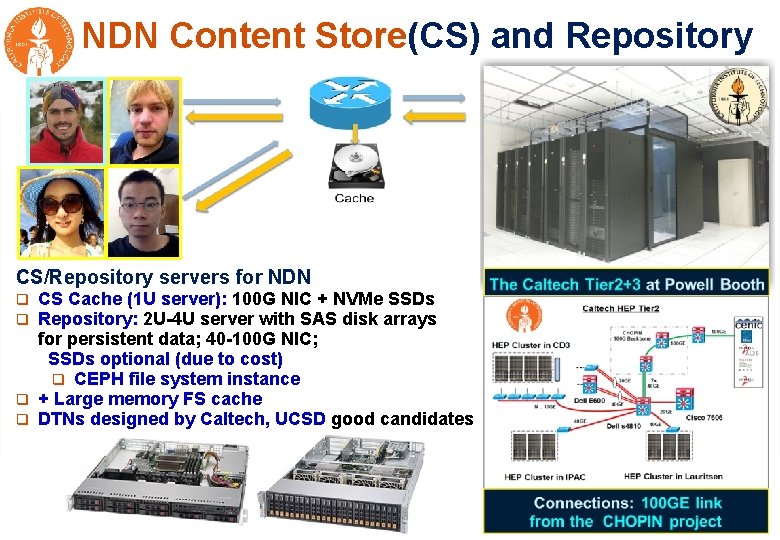

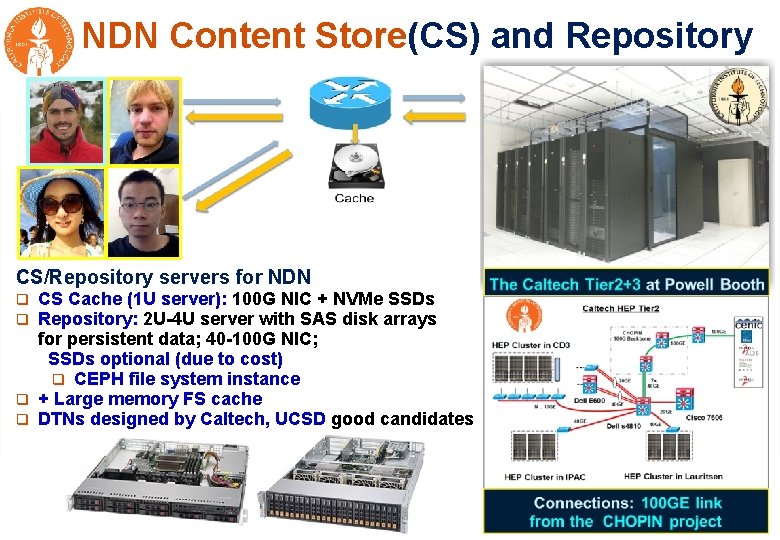

NDN Content Store (CS) and Repository

- CS/Repository servers for NDN:
  - CS cache (1U server): 100G NIC + NVMe SSDs
  - Repository: 2U-4U server with SAS disk arrays for persistent data; 40-100G NIC; SSDs optional (due to cost)
    - Ceph file system instance
    - Large memory FS cache
  - DTNs designed by Caltech and UCSD are good candidates

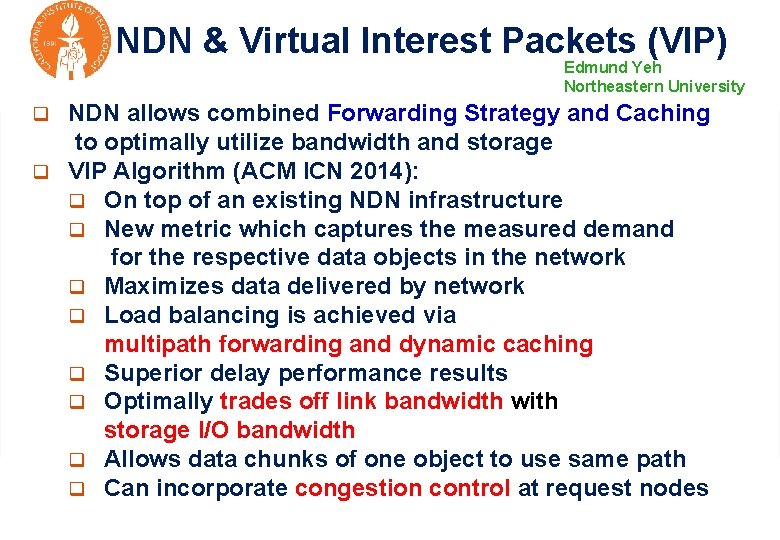

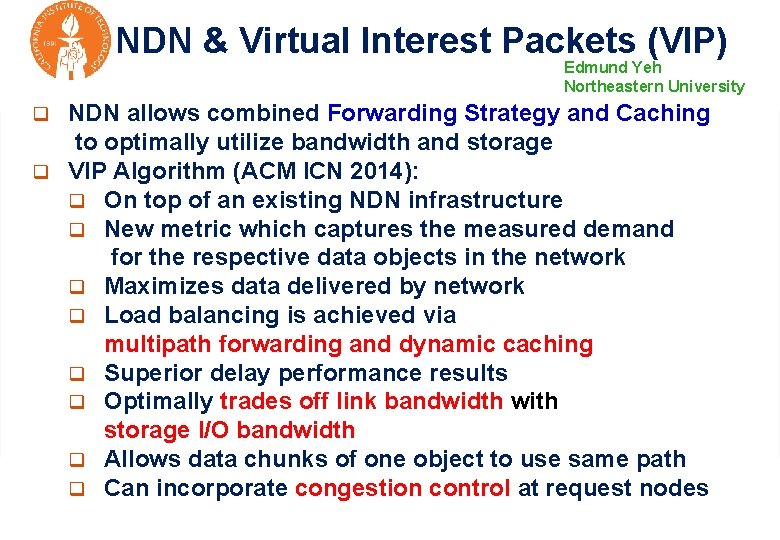

NDN & Virtual Interest Packets (VIP) (Edmund Yeh, Northeastern University)

- NDN allows a combined Forwarding Strategy and Caching to optimally utilize bandwidth and storage
- VIP Algorithm (ACM ICN 2014):
  - Runs on top of an existing NDN infrastructure
  - New metric which captures the measured demand for the respective data objects in the network
  - Maximizes the data delivered by the network
  - Load balancing is achieved via multipath forwarding and dynamic caching
  - Superior delay performance results
  - Optimally trades off link bandwidth with storage I/O bandwidth
  - Allows data chunks of one object to use the same path
  - Can incorporate congestion control at request nodes
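To give a feel for how a per-object demand metric can drive both forwarding and caching, here is a greatly simplified, backpressure-style illustration in the spirit of VIP. It is not the published algorithm. It assumes each node keeps a demand count per object, that a link is used for the object with the largest positive count differential toward the neighbour, and that a node caches the objects with the highest counts (equal object sizes assumed). All names are hypothetical.

```python
def backpressure_choice(local_vips: dict, neighbor_vips: dict):
    """Pick the object whose demand-count differential across a link is largest.

    Returns (object, differential), or (None, 0.0) when no differential is
    positive, in which case this sketch forwards nothing on that link.
    """
    best, best_diff = None, 0.0
    for obj, count in local_vips.items():
        diff = count - neighbor_vips.get(obj, 0.0)
        if diff > best_diff:
            best, best_diff = obj, diff
    return best, best_diff


def cache_choice(local_vips: dict, cache_slots: int) -> list:
    """Cache the objects with the highest demand counts (equal sizes assumed)."""
    ranked = sorted(local_vips, key=lambda obj: (-local_vips[obj], obj))
    return ranked[:cache_slots]
```

Object `B` wins the link below even though `A` has the larger local count, because the neighbour already holds nearly as much demand for `A`. This is the load-balancing effect of using differentials rather than raw counts.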

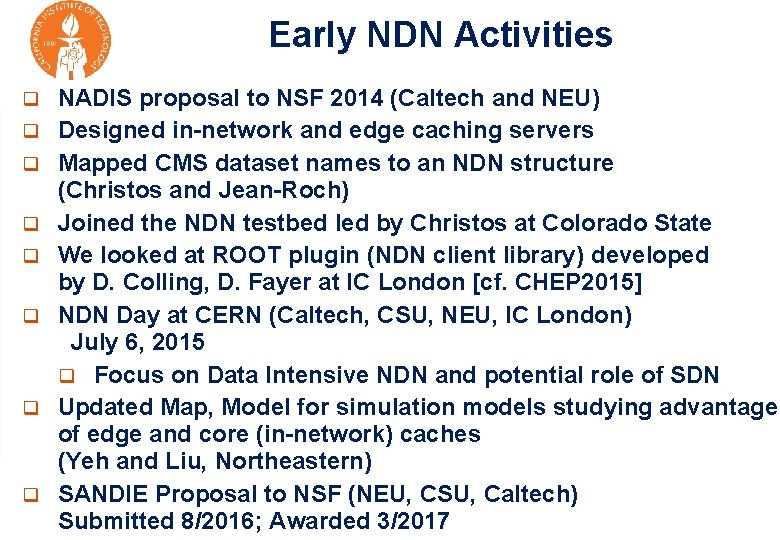

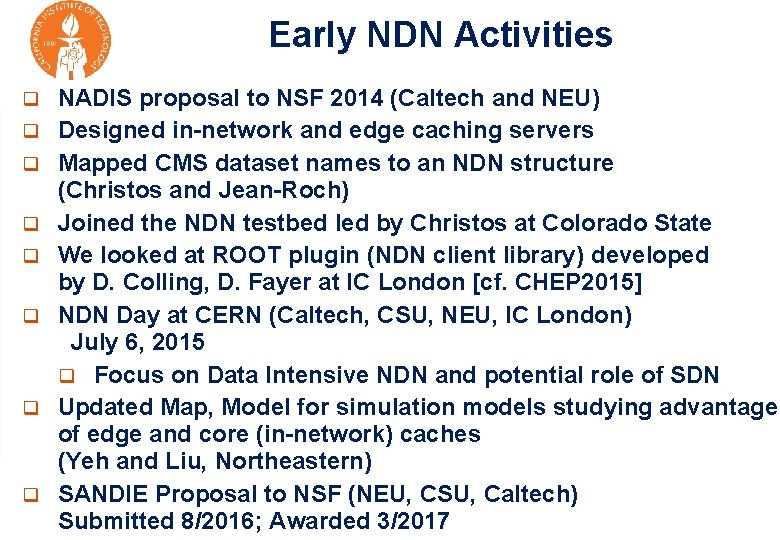

Early NDN Activities

- NADIS proposal to NSF, 2014 (Caltech and NEU)
  - Designed in-network and edge caching servers
- Mapped CMS dataset names to an NDN structure (Christos and Jean-Roch)
- Joined the NDN testbed led by Christos at Colorado State
- We looked at the ROOT plugin (NDN client library) developed by D. Colling and D. Fayer at IC London [cf. CHEP 2015]
- NDN Day at CERN (Caltech, CSU, NEU, IC London), July 6, 2015
  - Focus on data intensive NDN and the potential role of SDN
- Updated map and simulation models studying the advantage of edge and core (in-network) caches (Yeh and Liu, Northeastern)
- SANDIE proposal to NSF (NEU, CSU, Caltech): submitted 8/2016; awarded 3/2017

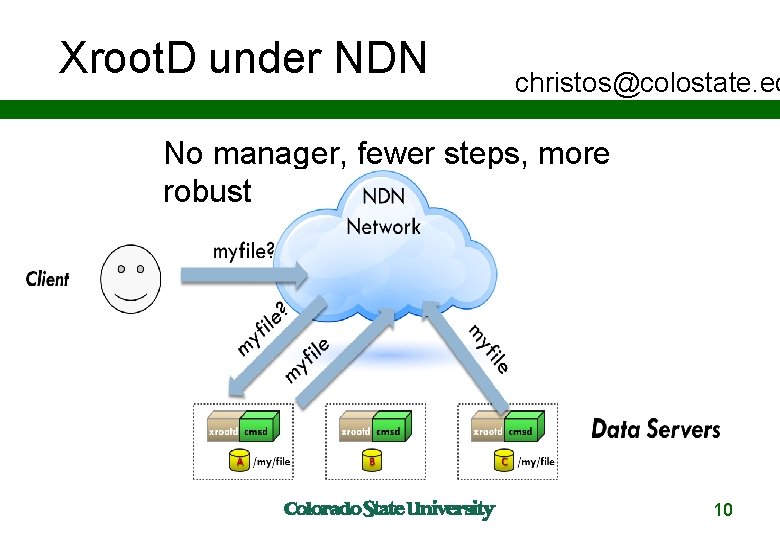

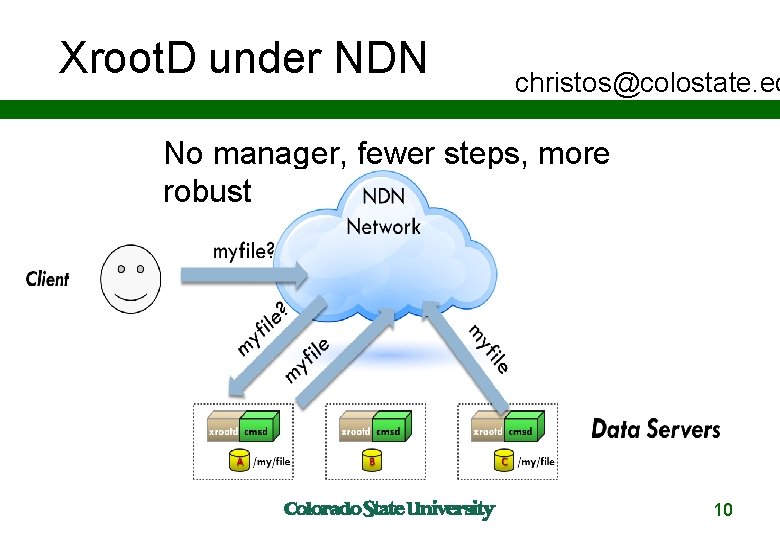

XRootD under NDN (christos@colostate.edu): no manager, fewer steps, more robust

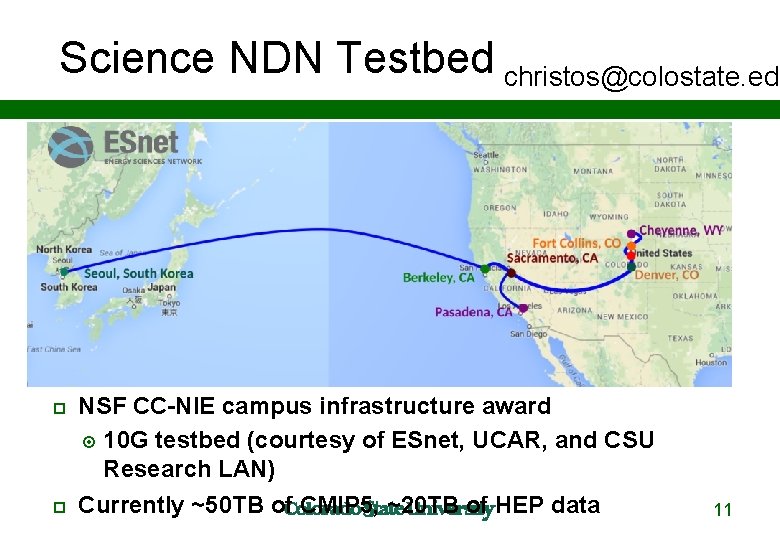

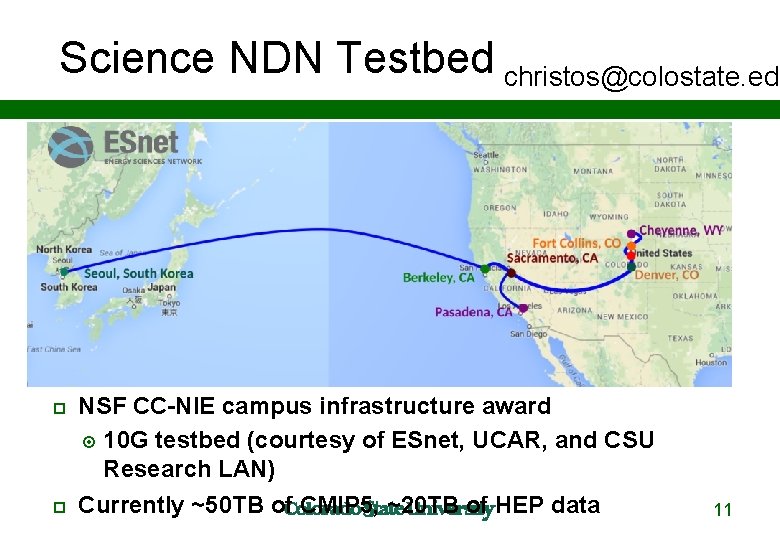

Science NDN Testbed (christos@colostate.edu)

- NSF CC-NIE campus infrastructure award
- 10G testbed (courtesy of ESnet, UCAR, and CSU Research LAN)
- Currently ~50 TB of CMIP5 and ~20 TB of HEP data

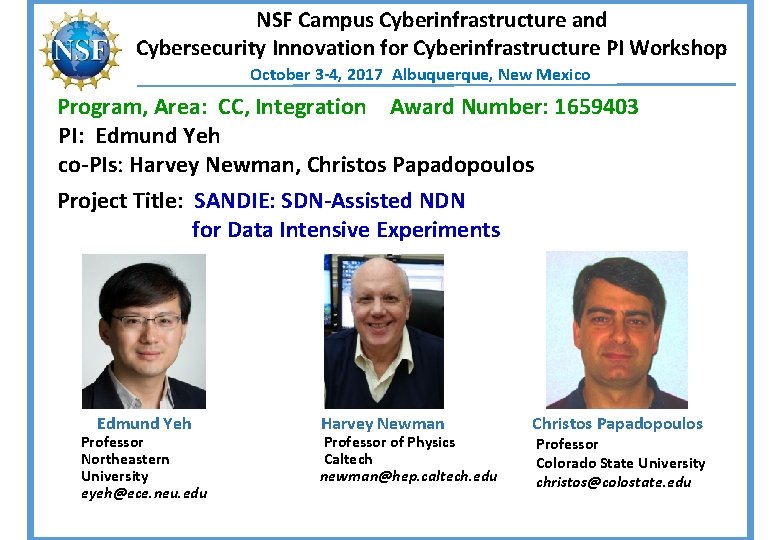

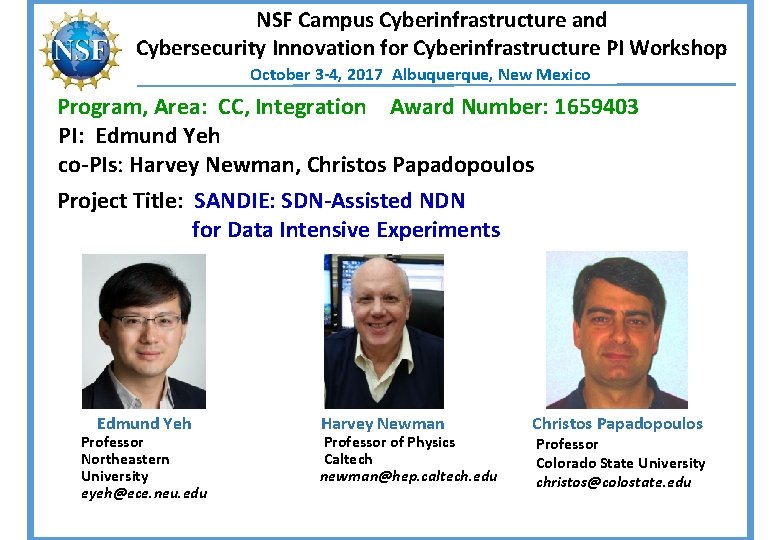

NSF Campus Cyberinfrastructure and Cybersecurity Innovation for Cyberinfrastructure PI Workshop, October 3-4, 2017, Albuquerque, New Mexico

- Program area: CC* Integration; Award Number: 1659403
- PI: Edmund Yeh; co-PIs: Harvey Newman, Christos Papadopoulos
- Project Title: SANDIE: SDN-Assisted NDN for Data Intensive Experiments
- Edmund Yeh, Professor, Northeastern University, eyeh@ece.neu.edu
- Harvey Newman, Professor of Physics, Caltech, newman@hep.caltech.edu
- Christos Papadopoulos, Professor, Colorado State University, christos@colostate.edu

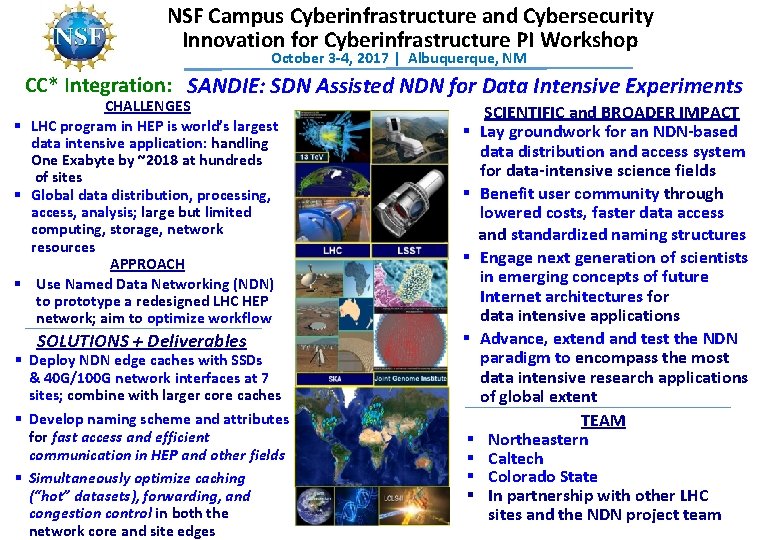

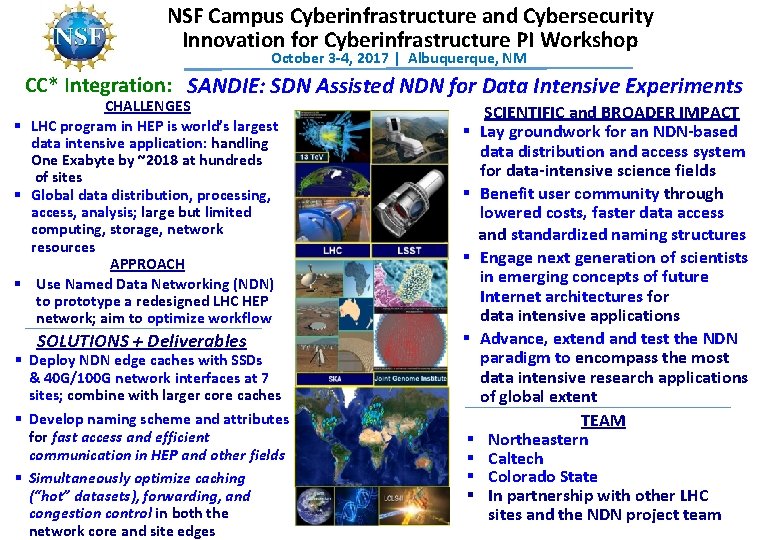

CC* Integration: SANDIE: SDN Assisted NDN for Data Intensive Experiments

CHALLENGES
- The LHC program in HEP is the world’s largest data intensive application: handling one exabyte by ~2018 at hundreds of sites
- Global data distribution, processing, access and analysis; large but limited computing, storage and network resources

APPROACH
- Use Named Data Networking (NDN) to prototype a redesigned LHC HEP network; aim to optimize workflow

SOLUTIONS + Deliverables
- Deploy NDN edge caches with SSDs & 40G/100G network interfaces at 7 sites; combine with larger core caches
- Develop a naming scheme and attributes for fast access and efficient communication in HEP and other fields
- Simultaneously optimize caching (“hot” datasets), forwarding, and congestion control in both the network core and site edges

SCIENTIFIC and BROADER IMPACT
- Lay the groundwork for an NDN-based data distribution and access system for data-intensive science fields
- Benefit the user community through lowered costs, faster data access and standardized naming structures
- Engage the next generation of scientists in emerging concepts of future Internet architectures for data intensive applications
- Advance, extend and test the NDN paradigm to encompass the most data intensive research applications of global extent

TEAM
- Northeastern
- Caltech
- Colorado State
- In partnership with other LHC sites and the NDN project team

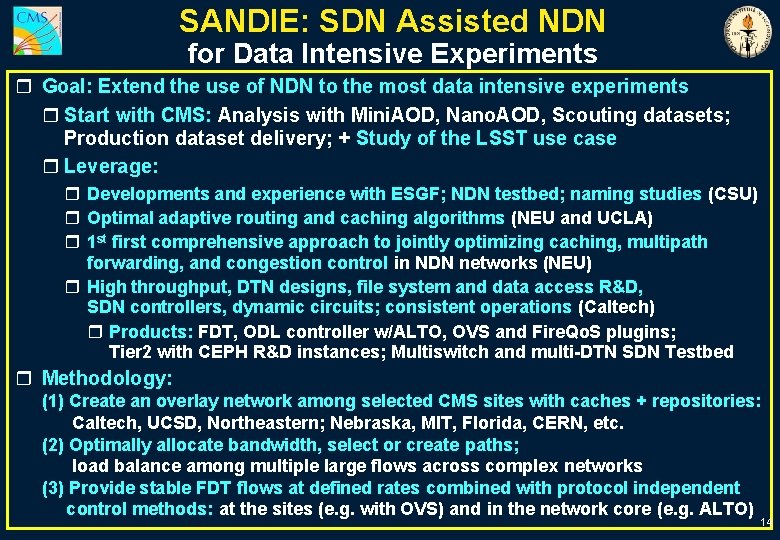

SANDIE: SDN Assisted NDN for Data Intensive Experiments

- Goal: extend the use of NDN to the most data intensive experiments
- Start with CMS: analysis with MiniAOD, NanoAOD and Scouting datasets; production dataset delivery; plus a study of the LSST use case
- Leverage:
  - Developments and experience with ESGF; NDN testbed; naming studies (CSU)
  - Optimal adaptive routing and caching algorithms (NEU and UCLA)
  - The first comprehensive approach to jointly optimizing caching, multipath forwarding, and congestion control in NDN networks (NEU)
  - High throughput, DTN designs, file system and data access R&D, SDN controllers, dynamic circuits; consistent operations (Caltech)
  - Products: FDT, ODL controller w/ALTO, OVS and FireQoS plugins; Tier 2 with Ceph R&D instances; multiswitch and multi-DTN SDN testbed
- Methodology:
  1. Create an overlay network among selected CMS sites with caches + repositories: Caltech, UCSD, Northeastern; Nebraska, MIT, Florida, CERN, etc.
  2. Optimally allocate bandwidth, select or create paths; load balance among multiple large flows across complex networks
  3. Provide stable FDT flows at defined rates, combined with protocol independent control methods: at the sites (e.g. with OVS) and in the network core (e.g. ALTO)
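Step 2 of the methodology (allocating bandwidth among several large flows) can be illustrated with max-min fair sharing on a single bottleneck link. This is a standard textbook technique swapped in for illustration. The slides do not say which allocation policy SANDIE uses, and the flow names and Gbit/s figures below are hypothetical.

```python
def max_min_fair(capacity: float, demands: dict) -> dict:
    """Water-filling max-min fair allocation of one link among flows.

    Flows demanding less than an equal share get their full demand; the
    leftover capacity is split equally among the remaining flows.
    """
    alloc = {flow: 0.0 for flow in demands}
    remaining = dict(demands)  # flows not yet fully satisfied
    cap = capacity
    while remaining and cap > 1e-12:
        share = cap / len(remaining)
        satisfied = {f: d for f, d in remaining.items() if d <= share}
        if not satisfied:
            # Every remaining flow wants more than an equal share: split evenly.
            for f in remaining:
                alloc[f] += share
            break
        for f, d in satisfied.items():
            alloc[f] += d
            cap -= d
            del remaining[f]
    return alloc
```

On a 100 Gbit/s link with demands of 10, 50 and 80 Gbit/s, the small flow gets its 10 and the two large flows get 45 each. A rate-controlled transfer tool could then be asked to hold each flow at its allocated rate.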

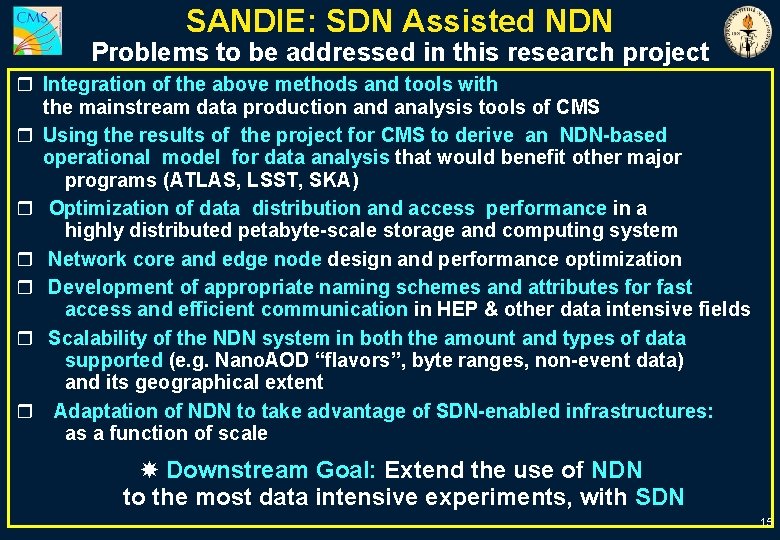

SANDIE: SDN Assisted NDN

Problems to be addressed in this research project:
- Integration of the above methods and tools with the mainstream data production and analysis tools of CMS
- Using the results of the project for CMS to derive an NDN-based operational model for data analysis that would benefit other major programs (ATLAS, LSST, SKA)
- Optimization of data distribution and access performance in a highly distributed petabyte-scale storage and computing system
- Network core and edge node design and performance optimization
- Development of appropriate naming schemes and attributes for fast access and efficient communication in HEP & other data intensive fields
- Scalability of the NDN system in both the amount and types of data supported (e.g. NanoAOD “flavors”, byte ranges, non-event data) and its geographical extent
- Adaptation of NDN to take advantage of SDN-enabled infrastructures, as a function of scale

Downstream goal: extend the use of NDN, with SDN, to the most data intensive experiments

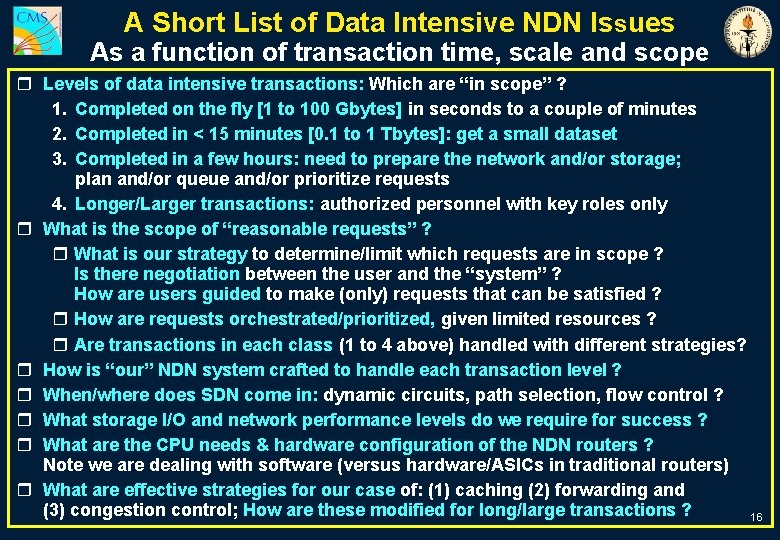

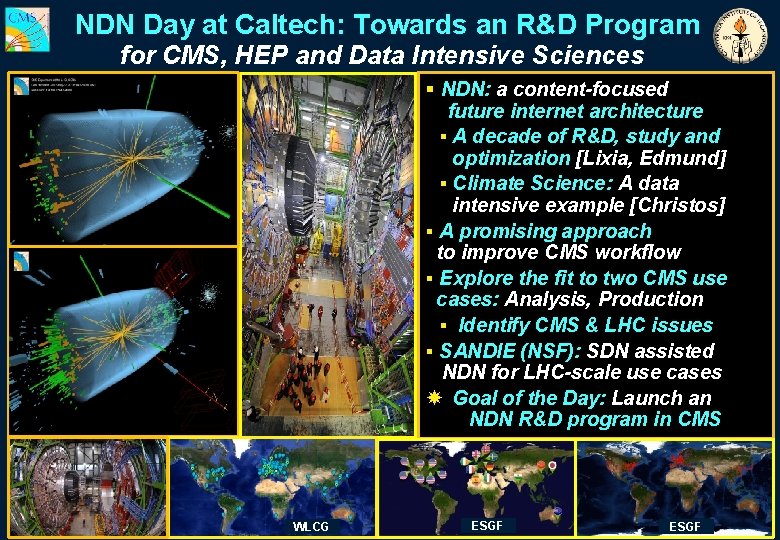

A Short List of Data Intensive NDN Issues (as a function of transaction time, scale and scope)

- Levels of data intensive transactions: which are “in scope”?
  1. Completed on the fly [1 to 100 GB] in seconds to a couple of minutes
  2. Completed in < 15 minutes [0.1 to 1 TB]: get a small dataset
  3. Completed in a few hours: need to prepare the network and/or storage; plan and/or queue and/or prioritize requests
  4. Longer/larger transactions: authorized personnel with key roles only
- What is the scope of “reasonable requests”?
- What is our strategy to determine/limit which requests are in scope? Is there negotiation between the user and the “system”? How are users guided to make (only) requests that can be satisfied?
- How are requests orchestrated/prioritized, given limited resources?
- Are transactions in each class (1 to 4 above) handled with different strategies?
- How is “our” NDN system crafted to handle each transaction level?
- When/where does SDN come in: dynamic circuits, path selection, flow control?
- What storage I/O and network performance levels do we require for success?
- What are the CPU needs and hardware configuration of the NDN routers? Note that we are dealing with software (versus hardware/ASICs in traditional routers)
- What are effective strategies for our case of (1) caching, (2) forwarding and (3) congestion control? How are these modified for long/large transactions?
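A quick way to relate the transaction levels to the network and storage performance question is to compute the average rate each level implies. The sketch below assumes decimal units (1 GB = 10^9 bytes) and takes a “couple of minutes” as 120 s. It ignores protocol overhead and concurrency.

```python
def required_gbps(volume_bytes: float, seconds: float) -> float:
    """Average throughput (Gbit/s) needed to move volume_bytes in `seconds`."""
    return volume_bytes * 8 / seconds / 1e9


GB, TB = 1e9, 1e12  # decimal units (assumption)

# (level, volume, time budget in seconds) at the edges of the ranges above
CASES = [
    ("1: on the fly, upper edge", 100 * GB, 120),
    ("2: small dataset, lower edge", 0.1 * TB, 15 * 60),
    ("2: small dataset, upper edge", 1 * TB, 15 * 60),
]

if __name__ == "__main__":
    for label, volume, budget in CASES:
        print(f"{label}: {required_gbps(volume, budget):.1f} Gbit/s")
```

The upper edges of levels 1 and 2 each need a sustained 7 to 9 Gbit/s for a single transaction. A handful of concurrent users therefore already approaches the 40G/100G interfaces foreseen for the caches.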

NDN Day at Caltech: Towards an R&D Program for CMS, HEP and Data Intensive Sciences

- NDN: a content-focused future internet architecture
- A decade of R&D, study and optimization [Lixia, Edmund]
- Climate Science: a data intensive example [Christos]
- A promising approach to improve CMS workflow
- Explore the fit to two CMS use cases: Analysis, Production
- Identify CMS & LHC issues
- SANDIE (NSF): SDN assisted NDN for LHC-scale use cases

Goal of the Day: launch an NDN R&D program in CMS

NDN Day at Caltech: November 20, 2017. Backup slides follow.

![NDN Day at CERN: July 6, 2015 Colorado State, Imperial and Caltech Groups [2]](https://slidetodoc.com/presentation_image_h/312b8873319ce54c20fbc96801c9efa9/image-19.jpg)

NDN Day at CERN: July 6, 2015 (Colorado State, Imperial and Caltech Groups) [2]

- 1:30-3:00 PM: Introductions and Q&A (Christos, David, Edmund, HN, Jean-Roch, Ramiro, Iosif). Points to cover:
  - What interests you in NDN, or what is your role in NDN development and use?
  - What are your near term plans for NDN?
  - What are your longer term plans? Do these include production use, in CMS for example?
  - What do you consider the main challenges?
  - [Ramiro, Julian, Iosif, Azher, Edmund, HN]: How do you see the potential to combine SDN and NDN for data intensive applications?
- 3:00-3:45 PM: Talk by Christos on the status and outlook for NDN activities at Colorado State; issues for the HEP use case (30' + 15') [People at Caltech join at 6 AM Pacific Time]

![NDN Day at CERN: July 6, 2015 Colorado State, Imperial and Caltech Groups [2]](https://slidetodoc.com/presentation_image_h/312b8873319ce54c20fbc96801c9efa9/image-20.jpg)

NDN Day at CERN: July 6, 2015 (Colorado State, Imperial and Caltech Groups) [2]

- 3:45-4:30 PM: Talk by David Colling and demonstration of the ROOT plugin by the group at Imperial
- 4:30-5:00 PM: Recap of the status and issues so far
- 5:00-5:30 PM: Key issues and next steps before David has to go [or can someone take him to the airport a little later?]
- 5:30-6:30 PM [shall we go longer, after 8 PM if needed... ok?]: In-depth discussion and action items:
  - Consequences of the data intensiveness of the HEP use case; recall various parts of the HEP workflow using the WLCG dashboard
  - Caching and latencies: how much data, where and when? Time to deliver?
  - Naming and prototype setups:
    - We already have an example of naming from Jean-Roch
    - How to get some datasets (or at least the structure and dummy data?) set up on the Colorado State testbed
    - Which kinds of operations do we want to emulate: including event selection? Object selection?
    - Can we use the Imperial ROOT plugin for this?

![NDN Day at CERN: July 6, 2015 Colorado State, Imperial and Caltech Groups [3]](https://slidetodoc.com/presentation_image_h/312b8873319ce54c20fbc96801c9efa9/image-21.jpg)

NDN Day at CERN: July 6, 2015 (Colorado State, Imperial and Caltech Groups) [3]

- 6:30 PM: Testbed setup; operational plans and issues
  - Can we make a distributed testbed among Caltech, Colorado State, CERN and Imperial? Other sites (Northeastern, Starlight, etc.)?
  - What about scaling this up to 40G and then 100G for 2-3 sites?
  - What are the major object-collection and file (and file system) related issues for NDN?
  - What about the file system, for example Ceph (object and file views, high performance, virtualization, etc.)?
  - How will this complement XRootD? Will it replace it (if not already answered)?
  - What about Open vSwitch and high throughput transfers with QoS? (related to the SDN/NDN intersection)
- 7:00 PM: Remarks from Edmund on NDN at Northeastern
- 7:30 PM+: If time permits: outlook for production; how this might intersect with CMS workflow

![NDN Day at CERN: July 6, 2015 Colorado State, Imperial and Caltech Groups [4]](https://slidetodoc.com/presentation_image_h/312b8873319ce54c20fbc96801c9efa9/image-22.jpg)

NDN Day at CERN: July 6, 2015 (Colorado State, Imperial and Caltech Groups) [4]

- 7:30 PM+: Outlook for production: how might this intersect with CMS workflow?
  - For analysis: ROOT plugin
  - Use with PhEDEx and dynamic circuits: dynamic dataset placement (also perhaps together with Open vSwitch)
  - Use with ASO?: placing batch job outputs (at several to tens of Hz)

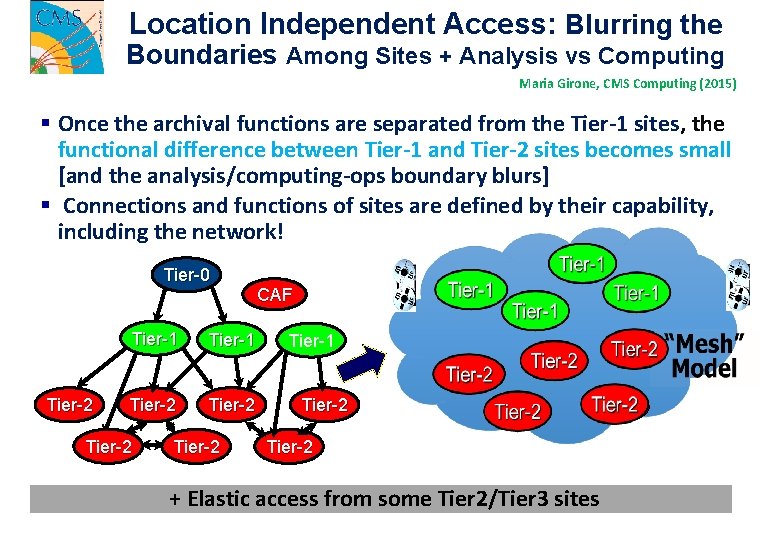

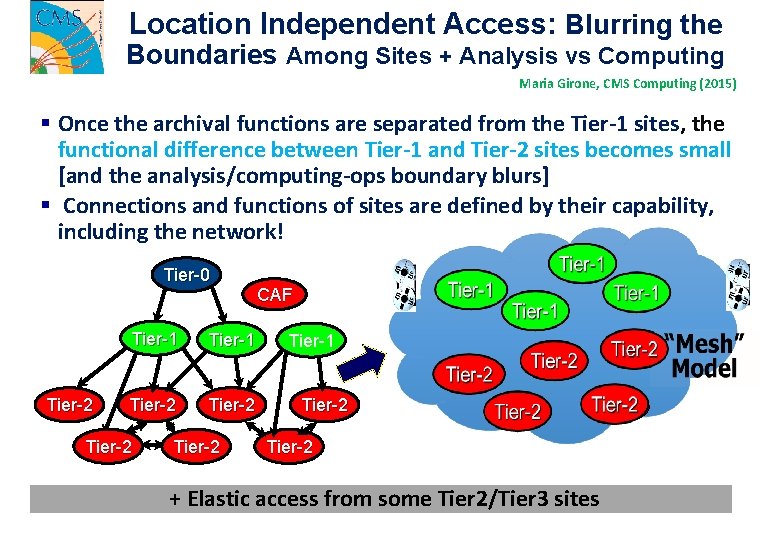

Location Independent Access: Blurring the Boundaries Among Sites + Analysis vs Computing (Maria Girone, CMS Computing, 2015)

- Once the archival functions are separated from the Tier-1 sites, the functional difference between Tier-1 and Tier-2 sites becomes small [and the analysis/computing-ops boundary blurs]
- Connections and functions of sites are defined by their capability, including the network!

[Figure: connections among Tier-0, Tier-1, Tier-2 and CAF sites, plus elastic access from some Tier-2/Tier-3 sites]

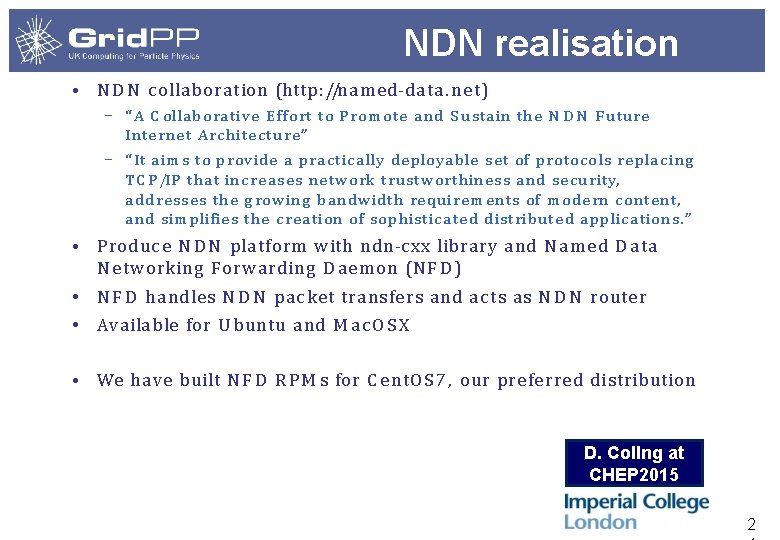

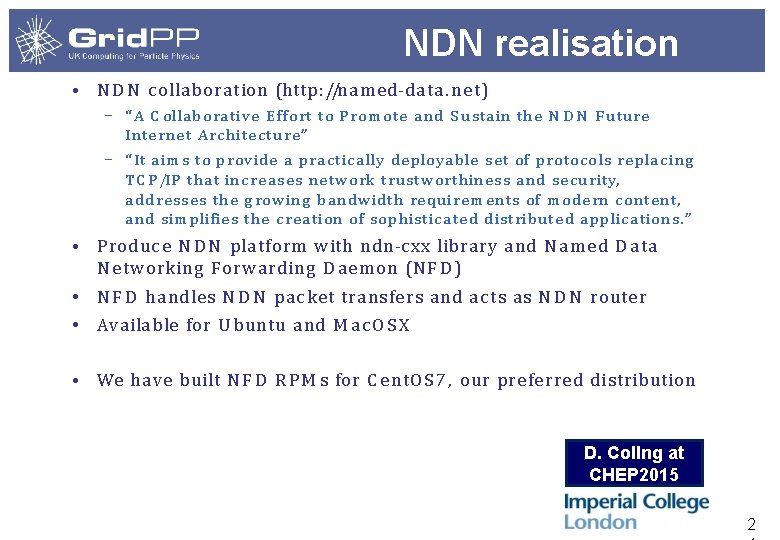

NDN realisation

- NDN collaboration (http://named-data.net)
  - “A Collaborative Effort to Promote and Sustain the NDN Future Internet Architecture”
  - “It aims to provide a practically deployable set of protocols replacing TCP/IP that increases network trustworthiness and security, addresses the growing bandwidth requirements of modern content, and simplifies the creation of sophisticated distributed applications.”
- The collaboration produces the NDN platform, with the ndn-cxx library and the Named Data Networking Forwarding Daemon (NFD)
- NFD handles NDN packet transfers and acts as an NDN router
- Available for Ubuntu and Mac OS X
- We have built NFD RPMs for CentOS 7, our preferred distribution

(D. Colling, CHEP 2015)

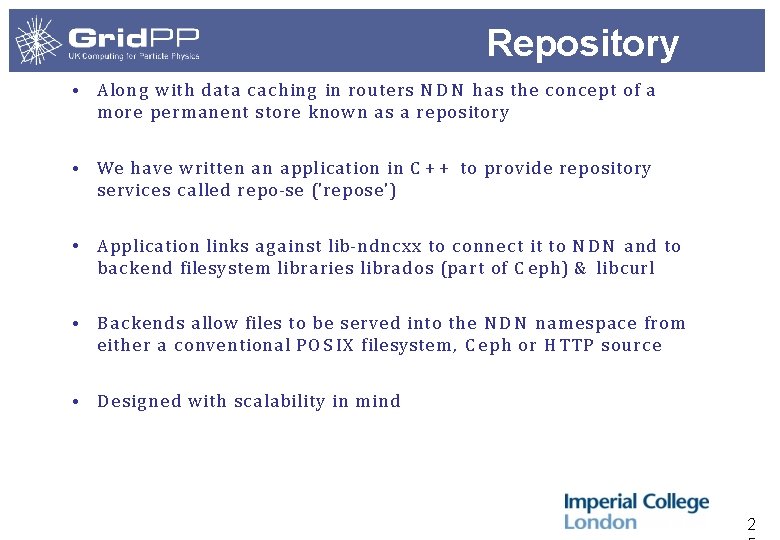

Repository

- Along with data caching in routers, NDN has the concept of a more permanent store, known as a repository
- We have written an application in C++ to provide repository services, called repo-se ('repose')
- The application links against lib-ndncxx to connect it to NDN, and against the backend filesystem libraries librados (part of Ceph) and libcurl
- Backends allow files to be served into the NDN namespace from either a conventional POSIX filesystem, Ceph or an HTTP source
- Designed with scalability in mind
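The backend idea can be pictured as a repository that maps an incoming name onto a byte range read from a pluggable storage backend. The following is a minimal Python sketch and not repo-se itself. Only a POSIX backend is shown (Ceph via librados and HTTP via libcurl would implement the same `read` method), the `<prefix>/<path>/seg=<n>` naming and the 8192-byte default segment size are assumptions, and paths are not sanitised.

```python
import os
from typing import Optional, Protocol


class Backend(Protocol):
    """Any storage that can return a byte range of a file (POSIX, Ceph, HTTP...)."""

    def read(self, path: str, offset: int, length: int) -> bytes: ...


class PosixBackend:
    """Reads byte ranges from a conventional POSIX filesystem."""

    def __init__(self, root: str) -> None:
        self.root = root

    def read(self, path: str, offset: int, length: int) -> bytes:
        with open(os.path.join(self.root, path), "rb") as f:
            f.seek(offset)
            return f.read(length)


class Repository:
    """Serves files from a backend into the NDN namespace under `prefix`."""

    def __init__(self, prefix: str, backend: Backend, segment_size: int = 8192) -> None:
        self.prefix = prefix.rstrip("/")
        self.backend = backend
        self.segment_size = segment_size

    def serve(self, name: str) -> Optional[bytes]:
        """Answer an interest such as '<prefix>/<path>/seg=<n>'; None if not ours."""
        if not name.startswith(self.prefix + "/"):
            return None
        rest = name[len(self.prefix) + 1:]
        path, _, seg = rest.rpartition("/seg=")
        if not path or not seg.isdigit():
            return None
        offset = int(seg) * self.segment_size
        return self.backend.read(path, offset, self.segment_size)
```

Keeping the backend behind a single `read(path, offset, length)` call is what lets the same naming and segmentation logic sit in front of a filesystem, an object store or an HTTP source.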

Repository client

- A small static client library, librepoclient, is also included within the repo-se codebase
  - It provides a minimal POSIX-like interface (open(), read(), close(), ...) and is designed to be linked into shared library plugins for other applications such as ROOT and GFAL2
- There is also a minimal "getfile" application for testing, which fetches an entire file from NDN and writes it to the local disk using the client library
- The current implementation is limited to read-only access, for basic demonstrations and testing
- Once we finalise a plan for authentication, it can then be extended to include full remote I/O (read-write)
- The original version of the client requested and retrieved data segments one at a time
- Currently working on improving the client to send out multiple interest packets simultaneously
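The step from one-segment-at-a-time retrieval to several outstanding interests can be sketched as a POSIX-like `read(offset, length)` that works out which segments cover the requested byte range and fetches them with a bounded window in flight. This is a minimal sketch, not librepoclient. `fetch_segment` is a hypothetical callable standing in for “send an interest for segment n and wait for its data packet”, and a thread pool stands in for the asynchronous interest handling a real client would more likely use.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def read_range(fetch_segment: Callable[[int], bytes], offset: int, length: int,
               segment_size: int, window: int = 8) -> bytes:
    """POSIX-like read(): return bytes [offset, offset + length) of a file.

    The segments covering the range are fetched with at most `window`
    requests outstanding at once; results are reassembled in order.
    """
    if length <= 0:
        return b""
    first = offset // segment_size
    last = (offset + length - 1) // segment_size
    with ThreadPoolExecutor(max_workers=window) as pool:
        # pool.map preserves segment order even if fetches complete out of order
        segments = list(pool.map(fetch_segment, range(first, last + 1)))
    blob = b"".join(segments)
    start = offset - first * segment_size
    return blob[start:start + length]
```

With `window=1` this degenerates to the original one-at-a-time behaviour, so the window size is the single knob that trades per-request latency against load on the routers and repository.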

IC HEP NDN testbed

- Installed an NDN testbed running NFD software, comprising:
  - a User Interface host
  - two NDN routers
  - two repositories running 'repo-se' for data storage
  - Ceph cluster storage with four 16 TB servers
- Routing paths are added manually, e.g. `nfdc register /demo/rados udp4://123.123`
- The client on the UI requests data segments from repo-se by sending out interest packets to the intermediate routers
- The passage of the interest packets and returning data packets can be monitored using the 'ndndump' software (https://github.com/zhenkai/ndndump)
- Currently using the testbed to build up experience with NDN

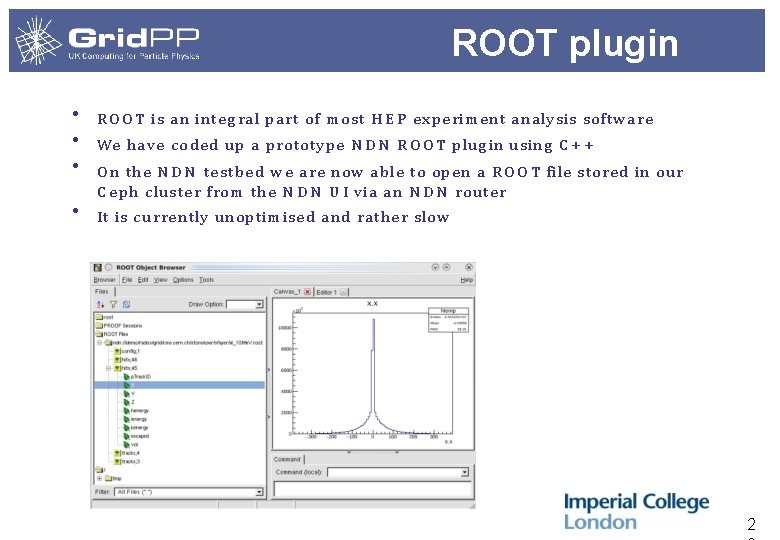

ROOT plugin

- ROOT is an integral part of most HEP experiment analysis software
- We have coded up a prototype NDN ROOT plugin using C++
- On the NDN testbed we are now able to open a ROOT file stored in our Ceph cluster from the NDN UI via an NDN router
- It is currently unoptimised and rather slow

Grid testing

- The testbed NFD software uses CentOS 7, but our grid cluster worker nodes are running CentOS 6
- We have built the NFD software on CentOS 6 for grid testing
- We submit grid jobs to our production cluster which include a tarball of the NDN software in the input sandbox
  - in future will use CVMFS software a
- Such jobs are able to stage a file from the repository to the worker node (WN) local disk
- Plan to test at scale with a well-resourced host acting as an NDN core router
- Would also like to test reading and caching of ROOT files over longer distances

Possible use with LHC VO

- The main use case is chaotic user analysis, where it is difficult to predict what data is to be read
- A version of the experiment's analysis code would be built with the NDN ROOT plugin
- VO datasets could be encrypted using an individual symmetric key per dataset
- Accessing the data would involve two steps; the client would:
  - request the encrypted data
  - request the encryption key from a Data Set Key Server (DSKS) with a signed interest packet
- The key server would check the user's membership of the VO and, if valid, would encrypt the dataset key with the user's public key and send it back
- On receipt of the encrypted key and data packets, the application would decrypt the key with the user's private key and use it to access the data
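The key-distribution steps above can be walked through end to end with standard primitives. This is a minimal sketch assuming the third-party `cryptography` package. Fernet for the per-dataset symmetric key, RSA-OAEP for wrapping it and RSA-PSS for signing the key request are choices made here for illustration, not the Imperial design. `DataSetKeyServer`, `demo` and the dataset name are hypothetical. The signature covers only the dataset name (no timestamp or nonce), so unlike a real NDN signed interest it could be replayed.

```python
from cryptography.exceptions import InvalidSignature
from cryptography.fernet import Fernet
from cryptography.hazmat.primitives import hashes
from cryptography.hazmat.primitives.asymmetric import padding, rsa

# RSA-OAEP wraps dataset keys; RSA-PSS signs key requests.
OAEP = padding.OAEP(mgf=padding.MGF1(algorithm=hashes.SHA256()),
                    algorithm=hashes.SHA256(), label=None)
PSS = padding.PSS(mgf=padding.MGF1(hashes.SHA256()),
                  salt_length=padding.PSS.MAX_LENGTH)


class DataSetKeyServer:
    """Hypothetical DSKS: one symmetric key per dataset, released to VO members."""

    def __init__(self) -> None:
        self.dataset_keys = {}  # dataset name -> Fernet (symmetric) key
        self.vo_members = {}    # user id -> RSA public key

    def new_dataset(self, dataset: str) -> bytes:
        key = Fernet.generate_key()
        self.dataset_keys[dataset] = key
        return key

    def request_key(self, user: str, dataset: str, signature: bytes) -> bytes:
        """Check VO membership and the request signature, then wrap the key."""
        public_key = self.vo_members.get(user)
        if public_key is None:
            raise PermissionError(f"{user} is not a VO member")
        try:
            public_key.verify(signature, dataset.encode(), PSS, hashes.SHA256())
        except InvalidSignature:
            raise PermissionError("invalid signature on key request") from None
        # Only the holder of the matching private key can unwrap this.
        return public_key.encrypt(self.dataset_keys[dataset], OAEP)


def demo() -> bytes:
    user_key = rsa.generate_private_key(public_exponent=65537, key_size=2048)
    dsks = DataSetKeyServer()
    dsks.vo_members["alice"] = user_key.public_key()

    dataset = "/store/mc/example"  # hypothetical dataset name
    # Producer side: content is published encrypted with the dataset key.
    encrypted = Fernet(dsks.new_dataset(dataset)).encrypt(b"event data")

    # Step 1 (not shown): fetch `encrypted` as ordinary NDN data packets.
    # Step 2: signed key request to the DSKS.
    signature = user_key.sign(dataset.encode(), PSS, hashes.SHA256())
    wrapped = dsks.request_key("alice", dataset, signature)

    # Client side: unwrap the dataset key with the private key, then decrypt.
    dataset_key = user_key.decrypt(wrapped, OAEP)
    return Fernet(dataset_key).decrypt(encrypted)
```

Because the data stays encrypted in every cache, NDN routers can store and serve it freely. Access control is concentrated in the single small exchange with the key server.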

Scalable routers

- NDN routers mentioned in the literature discuss the caching of data in memory
- With LHC experiment ROOT files roughly 2 GB in size and increasing, the viability of such in-memory caching is questionable
- We envisage the need to build a scalable router similar to our scalable repository
- The router would likely have several layers of caching:
  - Memcached
  - SSD
  - Ceph base storage
- May end up with a hybrid router-repository at experiment sites
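A layered router cache (memory, then SSD, then Ceph as base storage) can be sketched as a chain of LRU tiers. A hit in a lower tier is promoted to the top, and items evicted from one tier are demoted to the next. This is a minimal sketch under stated assumptions: capacities count objects rather than bytes, the tier names are labels only (no Memcached, SSD or Ceph client is involved), and a plain dict stands in for the base storage.

```python
from collections import OrderedDict


class Tier:
    """One LRU cache layer (e.g. memory or SSD); capacity counts objects."""

    def __init__(self, name: str, capacity: int) -> None:
        self.name, self.capacity = name, capacity
        self.items: "OrderedDict[str, bytes]" = OrderedDict()

    def pop(self, key: str):
        return self.items.pop(key, None)

    def put(self, key: str, value: bytes):
        """Insert as most recent; return the evicted (key, value) or (None, None)."""
        self.items[key] = value
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            return self.items.popitem(last=False)
        return None, None


class TieredCache:
    def __init__(self, tiers: list, base: dict) -> None:
        self.tiers = tiers  # fastest first
        self.base = base    # stand-in for Ceph base storage

    def _insert(self, key, value) -> None:
        level = 0
        while key is not None and level < len(self.tiers):
            key, value = self.tiers[level].put(key, value)  # demote evictee
            level += 1  # anything evicted from the last tier is simply dropped

    def get(self, key: str):
        """Return (data, where_found), promoting the object to the top tier."""
        for tier in self.tiers:
            value = tier.pop(key)
            if value is not None:
                self._insert(key, value)
                return value, tier.name
        value = self.base[key]  # KeyError if the object does not exist at all
        self._insert(key, value)
        return value, "base"
```

Demoting rather than discarding evicted objects means a file pushed out of memory by newer requests is still served from SSD, which matters when individual ROOT files are gigabytes in size.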