Multicore Compilation: an Industrial Approach, Alastair F. Donaldson

- Slides: 42

Multi-core Compilation – an Industrial Approach
Alastair F. Donaldson, EPSRC Postdoctoral Research Fellow, University of Oxford
Formerly at Codeplay Software Ltd.
Thanks to the Codeplay Sieve team: Pete Cooper, Uwe Dolinsky, Andrew Richards, Colin Riley, George Russell
www.codeplay.com
Codeplay Software Ltd, 2nd Floor, 45 York Place, Edinburgh, EH1 3HP, United Kingdom. Tel: +44(0)131 466 0506

Coverage
• Limits of automatic parallelisation
• Programming heterogeneous multi-core processors
• Codeplay Sieve Threads approach
  – Like pthreads for accelerator processors
• The promises and limitations of OpenCL
Laboratory session: Sieve Partitioning System for Cell Linux – a Practical Introduction

Limits of automatic parallelisation
Dream: a tool which takes a serial program, finds opportunities for parallelism, produces parallel code optimized for the target processor, and preserves determinism
• Part of why this has not been achieved:
  – C/C++, pointers, function pointers, multiple source files, precompiled libraries
• Why this will never be achieved:
  – Many parallelisable programs require ingenuity to parallelise!
• State of the art: we are good at parallelising regular loops, when we can see all the code
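This minimal C++ sketch makes the pointer problem concrete. Its function names are hypothetical and do not come from the talk. The loop looks trivially parallel, but it is not when its two pointer arguments may overlap, and a compiler that cannot see every call site has to assume they might:

```cpp
#include <cassert>
#include <vector>

// Serial loop: iteration i reads src[i] and writes dst[i].
// If dst and src overlap, iteration i may read a value written by iteration i - 1.
void add_one(int* dst, const int* src, int n) {
    for (int i = 0; i < n; ++i) {
        dst[i] = src[i] + 1;
    }
}

// What running the iterations simultaneously would compute: every iteration
// reads its input before any iteration writes (modelled by snapshotting src).
void add_one_as_if_independent(int* dst, const int* src, int n) {
    std::vector<int> snapshot(src, src + n);
    for (int i = 0; i < n; ++i) {
        dst[i] = snapshot[i] + 1;
    }
}

void demo_aliasing() {
    int a[4] = {0, 0, 0, 0};
    add_one(a + 1, a, 3);                    // dst overlaps src, shifted by one
    assert(a[1] == 1 && a[2] == 2 && a[3] == 3);

    int b[4] = {0, 0, 0, 0};
    add_one_as_if_independent(b + 1, b, 3);  // same call, "parallel" semantics
    assert(b[1] == 1 && b[2] == 1 && b[3] == 1);
}
```

When dst and src do not overlap, both functions give the same result. Only the possibility of aliasing blocks automatic parallelisation.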

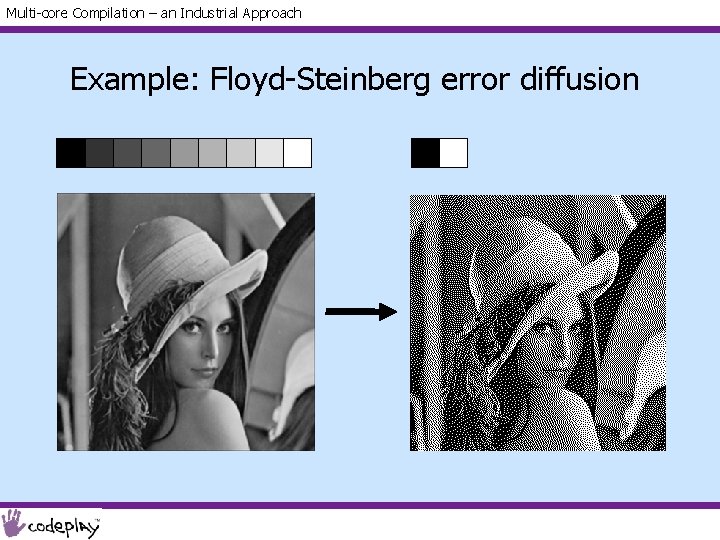

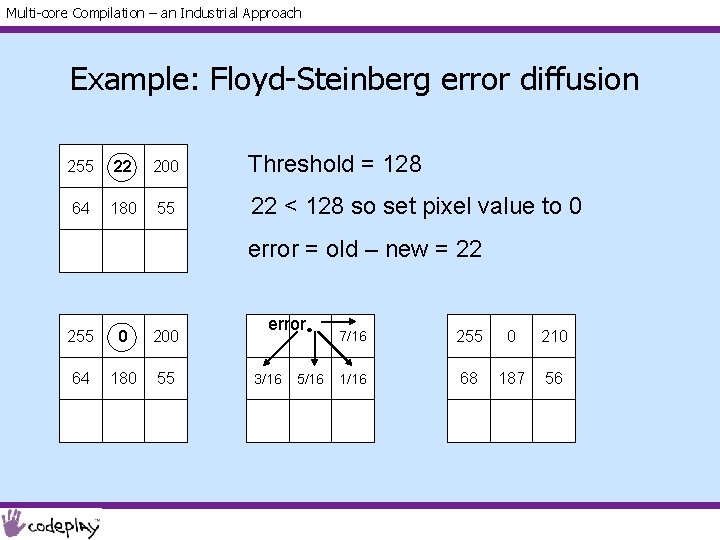

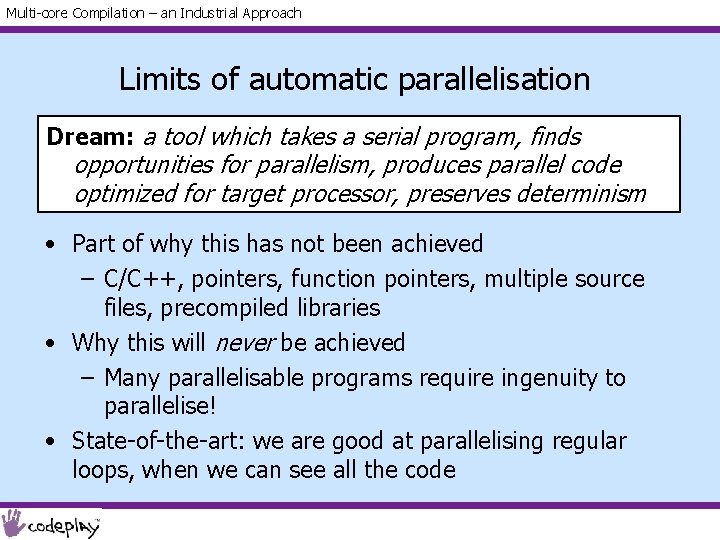

Example: Floyd-Steinberg error diffusion

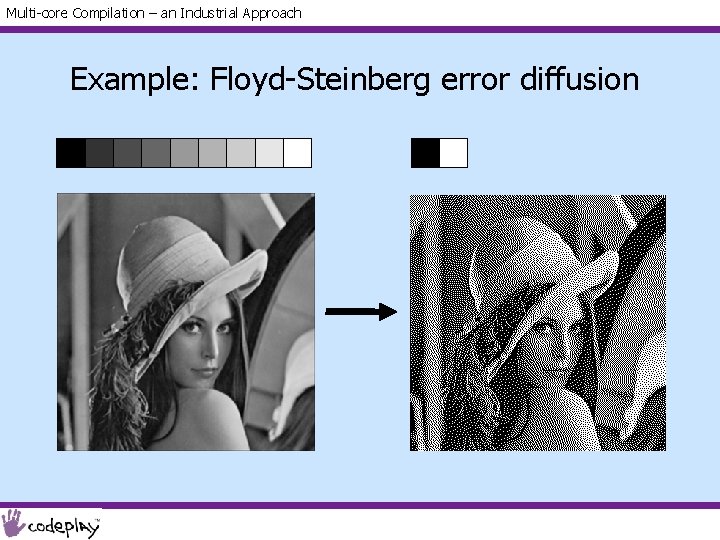

Example: Floyd-Steinberg error diffusion (one step)
Input pixels:
255  22 200
 64 180  55
Threshold = 128. The current pixel is 22; 22 < 128, so set its value to 0. error = old - new = 22.
Distribute the error to the unprocessed neighbours: 7/16 to the right, 3/16 below-left, 5/16 below, 1/16 below-right (values rounded):
255   0 210
 68 187  56
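The step above can be written as a short serial routine. This sketch assumes a row-major float buffer, uses the threshold of 128 from the example, and gives the functions hypothetical names. Each pixel pushes its error forward exactly as in the worked step, and that is why the raster loop looks inherently serial:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Quantise pixel (x, y) of a row-major greyscale buffer to 0 or 255 and push
// the quantisation error to its four unprocessed neighbours with the
// Floyd-Steinberg weights 7/16 (right), 3/16 (below-left), 5/16 (below), 1/16 (below-right).
void diffuse_pixel(std::vector<float>& img, int w, int h, int x, int y) {
    float& p = img[y * w + x];
    const float old_value = p;
    const float new_value = old_value < 128.0f ? 0.0f : 255.0f;
    p = new_value;
    const float err = old_value - new_value;
    auto push = [&](int nx, int ny, float weight) {
        if (nx >= 0 && nx < w && ny < h) img[ny * w + nx] += err * weight;
    };
    push(x + 1, y,     7.0f / 16.0f);
    push(x - 1, y + 1, 3.0f / 16.0f);
    push(x,     y + 1, 5.0f / 16.0f);
    push(x + 1, y + 1, 1.0f / 16.0f);
}

// Serial raster-order error diffusion: every pixel's final value depends on
// errors pushed by pixels processed before it.
void floyd_steinberg(std::vector<float>& img, int w, int h) {
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            diffuse_pixel(img, w, h, x, y);
}

void demo_floyd_steinberg() {
    // The 3x2 example above; process only the pixel holding 22.
    std::vector<float> img = {255, 22, 200,
                              64, 180, 55};
    diffuse_pixel(img, 3, 2, 1, 0);
    assert(img[0] == 255.0f && img[1] == 0.0f);
    assert(std::lround(img[2]) == 210 && std::lround(img[3]) == 68);
    assert(std::lround(img[4]) == 187 && std::lround(img[5]) == 56);
}
```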

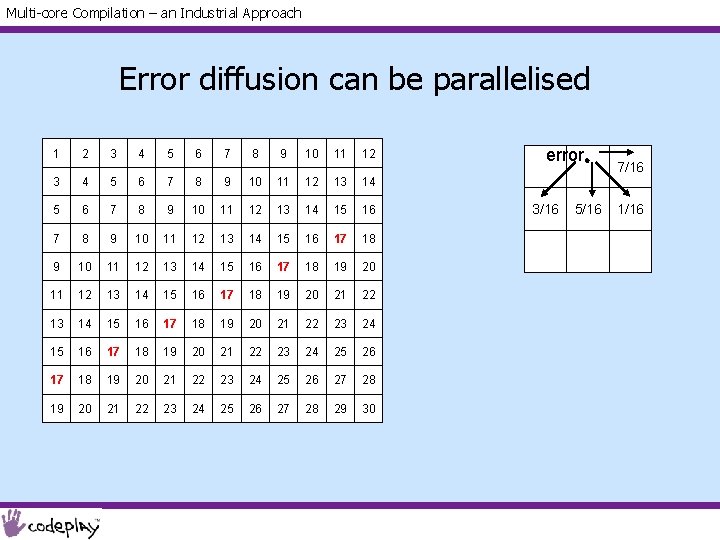

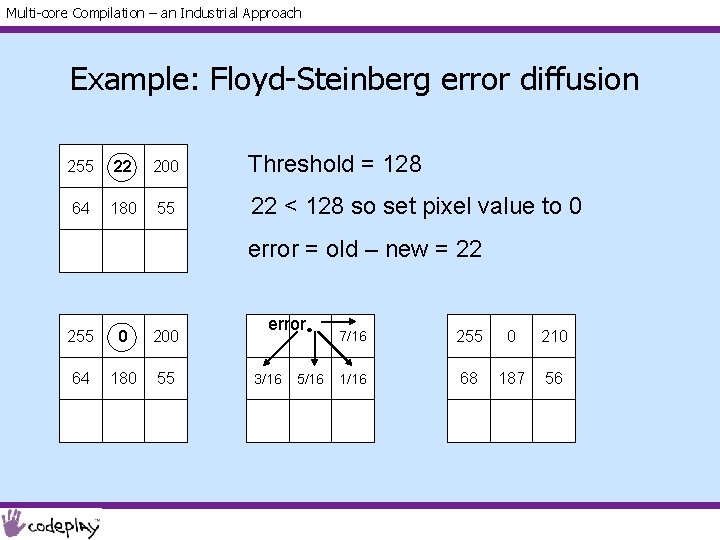

Error diffusion can be parallelised
[Figure: each pixel is labelled with the time step at which it is processed. The first row covers steps 1 to 18, the second row steps 9 to 26 and the third row steps 17 to 30, so pixels in different rows are processed at the same time. Error weights: 3/16, 5/16, 7/16, 1/16.]
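A wavefront schedule is one way to see why the parallelisation works. The sketch below is our own illustration, not necessarily the scheme in the paper cited on the following slide, and it does not reproduce the figure's exact step numbering. It assumes a "pull" formulation: each pixel gathers its neighbours' errors instead of having them pushed, so pixels processed in the same step never write to the same location. Pixel (x, y) runs at step t = x + 2y, and the four neighbours it reads run at steps t-1, t-2 or t-3. All function names are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Pull-style error diffusion for one pixel of a row-major w x h image: gather the
// errors of the four already-processed neighbours, quantise, and record own error.
static void process_pixel(const std::vector<float>& in, std::vector<float>& err,
                          std::vector<float>& out, int w, int x, int y) {
    auto e = [&](int nx, int ny) {
        return (nx >= 0 && nx < w && ny >= 0) ? err[ny * w + nx] : 0.0f;
    };
    float v = in[y * w + x];
    v += e(x - 1, y - 1) * (1.0f / 16.0f);  // from above-left
    v += e(x,     y - 1) * (5.0f / 16.0f);  // from above
    v += e(x + 1, y - 1) * (3.0f / 16.0f);  // from above-right
    v += e(x - 1, y)     * (7.0f / 16.0f);  // from the left
    out[y * w + x] = v < 128.0f ? 0.0f : 255.0f;
    err[y * w + x] = v - out[y * w + x];
}

// Raster order: the serial reference.
std::vector<float> diffuse_raster(const std::vector<float>& in, int w, int h) {
    std::vector<float> err(in.size()), out(in.size());
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            process_pixel(in, err, out, w, x, y);
    return out;
}

// Wavefront order: all pixels with the same t = x + 2y depend only on earlier
// steps and write only their own entries, so the inner loop could be split
// across threads. Here it runs sequentially to keep the sketch simple.
std::vector<float> diffuse_wavefront(const std::vector<float>& in, int w, int h) {
    std::vector<float> err(in.size()), out(in.size());
    const int last_step = (w - 1) + 2 * (h - 1);
    for (int t = 0; t <= last_step; ++t) {
        for (int y = 0; y < h; ++y) {
            const int x = t - 2 * y;
            if (x >= 0 && x < w) process_pixel(in, err, out, w, x, y);
        }
    }
    return out;
}

void demo_wavefront() {
    const int w = 7, h = 5;
    std::vector<float> in(w * h);
    for (int i = 0; i < w * h; ++i) in[i] = float((i * 37) % 256);  // arbitrary pattern
    assert(diffuse_raster(in, w, h) == diffuse_wavefront(in, w, h));
}
```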

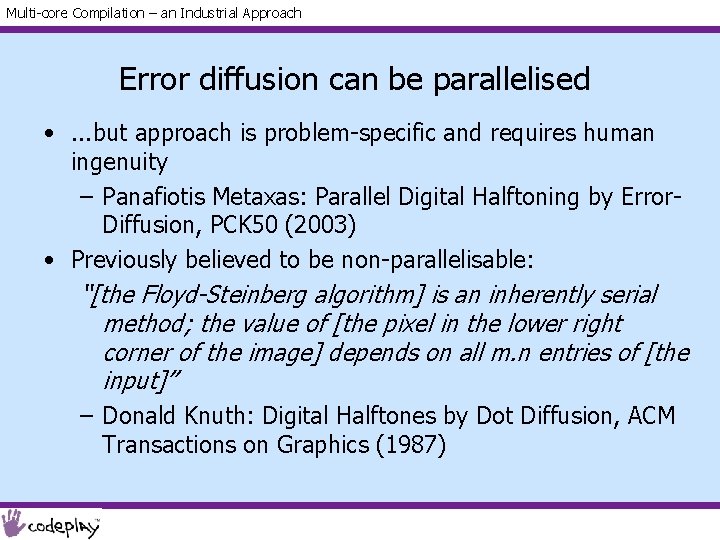

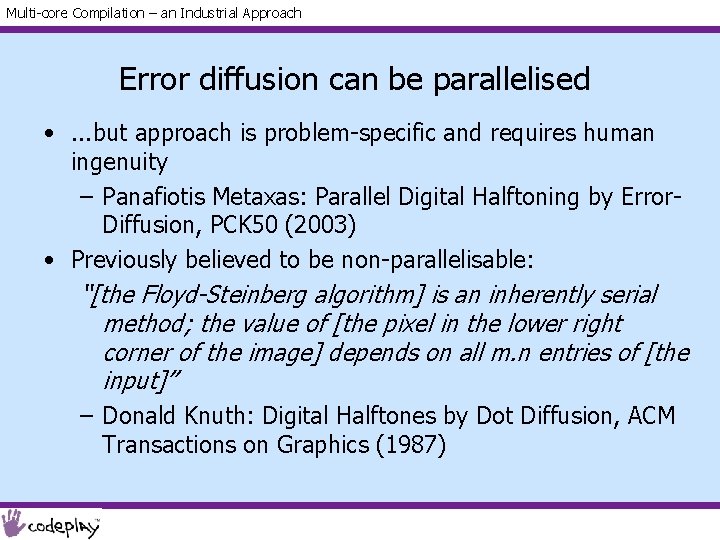

Error diffusion can be parallelised
• ... but the approach is problem-specific and requires human ingenuity
  – Panagiotis Metaxas: Parallel Digital Halftoning by Error-Diffusion, PCK50 (2003)
• Previously believed to be non-parallelisable: “[the Floyd-Steinberg algorithm] is an inherently serial method; the value of [the pixel in the lower right corner of the image] depends on all mn entries of [the input]”
  – Donald Knuth: Digital Halftones by Dot Diffusion, ACM Transactions on Graphics (1987)

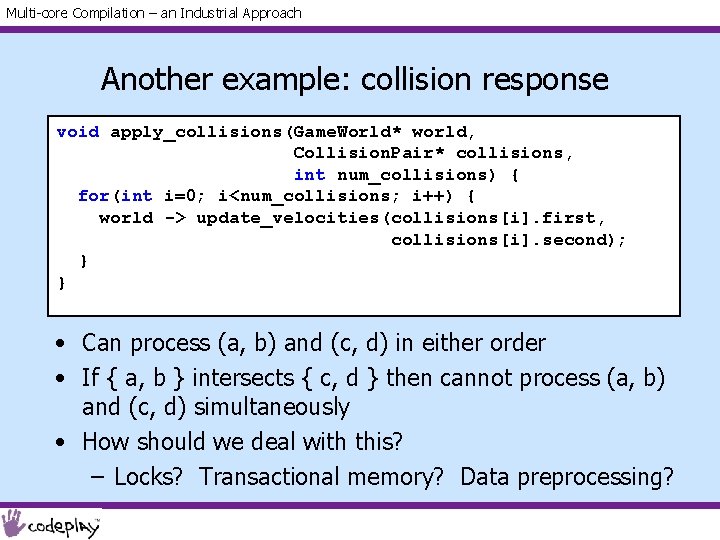

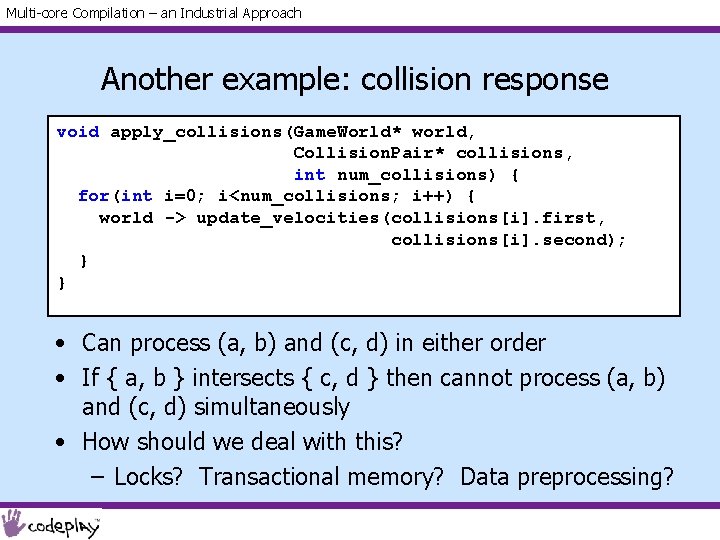

Another example: collision response

    void apply_collisions(GameWorld* world, CollisionPair* collisions, int num_collisions) {
      for(int i=0; i<num_collisions; i++) {
        world->update_velocities(collisions[i].first, collisions[i].second);
      }
    }

• Can process (a, b) and (c, d) in either order
• If {a, b} intersects {c, d}, then we cannot process (a, b) and (c, d) simultaneously
• How should we deal with this?
  – Locks? Transactional memory? Data preprocessing?
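For the "data preprocessing" option, one possible approach is to split the pairs into batches in which no object appears twice. This is a sketch, not something the slides prescribe. Pairs inside a batch touch disjoint objects, so they can be processed simultaneously. Batches run one after another, which is allowed because pairs may be processed in either order. CollisionPair here is a hypothetical pair of integer object ids:

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

using CollisionPair = std::pair<int, int>;  // hypothetical: ids of the two colliding objects

// Greedily place each pair in the first batch that does not already use either
// of its objects; open a new batch if none fits.
std::vector<std::vector<CollisionPair>> batch_pairs(const std::vector<CollisionPair>& pairs) {
    std::vector<std::vector<CollisionPair>> batches;
    std::vector<std::set<int>> used;  // object ids already claimed by each batch
    for (const CollisionPair& p : pairs) {
        std::size_t b = 0;
        while (b < batches.size() && (used[b].count(p.first) || used[b].count(p.second))) ++b;
        if (b == batches.size()) {
            batches.emplace_back();
            used.emplace_back();
        }
        batches[b].push_back(p);
        used[b].insert(p.first);
        used[b].insert(p.second);
    }
    return batches;
}

void demo_batches() {
    std::vector<CollisionPair> pairs = {{0, 1}, {2, 3}, {1, 2}, {3, 4}, {5, 6}};
    auto batches = batch_pairs(pairs);
    // Batch 0: (0,1) (2,3) (5,6); batch 1: (1,2) (3,4)
    assert(batches.size() == 2);
    assert(batches[0].size() == 3 && batches[1].size() == 2);
}
```

Each batch could then be handed to the threads in parallel, with a barrier between batches. The price is a preprocessing pass over the pairs every frame.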

Our perspective
• Let's nail auto-parallelisation for special cases
• In general, we are stuck with multi-threading
• Let's design sophisticated tools to help with multi-threaded programming
• Modern problem: multi-threaded programming for heterogeneous multi-core is very hard

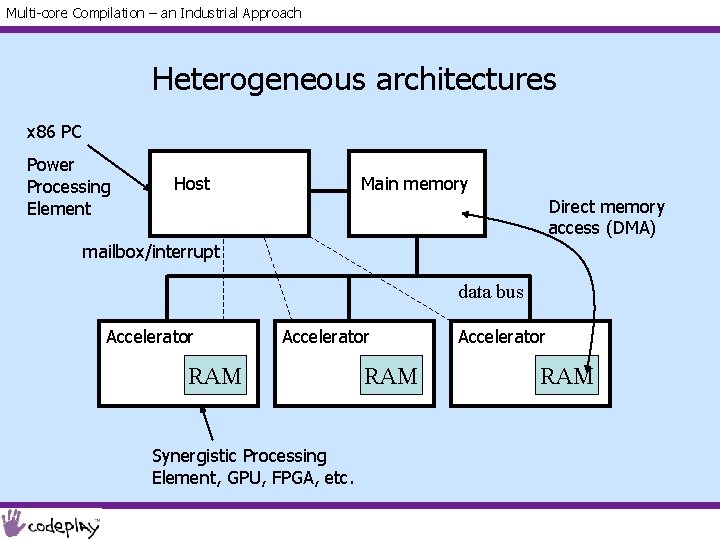

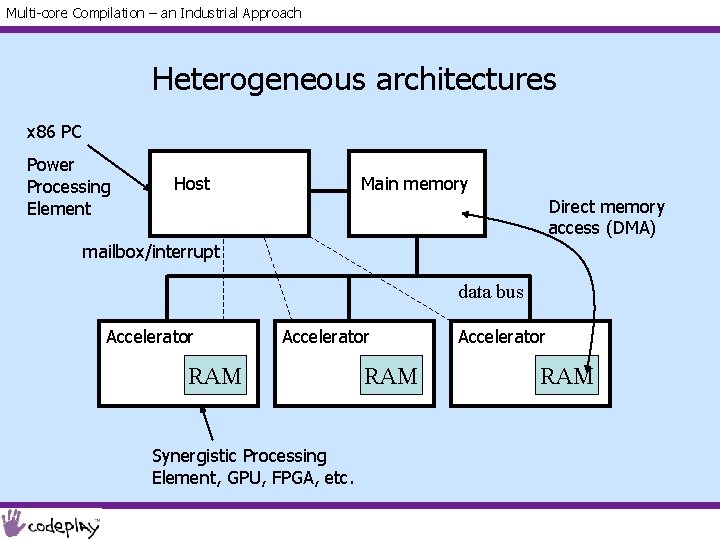

Heterogeneous architectures
[Figure: a host (an x86 PC, or the Power Processing Element) with main memory, connected by a data bus to several accelerators (Synergistic Processing Element, GPU, FPGA, etc.), each with its own RAM. Data moves by direct memory access (DMA); host and accelerators signal each other via mailbox/interrupt.]

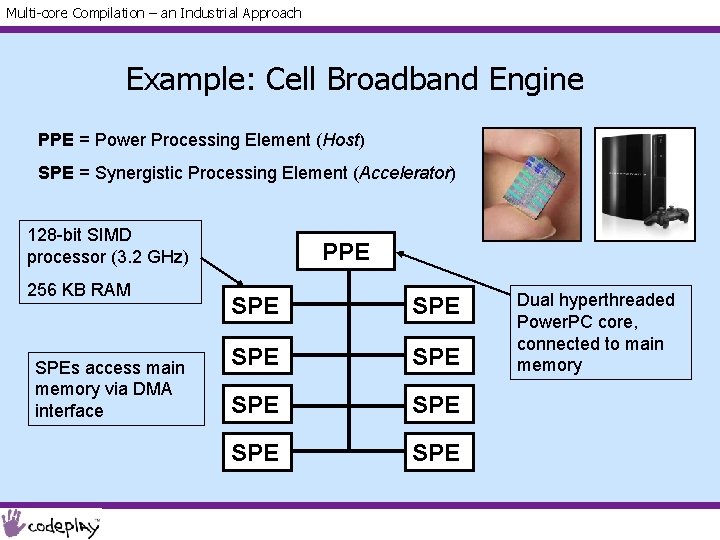

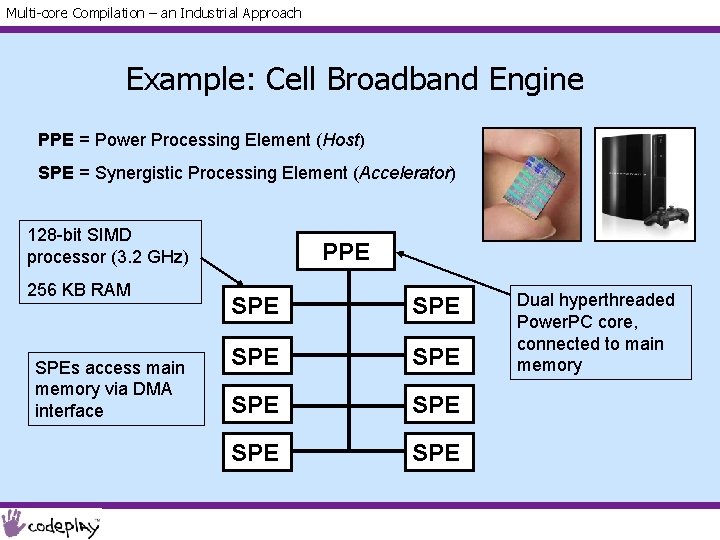

Example: Cell Broadband Engine
• PPE = Power Processing Element (host): dual hyperthreaded PowerPC core, connected to main memory
• SPE = Synergistic Processing Element (accelerator): 128-bit SIMD processor (3.2 GHz) with 256 KB RAM
• SPEs access main memory via a DMA interface

Programming heterogeneous machines
• Write separate programs for host and accelerator
• Lots of "glue" code to:
  – launch accelerators
  – orchestrate data movement
  – clear down accelerators
• Can achieve great performance, but:
  – Time-consuming
  – Non-portable
  – Error-prone (limited scope for static checking)
  – Multiple source files for logically related functionality

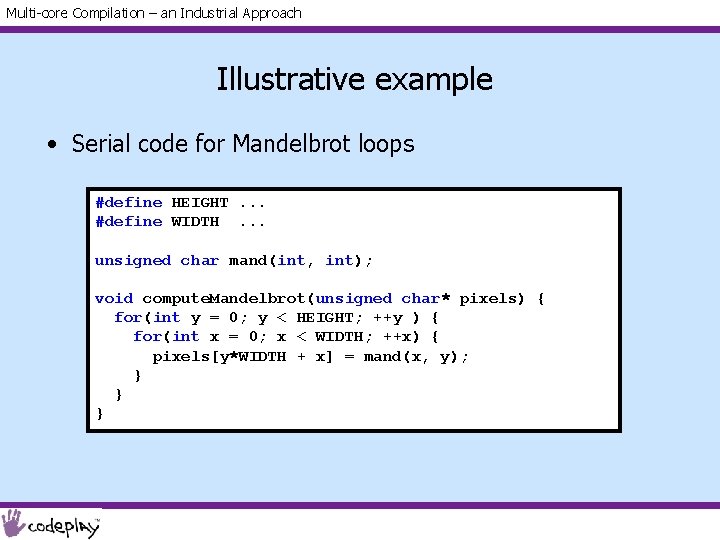

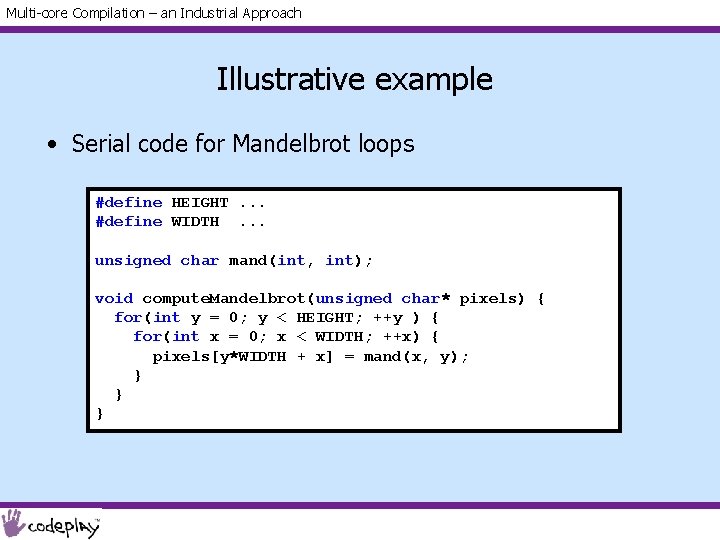

Illustrative example
• Serial code for Mandelbrot loops:

    #define HEIGHT ...
    #define WIDTH ...
    unsigned char mand(int, int);

    void computeMandelbrot(unsigned char* pixels) {
      for(int y = 0; y < HEIGHT; ++y) {
        for(int x = 0; x < WIDTH; ++x) {
          pixels[y*WIDTH + x] = mand(x, y);
        }
      }
    }

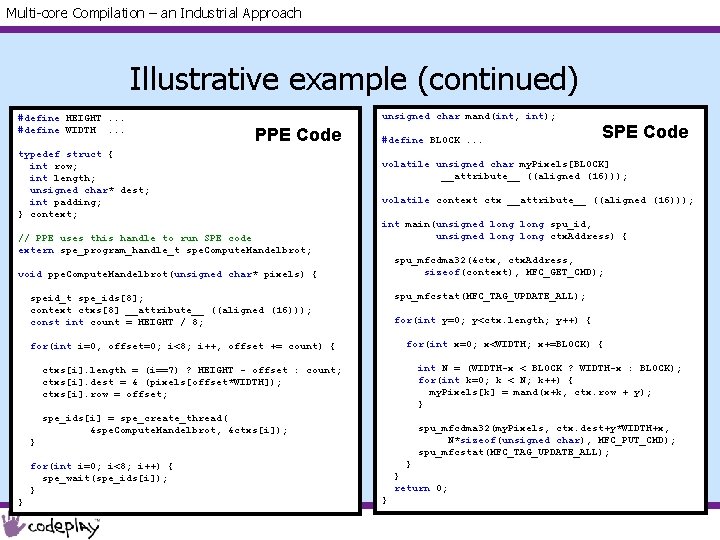

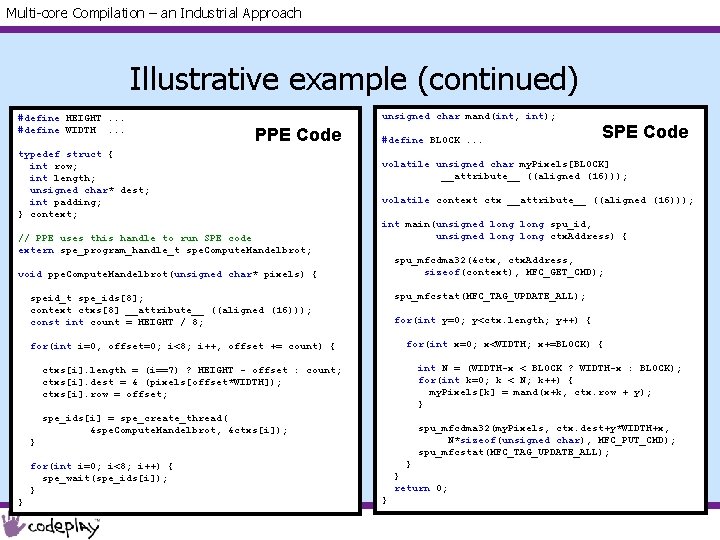

Illustrative example (continued)
Shared declarations:

    #define HEIGHT ...
    #define WIDTH ...
    unsigned char mand(int, int);

    typedef struct {
      int row;              // first image row handled by this SPE
      int length;           // number of rows handled by this SPE
      unsigned char* dest;  // where those rows live in main memory
      int padding;          // padding (presumably to keep the struct DMA-friendly)
    } context;

PPE Code:

    // PPE uses this handle to run SPE code
    extern spe_program_handle_t speComputeMandelbrot;

    void ppeComputeMandelbrot(unsigned char* pixels) {
      speid_t spe_ids[8];
      context ctxs[8] __attribute__ ((aligned (16)));
      const int count = HEIGHT / 8;
      // Give each of the 8 SPEs a band of rows; the last SPE also takes any remainder
      for(int i=0, offset=0; i<8; i++, offset += count) {
        ctxs[i].length = (i==7) ? HEIGHT - offset : count;
        ctxs[i].dest = &(pixels[offset*WIDTH]);
        ctxs[i].row = offset;
        spe_ids[i] = spe_create_thread(&speComputeMandelbrot, &ctxs[i]);
      }
      // Wait for all SPEs to finish
      for(int i=0; i<8; i++) {
        spe_wait(spe_ids[i]);
      }
    }

SPE Code:

    #define BLOCK ...
    volatile unsigned char myPixels[BLOCK] __attribute__ ((aligned (16)));
    volatile context ctx __attribute__ ((aligned (16)));

    int main(unsigned long spu_id, unsigned long ctxAddress) {
      // DMA this SPE's context from main memory into local store, then wait for it
      spu_mfcdma32(&ctx, ctxAddress, sizeof(context), MFC_GET_CMD);
      spu_mfcstat(MFC_TAG_UPDATE_ALL);
      for(int y=0; y<ctx.length; y++) {
        for(int x=0; x<WIDTH; x+=BLOCK) {
          int N = (WIDTH-x < BLOCK ? WIDTH-x : BLOCK);
          for(int k=0; k < N; k++) {
            myPixels[k] = mand(x+k, ctx.row + y);
          }
          // DMA the finished block back to main memory, then wait for it
          spu_mfcdma32(myPixels, ctx.dest+y*WIDTH+x, N*sizeof(unsigned char), MFC_PUT_CMD);
          spu_mfcstat(MFC_TAG_UPDATE_ALL);
        }
      }
      return 0;
    }

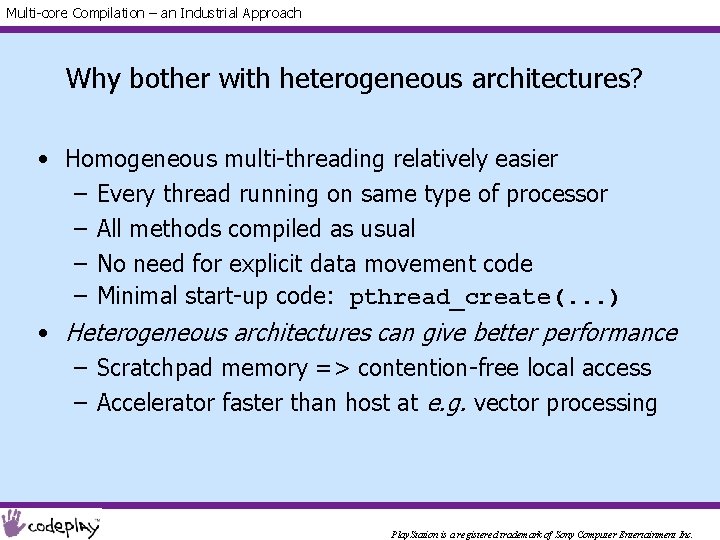

Why bother with heterogeneous architectures?
• Homogeneous multi-threading is relatively easier
  – Every thread runs on the same type of processor
  – All methods compiled as usual
  – No need for explicit data movement code
  – Minimal start-up code: pthread_create(...)
• Heterogeneous architectures can give better performance
  – Scratchpad memory => contention-free local access
  – Accelerator faster than host at e.g. vector processing
PlayStation is a registered trademark of Sony Computer Entertainment Inc.
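For comparison, here is roughly what a homogeneous version of the Mandelbrot example could look like, using C++ std::thread in place of pthread_create. It keeps the PPE code's row partitioning, but it needs no DMA, no separate accelerator program and no context structure. WIDTH, HEIGHT and the body of mand are hypothetical stand-ins for the values the slides elide:

```cpp
#include <cassert>
#include <complex>
#include <thread>
#include <vector>

constexpr int WIDTH = 64;   // hypothetical image size
constexpr int HEIGHT = 36;

// Hypothetical escape-time function standing in for the slide's mand(int, int).
unsigned char mand(int x, int y) {
    const std::complex<double> c(-2.0 + 3.0 * x / WIDTH, -1.0 + 2.0 * y / HEIGHT);
    std::complex<double> z(0.0, 0.0);
    int it = 0;
    while (it < 255 && std::norm(z) <= 4.0) {
        z = z * z + c;
        ++it;
    }
    return static_cast<unsigned char>(it);
}

// Serial version, as on the earlier slide.
void computeMandelbrot(unsigned char* pixels) {
    for (int y = 0; y < HEIGHT; ++y)
        for (int x = 0; x < WIDTH; ++x)
            pixels[y * WIDTH + x] = mand(x, y);
}

// Same partitioning as the PPE code (8 workers, HEIGHT / 8 rows each, the last
// worker takes the remainder), but every thread writes straight into `pixels`.
void threadedComputeMandelbrot(unsigned char* pixels) {
    const int workers = 8;
    const int count = HEIGHT / workers;
    std::vector<std::thread> threads;
    for (int i = 0, offset = 0; i < workers; ++i, offset += count) {
        const int length = (i == workers - 1) ? HEIGHT - offset : count;
        threads.emplace_back([=] {
            for (int y = offset; y < offset + length; ++y)
                for (int x = 0; x < WIDTH; ++x)
                    pixels[y * WIDTH + x] = mand(x, y);
        });
    }
    for (std::thread& t : threads) t.join();
}

void demo_mandelbrot() {
    std::vector<unsigned char> serial(WIDTH * HEIGHT), threaded(WIDTH * HEIGHT);
    computeMandelbrot(serial.data());
    threadedComputeMandelbrot(threaded.data());
    assert(serial == threaded);
}
```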

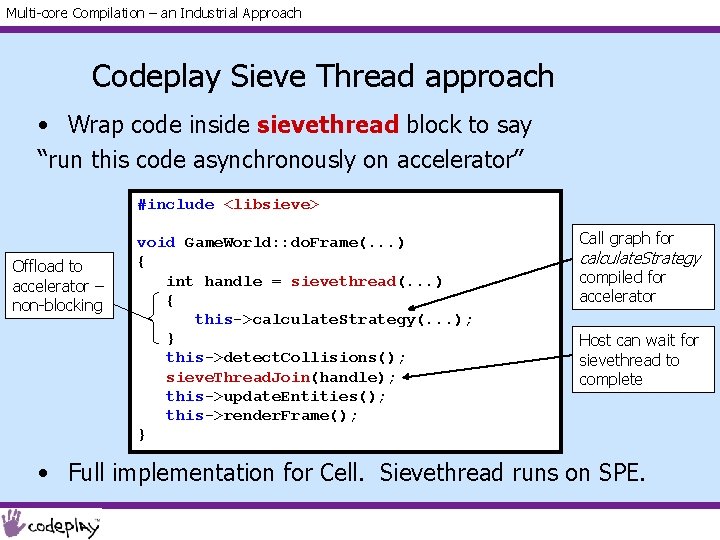

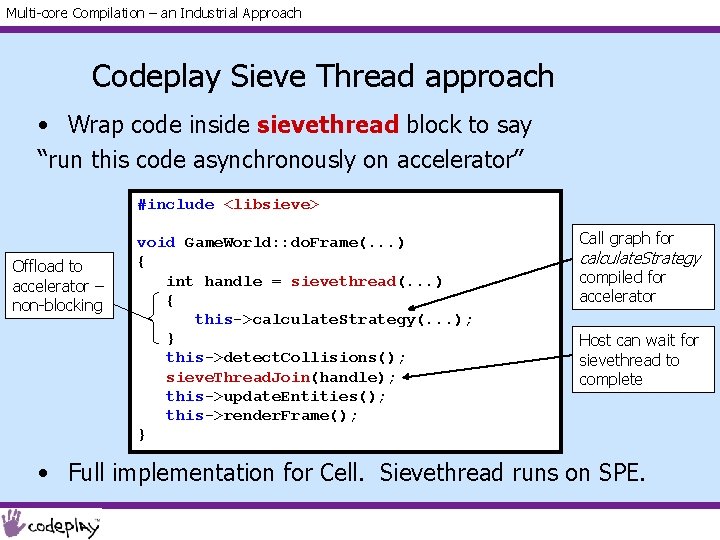

Codeplay Sieve Thread approach
• Wrap code inside a sievethread block to say "run this code asynchronously on the accelerator"

    #include <libsieve>

    void GameWorld::doFrame(...) {
      // Suppose calculateStrategy and detectCollisions are independent
      int handle = sievethread(...) {   // offload to accelerator: non-blocking
        this->calculateStrategy(...);   // call graph for calculateStrategy compiled for accelerator
      };
      this->detectCollisions();
      sieveThreadJoin(handle);          // host can wait for sievethread to complete
      this->updateEntities();
      this->renderFrame();
    }

• Full implementation for Cell: the sievethread runs on an SPE.
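On a homogeneous machine, the same fork/join shape can be expressed with std::thread. This sketch is only an analogy. The GameWorld members below are hypothetical placeholders, nothing is compiled for a different processor, and std::thread::join plays the role of sieveThreadJoin:

```cpp
#include <cassert>
#include <thread>

// Hypothetical stand-in for the slide's GameWorld; method bodies are placeholders.
struct GameWorld {
    int strategy = 0, collisions = 0, entities = 0;

    void calculateStrategy() { strategy = 42; }
    void detectCollisions() { collisions = 7; }
    void updateEntities() { entities = strategy + collisions; }  // needs both results
    void renderFrame() {}

    void doFrame() {
        // Start calculateStrategy asynchronously (the role of the sievethread block)...
        std::thread strategyThread([this] { this->calculateStrategy(); });
        // ...while this thread detects collisions; the two touch different members.
        this->detectCollisions();
        strategyThread.join();  // corresponds to sieveThreadJoin(handle)
        this->updateEntities();
        this->renderFrame();
    }
};
```

Usage: `GameWorld w; w.doFrame();` leaves `w.entities` equal to 49. The join must come before updateEntities, just as on the slide, because that call reads the strategy result.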

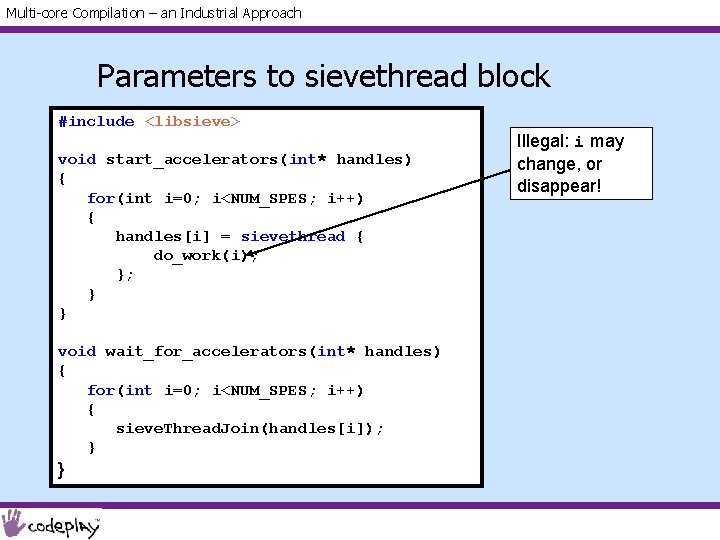

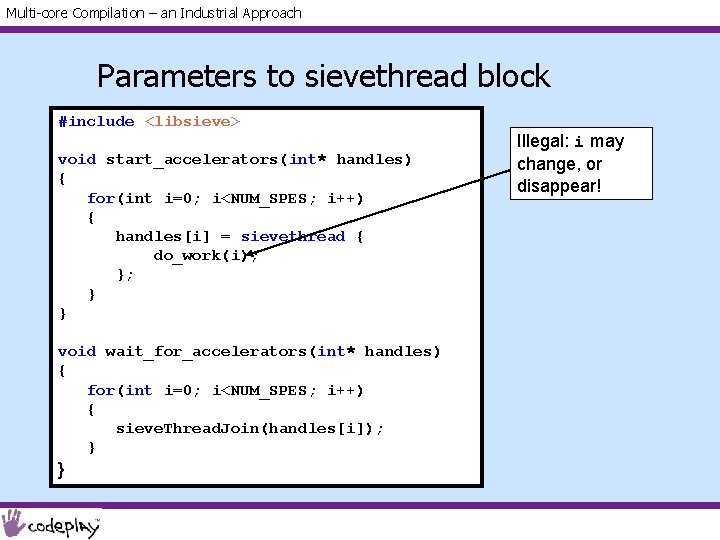

Parameters to sievethread block

    #include <libsieve>

    void start_accelerators(int* handles) {
      for(int i=0; i<NUM_SPES; i++) {
        handles[i] = sievethread {
          do_work(i);   // Illegal: i may change, or disappear!
        };
      }
    }

    void wait_for_accelerators(int* handles) {
      for(int i=0; i<NUM_SPES; i++) {
        sieveThreadJoin(handles[i]);
      }
    }

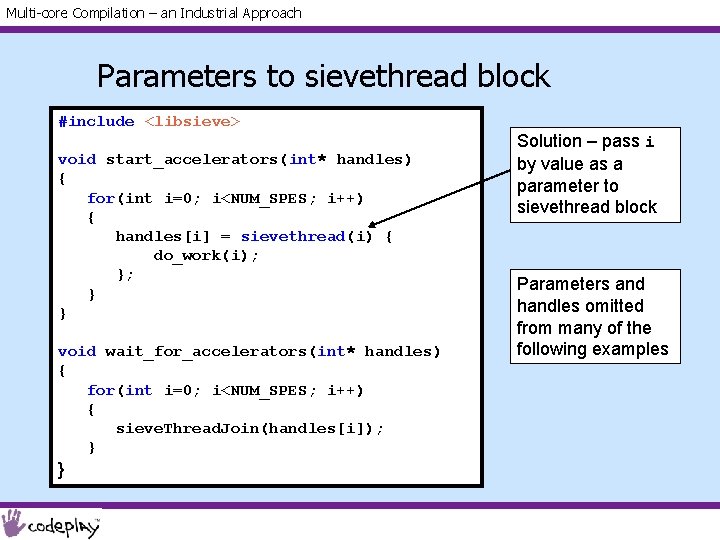

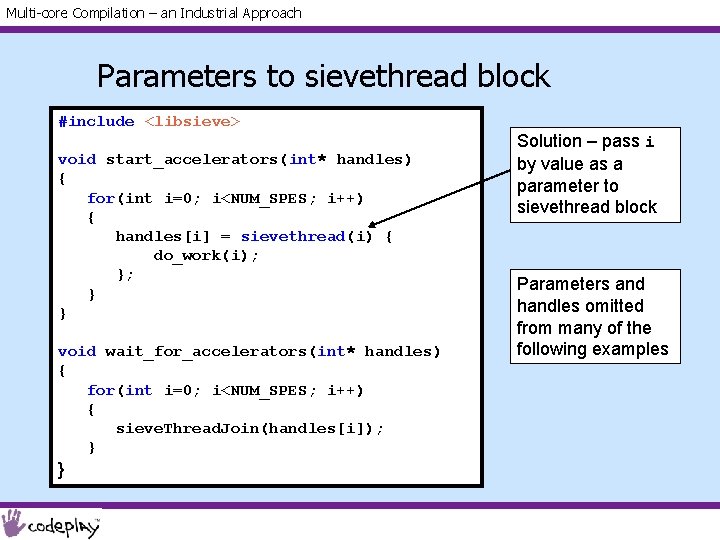

Parameters to sievethread block

    #include <libsieve>

    void start_accelerators(int* handles) {
      for(int i=0; i<NUM_SPES; i++) {
        handles[i] = sievethread(i) {
          do_work(i);
        };
      }
    }

    void wait_for_accelerators(int* handles) {
      for(int i=0; i<NUM_SPES; i++) {
        sieveThreadJoin(handles[i]);
      }
    }

• Solution: pass i by value as a parameter to the sievethread block
• Parameters and handles are omitted from many of the following examples
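Standard C++ threads have exactly the same pitfall, which makes the fix easy to try out. In this sketch, NUM_SPES, do_work and the results vector are hypothetical, and the lambda's [i] capture corresponds to sievethread(i):

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

constexpr int NUM_SPES = 6;   // hypothetical worker count

std::mutex results_mutex;
std::vector<int> results;     // records the index each worker received

void do_work(int i) {         // hypothetical work: just record i
    std::lock_guard<std::mutex> lock(results_mutex);
    results.push_back(i);
}

void start_workers(std::vector<std::thread>& handles) {
    for (int i = 0; i < NUM_SPES; i++) {
        // [i] copies the loop counter into the thread, like sievethread(i).
        // Capturing by reference ([&i]) would let the thread read i after it has
        // changed, or after the loop has ended and i no longer exists.
        handles.emplace_back([i] { do_work(i); });
    }
}

void wait_for_workers(std::vector<std::thread>& handles) {
    for (std::thread& t : handles) t.join();
}

void demo_capture() {
    results.clear();
    std::vector<std::thread> handles;
    start_workers(handles);
    wait_for_workers(handles);
    std::sort(results.begin(), results.end());  // threads finish in any order
    assert(results.size() == static_cast<std::size_t>(NUM_SPES));
    for (int i = 0; i < NUM_SPES; i++) assert(results[i] == i);
}
```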

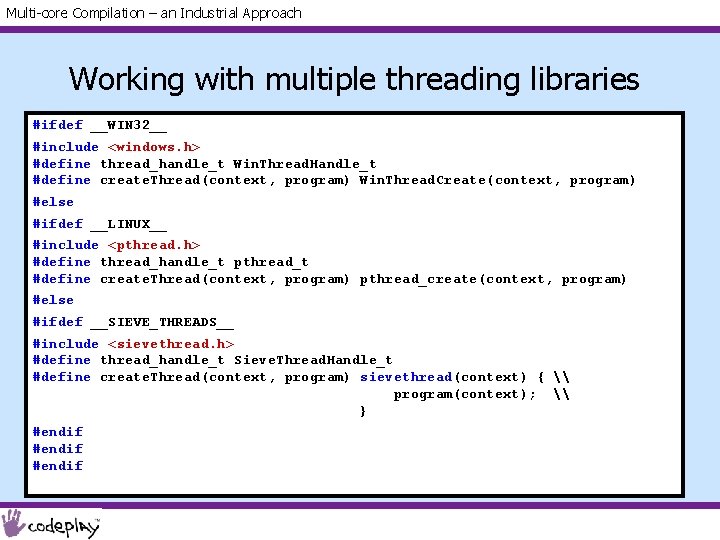

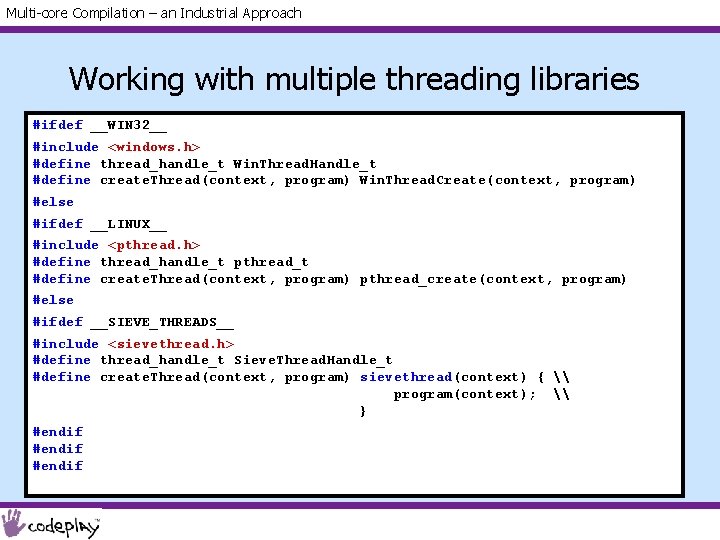

Working with multiple threading libraries

    // Schematic: the thread-creation calls are simplified
    // (for example, the real pthread_create takes four arguments).
    #if defined(__WIN32__)
    #include <windows.h>
    #define thread_handle_t WinThreadHandle_t
    #define createThread(context, program) WinThreadCreate(context, program)
    #elif defined(__LINUX__)
    #include <pthread.h>
    #define thread_handle_t pthread_t
    #define createThread(context, program) pthread_create(context, program)
    #elif defined(__SIEVE_THREADS__)
    #include <sievethread.h>
    #define thread_handle_t SieveThreadHandle_t
    #define createThread(context, program) sievethread(context) { \
      program(context); \
    }
    #endif

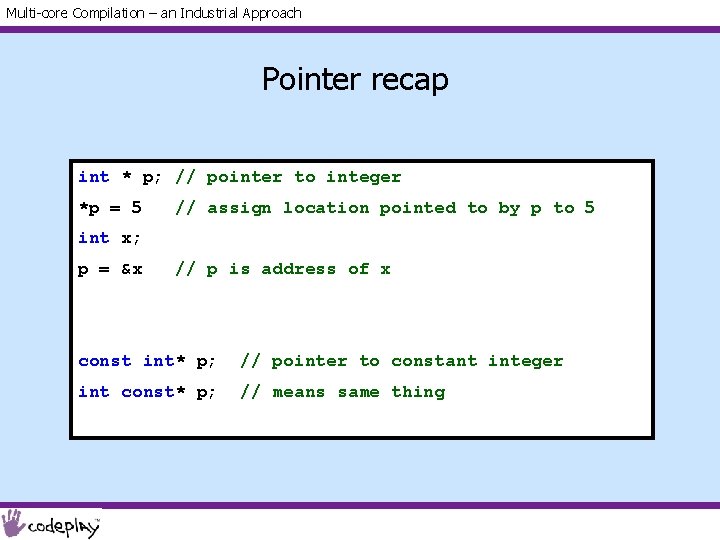

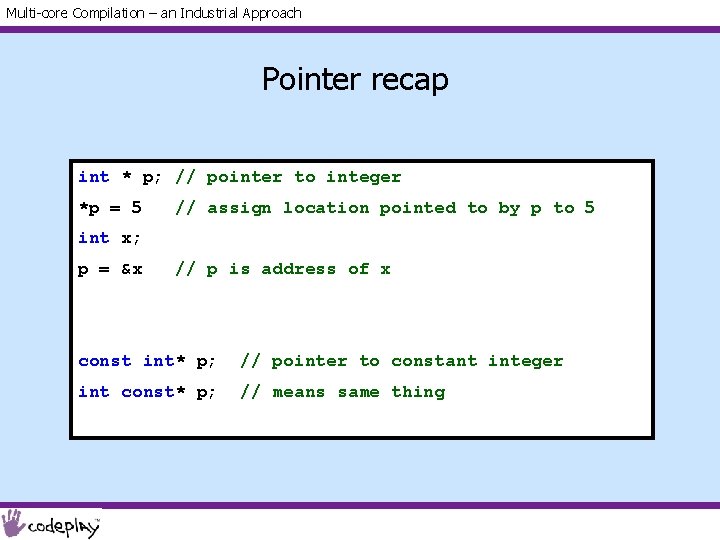

Pointer recap

    int* p;         // pointer to integer
    int x;
    p = &x;         // p is the address of x
    *p = 5;         // assign 5 to the location pointed to by p (i.e. to x)
    const int* p;   // pointer to constant integer
    int const* p;   // means the same thing
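Here is the same recap as a small compilable C++ function. The statements are reordered so that p points at x before it is dereferenced, and the function name is hypothetical. The last two declarations contrast a pointer to constant int with a constant pointer:

```cpp
#include <cassert>
#include <type_traits>

// const int* and int const* name the same type.
static_assert(std::is_same<const int*, int const*>::value,
              "const int* and int const* are the same type");

int pointer_recap() {
    int x = 0;
    int* p = &x;        // p holds the address of x
    *p = 5;             // store 5 in the location p points to, i.e. x
    const int* q = &x;  // pointer to constant int: *q = 6; would not compile
    int* const r = &x;  // by contrast: constant pointer to a modifiable int
    *r = *q + 1;        // allowed: modifies x through r
    return x;           // x is now 6
}
```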

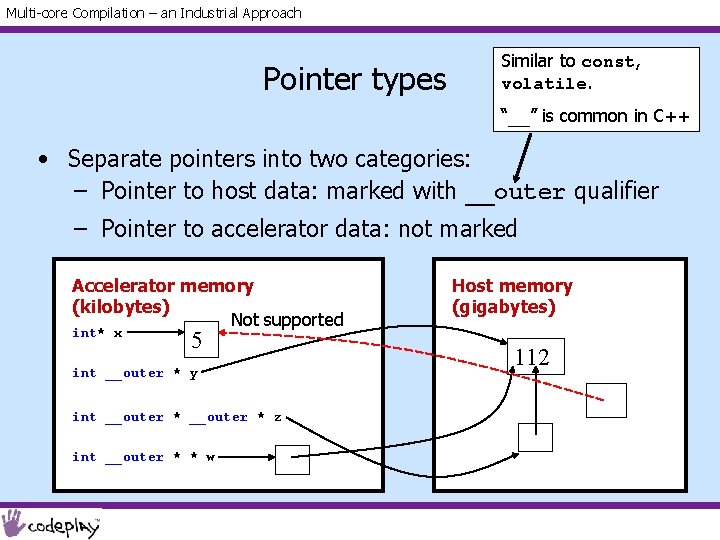

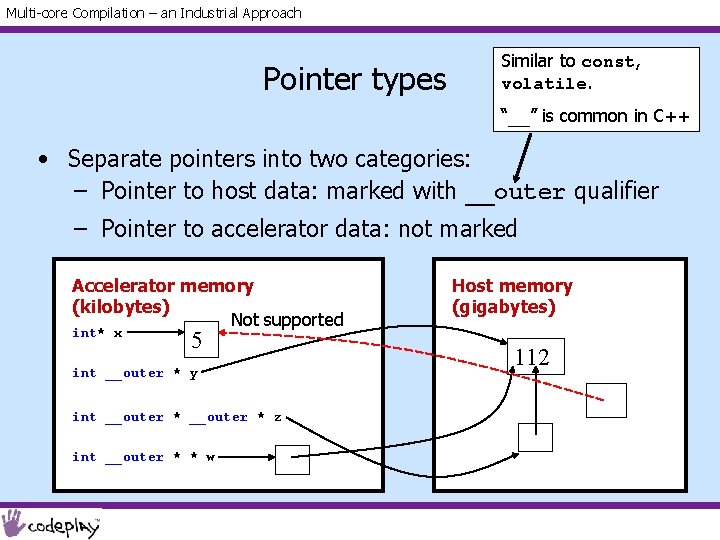

Pointer types
• Separate pointers into two categories:
  – Pointer to host data: marked with the __outer qualifier (a type qualifier similar to const and volatile; the "__" prefix is a common convention for compiler extensions in C++)
  – Pointer to accelerator data: not marked
[Figure: example pointers int* x, int __outer* y, int __outer* z and int __outer** w, pointing into accelerator memory (kilobytes, holding the value 5) and host memory (gigabytes, holding the value 112); one pointer arrangement is marked "Not supported".]

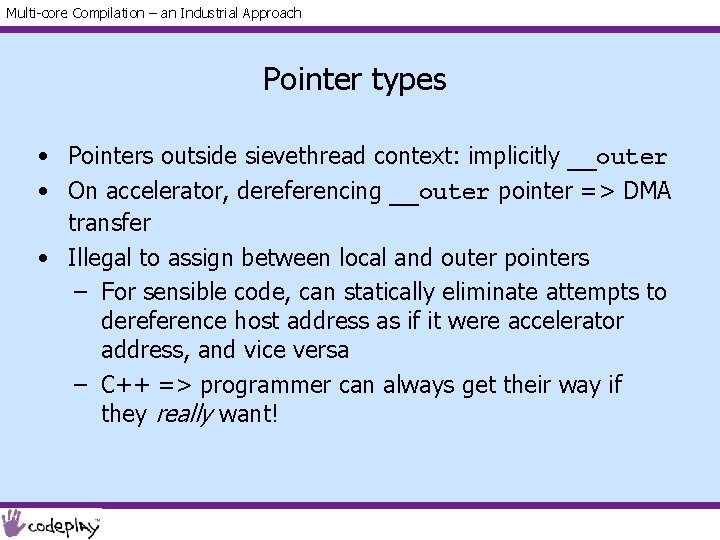

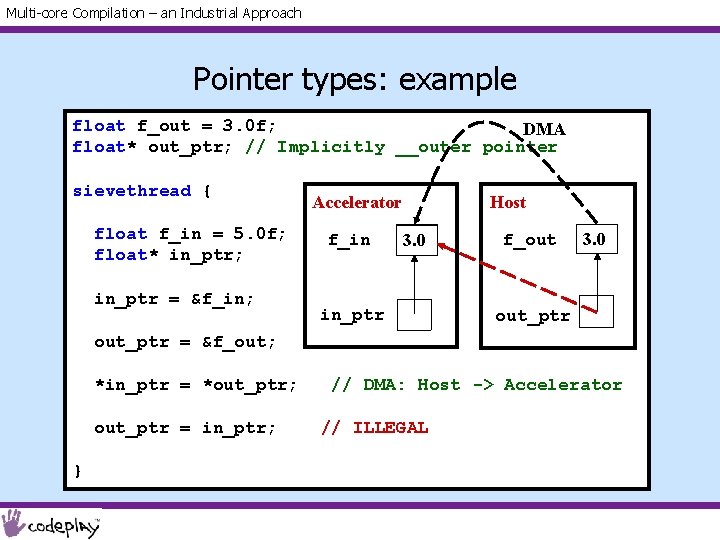

Pointer types
• Pointers outside a sievethread context are implicitly __outer
• On the accelerator, dereferencing an __outer pointer => DMA transfer
• Illegal to assign between local and outer pointers
  – For sensible code, we can statically eliminate attempts to dereference a host address as if it were an accelerator address, and vice versa
  – C++ => the programmer can always get their way if they really want!

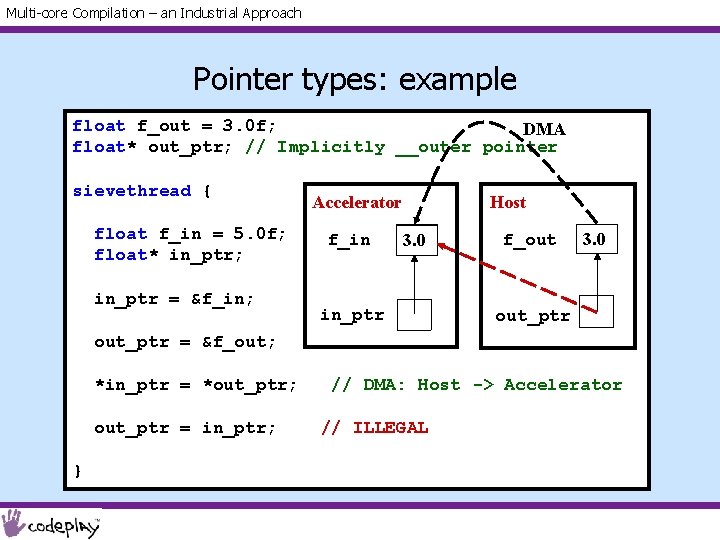

Pointer types: example

    float f_out = 3.0f;
    float* out_ptr;          // Implicitly __outer pointer
    sievethread {
      float f_in = 5.0f;
      float* in_ptr;
      in_ptr = &f_in;
      out_ptr = &f_out;
      *in_ptr = *out_ptr;    // DMA: Host -> Accelerator
      out_ptr = in_ptr;      // ILLEGAL
    }

[Figure: f_in lives in accelerator memory and f_out (3.0) in host memory; the DMA overwrites f_in's 5.0 with 3.0.]
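Plain C++ has no __outer qualifier, but its type system can mimic the two rules above. The hypothetical Outer<T> wrapper below is a distinct type, so assignments between it and an ordinary pointer fail to compile. Every access is an explicit copy standing in for a DMA transfer. This emulation is for illustration only and is not how the Sieve compiler represents outer pointers:

```cpp
#include <cassert>
#include <cstring>
#include <type_traits>

static int dma_transfers = 0;  // counts simulated host <-> accelerator copies

// Hypothetical emulation of an __outer pointer to host memory.
template <typename T>
class Outer {
public:
    explicit Outer(T* host_address) : addr_(host_address) {}
    T load() const {                 // like reading *out_ptr: copy host -> local
        T local;
        std::memcpy(&local, addr_, sizeof(T));
        ++dma_transfers;
        return local;
    }
    void store(const T& value) {     // like writing *out_ptr: copy local -> host
        std::memcpy(addr_, &value, sizeof(T));
        ++dma_transfers;
    }
private:
    T* addr_;
};

// Neither direction converts implicitly, mirroring the rule that assigning
// between local and outer pointers is illegal.
static_assert(!std::is_convertible<Outer<float>, float*>::value, "outer -> local rejected");
static_assert(!std::is_convertible<float*, Outer<float>>::value, "local -> outer rejected");

float slide_example() {
    float f_out = 3.0f;               // host data
    Outer<float> out_ptr(&f_out);     // stands in for the implicitly __outer pointer
    float f_in = 5.0f;                // accelerator-local data
    float* in_ptr = &f_in;
    *in_ptr = out_ptr.load();         // "DMA: Host -> Accelerator"
    // out_ptr = in_ptr;              // would not compile, like the ILLEGAL line
    return f_in;                      // now 3.0f
}
```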

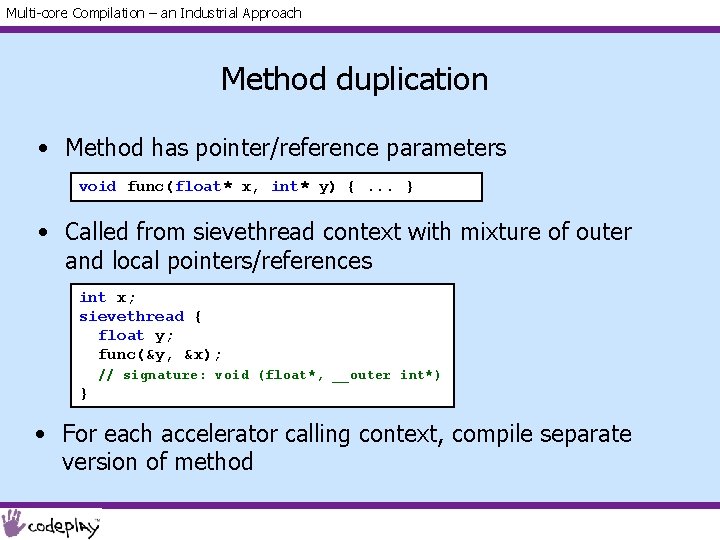

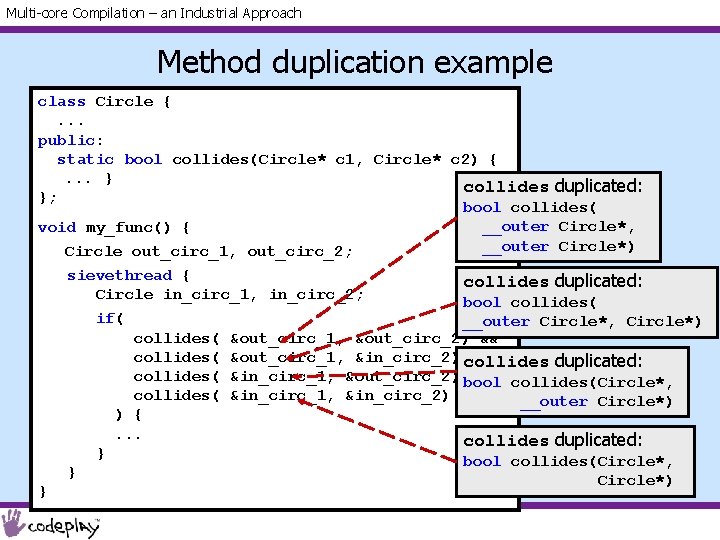

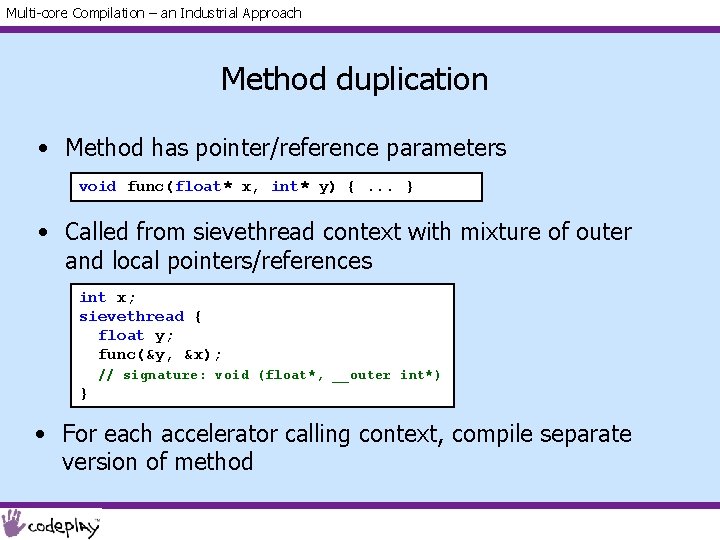

Method duplication
• Method has pointer/reference parameters:

    void func(float* x, int* y) { ... }

• Called from a sievethread context with a mixture of outer and local pointers/references:

    int x;
    sievethread {
      float y;
      func(&y, &x);   // signature: void (float*, __outer int*)
    }

• For each accelerator calling context, compile a separate version of the method

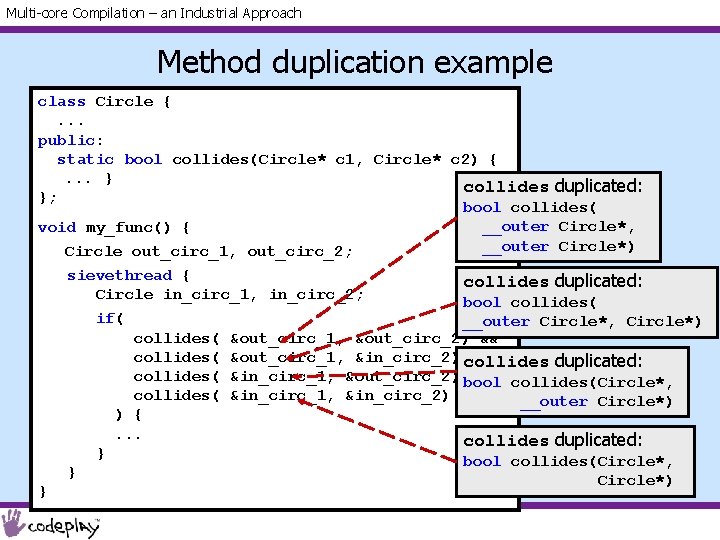

Method duplication example

    class Circle {
      ...
    public:
      static bool collides(Circle* c1, Circle* c2) { ... }
    };

    void my_func() {
      Circle out_circ_1, out_circ_2;
      sievethread {
        Circle in_circ_1, in_circ_2;
        if( Circle::collides(&out_circ_1, &out_circ_2)    // duplicated: bool collides(__outer Circle*, __outer Circle*)
         && Circle::collides(&out_circ_1, &in_circ_2)     // duplicated: bool collides(__outer Circle*, Circle*)
         && Circle::collides(&in_circ_1, &out_circ_2)     // duplicated: bool collides(Circle*, __outer Circle*)
         && Circle::collides(&in_circ_1, &in_circ_2) ) {  // duplicated: bool collides(Circle*, Circle*)
          ...
        }
      }
    }
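C++ templates give a rough analogy for method duplication: one source definition yields one compiled version per combination of argument types. In this sketch, a hypothetical Outer<T> marker stands in for an __outer pointer. Calling collides with the four combinations from the slide produces four template instantiations, and the hypothetical signature helper reports which one a call selects:

```cpp
#include <cassert>
#include <string>

// Hypothetical marker for a pointer into host memory (stands in for __outer T*).
template <typename T>
struct Outer { T* addr; };

template <typename T> const char* space(T*)       { return "local"; }
template <typename T> const char* space(Outer<T>) { return "__outer"; }
template <typename T> T& deref(T* p)       { return *p; }       // local access
template <typename T> T& deref(Outer<T> p) { return *p.addr; }  // a DMA on the Cell

struct Circle { float x, y, r; };

// One definition; each distinct (P1, P2) combination is compiled separately.
template <typename P1, typename P2>
bool collides(P1 c1, P2 c2) {
    const Circle& a = deref(c1);
    const Circle& b = deref(c2);
    const float dx = a.x - b.x, dy = a.y - b.y, rr = a.r + b.r;
    return dx * dx + dy * dy <= rr * rr;
}

template <typename P1, typename P2>
std::string signature(P1 c1, P2 c2) {
    return std::string("collides(") + space(c1) + ", " + space(c2) + ")";
}

void demo_duplication() {
    Circle out_circ_1{0, 0, 1}, out_circ_2{1, 0, 1};  // "host" circles
    Circle in_circ_1{0, 1, 1}, in_circ_2{1, 1, 1};    // "accelerator" circles
    Outer<Circle> o1{&out_circ_1}, o2{&out_circ_2};
    assert(collides(o1, o2) && collides(o1, &in_circ_2) &&
           collides(&in_circ_1, o2) && collides(&in_circ_1, &in_circ_2));
    assert(signature(o1, &in_circ_2) == "collides(__outer, local)");
}
```

The analogy is loose. Sieve performs the duplication inside the compiler from an ordinary non-template method, whereas here the programmer has to write collides as a template.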

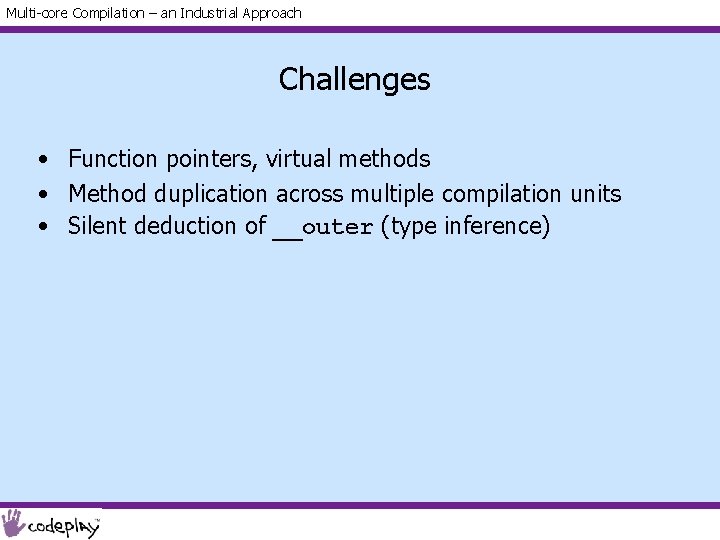

Challenges
• Function pointers, virtual methods
• Method duplication across multiple compilation units
• Silent deduction of __outer (type inference)

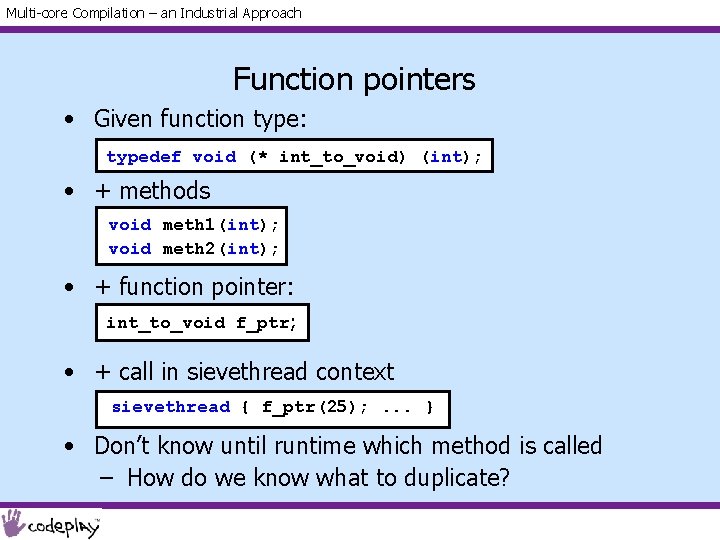

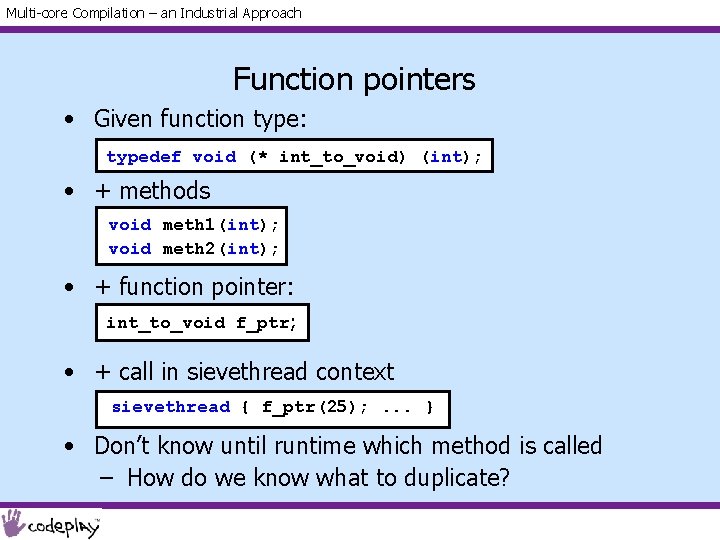

Function pointers
• Given function type: typedef void (*int_to_void)(int);
• + methods: void meth1(int); void meth2(int);
• + function pointer: int_to_void f_ptr;
• + call in sievethread context: sievethread { f_ptr(25); ... }
• We don't know until runtime which method is called
  – How do we know what to duplicate?

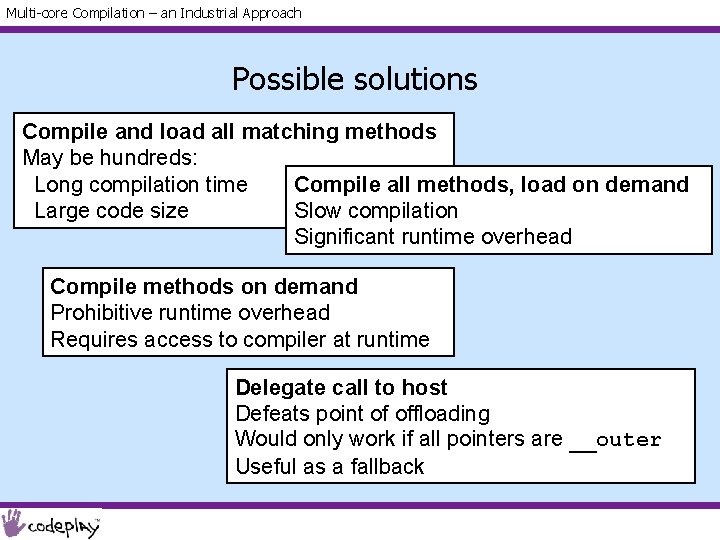

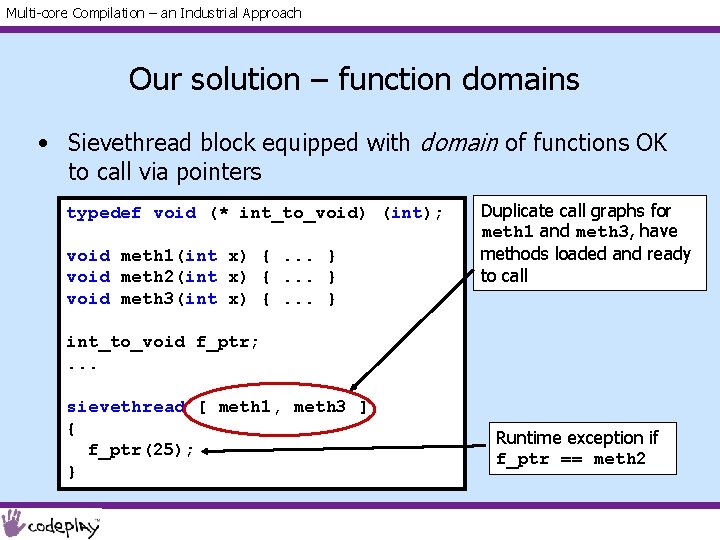

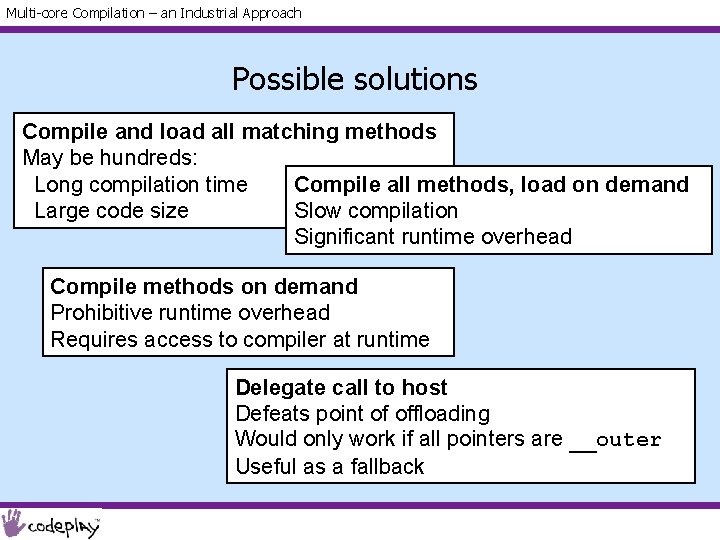

Possible solutions
• Compile and load all matching methods (there may be hundreds)
  – Long compilation time; large code size
• Compile all methods, load on demand
  – Slow compilation; significant runtime overhead
• Compile methods on demand
  – Prohibitive runtime overhead; requires access to a compiler at runtime
• Delegate the call to the host
  – Defeats the point of offloading; would only work if all pointers are __outer; useful as a fallback

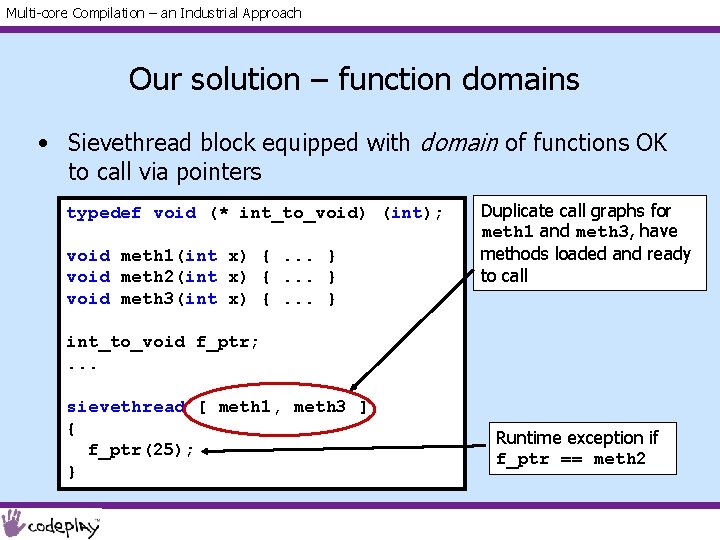

Our solution – function domains
• A sievethread block is equipped with a domain of functions that are OK to call via pointers

    typedef void (*int_to_void)(int);
    void meth1(int x) { ... }
    void meth2(int x) { ... }
    void meth3(int x) { ... }

    int_to_void f_ptr;
    ...
    // Duplicate call graphs for meth1 and meth3; have them loaded and ready to call
    sievethread [ meth1, meth3 ] {
      f_ptr(25);   // runtime exception if f_ptr == meth2
    }
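The runtime side of a function domain can be sketched in portable C++ as a check against a list of permitted function pointers. The FunctionDomain class and the meth bodies are hypothetical, and the sketch models only the check and the exception. On the Cell, the domain's main job is to tell the compiler which call graphs to duplicate and load onto the SPE:

```cpp
#include <cassert>
#include <initializer_list>
#include <stdexcept>
#include <vector>

typedef void (*int_to_void)(int);

int last_call = 0;   // records which method ran, for the demo
void meth1(int x) { last_call = 100 + x; }
void meth2(int x) { last_call = 200 + x; }
void meth3(int x) { last_call = 300 + x; }

// Hypothetical model of "sievethread [ meth1, meth3 ] { f_ptr(25); }": only
// functions listed in the domain may be called through the pointer.
class FunctionDomain {
public:
    FunctionDomain(std::initializer_list<int_to_void> allowed) : allowed_(allowed) {}
    void call(int_to_void f, int arg) const {
        for (int_to_void g : allowed_) {
            if (g == f) {
                f(arg);
                return;
            }
        }
        throw std::runtime_error("function pointer outside the sievethread domain");
    }
private:
    std::vector<int_to_void> allowed_;
};

void demo_domain() {
    FunctionDomain domain{meth1, meth3};
    domain.call(meth3, 25);
    assert(last_call == 325);
    bool threw = false;
    try {
        domain.call(meth2, 25);   // meth2 is not in the domain
    } catch (const std::runtime_error&) {
        threw = true;
    }
    assert(threw);
}
```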

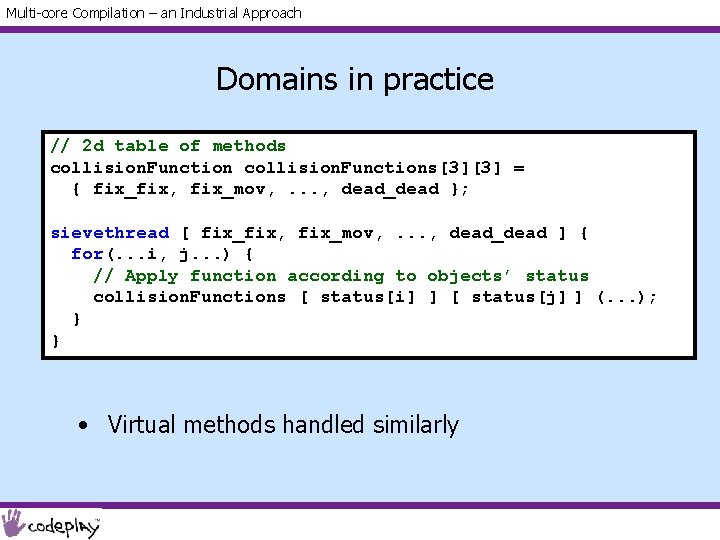

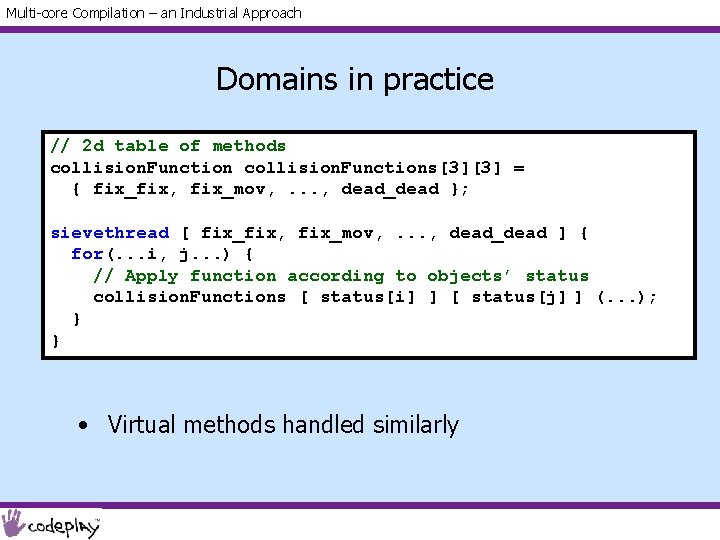

Domains in practice

    // 2D table of methods
    collisionFunctions[3][3] = { fix_fix, fix_mov, ..., dead_dead };
    sievethread [ fix_fix, fix_mov, ..., dead_dead ] {
      for(... i, j ...) {
        // Apply function according to objects' status
        collisionFunctions[ status[i] ][ status[j] ](...);
      }
    }

• Virtual methods are handled similarly

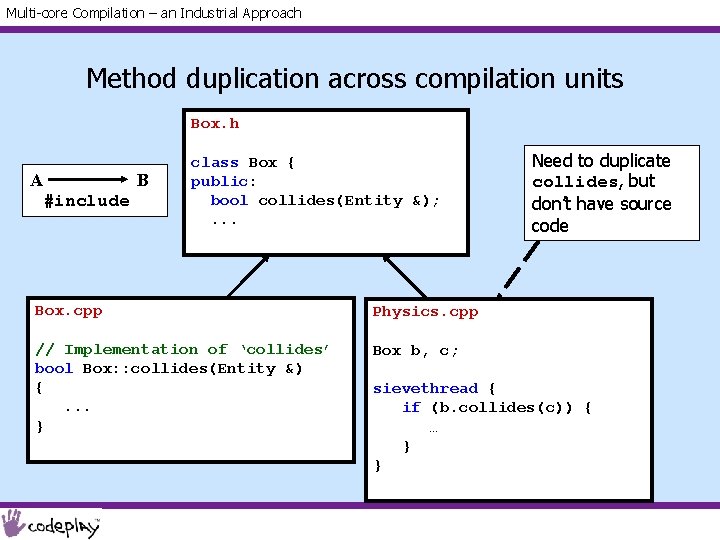

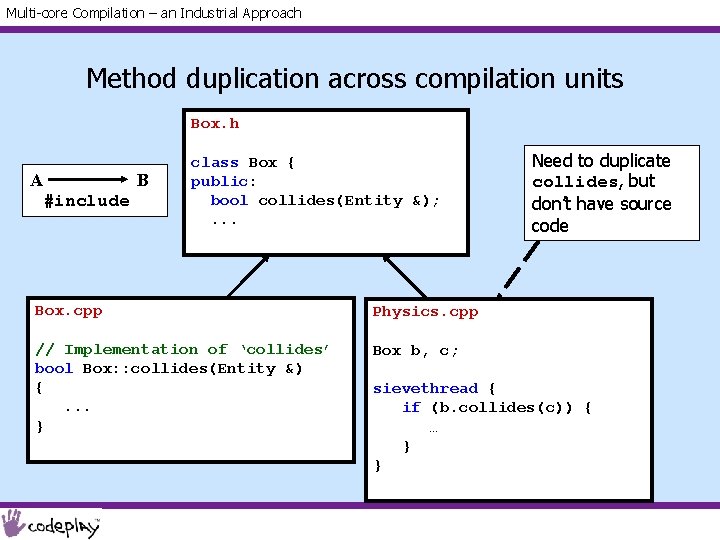

Method duplication across compilation units
Box.h (#included by both Box.cpp and Physics.cpp):

    class Box {
    public:
      bool collides(Entity &);
      ...
    };

Box.cpp:

    // Implementation of 'collides'
    bool Box::collides(Entity &) { ... }

Physics.cpp:

    Box b, c;
    sievethread {
      if (b.collides(c)) { ... }   // need to duplicate collides, but don't have its source code
    }

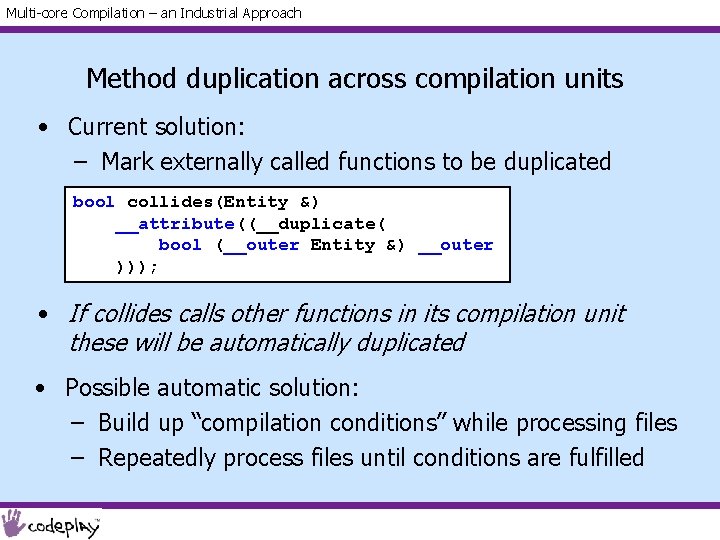

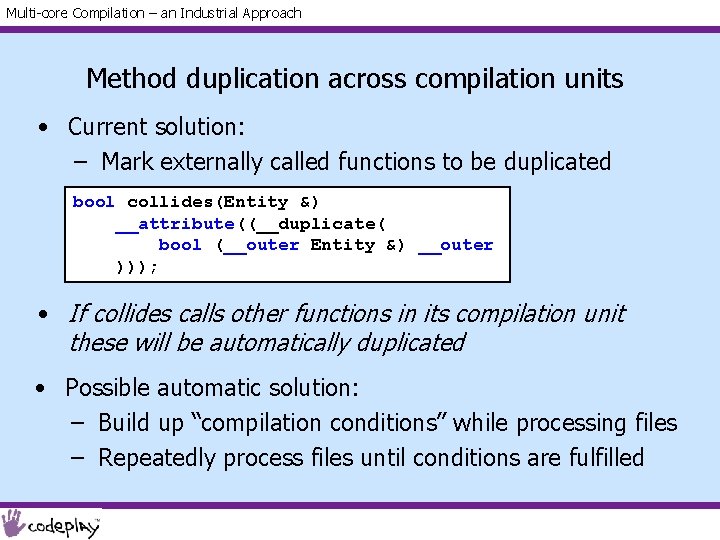

Method duplication across compilation units
• Current solution:
  – Mark externally called functions to be duplicated:

    bool collides(Entity &) __attribute((__duplicate( bool (__outer Entity &) __outer )));

• If collides calls other functions in its compilation unit, these will be automatically duplicated
• Possible automatic solution:
  – Build up "compilation conditions" while processing files
  – Repeatedly process files until the conditions are fulfilled
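The "possible automatic solution" can be pictured as a fixed-point computation. This is our own illustration of the idea, not Codeplay's implementation. In the hypothetical model below, each compilation unit lists the functions it defines and their callees. A pass over all units duplicates every requested function that a unit defines and then requests that function's callees. Passes repeat until nothing changes:

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical model of a compilation unit: each function it defines, mapped to
// the functions that function calls (possibly defined in other units).
struct CompilationUnit {
    std::string name;
    std::map<std::string, std::vector<std::string>> calls;
};

// `requested` plays the role of the accumulated "compilation conditions".
std::set<std::string> duplicate_until_fixpoint(const std::vector<CompilationUnit>& units,
                                               std::set<std::string> requested,
                                               int& passes) {
    std::set<std::string> duplicated;
    passes = 0;
    bool changed = true;
    while (changed) {
        changed = false;
        ++passes;
        for (const CompilationUnit& unit : units) {
            for (const auto& entry : unit.calls) {
                const std::string& fn = entry.first;
                if (requested.count(fn) && !duplicated.count(fn)) {
                    duplicated.insert(fn);  // compile an accelerator version of fn
                    requested.insert(entry.second.begin(), entry.second.end());
                    changed = true;
                }
            }
        }
    }
    return duplicated;
}

void demo_fixpoint() {
    std::vector<CompilationUnit> units = {
        {"Shape.cpp",   {{"Shape::area", {}}}},
        {"Physics.cpp", {{"simulate", {"Box::collides"}}}},
        {"Box.cpp",     {{"Box::collides", {"overlap", "Shape::area"}}, {"overlap", {}}}},
    };
    int passes = 0;
    std::set<std::string> dup = duplicate_until_fixpoint(units, {"simulate"}, passes);
    assert(dup.size() == 4 && dup.count("Shape::area") == 1);
    assert(passes == 3);  // Shape.cpp must be revisited after Box.cpp requests Shape::area
}
```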

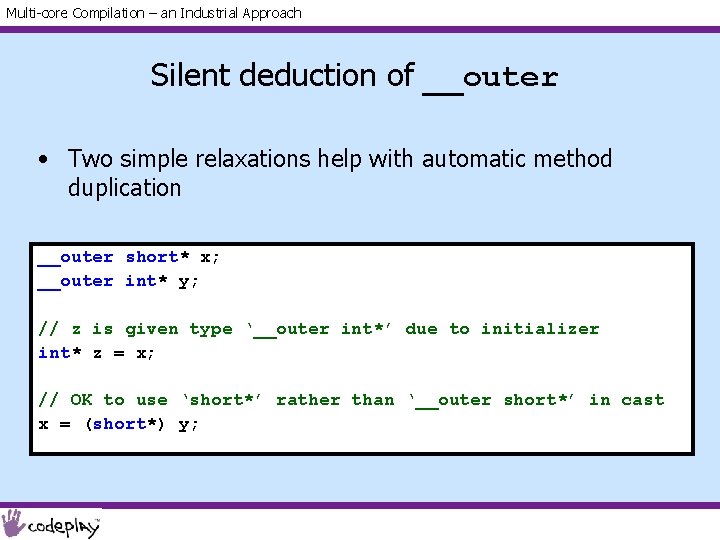

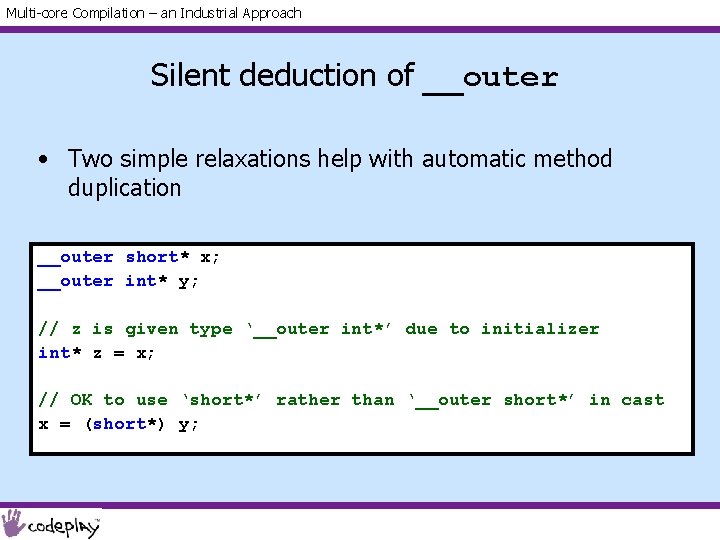

Silent deduction of __outer
• Two simple relaxations help with automatic method duplication:

    __outer short* x;
    __outer int* y;
    // z is given type '__outer int*' due to initializer
    int* z = y;
    // OK to use 'short*' rather than '__outer short*' in cast
    x = (short*) y;

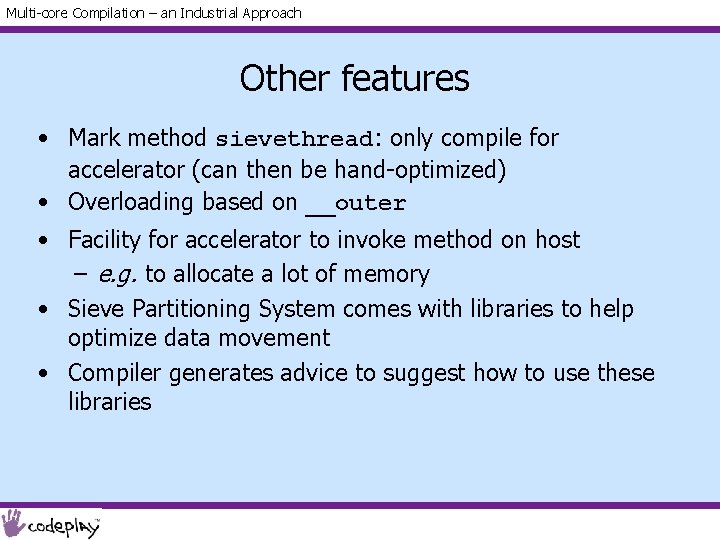

Other features
• Mark a method sievethread: it is then only compiled for the accelerator (and can be hand-optimized)
• Overloading based on __outer
• Facility for the accelerator to invoke a method on the host
  – e.g. to allocate a lot of memory
• The Sieve Partitioning System comes with libraries to help optimize data movement
• The compiler generates advice suggesting how to use these libraries

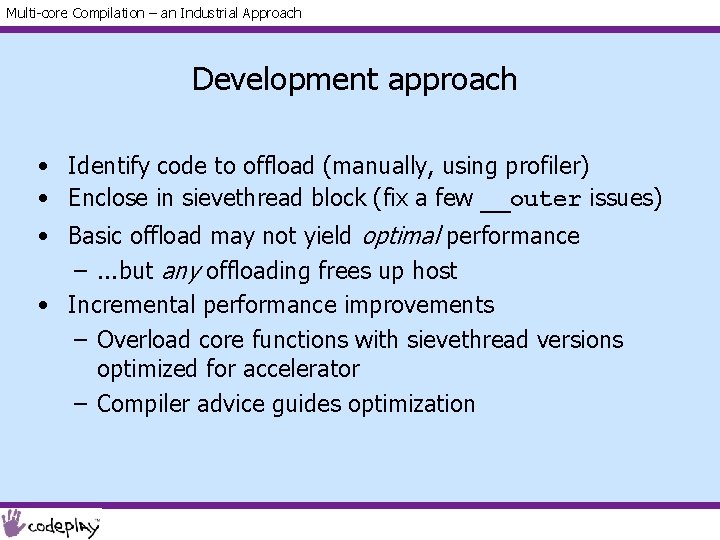

Development approach
• Identify code to offload (manually, using a profiler)
• Enclose it in a sievethread block (and fix a few __outer issues)
• Basic offload may not yield optimal performance
  – ... but any offloading frees up the host
• Incremental performance improvements
  – Overload core functions with sievethread versions optimized for the accelerator
  – Compiler advice guides optimization

Performance
• Results on PS3 (image processing, raytracing, fractals):
  – Linear scaling
  – With 6 SPEs, speedup between 3x and 14x over the host, after some optimization
• Possible to hand-optimize as much as desired
• Tradeoff: hand-optimization increases performance at the expense of portability

OpenCL
• Language and API from the Khronos Group for programming heterogeneous multi-core systems
  – Codeplay is a contributing member
• Motivation: unify bespoke languages for programming CPUs, GPUs and Cell BE-like systems
• Host code: C/C++ with API calls to launch kernels that run on devices
• Kernels written in OpenCL C
  – C99 with some restrictions and some extensions
• OpenCL is portable, but too low-level for large applications

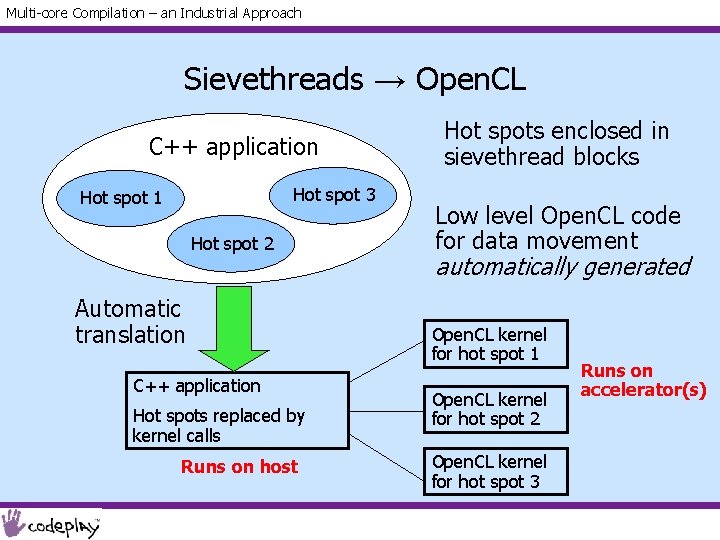

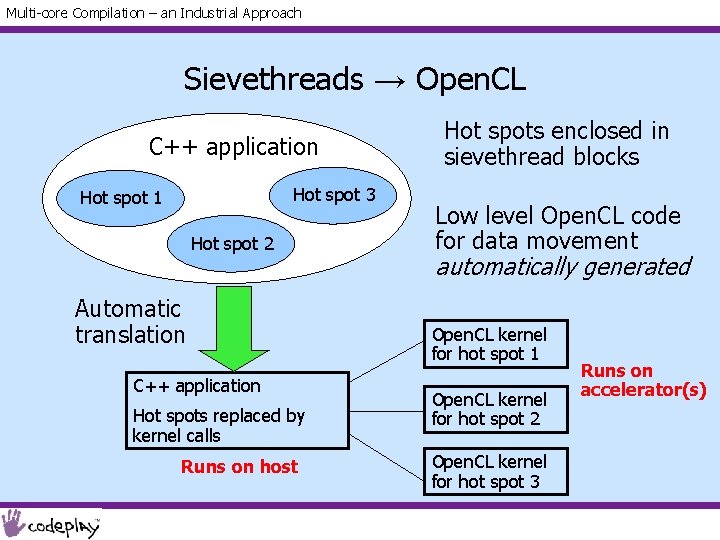

Sievethreads → OpenCL
[Figure: a C++ application whose hot spots 1, 2 and 3 are enclosed in sievethread blocks is automatically translated into (a) a C++ application that runs on the host, with the hot spots replaced by kernel calls and the low-level OpenCL data-movement code generated automatically, and (b) one OpenCL kernel per hot spot, running on the accelerator(s).]

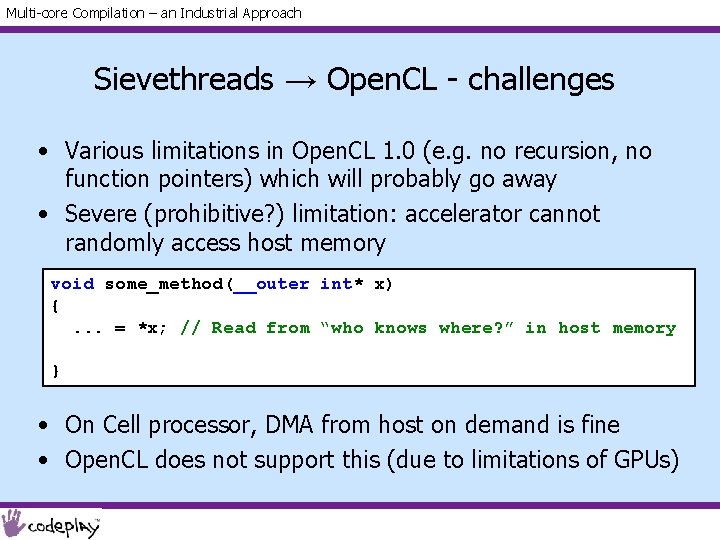

Sievethreads → OpenCL: challenges
• Various limitations in OpenCL 1.0 (e.g. no recursion, no function pointers), which will probably go away
• Severe (prohibitive?) limitation: the accelerator cannot randomly access host memory

    void some_method(__outer int* x) {
      ... = *x;   // Read from "who knows where?" in host memory
    }

• On the Cell processor, DMA from the host on demand is fine
• OpenCL does not support this (due to limitations of GPUs)

Related work
• Hera-JVM (University of Glasgow): Java virtual machine on Cell SPEs
• CUDA (NVIDIA), Brook+ (AMD): somewhat subsumed by OpenCL
• Cilk++ (Cilk Arts): shared memory only
• OpenMP (IBM have an implementation for Cell)
• PS-Algol (Atkinson, Chisholm, Cockshott): pointers to memory vs. pointers to disk is analogous to local vs. outer pointers

Summary
• Sievethreads: a practical way to get C++ code running on heterogeneous systems
• Can co-exist with other threading methods
• Core technology: method duplication
• Main area for future work: data movement
  – Data movement optimizations
  – Declarative language for specifying data movement patterns

Thank you!
After the break, come back and use the Sieve Partitioning System!
Codeplay are interested in academic collaborations, e.g. a student project applying sievethreads to a large open-source application.

Alastair mcfarlane barrister

Alastair mcfarlane barrister Alastair monk

Alastair monk Donaldson toolbox

Donaldson toolbox Suzanne donaldson

Suzanne donaldson How the drums talk by bryan donaldson

How the drums talk by bryan donaldson Bergie web

Bergie web Conor donaldson

Conor donaldson Donaldson

Donaldson Dr donaldson urology

Dr donaldson urology Collin donaldson

Collin donaldson Collin donaldson

Collin donaldson Anita donaldson

Anita donaldson Mercurys composition

Mercurys composition Jenna donaldson

Jenna donaldson Collin donaldson

Collin donaldson Asymmetric multicore processing

Asymmetric multicore processing Multicore packet scheduler

Multicore packet scheduler Cache craftiness for fast multicore key-value storage

Cache craftiness for fast multicore key-value storage Autosar multicore

Autosar multicore Multiprocessor and multicore

Multiprocessor and multicore Pcie-1429

Pcie-1429 Multiprocessor programming

Multiprocessor programming Obs multicore

Obs multicore Speedy transactions in multicore in-memory databases

Speedy transactions in multicore in-memory databases Amdahl's law in the multicore era

Amdahl's law in the multicore era Compilation

Compilation Bus crash compilation

Bus crash compilation What is pure and impure interpreter

What is pure and impure interpreter Reverse compilation techniques

Reverse compilation techniques Mushaf e usmani

Mushaf e usmani Previous ipdb not found, fall back to full compilation.

Previous ipdb not found, fall back to full compilation. Robert van engelen

Robert van engelen Contoh compilation

Contoh compilation Compilation of work in work immersion

Compilation of work in work immersion Phases of reverse engineering

Phases of reverse engineering Front end back end compiler

Front end back end compiler What is data compilation

What is data compilation Sangrihitri during the vedic age was

Sangrihitri during the vedic age was Jav complition

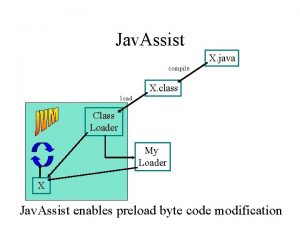

Jav complition Introduction to industrial management

Introduction to industrial management Industrial origin approach

Industrial origin approach Approaches to industrial relations

Approaches to industrial relations Process of research definition

Process of research definition