Michigan Assessment Consortium Common Assessment Development Series Module

- Slides: 37

Michigan Assessment Consortium Common Assessment Development Series Module 19 Establishing Validity 1

Narrated By: Bruce Fay, Wayne RESA 2

In this module § The concept of validity § Why validity is important § How to establish evidence for valid use of your test results 3

The Concept of Validity § Historical view: The degree to which a test measures what it is intended to measure, a property of a test. § Current view: Evidence for the meaningful, appropriate, defensible use of results. 4

Validity & Proposed Use § “Validity refers to the degree to which evidence and theory support the interpretations of test scores entailed by proposed uses of the tests.” (AERA, APA, & NCME, 1999, p. 9) 5

Validity as Evaluation § “Validity is an integrated evaluative judgment of the degree to which empirical evidence and theoretical rationales support the adequacy and appropriateness of inferences and actions based on test scores or other modes of assessment.” (Messick, 1989, p. 13) 6

Meaning in Context § Validity is contextual § Validity is about the justifiable use of test results to make decisions § Validity may range from weak to strong 7

Prerequisites to Validity – Clear Purpose § The intended purpose for which a test is developed § How the results are to be used 8

Prerequisites to Validity – Reliability § Consistency / repeatability § The test actually measures something § A property of the test, statistical in nature § All measurements have “error” § Test scores are an estimate of what a student knows and is able to do. 9

Prerequisites to Validity – Fairness / Lack of Bias § Freedom from bias § Anything that would cause differential performance based on factors other than knowledge/ability in the subject matter § Bias is independent of reliability § Reliability and fairness do NOT guarantee freedom from error or validity of use 10

Prerequisite to Validity – Appropriateness § The right instrument for the right job § Measure as directly as possible 11

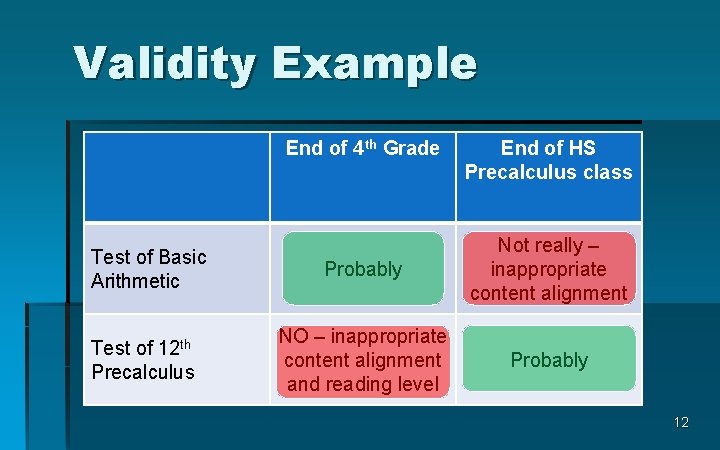

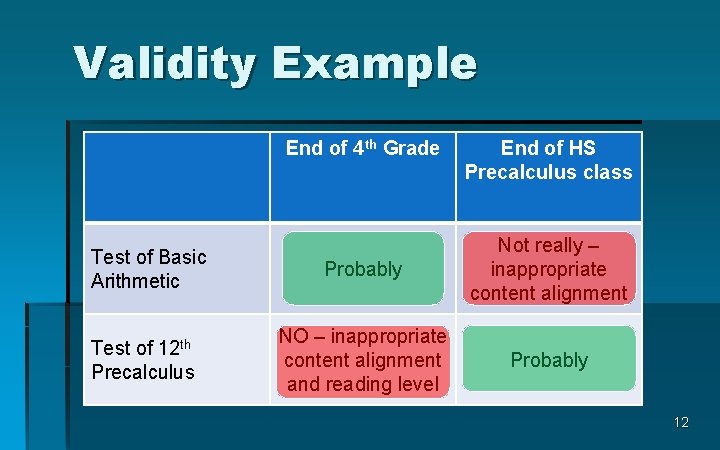

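Validity Example § Test of basic arithmetic, used at the end of 4th grade: Probably § Test of basic arithmetic, used at the end of a HS precalculus class: Not really – inappropriate content alignment § Test of 12th-grade precalculus, used at the end of 4th grade: NO – inappropriate content alignment and reading level § Test of 12th-grade precalculus, used at the end of a HS precalculus class: Probably 12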

Purpose is the Key Clarity is Essential § Why you are developing the assessment § How you intend to use the results § What evidence you need to establish the validity of this use 13

Possible Purposes / Uses § Comparability § To provide data that is comparable across students, classrooms, schools, or … § Determine student achievement of a body of knowledge or skill set 14

Possible Purposes / Uses § Predict future performance on some other assessment § Corroborate (triangulate) other sources of information about student performance § Replace an existing assessment that is too expensive and/or time-consuming 15

Possible Purposes / Uses § Consequential decisions § Identify students for special programs, instructional placements, or other learning opportunities. 16

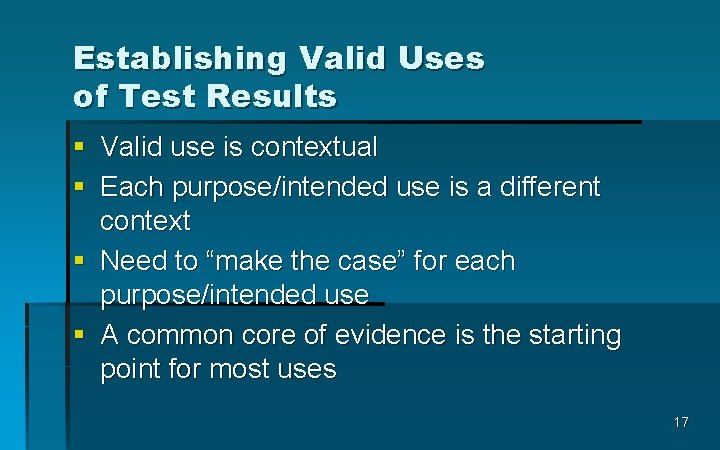

Establishing Valid Uses of Test Results § Valid use is contextual § Each purpose/intended use is a different context § Need to “make the case” for each purpose/intended use § A common core of evidence is the starting point for most uses 17

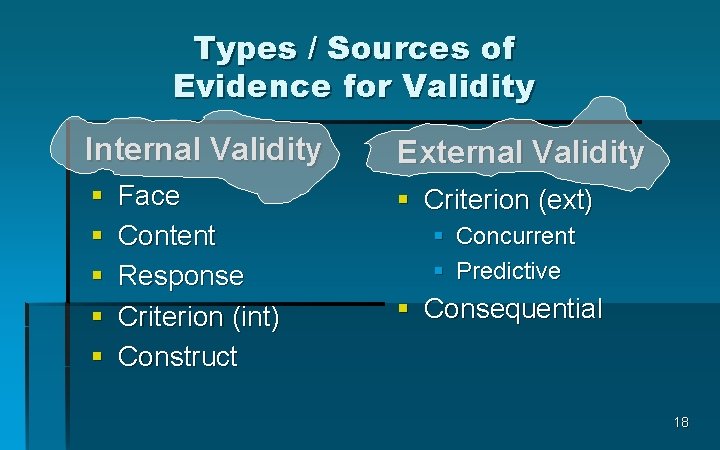

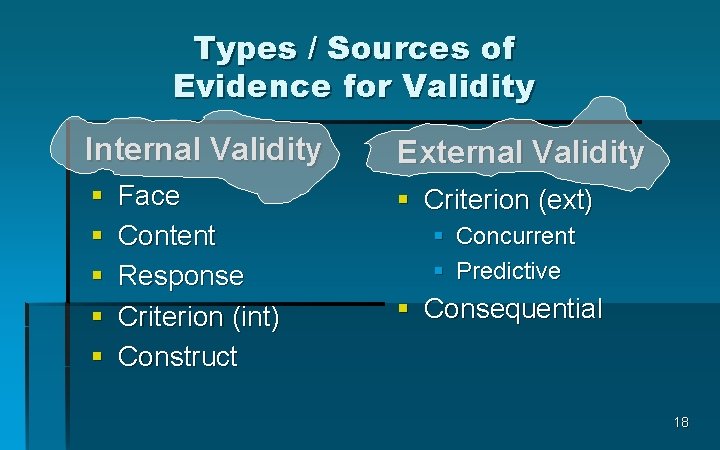

Types / Sources of Evidence for Validity § Internal Validity § Face § Content § Response § Criterion (int) § Construct § External Validity § Criterion (ext) § Concurrent § Predictive § Consequential 18

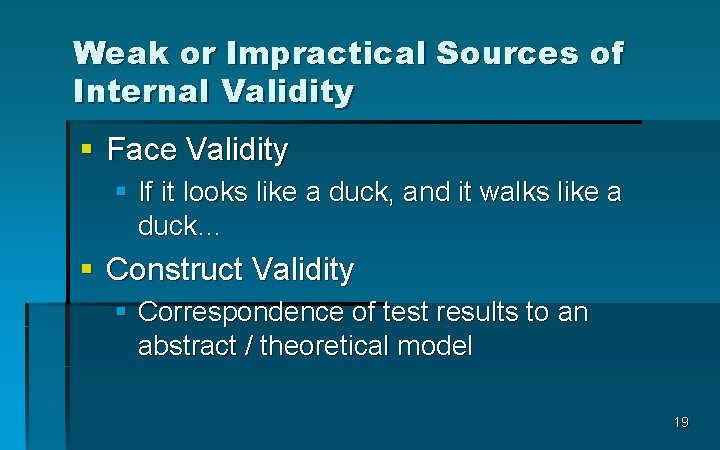

Weak or Impractical Sources of Internal Validity § Face Validity § If it looks like a duck, and it walks like a duck… § Construct Validity § Correspondence of test results to an abstract / theoretical model 19

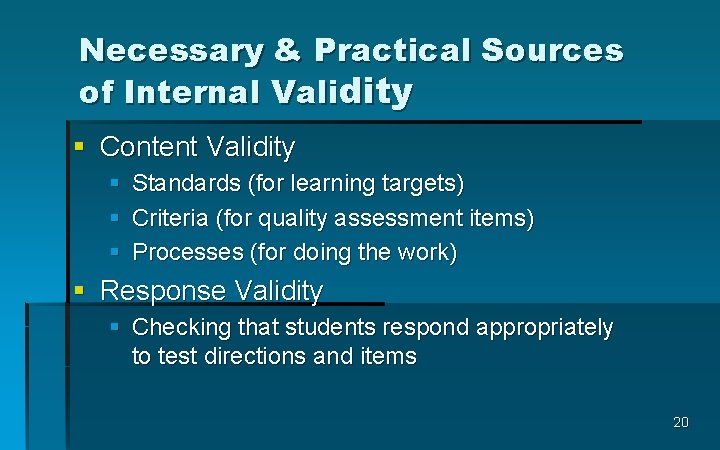

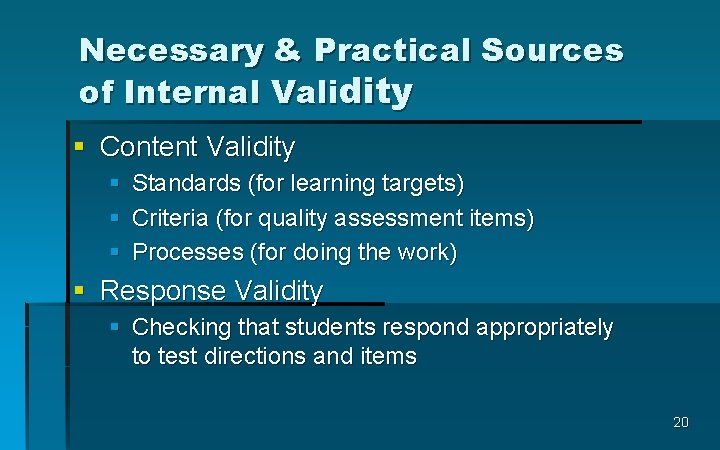

Necessary & Practical Sources of Internal Validity § Content Validity § Standards (for learning targets) § Criteria (for quality assessment items) § Processes (for doing the work) § Response Validity § Checking that students respond appropriately to test directions and items 20

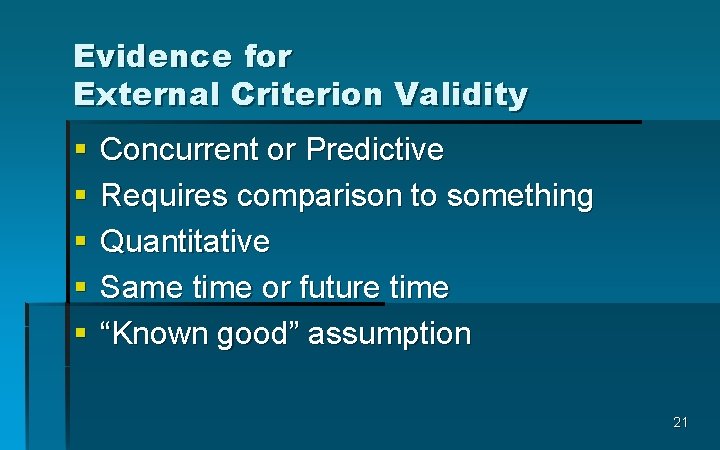

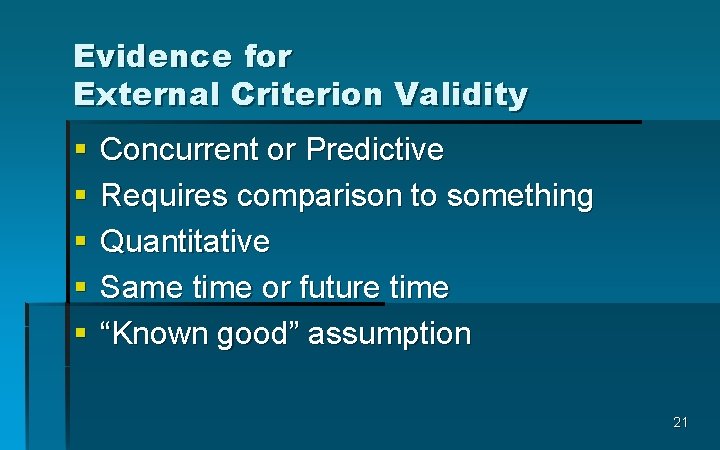

Evidence for External Criterion Validity § Concurrent or Predictive § Requires comparison to something § Quantitative § Same time or future time § “Known good” assumption 21

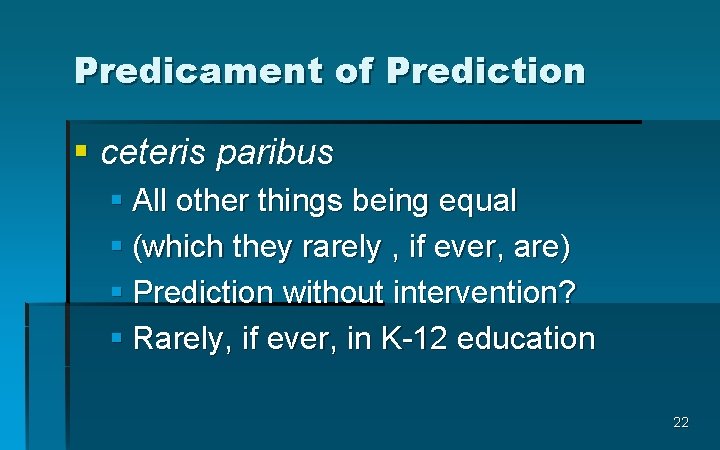

Predicament of Prediction § ceteris paribus § All other things being equal § (which they rarely, if ever, are) § Prediction without intervention? § Rarely, if ever, in K-12 education 22

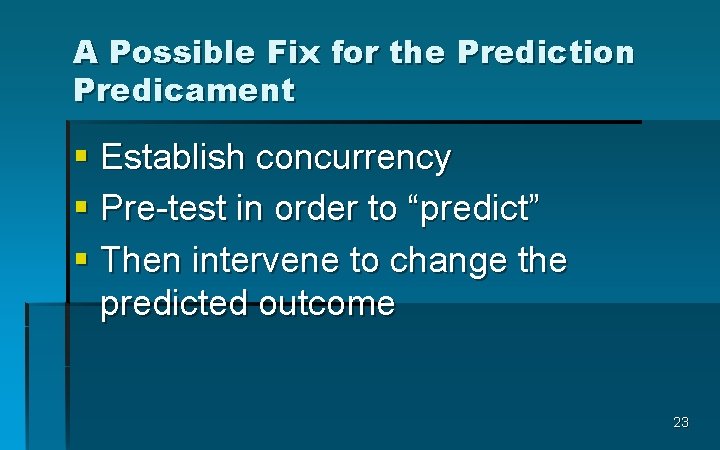

A Possible Fix for the Prediction Predicament § Establish concurrency § Pre-test in order to “predict” § Then intervene to change the predicted outcome 23

Correlation – A technical sidebar § Two data points for each student § “Pearson r” § Linear relationship § Range: -1 to +1 § Extremes (-1, +1) are strong/perfect § Values near 0, no linear relationship 24
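As a minimal sketch of the sidebar above, the following Python computes Pearson r from paired scores, one pair per student. The function name `pearson_r` and the score lists are hypothetical illustrations, not data from this module.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient for paired scores (one pair per student)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Deviations of each score from its own mean
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    sxy = sum(a * b for a, b in zip(dx, dy))
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one set of scores has no variation")
    return sxy / sqrt(sxx * syy)

# Hypothetical scores for five students on two assessments
common_assessment = [12, 15, 9, 18, 14]
other_assessment = [55, 63, 48, 70, 60]

r = pearson_r(common_assessment, other_assessment)
print(round(r, 3))  # a value between -1 and +1
```

A value close to +1 or -1 suggests a strong linear relationship between the two sets of scores; a value near 0 suggests no linear relationship.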

A Couple of Correlation Cautions § Non-linear (curved) relationships exist (and are actually quite common) § “Correlation does not imply causation” 25
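The first caution can be illustrated with a small, made-up example: the values below follow a perfect curved relationship (y = x squared), yet Pearson r comes out at zero because r only captures linear association. The helper `linear_r` and the data are hypothetical.

```python
from math import sqrt

def linear_r(xs, ys):
    """Pearson r, which measures only the linear part of a relationship."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

# Hypothetical data: y is completely determined by x, but along a curve
xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x * x for x in xs]

curved_r = linear_r(xs, ys)
print(curved_r)  # zero: no linear relationship despite perfect dependence
```

Plotting the paired scores before relying on r is a simple way to spot this kind of pattern.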

Evidence for External Consequential Validity § “Correctness” of decisions based on test results in terms of consequences to the student § Established over multiple cases and time 26

Internal 1st, then External § Focus first on Internal Validity § (content, response, criterion) § Define, use, document your processes § Focus next on External Validity § (concurrent, predictive, consequential) § Actual test scores from actual students § Appropriate analysis and adjustments 27

Evidence for Content Validity § Appropriate standards § Two-way alignment § Appropriate item criteria § Appropriate test design criteria § Test blueprints and item documents 28
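One way to check a test blueprint for two-way alignment is sketched below. It assumes that "two-way" means every targeted standard is covered by at least one item and every item maps to at least one targeted standard; the slide does not spell this out, so treat that reading, the function name `alignment_gaps`, and the standard and item labels as hypothetical.

```python
def alignment_gaps(target_standards, item_to_standards):
    """Return (standards with no items, items aligned to no targeted standard)."""
    targets = set(target_standards)
    covered = set()
    for standards in item_to_standards.values():
        covered.update(standards)
    # Direction 1: targeted standards that no item addresses
    uncovered_standards = sorted(targets - covered)
    # Direction 2: items that address none of the targeted standards
    unaligned_items = sorted(
        item for item, standards in item_to_standards.items()
        if not (set(standards) & targets)
    )
    return uncovered_standards, unaligned_items

# Hypothetical blueprint: standard labels and item names are placeholders
targets = ["S1", "S2", "S3"]
blueprint = {
    "item1": ["S1"],
    "item2": ["S1", "S2"],
    "item3": ["S9"],   # aligned to a standard outside the target set
    "item4": [],       # not aligned to anything
}

missing, stray = alignment_gaps(targets, blueprint)
print(missing)  # standards still needing items
print(stray)    # items to revise, re-align, or drop
```

Empty results in both directions would be one piece of documented evidence for content validity, alongside the item and test design criteria.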

More Evidence for Content Validity § Used a defined/documented process § Developed by people with content/assessment expertise § Review for bias and other criteria § Create rubrics, scoring guides, or answer keys as needed 29

Teacher-scored Items § Evidence of consistent scoring § Trained scorers § (establish inter-rater reliability if possible) § Multiple scorer process (high stakes) 30
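A minimal sketch of how scoring consistency between two trained scorers might be summarized. The slide does not name a statistic, so percent exact agreement and Cohen's kappa are used here as common choices, not as the module's prescribed method; the rubric scores are made up.

```python
from collections import Counter

def percent_agreement(scores_a, scores_b):
    """Share of responses on which both scorers gave the same score."""
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two equal-length, non-empty score lists")
    matches = sum(a == b for a, b in zip(scores_a, scores_b))
    return matches / len(scores_a)

def cohens_kappa(scores_a, scores_b):
    """Agreement between two scorers, corrected for chance agreement."""
    n = len(scores_a)
    observed = percent_agreement(scores_a, scores_b)
    counts_a = Counter(scores_a)
    counts_b = Counter(scores_b)
    # Chance agreement: probability both scorers pick the same score independently
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)

# Hypothetical rubric scores (1-4) from two scorers on the same eight responses
scorer_a = [3, 2, 4, 1, 3, 2, 4, 3]
scorer_b = [3, 2, 3, 1, 3, 2, 4, 4]

agreement = percent_agreement(scorer_a, scorer_b)
kappa = cohens_kappa(scorer_a, scorer_b)
print(agreement, round(kappa, 3))
```

Percent agreement is easy to explain to scorers, while kappa discounts the agreement that would be expected by chance alone.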

Evidence for Response Validity § Item/test performance § Reliable § Free from bias § Appropriate responses consistent with other available information 31

A Common Assessment Development Rubric § Follow a sound process for development, administration, scoring, reporting, etc. § Implement with fidelity at each step § Document as you go § Use the provided rubric to monitor & evaluate along the way 32

Ready, Set, Go! (?) § Take steps to ensure the test is administered properly § Monitor and document this § Note any anomalies that may affect interpretation, and record them for future use 33

Behind the Scenes § Ensure accurate scoring § Follow procedures § Check results § Use established reporting formats § Provide accurate results promptly 34

Making Meaning § Ensure that test results are reported: § Using previously developed formats § To the correct users § In a timely fashion § Follow up on whether the users can/do make meaningful use of the results § A simple “gut level” check 35

Series Developers § Kathy Dewsbury White, Ingham ISD § Bruce Fay, Wayne RESA § Jim Gullen, Oakland Schools § Julie McDaniel, Oakland Schools § Edward Roeber, MSU § Ellen Vorenkamp, Wayne RESA § Kim Young, Ionia County ISD/MDE 36

Development Support for the Assessment Series The MAC Common Assessment Development Series is funded in part by the Michigan Association of Intermediate School Administrators in cooperation with § Michigan Department of Education § Ingham and Ionia ISDs, Oakland Schools, and Wayne RESA § Michigan State University 37