Linguistic Structure in Identifying Segments in a Second Language

- Slides: 44

Linguistic Structure in Identifying Segments in a Second Language
Kenneth de Jong, Indiana University
Colloquium at the Department of Linguistics, The Ohio State University, May 6, 2005

Also with help & collaboration from Kyoko Nagao, Byung-jin Lim, Hanyong Park, Noah Silbert, Minoru Fukuda of Miyazaki Municipal University, Jin-young Tak of Sejong University, and Mi-hui Cho of Kyonggi University. Supported by NIH R03 DC04095 & NSF BC 9910701.

Second Language Phonetic and Phonological Research • Very popular topic … especially lately … relative to a lot of what we linguists do • Very large literature • Very unsatisfying - difficult to gain a coherent picture of what’s really known about the field

2 Reasons for the Nature of Literature 1) Segregated research threads - different questions, different data-treatment approaches – Classroom oriented research in such groups as TESOL – Generative-style formal analyses done by linguists – Cross-language perception studies by psychologists – Motor learning studies done by (almost) no one 2) It’s hard – Requires double the linguistic expertise, since we deal with two linguistic systems – Requires a set of typological comparisons that will support a model of how the two systems map onto one another – Requires sufficient detail in all of the above to make the models reasonable

So … why do it? • We have a hard time saying no for the 16th time • Most people are multi-lingual, esp. today • Theoretically useful – The level of rigor, specifically with respect to typological claims, is useful for the discipline – Rapid learning in the second language acquirer can show us how the linguistic cognitive system works

Topic today: Segmental Identification • Predominates in the phonological and psychological literature • Relatively simple (given what we know) • Largely abstracted from lexical access and syntactic parsing issues (until recently) • Essentially alphabetic

Previous, very commonly cited models • SLM - Jim Flege: – Model of production (originally) – Production problems depend on perceptual meta-classification of segments, where segments = allophones (more or less) – New vs. Similar = whether a segment in L2 has a corresponding segment in L1 – Beats me how we know what counts as similar, but I’m sure the IPA has something to do with it – In early learning, similar phones are stable and functional, while new phones are unstable and dysfunctional – Learning of new phones progresses rapidly, while similar phones merge with L1 phones to form a stable and not-quite-accurate category

Previous, very commonly cited models • PAM - Cathy Best: – Model of perceptual discrimination – Discrimination abilities depend on perceptual meta-classification of segments, where segments = gestural complexes (more or less) – The degree to which two contrasting sounds fit into different categories, given L1 experience, determines the degree to which they are discriminable by an L1 perceiver – Not a model of second language learning, but of cross-language perception; technically subjects should be set free after the experiment, since the experiment breaks them by beginning the process of forming additional perceptual categories

Model Architecture: Segmental Categories are Unitary Things • Most of these experimentally oriented models treat segments as unitary free-standing object categories • At odds with the typical treatment in linguistic models, which generally assume that cross-segment properties are operative in determining how second language classification happens

Model Architecture: Segmental Categories are Unitary Things Questions to be pursued • Parsing question: Segments are embedded in larger units of all different kinds • Cross-segment question: Segments exist in a matrix with other segments • Within-segment question: Segments have lots of internal structure

Parsing Question • Analyses of Korean -> English database for other studies below • Park & de Jong (2005) shows that prosodic parsing heavily affects segmental identification • C’s in VC’s are neutralized, but C’s in VCV’s are not • The accuracy of Korean listeners’ voicing judgments for word-final obstruents depends on whether they count a VC release as an additional syllable

Model Architecture: Segmental Categories are Unitary Things Questions to be pursued • Parsing question: Segments are embedded in larger units of all different kinds • Cross-segment question: Segments exist in a matrix with other segments • Within-segment question: Segments have lots of internal structure

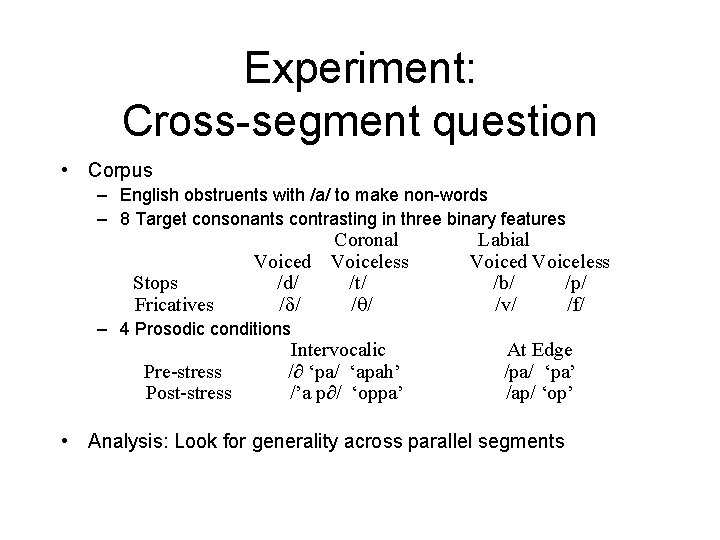

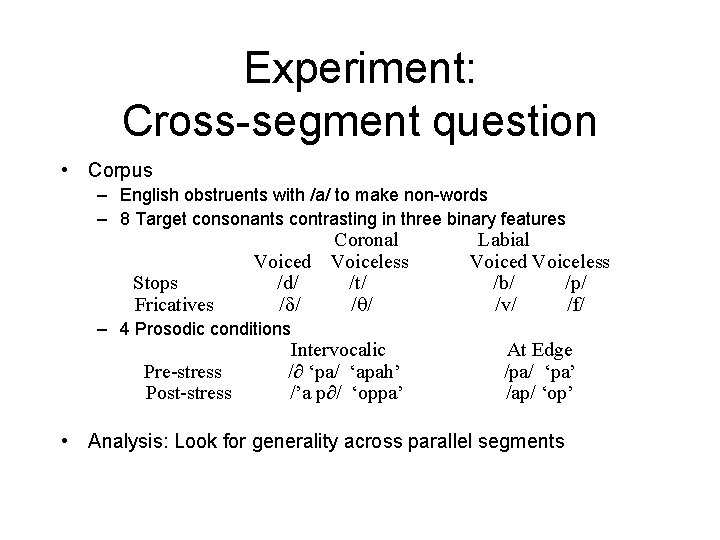

Experiment: Cross-segment question
• Corpus
– English obstruents with /a/ to make non-words
– 8 target consonants contrasting in three binary features

| | Stops: Voiced | Stops: Voiceless | Fricatives: Voiced | Fricatives: Voiceless |
| --- | --- | --- | --- | --- |
| Coronal | /d/ | /t/ | /ð/ | /θ/ |
| Labial | /b/ | /p/ | /v/ | /f/ |

– 4 prosodic conditions

| | Pre-stress | Post-stress |
| --- | --- | --- |
| Intervocalic | /əˈpa/ ‘apah’ | /ˈapə/ ‘oppa’ |
| At edge | /pa/ ‘pa’ | /ap/ ‘op’ |

• Analysis: Look for generality across parallel segments

Experiment: Cross-segment question • Stimuli – 4 Northern mid-western English speakers in late 20’s – Cued with orthographic fonts – One consonant per non-word item; the consonants included others besides the 8 targets – Produced in isolation • Listeners – 41 Korean undergrads at Kyonggi University in Seoul – Very little exposure to native English-speaking people • Procedure – Stimuli presented over headphones in a listening lab – Listeners asked to identify the consonants on a paper response sheet – Given 14 response options + one (rarely used) for ‘other: ____’

Analysis for Generalization 1: Cross-listener differences • Question: Is segmental accuracy with one segment tied to accuracy with parallel segments? • Here: contrasting non-sibilant fricatives are new for the Korean listeners. They need to be distinguished from stops, which are similar. (Cf. looking for copy machines in the kitchen.) • Specific sub-question: is accuracy in distinguishing /t/ from /θ/ linked to accuracy in distinguishing /p/ from /f/? • Regress accuracy for each listener in coronals against accuracy in labials
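
A rough sketch of the per-listener regression step, for readers who want to see the bookkeeping. This is not the analysis code from the study: the trial format (listener, place, correct), the helper names `accuracy_by_listener` and `pearson_r`, and the three toy listeners are all assumptions made up for illustration.

```python
from collections import defaultdict
from math import sqrt


def accuracy_by_listener(trials, place):
    """Proportion of correct stop/fricative judgments per listener for one place.

    `trials` is a hypothetical list of (listener_id, place, correct) tuples.
    """
    hits = defaultdict(int)
    total = defaultdict(int)
    for listener, trial_place, correct in trials:
        if trial_place == place:
            total[listener] += 1
            hits[listener] += int(correct)
    return {listener: hits[listener] / total[listener] for listener in total}


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Invented toy data: three listeners, two trials per place each.
trials = [
    ("s1", "coronal", True), ("s1", "coronal", True),
    ("s1", "labial", True), ("s1", "labial", False),
    ("s2", "coronal", True), ("s2", "coronal", False),
    ("s2", "labial", False), ("s2", "labial", False),
    ("s3", "coronal", True), ("s3", "coronal", True),
    ("s3", "labial", True), ("s3", "labial", True),
]

coronal = accuracy_by_listener(trials, "coronal")
labial = accuracy_by_listener(trials, "labial")
listeners = sorted(coronal)

# One point per listener: labial accuracy on x, coronal accuracy on y.
r = pearson_r([labial[s] for s in listeners], [coronal[s] for s in listeners])
print(f"cross-listener correlation: {r:.3f}")
```

A high correlation across listeners is what a shared stop/fricative skill would predict; independent per-segment categories give no particular reason to expect one.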

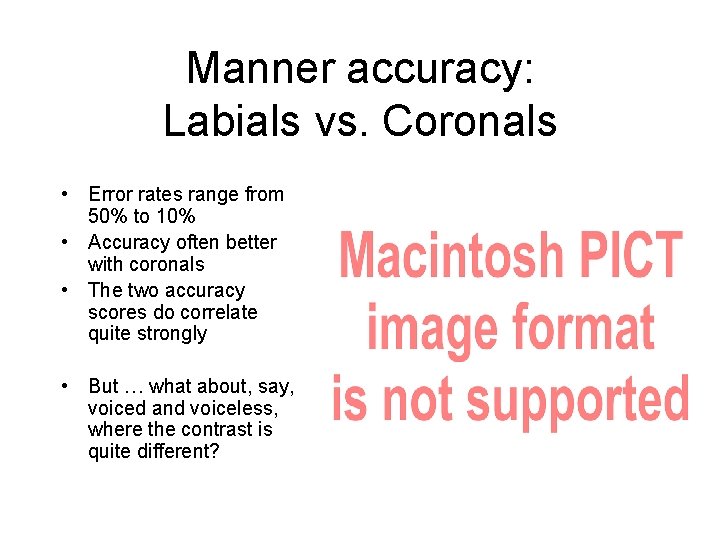

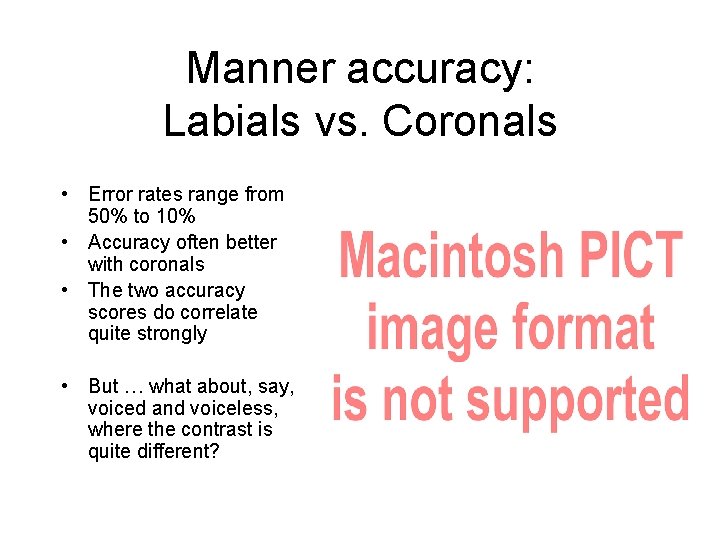

Manner accuracy: Labials vs. Coronals • Error rates range from 50% to 10% • Accuracy often better with coronals • The two accuracy scores do correlate quite strongly • But … what about, say, voiced and voiceless, where the contrast is quite different?

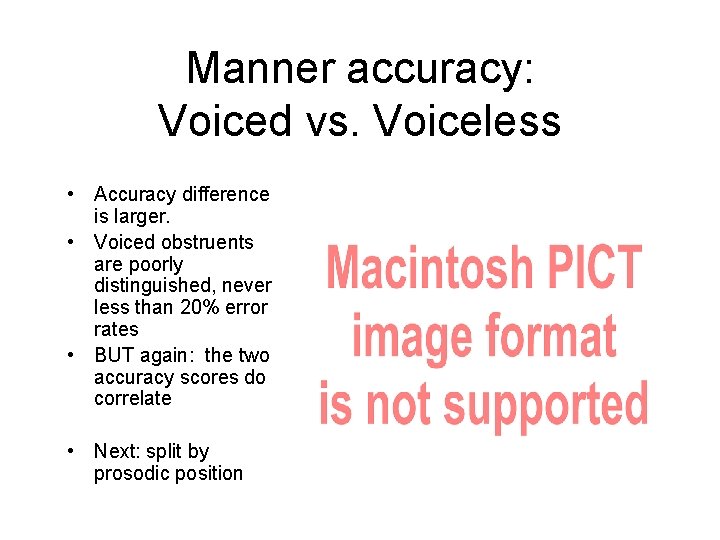

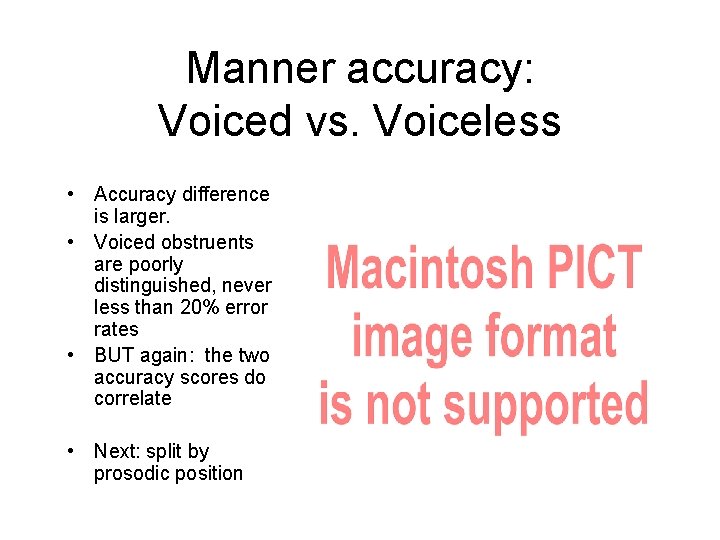

Manner accuracy: Voiced vs. Voiceless • Accuracy difference is larger. • Voiced obstruents are poorly distinguished: error rates never fall below 20% • BUT again: the two accuracy scores do correlate • Next: split by prosodic position

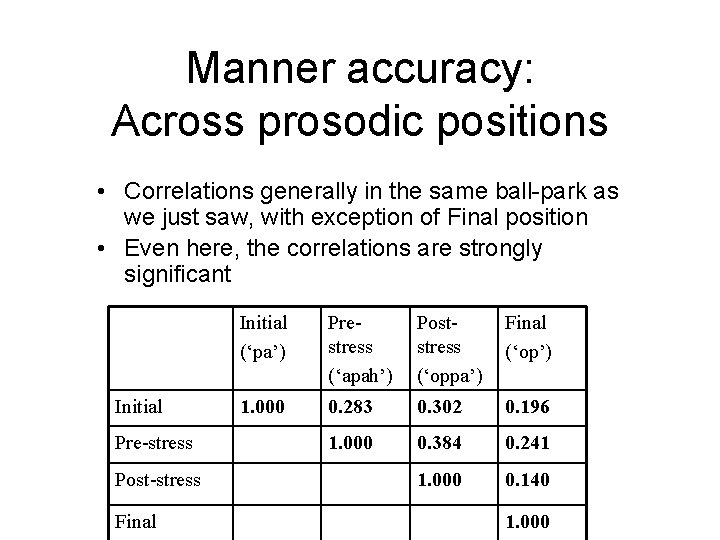

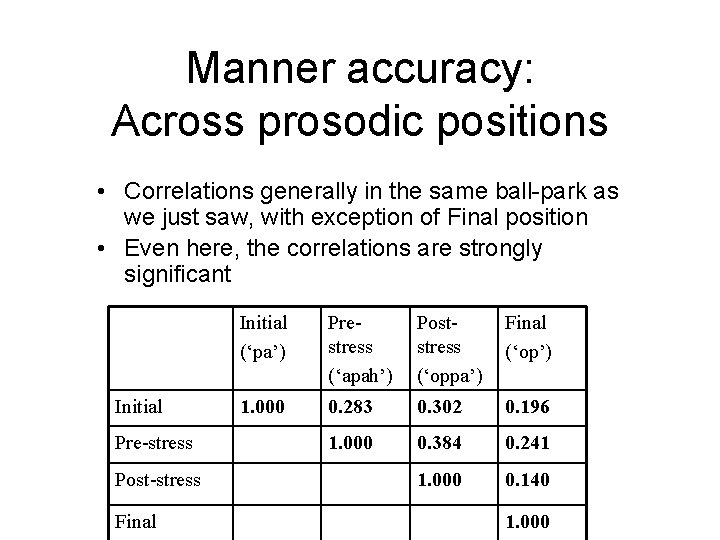

Manner accuracy: Across prosodic positions • Correlations generally in the same ball-park as we just saw, with the exception of Final position • Even here, the correlations are strongly significant

| | Initial (‘pa’) | Pre-stress (‘apah’) | Post-stress (‘oppa’) | Final (‘op’) |
| --- | --- | --- | --- | --- |
| Initial (‘pa’) | 1.000 | 0.283 | 0.302 | 0.196 |
| Pre-stress (‘apah’) | | 1.000 | 0.384 | 0.241 |
| Post-stress (‘oppa’) | | | 1.000 | 0.140 |
| Final (‘op’) | | | | 1.000 |

Interim Summary • Results suggest that distinguishing stops from fricatives is a single skill (or at least a set of closely related skills). Some listeners have acquired it better than others. • Whoa. Um … how do we know this isn’t just an effect of overall proficiency differences? Some listeners are more experienced, and hence are better categorizers overall? • Good question. • However, the correlation patterns for the manner contrasts are not obtained for all pairs. Cf. the voicing contrast below.

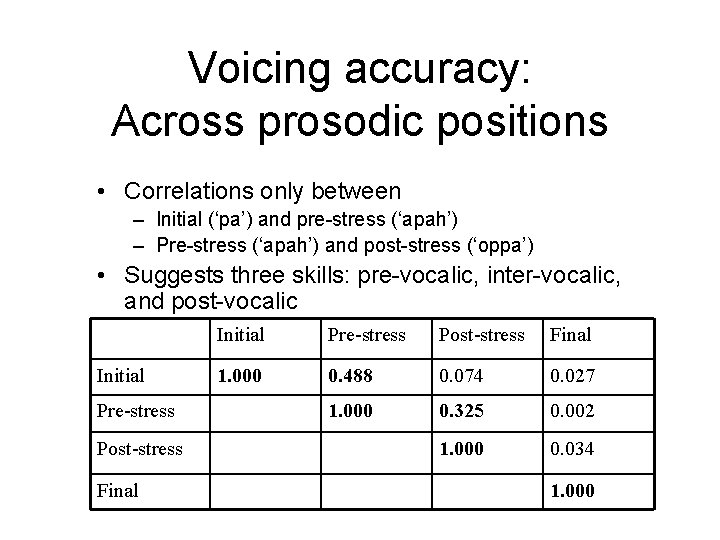

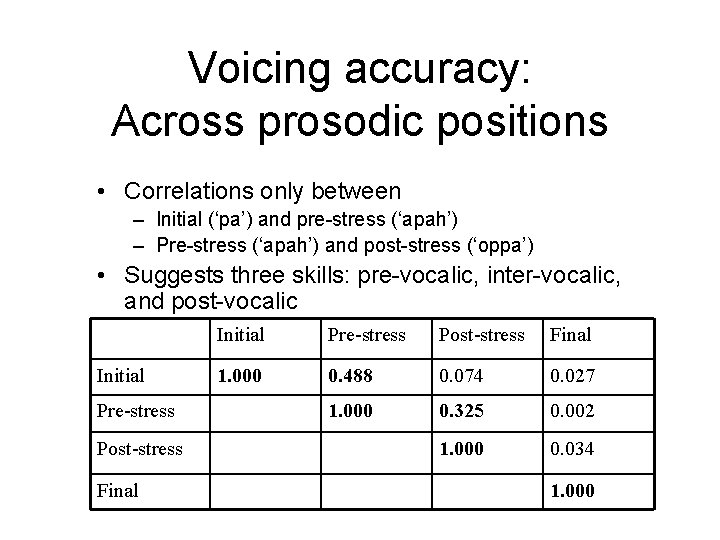

Voicing accuracy: Across prosodic positions • Correlations only between – Initial (‘pa’) and pre-stress (‘apah’) – Pre-stress (‘apah’) and post-stress (‘oppa’) • Suggests three skills: pre-vocalic, inter-vocalic, and post-vocalic

| | Initial | Pre-stress | Post-stress | Final |
| --- | --- | --- | --- | --- |
| Initial | 1.000 | 0.488 | 0.074 | 0.027 |
| Pre-stress | | 1.000 | 0.325 | 0.002 |
| Post-stress | | | 1.000 | 0.034 |
| Final | | | | 1.000 |

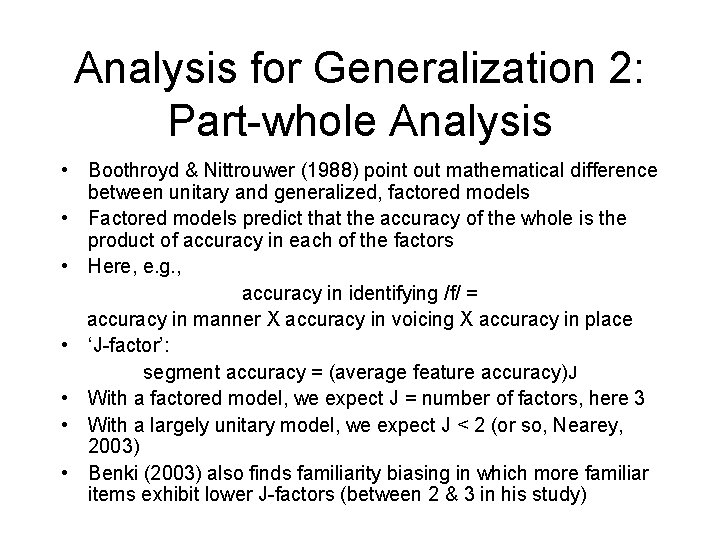

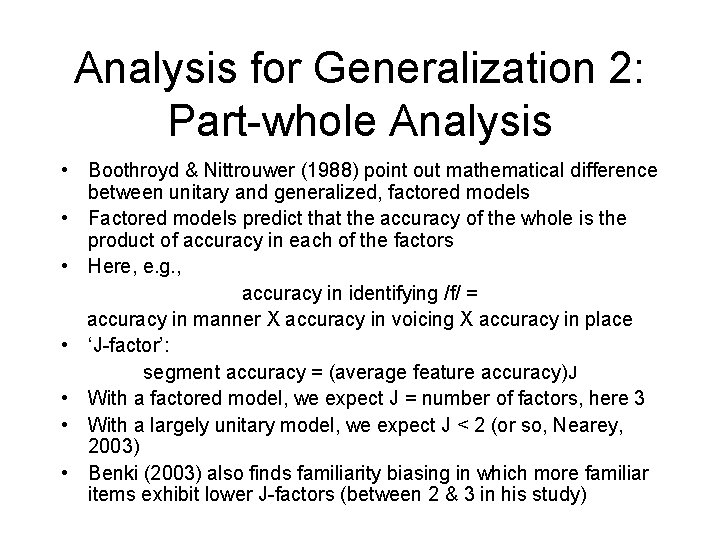

Analysis for Generalization 2: Part-whole Analysis • Boothroyd & Nittrouer (1988) point out a mathematical difference between unitary and generalized, factored models • Factored models predict that the accuracy of the whole is the product of accuracy in each of the factors • Here, e.g., accuracy in identifying /f/ = accuracy in manner × accuracy in voicing × accuracy in place • ‘J-factor’: segment accuracy = (average feature accuracy)^J • With a factored model, we expect J = number of factors, here 3 • With a largely unitary model, we expect J < 2 (or so; Nearey, 2003) • Benki (2003) also finds familiarity biasing, in which more familiar items exhibit lower J-factors (between 2 & 3 in his study)
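
The J-factor relation above can be solved for J by taking logs: J = log(segment accuracy) / log(average feature accuracy). A minimal sketch, assuming accuracies are proportions strictly between 0 and 1; the function names and the 0.9 accuracy values are invented for illustration and are not figures from the study.

```python
from math import log, prod


def factored_prediction(feature_accuracies):
    """Segment accuracy predicted by a fully factored model: the product of the parts."""
    return prod(feature_accuracies)


def j_factor(segment_accuracy, feature_accuracies):
    """Solve segment_accuracy = mean(feature_accuracies) ** J for J."""
    mean_feature = sum(feature_accuracies) / len(feature_accuracies)
    if not (0 < segment_accuracy < 1 and 0 < mean_feature < 1):
        raise ValueError("accuracies must be strictly between 0 and 1")
    return log(segment_accuracy) / log(mean_feature)


# Invented example: manner, voicing, and place each identified 90% of the time.
features = [0.9, 0.9, 0.9]

# Fully factored case: the segment is right only when all three features are right.
j_factored = j_factor(factored_prediction(features), features)

# Fully unitary case: the segment is as accurate as any one of its features.
j_unitary = j_factor(0.9, features)

print(f"factored J = {j_factored:.3f}, unitary J = {j_unitary:.3f}")
```

With equal feature accuracies the two extremes come out at J = 3 and J = 1, which brackets the values a real data set can be compared against.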

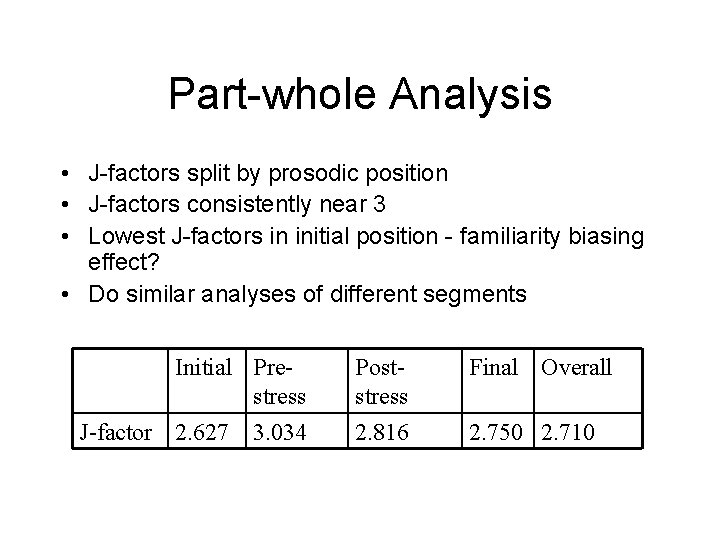

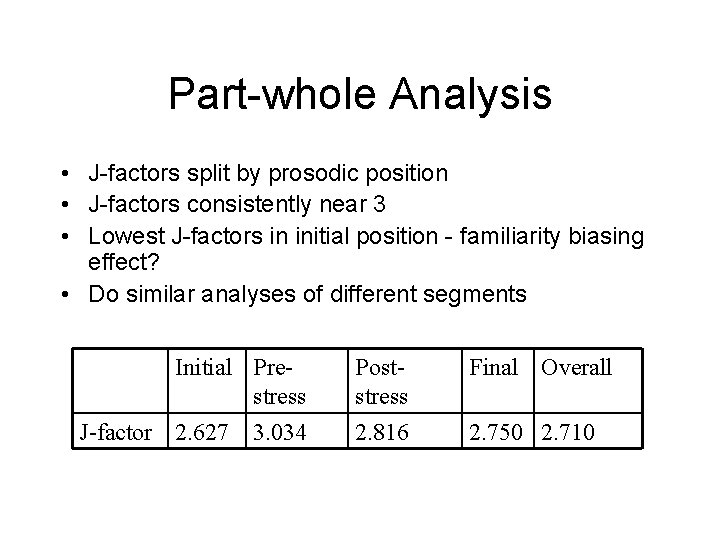

Part-whole Analysis • J-factors split by prosodic position • J-factors consistently near 3 • Lowest J-factors in initial position - familiarity biasing effect? • Do similar analyses of different segments

| | Initial | Prestress | Poststress | Final | Overall |
| --- | --- | --- | --- | --- | --- |
| J-factor | 2.627 | 3.034 | 2.816 | 2.750 | 2.710 |

Part-whole Analysis • Segmental accuracy is very close to the product of featural accuracies for each segment • Fricatives lie almost exactly on diagonal • Stops are often slightly over diagonal • Since Korean has stops, this suggests a familiarity biasing effect

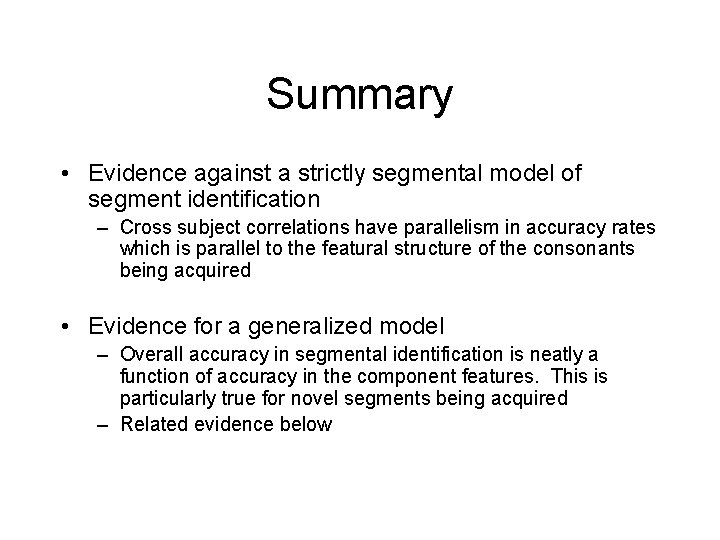

Summary • Evidence against a strictly segmental model of segment identification – Cross-subject correlations in accuracy rates parallel the featural structure of the consonants being acquired • Evidence for a generalized model – Overall accuracy in segmental identification is neatly a function of accuracy in the component features. This is particularly true for novel segments being acquired – Related evidence below

Model Architecture: Segmental Categories are Unitary Things Questions to be pursued • Parsing question: Segments are embedded in larger units of all different kinds • Cross-segment question: Segments exist in a matrix with other segments • Within-segment question: Segments have lots of internal structure

Experiment: Internal structure question • Corpus – 4 Midwestern American speakers in their mid-30’s – /pi/ and /bi/ – Metronomically rate-varied corpus with extreme durational variability (de Jong, 2001a; 2001b) – Repetition period varied continuously from 450 ms to 250 ms – This range of rates from physiological constraints study (Nelson & Perkell, 19**) • Procedure – Present excised syllable trains for identification • Subjects – 23 native English speaking undergraduates from Indiana University – 14 native Japanese speaking students from Indiana University – 13 native Korean speaking students from Indiana University – All monolingual through early years

Stimulus VOT Distribution • Plots VOT for /p/ and /b/ against syllable duration • VOT’s shorten for /p/ at fast rates

Stimulus VOT Distribution • Zoom in on VOT dimension • Get near merger at very fast rates

Native Responses • Logistic regression with identification responses • Add 50% boundary between /p/ & /b/ for native listeners • Slant shows normalization for rate
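
To make the slanted 50% boundary concrete, here is a small sketch assuming a logistic model with VOT and syllable duration as predictors. The model form and every coefficient below are assumptions chosen for illustration; none are fitted values from the study.

```python
from math import exp

# Hypothetical coefficients for P(/p/) = logistic(B0 + B_VOT * vot + B_DUR * duration).
B0 = -1.0      # intercept
B_VOT = 0.2    # per ms of VOT: longer VOT makes /p/ more likely
B_DUR = -0.01  # per ms of syllable duration: slower speech needs more VOT for /p/


def prob_p(vot_ms, duration_ms):
    """Probability of a /p/ response under the assumed logistic model."""
    z = B0 + B_VOT * vot_ms + B_DUR * duration_ms
    return 1.0 / (1.0 + exp(-z))


def boundary_vot(duration_ms):
    """VOT at which P(/p/) = 0.5, found by setting the linear predictor to zero."""
    return -(B0 + B_DUR * duration_ms) / B_VOT


for duration in (250, 350, 450):
    print(f"{duration} ms syllable: 50% boundary at {boundary_vot(duration):.1f} ms VOT")
```

Because the duration coefficient is non-zero, the boundary VOT changes with syllable duration; that dependence is the slant on the plot, and a zero coefficient would give a boundary at the same VOT for every rate.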

Question: how do Non-natives handle variability? • Mismatch in VOT production boundary – Japanese /p/ has shorter VOT – Korean /pʰ/ has longer VOT • Expect shifted identification responses – Japanese: more /b/ -> /p/ errors – Korean: more /p/ -> /b/ errors

Cross-language • Get shifts in expected directions • Rate normalization function is same as native listeners

Question: how do Non-natives handle variability? • Get expected shifted identification responses – Japanese: more /b/ -> /p/ errors – Korean : more /p/ -> /b/ errors • Rate normalized as well. • Question is: where? – Segmental Un-rate-differentiated Prototype: mostly in middle of distribution – Rate Extracted Model: persistent across distribution

Undifferentiated Prototype Model • Here’s the general distributional pattern

Undifferentiated Prototype Model • Here are prototypical categories with centers to which stimuli are compared

Undifferentiated Prototype Model • Using native vs. non-native centers heavily affects portions between the centers • Distance of extreme tokens from two centers is little affected

Extracted Model • A generalized criterion model divides space

Generalized Model • A shifted criterion will affect identification throughout region around boundary
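
A toy illustration of the shifted-criterion idea only (the prototype alternative is not modeled here). The linear criterion, its intercepts and slope, and the grid of tokens are all invented for the sketch.

```python
def criterion(duration_ms, intercept, slope):
    """Rate-normalized VOT boundary (ms) at a given syllable duration."""
    return intercept + slope * duration_ms


def identify(vot_ms, duration_ms, intercept, slope):
    """Label a token /p/ if its VOT exceeds the criterion, otherwise /b/."""
    return "p" if vot_ms > criterion(duration_ms, intercept, slope) else "b"


def changed_tokens(tokens, native, shifted):
    """Tokens labelled differently under two (intercept, slope) criteria."""
    return [
        (vot, dur)
        for vot, dur in tokens
        if identify(vot, dur, *native) != identify(vot, dur, *shifted)
    ]


# Hypothetical criteria: same slope, boundary moved 10 ms toward longer VOT.
native = (5.0, 0.05)
shifted = (15.0, 0.05)

# Invented token grid: five VOT values (ms) at each of three syllable durations (ms).
tokens = [(vot, dur) for dur in (250, 350, 450) for vot in (5, 15, 25, 35, 45)]

diff = changed_tokens(tokens, native, shifted)
print(diff)
```

In this toy grid the relabelled tokens turn up at every syllable duration, each one sitting just on the /p/ side of the native boundary, which is the "throughout the region around the boundary" pattern the slide describes.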

Non-native Differences • Back to Actual responses • We compare native and non-native identification and highlight tokens which differ

Japanese Differences • Expect /b/->/p/ errors • Get more (red squares) • Note distribution across rates • Also get /p/ -> /b/ errors (black diamonds)

Korean Differences • Expect /p/->/b/ errors • Get them (black diamonds) • Note very odd distribution: across rates? • Also get /b/ -> /p/ errors (red squares)

Experiment 2 Summary • Differences in L1 typical VOT show up in mismatch errors in both Japanese and Korean • Errors are distributed across the rates, suggesting a model in which generalized perceptual criteria are taken from L1 • Reverse direction errors also indicate another aspect of non-native boundaries: Uncertainty

Model Architecture: Segmental Categories are Extracted Things Questions to be pursued • Parsing question: Segmental identification requires global identification of context • Cross-segment question: Segmental identification is a function of other segments • Within-segment question: Segmental identification is a function of generalized situation

Fine

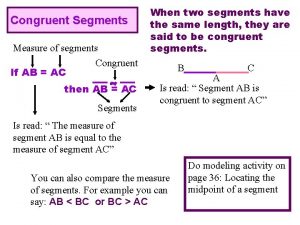

Identifying and non identifying adjective clauses

Identifying and non identifying adjective clauses How to identify clauses

How to identify clauses Identify the essential

Identify the essential Identifying market segments and targets chapter 9

Identifying market segments and targets chapter 9 Identifying market segments and targets chapter 9

Identifying market segments and targets chapter 9 Identifying market segments and targets chapter 9

Identifying market segments and targets chapter 9 Four levels of micromarketing

Four levels of micromarketing Identifying market segments and targets

Identifying market segments and targets Identifying market segments and targets

Identifying market segments and targets 186 282 miles per second into meters per second

186 282 miles per second into meters per second One third 1/3

One third 1/3 The second coming tone

The second coming tone Second conditional structure

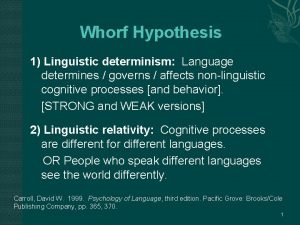

Second conditional structure Define linguistic determinism

Define linguistic determinism Determinism examples

Determinism examples Intelligence interpersonal

Intelligence interpersonal Sapir-whorf hypothesis definition

Sapir-whorf hypothesis definition Linguistic turn example

Linguistic turn example Sapir whorf hypothesis

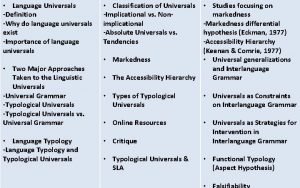

Sapir whorf hypothesis Linguistic universals definition

Linguistic universals definition Celebrities with interpersonal intelligence

Celebrities with interpersonal intelligence Confucius institute at moscow state linguistic university

Confucius institute at moscow state linguistic university Odyssey word count

Odyssey word count Linguistic transference

Linguistic transference Lqa standards

Lqa standards Linguistic intergroup bias definition

Linguistic intergroup bias definition Linguistic category model

Linguistic category model Meaning

Meaning Gest creda

Gest creda Linguistic model in hci

Linguistic model in hci Fuzzification

Fuzzification Fuzzy logic examples

Fuzzy logic examples Macrolinguistics definition

Macrolinguistics definition Sapir whorf theory

Sapir whorf theory Cross linguistic transfer

Cross linguistic transfer Ap psych thinking and language

Ap psych thinking and language Metalinguistic awareness

Metalinguistic awareness Theory of architecture 2

Theory of architecture 2 Interdisciplinary branches of linguistics

Interdisciplinary branches of linguistics Applied linguistics

Applied linguistics Principle of linguistic relativity

Principle of linguistic relativity Gest creda

Gest creda The washington fuzzy cs

The washington fuzzy cs What is linguistic variables in fuzzy logic

What is linguistic variables in fuzzy logic Acculturation

Acculturation