Learning in Games Scott E Page Russell Golman

- Slides: 58

Learning in Games Scott E Page Russell Golman & Jenna Bednar University of Michigan-Ann Arbor

Overview
- Analytic and Computational Models
- Nash Equilibrium, Stability, Basins of Attraction
- Cultural Learning
- Agent Models

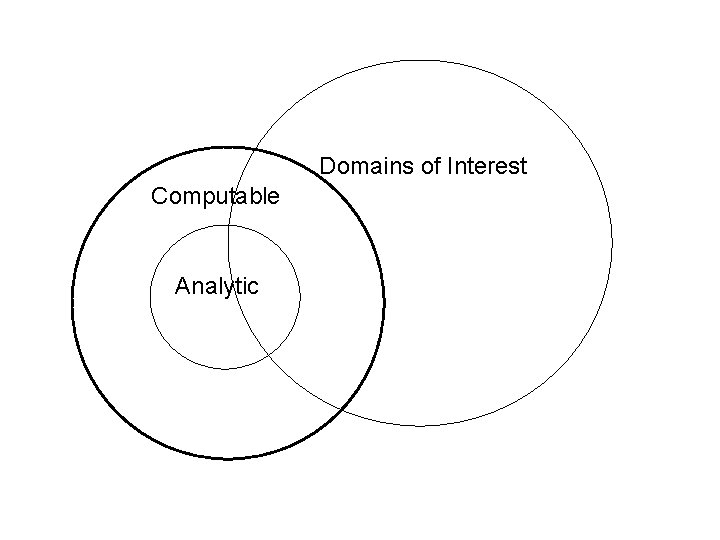

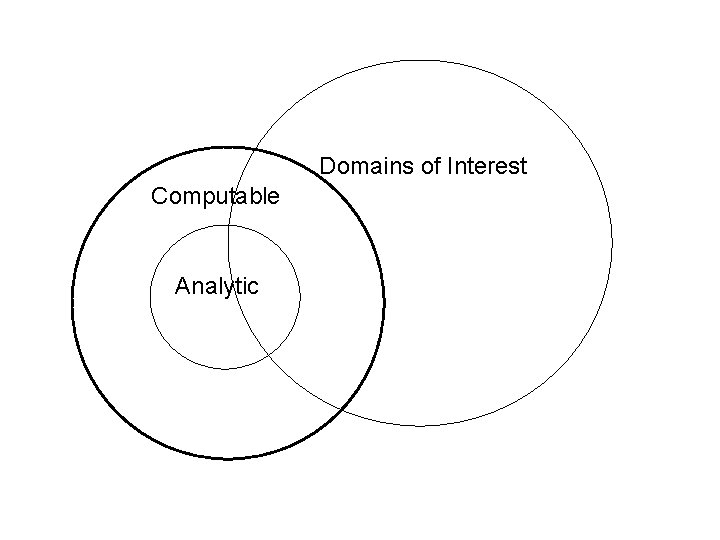

Domains of Interest Computable Analytic

Learning/Adaptation Diversity Interactions/Epistasis Networks/Geography

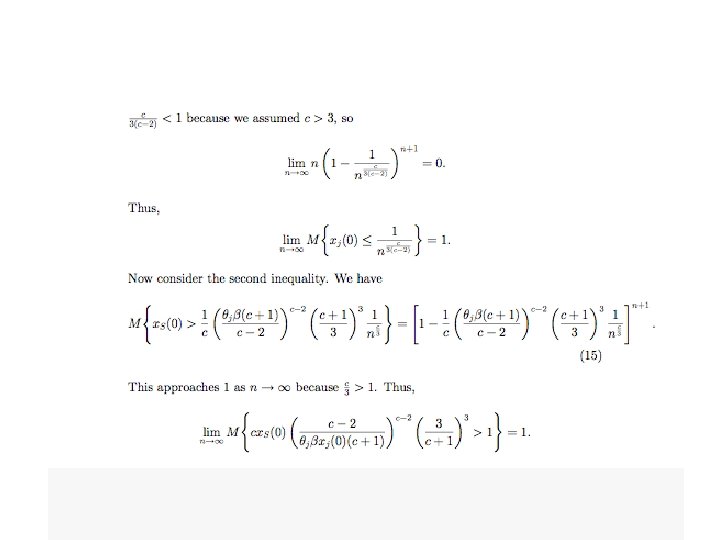

Equilibrium Science We can start by looking at the role that learning rules play in equilibrium systems. This will give us some insight into whether they’ll matter in complex systems.

Players

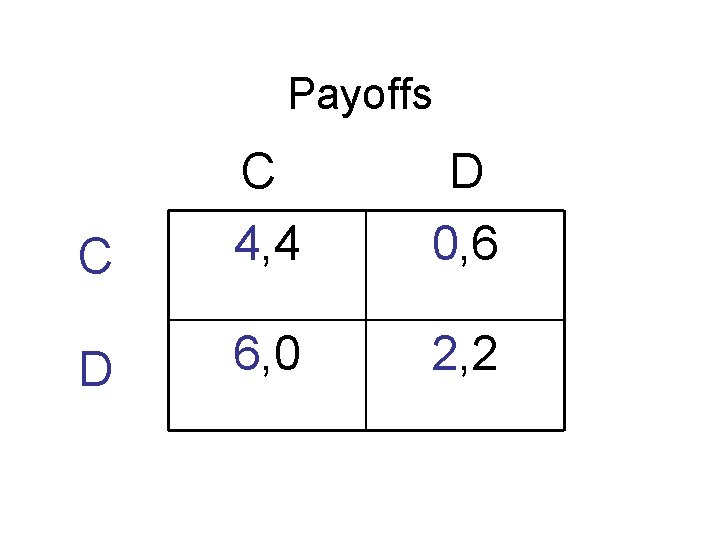

Actions: Cooperate (C), Defect (D)

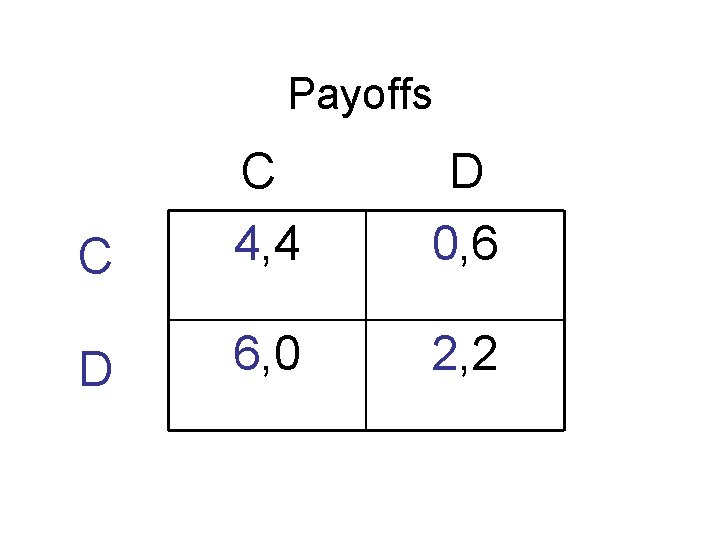

Payoffs (row player's payoff listed first)

|       | C    | D    |
|-------|------|------|
| **C** | 4, 4 | 0, 6 |
| **D** | 6, 0 | 2, 2 |

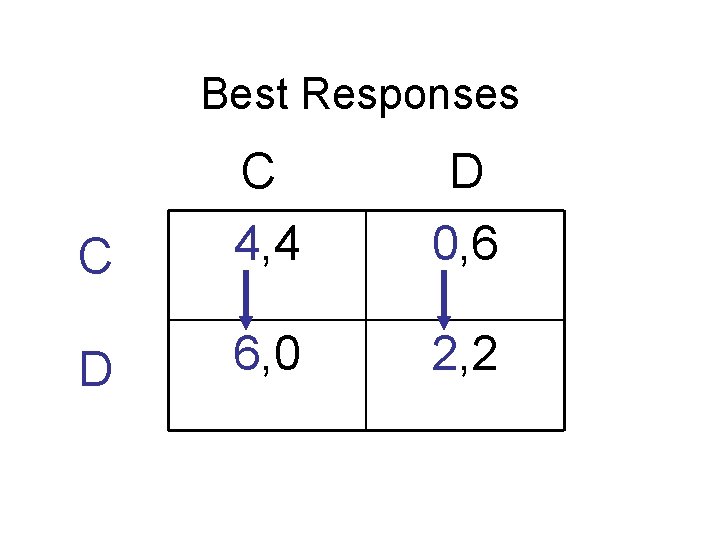

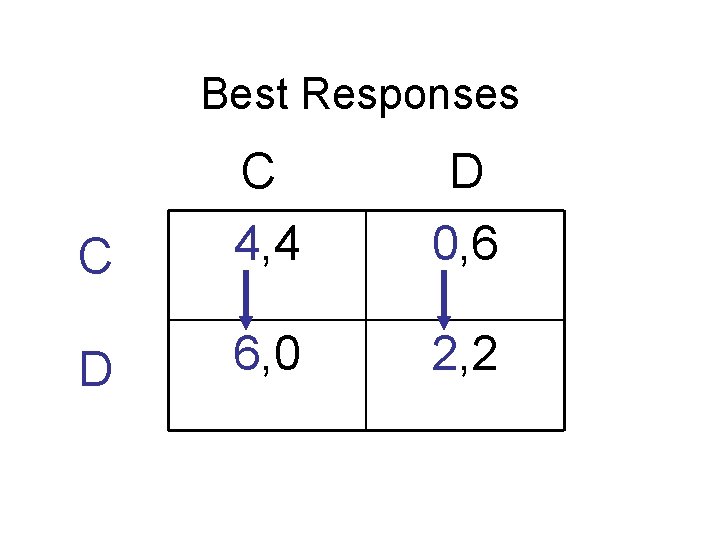

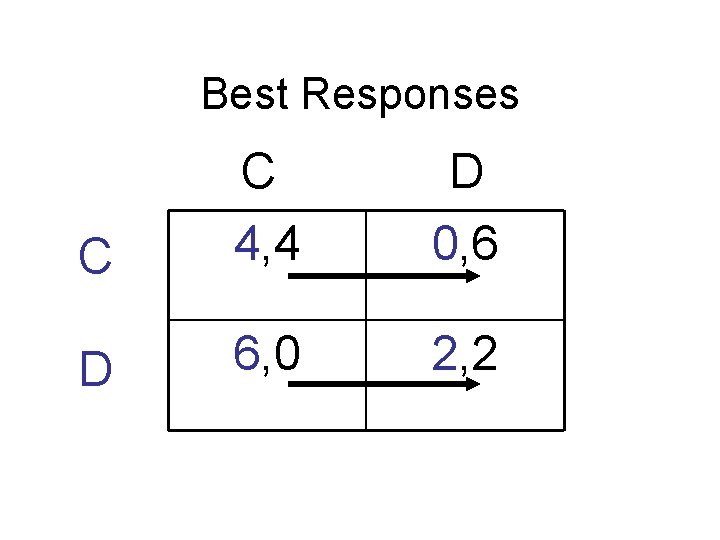

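Best Responses

|       | C    | D    |
|-------|------|------|
| **C** | 4, 4 | 0, 6 |
| **D** | 6, 0 | 2, 2 |

D is the best response to either action of the other player (6 > 4 against C, 2 > 0 against D).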

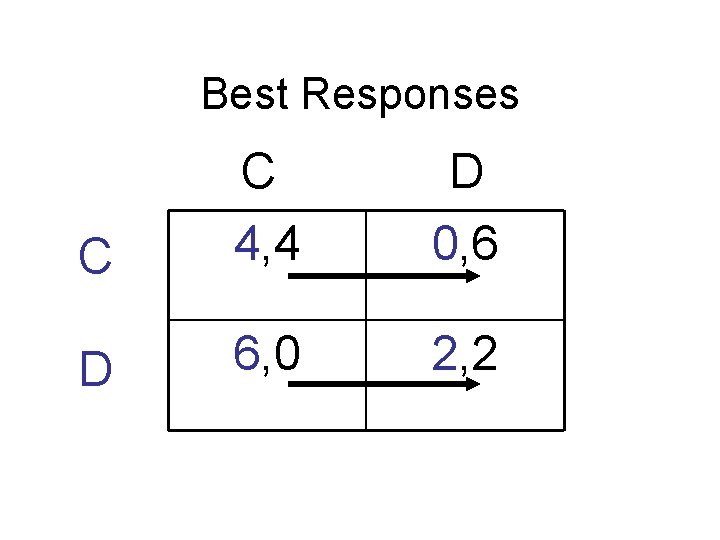


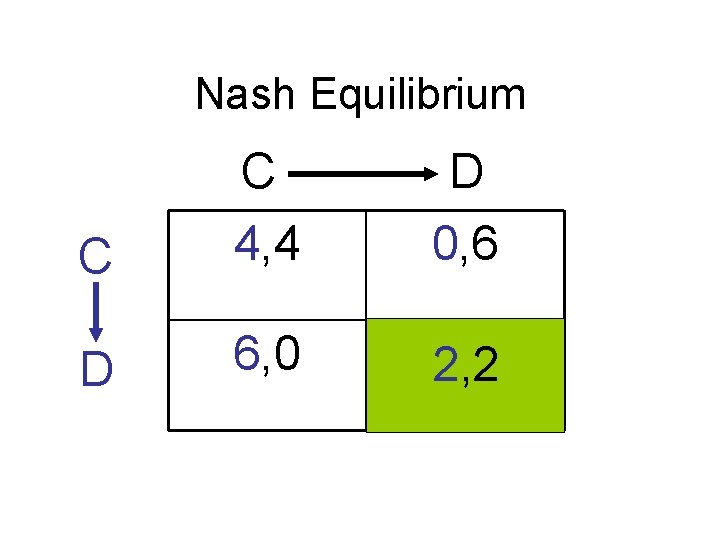

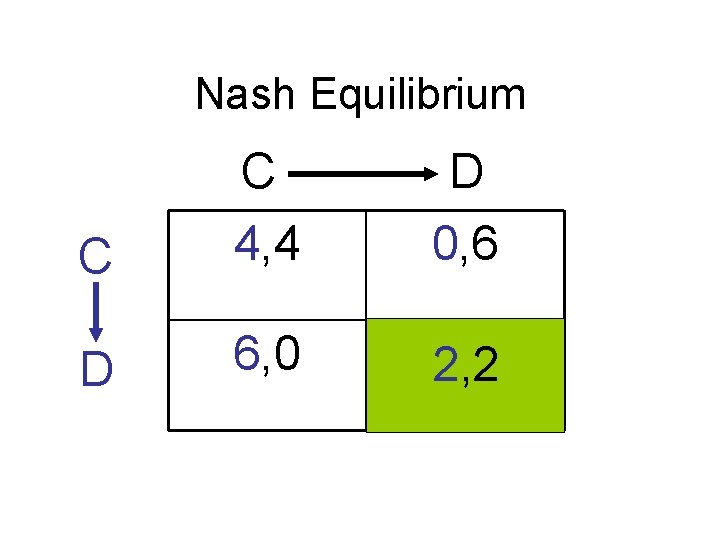

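Nash Equilibrium

|       | C    | D    |
|-------|------|------|
| **C** | 4, 4 | 0, 6 |
| **D** | 6, 0 | 2, 2 |

Both players defect: (D, D), with payoffs 2, 2, is the only outcome from which neither player gains by deviating alone.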
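The best-response check on this slide can be sketched in a few lines. This is a minimal illustration, not code from the talk: the names `PAYOFFS`, `ACTIONS`, and `pure_nash` are hypothetical, and only the payoff numbers come from the slide.

```python
from itertools import product

# Payoffs from the slide: (row action, column action) -> (row payoff, column payoff).
PAYOFFS = {
    ("C", "C"): (4, 4), ("C", "D"): (0, 6),
    ("D", "C"): (6, 0), ("D", "D"): (2, 2),
}
ACTIONS = ("C", "D")


def pure_nash(payoffs, actions):
    """Return the action pairs where neither player gains by deviating alone."""
    equilibria = []
    for row, col in product(actions, repeat=2):
        # Row player compares its payoff with every alternative row action.
        row_ok = all(payoffs[(row, col)][0] >= payoffs[(alt, col)][0] for alt in actions)
        # Column player compares its payoff with every alternative column action.
        col_ok = all(payoffs[(row, col)][1] >= payoffs[(row, alt)][1] for alt in actions)
        if row_ok and col_ok:
            equilibria.append((row, col))
    return equilibria


print(pure_nash(PAYOFFS, ACTIONS))
```

The same function works for any two-player game written in this dictionary form, so it can be reused on the later examples by swapping the payoff table.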

“Equilibrium” Based Science
- Step 1: Set up game
- Step 2: Solve for equilibrium
- Step 3: Show equilibrium depends on parameters of model
- Step 4: Provide empirical support

Is Equilibrium Enough?
- Existence: Equilibrium exists
- Stability: Equilibrium is stable
- Attainable: Equilibrium is attained by a learning rule

Examples
- Best respond to current state
- Better respond
- Mimic best
- Mimic better
- Include portions of best or better
- Random with death of the unfit

Stability can only be defined relative to a learning dynamic. In dynamical systems, we often take that dynamic to be a best response function, but with human actors we need not assume people best respond.

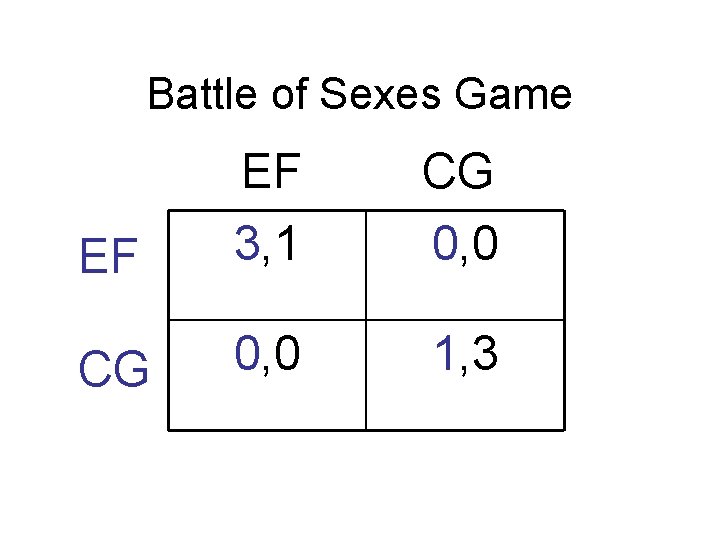

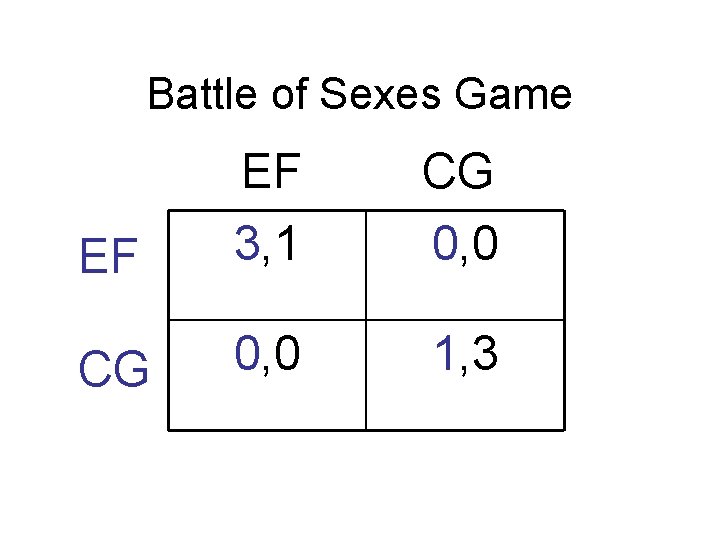

Battle of the Sexes Game

|        | EF   | CG   |
|--------|------|------|
| **EF** | 3, 1 | 0, 0 |
| **CG** | 0, 0 | 1, 3 |

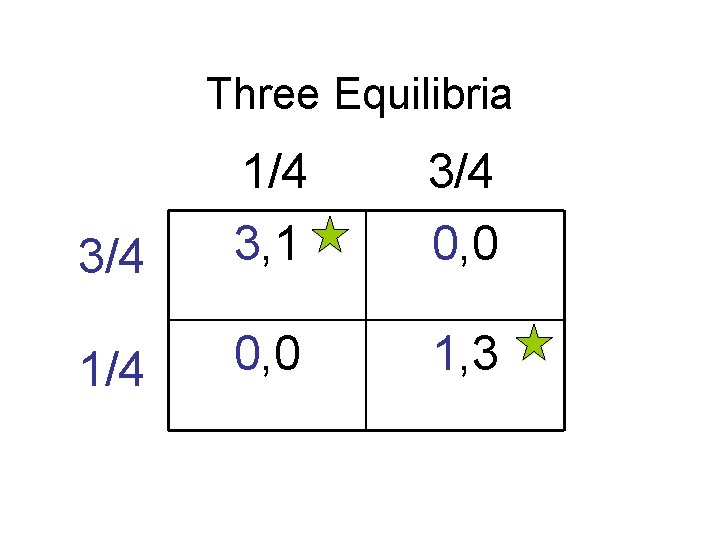

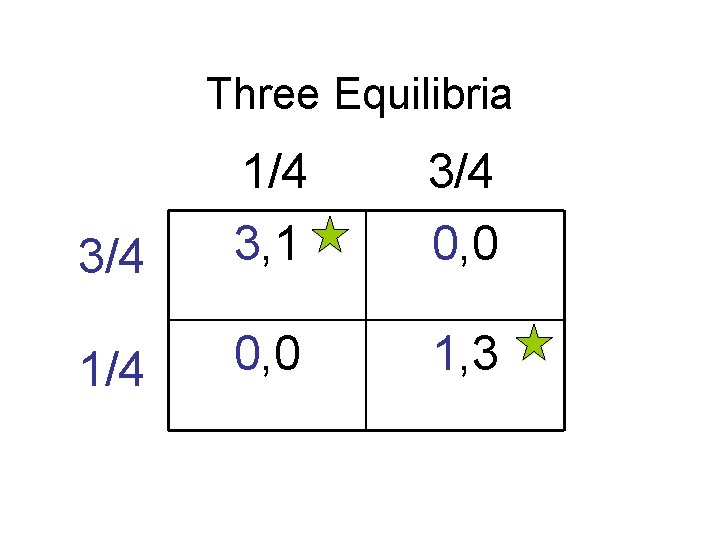

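Three Equilibria: two pure, (EF, EF) and (CG, CG), and one mixed. In the mixed equilibrium Player 1 (rows) plays EF with probability 3/4 and Player 2 (columns) plays EF with probability 1/4.

|              | EF (1/4) | CG (3/4) |
|--------------|----------|----------|
| **EF (3/4)** | 3, 1     | 0, 0     |
| **CG (1/4)** | 0, 0     | 1, 3     |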
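The mixed equilibrium follows from the usual indifference conditions: each player mixes so that the other is indifferent between EF and CG. A minimal sketch, assuming the off-diagonal outcomes pay (0, 0); the function name `mixed_equilibrium` and its argument names are hypothetical.

```python
from fractions import Fraction


def mixed_equilibrium(a1, b1, a2, b2):
    """Mixed equilibrium of a 2x2 coordination game with zero off-diagonal payoffs.

    a1, b1: Player 1's payoffs at (EF, EF) and (CG, CG).
    a2, b2: Player 2's payoffs at (EF, EF) and (CG, CG).
    Returns (p, q): the probabilities that Player 1 and Player 2 play EF.
    """
    # Player 2 plays EF with probability q so that a1 * q == b1 * (1 - q) for Player 1.
    q = Fraction(b1, a1 + b1)
    # Player 1 plays EF with probability p so that a2 * p == b2 * (1 - p) for Player 2.
    p = Fraction(b2, a2 + b2)
    return p, q


p, q = mixed_equilibrium(3, 1, 1, 3)
print(p, q)  # 3/4 1/4
```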

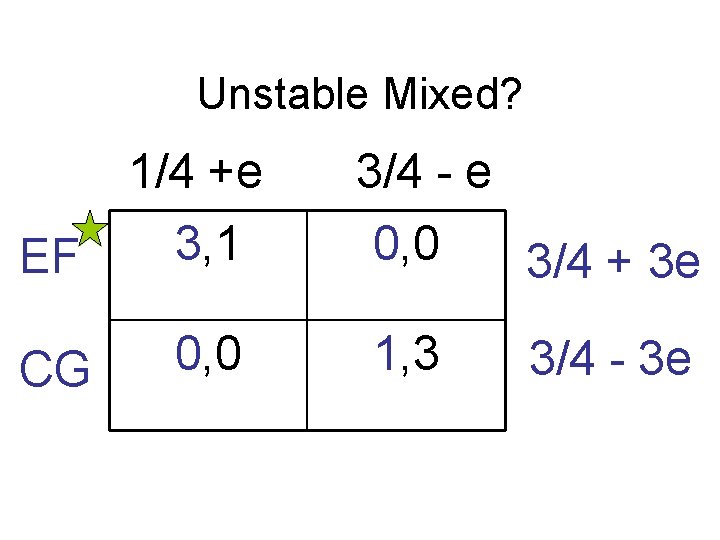

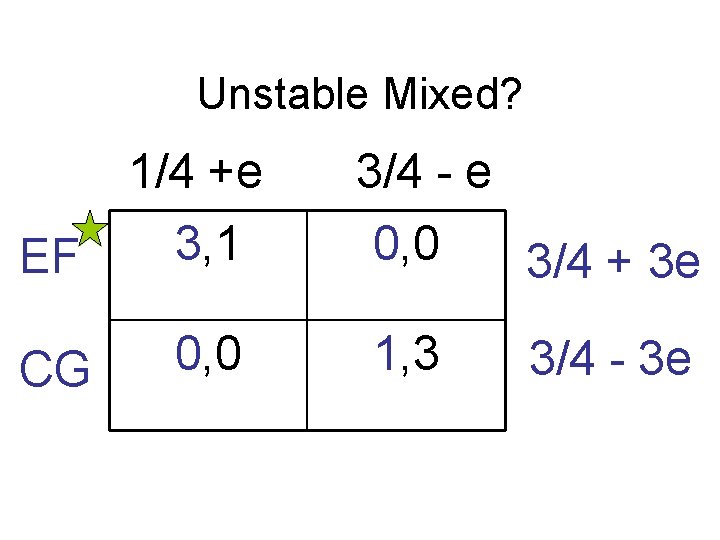

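Unstable Mixed? Suppose Player 2 trembles and plays EF with probability 1/4 + e and CG with probability 3/4 - e.

|        | EF (1/4 + e) | CG (3/4 - e) | Player 1's expected payoff |
|--------|--------------|--------------|----------------------------|
| **EF** | 3, 1         | 0, 0         | 3(1/4 + e) = 3/4 + 3e      |
| **CG** | 0, 0         | 1, 3         | 1(3/4 - e) = 3/4 - e       |

For any e > 0, EF pays Player 1 strictly more than CG, so a best-responding Player 1 abandons the mix and moves toward EF.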

Note the Implicit Assumption Our stability analysis assumed that Player 1 would best respond to Player 2’s tremble. However, the learning rule could instead be “go to the mixed strategy equilibrium.” If so, Player 1 would sit tight and Player 2 would return to the mixed strategy equilibrium.
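The tremble argument can be checked directly from the Battle of the Sexes payoffs. The sketch below is illustrative only; `player1_payoffs` is a hypothetical helper, and the tremble size of 1/100 is an arbitrary choice.

```python
from fractions import Fraction


def player1_payoffs(q_ef):
    """Player 1's expected payoffs (EF, CG) when Player 2 plays EF with probability q_ef."""
    payoff_ef = 3 * q_ef          # (EF, EF) pays Player 1 three, (EF, CG) pays zero
    payoff_cg = 1 * (1 - q_ef)    # (CG, CG) pays Player 1 one, (CG, EF) pays zero
    return payoff_ef, payoff_cg


e = Fraction(1, 100)                          # an arbitrary small tremble
at_equilibrium = player1_payoffs(Fraction(1, 4))
after_tremble = player1_payoffs(Fraction(1, 4) + e)
print(at_equilibrium)   # both actions pay 3/4, so Player 1 is indifferent
print(after_tremble)    # EF now pays more than CG
```

Whether Player 1 acts on that payoff gap is exactly the implicit assumption: a best responder does, while a player whose rule is to return to the mixed strategy does not.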

Empirical Foundations We need to have some understanding of how people learn and adapt to say anything about stability.

Classes of Learning Rules
- Belief Based Learning Rules: People best respond given their beliefs about how other people play.
- Replicator Learning Rules: People replicate successful actions of others.

Stability Results An extensive literature provides conditions (fairly weak) under which the two learning rules have identical stability properties. Synopsis: Learning rules do not matter

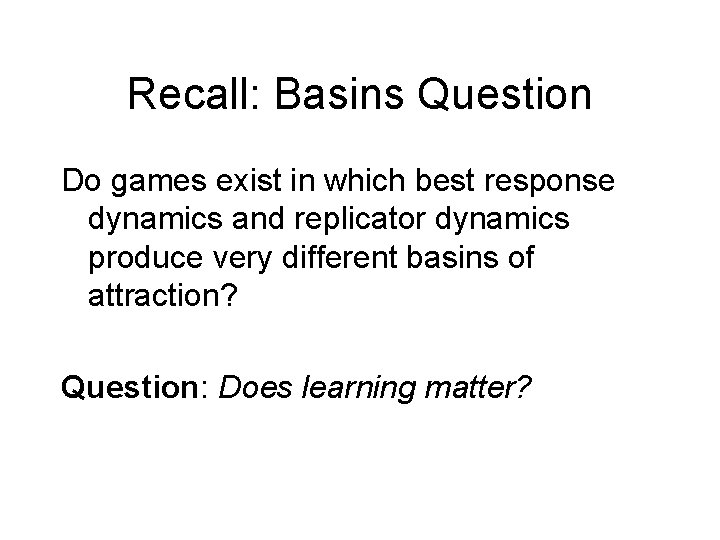

Basins Question Do games exist in which best response dynamics and replicator dynamics produce different basins of attraction? Question: Does learning matter?

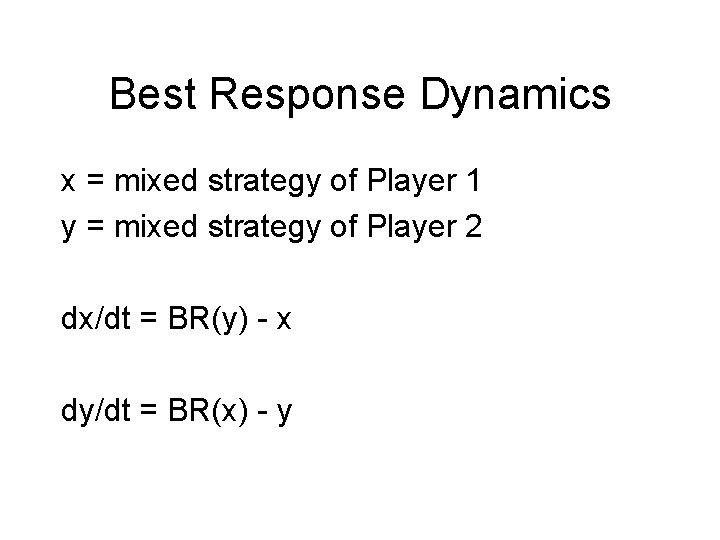

Best Response Dynamics
- x = mixed strategy of Player 1
- y = mixed strategy of Player 2

$$\frac{dx}{dt} = BR(y) - x, \qquad \frac{dy}{dt} = BR(x) - y$$
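A minimal Euler-step sketch of these dynamics, applied to the Battle of the Sexes game from the earlier slides. Several things here are assumptions made for illustration: each mixed strategy is summarized by a single number (the probability of EF), ties are broken toward CG, and the step size, step count, and function names are arbitrary.

```python
def best_reply_p1(y):
    """1.0 if EF is Player 1's best reply when Player 2 plays EF with probability y."""
    return 1.0 if 3 * y > 1 * (1 - y) else 0.0


def best_reply_p2(x):
    """1.0 if EF is Player 2's best reply when Player 1 plays EF with probability x."""
    return 1.0 if 1 * x > 3 * (1 - x) else 0.0


def best_response_path(x, y, dt=0.01, steps=2000):
    """Euler integration of dx/dt = BR(y) - x and dy/dt = BR(x) - y."""
    for _ in range(steps):
        # Both players update simultaneously from the current state.
        x, y = x + dt * (best_reply_p1(y) - x), y + dt * (best_reply_p2(x) - y)
    return x, y


print(best_response_path(0.8, 0.3))  # heads toward (EF, EF)
print(best_response_path(0.7, 0.2))  # heads toward (CG, CG)
```

Starting points on either side of the mixed equilibrium (3/4, 1/4) move to different pure equilibria, which is what a basin of attraction means in this setting.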

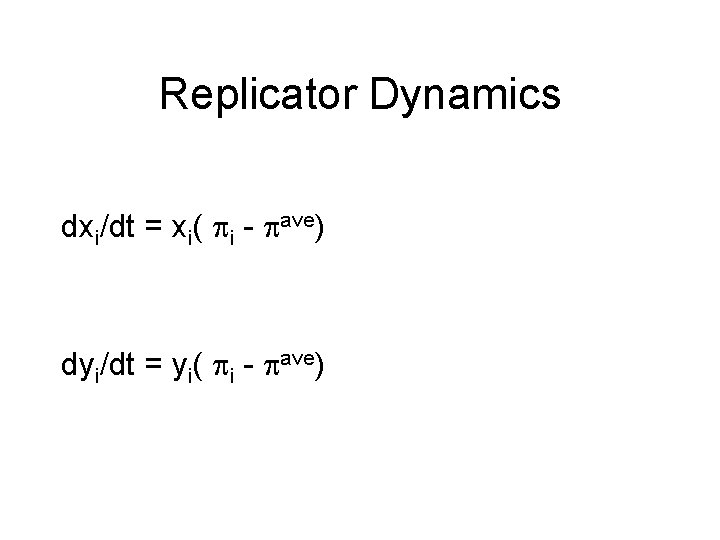

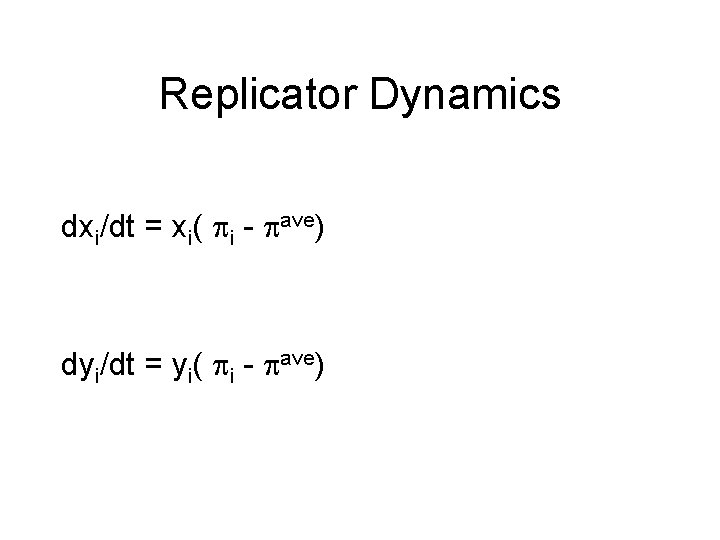

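Replicator Dynamics

$$\frac{dx_i}{dt} = x_i(\pi_i - \bar{\pi}), \qquad \frac{dy_i}{dt} = y_i(\pi_i - \bar{\pi})$$

Here $\pi_i$ is the payoff to action $i$ and $\bar{\pi}$ is the average payoff, each taken for the player in question.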
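The replicator equation can be sketched the same way. This is an illustrative Euler discretization on the Battle of the Sexes game, not the authors' code; `replicator_step`, `replicator_path`, the step size, and the step count are all assumptions.

```python
def replicator_step(shares, payoffs, dt):
    """One Euler step of dx_i/dt = x_i * (pi_i - average payoff)."""
    average = sum(s * p for s, p in zip(shares, payoffs))
    return [s + dt * s * (p - average) for s, p in zip(shares, payoffs)]


def replicator_path(x_ef, y_ef, dt=0.01, steps=5000):
    """Two-population replicator dynamics; returns the final probabilities of EF."""
    x = [x_ef, 1 - x_ef]  # Player 1's shares on (EF, CG)
    y = [y_ef, 1 - y_ef]  # Player 2's shares on (EF, CG)
    for _ in range(steps):
        pay_x = [3 * y[0], 1 * y[1]]  # Player 1 earns 3 at (EF, EF) and 1 at (CG, CG)
        pay_y = [1 * x[0], 3 * x[1]]  # Player 2 earns 1 at (EF, EF) and 3 at (CG, CG)
        x, y = replicator_step(x, pay_x, dt), replicator_step(y, pay_y, dt)
    return x[0], y[0]


print(replicator_path(0.8, 0.3))  # heads toward (EF, EF)
print(replicator_path(0.7, 0.2))  # heads toward (CG, CG)
```

Unlike best response dynamics, an action's share changes in proportion to its current share, so actions that start rare grow slowly even when they pay well.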

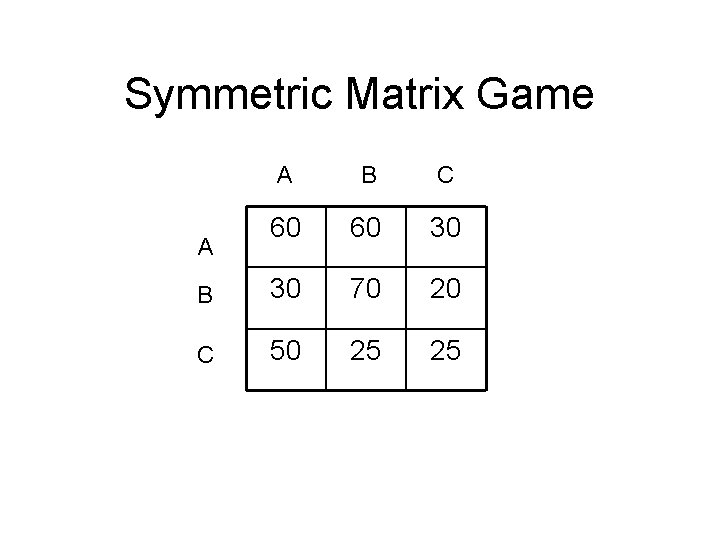

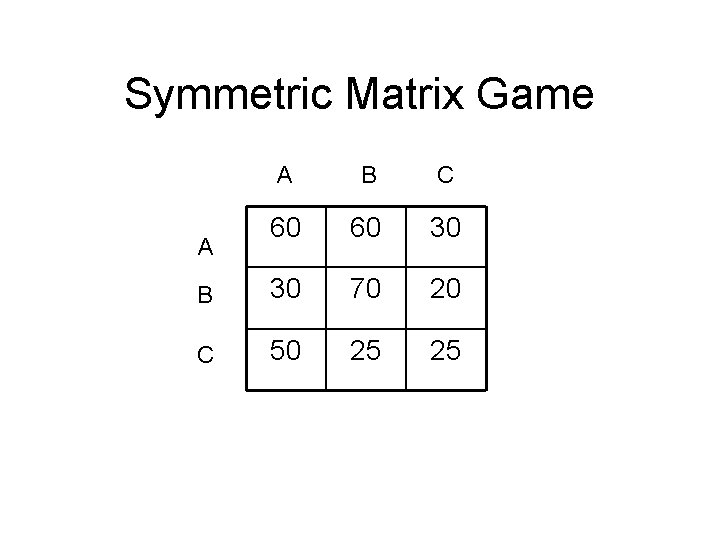

Symmetric Matrix Game (row player's payoff)

|       | A  | B  | C  |
|-------|----|----|----|
| **A** | 60 | 60 | 30 |
| **B** | 30 | 70 | 20 |
| **C** | 50 | 25 | 25 |


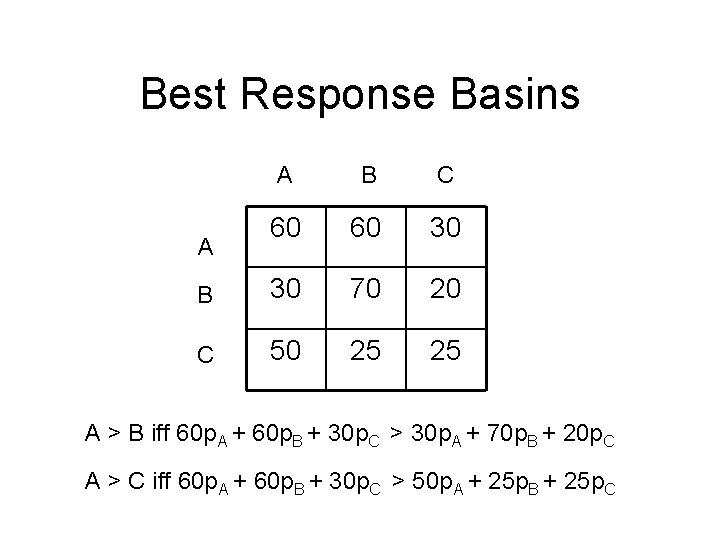

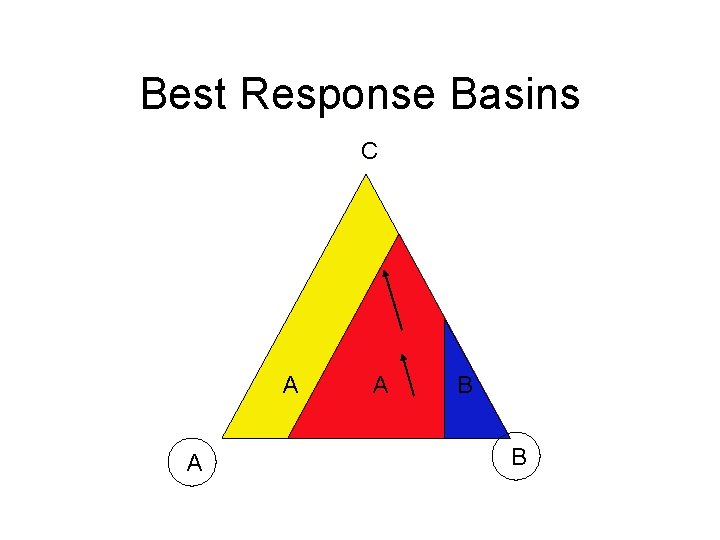

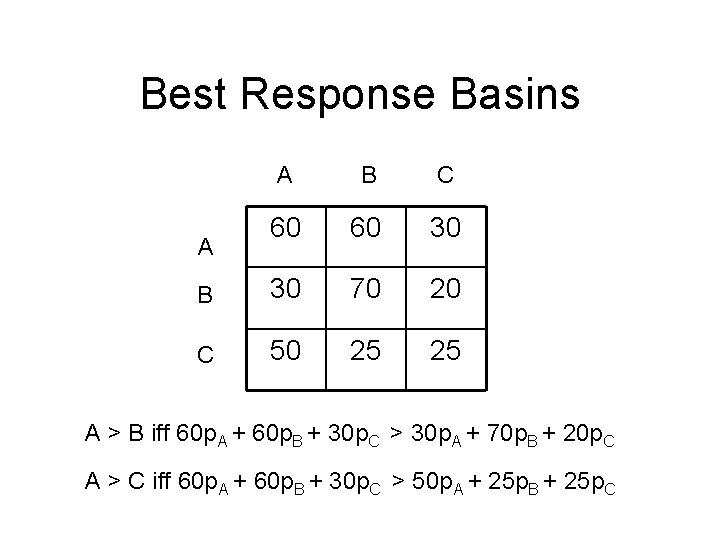

Best Response Basins

|       | A  | B  | C  |
|-------|----|----|----|
| **A** | 60 | 60 | 30 |
| **B** | 30 | 70 | 20 |
| **C** | 50 | 25 | 25 |

A > B iff $60p_A + 60p_B + 30p_C > 30p_A + 70p_B + 20p_C$

A > C iff $60p_A + 60p_B + 30p_C > 50p_A + 25p_B + 25p_C$
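These inequalities can be evaluated mechanically. The sketch below is an illustration with hypothetical names (`PAYOFF`, `best_response`, `ever_best_response`). It computes expected payoffs against a population mix and scans a grid of mixes; since A's row exceeds C's row entry by entry (60 > 50, 60 > 25, 30 > 25), C should never come out as a best response.

```python
# Row payoffs against columns A, B, C, taken from the slide.
PAYOFF = {"A": (60, 60, 30), "B": (30, 70, 20), "C": (50, 25, 25)}


def expected_payoffs(mix):
    """Expected payoff of each action against a population mix (p_A, p_B, p_C)."""
    return {action: sum(pay * share for pay, share in zip(row, mix))
            for action, row in PAYOFF.items()}


def best_response(mix):
    """Action with the highest expected payoff (ties go to the earlier action)."""
    pay = expected_payoffs(mix)
    return max(pay, key=pay.get)


def ever_best_response(action, grid=20):
    """Scan integer-weighted mixes on a grid and report whether `action` is ever best."""
    for i in range(grid + 1):
        for j in range(grid + 1 - i):
            if best_response((i, j, grid - i - j)) == action:
                return True
    return False


print(best_response((0.2, 0.6, 0.2)))  # A
print(best_response((0.1, 0.8, 0.1)))  # B
print(ever_best_response("C"))         # False
```

Simplifying the first inequality gives $3p_A + p_C > p_B$, which is the boundary between the region where A is the best response and the region where B is.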

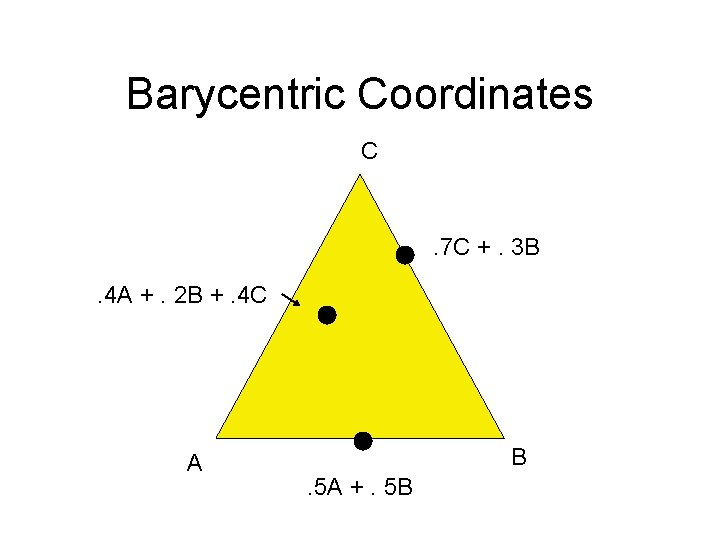

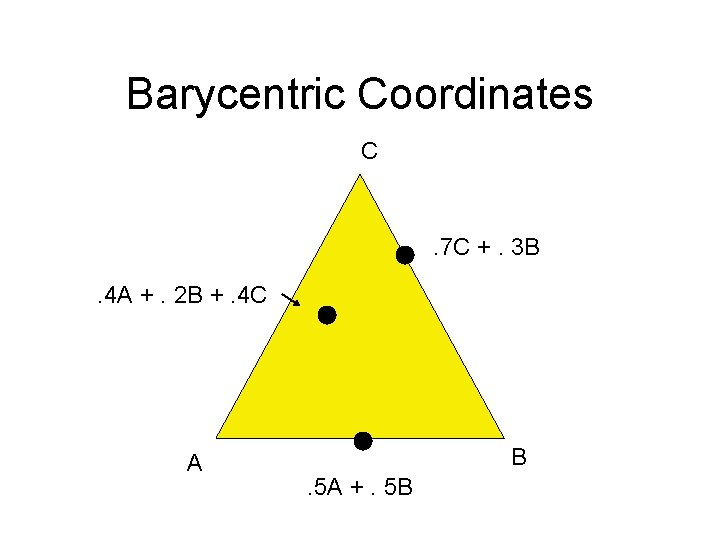

Barycentric Coordinates [figure: simplex with vertices A, B, C and three example points]
- .7C + .3B
- .4A + .2B + .4C
- .5A + .5B
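To plot such points, barycentric weights can be converted to plane coordinates. The vertex placement below (A at the origin, B at (1, 0), C at the apex of an equilateral triangle) is an assumption chosen for illustration, as are the names `VERTICES` and `to_cartesian`.

```python
import math

# Assumed layout of the simplex: an equilateral triangle with unit side length.
VERTICES = {"A": (0.0, 0.0), "B": (1.0, 0.0), "C": (0.5, math.sqrt(3) / 2)}


def to_cartesian(weights):
    """Map barycentric weights, e.g. {"A": .4, "B": .2, "C": .4}, to (x, y)."""
    total = sum(weights.values())  # normalize in case the weights do not sum to one
    x = sum(w * VERTICES[name][0] for name, w in weights.items()) / total
    y = sum(w * VERTICES[name][1] for name, w in weights.items()) / total
    return x, y


for point in ({"C": 0.7, "B": 0.3}, {"A": 0.4, "B": 0.2, "C": 0.4}, {"A": 0.5, "B": 0.5}):
    print(point, to_cartesian(point))
```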

Best Responses [figure: simplex with vertices A, B, C]

Stable Equilibria [figure: simplex with vertices A, B, C]

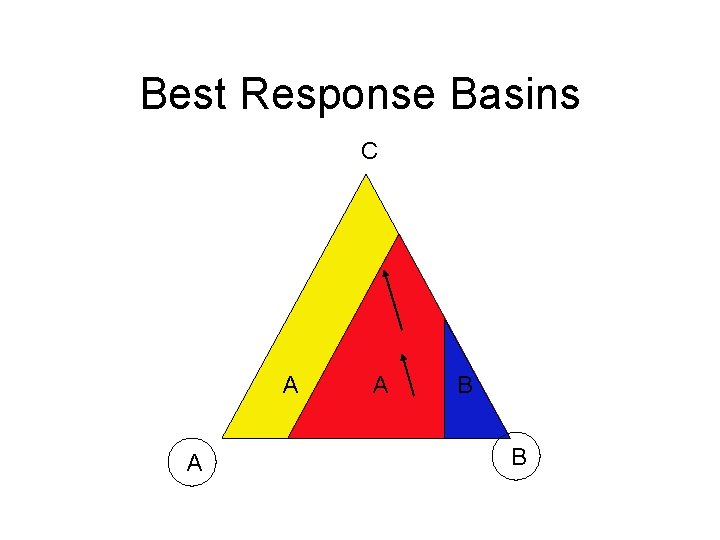

Best Response Basins [figure: simplex with vertices A, B, C]

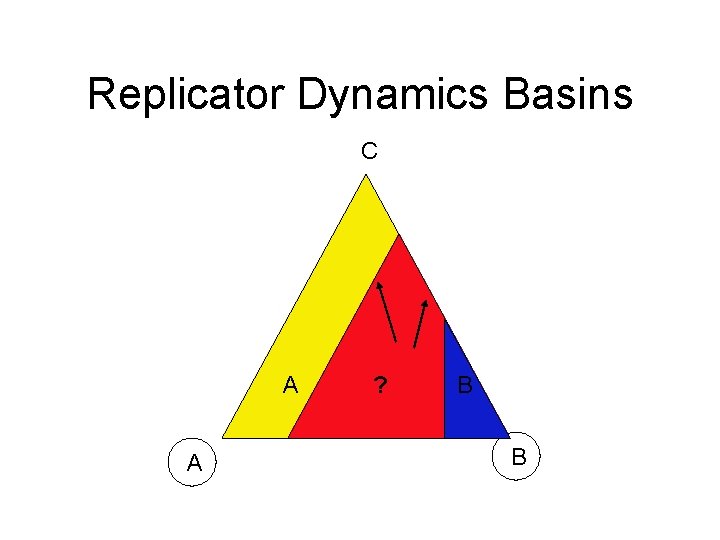

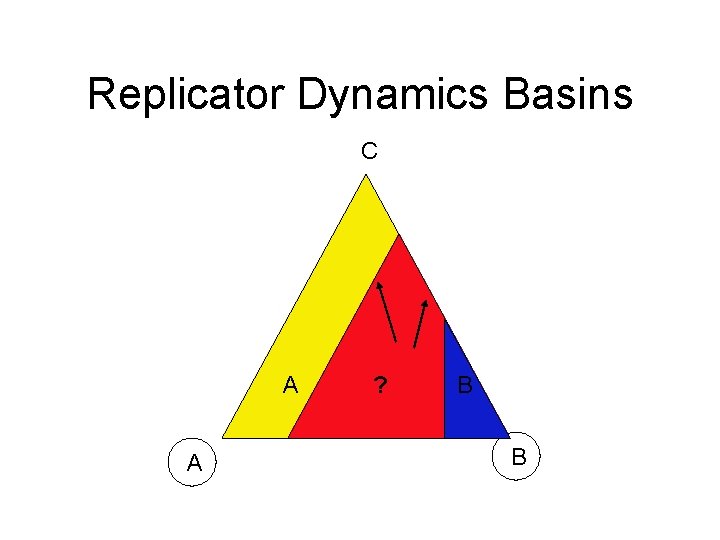

Replicator Dynamics Basins [figure: simplex with vertices A, B, C; one region marked "?"]

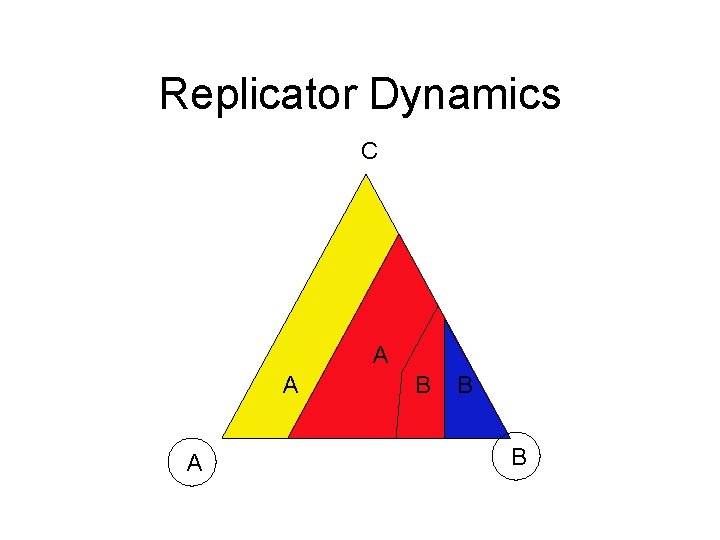

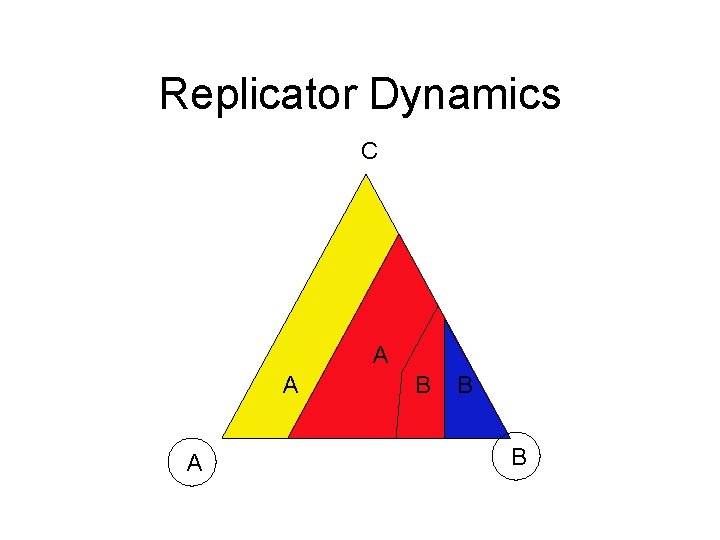

Replicator Dynamics [figure: simplex with vertices A, B, C]

Recall: Basins Question Do games exist in which best response dynamics and replicator dynamics produce very different basins of attraction? Question: Does learning matter?
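One way to probe the question numerically is to run both dynamics from the same starting mix and compare where they end up. The sketch below does this for the 3x3 symmetric game from the earlier slides. It is an illustration under stated assumptions: a single population, simple Euler steps with arbitrarily chosen step sizes, and the largest final share used as a proxy for the equilibrium reached. All function names are hypothetical.

```python
PAYOFF = [[60, 60, 30], [30, 70, 20], [50, 25, 25]]  # rows and columns ordered A, B, C
LABELS = "ABC"


def payoffs(x):
    """Expected payoff of each action against the population mix x."""
    return [sum(a * share for a, share in zip(row, x)) for row in PAYOFF]


def best_response_step(x, dt):
    """Euler step of dx/dt = BR(x) - x."""
    pay = payoffs(x)
    best = pay.index(max(pay))
    return [xi + dt * ((1.0 if i == best else 0.0) - xi) for i, xi in enumerate(x)]


def replicator_step(x, dt):
    """Euler step of dx_i/dt = x_i * (pi_i - average payoff)."""
    pay = payoffs(x)
    average = sum(xi * pi for xi, pi in zip(x, pay))
    return [xi + dt * xi * (pi - average) for xi, pi in zip(x, pay)]


def limit_action(step, start, dt, steps):
    """Run the dynamic and report the action holding the largest final share."""
    x = list(start)
    for _ in range(steps):
        x = step(x, dt)
    return LABELS[x.index(max(x))]


def compare(start):
    """Return (best response outcome, replicator outcome) for one starting mix."""
    return (limit_action(best_response_step, start, 0.01, 2000),
            limit_action(replicator_step, start, 0.001, 20000))


print(compare((0.8, 0.1, 0.1)))
print(compare((0.05, 0.9, 0.05)))
```

Starting mixes close to a pure equilibrium agree under both rules. Any disagreement between the two rules would have to come from starting mixes farther from the vertices, which can be explored by passing other mixes to `compare`.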

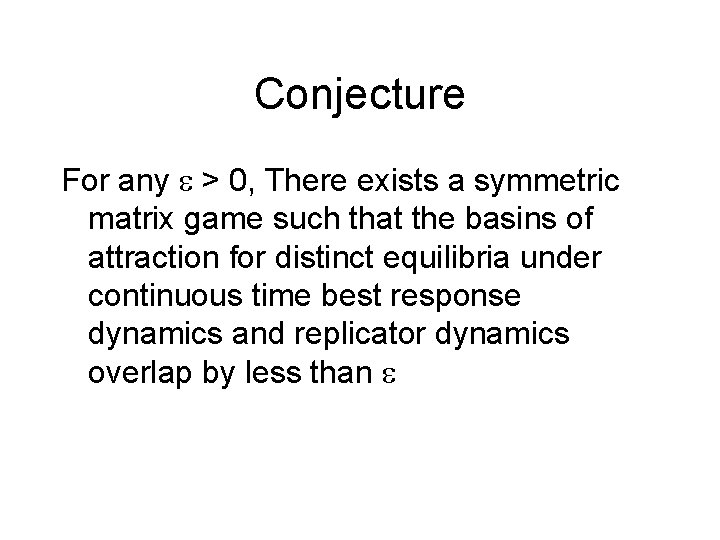

Conjecture: For any $\varepsilon > 0$, there exists a symmetric matrix game such that the basins of attraction for distinct equilibria under continuous time best response dynamics and replicator dynamics overlap by less than $\varepsilon$.

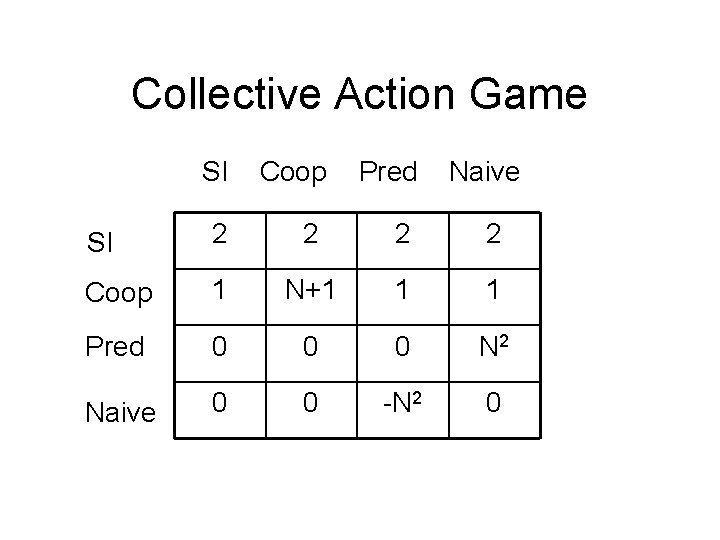

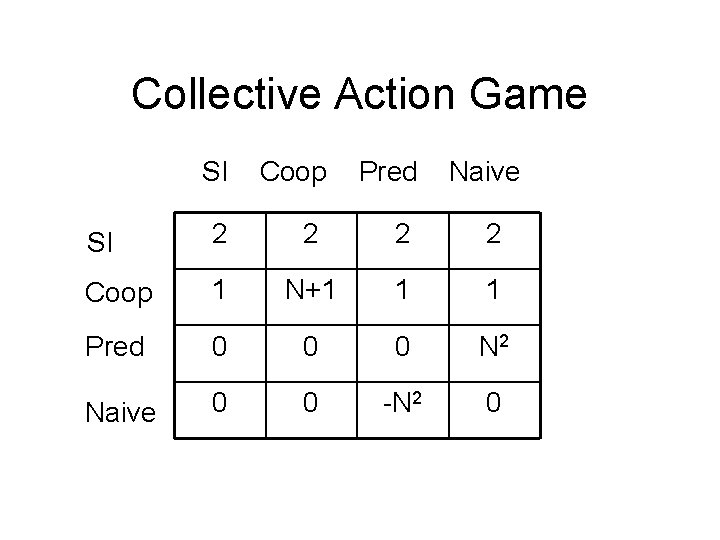

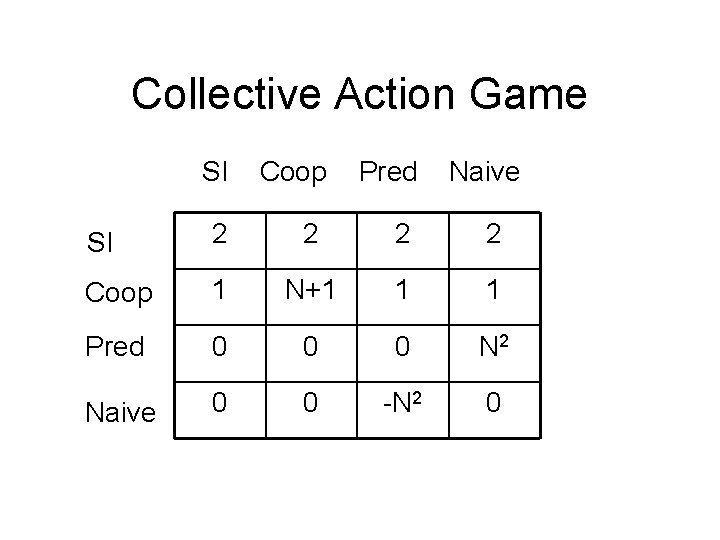

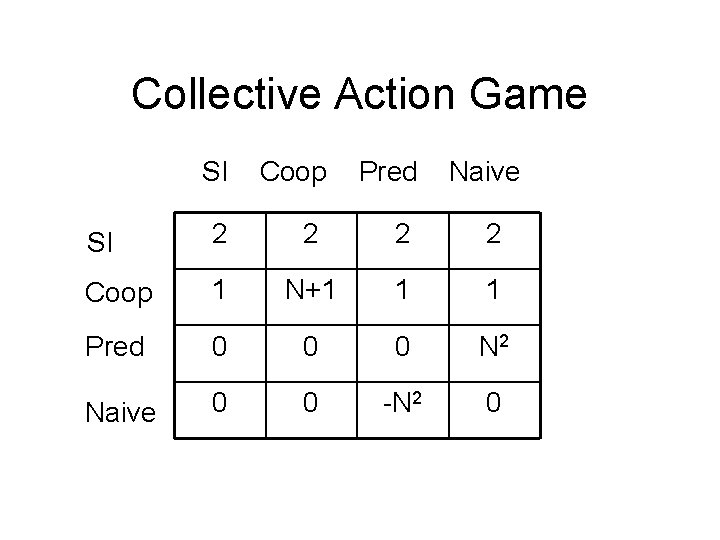

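Collective Action Game (row player's payoff)

|           | SI | Coop  | Pred   | Naive |
|-----------|----|-------|--------|-------|
| **SI**    | 2  | 2     | 2      | 2     |
| **Coop**  | 1  | N + 1 | 1      | 1     |
| **Pred**  | 0  | 0     | 0      | $N^2$ |
| **Naive** | 0  | 0     | $-N^2$ | 0     |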
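A small sketch of this game as a function of N. The matrix below is an assumed reading of the slide: in particular, the SI row is taken to pay 2 against every action, which matches the average-payoff term $2x_S$ used later in the deck. The function names are hypothetical.

```python
ACTIONS = ["SI", "Coop", "Pred", "Naive"]


def collective_action_matrix(n):
    """Row player's payoffs (assumed reading of the slide), rows and columns in ACTIONS order."""
    return [
        [2, 2, 2, 2],          # SI: always 2
        [1, n + 1, 1, 1],      # Coop: N + 1 against Coop, otherwise 1
        [0, 0, 0, n ** 2],     # Pred: N^2 against Naive
        [0, 0, -n ** 2, 0],    # Naive: -N^2 against Pred
    ]


def symmetric_pure_equilibria(matrix):
    """Actions that are a best reply to a population playing that same action."""
    result = []
    for j, name in enumerate(ACTIONS):
        column = [row[j] for row in matrix]
        if matrix[j][j] == max(column):
            result.append(name)
    return result


def best_reply(matrix, mix):
    """Best reply to a population mix over ACTIONS."""
    pay = [sum(a * share for a, share in zip(row, mix)) for row in matrix]
    return ACTIONS[pay.index(max(pay))]


game = collective_action_matrix(10)
print(symmetric_pure_equilibria(game))             # ['SI', 'Coop']
print(best_reply(game, (0.25, 0.25, 0.25, 0.25)))  # Pred
```

With N = 10, Pred is the best reply to a uniform population even though it is not an equilibrium, because the $N^2$ payoff against Naive dominates the expected payoffs while Naive players are still present.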

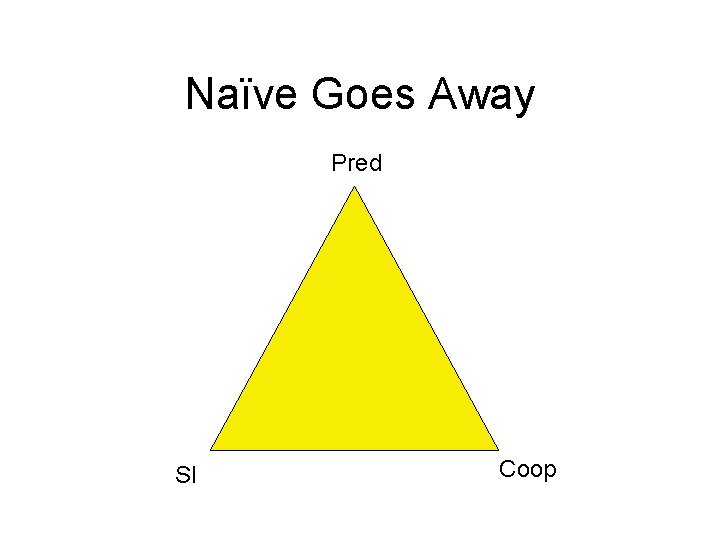

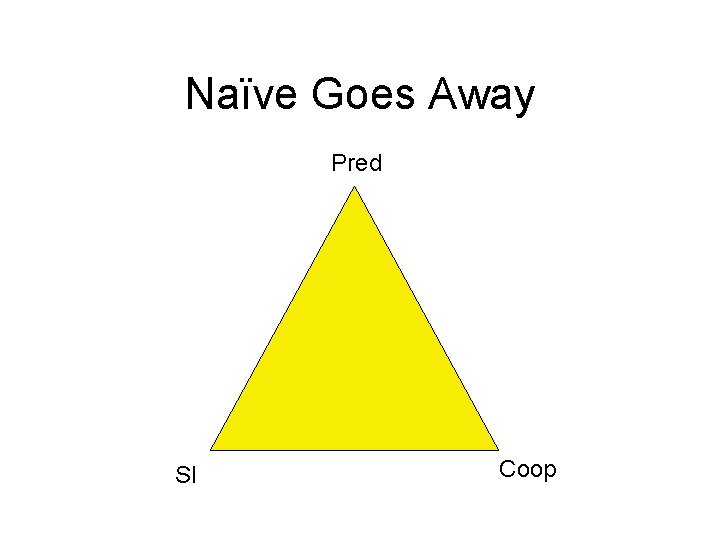

Naïve Goes Away [figure: simplex with vertices Pred, SI, Coop]

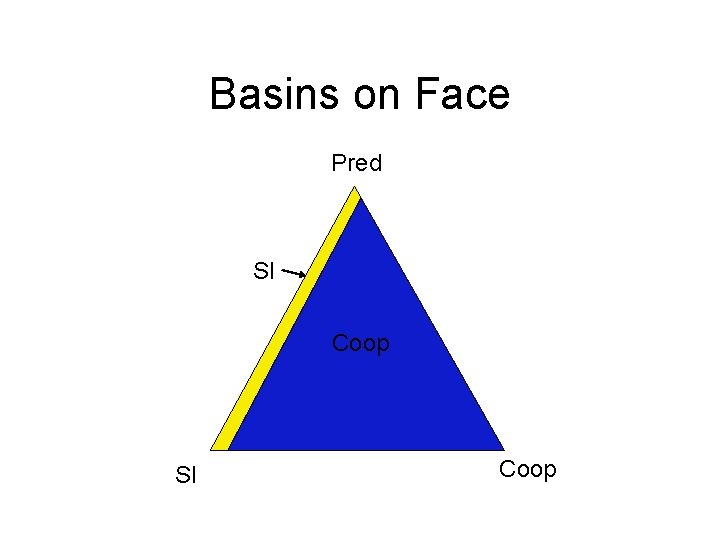

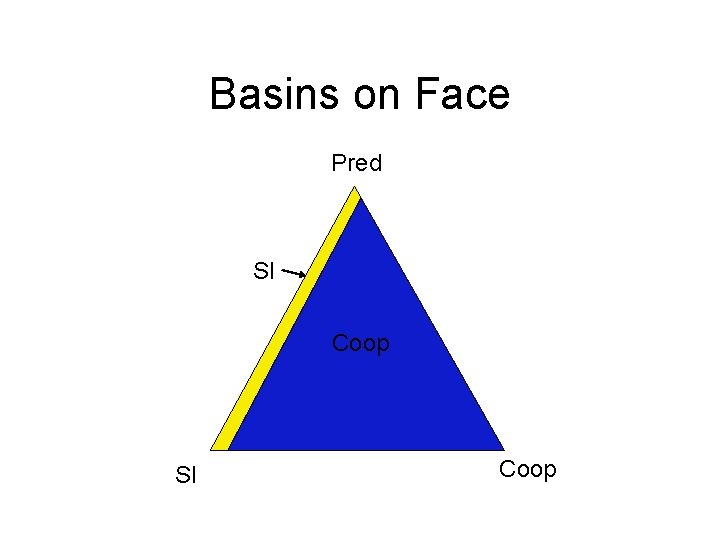

Basins on Face [figure: simplex with vertices Pred, SI, Coop]

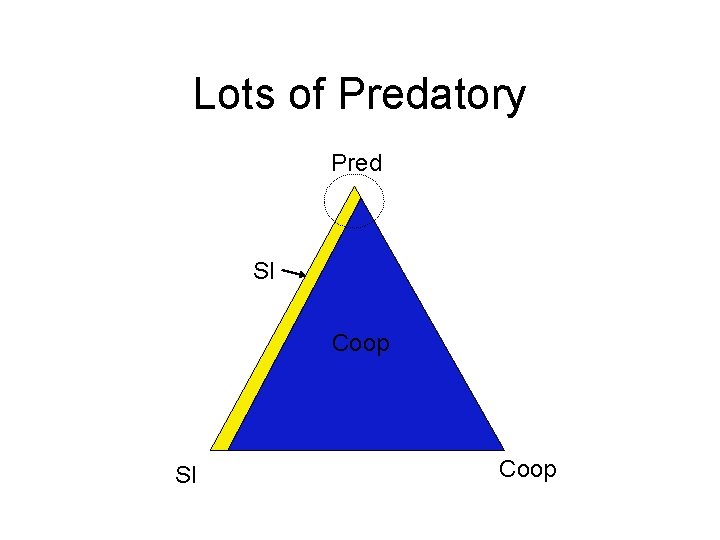

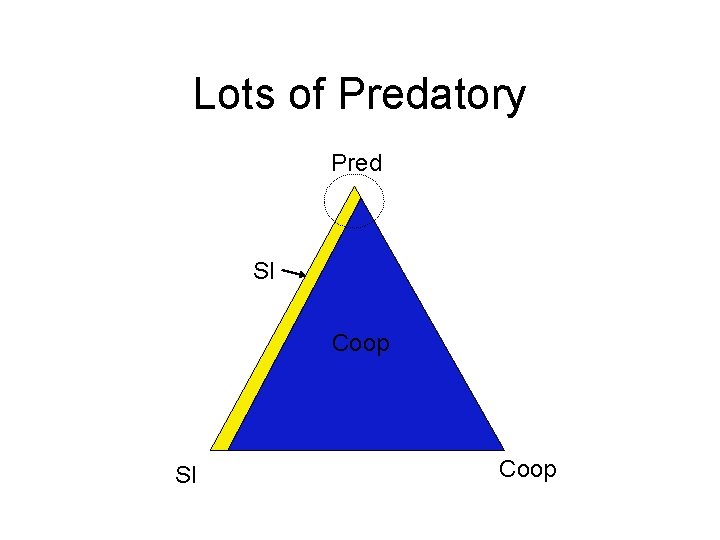

Lots of Predatory [figure: simplex with vertices Pred, SI, Coop]

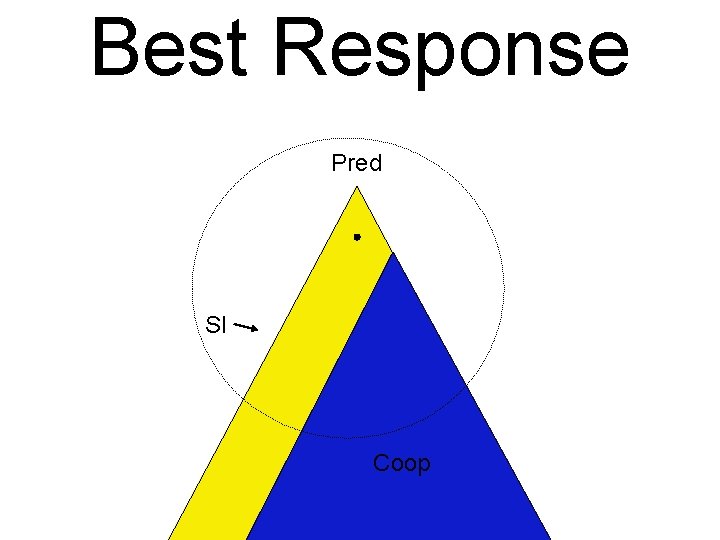

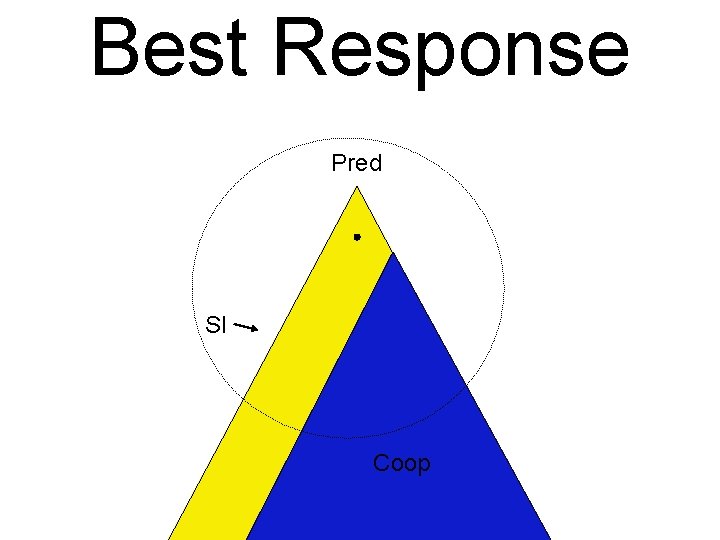

Best Response [figure: simplex with vertices Pred, SI, Coop]

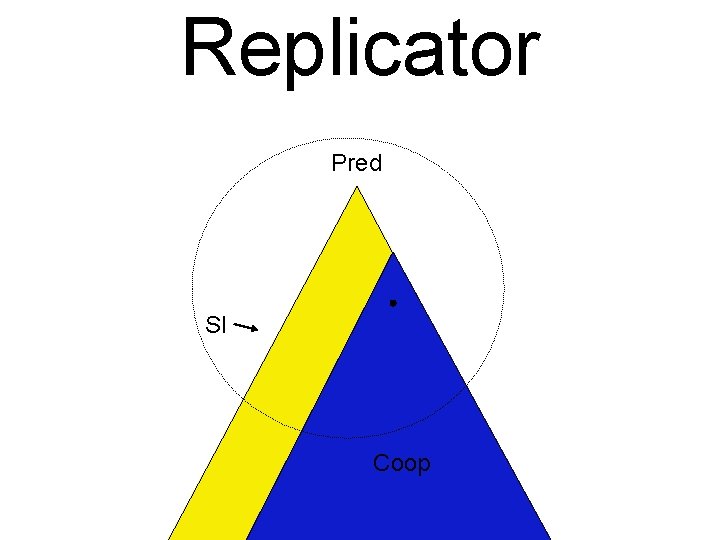

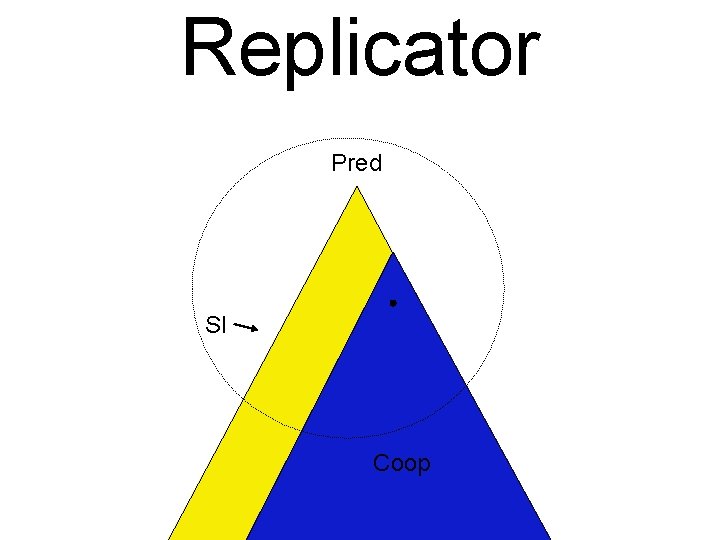

Replicator [figure: simplex with vertices Pred, SI, Coop]

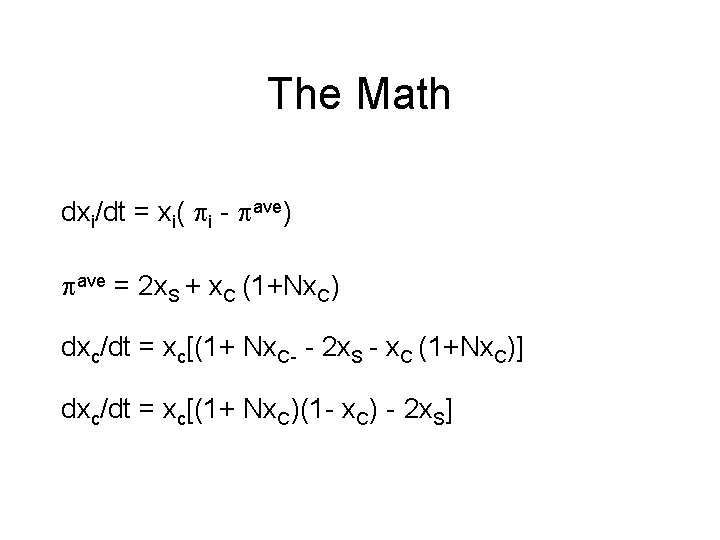

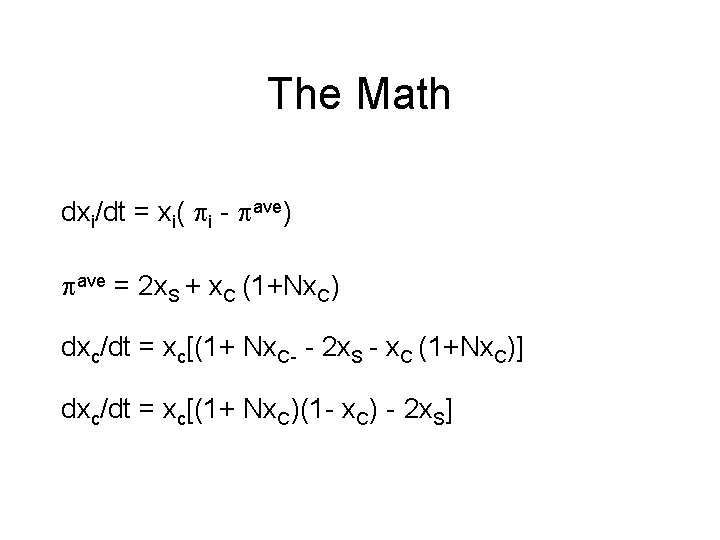

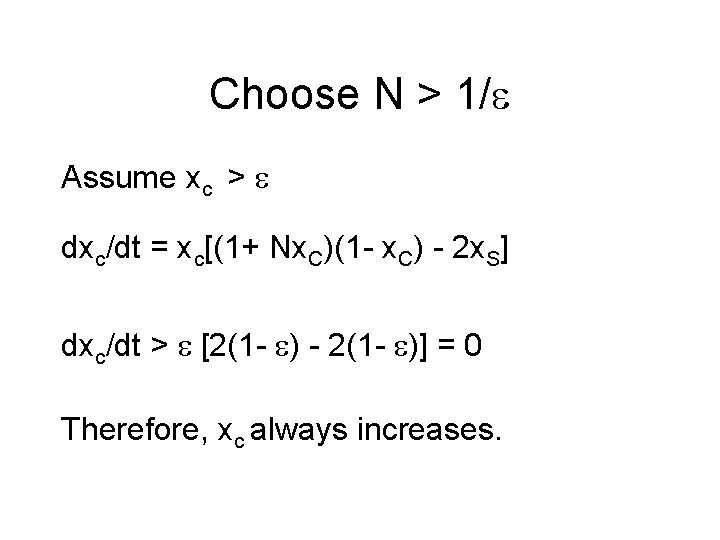

The Math

$$\frac{dx_i}{dt} = x_i(\pi_i - \bar{\pi})$$

$$\bar{\pi} = 2x_S + x_C(1 + N x_C)$$

$$\frac{dx_C}{dt} = x_C\left[(1 + N x_C) - 2x_S - x_C(1 + N x_C)\right]$$

$$\frac{dx_C}{dt} = x_C\left[(1 + N x_C)(1 - x_C) - 2x_S\right]$$

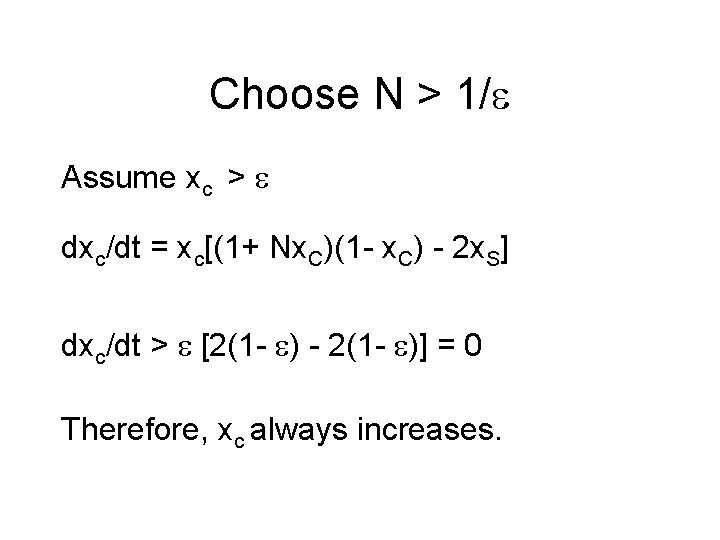

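Choose $N > 1/\varepsilon$ and assume $x_C > \varepsilon$, so that $1 + N x_C > 2$. Since $x_S \le 1 - x_C$,

$$\frac{dx_C}{dt} = x_C\left[(1 + N x_C)(1 - x_C) - 2x_S\right] > x_C\left[2(1 - x_C) - 2(1 - x_C)\right] = 0 \quad \text{for } x_C < 1.$$

Therefore, $x_C$ always increases.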
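The inequality can be spot-checked with exact rational arithmetic. This sketch evaluates the growth rate of the Coop share on a grid of points on the face without Naive. The grid resolution, the choice of 1/10 for the threshold, and the names `coop_growth` and `always_increasing` are illustrative assumptions.

```python
from fractions import Fraction


def coop_growth(x_c, x_s, n):
    """dx_C/dt = x_C * [(1 + N x_C)(1 - x_C) - 2 x_S] on the face without Naive."""
    return x_c * ((1 + n * x_c) * (1 - x_c) - 2 * x_s)


def always_increasing(eps, n, grid=100):
    """True if the Coop share grows at every grid point with eps < x_C < 1."""
    for k in range(1, grid):
        x_c = Fraction(k, grid)
        if x_c <= eps:
            continue
        # x_S can be anything from 0 up to the remaining mass 1 - x_C.
        for j in range(grid - k + 1):
            if coop_growth(x_c, Fraction(j, grid), n) <= 0:
                return False
    return True


eps = Fraction(1, 10)
print(always_increasing(eps, n=11))  # N > 1/eps: True
print(always_increasing(eps, n=1))   # N too small: False
```

The second call shows why the condition on N matters: with a small N, a population that is mostly SI can still drive the Coop share down.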

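Collective Action Game (row player's payoff)

|           | SI | Coop  | Pred   | Naive |
|-----------|----|-------|--------|-------|
| **SI**    | 2  | 2     | 2      | 2     |
| **Coop**  | 1  | N + 1 | 1      | 1     |
| **Pred**  | 0  | 0     | 0      | $N^2$ |
| **Naive** | 0  | 0     | $-N^2$ | 0     |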

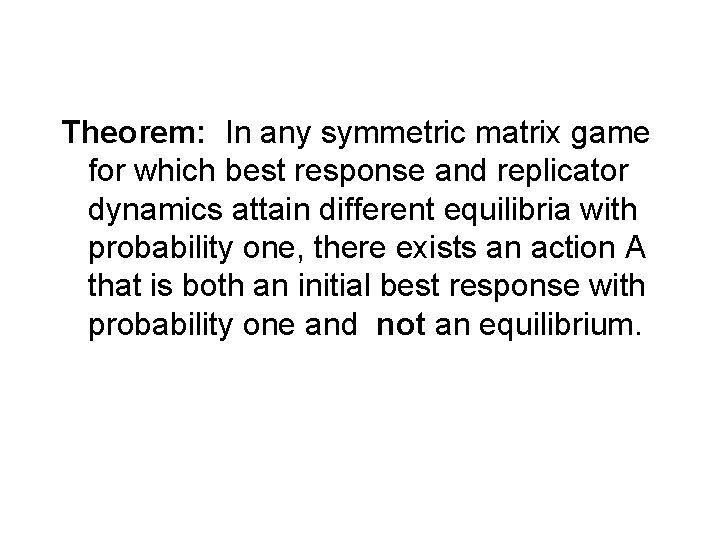

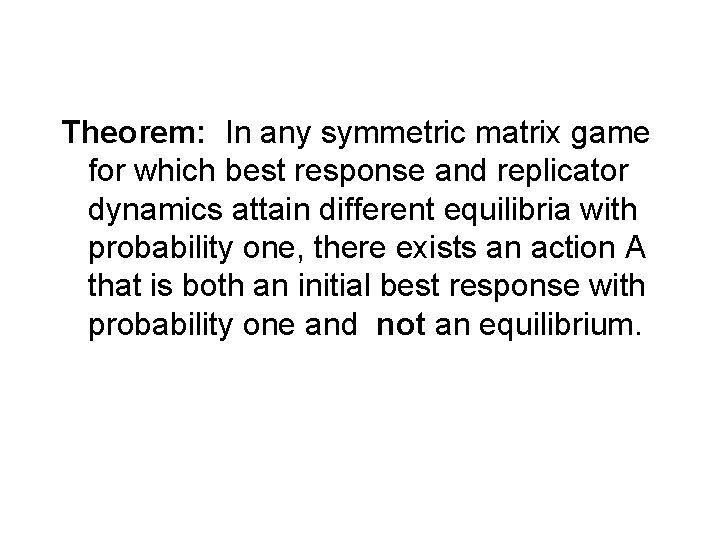

Theorem: In any symmetric matrix game for which best response and replicator dynamics attain different equilibria with probability one, there exists an action A that is both an initial best response with probability one and not an equilibrium.

Complex Systems Primitives Learning/Adaptation Diversity Interactions/Epistasis Networks/Geography

Diversity EWA (Wilcox) and Quantal Response (Golman) learning models are misspecified for heterogeneous agents. EWA = “hybrid of response and belief based learning”

Convex Combinations Suppose the population learns using the following rule: a (Best Response) + (1 - a) (Replicator)
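A sketch of what such a hybrid rule looks like as a vector field. The 3x3 payoff matrix is borrowed from the earlier symmetric game purely as an example; it is not claimed to belong to the class of games discussed next, and the function names are hypothetical.

```python
from fractions import Fraction

PAYOFF = [[60, 60, 30], [30, 70, 20], [50, 25, 25]]  # example game only


def payoffs(x):
    """Expected payoff of each action against the population mix x."""
    return [sum(a * share for a, share in zip(row, x)) for row in PAYOFF]


def best_response_field(x):
    """BR(x) - x, with BR(x) the pure best reply to the current mix."""
    pay = payoffs(x)
    best = pay.index(max(pay))
    return [(1 if i == best else 0) - xi for i, xi in enumerate(x)]


def replicator_field(x):
    """x_i * (pi_i - average payoff)."""
    pay = payoffs(x)
    average = sum(xi * pi for xi, pi in zip(x, pay))
    return [xi * (pi - average) for xi, pi in zip(x, pay)]


def hybrid_field(x, a):
    """a * (best response field) + (1 - a) * (replicator field)."""
    return [a * b + (1 - a) * r
            for b, r in zip(best_response_field(x), replicator_field(x))]


mix = [Fraction(1, 5), Fraction(3, 5), Fraction(1, 5)]
print(hybrid_field(mix, Fraction(1, 2)))
```

Both component fields sum to zero across actions, so any convex combination keeps the population on the simplex. Note that the two fields are on different scales here, since the replicator term grows with payoff magnitudes while the best response term does not.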

Claim: There exists a class of games in which Best Response, Replicator Dynamics, and any fixed convex combination select distinct equilibria with probability one.
- Best Response -> A
- Replicator -> B
- a BR + (1 - a) Rep -> C

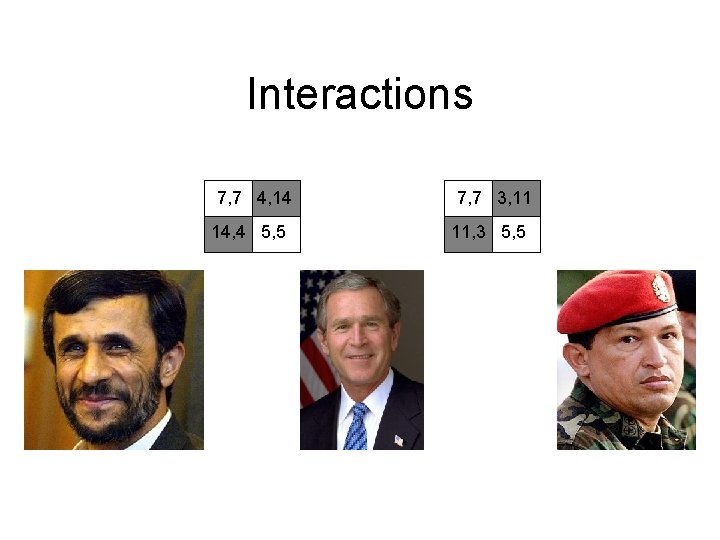

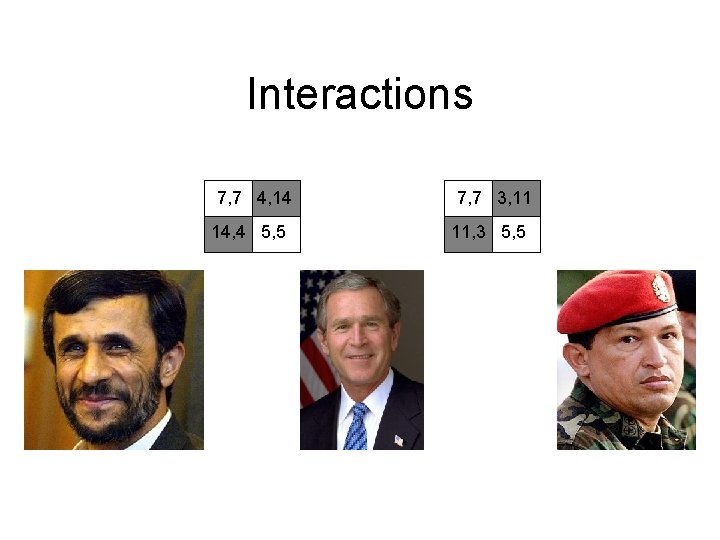

Interactions
- Game 1: top row (7, 7) and (4, 14); bottom row (14, 4) and (5, 5)
- Game 2: top row (7, 7) and (3, 11); bottom row (11, 3) and (5, 5)

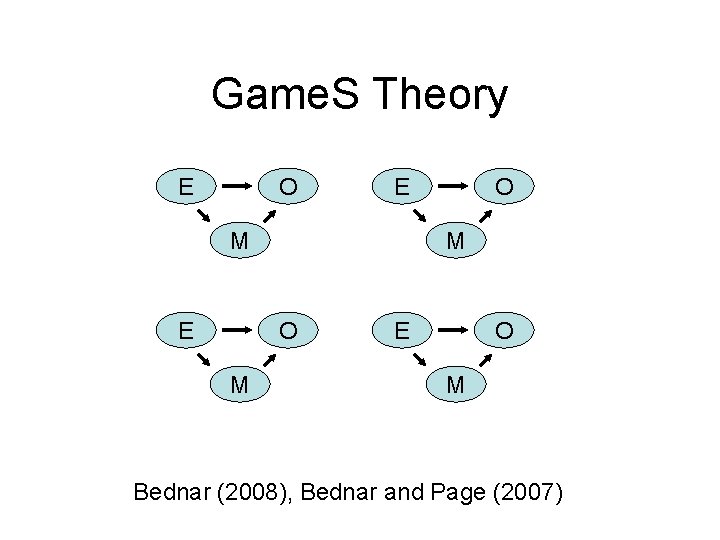

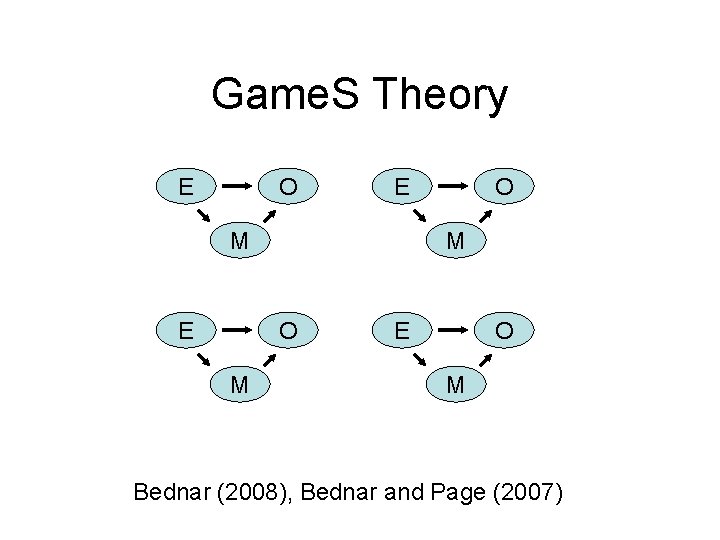

Game(s) Theory [figure: diagram with labels E, O, M] Bednar (2008), Bednar and Page (2007)

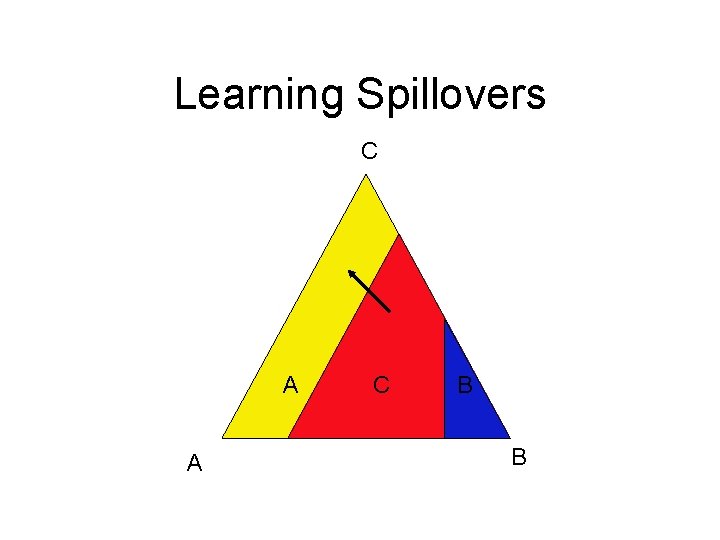

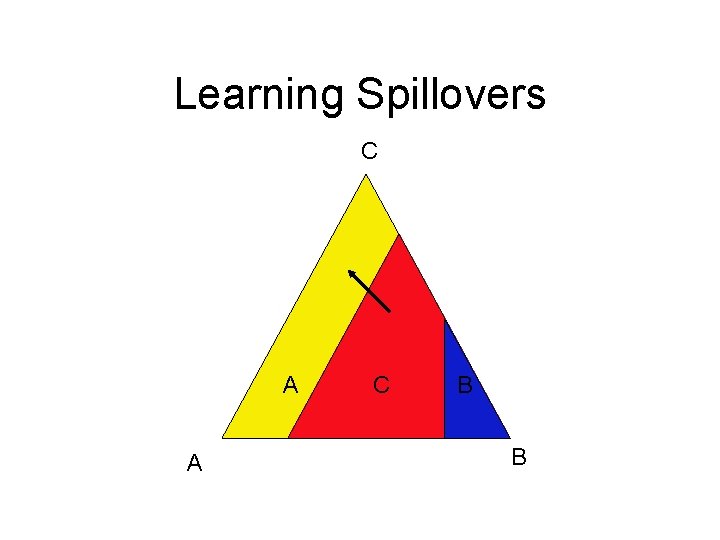

Learning Spillovers [figure: simplex with vertices A, B, C]

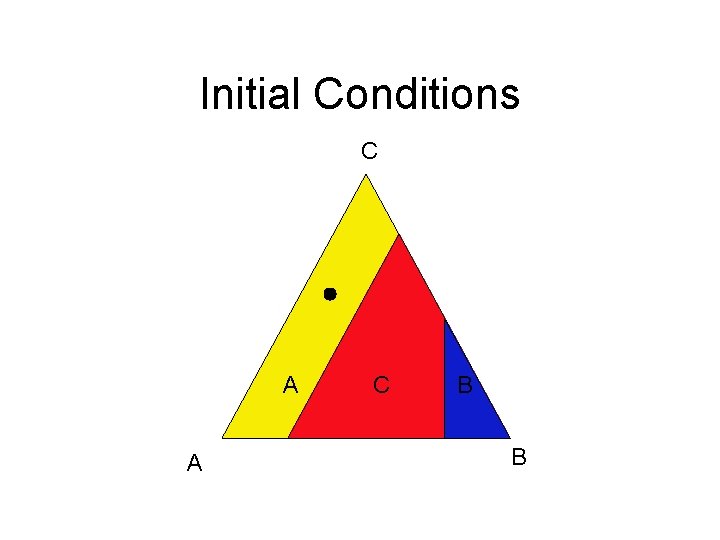

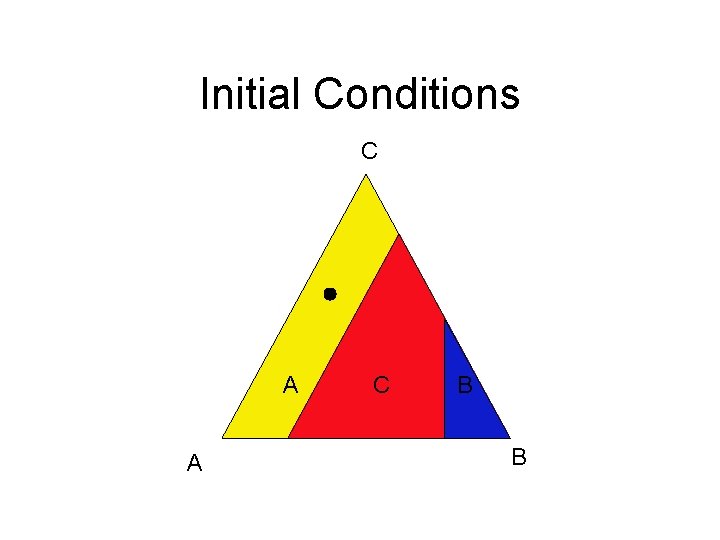

Initial Conditions [figure: simplex with vertices A, B, C]

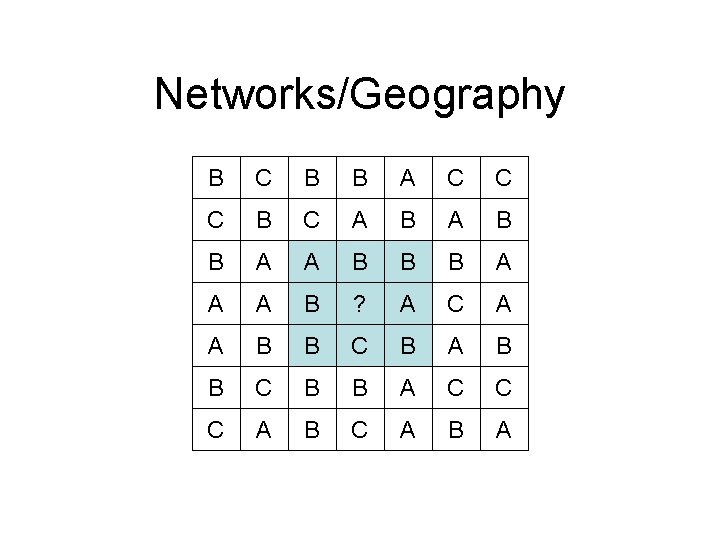

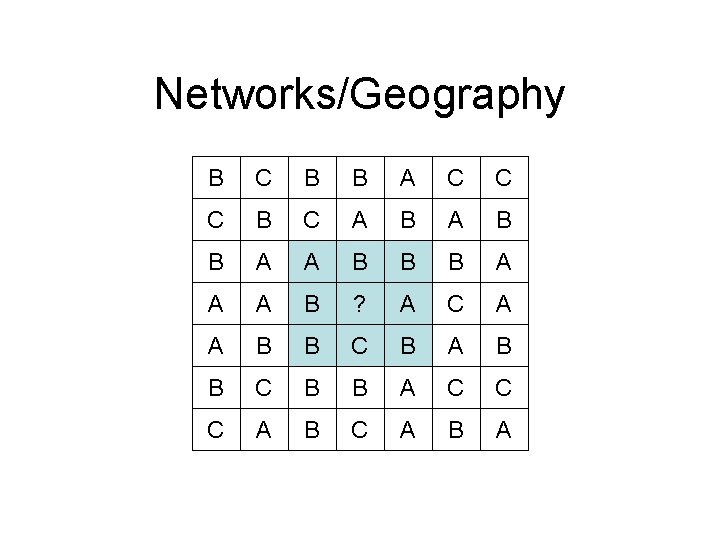

Networks/Geography [figure: grid of agents, each labeled A, B, or C, with one cell marked "?"]

Agent-based models (ABMs) complement math

Equilibrium Selection
- Learning Rule
- Spillovers
- Diversity
- Geography/Networks