Introduction to Neural Networks and Fuzzy Logic Lecture

- Slides: 20

Introduction to Neural Networks and Fuzzy Logic (NNFL), Lecture 5
Dr.-Ing. Erwin Sitompul, President University, 2018, http://zitompul.wordpress.com

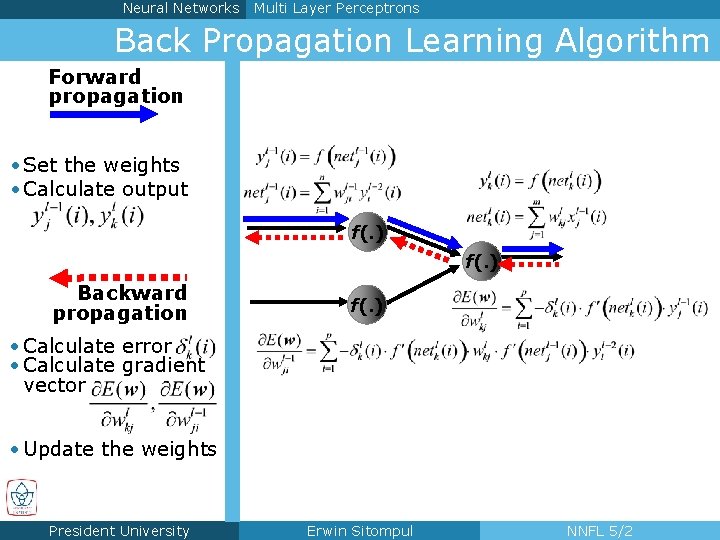

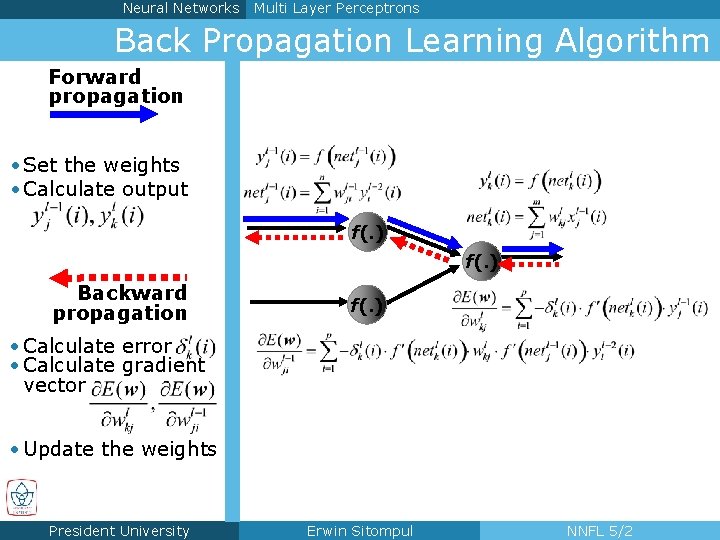

Neural Networks: Multilayer Perceptrons, Back Propagation Learning Algorithm

Forward propagation:
- Set the weights
- Calculate the output

Backward propagation:
- Calculate the error
- Calculate the gradient vector
- Update the weights
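The forward and backward steps above can be sketched for a small network. This is a minimal pure-Python sketch, assuming a 2–2–1 layout with logistic (sigmoid) hidden neurons, a linear output neuron, a bias in every neuron, the squared-error cost E = ½e² and plain per-sample gradient descent. The class name `MLP221`, the initialisation range and the learning rate are hypothetical and not taken from the slides.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class MLP221:
    """Hypothetical 2-2-1 MLP: logistic hidden neurons, linear output, biases everywhere."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Forward propagation, step 1: set the weights (small random values).
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(2)]
        self.b1 = [rng.uniform(-0.5, 0.5) for _ in range(2)]
        self.W2 = [rng.uniform(-0.5, 0.5) for _ in range(2)]
        self.b2 = rng.uniform(-0.5, 0.5)

    def forward(self, x):
        # Forward propagation, step 2: calculate the output.
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        y = sum(w * hj for w, hj in zip(self.W2, h)) + self.b2
        return h, y

    def train_step(self, x, target, eta=0.1):
        h, y = self.forward(x)
        # Backward propagation, step 1: calculate the error (E = 0.5 * e**2).
        e = y - target
        # Step 2: calculate the gradient vector with the chain rule.
        g_W2 = [e * hj for hj in h]
        g_b2 = e
        delta_h = [e * w * hj * (1.0 - hj) for w, hj in zip(self.W2, h)]  # uses the old W2
        g_W1 = [[d * xi for xi in x] for d in delta_h]
        # Step 3: update the weights by gradient descent.
        self.W2 = [w - eta * g for w, g in zip(self.W2, g_W2)]
        self.b2 -= eta * g_b2
        self.W1 = [[w - eta * g for w, g in zip(row, g_row)]
                   for row, g_row in zip(self.W1, g_W1)]
        self.b1 = [b - eta * d for b, d in zip(self.b1, delta_h)]
        return 0.5 * e * e


if __name__ == "__main__":
    # Hypothetical training set: a linear target on a 3 x 3 grid of inputs.
    data = [((x1, x2), 0.5 * x1 - 0.3 * x2)
            for x1 in (-1.0, 0.0, 1.0) for x2 in (-1.0, 0.0, 1.0)]
    net = MLP221(seed=1)
    for epoch in range(200):
        total = sum(net.train_step(x, t) for x, t in data)
    print("summed error in the last epoch:", total)
```

All hidden-layer gradients are computed before any weight is changed, so the backward pass sees the same weights the forward pass used.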

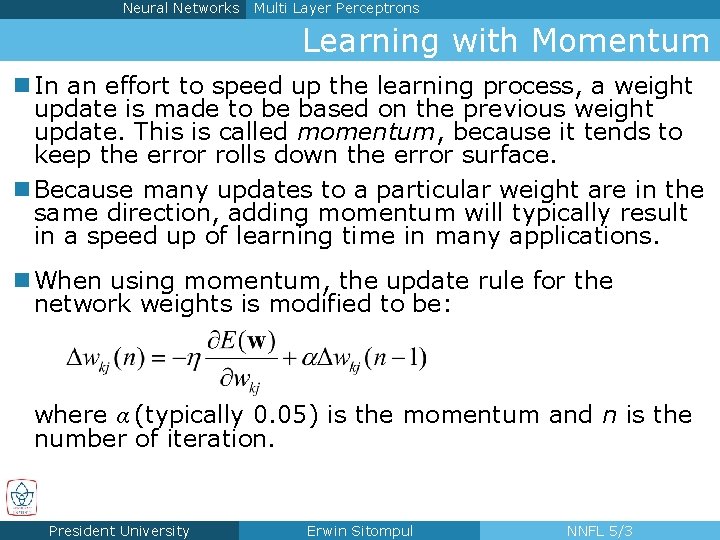

Neural Networks: Multilayer Perceptrons, Learning with Momentum

- To speed up learning, each weight update is made to depend partly on the previous weight update. This is called momentum, because it tends to keep the error rolling down the error surface.
- Because many successive updates to a particular weight point in the same direction, adding momentum typically shortens the learning time in many applications.
- When using momentum, the update rule for the network weights is modified so that the previous weight update, scaled by the momentum α (typically 0.05), is added to the current gradient step; n denotes the iteration number.
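A common way to write the momentum rule in these symbols is Δw(n) = −η ∂E/∂w(n) + α Δw(n−1), followed by w(n+1) = w(n) + Δw(n). The slide's own equation survives only as an image, so this form is an assumption. The sketch below applies it to a hypothetical quadratic error surface so that momentum can be compared with plain gradient descent; the function names are illustrative.

```python
def momentum_descent(grad_fn, w0, eta=0.05, alpha=0.05, iters=100):
    """Gradient descent with momentum: dw(n) = -eta*grad(n) + alpha*dw(n-1)."""
    w = list(w0)
    dw_prev = [0.0] * len(w)                      # no previous update at n = 0
    for _ in range(iters):
        g = grad_fn(w)
        dw = [-eta * gi + alpha * dpi for gi, dpi in zip(g, dw_prev)]
        w = [wi + di for wi, di in zip(w, dw)]    # w(n+1) = w(n) + dw(n)
        dw_prev = dw
    return w


def quad_grad(w):
    """Gradient of the hypothetical surface E = 0.5*(w0**2 + 10*w1**2)."""
    return [w[0], 10.0 * w[1]]


if __name__ == "__main__":
    plain = momentum_descent(quad_grad, [1.0, 1.0], alpha=0.0)
    with_momentum = momentum_descent(quad_grad, [1.0, 1.0], alpha=0.5)
    print("plain:   ", plain)
    print("momentum:", with_momentum)
```

The demo uses α = 0.5, larger than the typical 0.05 quoted above, only to make the effect visible: along the flat direction w0 the momentum run ends much closer to the minimum than plain gradient descent after the same 100 iterations.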

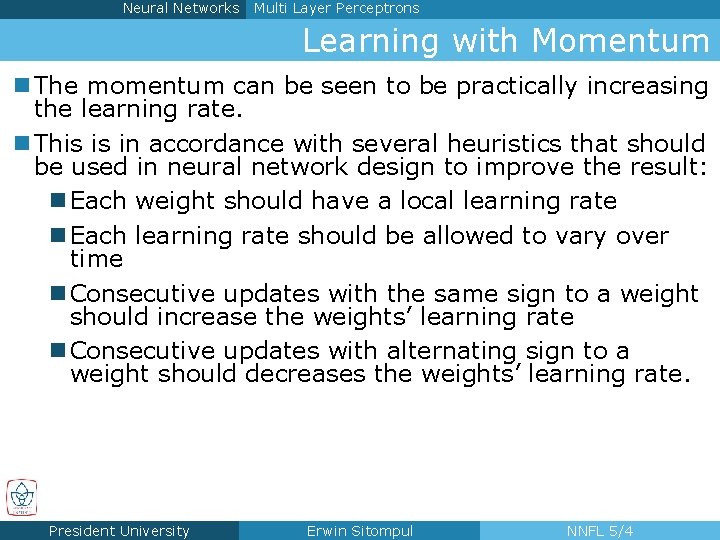

Neural Networks: Multilayer Perceptrons, Learning with Momentum

- In practice, momentum acts like an increase in the learning rate.
- This agrees with several heuristics that should be used in neural network design to improve the result:
  - Each weight should have its own local learning rate.
  - Each learning rate should be allowed to vary over time.
  - Consecutive updates with the same sign to a weight should increase that weight's learning rate.
  - Consecutive updates with alternating signs to a weight should decrease that weight's learning rate.
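The four heuristics above can be illustrated with a sign-based rule in the spirit of delta-bar-delta: every weight keeps its own learning rate, which grows when two consecutive gradients agree in sign and shrinks when they alternate. This is a minimal sketch under assumptions; the factors `up = 1.05` and `down = 0.7`, the helper names and the test surface are hypothetical, and the slides do not prescribe this exact scheme.

```python
def adapt_rates(rates, grad, prev_grad, up=1.05, down=0.7):
    """Return new per-weight learning rates from the signs of two consecutive gradients.

    Each update is -rate*grad, so comparing gradient signs is the same as
    comparing the signs of consecutive weight updates.
    """
    new_rates = []
    for r, g, gp in zip(rates, grad, prev_grad):
        if g * gp > 0:        # same sign twice in a row: increase this weight's rate
            r *= up
        elif g * gp < 0:      # alternating sign: decrease this weight's rate
            r *= down
        new_rates.append(r)   # zero product (e.g. the first step): keep the rate
    return new_rates


def descent_local_rates(grad_fn, w0, eta0=0.05, iters=100):
    """Gradient descent in which every weight has its own, time-varying learning rate."""
    w = list(w0)
    rates = [eta0] * len(w)
    prev = [0.0] * len(w)
    for _ in range(iters):
        g = grad_fn(w)
        rates = adapt_rates(rates, g, prev)
        w = [wi - ri * gi for wi, ri, gi in zip(w, rates, g)]
        prev = g
    return w, rates


if __name__ == "__main__":
    # Hypothetical surface E = 0.5*(w0**2 + 10*w1**2): flat along w0, steep along w1.
    w, rates = descent_local_rates(lambda w: [w[0], 10.0 * w[1]], [1.0, 1.0])
    print("weights:", w)
    print("final learning rates:", rates)
```

On this surface the flat direction ends with a much larger learning rate than the steep one, which is exactly the per-weight behaviour the heuristics ask for.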

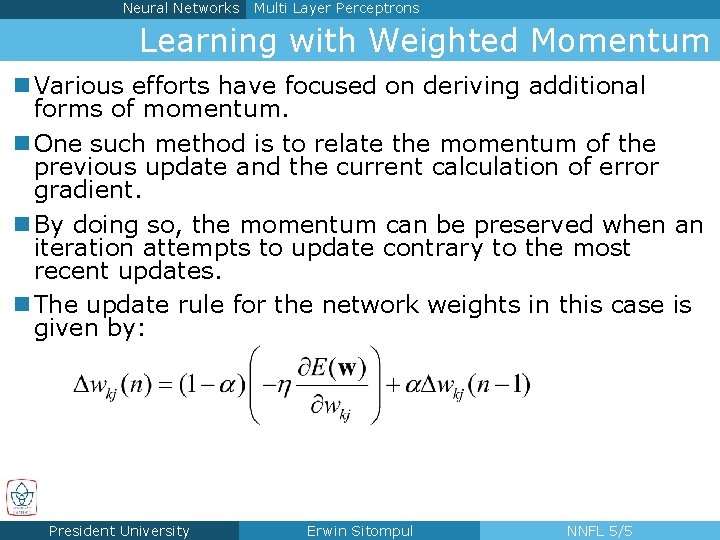

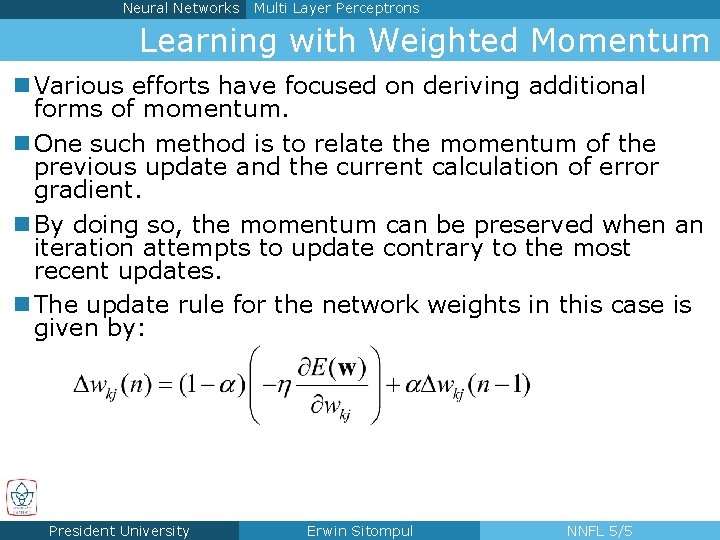

Neural Networks: Multilayer Perceptrons, Learning with Weighted Momentum

- Various efforts have focused on deriving additional forms of momentum.
- One such method relates the momentum of the previous update to the currently calculated error gradient.
- In this way, the momentum can be preserved when an iteration attempts to update in the direction opposite to the most recent updates.
- The update rule for the network weights in this case is given by:
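The weighted-momentum formula itself is only in the slide image. One common form that relates the previous update to the current gradient, assumed here, is Δw(n) = α Δw(n−1) − (1 − α) η ∂E/∂w(n). Because the gradient term is scaled by (1 − α), a single contrary gradient changes the direction of travel less than it would under plain momentum. The function names and the numbers in the demo are hypothetical.

```python
def weighted_momentum_step(w, grad, dw_prev, eta=0.1, alpha=0.9):
    """One step of the assumed weighted-momentum rule:
    dw(n) = alpha*dw(n-1) - (1 - alpha)*eta*dE/dw(n);  w(n+1) = w(n) + dw(n).
    """
    dw = [alpha * dp - (1.0 - alpha) * eta * g for dp, g in zip(dw_prev, grad)]
    return [wi + di for wi, di in zip(w, dw)], dw


def plain_momentum_step(w, grad, dw_prev, eta=0.1, alpha=0.9):
    """Plain momentum for comparison: dw(n) = alpha*dw(n-1) - eta*dE/dw(n)."""
    dw = [alpha * dp - eta * g for dp, g in zip(dw_prev, grad)]
    return [wi + di for wi, di in zip(w, dw)], dw


if __name__ == "__main__":
    # The previous update moved the weight up (+0.1); the new gradient (+1.0)
    # asks for a downward move, i.e. contrary to the most recent update.
    _, dw_weighted = weighted_momentum_step([0.0], [1.0], [0.1])
    _, dw_plain = plain_momentum_step([0.0], [1.0], [0.1])
    print(dw_weighted, dw_plain)   # weighted keeps moving up; plain reverses
```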

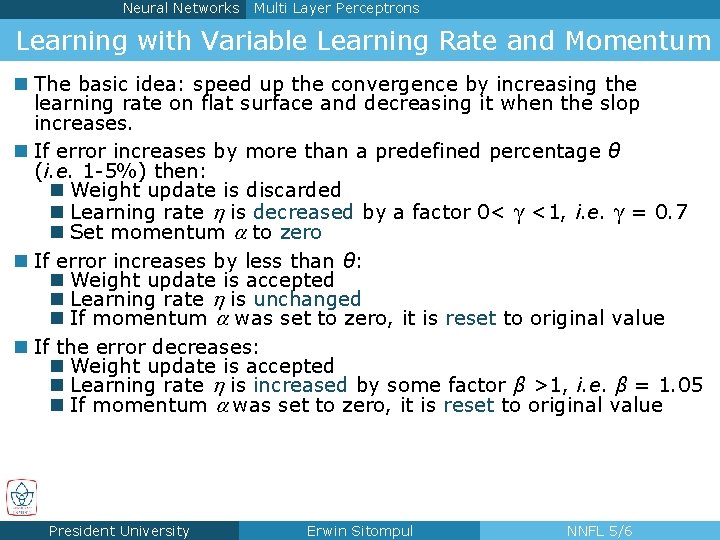

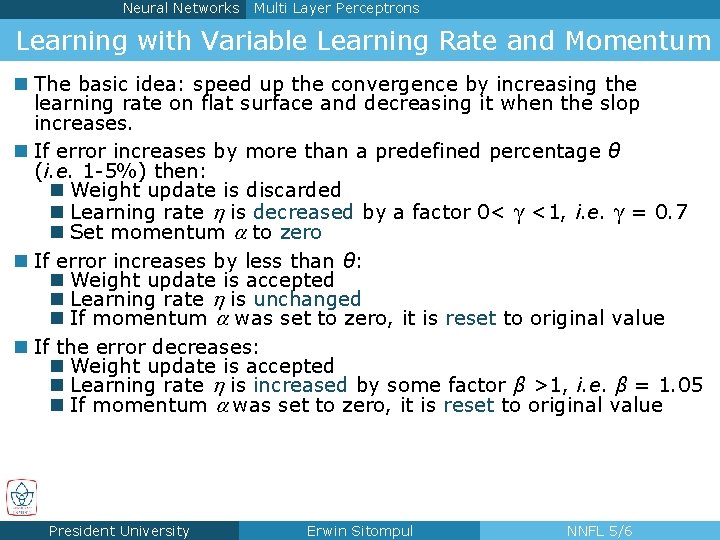

Neural Networks: Multilayer Perceptrons, Learning with Variable Learning Rate and Momentum

- The basic idea: speed up convergence by increasing the learning rate on flat parts of the error surface and decreasing it where the slope increases.
- If the error increases by more than a predefined percentage θ (e.g. 1–5%):
  - The weight update is discarded.
  - The learning rate η is decreased by a factor 0 < γ < 1, e.g. γ = 0.7.
  - The momentum α is set to zero.
- If the error increases by less than θ:
  - The weight update is accepted.
  - The learning rate η is unchanged.
  - If the momentum α was set to zero, it is reset to its original value.
- If the error decreases:
  - The weight update is accepted.
  - The learning rate η is increased by some factor β > 1, e.g. β = 1.05.
  - If the momentum α was set to zero, it is reset to its original value.
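The three cases above translate directly into a training loop. The sketch below is one possible reading, assuming batch gradient descent with momentum on a generic error function; γ = 0.7, β = 1.05 and α = 0.05 follow the example values on the slides, θ = 0.04 is picked from the quoted 1–5% range, and the function name, its arguments and the one-dimensional test surface are hypothetical.

```python
def train_variable_lr(error_fn, grad_fn, w0, eta=0.1, alpha=0.05,
                      theta=0.04, gamma=0.7, beta=1.05, iters=200):
    """Gradient descent with momentum and a variable learning rate.

    theta: allowed relative error increase (0.04 = 4 %), gamma < 1, beta > 1.
    Returns the final weights, the final error and the final learning rate.
    """
    w = list(w0)
    E = error_fn(w)
    dw_prev = [0.0] * len(w)
    alpha_now = alpha
    for _ in range(iters):
        g = grad_fn(w)
        dw = [-eta * gi + alpha_now * dpi for gi, dpi in zip(g, dw_prev)]
        w_new = [wi + di for wi, di in zip(w, dw)]
        E_new = error_fn(w_new)
        if E_new > E * (1.0 + theta):
            # Error grew by more than theta: discard the update,
            # shrink the learning rate and switch momentum off.
            eta *= gamma
            alpha_now = 0.0
        else:
            # Update accepted (small increase or decrease); momentum is restored.
            if E_new <= E:
                eta *= beta          # error did not grow: increase the learning rate
            w, E, dw_prev = w_new, E_new, dw
            alpha_now = alpha
    return w, E, eta


if __name__ == "__main__":
    # Hypothetical error surface E(w) = 0.5*w**2, started with a deliberately large eta.
    w, E, eta = train_variable_lr(lambda w: 0.5 * w[0] ** 2, lambda w: [w[0]],
                                  [1.0], eta=3.0)
    print(w, E, eta)
```

Starting with η = 3.0, the first two updates overshoot by far more than θ and are discarded, each time shrinking η by γ; the third update, with η = 1.47, lowers the error and is accepted.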

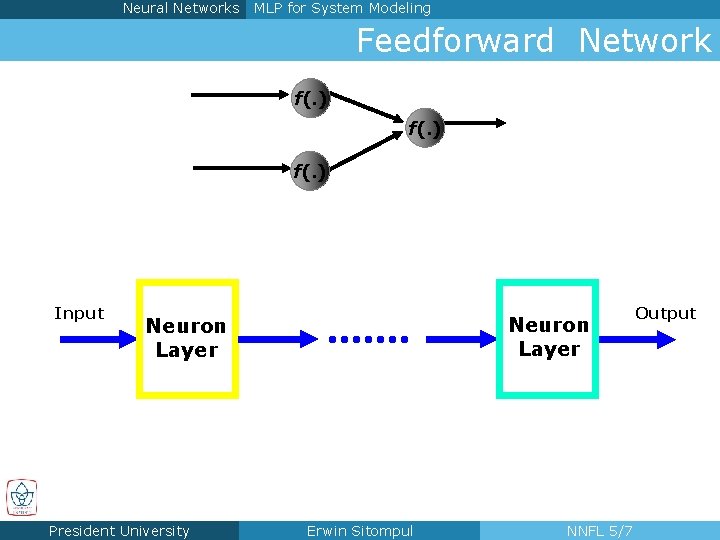

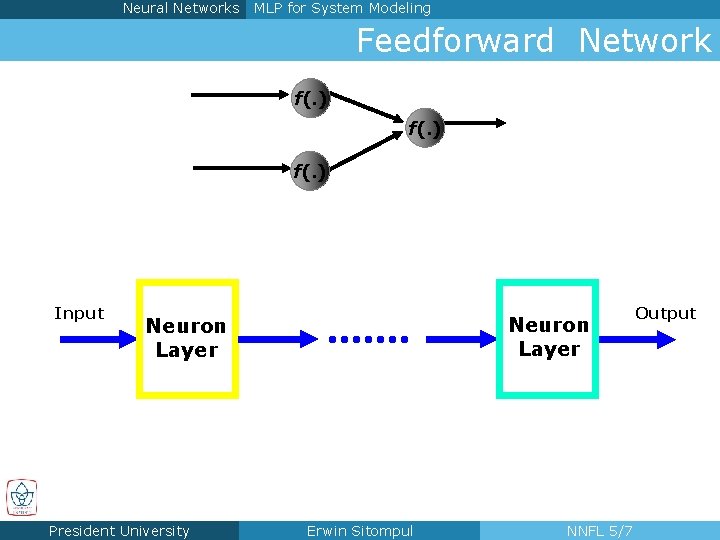

Neural Networks: MLP for System Modeling, Feedforward Network

(Figure: input, neuron layer with activation function f(·), output.)

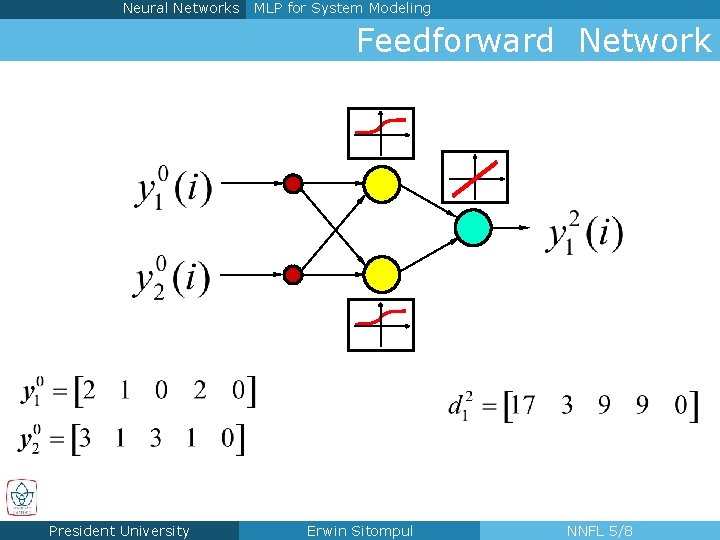

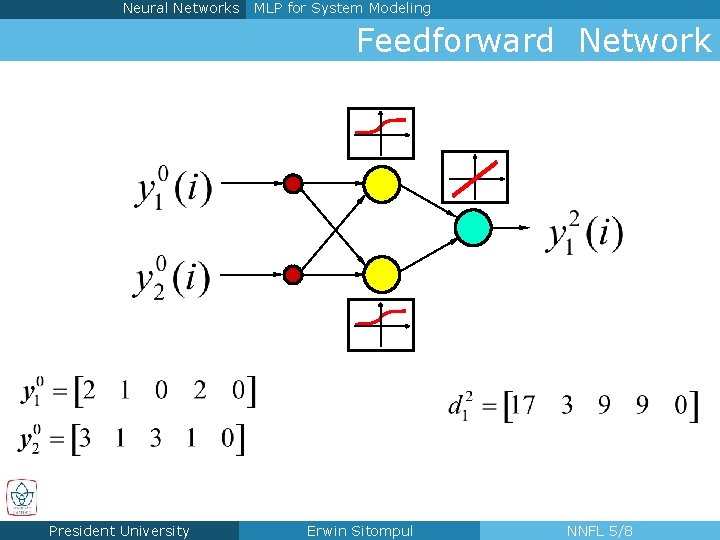

Neural Networks: MLP for System Modeling, Feedforward Network

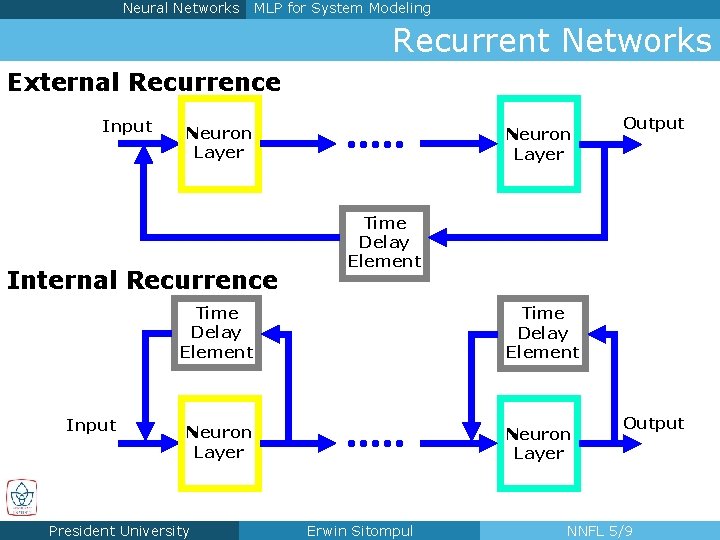

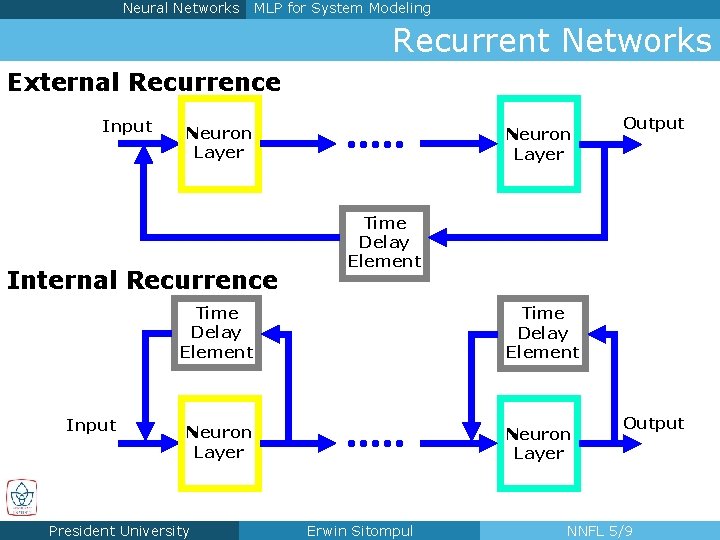

Neural Networks: MLP for System Modeling, Recurrent Networks

(Figure: two panels, External Recurrence and Internal Recurrence, built from input, neuron layer, time delay element and output blocks.)

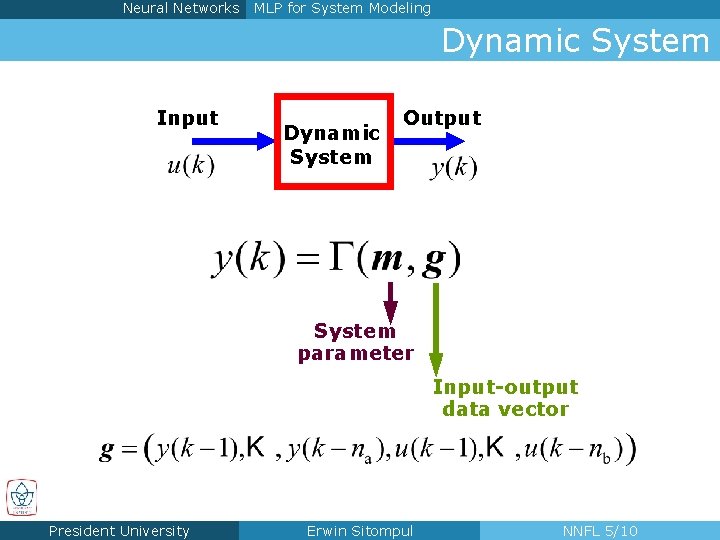

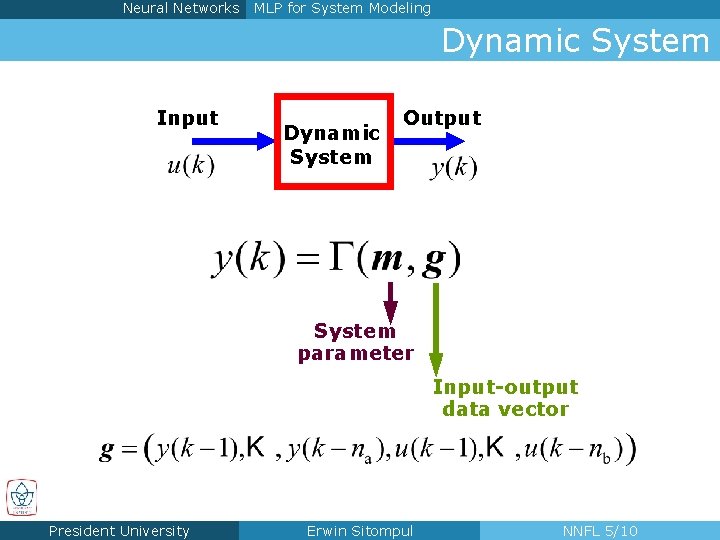

Neural Networks: MLP for System Modeling, Dynamic System

(Figure: input → dynamic system → output, with the system parameter and the input-output data vector labeled.)

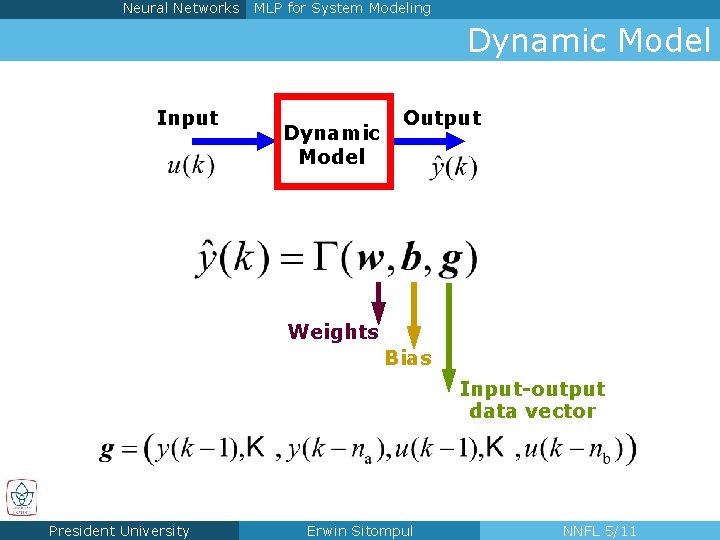

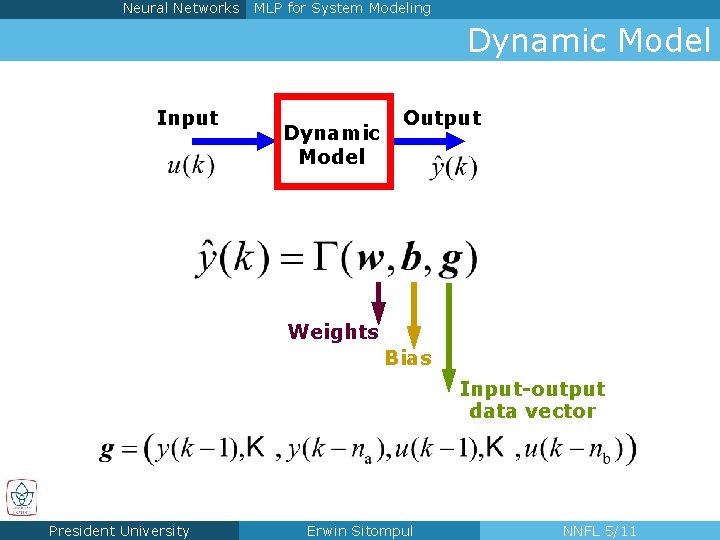

Neural Networks: MLP for System Modeling, Dynamic Model

(Figure: input → dynamic model → output, with the weights, bias and input-output data vector labeled.)

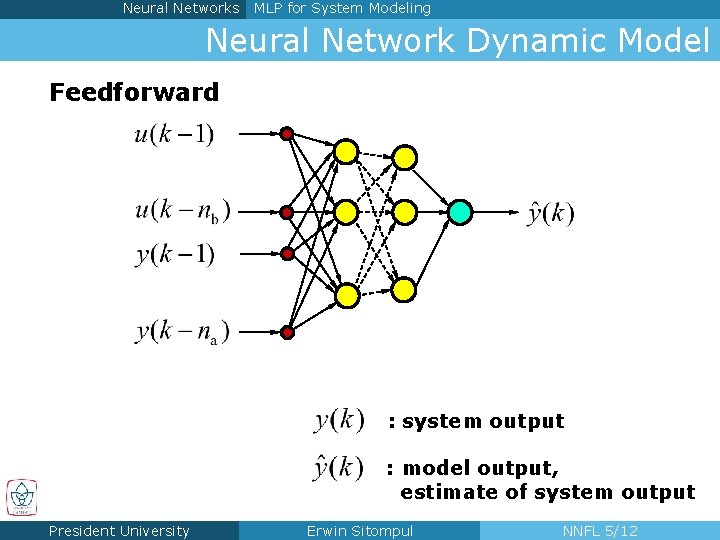

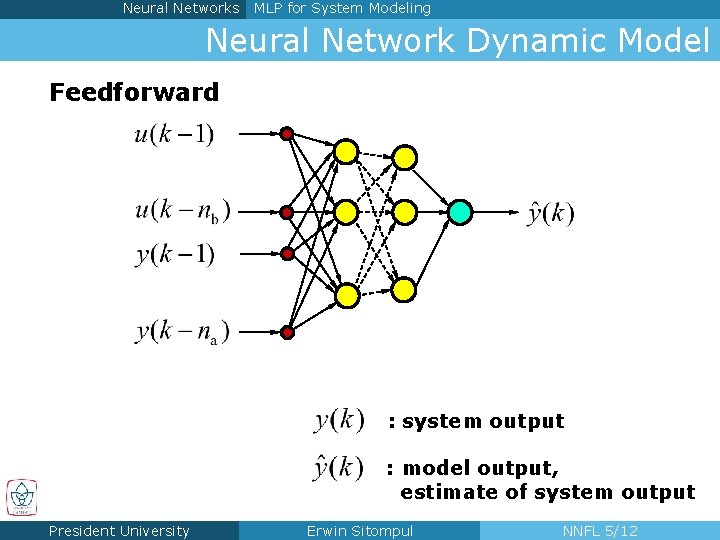

Neural Networks: MLP for System Modeling, Neural Network Dynamic Model (Feedforward)

(Figure: feedforward neural network dynamic model; the notation distinguishes the system output from the model output, which is the estimate of the system output.)

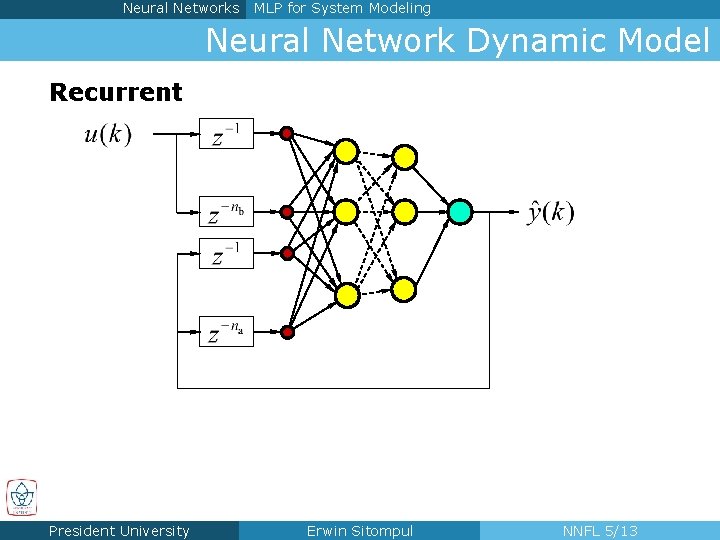

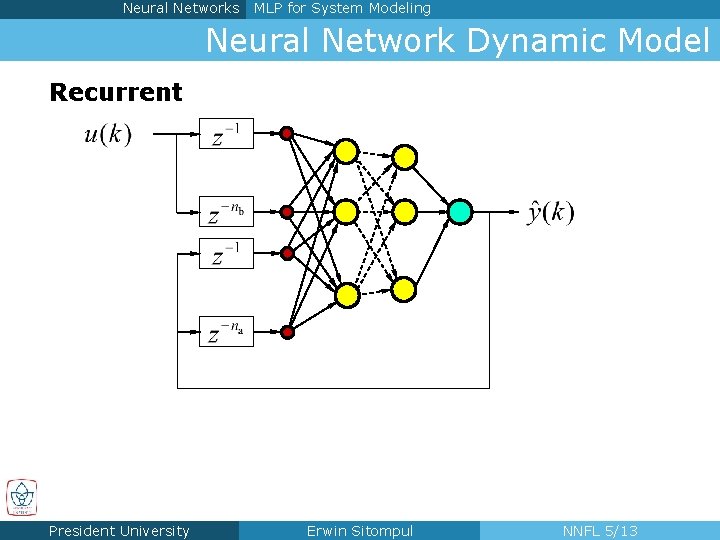

Neural Networks: MLP for System Modeling, Neural Network Dynamic Model (Recurrent)

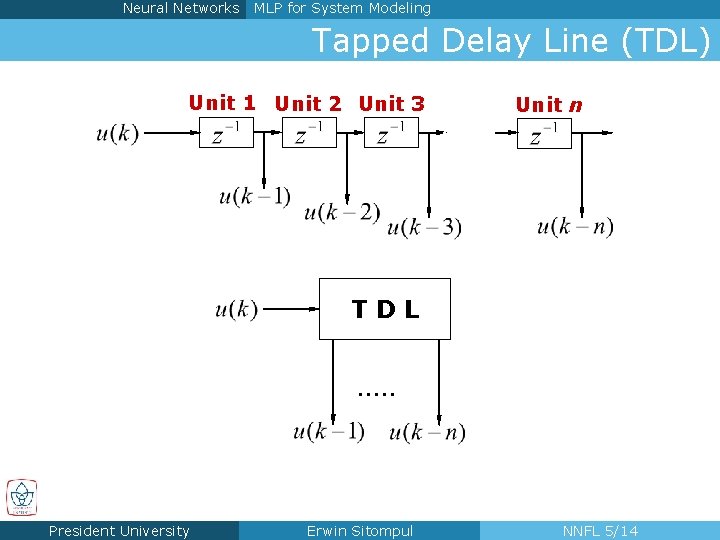

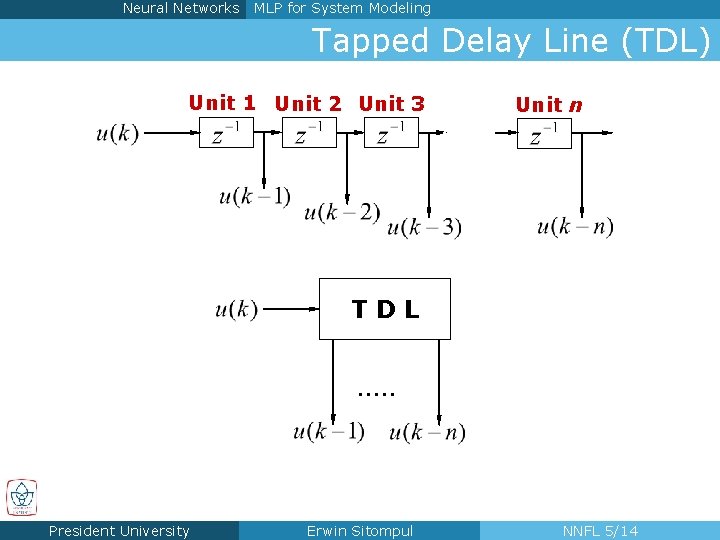

Neural Networks: MLP for System Modeling, Tapped Delay Line (TDL)

(Figure: a tapped delay line made of Unit 1, Unit 2, Unit 3, …, Unit n.)
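A tapped delay line can be modeled as a fixed-length buffer in which every unit delay is tapped. The sketch below assumes the usual reading of the figure: n unit delays in series, so the taps provide the signal delayed by 1, 2, …, n samples. The class and method names are hypothetical.

```python
from collections import deque


class TappedDelayLine:
    """Hypothetical helper: n unit delays in series, with every delay output tapped.

    After push(x(k)) the taps hold [x(k), x(k-1), ..., x(k-n+1)], so at the next
    time step they are the input delayed by 1, 2, ..., n samples.
    """

    def __init__(self, n, initial=0.0):
        self.taps = deque([initial] * n, maxlen=n)

    def push(self, x):
        # Shift by one sample: the newest value enters unit 1,
        # the oldest value drops out of unit n.
        self.taps.appendleft(x)

    def outputs(self):
        return list(self.taps)


if __name__ == "__main__":
    tdl = TappedDelayLine(3)
    for sample in [1.0, 2.0, 3.0, 4.0]:
        tdl.push(sample)
    print(tdl.outputs())   # [4.0, 3.0, 2.0]
```

`deque(maxlen=n)` discards the element at the opposite end automatically, which is exactly the behaviour of the last unit in the chain.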

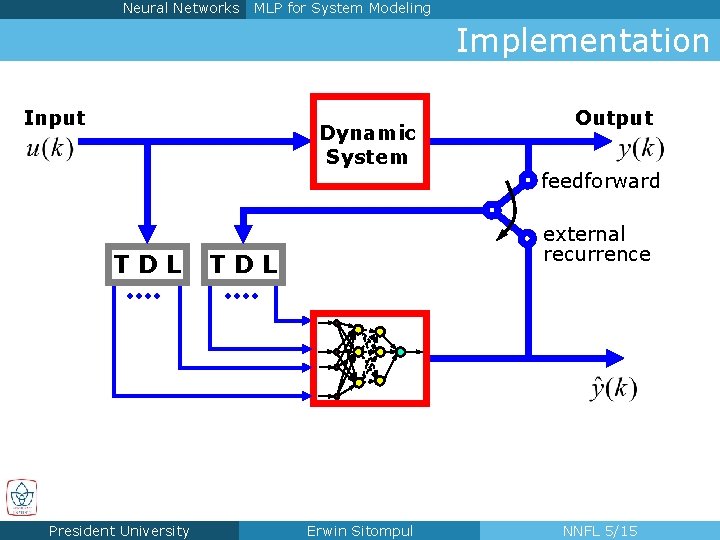

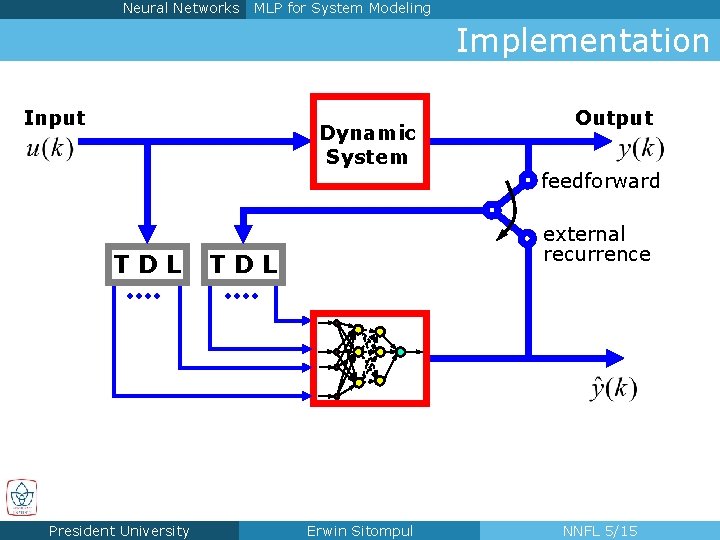

Neural Networks: MLP for System Modeling, Implementation

(Figure: the input and output of the dynamic system pass through TDLs into the network, shown for the feedforward and the external recurrence configurations.)
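The feedforward and external recurrence configurations differ only in what fills the output TDL. The sketch below assumes the common reading: in the feedforward configuration the TDL holds past measured system outputs y, while with external recurrence it holds the model's own past outputs. `model` stands for any one-step predictor, such as a trained network; the function name, the lag arguments and the first-order test system are hypothetical.

```python
def run_model(model, u, y, nu=1, ny=1, recurrent=False):
    """Run a one-step-ahead model over a data record.

    model     : callable mapping [u(k-1), ..., u(k-nu), y(k-1), ..., y(k-ny)]
                to a prediction of y(k)
    recurrent : False -> feedforward: the output TDL is filled with measured y
                True  -> external recurrence: the output TDL is filled with the
                         model's own past predictions
    """
    start = max(nu, ny)
    y_hat = list(y[:start])                  # first samples are taken from the data
    for k in range(start, len(u)):
        past_u = [u[k - i] for i in range(1, nu + 1)]
        source = y_hat if recurrent else y
        past_y = [source[k - i] for i in range(1, ny + 1)]
        y_hat.append(model(past_u + past_y))
    return y_hat


def biased_model(r):
    """Hypothetical slightly wrong model: correct dynamics plus an offset of 0.01."""
    return r[0] + 0.5 * r[1] + 0.01


if __name__ == "__main__":
    # Hypothetical first-order system y(k) = 0.5*y(k-1) + u(k-1), square-wave input.
    u = [1.0 if (k // 5) % 2 == 0 else -1.0 for k in range(30)]
    y = [0.0]
    for k in range(1, 30):
        y.append(0.5 * y[k - 1] + u[k - 1])
    ff = run_model(biased_model, u, y)
    rec = run_model(biased_model, u, y, recurrent=True)
    print(max(abs(a - b) for a, b in zip(ff, y)))    # one-step error only
    print(max(abs(a - b) for a, b in zip(rec, y)))   # errors accumulate via feedback
```

The feedforward run only ever shows the one-step error of 0.01, while the recurrent run feeds its own errors back and drifts towards twice that value, which is why a model that looks good in the feedforward configuration can still perform worse with external recurrence.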

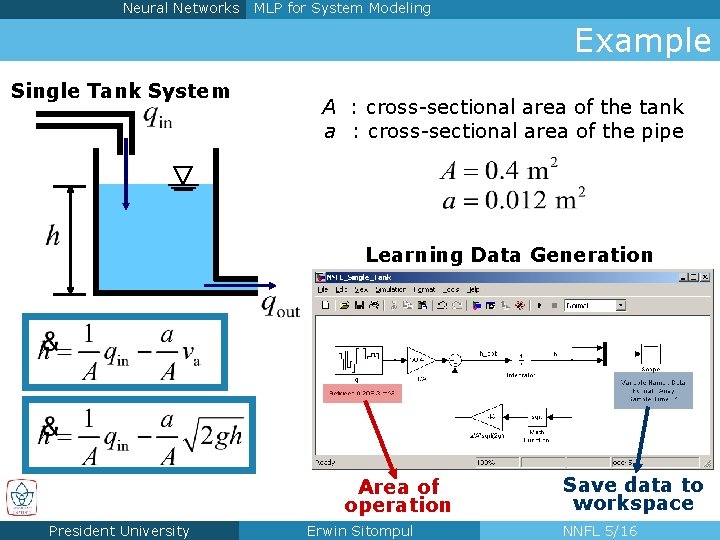

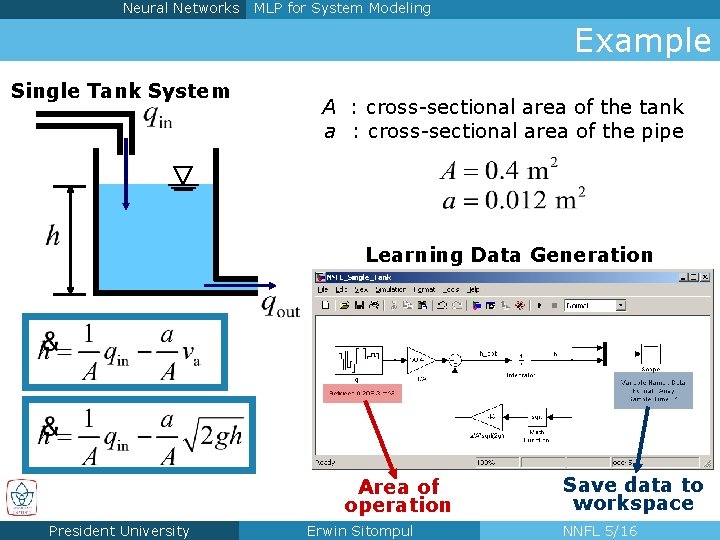

Neural Networks: MLP for System Modeling, Example: Single Tank System

- A: cross-sectional area of the tank
- a: cross-sectional area of the pipe

(Figure panels: Learning Data Generation, with the data saved to the workspace; Area of Operation.)
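The learning data generation step can also be reproduced numerically. The sketch below assumes the usual Torricelli-outflow tank model A·dh/dt = q_in(t) − a·√(2gh), where h is the liquid level and q_in the inflow. The slide's own equation and parameter values are only in the figure, so A = 1 m², a = 0.01 m², the initial level, the Euler step and the random piecewise-constant inflow are all hypothetical. Sampling every second over 200 s gives 201 samples, matching the data size stated for this example.

```python
import math
import random

G = 9.81  # gravitational acceleration in m/s^2


def simulate_tank(q_in, A=1.0, a=0.01, h0=0.5, t_end=200.0, ts=1.0, dt=0.01):
    """Euler simulation of the assumed model A*dh/dt = q_in(t) - a*sqrt(2*G*h).

    Returns (times, inputs, levels) sampled every ts seconds, including t = 0,
    so t_end = 200 s with ts = 1 s gives 201 samples.
    """
    n_sub = int(round(ts / dt))        # integration steps per sampling period
    h, t = h0, 0.0
    times, inputs, levels = [0.0], [q_in(0.0)], [h0]
    for k in range(int(round(t_end / ts))):
        for _ in range(n_sub):
            dh = (q_in(t) - a * math.sqrt(2.0 * G * h)) / A
            h = max(h + dt * dh, 0.0)  # the level cannot become negative
            t += dt
        times.append((k + 1) * ts)
        inputs.append(q_in((k + 1) * ts))
        levels.append(h)
    return times, inputs, levels


def make_random_inflow(seed=0, low=0.02, high=0.04, hold=20.0, t_end=200.0):
    """Hypothetical excitation: piecewise-constant inflow (m^3/s) changing every `hold` s."""
    rng = random.Random(seed)
    values = [rng.uniform(low, high) for _ in range(int(t_end // hold) + 1)]
    return lambda t: values[min(int(t // hold), len(values) - 1)]


if __name__ == "__main__":
    times, u, y = simulate_tank(make_random_inflow())
    print(len(times), min(y), max(y))
```

The lists `u` and `y` can then be fed through tapped delay lines to build the network's training inputs and targets.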

Neural Networks: MLP for System Modeling, Example

- Data size: 201 samples from 200 seconds of simulation
- 2–2–1 network (2 inputs, 2 hidden neurons, 1 output)

(Figure panels: Feedforward Network; External Recurrent Network.)

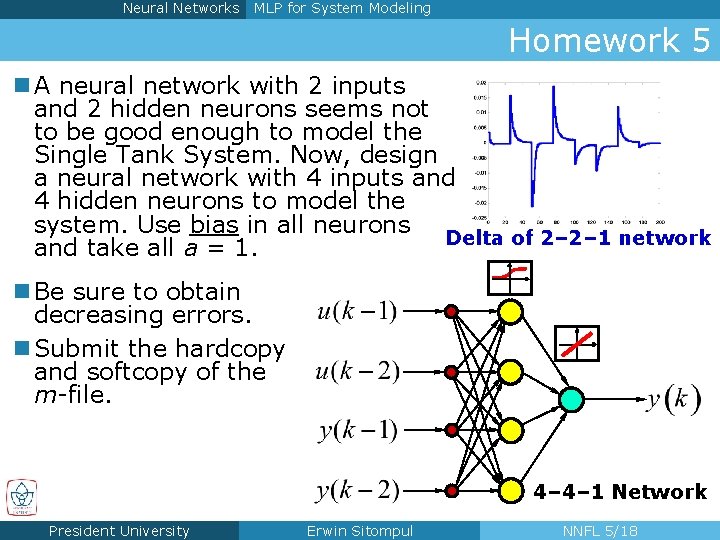

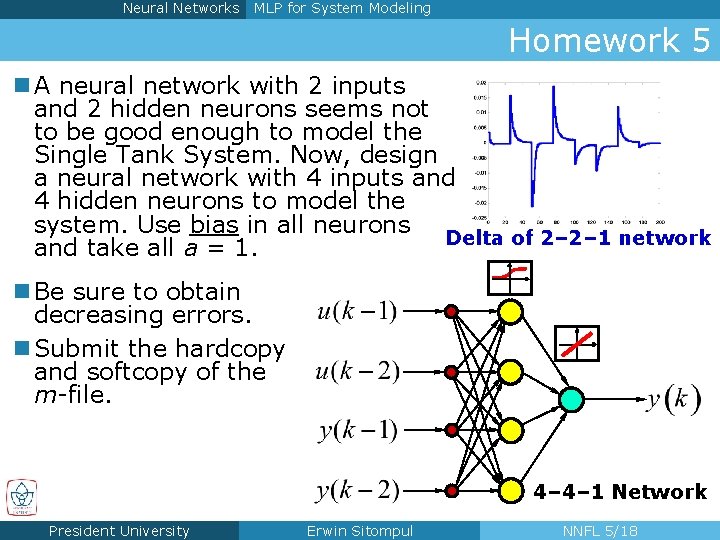

Neural Networks: MLP for System Modeling, Homework 5

- A neural network with 2 inputs and 2 hidden neurons does not seem to be good enough to model the Single Tank System. Now design a neural network with 4 inputs and 4 hidden neurons to model the system. Use a bias in all neurons and take all a = 1.
- Be sure to obtain decreasing errors.
- Submit the hardcopy and softcopy of the m-file.

(Figure panels: Delta of 2–2–1 network; 4–4–1 Network.)

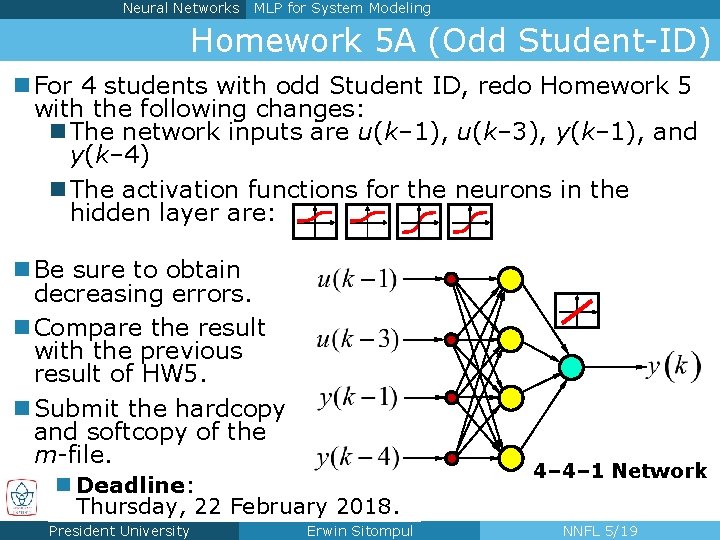

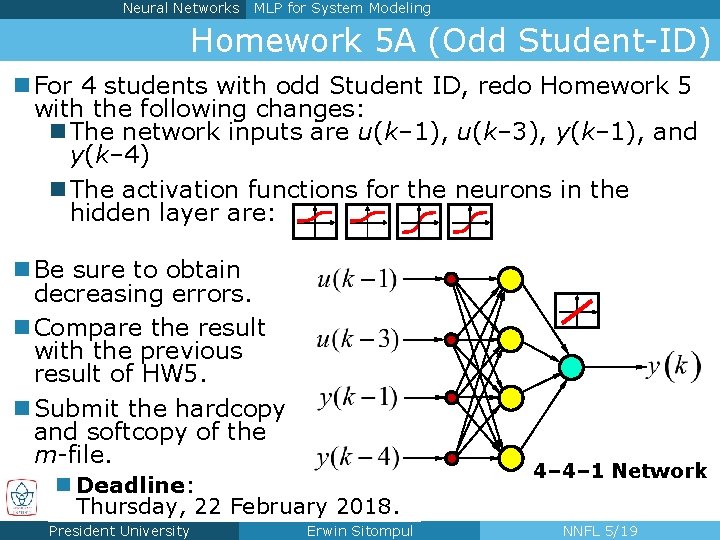

Neural Networks: MLP for System Modeling, Homework 5A (Odd Student ID)

- For 4 students with odd Student IDs, redo Homework 5 with the following changes:
  - The network inputs are u(k–1), u(k–3), y(k–1), and y(k–4).
  - The activation functions for the neurons in the hidden layer are:
- Be sure to obtain decreasing errors.
- Compare the result with the previous result of HW 5.
- Submit the hardcopy and softcopy of the m-file.
- Deadline: Thursday, 22 February 2018.

(Figure: 4–4–1 Network.)

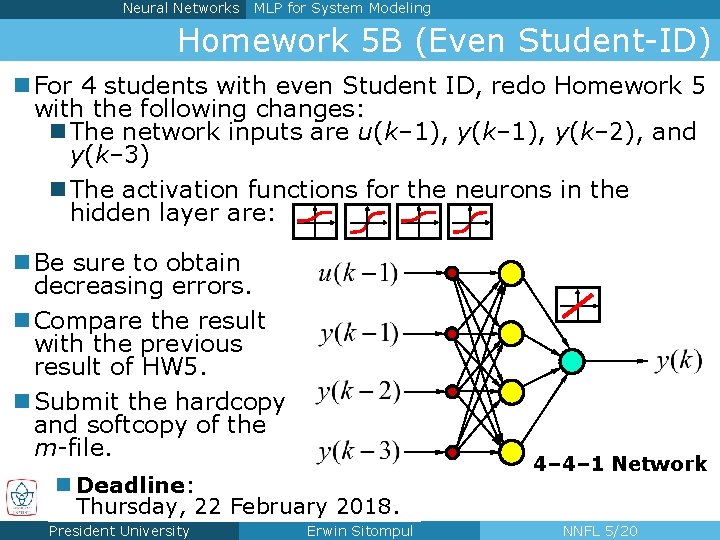

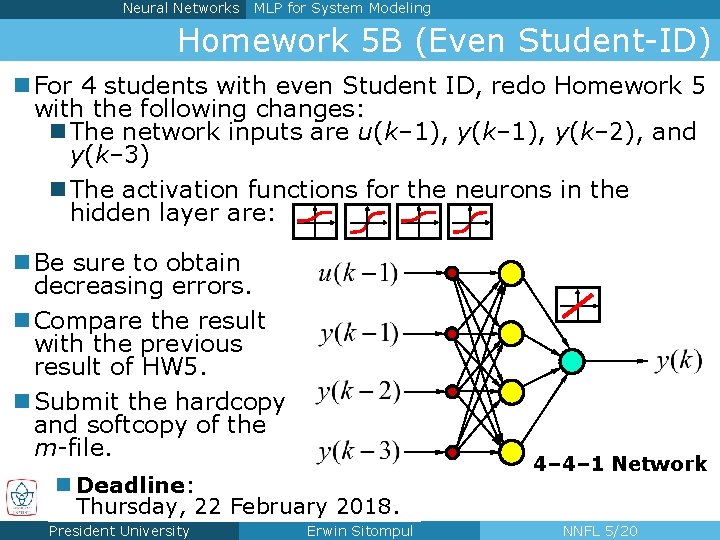

Neural Networks: MLP for System Modeling, Homework 5B (Even Student ID)

- For 4 students with even Student IDs, redo Homework 5 with the following changes:
  - The network inputs are u(k–1), y(k–2), and y(k–3).
  - The activation functions for the neurons in the hidden layer are:
- Be sure to obtain decreasing errors.
- Compare the result with the previous result of HW 5.
- Submit the hardcopy and softcopy of the m-file.
- Deadline: Thursday, 22 February 2018.

(Figure: 4–4–1 Network.)
