Introduction to Neural Networks and Fuzzy Logic Lecture


Introduction to Neural Networks and Fuzzy Logic, Lecture 2. Dr.-Ing. Erwin Sitompul, President University, http://zitompul.wordpress.com, 2017.

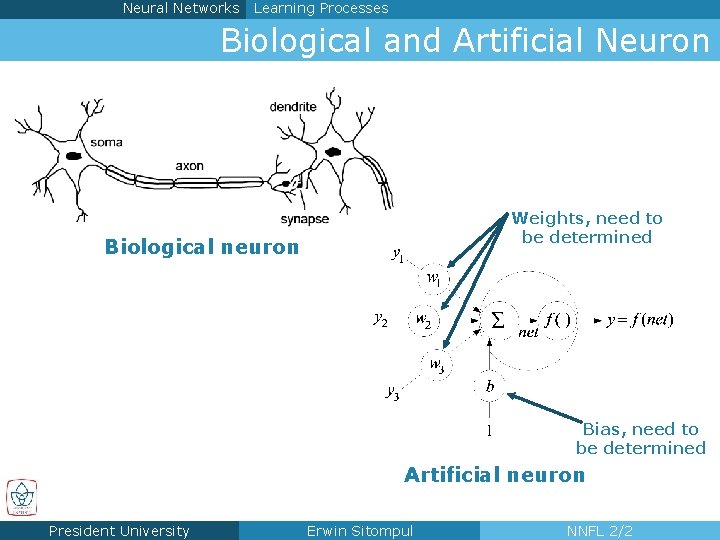

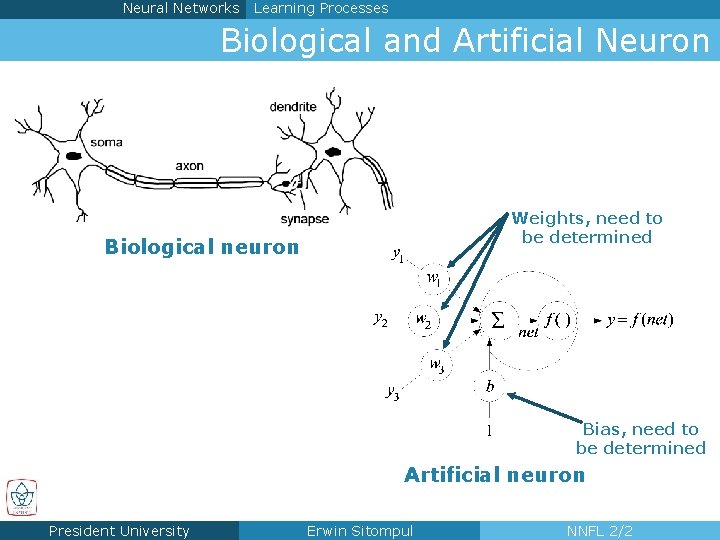

Neural Networks: Learning Processes

Biological and Artificial Neuron

[Figure: a biological neuron beside an artificial neuron. In the artificial neuron, the weights and the bias need to be determined.]

Application of Neural Networks

- Function approximation and prediction
- Pattern recognition
- Signal processing
- Modeling and control
- Machine learning

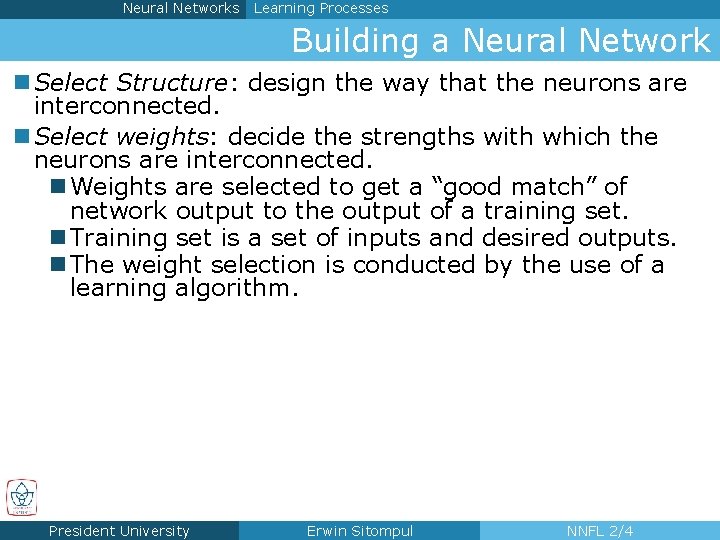

Building a Neural Network

- Select structure: design the way that the neurons are interconnected.
- Select weights: decide the strengths with which the neurons are interconnected.
- Weights are selected to get a "good match" of the network output to the output of a training set.
- A training set is a set of inputs and desired outputs.
- The weight selection is conducted by the use of a learning algorithm.

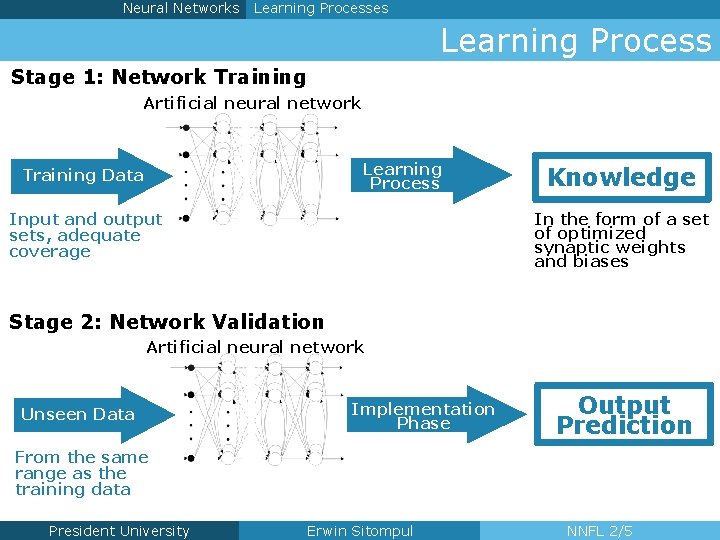

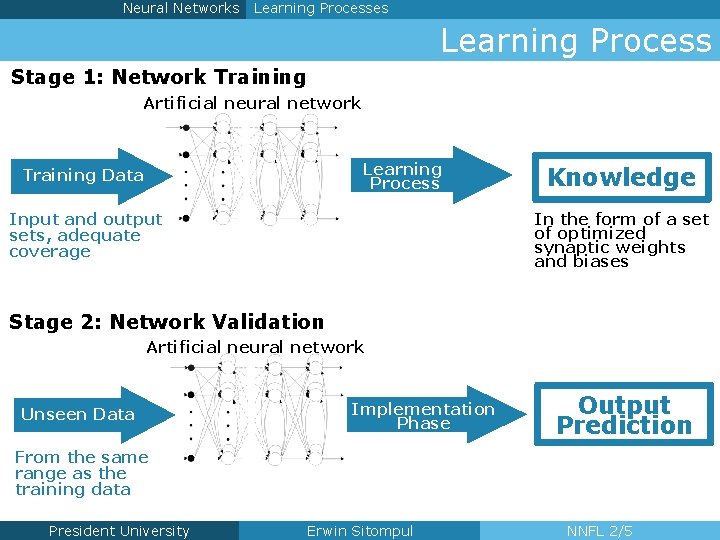

Learning Process

- Stage 1, network training: training data (input and output sets with adequate coverage) is presented to the artificial neural network. The learning process yields knowledge in the form of a set of optimized synaptic weights and biases.
- Stage 2, network validation: unseen data, from the same range as the training data, is presented to the artificial neural network. In the implementation phase, the network produces the output prediction.

![Learning process of an ANN: initialization at iteration (0)](https://slidetodoc.com/presentation_image_h2/3a6e0471b580db13a1c3dab40adccc87/image-6.jpg)

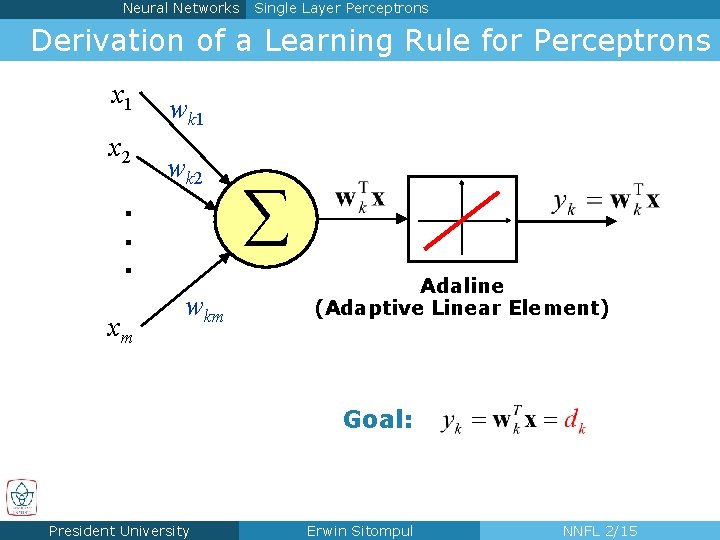

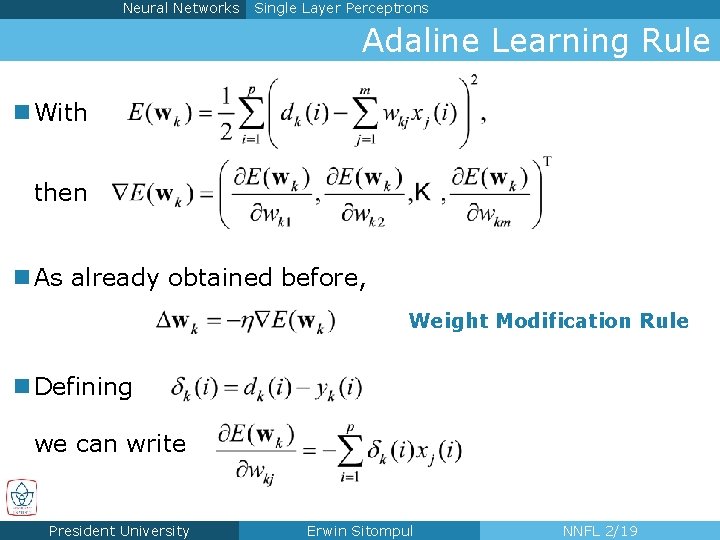

Learning Process

[Figure: the ANN receives the input $x$ at every iteration. Initialization, iteration (0): the parameters $[w, b]_0$ give the output $y(0)$. Iteration (1): $[w, b]_1$ give $y(1)$. Iteration (n): $[w, b]_n$ give $y(n) \approx d$, where $d$ is the desired output.]

- Learning is a process by which the free parameters of a neural network are adapted through a process of stimulation by the environment in which the network is embedded.
- In most cases, because the optimization surface is complex, the optimized weights and biases are obtained only as the result of a number of learning iterations.

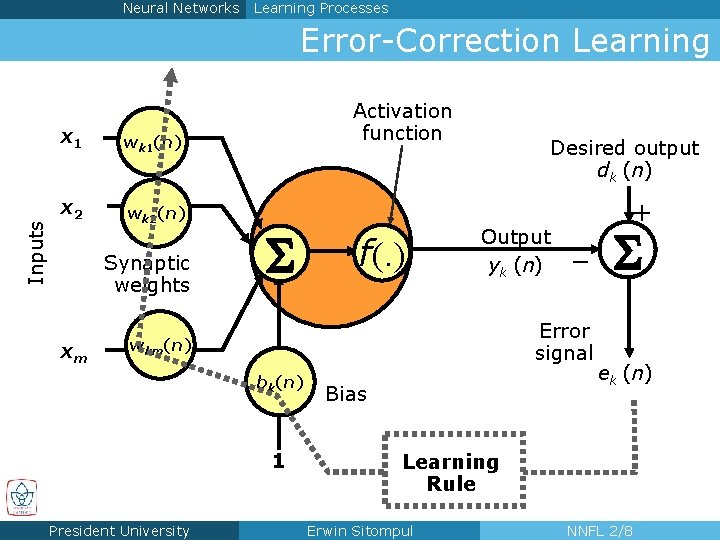

Learning Rules

- Error-correction learning
  - Delta rule or Widrow-Hoff rule
- Memory-based learning
  - Nearest neighbor rule
- Hebbian learning
  - Synchronous activation increases the synaptic strength
  - Asynchronous activation decreases the synaptic strength
- Competitive learning
- Boltzmann learning

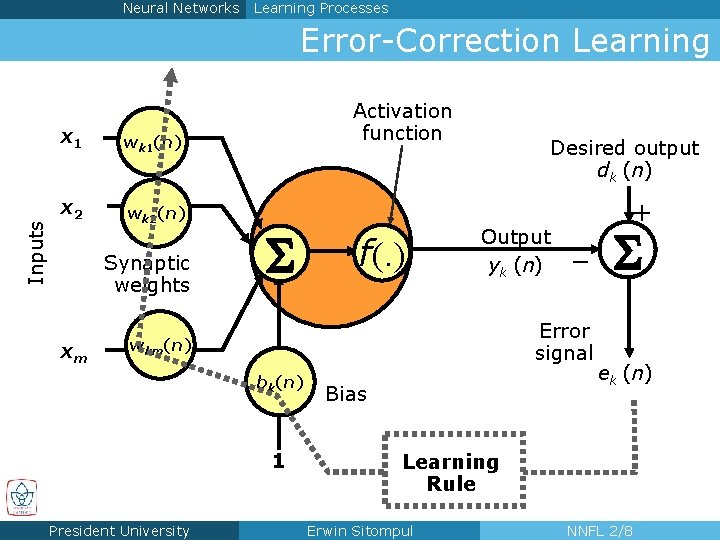

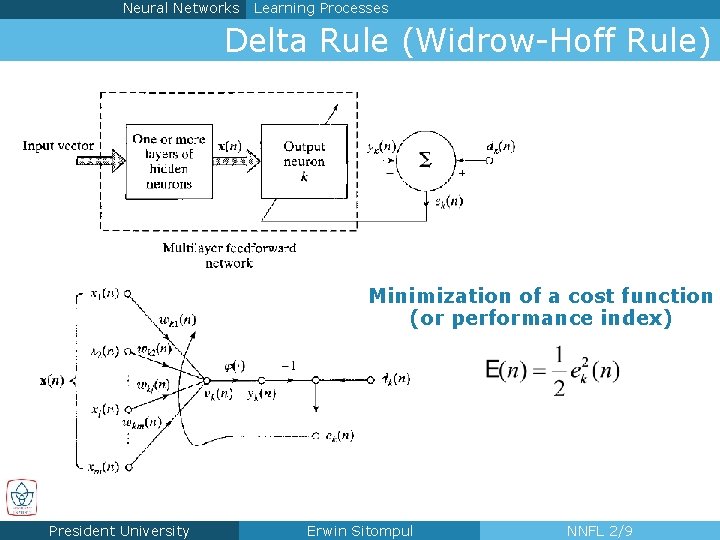

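Error-Correction Learning

[Figure: block diagram of neuron $k$. The inputs $x_1, x_2, \ldots, x_m$ are multiplied by the synaptic weights $w_{k1}(n), w_{k2}(n), \ldots, w_{km}(n)$ and summed together with the bias $b_k(n)$, which is fed by a constant input of 1. The sum passes through the activation function $f(\cdot)$ to give the output $y_k(n)$. Subtracting $y_k(n)$ from the desired output $d_k(n)$ gives the error signal $e_k(n)$, which drives the learning rule.]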

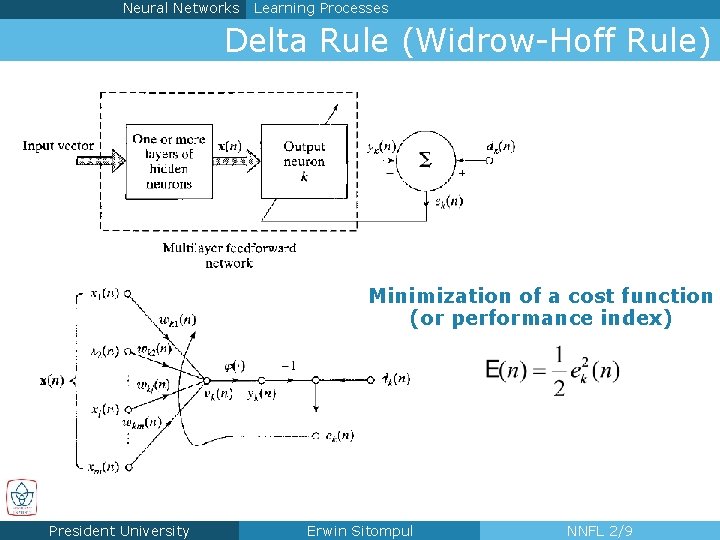

Delta Rule (Widrow-Hoff Rule)

Minimization of a cost function (or performance index).

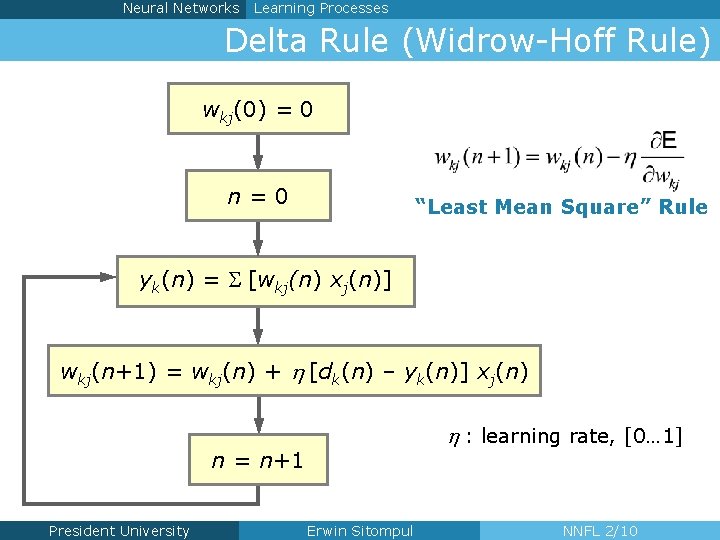

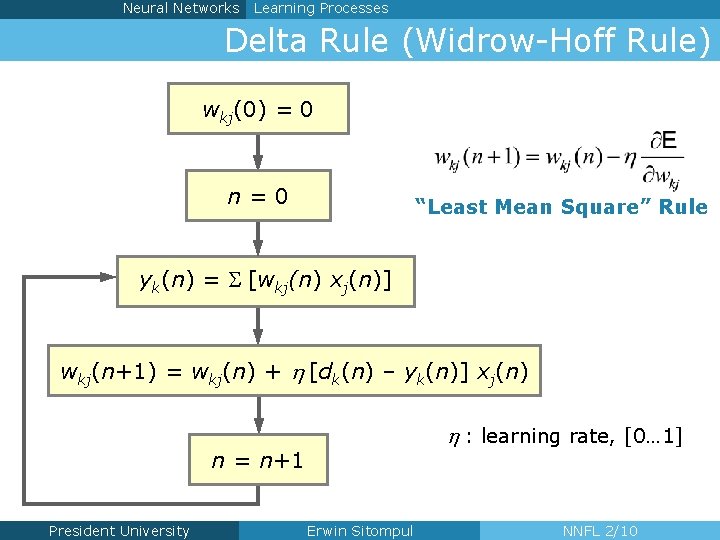

Delta Rule (Widrow-Hoff Rule), the "Least Mean Square" Rule

- Initialize: $w_{kj}(0) = 0$, $n = 0$.
- Compute the output: $y_k(n) = \sum_j w_{kj}(n)\, x_j(n)$.
- Update the weights: $w_{kj}(n+1) = w_{kj}(n) + \eta\, [d_k(n) - y_k(n)]\, x_j(n)$, where $\eta$ is the learning rate, in the range [0…1].
- Set $n = n + 1$ and repeat from the output computation.
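The update loop above can be sketched in a few lines of Python. This is a minimal illustration for a single linear neuron, not the lecture's own implementation: the function name `delta_rule`, the toy data, and the values of `eta` and `epochs` are all assumptions chosen for the example.

```python
import numpy as np

def delta_rule(X, d, eta=0.1, epochs=100):
    """Train one linear neuron with the delta (Widrow-Hoff) rule.

    X holds one input vector x(n) per row, d the matching desired outputs.
    """
    w = np.zeros(X.shape[1])                 # w_kj(0) = 0
    for _ in range(epochs):
        for x_n, d_n in zip(X, d):
            y_n = w @ x_n                    # y_k(n) = sum_j w_kj(n) x_j(n)
            w = w + eta * (d_n - y_n) * x_n  # w_kj(n+1) = w_kj(n) + eta [d - y] x_j
    return w

# Hypothetical noise-free training set generated by d = 2*x1 - 1*x2.
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]])
d = X @ np.array([2.0, -1.0])
w = delta_rule(X, d)
print(w)
```

Because the toy data is noise-free and the learning rate is small relative to the input magnitudes, the learned weights should approach the generating weights; with noisy data they would only hover near the least-squares solution.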

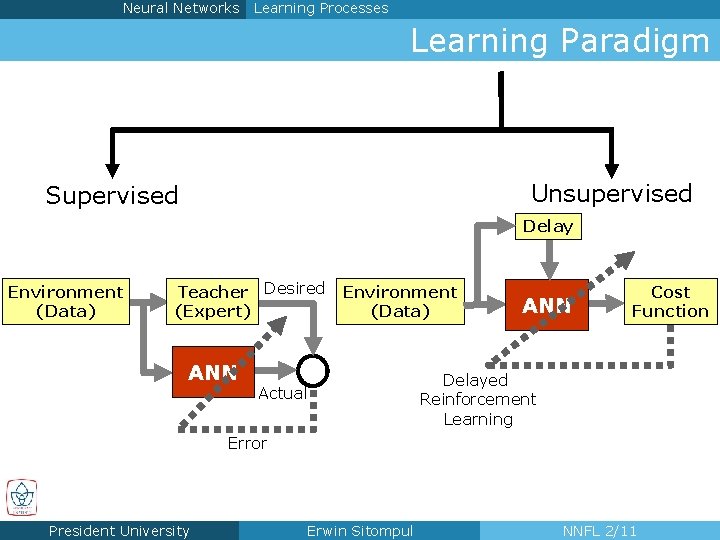

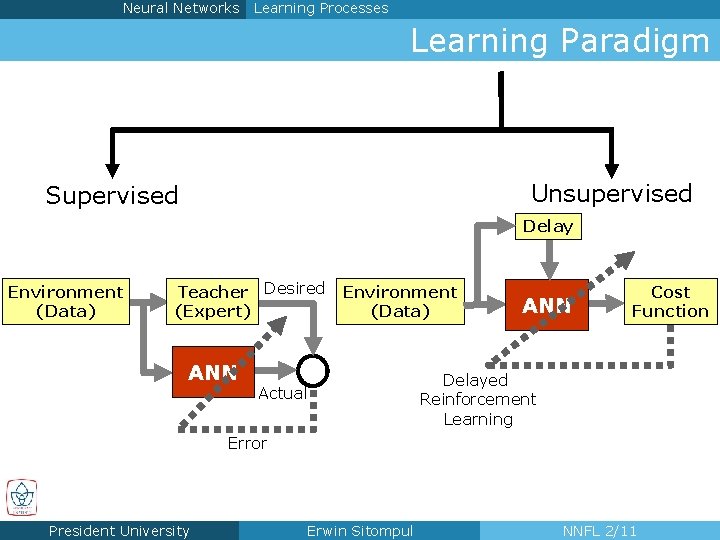

Learning Paradigm

[Figure: block diagrams of the learning paradigms. Supervised: Environment (Data), Teacher (Expert), and ANN, with the desired and actual outputs compared at a summing junction (+/−) to form the error. Unsupervised: Environment (Data), ANN, and a cost function. Delayed reinforcement learning: includes a delay block.]

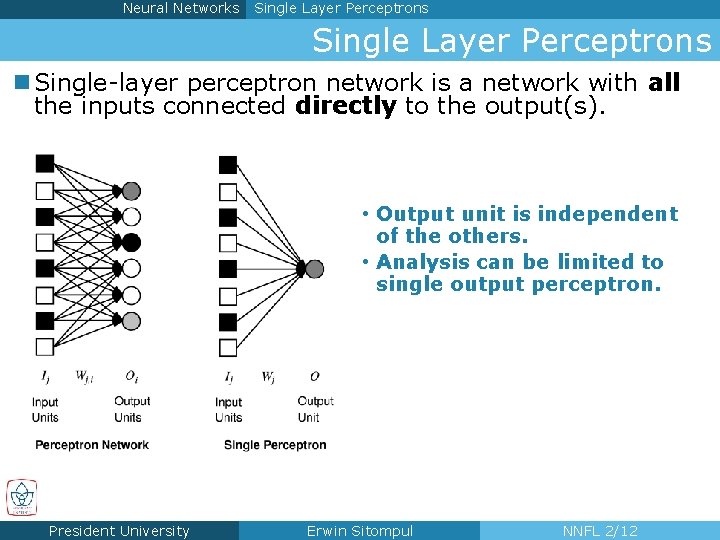

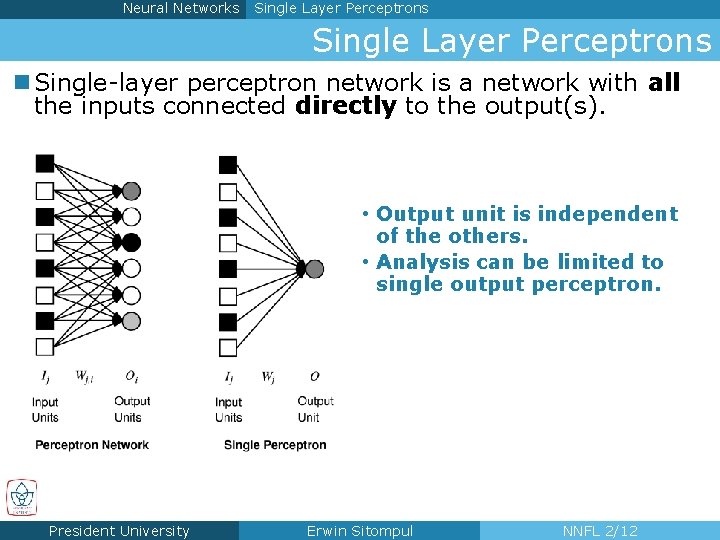

Neural Networks: Single Layer Perceptrons

- A single-layer perceptron network is a network with all the inputs connected directly to the output(s).
  - Each output unit is independent of the others.
  - Analysis can therefore be limited to a single-output perceptron.

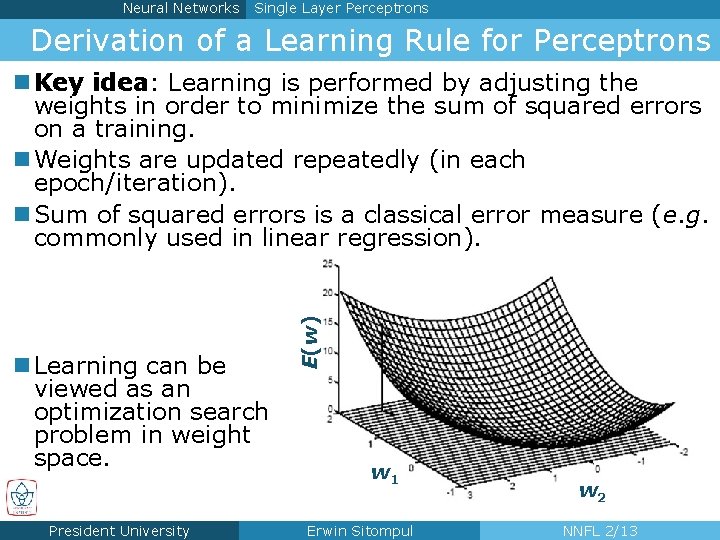

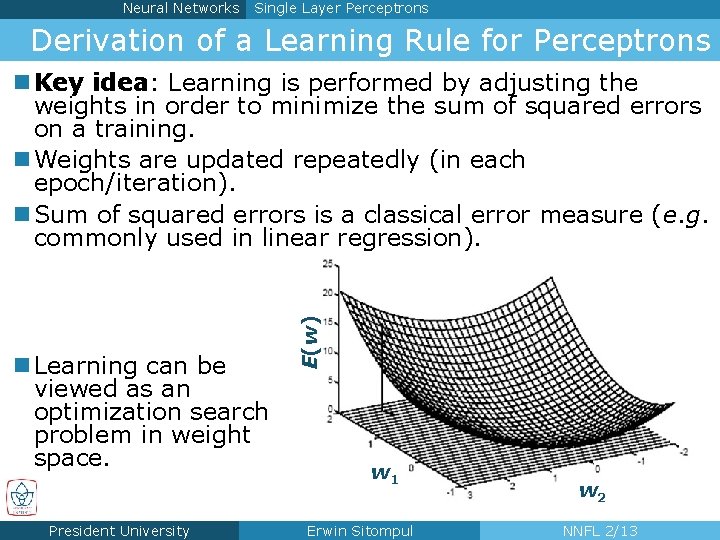

Derivation of a Learning Rule for Perceptrons

- Learning can be viewed as an optimization search problem in weight space.
- Key idea: learning is performed by adjusting the weights in order to minimize the sum of squared errors on a training set.
- Weights are updated repeatedly (in each epoch/iteration).
- The sum of squared errors is a classical error measure (e.g. commonly used in linear regression).

[Figure: error surface $E(w)$ over the weights $w_1$ and $w_2$.]

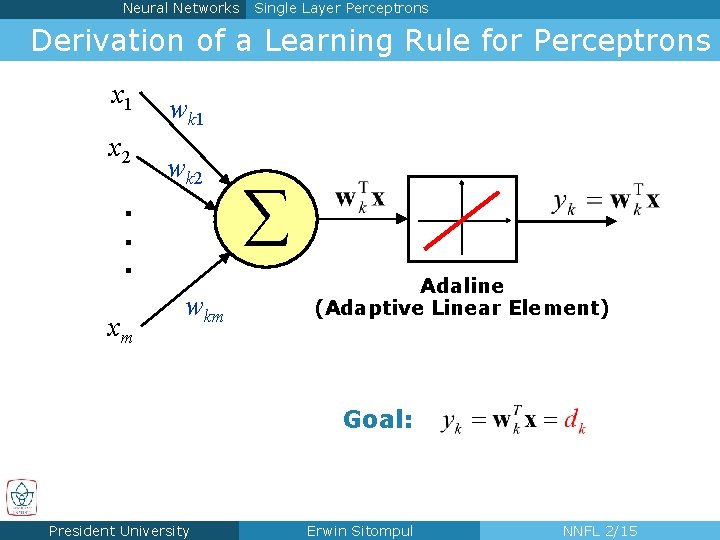

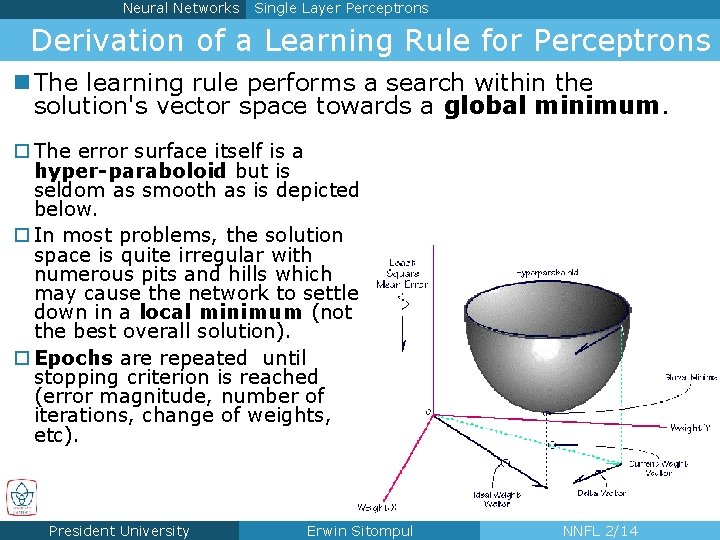

Derivation of a Learning Rule for Perceptrons

- The learning rule performs a search within the solution's vector space towards a global minimum.
- The error surface is idealized as a hyper-paraboloid, but it is seldom as smooth as depicted in the figure.
- In most problems, the solution space is quite irregular, with numerous pits and hills that may cause the network to settle in a local minimum (not the best overall solution).
- Epochs are repeated until a stopping criterion is reached (error magnitude, number of iterations, change of weights, etc.).

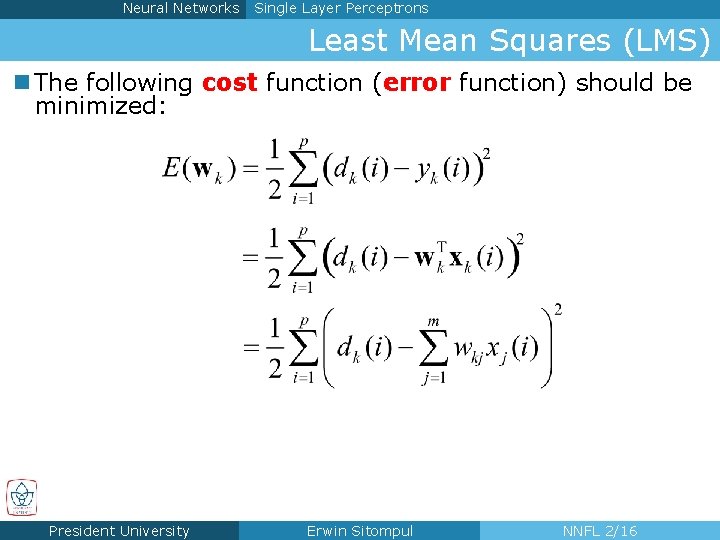

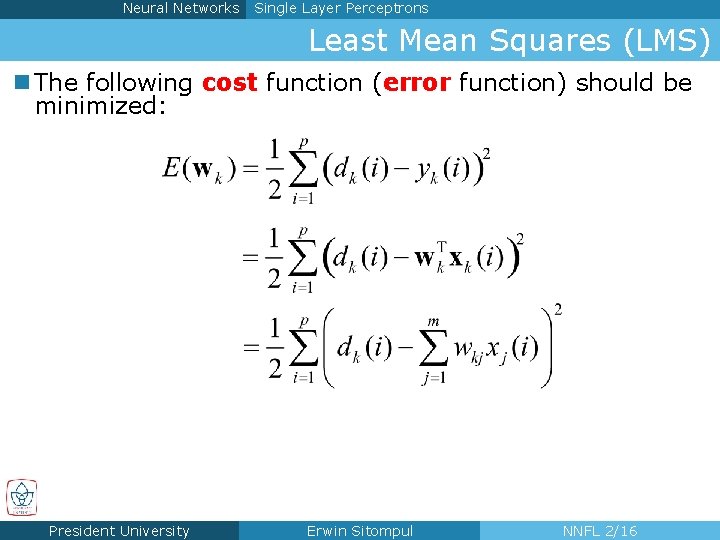

Derivation of a Learning Rule for Perceptrons

[Figure: Adaline (Adaptive Linear Element), with the inputs $x_1, x_2, \ldots, x_m$ weighted by $w_{k1}, w_{k2}, \ldots, w_{km}$.]

Goal:

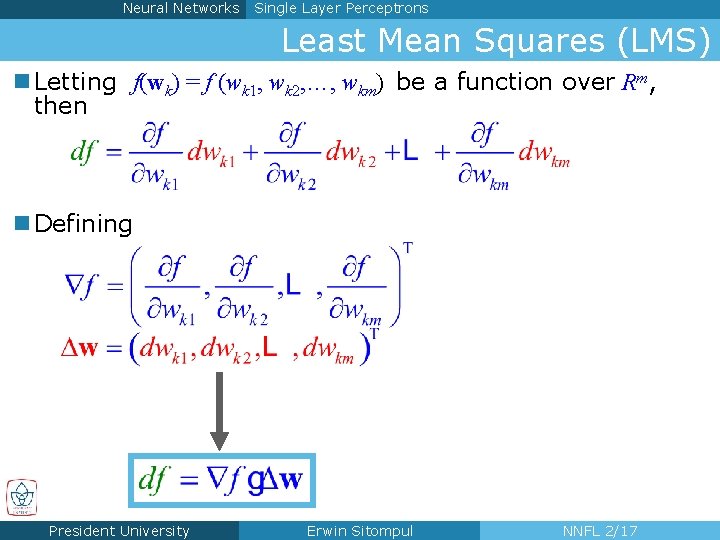

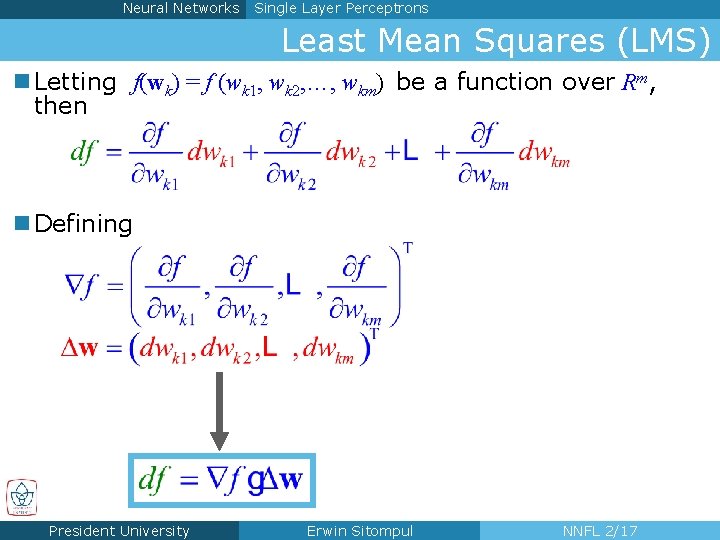

Least Mean Squares (LMS)

- The following cost function (error function) should be minimized:

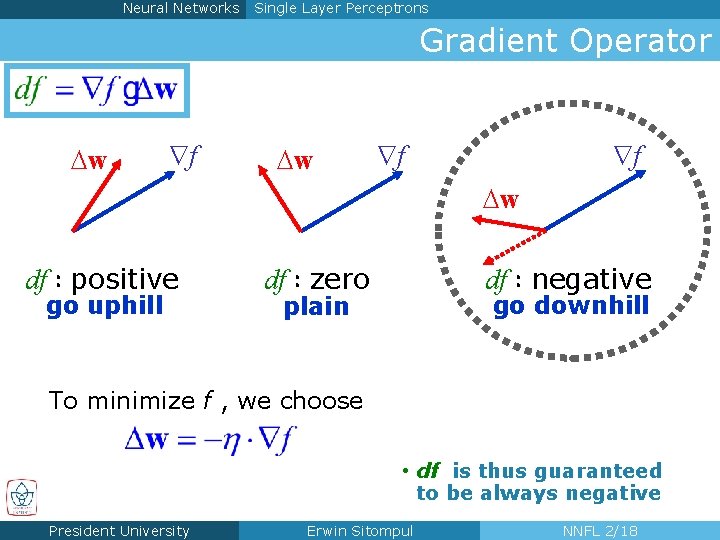

Least Mean Squares (LMS)

- Letting $f(\mathbf{w}_k) = f(w_{k1}, w_{k2}, \ldots, w_{km})$ be a function over $\mathbb{R}^m$, then
- Defining

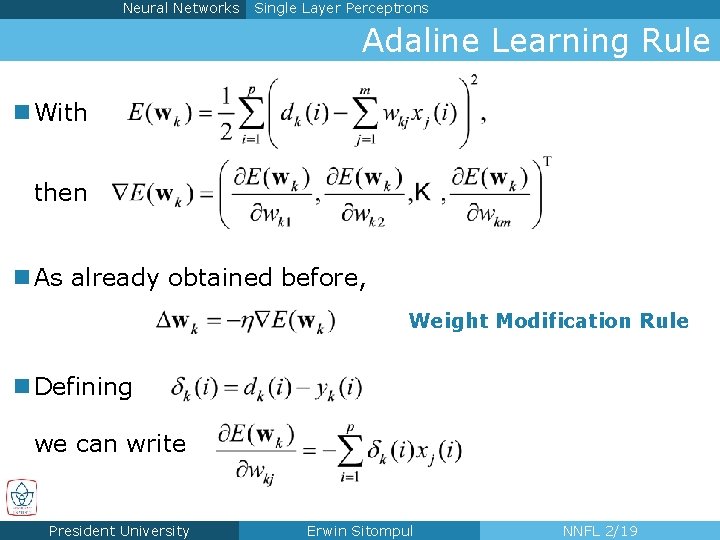

Gradient Operator

[Figure: the gradient of $f$ and the step in $w$.]

- $df$ positive: go uphill
- $df$ zero: plain
- $df$ negative: go downhill

To minimize $f$, we choose

- $df$ is thus guaranteed to be always negative
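The sign argument can be written out as a short worked derivation. It assumes the standard steepest-descent choice of step, with $\eta$ the learning rate introduced for the delta rule and $df$ taken as the first-order change in $f$. The notation below is an assumption and may differ from the slide's own equations.

```latex
\begin{aligned}
df &= (\nabla f)^{T} \, \Delta \mathbf{w}
   && \text{(first-order change of } f \text{ for a step } \Delta \mathbf{w}\text{)} \\
\Delta \mathbf{w} &= -\eta \, \nabla f, \qquad \eta > 0
   && \text{(step against the gradient)} \\
df &= -\eta \, \lVert \nabla f \rVert^{2} \;\le\; 0
\end{aligned}
```

Equality holds only where $\nabla f = 0$, so $df$ is strictly negative everywhere except at a stationary point.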

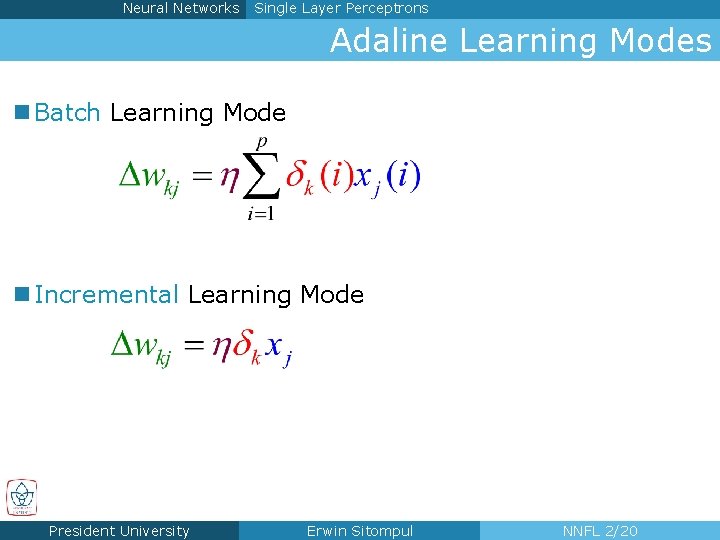

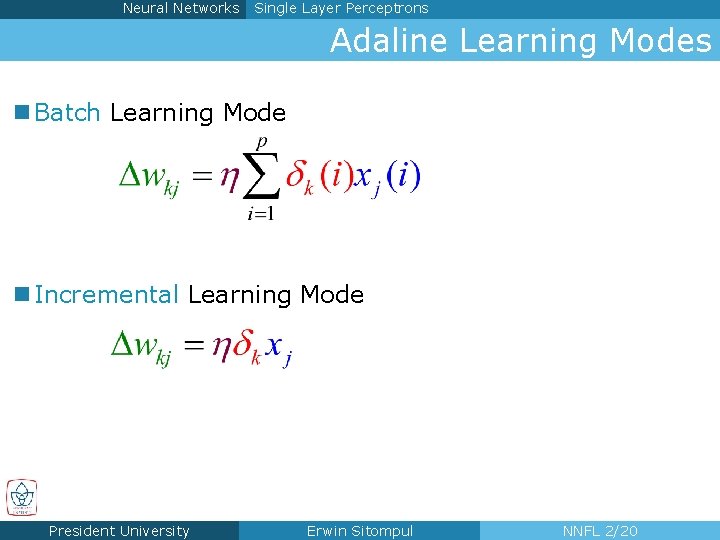

Adaline Learning Rule

- With then
- As already obtained before, (weight modification rule)
- Defining we can write
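A possible reading of this derivation, offered as a sketch under stated assumptions: the cost is taken to be half the sum of squared errors over $p$ training pairs, the Adaline output is linear in the weights, and the step follows the negative gradient. The index $i$ and the count $p$ are hypothetical symbols introduced here for illustration.

```latex
\begin{aligned}
E(\mathbf{w}_k) &= \tfrac{1}{2} \sum_{i=1}^{p} e_k(i)^{2},
  \qquad e_k(i) = d_k(i) - y_k(i),
  \qquad y_k(i) = \sum_{j=1}^{m} w_{kj}\, x_j(i) \\
\frac{\partial E}{\partial w_{kj}}
  &= \sum_{i=1}^{p} e_k(i)\, \frac{\partial e_k(i)}{\partial w_{kj}}
   = -\sum_{i=1}^{p} e_k(i)\, x_j(i) \\
\Delta w_{kj} &= -\eta\, \frac{\partial E}{\partial w_{kj}}
   = \eta \sum_{i=1}^{p} \left[ d_k(i) - y_k(i) \right] x_j(i)
\end{aligned}
```

Dropping the sum and applying the update after each training pair gives the per-sample form $w_{kj}(n+1) = w_{kj}(n) + \eta\,[d_k(n) - y_k(n)]\,x_j(n)$ of the delta rule.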

Adaline Learning Modes

- Batch learning mode
- Incremental learning mode
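The difference between the two modes can be illustrated with a small sketch. It assumes the usual meaning of the terms: batch mode accumulates the correction over the whole training set before changing the weights, while incremental mode changes the weights after every training pair. The function names and the toy data are hypothetical.

```python
import numpy as np

def batch_epoch(w, X, d, eta):
    """Batch mode: sum the corrections over all samples, then update once."""
    errors = d - X @ w                       # errors of all samples with the same w
    return w + eta * X.T @ errors

def incremental_epoch(w, X, d, eta):
    """Incremental mode: update the weights after every single sample."""
    for x_i, d_i in zip(X, d):
        w = w + eta * (d_i - w @ x_i) * x_i
    return w

# Hypothetical noise-free data generated by d = 0.5*x1 - 2*x2.
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
d = X @ np.array([0.5, -2.0])

w_batch = np.zeros(2)
w_incr = np.zeros(2)
for _ in range(200):
    w_batch = batch_epoch(w_batch, X, d, eta=0.1)
    w_incr = incremental_epoch(w_incr, X, d, eta=0.1)
print(w_batch, w_incr)
```

The two modes follow different paths through weight space, since incremental mode already uses the corrected weights for the next sample, but on this consistent toy problem both should settle on the same weights.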

Adaline Learning Rule

- Delta learning rule
- LMS algorithm
- Widrow-Hoff learning rule

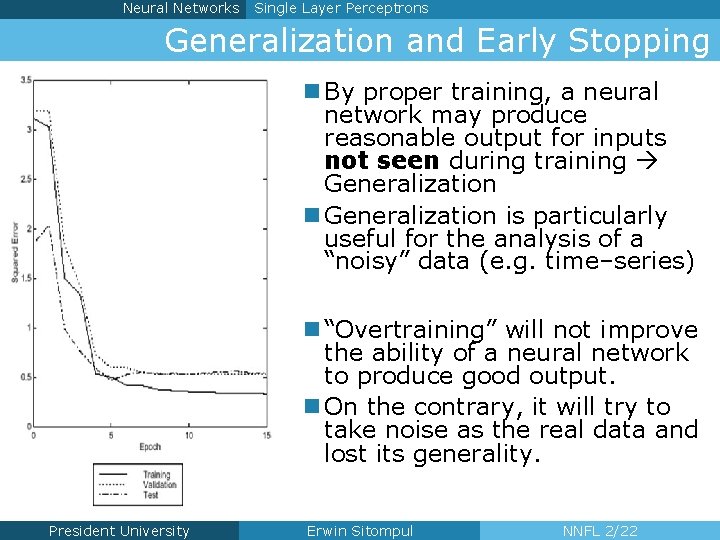

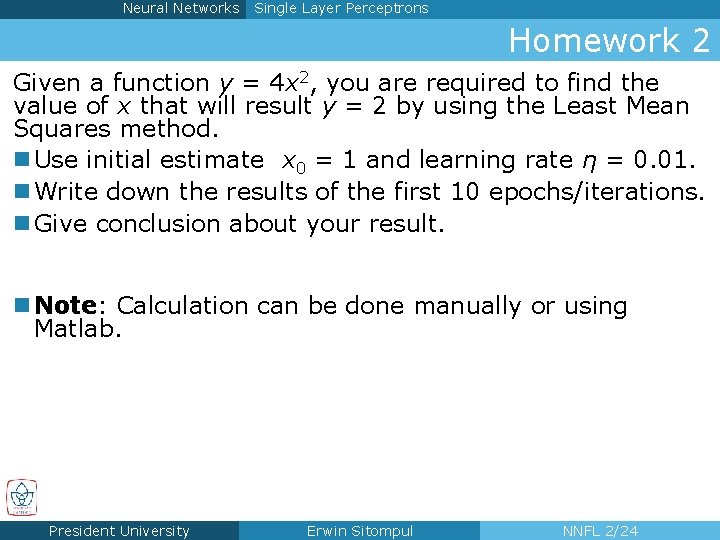

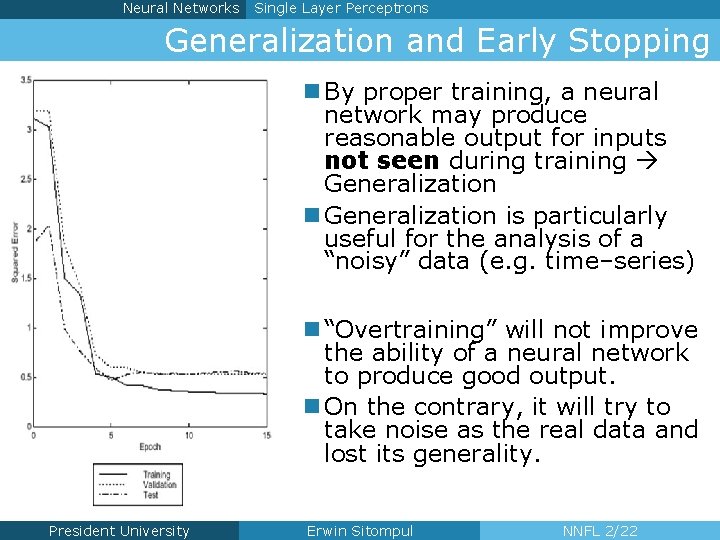

Generalization and Early Stopping

- With proper training, a neural network may produce reasonable output for inputs not seen during training. This ability is called generalization.
- Generalization is particularly useful for the analysis of "noisy" data (e.g. time series).
- "Overtraining" will not improve the ability of a neural network to produce good output.
- On the contrary, the network will try to fit the noise as if it were real data and will lose its generality.
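One common way to guard against overtraining is to monitor the error on held-out validation data and stop once it no longer improves. The slide does not spell out a procedure, so the sketch below is an assumption: the function names, the polynomial model, the synthetic noisy data, and the `patience` parameter are all hypothetical choices for illustration.

```python
import numpy as np

def poly_features(x, degree=9):
    """Columns x^0 ... x^degree, so a linear unit can fit a curve."""
    return np.vander(x, degree + 1, increasing=True)

def train_with_early_stopping(X_tr, d_tr, X_val, d_val,
                              eta=0.1, max_epochs=2000, patience=20):
    w = np.zeros(X_tr.shape[1])
    best_w, best_err, wait = w.copy(), np.inf, 0
    for epoch in range(max_epochs):
        # One batch LMS step on the training set only.
        w = w + eta * X_tr.T @ (d_tr - X_tr @ w) / len(d_tr)
        # Validation error is only monitored, never used for the update.
        val_err = 0.5 * np.mean((d_val - X_val @ w) ** 2)
        if val_err < best_err:
            best_w, best_err, wait = w.copy(), val_err, 0
        else:
            wait += 1
            if wait >= patience:             # no improvement for `patience` epochs
                break
    return best_w, best_err, epoch + 1

# Synthetic noisy data (assumed for the example): d = sin(pi x) + noise.
rng = np.random.default_rng(0)
x_tr, x_val = rng.uniform(-1, 1, 30), rng.uniform(-1, 1, 30)
d_tr = np.sin(np.pi * x_tr) + 0.1 * rng.standard_normal(30)
d_val = np.sin(np.pi * x_val) + 0.1 * rng.standard_normal(30)

best_w, best_err, epochs_run = train_with_early_stopping(
    poly_features(x_tr), d_tr, poly_features(x_val), d_val)
print(epochs_run, best_err)
```

The weights returned are those with the lowest validation error seen so far, not the last ones computed, which is what keeps later noise-fitting updates from degrading the result.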

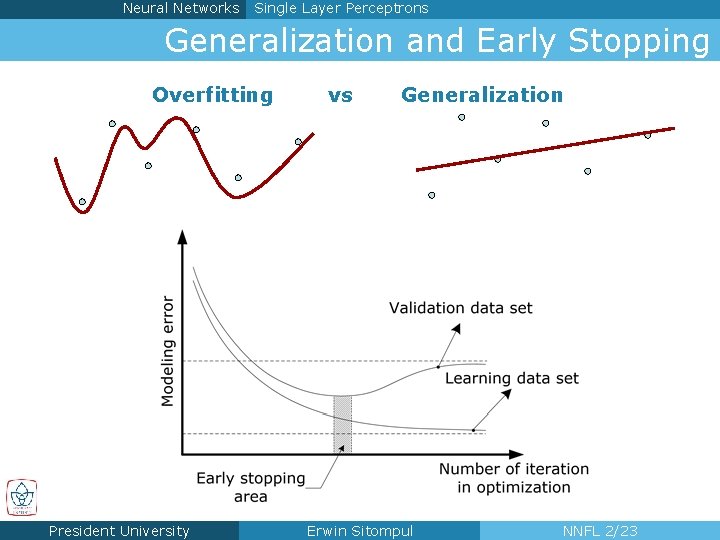

Generalization and Early Stopping

[Figure: overfitting vs generalization.]

Homework 2

Given a function $y = 4x^2$, you are required to find the value of $x$ that results in $y = 2$ by using the Least Mean Squares method.

- Use the initial estimate $x_0 = 1$ and the learning rate $\eta = 0.01$.
- Write down the results of the first 10 epochs/iterations.
- Give a conclusion about your result.
- Note: the calculation can be done manually or using Matlab.
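One way to set up such an iteration is sketched below. It assumes the function reads $y = 4x^2$, that the cost is $E = \tfrac{1}{2}(d - y)^2$ with desired output $d = 2$, and that $x$ itself is adjusted along the negative gradient, so that $x \leftarrow x + \eta\,(d - y)\,\frac{dy}{dx}$. The helper name `lms_solve` is hypothetical, and this is only one reading of the assignment.

```python
def lms_solve(f, dfdx, d, x0, eta, iterations):
    """Iterate x <- x + eta * (d - f(x)) * f'(x) and record every estimate."""
    x = x0
    history = [x]
    for _ in range(iterations):
        e = d - f(x)                 # error between desired and actual output
        x = x + eta * e * dfdx(x)    # gradient step on E = 0.5 * e**2
        history.append(x)
    return history

history = lms_solve(f=lambda x: 4 * x**2, dfdx=lambda x: 8 * x,
                    d=2.0, x0=1.0, eta=0.01, iterations=10)
for n, x in enumerate(history):
    print(n, round(x, 4), round(4 * x**2, 4))
```

Under these assumptions the first step moves the estimate from 1 to 0.84, and the estimates keep decreasing towards $\sqrt{0.5} \approx 0.7071$, the positive solution of $4x^2 = 2$.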

Homework 2A

Given a function y = 2x^3 + cos 2 x, you are required to find the value of x that results in y = 5 by using the Least Mean Squares method.

- Use the initial estimate x_0 = 0.2 × Student ID and the learning rate η = 0.01.
- Write down the results of the first 10 epochs/iterations.
- Give a conclusion about your result.
- Note: the calculation can be done manually or using Matlab/Excel.
- Deadline: Monday, 6 February 2017.