International Conference on Autonomous Agents and Multiagent Systems


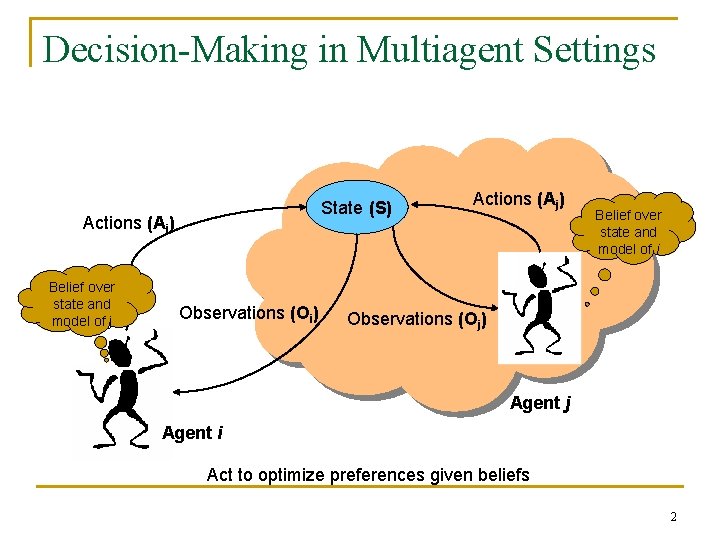

International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2007)

Graphical Models for Online Solutions to Interactive POMDPs

- Prashant Doshi, University of Georgia, USA
- Yifeng Zeng, Aalborg University, Denmark
- Qiongyu Chen, National Univ. of Singapore

Decision-Making in Multiagent Settings

[Figure: agents i and j interacting through a shared state (S).]

- Agent i: actions ($A_i$), observations ($O_i$), belief over state and model of j
- Agent j: actions ($A_j$), observations ($O_j$), belief over state and model of i
- Act to optimize preferences given beliefs

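Finitely Nested I-POMDP (Gmytrasiewicz & Doshi, 05)

- A finitely nested I-POMDP of agent i with a strategy level l: $\text{I-POMDP}_{i,l} = \langle IS_{i,l}, A, T_i, \Omega_i, O_i, R_i \rangle$
  - Interactive states: $IS_{i,l} = S \times M_{j,l-1}$
    - Beliefs about physical environments
    - Beliefs about other agents in terms of their preferences, capabilities, and beliefs (the agent's type)
  - $A$: joint actions
  - $\Omega_i$: possible observations
  - $T_i$: transition function, $S \times A \times S \to [0, 1]$
  - $O_i$: observation function, $S \times A \times \Omega_i \to [0, 1]$
  - $R_i$: reward function, $S \times A \to \mathbb{R}$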

Belief Update
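The equation shown on this slide did not survive extraction. For orientation only, the belief update of a finitely nested I-POMDP is commonly written along the following lines. This is a reconstruction from the cited I-POMDP framework, not a copy of the slide, and the symbols $\beta$ (normalizing constant), $\tau$ (indicator that j's belief update yields $b_j^t$), and $\hat{m}_j$, $\hat{\theta}_j$ (the parts of j's model other than its belief) are taken from that framework rather than from the slide text.

```latex
b_i^{t}(is^{t}) = \beta
  \sum_{is^{t-1} :\, \hat{m}_j^{t-1} = \hat{\theta}_j^{t}} b_i^{t-1}(is^{t-1})
  \sum_{a_j^{t-1}} \Pr(a_j^{t-1} \mid \theta_j^{t-1})\,
  O_i(s^{t}, a^{t-1}, o_i^{t})\, T_i(s^{t-1}, a^{t-1}, s^{t})
  \sum_{o_j^{t}} \tau_{\theta_j^{t}}(b_j^{t-1}, a_j^{t-1}, o_j^{t}, b_j^{t})\,
  O_j(s^{t}, a^{t-1}, o_j^{t})
```

Read from left to right: agent i weighs its previous belief by the predicted action of j, by its own observation and the state transition, and by every observation j could have received that maps j's old belief to the new one.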

Forget It!

- Different approach
  - Use the language of Influence Diagrams (IDs) to represent the problem more transparently
    - Belief update
  - Use standard ID algorithms to solve it
    - Solution

Challenges

- Representation of nested models for other agents
  - Influence diagram is a single-agent oriented language
- Update beliefs on models of other agents
  - New models of other agents
  - Over time agents revise beliefs over the models of others as they receive observations

Related Work

- Multiagent Influence Diagrams (MAIDs) (Koller & Milch, 2001)
  - Use IDs to represent incomplete information games
  - Compute Nash equilibrium solutions efficiently by exploiting conditional independence
- Network of Influence Diagrams (NIDs) (Gal & Pfeffer, 2003)
  - Allows uncertainty over the game
  - Allows multiple models of an individual agent
  - Solution involves collapsing models into a MAID or ID
- Both model static single-play games
  - Do not consider agent interactions over time (sequential decision-making)

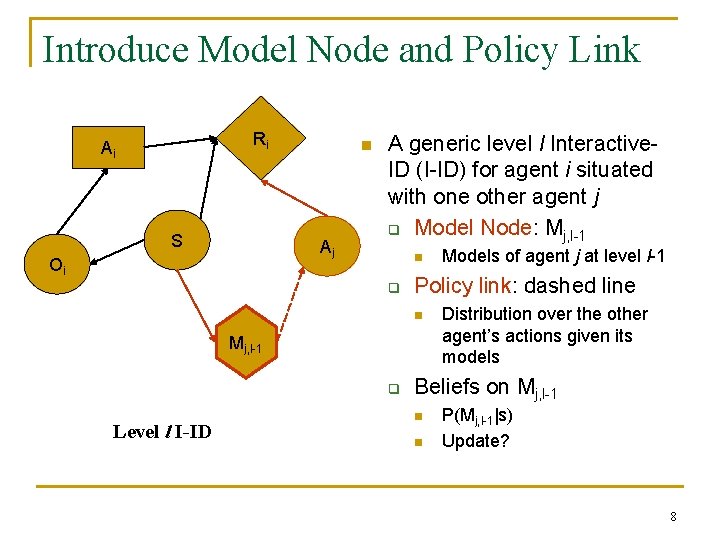

Introduce Model Node and Policy Link

[Figure: level l I-ID with nodes $R_i$, $A_i$, $S$, $A_j$, $O_i$, and the model node $M_{j,l-1}$.]

- A generic level l Interactive ID (I-ID) for agent i situated with one other agent j
  - Model node: $M_{j,l-1}$
    - Models of agent j at level l-1
  - Policy link: dashed line
    - Distribution over the other agent's actions given its models
  - Beliefs on $M_{j,l-1}$
    - $P(M_{j,l-1} \mid s)$
    - Update?

![Details of the Model Node](https://slidetodoc.com/presentation_image/31e13d063edc1c6c99df6f1a2705b204/image-9.jpg)

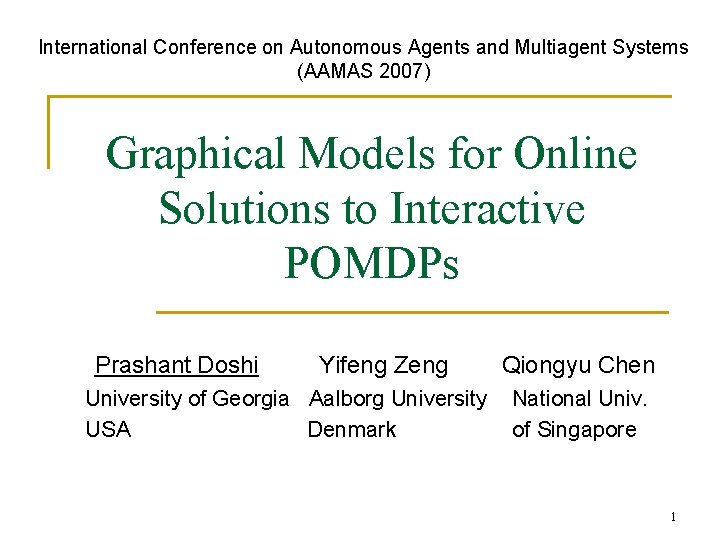

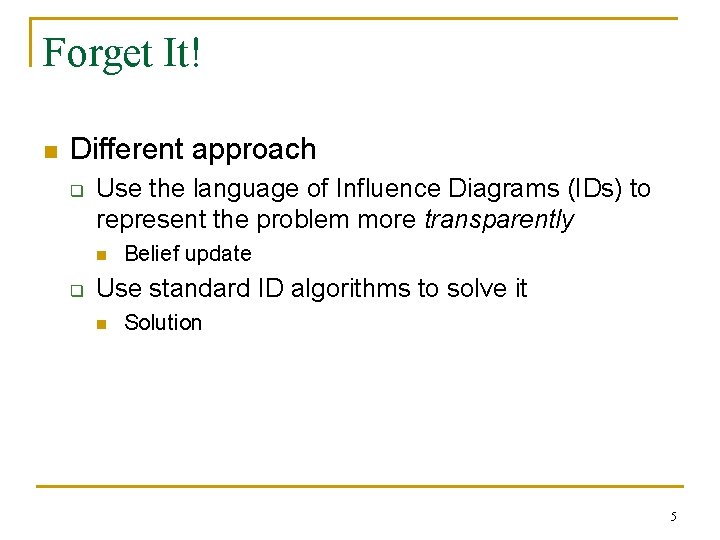

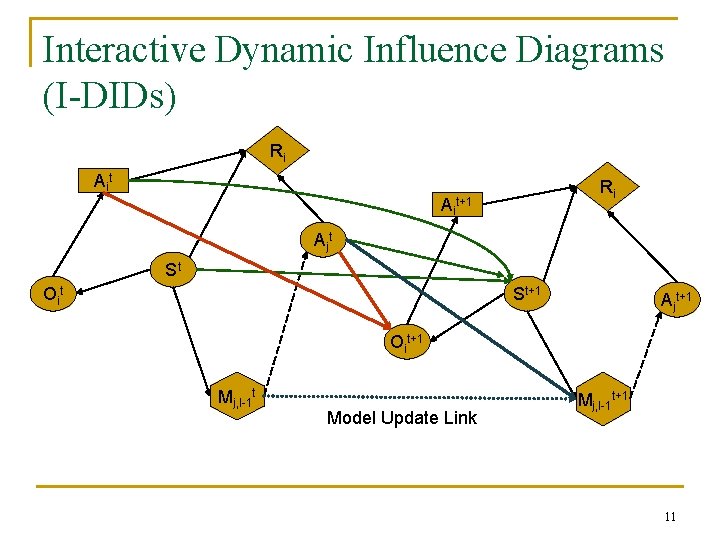

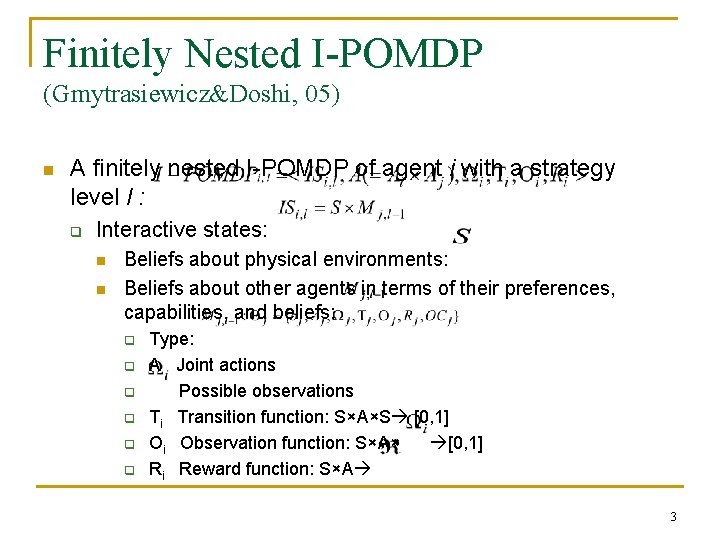

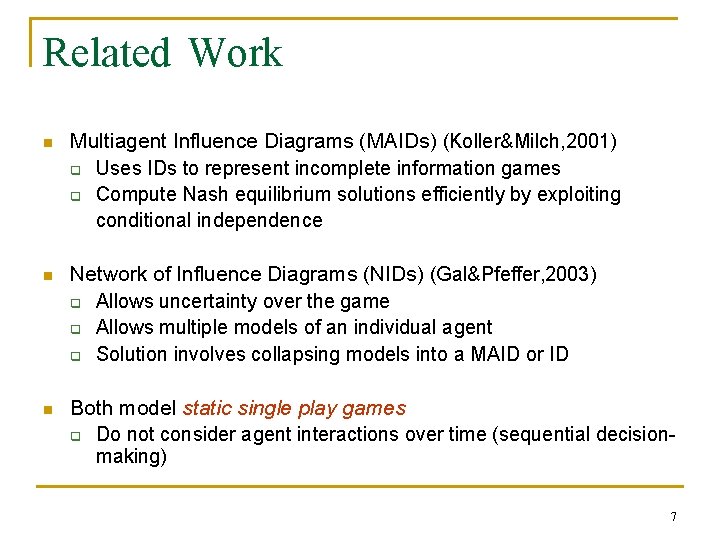

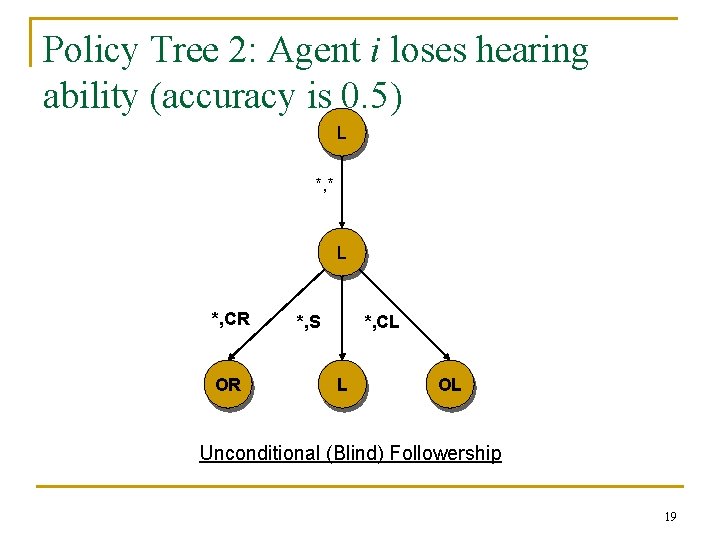

Details of the Model Node

[Figure: the model node $M_{j,l-1}$ expanded into Mod[$M_j$], the models $m^1_{j,l-1}$ and $m^2_{j,l-1}$, and the chance nodes $A_j^1$ and $A_j^2$, connected to $S$ and $A_j$.]

- Members of the model node
  - Different chance nodes are solutions of models $m_{j,l-1}$
  - Mod[$M_j$] represents the different models of agent j
- CPT of the chance node $A_j$ is a multiplexer
  - Assumes the distribution of each of the action nodes ($A_j^1$, $A_j^2$) depending on the value of Mod[$M_j$]
- $m^1_{j,l-1}$, $m^2_{j,l-1}$ could be I-IDs or IDs
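A minimal sketch of the multiplexer idea, assuming two candidate models whose solutions are given as action distributions. The function names, the action labels (`L`, `OL`, `OR`, borrowed from the tiger policy trees and assumed to mean listen, open left, open right), and the numbers are illustrative assumptions, not taken from the slides. The CPT copies the action of whichever model Mod[M_j] selects, so marginalizing it gives a mixture of the per-model action distributions.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple


def multiplexer_cpt(
    num_models: int, actions: Sequence[str]
) -> Dict[Tuple[int, Tuple[str, ...], str], float]:
    """P(A_j = a | Mod = k, A_j^1, ..., A_j^n): 1 if a equals the k-th parent action."""
    cpt = {}
    for k in range(num_models):
        for parent_actions in product(actions, repeat=num_models):
            for a in actions:
                cpt[(k, parent_actions, a)] = 1.0 if a == parent_actions[k] else 0.0
    return cpt


def marginal_action_dist(
    model_probs: List[float],
    action_dists: List[Dict[str, float]],
    actions: Sequence[str],
) -> Dict[str, float]:
    """Sum out Mod and the per-model action nodes (assumed independent here)."""
    cpt = multiplexer_cpt(len(model_probs), actions)
    marginal = {a: 0.0 for a in actions}
    for (k, parent_actions, a), selected in cpt.items():
        joint = model_probs[k]
        for idx, parent_action in enumerate(parent_actions):
            joint *= action_dists[idx][parent_action]
        marginal[a] += selected * joint
    return marginal


# Hypothetical example: model 1 prescribes L, model 2 prescribes OR.
actions = ["L", "OL", "OR"]
model_probs = [0.5, 0.5]
action_dists = [
    {"L": 1.0, "OL": 0.0, "OR": 0.0},
    {"L": 0.0, "OL": 0.0, "OR": 1.0},
]
dist = marginal_action_dist(model_probs, action_dists, actions)
print(dist)
```

Building the full table is exponential in the number of models; it is written out here only to make the selection semantics explicit.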

![Whole I-ID](https://slidetodoc.com/presentation_image/31e13d063edc1c6c99df6f1a2705b204/image-10.jpg)

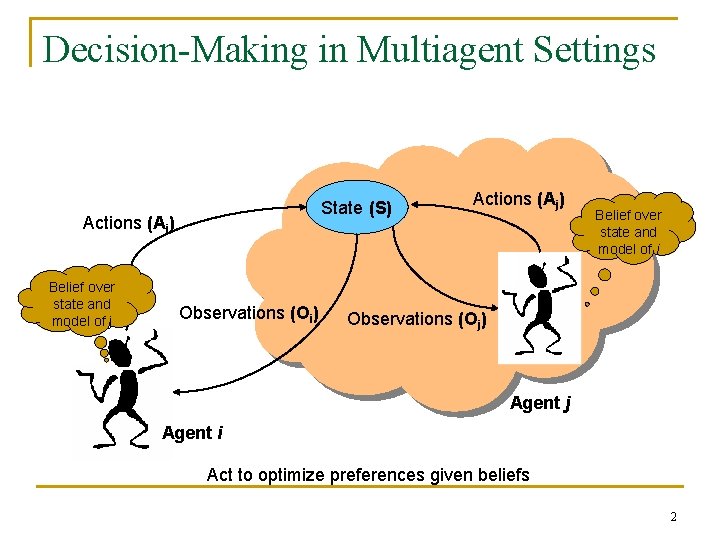

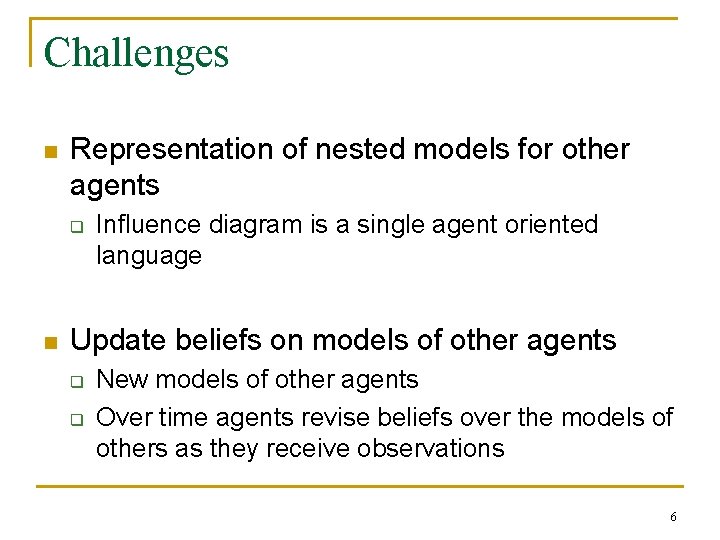

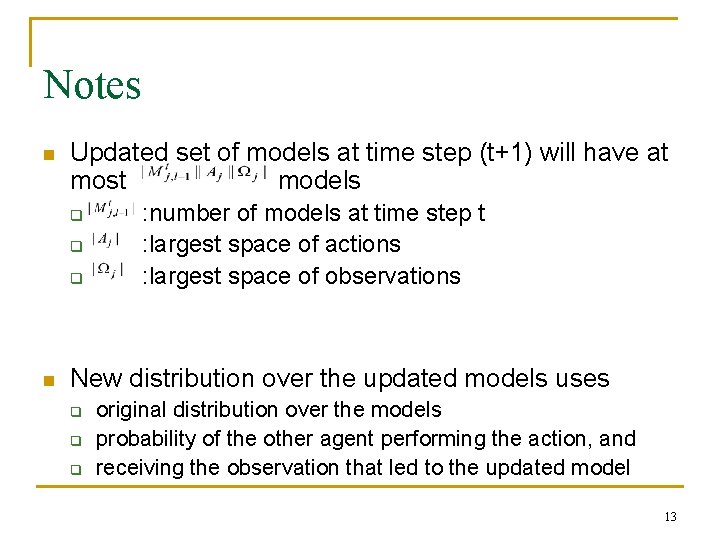

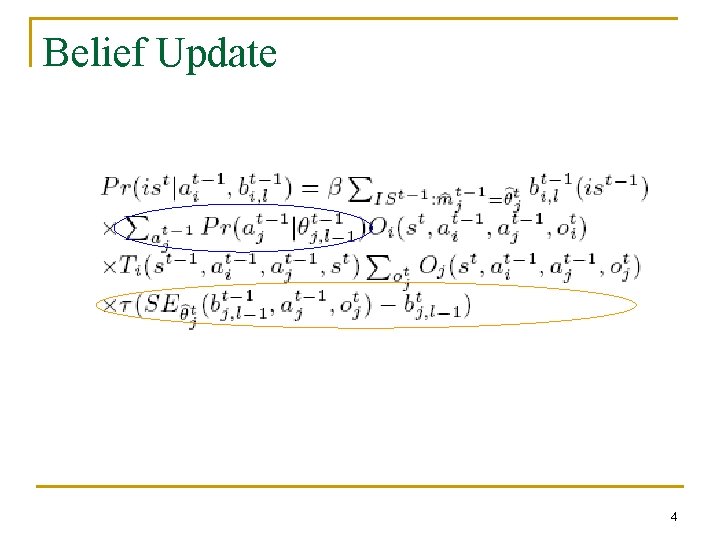

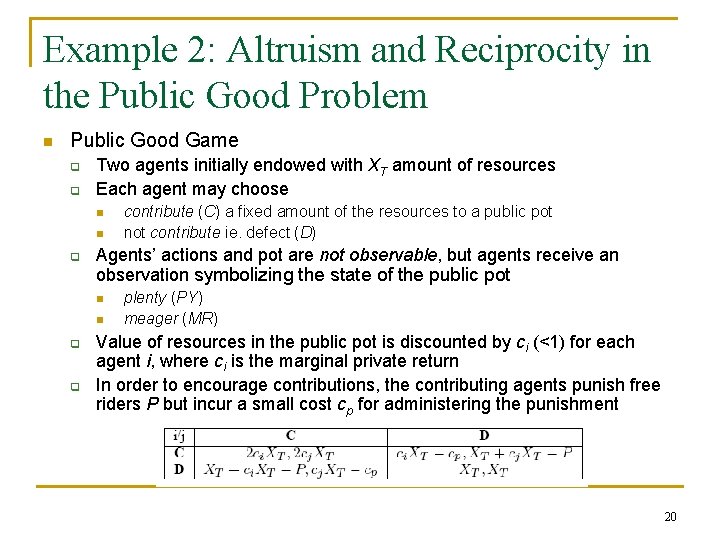

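Whole I-ID

[Figure: the complete I-ID with nodes $R_i$, $A_i$, $S$, $A_j$, $O_i$, Mod[$M_j$], $A_j^1$, $A_j^2$ and the models $m^1_{j,l-1}$, $m^2_{j,l-1}$.]

- $m^1_{j,l-1}$, $m^2_{j,l-1}$ could be I-IDs or IDs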

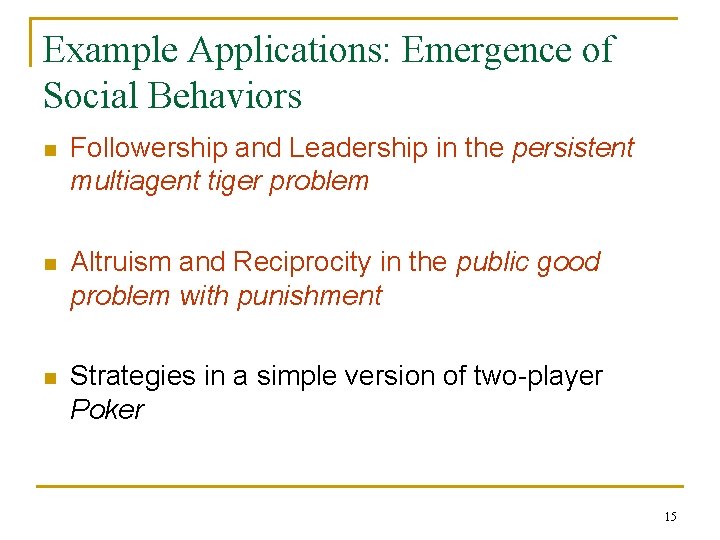

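Interactive Dynamic Influence Diagrams (I-DIDs)

[Figure: two-time-slice I-DID with nodes $R_i$, $A_i^{t+1}$, $A_j^t$, $S^t$, $O_i^t$, $S^{t+1}$, $A_j^{t+1}$, $O_i^{t+1}$; the model update link connects $M^t_{j,l-1}$ to $M^{t+1}_{j,l-1}$.]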

![Semantics of Model Update Link](https://slidetodoc.com/presentation_image/31e13d063edc1c6c99df6f1a2705b204/image-12.jpg)

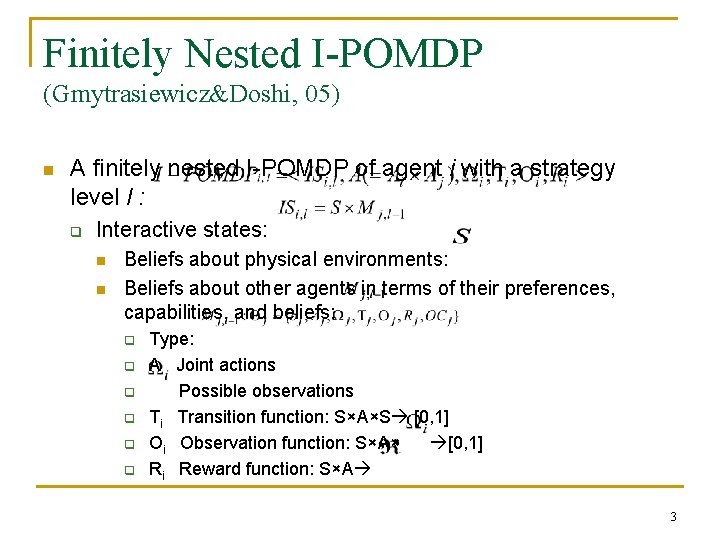

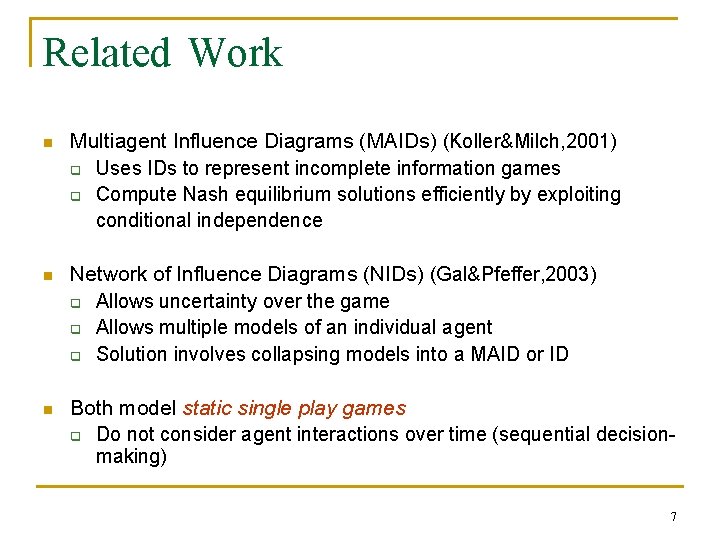

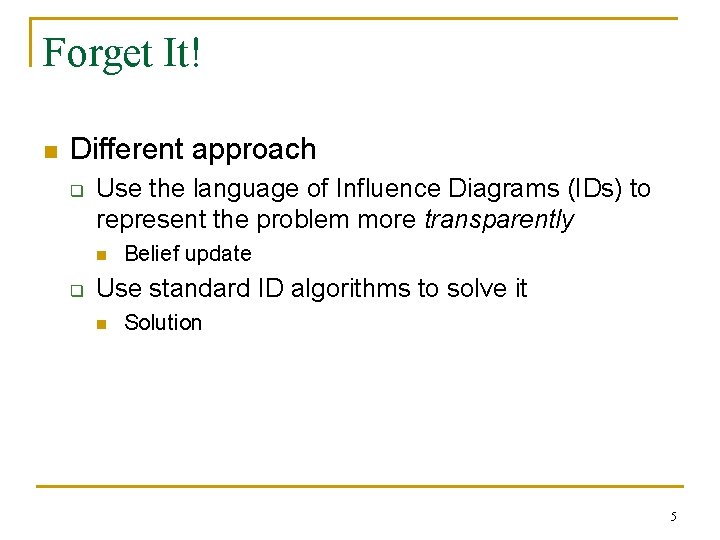

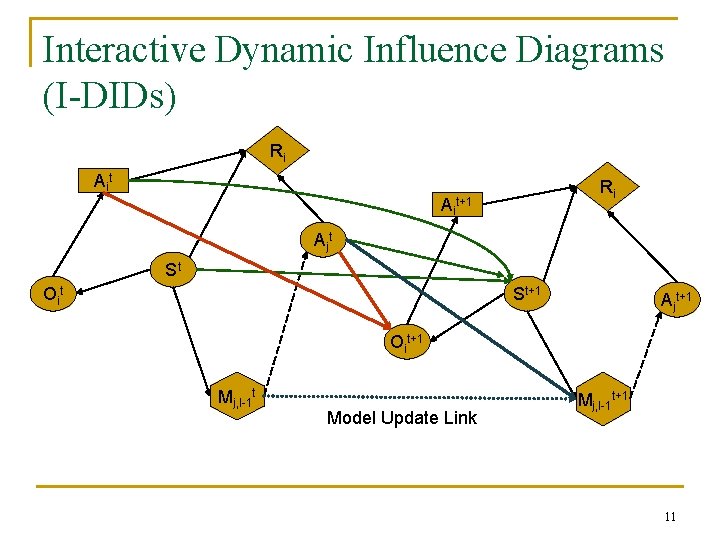

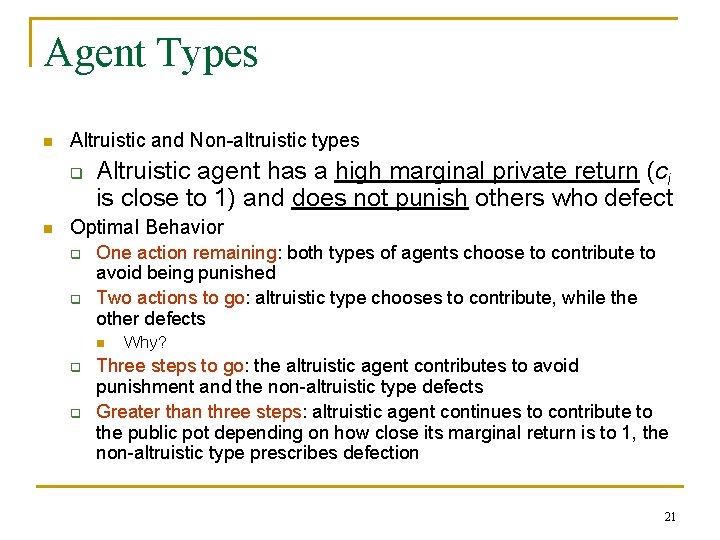

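Semantics of Model Update Link

[Figure: the model update link expanded. Mod[$M_j^t$] holds the models $m^{t,1}_{j,l-1}$ and $m^{t,2}_{j,l-1}$ with action nodes $A_j^1$ and $A_j^2$. Together with $A_j^t$, $s^t$, $s^{t+1}$ and the observations $O_j^1$, $O_j^2$, they yield Mod[$M_j^{t+1}$] with the models $m^{t+1,1}_{j,l-1}$ to $m^{t+1,4}_{j,l-1}$ and action nodes $A_j^1$ to $A_j^4$, which feed $A_j^{t+1}$.]

These models differ in their initial beliefs, each of which is the result of j updating its beliefs due to its actions and possible observations.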

Notes

- Updated set of models at time step (t+1) will have at most $|M^t_{j,l-1}|\,|A_j|\,|\Omega_j|$ models
  - $|M^t_{j,l-1}|$: number of models at time step t
  - $|A_j|$: largest space of actions
  - $|\Omega_j|$: largest space of observations
- New distribution over the updated models uses
  - original distribution over the models,
  - probability of the other agent performing the action, and
  - probability of it receiving the observation that led to the updated model
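The counting bound and the three ingredients of the new distribution can be sketched as follows, assuming level 0 models that are plain beliefs over a small discrete state space. The function name `update_models`, the table layouts `T[action][s][s_next]` and `O[action][s_next][obs]`, and the example numbers are illustrative assumptions. Each candidate model is expanded once per action it might take and once per observation it might receive, and its weight for a given next state is the product of the original model probability, the action probability, and the observation probability.

```python
from typing import Callable, Dict, List, Tuple

Belief = List[float]


def update_models(
    models: List[Tuple[Belief, float]],
    policy: Callable[[Belief], Dict[str, float]],
    T: Dict[str, List[List[float]]],
    O: Dict[str, List[List[float]]],
    num_obs: int,
) -> List[Tuple[Belief, List[float]]]:
    """Return (updated belief, weight per next state) for every reachable model."""
    n_states = len(models[0][0])
    updated = []
    for belief, p_model in models:
        for action, p_action in policy(belief).items():
            if p_action == 0.0:
                continue
            # Prediction step of j's own belief update.
            predicted = [
                sum(belief[s] * T[action][s][s2] for s in range(n_states))
                for s2 in range(n_states)
            ]
            for obs in range(num_obs):
                unnorm = [predicted[s2] * O[action][s2][obs] for s2 in range(n_states)]
                p_obs = sum(unnorm)
                if p_obs == 0.0:
                    continue  # j considers this observation impossible
                new_belief = [u / p_obs for u in unnorm]
                # Weight given next state s2: P(model) * P(action) * P(obs | s2, action).
                weights = [
                    p_model * p_action * O[action][s2][obs] for s2 in range(n_states)
                ]
                updated.append((new_belief, weights))
    return updated


# Hypothetical two-state example: j always listens with 95% accurate hearing.
T = {"listen": [[1.0, 0.0], [0.0, 1.0]]}
O = {"listen": [[0.95, 0.05], [0.05, 0.95]]}
models = [([0.9, 0.1], 0.5), ([0.1, 0.9], 0.5)]


def always_listen(belief: Belief) -> Dict[str, float]:
    return {"listen": 1.0}


updated = update_models(models, always_listen, T, O, num_obs=2)
print(len(updated))
```

With two models, one action, and two observations, the expansion reaches the bound of 2 x 1 x 2 models. Models that end up with identical beliefs are not merged in this sketch.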

![I-DID with the model update link expanded](https://slidetodoc.com/presentation_image/31e13d063edc1c6c99df6f1a2705b204/image-14.jpg)

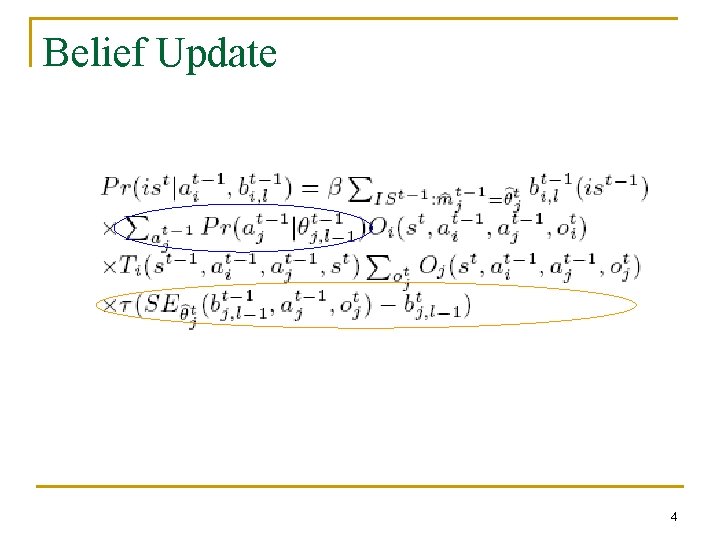

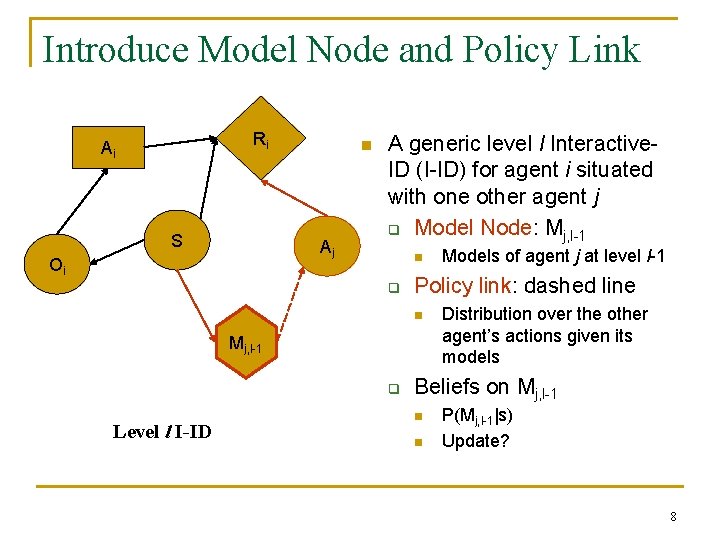

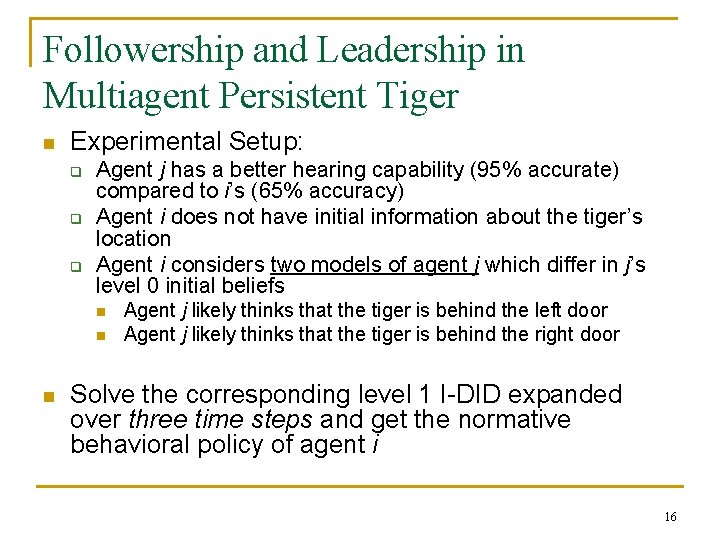

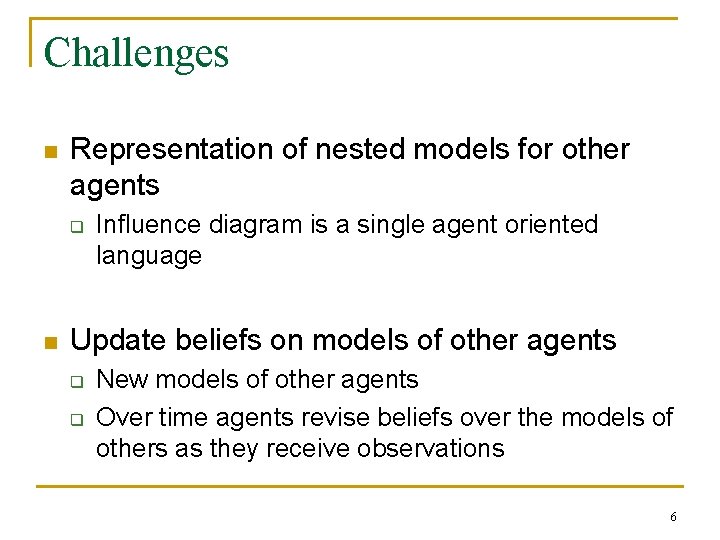

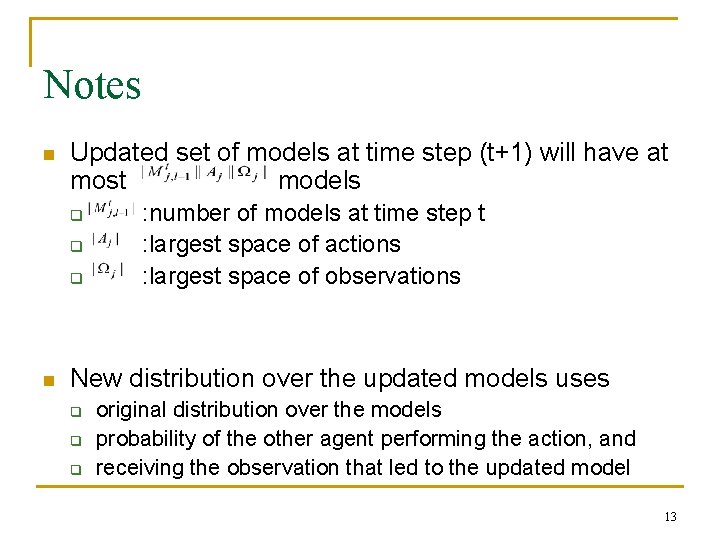

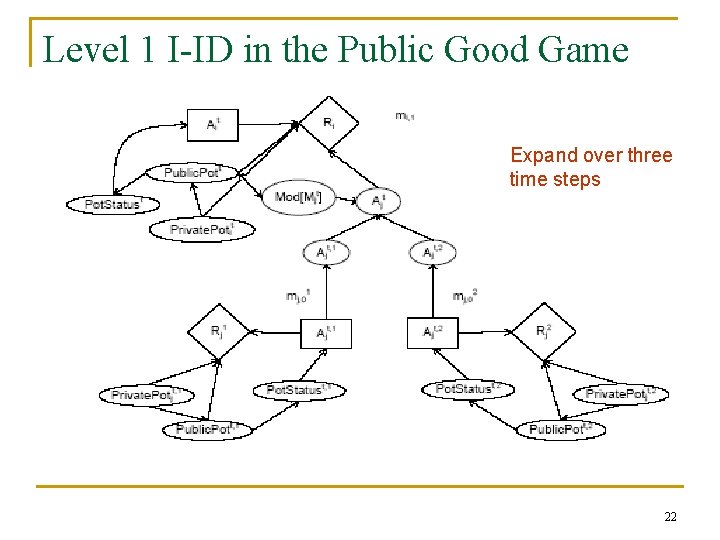

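[Figure: the two-time-slice I-DID with the model update link expanded. Nodes: $R_i$, $A_i^{t+1}$, $S^t$, $O_i^t$, $S^{t+1}$, $A_j^{t+1}$, $O_i^{t+1}$, Mod[$M_j^t$], Mod[$M_j^{t+1}$], $O_j$, the models $m^{t,1}_{j,l-1}$, $m^{t,2}_{j,l-1}$ and their updated counterparts at t+1, with action nodes $A_j^1$ to $A_j^4$ and observations $O_j^1$, $O_j^2$.]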

Example Applications: Emergence of Social Behaviors

- Followership and Leadership in the persistent multiagent tiger problem
- Altruism and Reciprocity in the public good problem with punishment
- Strategies in a simple version of two-player Poker

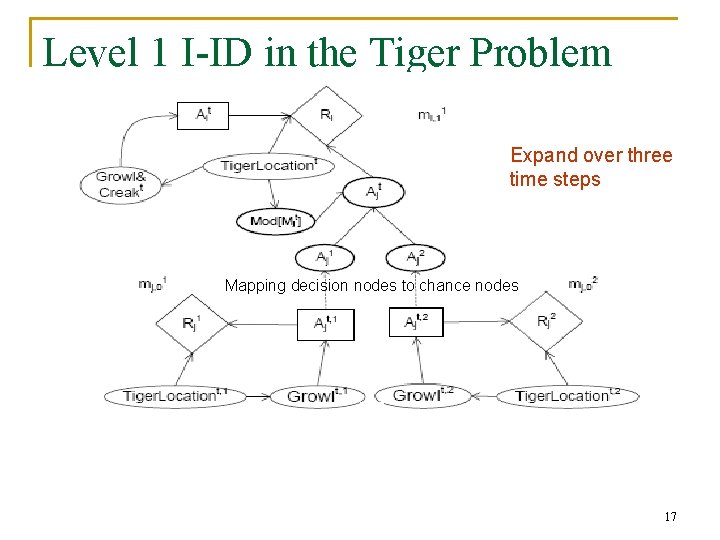

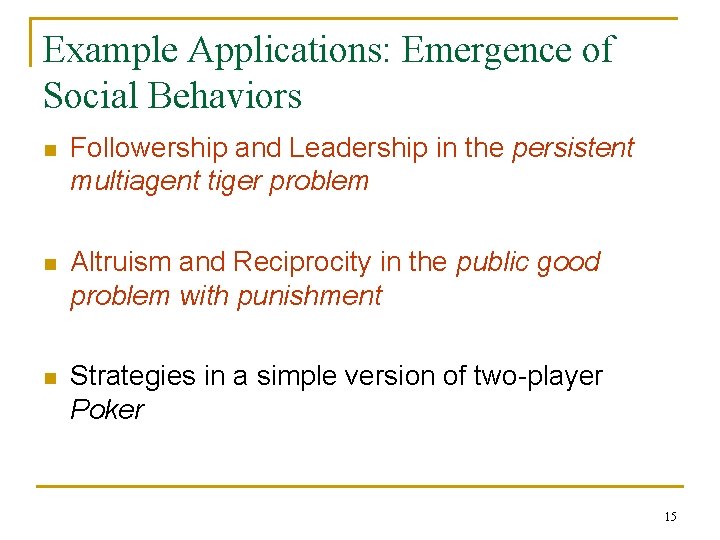

Followership and Leadership in Multiagent Persistent Tiger

- Experimental Setup:
  - Agent j has a better hearing capability (95% accurate) compared to i's (65% accurate)
  - Agent i does not have initial information about the tiger's location
  - Agent i considers two models of agent j which differ in j's level 0 initial beliefs
    - Agent j likely thinks that the tiger is behind the left door
    - Agent j likely thinks that the tiger is behind the right door
- Solve the corresponding level 1 I-DID expanded over three time steps and get the normative behavioral policy of agent i

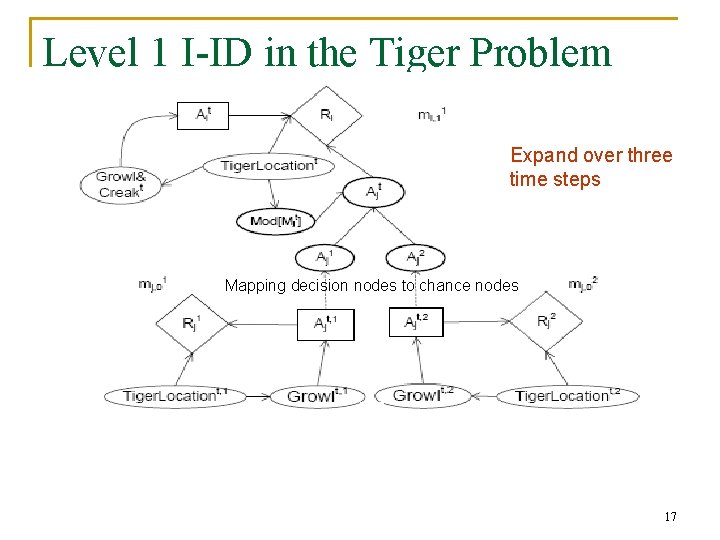

Level 1 I-ID in the Tiger Problem

- Expand over three time steps
- Mapping decision nodes to chance nodes

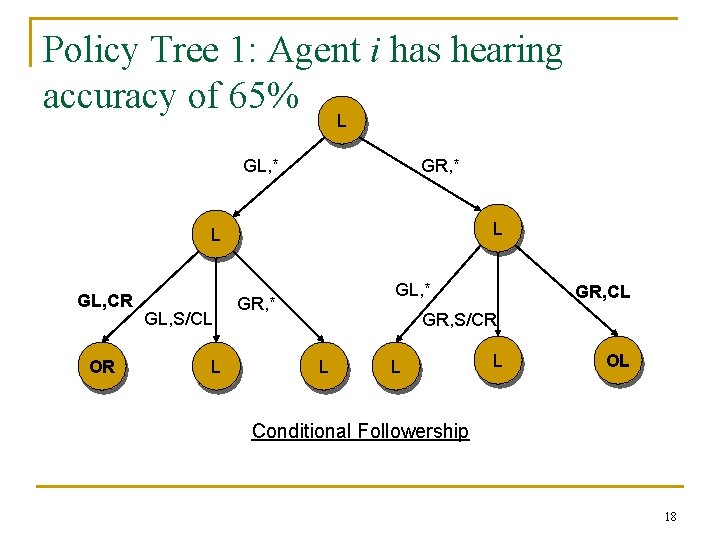

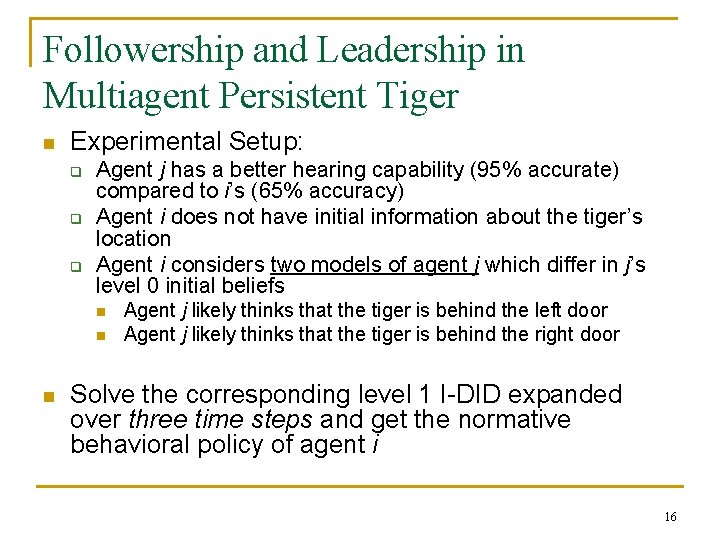

Policy Tree 1: Agent i has hearing accuracy of 65%

[Figure: three-step policy tree for agent i. Node labels: `L`, `OL`, `OR`. Edge labels: `GL,*`; `GR,*`; `GL,CR`; `GL,S/CL`; `GR,CL`; `GR,S/CR`.]

Conditional Followership

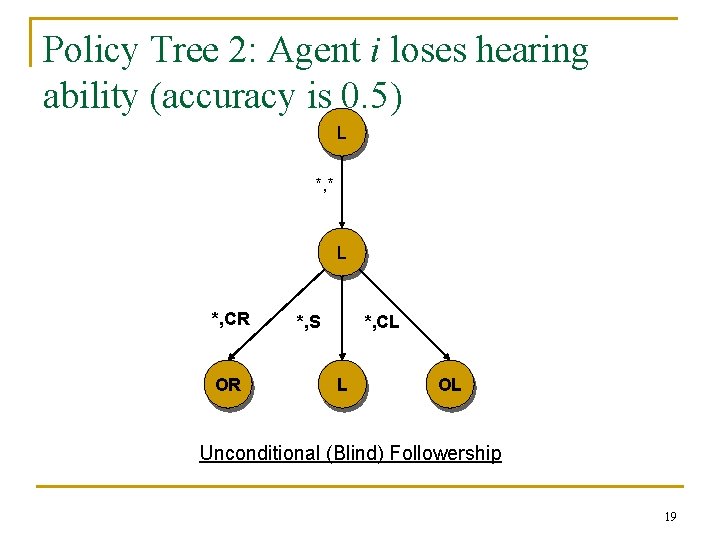

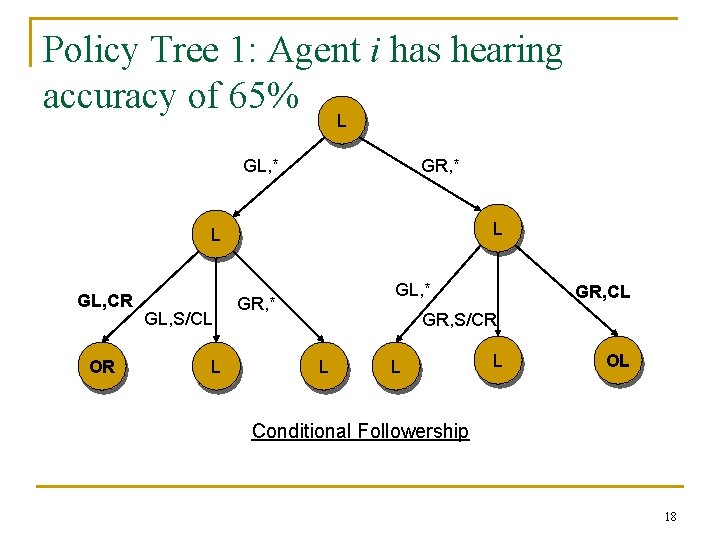

Policy Tree 2: Agent i loses hearing ability (accuracy is 0.5)

[Figure: three-step policy tree for agent i. Node labels: `L`, `OL`, `OR`. Edge labels: `*,*`; `*,CR`; `*,S`; `*,CL`.]

Unconditional (Blind) Followership
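A short sketch of why the hearing accuracy matters, using a plain Bayes update on the tiger's location after one growl. The function name and the uniform prior are illustrative assumptions. The accuracies 0.95, 0.65, and 0.5 are the ones quoted on the tiger slides. At accuracy 0.5 the posterior equals the prior, so agent i learns nothing from its own growl observations and can only rely on what it infers from j's behavior.

```python
def update_tiger_belief(p_left: float, heard_left: bool, accuracy: float) -> float:
    """Posterior probability that the tiger is behind the left door after one growl."""
    like_if_left = accuracy if heard_left else 1.0 - accuracy
    like_if_right = 1.0 - accuracy if heard_left else accuracy
    numerator = like_if_left * p_left
    return numerator / (numerator + like_if_right * (1.0 - p_left))


# Uniform prior (assumed), one growl heard from the left.
for accuracy in (0.95, 0.65, 0.5):
    print(accuracy, update_tiger_belief(0.5, True, accuracy))
```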

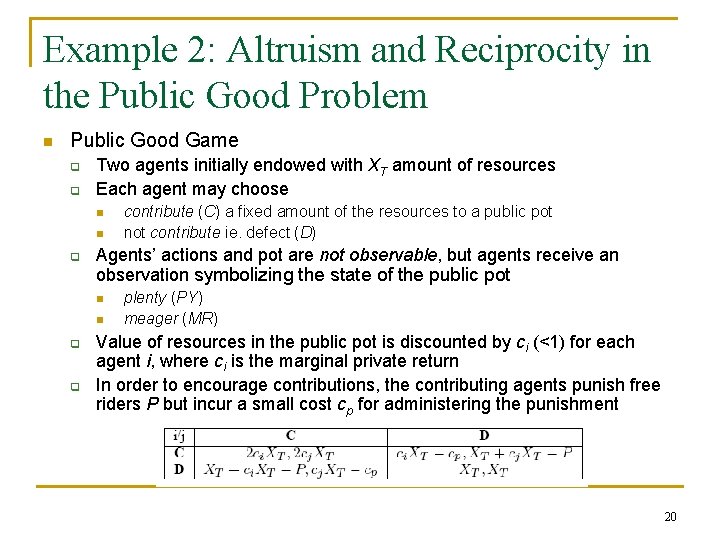

Example 2: Altruism and Reciprocity in the Public Good Problem

- Public Good Game
  - Two agents initially endowed with $X_T$ amount of resources
  - Each agent may choose to
    - contribute (C) a fixed amount of the resources to a public pot
    - not contribute, i.e. defect (D)
  - Agents' actions and pot are not observable, but agents receive an observation symbolizing the state of the public pot
    - plenty (PY)
    - meager (MR)
  - Value of resources in the public pot is discounted by $c_i$ (< 1) for each agent i, where $c_i$ is the marginal private return
  - In order to encourage contributions, the contributing agents punish free riders (punishment P) but incur a small cost $c_p$ for administering the punishment

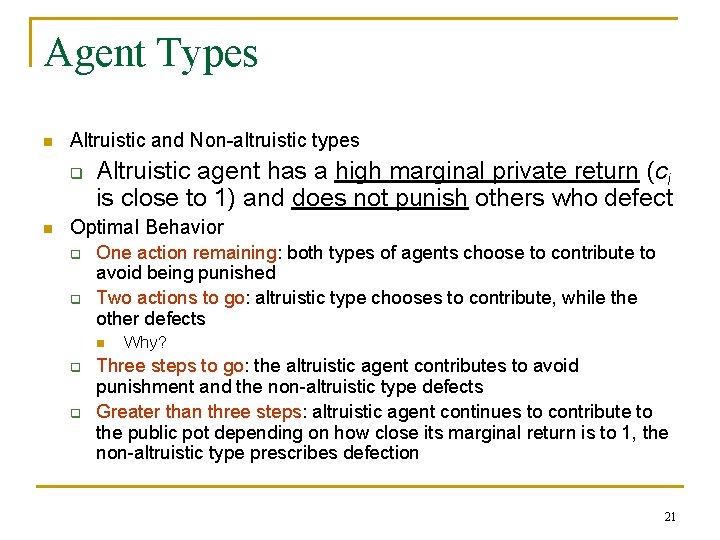

Agent Types

- Altruistic and non-altruistic types
  - Altruistic agent has a high marginal private return ($c_i$ is close to 1) and does not punish others who defect
- Optimal Behavior
  - One action remaining: both types of agents choose to contribute to avoid being punished
  - Two actions to go: altruistic type chooses to contribute, while the other defects
    - Why?
  - Three steps to go: the altruistic agent contributes to avoid punishment and the non-altruistic type defects
  - Greater than three steps: the altruistic agent continues to contribute to the public pot depending on how close its marginal return is to 1, while the non-altruistic type defects

Level 1 I-ID in the Public Good Game

- Expand over three time steps

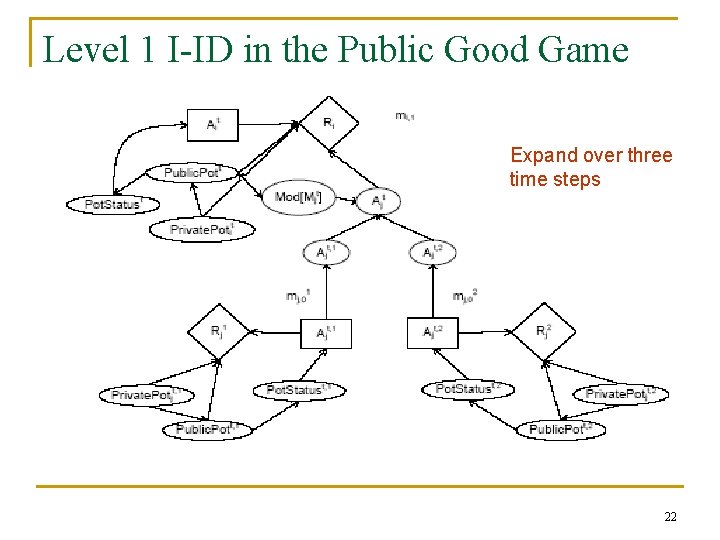

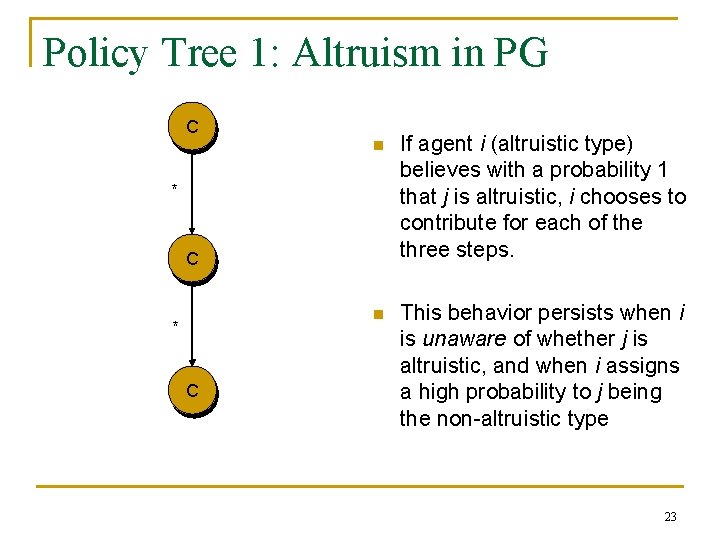

Policy Tree 1: Altruism in PG

[Figure: policy tree in which i plays C at each step for any observation (`*`).]

- If agent i (altruistic type) believes with probability 1 that j is altruistic, i chooses to contribute for each of the three steps.
- This behavior persists when i is unaware of whether j is altruistic, and when i assigns a high probability to j being the non-altruistic type.

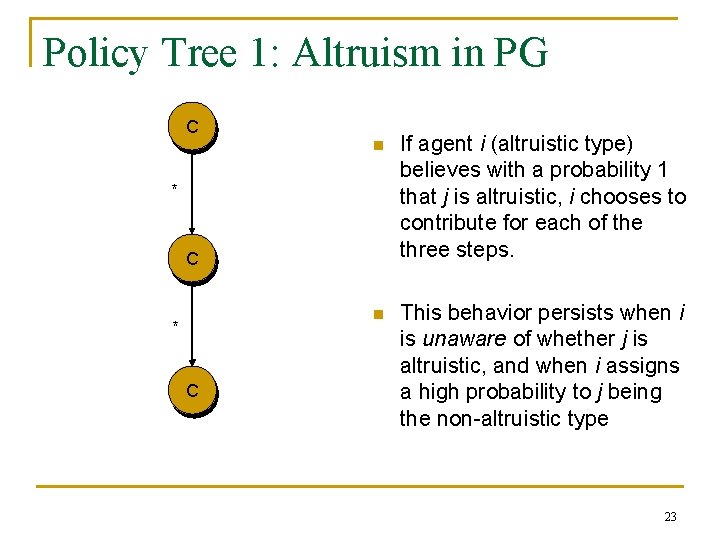

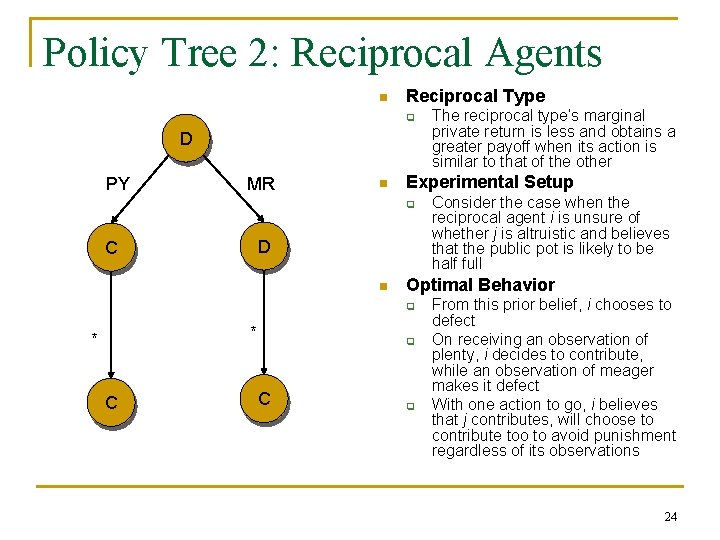

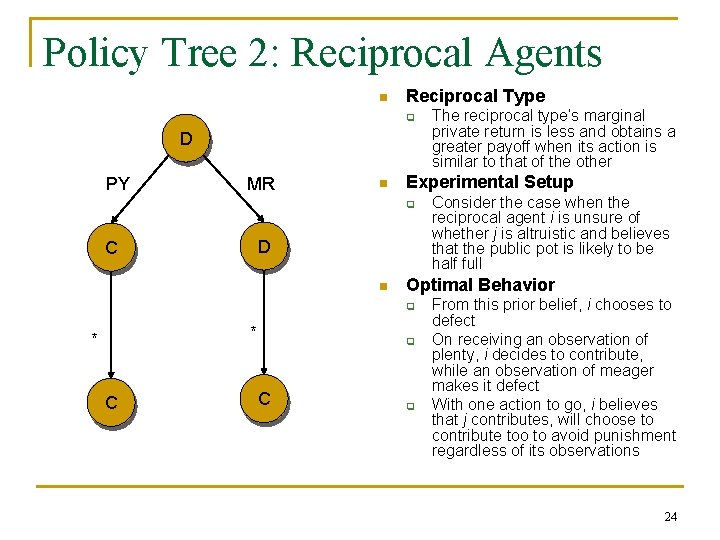

Policy Tree 2: Reciprocal Agents

[Figure: policy tree for the reciprocal agent i: D at the first step, then C after PY and D after MR, then C at the last step for any observation (`*`).]

- Reciprocal Type
  - The reciprocal type has a lower marginal private return and obtains a greater payoff when its action is similar to that of the other agent
- Experimental Setup
  - Consider the case when the reciprocal agent i is unsure of whether j is altruistic and believes that the public pot is likely to be half full
- Optimal Behavior
  - From this prior belief, i chooses to defect
  - On receiving an observation of plenty, i decides to contribute, while an observation of meager makes it defect
  - With one action to go, i, believing that j contributes, chooses to contribute too to avoid punishment regardless of its observations

Conclusion and Future Work

- I-DIDs: A general ID-based formalism for sequential decision-making in multiagent settings
  - Online counterparts of I-POMDPs
- Solving I-DIDs approximately for computational efficiency (see AAAI '07 paper on model clustering)
- Apply I-DIDs to other application domains

Visit our poster on I-DIDs today for more information

Thank You!