- Slides: 49

Indexing Information Retrieval in Practice All slides ©Addison Wesley, 2008

Indexes

- Indexes are data structures designed to make search faster
- Text search has unique requirements, which lead to unique data structures
- The most common data structure is the inverted index
  - a general name for a class of structures
  - “inverted” because documents are associated with words, rather than words with documents
  - similar to a concordance

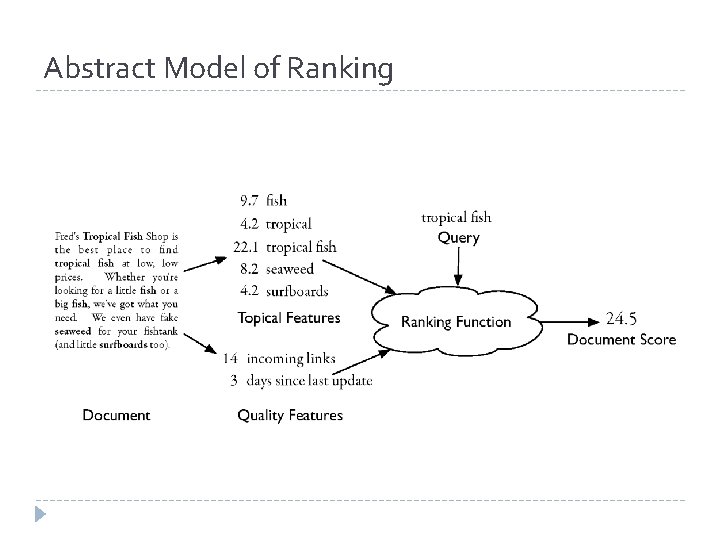

Indexes and Ranking

- Indexes are designed to support search
  - faster response time, supports updates
- Text search engines use a particular form of search: ranking
  - documents are retrieved in sorted order according to a score computed using the document representation, the query, and a ranking algorithm
- What is a reasonable abstract model for ranking?
  - enables discussion of indexes without details of the retrieval model

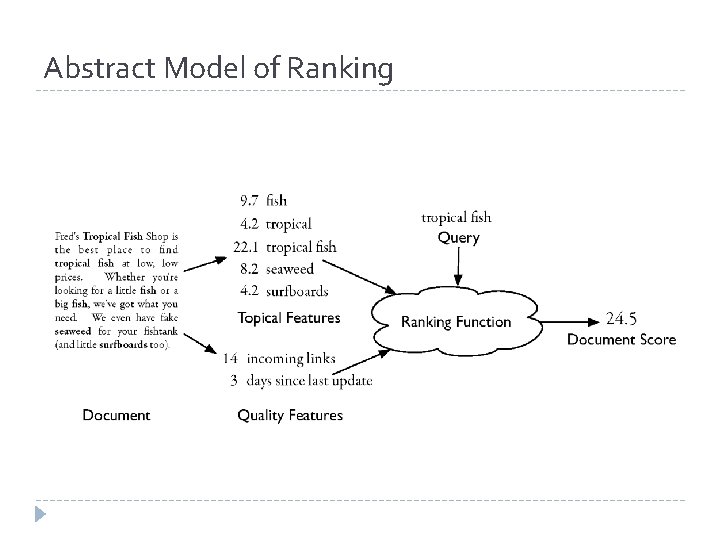

Abstract Model of Ranking

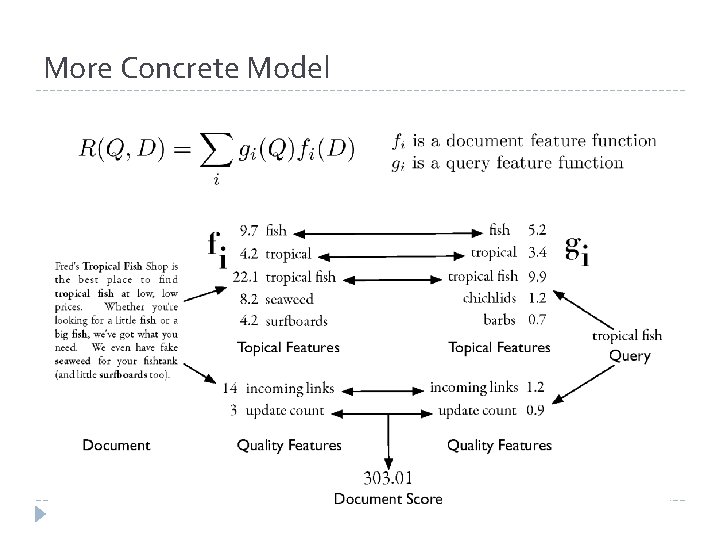

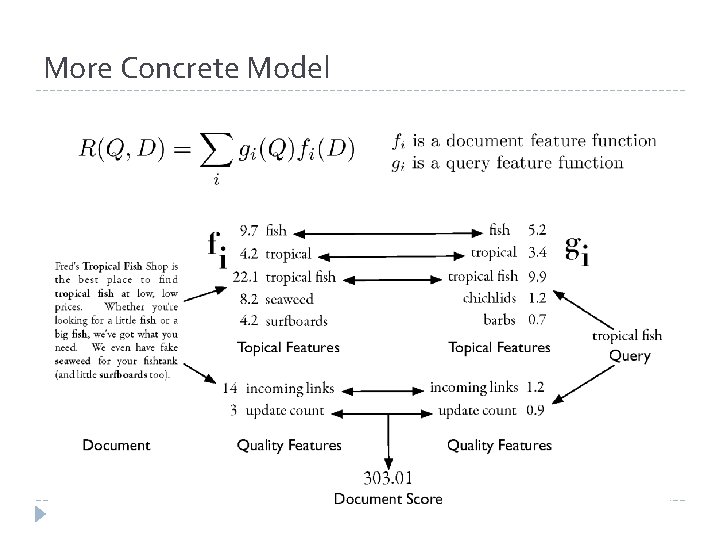

More Concrete Model

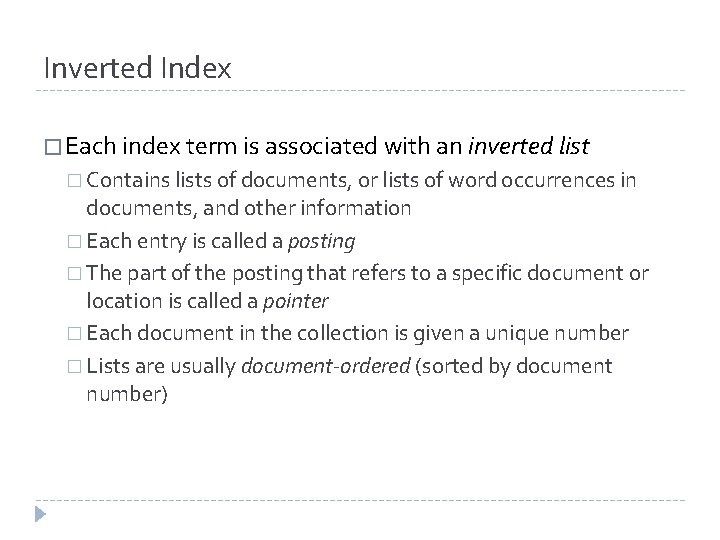

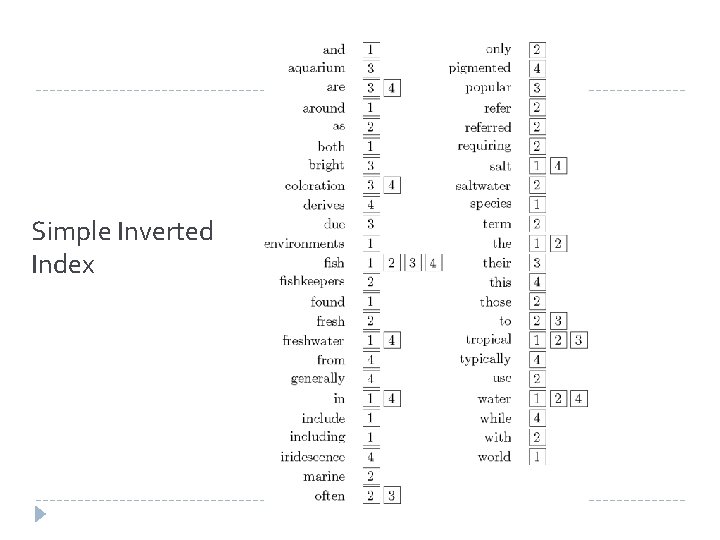

Inverted Index

- Each index term is associated with an inverted list
  - Contains lists of documents, or lists of word occurrences in documents, and other information
  - Each entry is called a posting
  - The part of the posting that refers to a specific document or location is called a pointer
  - Each document in the collection is given a unique number
  - Lists are usually document-ordered (sorted by document number)

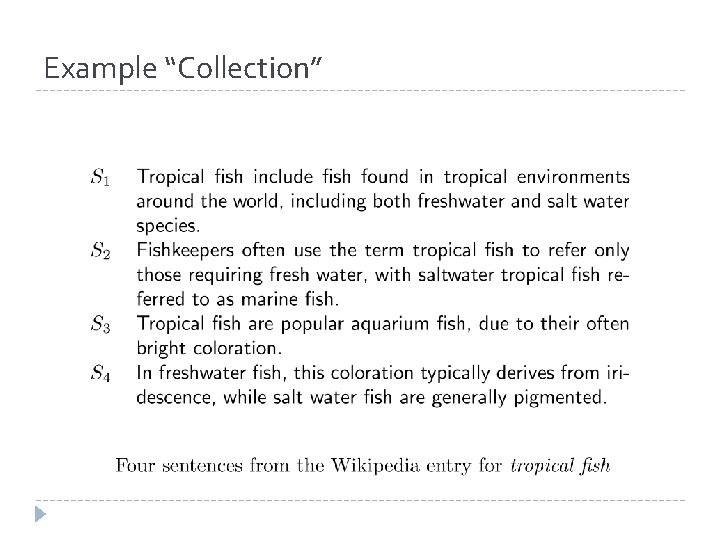

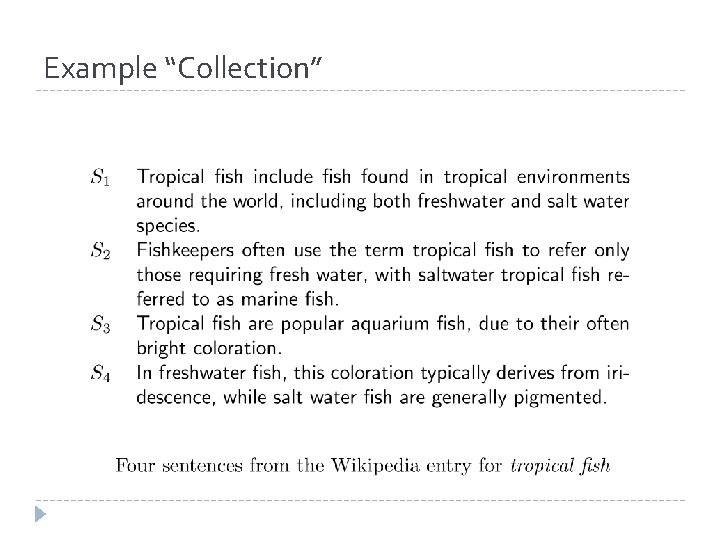
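The inverted list structure described above can be sketched in a few lines of Python. This is a minimal illustration, not the indexer from the slides: the function name `build_inverted_index`, the whitespace tokenizer, and the two sample documents are assumptions made for the example. Each posting is a `(doc_id, positions)` pair, so the count for a document is simply `len(positions)`.

```python
from collections import defaultdict

def build_inverted_index(docs):
    """Map each term to a document-ordered list of (doc_id, positions) postings."""
    index = defaultdict(list)
    # Documents are numbered 1, 2, 3, ... in collection order (an assumption),
    # so appending postings as we go keeps every list sorted by document number.
    for doc_id, text in enumerate(docs, start=1):
        positions = defaultdict(list)
        for pos, token in enumerate(text.lower().split(), start=1):
            positions[token].append(pos)
        for term, pos_list in positions.items():
            index[term].append((doc_id, pos_list))
    return dict(index)

# Hypothetical two-document collection.
docs = [
    "tropical fish include fish found in tropical environments",
    "fishkeepers often use the term tropical fish",
]
index = build_inverted_index(docs)
print(index["fish"])  # postings for "fish": document number plus word positions
```

Dropping the position lists gives the simple index, and replacing them with their lengths gives the index with counts.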

Example “Collection”

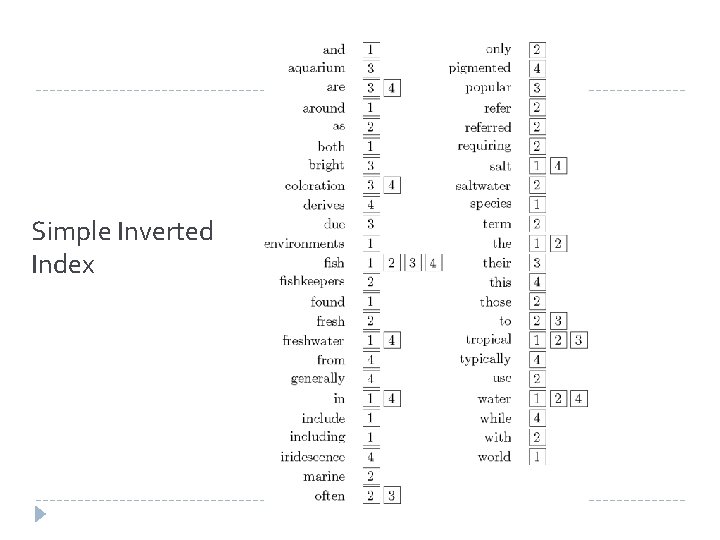

Simple Inverted Index

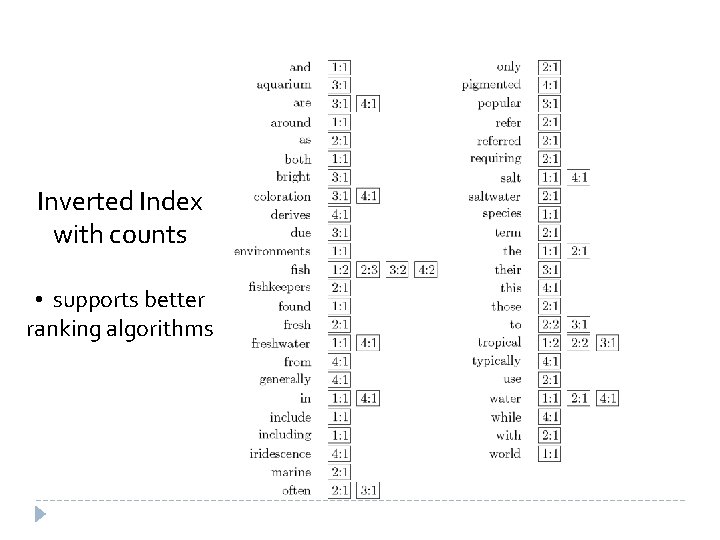

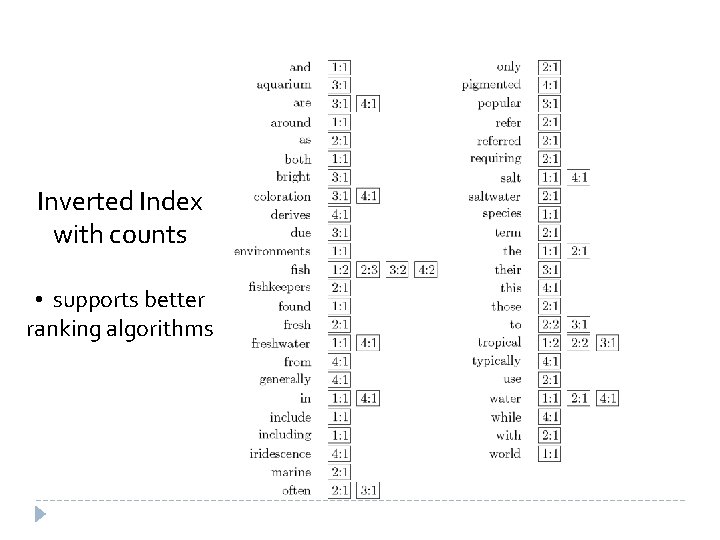

Inverted Index with counts

- supports better ranking algorithms

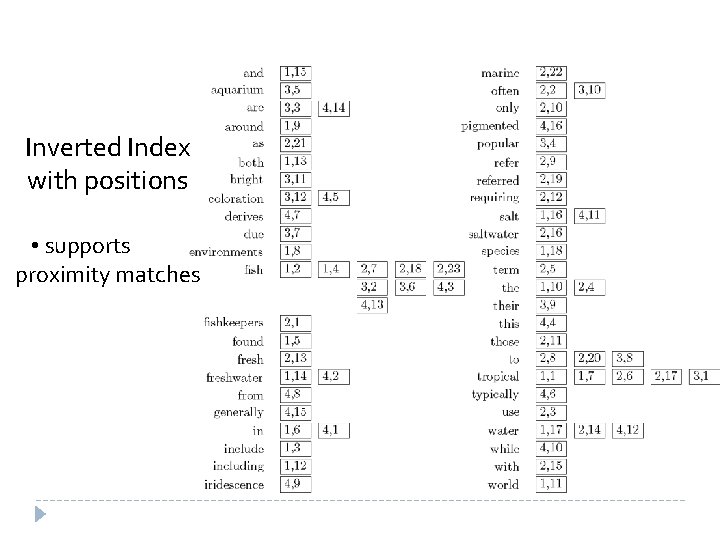

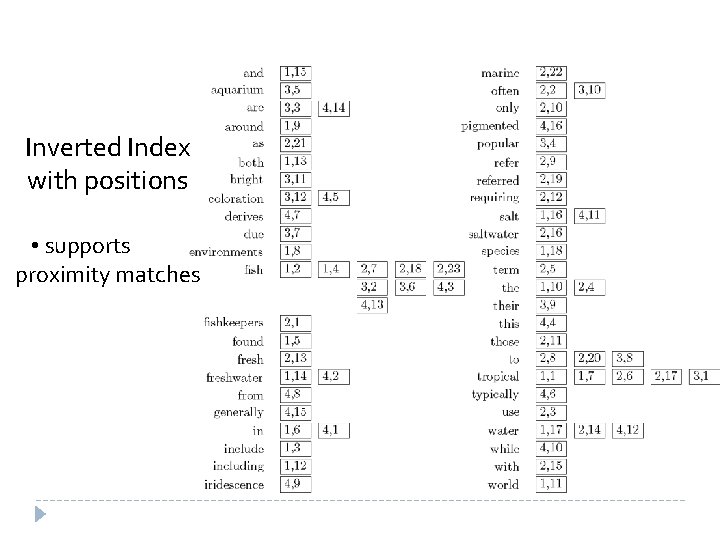

Inverted Index with positions

- supports proximity matches

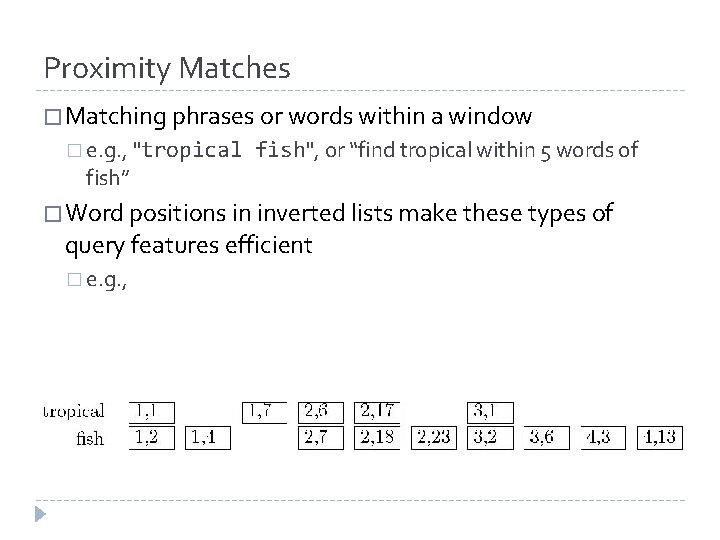

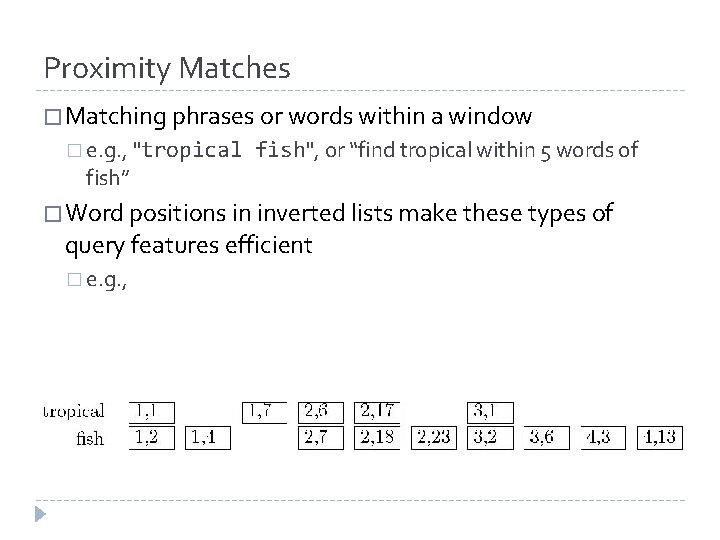

Proximity Matches

- Matching phrases or words within a window
  - e.g., “tropical fish”, or “find tropical within 5 words of fish”
- Word positions in inverted lists make these types of query features efficient
  - e.g.,

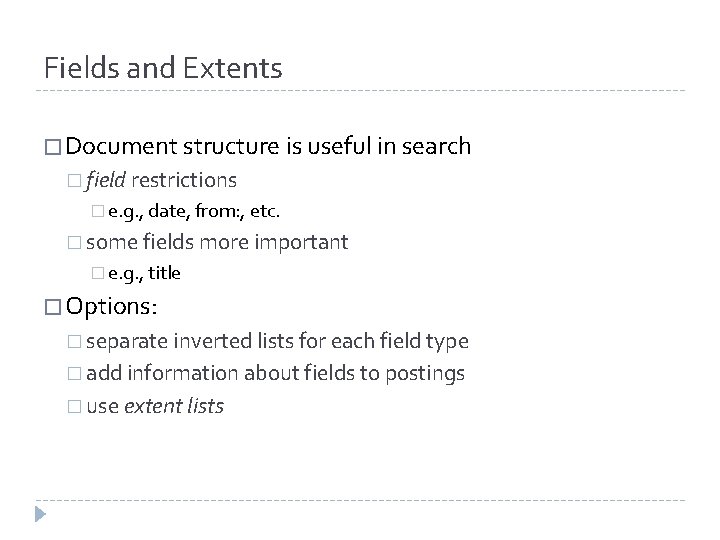
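As a rough sketch of how word positions support these queries, the two functions below test a phrase match and a window match for a single document. The function names and the sample position lists are hypothetical; the inputs are assumed to be sorted position lists taken from the postings of two terms for the same document.

```python
def phrase_match(first_positions, second_positions):
    """True if the second term occurs immediately after the first term."""
    second = set(second_positions)
    return any(p + 1 in second for p in first_positions)

def window_match(positions_a, positions_b, window):
    """True if some occurrence of a is within `window` words of some occurrence of b."""
    i = j = 0
    # Walk both sorted lists together, always advancing the smaller position.
    while i < len(positions_a) and j < len(positions_b):
        if abs(positions_a[i] - positions_b[j]) <= window:
            return True
        if positions_a[i] < positions_b[j]:
            i += 1
        else:
            j += 1
    return False

# Hypothetical positions of "tropical" and "fish" in one document.
tropical, fish = [1, 7], [2, 4]
print(phrase_match(tropical, fish))     # is "tropical fish" present?
print(window_match(tropical, fish, 5))  # "tropical" within 5 words of "fish"?
```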

Fields and Extents

- Document structure is useful in search
  - field restrictions
    - e.g., date, from:, etc.
  - some fields are more important
    - e.g., title
- Options:
  - separate inverted lists for each field type
  - add information about fields to postings
  - use extent lists

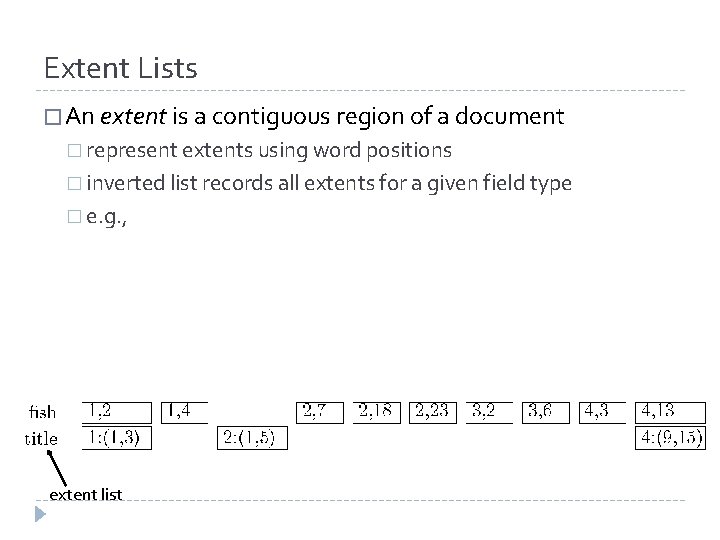

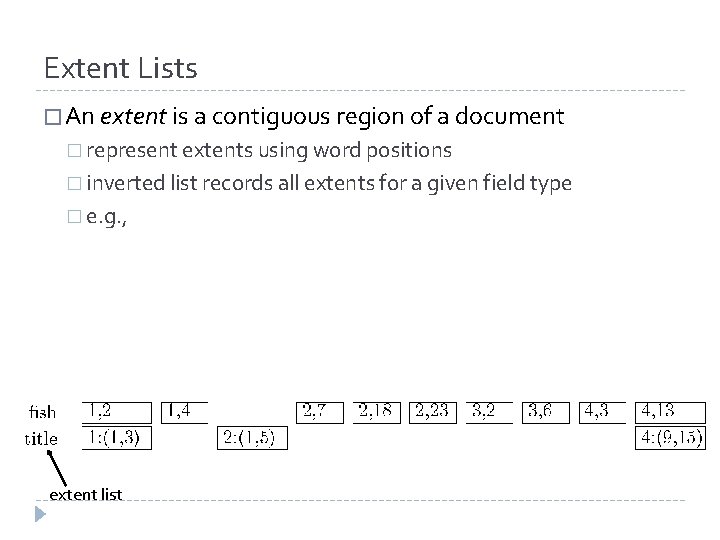

Extent Lists

- An extent is a contiguous region of a document
  - represent extents using word positions
  - inverted list records all extents for a given field type
  - e.g., extent list
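One way to picture an extent list is as a set of `(start, end)` word-position ranges per document. The sketch below filters a term's positions down to those that fall inside a field's extents. The half-open range convention, the function name, and the sample data are assumptions for illustration only.

```python
def positions_in_extents(positions, extents):
    """Keep only the word positions that fall inside some extent.

    `extents` is a list of (start, end) word-position pairs, treated here
    as half-open ranges: start is included, end is not (an assumption).
    """
    return [p for p in positions
            if any(start <= p < end for start, end in extents)]

# Hypothetical data for one document: "fish" occurs at positions 2, 4, and 9,
# and the title field covers word positions 1 and 2.
fish_positions = [2, 4, 9]
title_extents = [(1, 3)]
print(positions_in_extents(fish_positions, title_extents))
```

A field-restricted query such as "fish in title" would then match this document only if the filtered list is non-empty.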

Other Issues

- Precomputed scores in inverted list
  - e.g., list for “fish” [(1:3.6), (3:2.2)], where 3.6 is the total feature value for document 1
  - improves speed but reduces flexibility
- Score-ordered lists
  - query processing engine can focus only on the top part of each inverted list, where the highest-scoring documents are recorded
  - very efficient for single-word queries

Compression

- Inverted lists are very large
  - e.g., 25-50% of collection for TREC collections using Indri search engine
  - much higher if n-grams are indexed
- Compression of indexes saves disk and/or memory space
  - typically have to decompress lists to use them
  - best compression techniques have good compression ratios and are easy to decompress
- Lossless compression: no information lost

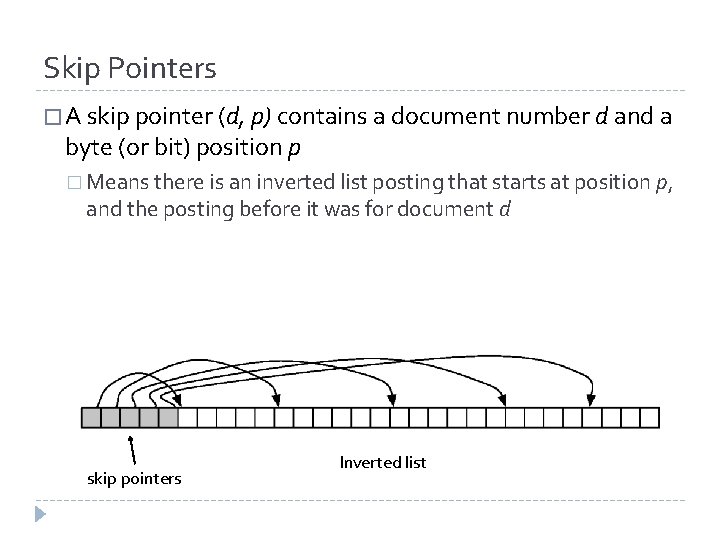

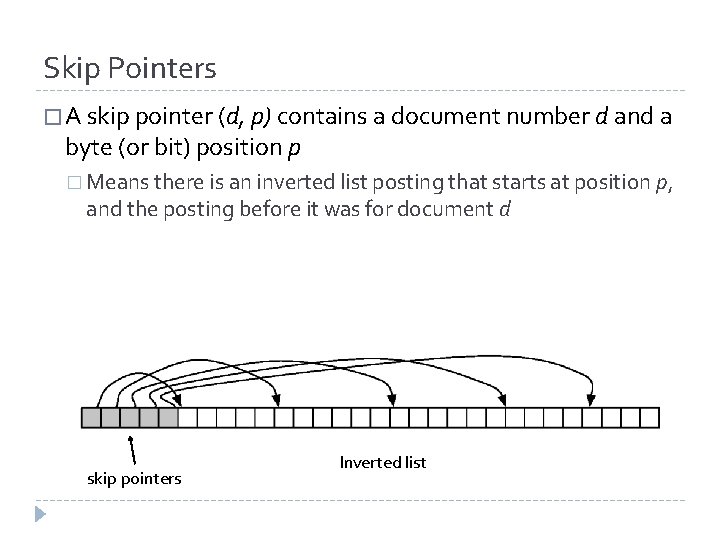
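To make the idea of lossless inverted list compression concrete, here is a small sketch that stores document numbers as d-gaps (differences between consecutive numbers) and then applies a variable-byte code. The specific byte layout (low 7 bits first, high bit set on the final byte of each number) is one common convention chosen for this example, not necessarily the one in the slides, and all function names are hypothetical.

```python
def to_dgaps(doc_ids):
    """Replace sorted document numbers with gaps between consecutive numbers."""
    gaps, prev = [], 0
    for d in doc_ids:
        gaps.append(d - prev)
        prev = d
    return gaps

def from_dgaps(gaps):
    """Invert to_dgaps by keeping a running sum."""
    doc_ids, total = [], 0
    for g in gaps:
        total += g
        doc_ids.append(total)
    return doc_ids

def vbyte_encode(numbers):
    """Encode non-negative integers, 7 bits per byte, high bit marks the last byte."""
    out = bytearray()
    for n in numbers:
        while n >= 128:
            out.append(n & 0x7F)
            n >>= 7
        out.append(n | 0x80)
    return bytes(out)

def vbyte_decode(data):
    """Decode the byte string produced by vbyte_encode."""
    numbers, n, shift = [], 0, 0
    for byte in data:
        if byte & 0x80:  # final byte of this number
            numbers.append(n | ((byte & 0x7F) << shift))
            n, shift = 0, 0
        else:
            n |= byte << shift
            shift += 7
    return numbers

doc_ids = [1, 5, 9, 18, 23, 24, 30, 44, 45, 48]  # hypothetical inverted list
encoded = vbyte_encode(to_dgaps(doc_ids))
print(len(encoded), "bytes for", len(doc_ids), "postings")
```

Small gaps fit in a single byte each, which is why d-gaps and a variable-length code work well together, and decoding recovers the original list exactly.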

Skipping

- Search involves comparison of inverted lists of different lengths
  - can be very inefficient
  - “skipping” ahead to check document numbers is much better
  - compression makes this difficult
    - variable size, only d-gaps stored
- Skip pointers are an additional data structure to support skipping

Skip Pointers

- A skip pointer (d, p) contains a document number d and a byte (or bit) position p
  - means there is an inverted list posting that starts at position p, and the posting before it was for document d

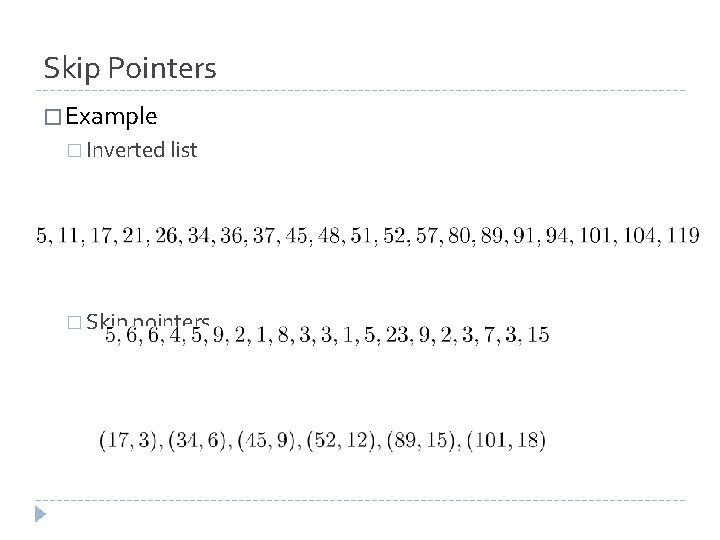

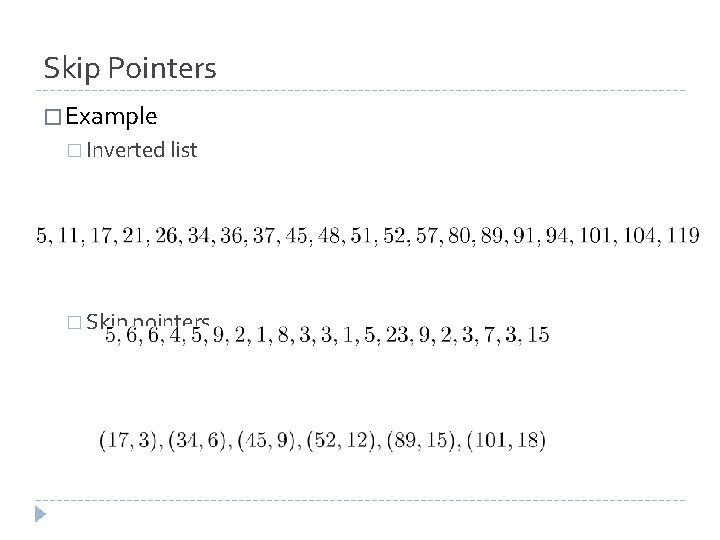

Skip Pointers

- Example
  - Inverted list
  - D-gaps
  - Skip pointers

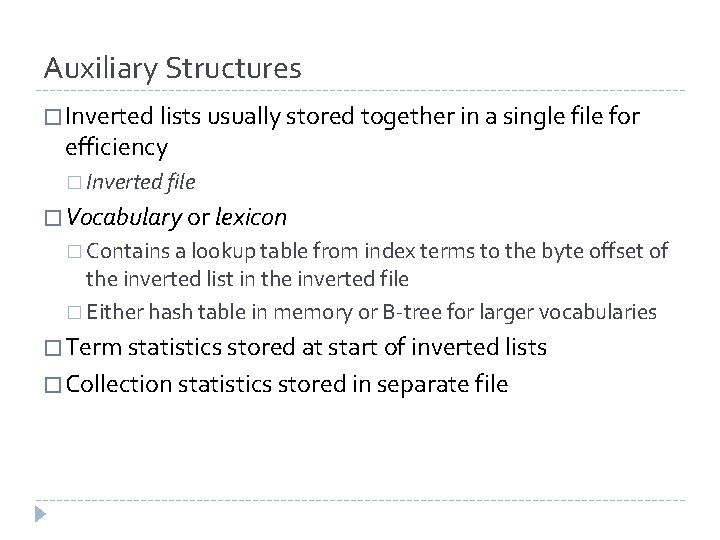
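The following sketch shows how skip pointers of the form (d, p) can be used to look up a document in a d-gap list without decoding it from the start. For simplicity, the position p here is an index into the list of d-gaps rather than a byte or bit offset, and a pointer is placed every three postings. Both choices, the function names, and the document numbers are assumptions made for the example.

```python
def build_skips(doc_ids, every=3):
    """Return (gaps, skips) where each skip (d, p) means:
    the posting at index p follows the posting for document d."""
    gaps = [d - prev for prev, d in zip([0] + doc_ids[:-1], doc_ids)]
    skips = [(doc_ids[p - 1], p) for p in range(every, len(doc_ids), every)]
    return gaps, skips

def contains(gaps, skips, target):
    """Check whether `target` is in the list, using skip pointers to jump ahead."""
    doc, pos = 0, 0
    # Use the last skip pointer whose document number is below the target.
    for d, p in skips:
        if d < target:
            doc, pos = d, p
        else:
            break
    # Decode d-gaps from that point until we reach or pass the target.
    while pos < len(gaps):
        doc += gaps[pos]
        if doc >= target:
            return doc == target
        pos += 1
    return False

# Hypothetical inverted list of document numbers.
doc_ids = [5, 11, 17, 21, 26, 34, 36, 37, 45, 48,
           51, 52, 57, 80, 89, 91, 94, 101, 104, 119]
gaps, skips = build_skips(doc_ids)
print(skips)
print(contains(gaps, skips, 80), contains(gaps, skips, 85))
```

Because only d-gaps are stored, the document number d in the pointer is what lets decoding restart in the middle of the list.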

Auxiliary Structures

- Inverted lists are usually stored together in a single file for efficiency
  - the inverted file
- Vocabulary or lexicon
  - contains a lookup table from index terms to the byte offset of the inverted list in the inverted file
  - either a hash table in memory or a B-tree for larger vocabularies
- Term statistics stored at start of inverted lists
- Collection statistics stored in a separate file

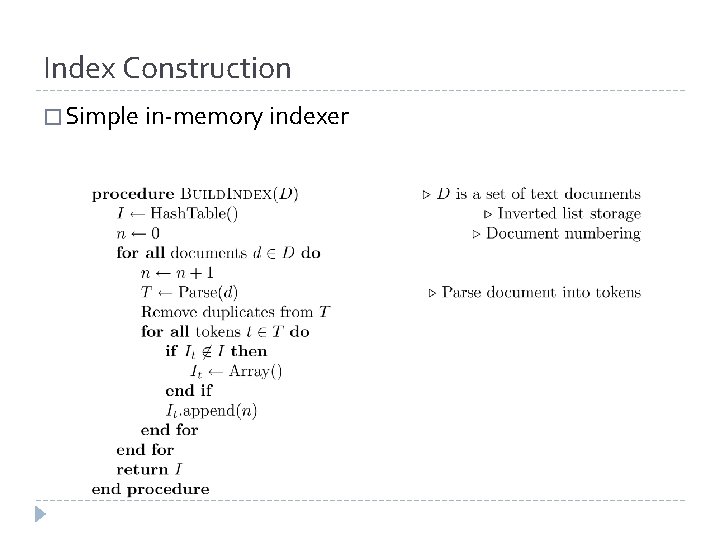

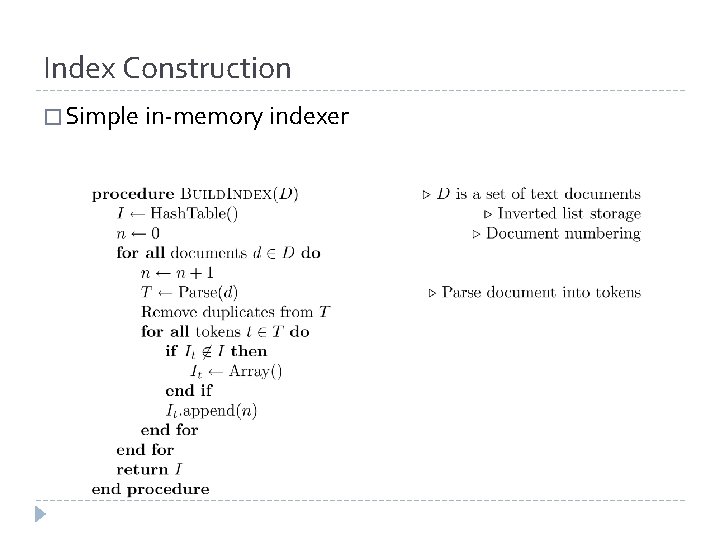

Index Construction

- Simple in-memory indexer

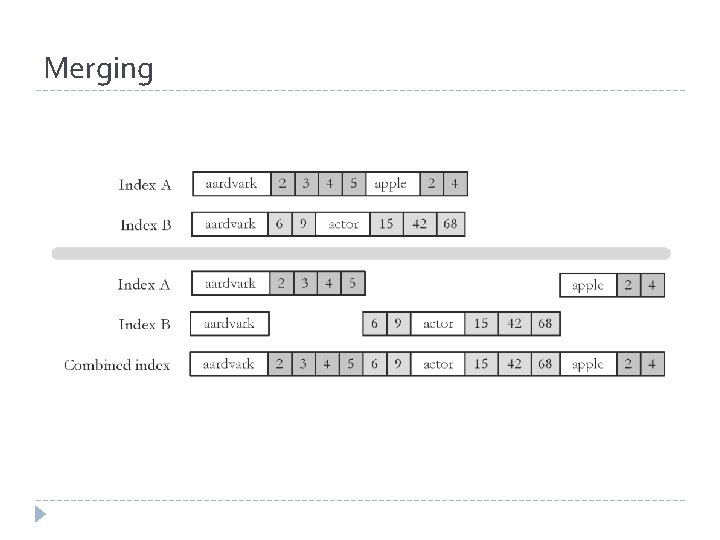

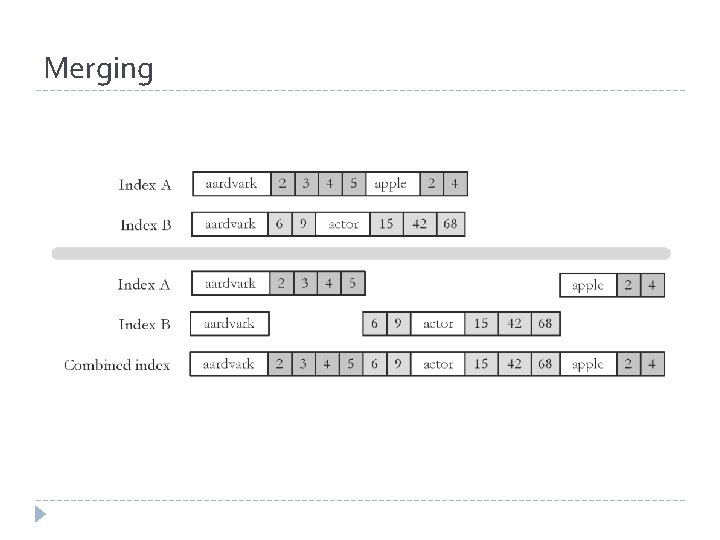

Merging

- Merging addresses the limited memory problem
  - build the inverted list structure until memory runs out
  - then write the partial index to disk and start making a new one
  - at the end of this process, the disk is filled with many partial indexes, which are merged
- Partial lists must be designed so they can be merged in small pieces
  - e.g., storing in alphabetical order

Merging

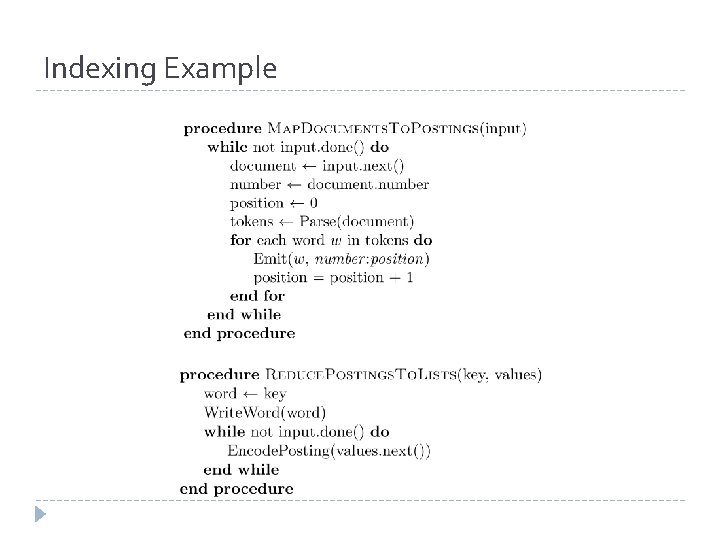
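A minimal sketch of the merge step, assuming each partial index is a list of `(term, postings)` pairs already sorted alphabetically by term. Python's `heapq.merge` reads the inputs lazily and in order, which mirrors merging in small pieces. In-memory lists stand in for files on disk, and the function name and sample data are hypothetical.

```python
import heapq
from itertools import groupby
from operator import itemgetter

def merge_partial_indexes(partials):
    """Merge partial indexes, each a list of (term, postings) sorted by term."""
    merged = []
    # heapq.merge yields pairs in term order; for equal terms, pairs from
    # earlier partial indexes come first, so postings stay document-ordered
    # as long as the partial indexes were written in document order.
    stream = heapq.merge(*partials, key=itemgetter(0))
    for term, group in groupby(stream, key=itemgetter(0)):
        postings = []
        for _, part in group:
            postings.extend(part)
        merged.append((term, postings))
    return merged

# Two hypothetical partial indexes; the second covers later documents.
partial_1 = [("fish", [1, 2]), ("tropical", [1])]
partial_2 = [("aquarium", [3]), ("fish", [4])]
print(merge_partial_indexes([partial_1, partial_2]))
```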

Distributed Indexing

- Distributed processing driven by need to index and analyze huge amounts of data (i.e., the Web)
- Large numbers of inexpensive servers used rather than larger, more expensive machines
- MapReduce is a distributed programming tool designed for indexing and analysis tasks

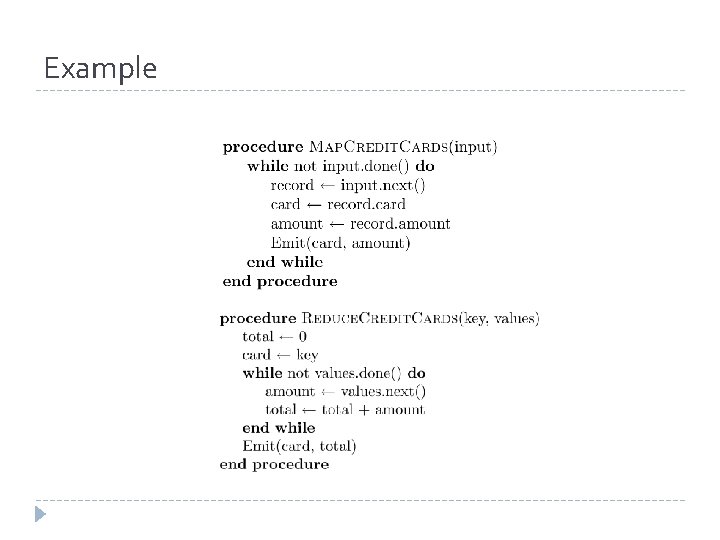

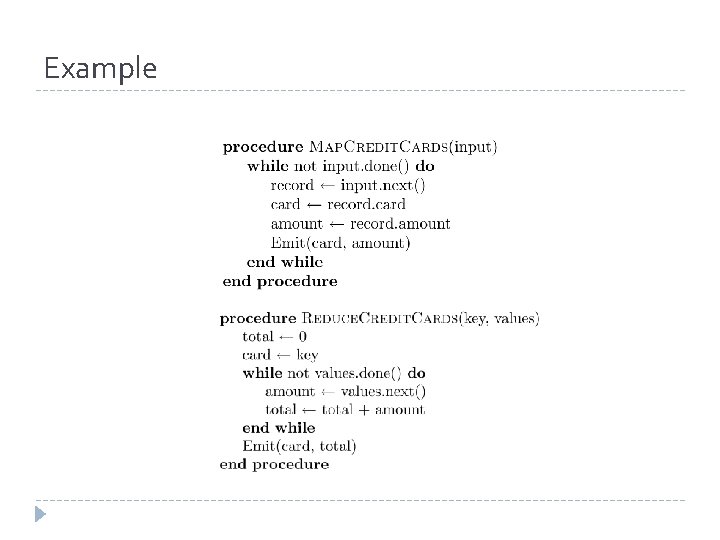

Example

- Given a large text file that contains data about credit card transactions
  - each line of the file contains a credit card number and an amount of money
  - determine the number of unique credit card numbers
- Could use a hash table, but this runs into memory problems
  - counting is simple with a sorted file
- Similar with distributed approach
  - sorting and placement are crucial

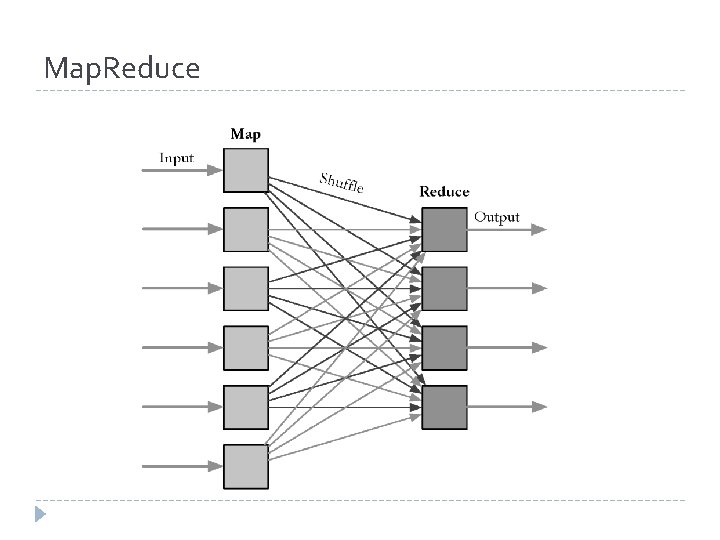
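The point about sorted files can be sketched as follows: once lines are sorted by card number, identical numbers are adjacent, so counting unique values needs only the previous value rather than a table of everything seen. Here Python's in-memory `sorted` stands in for an external (on-disk) sort, and the card numbers and amounts are made up for the example.

```python
def count_unique_cards(lines):
    """Count distinct card numbers in 'card amount' lines by sorting first."""
    count, previous = 0, None
    # Sorting places equal card numbers next to each other, so a single
    # pass that remembers only the previous card number is enough.
    for line in sorted(lines, key=lambda text: text.split()[0]):
        card = line.split()[0]
        if card != previous:
            count += 1
            previous = card
    return count

# Hypothetical transaction lines.
transactions = [
    "4111 10.00",
    "4222 5.00",
    "4111 7.50",
    "4333 1.00",
    "4222 2.00",
]
print(count_unique_cards(transactions))
```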

MapReduce

- Distributed programming framework that focuses on data placement and distribution
- Mapper
  - generally, transforms a list of items into another list of items of the same length
- Reducer
  - transforms a list of items into a single item
  - definitions not so strict in terms of number of outputs
- Many mapper and reducer tasks on a cluster of machines

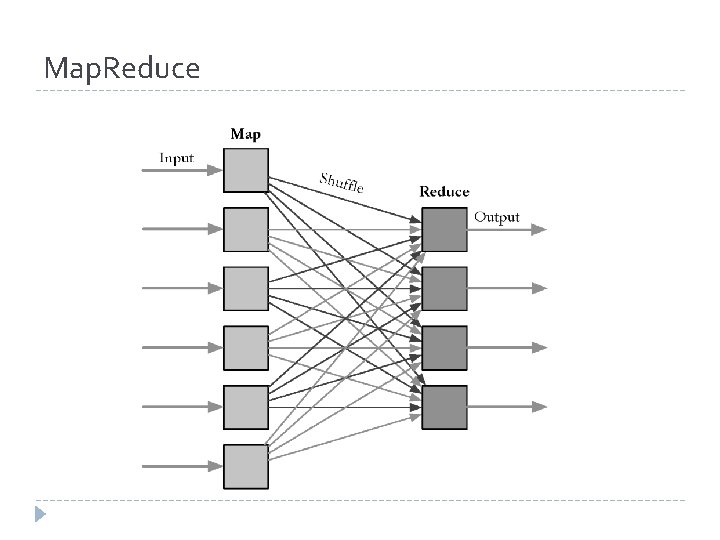

MapReduce

- Basic process
  - Map stage, which transforms data records into pairs, each with a key and a value
  - Shuffle uses a hash function so that all pairs with the same key end up next to each other and on the same machine
  - Reduce stage processes records in batches, where all pairs with the same key are processed at the same time
- Idempotence of Mapper and Reducer provides fault tolerance
  - multiple operations on the same input give the same output
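The three stages can be imitated in a single process to see how they fit together for indexing. This is only a sketch of the data flow: there is no cluster, no hashing to machines, and no fault tolerance, and the function names and sample documents are hypothetical.

```python
from collections import defaultdict

def map_document(doc_id, text):
    """Map: emit one (term, (doc_id, position)) pair per word occurrence."""
    return [(word, (doc_id, pos))
            for pos, word in enumerate(text.split(), start=1)]

def shuffle(pairs):
    """Shuffle: bring all values with the same key together.
    A real framework would hash each key to pick a machine."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_term(term, values):
    """Reduce: all values for one term arrive together; sort them into a list."""
    return term, sorted(values)

def run_indexing(docs):
    pairs = [pair
             for doc_id, text in docs.items()
             for pair in map_document(doc_id, text)]
    return dict(reduce_term(term, values)
                for term, values in shuffle(pairs).items())

docs = {1: "tropical fish", 2: "fish tank"}  # hypothetical collection
print(run_indexing(docs))
```

Both `map_document` and `reduce_term` return the same output whenever they are given the same input, which is the property that lets a framework rerun a failed task safely.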

MapReduce

Example

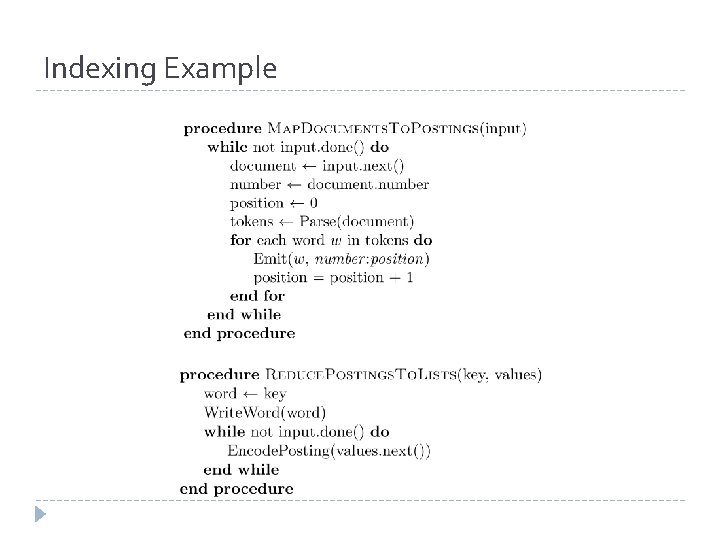

Indexing Example

Result Merging

- Index merging is a good strategy for handling updates when they come in large batches
- For small updates this is very inefficient
  - instead, create a separate index for new documents and merge results from both searches
  - could be in-memory, fast to update and search
- Deletions handled using a delete list
  - modifications done by putting the old version on the delete list and adding the new version to the new documents index

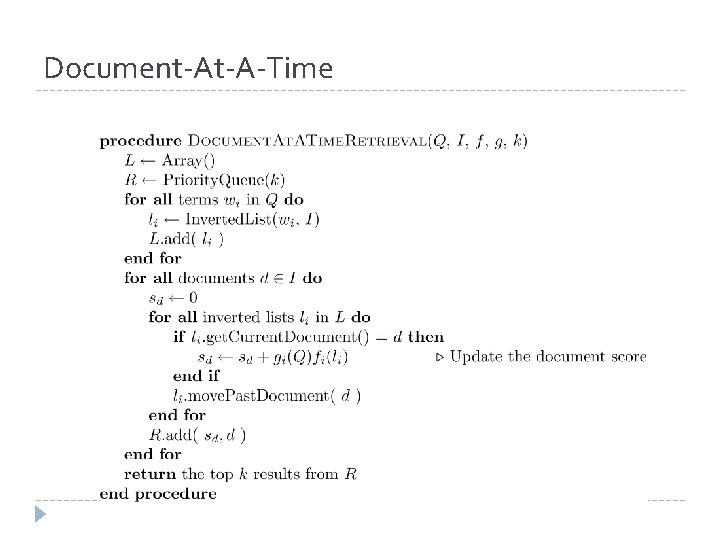

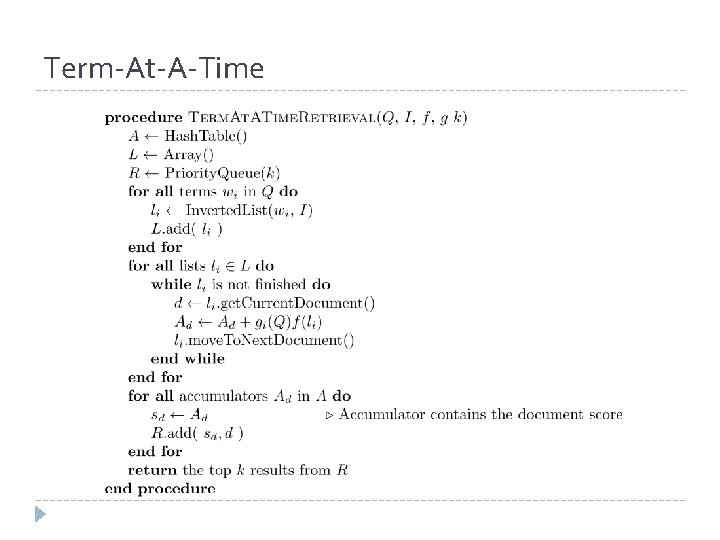
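A small sketch of result merging with a delete list, under some simplifying assumptions: hits are `(score, doc_id)` pairs, scores from the two indexes are directly comparable, and the delete list is applied only to the main index so that the new version of a modified document survives. The function name and data are hypothetical.

```python
def merged_search(main_hits, new_hits, delete_list, k):
    """Combine hits from the main index and the new-documents index.

    Hits are (score, doc_id) pairs. Documents on the delete list are
    filtered out of the main index results only.
    """
    live = [hit for hit in main_hits if hit[1] not in delete_list]
    return sorted(live + list(new_hits), reverse=True)[:k]

# Document 2 was modified: its old version is on the delete list and its
# new version was scored by the new-documents index.
main_hits = [(3.0, 1), (2.0, 2), (1.0, 3)]
new_hits = [(2.5, 2), (0.5, 9)]
print(merged_search(main_hits, new_hits, delete_list={2}, k=3))
```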

Query Processing

- Document-at-a-time
  - calculates complete scores for documents by processing all term lists, one document at a time
- Term-at-a-time
  - accumulates scores for documents by processing term lists one at a time
- Both approaches have optimization techniques that significantly reduce the time required to generate scores

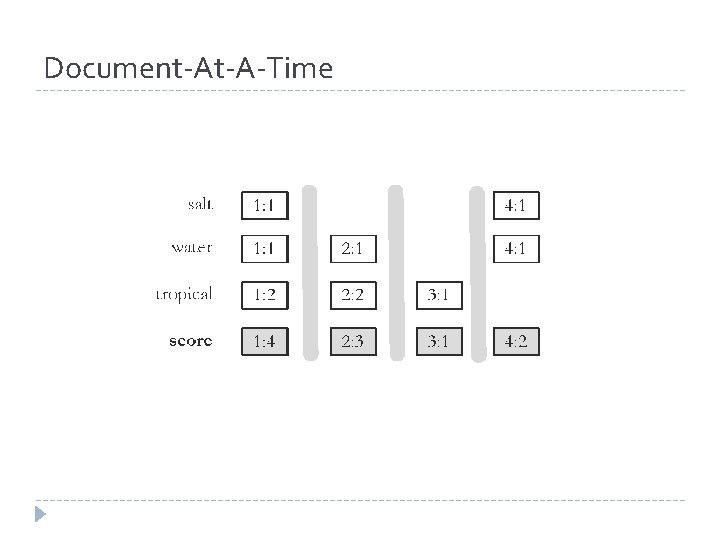

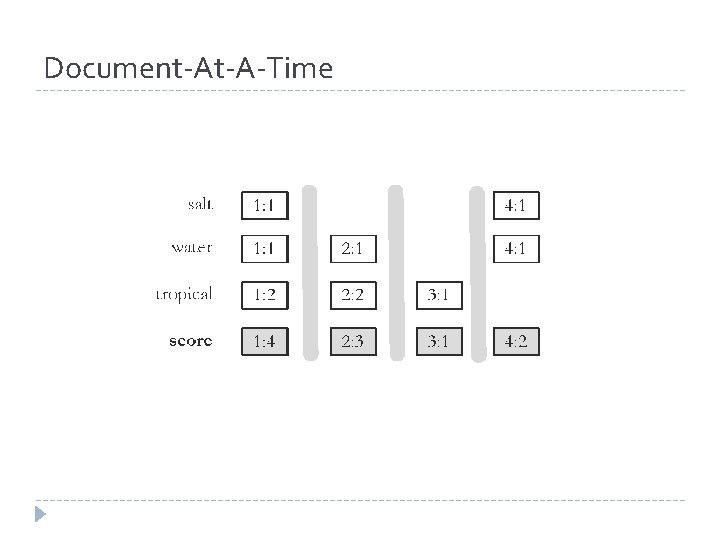

Document-At-A-Time

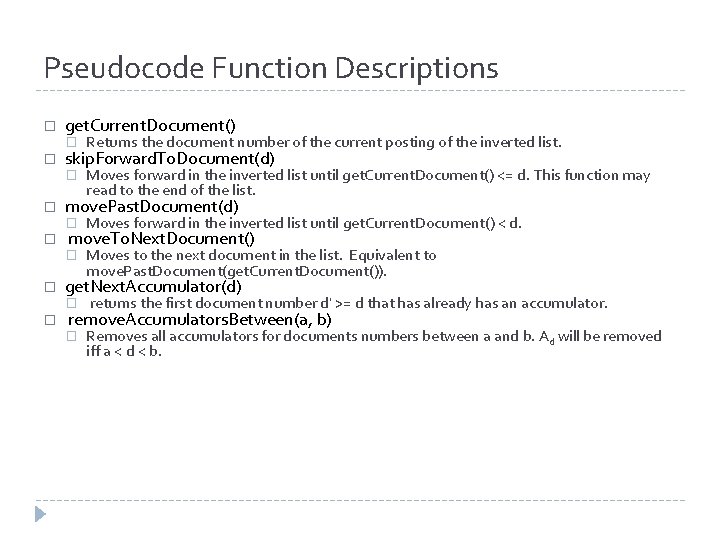

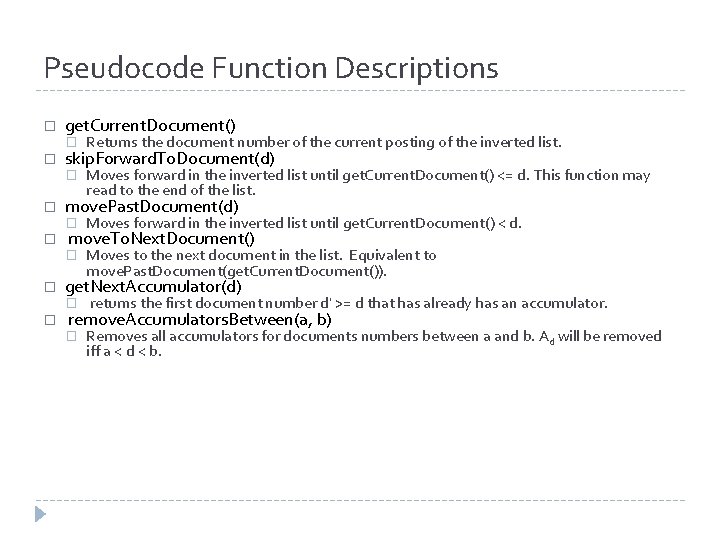
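A compact sketch of document-at-a-time evaluation, assuming each inverted list is a document-ordered list of `(doc_id, count)` postings and using the sum of counts as a stand-in for the real feature functions. Rather than looping over every document in the collection, this variant always moves to the smallest document number under any list cursor. The names and data are hypothetical.

```python
import heapq

def document_at_a_time(query, index, k):
    """Return the top-k (score, doc_id) pairs, best first."""
    lists = [index.get(term, []) for term in query]
    cursors = [0] * len(lists)
    top = []  # min-heap holding the best k (score, doc_id) pairs so far
    while True:
        current = [lst[c][0] for lst, c in zip(lists, cursors) if c < len(lst)]
        if not current:
            break  # every list is exhausted
        doc = min(current)
        score = 0
        # Finish scoring this document across all lists before moving on.
        for i, lst in enumerate(lists):
            c = cursors[i]
            if c < len(lst) and lst[c][0] == doc:
                score += lst[c][1]
                cursors[i] += 1
        heapq.heappush(top, (score, doc))
        if len(top) > k:
            heapq.heappop(top)
    return sorted(top, reverse=True)

# Hypothetical index with counts.
index = {
    "tropical": [(1, 2), (2, 1), (3, 1)],
    "fish": [(1, 2), (2, 3), (4, 2)],
}
print(document_at_a_time(["tropical", "fish"], index, k=2))
```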

Pseudocode Function Descriptions

- getCurrentDocument()
  - Returns the document number of the current posting of the inverted list.
- skipForwardToDocument(d)
  - Moves forward in the inverted list until getCurrentDocument() >= d. This function may read to the end of the list.
- movePastDocument(d)
  - Moves forward in the inverted list until getCurrentDocument() > d.
- moveToNextDocument()
  - Moves to the next document in the list. Equivalent to movePastDocument(getCurrentDocument()).
- getNextAccumulator(d)
  - Returns the first document number d' >= d that already has an accumulator.
- removeAccumulatorsBetween(a, b)
  - Removes all accumulators for document numbers between a and b. A_d will be removed iff a < d < b.

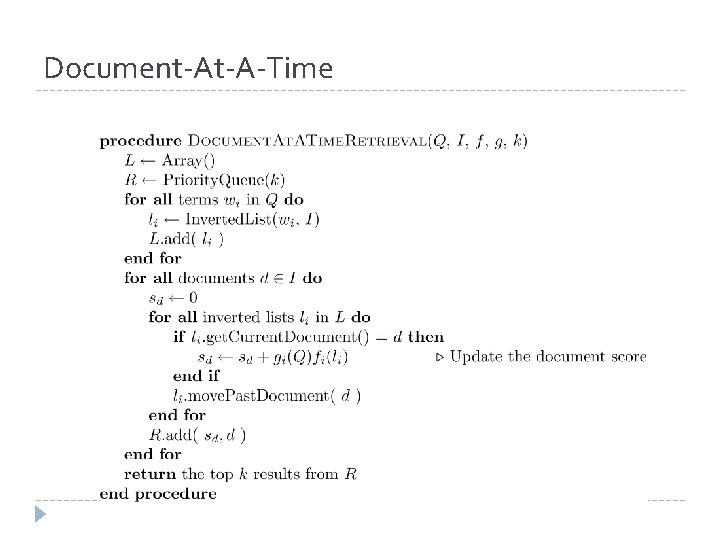

Document-At-A-Time

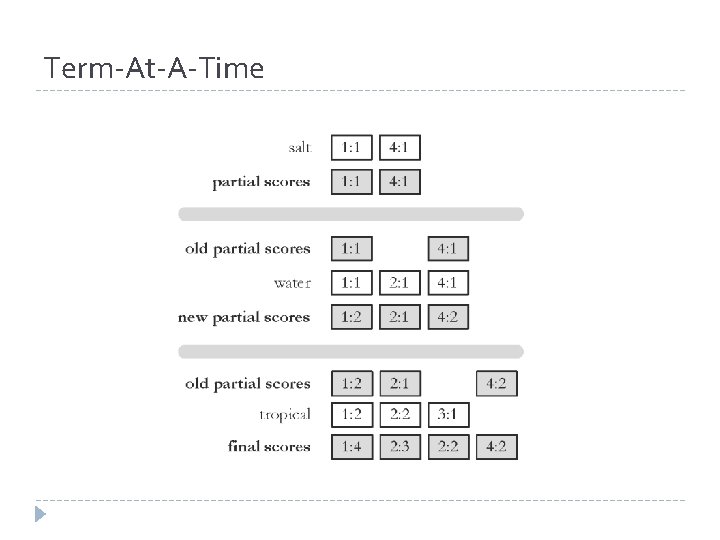

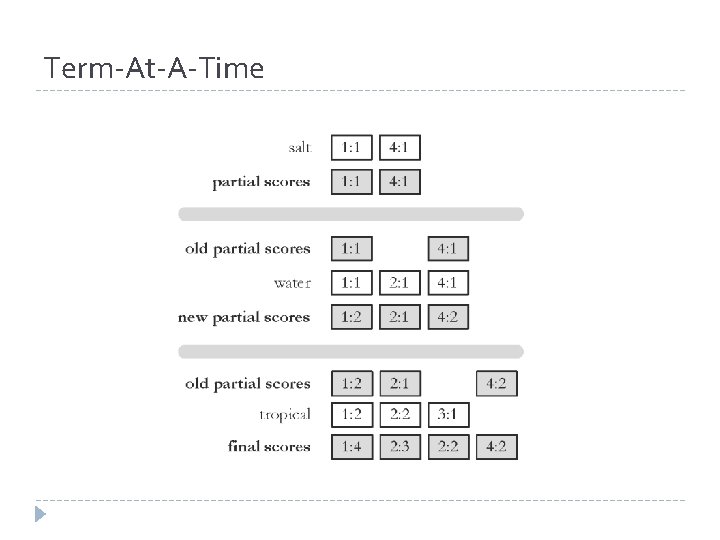

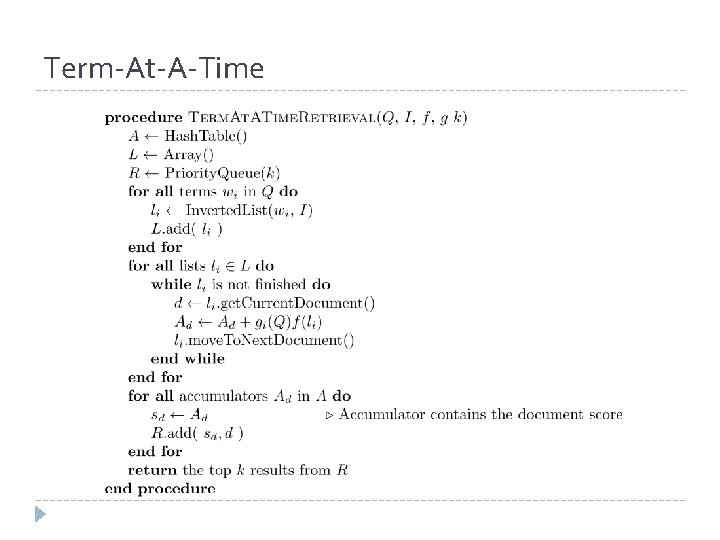

Term-At-A-Time

Term-At-A-Time

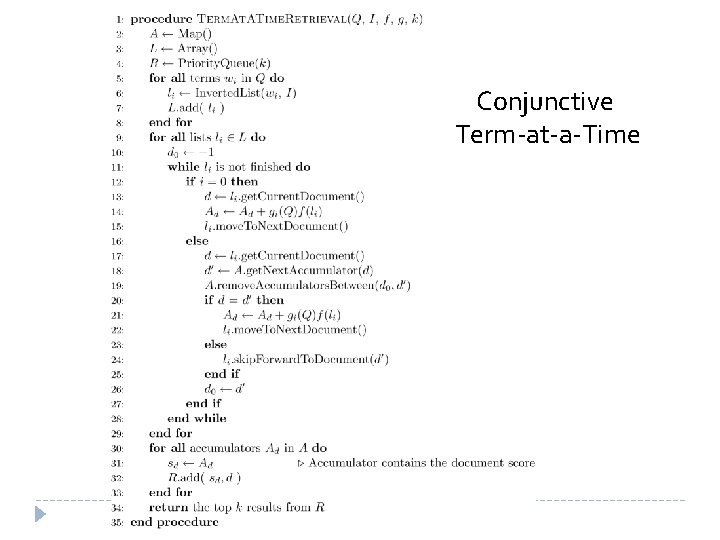

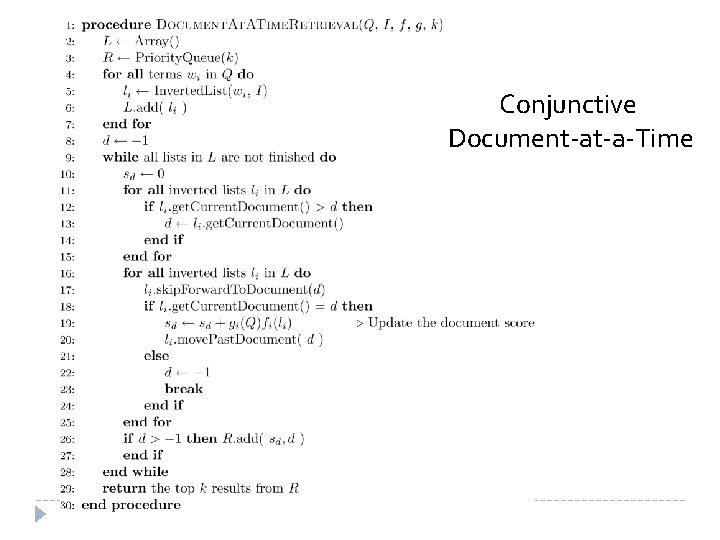

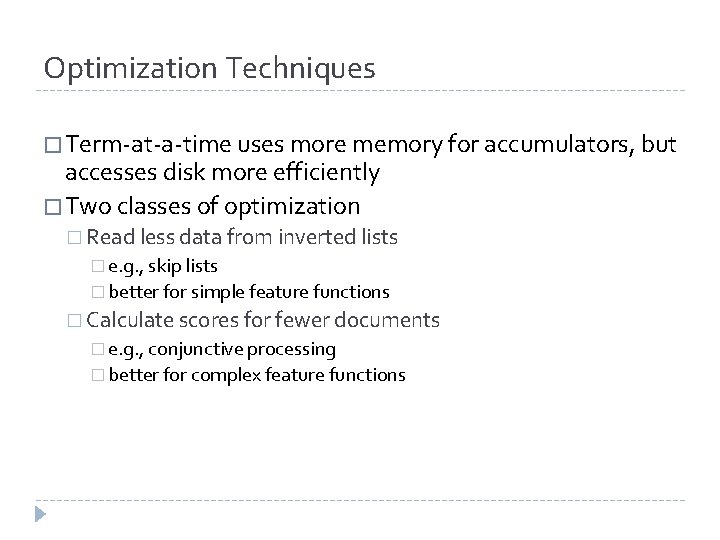
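For comparison, here is a sketch of term-at-a-time evaluation under the same simplifying assumptions as before: postings are `(doc_id, count)` pairs and the score is the sum of counts, which is a placeholder for the real feature functions. Each inverted list is read in full before the next one is touched, and partial scores live in an accumulator table. The names and data are hypothetical.

```python
import heapq
from collections import defaultdict

def term_at_a_time(query, index, k):
    """Return the top-k (score, doc_id) pairs, best first."""
    accumulators = defaultdict(int)  # doc_id -> partial score
    for term in query:
        # Process one complete inverted list, then move to the next term.
        for doc_id, count in index.get(term, []):
            accumulators[doc_id] += count
    return heapq.nlargest(k, ((score, doc) for doc, score in accumulators.items()))

# Hypothetical index with counts.
index = {
    "tropical": [(1, 2), (2, 1), (3, 1)],
    "fish": [(1, 2), (2, 3), (4, 2)],
}
print(term_at_a_time(["tropical", "fish"], index, k=2))
```

The accumulator table holds an entry for every document that matches any query term, which is where the extra memory use comes from.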

Optimization Techniques

- Term-at-a-time uses more memory for accumulators, but accesses disk more efficiently
- Two classes of optimization
  - Read less data from inverted lists
    - e.g., skip lists
    - better for simple feature functions
  - Calculate scores for fewer documents
    - e.g., conjunctive processing
    - better for complex feature functions

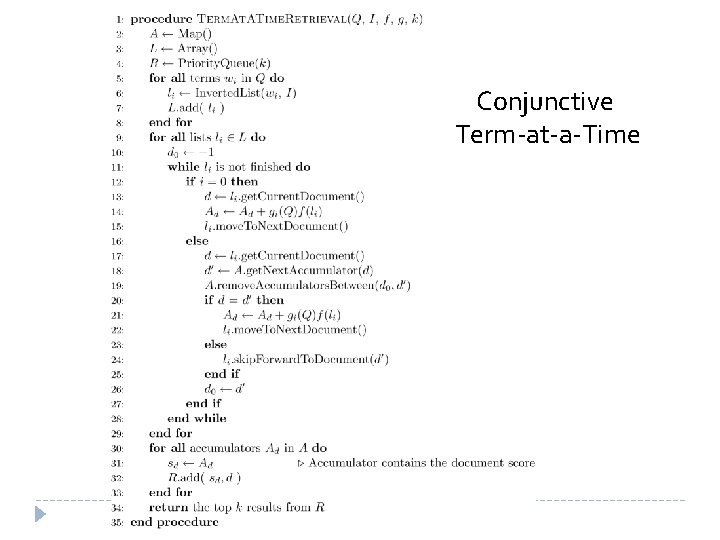

Conjunctive Term-at-a-Time

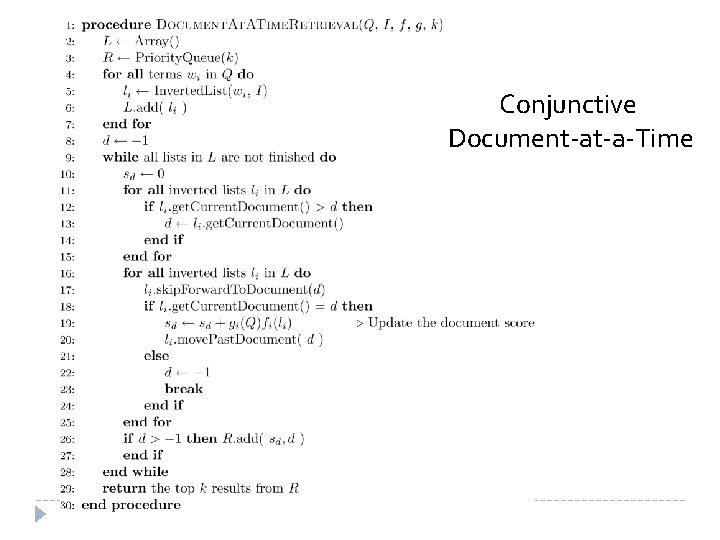

Conjunctive Document-at-a-Time

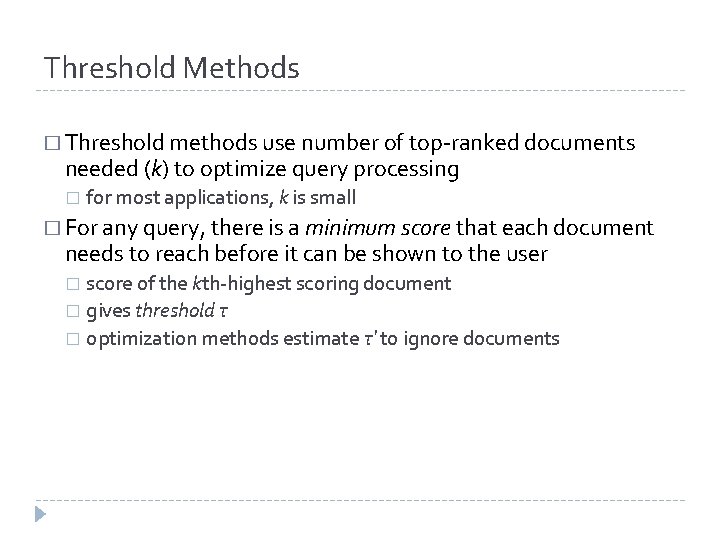

Threshold Methods

- Threshold methods use the number of top-ranked documents needed (k) to optimize query processing
  - for most applications, k is small
- For any query, there is a minimum score that each document needs to reach before it can be shown to the user
  - score of the kth-highest scoring document
  - gives threshold τ
  - optimization methods estimate τ′ to ignore documents

Threshold Methods

- For document-at-a-time processing, use the score of the lowest-ranked document so far (among the top k seen) for τ′
  - for term-at-a-time, have to use the kth-largest score in the accumulator table
- MaxScore method compares the maximum score that remaining documents could have to τ′
  - safe optimization in that the ranking will be the same without optimization

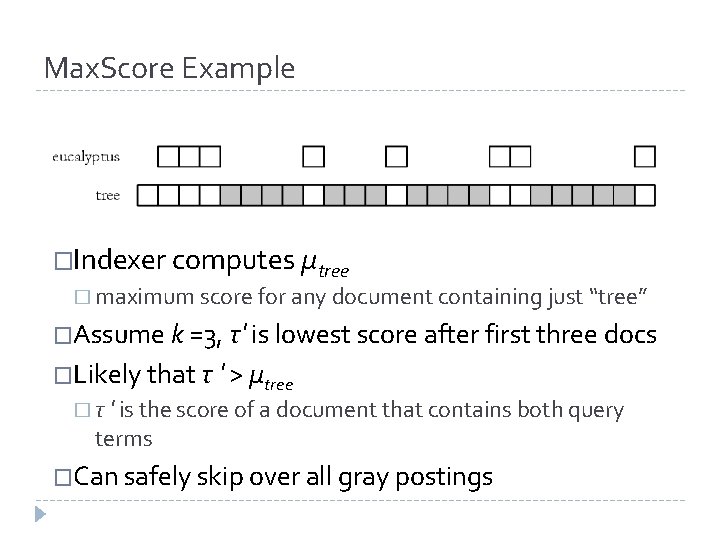

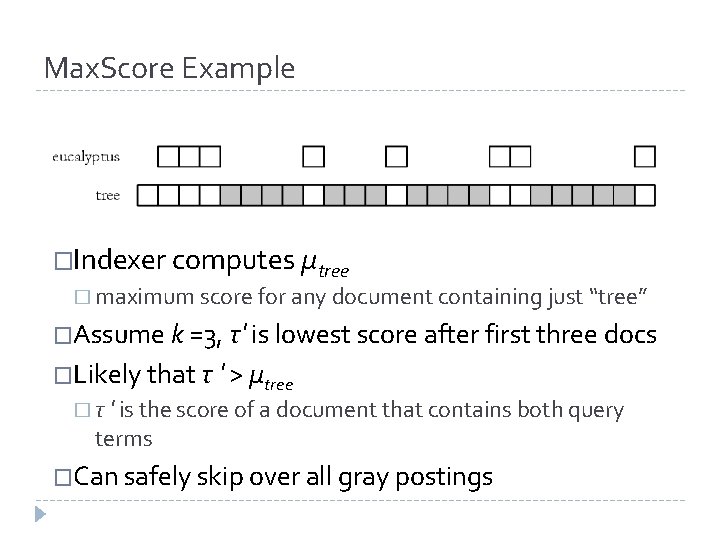

MaxScore Example

- Indexer computes μ_tree
  - maximum score for any document containing just “tree”
- Assume k = 3, τ′ is the lowest score after the first three docs
- Likely that τ′ > μ_tree
  - τ′ is the score of a document that contains both query terms
- Can safely skip over all gray postings
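The threshold idea can be sketched as follows. This is a simplified illustration of the safe-pruning test, not the MaxScore algorithm as implemented in any particular engine: it uses dictionaries instead of inverted lists with skipping, integer scores, and a hypothetical second query term "eucalyptus". A document is skipped only when the largest score it could possibly reach is below the current τ′.

```python
import heapq

def top_k_with_threshold(query, index, max_score, k):
    """Return (top-k list of (score, doc_id), number of documents fully scored).

    index: term -> {doc_id: score contribution}
    max_score: term -> largest contribution of that term to any document
    """
    top, scored = [], 0  # top is a min-heap, so top[0] holds the threshold
    docs = sorted(set().union(*(index[t] for t in query)))
    for doc in docs:
        present = [t for t in query if doc in index[t]]
        threshold = top[0][0] if len(top) == k else float("-inf")
        # Upper bound on this document's score from the terms it contains.
        if sum(max_score[t] for t in present) < threshold:
            continue  # cannot enter the top k, so skip scoring it
        score = sum(index[t][doc] for t in present)
        scored += 1
        heapq.heappush(top, (score, doc))
        if len(top) > k:
            heapq.heappop(top)
    return sorted(top, reverse=True), scored

# Hypothetical per-document contributions for a two-term query.
index = {
    "tree": {1: 10, 2: 8, 3: 12, 5: 9, 6: 11, 7: 7},
    "eucalyptus": {2: 30, 4: 25, 6: 35},
}
max_score = {"tree": 12, "eucalyptus": 35}
results, scored = top_k_with_threshold(["eucalyptus", "tree"], index, max_score, k=2)
print(results, scored)
```

Once two documents containing "eucalyptus" are in the top k, τ′ exceeds the maximum score for "tree" alone, so later documents that contain only "tree" are never scored, and the final ranking matches exhaustive scoring.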

Other Approaches

- Early termination of query processing
  - ignore high-frequency word lists in term-at-a-time
  - ignore documents at end of lists in doc-at-a-time
  - unsafe optimization
- List ordering
  - order inverted lists by quality metric (e.g., PageRank) or by partial score
  - makes unsafe (and fast) optimizations more likely to produce good documents

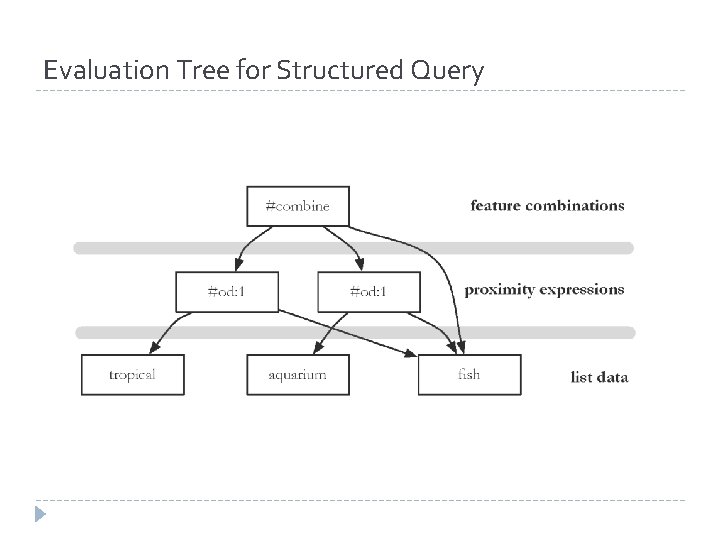

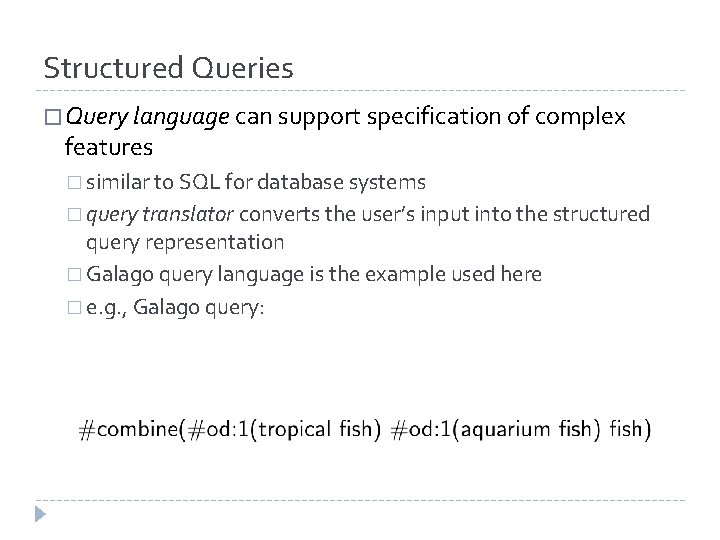

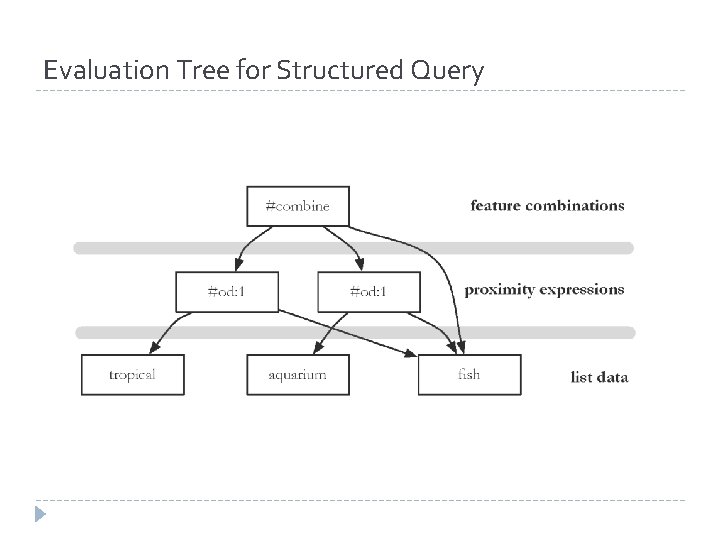

Structured Queries

- Query language can support specification of complex features
  - similar to SQL for database systems
  - query translator converts the user’s input into the structured query representation
  - Galago query language is the example used here
  - e.g., Galago query:

Evaluation Tree for Structured Query

Distributed Evaluation

- Basic process
  - All queries sent to a director machine
  - Director then sends messages to many index servers
  - Each index server does some portion of the query processing
  - Director organizes the results and returns them to the user
- Two main approaches
  - Document distribution
    - by far the most popular
  - Term distribution

Distributed Evaluation

- Document distribution
  - each index server acts as a search engine for a small fraction of the total collection
  - director sends a copy of the query to each of the index servers, each of which returns the top-k results
  - results are merged into a single ranked list by the director
- Collection statistics should be shared for effective ranking
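The director's merge step under document distribution can be sketched in one function, assuming each index server returns its own top-k list of `(score, doc_id)` pairs and that the scores are comparable across servers (which is why shared collection statistics matter). The function name and the sample results are hypothetical.

```python
import heapq

def director_merge(server_results, k):
    """Merge per-server top-k lists of (score, doc_id) into one global top-k."""
    all_hits = (hit for results in server_results for hit in results)
    return heapq.nlargest(k, all_hits)

# Hypothetical top-2 results returned by two index servers.
server_results = [
    [(0.9, "a"), (0.4, "b")],
    [(0.8, "c"), (0.7, "d")],
]
print(director_merge(server_results, k=3))
```

Because every server returns its own top k, the global top k is guaranteed to be among the returned hits.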

Distributed Evaluation

- Term distribution
  - Single index is built for the whole cluster of machines
  - Each inverted list in that index is then assigned to one index server
    - in most cases the data to process a query is not stored on a single machine
  - One of the index servers is chosen to process the query
    - usually the one holding the longest inverted list
  - Other index servers send information to that server
  - Final results sent to director

Caching

- Query distributions similar to Zipf
  - About ½ of the queries each day are unique, but some are very popular
- Caching can significantly improve effectiveness
  - Cache popular query results
  - Cache common inverted lists
- Inverted list caching can help with unique queries
- Cache must be refreshed to prevent stale data
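As an illustration of caching popular query results, the class below keeps the most recently used queries and evicts the least recently used one when full. The least-recently-used policy, the class and method names, and the `run_query` callback are all assumptions made for this sketch; the slides do not prescribe a particular policy.

```python
from collections import OrderedDict

class QueryResultCache:
    """Small least-recently-used cache mapping query strings to results."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()

    def get(self, query, run_query):
        """Return cached results, or call run_query(query) and cache them."""
        if query in self.entries:
            self.entries.move_to_end(query)  # mark as recently used
            return self.entries[query]
        results = run_query(query)
        self.entries[query] = results
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        return results

    def refresh(self):
        """Drop every entry, e.g. after the index changes, to avoid stale data."""
        self.entries.clear()

calls = []

def run_query(query):
    """Hypothetical stand-in for the real query processor."""
    calls.append(query)
    return [query.upper()]

cache = QueryResultCache(capacity=2)
cache.get("fish", run_query)
cache.get("fish", run_query)  # served from the cache, run_query is not called
print(calls)
```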