Henning Schulzrinne with Vishal Singh and other IRT

- Slides: 34

Henning Schulzrinne (with Vishal Singh and other IRT members)
Dept. of Computer Science, Columbia University
July 2007

Managing Services and Networks Using a Peer-to-peer Approach

Overview

- The transition in IT cost metrics
- End-to-end application-visible reliability still poor (~99.5%)
  - even though network elements have gotten much more reliable
  - particular impact on interactive applications (e.g., VoIP)
  - transient problems
- Lots of voodoo network management
- Existing network management doesn’t work for VoIP and other modern applications
- Need user-centric rather than operator-centric management
- Proposal: peer-to-peer management
  - “Do You See What I See?”
- Using VoIP as running example: most complex consumer application
  - but also applies to IPTV and other services
- Also use for reliability estimation and statistical fault characterization

Network management transition in cost balance

- Total cost of ownership
  - Ethernet port cost $10
  - about 80% of Columbia CS’s system support cost is staff cost
    - about $2500/person/year, i.e., 2 new PCs/year
    - much of the rest is backup & license for spam filters
- Does not count hours of employee or son/daughter time
- PC, Ethernet port and router cost seem to have reached plateau
  - just that the $10 now buys a 100 Mb/s port instead of 10 Mb/s
- All of our switches, routers and hosts are SNMP-enabled, but no suggestion that this would help at all

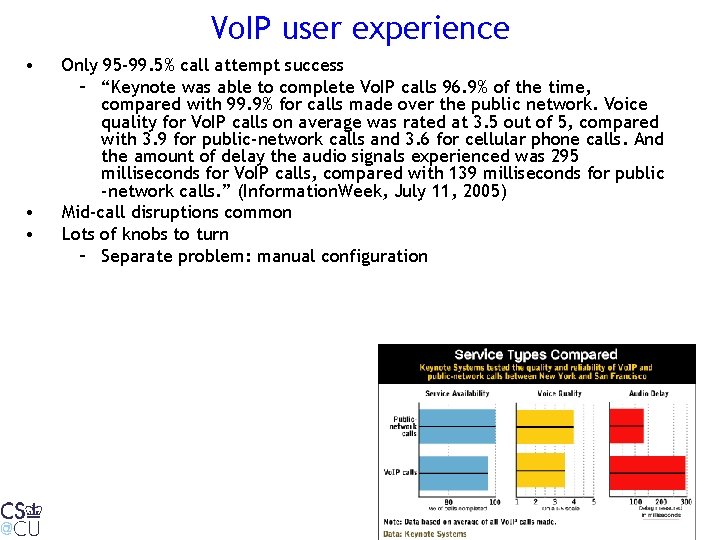

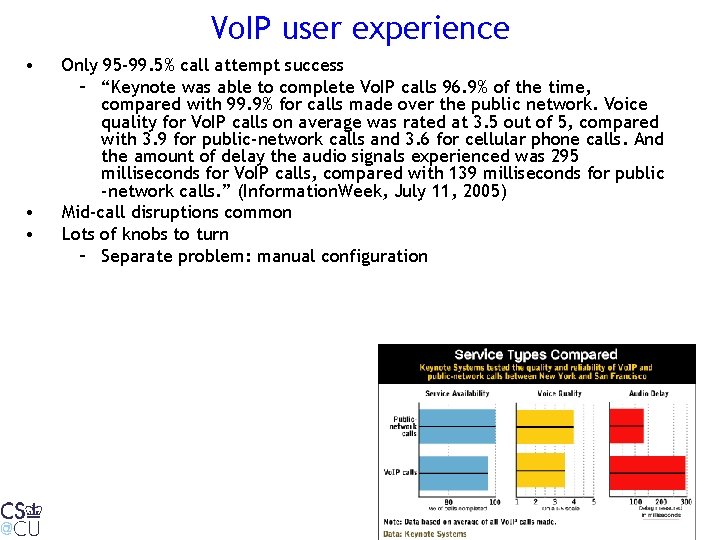

VoIP user experience

- Only 95-99.5% call attempt success
  - “Keynote was able to complete VoIP calls 96.9% of the time, compared with 99.9% for calls made over the public network. Voice quality for VoIP calls on average was rated at 3.5 out of 5, compared with 3.9 for public-network calls and 3.6 for cellular phone calls. And the amount of delay the audio signals experienced was 295 milliseconds for VoIP calls, compared with 139 milliseconds for public-network calls.” (InformationWeek, July 11, 2005)
- Mid-call disruptions common
- Lots of knobs to turn
  - Separate problem: manual configuration

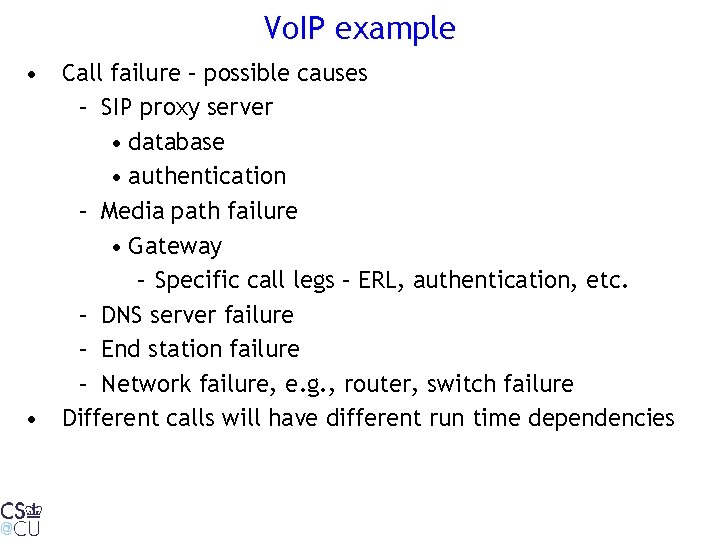

Issues in automated VoIP diagnosis

- Increasingly complex and diverse network elements
- Complex interactions & relationships between different network elements
- Different run time bindings for each application usage instance
  - e.g., different calls may use different DNS, SIP proxy servers, media path
- Problem in one network element may manifest itself as user-perceived failure of another element

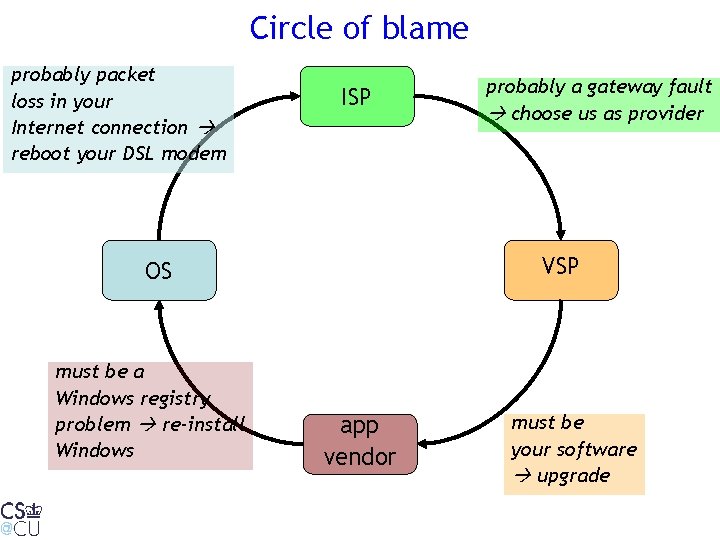

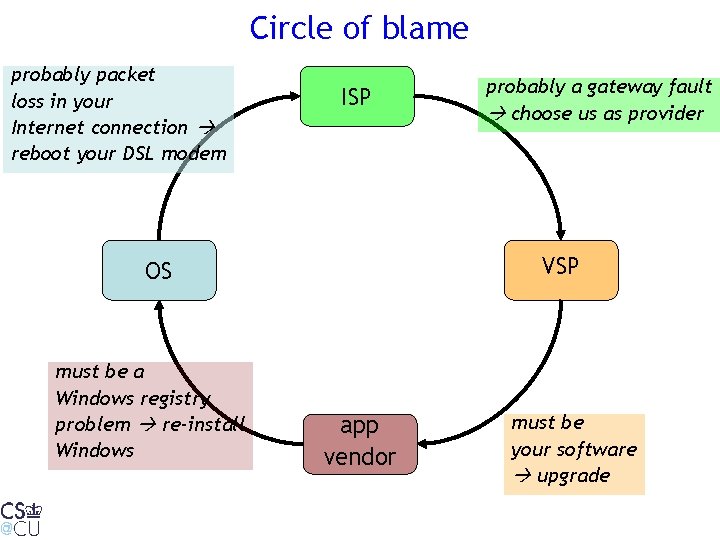

Circle of blame

Parties in the figure: ISP, VSP, OS, app vendor. Each blames another and offers its own remedy:

- “probably packet loss in your Internet connection”: reboot your DSL modem
- “must be a Windows registry problem”: re-install Windows
- “probably a gateway fault”: choose us as provider
- “must be your software”: upgrade

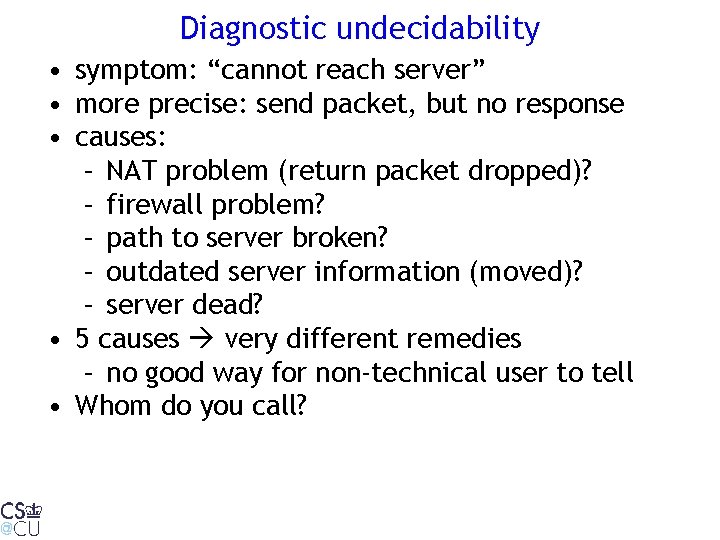

Diagnostic undecidability

- symptom: “cannot reach server”
- more precise: send packet, but no response
- causes:
  - NAT problem (return packet dropped)?
  - firewall problem?
  - path to server broken?
  - outdated server information (moved)?
  - server dead?
- 5 causes, very different remedies
  - no good way for non-technical user to tell
- Whom do you call?

VoIP diagnosis

- What is automated VoIP diagnosis?
  - Determining failures in network: when, where
  - Automatically finding the root cause of the failure: why
- Why VoIP diagnosis?
  - networks are complex → difficult to troubleshoot problems
  - automatic fault diagnosis reduces human intervention
- Issues in VoIP diagnosis
  - Detecting failures/faults
  - Finding the cause of failure, determining dependency relationships among different components for diagnosis
- Solution steps and approaches

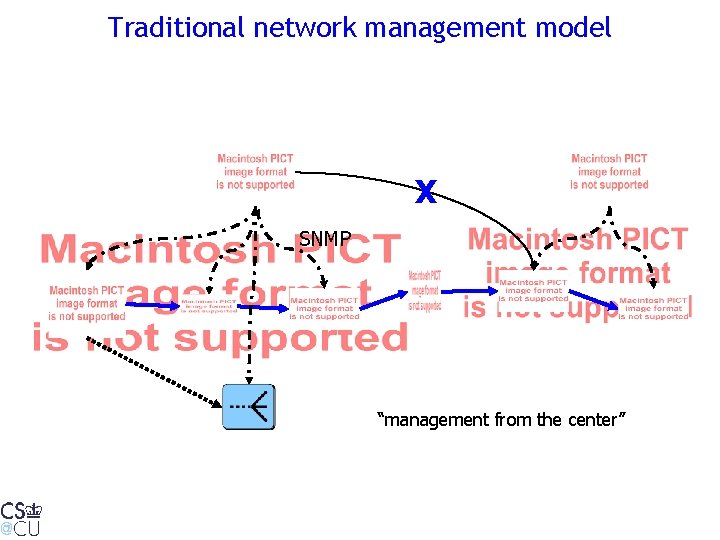

Traditional network management model

SNMP: “management from the center”

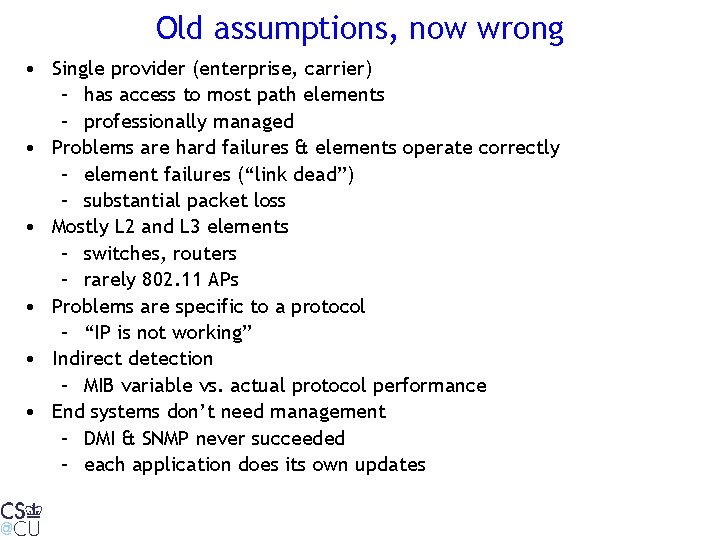

Old assumptions, now wrong

- Single provider (enterprise, carrier)
  - has access to most path elements
  - professionally managed
- Problems are hard failures & elements operate correctly
  - element failures (“link dead”)
  - substantial packet loss
- Mostly L2 and L3 elements
  - switches, routers
  - rarely 802.11 APs
- Problems are specific to a protocol
  - “IP is not working”
- Indirect detection
  - MIB variable vs. actual protocol performance
- End systems don’t need management
  - DMI & SNMP never succeeded
  - each application does its own updates

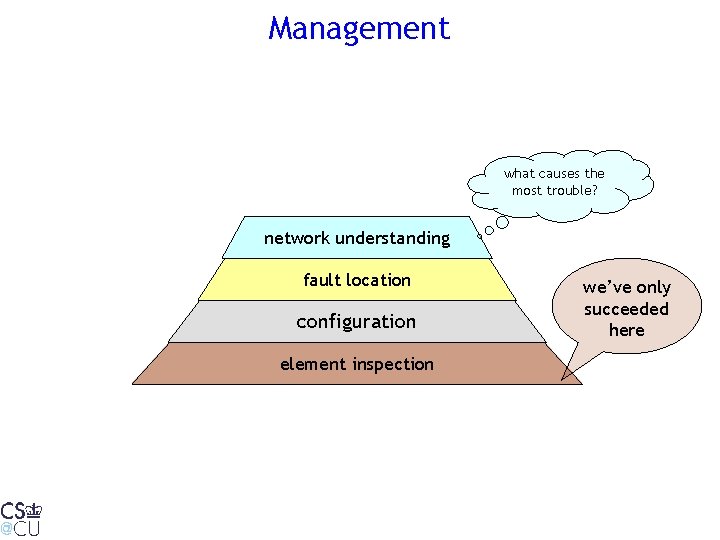

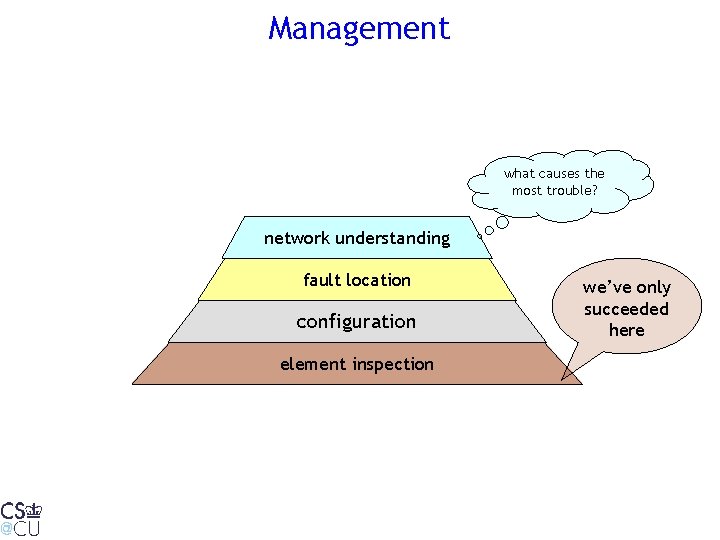

Management: what causes the most trouble?

- network understanding
- fault location
- configuration
- element inspection

Figure annotation: “we’ve only succeeded here”

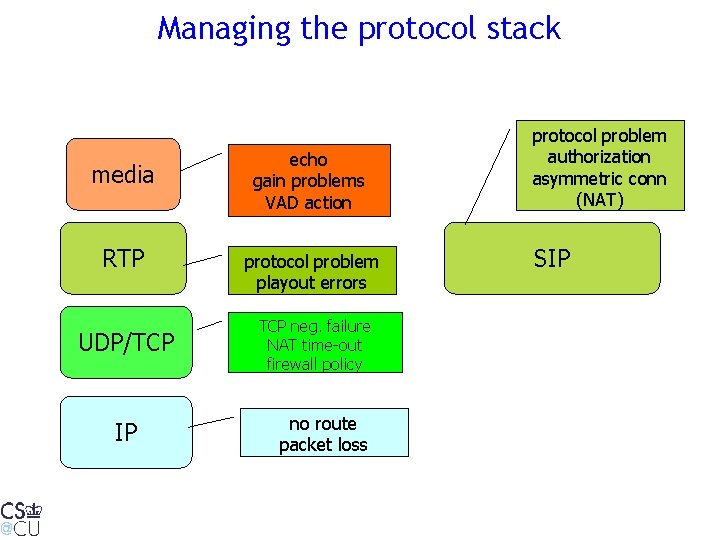

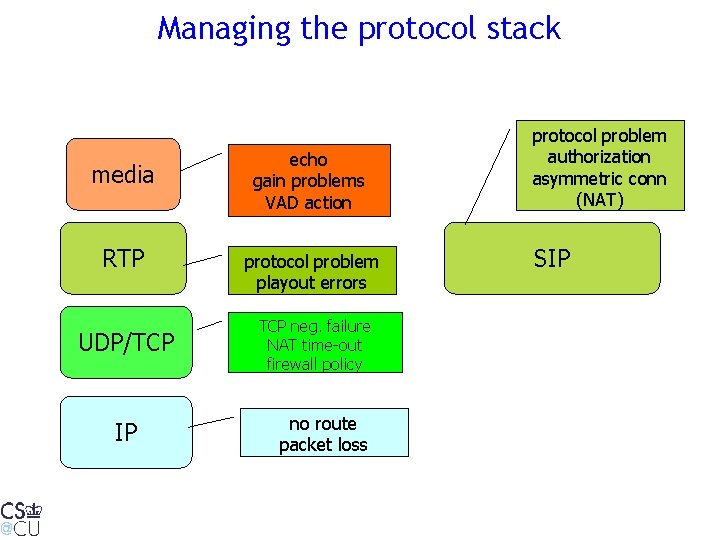

Managing the protocol stack

Layers and typical problems, as read from the figure labels:

- media: echo, gain problems, VAD action
- RTP: protocol problem, playout errors
- UDP/TCP: TCP neg. failure, NAT time-out, firewall policy
- IP: no route, packet loss
- SIP: protocol problem, authorization, asymmetric conn (NAT)

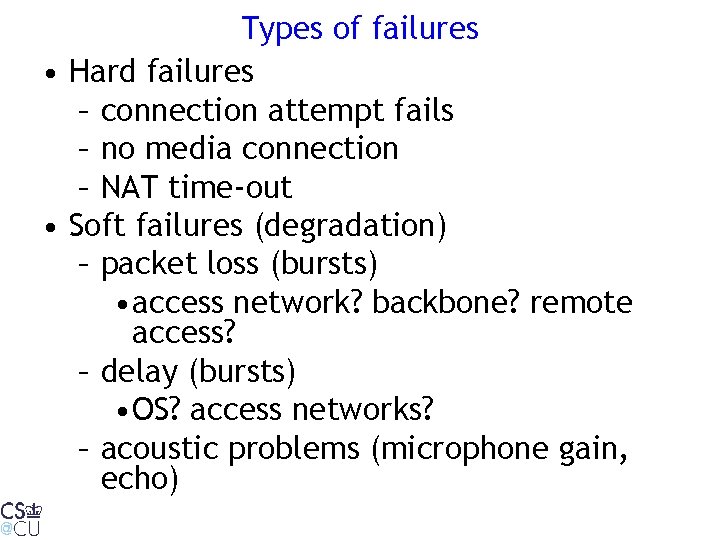

Types of failures

- Hard failures
  - connection attempt fails
  - no media connection
  - NAT time-out
- Soft failures (degradation)
  - packet loss (bursts)
    - access network? backbone? remote access?
  - delay (bursts)
    - OS? access networks?
  - acoustic problems (microphone gain, echo)

Examples of additional problems

- ping and traceroute no longer work reliably
  - WinXP SP2 turns off ICMP
  - some networks filter all ICMP messages
- Early NAT binding time-out
  - initial packet exchange succeeds, but then TCP binding is removed (“web-only Internet”)
- policy intent vs. failure
  - “broken by design”
  - “we don’t allow port 25” vs. “SMTP server temporarily unreachable”

Fault localization

- Fault classification: local vs. global
  - Does it affect only me or does it affect others also?
- Global failures
  - Server failure, e.g., SIP proxy, DNS failure, database failures
  - Network failures
- Local failures
  - Specific source failure, e.g., node A cannot make call to anyone
  - Specific destination or participant failure, e.g., no one can make call to node B
  - Locally observed but global failures, e.g., DNS service failed, but only B observed it
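The local vs. global question can be sketched as a comparison between a node's own observation and what its peers report for the same test. This is only an illustration: the names `Scope` and `classify_scope` and the 50% threshold are assumptions, not part of the slides.

```python
from enum import Enum
from typing import List


class Scope(Enum):
    LOCAL = "local"      # only this node (or few nodes) sees the failure
    GLOBAL = "global"    # many peers see the same failure
    UNKNOWN = "unknown"  # no peer could be asked


def classify_scope(peer_failed: List[bool], global_fraction: float = 0.5) -> Scope:
    """Classify a failure this node has already observed.

    peer_failed holds one boolean per peer that repeated the same test
    (True means the peer also saw the failure). The threshold is a
    hypothetical tuning knob.
    """
    if not peer_failed:
        return Scope.UNKNOWN
    fraction = sum(peer_failed) / len(peer_failed)
    return Scope.GLOBAL if fraction >= global_fraction else Scope.LOCAL
```

A node whose DNS lookup failed would ask a few peers to repeat the lookup and pass their results in; an empty list means it could not reach any peer, which is itself a hint of a local connectivity problem.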

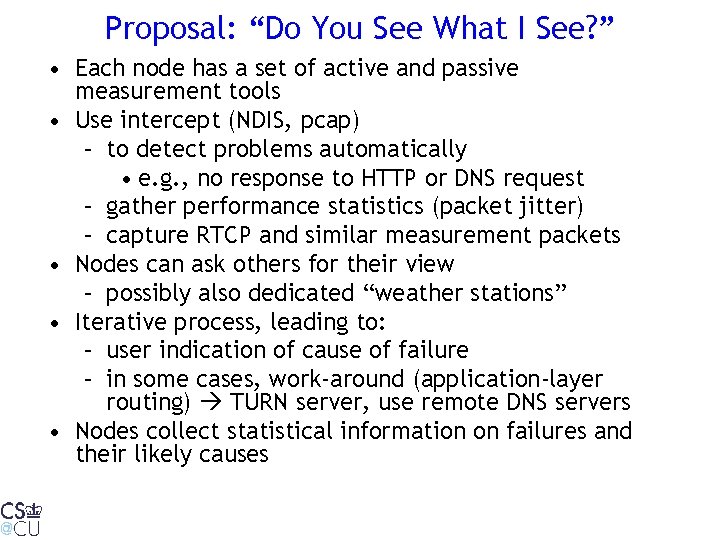

Proposal: “Do You See What I See?”

- Each node has a set of active and passive measurement tools
- Use intercept (NDIS, pcap)
  - to detect problems automatically
    - e.g., no response to HTTP or DNS request
  - gather performance statistics (packet jitter)
  - capture RTCP and similar measurement packets
- Nodes can ask others for their view
  - possibly also dedicated “weather stations”
- Iterative process, leading to:
  - user indication of cause of failure
  - in some cases, work-around (application-layer routing)
    - TURN server, use remote DNS servers
- Nodes collect statistical information on failures and their likely causes

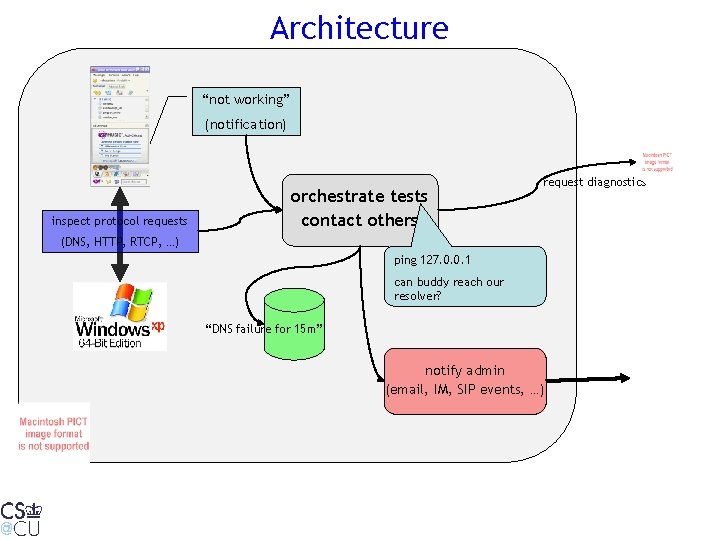

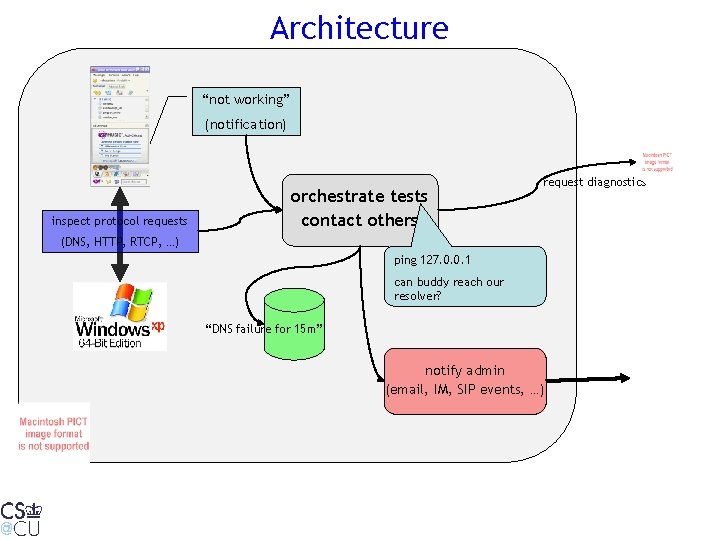

Architecture

Figure labels:

- “not working” (notification)
- inspect protocol requests
- orchestrate tests
- contact others
- request diagnostics (DNS, HTTP, RTCP, …)
- ping 127.0.0.1
- can buddy reach our resolver?
- “DNS failure for 15 m”
- notify admin (email, IM, SIP events, …)

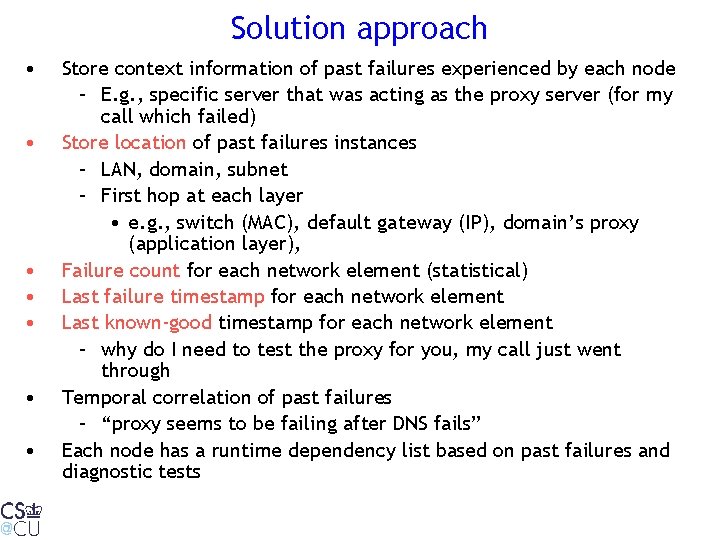

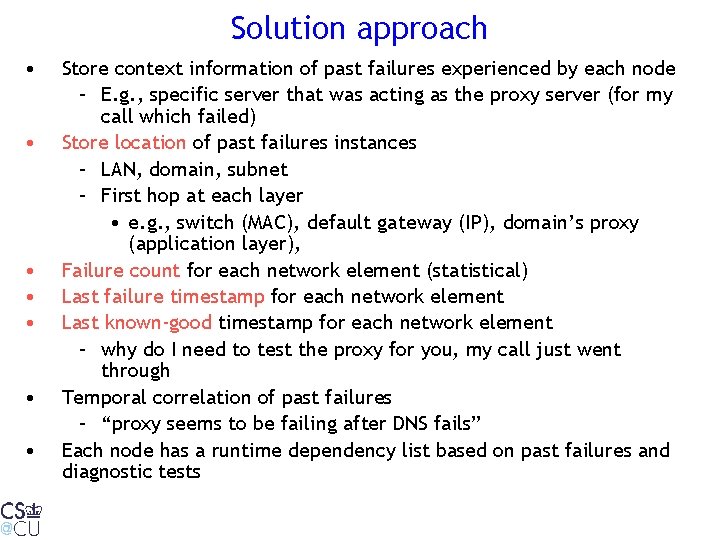

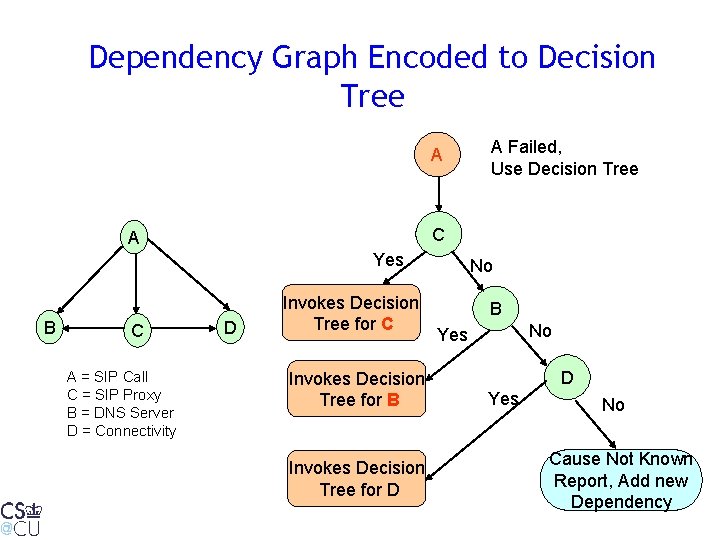

Solution approach

- Store context information of past failures experienced by each node
  - e.g., specific server that was acting as the proxy server (for my call which failed)
- Store location of past failure instances
  - LAN, domain, subnet
  - First hop at each layer
    - e.g., switch (MAC), default gateway (IP), domain’s proxy (application layer)
- Failure count for each network element (statistical)
- Last failure timestamp for each network element
- Last known-good timestamp for each network element
  - why do I need to test the proxy for you, my call just went through
- Temporal correlation of past failures
  - “proxy seems to be failing after DNS fails”
- Each node has a runtime dependency list based on past failures and diagnostic tests
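The per-element bookkeeping listed above (failure count, last failure timestamp, last known-good timestamp) might be kept in a small store like the following. It is a minimal sketch: `ElementHistory`, `FailureStore`, `recently_good` and the 60-second freshness window are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ElementHistory:
    failure_count: int = 0
    last_failure: Optional[float] = None      # timestamp in seconds
    last_known_good: Optional[float] = None   # timestamp in seconds


@dataclass
class FailureStore:
    elements: Dict[str, ElementHistory] = field(default_factory=dict)

    def record(self, element: str, ok: bool, now: float) -> None:
        """Update the history of one network element after a test or a call."""
        history = self.elements.setdefault(element, ElementHistory())
        if ok:
            history.last_known_good = now
        else:
            history.failure_count += 1
            history.last_failure = now

    def recently_good(self, element: str, now: float, max_age: float = 60.0) -> bool:
        """True if the element worked within max_age seconds and has not failed since."""
        history = self.elements.get(element)
        if history is None or history.last_known_good is None:
            return False
        if history.last_failure is not None and history.last_failure > history.last_known_good:
            return False
        return now - history.last_known_good <= max_age
```

A peer asked to test the proxy could answer from `recently_good` without running a new probe, which is the "my call just went through" shortcut from the slide.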

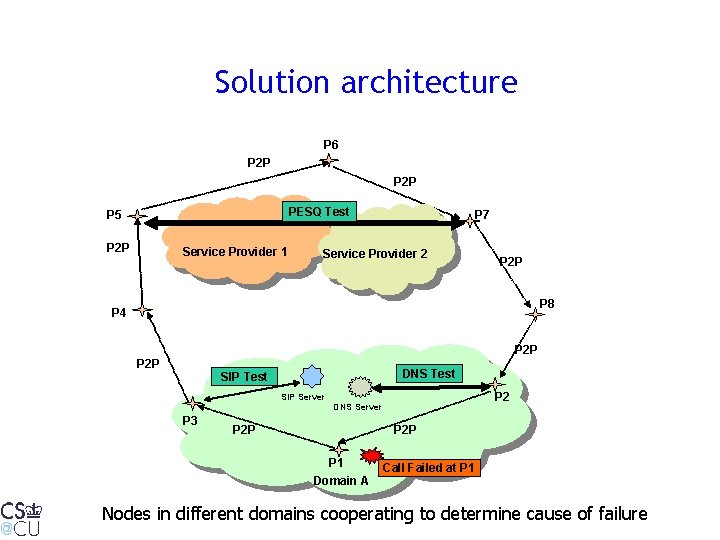

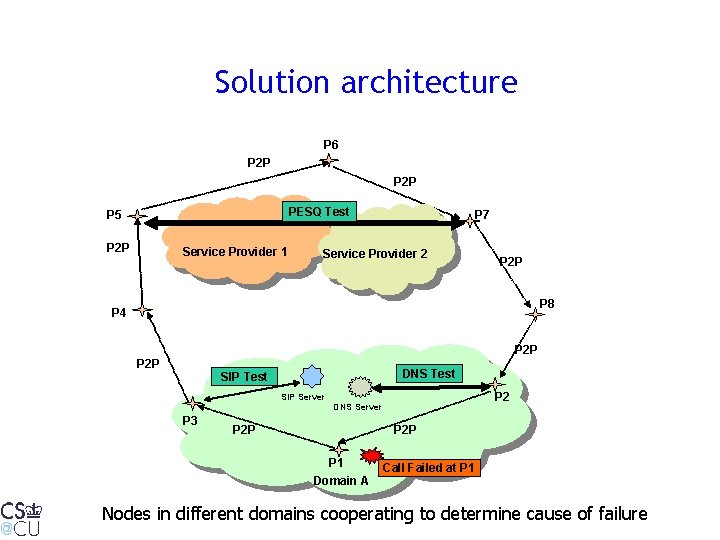

Solution architecture

[Figure: peers P1 to P8 spread across Domain A, Service Provider 1 and Service Provider 2, linked by P2P connections; labels include SIP Server, DNS Server, DNS Test, SIP Test, PESQ Test and “Call Failed at P1”.]

Nodes in different domains cooperating to determine cause of failure

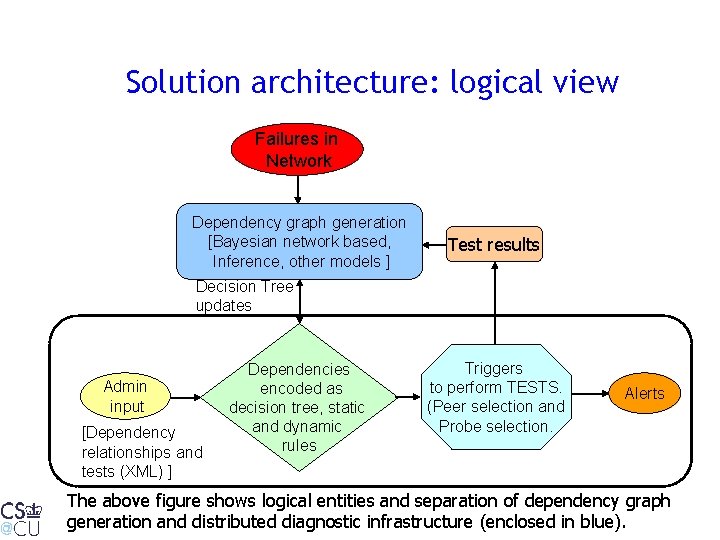

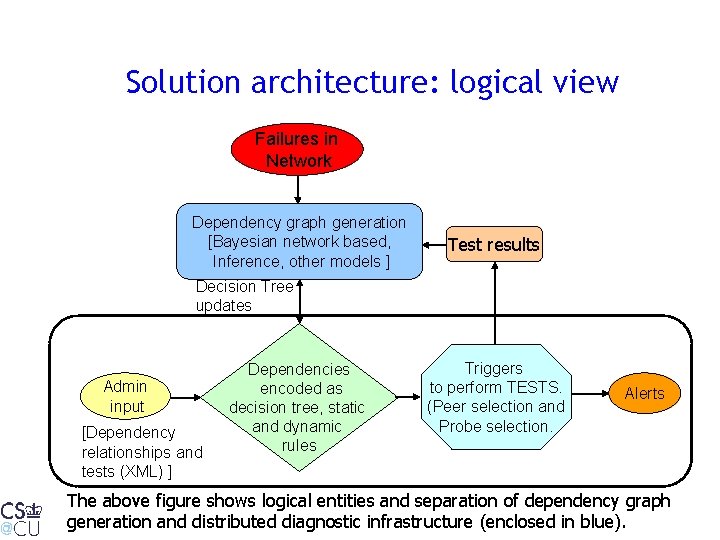

Solution architecture: logical view

Figure labels:

- Failures in network
- Dependency graph generation (Bayesian network based, inference, other models)
- Test results
- Decision tree updates
- Admin input (dependency relationships and tests, XML)
- Dependencies encoded as decision tree, static and dynamic rules
- Triggers to perform tests (peer selection and probe selection)
- Alerts

The figure shows the logical entities and the separation of dependency graph generation from the distributed diagnostic infrastructure (enclosed in blue).

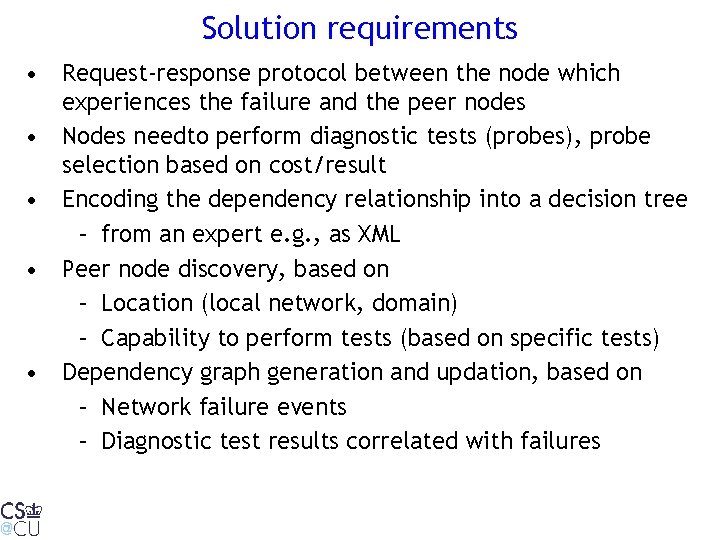

Solution requirements

- Request-response protocol between the node which experiences the failure and the peer nodes
- Nodes need to perform diagnostic tests (probes), with probe selection based on cost/result
- Encoding the dependency relationship into a decision tree
  - from an expert, e.g., as XML
- Peer node discovery, based on
  - Location (local network, domain)
  - Capability to perform tests (based on specific tests)
- Dependency graph generation and updating, based on
  - Network failure events
  - Diagnostic test results correlated with failures

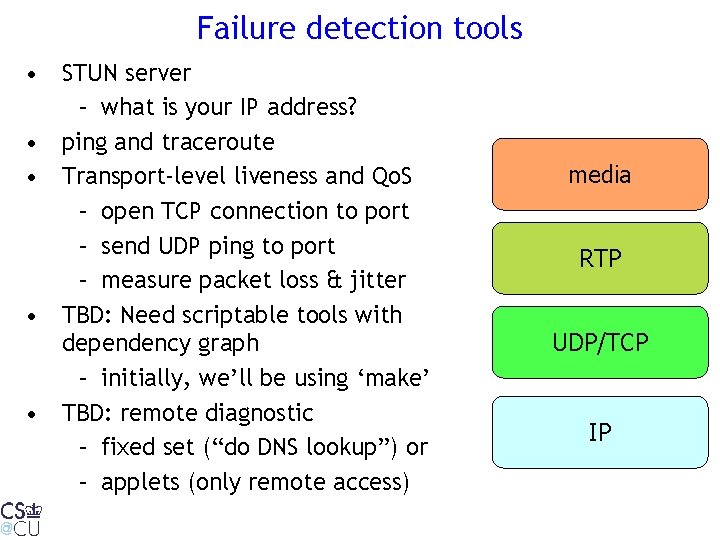

Failure detection tools

- STUN server
  - what is your IP address?
- ping and traceroute
- Transport-level liveness and QoS
  - open TCP connection to port
  - send UDP ping to port
  - measure packet loss & jitter
- TBD: Need scriptable tools with dependency graph
  - initially, we’ll be using ‘make’
- TBD: remote diagnostic
  - fixed set (“do DNS lookup”) or
  - applets (only remote access)

[Figure: protocol stack (media, RTP, UDP/TCP, IP)]
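The "open TCP connection to port" liveness test can be sketched with the Python standard library alone. The helper name `tcp_probe` and the 2-second default timeout are assumptions made for illustration.

```python
import socket
import time
from typing import Tuple


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> Tuple[bool, float]:
    """Try one TCP connection and return (reachable, elapsed_seconds).

    A refused connection, a time-out or a name-resolution error all count
    as "not reachable"; telling them apart would need further tests.
    """
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, time.monotonic() - start
    except OSError:
        return False, time.monotonic() - start
```

The elapsed time gives a rough connection-setup delay. Packet loss and jitter need repeated UDP or RTP measurements, which this sketch does not attempt.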

Test & probe selection

- Which diagnostic probe to run?
  - network layer or application layer, and for what kind of failures
- A probe covering a broad range of failures can give faster but less accurate results
  - e.g., ping vs. TCP connect vs. SIP OPTIONS tests
- Cost of probing
  - messages
  - CPU overhead
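One simple way to trade probe cost against coverage is to pick the cheapest probe that can detect a suspected failure. The sketch below assumes this rule; the `Probe` type, the failure names and the cost figures are invented for illustration and are not measurements.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Probe:
    name: str
    cost: float               # hypothetical combined message and CPU cost
    detects: FrozenSet[str]   # failures that make this probe fail


# Illustrative catalogue: broader probes detect more failures but cost more.
PROBES: List[Probe] = [
    Probe("ping", 1.0, frozenset({"no route"})),
    Probe("TCP connect", 2.0, frozenset({"no route", "port closed"})),
    Probe("SIP OPTIONS", 5.0, frozenset({"no route", "port closed", "proxy down"})),
]


def cheapest_probe(probes: List[Probe], suspected: str) -> Optional[Probe]:
    """Return the lowest-cost probe able to detect the suspected failure, if any."""
    candidates = [p for p in probes if suspected in p.detects]
    return min(candidates, key=lambda p: p.cost, default=None)
```

With this catalogue, a suspected "proxy down" selects SIP OPTIONS, while a suspected "no route" selects ping. A failing SIP OPTIONS probe alone cannot say which of its three failures occurred, which is the "faster but less accurate" trade-off from the slide.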

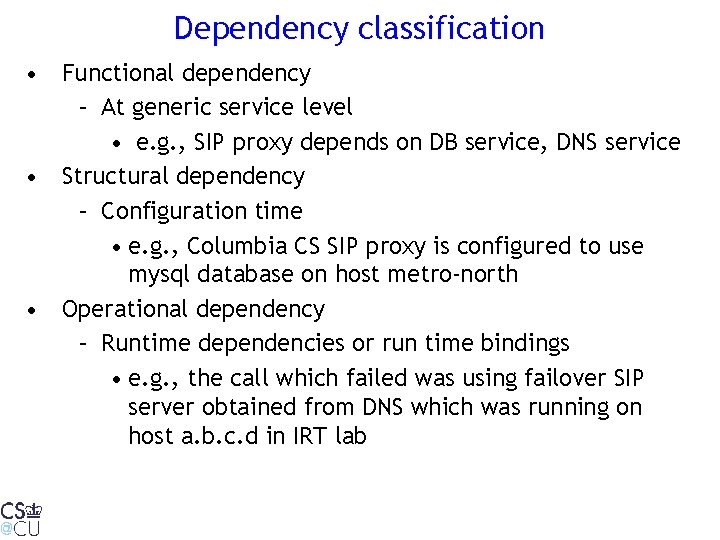

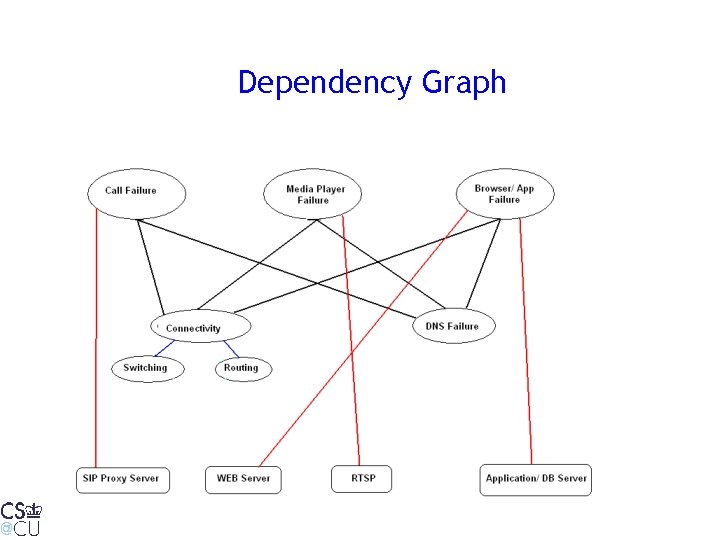

Dependency classification

- Functional dependency
  - At generic service level
    - e.g., SIP proxy depends on DB service, DNS service
- Structural dependency
  - Configuration time
    - e.g., Columbia CS SIP proxy is configured to use mysql database on host metro-north
- Operational dependency
  - Runtime dependencies or run time bindings
    - e.g., the call which failed was using failover SIP server obtained from DNS which was running on host a.b.c.d in IRT lab

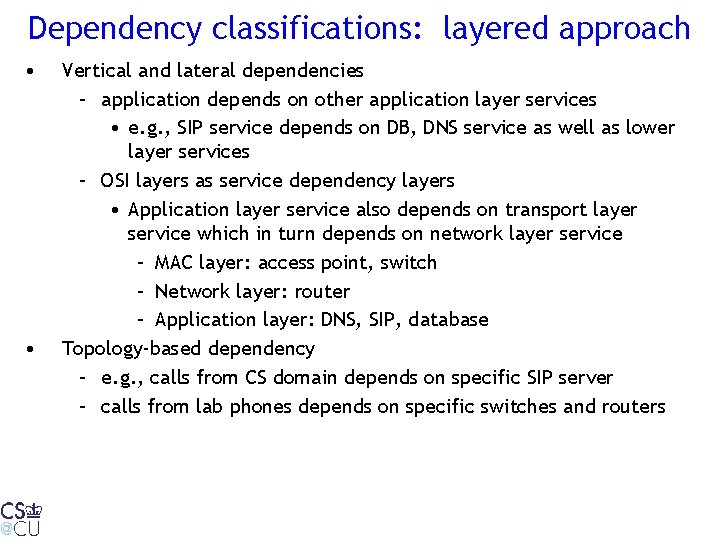

Dependency classifications: layered approach

- Vertical and lateral dependencies
  - application depends on other application layer services
    - e.g., SIP service depends on DB, DNS service as well as lower layer services
  - OSI layers as service dependency layers
    - Application layer service also depends on transport layer service, which in turn depends on network layer service
  - MAC layer: access point, switch
  - Network layer: router
  - Application layer: DNS, SIP, database
- Topology-based dependency
  - e.g., calls from CS domain depend on specific SIP server
  - calls from lab phones depend on specific switches and routers

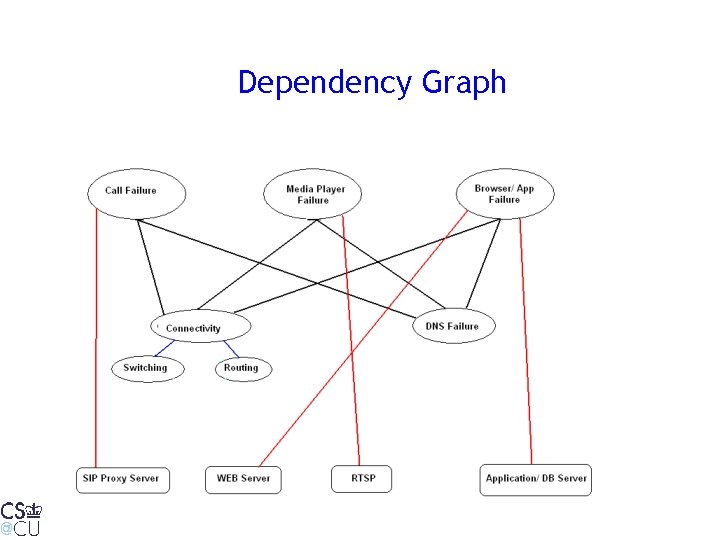

Dependency Graph

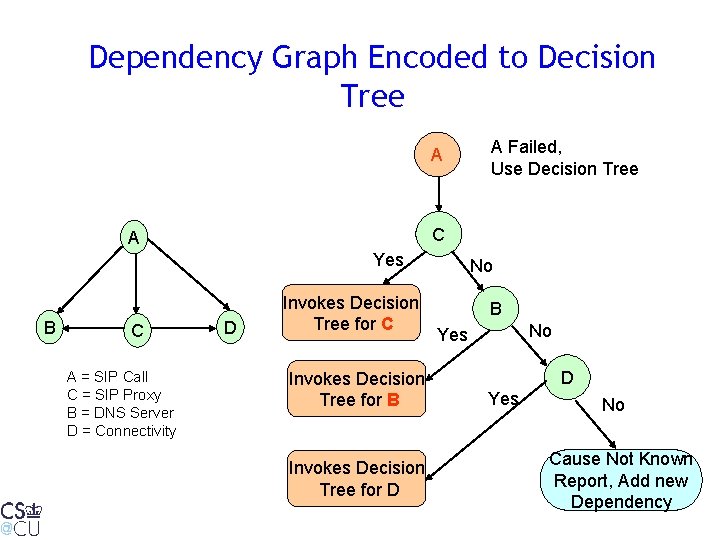

Dependency Graph Encoded to Decision Tree

Legend: A = SIP Call, B = DNS Server, C = SIP Proxy, D = Connectivity

[Figure: when A fails, the decision tree for A is used; its Yes/No branches invoke the decision trees for C, B and D; if no cause is found, the outcome is “Cause Not Known”: report, add new dependency.]
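The walk from a failed call through its dependencies can be sketched as a recursive descent over a dependency table. The edges in `DEPENDS_ON` are an assumed reading of the figure (the call depends on the proxy, DNS and connectivity; the proxy on DNS and connectivity; DNS on connectivity), and `diagnose` and `root_cause` are hypothetical names.

```python
from typing import Callable, Dict, List, Optional

# Assumed dependency edges; the slide only names the four elements.
DEPENDS_ON: Dict[str, List[str]] = {
    "sip_call": ["sip_proxy", "dns", "connectivity"],
    "sip_proxy": ["dns", "connectivity"],
    "dns": ["connectivity"],
    "connectivity": [],
}


def diagnose(failed: str, test: Callable[[str], bool],
             deps: Dict[str, List[str]] = DEPENDS_ON) -> str:
    """Follow failing dependencies downward and return the deepest failing element.

    test(element) runs a diagnostic probe and returns True if the element works.
    """
    for dep in deps.get(failed, []):
        if not test(dep):
            return diagnose(dep, test, deps)
    return failed


def root_cause(symptom: str, test: Callable[[str], bool],
               deps: Dict[str, List[str]] = DEPENDS_ON) -> Optional[str]:
    """Return the suspected root cause, or None when every dependency tests fine."""
    cause = diagnose(symptom, test, deps)
    return None if cause == symptom else cause
```

A `None` result corresponds to the "Cause Not Known" leaf: the node would report the failure and a new dependency would have to be added to the table.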

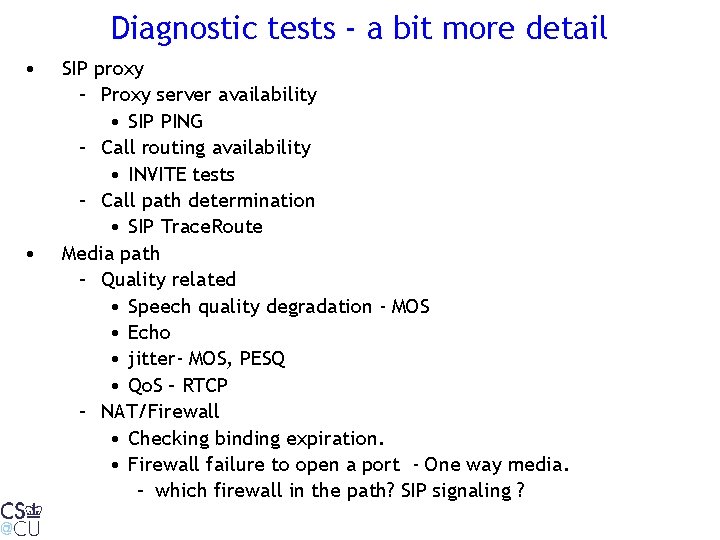

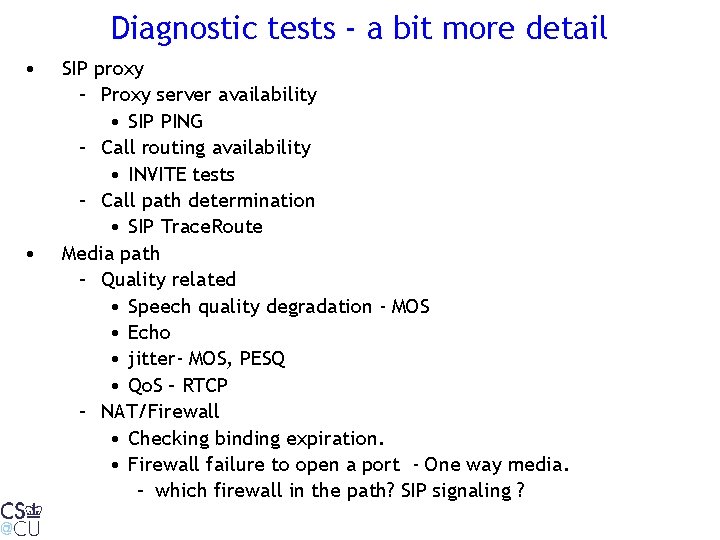

Diagnostic tests - a bit more detail

- SIP proxy
  - Proxy server availability
    - SIP PING
  - Call routing availability
    - INVITE tests
  - Call path determination
    - SIP TraceRoute
- Media path
  - Quality related
    - Speech quality degradation: MOS
    - Echo
    - jitter: MOS, PESQ
    - QoS
  - RTCP
  - NAT/Firewall
    - Checking binding expiration
    - Firewall failure to open a port: one-way media
  - which firewall in the path? SIP signaling?

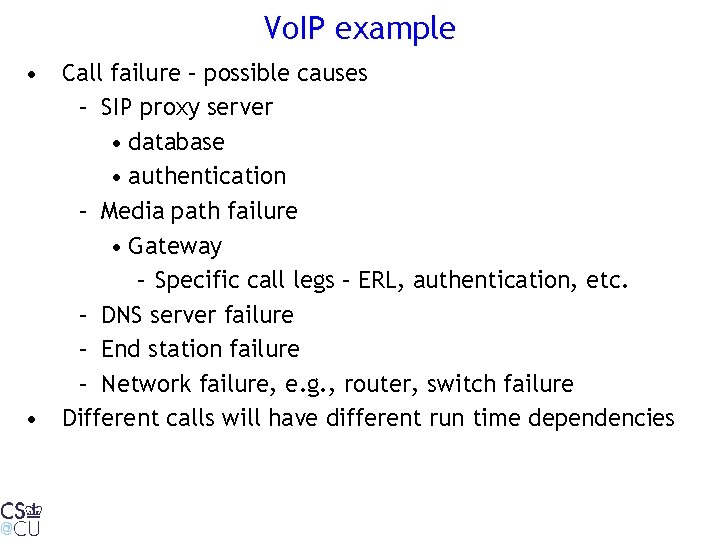

VoIP example

- Call failure: possible causes
  - SIP proxy server
    - database
    - authentication
  - Media path failure
    - Gateway
  - Specific call legs
  - ERL, authentication, etc.
  - DNS server failure
  - End station failure
  - Network failure, e.g., router, switch failure
- Different calls will have different run time dependencies

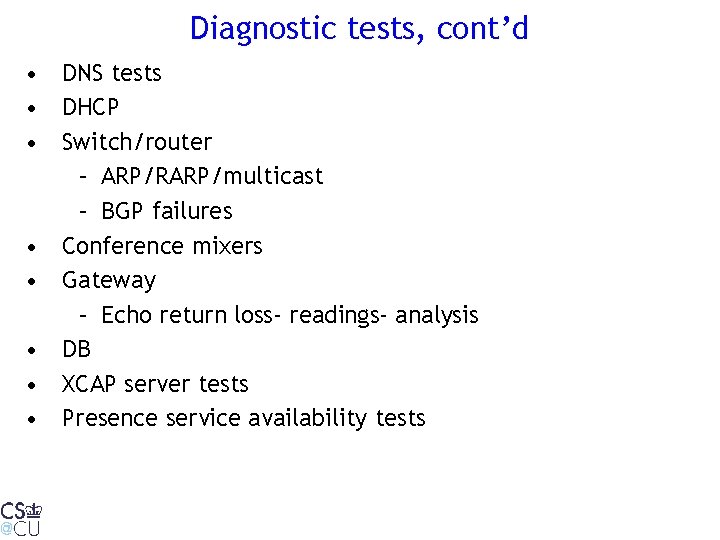

Diagnostic tests, cont’d

- DNS tests
- DHCP
- Switch/router
  - ARP/RARP/multicast
  - BGP failures
- Conference mixers
- Gateway
  - Echo return loss: readings, analysis
- DB
- XCAP server tests
- Presence service availability tests

Current work

- Building decision tree system
- Using JBoss Rules (Drools 3.0)

Future work

- Learning the dependency graph from failure events and diagnostic tests
- Learning using random or periodic testing to identify failures and determine relationships
- Self-healing
- Predicting failures
- Protocols for labeling event failures → enable automatically incorporating new devices/applications into the dependency system
- Decision tree (dependency graph) based event correlation

Failure statistics

- Which parts of the network are most likely to fail (or degrade)
  - access network
  - network interconnects
  - backbone network
  - infrastructure servers (DHCP, DNS)
  - application servers (SIP, RTSP, HTTP, …)
  - protocol failures/incompatibility
- Currently, mostly guesses
- End nodes can gather and accumulate statistics

Conclusion

- Hypothesis: network reliability is the single largest open technical issue and prevents (some) new applications
- Existing management tools are of limited use to most enterprises and end users
- Transition to “self-service” networks
  - support non-technical users, not just NOCs running HP OpenView or Tivoli
- Need better view of network reliability

Henning schulzrinne

Henning schulzrinne Raj birk

Raj birk Contoh cppob

Contoh cppob Cystic fibrosis irt

Cystic fibrosis irt Irt 5433

Irt 5433 Irt lab

Irt lab Irt software

Irt software Nvu tutorial

Nvu tutorial Amd r700

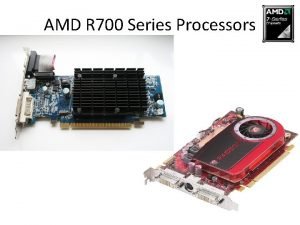

Amd r700 Vishal sundaram

Vishal sundaram Vishal dasari

Vishal dasari Vishal manghnani

Vishal manghnani U fork with plumb bob

U fork with plumb bob Vishal thakkar nose

Vishal thakkar nose Dr vishal jaiswal

Dr vishal jaiswal Dr vishal sharma

Dr vishal sharma Blake patel only connect

Blake patel only connect Plasma cell dyscrasia

Plasma cell dyscrasia Vishal gupta bits pilani

Vishal gupta bits pilani Link tree vishal tiwari

Link tree vishal tiwari Entanglement

Entanglement Carley nuzzo

Carley nuzzo Henning fjell johansen

Henning fjell johansen Henning kurz

Henning kurz Henning kurz

Henning kurz Henning fjell johansen

Henning fjell johansen Banana sunrise chair

Banana sunrise chair Matthias henning

Matthias henning Henning fjell johansen familie

Henning fjell johansen familie Henning kurz

Henning kurz Wade henning

Wade henning Henning hermjakob

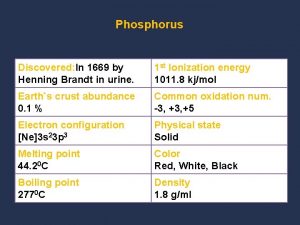

Henning hermjakob Chemical properties of phosphorus

Chemical properties of phosphorus Laura henning

Laura henning Henning jørgensen aau

Henning jørgensen aau