Heidi Schellman for the Computing Consortium DUNE COMPUTING

- Slides: 23

DUNE COMPUTING UPDATE

Heidi Schellman for the Computing Consortium

2 talks

- Discussion of the Computing Consortium (Schellman)
  - Explore and design tools and infrastructure needed for DUNE computing
  - Interface with DAQ, algorithms and physics groups
  - Analogous to other hardware consortia: a subset of collaborating groups with strong commitment to the computing project
- Discussion of the Computing Contributions Board (Clarke)
  - Computing resources are spread throughout the collaboration
  - Contributing storage and CPU can be done without huge local effort
  - Many more institutions/countries can contribute (and often already have)

LBNC - December 2019

Building the Computing Consortium

Series of workshops

- October '18: Kickoff workshop in Edinburgh
- DUNE collaboration meetings in January and May
- August '19: Data Model workshop at BNL
  - Worked through interfaces with DAQ and databases
- September '19: Computing Model workshop at FNAL, joint with GDB
  - Workflow use cases
  - Progress on uniform authentication
  - Draft storage model
- December '19: Database workshop at Colorado State
  - Hardware databases
  - Conditions database
- Formation of Frameworks task force (Andrew Norman and Paul Laycock)
- Formation of Computing Contributions Board (Peter Clarke, next talk)

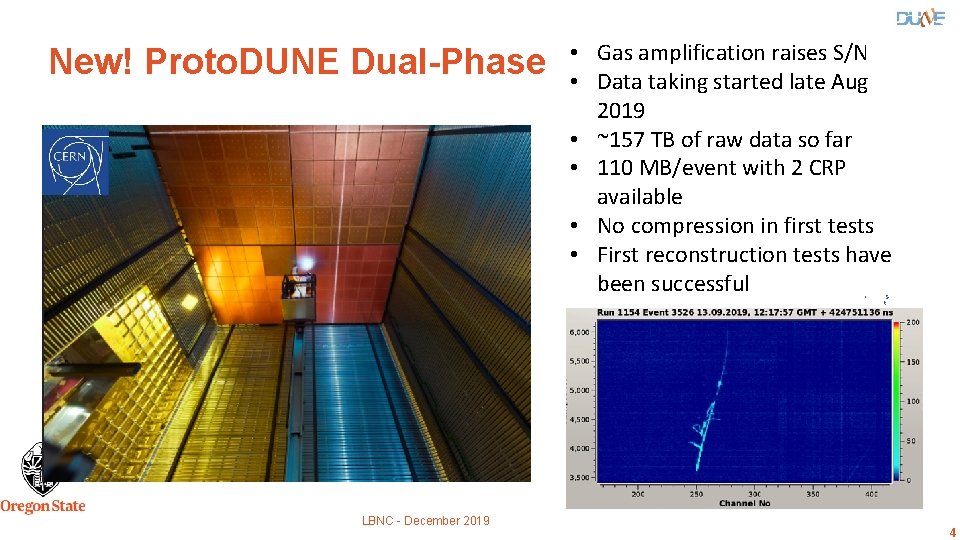

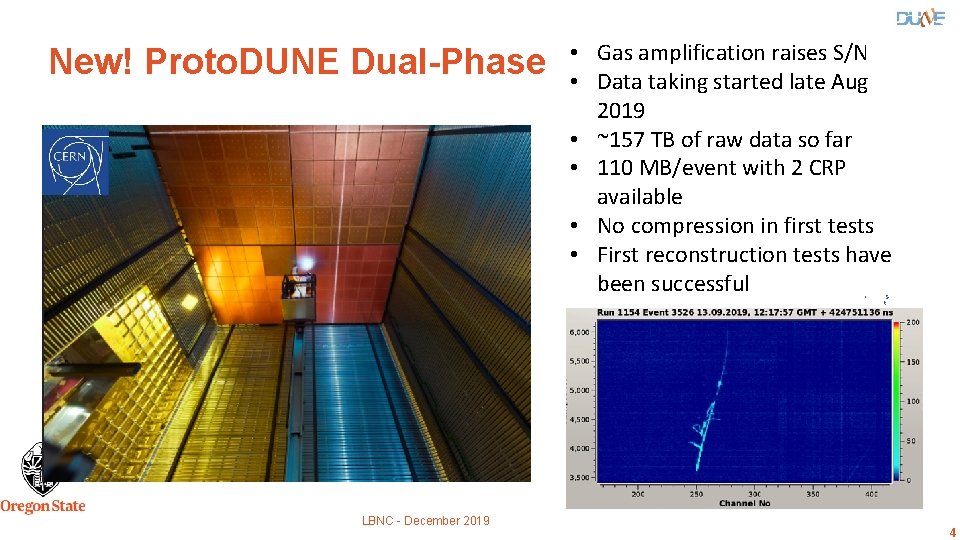

New! ProtoDUNE Dual-Phase

- Gas amplification raises S/N
- Data taking started late Aug 2019
- ~157 TB of raw data so far
- 110 MB/event with 2 CRP available
- No compression in first tests
- First reconstruction tests have been successful

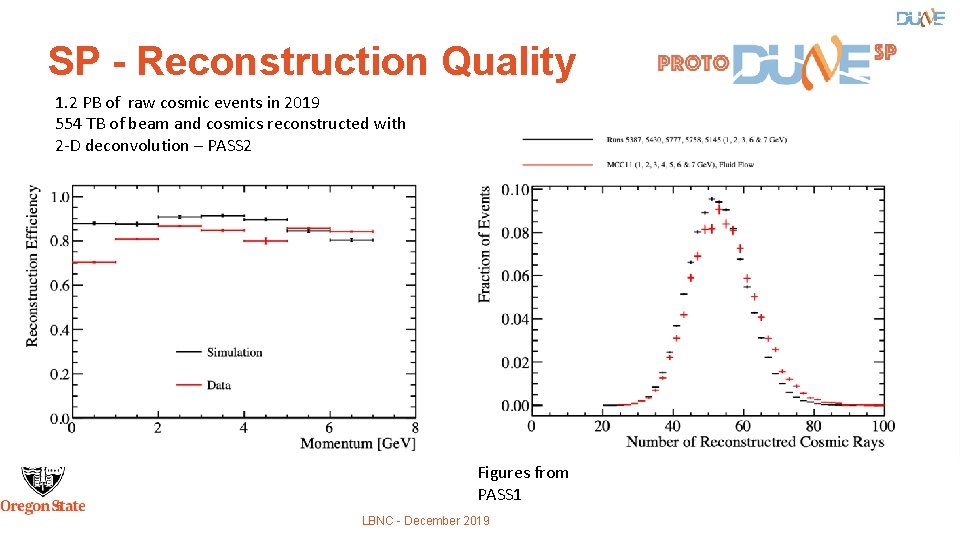

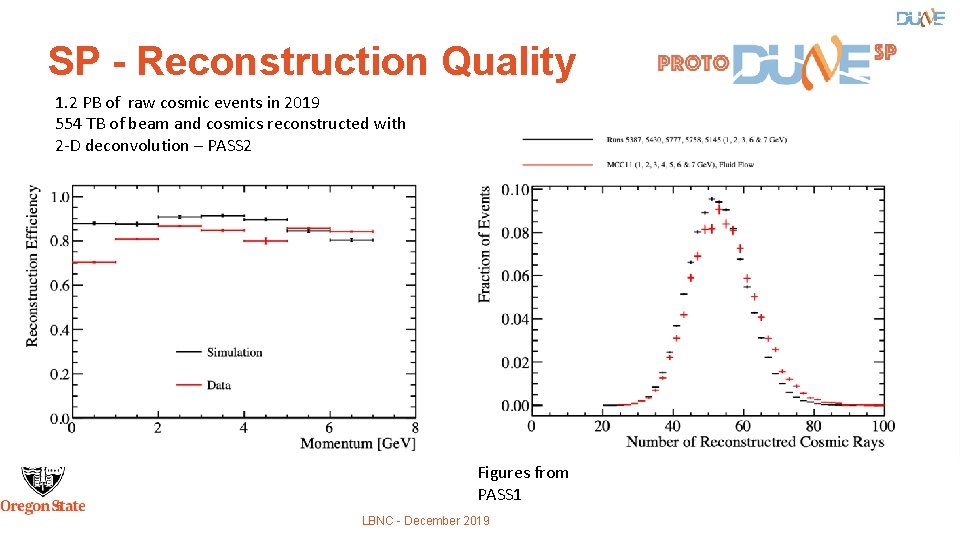

SP - Reconstruction Quality

- 1.2 PB of raw cosmic events in 2019
- 554 TB of beam and cosmics reconstructed with 2-D deconvolution (PASS 2)
- Figures from PASS 1

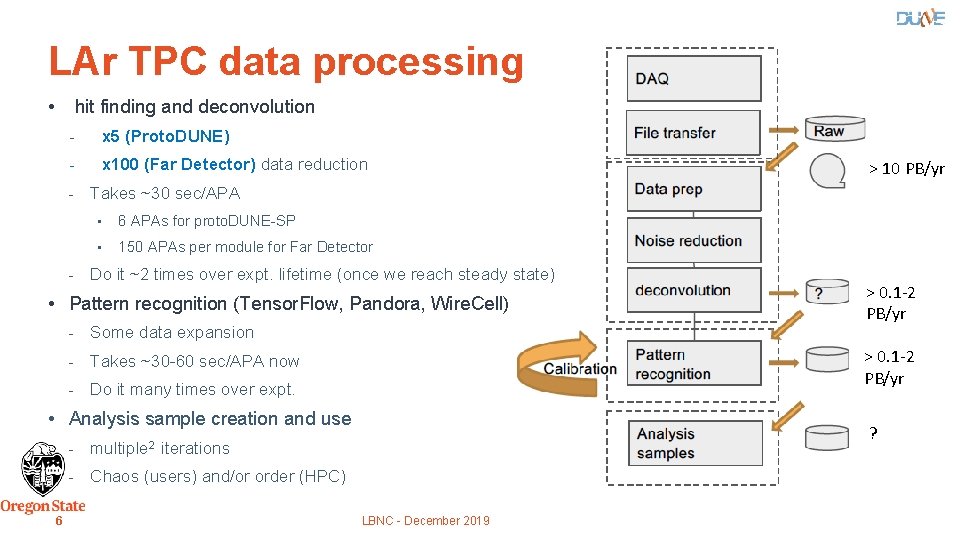

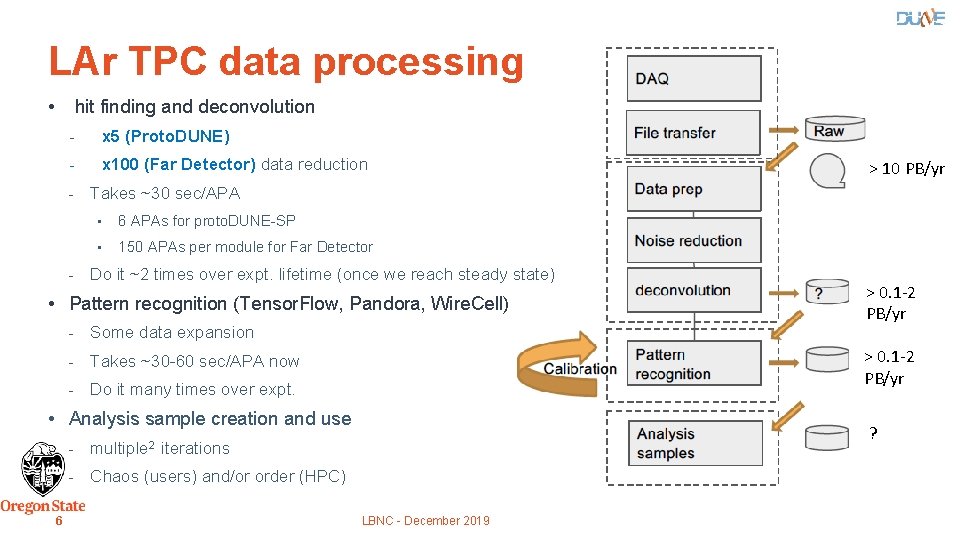

LAr TPC data processing

- Hit finding and deconvolution
  - x5 (ProtoDUNE), x100 (Far Detector) data reduction (> 10 PB/yr)
  - Takes ~30 sec/APA
    - 6 APAs for ProtoDUNE-SP
    - 150 APAs per module for Far Detector
  - Do it ~2 times over expt. lifetime (once we reach steady state)
- Pattern recognition (TensorFlow, Pandora, WireCell)
  - Some data expansion (> 0.1-2 PB/yr)
  - Takes ~30-60 sec/APA now
  - Do it many times over expt.
- Analysis sample creation and use (> 0.1-2 PB/yr)
  - Multiple (2?) iterations
  - Chaos (users) and/or order (HPC)

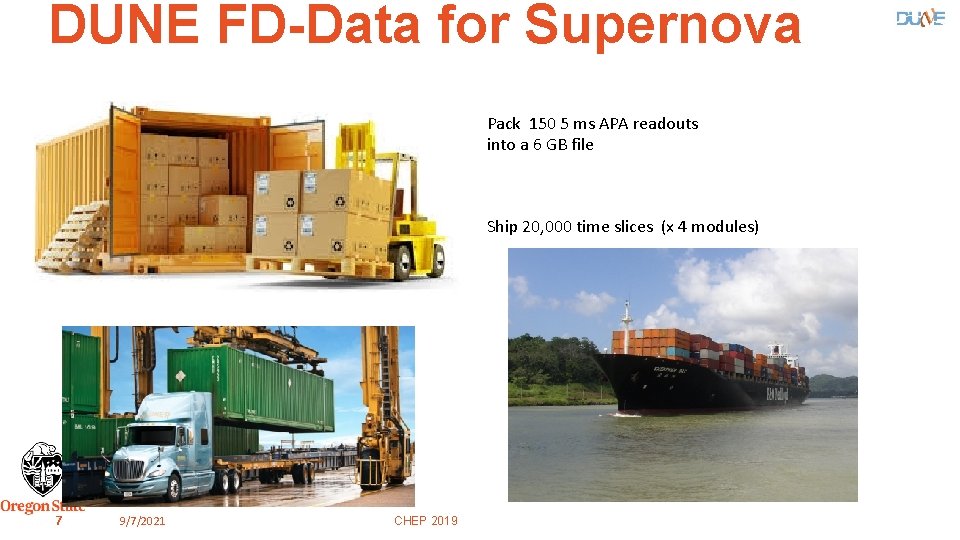

DUNE FD-Data for Supernova

- Pack 150 5 ms APA readouts into a 6 GB file
- Ship 20,000 time slices (x 4 modules)

CHEP 2019
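
As a rough check of the scale these numbers imply, the sketch below simply multiplies them out. It assumes that each 6 GB file holds one 5 ms time slice across 150 APAs and that the 20,000 slices are contiguous in time; both are readings of the slide rather than stated facts, and the function name is illustrative.

```python
def supernova_volume(n_slices=20_000, slice_ms=5, file_gb=6, n_modules=4):
    """Return (seconds covered, TB per module, TB for all modules)."""
    seconds = n_slices * slice_ms / 1000        # time window covered by the slices
    tb_per_module = n_slices * file_gb / 1000   # 1 TB = 1000 GB
    return seconds, tb_per_module, tb_per_module * n_modules


seconds, tb_module, tb_total = supernova_volume()
print(f"{seconds:.0f} s window, {tb_module:.0f} TB per module, {tb_total:.0f} TB total")
```

Under those assumptions a single supernova readout is of order 100 TB per module, which is why it cannot be handled as one ordinary file.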

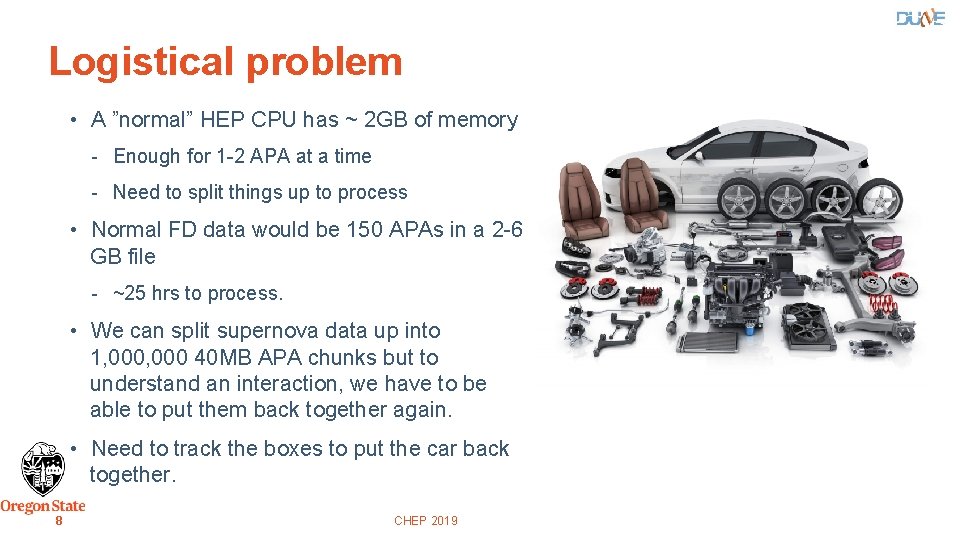

Logistical problem

- A “normal” HEP CPU has ~2 GB of memory
  - Enough for 1-2 APAs at a time
  - Need to split things up to process
- Normal FD data would be 150 APAs in a 2-6 GB file
  - ~25 hrs to process
- We can split supernova data up into 1,000 40 MB APA chunks, but to understand an interaction we have to be able to put them back together again.
- Need to track the boxes to put the car back together.
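
The "track the boxes" requirement can be illustrated with a minimal bookkeeping sketch: before reassembling a time slice, confirm that every APA chunk for it has arrived. The `(time_slice, apa_index)` keys are hypothetical and do not reflect any actual DUNE file-naming or catalog scheme.

```python
from collections import defaultdict


def missing_chunks(chunks, n_apas):
    """Map each time slice to the sorted APA indices that have not arrived.

    `chunks` is an iterable of (time_slice, apa_index) pairs; both fields
    are hypothetical bookkeeping keys used only for this illustration.
    """
    seen = defaultdict(set)
    for time_slice, apa in chunks:
        seen[time_slice].add(apa)
    expected = set(range(n_apas))
    return {ts: sorted(expected - apas) for ts, apas in seen.items() if expected - apas}


# Toy example with 3 APAs per slice: slice 0 is complete, slice 1 lacks APA 2.
arrived = [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1)]
print(missing_chunks(arrived, n_apas=3))
```

A real system would also need to record where each chunk is stored and which processing version produced it; this sketch covers only the completeness check.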

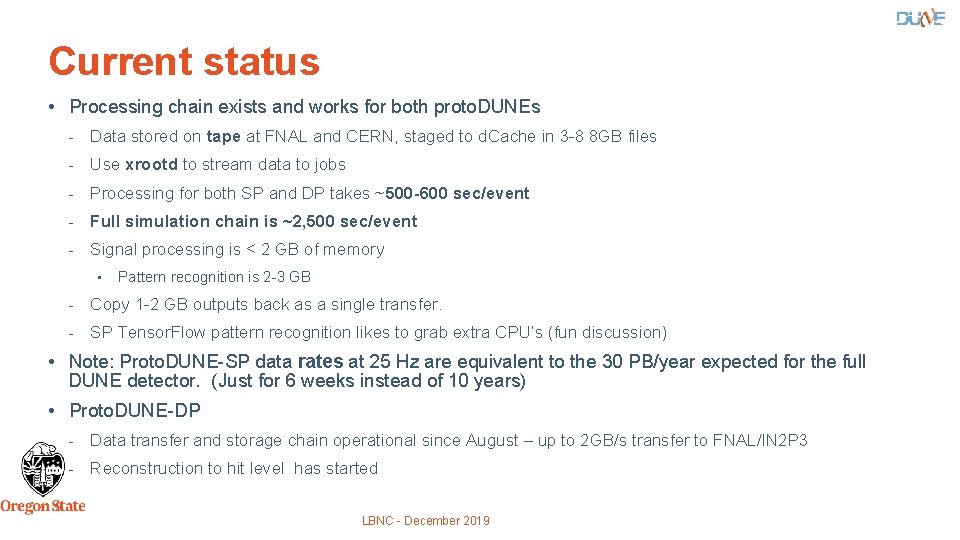

Current status

- Processing chain exists and works for both ProtoDUNEs
  - Data stored on tape at FNAL and CERN, staged to dCache in 3-8 GB files
  - Use xrootd to stream data to jobs
  - Processing for both SP and DP takes ~500-600 sec/event
  - Full simulation chain is ~2,500 sec/event
  - Signal processing is < 2 GB of memory
    - Pattern recognition is 2-3 GB
  - Copy 1-2 GB outputs back as a single transfer
  - SP TensorFlow pattern recognition likes to grab extra CPUs (fun discussion)
- Note: ProtoDUNE-SP data rates at 25 Hz are equivalent to the 30 PB/year expected for the full DUNE detector (just for 6 weeks instead of 10 years)
- ProtoDUNE-DP
  - Data transfer and storage chain operational since August, with up to 2 GB/s transfer to FNAL/IN2P3
  - Reconstruction to hit level has started

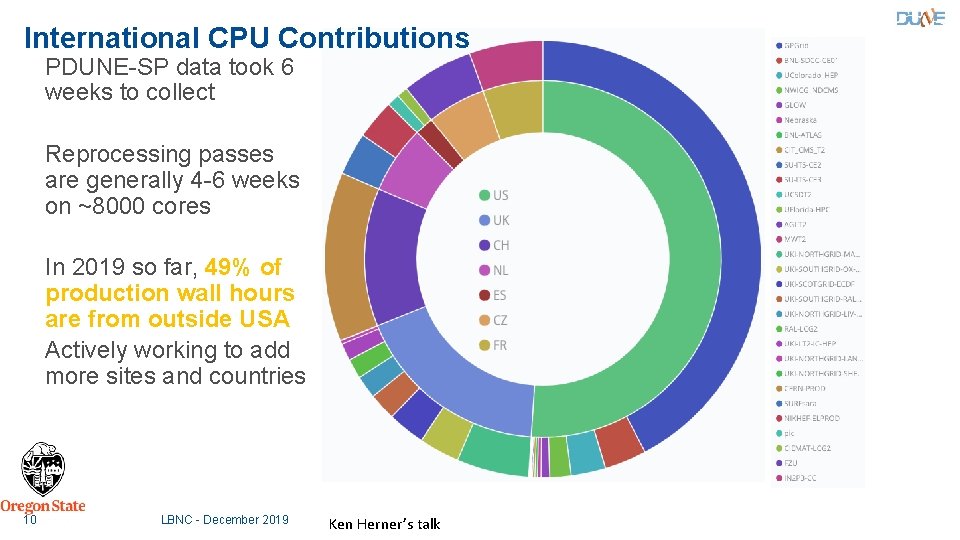

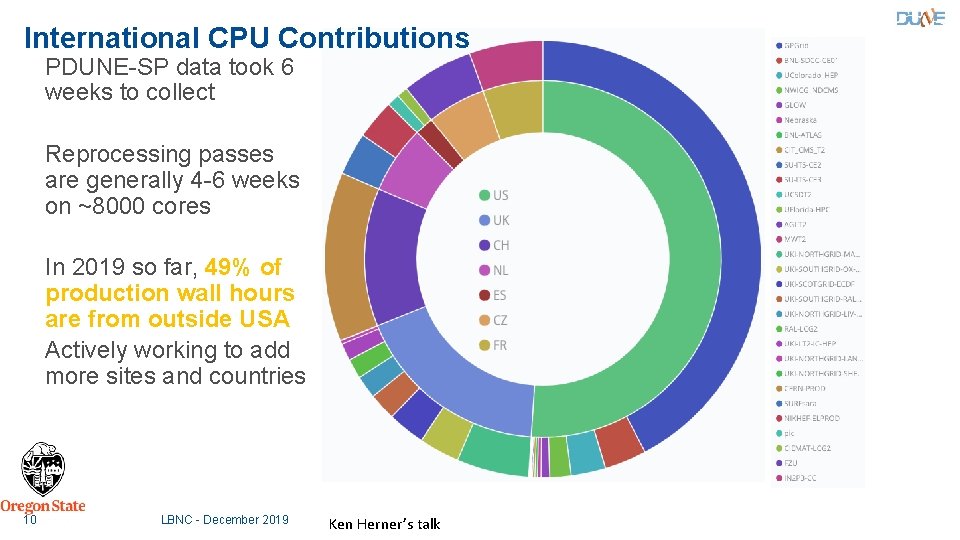

International CPU Contributions

- PDUNE-SP data took 6 weeks to collect
- Reprocessing passes are generally 4-6 weeks on ~8000 cores
- In 2019 so far, 49% of production wall hours are from outside USA
- Actively working to add more sites and countries

Ken Herner's talk

Future compute model draft

- Tape archive for raw data at two places (FNAL + external to US)
  - Second site may be a consortium rather than a single site
  - Currently FNAL/CERN
- Important derived data sets are disk resident and controlled by Rucio
  - Minimum of two copies in at least 2 regions
  - This requires strict attention to cataloging and retention policies
- Data tracked through a replacement for the existing SAM system and Rucio
- Data delivered either by local caches or through streaming (xrootd)
- Job submission systems being evaluated
  - FNAL POMS/GlideinWMS submissions work
  - Demonstration at RAL of running a DIRAC job using SAM for data delivery
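
The "minimum of two copies in at least 2 regions" rule can be expressed as a simple check over a dataset's replica list. This is a minimal sketch in plain Python, not Rucio's API; the site and region labels are placeholders, and treating "copies" as distinct sites is an assumption.

```python
def meets_replica_policy(replicas, min_copies=2, min_regions=2):
    """Check the draft placement rule for one dataset.

    `replicas` is a list of (site, region) pairs. Copies are counted as
    distinct sites, which is an assumption made for this illustration.
    """
    sites = {site for site, _ in replicas}
    regions = {region for _, region in replicas}
    return len(sites) >= min_copies and len(regions) >= min_regions


print(meets_replica_policy([("site_a", "us"), ("site_b", "europe")]))
print(meets_replica_policy([("site_a", "us"), ("site_c", "us")]))
```

The second call fails the check because both copies sit in the same region, which is the case the policy is meant to exclude.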

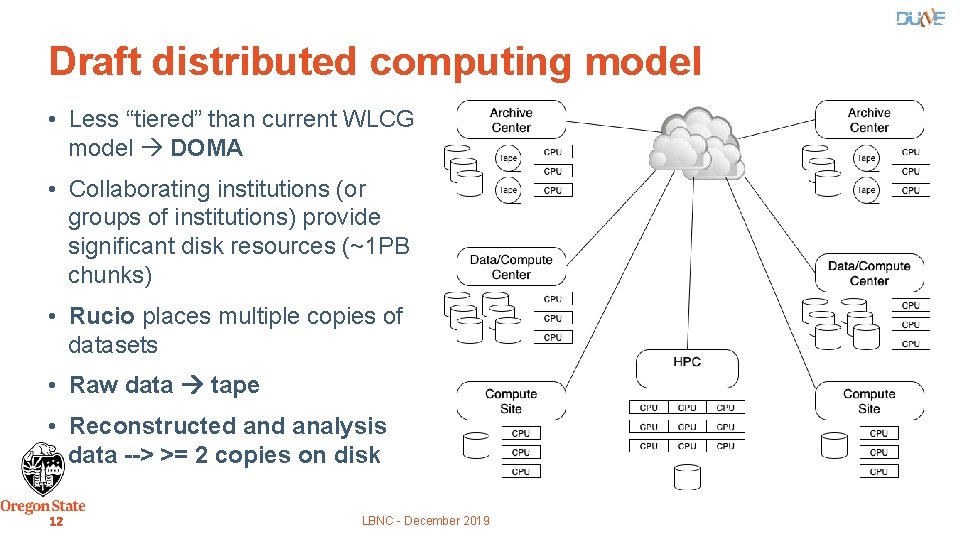

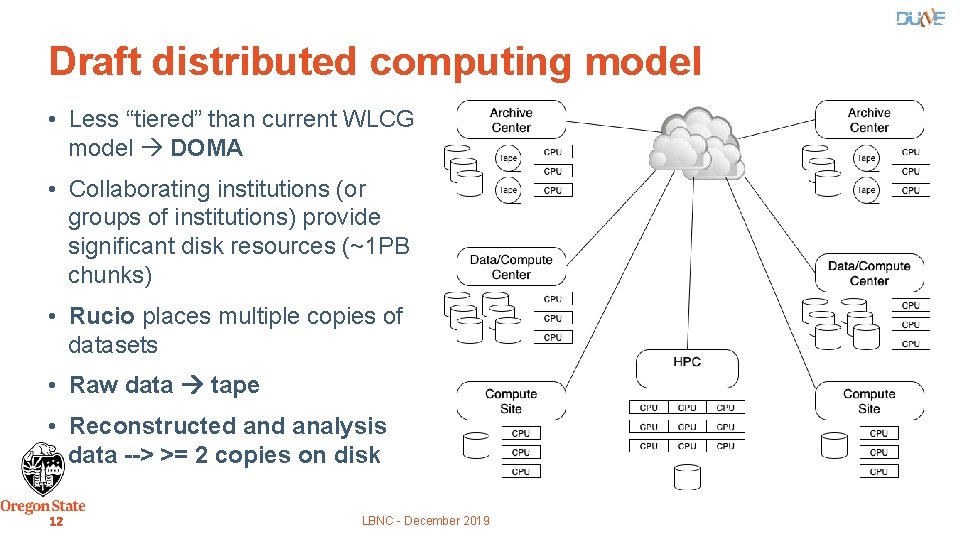

Draft distributed computing model

- Less “tiered” than current WLCG model (DOMA)
- Collaborating institutions (or groups of institutions) provide significant disk resources (~1 PB chunks)
- Rucio places multiple copies of datasets
  - Raw data --> tape
  - Reconstructed analysis data --> >= 2 copies on disk

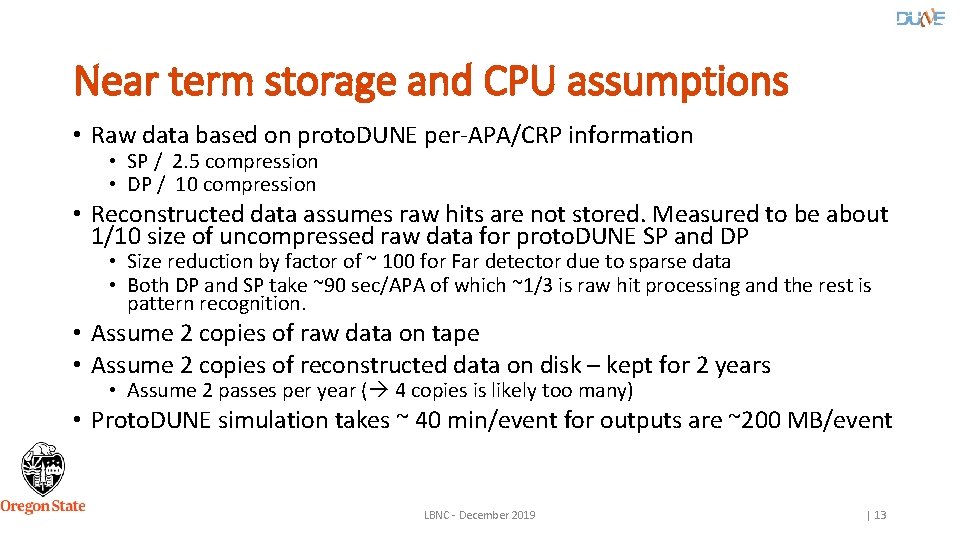

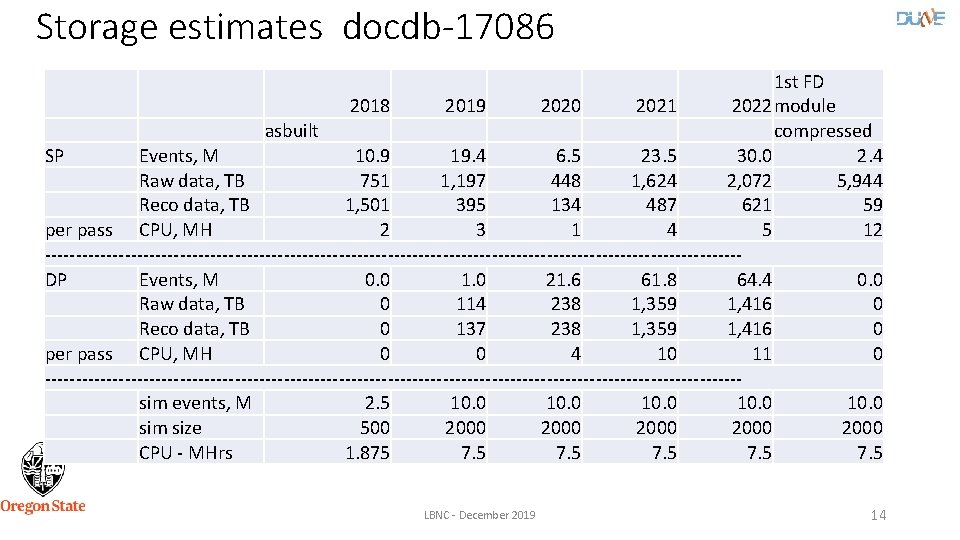

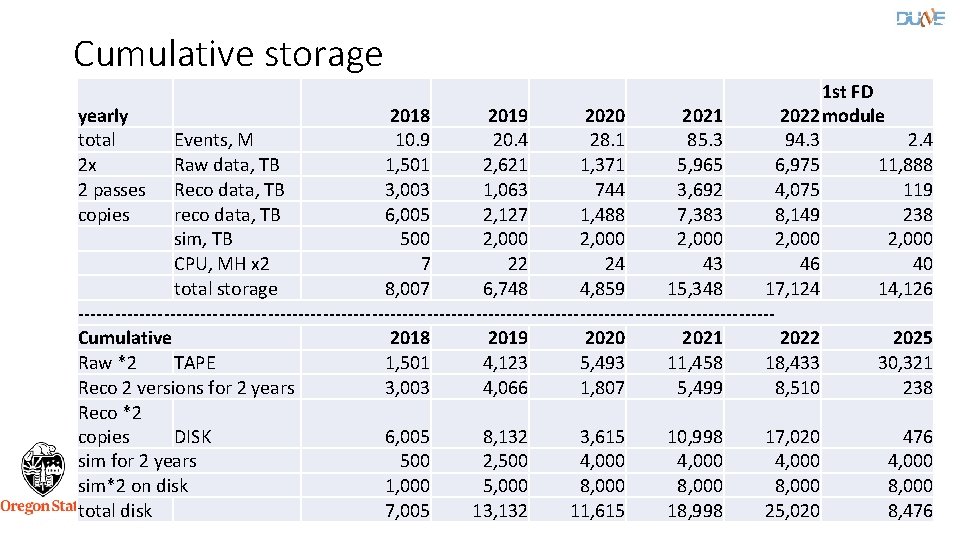

Near term storage and CPU assumptions

- Raw data based on ProtoDUNE per-APA/CRP information
  - SP / 2.5 compression
  - DP / 10 compression
- Reconstructed data assumes raw hits are not stored. Measured to be about 1/10 the size of uncompressed raw data for ProtoDUNE SP and DP
  - Size reduction by a factor of ~100 for the Far Detector due to sparse data
- Both DP and SP take ~90 sec/APA, of which ~1/3 is raw hit processing and the rest is pattern recognition.
- Assume 2 copies of raw data on tape
- Assume 2 copies of reconstructed data on disk, kept for 2 years
- Assume 2 passes per year (4 copies is likely too many)
- ProtoDUNE simulation takes ~40 min/event; outputs are ~200 MB/event
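
To see how the ~90 sec/APA assumption turns into CPU per reconstruction pass, here is a minimal sketch. It assumes every event reads out all 6 ProtoDUNE-SP APAs and takes the 19.4 M SP events listed for 2019 in the storage estimates; the function name is illustrative and this is not necessarily how the docdb numbers were produced.

```python
def cpu_mhours_per_pass(events_millions, apas_per_event, sec_per_apa=90):
    """Reconstruction CPU for one pass, in millions of CPU-hours."""
    cpu_seconds = events_millions * 1e6 * apas_per_event * sec_per_apa
    return cpu_seconds / 3600 / 1e6


# 19.4 M ProtoDUNE-SP events, 6 APAs each, ~90 sec/APA
print(round(cpu_mhours_per_pass(19.4, 6), 2))
```

The result, about 2.9 million CPU-hours, is close to the ~3 MH per pass quoted for SP in 2019.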

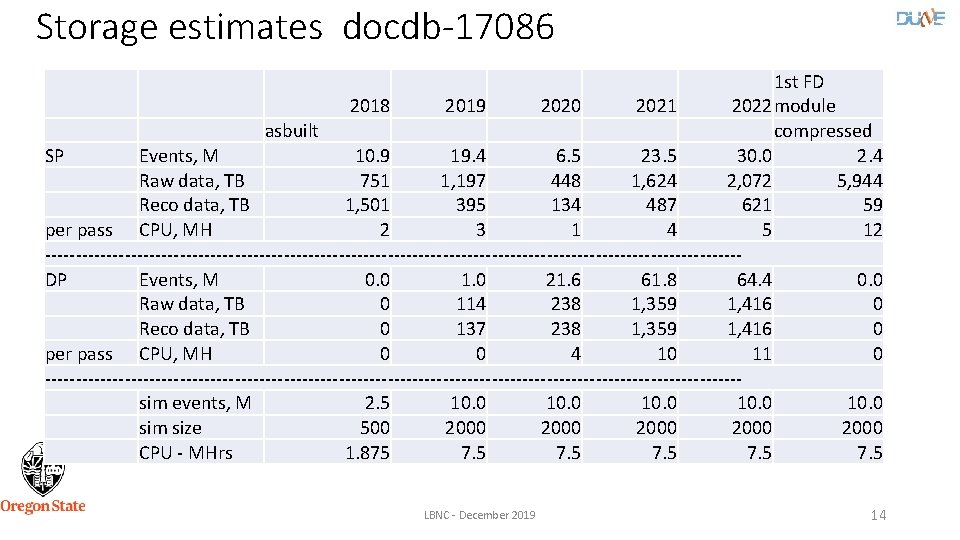

Storage estimates (docdb-17086)

| | 2018 | 2019 | 2020 | 2021 | 2022 | 1st FD module, as-built, compressed |
|---|---|---|---|---|---|---|
| SP events, M | 10.9 | 19.4 | 6.5 | 23.5 | 30.0 | 2.4 |
| SP raw data, TB | 751 | 1,197 | 448 | 1,624 | 2,072 | 5,944 |
| SP reco data, TB | 1,501 | 395 | 134 | 487 | 621 | 59 |
| SP per pass CPU, MH | 2 | 3 | 1 | 4 | 5 | 12 |
| DP events, M | 0.0 | 1.0 | 21.6 | 61.8 | 64.4 | 0.0 |
| DP raw data, TB | 0 | 114 | 238 | 1,359 | 1,416 | 0 |
| DP reco data, TB | 0 | 137 | 238 | 1,359 | 1,416 | 0 |
| DP per pass CPU, MH | 0 | 0 | 4 | 10 | 11 | 0 |
| Sim events, M | 2.5 | 10.0 | | | | |
| Sim size, TB | 500 | 2,000 | 2,000 | | | |
| Sim CPU, MHrs | 1.875 | 7.5 | 7.5 | | | |

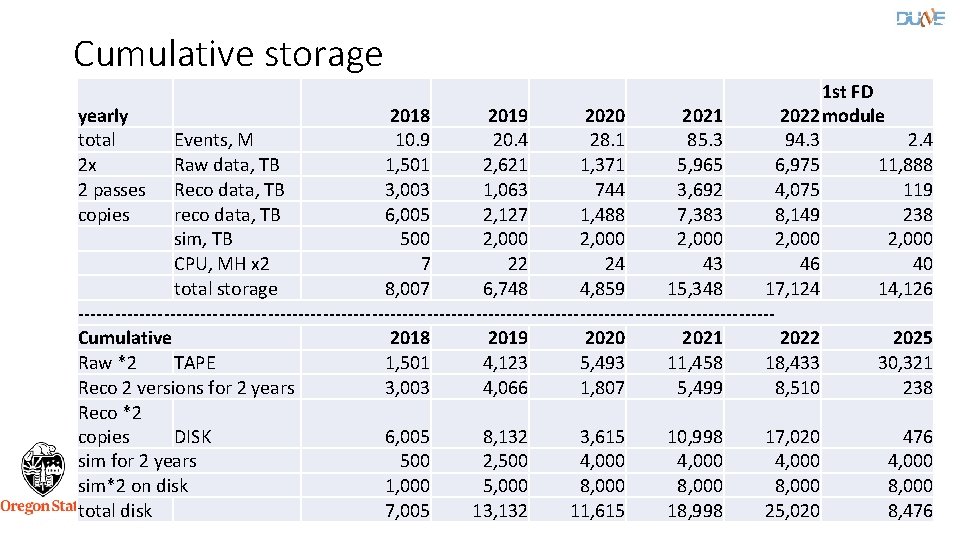

Cumulative storage

Yearly totals

| | 2018 | 2019 | 2020 | 2021 | 2022 | 1st FD module |
|---|---|---|---|---|---|---|
| Events, M | 10.9 | 20.4 | 28.1 | 85.3 | 94.3 | 2.4 |
| 2 x raw data, TB | 1,501 | 2,621 | 1,371 | 5,965 | 6,975 | 11,888 |
| 2 passes reco data, TB | 3,003 | 1,063 | 744 | 3,692 | 4,075 | 119 |
| 2 copies reco data, TB | 6,005 | 2,127 | 1,488 | 7,383 | 8,149 | 238 |
| Sim, TB | 500 | 2,000 | 2,000 | | | |
| CPU, MH x 2 | 7 | 22 | 24 | 43 | 46 | 40 |
| Total storage, TB | 8,007 | 6,748 | 4,859 | 15,348 | 17,124 | 14,126 |

Cumulative

| | 2018 | 2019 | 2020 | 2021 | 2022 | 2025 |
|---|---|---|---|---|---|---|
| Raw x 2, TAPE | 1,501 | 4,123 | 5,493 | 11,458 | 18,433 | 30,321 |
| Reco, 2 versions for 2 years | 3,003 | 4,066 | 1,807 | 5,499 | 8,510 | 238 |
| Reco x 2 copies, DISK | 6,005 | 8,132 | 3,615 | 10,998 | 17,020 | 476 |
| Sim for 2 years | 500 | 2,500 | 4,000 | | | |
| Sim x 2 on disk | 1,000 | 5,000 | 8,000 | | | |
| Total disk | 7,005 | 13,132 | 11,615 | 18,998 | 25,020 | 8,476 |
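
The cumulative tape row is a running sum of the yearly "2 x raw data" row, which the short sketch below reproduces. The values are copied from the slide; mapping the final period (1st FD module) to the 2025 column is an assumption based on the column order.

```python
from itertools import accumulate

# "2 x Raw data, TB" per period, copied from the yearly totals
periods = ["2018", "2019", "2020", "2021", "2022", "1st FD module"]
raw_x2_tb = [1501, 2621, 1371, 5965, 6975, 11888]

cumulative_tape_tb = list(accumulate(raw_x2_tb))
for period, total in zip(periods, cumulative_tape_tb):
    print(f"{period:>13}: {total:>6,} TB")
```

The running sum ends at 30,321 TB, matching the last column of the tape row; the 2019 entry comes out 1 TB below the slide's 4,123, presumably because the slide was computed from unrounded inputs.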

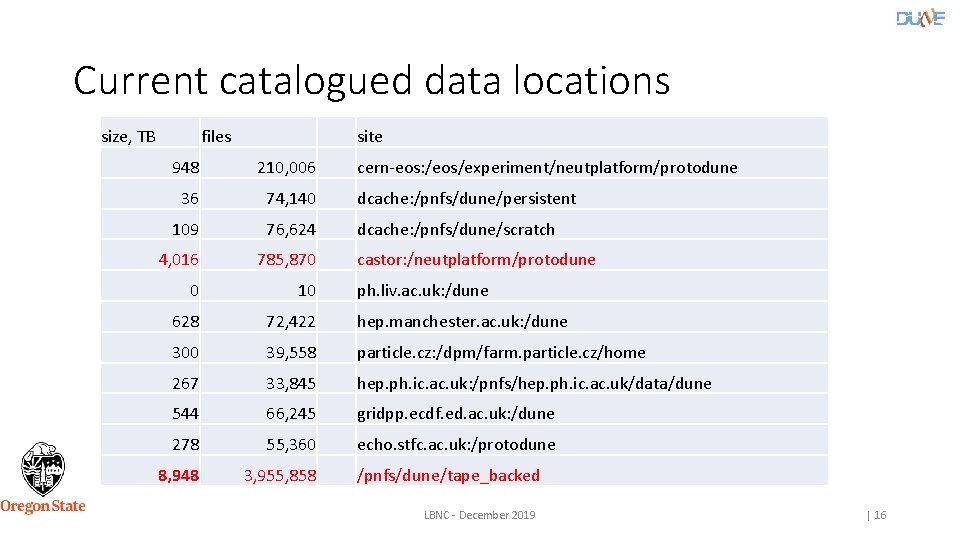

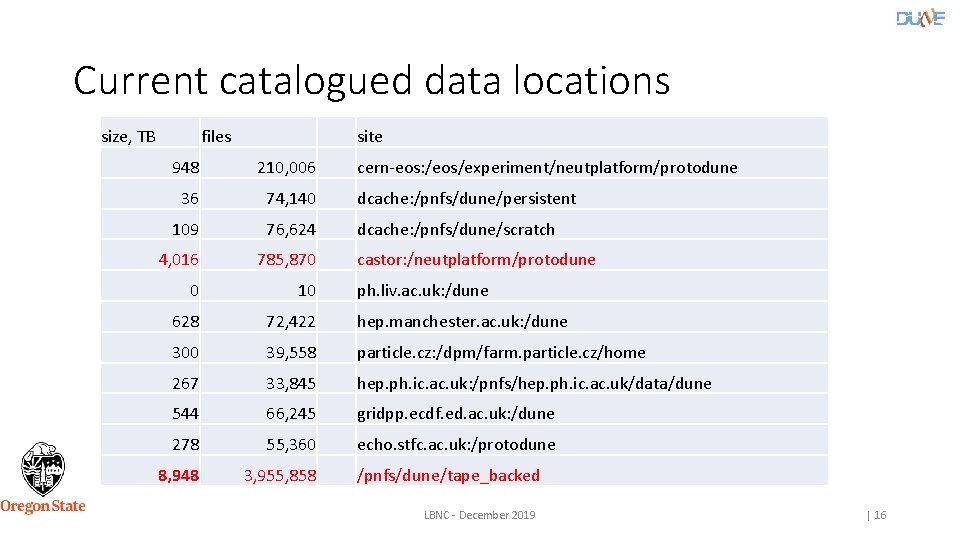

Current catalogued data locations

| Size, TB | Files | Site |
|---|---|---|
| 948 | 210,006 | cern-eos:/eos/experiment/neutplatform/protodune |
| 36 | 74,140 | dcache:/pnfs/dune/persistent |
| 109 | 76,624 | dcache:/pnfs/dune/scratch |
| 4,016 | 785,870 | |
| 0 | 10 | |
| 628 | 72,422 | hep.manchester.ac.uk:/dune |
| 300 | 39,558 | particle.cz:/dpm/farm.particle.cz/home |
| 267 | 33,845 | hep.ph.ic.ac.uk:/pnfs/hep.ph.ic.ac.uk/data/dune |
| 544 | 66,245 | gridpp.ecdf.ed.ac.uk:/dune |
| 278 | 55,360 | echo.stfc.ac.uk:/protodune |
| 8,948 | 3,955,858 | castor:/neutplatform/protodune |
| | | ph.liv.ac.uk:/dune |
| | | /pnfs/dune/tape_backed |

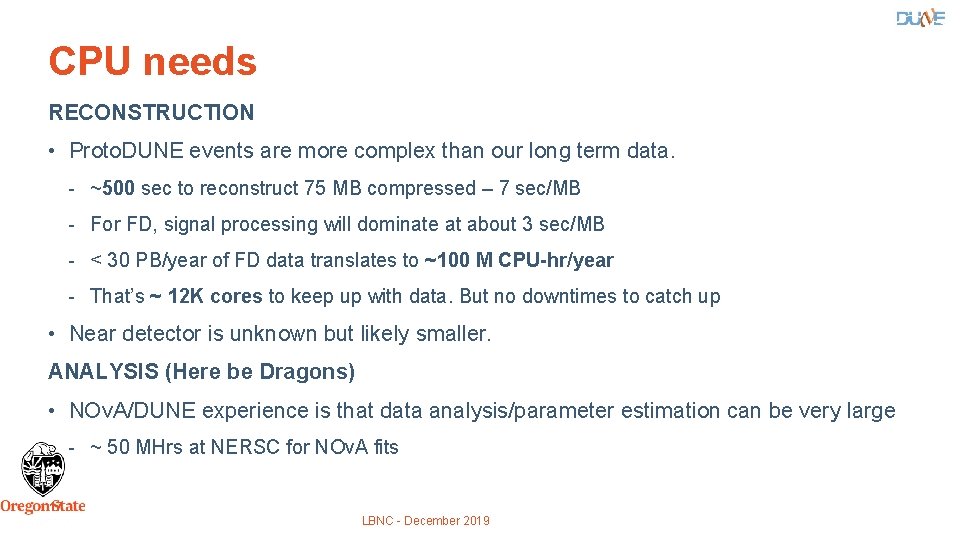

CPU needs

RECONSTRUCTION

- ProtoDUNE events are more complex than our long term data.
  - ~500 sec to reconstruct 75 MB compressed: 7 sec/MB
  - For FD, signal processing will dominate at about 3 sec/MB
  - < 30 PB/year of FD data translates to ~100 M CPU-hr/year
  - That's ~12 K cores to keep up with data, but with no downtimes to catch up
- Near detector is unknown but likely smaller.

ANALYSIS (Here be Dragons)

- NOvA/DUNE experience is that data analysis/parameter estimation can be very large
  - ~50 MHrs at NERSC for NOvA fits
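
Two of the reconstruction figures can be checked with one-line conversions: the per-megabyte cost of a ProtoDUNE event, and the number of cores that deliver ~100 M CPU-hours in a year. The sketch assumes continuous running (8760 hours per year, no downtime) and uses illustrative function names.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, assuming cores run continuously


def sec_per_mb(seconds_per_event, mb_per_event):
    """CPU seconds spent per megabyte of input data."""
    return seconds_per_event / mb_per_event


def cores_to_keep_up(cpu_hours_per_year):
    """Cores needed to deliver the given CPU-hours within one year."""
    return cpu_hours_per_year / HOURS_PER_YEAR


print(f"{sec_per_mb(500, 75):.1f} sec/MB")
print(f"{cores_to_keep_up(100e6):,.0f} cores")
```

Both results agree with the rounded values on the slide (about 7 sec/MB and about 12 K cores). Any downtime or reprocessing pushes the core count higher.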

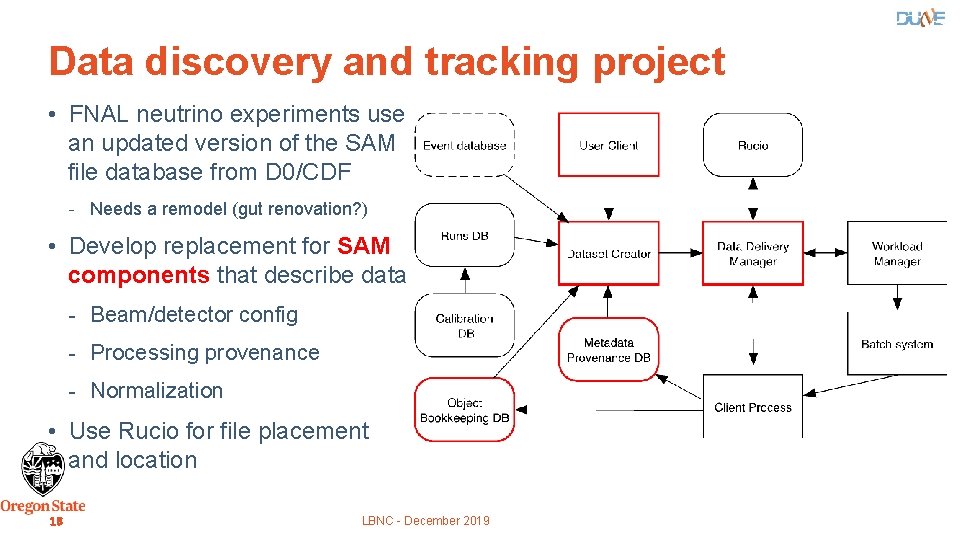

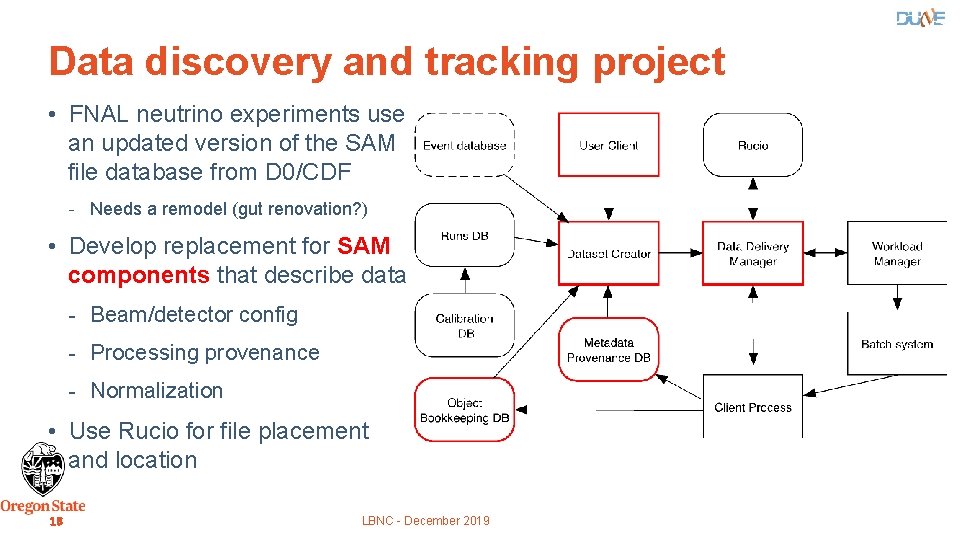

Data discovery and tracking project

- FNAL neutrino experiments use an updated version of the SAM file database from D0/CDF
  - Needs a remodel (gut renovation?)
- Develop replacement for SAM components that describe data
  - Beam/detector config
  - Processing provenance
  - Normalization
- Use Rucio for file placement and location
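
To make the split concrete, here is a purely illustrative sketch of a record that describes a file (configuration, provenance, normalization) while leaving replica locations to a separate service. The class, field names and example entries are all hypothetical; this is neither SAM's schema nor the design of its replacement.

```python
from dataclasses import dataclass, field


@dataclass
class FileDescription:
    """Hypothetical catalog record; field names are illustrative only."""
    name: str
    beam_config: str                              # beam configuration
    detector_config: str                          # detector configuration
    parents: list = field(default_factory=list)   # processing provenance
    n_events: int = 0                             # normalization


def ancestors(catalog, name):
    """Walk provenance links back to the files with no parents (raw data)."""
    record = catalog[name]
    if not record.parents:
        return {name}
    found = set()
    for parent in record.parents:
        found |= ancestors(catalog, parent)
    return found


catalog = {
    "raw_1": FileDescription("raw_1", "beam_on", "sp", n_events=100),
    "raw_2": FileDescription("raw_2", "beam_on", "sp", n_events=120),
    "reco_1": FileDescription("reco_1", "beam_on", "sp", parents=["raw_1", "raw_2"]),
}
print(sorted(ancestors(catalog, "reco_1")))
```

Keeping provenance in the description record means a derived file can always be traced back to its raw inputs, independent of where copies are stored.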

Long term personnel needs (based on LHCb experience): ~20 FTE

- Distributed Computing Development and Maintenance - 5.0 FTE
- Software and Computing Infrastructure Development and Maintenance - 6.0 FTE (includes DB development)
- Central Services Manager and Operators - 1.5 FTE
- Computing Shift Leaders - 1.5 FTE
- User Support - 1.0 FTE
- Application Managers and Librarians - 2.0 FTE
- Database Administration - 0.5 FTE
- Distributed Data Manager - 0.5 FTE
- Distributed Workload Manager - 0.5 FTE
- Distributed Production Manager - 0.5 FTE
- Overall Coordination - 2.0 FTE

Legend: Computing Professionals, Collaboration Physicists, User support

Unknowns for the future

- $$$: many of those people are computing specialists that need to be paid
- Near detector
  - Rate ~1 Hz, technology not yet decided, but software integration underway
  - Occupancies will be similar to ProtoDUNE at 1 Hz: O(1) PB/year?
- Processor technologies
  - HPCs
  - Less memory/more memory?
  - GPUs? << signal processing may love these!
- Storage technologies
  - Tape
  - Spinning disk
  - SSD
  - Something else?
- Networks: LHCONE + ESNET have successfully handled current loads

US consortium

- 5 national labs (ANL, BNL, FNAL, LBNL and SLAC)
- 5 US universities: Colorado State, Minnesota, Oregon State, Wichita State and William and Mary
- 3 thrusts
  - Databases: hardware and integrated design
  - Data model and algorithms for large scale computation on diverse hardware
  - Coding standards, build tools and training to ensure ability of collaborators to contribute to robust algorithms for reconstruction and analysis
- White paper this month.
- Formal proposal going in early in 2020.

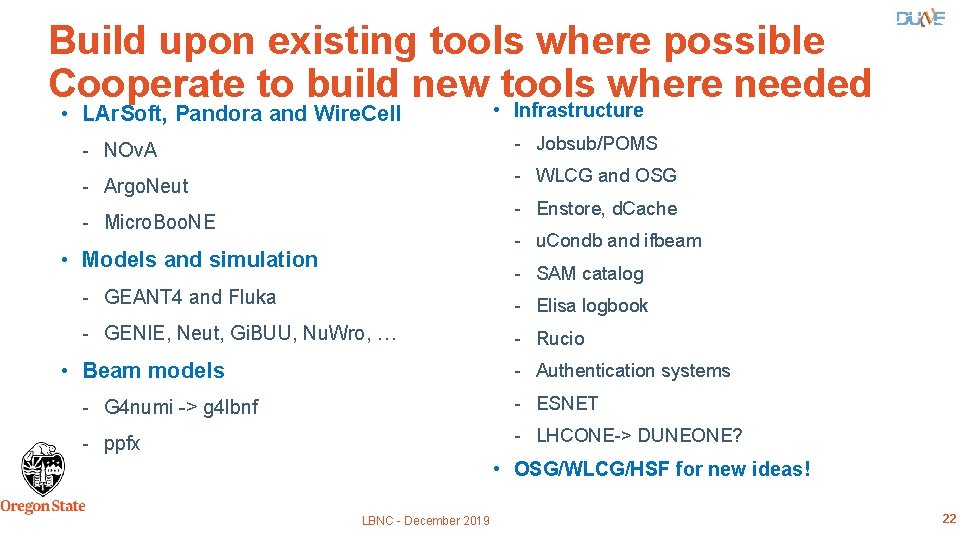

Build upon existing tools where possible. Cooperate to build new tools where needed.

- LArSoft, Pandora and WireCell
  - NOvA
  - ArgoNeut
  - MicroBooNE
- Models and simulation
  - GEANT4 and Fluka
  - GENIE, Neut, GiBUU, NuWro, …
- Beam models
  - G4numi -> g4lbnf
  - ppfx
- Infrastructure
  - Jobsub/POMS
  - WLCG and OSG
  - Enstore, dCache
  - uCondb and ifbeam
  - SAM catalog
  - Elisa logbook
  - Rucio
  - Authentication systems
  - ESNET
  - LHCONE -> DUNEONE?
- OSG/WLCG/HSF for new ideas!

Pete explains the growth of contributions