GISTexter: A System for Summarizing Text Documents


GISTexter: A System for Summarizing Text Documents

Sanda Harabagiu, Dan Moldovan, Paul Morarescu, Finley Lacatusu, Rada Mihalcea, Vasile Rus and Roxana Girju

- Department of Computer Sciences, The University of Texas at Austin, TX 78712-1188
- Dept. of Computer Science & Engr., Southern Methodist University, Dallas, TX 75275-0122
- Department of Computer Science, The University of Texas at Dallas, Richardson, TX 75083-0688
- Language Computer Corporation, 6440 N. Central Expressway, Dallas, TX 75206

Outline

1. Background
2. System Architecture
3. Single-Document Summaries
4. Multi-Document Summaries
5. Results and Conclusions

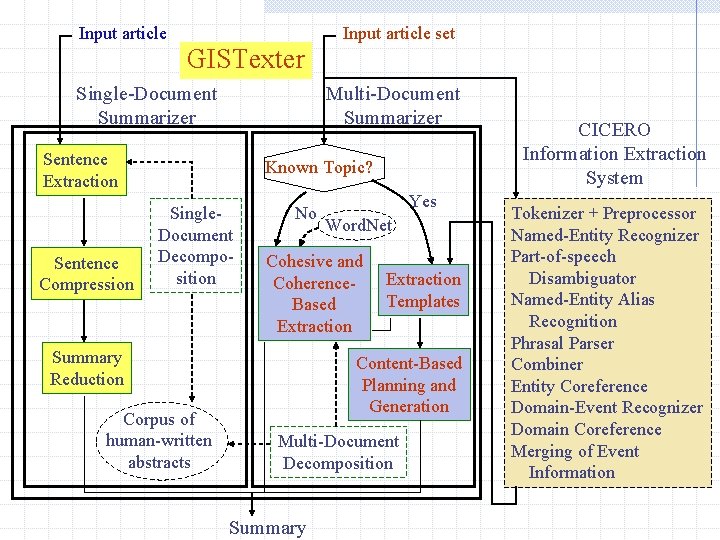

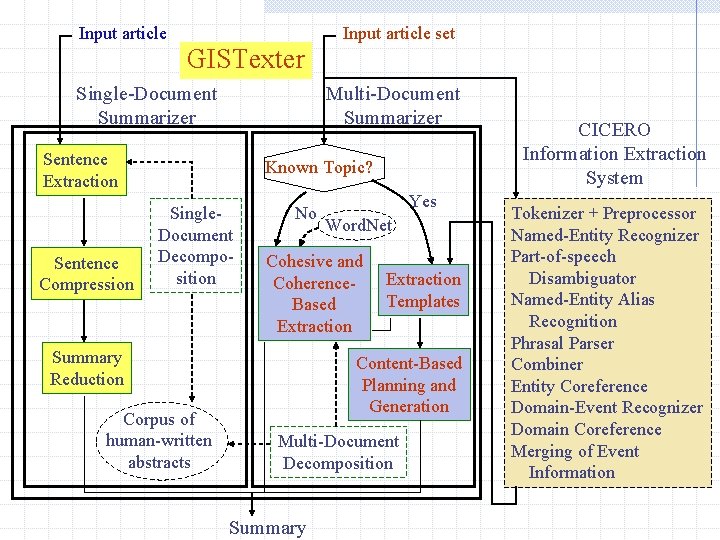

GISTexter: generating summaries that are as similar as possible to human-written abstracts. It rests on two assumptions:

1. Single-document summaries should extract the same information a human would consider when writing an abstract of the same document.
2. Multi-document summaries should capture the textual information shared across the document set.

Our interest: multi-document summaries applicable to question answering. This enables the use of IE technology, but IE needs domain information:

- use CICERO for topics that are already encoded in it;
- develop a back-up solution for all other topics: gisting information by combining cohesion and coherence indicators for sentence extraction.

What is gisting? An activity in which the information taken into account is less than the full information content available. Empirical principles:

- Named entities common to the set of documents are anchors for argument structures that act like ad-hoc templates.
- Cue phrases sometimes indicate coherence with related information that should be gleaned for the summary.
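The first empirical principle can be illustrated with a minimal sketch. It assumes each document has already been run through a named-entity recognizer. `common_entities`, `anchored_sentences` and the `min_docs` threshold are hypothetical names, and the substring test stands in for real entity matching.

```python
from typing import Dict, List, Set

def common_entities(doc_entities: List[Set[str]], min_docs: int) -> Set[str]:
    """Hypothetical helper: named entities mentioned in at least min_docs documents."""
    counts: Dict[str, int] = {}
    for ents in doc_entities:
        for e in ents:
            counts[e] = counts.get(e, 0) + 1
    return {e for e, c in counts.items() if c >= min_docs}

def anchored_sentences(sentences: List[str], anchors: Set[str]) -> List[str]:
    """Hypothetical helper: keep sentences mentioning at least one anchor entity
    (naive substring test, standing in for real entity matching)."""
    return [s for s in sentences if any(a in s for a in anchors)]
```

Entities shared by the whole set are the candidate anchors around which ad-hoc templates would be built.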

Figure: GISTexter architecture (input: article set; output: summary).

- Single-Document Summarizer: Sentence Extraction, Sentence Compression.
- Multi-Document Summarizer: Known Topic? Yes: Extraction Templates from CICERO, then Content-Based Planning and Generation. No: Cohesion- and Coherence-Based Extraction (using WordNet), then Summary Reduction.
- Corpus of human-written abstracts, used for Single-Document Decomposition and Multi-Document Decomposition.
- CICERO Information Extraction System: Tokenizer + Preprocessor, Named-Entity Recognizer, Part-of-Speech Disambiguator, Named-Entity Alias Recognition, Phrasal Parser, Combiner, Entity Coreference, Domain-Event Recognizer, Domain Coreference, Merging of Event Information.
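The "Known Topic?" decision in the multi-document summarizer can be pictured as a simple router. This is a sketch only. `KNOWN_TOPICS`, `summarize_set` and the two placeholder summarizers are hypothetical and stand in for the CICERO template path and the cohesion/coherence back-up path.

```python
from typing import List

# Hypothetical: topics for which CICERO already has domain rules.
KNOWN_TOPICS = {"natural disasters"}

def template_based_summary(docs: List[str], topic: str) -> str:
    # Placeholder for the CICERO path: fill extraction templates,
    # then run content-based planning and generation over them.
    return f"template summary of {len(docs)} documents ({topic})"

def extraction_based_summary(docs: List[str], topic: str) -> str:
    # Placeholder for the back-up path: cohesion- and coherence-based
    # sentence extraction followed by summary reduction.
    return f"extract summary of {len(docs)} documents ({topic})"

def summarize_set(docs: List[str], topic: str) -> str:
    """Route a document set through the 'Known Topic?' decision."""
    if topic.lower() in KNOWN_TOPICS:
        return template_based_summary(docs, topic)
    return extraction_based_summary(docs, topic)
```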

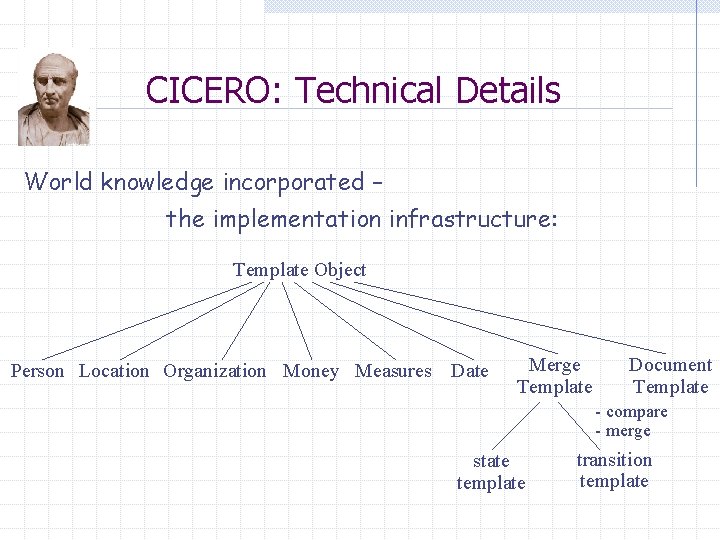

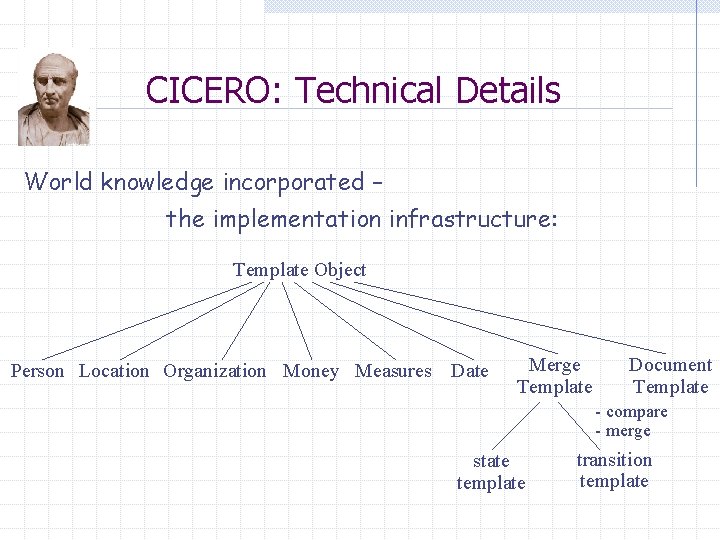

CICERO: Technical Details

World knowledge is incorporated into the implementation infrastructure, a hierarchy of templates:

- Template Object: Person, Location, Organization, Money, Measures, Date
- Merge Template
- Document Template, with the operations compare and merge
- state template, transition template
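The slide names only the template operations (compare, merge). Below is a minimal sketch of what they could look like for a slot-filling template object. It assumes two templates are compatible when they have the same kind and no conflicting slot values. `TemplateObject` and its fields are hypothetical, not CICERO's classes.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

@dataclass
class TemplateObject:
    """Hypothetical slot-filling template; kind is e.g. 'Person' or 'Location'."""
    kind: str
    slots: Dict[str, str] = field(default_factory=dict)

    def compare(self, other: "TemplateObject") -> bool:
        """Compatible if same kind and no slot holds conflicting values."""
        if self.kind != other.kind:
            return False
        return all(other.slots.get(k, v) == v for k, v in self.slots.items())

    def merge(self, other: "TemplateObject") -> Optional["TemplateObject"]:
        """Union of the slots if the templates are compatible, otherwise None."""
        if not self.compare(other):
            return None
        return TemplateObject(self.kind, {**self.slots, **other.slots})
```

Merging compatible partial templates gathered from different sentences is one way event information can be consolidated.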

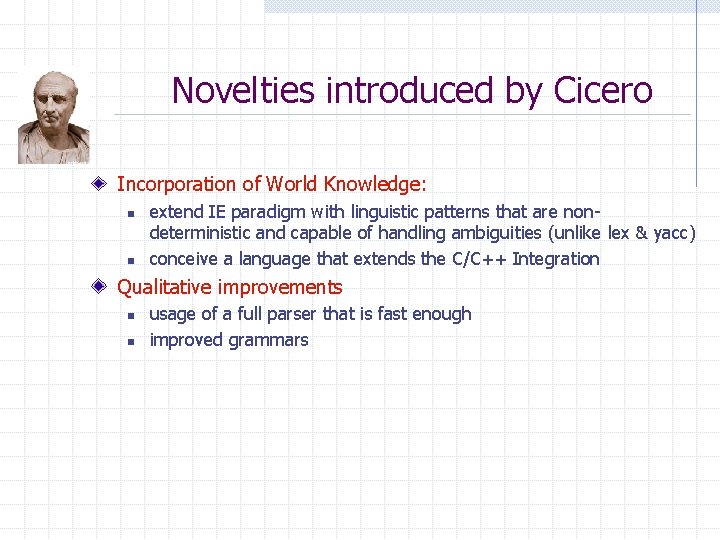

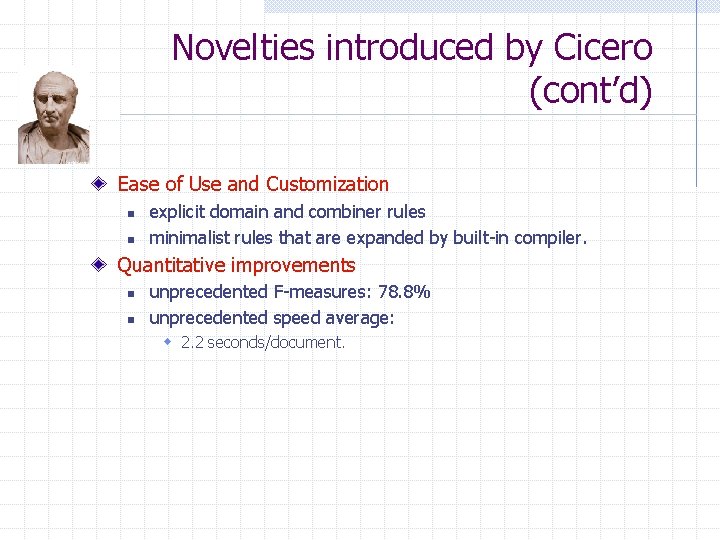

Novelties introduced by CICERO

- Incorporation of world knowledge:
  - extend the IE paradigm with linguistic patterns that are nondeterministic and capable of handling ambiguities (unlike lex & yacc)
  - conceive a language that extends C/C++
- Integration
- Qualitative improvements:
  - use of a full parser that is fast enough
  - improved grammars
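A lex-style scanner commits to one longest match per position. A nondeterministic matcher instead keeps every analysis. The sketch below is only an illustration of that difference, not CICERO's matcher. It assumes a toy lexicon in which a word may belong to several classes. `LEXICON`, `PATTERNS` and `all_matches` are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical lexicon: a word may belong to several classes (lexical ambiguity).
LEXICON: Dict[str, Set[str]] = {
    "ice": {"NOUN"},
    "storm": {"NOUN", "VERB"},
    "hit": {"VERB", "NOUN"},
    "towns": {"NOUN"},
}

# Hypothetical patterns as sequences of word classes.
PATTERNS: Dict[str, List[str]] = {
    "COMPOUND_NG": ["NOUN", "NOUN"],
    "NG": ["NOUN"],
    "CLAUSE": ["NOUN", "VERB", "NOUN"],
}

def all_matches(tokens: List[str]) -> List[Tuple[str, int, int]]:
    """Return every (label, start, end) where a pattern matches tokens[start:end].
    Nothing is committed: overlapping and ambiguous analyses are all kept."""
    matches = []
    for label, classes in PATTERNS.items():
        n = len(classes)
        for start in range(len(tokens) - n + 1):
            window = tokens[start:start + n]
            if all(cls in LEXICON.get(tok, set()) for tok, cls in zip(window, classes)):
                matches.append((label, start, start + n))
    return sorted(matches, key=lambda m: (m[1], m[2], m[0]))
```

For "ice storm hit towns" both clause readings survive: "ice storm(s) hit" and "storm hit towns". A later stage can then choose between them.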

Novelties introduced by CICERO (cont’d)

- Ease of use and customization:
  - explicit domain and combiner rules
  - minimalist rules that are expanded by a built-in compiler
- Quantitative improvements:
  - unprecedented F-measure: 78.8%
  - unprecedented speed: 2.2 seconds per document on average
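The 78.8% figure is an F-measure, which combines precision P and recall R. The slide gives neither P, R, nor the weighting. The sketch below therefore assumes the usual van Rijsbergen form, with beta = 1 (the balanced harmonic mean) as the default.

```python
def f_measure(precision: float, recall: float, beta: float = 1.0) -> float:
    """F = (1 + b^2) * P * R / (b^2 * P + R); beta = 1 gives the harmonic mean."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)
```

With equal precision and recall, F equals both. With P = 1.0 and R = 0.5, F1 = 2/3: the harmonic mean penalizes the weaker of the two.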

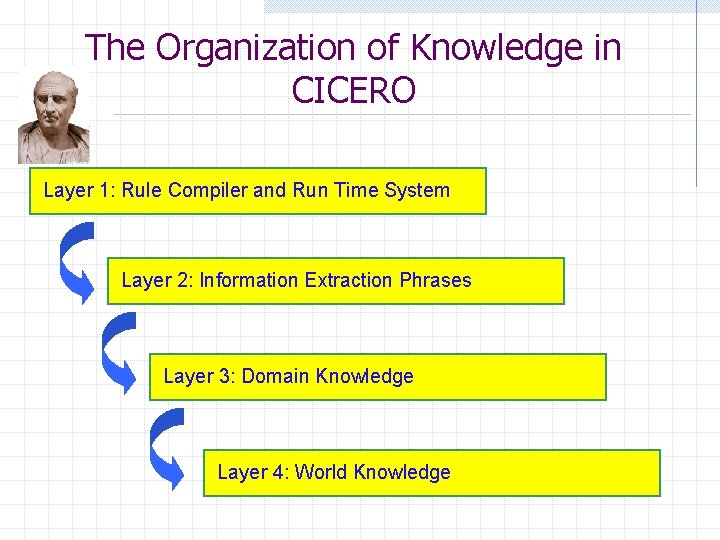

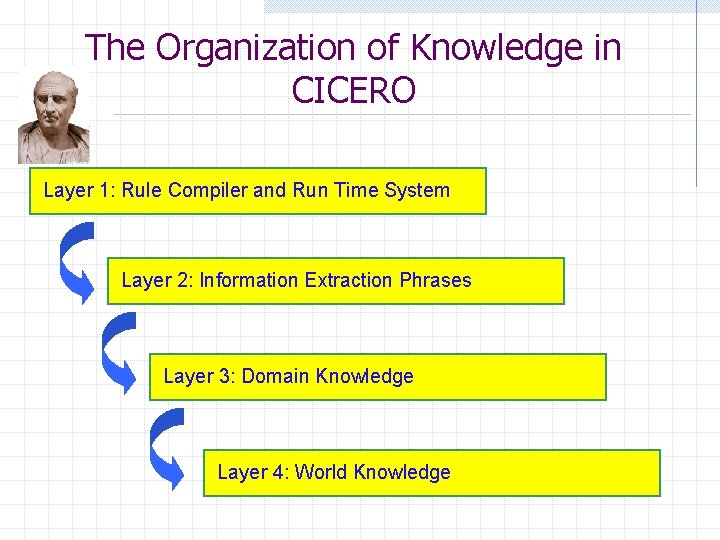

The Organization of Knowledge in CICERO

- Layer 1: Rule Compiler and Run-Time System
- Layer 2: Information Extraction Phrases
- Layer 3: Domain Knowledge
- Layer 4: World Knowledge

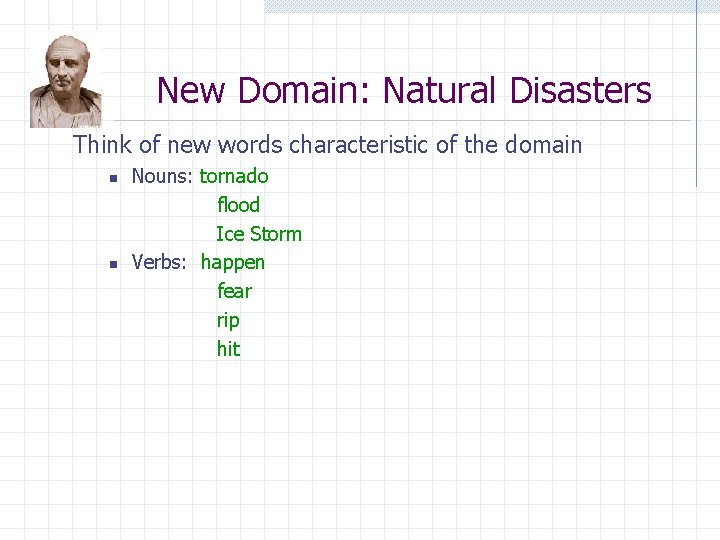

New Domain: Natural Disasters

Think of new words characteristic of the domain:

- Nouns: tornado, flood, ice storm
- Verbs: happen, fear, rip, hit

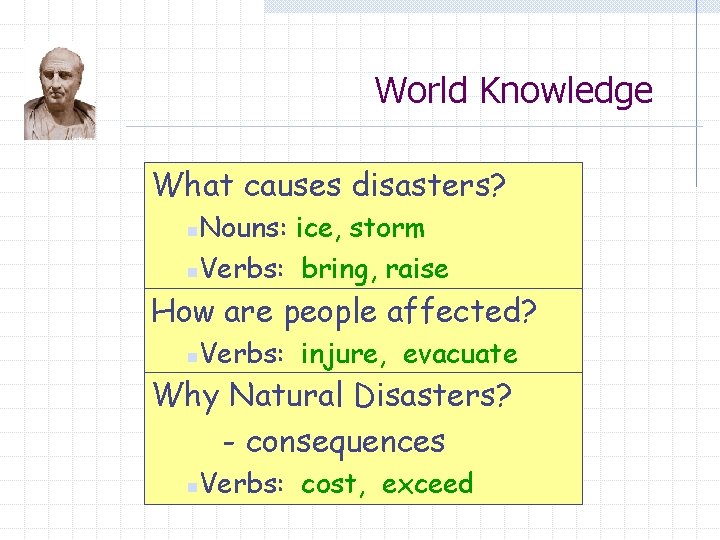

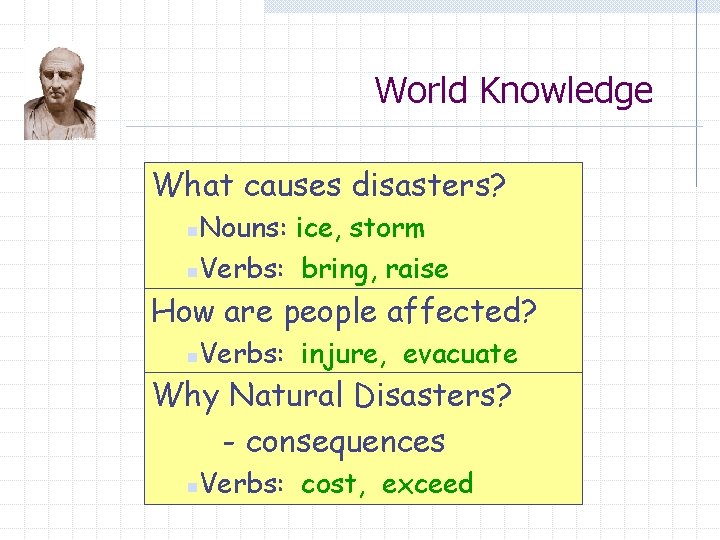

World Knowledge

- What causes disasters? Nouns: ice, storm. Verbs: bring, raise.
- How are people affected? Verbs: injure, evacuate.
- What are the consequences of natural disasters? Verbs: cost, exceed.
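The domain and world-knowledge words can be grouped into the word classes that the patterns refer to ($DISASTER_WORD, $HAPPEN_WORD, $CAUSE_WORD, $DISASTER_CAUSE). A minimal sketch follows, assuming lemmatized input. Which slide word goes into which class is an assumption. "snow" and "cause" are added only so that the combiner example "Flood caused by ice and snow" would be covered. `DOMAIN_LEXICON`, `EFFECT_ON_PEOPLE`, `CONSEQUENCE_WORD` and `classes_of` are hypothetical.

```python
from typing import Dict, Set

# Hypothetical lexicon; class assignments are assumptions, not CICERO's files.
DOMAIN_LEXICON: Dict[str, Set[str]] = {
    # New domain words
    "DISASTER_WORD": {"tornado", "flood", "ice storm"},
    "HAPPEN_WORD": {"happen", "fear", "rip", "hit"},
    # World knowledge
    "DISASTER_CAUSE": {"ice", "storm", "snow"},  # "snow" assumed, from the combiner example
    "CAUSE_WORD": {"bring", "raise", "cause"},   # "cause" assumed, for "caused by"
    "EFFECT_ON_PEOPLE": {"injure", "evacuate"},
    "CONSEQUENCE_WORD": {"cost", "exceed"},
}

def classes_of(lemma: str) -> Set[str]:
    """Return every word class the lemma belongs to (possibly several, or none)."""
    return {cls for cls, words in DOMAIN_LEXICON.items() if lemma in words}
```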

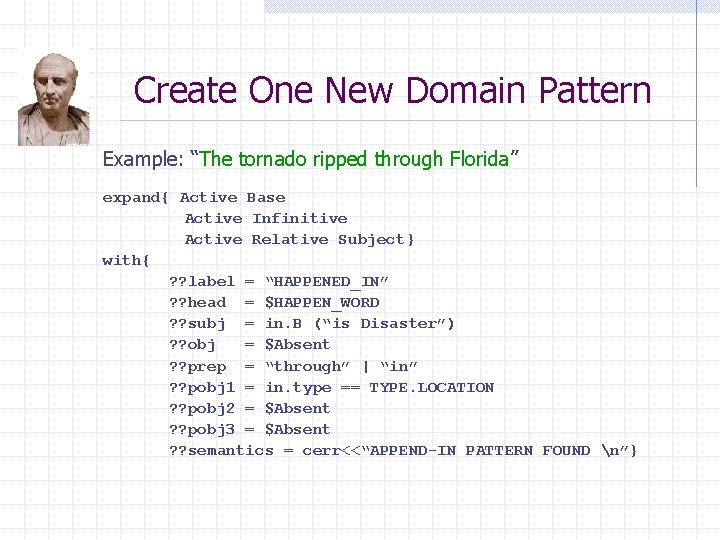

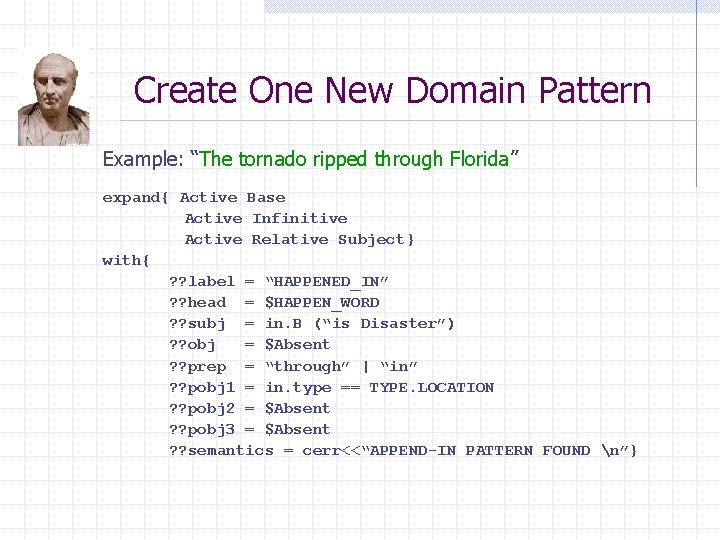

Create One New Domain Pattern

Example: “The tornado ripped through Florida”

    expand {
        Active Base
        Active Infinitive
        Active Relative Subject
    }
    with {
        label     = "HAPPENED_IN"
        head      = $HAPPEN_WORD
        subj      = in.B("isDisaster")
        obj       = $Absent
        prep      = "through" | "in"
        pobj1     = in.type == TYPE.LOCATION
        pobj2     = $Absent
        pobj3     = $Absent
        semantics = cerr << "APPEND-IN PATTERN FOUND\n"
    }
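The slot constraints of the HAPPENED_IN pattern can be read as a checklist over one clause. This sketch assumes the clause has already been parsed and normalized. In CICERO, the `expand` block makes the compiler generate the active, infinitive and relative-clause variants, so the checks run only once here. `Phrase`, `Clause`, `match_happened_in` and `HAPPEN_WORDS` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

HAPPEN_WORDS = {"happen", "fear", "rip", "hit"}  # assumed contents of $HAPPEN_WORD

@dataclass
class Phrase:
    text: str
    ne_type: str = "OTHER"        # mirrors in.type, e.g. "LOCATION"
    is_disaster: bool = False     # mirrors the B("isDisaster") flag

@dataclass
class Clause:
    head: str                     # verb lemma
    subj: Optional[Phrase] = None
    obj: Optional[Phrase] = None
    prep: Optional[str] = None
    pobjs: List[Phrase] = field(default_factory=list)

def match_happened_in(c: Clause) -> Optional[dict]:
    """Check each slot of the pattern; return a filled template or None."""
    if c.head not in HAPPEN_WORDS:                     # head = $HAPPEN_WORD
        return None
    if c.subj is None or not c.subj.is_disaster:       # subj = in.B("isDisaster")
        return None
    if c.obj is not None:                              # obj = $Absent
        return None
    if c.prep not in ("through", "in"):                # prep = "through" | "in"
        return None
    # pobj1 must be a location; pobj2 and pobj3 must be absent.
    if len(c.pobjs) != 1 or c.pobjs[0].ne_type != "LOCATION":
        return None
    return {"label": "HAPPENED_IN", "disaster": c.subj.text, "location": c.pobjs[0].text}
```

For "The tornado ripped through Florida", the clause (head "rip", disaster subject "The tornado", prep "through", location "Florida") fills the template. The same clause with "across" does not.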

![A New Combiner Pattern Example: “Flood caused by ice and snow” COMPLEX_NG[10] ==> DISASTER_NG;](https://slidetodoc.com/presentation_image/05756060c2bb2c066e84962a3c2d9f87/image-14.jpg)

A New Combiner Pattern

Example: “Flood caused by ice and snow”

    COMPLEX_NG[10] ==> DISASTER_NG;
        out.cat += #NG;
        out.B["isDisaster"] = true;
    ;

    DISASTER_NG ==> #NG[$DISASTER_WORD]:1
        { #VG[$CAUSE_WORD, in.tense == TENSE_PAST] "by" #NG[$DISASTER_CAUSE]
            { ", " #NG[$DISASTER_CAUSE] }?
            { ", "? "and" #NG[$DISASTER_CAUSE] }?
        }?
    ;
        out.item = in(1).item;
    ;
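Below is a hand-coded equivalent of the DISASTER_NG rule that makes the optional groups and their backtracking explicit. It is a sketch only. It assumes input already chunked into noun groups, verb groups and literal tokens, and it assumes the word-class contents shown. `match_disaster_ng` and its result keys are hypothetical. Collecting `causes` is an illustration; the rule itself only copies the head's item and sets the isDisaster flag.

```python
from typing import List, Optional, Tuple

# Assumed word-class contents (the slides name the classes, not their full contents).
DISASTER_WORDS = {"flood", "tornado", "ice storm"}
CAUSE_WORDS = {"cause", "bring", "raise"}
DISASTER_CAUSES = {"ice", "storm", "snow"}

# A chunk is (category, lemma, is_past): "NG"/"VG" phrases, or "W" for literal tokens.
Chunk = Tuple[str, str, bool]

def match_disaster_ng(chunks: List[Chunk]) -> Optional[dict]:
    """Hand-coded equivalent of the DISASTER_NG combiner rule."""
    pos = 0

    def take(cat: str, lemmas=None, past=None) -> Optional[str]:
        # Consume one chunk if it has the wanted category, lemma and tense.
        nonlocal pos
        if pos < len(chunks):
            c, lemma, is_past = chunks[pos]
            if c == cat and (lemmas is None or lemma in lemmas) and (past is None or is_past == past):
                pos += 1
                return lemma
        return None

    head = take("NG", DISASTER_WORDS)  # #NG[$DISASTER_WORD]:1
    if head is None:
        return None
    causes: List[str] = []
    mark = pos
    # { #VG[$CAUSE_WORD, past] "by" #NG[$DISASTER_CAUSE] ... }?
    if take("VG", CAUSE_WORDS, past=True) and take("W", {"by"}) and (first := take("NG", DISASTER_CAUSES)):
        causes.append(first)
        mark2 = pos
        # { ", " #NG[$DISASTER_CAUSE] }?
        if take("W", {","}) and (nxt := take("NG", DISASTER_CAUSES)):
            causes.append(nxt)
        else:
            pos = mark2
        mark3 = pos
        # { ", "? "and" #NG[$DISASTER_CAUSE] }?
        take("W", {","})
        if take("W", {"and"}) and (last := take("NG", DISASTER_CAUSES)):
            causes.append(last)
        else:
            pos = mark3
    else:
        pos = mark  # optional group absent: fall back to the bare disaster NG
    # out.item = in(1).item; out.B["isDisaster"] = true
    return {"item": head, "is_disaster": True, "causes": causes, "consumed": pos}
```

When the cause clause is absent, the rule still matches the bare disaster noun group, just as the trailing `?` allows.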

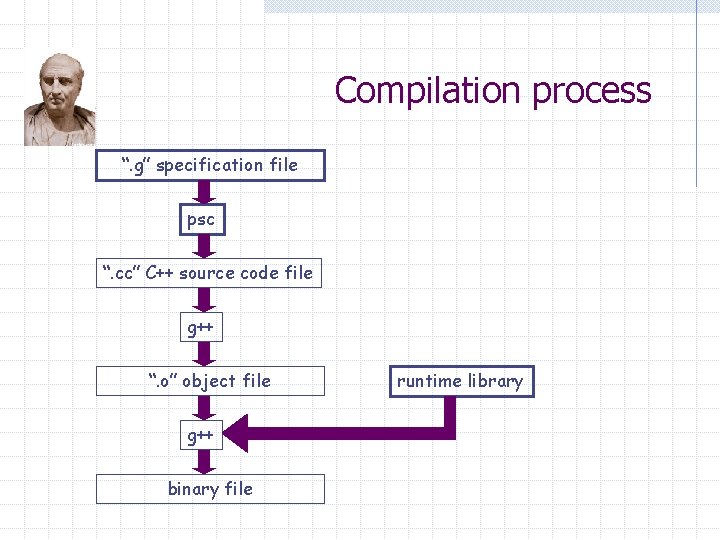

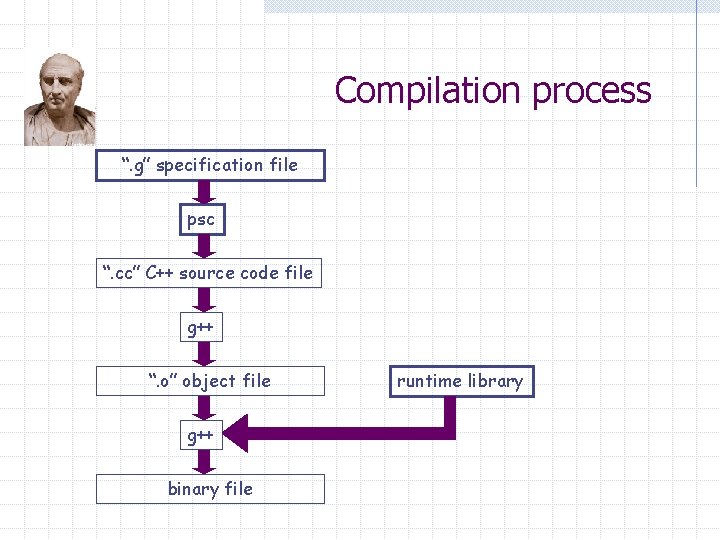

Compilation process: a “.g” specification file is translated by psc into a “.cc” C++ source code file; g++ compiles it into a “.o” object file; g++ then links the object file with the runtime library into a binary file.

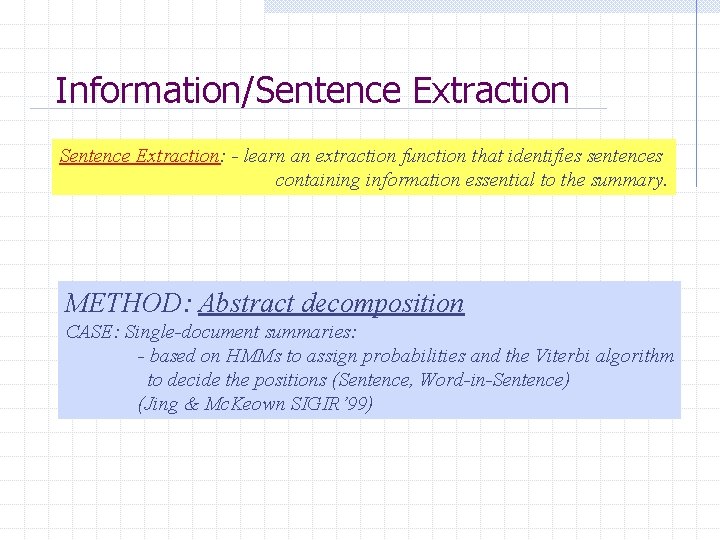

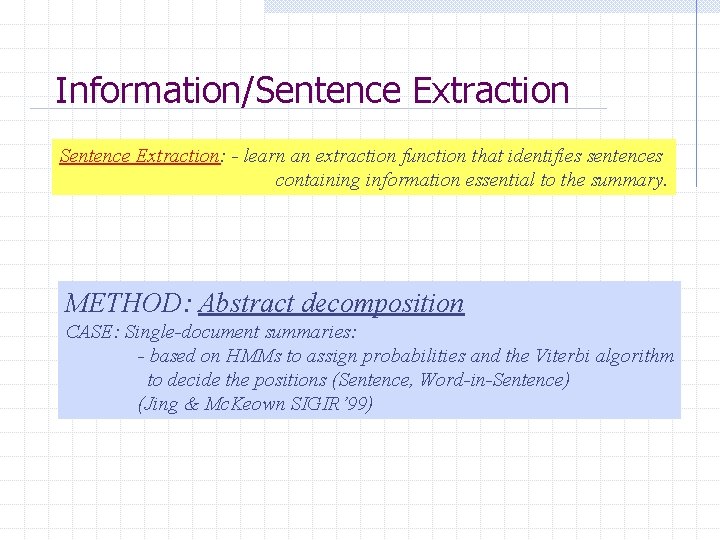

Information/Sentence Extraction

- Goal: learn an extraction function that identifies the sentences containing information essential to the summary.
- Method: abstract decomposition.
- Single-document case: HMMs assign probabilities and the Viterbi algorithm decides the document positions (sentence, word-in-sentence) from which the abstract's words were taken (Jing & McKeown, SIGIR ’99).
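Here is a simplified sketch of HMM-based decomposition in the spirit of Jing & McKeown. Each abstract word's hidden state is a (sentence, word) position where that word occurs in the document. Transitions favor moving to the next word of the same sentence, and Viterbi picks the most likely sequence of positions. The heuristic transition values, the `near` window and all function names are hypothetical and illustrative, not the published parameters.

```python
import math
from typing import Dict, List, Tuple

Pos = Tuple[int, int]  # (sentence index, word index) in the source document

def transition(a: Pos, b: Pos, near: int = 2) -> float:
    """Hypothetical heuristic transition probabilities (values are illustrative)."""
    (s1, w1), (s2, w2) = a, b
    if s1 == s2 and w2 == w1 + 1:
        return 1.0   # next word in the same sentence
    if s1 == s2 and w2 > w1:
        return 0.9   # later in the same sentence
    if s1 == s2:
        return 0.8   # earlier in the same sentence
    if abs(s2 - s1) <= near:
        return 0.6   # a nearby sentence
    return 0.5       # a distant sentence

def index_document(doc: List[List[str]]) -> Dict[str, List[Pos]]:
    index: Dict[str, List[Pos]] = {}
    for s, sent in enumerate(doc):
        for w, word in enumerate(sent):
            index.setdefault(word.lower(), []).append((s, w))
    return index

def decompose(summary_words: List[str], doc: List[List[str]]) -> List[Pos]:
    """Viterbi search for the most likely document position of each summary word.
    Assumes every summary word occurs somewhere in the document."""
    index = index_document(doc)
    states = [index[w.lower()] for w in summary_words]
    # best[k][p] = (log-prob of best path ending at position p for word k, backpointer)
    best = [{p: (0.0, None) for p in states[0]}]  # uniform start
    for k in range(1, len(states)):
        layer = {}
        for p in states[k]:
            score, prev = max(
                (best[k - 1][q][0] + math.log(transition(q, p)), q) for q in states[k - 1]
            )
            layer[p] = (score, prev)
        best.append(layer)
    # Backtrace from the best final position.
    p = max(best[-1], key=lambda q: best[-1][q][0])
    path = [p]
    for k in range(len(states) - 1, 0, -1):
        p = best[k][p][1]
        path.append(p)
    return path[::-1]
```

In this example, "the" and "florida" each occur in both sentences. Viterbi still attributes the whole phrase "the storm hit florida" to one contiguous span of the second sentence.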

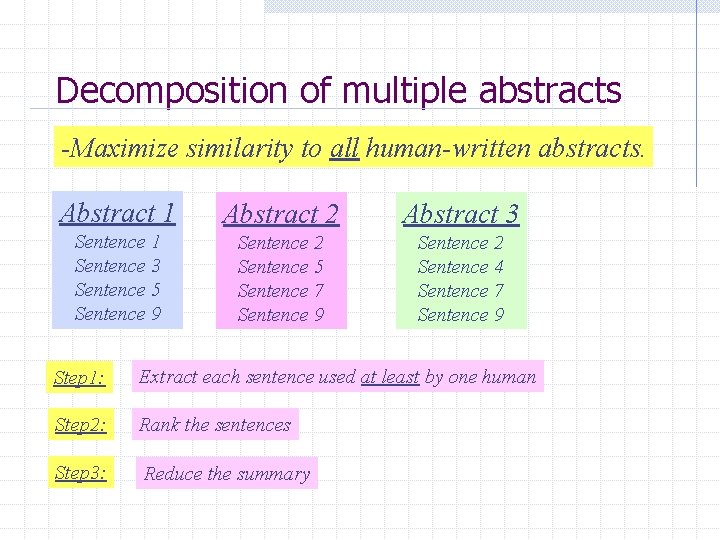

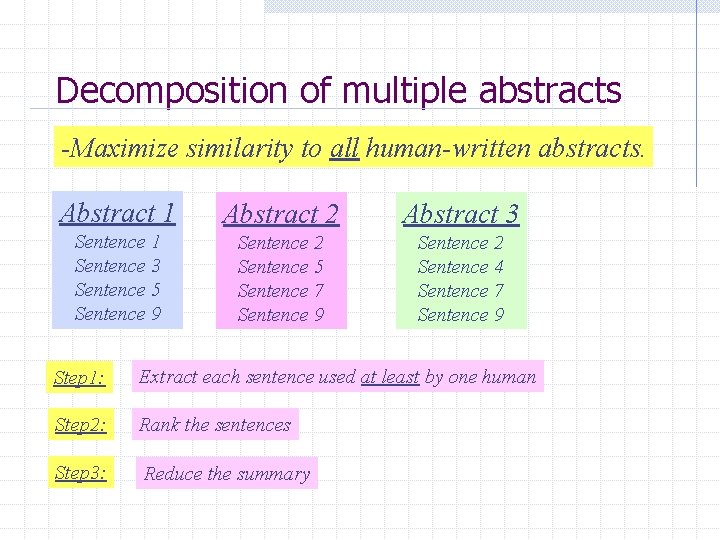

Decomposition of multiple abstracts: maximize similarity to all human-written abstracts.

Document sentences used by each abstract:

| Abstract 1 | Abstract 2 | Abstract 3 |
|---|---|---|
| Sentence 1 | Sentence 2 | Sentence 2 |
| Sentence 3 | Sentence 5 | Sentence 4 |
| Sentence 5 | Sentence 7 | Sentence 7 |
| Sentence 9 | Sentence 9 | Sentence 9 |

- Step 1: Extract each sentence used by at least one human.
- Step 2: Rank the sentences.
- Step 3: Reduce the summary.
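The three steps can be sketched over the example above. The slide does not say how sentences are ranked. This sketch assumes they are ranked by how many abstracts use them, with ties broken by document order. `rank_and_reduce` and `max_sentences` are hypothetical names.

```python
from collections import Counter
from typing import Dict, List, Set

def rank_and_reduce(abstract_sources: Dict[str, Set[int]], max_sentences: int) -> List[int]:
    # Step 1: every sentence used by at least one human abstract, with its vote count.
    votes = Counter(s for used in abstract_sources.values() for s in used)
    # Step 2: rank by number of abstracts using the sentence (assumed criterion),
    # breaking ties by position in the document.
    ranked = sorted(votes, key=lambda s: (-votes[s], s))
    # Step 3: reduce the summary to the length limit.
    return ranked[:max_sentences]
```

On the table's data, sentence 9 (used by all three abstracts) comes first, followed by sentences 2, 5 and 7 (two abstracts each).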

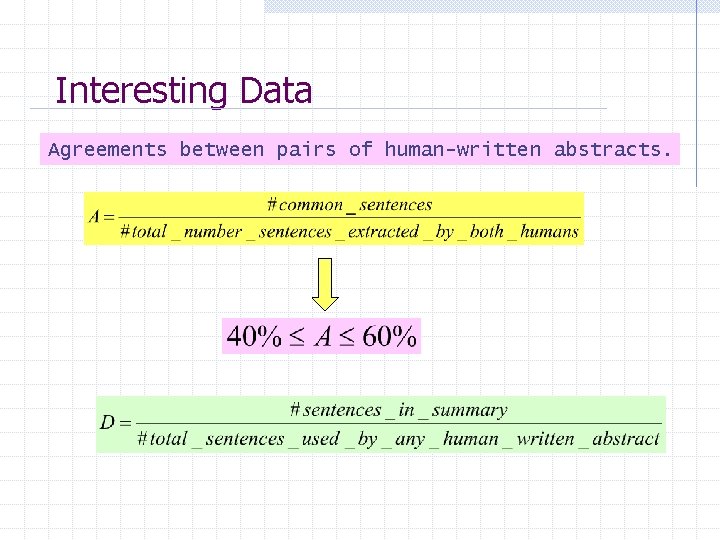

Interesting data: agreement between pairs of human-written abstracts.

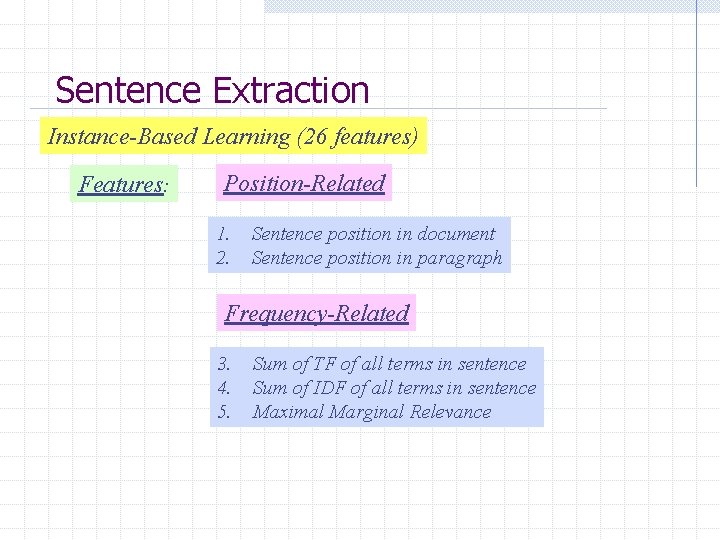

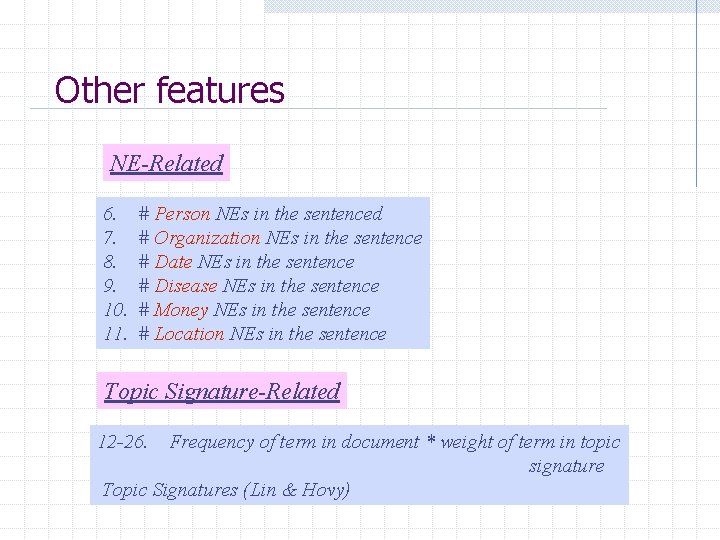

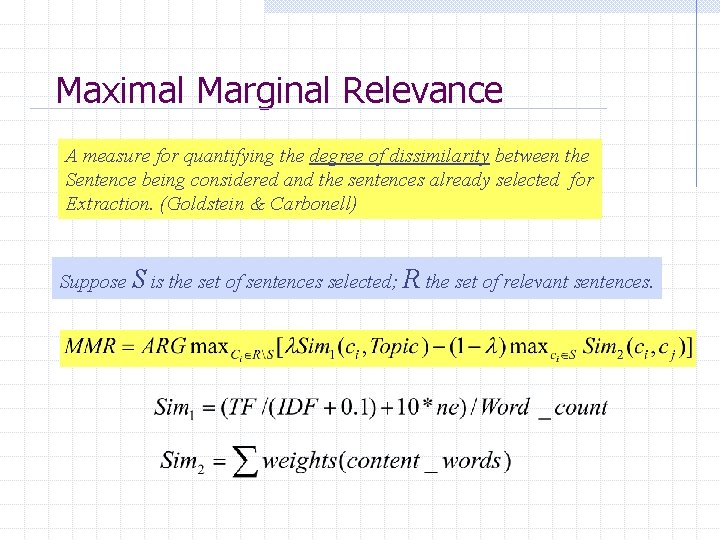

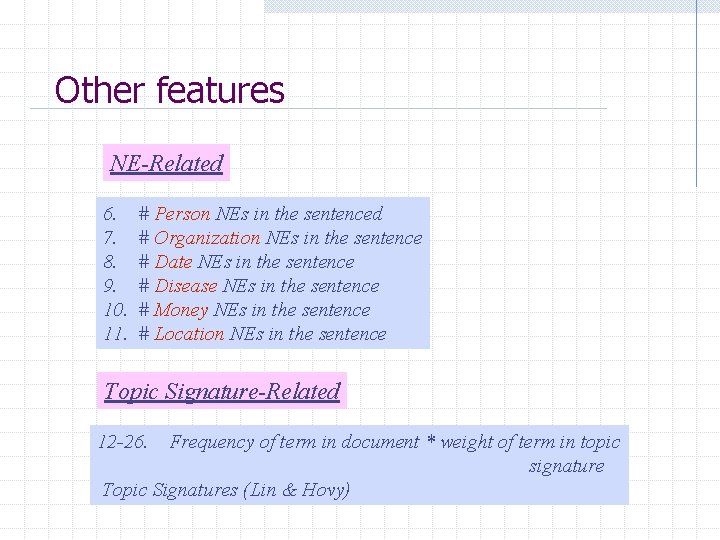

Sentence Extraction: Instance-Based Learning (26 features)

Position-related features:

1. Sentence position in document
2. Sentence position in paragraph

Frequency-related features:

3. Sum of TF of all terms in sentence
4. Sum of IDF of all terms in sentence
5. Maximal Marginal Relevance
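Features 1 to 4 can be computed in one pass over a tokenized document. This is a minimal sketch under stated assumptions: positions are 0-based, TF is counted over the whole document, and IDF is computed over the document's own sentences rather than a background corpus. `position_and_frequency_features` is a hypothetical name.

```python
import math
from collections import Counter
from typing import List

def position_and_frequency_features(doc: List[List[List[str]]]) -> List[list]:
    """doc is a list of paragraphs, each a list of tokenized sentences.
    Returns one vector per sentence: [pos_in_doc, pos_in_par, sum_tf, sum_idf]."""
    sentences = [s for par in doc for s in par]
    n = len(sentences)
    tf = Counter(w for s in sentences for w in s)       # term frequency in the document
    df = Counter(w for s in sentences for w in set(s))  # sentences containing the term
    idf = {w: math.log(n / df[w]) for w in df}          # assumed: sentence-level IDF
    feats = []
    k = 0
    for par in doc:
        for j, sent in enumerate(par):
            feats.append([k, j, sum(tf[w] for w in sent), sum(idf[w] for w in sent)])
            k += 1
    return feats
```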

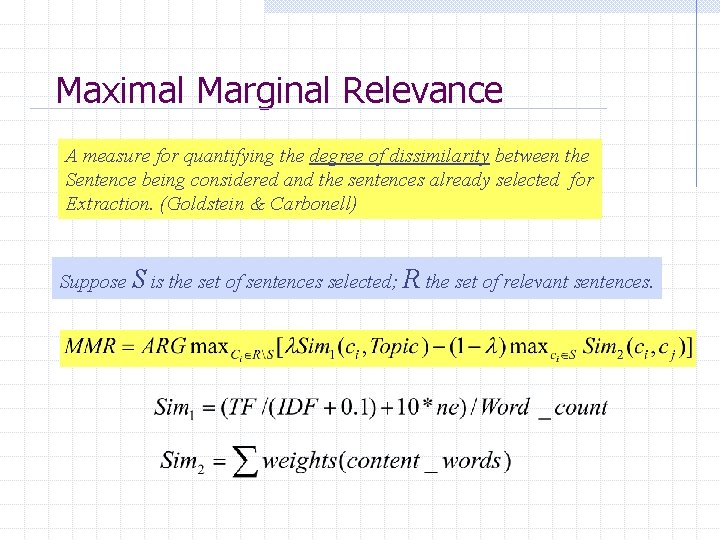

Maximal Marginal Relevance: a measure of the degree of dissimilarity between the sentence being considered and the sentences already selected for extraction (Goldstein & Carbonell). Let S be the set of sentences already selected and R the set of relevant sentences.
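The formula from this slide did not survive. The standard MMR criterion picks, from R minus S, the sentence that maximizes λ·Sim(s, Q) − (1 − λ)·max over t in S of Sim(s, t). Here Q is the query or topic and λ trades relevance against redundancy. The greedy sketch below assumes bag-of-words cosine similarity and λ = 0.7. `mmr_select` and `cosine` are hypothetical names, and using the topic words as Q is an assumption.

```python
import math
from collections import Counter
from typing import List

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def mmr_select(sentences: List[List[str]], query: List[str], k: int, lam: float = 0.7) -> List[int]:
    """Greedy MMR: repeatedly pick the candidate in R minus S that maximizes
    lam * sim(s, query) - (1 - lam) * max over selected t of sim(s, t)."""
    vecs = [Counter(s) for s in sentences]
    q = Counter(query)
    selected: List[int] = []               # S
    remaining = list(range(len(sentences)))  # R minus S
    while remaining and len(selected) < k:
        def score(i: int) -> float:
            redundancy = max((cosine(vecs[i], vecs[j]) for j in selected), default=0.0)
            return lam * cosine(vecs[i], q) - (1 - lam) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected
```

In the test case, the second sentence is nearly a copy of the first. With λ = 0.7 it loses to a less relevant but novel sentence. With λ = 1.0 (no redundancy penalty) it would be chosen.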

Other features

NE-related features:

6. Number of Person NEs in the sentence
7. Number of Organization NEs in the sentence
8. Number of Date NEs in the sentence
9. Number of Disease NEs in the sentence
10. Number of Money NEs in the sentence
11. Number of Location NEs in the sentence

Topic-signature-related features (Topic Signatures: Lin & Hovy):

- Features 12-26: frequency of the term in the document × weight of the term in the topic signature
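Below is a sketch of features 6 to 26 together with an instance-based (k-nearest-neighbor) classifier over the resulting vectors. It makes several assumptions. Each sentence's recognized entities arrive as a list of NE types. A topic-signature feature takes its value only when the term occurs in the sentence. The 15 features numbered 12 to 26 correspond to the top 15 signature terms. The learner uses Euclidean distance with a majority vote. `ne_features`, `signature_features` and `knn_predict` are hypothetical names.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

NE_TYPES = ["PERSON", "ORGANIZATION", "DATE", "DISEASE", "MONEY", "LOCATION"]  # features 6-11

def ne_features(entity_types: List[str]) -> List[int]:
    """Count the sentence's named entities by type, in the order of features 6-11."""
    counts = Counter(entity_types)
    return [counts[t] for t in NE_TYPES]

def signature_features(sentence: List[str], doc_tf: Counter,
                       signature: List[Tuple[str, float]]) -> List[float]:
    """Features 12-26 (15 signature terms assumed): document frequency of the term
    times its signature weight, if the term occurs in the sentence, else 0."""
    words = set(sentence)
    return [doc_tf[t] * w if t in words else 0.0 for t, w in signature]

def knn_predict(train: List[Tuple[Sequence[float], int]], x: Sequence[float], k: int = 3) -> int:
    """Instance-based learning: majority label among the k nearest stored examples."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]
```

Instance-based learning stores the labeled training sentences as they are and defers all generalization to prediction time. Label 1 marks a sentence that belongs in the extract.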

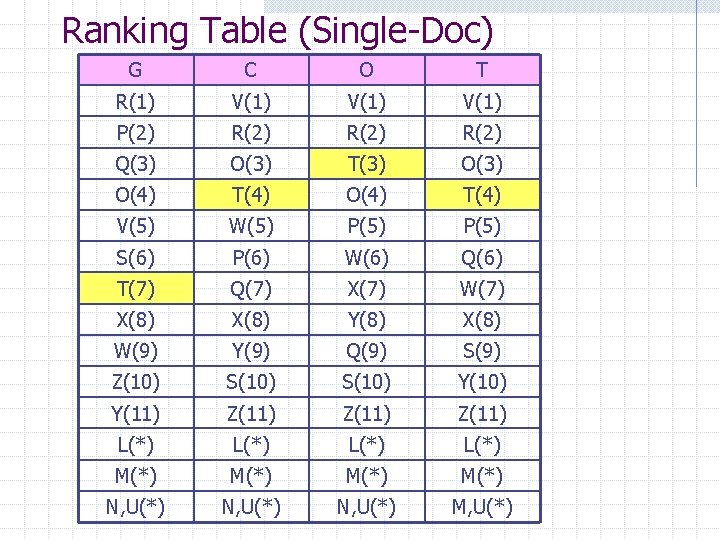

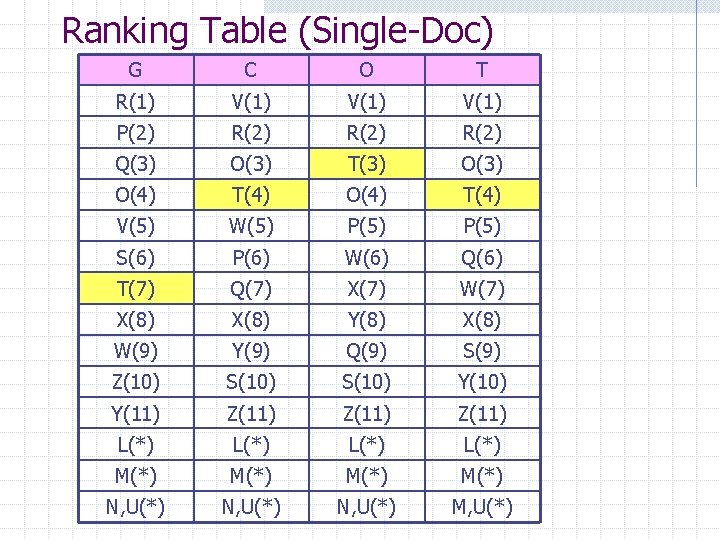

Ranking Table (Single-Doc) G C O T R(1) V(1) P(2) R(2) Q(3) O(3) T(3) O(4) T(4) V(5) W(5) P(5) S(6) P(6) W(6) Q(6) T(7) Q(7) X(7) W(7) X(8) Y(8) X(8) W(9) Y(9) Q(9) S(9) Z(10) S(10) Y(11) Z(11) L(*) M(*) N, U(*) M, U(*)

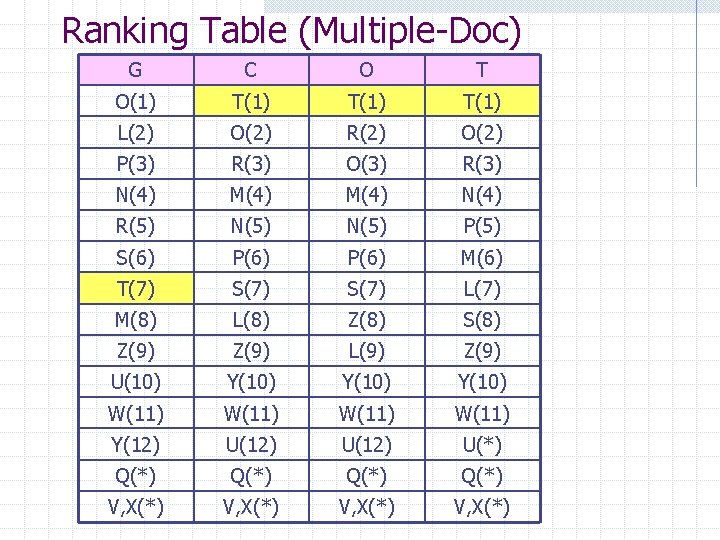

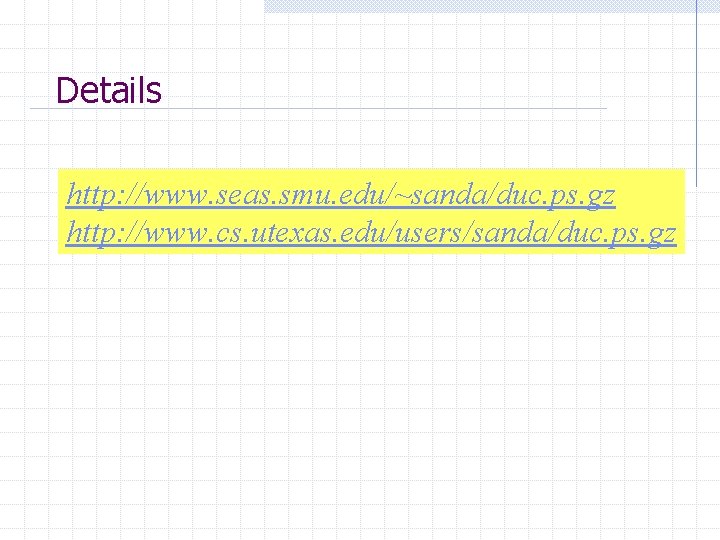

Ranking Table (Multiple-Doc) G C O T O(1) T(1) L(2) O(2) R(2) O(2) P(3) R(3) O(3) R(3) N(4) M(4) N(4) R(5) N(5) P(5) S(6) P(6) M(6) T(7) S(7) L(7) M(8) L(8) Z(8) S(8) Z(9) L(9) Z(9) U(10) Y(10) W(11) Y(12) U(12) U(*) Q(*) V, X(*)

Details: http://www.seas.smu.edu/~sanda/duc.ps.gz and http://www.cs.utexas.edu/users/sanda/duc.ps.gz