Fuzzy Ordinal Support Vector Machine

Presenter: Wen-Feng Hsiao (蕭文峰), 2009/8/31

Outline

- Introduction
- Related Work
- Proposed Method
- Experiments and Results
- Conclusions

Introduction

Ordinal classification tasks:
- Grading (students' performance)
- Rating (credit ratings, customers' ratings of products)
- Ranking (query results)

Properties of ordinal classification:
- Like multi-class classification, the class values in ordinal classification are discrete, but they are ordered.
- Like regression, the class values in ordinal classification are ordered, but they are neither continuous nor equally spaced.

Introduction (cont’d)

SVM has been shown to be a powerful and efficient method for multi-class classification tasks, and it has been extended to regression as Support Vector Regression (SVR). Several researchers have therefore investigated whether SVM can also be applied to ordinal classification tasks.

Introduction (cont’d)

Most existing methods for ordinal classification do not exploit the ordinal nature of the data. In contrast, oSVM, recently proposed by Cardoso and da Costa (2007), does exploit it, and it has been shown to outperform traditional methods in predicting ordinal classes.

Introduction (cont’d)

However, our empirical experiments showed that oSVM suffers from two problems: (1) it cannot handle datasets with noisy data, and (2) it often misclassifies instances near class boundaries. We propose applying fuzzy sets to the ordinal support vector machine in the hope of resolving both problems simultaneously.

Introduction (cont’d)

The challenge, however, is how to devise a reasonable membership function (MF) that assigns membership degrees to instances. We propose two MFs. The experiments show that the proposed MFs are promising, though they still need further verification.

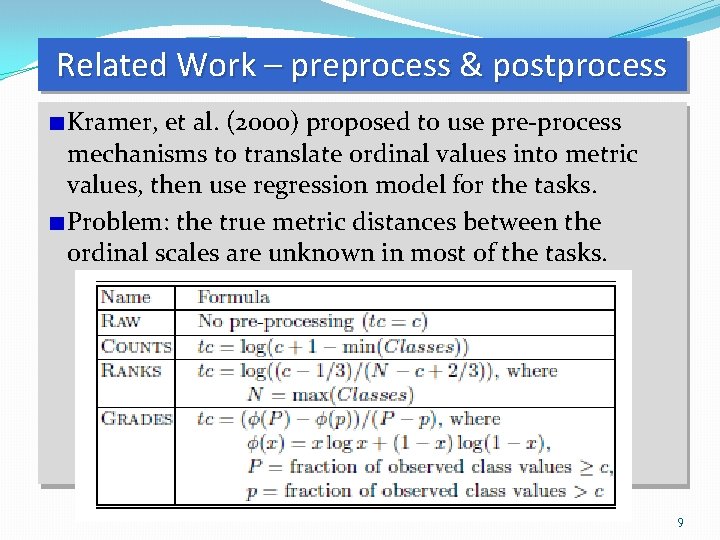

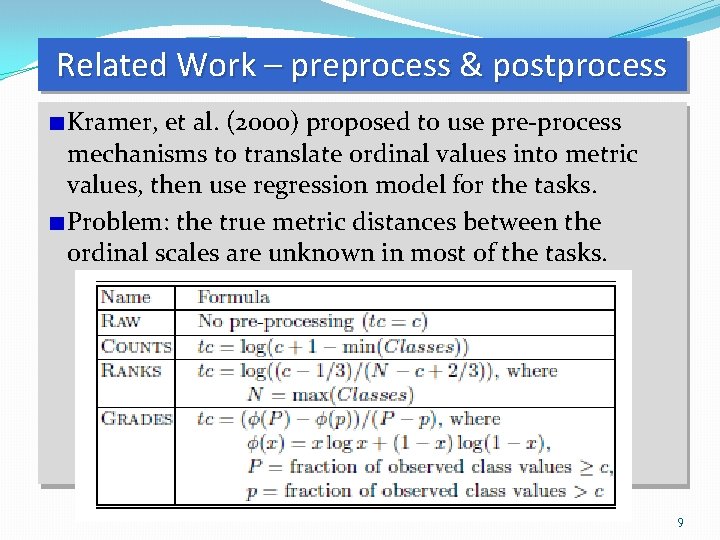

Related Work – preprocess & postprocess

Kramer et al. (2001) proposed a preprocessing step that translates ordinal values into metric values so that a regression model can be used for the task. Problem: in most tasks, the true metric distances between the ordinal levels are unknown.
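A minimal sketch of the pre-process/post-process idea, assuming the ordinal levels 1..K are simply mapped to the integers 1..K (exactly the equal-spacing assumption criticized above) and using ordinary least squares as the regression model. Kramer et al. used regression trees instead; the function names here are hypothetical.

```python
import numpy as np

def with_bias(X):
    """Append a constant column so the linear model has an intercept."""
    X = np.asarray(X, dtype=float)
    return np.hstack([X, np.ones((X.shape[0], 1))])

def fit_ordinal_as_regression(X, y):
    """Pre-process: treat the ordinal labels 1..K as numbers and fit least squares."""
    coef, *_ = np.linalg.lstsq(with_bias(X), np.asarray(y, dtype=float), rcond=None)
    return coef

def predict_ordinal(coef, X, n_classes):
    """Post-process: round the regression output back to the nearest level in 1..K."""
    raw = with_bias(X) @ coef
    return np.clip(np.rint(raw), 1, n_classes).astype(int)

coef = fit_ordinal_as_regression([[1.0], [2.0], [3.0], [4.0]], [1, 2, 3, 4])
print(predict_ordinal(coef, [[2.2], [3.9], [10.0]], n_classes=4))  # [2 4 4]
```

The rounding step is where the unknown distances matter: the integer encoding treats every pair of adjacent levels as equally far apart, whatever their true spacing.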

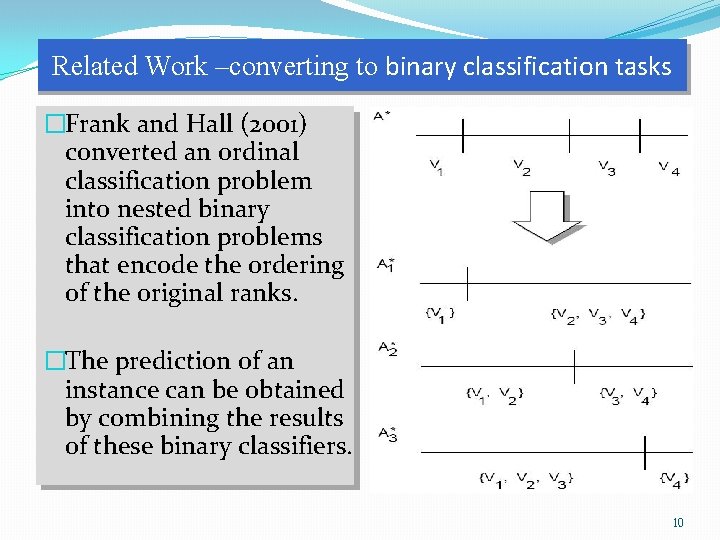

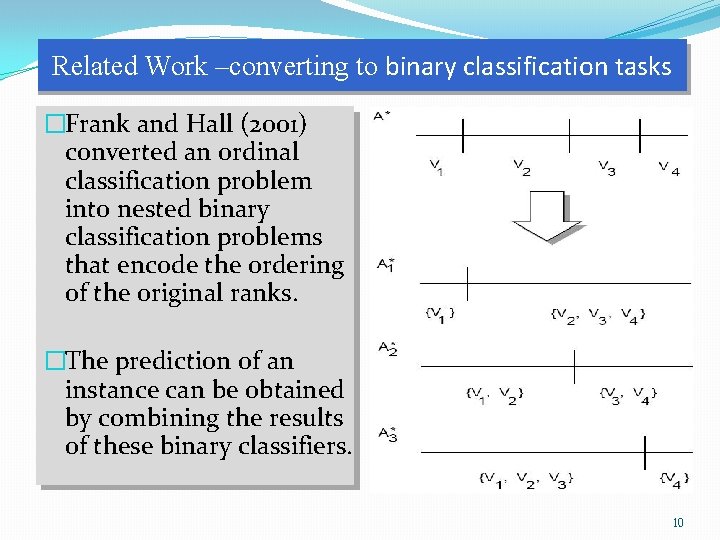

Related Work – converting to binary classification tasks

- Frank and Hall (2001) converted an ordinal classification problem into nested binary classification problems that encode the ordering of the original ranks.
- The prediction for an instance can be obtained by combining the results of these binary classifiers.
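A sketch of the nested encoding, assuming classes are labelled 1..K: binary task k asks whether an instance's class is greater than k, which gives K − 1 binary tasks. The function name is hypothetical.

```python
def nested_binary_targets(y, n_classes):
    """Map ordinal labels 1..K to K-1 binary targets; task k encodes [label > k]."""
    return {k: [int(label > k) for label in y] for k in range(1, n_classes)}

print(nested_binary_targets([1, 2, 3, 3], n_classes=3))
# {1: [0, 1, 1, 1], 2: [0, 0, 1, 1]}
```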

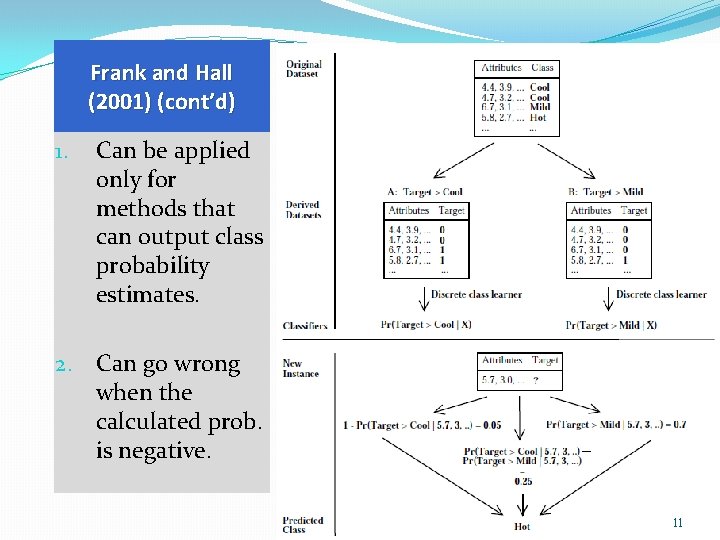

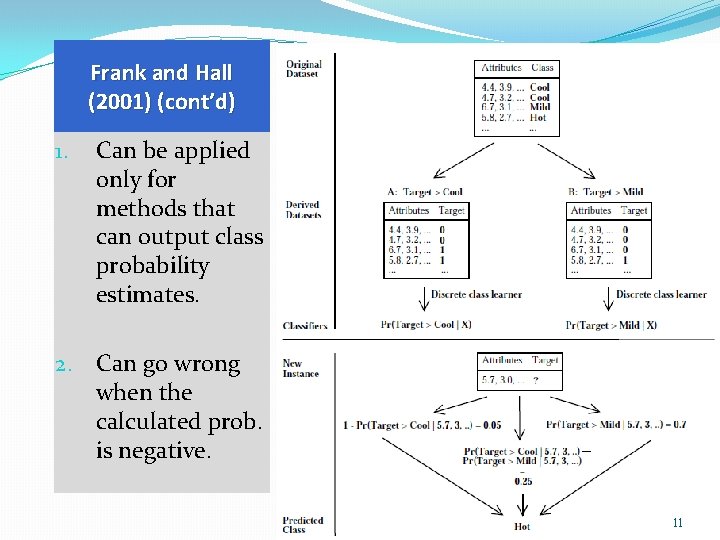

Frank and Hall (2001) (cont’d)

1. It can be applied only to methods that output class probability estimates.
2. It can go wrong when a computed class probability is negative.
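A sketch of the combination step as we understand Frank and Hall's scheme: with p_k an estimate of P(y > k), P(y = 1) = 1 − p_1, P(y = k) = p_{k−1} − p_k for 1 < k < K, and P(y = K) = p_{K−1}. Because the K − 1 binary classifiers are trained independently, nothing forces p_1 ≥ p_2 ≥ ..., so a middle difference can come out negative, as the second call shows. The function names are hypothetical.

```python
def combine_probabilities(p_greater):
    """p_greater[k-1] estimates P(y > k) for k = 1..K-1; returns P(y = k) for k = 1..K."""
    n_classes = len(p_greater) + 1
    probs = []
    for k in range(1, n_classes + 1):
        upper = 1.0 if k == 1 else p_greater[k - 2]          # P(y > k-1), with P(y > 0) = 1
        lower = 0.0 if k == n_classes else p_greater[k - 1]  # P(y > k), with P(y > K) = 0
        probs.append(upper - lower)
    return probs

def predict_class(p_greater):
    """Pick the class (1..K) with the largest combined probability."""
    probs = combine_probabilities(p_greater)
    return 1 + max(range(len(probs)), key=probs.__getitem__)

print(combine_probabilities([0.7, 0.2]))  # consistent estimates: all non-negative
print(combine_probabilities([0.6, 0.7]))  # inconsistent: P(y = 2) is about -0.1
```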

Related Work – Ordinal Support Vector Machine

SVM is a supervised learning method with versions for both classification and regression tasks:
- Support Vector Machine for Classification (SVC); unless otherwise specified, SVM refers to SVC.
- Support Vector Machine for Regression (SVR).

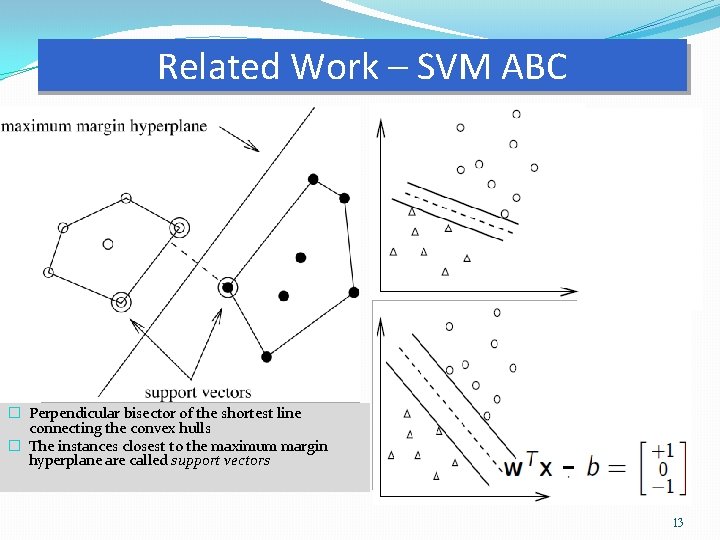

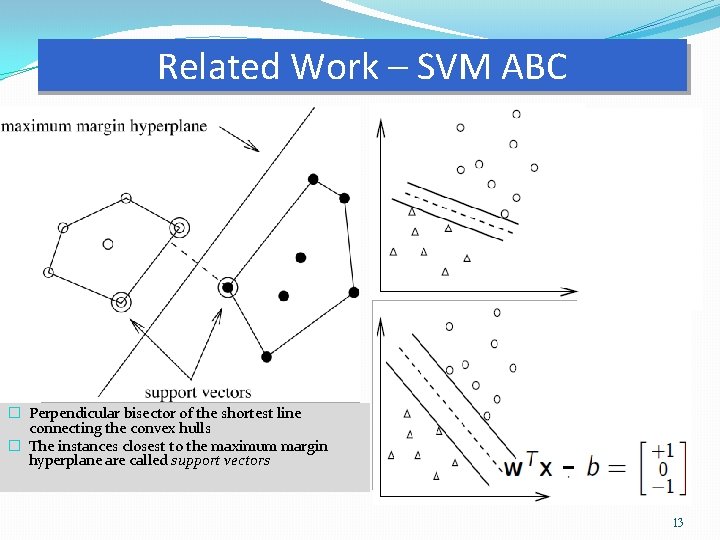

Related Work – SVM ABC

- The maximum margin hyperplane is the perpendicular bisector of the shortest line connecting the convex hulls of the two classes.
- The instances closest to the maximum margin hyperplane are called support vectors.
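A small numeric illustration on a hypothetical toy set: class +1 at (2, 2) and (3, 3), class −1 at (0, 0) and (−1, −1). The closest points of the two convex hulls are (0, 0) and (2, 2), so the maximum margin hyperplane is their perpendicular bisector x1 + x2 = 2, written here with the canonical scaling w = (0.5, 0.5), b = −1 so that support vectors satisfy y(w·x + b) = 1. The sketch only checks that property for a given hyperplane; it does not train an SVM.

```python
import numpy as np

def functional_margins(w, b, X, y):
    """y_i * (w . x_i + b) for every instance."""
    return y * (X @ w + b)

def support_vector_indices(w, b, X, y, tol=1e-9):
    """Indices of instances lying exactly on the margin, y_i (w . x_i + b) = 1."""
    return np.flatnonzero(np.abs(functional_margins(w, b, X, y) - 1.0) <= tol)

def margin_width(w):
    """Distance between the two margin hyperplanes, 2 / ||w||."""
    return 2.0 / np.linalg.norm(w)

X = np.array([[2.0, 2.0], [3.0, 3.0], [0.0, 0.0], [-1.0, -1.0]])
y = np.array([1, 1, -1, -1])
w, b = np.array([0.5, 0.5]), -1.0

print(support_vector_indices(w, b, X, y))  # [0 2]: the points (2, 2) and (0, 0)
print(margin_width(w))                     # 2*sqrt(2), the distance from (0, 0) to (2, 2)
```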

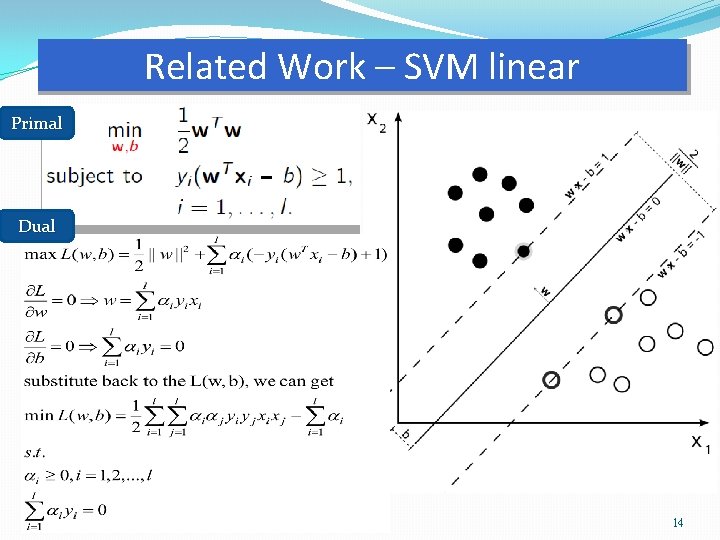

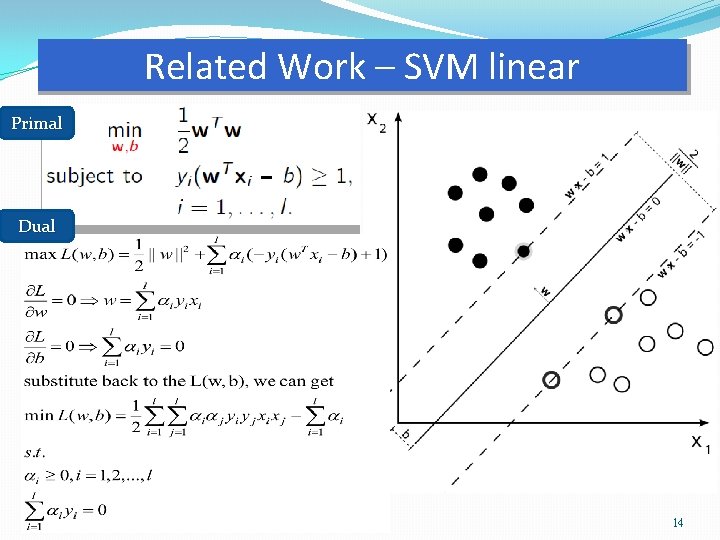

Related Work – SVM linear (primal and dual)
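For reference, the standard textbook form of the linear (hard-margin) SVM for training pairs (x_i, y_i) with y_i ∈ {−1, +1}; the slide's own notation is in its figures and may differ:

```latex
\text{Primal:}\quad \min_{\mathbf{w},\,b}\ \tfrac{1}{2}\lVert\mathbf{w}\rVert^{2}
\quad\text{s.t.}\quad y_i(\mathbf{w}^{\top}\mathbf{x}_i + b) \ge 1,\quad i = 1,\dots,n

\text{Dual:}\quad \max_{\boldsymbol{\alpha}}\ \sum_{i=1}^{n}\alpha_i
 - \tfrac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_i\alpha_j y_i y_j\,\mathbf{x}_i^{\top}\mathbf{x}_j
\quad\text{s.t.}\quad \alpha_i \ge 0,\quad \sum_{i=1}^{n}\alpha_i y_i = 0
```

The solution satisfies w = Σ α_i y_i x_i, and the instances with α_i > 0 are the support vectors. The data enter the dual only through inner products x_i·x_j.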

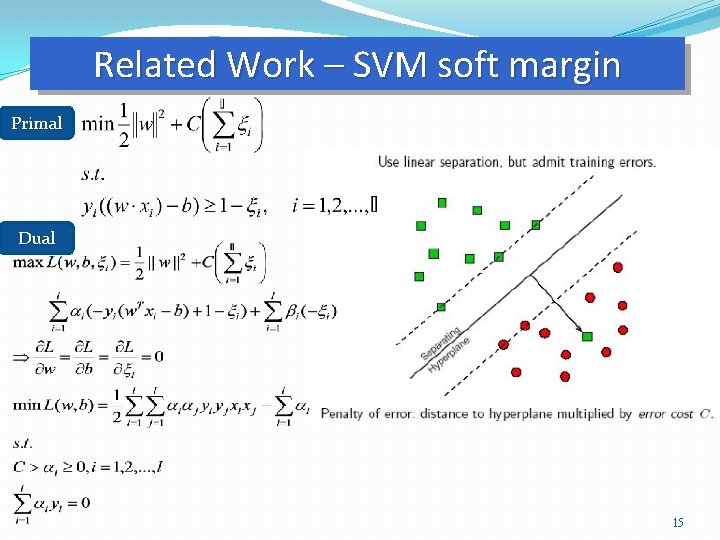

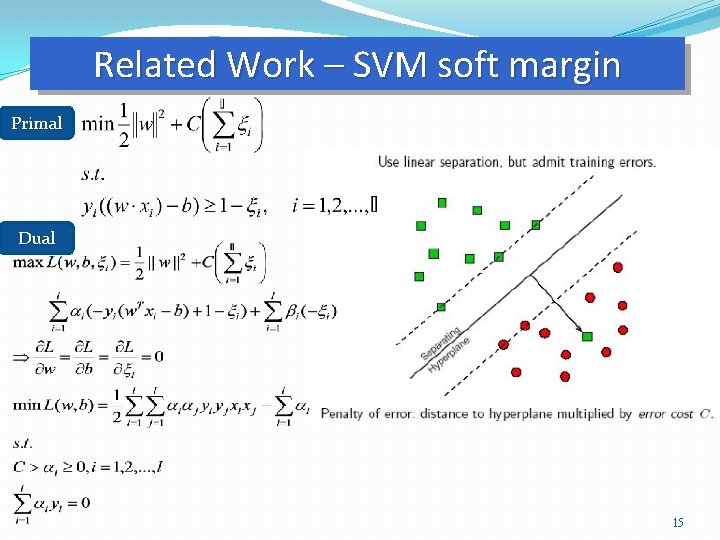

Related Work – SVM soft margin (primal and dual)
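For reference, the standard soft-margin form, in which slack variables ξ_i let some instances violate the margin and C trades margin width against those violations (textbook notation; the slide's figures may write it differently):

```latex
\text{Primal:}\quad \min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \tfrac{1}{2}\lVert\mathbf{w}\rVert^{2} + C\sum_{i=1}^{n}\xi_i
\quad\text{s.t.}\quad y_i(\mathbf{w}^{\top}\mathbf{x}_i + b) \ge 1 - \xi_i,\quad \xi_i \ge 0

\text{Dual:}\quad \max_{\boldsymbol{\alpha}}\ \sum_{i=1}^{n}\alpha_i
 - \tfrac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_i\alpha_j y_i y_j\,\mathbf{x}_i^{\top}\mathbf{x}_j
\quad\text{s.t.}\quad 0 \le \alpha_i \le C,\quad \sum_{i=1}^{n}\alpha_i y_i = 0
```

Compared with the hard-margin dual, the only change is the upper bound C on each α_i.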

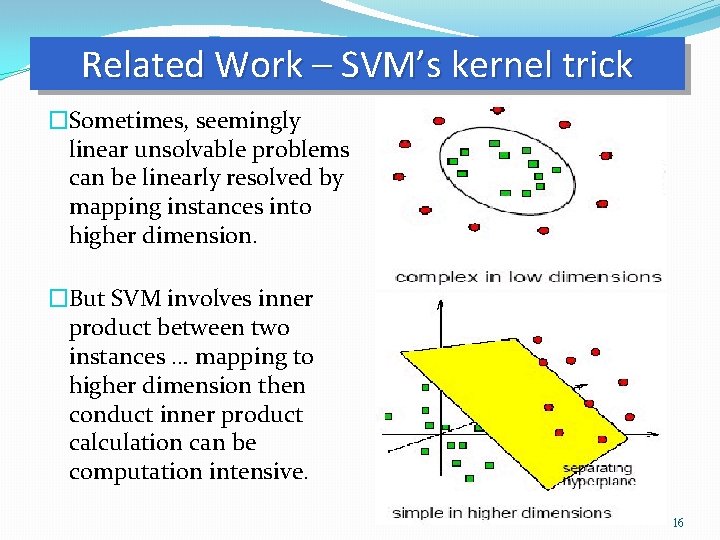

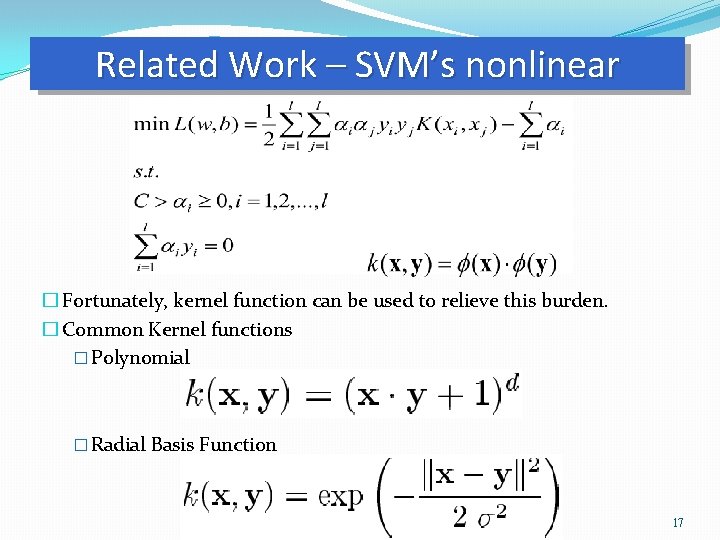

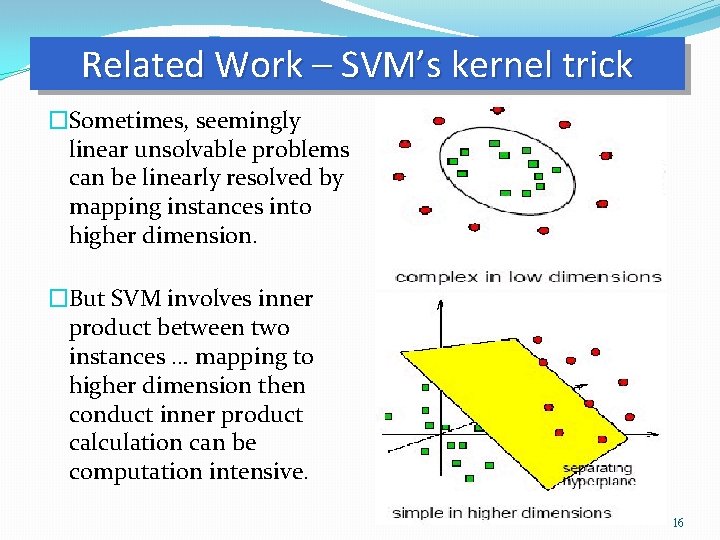

Related Work – SVM’s kernel trick

- Sometimes, problems that seem linearly unsolvable can be solved linearly by mapping the instances into a higher-dimensional space.
- But SVM involves inner products between pairs of instances, so mapping to a higher dimension and then computing the inner products there can be computationally intensive.
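A small check of why the kernel trick works, using the degree-2 polynomial kernel on 2-D inputs as an illustrative choice: the explicit map φ(x) = (x1², √2·x1·x2, x2²) gives φ(x)·φ(z) = (x·z)², so the inner product in the 3-D space can be computed without forming φ at all.

```python
import math

def phi(x):
    """Explicit degree-2 feature map for a 2-D input: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(u, v):
    """Plain inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def poly2_kernel(x, z):
    """Same value computed in the original 2-D space: (x . z)^2."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, 4.0)
print(dot(phi(x), phi(z)))  # about 121, via the 3-D mapping
print(poly2_kernel(x, z))   # 121.0, without ever building phi
```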

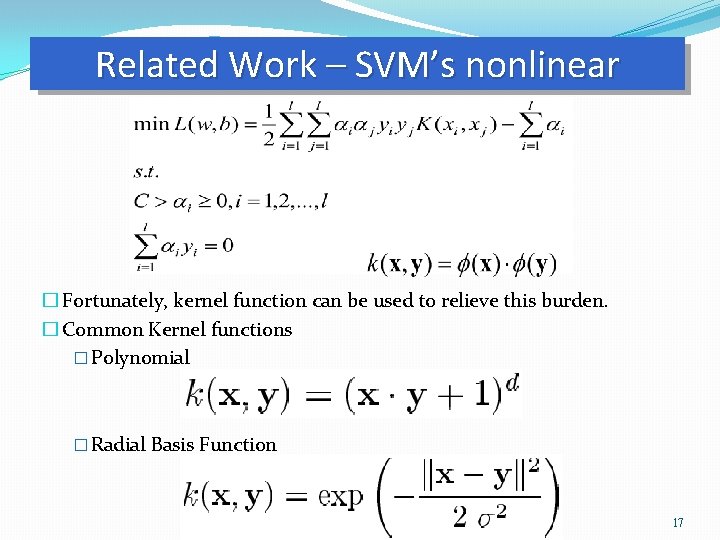

Related Work – SVM’s nonlinear

- Fortunately, a kernel function can be used to relieve this burden.
- Common kernel functions:
  - Polynomial
  - Radial Basis Function
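A sketch of the two kernels as Gram-matrix functions, written in the parameterization libsvm documents: polynomial K(x, z) = (γ·x·z + r)^d and RBF K(x, z) = exp(−γ‖x − z‖²). The default parameter values below are arbitrary choices for illustration, not libsvm's defaults.

```python
import numpy as np

def polynomial_kernel(X, Z, gamma=1.0, coef0=0.0, degree=3):
    """K[i, j] = (gamma * <X[i], Z[j]> + coef0) ** degree."""
    return (gamma * (X @ Z.T) + coef0) ** degree

def rbf_kernel(X, Z, gamma=1.0):
    """K[i, j] = exp(-gamma * ||X[i] - Z[j]||^2)."""
    sq_dist = (np.sum(X ** 2, axis=1)[:, None]
               + np.sum(Z ** 2, axis=1)[None, :]
               - 2.0 * (X @ Z.T))
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))  # clip tiny negative round-off

X = np.array([[1.0, 2.0], [3.0, 4.0]])
print(polynomial_kernel(X, X, degree=2))  # off-diagonal entries equal (1*3 + 2*4)^2 = 121
print(rbf_kernel(X, X, gamma=0.5))        # diagonal is 1; off-diagonal is exp(-0.5 * 8)
```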

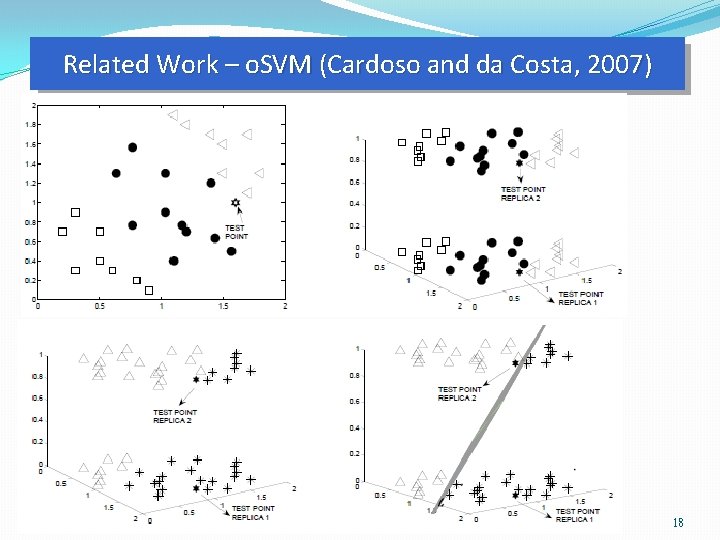

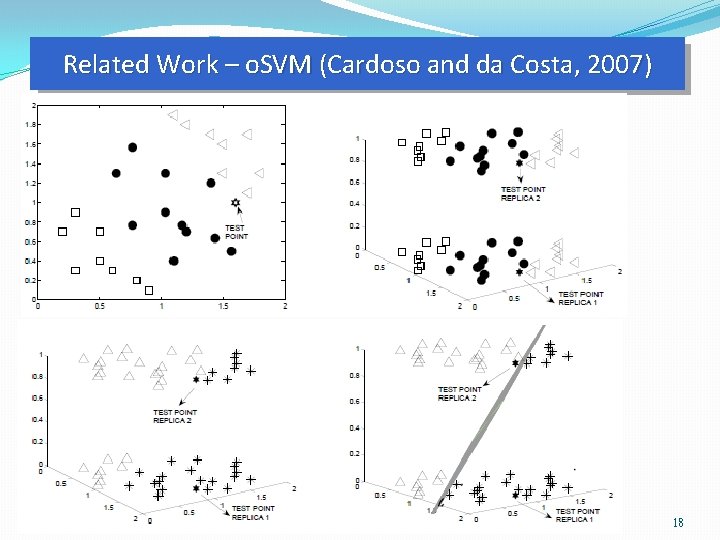

Related Work – oSVM (Cardoso and da Costa, 2007)

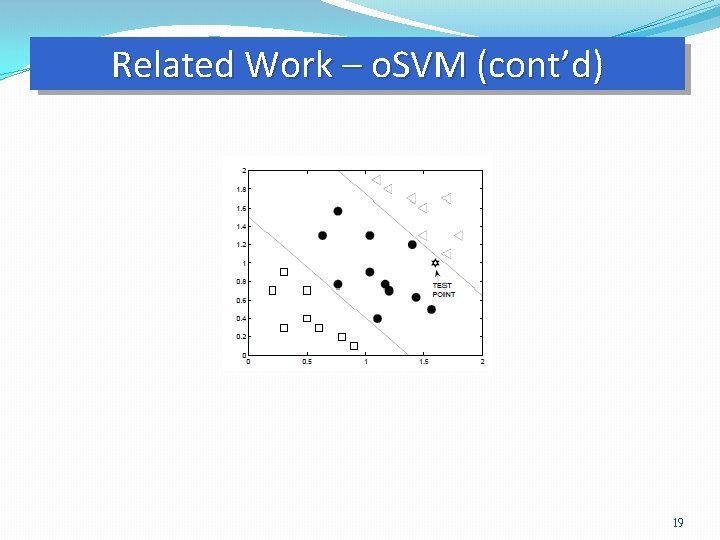

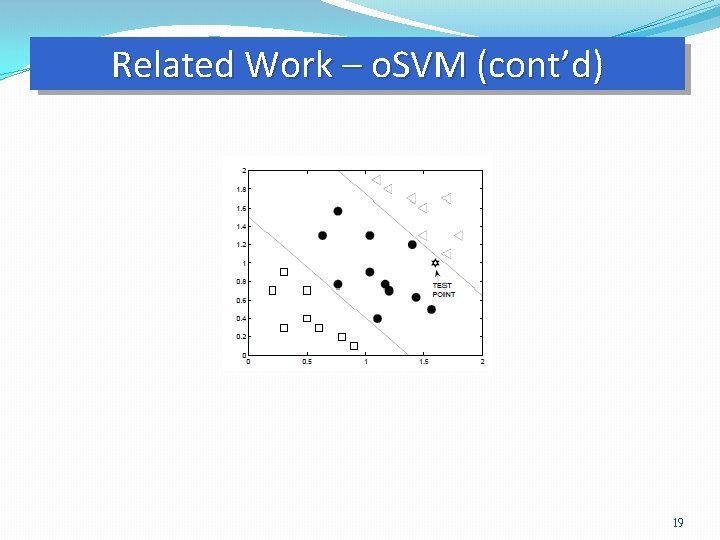

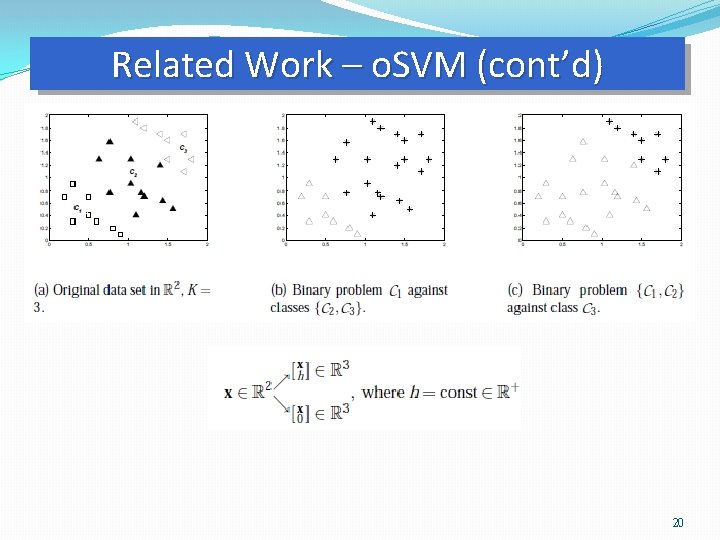

Related Work – oSVM (cont’d)

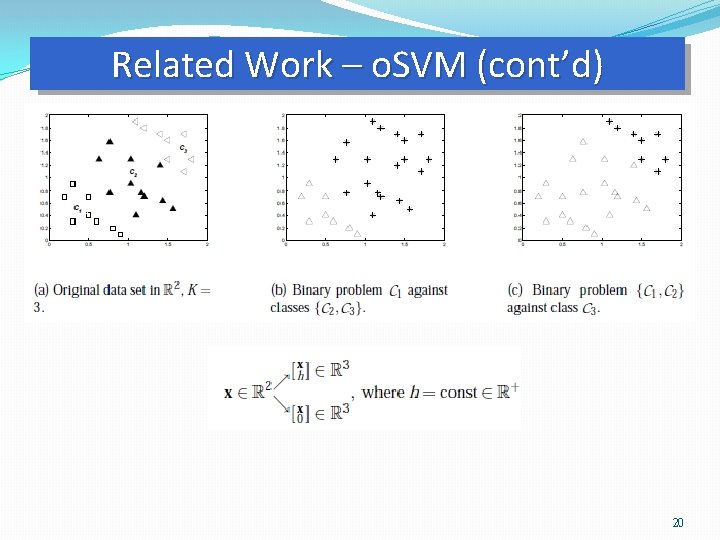

Related Work – oSVM (cont’d)

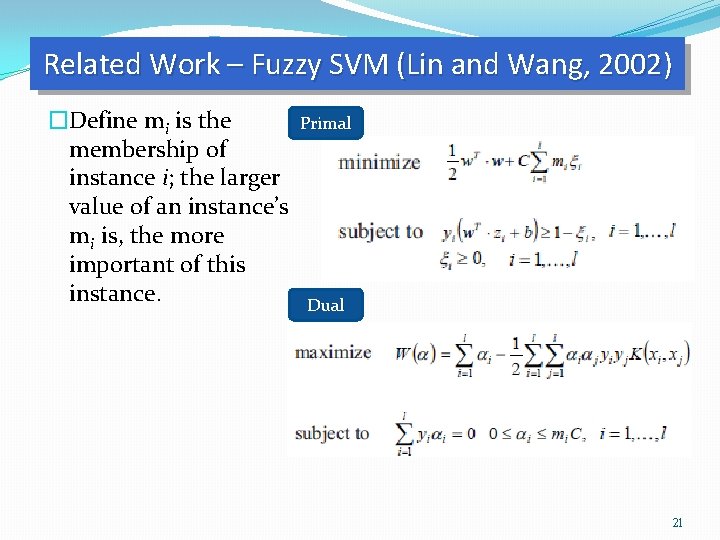

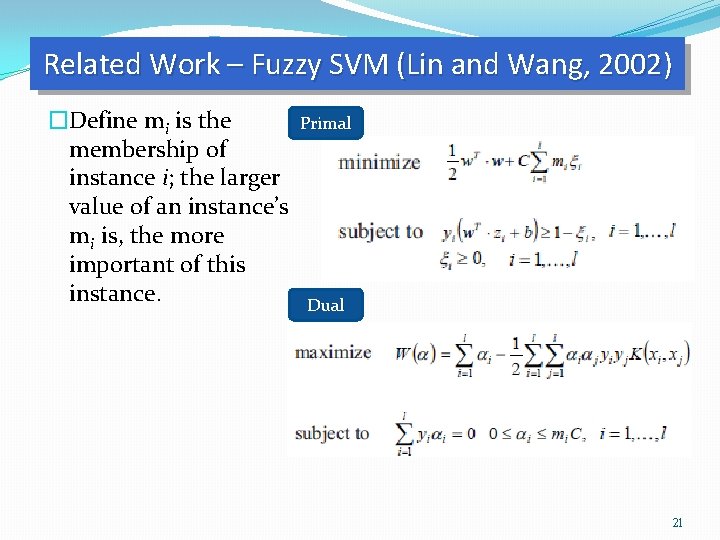

Related Work – Fuzzy SVM (Lin and Wang, 2002)

- Let m_i denote the membership of instance i; the larger m_i is, the more important instance i is.
- Primal and dual formulations
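As we understand Lin and Wang's formulation (written here with the slide's m_i; the slide's figures are authoritative), the membership rescales each instance's slack penalty in the primal and, in the dual, shrinks that instance's upper bound on α_i:

```latex
\text{Primal:}\quad \min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \tfrac{1}{2}\lVert\mathbf{w}\rVert^{2} + C\sum_{i=1}^{n} m_i\,\xi_i
\quad\text{s.t.}\quad y_i(\mathbf{w}^{\top}\phi(\mathbf{x}_i) + b) \ge 1 - \xi_i,\quad \xi_i \ge 0

\text{Dual:}\quad \max_{\boldsymbol{\alpha}}\ \sum_{i=1}^{n}\alpha_i
 - \tfrac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_i\alpha_j y_i y_j\,K(\mathbf{x}_i,\mathbf{x}_j)
\quad\text{s.t.}\quad 0 \le \alpha_i \le m_i C,\quad \sum_{i=1}^{n}\alpha_i y_i = 0
```

With every m_i = 1 this reduces to the ordinary soft-margin SVM; a small m_i, for example for a suspected noisy instance, makes misclassifying that instance cheaper.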

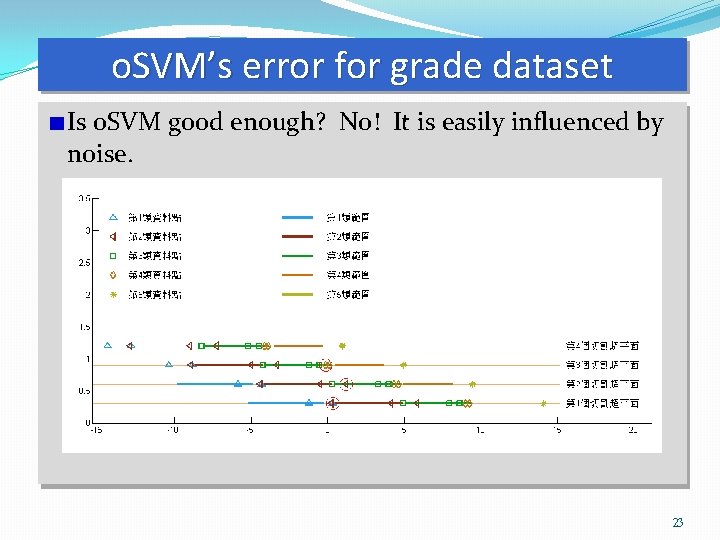

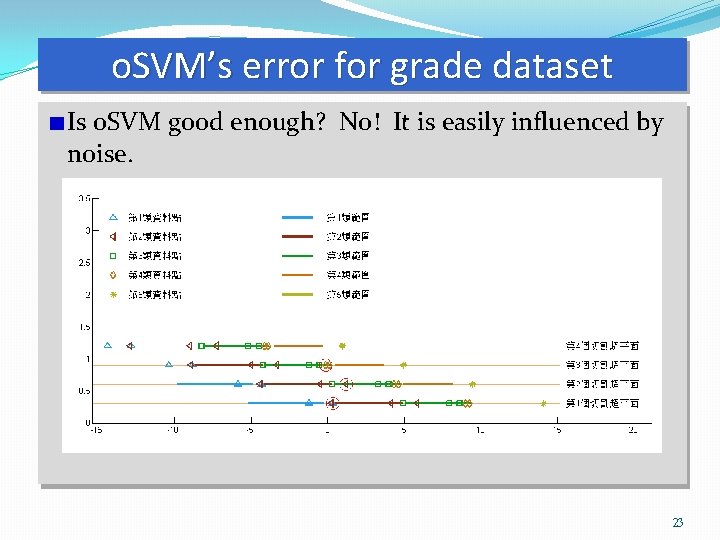

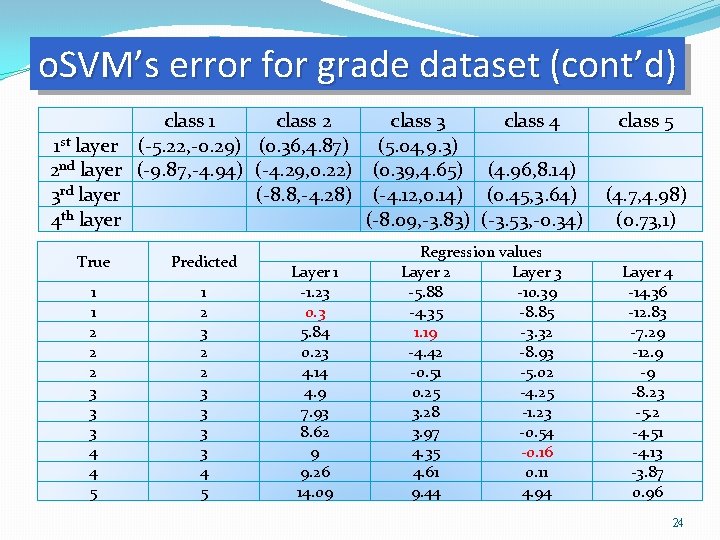

oSVM’s error for the grade dataset

Is oSVM good enough? No! It is easily influenced by noise.

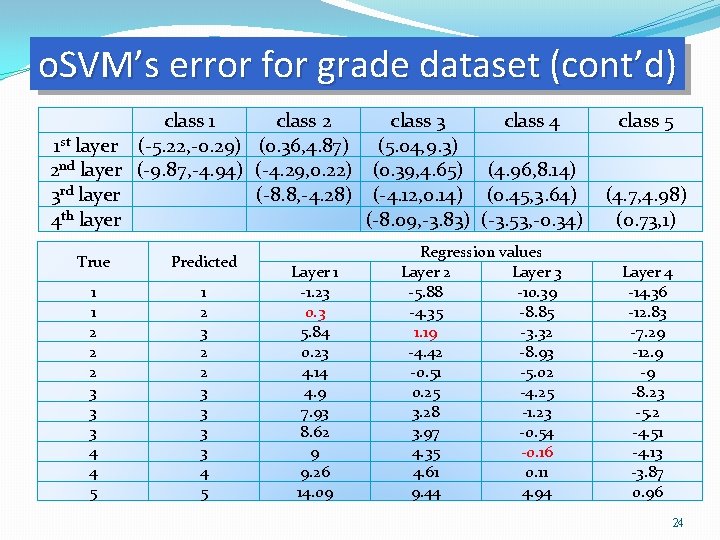

oSVM’s error for the grade dataset (cont’d)

| Layer | class 1 | class 2 | class 3 | class 4 | class 5 |
|---|---|---|---|---|---|
| 1st layer | (-5.22, -0.29) | (0.36, 4.87) | (5.04, 9.3) | | |
| 2nd layer | (-9.87, -4.94) | (-4.29, 0.22) | (0.39, 4.65) | (4.96, 8.14) | |
| 3rd layer | | (-8.8, -4.28) | (-4.12, 0.14) | (0.45, 3.64) | (4.7, 4.98) |
| 4th layer | | | (-8.09, -3.83) | (-3.53, -0.34) | (0.73, 1) |

Regression values:

| True | Predicted | Layer 1 | Layer 2 | Layer 3 | Layer 4 |
|---|---|---|---|---|---|
| 1 | 1 | -1.23 | -5.88 | -10.39 | -14.36 |
| 1 | 2 | 0.3 | -4.35 | -8.85 | -12.83 |
| 2 | 3 | 5.84 | 1.19 | -3.32 | -7.29 |
| 2 | 2 | 0.23 | -4.42 | -8.93 | -12.9 |
| 2 | 2 | 4.14 | -0.51 | -5.02 | -9 |
| 3 | 3 | 4.9 | 0.25 | -4.25 | -8.23 |
| 3 | 3 | 7.93 | 3.28 | -1.23 | -5.2 |
| 3 | 3 | 8.62 | 3.97 | -0.54 | -4.51 |
| 4 | 3 | 9 | 4.35 | -0.16 | -4.13 |
| 4 | 4 | 9.26 | 4.61 | 0.11 | -3.87 |
| 5 | 5 | 14.09 | 9.44 | 4.94 | 0.96 |
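The predicted labels can be related to the layer outputs under an assumed decision rule: predict 1 plus the number of layers whose regression value is positive. This is our reading of how oSVM combines its layers, so treat it as an assumption. A sketch over the 11 grade-dataset instances listed on this slide:

```python
# (layer 1, layer 2, layer 3, layer 4) regression values for each instance, from the slide
LAYER_VALUES = [
    (-1.23, -5.88, -10.39, -14.36),
    (0.30, -4.35, -8.85, -12.83),
    (5.84, 1.19, -3.32, -7.29),
    (0.23, -4.42, -8.93, -12.90),
    (4.14, -0.51, -5.02, -9.00),
    (4.90, 0.25, -4.25, -8.23),
    (7.93, 3.28, -1.23, -5.20),
    (8.62, 3.97, -0.54, -4.51),
    (9.00, 4.35, -0.16, -4.13),
    (9.26, 4.61, 0.11, -3.87),
    (14.09, 9.44, 4.94, 0.96),
]
TRUE_CLASSES = [1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 5]

def predict_by_counting(values):
    """Assumed rule: class = 1 + number of layers with a positive output."""
    return 1 + sum(v > 0 for v in values)

predicted = [predict_by_counting(v) for v in LAYER_VALUES]
errors = [(t, p) for t, p in zip(TRUE_CLASSES, predicted) if t != p]
print(predicted)  # [1, 2, 3, 2, 2, 3, 3, 3, 3, 4, 5]
print(errors)     # [(1, 2), (2, 3), (4, 3)]
```

Under this rule every error is off by exactly one class.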

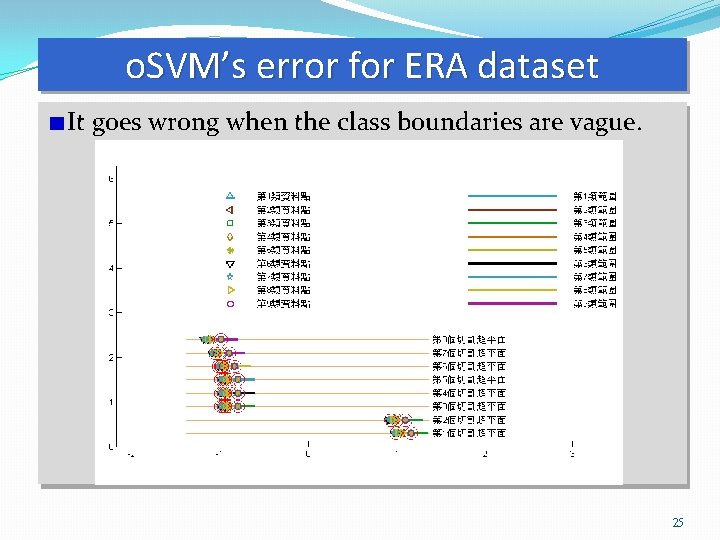

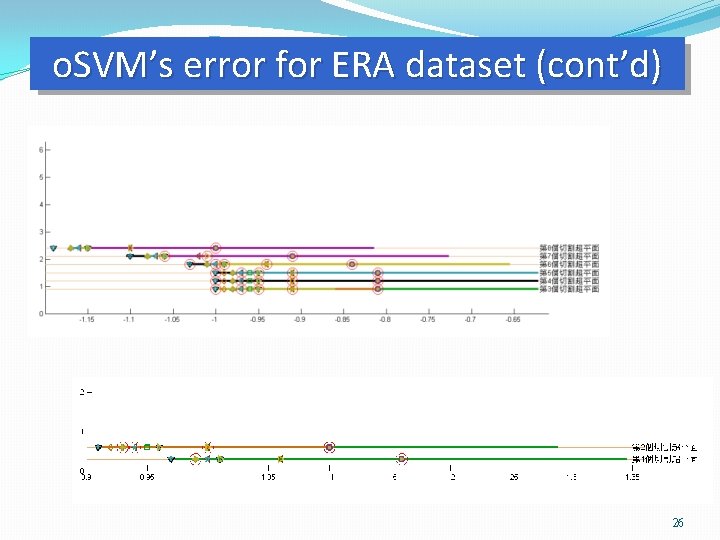

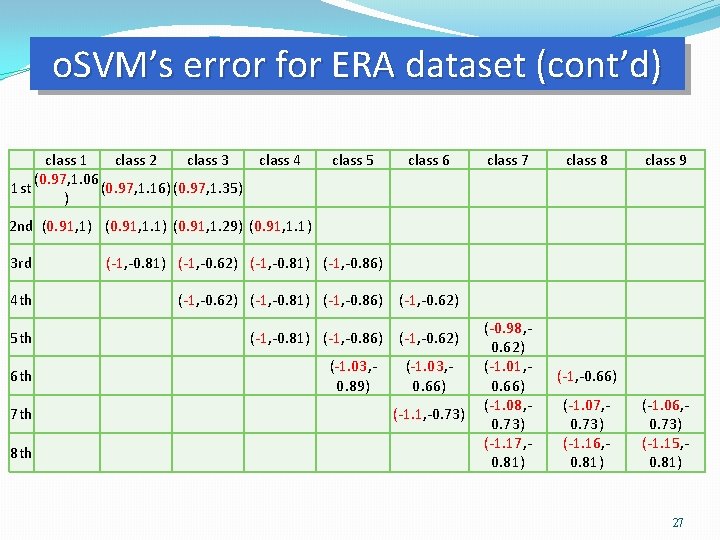

oSVM’s error for the ERA dataset

It goes wrong when the class boundaries are vague.

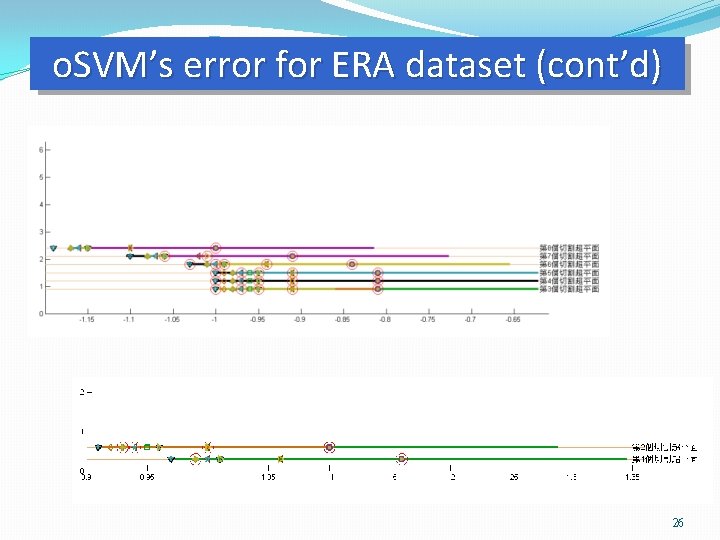

oSVM’s error for the ERA dataset (cont’d)

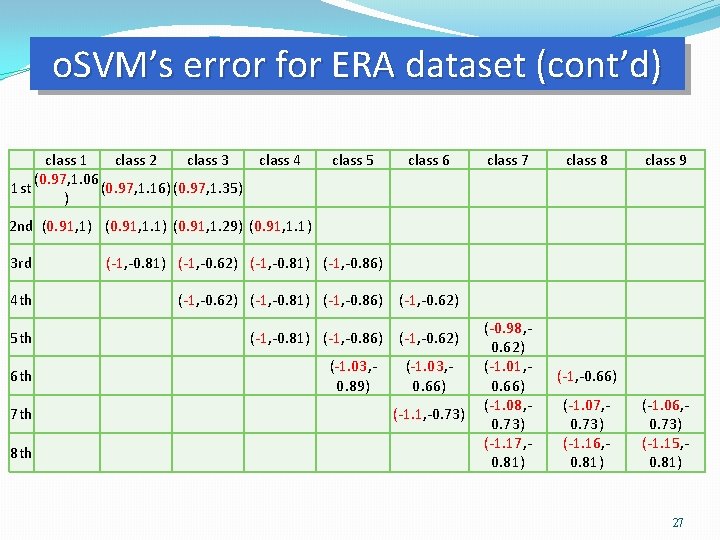

oSVM’s error for the ERA dataset (cont’d)

Intervals per layer (classes 1–9):
- 1st: (0.97, 1.06) (0.97, 1.16) (0.97, 1.35)
- 2nd: (0.91, 1) (0.91, 1.29) (0.91, 1.1)
- 3rd: (-1, -0.81) (-1, -0.62) (-1, -0.81) (-1, -0.86)
- 4th: (-1, -0.62) (-1, -0.81) (-1, -0.86) (-1, -0.62)
- 5th: (-1, -0.81) (-1, -0.86) (-1, -0.62)
- 6th–8th: (-1.03, 0.89) (-1.03, 0.66) (-1.1, -0.73) (-0.98, 0.62) (-1.01, 0.66) (-1.08, 0.73) (-1.17, 0.81) (-1, -0.66) (-1.07, 0.73) (-1.16, 0.81) (-1.06, 0.73) (-1.15, 0.81)

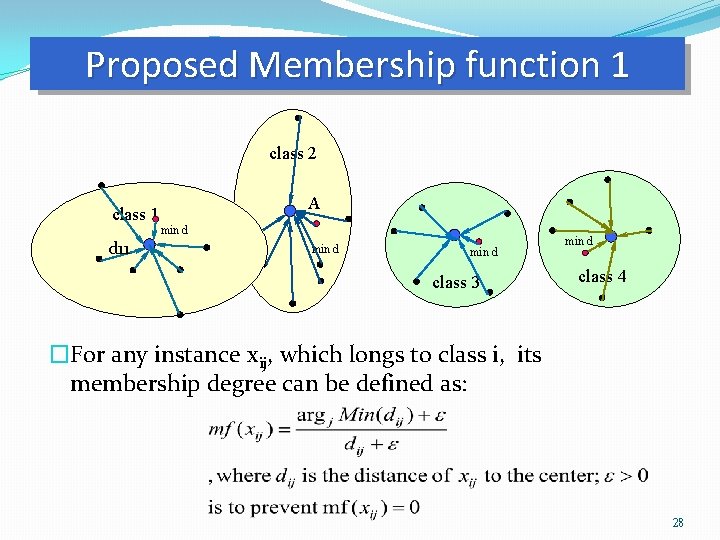

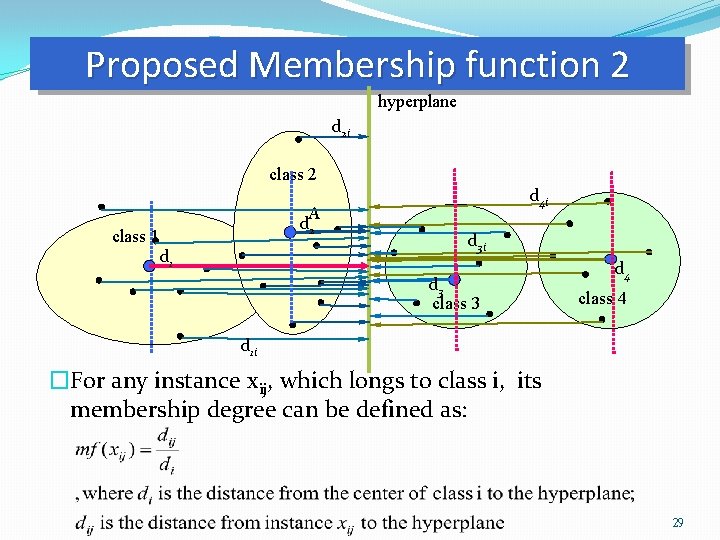

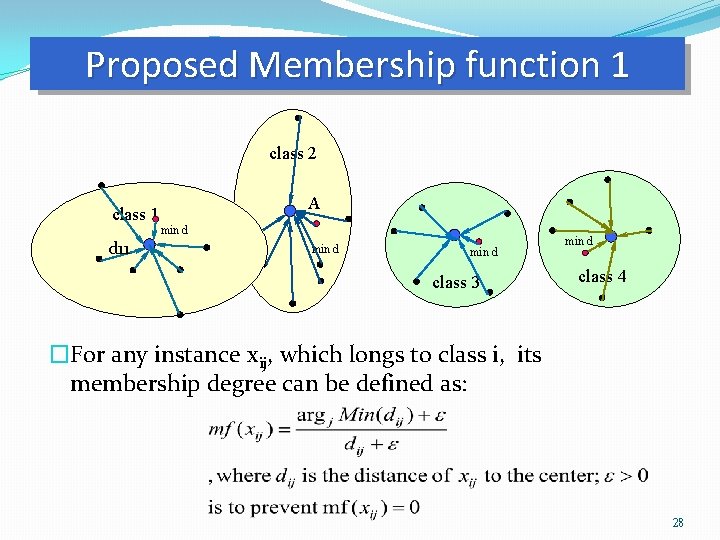

Proposed Membership function 1

[Figure: classes 1–4 and an instance A, with its distance d_11 and minimum distances (min d) to the neighboring classes]

- For any instance x_ij that belongs to class i, its membership degree can be defined as:

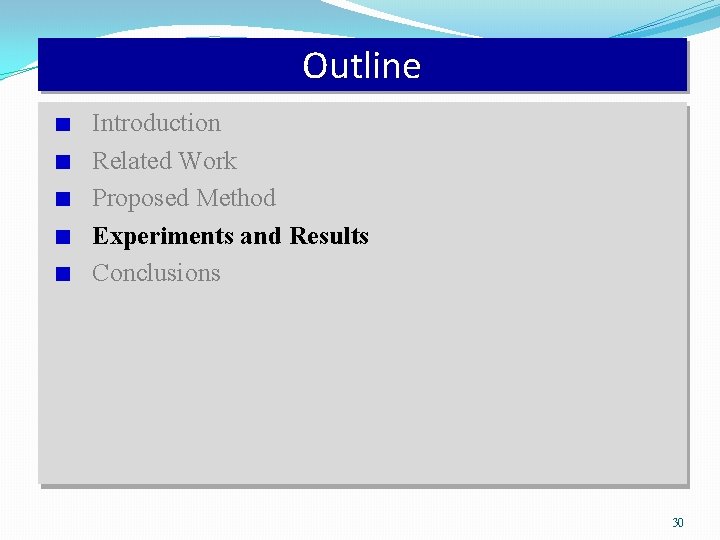

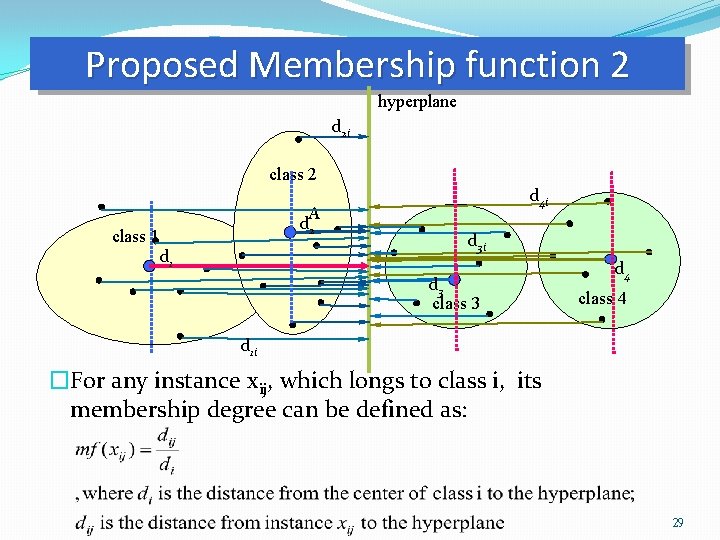

Proposed Membership function 2

[Figure: classes 1–4 separated by hyperplanes; an instance A with distances d_1i, d_2i, d_4i and d_2, d_3, d_4]

- For any instance x_ij that belongs to class i, its membership degree can be defined as:

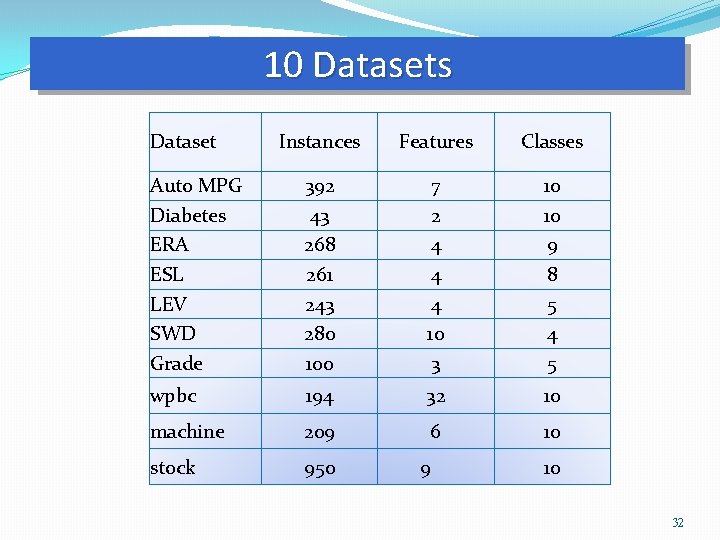

Experiments

- The oSVM code (in MATLAB) is from Cardoso and da Costa (2007); we built our FoSVM on top of their code.
- ordinalClassifier is from Weka (with C4.5 as the base classifier).
- SVR is from libsvm’s -SVR (better than -SVR).
- Ten datasets are used to compare these five classifiers.
- Two measures are used to compare performance: mean zero-one error and mean absolute error.
- The experiments use 10-fold cross-validation to obtain the overall performance.
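A sketch of how 10-fold cross-validation indices can be produced, assuming plain (unstratified) shuffled folds and a fixed seed; the slides do not say which variant was used, and the function name is hypothetical.

```python
import numpy as np

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index arrays; every sample lands in exactly one test fold."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]

# Grade has 100 instances, so each of the ten test folds holds 10 of them
print([len(test) for _, test in k_fold_indices(100)])
```

The reported figure for each classifier and dataset is then an aggregate (mean and spread) of the ten per-fold scores.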

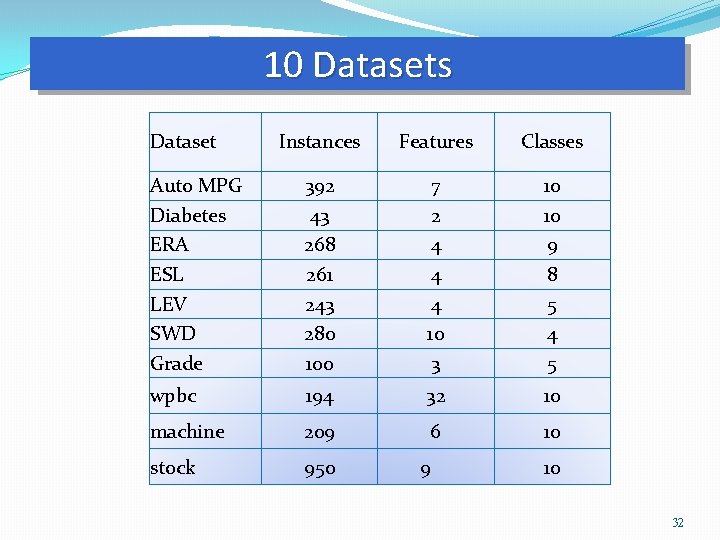

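10 Datasets

| Dataset | Instances | Features | Classes |
|---|---|---|---|
| Auto MPG | 392 | 7 | 10 |
| Diabetes | 43 | 2 | 10 |
| ERA | 268 | 4 | 9 |
| ESL | 261 | 4 | 8 |
| LEV | 243 | 4 | 5 |
| SWD | 280 | 10 | 4 |
| Grade | 100 | 3 | 5 |
| wpbc | 194 | 32 | 10 |
| machine | 209 | 6 | 10 |
| stock | 950 | 9 | 10 |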

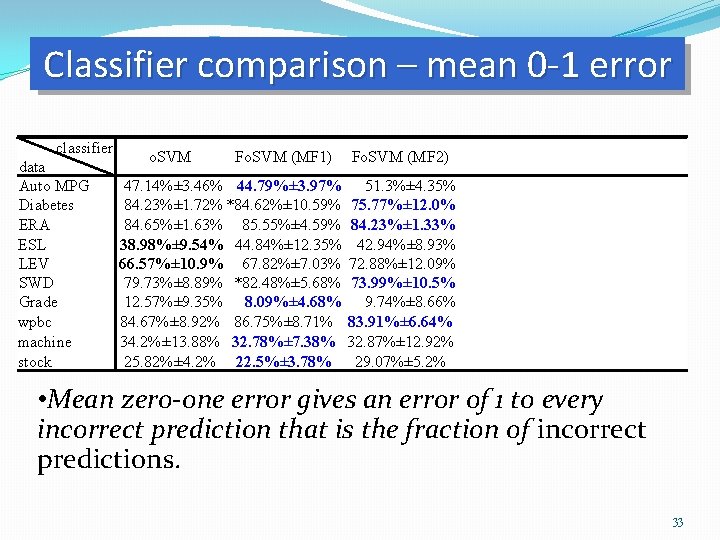

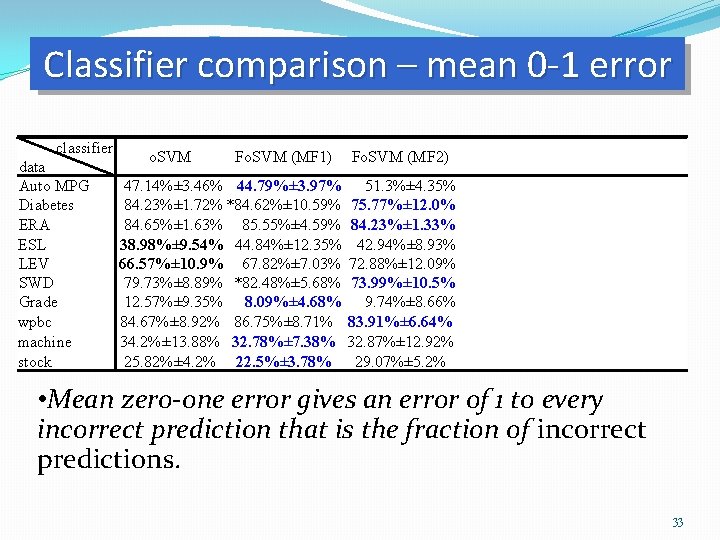

Classifier comparison – mean 0-1 error

Classifiers: oSVM, FoSVM (MF1), FoSVM (MF2), ordinalClassifier, libsvm. Datasets: Auto MPG, Diabetes, ERA, ESL, LEV, SWD, Grade, wpbc, machine, stock.

47.14%±3.46% 44.79%±3.97% 51.3%±4.35% 53.12%±2.83% *83.44%±16.61% 84.23%±1.72% *84.62%±10.59% 75.77%±12.04% 75.77%±12.0% 78.85%±14.09% 71.92%±9.75% 84.65%±1.63% 85.55%±4.59% 84.23%±1.33% 87.31%±5.6% 85.42%±5.84% 38.98%±9.54% 44.84%±12.35% 42.94%±8.93% *55.9%±8.98% 53.28%±8.35% 66.57%±10.9% 67.82%±7.03% 72.88%±12.09% 73.43%±9.37% *74.5%±12.19% 79.73%±8.89% *82.48%±5.68% 73.99%±10.5% 64.27%±3.3% 72.45%±10.41% 12.57%±9.35% 8.09%±4.68% 9.74%±8.66% 41.7%±20.43% *43.65%±9.31% 84.67%±8.92% 86.75%±8.71% 83.91%±6.64% 87.37%±5.12% 86.54%±3.33% 34.2%±13.88% 32.78%±7.38% 32.87%±12.92% 28.82%±14.7% *43.34%±14.52% 25.82%±4.2% 22.5%±3.78% 29.07%±5.2% 21.61%±5.02% *53.9%±4.88%

- Mean zero-one error assigns an error of 1 to every incorrect prediction; that is, it is the fraction of incorrect predictions.
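A sketch of the zero-one error for one fold, plus one way the mean ± standard deviation over the ten folds could be summarized. Whether the slides use the sample or the population standard deviation is not stated; the sample version (ddof=1) is assumed here, and the function names are hypothetical.

```python
import numpy as np

def zero_one_error(y_true, y_pred):
    """Fraction of predictions that differ from the true class."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))

def mean_and_std(fold_errors):
    """Mean and sample standard deviation of per-fold errors."""
    e = np.asarray(fold_errors, dtype=float)
    return float(e.mean()), float(e.std(ddof=1))

print(zero_one_error([1, 2, 3, 4], [1, 3, 3, 2]))  # 0.5
```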

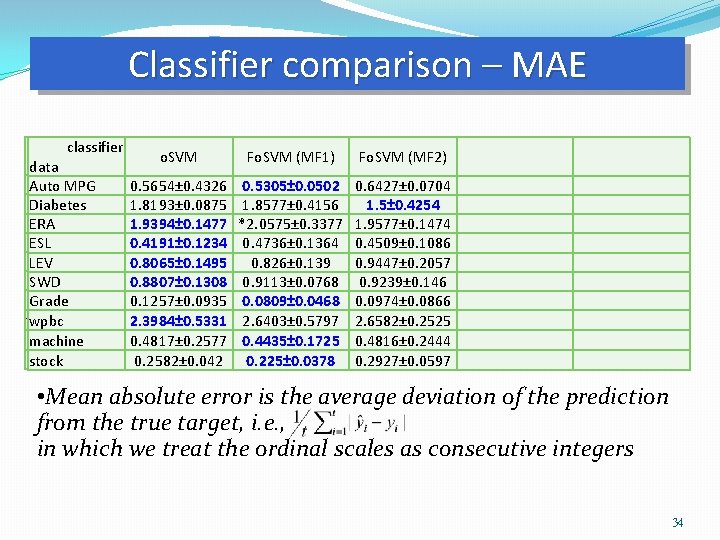

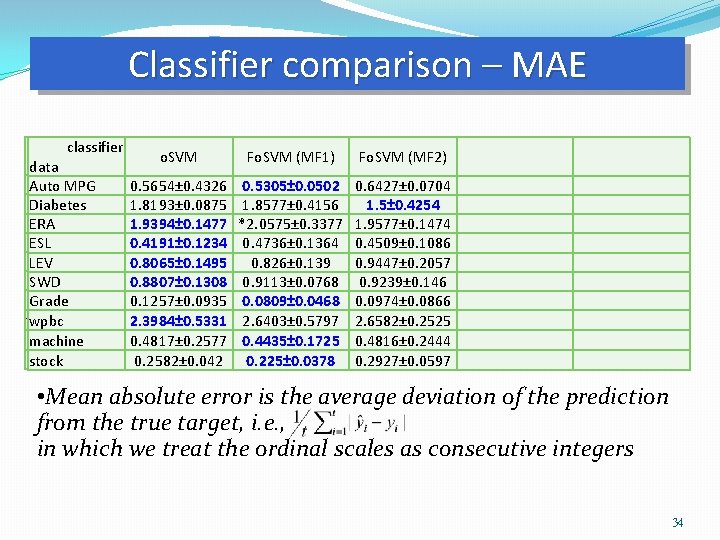

Classifier comparison – MAE

| Dataset | oSVM | FoSVM (MF1) | FoSVM (MF2) | ordinalClassifier | libsvm |
|---|---|---|---|---|---|
| Auto MPG | 0.5654±0.4326 | 0.5305±0.0502 | 0.6427±0.0704 | 0.6726±0.0451 | *1.4313±0.0294 |
| Diabetes | 1.8193±0.0875 | 1.8577±0.4156 | 1.5±0.4254 | *1.8923±0.71 | 1.0808±0.1444 |
| ERA | 1.9394±0.1477 | *2.0575±0.3377 | 1.9577±0.1474 | 1.7755±0.1049 | 1.7451±0.2711 |
| ESL | 0.4191±0.1234 | 0.4736±0.1364 | 0.4509±0.1086 | 0.6146±0.3535 | *0.657±0.0424 |
| LEV | 0.8065±0.1495 | 0.826±0.139 | 0.9447±0.2057 | 0.9023±0.1102 | *1.1290±0.1964 |
| SWD | 0.8807±0.1308 | 0.9113±0.0768 | 0.9239±0.146 | 0.6984±0.0859 | *1.0403±0.1694 |
| Grade | 0.1257±0.0935 | 0.0809±0.0468 | 0.0974±0.0866 | 0.426±0.2037 | *0.4365±0.0931 |
| wpbc | 2.3984±0.5331 | 2.6403±0.5797 | 2.6582±0.2525 | 2.7002±0.7106 | 2.3092±0.1139 |
| machine | 0.4817±0.2577 | 0.4435±0.1725 | 0.4816±0.2444 | 0.3928±0.2092 | *0.6636±0.3419 |
| stock | 0.2582±0.042 | 0.225±0.0378 | 0.2927±0.0597 | 0.2256±0.0587 | *0.6216±0.0765 |

- Mean absolute error is the average absolute deviation of the prediction from the true class, treating the ordinal scales as consecutive integers.
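A sketch of MAE under the slide's convention that classes are consecutive integers, alongside the zero-one error. Since every wrong prediction contributes at least 1, MAE is never below the zero-one error rate, and the two are equal when every mistake is off by exactly one class. That is consistent with the matching figures reported for the Grade dataset (for example, 12.57% and 0.1257 for oSVM). The function names are hypothetical.

```python
import numpy as np

def mean_absolute_error(y_true, y_pred):
    """Average |true - predicted|, treating ordinal classes 1..K as integers."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

def zero_one_error(y_true, y_pred):
    """Fraction of predictions that differ from the true class."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))

y_true = [1, 2, 3, 4]
print(mean_absolute_error(y_true, [1, 3, 3, 2]), zero_one_error(y_true, [1, 3, 3, 2]))  # 0.75 0.5
print(mean_absolute_error(y_true, [2, 2, 3, 3]), zero_one_error(y_true, [2, 2, 3, 3]))  # 0.5 0.5
```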

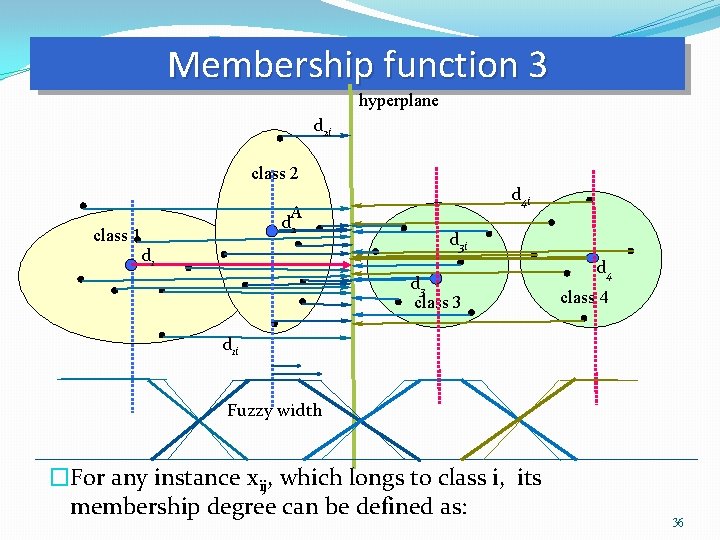

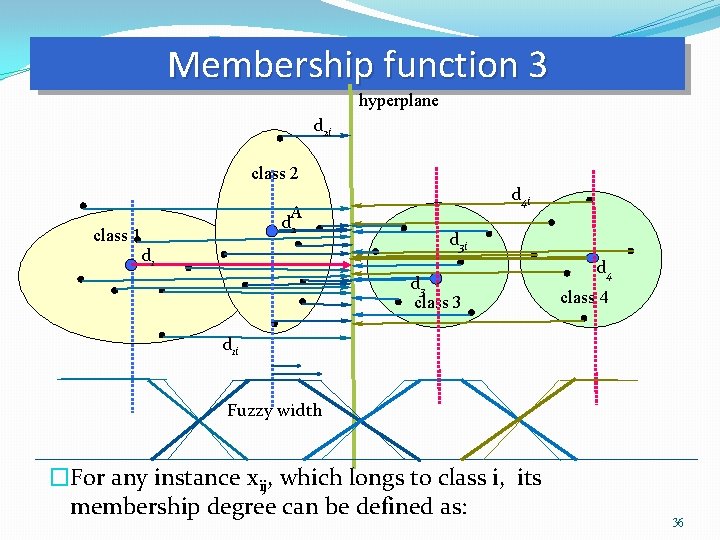

Membership function 3

[Figure: classes 1–4 separated by hyperplanes; an instance A with distances d_1i, d_2i, d_4i and d_2, d_3, d_4, plus a fuzzy width]

- For any instance x_ij that belongs to class i, its membership degree can be defined as:

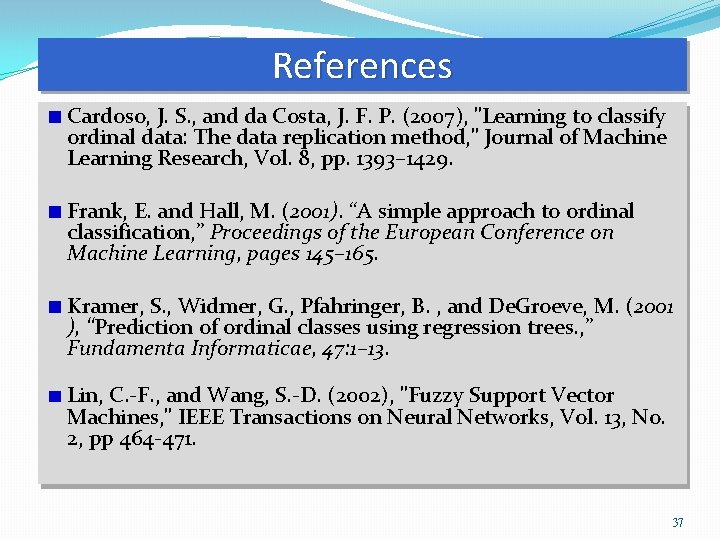

References

- Cardoso, J. S., and da Costa, J. F. P. (2007), "Learning to classify ordinal data: The data replication method," Journal of Machine Learning Research, Vol. 8, pp. 1393–1429.
- Frank, E., and Hall, M. (2001), "A simple approach to ordinal classification," Proceedings of the European Conference on Machine Learning, pp. 145–165.
- Kramer, S., Widmer, G., Pfahringer, B., and DeGroeve, M. (2001), "Prediction of ordinal classes using regression trees," Fundamenta Informaticae, Vol. 47, pp. 1–13.
- Lin, C.-F., and Wang, S.-D. (2002), "Fuzzy Support Vector Machines," IEEE Transactions on Neural Networks, Vol. 13, No. 2, pp. 464–471.