Classification and Prediction: Fuzzy Set Approaches

- Slides: 35

Classification and Prediction
- Fuzzy

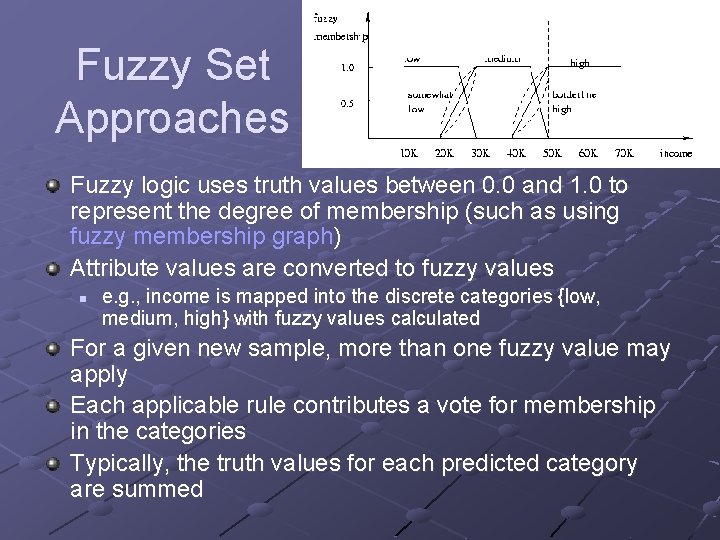

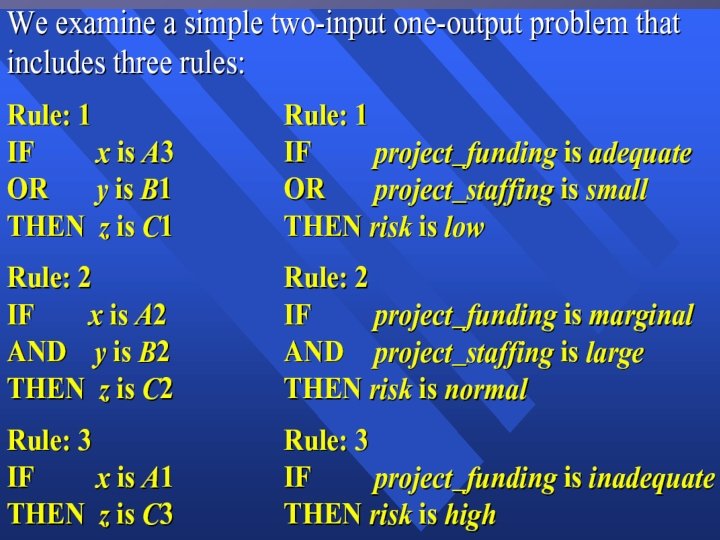

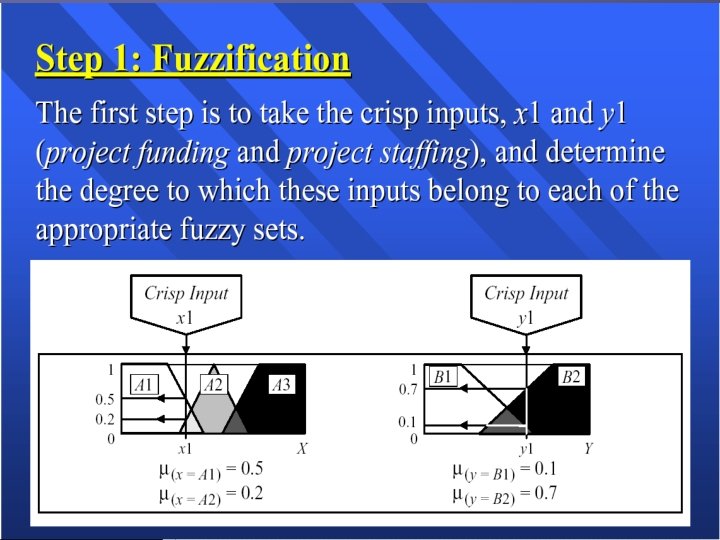

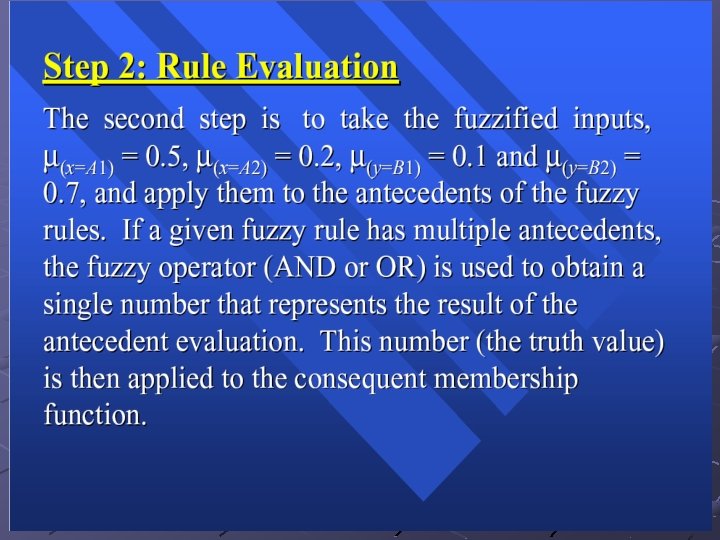

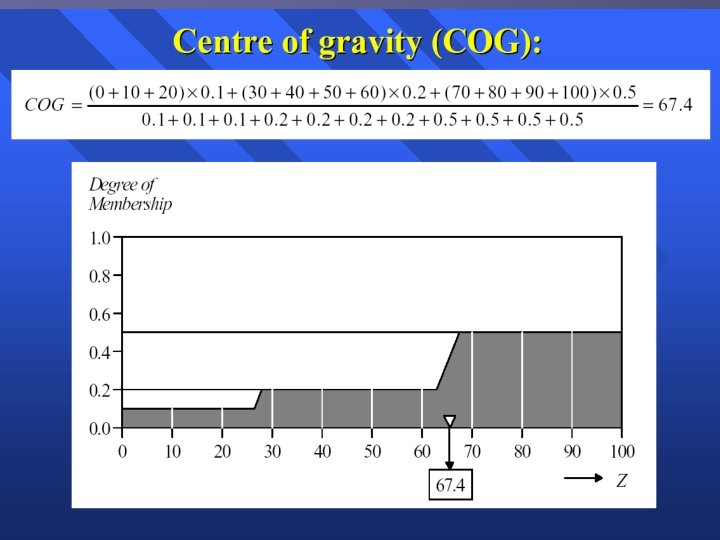

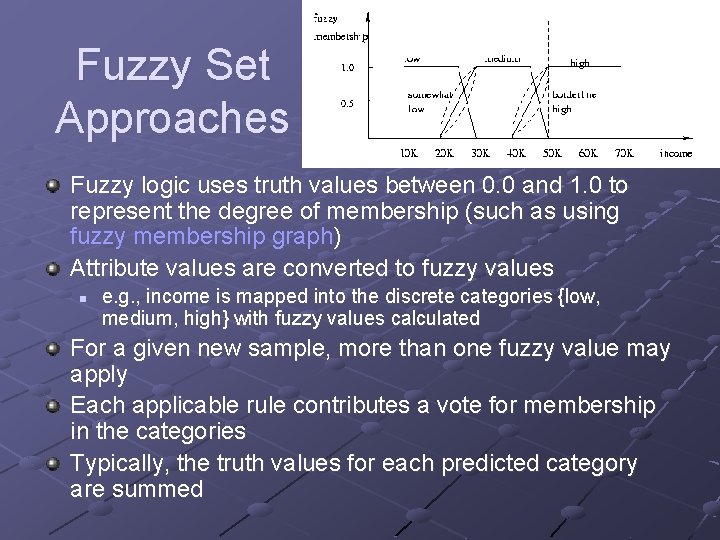

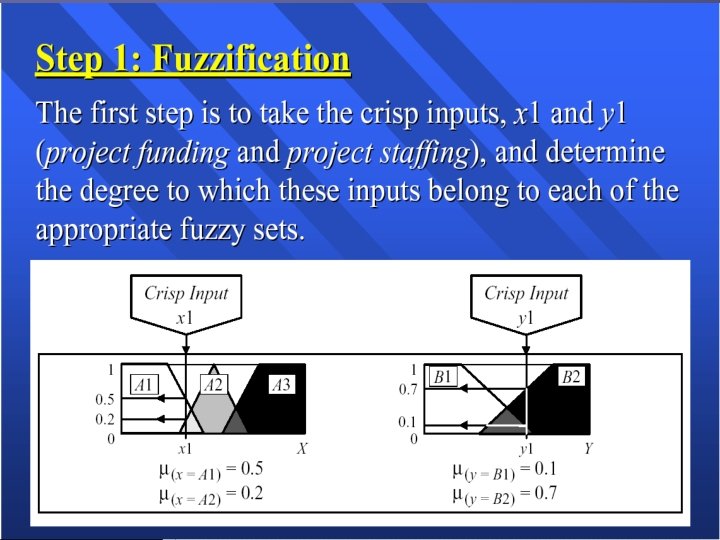

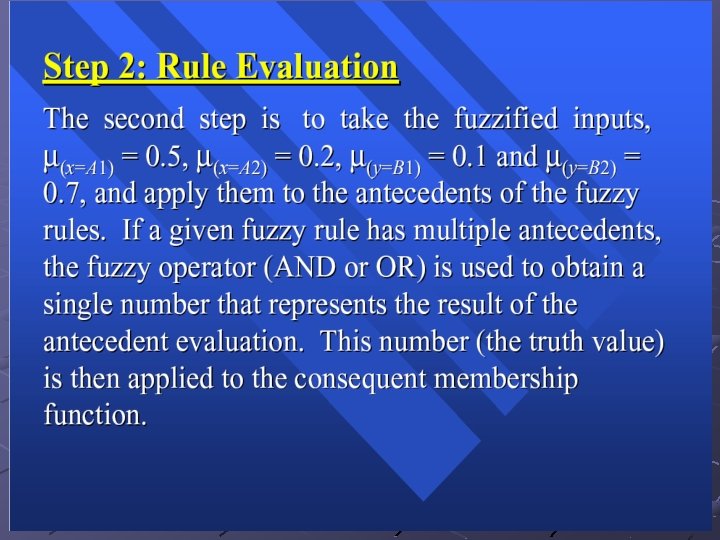

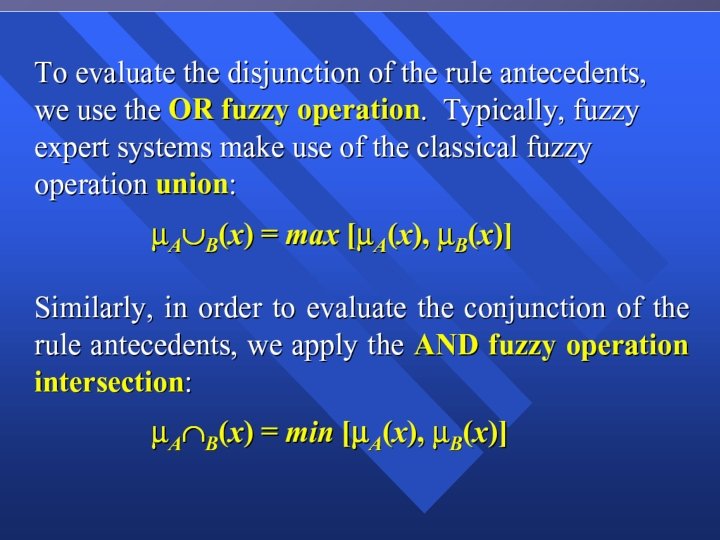

Fuzzy Set Approaches
- Fuzzy logic uses truth values between 0.0 and 1.0 to represent the degree of membership (e.g., using a fuzzy membership graph).
- Attribute values are converted to fuzzy values.
  - E.g., income is mapped into the discrete categories {low, medium, high}, with a fuzzy value calculated for each.
- For a given new sample, more than one fuzzy value may apply.
- Each applicable rule contributes a vote for membership in the categories.
- Typically, the truth values for each predicted category are summed.
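The voting scheme above can be sketched in a few lines of Python. This is a minimal illustration, not the method from the slides: the second attribute (debt), all breakpoints, the class names, and the rule base are hypothetical, and AND is taken as the minimum of the condition memberships, which is a common but not the only choice.

```python
def falling(x, a, b):
    """Membership 1 up to a, decreasing linearly to 0 at b."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def rising(x, a, b):
    """Membership 0 up to a, increasing linearly to 1 at b."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def peak(x, a, b, c):
    """Triangle: 0 outside (a, c), 1 at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


def fuzzify(income, debt):
    # Hypothetical breakpoints; both attributes in thousands.
    return {
        ("income", "low"): falling(income, 20, 40),
        ("income", "medium"): peak(income, 20, 40, 60),
        ("income", "high"): rising(income, 40, 60),
        ("debt", "low"): falling(debt, 10, 30),
        ("debt", "high"): rising(debt, 10, 30),
    }


# Hypothetical rule base: (conditions joined by AND, predicted class).
RULES = [
    ([("income", "high")], "approve"),
    ([("income", "medium"), ("debt", "low")], "approve"),
    ([("income", "low")], "reject"),
    ([("debt", "high")], "reject"),
]


def classify(income, debt):
    mu = fuzzify(income, debt)
    votes = {}
    for conditions, label in RULES:
        strength = min(mu[c] for c in conditions)         # AND taken as min
        votes[label] = votes.get(label, 0.0) + strength   # sum truth values per class
    return max(votes, key=votes.get), votes


label, votes = classify(income=50, debt=15)
print(label, votes)  # approve {'approve': 1.0, 'reject': 0.25}
```

With these made-up numbers, an income of 50 is half "medium" and half "high", so two different rules both vote for `approve` and their truth values add up.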

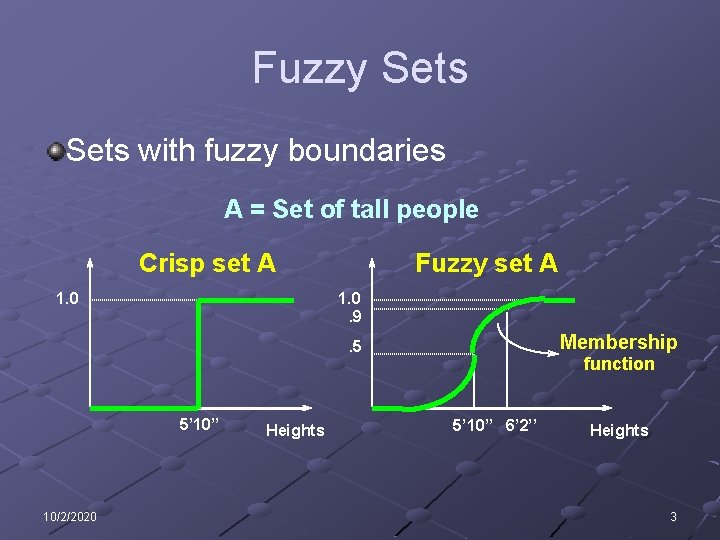

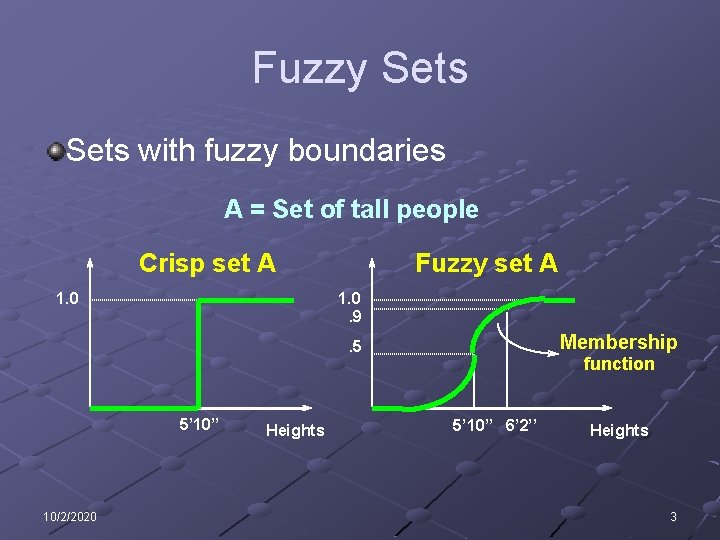

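Fuzzy Sets with Fuzzy Boundaries

A = set of tall people

Figure: crisp set A vs. fuzzy set A, with membership plotted against height. The crisp set jumps from 0 to 1.0 at 5'10''. The fuzzy set's membership function rises gradually, reaching .5 at 5'10'' and .9 at 6'2''.

10/2/2020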
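A small Python sketch contrasting the two kinds of set. The crisp cut-off at exactly 5'10'' (70 inches) and the logistic shape of the fuzzy membership function are assumptions. The slope is chosen only so that the curve passes through the two values read off the figure: .5 at 5'10'' and .9 at 6'2''.

```python
import math

THRESHOLD = 70.0          # 5'10'' in inches (assumed crisp boundary)
SLOPE = math.log(9) / 4   # chosen so that 74 inches (6'2'') maps to 0.9


def crisp_tall(height_in):
    """Crisp set: a person is either tall (1.0) or not (0.0)."""
    return 1.0 if height_in >= THRESHOLD else 0.0


def fuzzy_tall(height_in):
    """Fuzzy set: membership rises smoothly, passing 0.5 at 5'10''."""
    return 1.0 / (1.0 + math.exp(-SLOPE * (height_in - THRESHOLD)))


for h in (66, 69.9, 70, 74, 78):
    print(f"{h:>5} in  crisp={crisp_tall(h):.1f}  fuzzy={fuzzy_tall(h):.2f}")
```

A height of 69.9 inches is simply "not tall" in the crisp set, but it is tall to almost the same degree as 70 inches in the fuzzy set.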

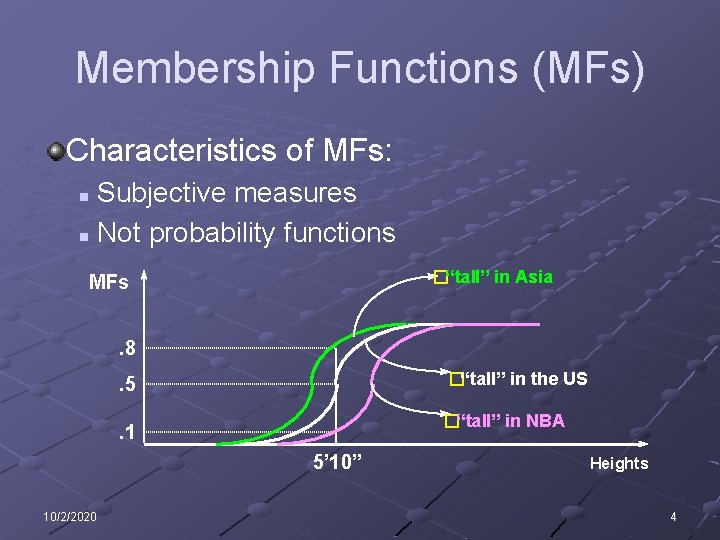

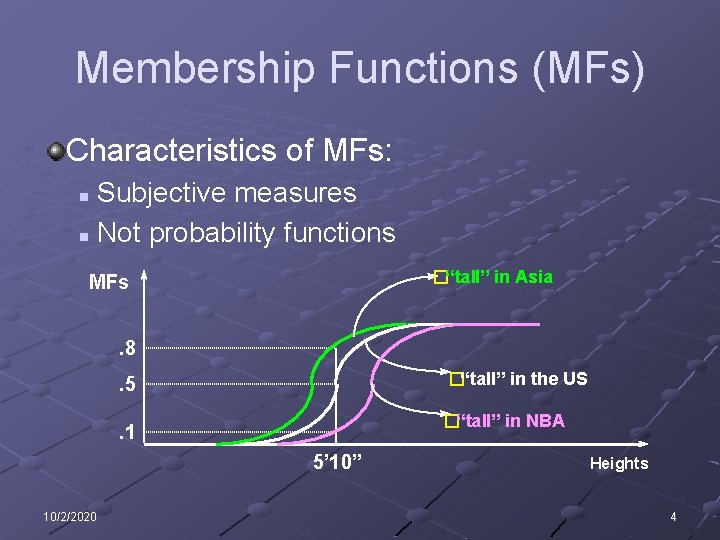

Membership Functions (MFs)

Characteristics of MFs:
- Subjective measures
- Not probability functions

Figure: three MFs for "tall" in Asia, "tall" in the US, and "tall" in the NBA, plotted against height. At 5'10'' their membership values are about .8, .5, and .1, respectively.

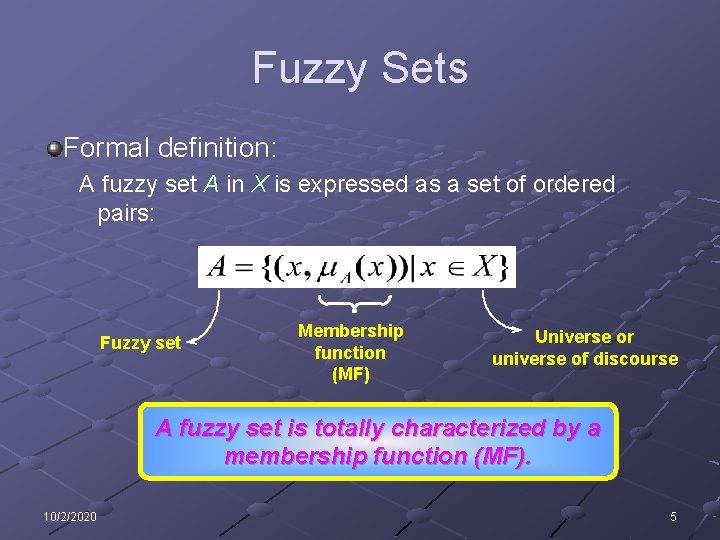

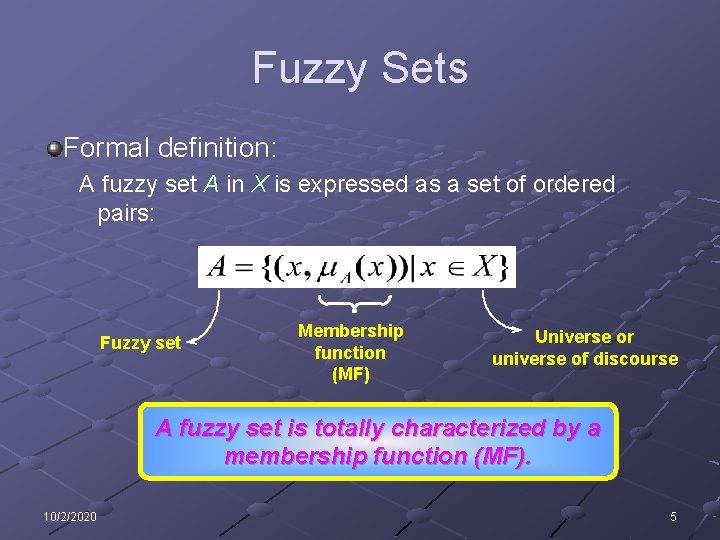

Fuzzy Sets

Formal definition: a fuzzy set A in X is expressed as a set of ordered pairs:

$$A = \{(x, \mu_A(x)) \mid x \in X\}$$

where A is the fuzzy set, $\mu_A$ is its membership function (MF), and X is the universe, or universe of discourse. A fuzzy set is completely characterized by its membership function.

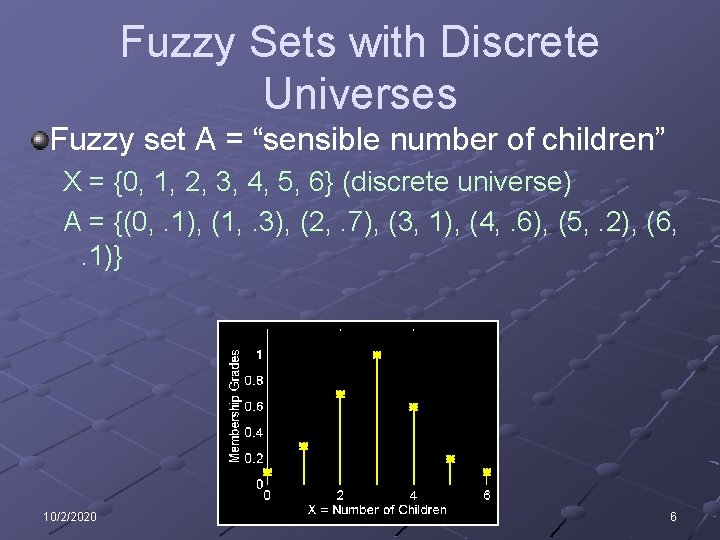

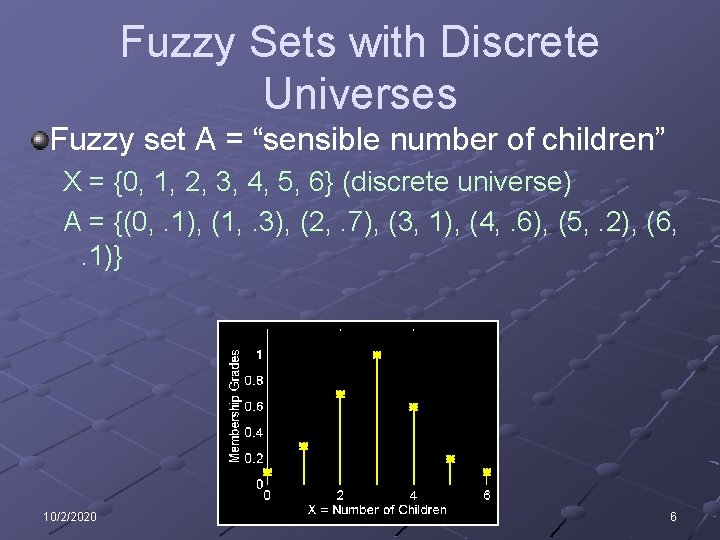

Fuzzy Sets with Discrete Universes
- Fuzzy set A = "sensible number of children"
- X = {0, 1, 2, 3, 4, 5, 6} (discrete universe)
- A = {(0, .1), (1, .3), (2, .7), (3, 1), (4, .6), (5, .2), (6, .1)}
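One simple way to hold a fuzzy set over a discrete universe is a dictionary from element to membership degree. The helper names below are hypothetical. `at_least` returns the elements whose membership reaches a given level, which is often called an alpha-cut.

```python
# "Sensible number of children": element of X -> degree of membership.
A = {0: 0.1, 1: 0.3, 2: 0.7, 3: 1.0, 4: 0.6, 5: 0.2, 6: 0.1}


def membership(fuzzy_set, x):
    """Degree of membership; elements not listed get 0."""
    return fuzzy_set.get(x, 0.0)


def at_least(fuzzy_set, level):
    """Elements whose membership is at least `level` (an alpha-cut)."""
    return sorted(x for x, mu in fuzzy_set.items() if mu >= level)


print(membership(A, 2))   # 0.7
print(at_least(A, 0.5))   # [2, 3, 4]
```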

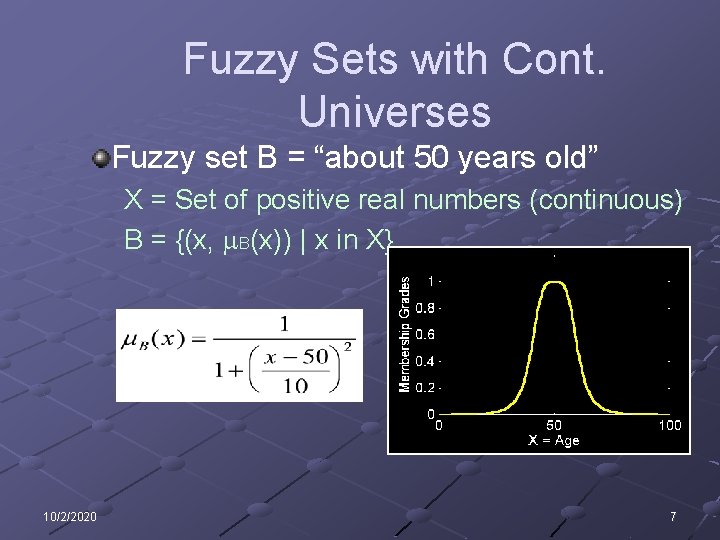

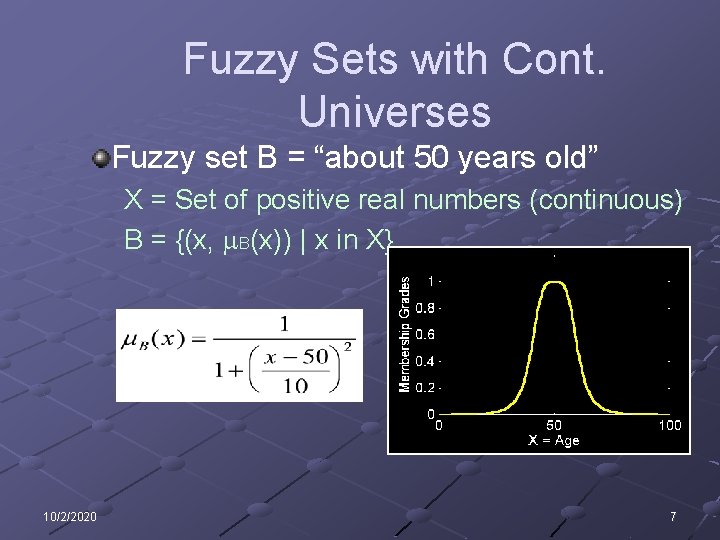

Fuzzy Sets with Continuous Universes
- Fuzzy set B = "about 50 years old"
- X = set of positive real numbers (continuous)
- $B = \{(x, \mu_B(x)) \mid x \in X\}$
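For a continuous universe, the membership function has to be a formula rather than a table. The slide's own formula for "about 50 years old" cannot be recovered from the text, so the sketch below assumes a bell-shaped curve, $\mu_B(x) = 1 / \big(1 + ((x - 50)/10)^4\big)$, which equals 1 at 50 and 0.5 at 40 and 60.

```python
def about_50(x):
    """Assumed MF for "about 50 years old": 1 at 50, 0.5 at 40 and 60."""
    return 1.0 / (1.0 + ((x - 50.0) / 10.0) ** 4)


# Sample the continuous universe at a few ages.
for age in (30, 40, 45, 50, 55, 60, 70):
    print(age, round(about_50(age), 3))
```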

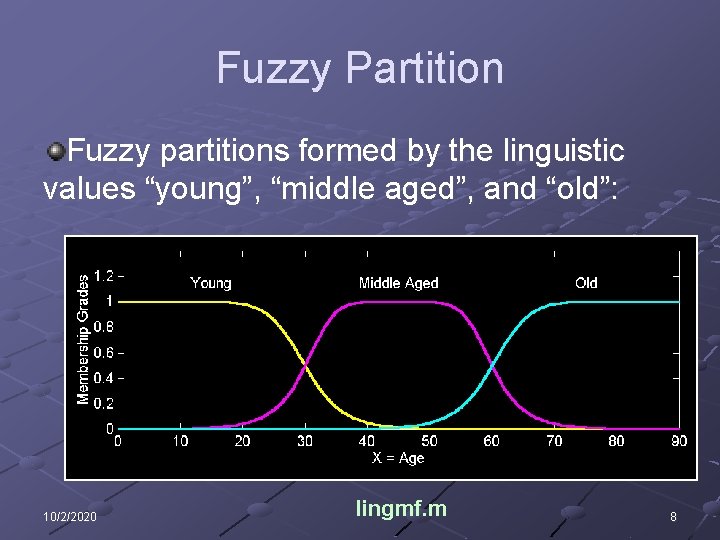

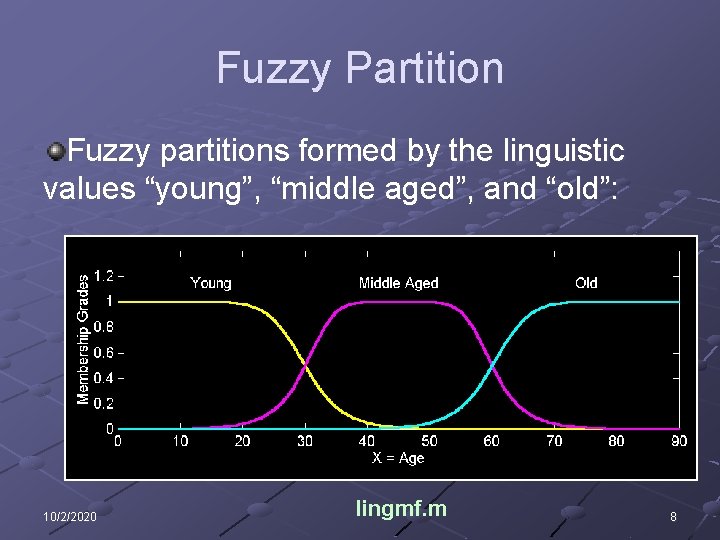

Fuzzy Partition

Fuzzy partitions formed by the linguistic values "young", "middle aged", and "old" (demo script: lingmf.m).
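A fuzzy partition can be sketched as one membership function per linguistic value. The generalized bell shape and the (a, b, c) parameters below are assumptions for illustration, not the values used by lingmf.m. `describe` also reports which label fits a given age best.

```python
def gbell(x, a, b, c):
    """Generalized bell MF: 1 / (1 + |(x - c) / a| ** (2b))."""
    return 1.0 / (1.0 + abs((x - c) / a) ** (2 * b))


# Hypothetical (a, b, c) parameters for the three linguistic values of "age".
AGE_TERMS = {
    "young": (20, 2, 0),
    "middle aged": (20, 2, 50),
    "old": (20, 2, 100),
}


def describe(age):
    """Membership of `age` in every term, plus the best-fitting term."""
    degrees = {term: gbell(age, *params) for term, params in AGE_TERMS.items()}
    best = max(degrees, key=degrees.get)
    return best, degrees


for age in (10, 35, 50, 90):
    print(age, describe(age)[0])
```

Neighbouring terms overlap, so an age such as 25 belongs partly to both "young" and "middle aged".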

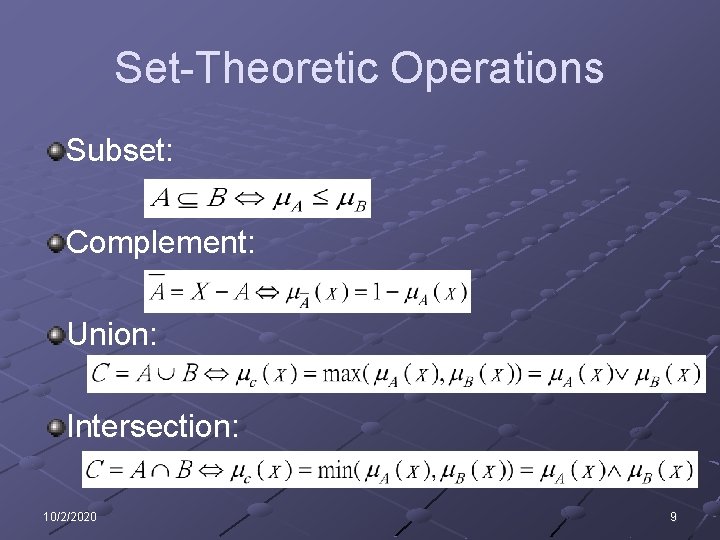

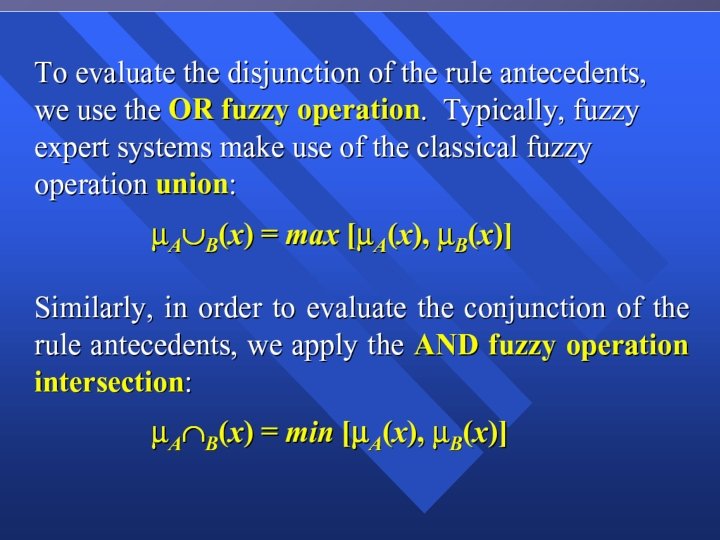

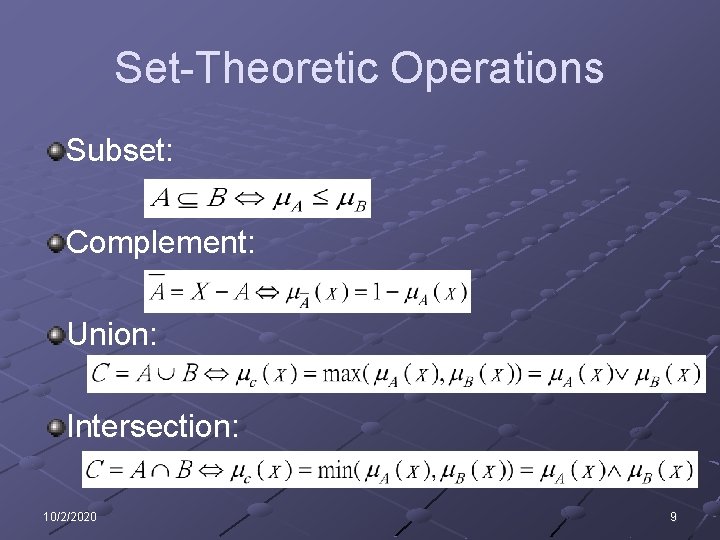

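Set-Theoretic Operations
- Subset: $A \subseteq B \iff \mu_A(x) \le \mu_B(x)$ for all $x \in X$
- Complement: $\mu_{\bar{A}}(x) = 1 - \mu_A(x)$
- Union: $\mu_{A \cup B}(x) = \max\big(\mu_A(x), \mu_B(x)\big)$
- Intersection: $\mu_{A \cap B}(x) = \min\big(\mu_A(x), \mu_B(x)\big)$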
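The subset, complement, union, and intersection operations can be applied pointwise to fuzzy sets stored as dictionaries, using the standard max/min definitions. This sketch assumes that the universe is the union of the dictionaries' keys and treats a missing key as membership 0. The complement is taken only over the keys of its argument.

```python
def complement(a):
    return {x: 1.0 - mu for x, mu in a.items()}


def union(a, b):
    return {x: max(a.get(x, 0.0), b.get(x, 0.0)) for x in a.keys() | b.keys()}


def intersection(a, b):
    return {x: min(a.get(x, 0.0), b.get(x, 0.0)) for x in a.keys() | b.keys()}


def is_subset(a, b):
    """A is a subset of B if mu_A(x) <= mu_B(x) everywhere."""
    return all(mu <= b.get(x, 0.0) for x, mu in a.items())


A = {1: 0.25, 2: 0.75, 3: 1.0}
B = {1: 0.5, 2: 0.5, 3: 1.0}
print(union(A, B), intersection(A, B), complement(A))
```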

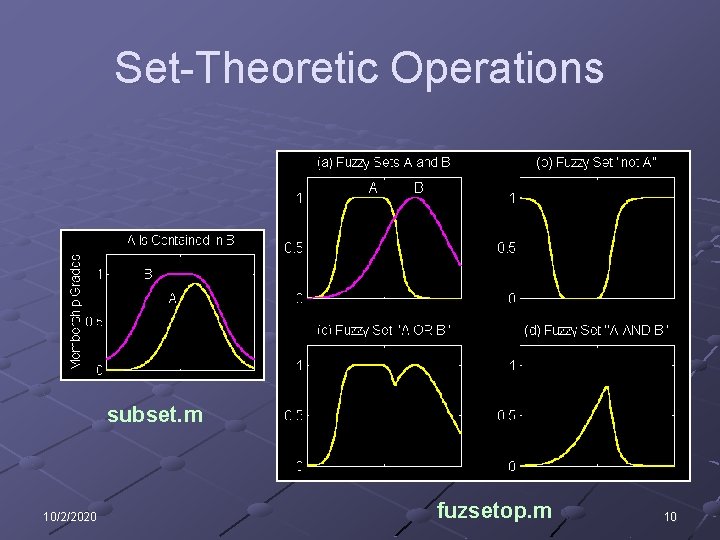

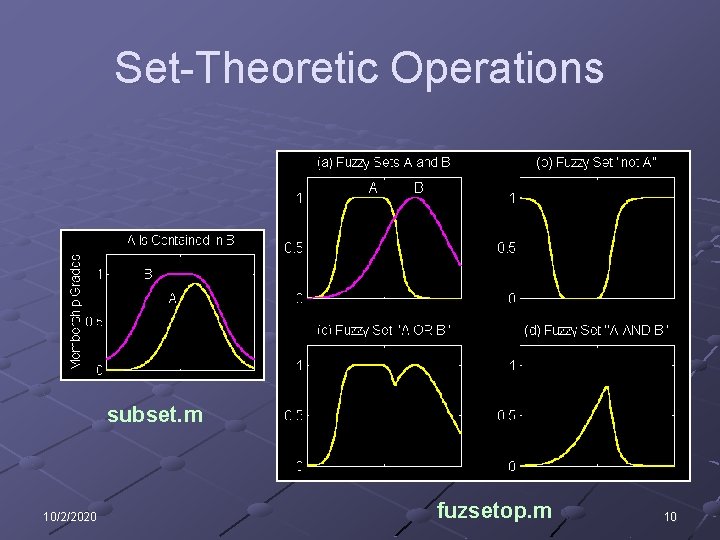

Set-Theoretic Operations

Demo scripts: subset.m, fuzsetop.m

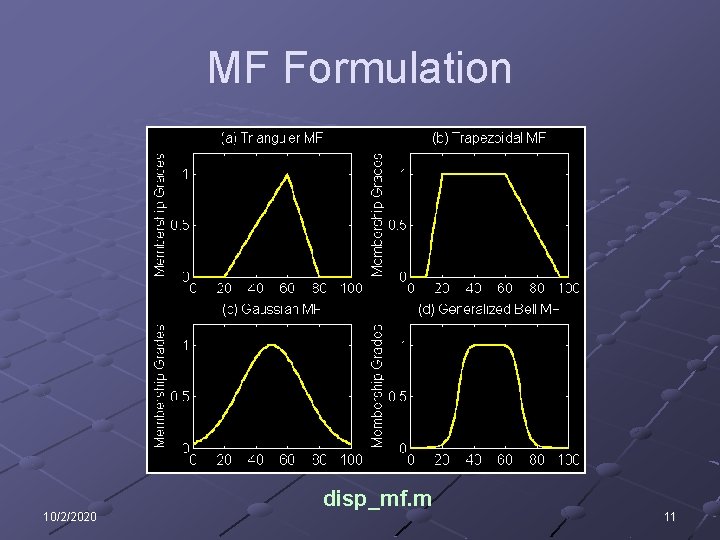

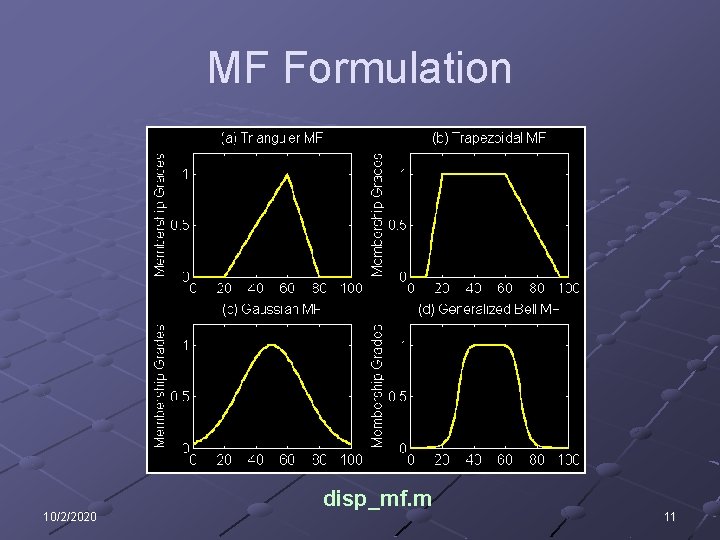

MF Formulation

Demo script: disp_mf.m
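The following sketch writes out four commonly used MF formulations in Python. The function names and parameter orders follow widespread fuzzy-toolbox conventions, but they are assumptions here. The slide does not say which formulations disp_mf.m displays.

```python
import math


def trimf(x, a, b, c):
    """Triangle with feet at a and c and peak at b (a < b < c)."""
    return max(min((x - a) / (b - a), (c - x) / (c - b)), 0.0)


def trapmf(x, a, b, c, d):
    """Trapezoid: rises on [a, b], equals 1 on [b, c], falls on [c, d]."""
    return max(min((x - a) / (b - a), 1.0, (d - x) / (d - c)), 0.0)


def gaussmf(x, sigma, c):
    """Gaussian centred at c with width sigma."""
    return math.exp(-0.5 * ((x - c) / sigma) ** 2)


def gbellmf(x, a, b, c):
    """Generalized bell: width a, slope b, centre c."""
    return 1.0 / (1.0 + abs((x - c) / a) ** (2 * b))


for x in (0, 2.5, 5, 7.5, 10):
    print(x, trimf(x, 0, 5, 10), trapmf(x, 0, 2, 8, 10),
          round(gaussmf(x, 2, 5), 3), round(gbellmf(x, 2, 4, 5), 3))
```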

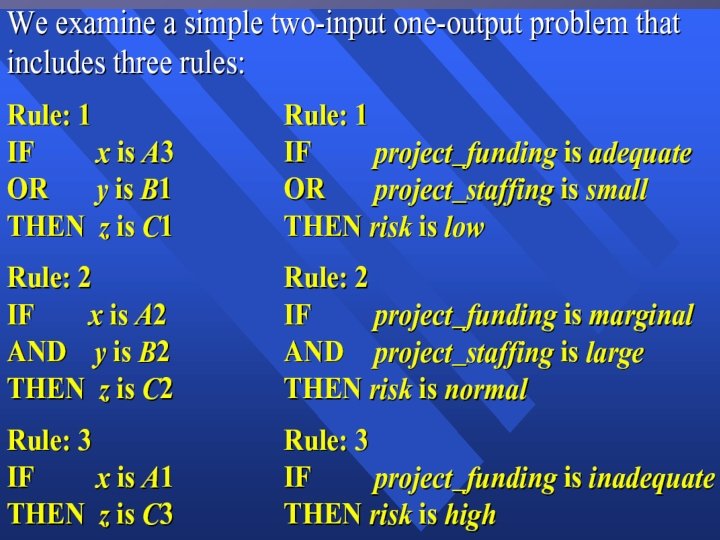

Fuzzy If-Then Rules
- General format: If x is A then y is B.
- Examples:
  - If pressure is high, then volume is small.
  - If the road is slippery, then driving is dangerous.
  - If a tomato is red, then it is ripe.
  - If the speed is high, then apply the brake a little.
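The following sketch shows how a single rule, "If the speed is high, then apply the brake a little," could be evaluated. The membership functions and their units are hypothetical. The slides do not specify two further choices made here: clipping the consequent with min (Mamdani-style implication) and taking the centroid to turn the result into one brake value. Both are common options.

```python
def ramp_up(x, a, b):
    """0 below a, rising linearly to 1 at b."""
    return min(max((x - a) / (b - a), 0.0), 1.0)


def triangle(x, a, b, c):
    return max(min((x - a) / (b - a), (c - x) / (c - b)), 0.0)


def speed_is_high(speed_kmh):
    # Hypothetical antecedent MF: "high" from 60 to 100 km/h.
    return ramp_up(speed_kmh, 60.0, 100.0)


def brake_a_little(pressure):
    # Hypothetical consequent MF on a brake-pressure scale of [0, 1].
    return triangle(pressure, 0.0, 0.2, 0.4)


def apply_rule(speed_kmh, steps=1000):
    """Return (firing strength, centroid of the clipped consequent)."""
    strength = speed_is_high(speed_kmh)  # degree to which "speed is high" holds
    ys = [i / steps for i in range(steps + 1)]
    clipped = [min(strength, brake_a_little(y)) for y in ys]  # min implication
    total = sum(clipped)
    if total == 0.0:
        return strength, 0.0  # rule does not fire
    centroid = sum(y * m for y, m in zip(ys, clipped)) / total
    return strength, centroid


print(apply_rule(80))  # strength 0.5; centroid close to 0.2
```

Because "a little" is symmetric around 0.2 in this sketch, the defuzzified brake value stays near 0.2 whenever the rule fires. The speed changes only how strongly the rule fires.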

Classification and Prediction
- Fuzzy
- Support Vector Machine

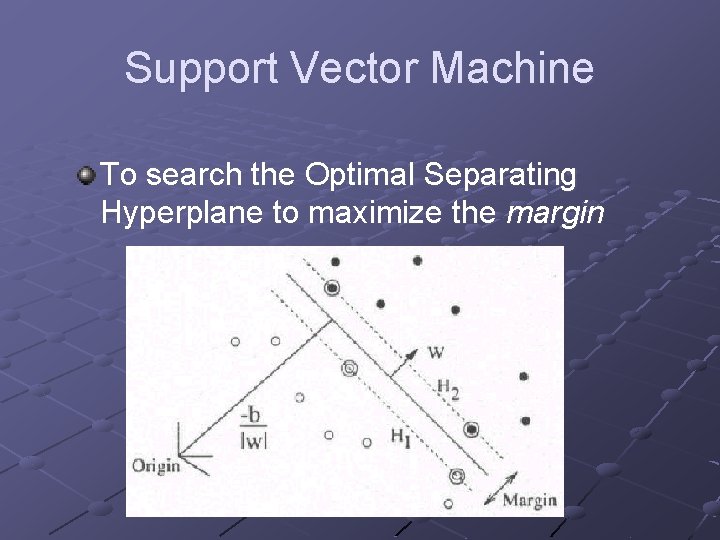

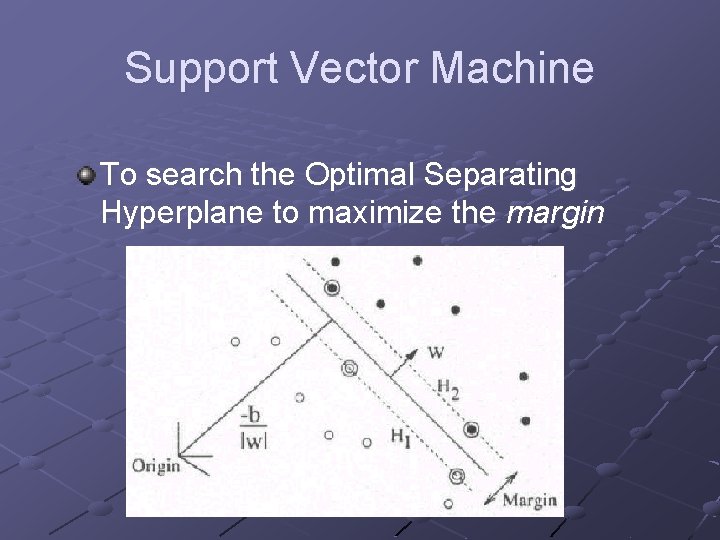

Support Vector Machine

Goal: search for the optimal separating hyperplane, i.e., the hyperplane that maximizes the margin between the two classes.
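The margin of a candidate hyperplane $w \cdot x + b = 0$ can be measured as the smallest signed distance $y_i (w \cdot x_i + b) / \lVert w \rVert$ from any training point. The four points and both hyperplanes below are made up. For this toy data, the second hyperplane, which lies midway between the closest points of each class, is the maximum-margin one. The sketch only compares given hyperplanes and does not search for the optimum.

```python
import math


def geometric_margin(w, b, points, labels):
    """Smallest y_i * (w . x_i + b) / ||w|| over the data.

    The result is positive only if the hyperplane separates the two classes.
    """
    norm = math.sqrt(sum(wj * wj for wj in w))
    return min(
        y * (sum(wj * xj for wj, xj in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    )


X = [(2.0, 0.0), (3.0, 1.0), (-1.0, 0.0), (-2.0, 5.0)]
Y = [+1, +1, -1, -1]

print(geometric_margin((1.0, 0.0), 0.0, X, Y))   # hyperplane x1 = 0:   1.0
print(geometric_margin((1.0, 0.0), -0.5, X, Y))  # hyperplane x1 = 0.5: 1.5
```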

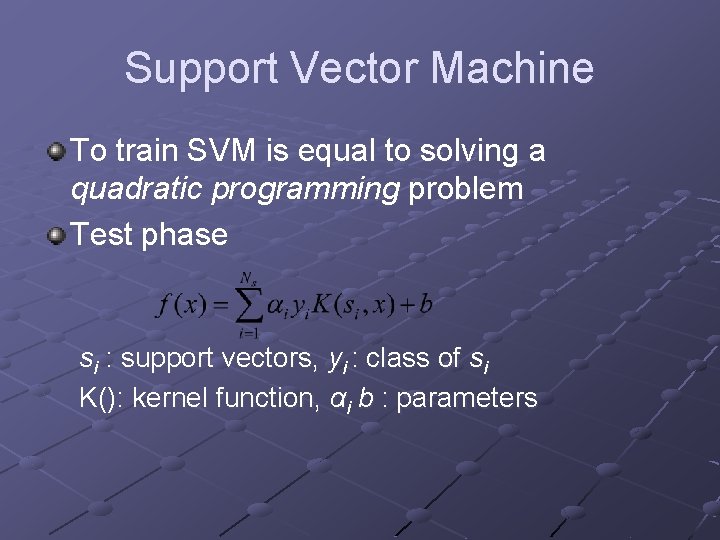

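Support Vector Machine

Training an SVM is equivalent to solving a quadratic programming problem.

Test phase:

$$f(x) = \operatorname{sgn}\Big(\sum_i \alpha_i y_i K(s_i, x) + b\Big)$$

where $s_i$ are the support vectors, $y_i$ is the class of $s_i$, $K(\cdot, \cdot)$ is the kernel function, and $\alpha_i$ and $b$ are parameters.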
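The test-phase decision function can be sketched in Python as follows. The support vectors, $\alpha_i$, and $b$ are hypothetical stand-ins for the output of the QP solver. For these two points they happen to be the hard-margin solution with a linear kernel. The RBF kernel with `gamma=0.5` is an assumed alternative.

```python
import math


def linear_kernel(u, v):
    return sum(a * b for a, b in zip(u, v))


def rbf_kernel(u, v, gamma=0.5):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def svm_decision(x, support_vectors, classes, alphas, b, kernel=linear_kernel):
    """Sign of sum_i alpha_i * y_i * K(s_i, x) + b."""
    score = sum(a * y * kernel(s, x)
                for s, y, a in zip(support_vectors, classes, alphas)) + b
    return 1 if score >= 0 else -1


# Hypothetical "trained" parameters.
S = [(1.0, 1.0), (-1.0, -1.0)]
Y = [+1, -1]
ALPHA = [0.25, 0.25]
B = 0.0

print(svm_decision((2.0, 0.0), S, Y, ALPHA, B))                     # 1
print(svm_decision((-3.0, 1.0), S, Y, ALPHA, B))                    # -1
print(svm_decision((0.9, 1.2), S, Y, ALPHA, B, kernel=rbf_kernel))  # 1
```

Only the support vectors enter the sum, so prediction cost grows with the number of support vectors, not with the size of the training set.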

Support Vector Machine: Kernel Function
- $K(x, y) = \phi(x) \cdot \phi(y)$
  - x and y are vectors in the input space.
  - $\phi(x)$ and $\phi(y)$ are vectors in the feature space.
  - d(feature space) ≫ d(input space)
  - There is no need to compute $\phi(x)$ explicitly.
- Tree kernel: $\mathrm{Tr}(x, y) = \mathrm{sub}(x) \cdot \mathrm{sub}(y)$, where $\mathrm{sub}(x)$ is a vector representing all the subtrees of x.

www.csie.ntu.edu.tw/~cjlin
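The kernel trick can be checked numerically for the degree-2 polynomial kernel $K(x, y) = (x \cdot y)^2$ on 2-D inputs. Its explicit feature map is $\phi(x) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$. This particular kernel is an illustration chosen here; the slide does not name one.

```python
import math


def phi(x):
    """Explicit feature map for 2-D input: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def poly2_kernel(x, y):
    """K(x, y) = (x . y)^2, computed entirely in the 2-D input space."""
    return dot(x, y) ** 2


x, y = (1.0, 2.0), (3.0, -1.0)
print(poly2_kernel(x, y))    # (3 - 2)^2 = 1.0
print(dot(phi(x), phi(y)))   # the same value (up to rounding), via the 3-D feature space
```

The kernel needs one 2-D dot product, while the explicit route builds 3-D vectors first. For higher degrees and input dimensions, the feature space grows combinatorially but the kernel cost does not.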

Classification and Prediction
- Fuzzy
- Support Vector Machine
- Prediction

What Is Prediction?
- Prediction is similar to classification:
  - First, construct a model.
  - Second, use the model to predict unknown values.
- The major method for prediction is regression:
  - Linear and multiple regression
  - Non-linear regression
- Prediction is different from classification:
  - Classification predicts categorical class labels.
  - Prediction models continuous-valued functions.

Regression Analysis and Log-Linear Models in Prediction
- Linear regression: $Y = \alpha + \beta X$
  - The two parameters, $\alpha$ and $\beta$, specify the line and are estimated from the data at hand,
  - by applying the least-squares criterion to the known values $Y_1, Y_2, \ldots$ and $X_1, X_2, \ldots$.
- Multiple regression: $Y = b_0 + b_1 X_1 + b_2 X_2$
  - Many nonlinear functions can be transformed into the above.
- Log-linear models:
  - The multi-way table of joint probabilities is approximated by a product of lower-order tables.
  - Probability: $p(a, b, c, d) = \alpha_{ab}\,\beta_{ac}\,\chi_{ad}\,\delta_{bcd}$
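A minimal least-squares fit of $\alpha$ and $\beta$ in plain Python uses the closed-form estimates $\beta = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $\alpha = \bar{y} - \beta \bar{x}$. The data are made up. The second half shows one example of transforming a nonlinear function (an exponential) into the linear form.

```python
import math


def fit_line(xs, ys):
    """Least-squares estimates (alpha, beta) for Y = alpha + beta * X."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    beta = sxy / sxx
    alpha = y_mean - beta * x_mean
    return alpha, beta


alpha, beta = fit_line([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(alpha, beta)  # approximately 2.2 and 0.6

# Y = a * exp(b X) becomes linear after taking logs: ln Y = ln a + b X.
xs = [0, 1, 2, 3, 4]
ys = [2.0 * math.exp(0.3 * x) for x in xs]
ln_a, b = fit_line(xs, [math.log(y) for y in ys])
print(math.exp(ln_a), b)  # approximately 2.0 and 0.3
```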

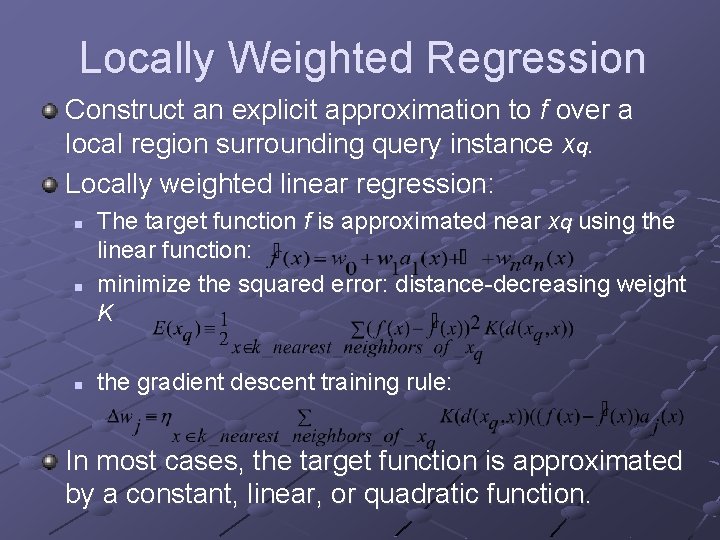

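Locally Weighted Regression
- Construct an explicit approximation to f over a local region surrounding the query instance $x_q$.
- Locally weighted linear regression:
  - The target function f is approximated near $x_q$ using the linear function $\hat{f}(x) = w_0 + w_1 a_1(x) + \cdots + w_n a_n(x)$, where $a_j(x)$ is the j-th attribute of x.
  - Minimize the squared error, weighted by a distance-decreasing kernel K:
  $$E(x_q) = \frac{1}{2} \sum_{x \in k\text{ nearest neighbors of } x_q} \big(f(x) - \hat{f}(x)\big)^2 \, K\big(d(x_q, x)\big)$$
  - Gradient descent training rule:
  $$\Delta w_j = \eta \sum_{x \in k\text{ nearest neighbors of } x_q} K\big(d(x_q, x)\big) \big(f(x) - \hat{f}(x)\big) \, a_j(x)$$
- In most cases, the target function is approximated by a constant, linear, or quadratic function.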
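Here is a one-dimensional sketch of locally weighted linear regression. It deviates from the slide in two ways, both assumptions. First, a Gaussian kernel $K(d) = \exp(-d^2 / (2\tau^2))$ weights all training points rather than only the k nearest neighbours. Second, the weighted squared error is minimized in closed form (weighted least squares) rather than with the gradient descent rule; the closed form reaches the minimizer that the gradient rule converges to for this error function.

```python
import math


def lwr_predict(xq, xs, ys, tau=1.0):
    """Locally weighted linear regression at query point xq (1-D input)."""
    # Distance-decreasing weights around the query point.
    k = [math.exp(-((x - xq) ** 2) / (2 * tau ** 2)) for x in xs]
    total = sum(k)
    x_bar = sum(ki * x for ki, x in zip(k, xs)) / total
    y_bar = sum(ki * y for ki, y in zip(k, ys)) / total
    sxx = sum(ki * (x - x_bar) ** 2 for ki, x in zip(k, xs))
    sxy = sum(ki * (x - x_bar) * (y - y_bar) for ki, x, y in zip(k, xs, ys))
    w1 = sxy / sxx              # local slope
    w0 = y_bar - w1 * x_bar     # local intercept
    return w0 + w1 * xq         # evaluate the local line at the query


xs = [i * 0.1 for i in range(51)]
ys = [x * x for x in xs]
print(lwr_predict(2.0, xs, ys, tau=0.2))  # close to 2.0 ** 2
```

A new local line is fitted for every query, so the model adapts to curvature (here $y = x^2$) that a single global line would miss.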

Classification and Prediction
- Fuzzy
- Support Vector Machine
- Prediction
- Classification accuracy

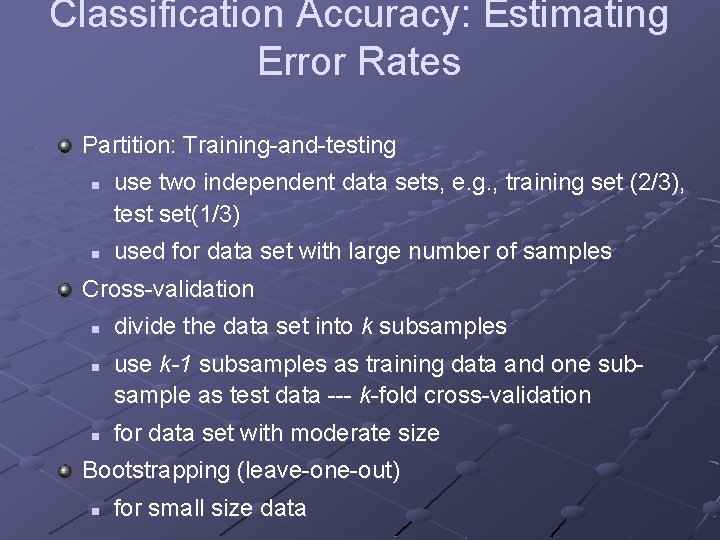

Classification Accuracy: Estimating Error Rates
- Partition: training-and-testing
  - Use two independent data sets, e.g., a training set (2/3) and a test set (1/3).
  - Used for data sets with a large number of samples.
- Cross-validation
  - Divide the data set into k subsamples.
  - Use k-1 subsamples as training data and one subsample as test data (k-fold cross-validation).
  - Used for data sets of moderate size.
- Bootstrapping (sampling the training set with replacement) and leave-one-out (k-fold cross-validation with k equal to the number of samples)
  - Used for small data sets.
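A sketch of the resampling schemes in Python, using only the standard library. The function names and the fixed `seed` are hypothetical. Leave-one-out is obtained by setting k to the number of samples.

```python
import random


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal subsamples
    for i in range(k):
        test = sorted(folds[i])
        # The remaining k-1 subsamples form the training data.
        train = sorted(j for m, fold in enumerate(folds) if m != i for j in fold)
        yield train, test


def bootstrap_split(n_samples, seed=0):
    """Draw n training indices with replacement; unseen samples form the test set."""
    rng = random.Random(seed)
    train = [rng.randrange(n_samples) for _ in range(n_samples)]
    test = sorted(set(range(n_samples)) - set(train))
    return train, test


for train, test in k_fold_splits(6, 3):
    print("train", train, "test", test)
```

Every sample is used for testing exactly once in k-fold cross-validation. In a bootstrap split, some samples appear several times in the training set and others land in the test set.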

Boosting and Bagging
- Boosting increases classification accuracy.
  - Applicable to decision trees or Bayesian classifiers.
- Learn a series of classifiers, where each classifier in the series pays more attention to the examples misclassified by its predecessor.
- Boosting requires only linear time and constant space.

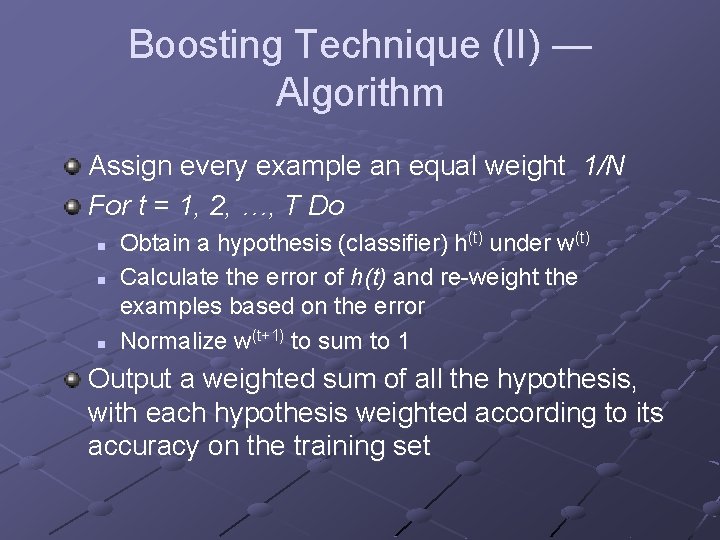

Boosting Technique (II): Algorithm
1. Assign every example an equal weight 1/N.
2. For t = 1, 2, …, T:
   - Obtain a hypothesis (classifier) h(t) under the weights w(t).
   - Calculate the error of h(t) and re-weight the examples based on the error.
   - Normalize w(t+1) to sum to 1.
3. Output a weighted sum of all the hypotheses, with each hypothesis weighted according to its accuracy on the training set.
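A compact sketch of the boosting algorithm, instantiated as AdaBoost with one-dimensional decision stumps. The slides describe the loop only in general terms. AdaBoost's particular vote weight, $\alpha = \frac{1}{2}\ln\big((1 - \varepsilon)/\varepsilon\big)$, and the stump learner are therefore assumptions. Labels are assumed to be +1/-1.

```python
import math


def stump_predict(x, threshold, polarity):
    """Decision stump: predict `polarity` when x > threshold, else -polarity."""
    return polarity if x > threshold else -polarity


def best_stump(xs, ys, w):
    """Return (weighted error, threshold, polarity) of the lowest-error stump."""
    values = sorted(set(xs))
    thresholds = ([values[0] - 1]
                  + [(a + b) / 2 for a, b in zip(values, values[1:])]
                  + [values[-1] + 1])
    best = None
    for t in thresholds:
        for p in (+1, -1):
            err = sum(wi for xi, yi, wi in zip(xs, ys, w)
                      if stump_predict(xi, t, p) != yi)
            if best is None or err < best[0]:
                best = (err, t, p)
    return best


def adaboost(xs, ys, rounds):
    n = len(xs)
    w = [1.0 / n] * n                       # every example starts at weight 1/N
    model = []                              # list of (alpha, threshold, polarity)
    for _ in range(rounds):
        err, t, p = best_stump(xs, ys, w)   # hypothesis h(t) under weights w(t)
        err = min(max(err, 1e-10), 1 - 1e-10)     # avoid log(0) for perfect stumps
        alpha = 0.5 * math.log((1 - err) / err)   # more accurate -> larger vote
        model.append((alpha, t, p))
        # Raise weights of misclassified examples, lower the rest.
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, t, p))
             for xi, yi, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]        # normalize w(t+1) to sum to 1
    return model


def predict(model, x):
    """Sign of the alpha-weighted sum of all stump outputs."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in model)
    return 1 if score >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [+1, +1, -1, -1, +1, +1]   # no single stump separates these labels
model = adaboost(xs, ys, rounds=5)
print([predict(model, x) for x in xs])
```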

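Is Accuracy Enough to Judge?
- Sensitivity: t_pos / pos
- Specificity: t_neg / neg
- Precision: t_pos / (t_pos + f_pos)

Here t_pos and t_neg are the numbers of correctly classified positive and negative samples, f_pos is the number of negative samples incorrectly classified as positive, and pos and neg are the total numbers of positive and negative samples.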
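This sketch computes the three measures from label lists, with plain accuracy included for comparison. The helper names are hypothetical. The usage example uses made-up data to show why accuracy alone can mislead: with 2 positives among 20 samples, a classifier that finds neither of them is still 85% accurate.

```python
def ratio(num, den):
    """num / den, or NaN when the denominator is zero (measure undefined)."""
    return num / den if den else float("nan")


def evaluate(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    t_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    f_pos = sum(1 for t, p in pairs if t != positive and p == positive)
    t_neg = sum(1 for t, p in pairs if t != positive and p != positive)
    pos = sum(1 for t in y_true if t == positive)
    neg = len(y_true) - pos
    return {
        "sensitivity": ratio(t_pos, pos),
        "specificity": ratio(t_neg, neg),
        "precision": ratio(t_pos, t_pos + f_pos),
        "accuracy": ratio(t_pos + t_neg, pos + neg),
    }


y_true = [1, 1] + [0] * 18
y_pred = [0, 0, 1] + [0] * 17
print(evaluate(y_true, y_pred))
```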

Classification and Prediction
- Decision tree
- Bayesian classification
- ANN
- KNN
- GA
- Fuzzy
- SVM
- Prediction
- Some issues

Summary
- Classification is an extensively studied problem (mainly in statistics, machine learning, and neural networks).
- Classification is probably one of the most widely used data mining techniques, with many extensions.
- Scalability is still an important issue for database applications, so combining classification with database techniques is a promising topic.
- Research directions: classification of non-relational data, e.g., text, spatial, and multimedia data.
