Evolving Heuristics for Searching Games


- Slides: 29

Evolving Heuristics for Searching Games

- Evolutionary Computation and Artificial Life
- Supervisor: Moshe Sipper
- Achiya Elyasaf
- June 2010

Overview

- Searching Games State-Graphs
  - Representation
  - Uninformed Search
  - Heuristics
  - Informed Search
- Rush Hour
  - Domain Specific Heuristic
  - Evolving Heuristics
  - Coevolving Game Boards
  - Results
- Freecell
  - Domain Specific Heuristic
  - Coevolving Game Boards
  - Learning Methods
  - Results

Searching Games State-Graphs: Representation

Every puzzle or game can be represented as a state graph:
- Single-player games such as puzzles and board games: every piece move can be counted as a different state.
- Multi-player games such as chess and Robocode: the position of the player and of the enemy, together with the rest of the parameters (health, shield, …), define a state.

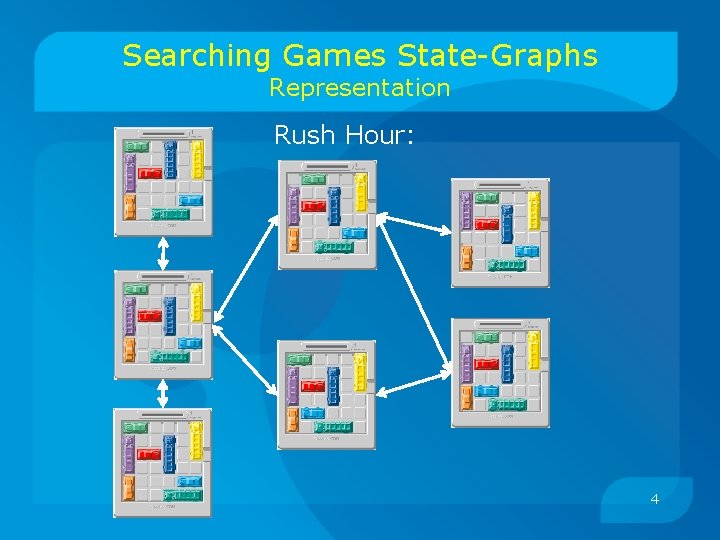

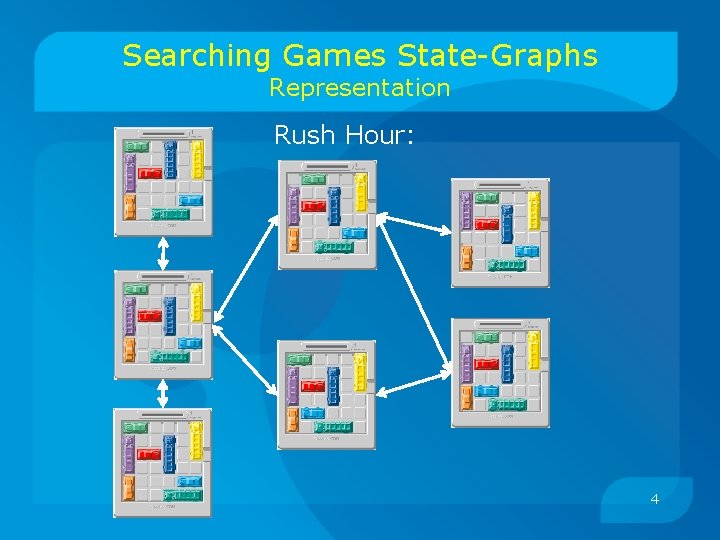

Searching Games State-Graphs: Representation. Rush Hour:

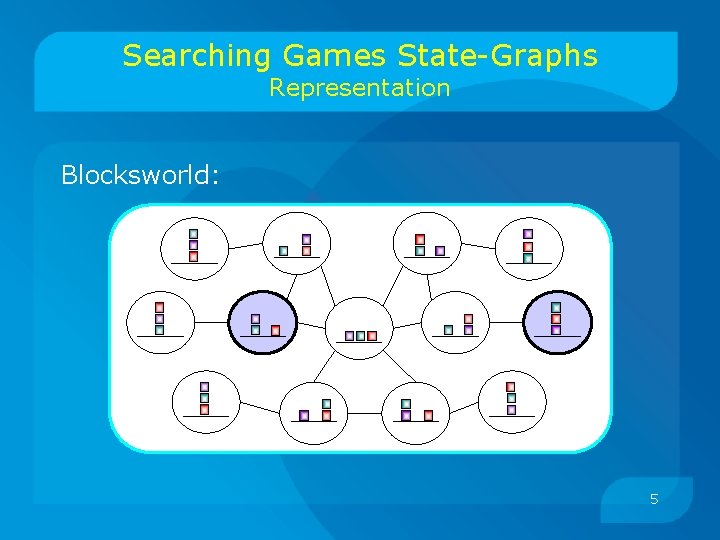

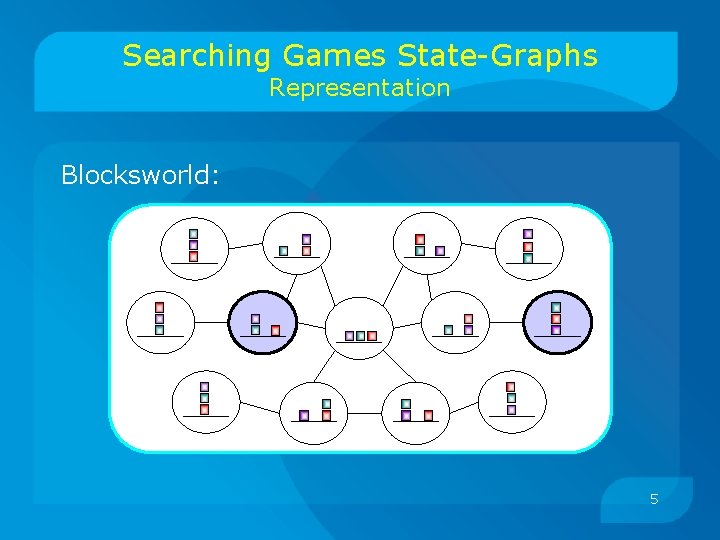

Searching Games State-Graphs: Representation. Blocksworld:

Searching Games State-Graphs: Uninformed Search

- BFS: exponential in the search depth.
- DFS: linear in the length of the current search path, but:
  - We might “never” track down the right path.
  - Games usually contain cycles.
- Iterative deepening: a combination of BFS and DFS.
  - Each iteration performs a DFS with a depth limit.
  - The limit grows from one iteration to the next.
  - Worst case: traverse the entire graph.
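
The iterative-deepening idea above can be sketched in a few lines of Python. This is a minimal illustration, not code from the talk: the names `is_goal`, `successors` and `max_depth` are assumptions, and cycles are handled simply by refusing to revisit a state that is already on the current path.

```python
def depth_limited_dfs(state, is_goal, successors, limit, path):
    """DFS that gives up below `limit` moves; `path` is the current search path."""
    if is_goal(state):
        return list(path)
    if limit == 0:
        return None
    for nxt in successors(state):
        if nxt in path:  # games usually contain cycles: skip states on the path
            continue
        path.append(nxt)
        found = depth_limited_dfs(nxt, is_goal, successors, limit - 1, path)
        path.pop()
        if found is not None:
            return found
    return None


def iterative_deepening(start, is_goal, successors, max_depth=50):
    """Run depth-limited DFS with a limit that grows by one each iteration."""
    for limit in range(max_depth + 1):
        found = depth_limited_dfs(start, is_goal, successors, limit, [start])
        if found is not None:
            return found
    return None  # nothing found within max_depth (hypothetical cut-off)


# Toy state graph with a cycle between A and B.
graph = {"A": ["B", "C"], "B": ["A", "D"], "C": ["D"], "D": ["E"], "E": []}
path = iterative_deepening("A", lambda s: s == "E", lambda s: graph[s])
```

Memory stays proportional to the length of the current path, as with DFS, while the growing limit guarantees that a shallowest solution is found first, as with BFS.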

Searching Games State-Graphs: Uninformed Search

- Most game domains are PSPACE-complete!
- Worst case: traverse the entire graph.
- We need an informed search!

Searching Games State-Graphs: Heuristics

h: states -> Real
- For every state s, h(s) is an estimate of the minimal distance/cost from s to a solution.
- If h is perfect, an informed search that tries the states with the best h-score (lowest estimated distance) first will simply stroll to the solution.
- A bad heuristic means the search might never reach an answer.
- For hard problems, finding h is hard.

We need a good heuristic function to guide the informed search.
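
To make the role of h concrete, here is a small greedy best-first search in Python that always expands the state with the lowest h value. It is a sketch under assumptions: the function names and the toy number-line domain are invented for illustration and do not come from the slides.

```python
import heapq
from itertools import count


def best_first_search(start, is_goal, successors, h):
    """Greedy best-first search: always expand the state with the smallest h."""
    tie = count()  # tie-breaker so the heap never has to compare states
    frontier = [(h(start), next(tie), start, [start])]
    seen = {start}
    expanded = 0
    while frontier:
        _, _, state, path = heapq.heappop(frontier)
        expanded += 1
        if is_goal(state):
            return path, expanded
        for nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (h(nxt), next(tie), nxt, path + [nxt]))
    return None, expanded


# Toy domain: walk along the integers from 0 to 5; h is the exact distance.
GOAL = 5
path, expanded = best_first_search(
    0,
    lambda s: s == GOAL,
    lambda s: [s - 1, s + 1],
    lambda s: abs(GOAL - s),
)
```

With the exact distance as h, the search expands only the states on the solution path. Replacing h with a constant turns the same code into an uninformed search that wanders in both directions.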

Searching Games State-Graphs: Informed Search (Cont.)

IDA*: Iterative Deepening with A*
- The expanded nodes are pushed onto the DFS stack in descending order of heuristic value.
- Let g(s_i) be the minimal depth of state s_i: only nodes with f(s) = g(s) + h(s) < depth-limit are visited.
- Near-optimal solution (depends on the path limit).
- The heuristic needs to be admissible.
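
A compact Python sketch of IDA* follows. It uses the common textbook formulation, which may differ in detail from the enhanced variant used in this work: nodes with f(s) = g(s) + h(s) above the current bound are cut off, and the next bound is the smallest f value that exceeded the previous one. The grid domain and all function names are assumptions made for the example.

```python
import math


def ida_star(start, is_goal, successors, h):
    """IDA*: depth-first search bounded by f = g + h, with a growing bound.

    `successors(state)` yields (next_state, step_cost) pairs.
    """

    def search(path, g, bound):
        state = path[-1]
        f = g + h(state)
        if f > bound:
            return f, None  # cut off; report f so the bound can grow to it
        if is_goal(state):
            return f, list(path)
        smallest = math.inf
        # Try the most promising children (lowest h) first.
        for nxt, cost in sorted(successors(state), key=lambda sc: h(sc[0])):
            if nxt in path:  # avoid cycles along the current path
                continue
            path.append(nxt)
            t, found = search(path, g + cost, bound)
            path.pop()
            if found is not None:
                return t, found
            smallest = min(smallest, t)
        return smallest, None

    bound = h(start)
    while True:
        t, found = search([start], 0, bound)
        if found is not None:
            return found
        if t == math.inf:
            return None  # the whole reachable graph was explored
        bound = t


# Toy domain: 4x4 grid, unit-cost moves, Manhattan distance as admissible h.
SIZE, TARGET = 4, (3, 3)


def grid_moves(cell):
    x, y = cell
    for dx, dy in ((1, 0), (0, 1), (-1, 0), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < SIZE and 0 <= ny < SIZE:
            yield (nx, ny), 1


def manhattan(cell):
    return abs(TARGET[0] - cell[0]) + abs(TARGET[1] - cell[1])


route = ida_star((0, 0), lambda c: c == TARGET, grid_moves, manhattan)
```

Because the Manhattan distance never overestimates the remaining cost here, the first solution found is a shortest one.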


![Rush Hour Domain Specific Heuristic: GP-Rush (Hauptman et al., 2009), hand-crafted heuristics](https://slidetodoc.com/presentation_image_h2/af02205782b9828cfc390c23d0637dd8/image-11.jpg)

Rush Hour: Domain Specific Heuristic

GP-Rush [Hauptman et al., 2009]. Hand-crafted heuristics:
- Goal distance: Manhattan distance
- Blocker estimation: lower bound (admissible)
- Hybrid blockers distance: combines the two above
- Is Move To Secluded: did the car enter a secluded area?
- Is Releasing Move
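
Two of these heuristics can be illustrated on a toy board. The sketch below is a simplification and not the GP-Rush implementation: the board encoding (a list of strings, `.` for empty cells, a horizontal target car `X` that exits on the right) is an assumption, and the blocker estimate only counts the distinct vehicles standing between the target car and the exit.

```python
def goal_distance(board, target="X"):
    """Number of cells between the front of the target car and the exit."""
    row = next(r for r in board if target in r)
    return len(row) - 1 - row.rindex(target)


def blockers_lower_bound(board, target="X", empty="."):
    """Distinct vehicles between the target car and the exit.

    Each of them has to move at least once, so this count never
    overestimates the number of moves still needed.
    """
    row = next(r for r in board if target in r)
    right_of_target = row[row.rindex(target) + 1:]
    return len(set(right_of_target) - {empty})


# Hypothetical 6x6 position; the exit is at the right end of the X row.
board = [
    "AA...B",
    "C..D.B",
    "CXXD.B",
    "C..D..",
    "E...FF",
    "E.GGG.",
]
distance = goal_distance(board)
blockers = blockers_lower_bound(board)
```

In this position the target car is three cells from the exit and two vehicles (`D` and `B`) stand in its way.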

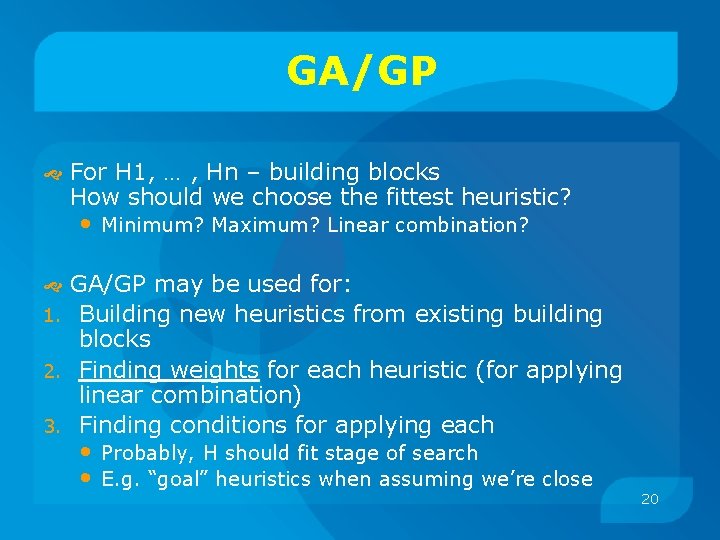

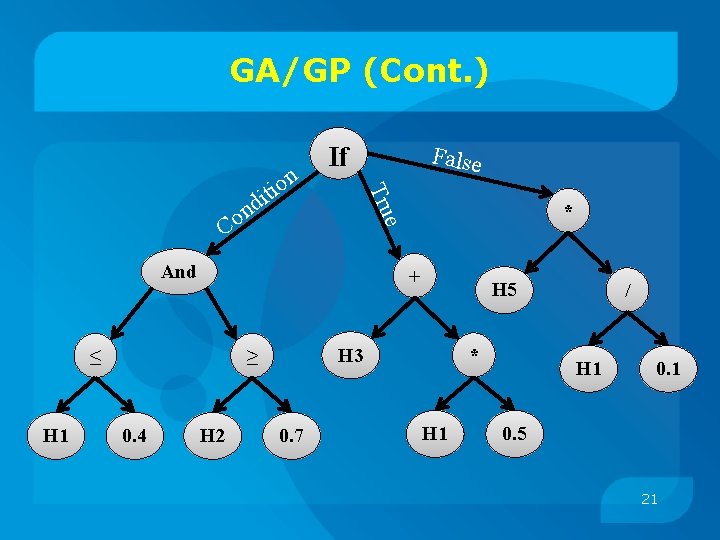

GA/GP

For H1, …, Hn (building blocks): how should we choose the fittest heuristic?
- Minimum? Maximum? Linear combination?

GA/GP may be used for:
1. Building new heuristics from existing building blocks
2. Finding weights for each heuristic (for applying a linear combination)
3. Finding conditions for applying each heuristic
   - Probably, H should fit the stage of the search
   - E.g. “goal” heuristics when assuming we’re close
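
The three simplest ways of combining building blocks H1, …, Hn can be written as higher-order functions. This is only an illustration of the options listed above; the helper names and the toy heuristics are hypothetical, and in a GA the weight vector would be the genome being evolved.

```python
def linear_combination(heuristics, weights):
    """h(s) = w1*H1(s) + ... + wn*Hn(s); a GA would evolve the weights."""
    def h(state):
        return sum(w * hi(state) for w, hi in zip(weights, heuristics))
    return h


def minimum_of(heuristics):
    """h(s) = min(H1(s), ..., Hn(s))."""
    return lambda state: min(hi(state) for hi in heuristics)


def maximum_of(heuristics):
    """h(s) = max(H1(s), ..., Hn(s))."""
    return lambda state: max(hi(state) for hi in heuristics)


# Two toy building blocks over integer states.
h1 = lambda s: s
h2 = lambda s: 2 * s

weighted = linear_combination([h1, h2], [0.5, 0.25])
lowest = minimum_of([h1, h2])
highest = maximum_of([h1, h2])
```

For state 4, `weighted(4)` is 0.5·4 + 0.25·8, `lowest(4)` is 4 and `highest(4)` is 8.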

GA/GP (Cont.)

[Figure: example GP trees with the nodes And, +, ≤, ≥, *, / and If (Condition, True, False), the terminals H1, H2, H3, H5 and the constants 0.4, 0.5, 0.7]

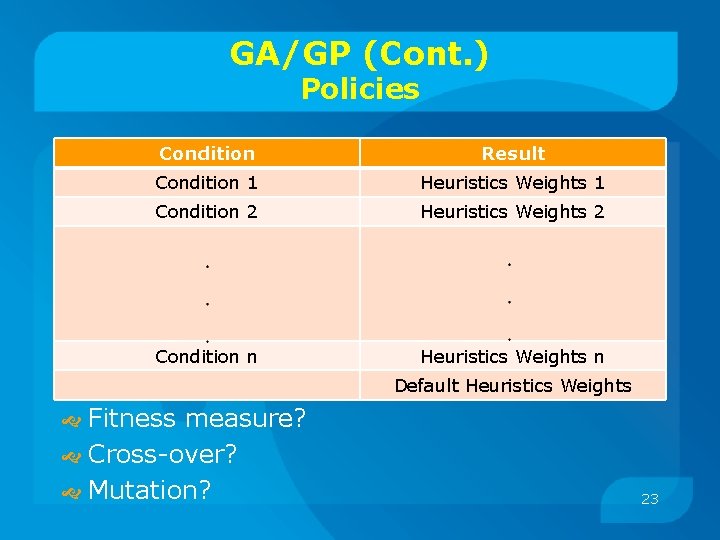

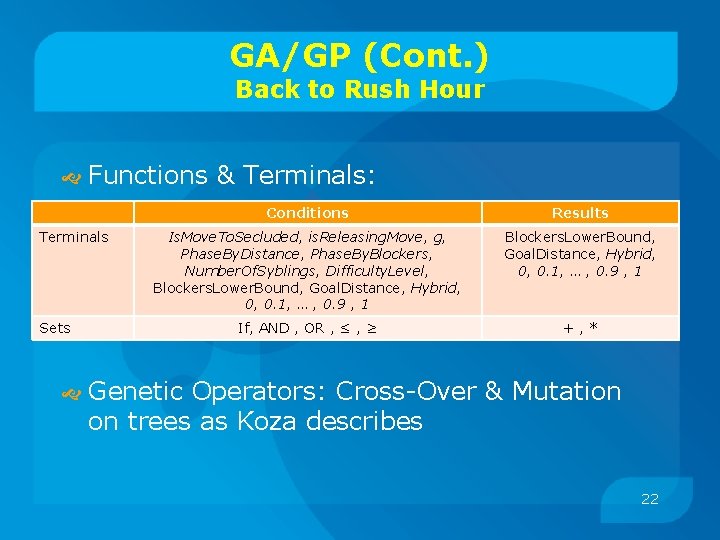

GA/GP (Cont.): Back to Rush Hour

Functions & Terminals:
- Terminals: IsMoveToSecluded, isReleasingMove, g, PhaseByDistance, PhaseByBlockers, NumberOfSyblings, DifficultyLevel, BlockersLowerBound, GoalDistance, Hybrid, 0, 0.1, …, 0.9, 1
- Function set for conditions: If, AND, OR, ≤, ≥
- Function set for results: +, *

Genetic operators: crossover and mutation on trees, as described by Koza.
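
A GP individual built from these functions and terminals is an expression tree that is evaluated on the current search state. The sketch below shows one possible encoding (nested tuples) and evaluator; the encoding, the `"<="`/`">="` spellings of ≤ and ≥, and the example terminal values are assumptions for illustration only.

```python
def evaluate(node, terminals):
    """Evaluate a GP tree given the terminal values for one search state.

    A node is a number, a terminal name (str), or a tuple (op, arg, ...).
    """
    if isinstance(node, (int, float)):
        return node
    if isinstance(node, str):
        return terminals[node]
    op, *args = node
    if op == "If":
        condition, if_true, if_false = args
        chosen = if_true if evaluate(condition, terminals) else if_false
        return evaluate(chosen, terminals)
    left, right = (evaluate(arg, terminals) for arg in args)
    if op == "AND":
        return bool(left) and bool(right)
    if op == "OR":
        return bool(left) or bool(right)
    if op == "<=":
        return left <= right
    if op == ">=":
        return left >= right
    if op == "+":
        return left + right
    if op == "*":
        return left * right
    raise ValueError(f"unknown function: {op}")


# Hypothetical individual: early in the search, on a releasing move, use a
# weighted sum of two heuristics; otherwise fall back to Hybrid.
tree = (
    "If",
    ("AND", ("<=", "g", 5), "isReleasingMove"),
    ("+", ("*", 0.5, "GoalDistance"), "BlockersLowerBound"),
    "Hybrid",
)
state_values = {
    "g": 3,
    "isReleasingMove": True,
    "GoalDistance": 4,
    "BlockersLowerBound": 2,
    "Hybrid": 7,
}
score = evaluate(tree, state_values)
```

Crossover and mutation then operate on these nested tuples by swapping or replacing subtrees.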

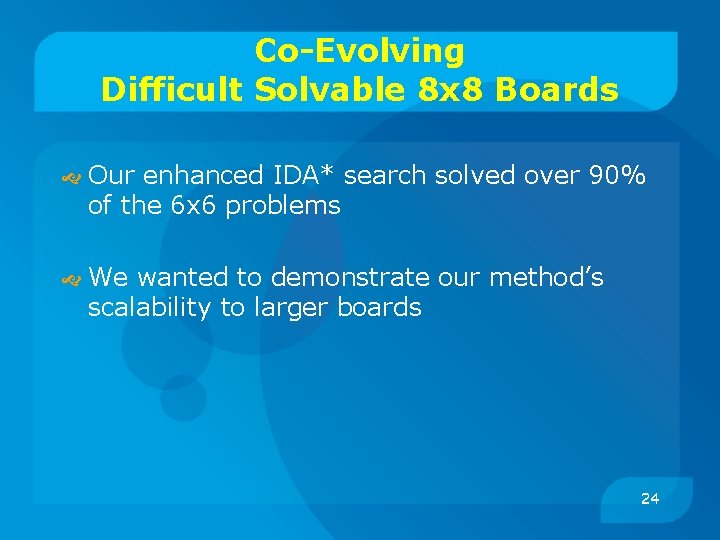

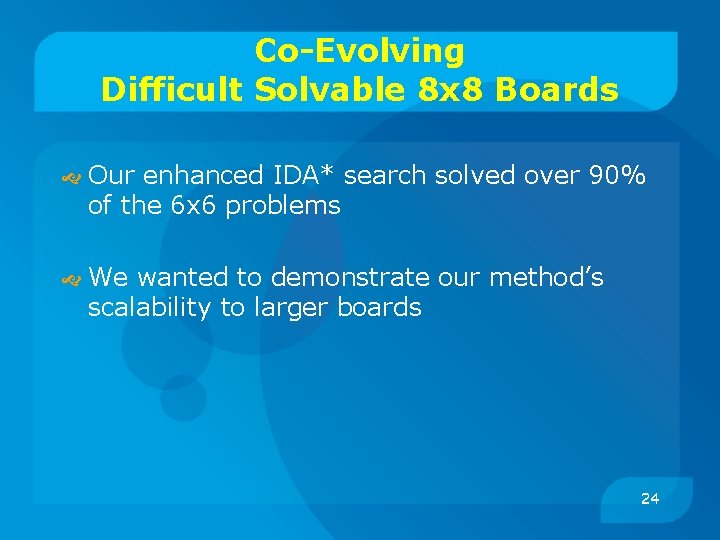

GA/GP (Cont.): Policies

| Condition | Result |
| --- | --- |
| Condition 1 | Heuristics Weights 1 |
| Condition 2 | Heuristics Weights 2 |
| … | … |
| Condition n | Heuristics Weights n |
| Default | Heuristics Weights |

Fitness measure? Crossover? Mutation?
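
One way to read such a policy is as an ordered rule list: the first condition that holds selects the weight vector, and the default weights apply when none does. The Python sketch below follows that reading; the first-match semantics, the state fields and all names are assumptions, since the slide does not spell them out.

```python
def policy_heuristic(rules, default_weights, heuristics):
    """Build h(s) from an ordered list of (condition, weights) rules."""
    def h(state):
        weights = default_weights
        for condition, rule_weights in rules:
            if condition(state):  # first matching rule wins (assumed semantics)
                weights = rule_weights
                break
        return sum(w * hi(state) for w, hi in zip(weights, heuristics))
    return h


# Hypothetical state: a dict holding the search depth and two heuristic inputs.
heuristics = [lambda s: s["goal"], lambda s: s["blockers"]]
rules = [
    (lambda s: s["g"] < 5, [1.0, 0.0]),         # Condition 1 -> Weights 1
    (lambda s: s["blockers"] > 2, [0.0, 2.0]),  # Condition 2 -> Weights 2
]
h = policy_heuristic(rules, default_weights=[0.5, 0.5], heuristics=heuristics)

early = h({"g": 3, "goal": 4, "blockers": 3})
blocked = h({"g": 10, "goal": 4, "blockers": 3})
fallback = h({"g": 10, "goal": 4, "blockers": 1})
```

Here the three calls exercise the first rule, the second rule and the default row, respectively.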

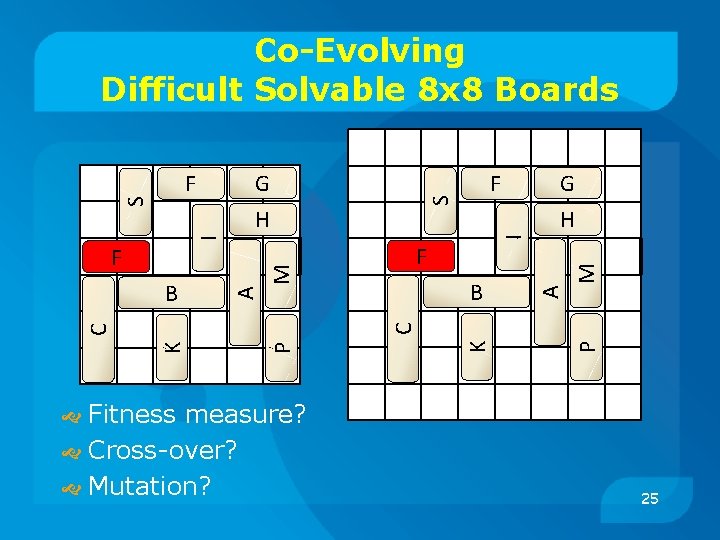

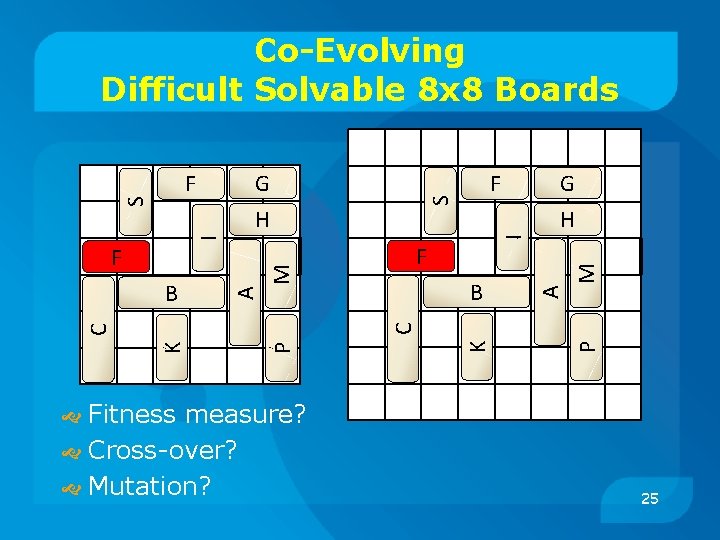

Co-Evolving Difficult Solvable 8x8 Boards

- Our enhanced IDA* search solved over 90% of the 6x6 problems.
- We wanted to demonstrate our method’s scalability to larger boards.

Co-Evolving Difficult Solvable 8x8 Boards

[Figure: example 8x8 boards with lettered vehicles]

Fitness measure? Crossover? Mutation?

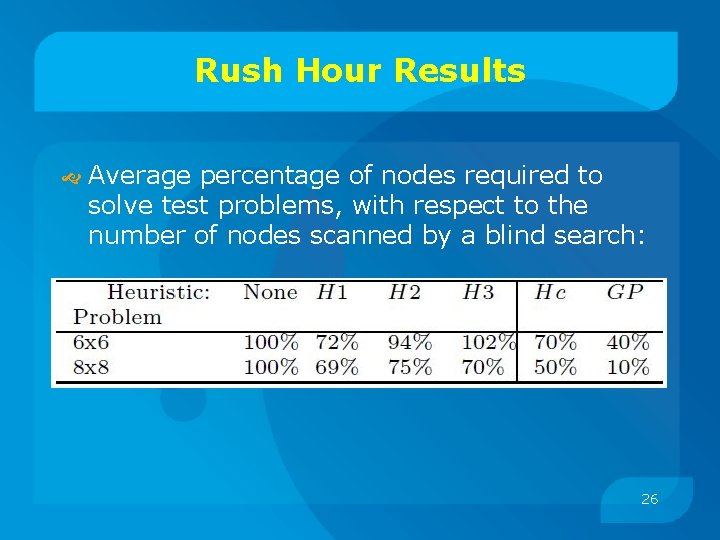

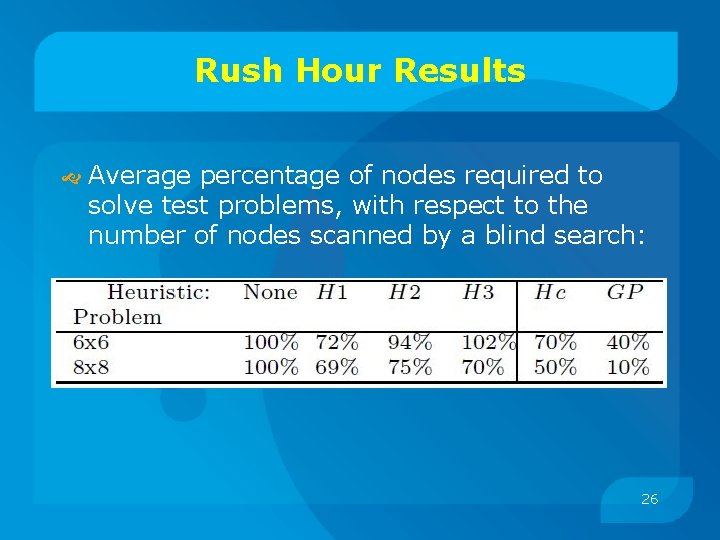

Rush Hour Results

Average percentage of nodes required to solve the test problems, relative to the number of nodes scanned by a blind search:

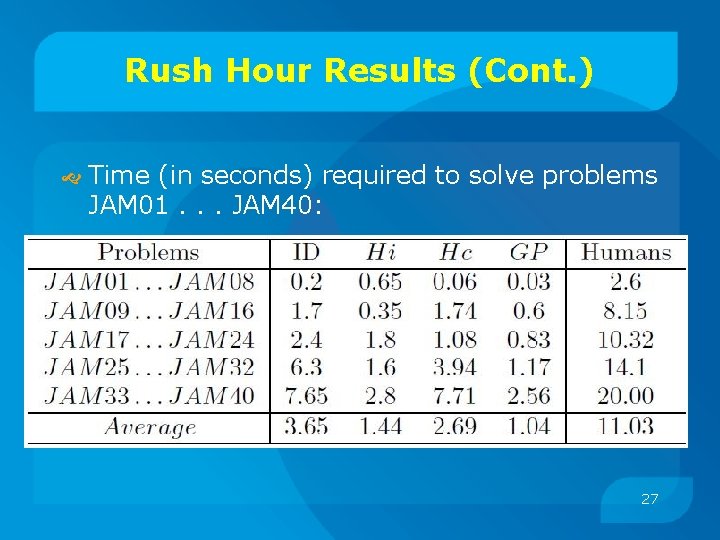

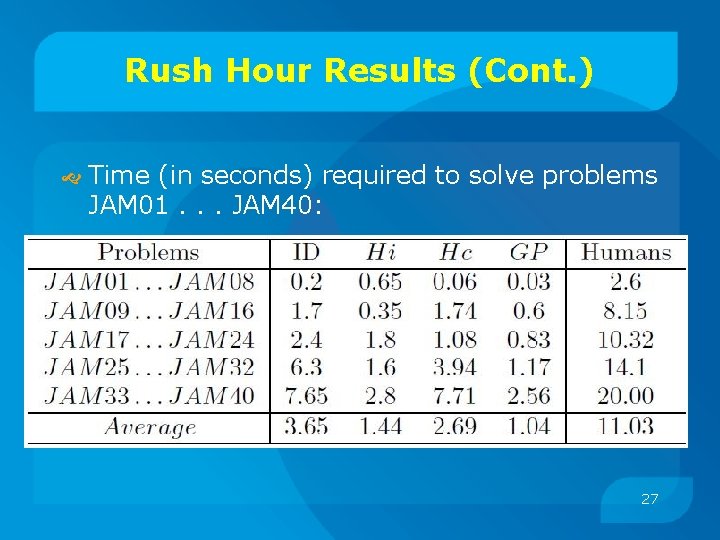

Rush Hour Results (Cont.)

Time (in seconds) required to solve problems JAM01 … JAM40:


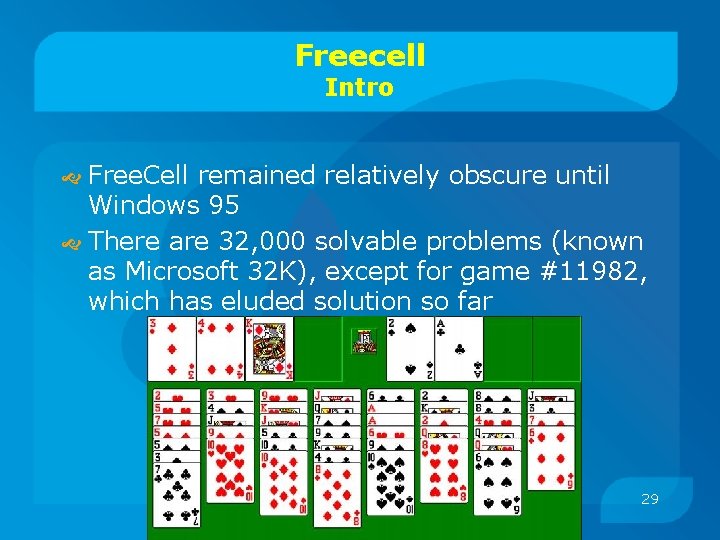

Freecell Intro

- FreeCell remained relatively obscure until Windows 95.
- The Microsoft 32K set contains 32,000 problems, all known to be solvable except for game #11982, which has eluded solution so far.

Freecell Intro (Cont.)

[Figure: FreeCell layout showing the Foundations, Freecells and Cascades]

Freecell Heuristics

- Lowest card at Foundations
- Number of well-placed cards
- Number of cards not at Foundations
- Number of Freecells and free Cascades
- Sum of the Cascades’ bottom cards
- Highest home card minus lowest home card
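
Several of these heuristics are simple counts over the game state. The sketch below shows four of them under an assumed state encoding that is not taken from the slides: `foundations` maps each suit to the rank of its top card (0 when empty), `freecells` is a list with `None` for an empty cell, and `cascades` is a list of card lists. The heuristics whose exact definition is not given here (well-placed cards, bottom-card sum) are left out.

```python
def lowest_home_card(foundations):
    """Lowest top rank over the four foundation piles."""
    return min(foundations.values())


def cards_not_at_foundations(foundations, deck_size=52):
    """Cards that still have to be moved home."""
    return deck_size - sum(foundations.values())


def free_slots(freecells, cascades):
    """Number of empty freecells plus number of empty cascades."""
    empty_cells = sum(cell is None for cell in freecells)
    empty_cascades = sum(not column for column in cascades)
    return empty_cells + empty_cascades


def home_spread(foundations):
    """Highest home card minus lowest home card."""
    return max(foundations.values()) - min(foundations.values())


# Hypothetical mid-game state; cards are (rank, suit) tuples.
foundations = {"S": 3, "H": 1, "D": 0, "C": 2}
freecells = [None, (5, "S"), None, None]
cascades = [[(13, "H")], [], [(2, "D"), (9, "C")]]

features = (
    lowest_home_card(foundations),
    cards_not_at_foundations(foundations),
    free_slots(freecells, cascades),
    home_spread(foundations),
)
```

Each function returns a plain number, so the values can be fed directly into a weighted combination or a policy.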

Freecell Learning Methods

- As opposed to Rush Hour, blind search could not solve even one problem.
- The best solver to date solves 89% of Microsoft 32K.
- Reasons:
  - High branching factor
  - Hard to generate a good heuristic

Freecell Learning Methods

In Rush Hour:
- Hyper-heuristics population
- Each generation, all individuals solve 5 different randomly selected instances
- Test set: 20% of the problems
- Training set: the rest

In Freecell:
- This method failed
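
The Rush Hour training protocol described above can be sketched as follows. The random split, the seed handling and the function names are assumptions; the slides only state the 20%/80% proportions and the five randomly selected instances per generation.

```python
import random


def split_instances(instances, test_fraction=0.2, seed=0):
    """Hold out a fraction of the problems as a test set; the rest is for training."""
    rng = random.Random(seed)
    shuffled = list(instances)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def generation_sample(training, rng, k=5):
    """Pick k different training instances for one generation."""
    return rng.sample(training, k)


# Hypothetical problem ids 1..40 standing in for the problem set.
training, test = split_instances(range(1, 41))
rng = random.Random(1)
this_generation = generation_sample(training, rng)
```

Drawing a fresh sample every generation keeps evaluation cheap while exposing the population to the whole training set over time.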

Freecell Learning Methods

First try:
- Sort the problems by difficulty
- Gradually learn the whole training set

FAILED:
- Days of training
- Overfitting and forgetting

Freecell Learning Methods

Second try: co-evolution:
- First population: hyper-heuristics
- Second population: game boards, with Hillis “Hall of Fame”

FAILED:
- Ambiguous reason for low fitness

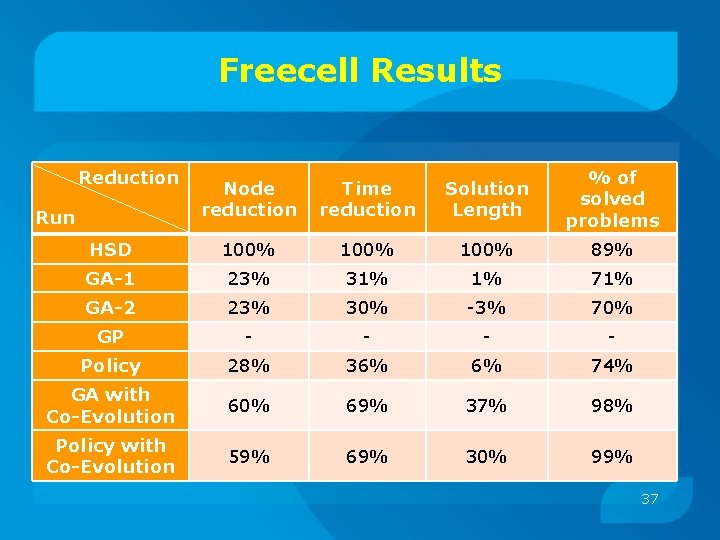

Freecell Learning Methods

Third try: co-evolution:
- First population: hyper-heuristics
- Second population: groups of 8 game boards

SUCCESS:
- Fast learning process
- No ambiguity
- We create the right competition

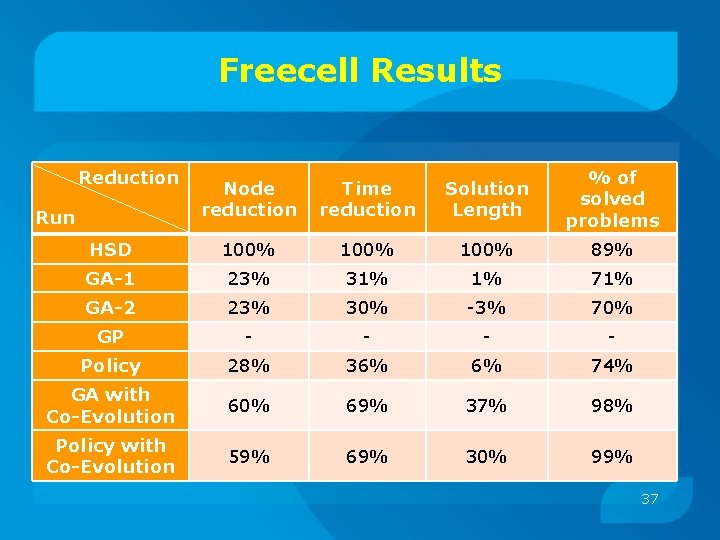

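Freecell Results

| Run | Node reduction | Time reduction | Solution length reduction | % of solved problems |
| --- | --- | --- | --- | --- |
| GA-1 | 23% | 31% | 1% | 71% |
| GA-2 | 23% | 30% | -3% | 70% |
| Policy | 28% | 36% | 6% | 74% |
| GA with Co-Evolution | 60% | 69% | 37% | 98% |
| Policy with Co-Evolution | 59% | 69% | 30% | 99% |

Rows with only two values each, whose columns are not identified: HSD: 100%, 89%; GP: -, -.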