Evolving Local Search Heuristics for SAT Using Genetic Programming

![SAT local search heuristics: GSAT Family n GSAT [Selman, Mitchell, Levesque 1992] – Select SAT local search heuristics: GSAT Family n GSAT [Selman, Mitchell, Levesque 1992] – Select](https://slidetodoc.com/presentation_image/99d7ff6b8243b0b33762b0d888121cf2/image-7.jpg)

![SAT Local Search: Walksat [Selman, Kautz, Cohen 1994] – Pick random broken clause BC. SAT Local Search: Walksat [Selman, Kautz, Cohen 1994] – Pick random broken clause BC.](https://slidetodoc.com/presentation_image/99d7ff6b8243b0b33762b0d888121cf2/image-8.jpg)

![SAT Local Search: Novelty Family Novelty [Mc. Allester, Selman, Kautz 1997] – Pick random SAT Local Search: Novelty Family Novelty [Mc. Allester, Selman, Kautz 1997] – Pick random](https://slidetodoc.com/presentation_image/99d7ff6b8243b0b33762b0d888121cf2/image-9.jpg)


Evolving Local Search Heuristics for SAT Using Genetic Programming
Alex Fukunaga
Computer Science Department, University of California, Los Angeles

Outline
- Satisfiability testing
- Local search for SAT
- CLASS: a GP system for discovering SAT local search heuristics
- Empirical evaluation of CLASS

Propositional Satisfiability (SAT)
- Given:
  - a set of Boolean variables
  - a well-formed formula over those variables
- Problem: is there some assignment of truth values (true, false) to the variables such that the formula evaluates to true?
- Examples:
  1. (a or b or ~c) and (~a or ~b or c): satisfiable (let a=true, b=false, c=true).
  2. (a or b or c) and (~a or ~b) and (~b or ~c) and (~a or ~c): satisfiable, but only by assignments that make exactly one variable true (e.g., a=true, b=false, c=false).
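To make the definition concrete, here is a minimal brute-force checker that tries all 2^n assignments. It is only an illustration: the literal encoding as `(variable, polarity)` pairs and the function names `satisfies` and `brute_force_sat` are assumptions, and the unsatisfiable formula at the end is our own small example rather than one from the slides.

```python
from itertools import product

# A literal is a (variable, polarity) pair: ("a", True) means a, ("a", False) means ~a.


def satisfies(assignment, formula):
    """True if every clause contains at least one literal made true by assignment."""
    return all(any(assignment[v] == pol for v, pol in clause) for clause in formula)


def brute_force_sat(formula):
    """Try all 2^n assignments; return a satisfying one, or None if there is none."""
    variables = sorted({v for clause in formula for v, _ in clause})
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if satisfies(assignment, formula):
            return assignment
    return None


# Example 1: (a or b or ~c) and (~a or ~b or c)
example1 = [[("a", True), ("b", True), ("c", False)],
            [("a", False), ("b", False), ("c", True)]]

# Illustrative unsatisfiable formula: (a or b) and (~a or b) and (a or ~b) and (~a or ~b)
unsat = [[("a", True), ("b", True)], [("a", False), ("b", True)],
         [("a", True), ("b", False)], [("a", False), ("b", False)]]

print(satisfies({"a": True, "b": False, "c": True}, example1))  # True
print(brute_force_sat(unsat))                                    # None
```

Exhaustive enumeration is exponential in the number of variables, which is why the rest of the talk focuses on local search.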

SAT
- NP-complete
  - First problem proven NP-complete (Cook, 1971)
- Numerous applications
  - Circuit verification
  - Scheduling
  - AI planning
  - Theorem proving
- Very active research area
  - Recent applications in VLSI CAD
  - Annual SAT conference

Local Search for SAT
- Local search is very successful at solving hard, satisfiable SAT instances.
- Many local search algorithm variants for SAT have been proposed since the discovery of GSAT [Selman, Mitchell, Levesque 1992].
- Generic SAT local search framework:
  1. T := randomly generated truth assignment
  2. For j := 1 to cutoff:
     - If T satisfies the formula, return T.
     - V := choose a variable using some variable selection heuristic.
     - T := T with the value of V reversed.
  3. Return failure (no satisfying assignment found).
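The generic framework can be sketched in Python with the variable selection heuristic passed in as a function. Everything here is an illustrative assumption: the DIMACS-style clause encoding, the names `local_search` and `broken_clauses`, and the placeholder heuristic `random_walk`, which is a pure random walk rather than any of the heuristics discussed in these slides. A practical solver would also update clause state incrementally instead of rescanning every clause after each flip.

```python
import random
from typing import Callable, List, Optional

# DIMACS-style clauses: literal k means x_k is true, -k means x_k is false.
Formula = List[List[int]]
# assignment[v] is the value of x_v; index 0 is an unused placeholder.
Assignment = List[bool]
Heuristic = Callable[[Formula, Assignment, random.Random], int]


def lit_true(lit: int, a: Assignment) -> bool:
    return a[abs(lit)] == (lit > 0)


def broken_clauses(formula: Formula, a: Assignment) -> Formula:
    """Clauses with no true literal under assignment a."""
    return [c for c in formula if not any(lit_true(l, a) for l in c)]


def local_search(formula: Formula, n_vars: int, choose_var: Heuristic,
                 cutoff: int, rng: random.Random) -> Optional[Assignment]:
    # T := randomly generated truth assignment
    a = [False] + [rng.random() < 0.5 for _ in range(n_vars)]
    for _ in range(cutoff):
        if not broken_clauses(formula, a):   # T satisfies the formula
            return a
        v = choose_var(formula, a, rng)      # variable selection heuristic
        a[v] = not a[v]                      # T := T with the value of V reversed
    return None                              # failure: no satisfying assignment found


def random_walk(formula: Formula, a: Assignment, rng: random.Random) -> int:
    """Placeholder heuristic: a random variable from a random broken clause."""
    clause = rng.choice(broken_clauses(formula, a))
    return abs(rng.choice(clause))


# (a or b or ~c) and (~a or ~b or c), with a = x1, b = x2, c = x3
example1 = [[1, 2, -3], [-1, -2, 3]]
solution = local_search(example1, 3, random_walk, cutoff=100, rng=random.Random(0))
```

Swapping `random_walk` for a different `choose_var` function is exactly the degree of freedom that the heuristics below (and CLASS) explore.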

Definitions
- Positive/negative/net gain
  - Given a candidate truth assignment T for CNF formula F, let B0 be the total number of clauses of F that are currently unsatisfied. Let T' be the assignment obtained from T by flipping variable V, and let B1 be the total number of clauses that would be unsatisfied under T'.
  - The net gain of V is B0 - B1, the reduction in the number of unsatisfied clauses (higher is better). The negative gain of V is the number of clauses that are satisfied in T but unsatisfied in T'. The positive gain of V is the number of clauses that are unsatisfied in T but satisfied in T'. Hence net gain = positive gain - negative gain.
- Variable age
  - The age of a variable is the number of flips since it was last flipped.
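A direct transcription of these definitions, as a sketch. It uses the convention net gain = B0 - B1, so that "highest net gain" is the greedy choice used by GSAT; because B1 = B0 - (positive gain) + (negative gain), this equals positive gain minus negative gain. The clause encoding, the function names, and the `last_flipped` bookkeeping (the step at which each variable was last flipped) are illustrative assumptions, and recomputing every clause costs O(#clauses) per variable.

```python
from typing import List, Tuple


def lit_true(lit: int, a: List[bool]) -> bool:
    return a[abs(lit)] == (lit > 0)


def is_sat(clause: List[int], a: List[bool]) -> bool:
    return any(lit_true(l, a) for l in clause)


def gains(formula: List[List[int]], a: List[bool], v: int) -> Tuple[int, int, int]:
    """Return (positive gain, negative gain, net gain) for flipping variable v."""
    flipped = a.copy()
    flipped[v] = not flipped[v]               # T' = T with v flipped
    pos = neg = 0
    for clause in formula:
        before, after = is_sat(clause, a), is_sat(clause, flipped)
        if not before and after:
            pos += 1                          # unsatisfied in T, satisfied in T'
        elif before and not after:
            neg += 1                          # satisfied in T, unsatisfied in T'
    return pos, neg, pos - neg                # net gain = B0 - B1 = pos - neg


def age(v: int, step: int, last_flipped: List[int]) -> int:
    """Number of flips since v was last flipped (last_flipped[v] is a step index)."""
    return step - last_flipped[v]


formula = [[1, 2, -3], [-1, -2, 3]]
print(gains(formula, [False, True, True, True], 3))   # (0, 1, -1)
```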


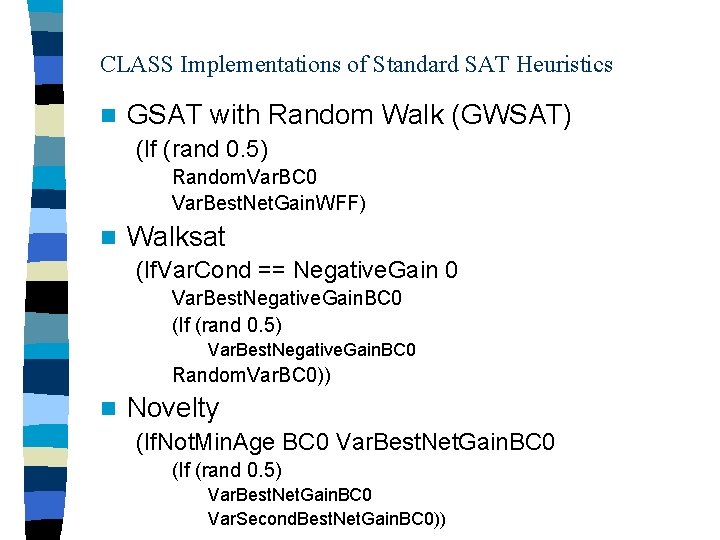

SAT local search heuristics: GSAT Family
- GSAT [Selman, Mitchell, Levesque 1992]
  - Select the variable with the highest net gain. Break ties randomly.
  - “Make greedy moves.”
- HSAT [Gent and Walsh, 1993]
  - Same as GSAT, but break ties in favor of the maximum-age variable.
  - “Make greedy moves, …”
- GWSAT [Selman, Kautz 1993]
  - With probability p, select a random variable from a randomly chosen unsatisfied (broken) clause; otherwise, same as GSAT.
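The three GSAT-family selection rules differ only in how they break ties and whether they take a random-walk step. A minimal sketch, assuming DIMACS-style clauses, net gain = B0 - B1 (so higher is better), and a hypothetical `last_flipped` list recording the step at which each variable was last flipped; the function names are illustrative, not from the slides.

```python
import random


def lit_true(lit, a):
    return a[abs(lit)] == (lit > 0)


def n_broken(formula, a):
    return sum(1 for c in formula if not any(lit_true(l, a) for l in c))


def net_gain(formula, a, v):
    """B0 - B1 for flipping v (flips a in place, then restores it)."""
    b0 = n_broken(formula, a)
    a[v] = not a[v]
    b1 = n_broken(formula, a)
    a[v] = not a[v]
    return b0 - b1


def best_net_gain_vars(formula, a):
    """All variables in the whole formula whose flip gives the highest net gain."""
    scores = {v: net_gain(formula, a, v) for v in range(1, len(a))}
    top = max(scores.values())
    return [v for v, s in scores.items() if s == top]


def gsat_pick(formula, a, rng):
    return rng.choice(best_net_gain_vars(formula, a))          # ties broken randomly


def hsat_pick(formula, a, step, last_flipped):
    tied = best_net_gain_vars(formula, a)
    return max(tied, key=lambda v: step - last_flipped[v])     # ties go to the oldest


def gwsat_pick(formula, a, rng, p):
    broken = [c for c in formula if not any(lit_true(l, a) for l in c)]
    if broken and rng.random() < p:                            # random-walk step
        return abs(rng.choice(rng.choice(broken)))
    return gsat_pick(formula, a, rng)                          # otherwise plain GSAT
```

For example, with clauses `[[1, 2, -3], [-1, -2, 3]]` and all variables true, flipping x1 or x2 has net gain 0 and flipping x3 has net gain -1, so GSAT chooses randomly between x1 and x2 while HSAT picks whichever of them is older.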


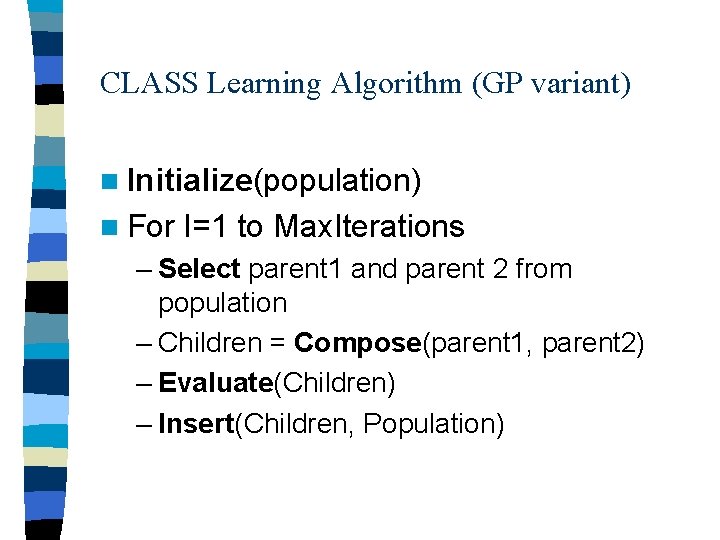

SAT Local Search: Walksat [Selman, Kautz, Cohen 1994]
- Pick a random broken clause BC. If any variable in BC has a negative gain of 0, randomly select one of them. Otherwise, with probability p, select a random variable from BC to flip, and with probability (1 - p), select the variable in BC with minimal negative gain (breaking ties randomly).
- Beating a correct, optimized implementation of Walksat is difficult.
- Many variants of “walksat” appear in the literature:
  - Many of them are incorrect interpretations/implementations of [Selman, Kautz, Cohen] Walksat.
  - Most of them perform significantly worse.
  - None of them perform better.
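The Walksat selection rule described above can be sketched as follows. This is a straightforward, unoptimized reading of the description (not Kautz's Walksat-v43 code), assuming DIMACS-style clauses; `negative_gain` and `walksat_pick` are hypothetical names, and the per-variable rescan of every clause is far slower than the incremental counters a real implementation maintains.

```python
import random


def lit_true(lit, a):
    return a[abs(lit)] == (lit > 0)


def is_sat(clause, a):
    return any(lit_true(l, a) for l in clause)


def negative_gain(formula, a, v):
    """Clauses satisfied now that would become unsatisfied if v were flipped."""
    a[v] = not a[v]
    broken_after = [c for c in formula if not is_sat(c, a)]
    a[v] = not a[v]                                   # restore the assignment
    return sum(1 for c in broken_after if is_sat(c, a))


def walksat_pick(formula, a, rng, p):
    broken = [c for c in formula if not is_sat(c, a)]
    bc = rng.choice(broken)                           # random broken clause BC
    candidates = sorted({abs(l) for l in bc})
    ng = {v: negative_gain(formula, a, v) for v in candidates}
    zero = [v for v in candidates if ng[v] == 0]
    if zero:                                          # a flip that breaks nothing
        return rng.choice(zero)
    if rng.random() < p:                              # random-walk step
        return rng.choice(candidates)
    best = min(ng.values())                           # greedy: minimal negative gain
    return rng.choice([v for v in candidates if ng[v] == best])
```

Note that the greedy step scores variables only by how many clauses they would break, ignoring how many they would fix; that is the distinguishing feature of Walksat compared with the net-gain scoring of GSAT and Novelty.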


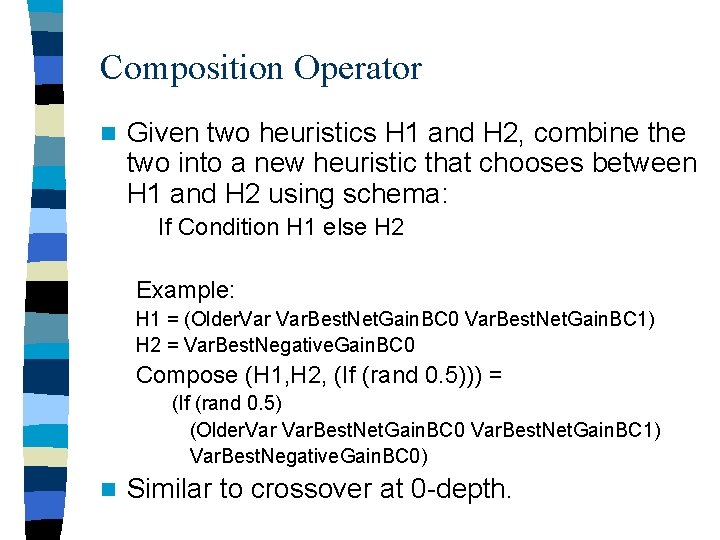

SAT Local Search: Novelty Family
- Novelty [McAllester, Selman, Kautz 1997]
  - Pick a random unsatisfied clause BC. Select the variable v in BC with maximal net gain, unless v has the minimal age in BC. In the latter case, select v with probability (1 - p); otherwise, flip v2, the variable with the 2nd-highest net gain.
- Novelty+ [Hoos and Stützle 2000]
  - Same as Novelty, but after BC is selected, with probability pw select a random variable in BC; otherwise continue with Novelty.
- R-Novelty [McAllester, Selman, Kautz 1997] and R-Novelty+ [Hoos and Stützle 2000]
  - Similar to Novelty/Novelty+, but more complex.
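A sketch of Novelty and Novelty+ following the description above, with pw = 0 giving plain Novelty. Assumptions beyond the slide: DIMACS-style clauses, net gain = B0 - B1, a hypothetical `last_flipped` list giving the step at which each variable was last flipped, and breaking net-gain ties in favor of the older variable (the slide does not specify tie-breaking).

```python
import random


def lit_true(lit, a):
    return a[abs(lit)] == (lit > 0)


def n_broken(formula, a):
    return sum(1 for c in formula if not any(lit_true(l, a) for l in c))


def net_gain(formula, a, v):
    """B0 - B1 for flipping v (flips a in place, then restores it)."""
    b0 = n_broken(formula, a)
    a[v] = not a[v]
    b1 = n_broken(formula, a)
    a[v] = not a[v]
    return b0 - b1


def novelty_pick(formula, a, rng, step, last_flipped, p, pw=0.0):
    """Novelty (pw = 0) or Novelty+ (pw > 0) variable selection."""
    broken = [c for c in formula if not any(lit_true(l, a) for l in c)]
    bc = sorted({abs(l) for l in rng.choice(broken)})    # variables of random broken clause BC
    if rng.random() < pw:                                 # Novelty+ random-walk step
        return rng.choice(bc)
    age = {v: step - last_flipped[v] for v in bc}
    # Rank by net gain, highest first; equal gains go to the older variable (assumption).
    ranked = sorted(bc, key=lambda v: (-net_gain(formula, a, v), -age[v]))
    best = ranked[0]
    second = ranked[1] if len(ranked) > 1 else best
    if age[best] > min(age.values()):                     # best is not the youngest in BC
        return best
    return best if rng.random() < 1 - p else second       # v with prob. 1 - p, else v2
```

The age test is what distinguishes Novelty from a purely greedy clause-local choice: it only deviates from the best variable when that variable was the most recently flipped one in BC, which discourages immediately undoing the previous move.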

Structure of the standard SAT heuristics
- Score variables with respect to a gain metric
  - Walksat uses negative gain.
  - GSAT and Novelty use net gain.
- Restricted set of candidate variables
  - GSAT: any variable in the formula.
  - Walksat/Novelty: pick a variable from a single random unsatisfied clause.
- Ranking of variables with respect to the scoring metric
  - Greedy choices are considered by all heuristics; Novelty variants also consider the 2nd-best variable.
- Variable age: prevents cycles and forces exploration
  - Novelty variants, HSAT, Walksat+tabu.
- Conditional branching: some Boolean condition (a random number or a function of the above primitives) is evaluated as the basis for a branch in the decision process.
- Heuristics have a compact but non-obvious combinatorial structure.

Some observations on composite variable selection heuristics
- It is difficult to determine a priori how effective any given heuristic is.
- Many heuristics look similar to Walksat, but their performance varies significantly (cf. [McAllester, Selman, Kautz 1997]).
- Empirical evaluation is necessary to evaluate complex heuristics. Previous efforts to discover new heuristics involved significant experimentation.
- Humans are skilled at identifying primitives, but find it difficult and time-consuming to combine the primitives into effective composite heuristics.
  - Let’s automate the grunt work!

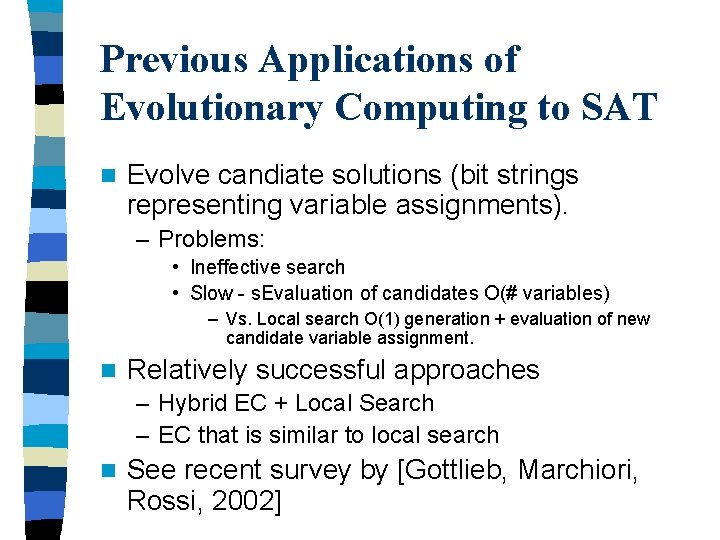

Previous Applications of Evolutionary Computing to SAT
- Evolve candidate solutions (bit strings representing variable assignments).
  - Problems:
    - Ineffective search.
    - Slow: evaluation of a candidate is O(# variables), vs. O(1) for local search to generate and evaluate a new candidate variable assignment.
- Relatively successful approaches:
  - Hybrid EC + local search
  - EC that is similar to local search
- See the recent survey by [Gottlieb, Marchiori, Rossi, 2002].

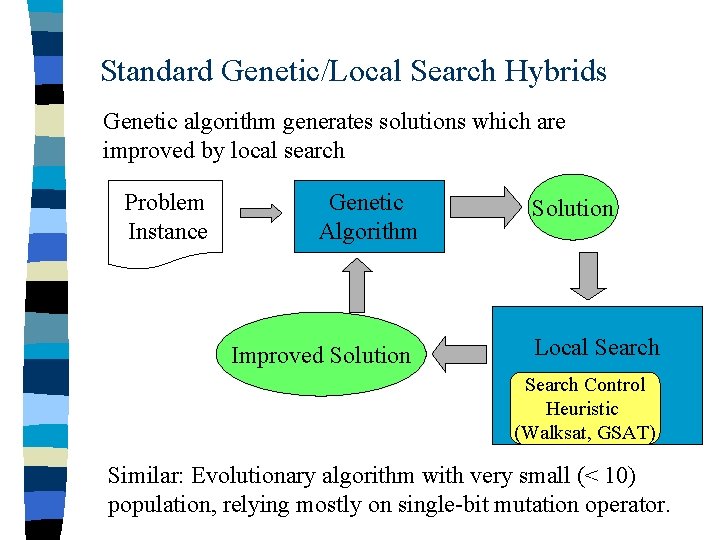

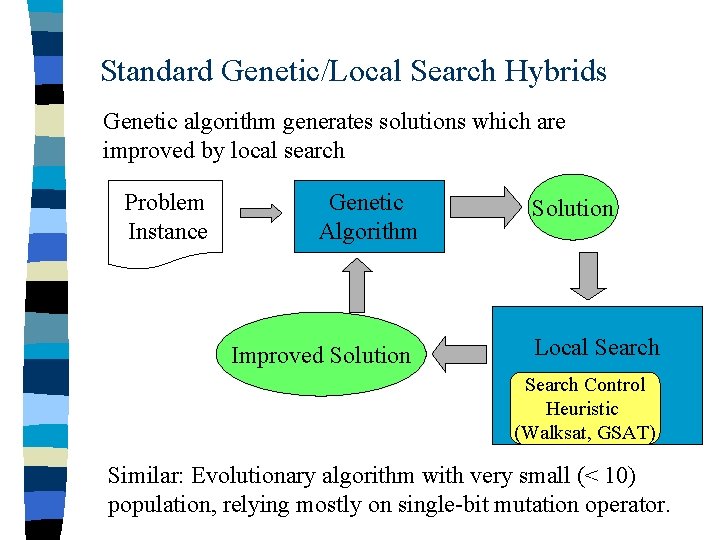

Standard Genetic/Local Search Hybrids
- A genetic algorithm generates solutions, which are then improved by local search.

[Figure: Problem Instance, Genetic Algorithm, Local Search, Control Heuristic (Walksat, GSAT), Improved Solution]

- Similar: an evolutionary algorithm with a very small (< 10) population, relying mostly on a single-bit mutation operator.

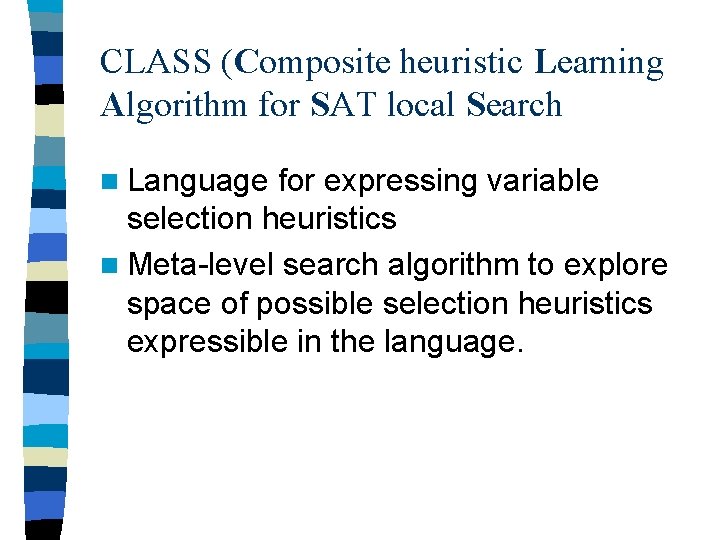

CLASS (Composite heuristic Learning Algorithm for SAT local Search)
- A language for expressing variable selection heuristics.
- A meta-level search algorithm that explores the space of possible selection heuristics expressible in the language.

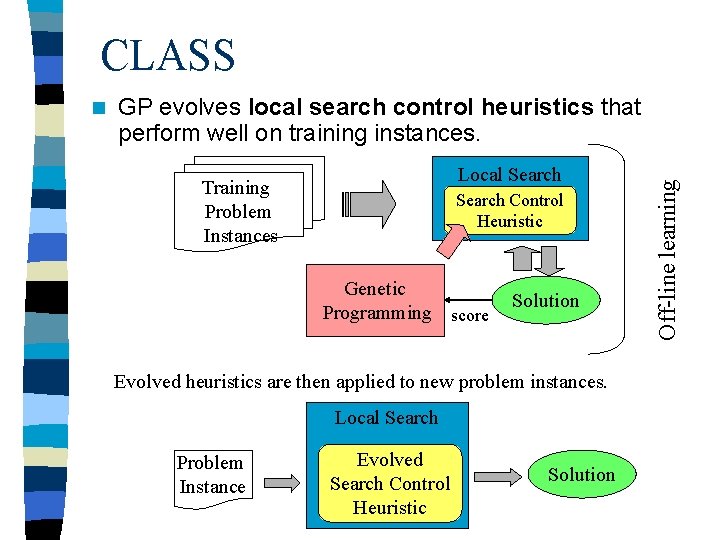

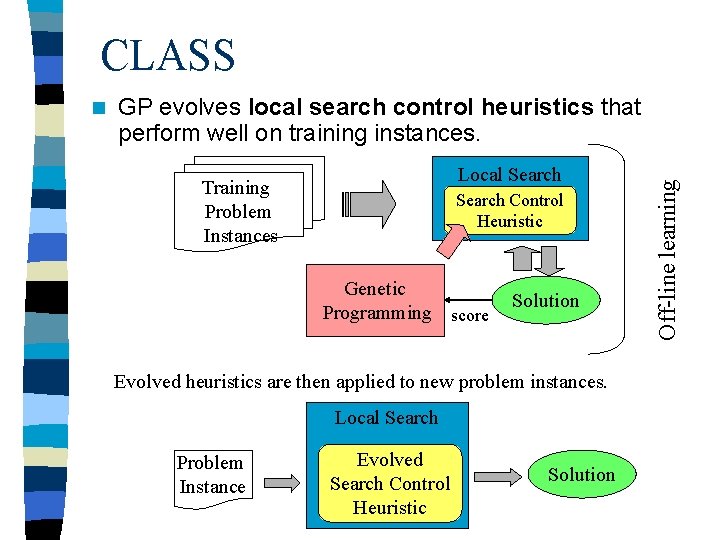

CLASS
- GP evolves local search control heuristics that perform well on training instances.

[Figure: Training Problem Instances, Local Search, Search Control Heuristic, Genetic Programming, score, Solution]

- Evolved heuristics are then applied to new problem instances.

[Figure: Problem Instance, Local Search, Evolved Search Control Heuristic, Solution]

- Off-line learning.

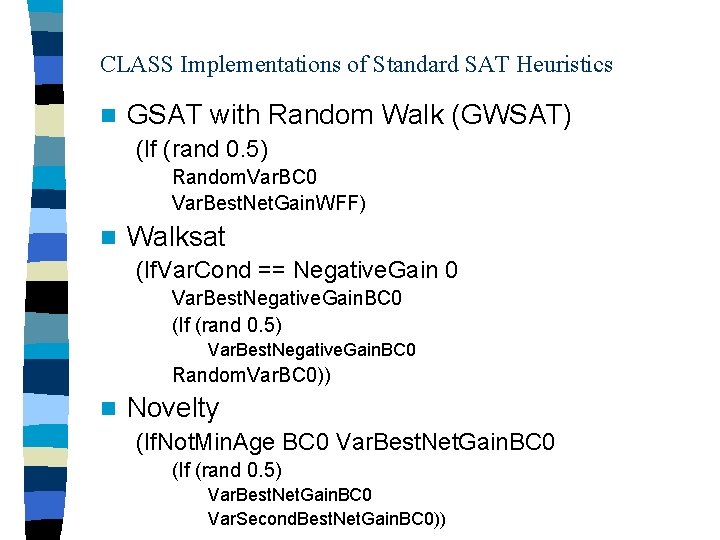

CLASS Implementations of Standard SAT Heuristics
- GSAT with Random Walk (GWSAT): `(If (rand 0.5) RandomVarBC0 VarBestNetGainWFF)`
- Walksat: `(IfVarCond == NegativeGain 0 VarBestNegativeGainBC0 (If (rand 0.5) VarBestNegativeGainBC0 RandomVarBC0))`
- Novelty: `(IfNotMinAge BC0 VarBestNetGainBC0 (If (rand 0.5) VarBestNetGainBC0 VarSecondBestNetGainBC0))`
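Because these heuristics are just trees, a small interpreter can execute them. The sketch below is not the CLASS implementation (the slides do not show it): it assumes heuristics are nested tuples, that `(rand p)` is true with probability p, and that the primitive selectors (`VarBestNetGainBC0`, etc.) and conditions (`IfNotMinAge BC0`) have already been computed for the current search state and passed in as dictionaries. Only the `If` and `IfNotMinAge` forms are handled; Walksat's `IfVarCond` form is left out.

```python
import random


def evaluate(tree, prims, conds, rng):
    """Return the variable selected by a heuristic tree.

    tree  : a primitive name (str) or a tuple (op, arg, then_branch, else_branch)
    prims : maps selector names, e.g. "VarBestNetGainBC0", to the variable they
            would pick in the current state (computed elsewhere; assumed)
    conds : maps (op, arg) keys, e.g. ("IfNotMinAge", "BC0"), to booleans
            (computed elsewhere; assumed)
    """
    if isinstance(tree, str):
        return prims[tree]
    op, arg, then_branch, else_branch = tree
    if op == "If":                     # arg is ("rand", p): true with probability p
        _, p = arg
        test = rng.random() < p
    else:                              # a state-dependent condition such as IfNotMinAge
        test = conds[(op, arg)]
    return evaluate(then_branch if test else else_branch, prims, conds, rng)


# Novelty, transcribed from the tree above
novelty = ("IfNotMinAge", "BC0", "VarBestNetGainBC0",
           ("If", ("rand", 0.5), "VarBestNetGainBC0", "VarSecondBestNetGainBC0"))
```

For instance, with `prims = {"VarBestNetGainBC0": 7, "VarSecondBestNetGainBC0": 4}` and the `IfNotMinAge` condition true, the Novelty tree returns variable 7; when the condition is false it returns 7 or 4 with equal probability.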

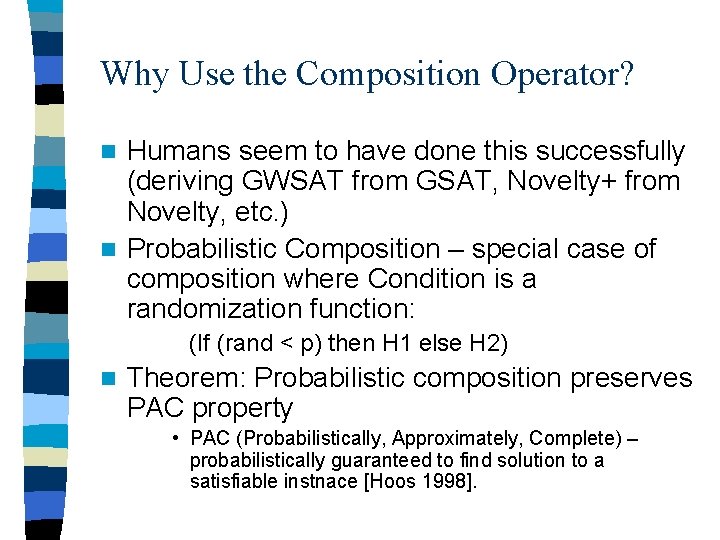

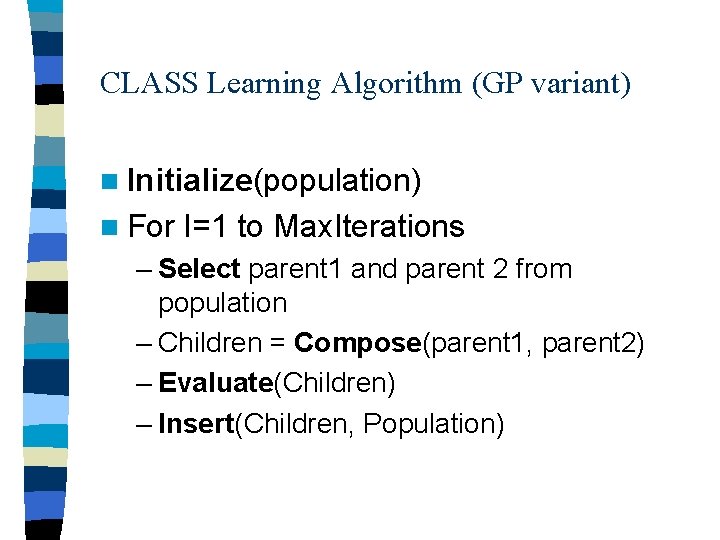

CLASS Learning Algorithm (GP variant)
- Initialize(Population)
- For I = 1 to MaxIterations:
  - Select parent1 and parent2 from Population
  - Children = Compose(parent1, parent2)
  - Evaluate(Children)
  - Insert(Children, Population)
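The loop above is a steady-state GP. A minimal sketch follows, with two loudly stated assumptions because the slides do not specify them: parents are chosen by tournament selection, and `Insert` replaces the current worst individual when a child scores higher. The name `class_gp` and its parameters are hypothetical; `compose` and `fitness` are supplied by the caller (in CLASS, fitness would come from running local search with the heuristic on training instances).

```python
import random


def class_gp(init_population, compose, fitness, max_iterations, rng, tournament=3):
    """Steady-state GP loop; selection and insertion rules are assumptions."""
    population = [(fitness(h), h) for h in init_population]     # Initialize + evaluate

    def select():
        # Tournament selection (assumed): best of a few random individuals.
        return max(rng.sample(population, tournament), key=lambda x: x[0])[1]

    for _ in range(max_iterations):
        parent1, parent2 = select(), select()
        for child in compose(parent1, parent2, rng):             # Children = Compose(...)
            score = fitness(child)                               # Evaluate(Children)
            worst = min(range(len(population)), key=lambda i: population[i][0])
            if score > population[worst][0]:                     # Insert (assumed rule)
                population[worst] = (score, child)
    return max(population, key=lambda x: x[0])                   # (best score, best heuristic)
```

As a toy usage, treating "heuristics" as numbers with `fitness = lambda h: h` and `compose = lambda p1, p2, rng: [p1 + p2]` shows the population improving; with real heuristics, `compose` would build the If-then-else trees described on the next slide.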

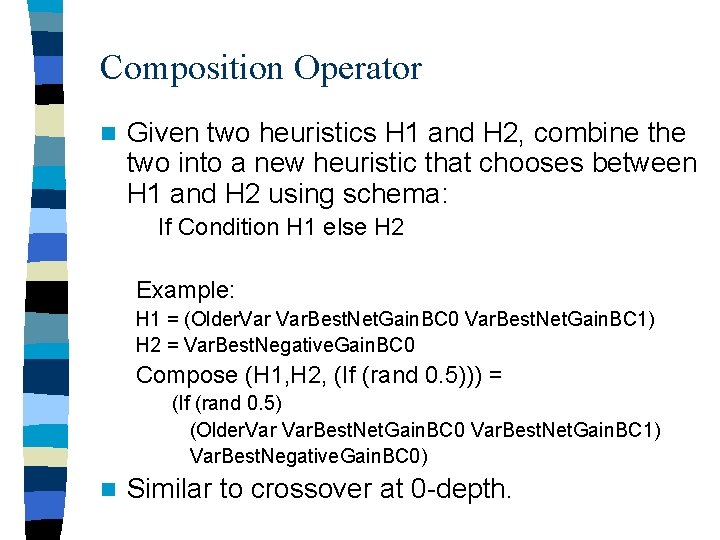

Composition Operator
- Given two heuristics H1 and H2, combine them into a new heuristic that chooses between H1 and H2 using the schema: If Condition then H1 else H2.
- Example:
  - H1 = `(OlderVarBestNetGainBC0 VarBestNetGainBC1)`
  - H2 = `VarBestNegativeGainBC0`
  - `Compose(H1, H2, (If (rand 0.5)))` = `(If (rand 0.5) (OlderVarBestNetGainBC0 VarBestNetGainBC1) VarBestNegativeGainBC0)`
- Similar to crossover at depth 0.
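With heuristics represented as nested tuples (an illustrative choice, not necessarily CLASS's internal representation), composition is a one-line constructor: both parents become whole subtrees under a new `If` root, which is why it resembles crossover at depth 0. The sketch below reproduces the composition example; `compose`, `probabilistic_compose`, and `to_sexpr` are hypothetical names.

```python
def compose(h1, h2, condition):
    """Composition operator: new root 'If condition then h1 else h2'."""
    return ("If", condition, h1, h2)


def probabilistic_compose(h1, h2, p):
    """Special case where the condition is the randomization test (rand p)."""
    return compose(h1, h2, ("rand", p))


def to_sexpr(tree):
    """Render a nested-tuple heuristic in the slides' S-expression notation."""
    if isinstance(tree, tuple):
        return "(" + " ".join(to_sexpr(t) for t in tree) + ")"
    return str(tree)


H1 = ("OlderVarBestNetGainBC0", "VarBestNetGainBC1")
H2 = "VarBestNegativeGainBC0"
print(to_sexpr(probabilistic_compose(H1, H2, 0.5)))
# (If (rand 0.5) (OlderVarBestNetGainBC0 VarBestNetGainBC1) VarBestNegativeGainBC0)
```

Since parents are never cut apart, every child contains both parents intact; the trade-off is that trees only grow, which is presumably why a depth limit matters.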

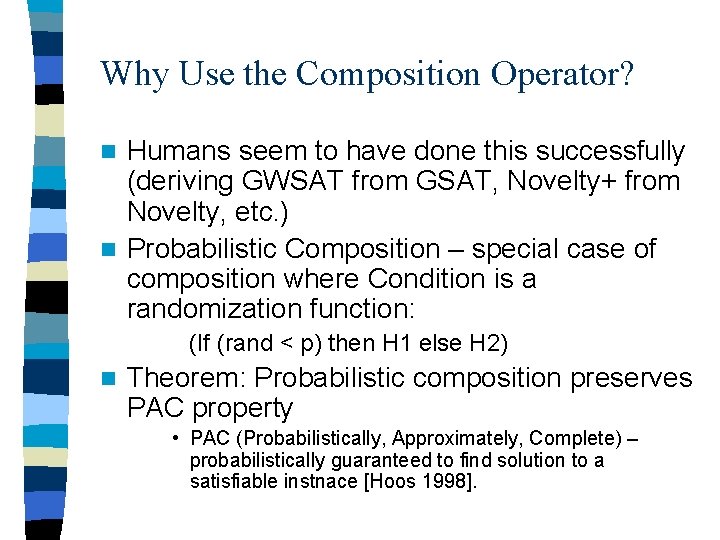

Why Use the Composition Operator?
- Humans seem to have done this successfully (deriving GWSAT from GSAT, Novelty+ from Novelty, etc.).
- Probabilistic composition: a special case of composition where Condition is a randomization function:
  - (If (rand < p) then H1 else H2)
- Theorem: probabilistic composition preserves the PAC property.
  - PAC (probabilistically approximately complete): probabilistically guaranteed to find a solution to a satisfiable instance [Hoos 1998].

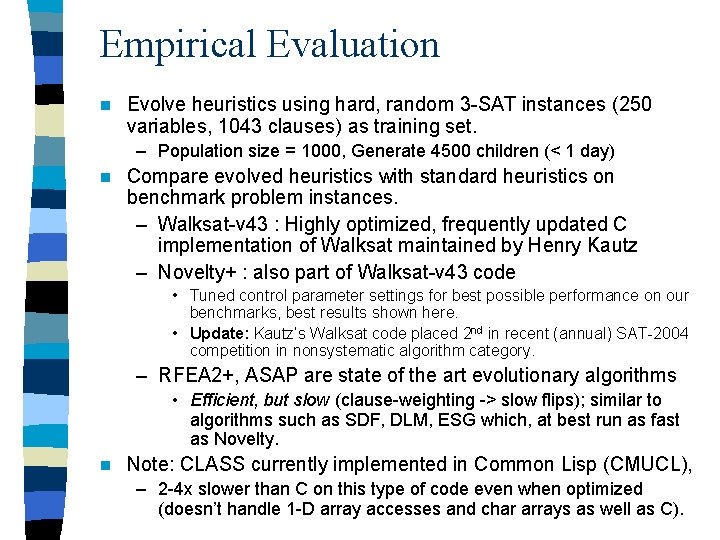

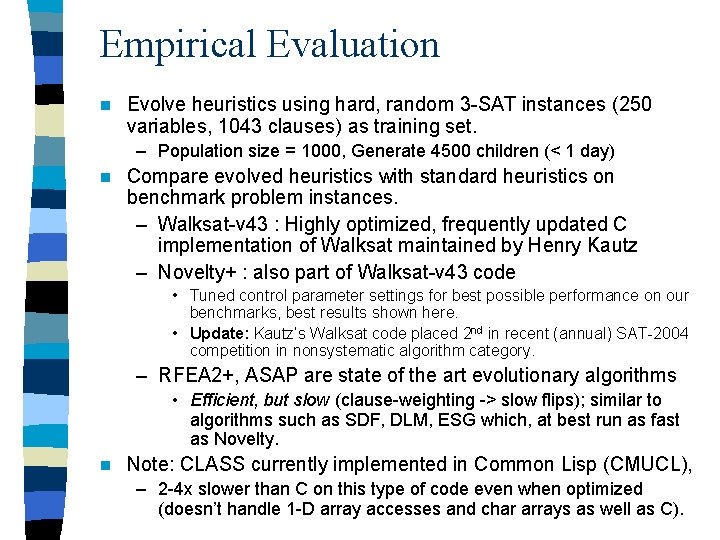

Empirical Evaluation
- Evolve heuristics using hard, random 3-SAT instances (250 variables, 1043 clauses) as the training set.
  - Population size = 1000; generate 4500 children (< 1 day).
- Compare evolved heuristics with standard heuristics on benchmark problem instances.
  - Walksat-v43: highly optimized, frequently updated C implementation of Walksat maintained by Henry Kautz.
  - Novelty+: also part of the Walksat-v43 code.
    - Control parameter settings were tuned for the best possible performance on our benchmarks; the best results are shown here.
    - Update: Kautz’s Walksat code placed 2nd in the recent (annual) SAT-2004 competition in the nonsystematic algorithm category.
  - RFEA2+ and ASAP are state-of-the-art evolutionary algorithms.
    - Efficient, but slow (clause weighting -> slow flips); similar to algorithms such as SDF, DLM, and ESG, which at best run as fast as Novelty.
- Note: CLASS is currently implemented in Common Lisp (CMUCL).
  - 2-4x slower than C on this type of code even when optimized (it doesn't handle 1-D array accesses and char arrays as well as C).

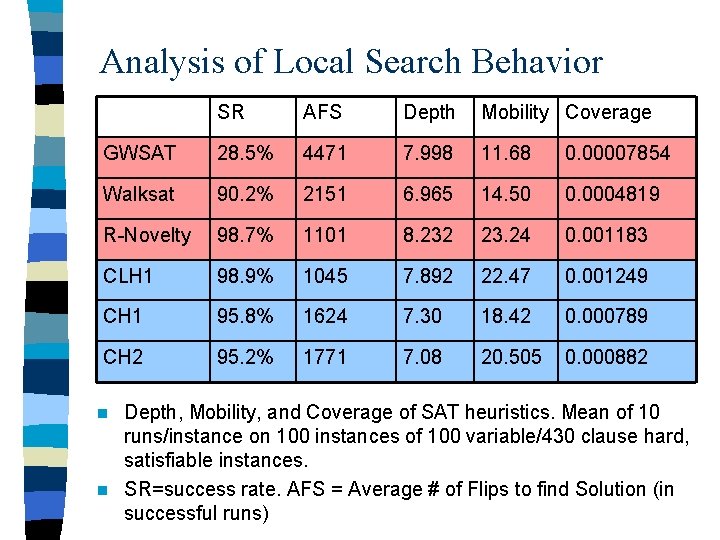

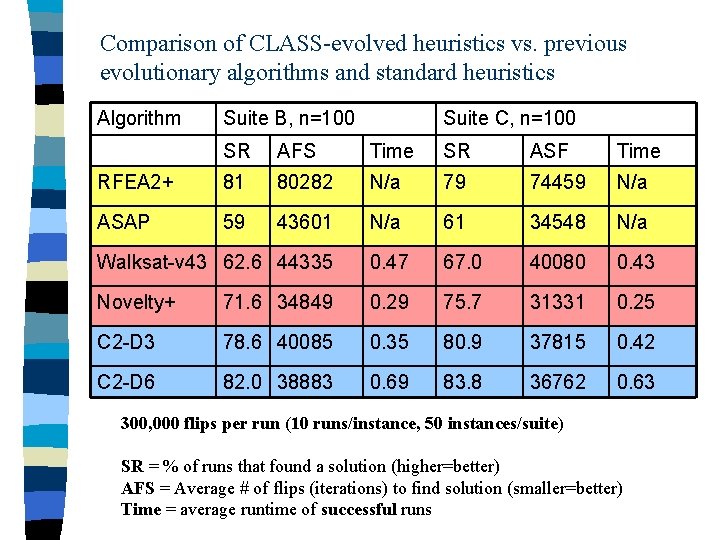

Comparison of CLASS-evolved heuristics vs. previous evolutionary algorithms and standard heuristics

| Algorithm | Suite B SR | Suite B AFS | Suite B Time | Suite C SR | Suite C AFS | Suite C Time |
|---|---|---|---|---|---|---|
| RFEA2+ | 81 | 80282 | N/a | 79 | 74459 | N/a |
| ASAP | 59 | 43601 | N/a | 61 | 34548 | N/a |
| Walksat-v43 | 62.6 | 44335 | 0.47 | 67.0 | 40080 | 0.43 |
| Novelty+ | 71.6 | 34849 | 0.29 | 75.7 | 31331 | 0.25 |
| C2-D3 | 78.6 | 40085 | 0.35 | 80.9 | 37815 | 0.42 |
| C2-D6 | 82.0 | 38883 | 0.69 | 83.8 | 36762 | 0.63 |

Suites B and C: n=100. 300,000 flips per run (10 runs/instance, 50 instances/suite).
- SR = % of runs that found a solution (higher = better)
- AFS = average # of flips (iterations) to find a solution (smaller = better)
- Time = average runtime of successful runs

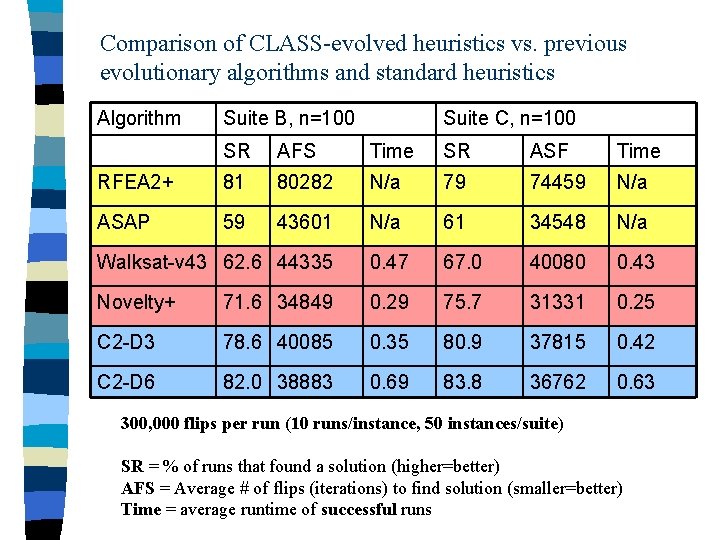

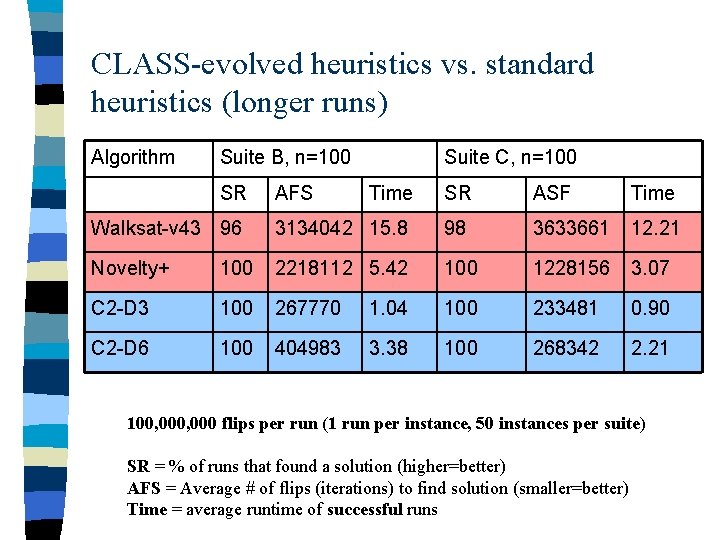

CLASS-evolved heuristics vs. standard heuristics (longer runs)

| Algorithm | Suite B SR | Suite B AFS | Suite B Time | Suite C SR | Suite C AFS | Suite C Time |
|---|---|---|---|---|---|---|
| Walksat-v43 | 96 | 3134042 | 15.8 | 98 | 3633661 | 12.21 |
| Novelty+ | 100 | 2218112 | 5.42 | 100 | 1228156 | 3.07 |
| C2-D3 | 100 | 267770 | 1.04 | 100 | 233481 | 0.90 |
| C2-D6 | 100 | 404983 | 3.38 | 100 | 268342 | 2.21 |

Suites B and C: n=100. 100,000 flips per run (1 run per instance, 50 instances per suite).
- SR = % of runs that found a solution (higher = better)
- AFS = average # of flips (iterations) to find a solution (smaller = better)
- Time = average runtime of successful runs

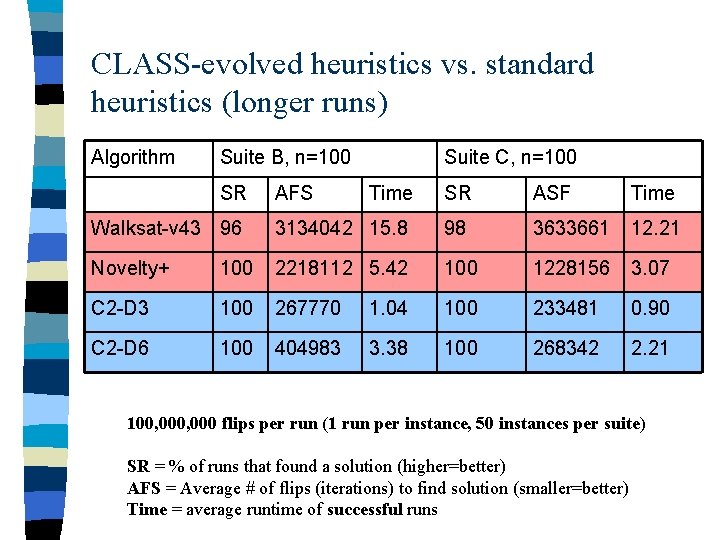

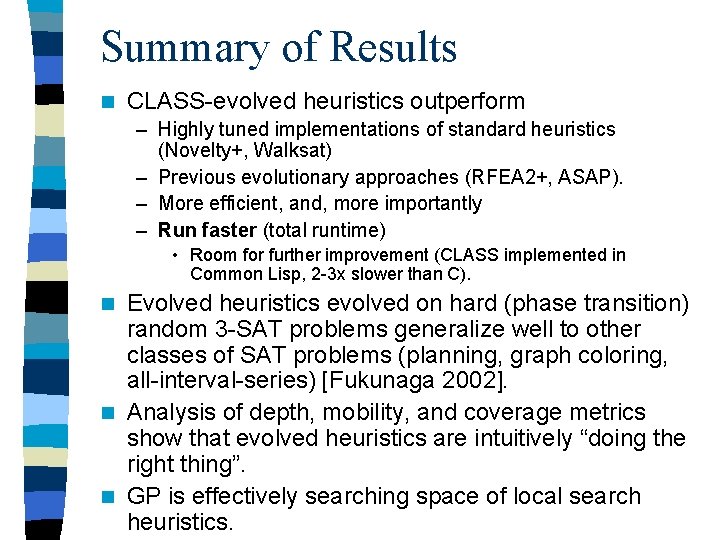

Evolved Heuristics Generalize Well
- Using a training set of 100-250 variable phase-transition instances results in robust heuristics that perform well on:
  - problems from the same class as the training set;
  - bigger problems;
  - standard benchmarks from completely different problem classes (AI planning, circuit verification, graph coloring).
- See the AAAI 2002 paper.

Analysis of Heuristics Learned by CLASS
- What are the learned heuristics doing?
- Why do the learned heuristics generalize?
- Analyze the heuristics using some intuitive metrics proposed by [Schuurmans and Southey, 2000]:
  - Depth: greediness/aggressiveness of the algorithm.
  - Mobility: how fast the algorithm moves between attractors.
  - Coverage: how well the algorithm samples the total search space.

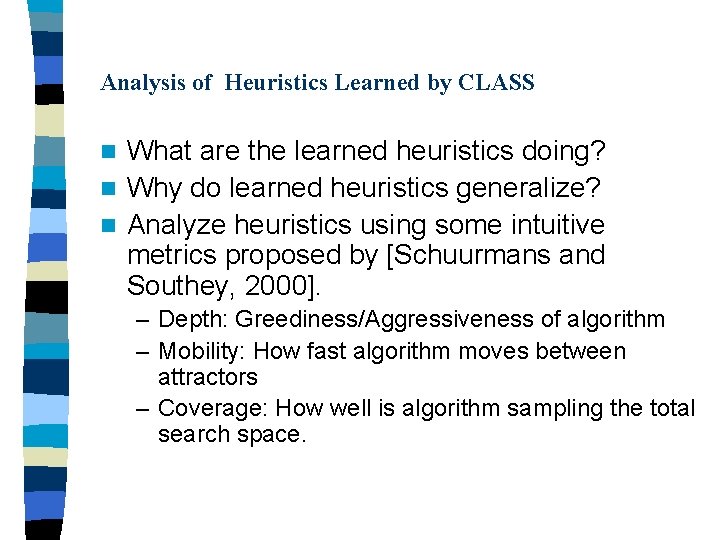

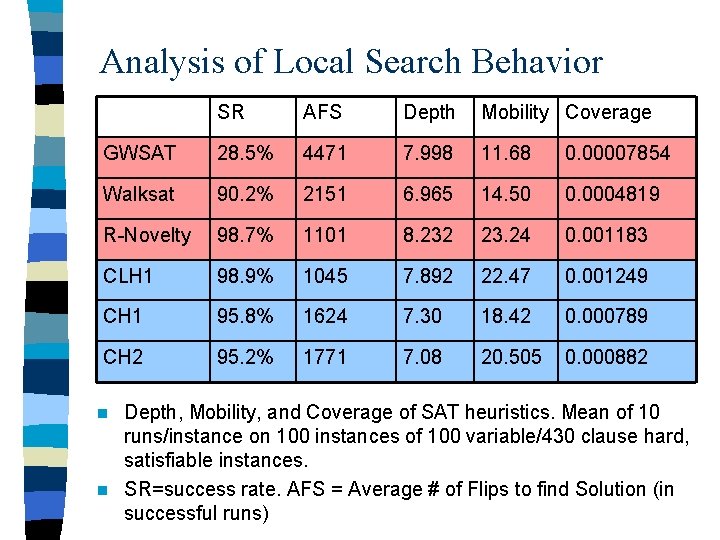

Analysis of Local Search Behavior

| Heuristic | SR | AFS | Depth | Mobility | Coverage |
|---|---|---|---|---|---|
| GWSAT | 28.5% | 4471 | 7.998 | 11.68 | 0.00007854 |
| Walksat | 90.2% | 2151 | 6.965 | 14.50 | 0.0004819 |
| R-Novelty | 98.7% | 1101 | 8.232 | 23.24 | 0.001183 |
| CLH1 | 98.9% | 1045 | 7.892 | 22.47 | 0.001249 |
| CH1 | 95.8% | 1624 | 7.30 | 18.42 | 0.000789 |
| CH2 | 95.2% | 1771 | 7.08 | 20.505 | 0.000882 |

Depth, mobility, and coverage of SAT heuristics: mean of 10 runs/instance on 100 hard, satisfiable instances (100 variables, 430 clauses).
- SR = success rate
- AFS = average # of flips to find a solution (in successful runs)

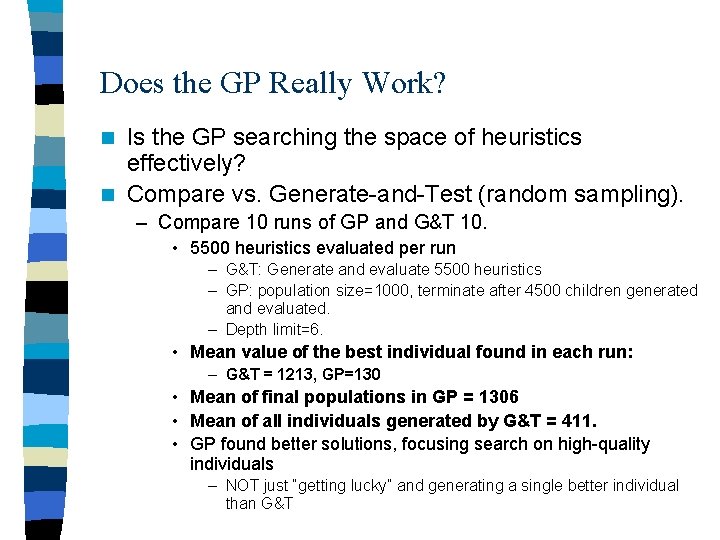

Does the GP Really Work?
- Is the GP searching the space of heuristics effectively?
- Compare vs. generate-and-test (G&T, i.e., random sampling).
  - Compare 10 runs each of GP and G&T, with 5500 heuristics evaluated per run.
  - G&T: generate and evaluate 5500 heuristics.
  - GP: population size = 1000; terminate after 4500 children have been generated and evaluated.
  - Depth limit = 6.
- Results:
  - Mean value of the best individual found in each run: G&T = 1213, GP = 130
  - Mean of the final populations in GP = 1306
  - Mean of all individuals generated by G&T = 411
  - GP found better solutions by focusing the search on high-quality individuals, NOT just by “getting lucky” and generating a single better individual than G&T.

Summary of Results
- CLASS-evolved heuristics outperform:
  - highly tuned implementations of standard heuristics (Novelty+, Walksat);
  - previous evolutionary approaches (RFEA2+, ASAP).
  - They are more efficient and, more importantly, run faster (total runtime).
    - There is room for further improvement (CLASS is implemented in Common Lisp, 2-3x slower than C).
- Heuristics evolved on hard (phase-transition) random 3-SAT problems generalize well to other classes of SAT problems (planning, graph coloring, all-interval series) [Fukunaga 2002].
- Analysis of the depth, mobility, and coverage metrics shows that the evolved heuristics are intuitively “doing the right thing”.
- GP is effectively searching the space of local search heuristics.

Conclusion: CLASS is a Human-Competitive System for SAT Heuristic Discovery
- SAT is a widely studied, difficult, NP-complete problem.
- CLASS uses GP to automate the task of generating good composite SAT local search heuristics.
- CLASS generates heuristics that are competitive with state-of-the-art human-designed SAT local search heuristics.
  - Evolved heuristics significantly outperform heuristics that were state of the art and considered significant achievements at the time of their publication (Novelty/Novelty+, Walksat, GSAT).
- Future work: entry in the SAT-2005 competition.