EVALUATION OF IMAGE SEGMENTATION METHODS Jayaram K Udupa


- Slides: 34

EVALUATION OF IMAGE SEGMENTATION METHODS

Jayaram K. Udupa
Medical Image Processing Group, Department of Radiology
University of Pennsylvania
423 Guardian Drive, 4th Floor Blockley Hall
Philadelphia, Pennsylvania 19104-6021

CAVA: Computer-Aided Visualization and Analysis

The science underlying computerized methods of image processing, analysis, and visualization to facilitate new therapeutic strategies, basic clinical research, education, and training.

CAD: Computer-Aided Diagnosis

The science underlying computerized methods for the diagnosis of diseases via images.

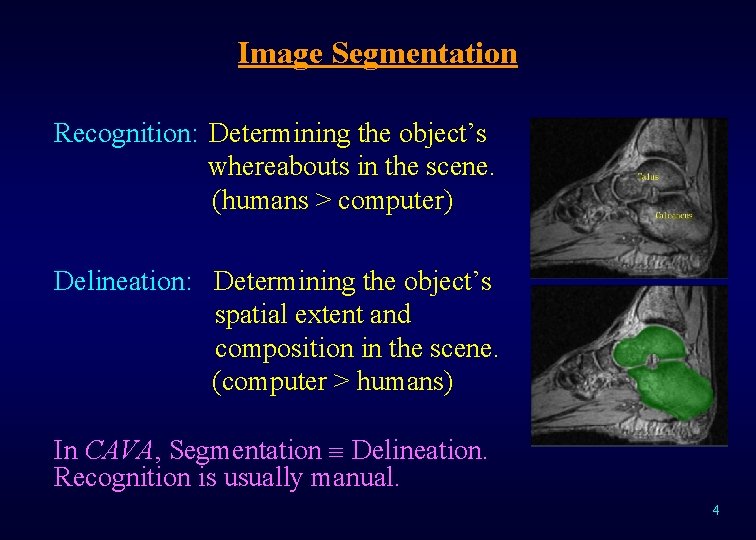

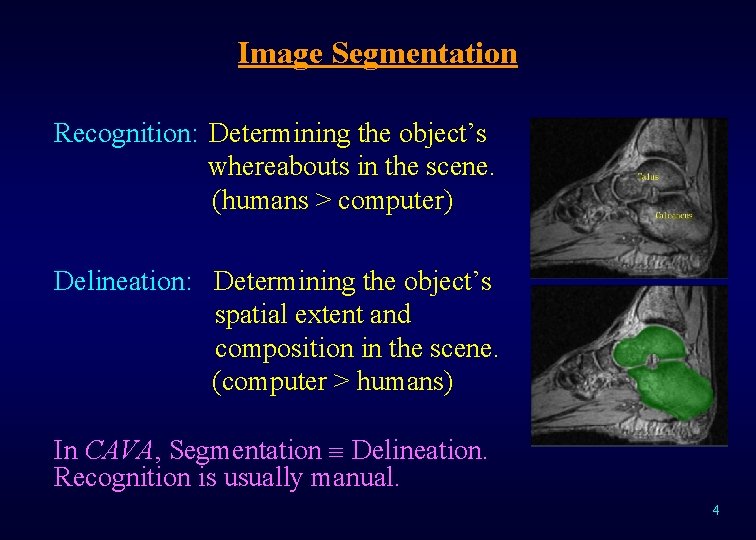

Image Segmentation

- Recognition: determining the object’s whereabouts in the scene. (humans > computer)
- Delineation: determining the object’s spatial extent and composition in the scene. (computer > humans)

In CAVA, segmentation essentially means delineation. Recognition is usually manual.

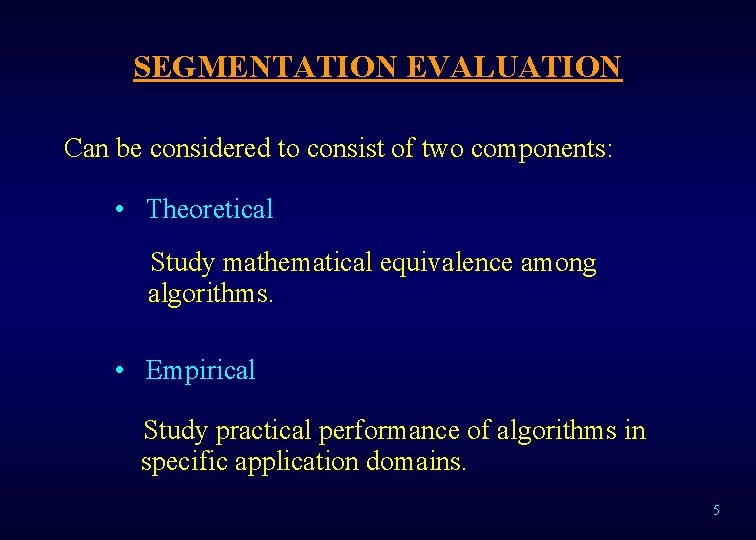

SEGMENTATION EVALUATION

Can be considered to consist of two components:

- Theoretical: study mathematical equivalence among algorithms.
- Empirical: study practical performance of algorithms in specific application domains.

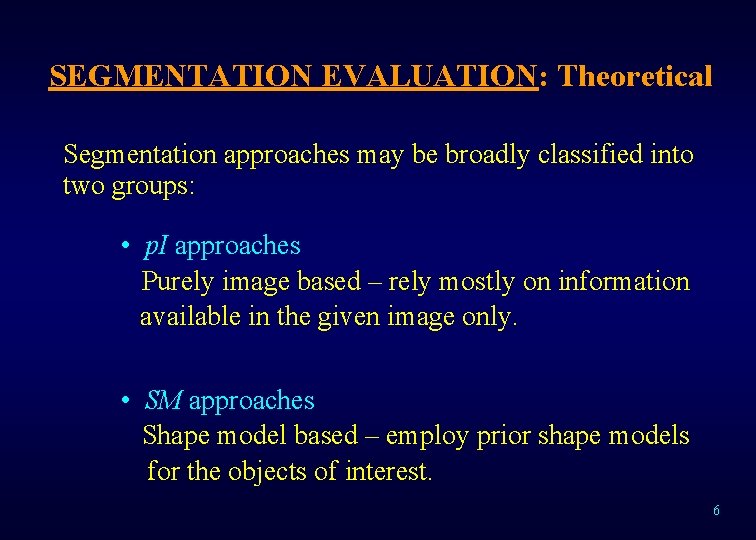

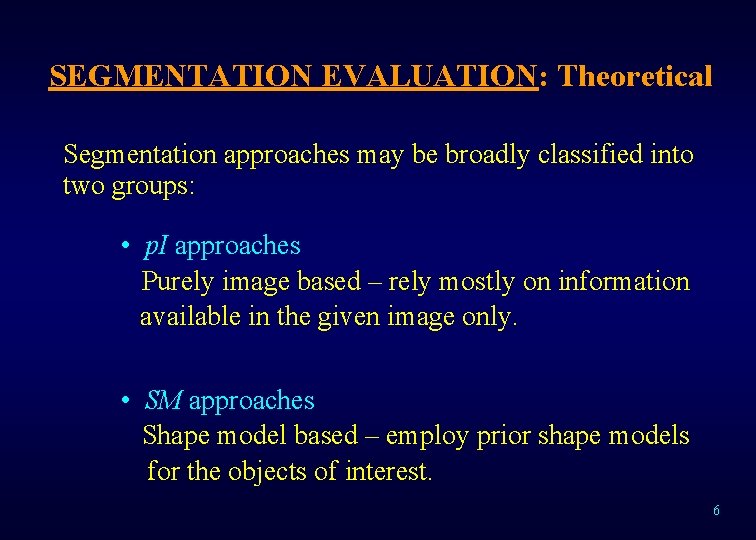

SEGMENTATION EVALUATION: Theoretical

Segmentation approaches may be broadly classified into two groups:

- pI approaches: purely image based. They rely mostly on information available in the given image only.
- SM approaches: shape model based. They employ prior shape models for the objects of interest.

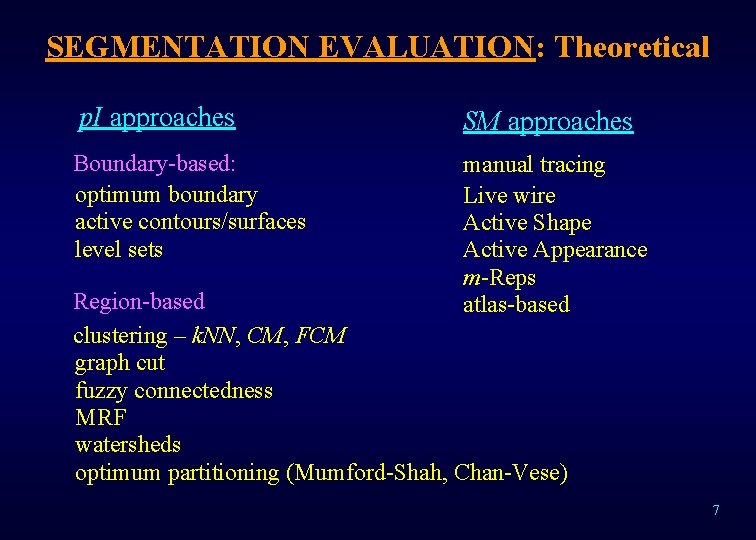

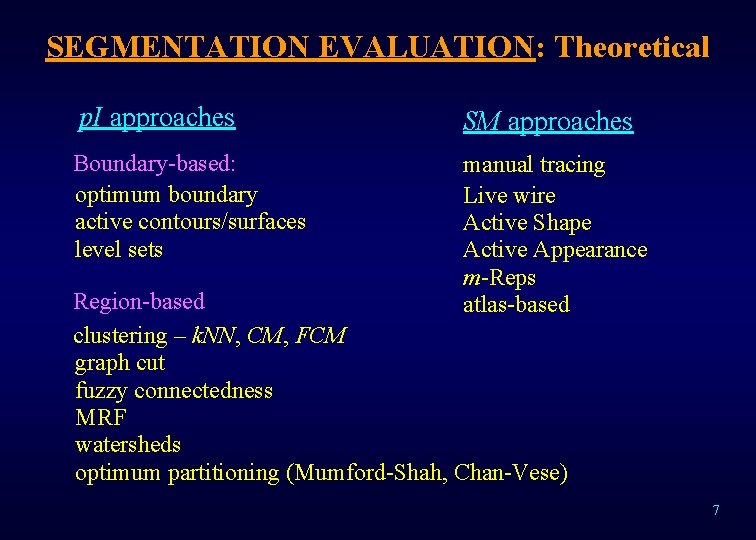

SEGMENTATION EVALUATION: Theoretical

pI approaches

- Boundary-based: optimum boundary, active contours/surfaces, level sets
- Region-based: clustering (kNN, CM, FCM), graph cut, fuzzy connectedness, MRF, watersheds, optimum partitioning (Mumford-Shah, Chan-Vese)

SM approaches

- manual tracing, live wire, active shape, active appearance, m-Reps, atlas-based

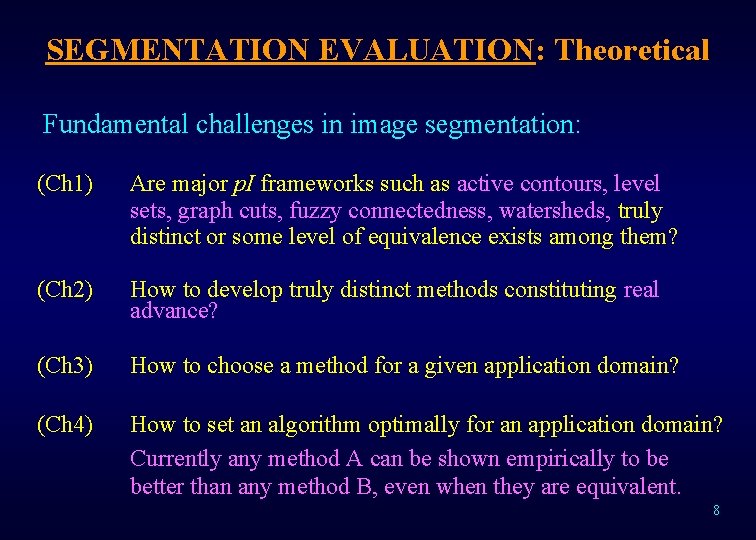

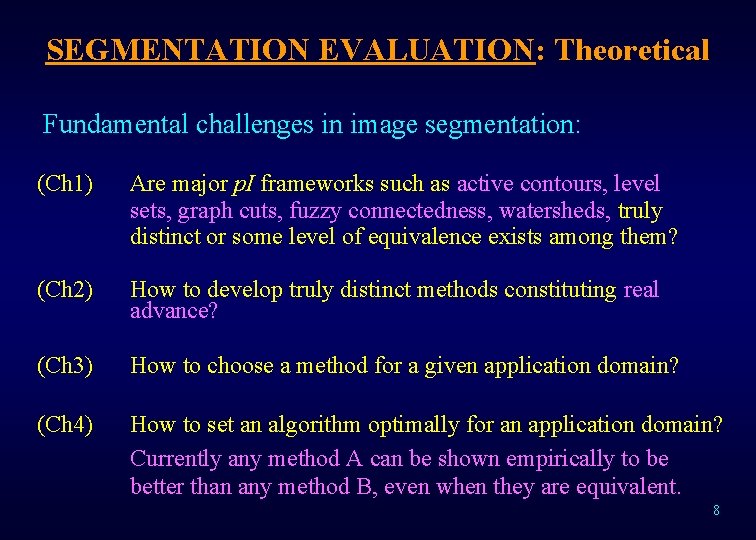

SEGMENTATION EVALUATION: Theoretical

Fundamental challenges in image segmentation:

- (Ch 1) Are major pI frameworks such as active contours, level sets, graph cuts, fuzzy connectedness, and watersheds truly distinct, or does some level of equivalence exist among them?
- (Ch 2) How to develop truly distinct methods constituting a real advance?
- (Ch 3) How to choose a method for a given application domain?
- (Ch 4) How to set an algorithm optimally for an application domain?

Currently any method A can be shown empirically to be better than any method B, even when they are equivalent.

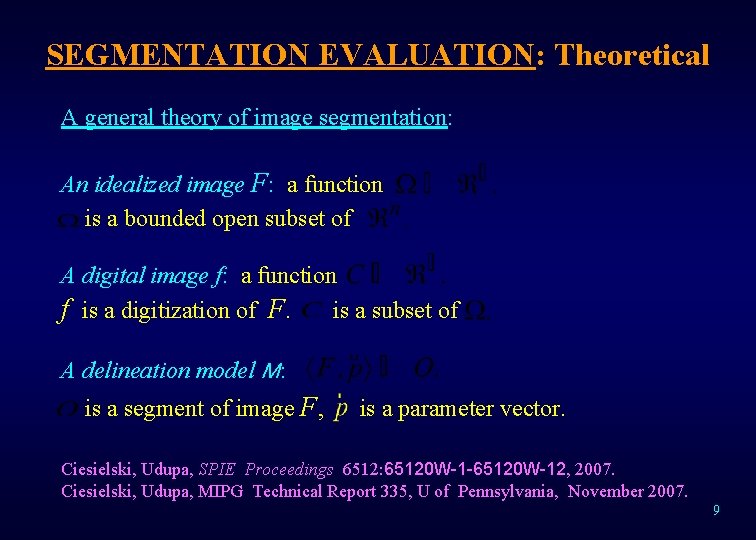

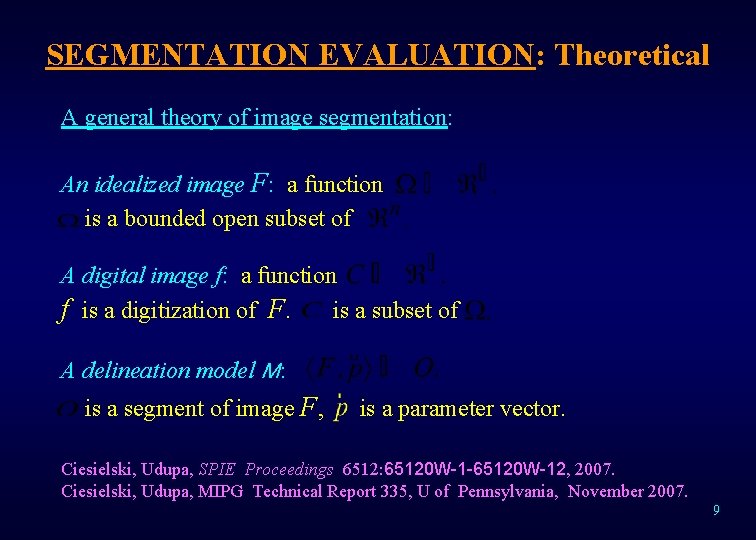

SEGMENTATION EVALUATION: Theoretical

A general theory of image segmentation:

- An idealized image F: a function defined on a bounded open subset of $\mathbb{R}^n$.
- A digital image f: a function defined on a subset of the domain of F; f is a digitization of F.
- A delineation model M: a mapping that, given F and a parameter vector, yields a segment O of image F.

Ciesielski, Udupa, SPIE Proceedings 6512: 65120W-1 to 65120W-12, 2007.

Ciesielski, Udupa, MIPG Technical Report 335, University of Pennsylvania, November 2007.

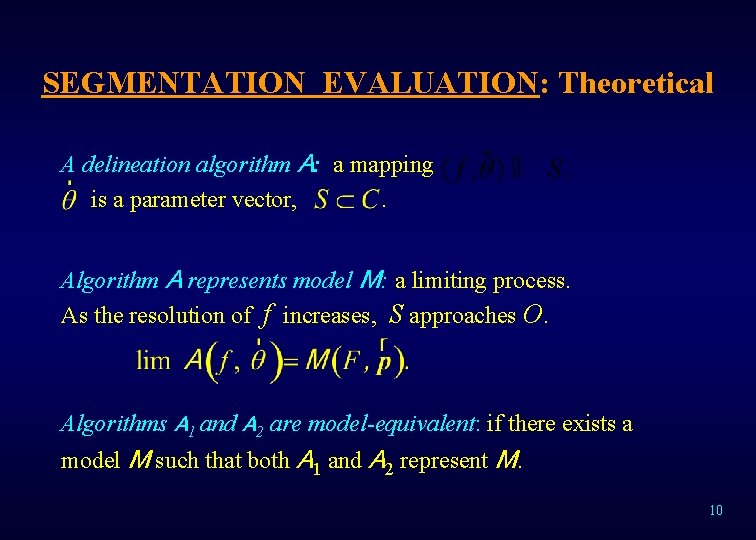

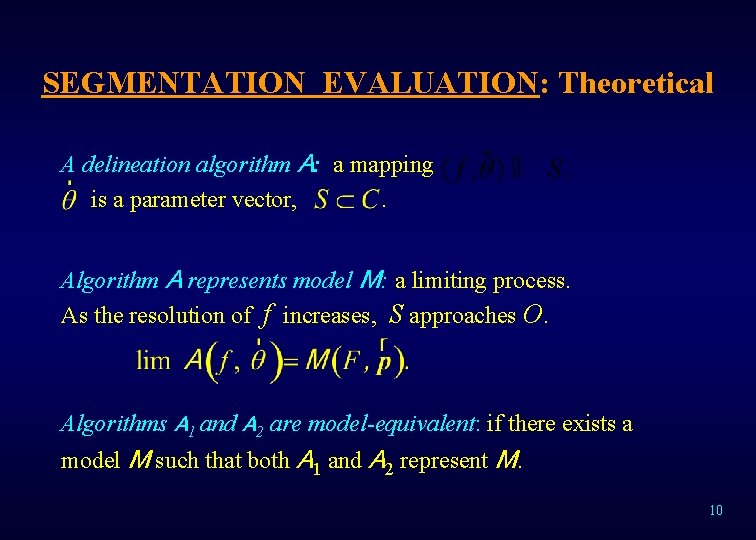

SEGMENTATION EVALUATION: Theoretical

- A delineation algorithm A: a mapping that, given a digital image f and a parameter vector, yields a segmentation S of f.
- Algorithm A represents model M: a limiting process. As the resolution of f increases, S approaches O, the segment of the idealized image F that M yields.
- Algorithms A1 and A2 are model-equivalent if there exists a model M such that both A1 and A2 represent M.

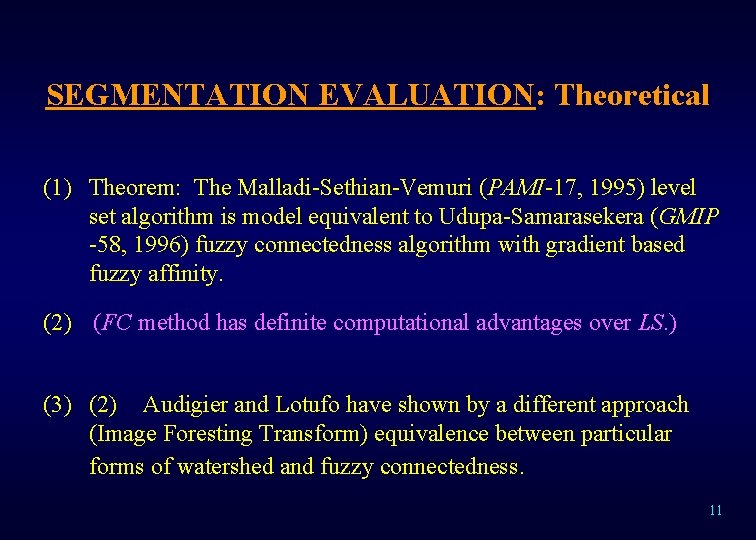

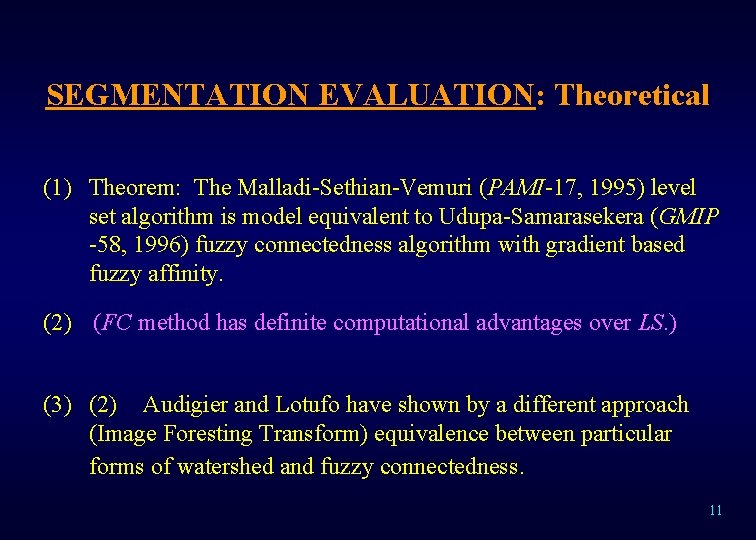

SEGMENTATION EVALUATION: Theoretical

(1) Theorem: The Malladi-Sethian-Vemuri (PAMI-17, 1995) level set algorithm is model-equivalent to the Udupa-Samarasekera (GMIP-58, 1996) fuzzy connectedness algorithm with gradient-based fuzzy affinity. (The FC method has definite computational advantages over LS.)

(2) Audigier and Lotufo have shown by a different approach (Image Foresting Transform) equivalence between particular forms of watershed and fuzzy connectedness.

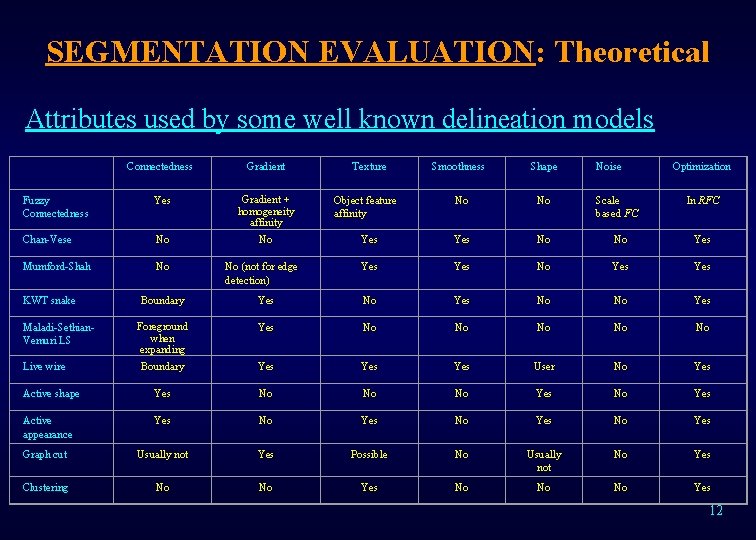

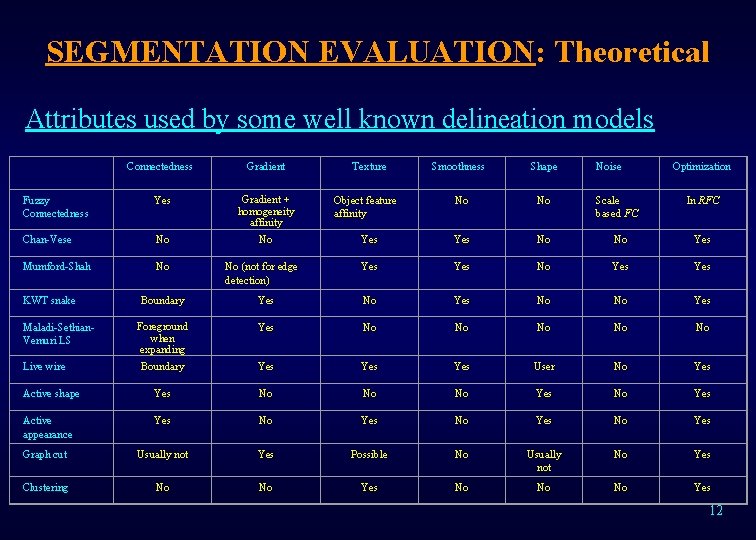

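SEGMENTATION EVALUATION: Theoretical

Attributes used by some well known delineation models

| Model | Connectedness | Gradient | Texture | Smoothness | Shape | Noise | Optimization |
|---|---|---|---|---|---|---|---|
| Fuzzy connectedness | Yes | Gradient + homogeneity affinity | Object feature affinity | No | No | Scale-based FC | In RFC |
| Graph cut | Usually not | Yes | Possible | No | Usually not | No | Yes |
| Clustering | No | No | Yes | No | No | No | Yes |

The slide also covers Chan-Vese, Mumford-Shah, KWT snake, Malladi-Sethian-Vemuri LS, live wire, active shape, and active appearance. Their cells were flattened and cannot be assigned to columns unambiguously; the values in extracted order are:

- Chan-Vese: No, No, Yes
- Mumford-Shah, KWT snake, Malladi-Sethian-Vemuri LS, live wire: No, No (not for edge detection), Yes, No, Yes, Boundary, Yes, No, No, Yes, Foreground when expanding, Yes, No, No, No, Boundary, Yes, Yes, User, No, Yes
- Active shape: Yes, No, No, No, Yes
- Active appearance: Yes, No, Yes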

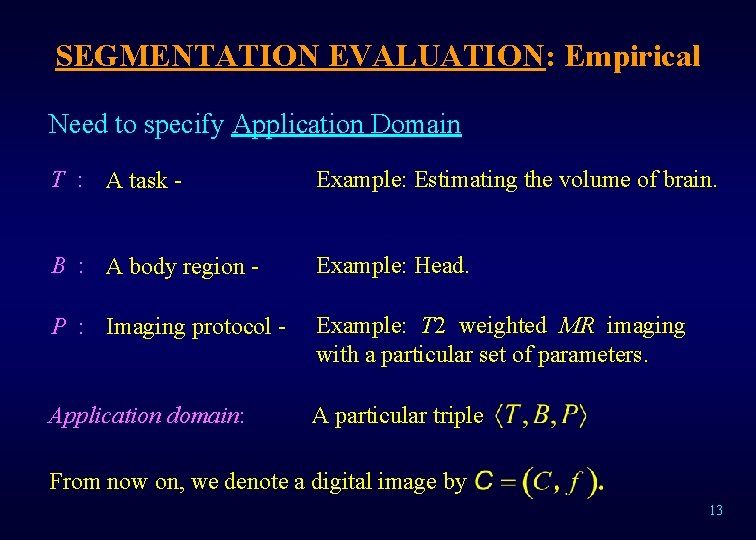

SEGMENTATION EVALUATION: Empirical

Need to specify the application domain:

- T: a task. Example: estimating the volume of the brain.
- B: a body region. Example: head.
- P: an imaging protocol. Example: T2-weighted MR imaging with a particular set of parameters.

Application domain: a particular triple $\langle T, B, P \rangle$.

From now on, a digital image is called a scene and denoted C.

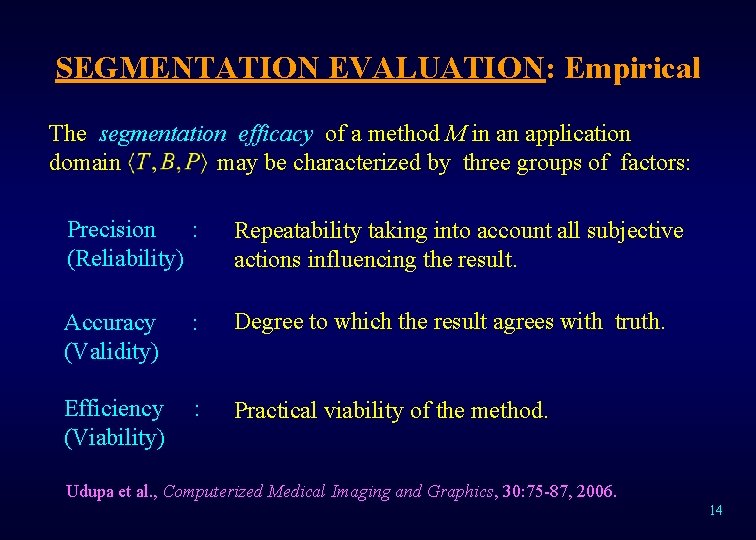

SEGMENTATION EVALUATION: Empirical

The segmentation efficacy of a method M in an application domain $\langle T, B, P \rangle$ may be characterized by three groups of factors:

- Precision (reliability): repeatability, taking into account all subjective actions influencing the result.
- Accuracy (validity): degree to which the result agrees with truth.
- Efficiency (viability): practical viability of the method.

Udupa et al., Computerized Medical Imaging and Graphics, 30: 75-87, 2006.

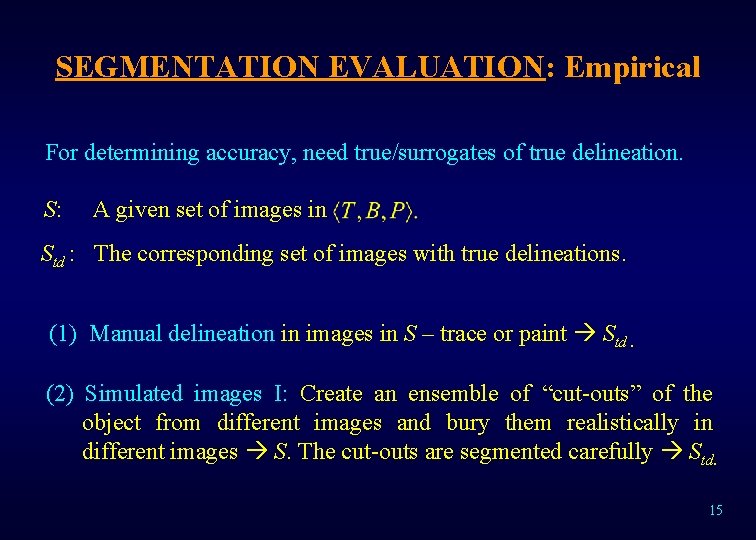

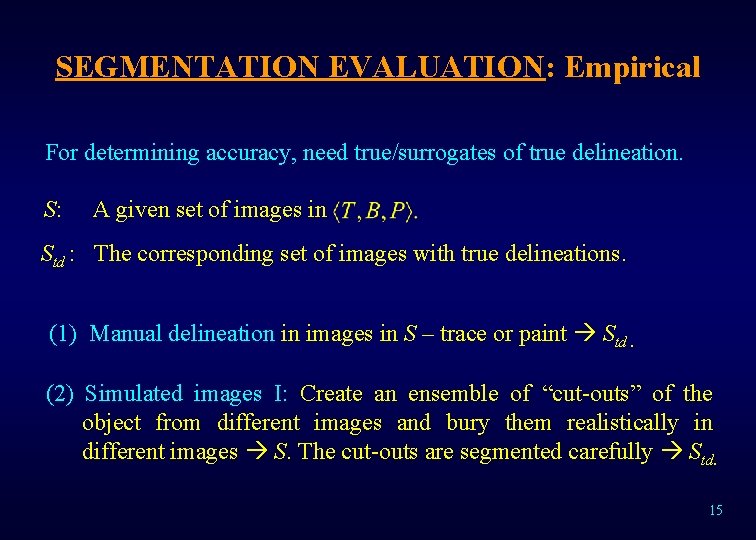

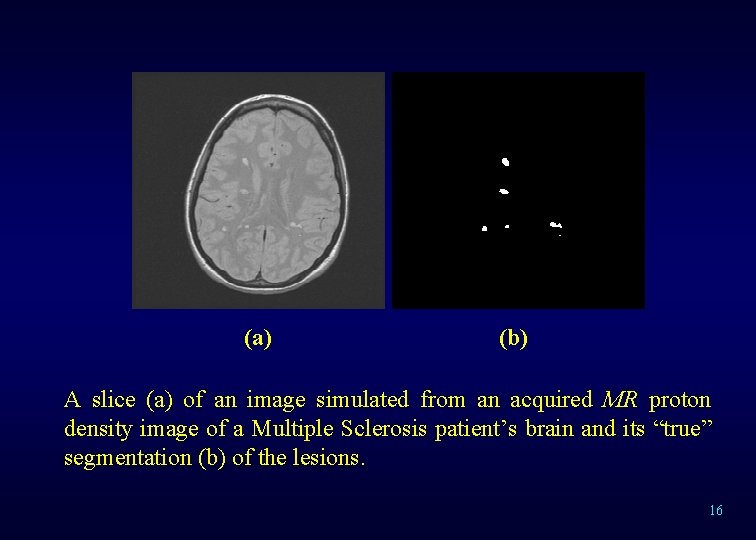

SEGMENTATION EVALUATION: Empirical

For determining accuracy, we need true delineations or surrogates of true delineations.

- $S$: a given set of images in $\langle T, B, P \rangle$.
- $S_{td}$: the corresponding set of images with true delineations.

(1) Manual delineation: trace or paint the object in the images in $S$ to obtain $S_{td}$.

(2) Simulated images I: create an ensemble of “cut-outs” of the object from different images and bury them realistically in different images to obtain $S$. The cut-outs are segmented carefully to obtain $S_{td}$.

Figure: (a) A slice of an image simulated from an acquired MR proton density image of a Multiple Sclerosis patient’s brain, and (b) its “true” segmentation of the lesions.

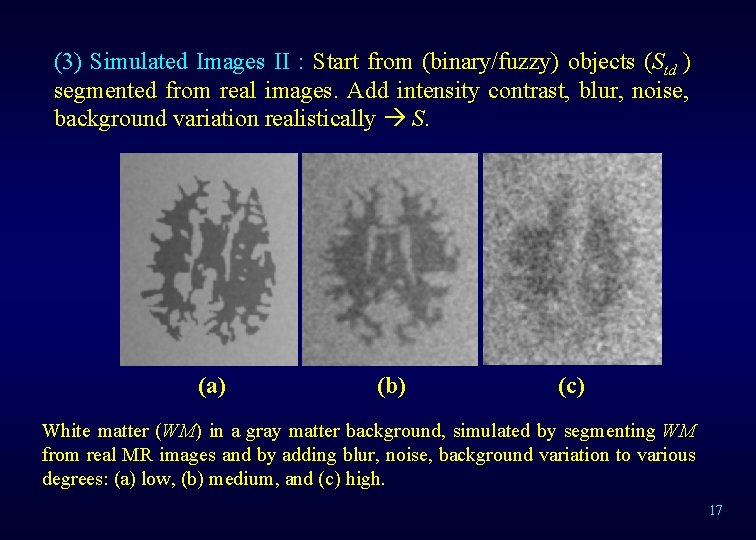

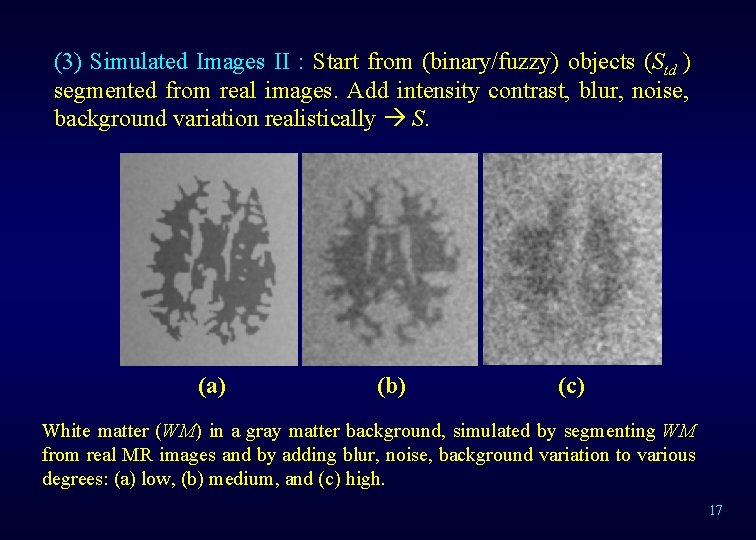

(3) Simulated images II: start from (binary/fuzzy) objects ($S_{td}$) segmented from real images. Add intensity contrast, blur, noise, and background variation realistically to obtain $S$.

Figure: White matter (WM) in a gray matter background, simulated by segmenting WM from real MR images and by adding blur, noise, and background variation to various degrees: (a) low, (b) medium, and (c) high.
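The recipe in (3) can be illustrated with a small sketch. It is not the procedure used to produce the slide's figure: the function name `simulate_image`, the box blur, the linear background ramp, and all parameter values are assumptions chosen only to show the four degradation steps on a toy binary mask.

```python
import random

def simulate_image(mask, fg=200.0, bg=100.0, blur_passes=1,
                   noise_sd=5.0, ramp=20.0, seed=0):
    """Turn a binary object mask (list of rows of 0/1) into a synthetic image."""
    rng = random.Random(seed)
    rows, cols = len(mask), len(mask[0])
    # 1. Intensity contrast: object and background get different mean values.
    img = [[fg if mask[r][c] else bg for c in range(cols)] for r in range(rows)]
    # 2. Blur: repeated 3x3 box averaging (border pixels use existing neighbours).
    for _ in range(blur_passes):
        out = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                vals = [img[rr][cc]
                        for rr in range(max(0, r - 1), min(rows, r + 2))
                        for cc in range(max(0, c - 1), min(cols, c + 2))]
                out[r][c] = sum(vals) / len(vals)
        img = out
    # 3. Background variation: a linear left-to-right intensity ramp.
    # 4. Noise: additive zero-mean Gaussian noise.
    for r in range(rows):
        for c in range(cols):
            img[r][c] += ramp * c / max(1, cols - 1) + rng.gauss(0.0, noise_sd)
    return img

# The known object plays the role of S_td; the simulated image belongs to S.
true_mask = [[1 if 2 <= r <= 5 and 2 <= c <= 5 else 0 for c in range(8)]
             for r in range(8)]
image = simulate_image(true_mask)
```

Raising `blur_passes`, `noise_sd`, and `ramp` together would give progressively harder images in the spirit of the low, medium, and high settings of the figure.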

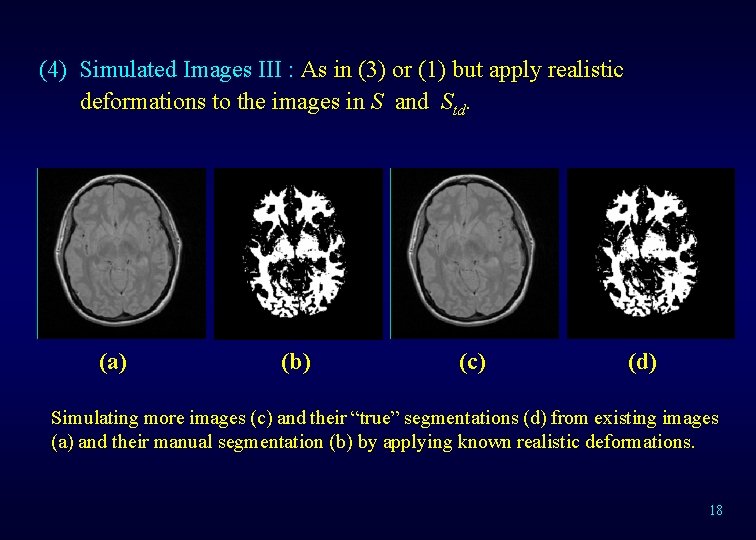

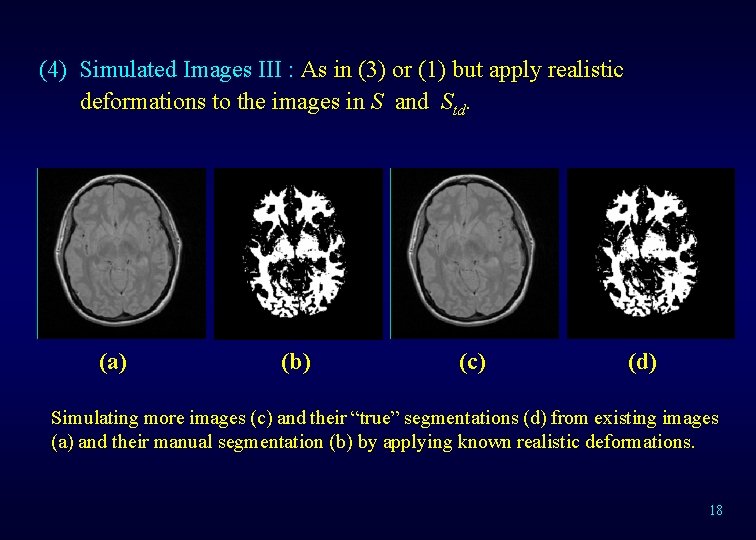

(4) Simulated images III: as in (3) or (1), but apply realistic deformations to the images in $S$ and $S_{td}$.

Figure: Simulating more images (c) and their “true” segmentations (d) from existing images (a) and their manual segmentations (b) by applying known realistic deformations.

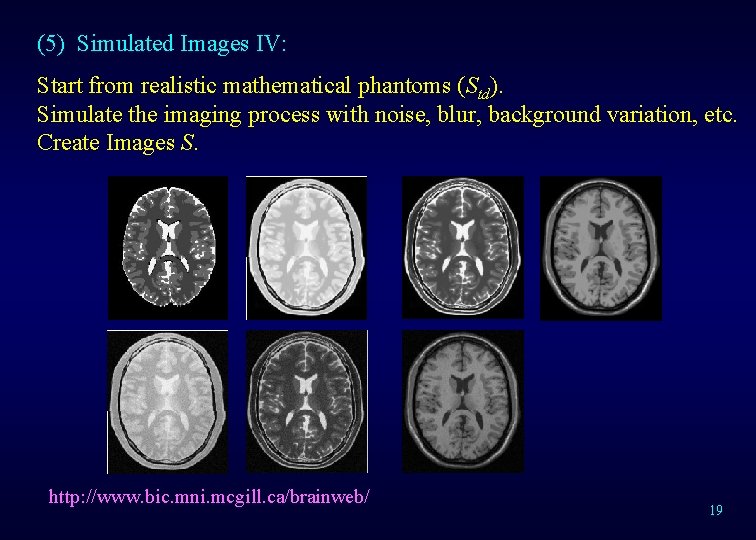

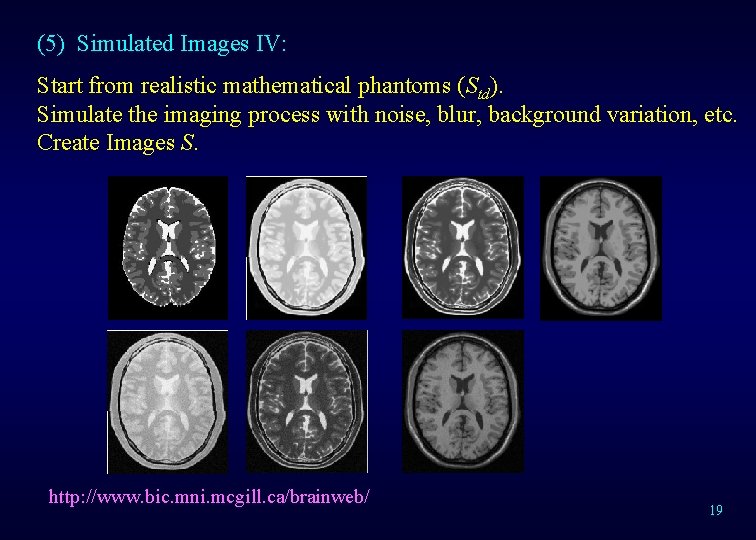

(5) Simulated images IV: start from realistic mathematical phantoms ($S_{td}$). Simulate the imaging process with noise, blur, background variation, etc., to create the images $S$.

http://www.bic.mni.mcgill.ca/brainweb/

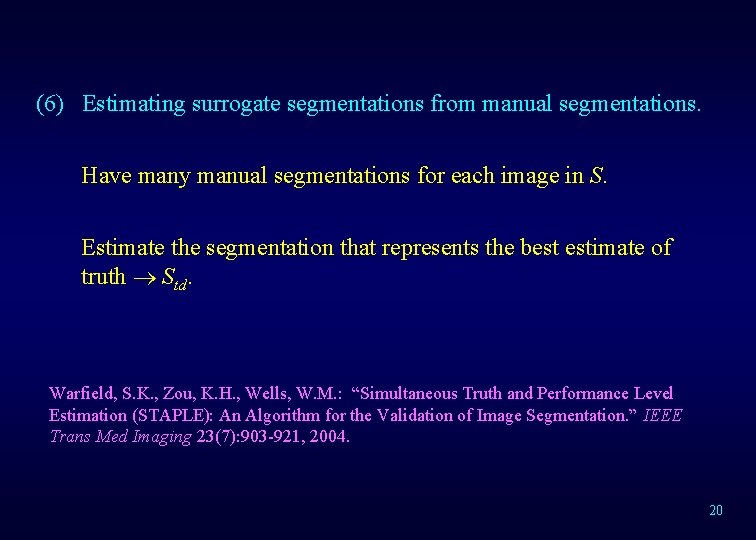

(6) Estimating surrogate segmentations from manual segmentations: have many manual segmentations for each image in $S$. Estimate the segmentation that represents the best estimate of truth to obtain $S_{td}$.

Warfield, S. K., Zou, K. H., Wells, W. M.: “Simultaneous Truth and Performance Level Estimation (STAPLE): An Algorithm for the Validation of Image Segmentation.” IEEE Trans Med Imaging 23(7): 903-921, 2004.
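As a rough illustration of combining several manual segmentations into one surrogate, the sketch below uses plain per-pixel majority voting. This is deliberately a much simpler stand-in and is not the STAPLE algorithm, which also estimates each rater's performance; the function name `majority_vote` and the toy data are assumptions.

```python
def majority_vote(segmentations):
    """Label a pixel as object when more than half of the raters marked it.

    segmentations: list of binary masks (lists of rows of 0/1), one per rater,
    all of the same size.
    """
    n = len(segmentations)
    rows, cols = len(segmentations[0]), len(segmentations[0][0])
    return [[1 if 2 * sum(seg[r][c] for seg in segmentations) > n else 0
             for c in range(cols)]
            for r in range(rows)]

# Three hypothetical raters delineating the same one-row image.
raters = [
    [[0, 1, 1, 0]],
    [[0, 1, 1, 1]],
    [[0, 0, 1, 1]],
]
surrogate = majority_vote(raters)  # [[0, 1, 1, 1]]
```

Majority voting treats all raters as equally reliable, which is exactly the assumption that performance-weighted estimators relax.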

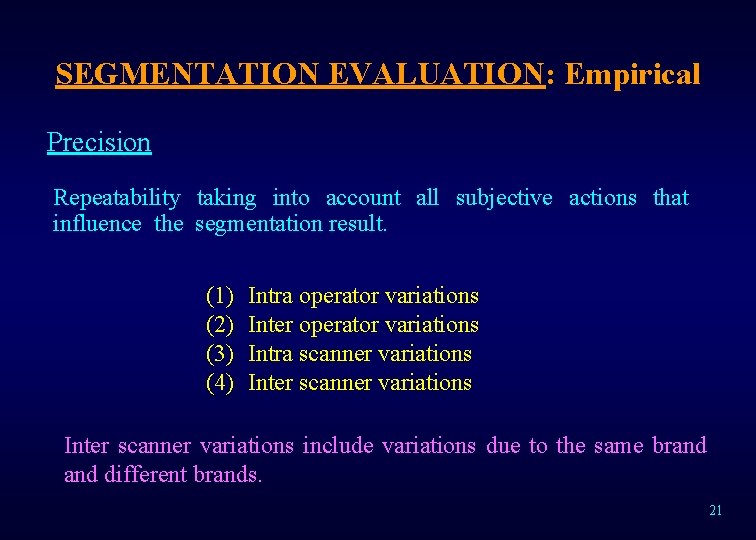

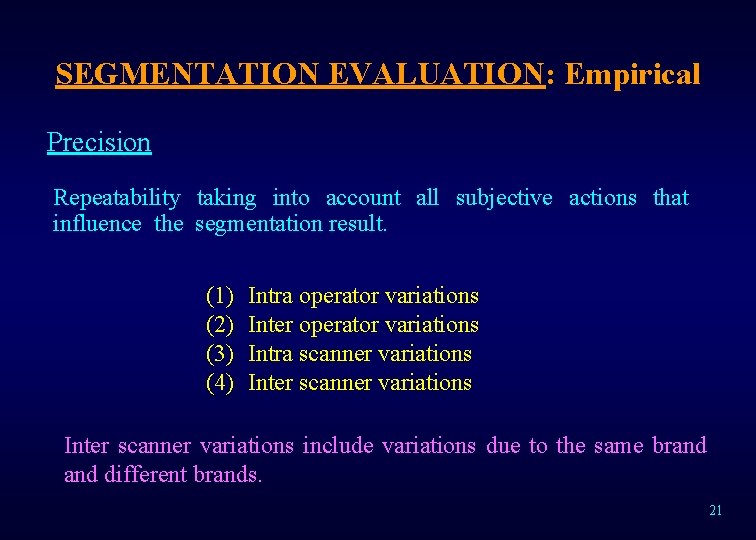

SEGMENTATION EVALUATION: Empirical – Precision

Repeatability, taking into account all subjective actions that influence the segmentation result:

(1) Intra-operator variations
(2) Inter-operator variations
(3) Intra-scanner variations
(4) Inter-scanner variations

Inter-scanner variations include variations due to scanners of the same brand and of different brands.

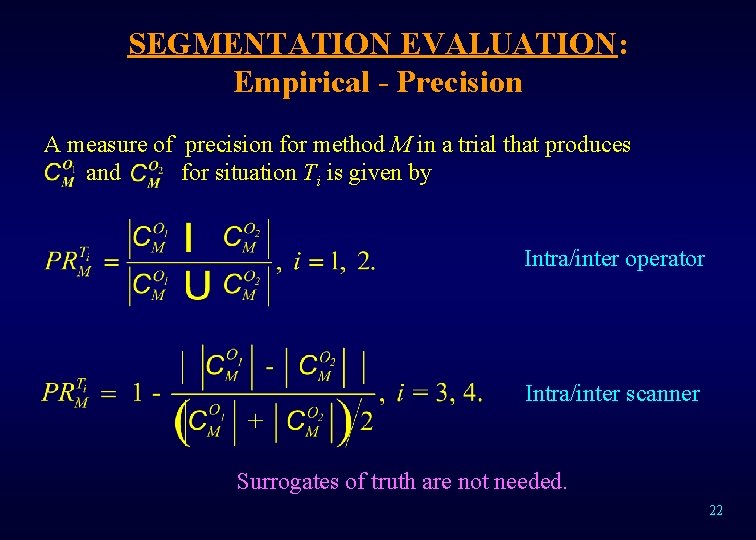

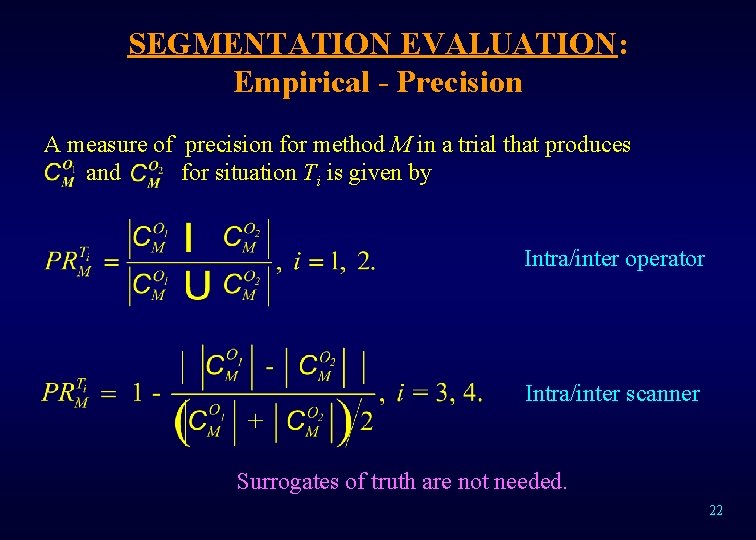

SEGMENTATION EVALUATION: Empirical – Precision

A measure of precision for method M is computed, for each situation $T_i$, from the pair of segmentations that a repeated trial produces. The situations $T_i$ are:

- intra/inter operator
- intra/inter scanner

Surrogates of truth are not needed.
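The slide's formula did not survive extraction. The sketch below assumes an overlap ratio (intersection over union) between the two segmentations of a repeated trial, which is one common way to quantify repeatability; whether it matches the slide's exact definition is an assumption, and the name `repeatability` is hypothetical.

```python
def repeatability(seg_a, seg_b):
    """Overlap ratio |A ∩ B| / |A ∪ B| of two segmentations of the same scene.

    seg_a, seg_b: iterables of voxel coordinates labelled as object in each
    of the two repeated trials (e.g. two operators, or two scans).
    """
    a, b = set(seg_a), set(seg_b)
    union = a | b
    if not union:
        return 1.0  # two empty segmentations agree perfectly
    return len(a & b) / len(union)

# Hypothetical inter-operator trial on the same scene.
operator_1 = {(0, 0), (0, 1), (1, 0), (1, 1)}
operator_2 = {(0, 1), (1, 1), (1, 2)}
precision = repeatability(operator_1, operator_2)  # 2 shared / 5 total = 0.4
```

Because only the two trial outputs are compared with each other, no surrogate of truth enters the computation.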

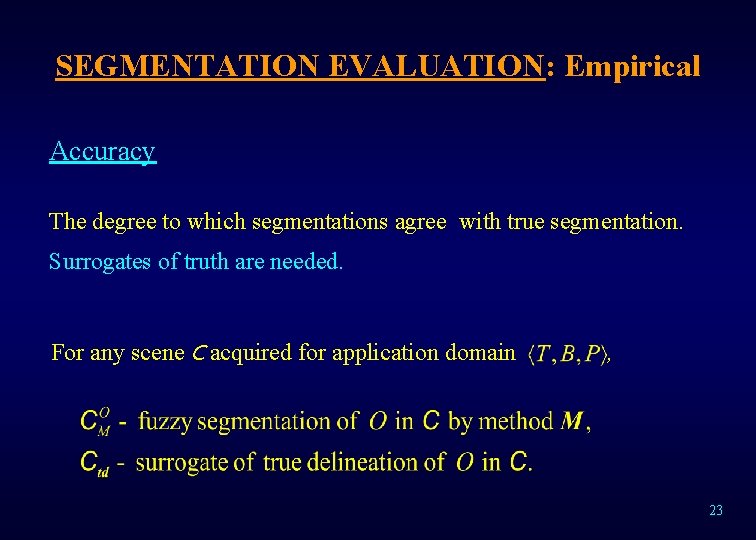

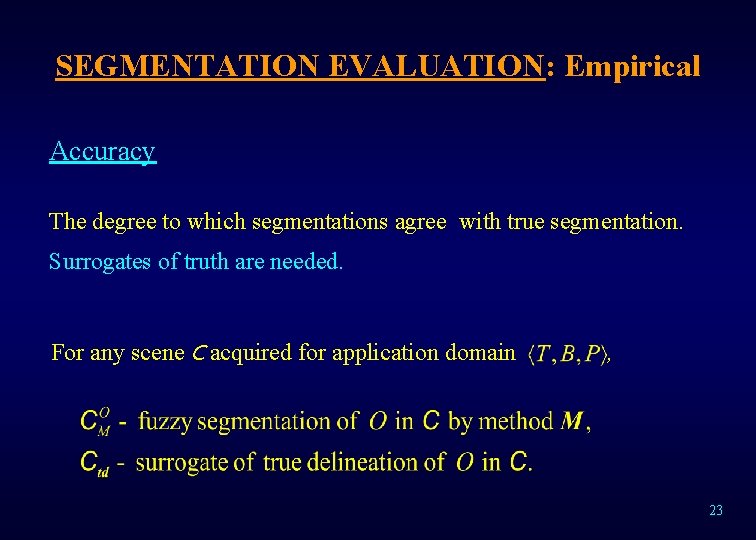

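SEGMENTATION EVALUATION: Empirical – Accuracy

The degree to which segmentations agree with the true segmentation. Surrogates of truth are needed.

For any scene C acquired for application domain $\langle T, B, P \rangle$, accuracy is expressed through the false negative and false positive volume fractions (FNVF, FPVF).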

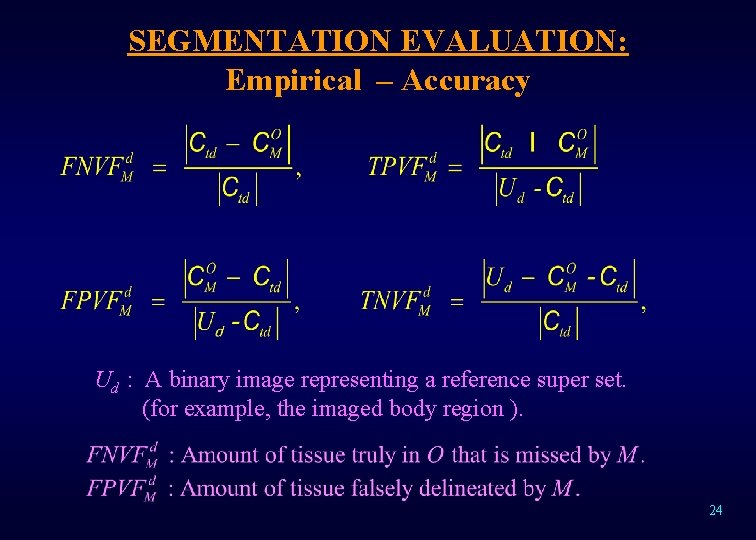

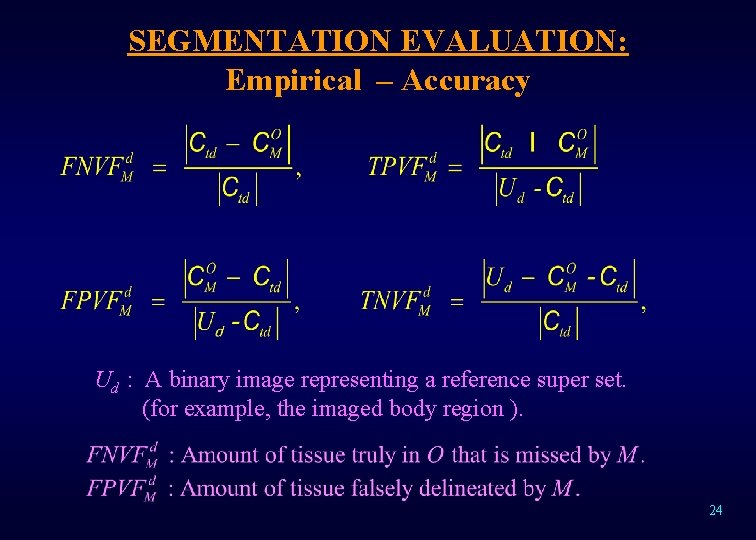

SEGMENTATION EVALUATION: Empirical – Accuracy

$U_d$: a binary image representing a reference super set (for example, the imaged body region B).

SEGMENTATION EVALUATION: Empirical – Accuracy

Requirements for accuracy metrics:

(1) Capture M’s behavior of trade-off between FP and FN.
(2) Satisfy fractional relations among the volume fractions.
(3) Capable of characterizing the range of behavior of M.
(4) Boundary-based FN and FP metrics may also be devised.
(5) Any monotonic function g(FNVF, FPVF) is fine as a metric.
(6) Appropriate for $\langle T, B, P \rangle$.
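The volume-fraction formulas themselves are not present in the extracted text. The sketch below assumes the usual set-based definitions, with FN and TP normalized by the size of the true object and FP and TN normalized by the size of the true background inside the reference super set. Under those assumed definitions the relations FNVF + TPVF = 1 and FPVF + TNVF = 1 hold by construction. The function name and toy data are hypothetical.

```python
def volume_fractions(segmented, truth, superset):
    """Return (FNVF, FPVF, TPVF, TNVF) for voxel sets inside a reference super set.

    Assumed definitions (not recovered from the slides):
      FNVF = |T - M| / |T|          TPVF = |M ∩ T| / |T|
      FPVF = |M - T| / |U - T|      TNVF = |U - (M ∪ T)| / |U - T|
    """
    u = set(superset)
    m = set(segmented) & u
    t = set(truth) & u
    background = u - t
    fnvf = len(t - m) / len(t)
    tpvf = len(m & t) / len(t)
    fpvf = len(m - t) / len(background)
    tnvf = len(u - (m | t)) / len(background)
    return fnvf, fpvf, tpvf, tnvf

# Toy one-dimensional "scene" of 10 voxels.
U = range(10)
T = {2, 3, 4, 5}   # true object
M = {4, 5, 6}      # output of the method under test
fnvf, fpvf, tpvf, tnvf = volume_fractions(M, T, U)
# fnvf = 2/4, fpvf = 1/6, tpvf = 2/4, tnvf = 5/6
```

Normalizing FP by the background inside the super set rather than by the object size keeps FPVF within [0, 1] even when the method grossly over-segments.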

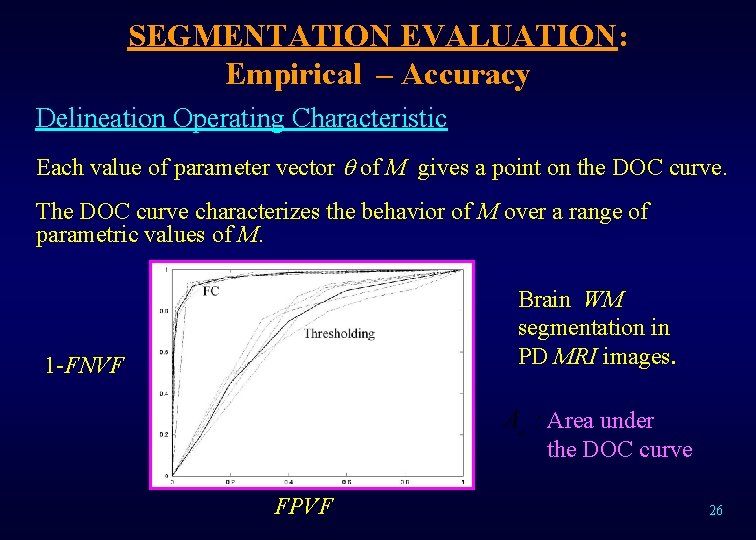

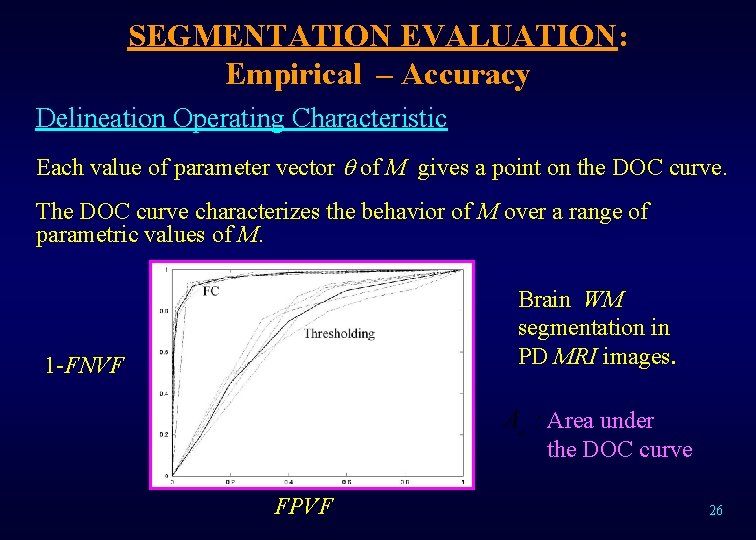

SEGMENTATION EVALUATION: Empirical – Accuracy

Delineation Operating Characteristic (DOC)

Each value of the parameter vector of M gives a point on the DOC curve. The DOC curve characterizes the behavior of M over a range of its parameter values.

Figure: DOC curve for brain WM segmentation in PD MRI images. Horizontal axis: FPVF. Vertical axis: 1 - FNVF. The area under the DOC curve is marked.
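A DOC curve can be traced by sweeping a parameter of the method and recording one (FPVF, 1 - FNVF) point per setting. The sketch below uses a plain intensity threshold as a stand-in for the method M and the trapezoidal rule for the area; the function names, the toy intensities, and the choice of thresholds are all assumptions for illustration.

```python
def doc_curve(image, truth, thresholds):
    """Return (FPVF, 1 - FNVF) points, one per threshold, sorted by FPVF."""
    n_true = sum(truth)
    n_background = len(truth) - n_true
    points = []
    for t in thresholds:
        seg = [1 if v >= t else 0 for v in image]
        tp = sum(1 for s, g in zip(seg, truth) if s and g)
        fp = sum(1 for s, g in zip(seg, truth) if s and not g)
        points.append((fp / n_background, tp / n_true))
    return sorted(points)

def area_under(points):
    """Trapezoidal area under a curve given as sorted (x, y) points."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Toy scene: 8 voxels, brighter voxels mostly belong to the object.
image = [10, 20, 30, 40, 50, 60, 70, 80]
truth = [0, 0, 0, 1, 0, 1, 1, 1]
curve = doc_curve(image, truth, thresholds=[0, 35, 45, 55, 90])
area = area_under(curve)  # 0.9375
```

Including one threshold below and one above the whole intensity range anchors the curve at (1, 1) and (0, 0), so areas from different methods are comparable.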

SEGMENTATION EVALUATION: Empirical – Efficiency

Describes the practical viability of a method. Four factors should be considered:

(1) Computational time for one-time training of M
(2) Computational time for segmenting each scene
(3) Human time for one-time training of M
(4) Human time for segmenting each scene

(2) and (4) are crucial. (4) determines the degree of automation of M.

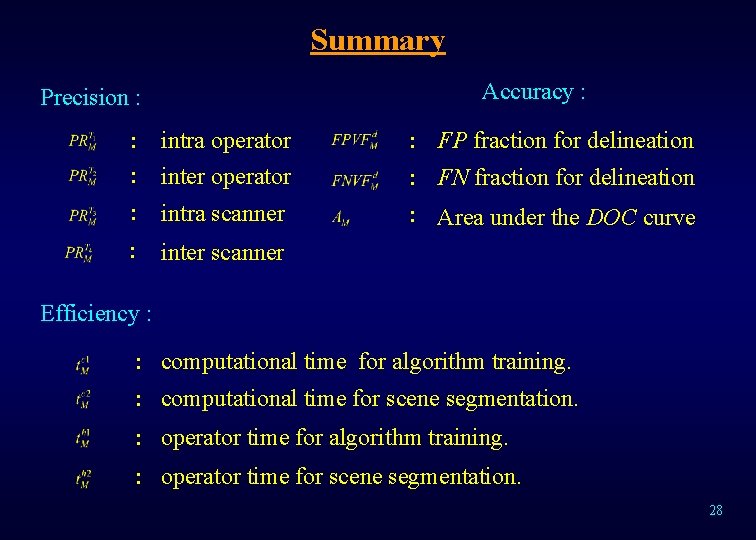

Summary

Precision:

- intra operator
- inter operator
- intra scanner
- inter scanner

Accuracy:

- FN fraction for delineation
- FP fraction for delineation
- area under the DOC curve

Efficiency:

- computational time for algorithm training
- computational time for scene segmentation
- operator time for algorithm training
- operator time for scene segmentation

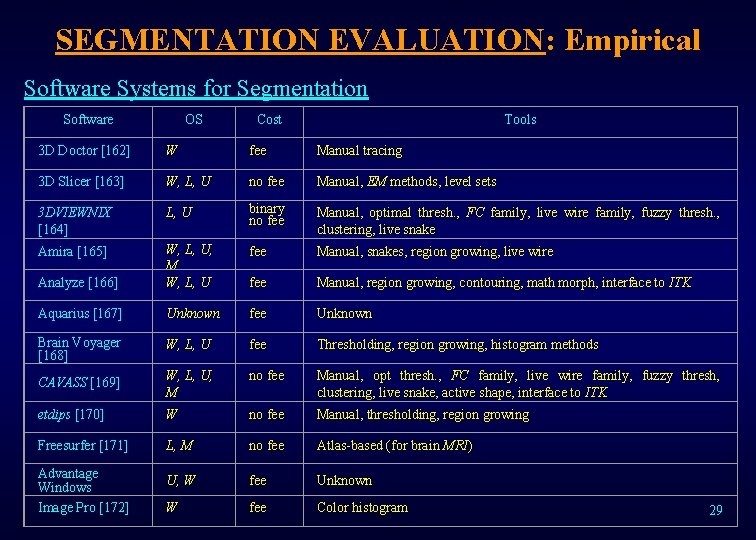

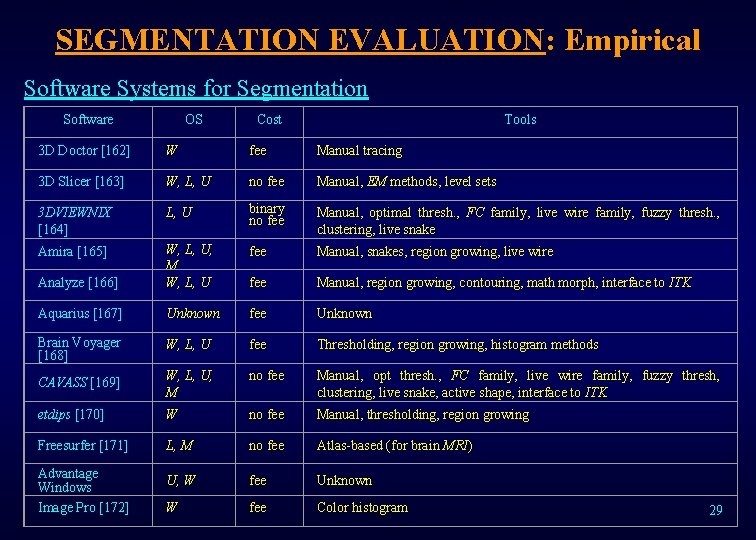

SEGMENTATION EVALUATION: Empirical

Software Systems for Segmentation

| Software | OS | Cost | Tools |
|---|---|---|---|
| 3D Doctor [162] | W | fee | Manual tracing |
| 3D Slicer [163] | W, L, U | no fee | Manual, EM methods, level sets |
| 3DVIEWNIX [164] | L, U binary | no fee | Manual, optimal thresh., FC family, live wire family, fuzzy thresh., clustering, live snake |
| Amira [165] | W, L, U, M | fee | Manual, snakes, region growing, live wire |
| Analyze [166] | W, L, U | fee | Manual, region growing, contouring, math morph, interface to ITK |
| Aquarius [167] | Unknown | fee | Unknown |
| Brain Voyager [168] | W, L, U | fee | Thresholding, region growing, histogram methods |
| CAVASS [169] | W, L, U, M | no fee | Manual, opt thresh., FC family, live wire family, fuzzy thresh., clustering, live snake, active shape, interface to ITK |
| etdips [170] | W | no fee | Manual, thresholding, region growing |
| Freesurfer [171] | L, M | no fee | Atlas-based (for brain MRI) |
| Advantage Windows | U, W | fee | Unknown |
| Image Pro [172] | W | fee | Color histogram |

![SEGMENTATION EVALUATION: Empirical Software Systems (cont’d)](https://slidetodoc.com/presentation_image/4257f41de004d7d7e423cc528f643813/image-30.jpg)

SEGMENTATION EVALUATION: Empirical

Software Systems (cont’d)

| Software | OS | Cost | Tools |
|---|---|---|---|
| Imaris [173] | W | fee | Thresholding (microscopic images) |
| ITK [174] | W, L, U, M | no fee | Thresh., level sets, watershed, fuzzy connectedness, active shape, region growing, etc. |
| MeVisLab [175] | W, L binary | no fee | Manual, thresh., region growing, fuzzy connectedness, live wire |
| MRVision [176] | L, U, M | fee | Manual, region growing |
| Osiris [177] | W, M | no fee | Thresholding, region growing |
| RadioDexter [178] | Unknown | fee | Unknown |
| SurfDriver [179] | W, M | fee | Manual |
| SliceOmatic [180] | W | fee | Thresholding, watershed, region growing, snakes |
| Syngo InSpace [181] | Unknown | fee | Automatic bone removal |
| VIDA [182] | Unknown | fee | Manual, thresholding |
| Vitrea [183] | Unknown | fee | Unknown |
| VolView [184] | W, L, U | fee | Level sets, region growing, watershed |
| Voxar [185] | W, L, U | fee | Unknown |

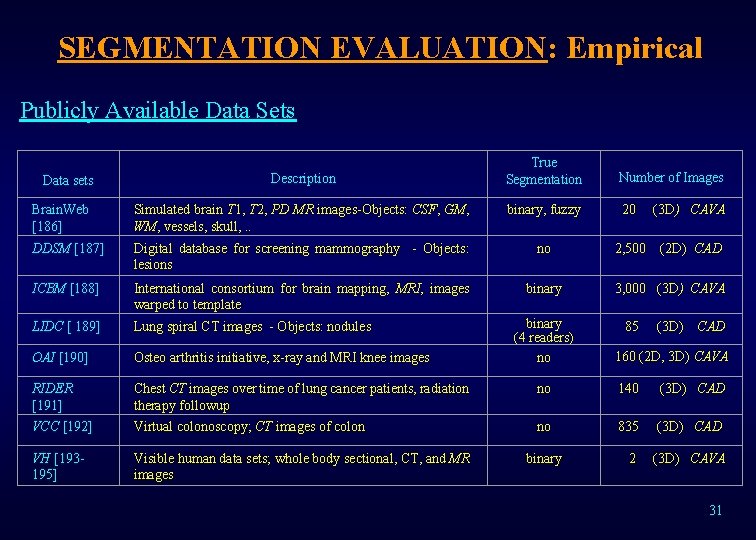

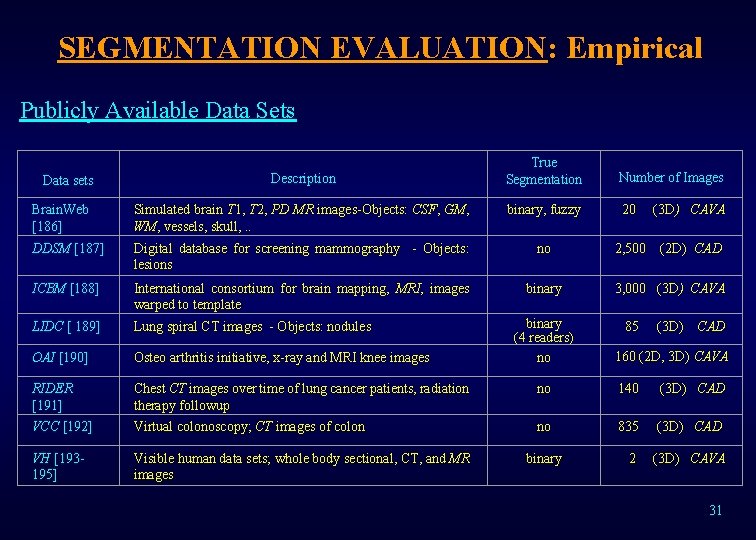

SEGMENTATION EVALUATION: Empirical

Publicly Available Data Sets

| Data set | Description | True segmentation | Number of images | CAVA/CAD |
|---|---|---|---|---|
| BrainWeb [186] | Simulated brain T1, T2, PD MR images. Objects: CSF, GM, WM, vessels, skull, ... | binary, fuzzy | 20 (3D) | CAVA |
| DDSM [187] | Digital database for screening mammography. Objects: lesions | no | 2,500 (2D) | CAD |
| ICBM [188] | International consortium for brain mapping, MRI, images warped to template | binary | 3,000 (3D) | CAVA |
| LIDC [189] | Lung spiral CT images. Objects: nodules | binary (4 readers) | 85 (3D) | CAD |
| OAI [190] | Osteoarthritis initiative, x-ray and MRI knee images | no | 160 (2D, 3D) | CAVA |
| RIDER [191] | Chest CT images over time of lung cancer patients, radiation therapy follow-up | no | 140 (3D) | CAD |
| VCC [192] | Virtual colonoscopy; CT images of colon | no | 835 (3D) | CAD |
| VH [193-195] | Visible human data sets; whole body sectional, CT, and MR images | binary | 2 (3D) | CAVA |

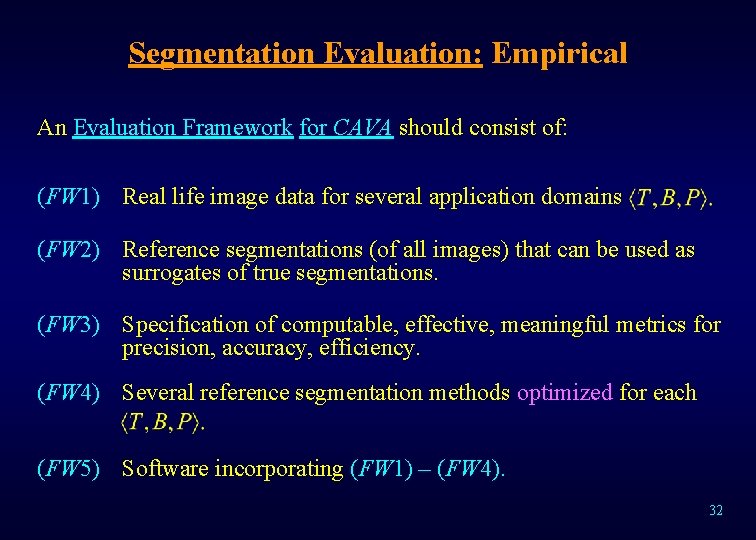

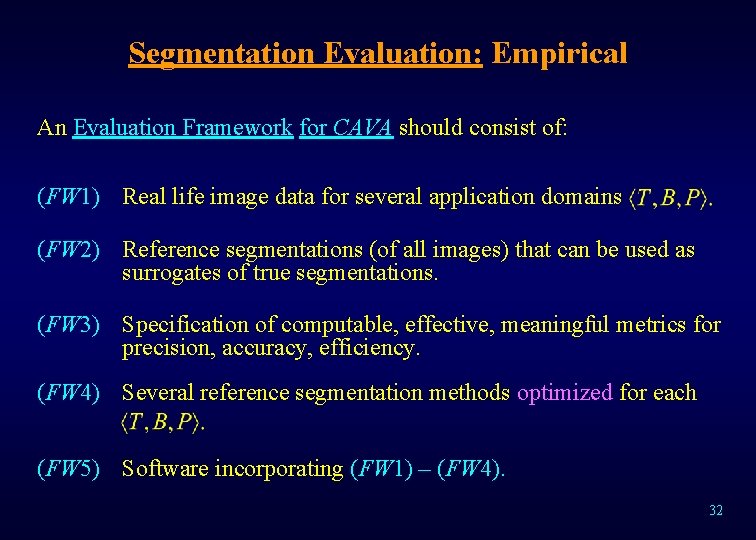

SEGMENTATION EVALUATION: Empirical

An evaluation framework for CAVA should consist of:

- (FW 1) Real-life image data for several application domains $\langle T, B, P \rangle$.
- (FW 2) Reference segmentations (of all images) that can be used as surrogates of true segmentations.
- (FW 3) Specification of computable, effective, meaningful metrics for precision, accuracy, efficiency.
- (FW 4) Several reference segmentation methods optimized for each $\langle T, B, P \rangle$.
- (FW 5) Software incorporating (FW 1) to (FW 4).

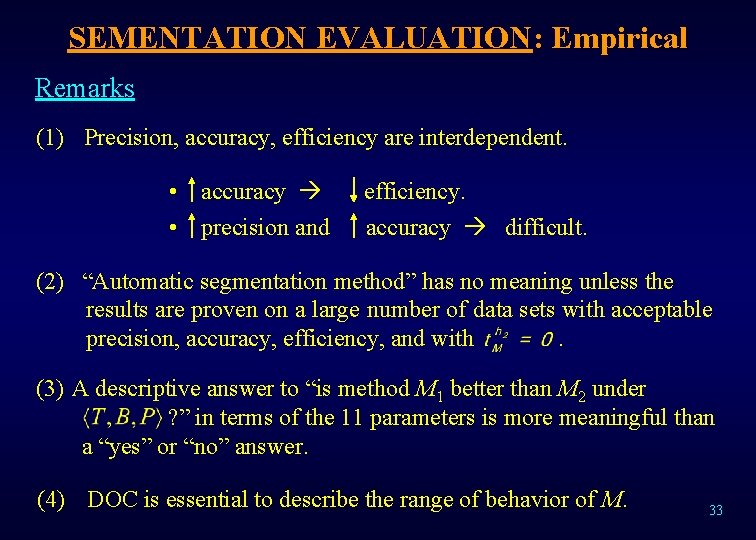

SEGMENTATION EVALUATION: Empirical

Remarks

(1) Precision, accuracy, and efficiency are interdependent.

- Gains in accuracy tend to cost efficiency.
- Achieving both high precision and high accuracy is difficult.

(2) “Automatic segmentation method” has no meaning unless the results are proven on a large number of data sets with acceptable precision, accuracy, and efficiency, and with the application domain $\langle T, B, P \rangle$ specified.

(3) A descriptive answer to “is method M1 better than M2 under $\langle T, B, P \rangle$?” in terms of the 11 parameters is more meaningful than a “yes” or “no” answer.

(4) DOC is essential to describe the range of behavior of M.

Concluding Remarks

(1) Need unifying segmentation theories that can explain equivalences/distinctness of existing algorithms. This can ensure true advances in segmentation.

(2) Need evaluation frameworks with FW 1 to FW 5. This can standardize methods of empirical comparison of competing and distinct algorithms.