Evaluating Medical Literature Dr Shounak Das Aug 26

- Slides: 50

Evaluating Medical Literature Dr. Shounak Das Aug. 26, 2008

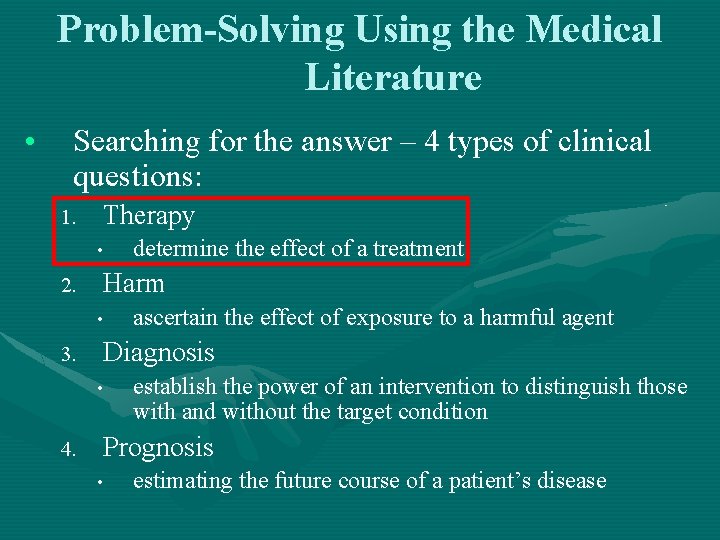

Problem-Solving Using the Medical Literature • Searching for the answer – 4 types of clinical questions: 1. Therapy • determine the effect of a treatment 2. Harm • ascertain the effect of exposure to a harmful agent 3. Diagnosis • establish the power of an intervention to distinguish those with and without the target condition 4. Prognosis • estimate the future course of a patient’s disease

Problem-Solving Using the Medical Literature • Sources of evidence: Ø Prefiltered • authors have already accumulated the best of published +/- unpublished evidence (i.e. a systematic review) • examples = Best Evidence, Cochrane Library, UpToDate, and Clinical Evidence Ø Unfiltered • MEDLINE = U.S. National Library of Medicine database (contains primary studies and reviews)

Evaluation of Primary Studies Assessing Therapies • When using the medical literature to answer a clinical question, approach the study using three discrete steps*: 1) Are the results of the study valid? 2) What are the results? 3) How can I apply these results to patient care? *These 3 steps are applicable to primary studies assessing harm, diagnosis, and prognosis as well as therapies; they are also applicable for the evaluation of systematic reviews.

1. Are the Results of the Study Valid? • Assessing validity requires answering 2 further questions: a) Did experimental and control groups begin the study with a similar prognosis? b) Did experimental and control groups retain a similar prognosis after the study started?

1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • To determine whether or not the experimental and control groups began the study with similar prognoses, we ask 4 questions: 1. Were patients randomized? 2. Was randomization concealed (blinded or masked)? 3. Were patients analyzed in the groups to which they were randomized? 4. Were patients in the treatment and control groups similar with respect to known prognostic factors?

1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • Were patients randomized? Ø randomization is important so that the experimental and control groups are matched for both known and unknown prognostic factors

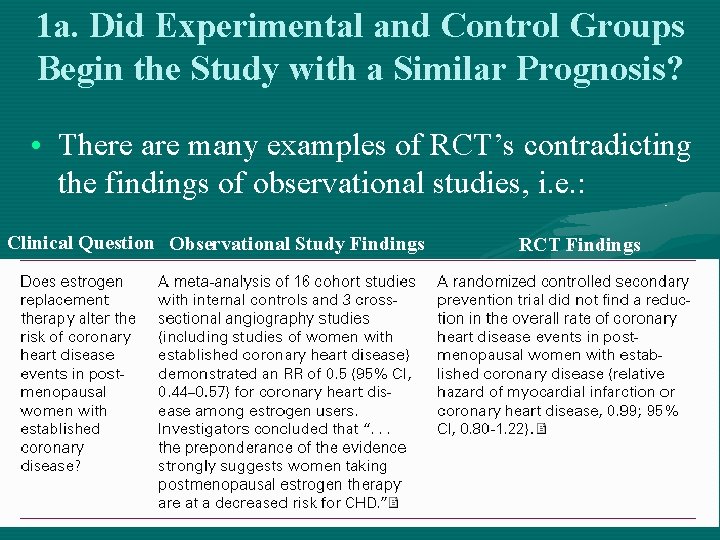

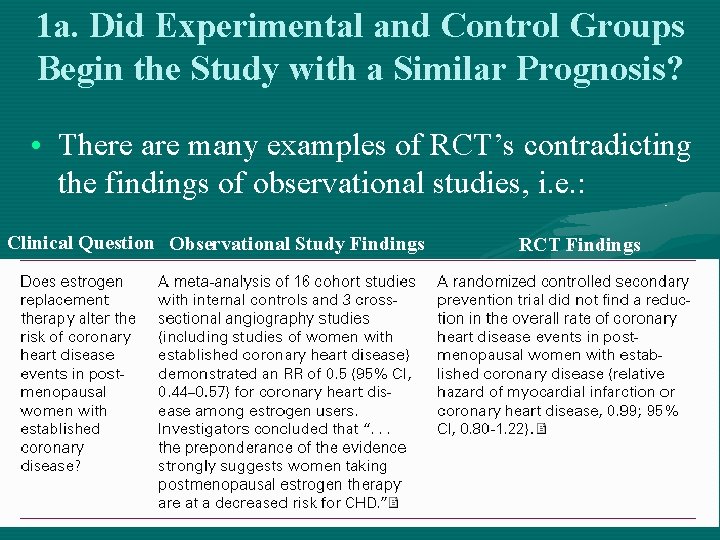

1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • There are many examples of RCTs contradicting the findings of observational studies, e.g.: (table columns: Clinical Question, Observational Study Findings, RCT Findings)

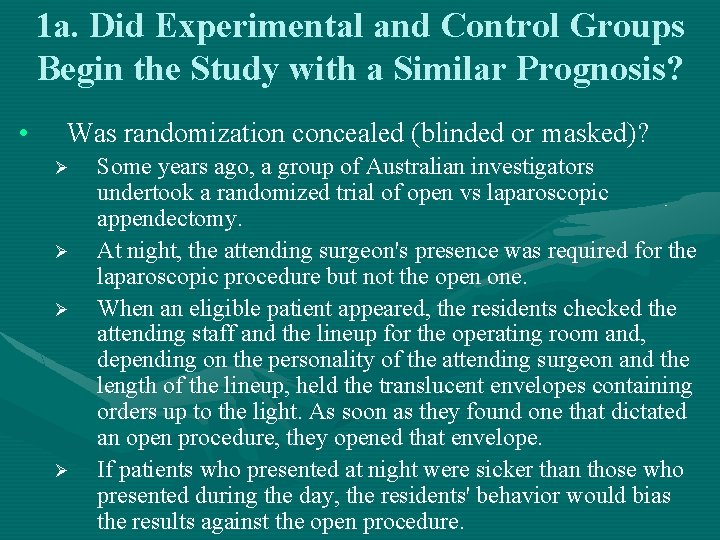

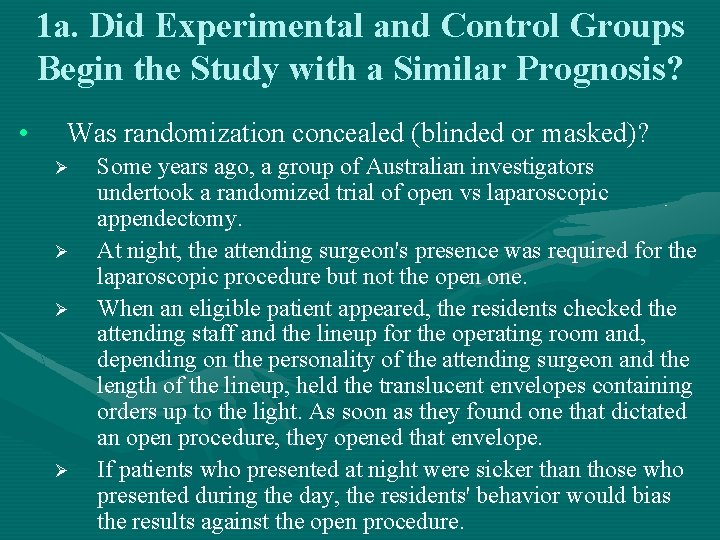

1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • Was randomization concealed (blinded or masked)? Ø Some years ago, a group of Australian investigators undertook a randomized trial of open vs. laparoscopic appendectomy. At night, the attending surgeon's presence was required for the laparoscopic procedure but not the open one. When an eligible patient appeared, the residents checked the attending staff and the lineup for the operating room and, depending on the personality of the attending surgeon and the length of the lineup, held the translucent envelopes containing orders up to the light. As soon as they found one that dictated an open procedure, they opened that envelope. Ø If patients who presented at night were sicker than those who presented during the day, the residents' behavior would bias the results against the open procedure.

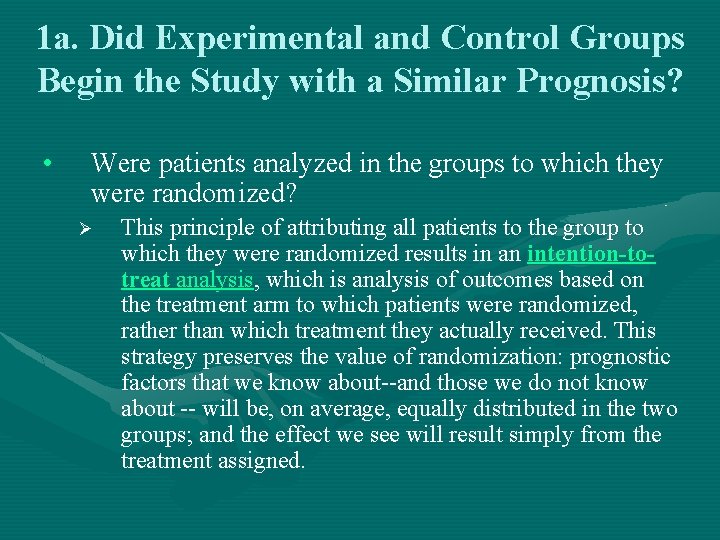

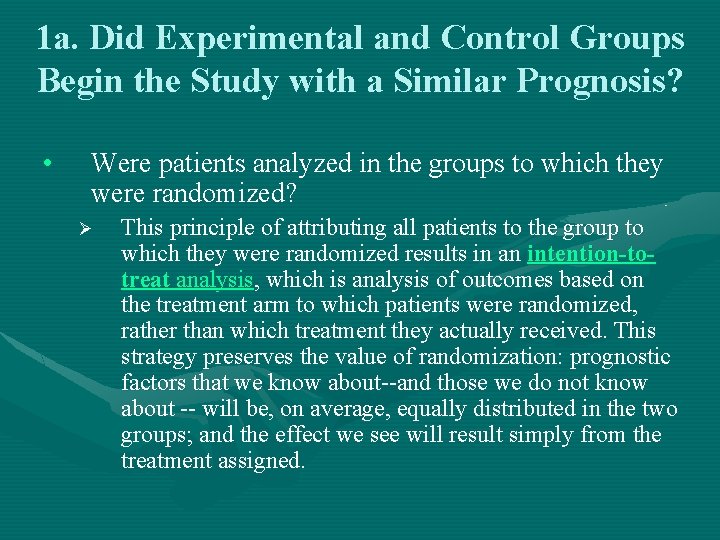

1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • Were patients analyzed in the groups to which they were randomized? Ø This principle of attributing all patients to the group to which they were randomized results in an intention-to-treat analysis, which is analysis of outcomes based on the treatment arm to which patients were randomized, rather than which treatment they actually received. This strategy preserves the value of randomization: prognostic factors that we know about -- and those we do not know about -- will be, on average, equally distributed in the two groups; and the effect we see will result simply from the treatment assigned.

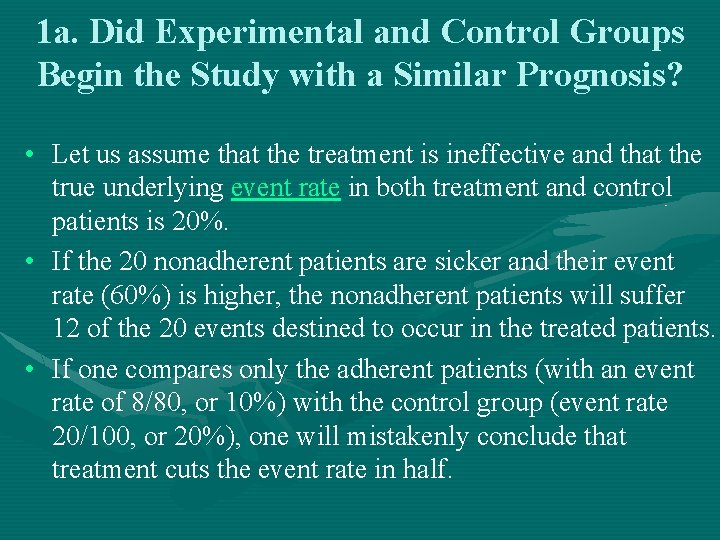

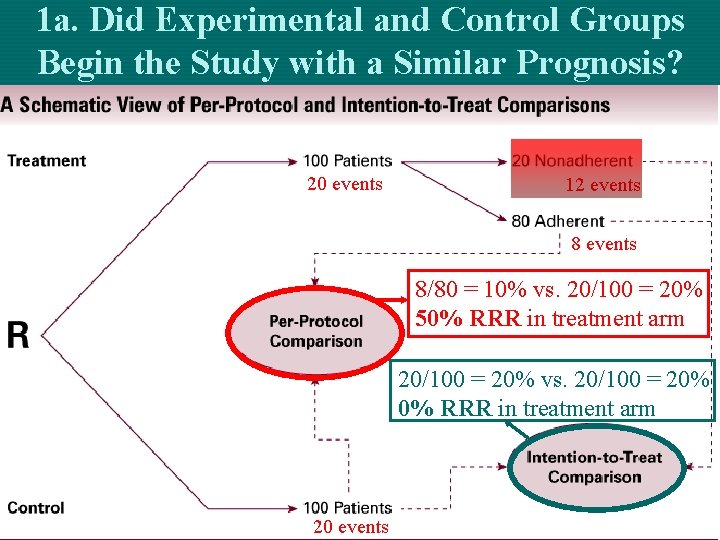

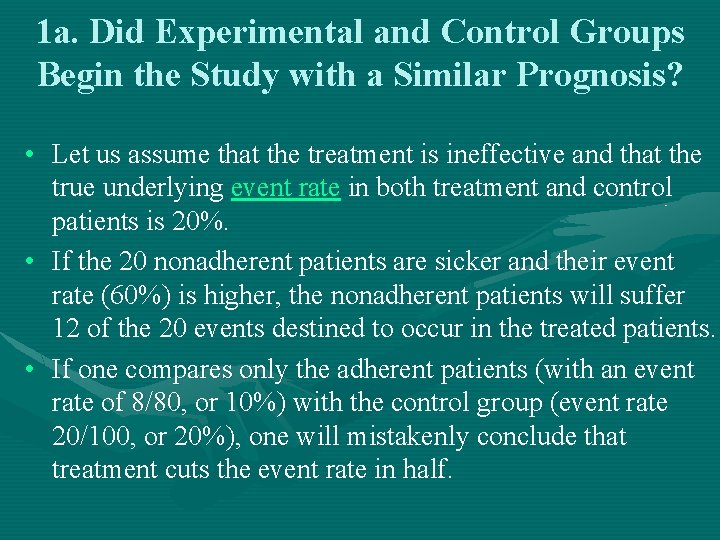

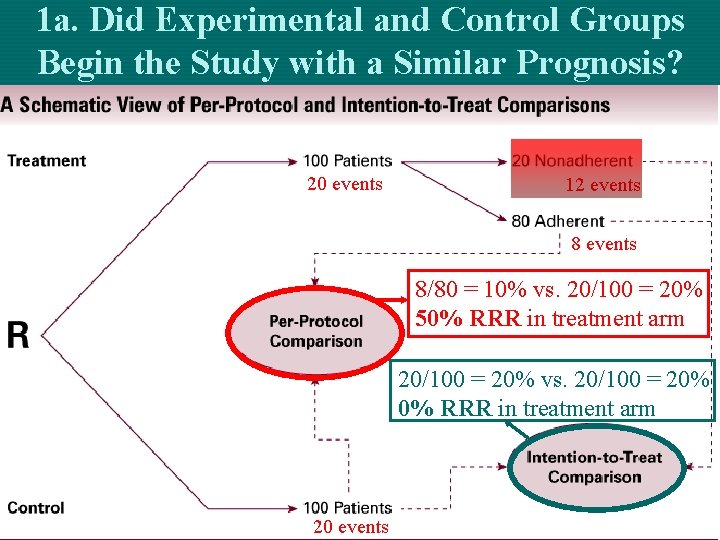

1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • Let us assume that the treatment is ineffective and that the true underlying event rate in both treatment and control patients is 20%. • If the 20 nonadherent patients are sicker and their event rate (60%) is higher, the nonadherent patients will suffer 12 of the 20 events destined to occur in the treated patients. • If one compares only the adherent patients (with an event rate of 8/80, or 10%) with the control group (event rate 20/100, or 20%), one will mistakenly conclude that treatment cuts the event rate in half.

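1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • Treatment arm: 20 events in 100 patients (12 events in the 20 nonadherent patients, 8 events in the 80 adherent patients). Control arm: 20 events in 100 patients. • Adherent patients only: 8/80 = 10% vs. 20/100 = 20%, an apparent 50% RRR in the treatment arm. • Intention-to-treat: 20/100 = 20% vs. 20/100 = 20%, a 0% RRR in the treatment arm.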
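The arithmetic of this hypothetical trial can be sketched in a few lines of Python. This is only an illustration of the numbers quoted on the slides; the variable and function names are assumptions introduced for the example.

```python
# Hypothetical trial from the slides: the treatment is ineffective and the
# true event rate is 20% in both arms (100 patients per arm).
treated_total, control_total = 100, 100
treated_events, control_events = 20, 20

nonadherent = 20                 # sicker patients who did not take treatment
nonadherent_events = 12          # 60% event rate among them
adherent = treated_total - nonadherent                  # 80 patients
adherent_events = treated_events - nonadherent_events   # remaining 8 events


def relative_risk_reduction(treated_risk, control_risk):
    """Proportion of the control-arm risk removed in the treated arm."""
    return (control_risk - treated_risk) / control_risk


control_risk = control_events / control_total

# Comparing only adherent patients with controls (breaks randomization).
adherent_only_rrr = relative_risk_reduction(
    adherent_events / adherent, control_risk
)

# Intention-to-treat: everyone is analyzed in the arm they were randomized to.
itt_rrr = relative_risk_reduction(treated_events / treated_total, control_risk)

print(f"Adherent-only RRR:      {adherent_only_rrr:.0%}")
print(f"Intention-to-treat RRR: {itt_rrr:.0%}")
```

Dropping the nonadherent patients makes an ineffective treatment appear to halve the event rate, while the intention-to-treat comparison correctly shows no effect.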

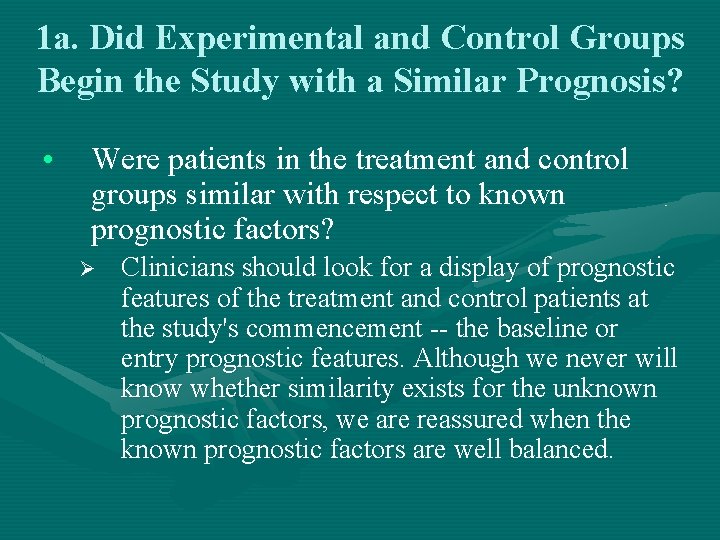

1 a. Did Experimental and Control Groups Begin the Study with a Similar Prognosis? • Were patients in the treatment and control groups similar with respect to known prognostic factors? Ø Clinicians should look for a display of prognostic features of the treatment and control patients at the study's commencement -- the baseline or entry prognostic features. Although we never will know whether similarity exists for the unknown prognostic factors, we are reassured when the known prognostic factors are well balanced.

1. Are the Results of the Study Valid? • Assessing validity requires answering 2 further questions: a) Did experimental and control groups begin the study with a similar prognosis? b) Did experimental and control groups retain a similar prognosis after the study started?

1 b. Did Experimental and Control Groups Retain a Similar Prognosis After the Study Started? • To determine whether or not the experimental and control groups retain a similar prognosis, we ask 4 questions: 1. Were patients aware of group allocations? 2. Were clinicians aware of group allocations? 3. Were outcome assessors aware of group allocations? 4. Was follow-up complete?

1 b. Did Experimental and Control Groups Retain a Similar Prognosis After the Study Started? • Were patients aware of group allocations? Ø Patients who take a treatment that they believe is efficacious may feel and perform better than those who do not, even if the treatment has no biologic action – the placebo effect. Ø The best way to avoid the placebo effect skewing the results is to ensure that patients are unaware of whether they are receiving the experimental treatment.

1 b. Did Experimental and Control Groups Retain a Similar Prognosis After the Study Started? • Were clinicians aware of group allocations? Ø If randomization succeeds, treatment and control groups in a study begin with a very similar prognosis. However, randomization provides no guarantee that the two groups will remain prognostically balanced. Differences in patient care other than the intervention under study (cointerventions) can bias the results. Ø Effective blinding eliminates the possibility of either conscious or unconscious differential administration of effective (co)interventions to treatment and control groups.

1 b. Did Experimental and Control Groups Retain a Similar Prognosis After the Study Started? • Were outcome assessors aware of group allocations? Ø Unblinded study personnel who are measuring or recording outcomes such as physiologic tests, clinical status, or quality of life may provide different interpretations of marginal findings or may offer differential encouragement during performance tests, either one of which can distort results.

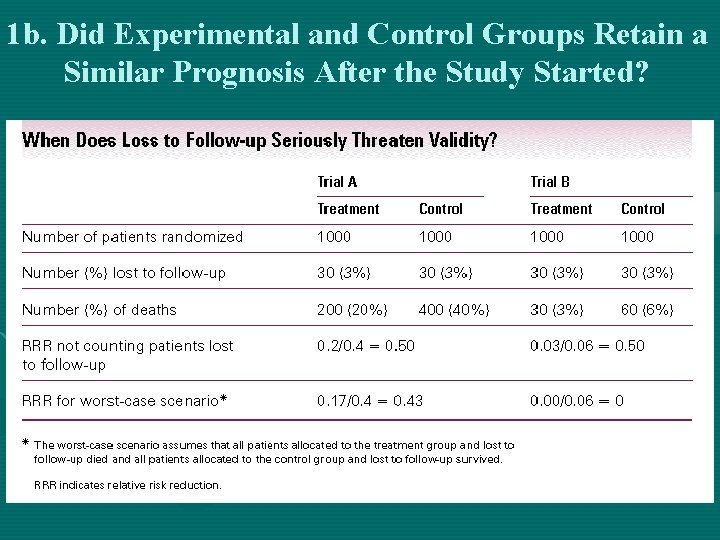

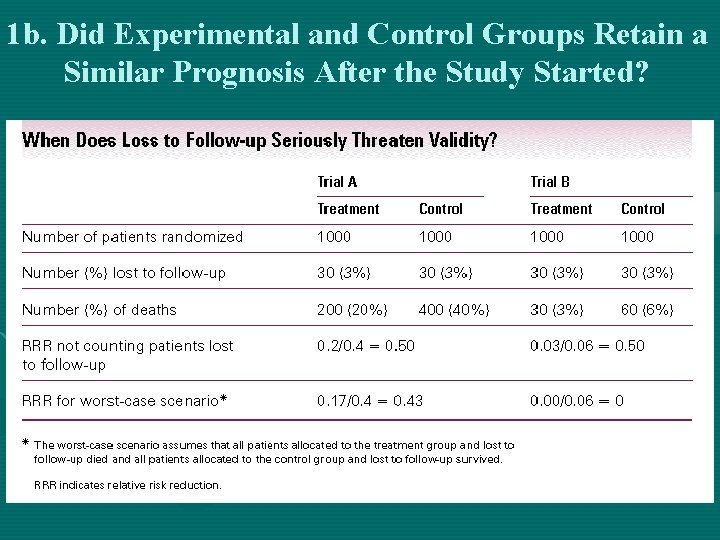

1 b. Did Experimental and Control Groups Retain a Similar Prognosis After the Study Started? • Was follow-up complete? Ø The greater the number of patients who are lost to follow-up, the more a study's validity is potentially compromised. The reason is that patients who are lost often have different prognoses from those who are retained. The same reasoning explains why an intention-to-treat analysis is necessary.

1. Are the Results of the Study Valid? • The final assessment of validity is never a "yes" or "no" decision. Rather, think of validity as a continuum ranging from strong studies that are very likely to yield an accurate estimate of the treatment effect to weak studies that are very likely to yield a biased estimate of effect.

Evaluation of Primary Studies Assessing Therapies • When using the medical literature to answer a clinical question, approach the study using three discrete steps: 1) Are the results of the study valid? 2) What are the results? 3) How can I apply these results to patient care?

2. What are the Results? • Once validity of a study is assessed, the next step is to analyze the results. This involves looking at: a) How large was the treatment effect? b) How precise was the estimate of the treatment effect?

2 a. How Large Was the Treatment Effect? • Most frequently, randomized clinical trials carefully monitor how often patients experience some adverse event or outcome. Examples of these dichotomous outcomes ("yes" or "no" outcomes -- ones that either happen or do not happen) include cancer recurrence, myocardial infarction, and death. Patients either do or do not suffer an event, and the article reports the proportion of patients who develop such events.

2 a. How Large Was the Treatment Effect? • Even if the outcome is not one of these dichotomous variables, investigators sometimes elect to present the results as if this were the case. For example, in a study that used forced expiratory volume in 1 second (FEV1) to assess the efficacy of oral corticosteroids in patients with chronic stable airflow limitation, investigators defined an event as an improvement in FEV1 over baseline of more than 20%. • The investigators' choice of the magnitude of change required to designate an improvement as "important" can affect the apparent effectiveness of the treatment.

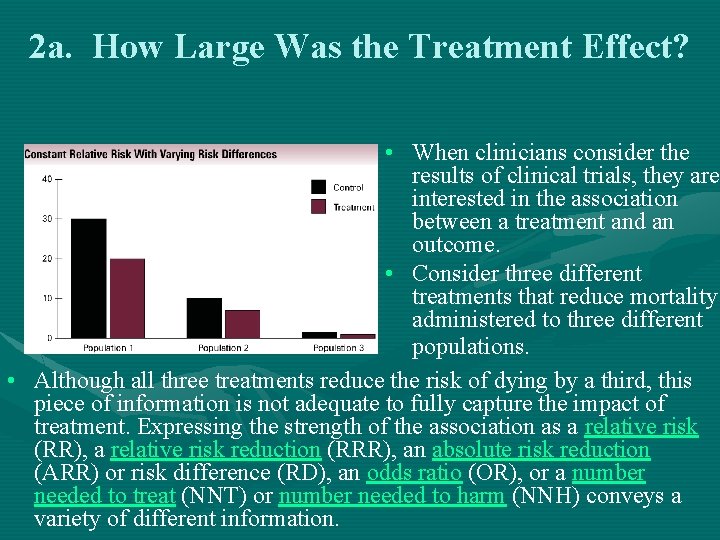

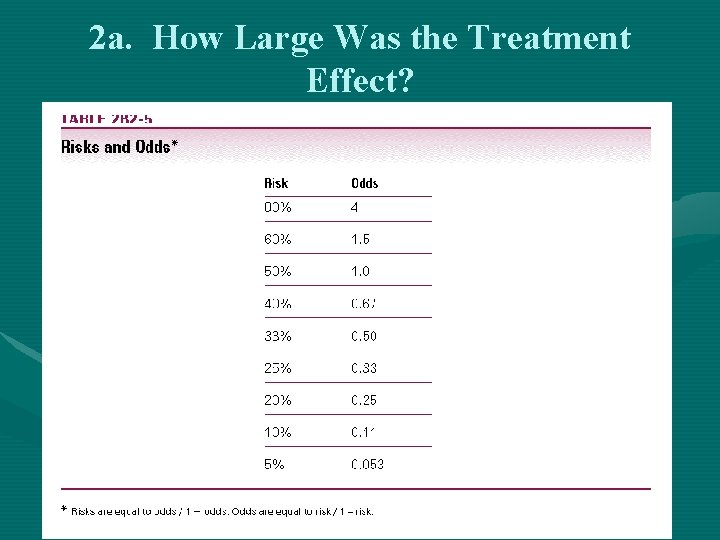

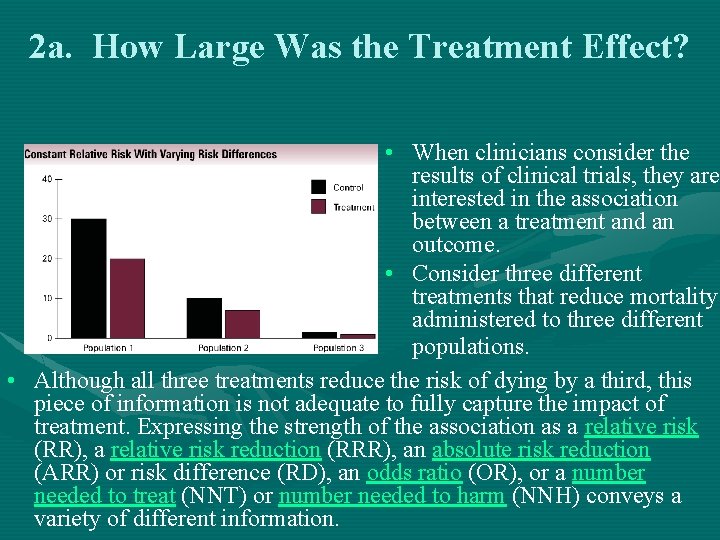

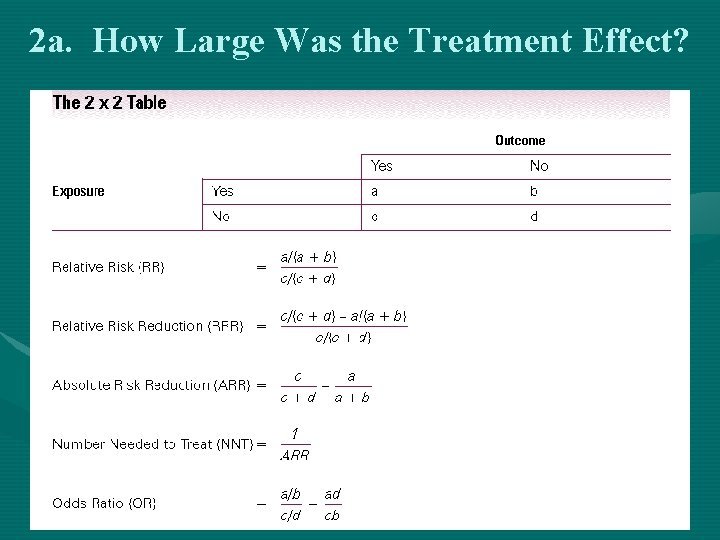

2 a. How Large Was the Treatment Effect? • When clinicians consider the results of clinical trials, they are interested in the association between a treatment and an outcome. • Consider three different treatments that reduce mortality administered to three different populations. • Although all three treatments reduce the risk of dying by a third, this piece of information is not adequate to fully capture the impact of treatment. Expressing the strength of the association as a relative risk (RR), a relative risk reduction (RRR), an absolute risk reduction (ARR) or risk difference (RD), an odds ratio (OR), or a number needed to treat (NNT) or number needed to harm (NNH) conveys a variety of different information.

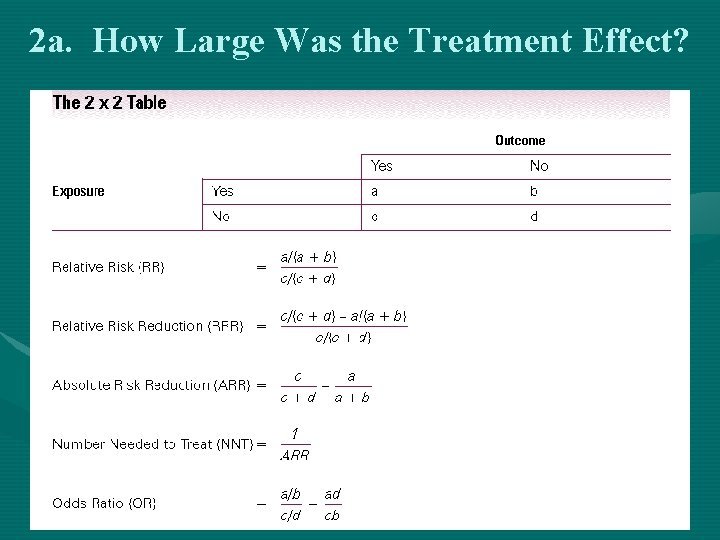

2 a. How Large Was the Treatment Effect?

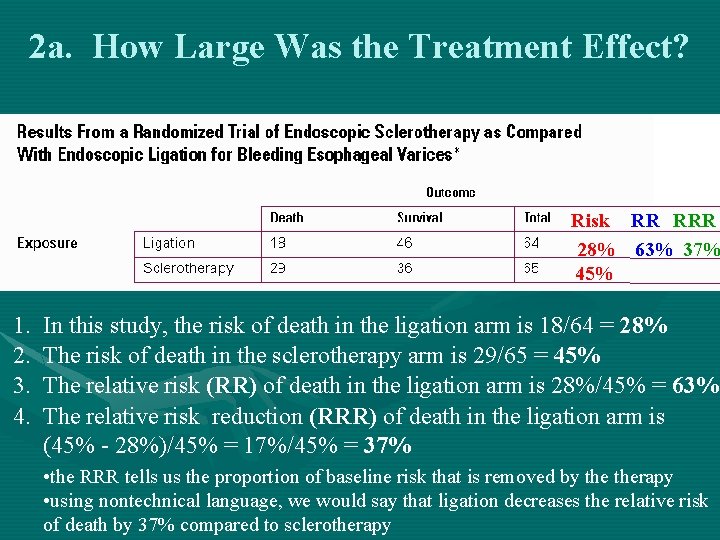

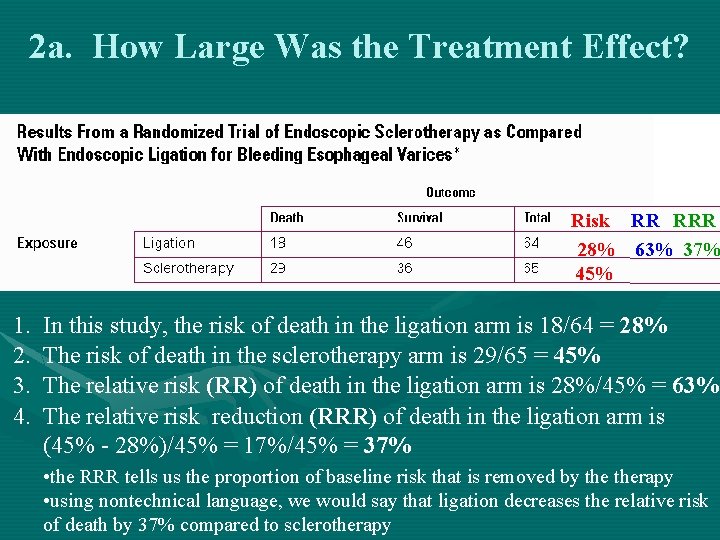

2 a. How Large Was the Treatment Effect? Ligation: risk 28%, RR 63%, RRR 37%. Sclerotherapy: risk 45%. 1. In this study, the risk of death in the ligation arm is 18/64 = 28% 2. The risk of death in the sclerotherapy arm is 29/65 = 45% 3. The relative risk (RR) of death in the ligation arm is 28%/45% = 63% 4. The relative risk reduction (RRR) of death in the ligation arm is (45% - 28%)/45% = 17%/45% = 37% • the RRR tells us the proportion of baseline risk that is removed by therapy • using nontechnical language, we would say that ligation decreases the relative risk of death by 37% compared to sclerotherapy

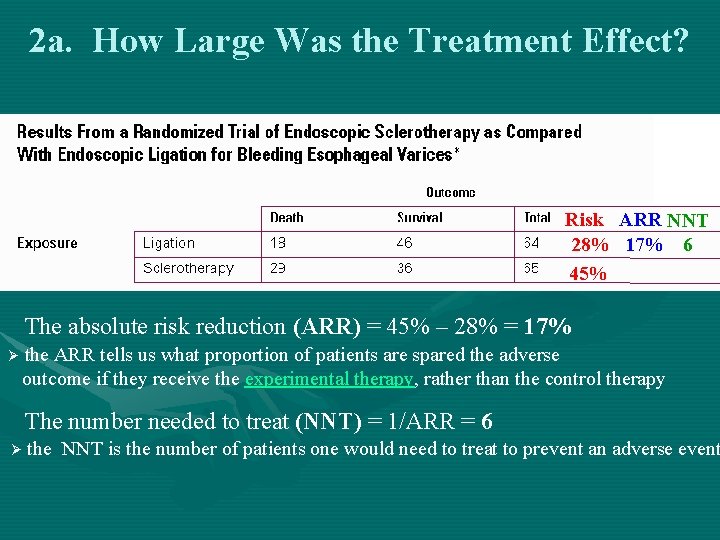

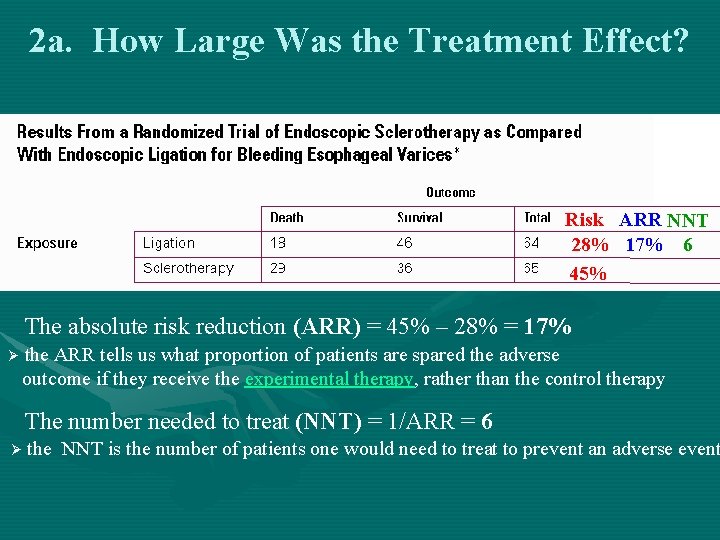

2 a. How Large Was the Treatment Effect? Ligation: risk 28%. Sclerotherapy: risk 45%. ARR 17%, NNT 6. • The absolute risk reduction (ARR) = 45% – 28% = 17% Ø the ARR tells us what proportion of patients are spared the adverse outcome if they receive the experimental therapy, rather than the control therapy • The number needed to treat (NNT) = 1/ARR = 6 Ø the NNT is the number of patients one would need to treat to prevent one adverse event
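As a rough check of these figures, the following Python sketch recomputes the risk measures from the raw counts quoted on the slides (18/64 deaths with ligation, 29/65 with sclerotherapy). The variable names are assumptions made for the example. Note that the unrounded ARR is about 16.5%; the 17% on the slide comes from subtracting the already rounded risks.

```python
# Raw counts quoted on the slides.
ligation_deaths, ligation_total = 18, 64
sclero_deaths, sclero_total = 29, 65

risk_ligation = ligation_deaths / ligation_total   # experimental arm
risk_sclero = sclero_deaths / sclero_total         # control arm

rr = risk_ligation / risk_sclero      # relative risk
rrr = 1 - rr                          # relative risk reduction
arr = risk_sclero - risk_ligation     # absolute risk reduction
nnt = 1 / arr                         # number needed to treat (unrounded)

print(f"Risk (ligation):      {risk_ligation:.0%}")
print(f"Risk (sclerotherapy): {risk_sclero:.0%}")
print(f"RR:  {rr:.0%}")
print(f"RRR: {rrr:.0%}")
print(f"ARR: {arr:.1%}")
print(f"NNT: {nnt:.1f}")
```

The RR and RRR depend only on the ratio of the two risks, whereas the ARR and NNT depend on how large the baseline risk is, which is why the same RRR can correspond to very different NNTs.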

2 a. How Large Was the Treatment Effect?

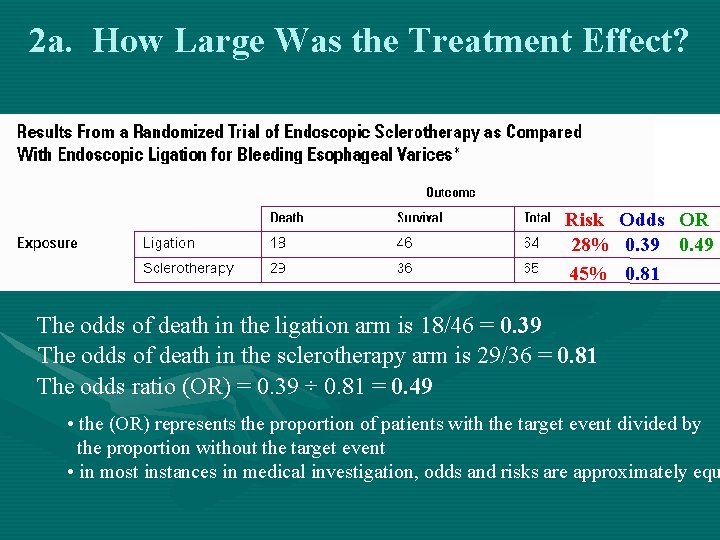

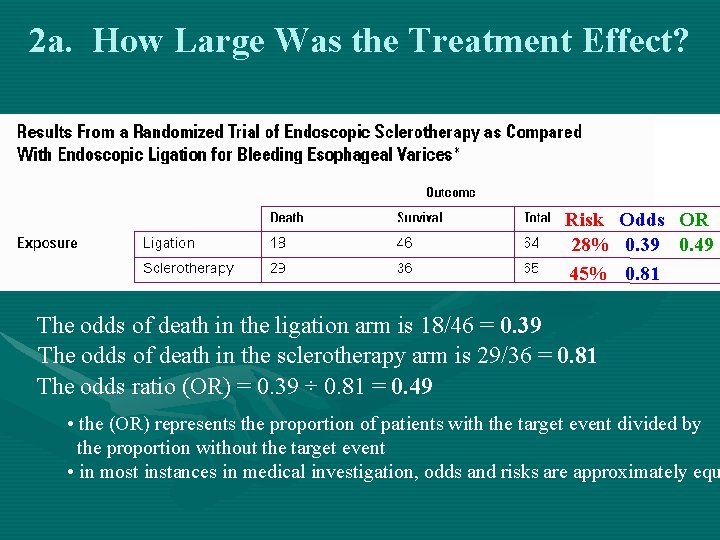

2 a. How Large Was the Treatment Effect? Ligation: risk 28%, odds 0.39. Sclerotherapy: risk 45%, odds 0.81. OR 0.49. • The odds of death in the ligation arm is 18/46 = 0.39 • The odds of death in the sclerotherapy arm is 29/36 = 0.81 • The odds ratio (OR) = 0.39 ÷ 0.81 = 0.49 • the odds represent the number of patients with the target event divided by the number without the target event; the OR is the ratio of the odds in the two arms • in most instances in medical investigation, odds and risks are approximately equal
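The odds calculation can be sketched the same way. The trial counts are those quoted on the slides; the low-risk example at the end (2 events in 100 patients) is a hypothetical added only to illustrate why odds and risks are close when events are uncommon.

```python
def odds(events, total):
    """Patients with the event divided by patients without it."""
    return events / (total - events)


odds_ligation = odds(18, 64)   # 18 deaths, 46 survivors
odds_sclero = odds(29, 65)     # 29 deaths, 36 survivors
odds_ratio = odds_ligation / odds_sclero

print(f"Odds (ligation):      {odds_ligation:.2f}")
print(f"Odds (sclerotherapy): {odds_sclero:.2f}")
print(f"Odds ratio:           {odds_ratio:.2f}")

# Hypothetical low-risk outcome: odds and risk nearly coincide.
rare_risk = 2 / 100
rare_odds = odds(2, 100)
print(f"Rare event: risk {rare_risk:.3f} vs odds {rare_odds:.3f}")
```

In this trial the event is common (28% and 45%), so the OR of about 0.49 is noticeably further from 1 than the RR of 0.63; with rare events the two measures converge.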

2 a. How Large Was the Treatment Effect? • Relative Risk and Odds Ratio vs Absolute Risk Reduction: Why the Fuss? Ø distinguishing between OR and RR will seldom have major importance Ø we must pay much more attention to distinguishing between the OR and RR vs the ARR (or its reciprocal the NNT)

2 a. How Large Was the Treatment Effect? • Forrow and colleagues demonstrated that clinicians were less inclined to treat patients after presentation of trial results as the absolute change in the outcome compared with the relative change in the outcome. • The pharmaceutical industry's awareness of this phenomenon may be responsible for their propensity to present physicians with treatment-associated relative risk reductions.

2. What are the Results? • Once validity of a study is assessed, the next step is to analyze the results. This involves looking at: a) How large was the treatment effect? b) How precise was the estimate of the treatment effect?

2 b. How Precise Was the Estimate of the Treatment Effect? • Realistically, the true risk reduction can never be known. The best we have is the estimate provided by rigorous controlled trials, and the best estimate of the true treatment effect is that observed in the trial. This estimate is called a point estimate, a single value calculated from observations of the sample that is used to estimate a population value or parameter.

2 b. How Precise Was the Estimate of the Treatment Effect? • Investigators often tell us the neighborhood within which the true effect likely lies by calculating confidence intervals. Ø We usually (though arbitrarily) use the 95% confidence interval. Ø You can consider the 95% confidence interval as defining the range that includes the true relative risk reduction 95% of the time.

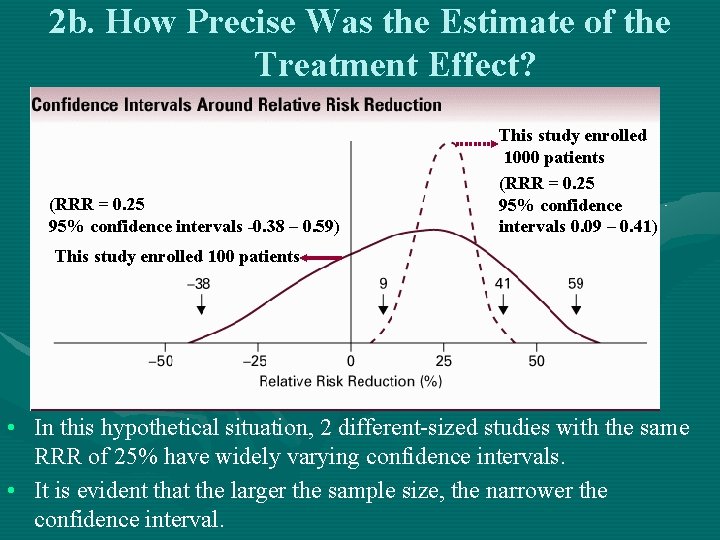

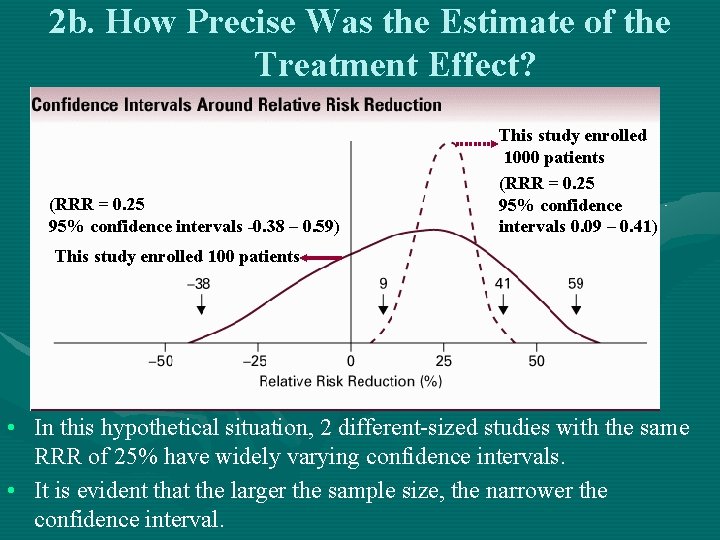

2 b. How Precise Was the Estimate of the Treatment Effect? • This study enrolled 100 patients (RRR = 0.25, 95% confidence interval -0.38 to 0.59) • This study enrolled 1000 patients (RRR = 0.25, 95% confidence interval 0.09 to 0.41) • In this hypothetical situation, 2 different-sized studies with the same RRR of 25% have widely varying confidence intervals. • It is evident that the larger the sample size, the narrower the confidence interval.
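The effect of sample size on the interval can be illustrated with a short Python sketch. Several things here are assumptions not stated on the slides: the event counts (20% of controls and 15% of treated patients, which give an RRR of 25%), the reading of the sample sizes as patients per arm, and the method (a normal approximation on the log relative risk). The slides do not say how their intervals were calculated, so the bounds printed here need not match them exactly.

```python
import math


def rrr_confidence_interval(events_t, n_t, events_c, n_c, z=1.96):
    """Approximate 95% CI for the RRR via the log relative risk.

    Assumed method for illustration only (Wald interval on ln(RR)).
    """
    rr = (events_t / n_t) / (events_c / n_c)
    se_log_rr = math.sqrt(1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c)
    rr_low = math.exp(math.log(rr) - z * se_log_rr)
    rr_high = math.exp(math.log(rr) + z * se_log_rr)
    # RRR = 1 - RR, so the bounds swap places.
    return 1 - rr, 1 - rr_high, 1 - rr_low


# Assumed counts: 15% vs 20% event rates in both hypothetical studies.
small = rrr_confidence_interval(15, 100, 20, 100)
large = rrr_confidence_interval(150, 1000, 200, 1000)

for label, (point, low, high) in (("100 per arm", small), ("1000 per arm", large)):
    print(f"{label}: RRR {point:.2f}, 95% CI {low:.2f} to {high:.2f}")
```

Under these assumptions the smaller study's interval crosses zero, so it cannot exclude harm, while the larger study's interval lies entirely above zero.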

2 b. How Precise Was the Estimate of the Treatment Effect? • When is the sample size big enough? Ø In a positive study -- a study in which the authors conclude that the treatment is effective -- if the lowest RRR that is consistent with the study results is still important (that is, it is large enough for you to recommend the treatment to the patient), then the investigators have enrolled sufficient patients. Ø In a negative study -- in which the authors have concluded that the experimental treatment is no better than control therapy -- if the RRR at the upper boundary would, if true, be clinically important, the study has failed to exclude an important treatment effect (i.e. has not enrolled sufficient patients).

Evaluation of Primary Studies Assessing Therapies • When using the medical literature to answer a clinical question, approach the study using three discrete steps: 1) Are the results of the study valid? 2) What are the results? 3) How can I apply these results to patient care?

3. How Can I Apply These Results to Patient Care? • Before embarking on therapy based on a study for a particular patient, ask 3 questions: a) Were the study patients similar to the patient in my practice? b) Were all clinically important outcomes considered? c) Are the likely treatment benefits worth the potential harm and costs?

3 a. Were the study patients similar to the patient in my practice? Ø If the patient had qualified for enrollment in the study -- that is, if she had met all inclusion criteria and had violated none of the exclusion criteria -- you can apply the results with considerable confidence. Even here, however, there is a limitation. Treatments are not uniformly effective in every individual. Conventional randomized trials estimate average treatment effects. Applying these average effects means that the clinician will likely be exposing some patients to the cost and toxicity of the treatment without benefit. Ø A better approach than rigidly applying the study's inclusion and exclusion criteria is to ask whether there is some compelling reason why the results should not be applied to the patient.

3 b. Were all clinically important outcomes considered (and were the outcomes that were measured clinically significant)? Ø Treatments are indicated when they provide important benefits. Demonstrating that a bronchodilator produces small increments in forced expired volume in patients with chronic airflow limitation does not provide a sufficient reason for administering this drug. What is required is evidence that the treatment improves outcomes that are important to patients, such as reducing shortness of breath during the activities required for daily living. We can consider forced expired volume as a substitute or surrogate outcome. Investigators choose to substitute these variables for those that patients would consider important, usually because they would have had to enroll many more patients and follow them for far longer periods of time.

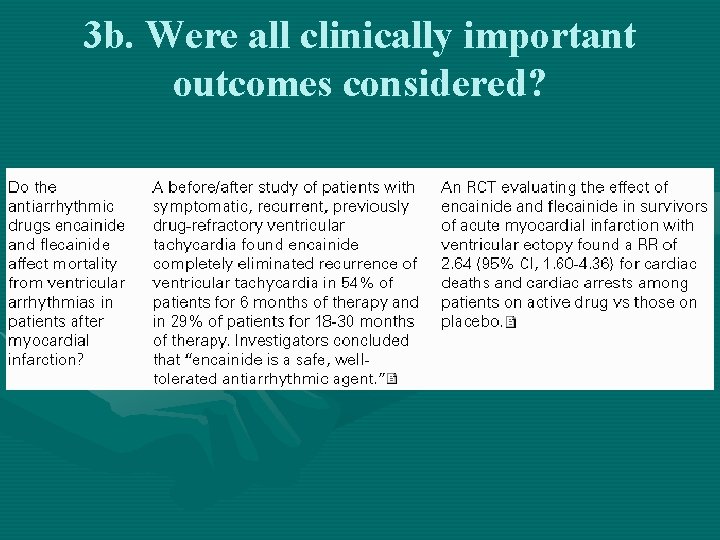

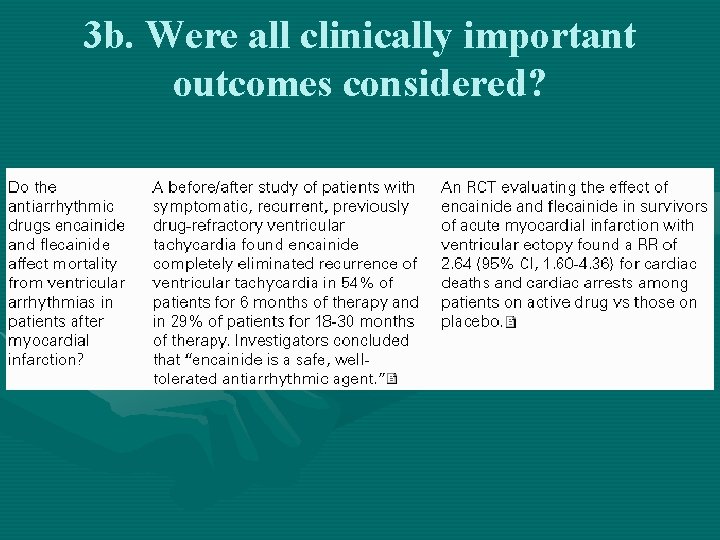

3 b. Were all clinically important outcomes considered? Ø Even when investigators report favorable effects of treatment on one clinically important outcome, you must consider whether there may be deleterious effects on other outcomes. For example, a cancer chemotherapeutic agent may lengthen life but decrease its quality.

3 c. Are the likely treatment benefits worth the potential harm and costs? Ø If you can apply the study's results to a patient, and its outcomes are important, the next question concerns whether the probable treatment benefits are worth the effort that you and the patient must put into the enterprise. Ø The NNT can help clinicians judge the degree of benefit and the degree of harm patients can expect from therapy.

3 c. Are the likely treatment benefits worth the potential harm and costs? Ø As a result of taking aspirin, patients with hypertension without known coronary artery disease can expect a reduction of approximately 15% in their relative risk of cardiovascular related events. For an otherwise low-risk woman with hypertension and a baseline risk of a cardiovascular related event of between 2.5% and 5%, this translates into an NNT of approximately 200 during a 5-year period. Ø For every 161 patients treated with aspirin, one would experience a major hemorrhage (NNH = 161). Thus, in 1000 of these patients, aspirin would be responsible for preventing five cardiovascular events, but it would also be responsible for causing approximately six serious bleeding episodes.
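The benefit and harm trade-off in this aspirin example can be reproduced with a short Python sketch. All inputs are the figures quoted on the slide; the variable names are assumptions for the example.

```python
rrr = 0.15                            # relative risk reduction with aspirin
baseline_risks = (0.025, 0.05)        # 5-year baseline cardiovascular risk

# NNT = 1 / ARR, where ARR = baseline risk x RRR.
nnt_range = [1 / (risk * rrr) for risk in baseline_risks]
print(f"NNT at 2.5% baseline risk: {nnt_range[0]:.0f}")
print(f"NNT at 5% baseline risk:   {nnt_range[1]:.0f}")

# Slide's working figures: NNT of about 200 and NNH of 161.
nnt, nnh = 200, 161
patients = 1000
events_prevented = patients / nnt
bleeds_caused = patients / nnh

print(f"Per {patients} patients: {events_prevented:.0f} events prevented, "
      f"{bleeds_caused:.1f} major hemorrhages caused")
```

The NNT of roughly 200 sits in the middle of the range implied by the two baseline risks, and at that level the expected harms slightly outnumber the expected benefits.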

3 c. Are the likely treatment benefits worth the potential harm and costs? Ø Trading off benefit and risk requires an accurate assessment of medication adverse effects. Although RCTs are the correct vehicle for reporting commonly occurring side effects, reports regularly neglect to include these outcomes. Clinicians must often look to other sources of information – often characterized by weaker methodology – to obtain an estimate of the adverse effects of therapy. Ø The preferences or values that determine the correct choice when weighing benefit and risk are those of the individual patient.

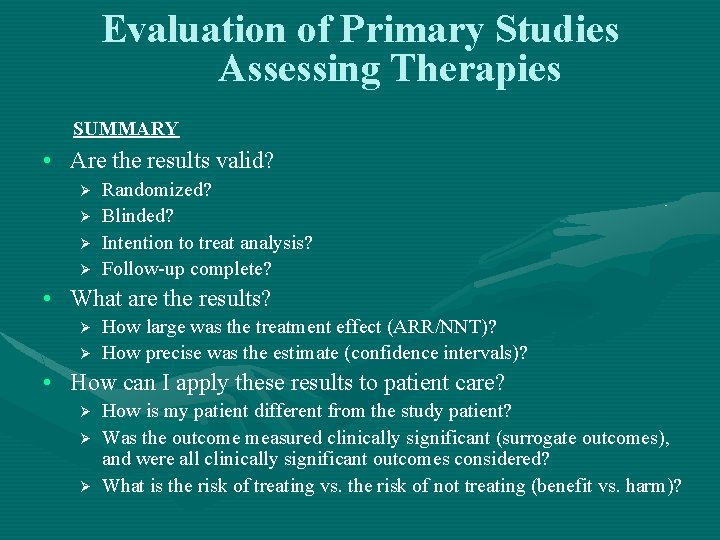

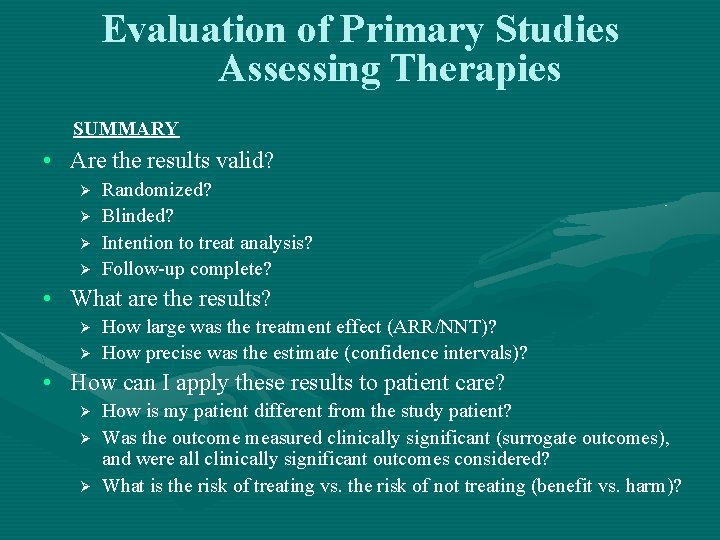

Evaluation of Primary Studies Assessing Therapies SUMMARY • Are the results valid? Ø Randomized? Ø Blinded? Ø Intention-to-treat analysis? Ø Follow-up complete? • What are the results? Ø How large was the treatment effect (ARR/NNT)? Ø How precise was the estimate (confidence intervals)? • How can I apply these results to patient care? Ø How is my patient different from the study patient? Ø Was the outcome measured clinically significant (surrogate outcomes), and were all clinically significant outcomes considered? Ø What is the risk of treating vs. the risk of not treating (benefit vs. harm)?

User’s Guides to the Medical Literature • The “User’s Guides to the Medical Literature” is a valuable resource. Please consult this for analyzing studies on harm, diagnosis, prognosis, and systematic reviews. The principles are the same as presented today, but the steps are different. • There is a direct link to the “User’s Guides” from the JAMA home page – www.jama.ama-assn.org

Shounak das

Shounak das Nonilateral

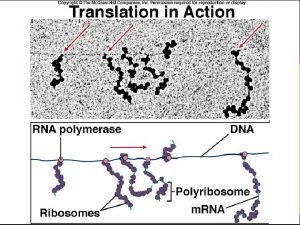

Nonilateral Translation

Translation Universal codon chart

Universal codon chart El filibusterismo august 1891

El filibusterismo august 1891 Aug q

Aug q Aug comm device

Aug comm device Aug kodon

Aug kodon Das alte ist vergangen das neue angefangen

Das alte ist vergangen das neue angefangen Ninguém quer a morte só saúde e sorte

Ninguém quer a morte só saúde e sorte Das alles ist deutschland das alles sind wir

Das alles ist deutschland das alles sind wir Jesus ich bin das licht der welt

Jesus ich bin das licht der welt Menosprezo das artes e das letras

Menosprezo das artes e das letras Doctors license number

Doctors license number Gbmc infoweb

Gbmc infoweb Hepburn osteometric board

Hepburn osteometric board Torrance memorial transitional care unit

Torrance memorial transitional care unit Cartersville medical center medical records

Cartersville medical center medical records Writing and evaluating expressions

Writing and evaluating expressions How to rewrite fraction exponents

How to rewrite fraction exponents Purchasing performance evaluation

Purchasing performance evaluation Lesson 15-1 defining and evaluating a logarithmic function

Lesson 15-1 defining and evaluating a logarithmic function Evaluating trading strategies

Evaluating trading strategies Evaluate 32 3/5

Evaluate 32 3/5 Finding evaluating and processing information

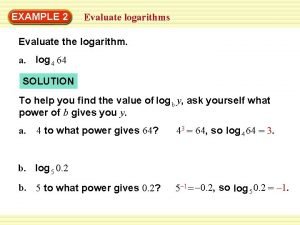

Finding evaluating and processing information Evaluate the logarithm

Evaluate the logarithm Evaluating trigonometric expressions

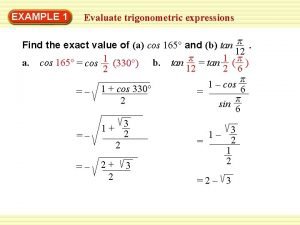

Evaluating trigonometric expressions Thalia mililani

Thalia mililani Controlling the sales force is an activity of

Controlling the sales force is an activity of Steps of textbook evaluation

Steps of textbook evaluation Evaluating functions and operations on functions

Evaluating functions and operations on functions In identifying company resources with competitive value

In identifying company resources with competitive value Evaluate absolute value expressions calculator

Evaluate absolute value expressions calculator Reporting and evaluating research

Reporting and evaluating research Understanding the management process

Understanding the management process Chapter 6 trigonometric functions

Chapter 6 trigonometric functions Sales force performance evaluation

Sales force performance evaluation Chapter 14 evaluating channel member performance

Chapter 14 evaluating channel member performance Evaluating broadcast and print media

Evaluating broadcast and print media Evaluating arithmetic series

Evaluating arithmetic series Lesson plan on evaluating algebraic expressions

Lesson plan on evaluating algebraic expressions Evaluating credible sources activity

Evaluating credible sources activity Sin 45 exact value

Sin 45 exact value Paradigm of the channel design decision

Paradigm of the channel design decision Simplifying integer exponents

Simplifying integer exponents 12-2 practice evaluating limits algebraically

12-2 practice evaluating limits algebraically Thinking, language and intelligence psychology summary

Thinking, language and intelligence psychology summary Evaluating alternatives and making choices among them

Evaluating alternatives and making choices among them Rumelt's criteria for evaluating strategies example

Rumelt's criteria for evaluating strategies example Finding limits graphically

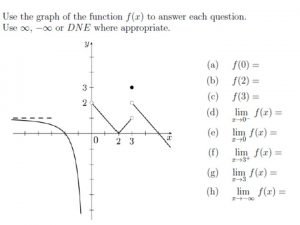

Finding limits graphically Perbedaan controlling dan evaluating

Perbedaan controlling dan evaluating