Eris Performance Load Testing Part 1 Performance Load

- Slides: 44

Eris Performance & Load Testing

Part 1 Performance & Load Testing Basics

Performance & Load Testing Basics

- Introduction to Performance Testing
- Difference between Performance, Load and Stress Testing
- Why Performance Testing?
- When is it required?
- What should be tested?
- Performance Testing Process
- Load Test configuration for a web system
- Practice Questions

Introduction to Performance Testing

- Performance testing is the process of determining the speed or effectiveness of a computer, network, software program or device.
- Before going into the details, we should understand the factors that govern performance testing:
  - Throughput
  - Response Time
  - Tuning
  - Benchmarking

Throughput

- The capability of a product to handle multiple transactions in a given period.
- Throughput represents the number of requests or business transactions processed by the product in a specified time duration.
- While there is very little congestion within the application server's queues, throughput increases almost linearly with the number of requests as the number of concurrent users increases.
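As a minimal sketch of the throughput definition above, the snippet below divides the number of completed requests by the length of the measurement window. The function name `throughput` and the sample figures are hypothetical and only illustrate the arithmetic; they are not measurements from the slides.

```python
def throughput(completed_requests, duration_seconds):
    """Return the number of requests processed per second."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return completed_requests / duration_seconds


# Hypothetical samples: (concurrent users, requests completed in 60 seconds).
samples = [(10, 600), (20, 1200), (40, 2400)]
for users, completed in samples:
    rate = throughput(completed, 60.0)
    print(f"{users} users: {rate:.1f} requests/s")
```

In this made-up data set the rate doubles whenever the user count doubles, which is the near-linear region the slide describes.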

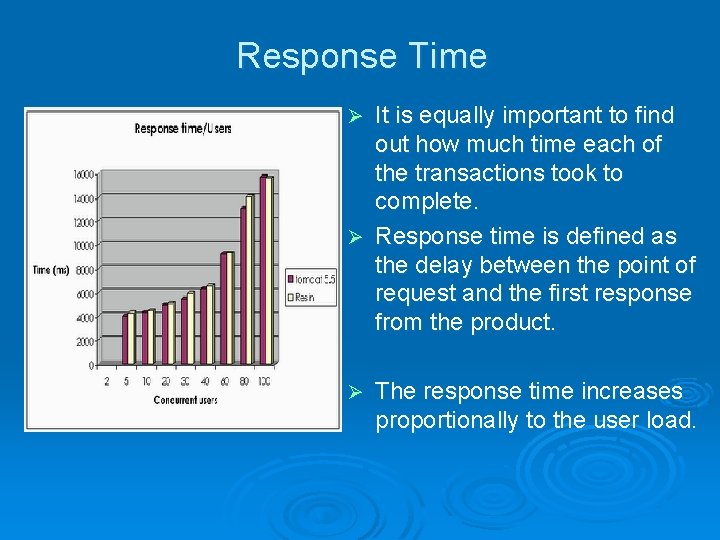

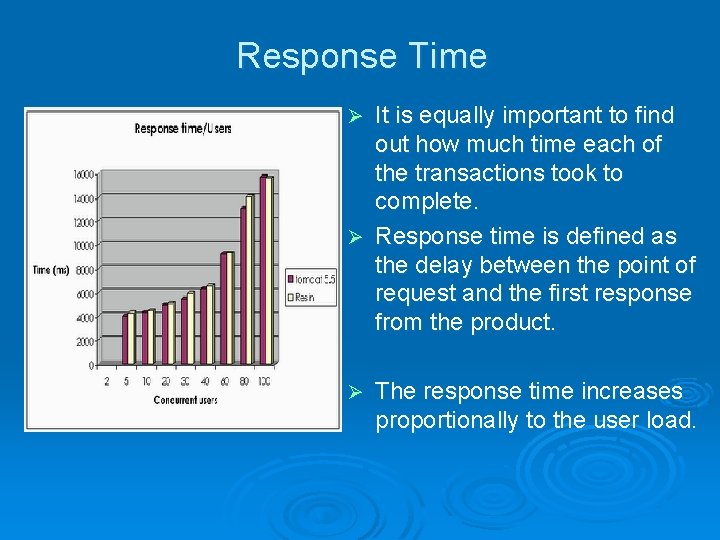

Response Time

- It is equally important to find out how much time each transaction took to complete.
- Response time is defined as the delay between the point of request and the first response from the product.
- The response time increases proportionally to the user load.
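One way to measure response time is to wrap each transaction in a timer. The sketch below is an illustration only: `timed_call` and `fake_transaction` are hypothetical names, the transaction is simulated with a short sleep, and the timer stops when the call returns rather than at the first byte of the response.

```python
import statistics
import time


def timed_call(operation):
    """Run operation() and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()
    result = operation()
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def fake_transaction():
    """Stand-in for a real request to the system under test."""
    time.sleep(0.01)
    return "ok"


# Time five simulated transactions and report the average.
timings = [timed_call(fake_transaction)[1] for _ in range(5)]
print(f"average response time: {statistics.mean(timings):.1f} ms")
```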

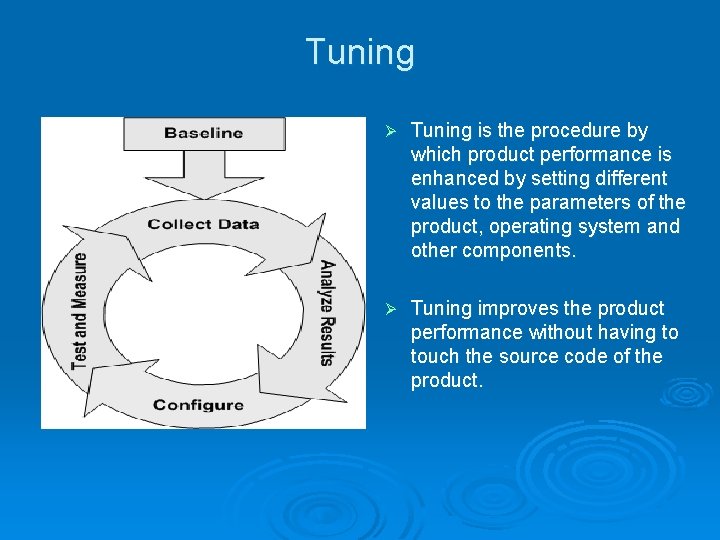

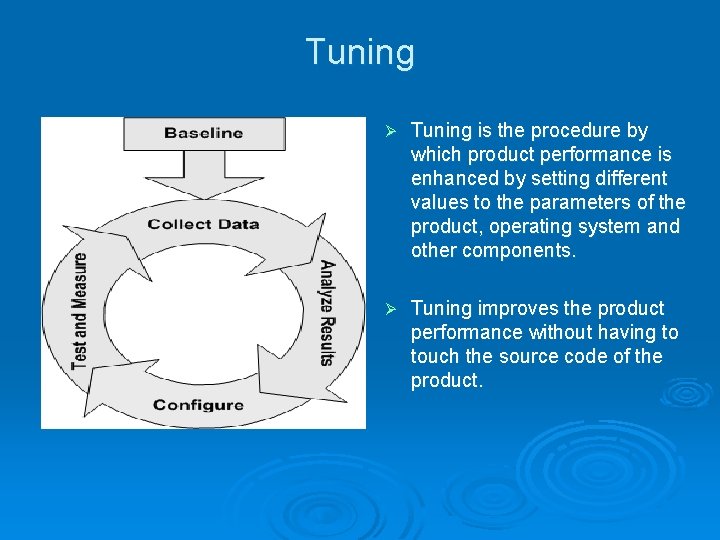

Tuning

- Tuning is the procedure by which product performance is enhanced by setting different values for the parameters of the product, operating system and other components.
- Tuning improves product performance without touching the product's source code.

Benchmarking

- Even a greatly improved product performance makes no business sense if it does not match that of competing products.
- A careful analysis is needed to work out the list of transactions to compare across products so that an apples-to-apples comparison becomes possible.

Performance Testing: Definition

- Performance testing evaluates the response time (speed), throughput and utilization of a system executing its required functions, in comparison with different versions of the same product or with a competing product.
- Performance testing is done to derive benchmark numbers for the system.
- Heavy load is not applied to the system.
- Tuning is performed until the system under test achieves the expected levels of performance.

Difference between Performance, Load and Stress Testing

Load Testing

- The process of exercising the system under test by feeding it the largest tasks it can operate with.
- The load on the system is constantly increased via automated tools to simulate a real-world scenario with virtual users.

Examples:

- Testing a word processor by editing a very large document.
- For a web application, load is defined in terms of concurrent users or HTTP connections.

Difference between Performance, Load and Stress Testing

Stress Testing

- Trying to break the system under test by overwhelming its resources or by taking resources away from it.
- The purpose is to make sure that the system fails and recovers gracefully.

Examples:

- Double the baseline number of concurrent users or HTTP connections.
- Randomly shut down and restart ports on the network switches/routers that connect the servers.

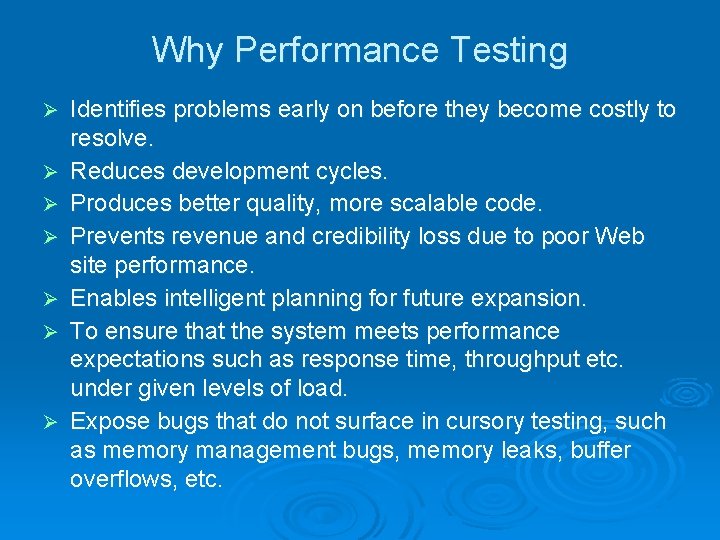

Why Performance Testing

- Identifies problems early on, before they become costly to resolve.
- Reduces development cycles.
- Produces better quality, more scalable code.
- Prevents revenue and credibility loss due to poor web site performance.
- Enables intelligent planning for future expansion.
- Ensures that the system meets performance expectations such as response time and throughput under given levels of load.
- Exposes bugs that do not surface in cursory testing, such as memory management bugs, memory leaks and buffer overflows.

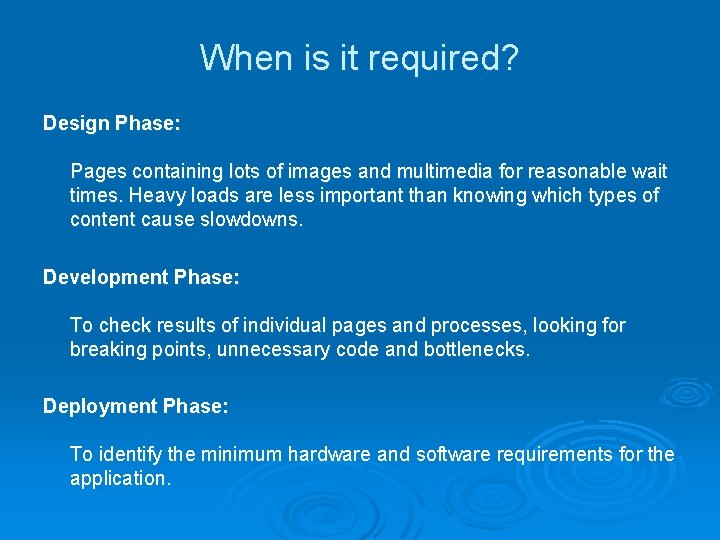

When is it required?

- Design Phase: Test pages containing lots of images and multimedia for reasonable wait times. Heavy loads are less important than knowing which types of content cause slowdowns.
- Development Phase: Check the results of individual pages and processes, looking for breaking points, unnecessary code and bottlenecks.
- Deployment Phase: Identify the minimum hardware and software requirements for the application.

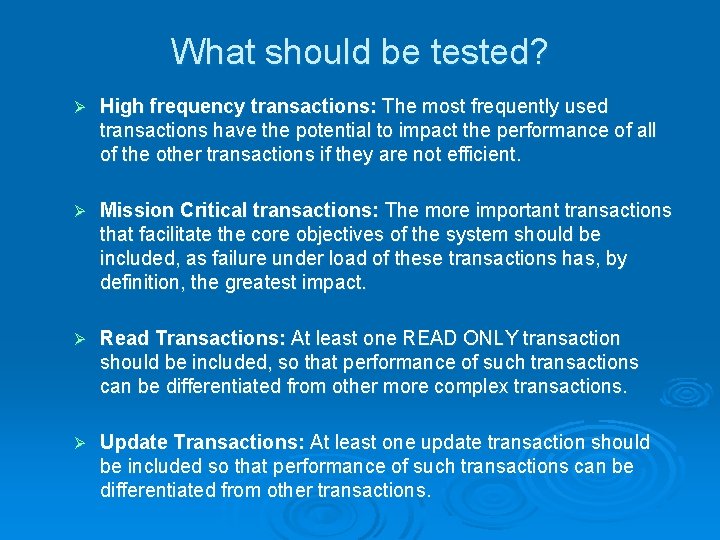

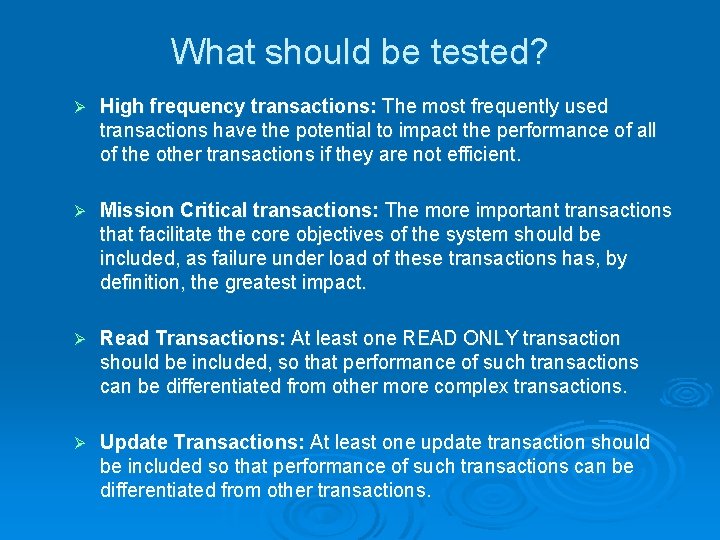

What should be tested?

- High frequency transactions: The most frequently used transactions have the potential to impact the performance of all of the other transactions if they are not efficient.
- Mission critical transactions: The more important transactions that facilitate the core objectives of the system should be included, as failure of these transactions under load has, by definition, the greatest impact.
- Read transactions: At least one read-only transaction should be included, so that the performance of such transactions can be differentiated from other, more complex transactions.
- Update transactions: At least one update transaction should be included, so that the performance of such transactions can be differentiated from other transactions.

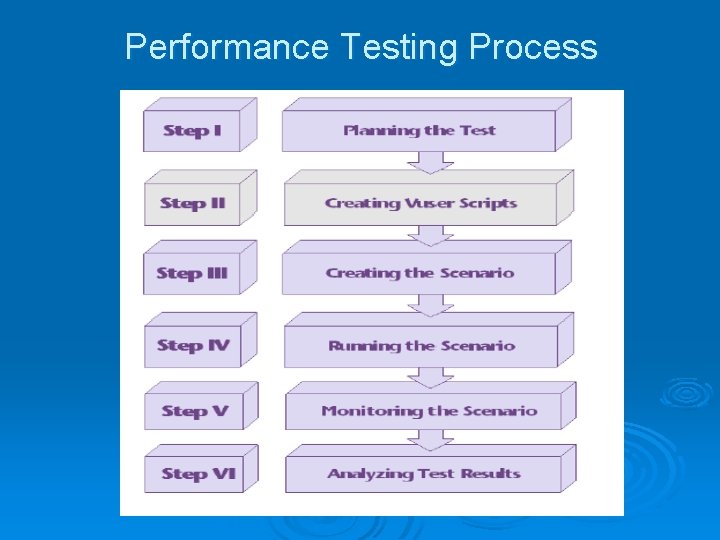

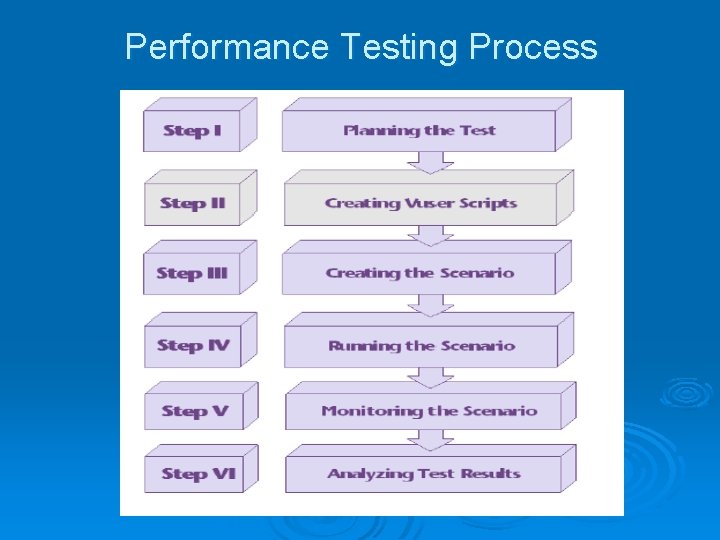

Performance Testing Process

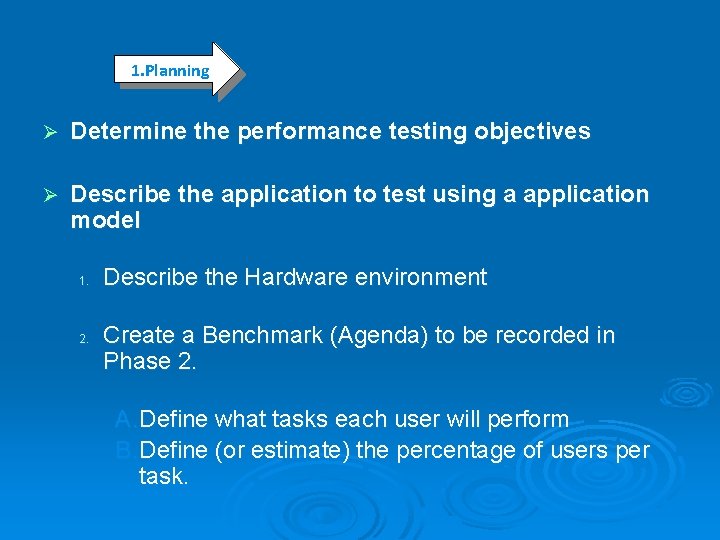

1. Planning

- Determine the performance testing objectives
- Describe the application to test using an application model
  1. Describe the hardware environment
  2. Create a benchmark (agenda) to be recorded in Phase 2.
     A. Define what tasks each user will perform
     B. Define (or estimate) the percentage of users per task.
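The "percentage of users per task" from the planning step can be turned into virtual user counts. The following is a sketch under stated assumptions: the task names, the percentages and the function `allocate_users` are hypothetical, and rounding leftovers are handed to the tasks with the largest fractional share.

```python
def allocate_users(total_users, task_percentages):
    """Split total_users across tasks according to integer percentages."""
    if sum(task_percentages.values()) != 100:
        raise ValueError("percentages must sum to 100")
    # Exact (possibly fractional) share of users for each task.
    exact = {task: total_users * pct / 100 for task, pct in task_percentages.items()}
    # Start from the rounded-down share, then hand out the remaining users.
    allocation = {task: int(share) for task, share in exact.items()}
    leftover = total_users - sum(allocation.values())
    by_remainder = sorted(exact, key=lambda t: exact[t] - allocation[t], reverse=True)
    for task in by_remainder[:leftover]:
        allocation[task] += 1
    return allocation


# Hypothetical workload mix for 50 virtual users.
mix = {"browse": 60, "search": 30, "checkout": 10}
print(allocate_users(50, mix))
```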

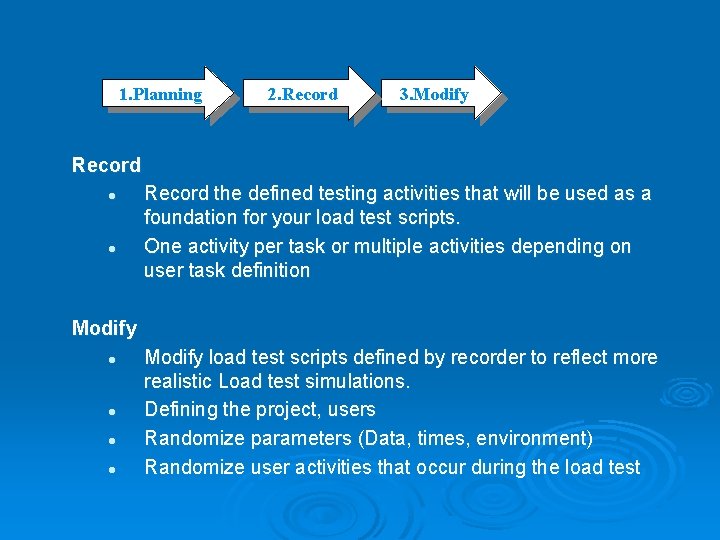

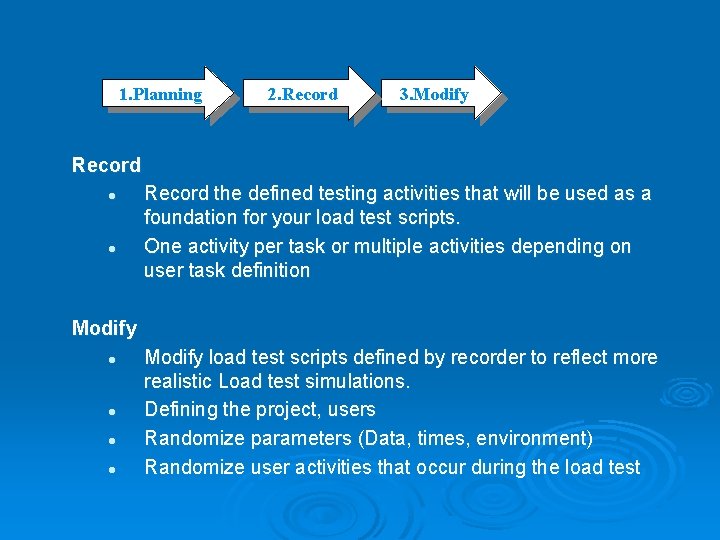

2. Record

- Record the defined testing activities that will be used as a foundation for your load test scripts.
- One activity per task or multiple activities, depending on the user task definition

3. Modify

- Modify the load test scripts produced by the recorder to reflect more realistic load test simulations.
- Define the project and users
- Randomize parameters (data, times, environment)
- Randomize user activities that occur during the load test
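Randomizing data and times in a recorded script might look like the sketch below. All names here (`randomized_step`, the search terms, the think-time bounds) are hypothetical; a seeded `random.Random` is used so that a run can be reproduced.

```python
import random


def randomized_step(rng, search_terms, min_think_s, max_think_s):
    """Pick random input data and a random think time for one script step."""
    return {
        "term": rng.choice(search_terms),
        "think_time_s": rng.uniform(min_think_s, max_think_s),
    }


# Seeded generator: the same seed reproduces the same sequence of steps.
rng = random.Random(42)
terms = ["laptop", "phone", "tablet"]
for _ in range(3):
    print(randomized_step(rng, terms, 1.0, 5.0))
```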

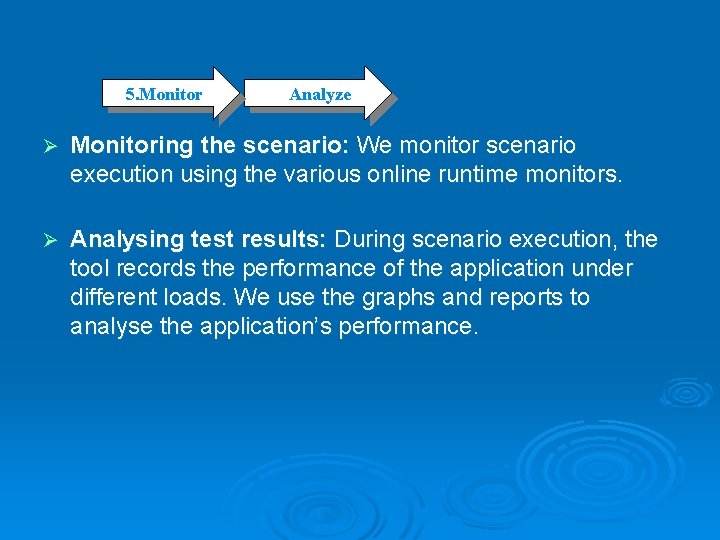

5. Monitor

- Monitoring the scenario: We monitor scenario execution using the various online runtime monitors.

6. Analyze

- Analysing test results: During scenario execution, the tool records the performance of the application under different loads. We use the graphs and reports to analyse the application’s performance.

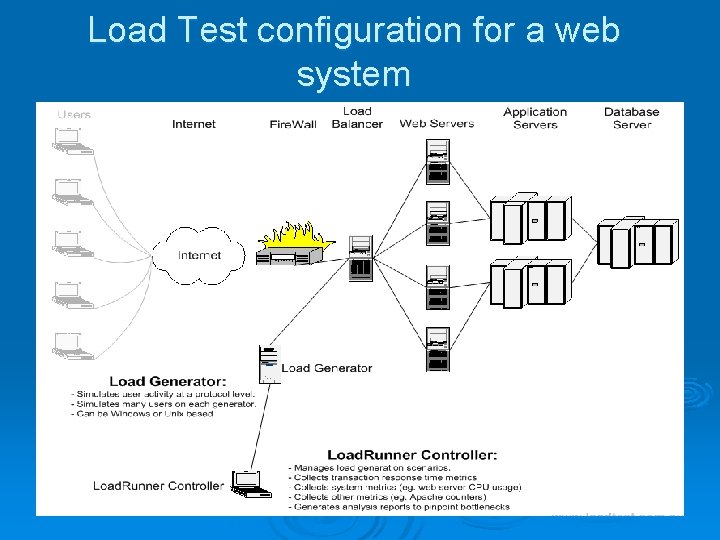

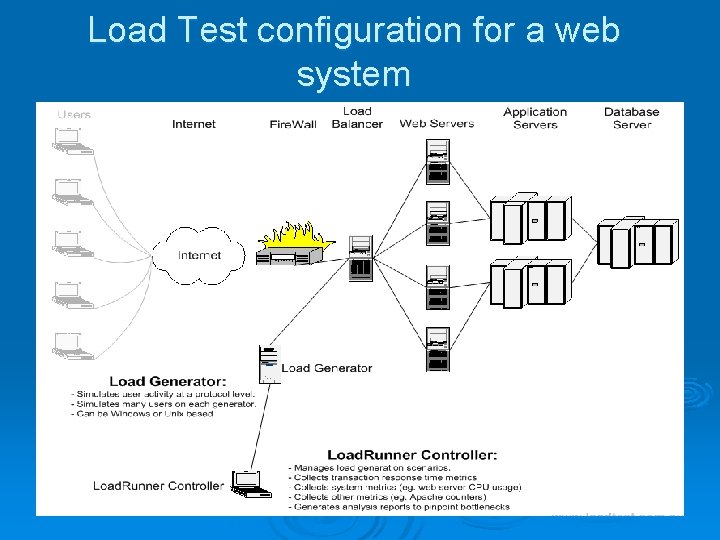

Load Test configuration for a web system

Part 2 Load Testing Tool

Test Tool Modules

- Recorder
- Scenario Generator
- Scenario Parameter Editor
- Test Case Creator
- Running Tests
- Analyzing Results

Recorder

- The recorder runs on the project server
- It serves the client requests
- Starts or stops recording at any time
- Records any user interaction with the server
- Resets recording
- Renders screen capture to the client application
- It listens to the end-user operations on the application and saves them as a test scenario

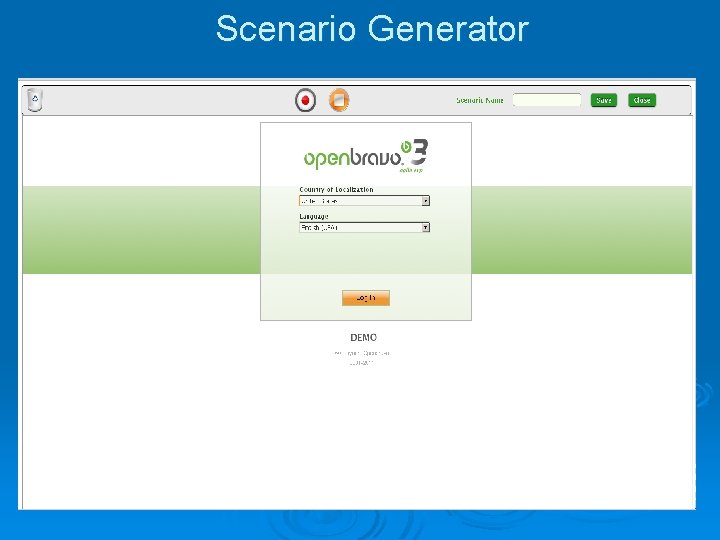

Scenario Generator

- Wraps any application
- Listens to the end-user actions on the embedded application
- Starts, stops and clears recording
- Captures all the user events
- Saves the operation as a new scenario

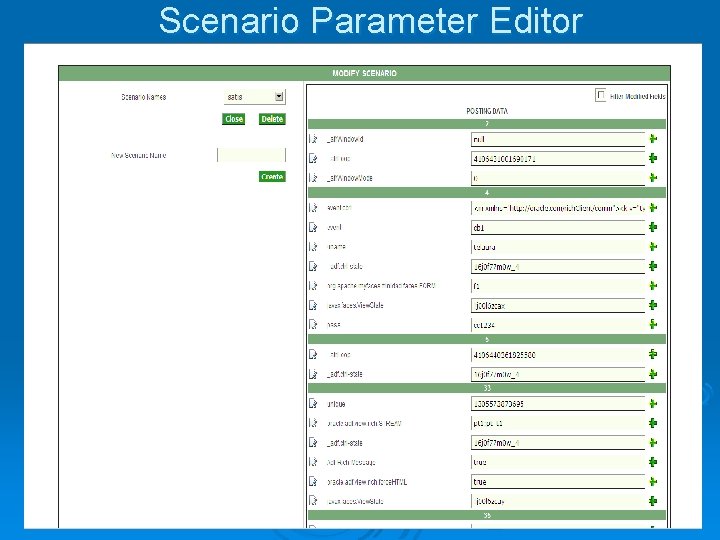

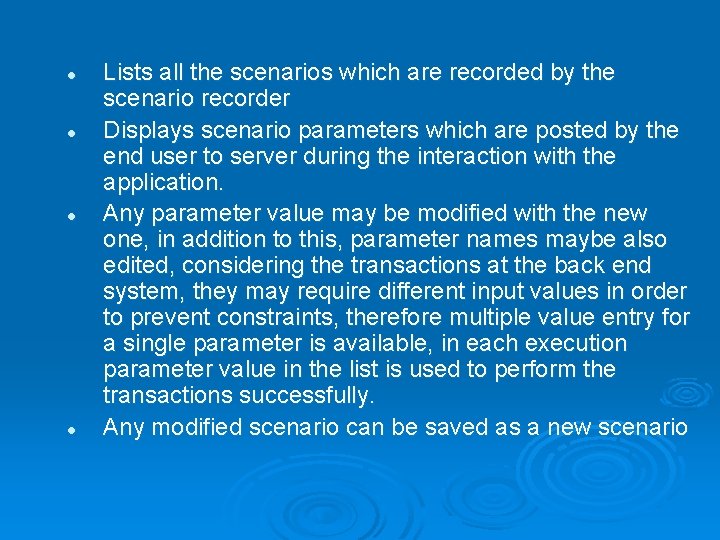

Scenario Parameter Editor

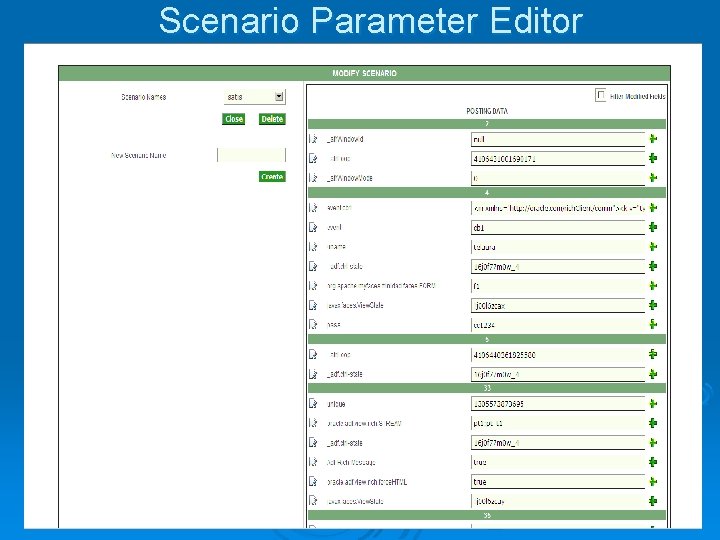

- Lists all the scenarios recorded by the scenario recorder.
- Displays the scenario parameters posted by the end user to the server during interaction with the application.
- Any parameter value may be replaced with a new one, and parameter names may also be edited.
- Transactions in the back-end system may require different input values in each run to avoid violating constraints, so multiple values can be entered for a single parameter. Each execution uses a value from the list so that the transactions complete successfully.
- Any modified scenario can be saved as a new scenario.
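The multiple-value entry described above could be modelled as follows. This is a sketch, not the tool's implementation: the class `ParameterValues`, the function `build_request` and the parameter names are hypothetical, and cycling back to the first value once the list is exhausted is an assumption.

```python
from itertools import cycle


class ParameterValues:
    """Hands out one value per execution from a user-supplied list."""

    def __init__(self, values):
        if not values:
            raise ValueError("at least one value is required")
        self._values = cycle(values)  # assumption: wrap around when exhausted

    def next_value(self):
        return next(self._values)


def build_request(recorded_params, overrides):
    """Copy the recorded parameters and replace the overridden ones."""
    params = dict(recorded_params)
    for name, source in overrides.items():
        params[name] = source.next_value()
    return params


# A recorded registration would fail a uniqueness constraint if replayed as is,
# so each execution posts a different username.
recorded = {"username": "alice", "action": "register"}
overrides = {"username": ParameterValues(["user1", "user2", "user3"])}
for _ in range(4):
    print(build_request(recorded, overrides))
```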

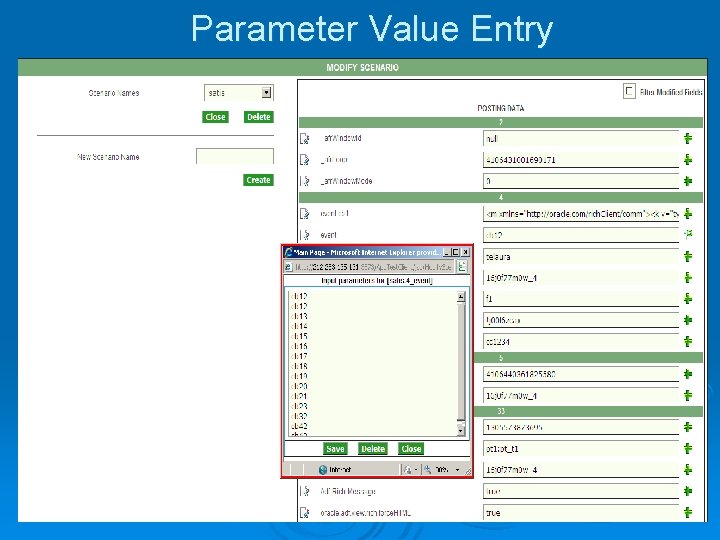

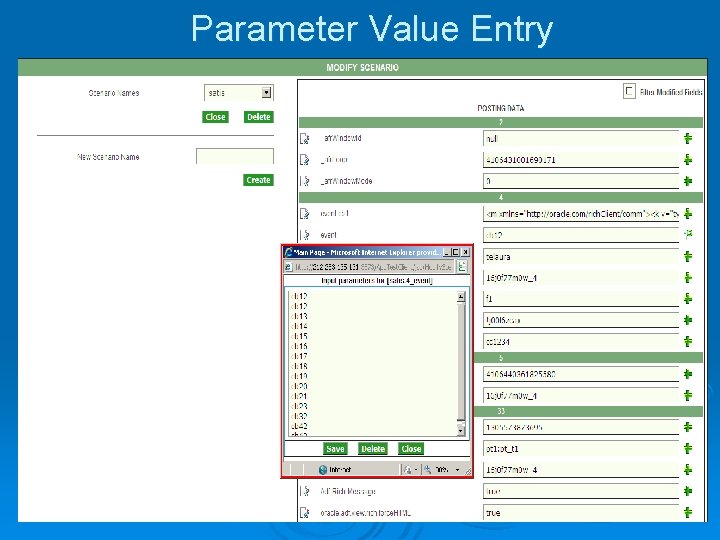

Parameter Value Entry

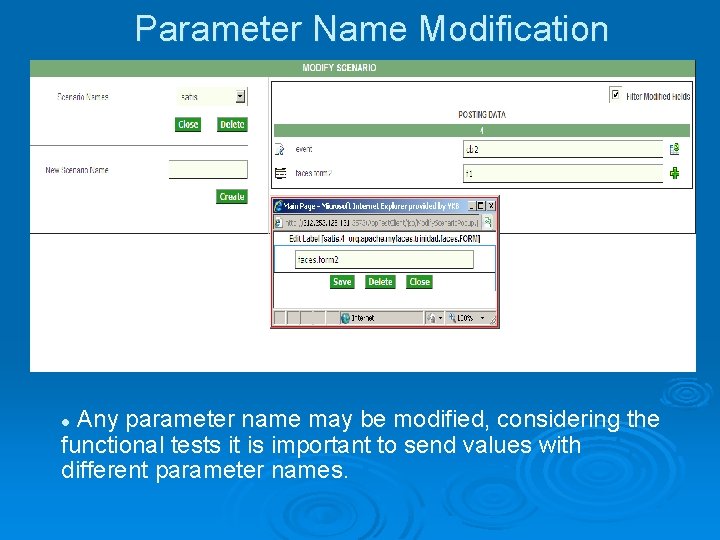

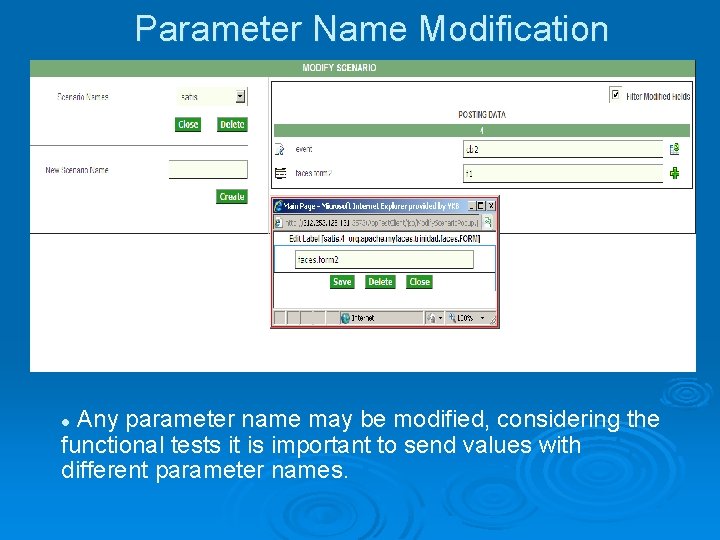

Parameter Name Modification

- Any parameter name may be modified. For functional tests, it is important to be able to send values under different parameter names.

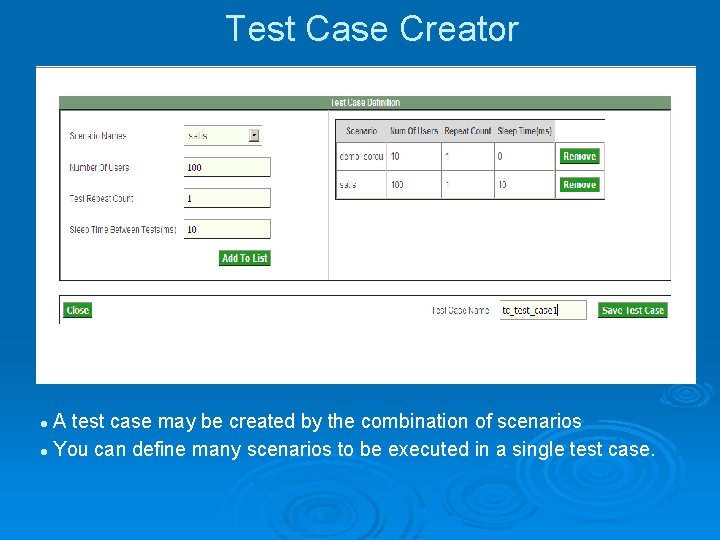

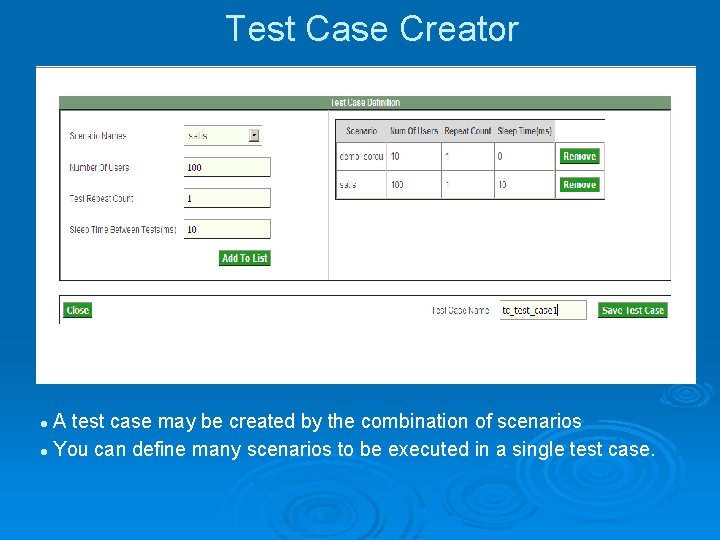

Test Case Creator

- A test case may be created by combining scenarios.
- You can define many scenarios to be executed in a single test case.

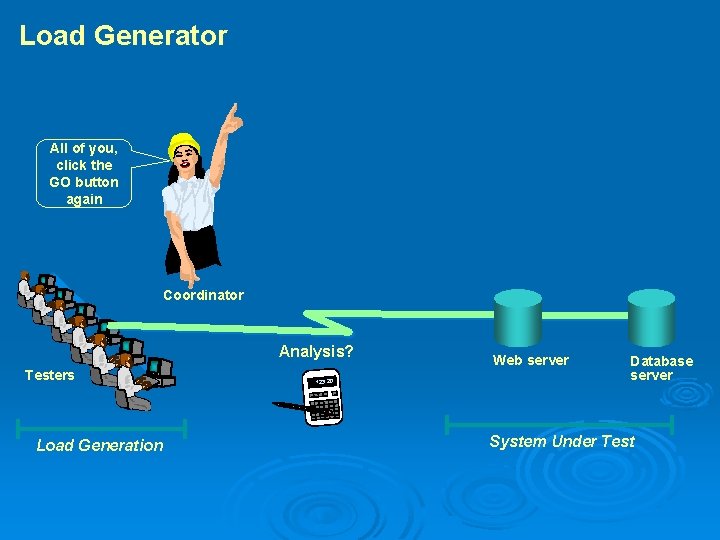

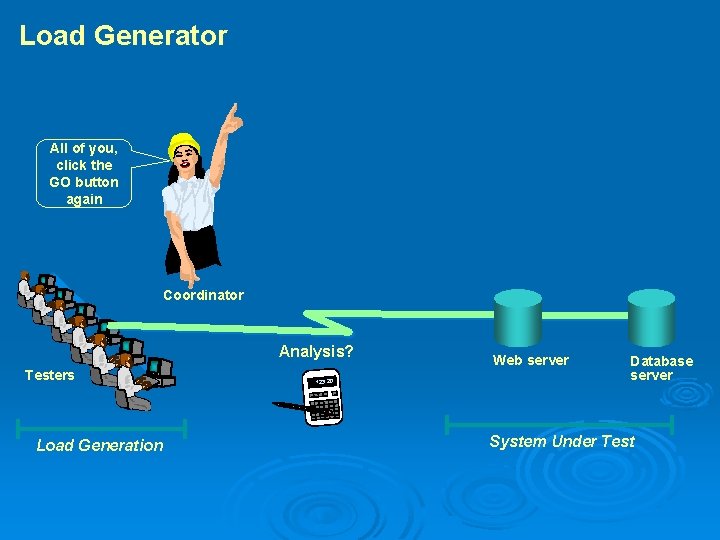

Load Generator

[Figure: a coordinator ("All of you, click the GO button again") directs testers who generate load on the system under test (web server, database server). Labels: Coordinator, Analysis?, Testers, Load Generation, System Under Test]

- Defines the scenario execution strategy.
- A scenario can be executed with n users, repeated n times, with n milliseconds of sleep time between executions.
- Scenarios are defined to work together to form a test case.
- By varying the scenario execution definitions and combinations, many test cases can be created.
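The execution strategy above (n users, n repetitions, n milliseconds of sleep) can be sketched with one thread per virtual user. This illustrates the idea only and is not how the tool is built; `run_scenario` and `demo_scenario` are hypothetical names, and the scenario is any callable taking a user id and an iteration number.

```python
import threading
import time


def run_scenario(scenario, users, repetitions, sleep_ms):
    """Run scenario with `users` threads, each repeating it `repetitions` times."""
    results = []
    lock = threading.Lock()

    def virtual_user(user_id):
        for iteration in range(repetitions):
            start = time.perf_counter()
            scenario(user_id, iteration)
            elapsed_ms = (time.perf_counter() - start) * 1000.0
            with lock:  # the results list is shared between threads
                results.append((user_id, iteration, elapsed_ms))
            if iteration < repetitions - 1:
                time.sleep(sleep_ms / 1000.0)  # pause between executions

    threads = [threading.Thread(target=virtual_user, args=(i,)) for i in range(users)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


def demo_scenario(user_id, iteration):
    """Stand-in for replaying a recorded scenario against the server."""
    time.sleep(0.005)


records = run_scenario(demo_scenario, users=3, repetitions=2, sleep_ms=10)
print(f"{len(records)} executions recorded")
```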

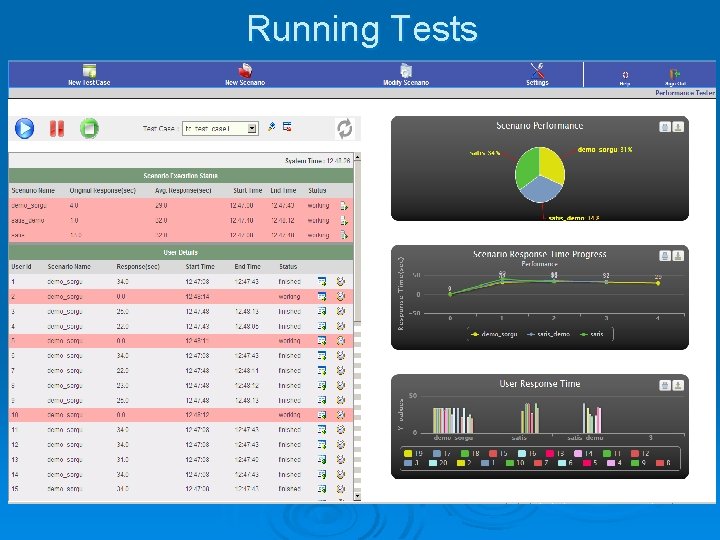

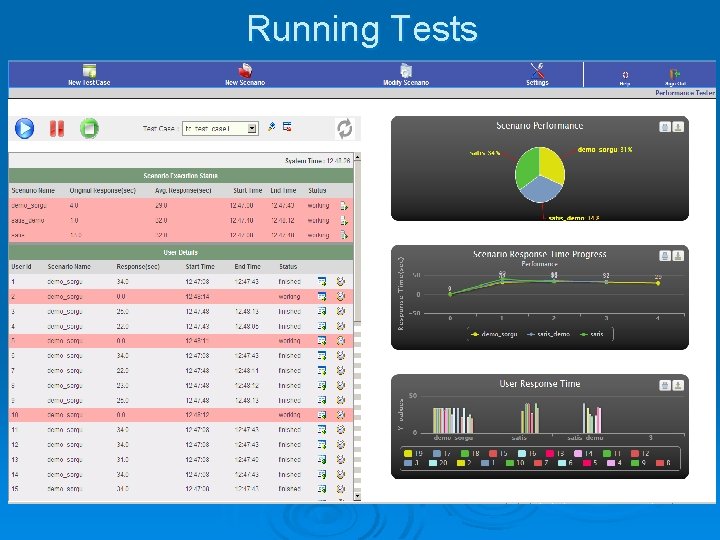

Running Tests

- A test case is selected from the predefined list.
- The test case may be viewed and modified at any time.
- When the run button is clicked, all the users are created and execute the test case, which contains scenarios.
- At any time, the test execution may be stopped or suspended.
- Test progress is illustrated on the GUI. Scenarios and users are colored according to their execution status.
- All the users are created at the same time and perform the scenarios.
- The execution of the scenarios and the user behaviours may be viewed in real time.

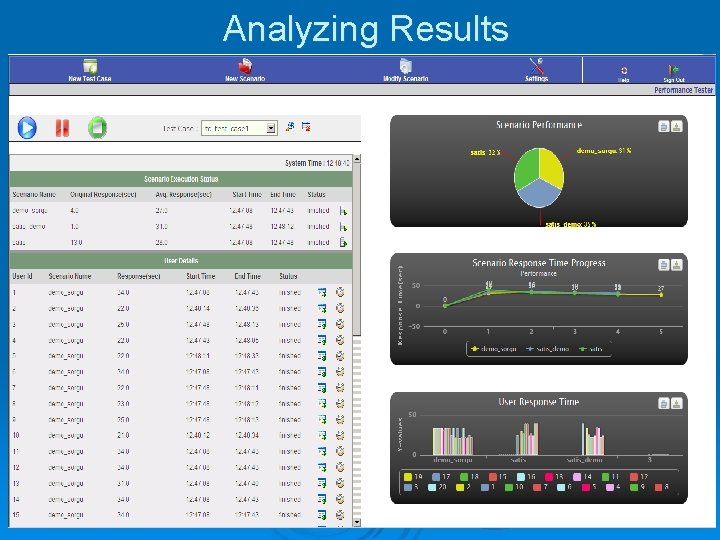

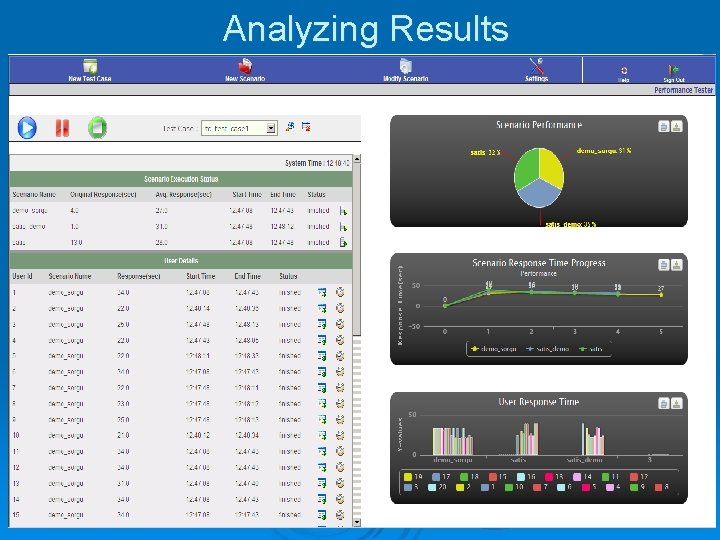

Analyzing Results

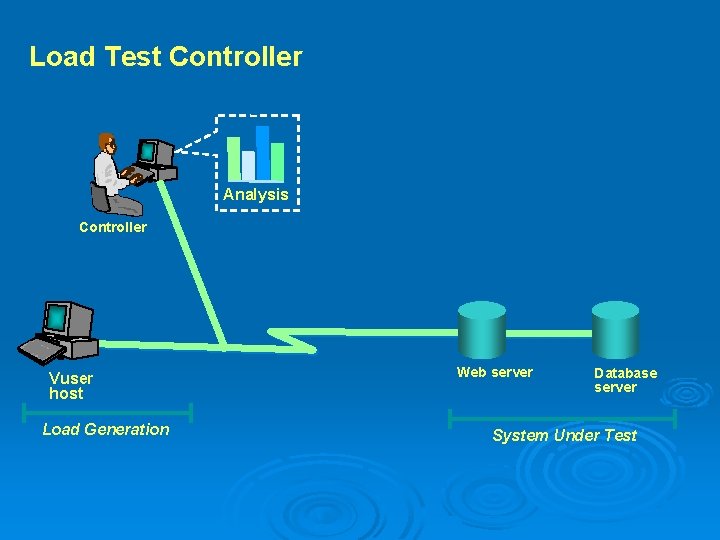

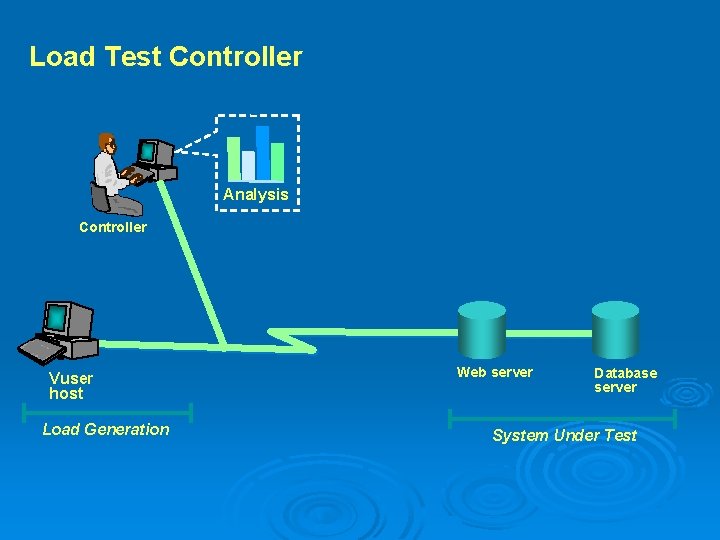

Load Test

[Figure: a controller drives a Vuser host that generates load on the system under test (web server, database server). Labels: Controller, Analysis, Vuser host, Load Generation, System Under Test]

- While the test is being executed, the results are displayed at runtime.
- The original and virtual scenario response times are displayed on the screen.
- Scenario execution start and end times are displayed along with the average response time. The status field for the scenario changes while the test is in progress; it may be working, suspended, stopped or failed.
- Scenario execution times are compared in a graphic at the right corner of the screen. The system reports the response time ratios, and changes in the ratios can be observed during the test.
- In the second graphic in the right frame, the cut point response times are displayed for each scenario.
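The slides do not define how the response time ratio is calculated. One plausible reading, shown here purely as an assumption, divides the average virtual-user response time by the response time of the originally recorded scenario. The function names and figures are hypothetical.

```python
def average(values):
    """Arithmetic mean of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    return sum(values) / len(values)


def response_time_ratio(original_ms, virtual_times_ms):
    """Assumed definition: average virtual response time / original time."""
    if original_ms <= 0:
        raise ValueError("original_ms must be positive")
    return average(virtual_times_ms) / original_ms


# Hypothetical figures: the recorded run took 200 ms, the virtual users
# took 300 ms and 500 ms under load.
print(response_time_ratio(200.0, [300.0, 500.0]))
```

Under this reading, a ratio above 1 means the scenario runs slower under load than it did when it was recorded.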

- For each scenario, all the user execution details are displayed.
- For each user, the execution start and end times, average response time and status information are displayed for the scenario.
- When the scenario icon is clicked, the user details belonging to that scenario are displayed at the bottom.
- The lowest graphic in the right frame displays the user response times for each scenario; the change in response time for each user can be viewed in this graphic.
- For each user, two action buttons are available: the first displays the user's interaction steps with the server during the execution of the scenario, and the second compares the execution steps with the original scenario.

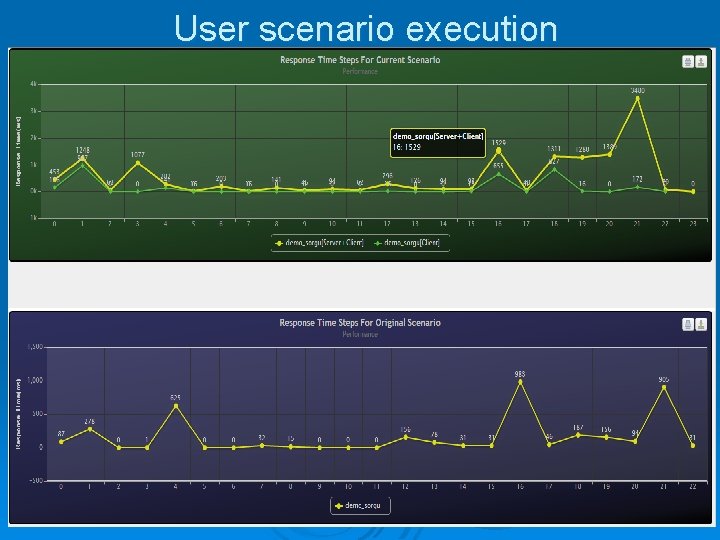

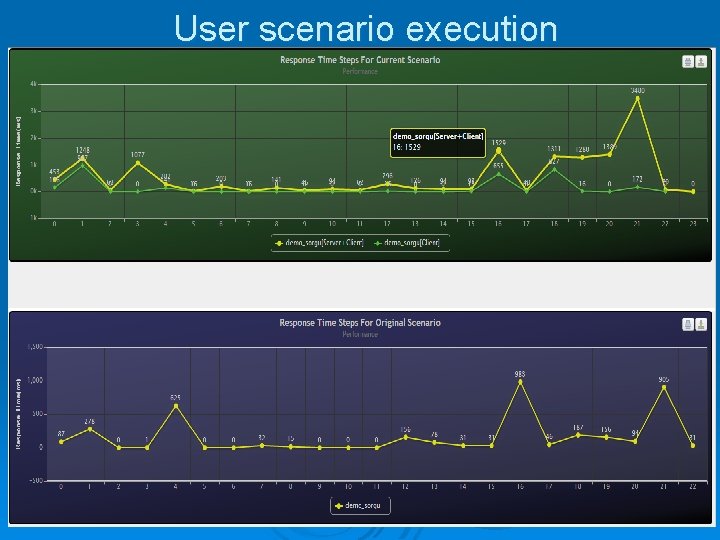

User scenario execution

- The user scenario execution is displayed as a graphic.
- The graphic displays the user's interaction with the server to perform a single scenario.
- The right axis displays the execution steps, while the left one shows the execution time for each step in milliseconds.
- The yellow lines display the execution time spent on the server.
- The green lines display the execution time spent on the client side, in other words the data rendering time. If the network is slow or the amount of data is large, the green line execution times will be correspondingly higher.
- All the graphics can be printed out.

- The second graphic, below, displays the original end-user scenario execution time across all the steps.
- The execution time for each step is displayed in milliseconds.
- In both graphics, clicking a value mark point displays the data exchanged with the server and other details.
- All the graphics can be downloaded in JPEG, PNG, SVG and PDF formats.

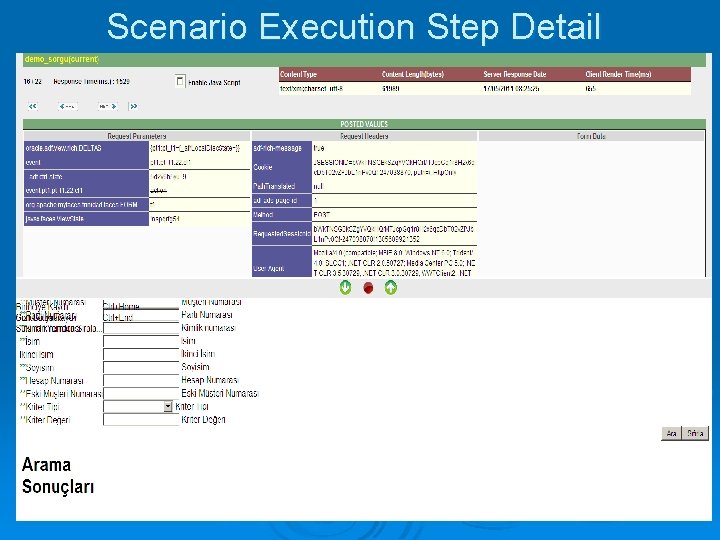

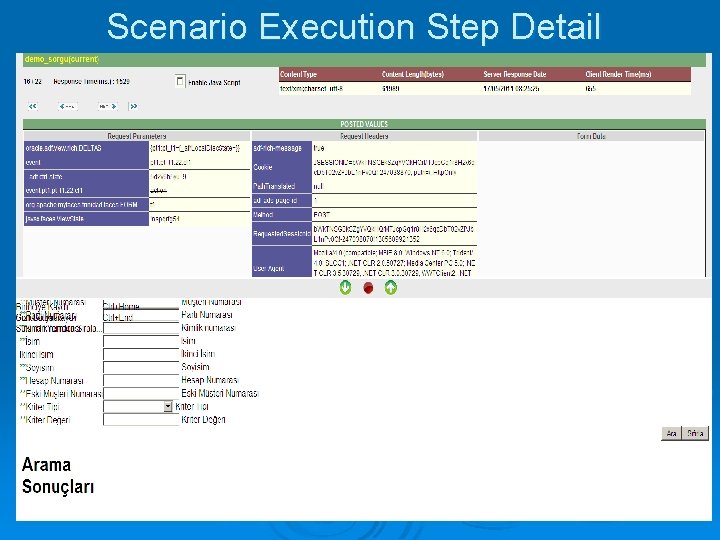

Scenario Execution Step Detail

- Each user scenario execution consists of many steps. For a single step, all the posted and received data is displayed.
- The step response time and the data posted by the client, such as the request, form and headers, are displayed.
- The server response data in HTML format is displayed in the bottom frame.
- Server response time, response type, content length and client data render time may be observed.
- All the steps can be iterated. In each step, all the data exchange details are displayed.
- If there is an error in the response HTML, the error message is also displayed with an indication.
- Execution step details are available for both original and virtual users.

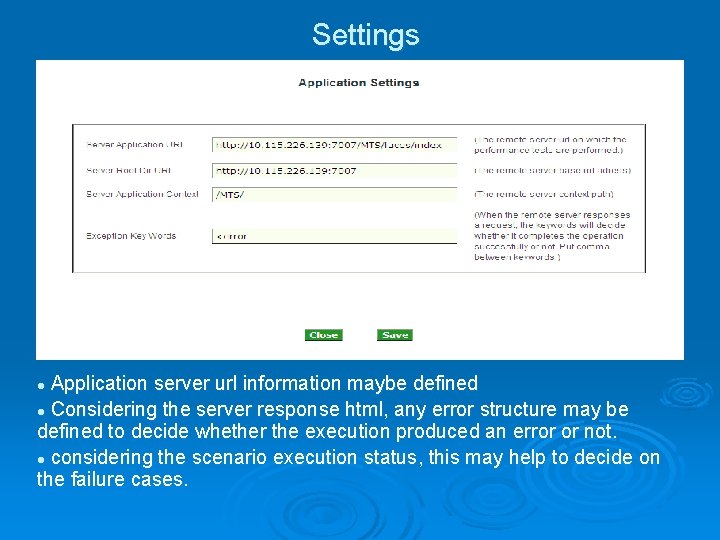

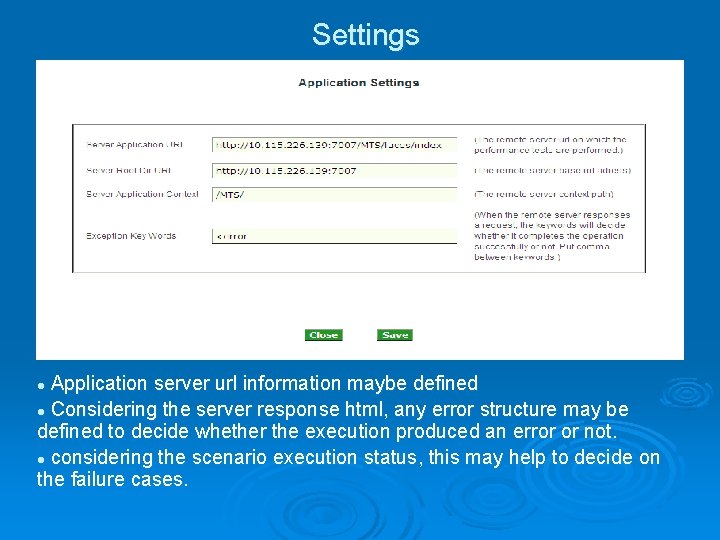

Settings

- The application server URL information may be defined.
- Based on the server response HTML, an error structure may be defined to decide whether the execution produced an error or not.
- Together with the scenario execution status, this may help to identify the failure cases.
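An "error structure" for the response HTML could be expressed as a list of patterns to search for. The sketch below assumes regular expressions are used, which the slides do not state; the function `find_error` and the example patterns are hypothetical.

```python
import re


def find_error(response_html, error_patterns):
    """Return the first pattern found in the HTML, or None if none match."""
    for pattern in error_patterns:
        if re.search(pattern, response_html, re.IGNORECASE):
            return pattern
    return None


# Hypothetical error structures configured for an application.
patterns = [r'<div class="error">', r"internal server error"]

print(find_error('<html><div class="error">Invalid input</div></html>', patterns))
print(find_error("<html><body>Welcome</body></html>", patterns))
```

A step whose response matches any configured pattern would then be marked as failed even when the server returned a normal page.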

Thank You